A Leader-Assisted Decentralized Adaptive Formation Method for UAV Swarms Integrating a Pre-Trained Semantic Broadcast Communication Model

Abstract

Highlights

- A proximal policy optimization (PPO)-based semantic broadcast model for collective perception that substantially improves communication efficiency.

- A multi-agent proximal policy optimization (MAPPO)-based decentralized adaptive formation (DecAF) framework, incorporating the semantic broadcast communication (SemanticBC) mechanism.

- The proposed solution significantly improves global perception and formation efficiency compared with methods that rely on local observations.

- The proposed framework improves scalability and robustness in large UAV fleets by enabling efficient global communication, adaptive formation, and obstacle avoidance with minimal communication overhead.


1. Introduction


- To address the consistency conflicts caused by limited local observations in decentralized UAV swarm formation and the loss of communication efficiency due to redundant transmissions, this paper proposes SemanticBC-DecAF, a decentralized adaptive formation (DecAF) scheme based on a leader–follower architecture and incorporating SemanticBC. By leveraging the task-oriented nature of semantic communication, the proposed scheme enhances the swarm’s capability for autonomous obstacle avoidance and adaptive formation in complex environments.


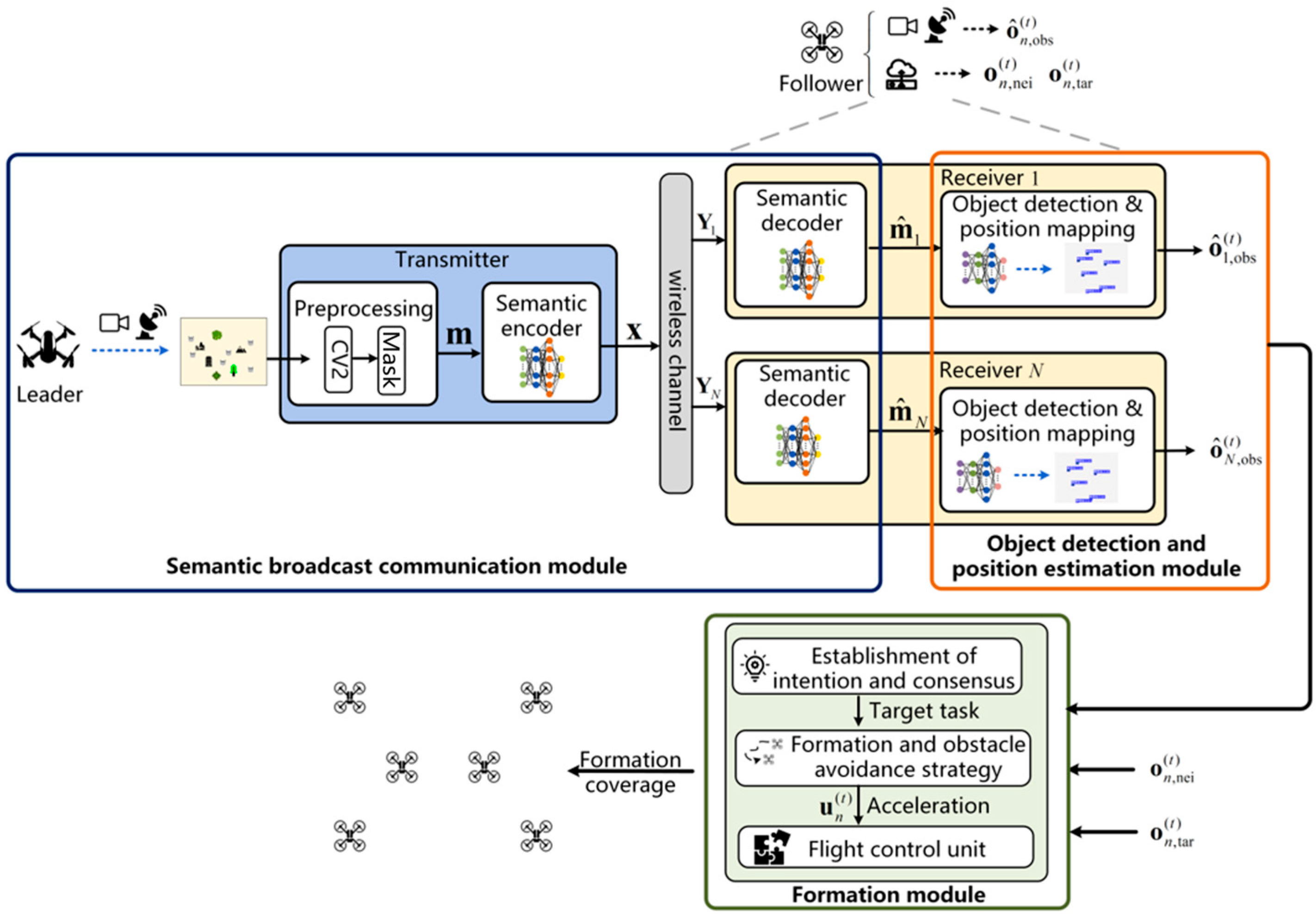

- To enhance the efficiency of information transmission, the proposed semantic broadcast communication framework encodes images containing obstacles at the transmitter (leader) and broadcasts the resulting semantic representations to the receivers (followers), delivering the task-critical information while significantly reducing communication overhead. In this framework, the semantic broadcast communication module employs the proximal policy optimization (PPO) algorithm to alternately optimize the shared encoder and the multiple decoders.


- To alleviate the consistency conflicts in obstacle perception that may arise from discrepancies in local observations among swarm agents, a pre-trained YOLOv5-based object detection and localization module is deployed on the receiver UAVs. Using the images reconstructed by the semantic decoder as input, this module enables precise extraction of global obstacle position information. Subsequently, the predicted global obstacle positions are combined with each UAV’s local observations and jointly fed into the formation control module, which is built upon multi-agent proximal policy optimization (MAPPO), thereby guiding the UAVs to accomplish autonomous obstacle avoidance and adaptive formation tasks.


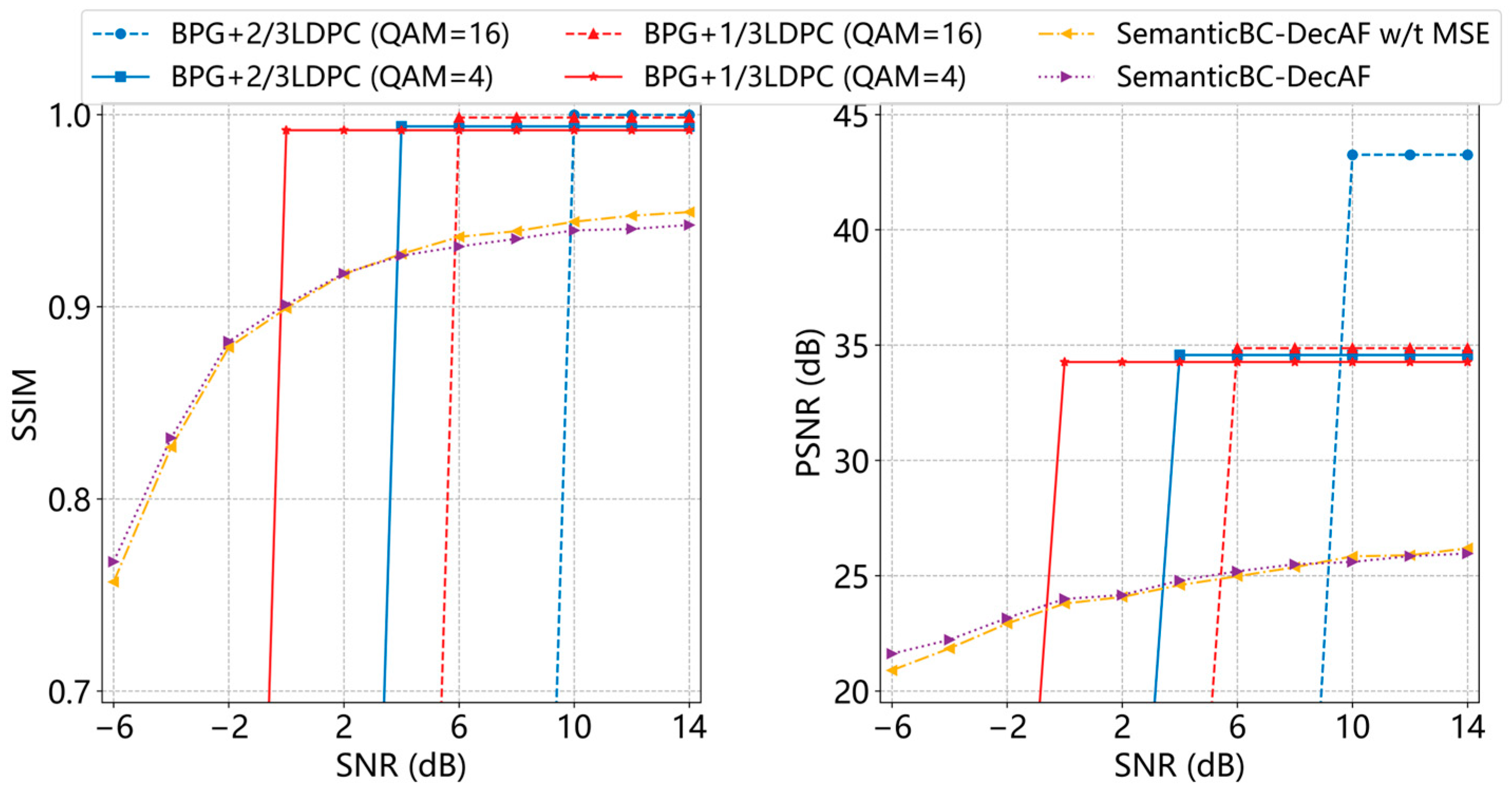

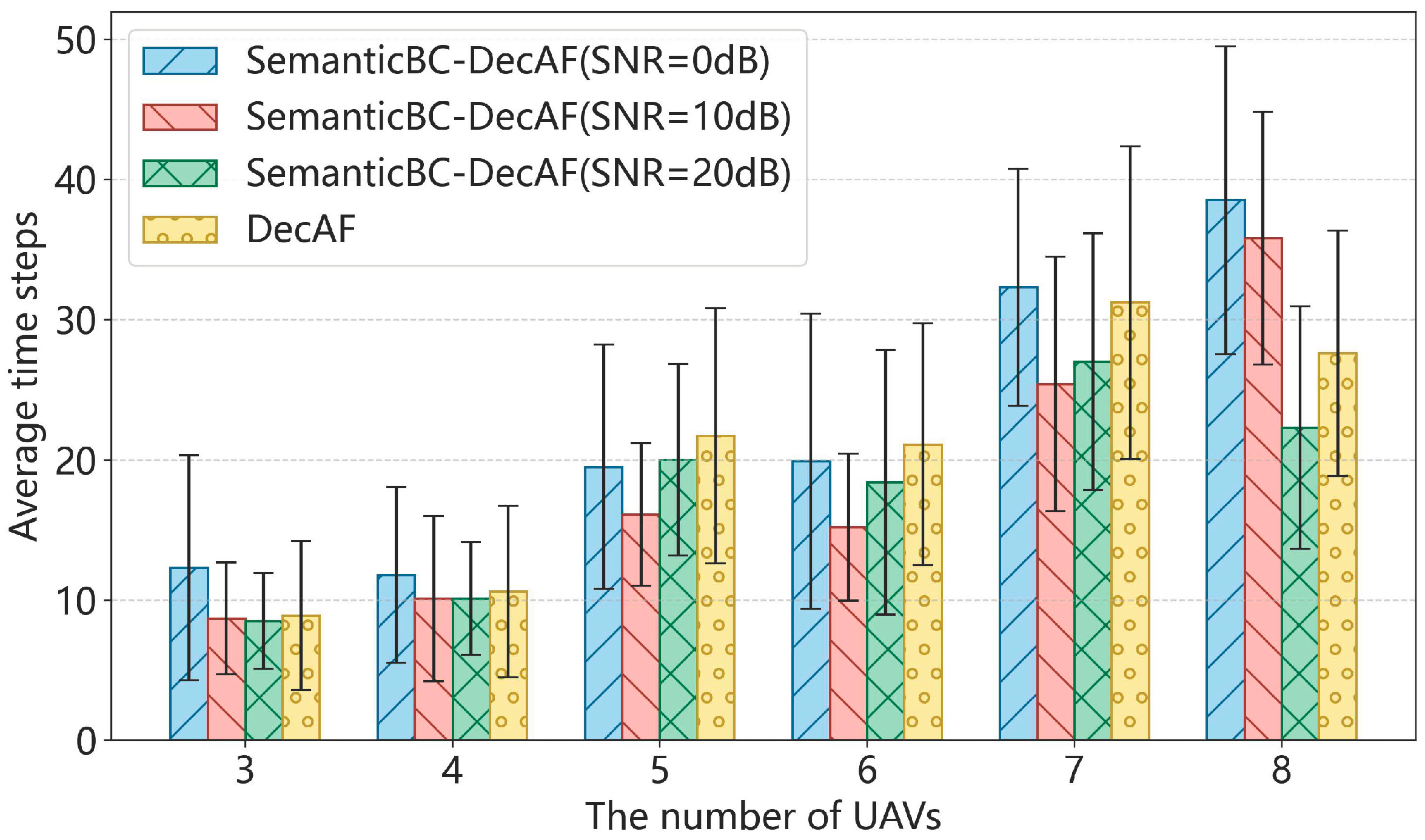

- Finally, the proposed scheme is implemented and evaluated in the multi-agent particle environment (MPE) [15]. Experimental results demonstrate that, compared with conventional communication methods and semantic broadcast communication based on joint source–channel coding (JSCC), SemanticBC-DecAF achieves higher semantic image reconstruction accuracy and adapts better to varying channel conditions. Moreover, compared with the traditional DecAF scheme, which relies on local observations for obstacle avoidance and formation, integrating semantic broadcast communication not only significantly improves the decision-making efficiency of autonomous obstacle avoidance but also speeds up the completion of adaptive formation to some extent.
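To make the broadcast setting concrete, the following Python sketch covers only the channel part of the pipeline. The leader's encoded semantic vector is power-normalized once and then sent to several followers, each over its own AWGN or Rayleigh channel (the two channel types listed in the network architecture table). The function names, the unit-power normalization, the real-valued signal, and the independent per-symbol fading are assumptions made for illustration; the encoder and decoders themselves are omitted.

```python
import numpy as np


def normalize_power(z: np.ndarray) -> np.ndarray:
    """Scale a real-valued semantic vector to unit average power per symbol."""
    return z / np.sqrt(np.mean(z ** 2) + 1e-12)


def broadcast(z, snr_db_per_receiver, channel="awgn", rng=None):
    """Send one encoded vector to several receivers, each over its own channel.

    Returns one received vector per receiver (follower UAV).
    """
    rng = np.random.default_rng() if rng is None else rng
    x = normalize_power(np.asarray(z, dtype=float))
    received = []
    for snr_db in snr_db_per_receiver:
        # With unit signal power, the noise variance is 10^(-SNR/10).
        noise_std = np.sqrt(10.0 ** (-snr_db / 10.0))
        if channel == "awgn":
            h = np.ones_like(x)
        elif channel == "rayleigh":
            # |h| with h ~ CN(0, 1) is Rayleigh distributed with E[|h|^2] = 1;
            # drawn independently for every symbol (fast fading, assumed).
            h = np.abs(rng.normal(0.0, np.sqrt(0.5), x.shape)
                       + 1j * rng.normal(0.0, np.sqrt(0.5), x.shape))
        else:
            raise ValueError(f"unknown channel: {channel}")
        received.append(h * x + rng.normal(0.0, noise_std, x.shape))
    return received
```

For example, `broadcast(z, [5.0, 15.0])` returns two independently corrupted copies of the same normalized vector, one per follower, so each receiver-side decoder sees its own noise realization.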

2. Task Description and System Model

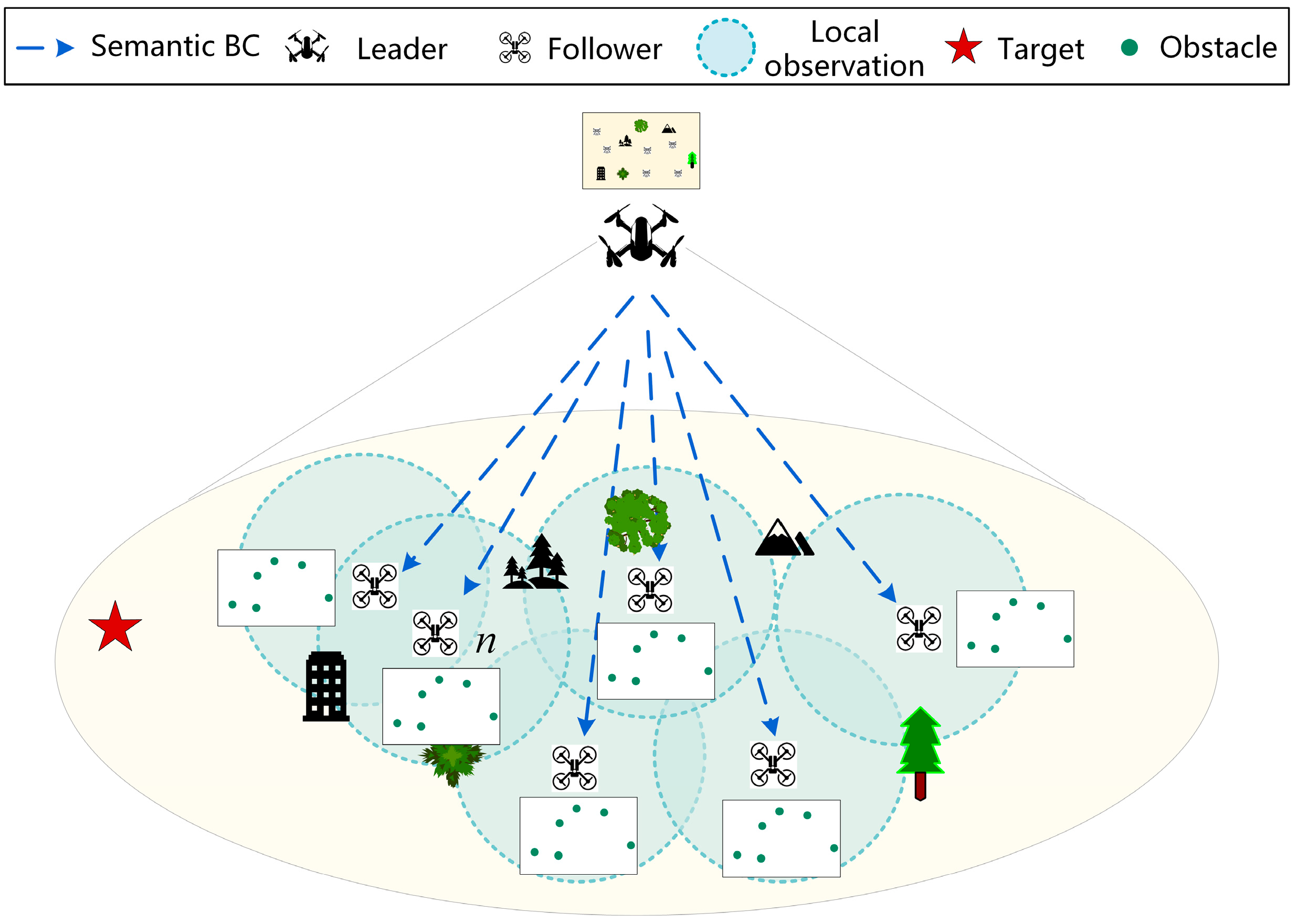

2.1. Task Description

2.2. System Model

- State: At each time step, the local state of each agent consists of its local observation state and the global obstacle position information. The local observation state contains the observation information of the agent's neighbors and of the target, namely the neighbor state and the agent's relative position with respect to the target, while the global obstacle position information is estimated by the object detection and localization module.

- Action: We assume that the UAV swarm flies at a fixed speed and altitude on the same horizontal plane. Based on its local state, the formation and obstacle-avoidance policy outputs the acceleration of each agent as its action.

- Reward: The lower-level formation control reward mainly consists of the formation reward, the target navigation reward, and the obstacle avoidance reward, whose detailed definitions are given in [8]. The overall reward function is formulated as follows:
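A minimal sketch of how an agent's local state and reward could be assembled, assuming NumPy arrays, a fixed number of neighbors, and a hypothetical cap `max_obstacles` with zero padding so that the policy input keeps a constant size. The weighted-sum form of the reward is also an assumption, suggested by the reward weights listed in the simulation-parameter table; the exact reward definitions follow [8] and are not reproduced here.

```python
import numpy as np


def build_local_state(neighbor_obs, target_rel_pos, obstacle_positions, max_obstacles):
    """Concatenate the parts of an agent's local state into one flat vector.

    neighbor_obs:       (num_neighbors, d) neighbor features (fixed count assumed)
    target_rel_pos:     (2,) position relative to the target
    obstacle_positions: (m, 2) global obstacle positions from the detection module
    max_obstacles:      hypothetical cap; unused slots are zero-padded
    """
    obs = np.zeros((max_obstacles, 2))
    m = min(len(obstacle_positions), max_obstacles)
    if m > 0:
        obs[:m] = np.asarray(obstacle_positions, dtype=float)[:m]
    return np.concatenate([np.ravel(neighbor_obs), np.ravel(target_rel_pos), obs.ravel()])


def total_reward(r_form, r_nav, r_obs, weights=(1.0, 1.0, 1.0)):
    """Weighted sum of formation, navigation, and obstacle-avoidance rewards (assumed form)."""
    w_form, w_nav, w_obs = weights
    return w_form * r_form + w_nav * r_nav + w_obs * r_obs
```

Zero padding is only one way to handle a varying number of detected obstacles; an attention or pooling layer over the obstacle set would avoid the fixed cap.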

3. PPO-Based Semantic Broadcast Communication and Decentralized Adaptive Formation

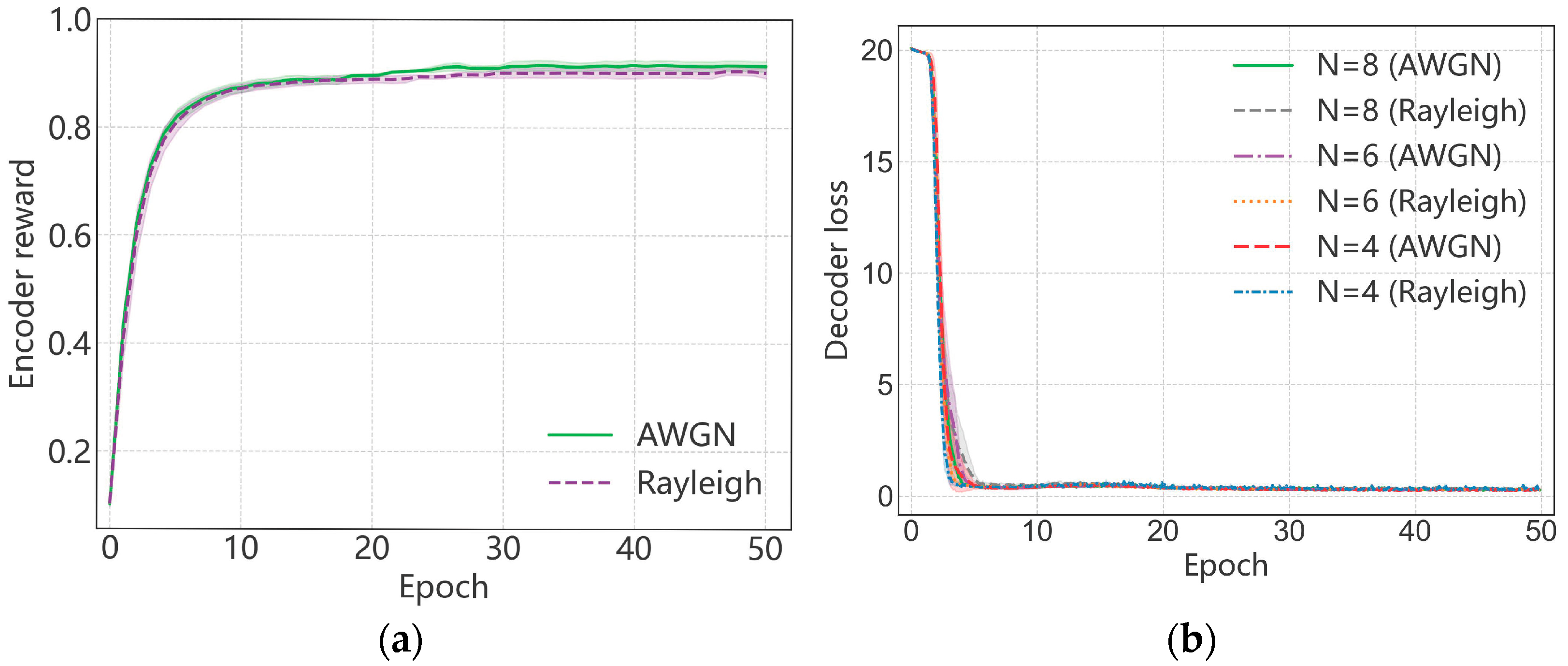

3.1. PPO-Based Semantic Broadcast Communication

3.1.1. Optimization of the Semantic Decoder Network

3.1.2. Optimization of the Semantic Encoder Network

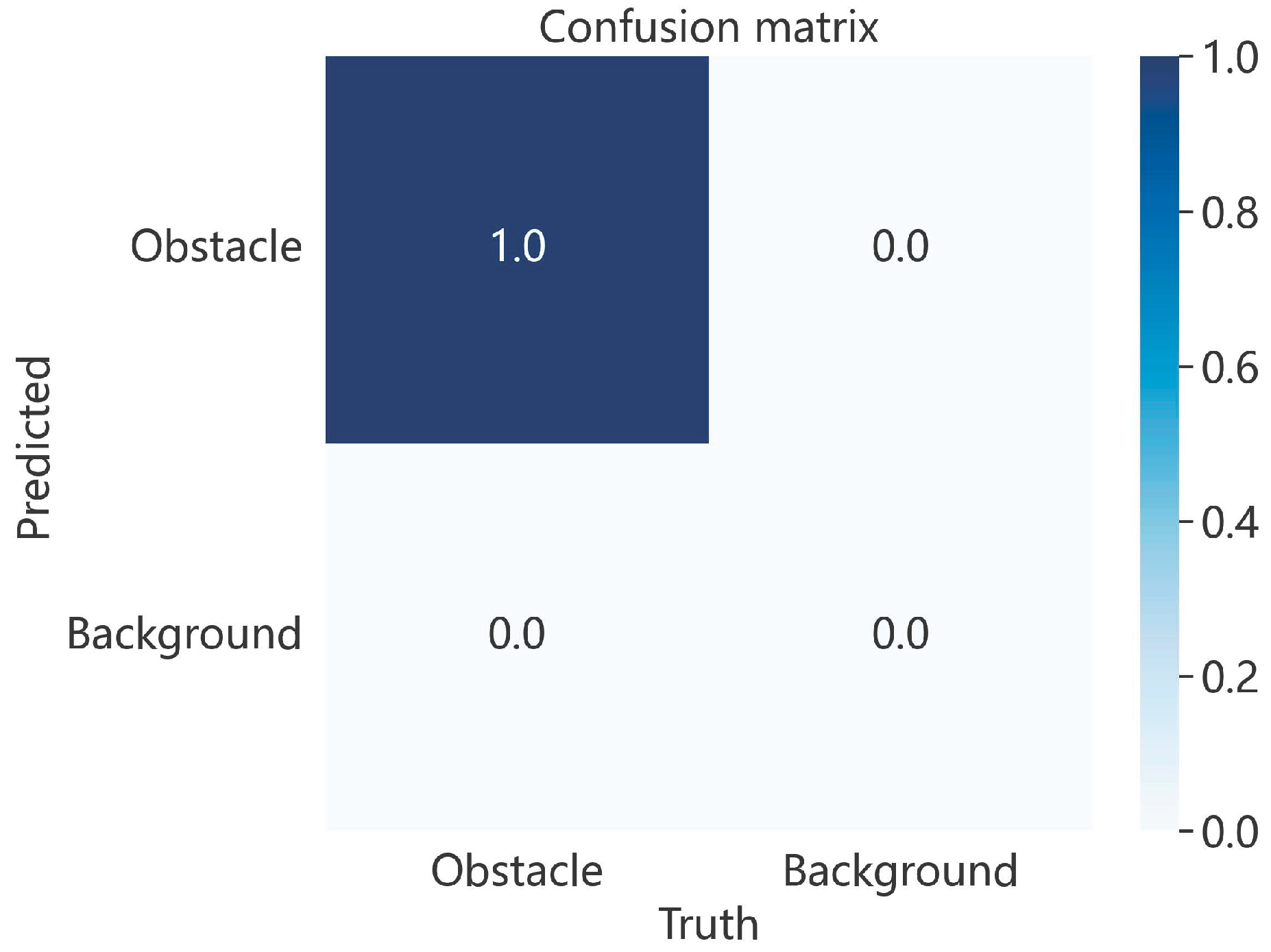
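Since both the semantic encoder and the semantic decoders are optimized with PPO [14], and the architecture table lists Gaussian sampling in the encoder, the sketch below shows the standard PPO clipped surrogate objective together with a diagonal-Gaussian log-density. It is a generic PPO building block, not the authors' training code; how the rewards and advantages for the encoder and decoders are defined (for instance, from reconstruction quality) is not specified in this section and is left as an input.

```python
import numpy as np


def gaussian_log_prob(x, mean, log_std):
    """Log-density of a diagonal Gaussian, summed over the last axis."""
    var = np.exp(2.0 * log_std)
    return np.sum(-0.5 * ((x - mean) ** 2 / var + 2.0 * log_std + np.log(2.0 * np.pi)), axis=-1)


def ppo_clip_loss(new_log_prob, old_log_prob, advantage, clip_eps=0.2):
    """Negative PPO clipped surrogate objective, to be minimized."""
    ratio = np.exp(new_log_prob - old_log_prob)  # pi_new(a|s) / pi_old(a|s)
    unclipped = ratio * advantage
    clipped = np.clip(ratio, 1.0 - clip_eps, 1.0 + clip_eps) * advantage
    # Taking the minimum gives a pessimistic bound that discourages large policy steps.
    return -np.mean(np.minimum(unclipped, clipped))
```

In an alternating scheme, one would hold the encoder fixed while running several decoder updates with this loss, then update the encoder; the number of local decoder updates is listed as a training parameter in the settings table.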

3.2. Object Detection and Position Estimation

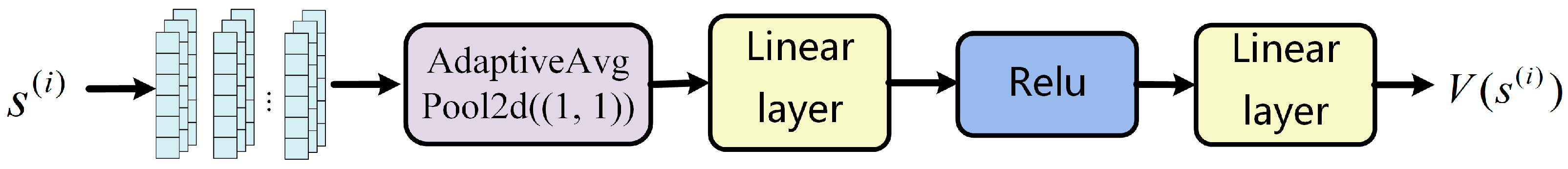
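As a rough illustration of the position-estimation step, the sketch below converts YOLO-style normalized boxes (center x, center y, width, height, each in [0, 1]) detected on a reconstructed image into world coordinates. It assumes a hypothetical top-down view that exactly covers a known axis-aligned world rectangle, plus an optional confidence threshold; the actual geometry used by the localization module may differ.

```python
import numpy as np


def boxes_to_world(boxes_xywhn, area_min, area_max, conf=None, conf_thresh=0.5):
    """Map normalized YOLO-style boxes to (x, y) world positions of their centers.

    Assumes a top-down image covering the world rectangle [area_min, area_max],
    with image row 0 at the maximum world y (image y grows downward).
    """
    boxes = np.asarray(boxes_xywhn, dtype=float).reshape(-1, 4)
    if conf is not None:
        boxes = boxes[np.asarray(conf) >= conf_thresh]  # drop low-confidence detections
    lo = np.asarray(area_min, dtype=float)
    hi = np.asarray(area_max, dtype=float)
    x = lo[0] + boxes[:, 0] * (hi[0] - lo[0])
    y = hi[1] - boxes[:, 1] * (hi[1] - lo[1])
    return np.stack([x, y], axis=1)
```

Only box centers are used here; box width and height could additionally be mapped to an obstacle radius if the avoidance policy needed it.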

3.3. MAPPO-Based Adaptive Formation Strategy

- (a) Training of the formation and navigation layer: In an obstacle-free environment, the output of the obstacle-avoidance module is set to zero, and only the first-stage policy parameters are trained. This enables the UAVs to perform navigation and formation control based on the neighbor information and the target information. The corresponding value network parameters are updated in this stage as well.

- (b) Training of the obstacle avoidance layer: In an environment with randomly placed static obstacles, the second-stage policy is trained to give the agents a stable obstacle-avoidance capability. The corresponding value network parameters are updated in this stage as well.

- (c) Joint fine-tuning: The policy parameters obtained in stage I are combined with the obstacle-avoidance parameters from stage II to form the initial policy. This policy is then jointly fine-tuned in environments containing obstacles, yielding the complete policy, and the corresponding value network parameters are updated accordingly.
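The three training stages (a) to (c) can be summarized as a schedule of which parameter groups are updated and whether the obstacle-avoidance output is masked. In the sketch below, the `StageConfig` fields and the additive combination of the two layers' outputs are assumptions for illustration, and freezing the stage-I parameters during stage (b) is an interpretation of "the second-stage policy is trained".

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StageConfig:
    name: str
    obstacles: bool        # obstacles present in the training environment
    mask_avoidance: bool   # force the obstacle-avoidance output to zero
    train_formation: bool  # update stage-I (formation/navigation) policy parameters
    train_avoidance: bool  # update stage-II (obstacle-avoidance) policy parameters


STAGES = (
    StageConfig("(a) formation and navigation", False, True, True, False),
    StageConfig("(b) obstacle avoidance", True, False, False, True),
    StageConfig("(c) joint fine-tuning", True, False, True, True),
)


def combined_action(formation_out, avoidance_out, stage):
    """Hypothetical additive combination of both layers' outputs per action dimension."""
    scale = 0.0 if stage.mask_avoidance else 1.0
    return [f + scale * a for f, a in zip(formation_out, avoidance_out)]
```

Masking the avoidance output in stage (a) lets the formation layer learn on its own, so that stage (c) starts from two separately competent components rather than from scratch.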

4. Simulation Results

4.1. Simulation Settings

- SemanticBC-DecAF w/t MSE: This method adopts the same network architecture as SemanticBC-DecAF but is trained without reinforcement learning; instead, it is optimized with the conventional mean squared error (MSE) loss.

- BPG+LDPC [22]: The BPG encoder (https://github.com/def-/libbpg (accessed on 24 September 2025)) provides efficient image compression, while the 5G LDPC encoder (https://github.com/NVlabs/sionna (accessed on 24 September 2025)) achieves strong channel error correction through its sparse parity-check matrix design. To compare the proposed semantic communication mechanism with conventional communication systems, the BPG and LDPC coding schemes are extended to the semantic broadcast communication scenario, using standard 5G LDPC code rates and different orders of quadrature amplitude modulation (QAM). Specifically, LDPC codes with rates of 2/3 (information length and code length (3072, 4608)) and 1/3 ((1536, 4608)) are combined with 4-QAM and 16-QAM modulation in the communication simulations.

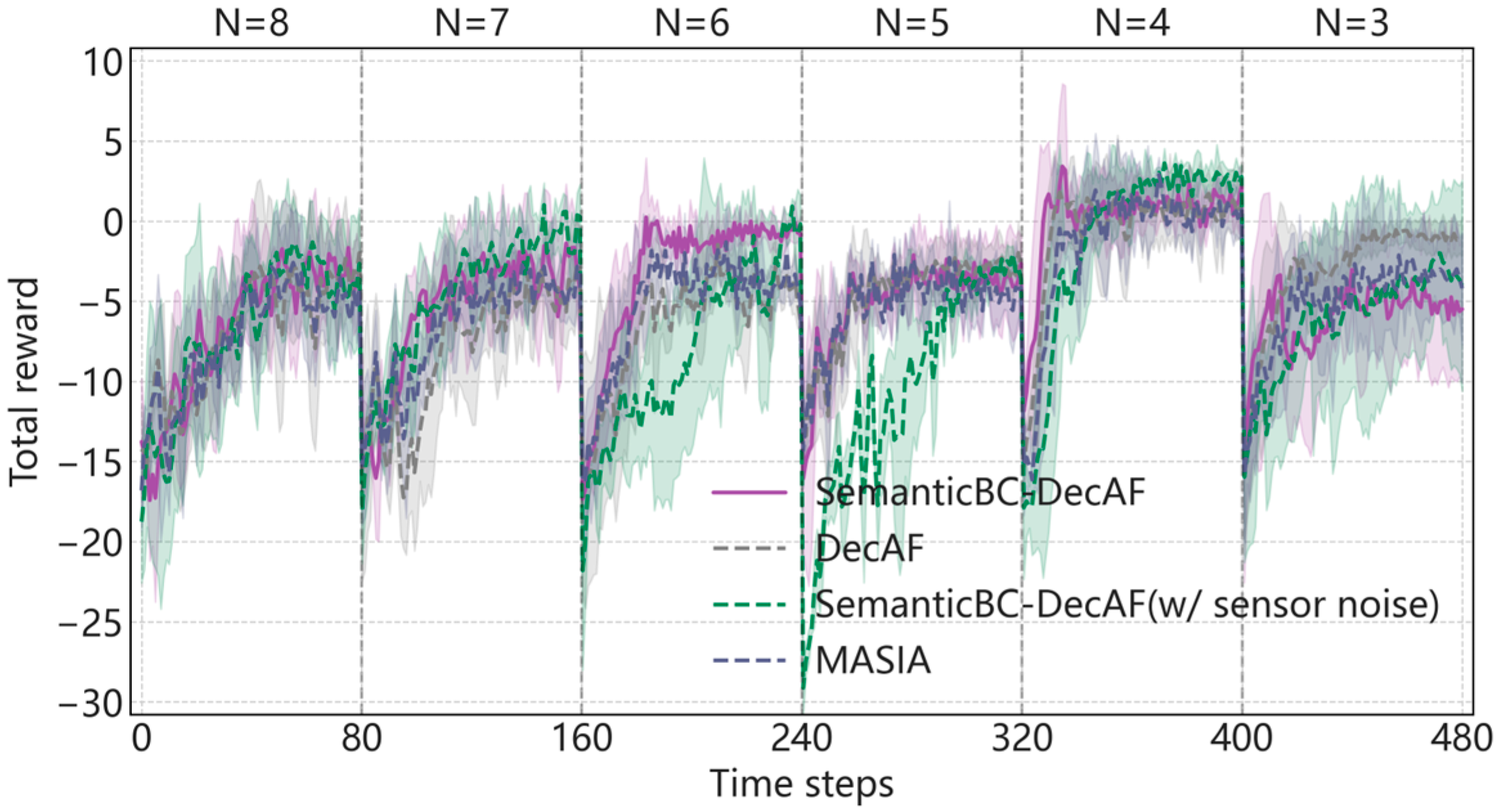

- DecAF: DecAF is the underlying formation strategy proposed in [8], whose policy relies on local observation information and environmental perception to accomplish obstacle avoidance and navigation. In this section, it serves as a decentralized formation control baseline that uses no semantic communication mechanism, in order to assess the task-coordination gains obtained by leveraging globally perceived obstacle positions through semantic broadcast communication.
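The LDPC and QAM settings of the BPG+LDPC baseline determine how many channel symbols each codeword occupies and how many information bits each symbol carries (code rate times log2 M). The short Python check below reproduces that arithmetic for the four rate and modulation combinations; it only restates the stated parameters and does not model BPG compression or LDPC decoding.

```python
from fractions import Fraction

BITS_PER_SYMBOL = {"4-QAM": 2, "16-QAM": 4}  # log2(M) bits per QAM symbol


def ldpc_qam_summary(k, n, modulation):
    """Code rate k/n and number of channel symbols for one length-n LDPC codeword."""
    bits = BITS_PER_SYMBOL[modulation]
    if n % bits:
        raise ValueError("codeword length must be a multiple of bits per symbol")
    return Fraction(k, n), n // bits


for k in (3072, 1536):
    for mod in ("4-QAM", "16-QAM"):
        rate, symbols = ldpc_qam_summary(k, 4608, mod)
        print(f"(k={k}, n=4608), {mod}: rate {rate}, {symbols} symbols, "
              f"{k / symbols:.2f} info bits/symbol")
```

For example, rate 2/3 with 16-QAM carries 2/3 × 4 ≈ 2.67 information bits per symbol, twice as many as the rate-1/3 16-QAM setting, at the cost of weaker error protection.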

4.2. Numerical Results and Analysis

4.2.1. Semantic Transmission Performance

4.2.2. Position Estimation Performance

4.2.3. Decentralized Adaptive Formation Performance

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Salimi, M.; Pasquier, P. Deep reinforcement learning for flocking control of UAVs in complex environments. In Proceedings of the 6th International Conference on Robotics and Automation Engineering (ICRAE), Guangzhou, China, 19–22 November 2021; pp. 344–352.

- Xing, X.; Zhou, Z.; Li, Y. Multi-UAV adaptive cooperative formation trajectory planning based on an improved MATD3 algorithm of deep reinforcement learning. IEEE Trans. Veh. Technol. 2024, 73, 12484–12499.

- Ye, T.; Qin, W.; Zhao, Z. Real-time object detection network in UAV-vision based on CNN and transformer. IEEE Trans. Instrum. Meas. 2023, 72, 1–13.

- Jiang, L.; Yuan, B.; Du, J. MFFSODNet: Multi-scale feature fusion small object detection network for UAV aerial images. IEEE Trans. Instrum. Meas. 2024, 73, 1–14.

- Xu, Z.; Zhang, B.; Li, D. Consensus learning for cooperative multi-agent reinforcement learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7 February 2023; pp. 11726–11734.

- Jiang, W.; Bao, C.; Xu, G. Research on autonomous obstacle avoidance and target tracking of UAV based on improved dueling DQN algorithm. In Proceedings of the 2021 China Automation Congress (CAC), Beijing, China, 22–24 October 2021; pp. 5110–5115.

- Monahan, G.E. State of the art—A survey of partially observable Markov decision processes: Theory, models, and algorithms. Manag. Sci. 1982, 28, 1–16.

- Xiang, Y.; Li, S.; Li, R.; Zhao, Z.; Zhang, H. Decentralized Consensus Inference-based Hierarchical Reinforcement Learning for Multi-Constrained UAV Pursuit-Evasion Game. arXiv 2025, arXiv:2506.18126.

- Foerster, J.; Assael, I.A.; De Freitas, N. Learning to communicate with deep multi-agent reinforcement learning. In Proceedings of the 30th Conference on Neural Information Processing Systems (NeurIPS), Barcelona, Spain, 5–10 December 2016; pp. 2145–2153.

- Sukhbaatar, S.; Fergus, R. Learning multiagent communication with backpropagation. In Proceedings of the 30th Conference on Neural Information Processing Systems (NeurIPS), Barcelona, Spain, 5–10 December 2016; pp. 2252–2260.

- Guan, C.; Chen, F.; Yuan, L. Efficient multi-agent communication via self-supervised information aggregation. In Proceedings of the 36th Conference on Neural Information Processing Systems (NeurIPS), New Orleans, LA, USA, 28 November–9 December 2022; pp. 1020–1033.

- Shahar, F.S.; Sultan, M.T.H.; Nowakowski, M.; Łukaszewicz, A. UGV-UAV Integration Advancements for Coordinated Missions: A Review. J. Intell. Robot. Syst. 2025, 111, 69.

- Chang, X.; Yang, Y.; Zhang, Z.; Jiao, J.; Cheng, H.; Fu, W. Consensus-Based Formation Control for Heterogeneous Multi-Agent Systems in Complex Environments. Drones 2025, 9, 175.

- Schulman, J.; Wolski, F.; Dhariwal, P. Proximal policy optimization algorithms. arXiv 2017, arXiv:1707.06347.

- Lowe, R.; Wu, Y.I.; Tamar, A. Multi-agent actor-critic for mixed cooperative-competitive environments. In Proceedings of the 31st Conference on Neural Information Processing Systems (NeurIPS), Long Beach, CA, USA, 4–9 December 2017; pp. 6380–6391.

- Tong, S.; Yu, X.; Li, R. Alternate Learning-Based SNR-Adaptive Sparse Semantic Visual Transmission. IEEE Trans. Wirel. Commun. 2024, 24, 1737–1752.

- De Boer, P.T.; Kroese, D.P.; Mannor, S. A tutorial on the cross-entropy method. Ann. Oper. Res. 2005, 134, 19–67.

- Jani, M.; Fayyad, J.; Al-Younes, Y. Model compression methods for YOLOv5: A review. arXiv 2023, arXiv:2307.11904.

- Khanam, R.; Hussain, M. What is YOLOv5: A deep look into the internal features of the popular object detector. arXiv 2024, arXiv:2407.20892.

- Yu, C.; Velu, A.; Vinitsky, E. The surprising effectiveness of PPO in cooperative multi-agent games. In Proceedings of the 36th Conference on Neural Information Processing Systems (NeurIPS), New Orleans, LA, USA, 28 November–9 December 2022; pp. 24611–24624.

- Pan, C.; Yan, Y.; Zhang, Z. Flexible formation control using Hausdorff distance: A multi-agent reinforcement learning approach. In Proceedings of the 30th European Signal Processing Conference (EUSIPCO), Belgrade, Serbia, 29 August–2 September 2022; pp. 972–976.

- Richardson, T.; Kudekar, S. Design of low-density parity check codes for 5G new radio. IEEE Commun. Mag. 2018, 56, 28–34.

| Parameters Description | Value |

|---|---|

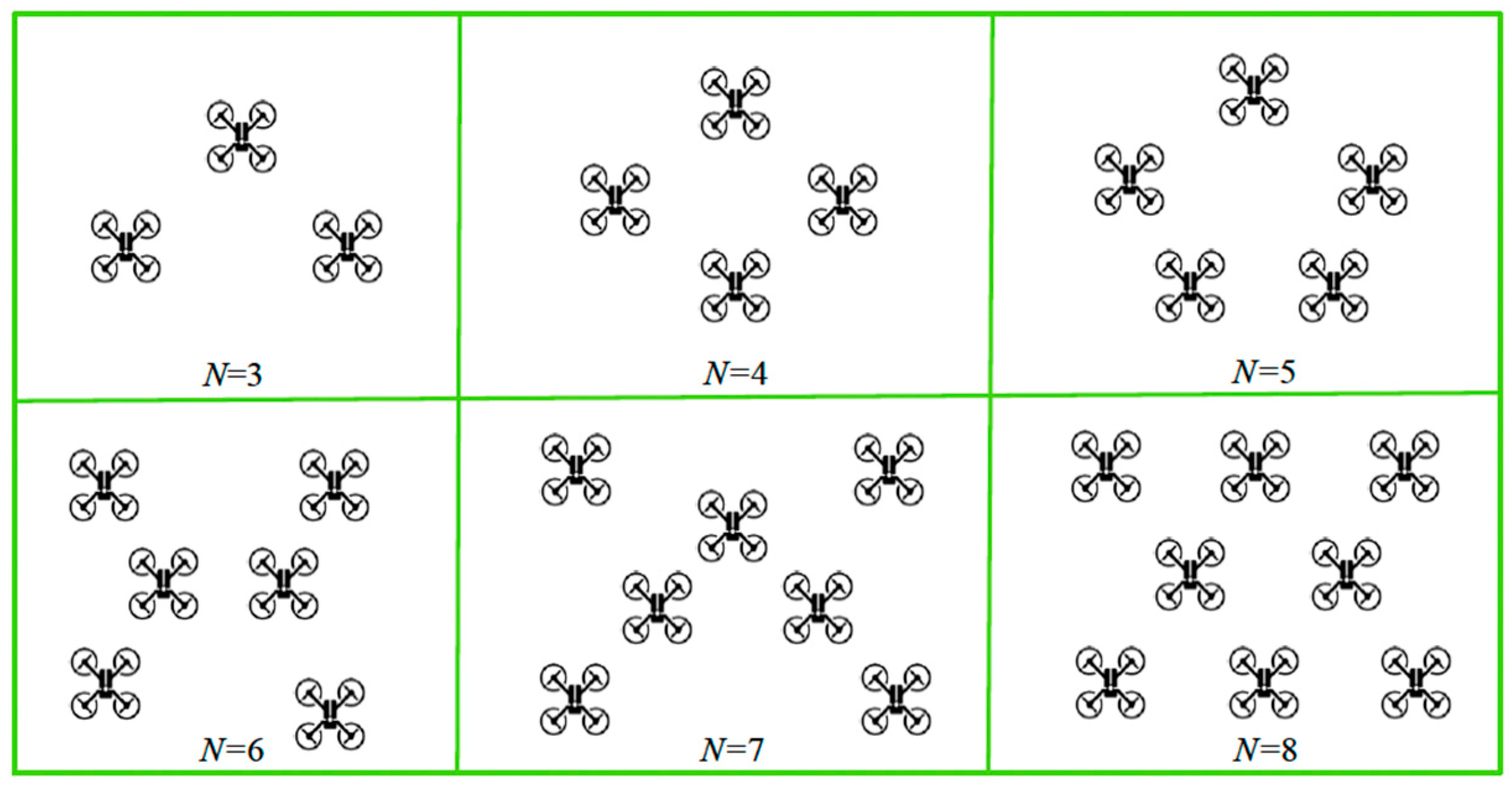

| The number of UAVs | |

| Set of UAV swarm sizes | |

| Range of UAV velocities (m/s) | [−1,1] |

| Reward weights | |

| Neighbor observation distance (m) | |

| Minimum safety distance (m) | |

| Warning distance (m) |

| Parameters | Description | Value |
|---|---|---|
| | Batch size | |
| | Learning rates for the semantic decoder, semantic encoder, and value network | |
| | Number of local updates of the semantic decoders | |
| | Training epochs | |
| | Fixed number of bits for image transmission | |

| Modules | Layer | Dimensions | Activation |
|---|---|---|---|
| Input | Image | | \ |
| Encoder | Convolutional layer | | ReLU |
| | Linear layer | | \ |
| Sampling strategy | Gaussian sampling | | |
| | \ | | |
| Channel | AWGN/Rayleigh | | |
| | \ | | |
| Decoder | Linear layer | | \ |
| | Convolutional layer | | ReLU, Sigmoid |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Xu, X.; Zhang, B.; Li, R. A Leader-Assisted Decentralized Adaptive Formation Method for UAV Swarms Integrating a Pre-Trained Semantic Broadcast Communication Model. Drones 2025, 9, 681. https://doi.org/10.3390/drones9100681

Xu X, Zhang B, Li R. A Leader-Assisted Decentralized Adaptive Formation Method for UAV Swarms Integrating a Pre-Trained Semantic Broadcast Communication Model. Drones. 2025; 9(10):681. https://doi.org/10.3390/drones9100681

Chicago/Turabian Style: Xu, Xing, Bo Zhang, and Rongpeng Li. 2025. "A Leader-Assisted Decentralized Adaptive Formation Method for UAV Swarms Integrating a Pre-Trained Semantic Broadcast Communication Model" Drones 9, no. 10: 681. https://doi.org/10.3390/drones9100681

APA Style: Xu, X., Zhang, B., & Li, R. (2025). A Leader-Assisted Decentralized Adaptive Formation Method for UAV Swarms Integrating a Pre-Trained Semantic Broadcast Communication Model. Drones, 9(10), 681. https://doi.org/10.3390/drones9100681