A GPS-Free Bridge Inspection Method Tailored to Bridge Terrain with High Positioning Stability

Abstract

Highlights

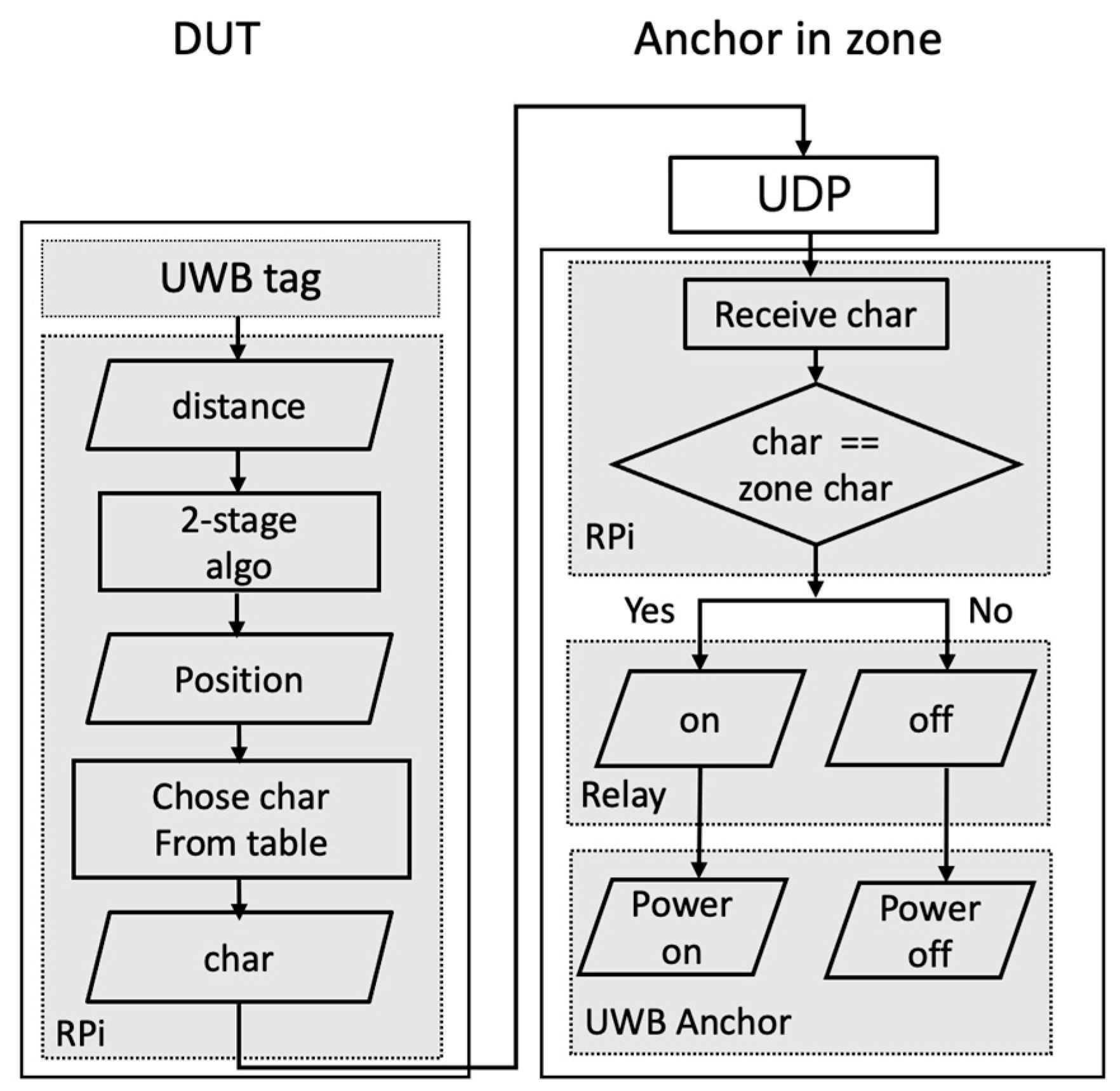

- The system uses a handover mechanism that prevents electromagnetic interference between anchors and ensures accurate positioning by quickly switching anchors on and off in distinct zones. The approach suits bridges hundreds of meters long that use dozens of UWB anchors while assigning no more than six anchor IDs in total.

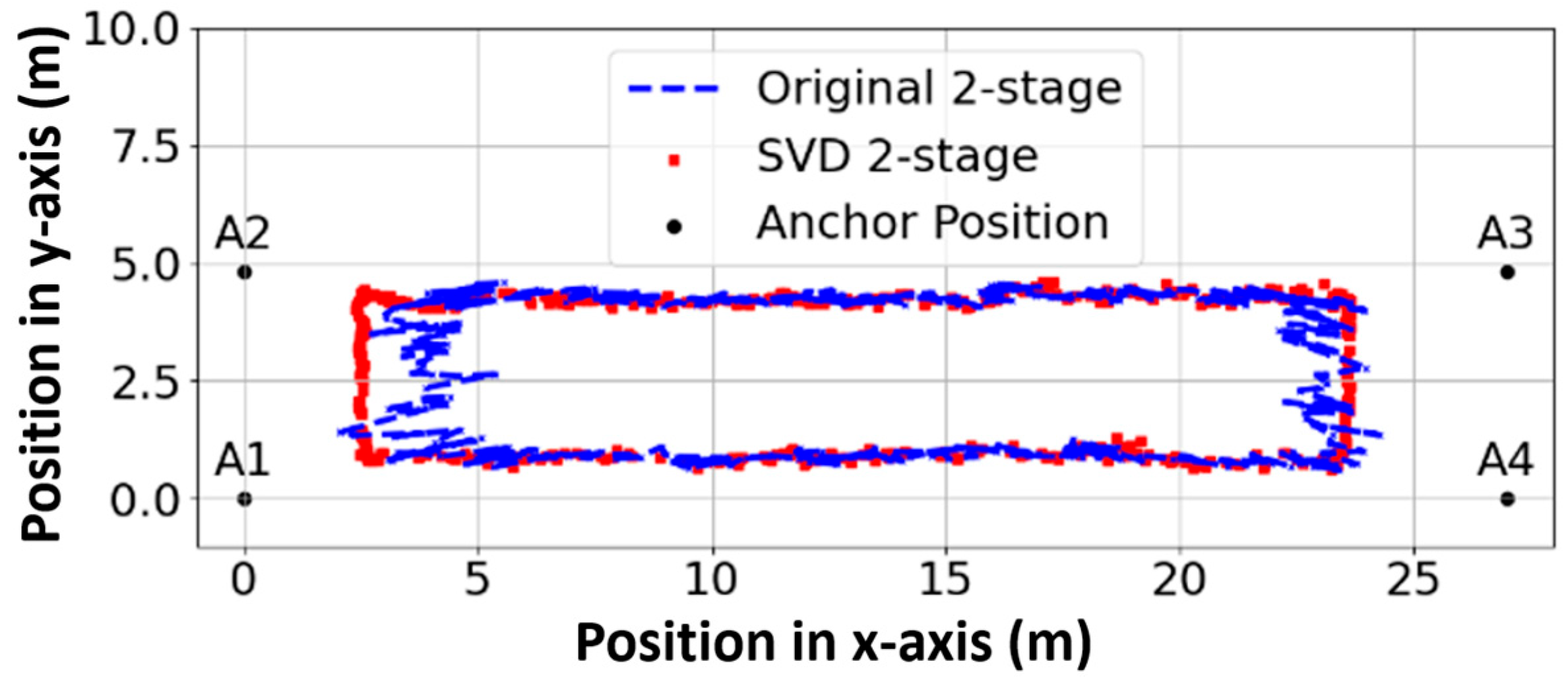

- The positioning algorithm uses an enhanced two-stage method that adapts to the terrain under the bridge. It reduces the elevation error by a factor of about ten compared with the original two-stage method and by about half compared with the Taylor series method, improving the UAV's positioning accuracy to 0.2–0.5 m.

- By analogy with bipartite graphs and vertex coloring, the number of anchors and anchor IDs can be optimized, extending the length of bridge that this method can inspect to several kilometers.

- The enhanced two-stage method gives robust positioning results across the various terrains under the bridge. With further extended analysis, the anchor configuration can be optimized and the positioning accuracy kept well controlled.
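
The vertex coloring idea in the highlights can be illustrated with a minimal sketch. It assumes anchors lie along a single bridge axis and that two anchors interfere whenever they are within a fixed range of each other; the function name `assign_anchor_ids`, the 10 m spacing, and the 25 m interference range are hypothetical and not taken from the paper. The sketch uses plain greedy coloring and does not reproduce the authors' bipartite-graph analysis.

```python
def assign_anchor_ids(positions, interference_range):
    """Greedy vertex coloring along the bridge axis.

    Each anchor is a vertex; an edge joins two anchors that are within
    interference_range of each other. Each anchor receives the smallest
    ID not already used by an earlier anchor it conflicts with.
    """
    ids = []
    for i, x in enumerate(positions):
        # IDs already taken by earlier anchors that would interfere with this one
        taken = {
            ids[j]
            for j in range(i)
            if abs(x - positions[j]) <= interference_range
        }
        candidate = 0
        while candidate in taken:
            candidate += 1
        ids.append(candidate)
    return ids


# Hypothetical layout: 18 anchors spaced 10 m apart, 25 m interference range.
anchor_positions = [10.0 * k for k in range(18)]
anchor_ids = assign_anchor_ids(anchor_positions, interference_range=25.0)
ids_needed = len(set(anchor_ids))
```

In this toy layout each anchor conflicts only with its two nearest neighbours on either side, so the IDs repeat periodically and the number of distinct IDs stays fixed as the bridge gets longer. This is the property that lets a small ID pool serve many anchors.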

1. Introduction

- Presents an inspection system tailored to various bridge terrains and conducts practical experiments on real bridge structures.

- Applies a handover mechanism to prevent electromagnetic interference among anchors, and ensures accurate positioning by quickly controlling anchor switches in distinct areas.

- Utilizes an enhanced two-stage method that adapts to the terrain under the bridge, reducing the height error to about one tenth of that of the original two-stage method and about half that of the Taylor series method.
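
For context on the Taylor series baseline mentioned above, the following is a minimal sketch of classic Taylor-series (Gauss-Newton) range positioning. It is not the authors' enhanced two-stage method. The function names `solve3` and `taylor_series_fix`, the anchor coordinates, and the starting guess are all hypothetical, and the ranges are assumed to be noise-free.

```python
import math


def solve3(matrix, rhs):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    a = [row[:] + [b] for row, b in zip(matrix, rhs)]
    n = 3
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("anchor geometry is degenerate (singular system)")
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n + 1):
                a[r][c] -= factor * a[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        acc = a[r][n] - sum(a[r][c] * x[c] for c in range(r + 1, n))
        x[r] = acc / a[r][r]
    return x


def taylor_series_fix(anchors, ranges, guess, iterations=20, tol=1e-9):
    """Iteratively linearize the range equations around the current estimate."""
    p = list(guess)
    for _ in range(iterations):
        normal = [[0.0] * 3 for _ in range(3)]  # J^T J
        grad = [0.0] * 3                        # J^T (measured - predicted)
        for anchor, measured in zip(anchors, ranges):
            diff = [p[k] - anchor[k] for k in range(3)]
            dist = math.sqrt(sum(d * d for d in diff))
            unit = [d / dist for d in diff]     # Jacobian row of the range
            resid = measured - dist
            for i in range(3):
                grad[i] += unit[i] * resid
                for j in range(3):
                    normal[i][j] += unit[i] * unit[j]
        step = solve3(normal, grad)
        p = [p[k] + step[k] for k in range(3)]
        if max(abs(s) for s in step) < tol:
            break
    return p


# Hypothetical setup: four ground anchors plus one raised anchor (metres).
anchors = [(0, 0, 0), (30, 0, 0), (0, 10, 0), (30, 10, 0), (15, 5, 8)]
true_position = (12.0, 4.0, 3.0)
ranges = [
    math.sqrt(sum((t - a) ** 2 for t, a in zip(true_position, anchor)))
    for anchor in anchors
]
estimate = taylor_series_fix(anchors, ranges, guess=(15.0, 5.0, 1.0))
```

Unlike a closed-form two-stage solver, this iteration needs a starting guess and several passes over the measurements. When all anchors sit nearly in one plane the 3x3 system becomes ill-conditioned in the height direction, which is the kind of terrain sensitivity the enhanced method is meant to address.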

2. Framework of Bridge Inspection

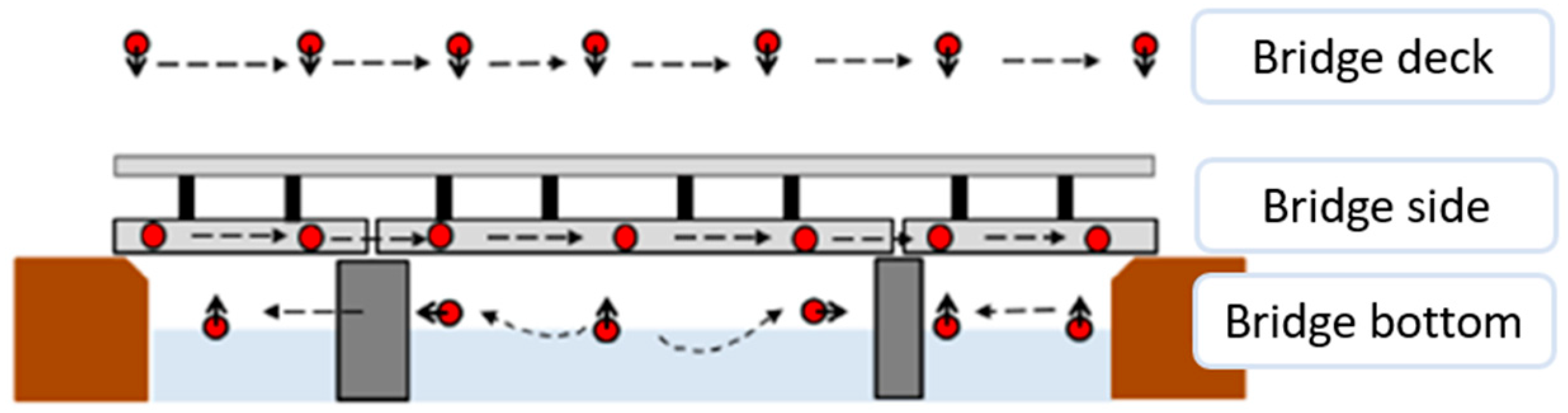

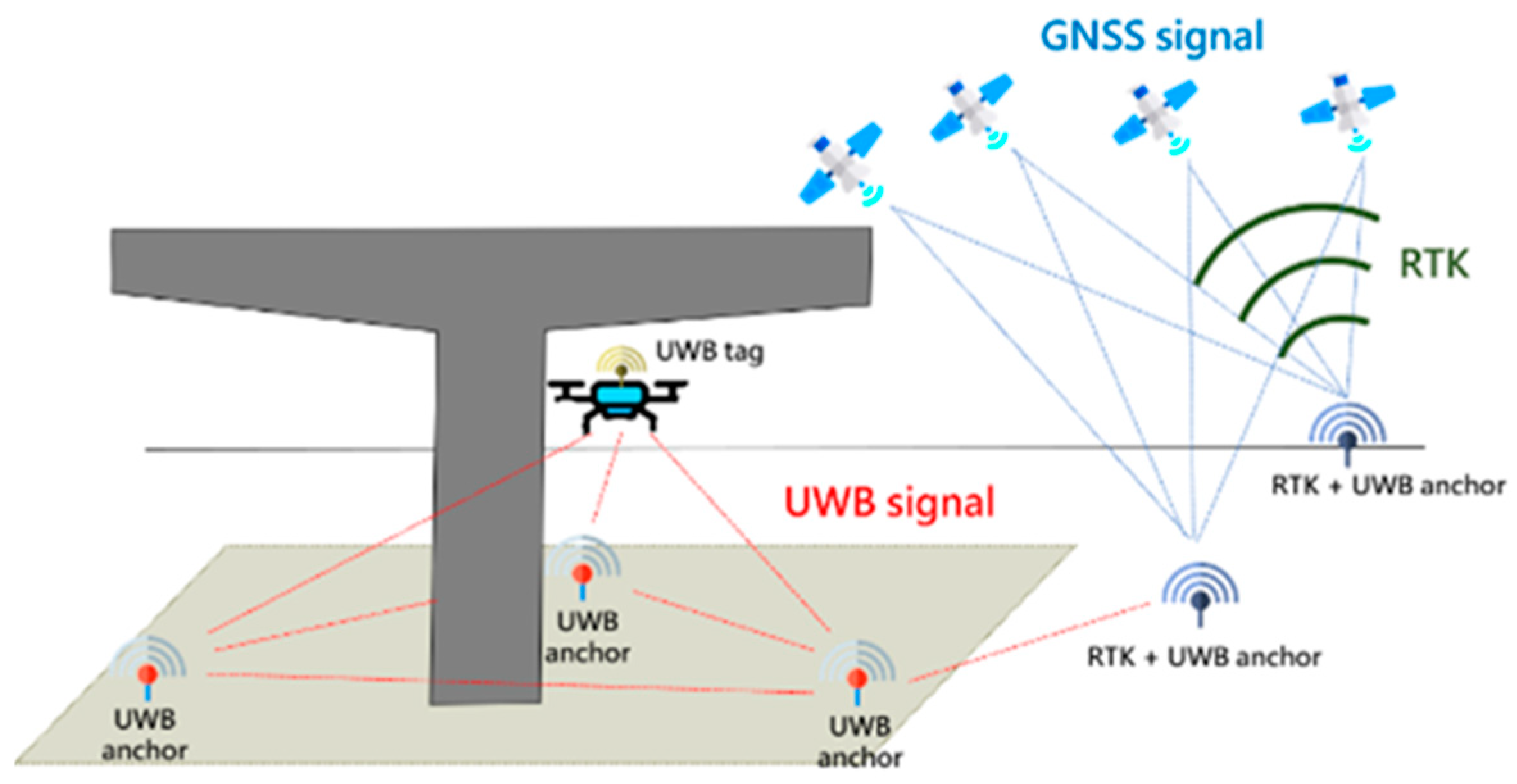

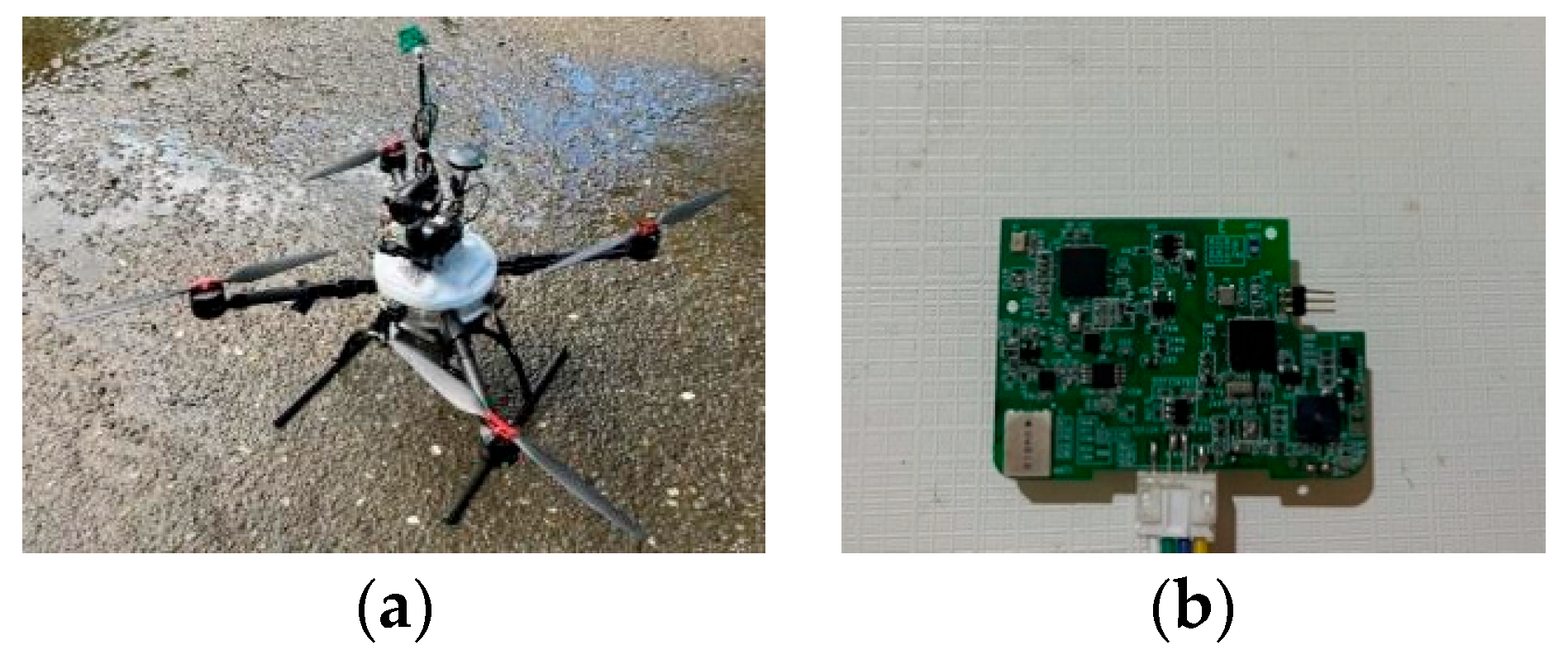

2.1. Data Acquisition Using UAV

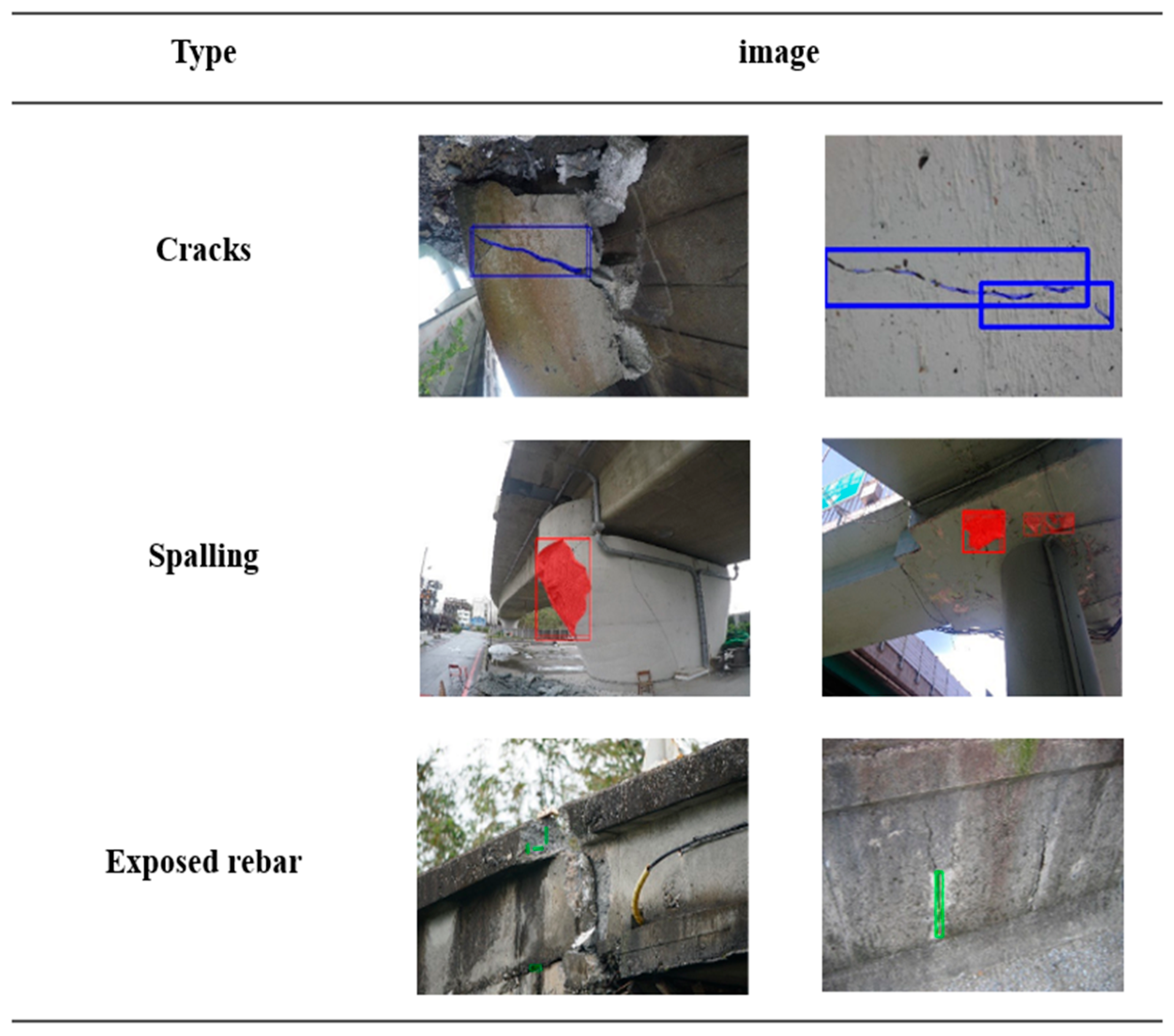

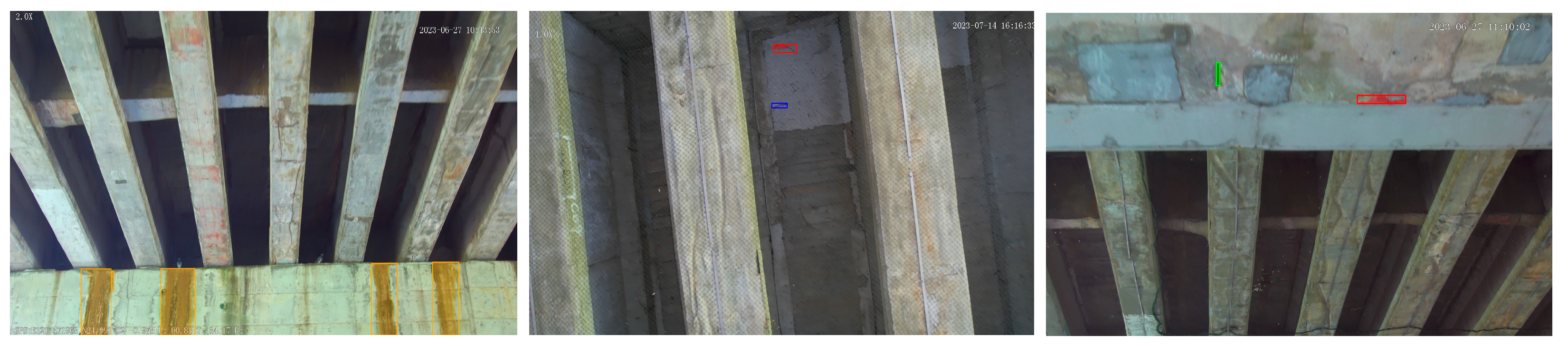

2.2. Automatic Detection of Damaged Structures

2.3. Detection Results to the Inspection Checklists

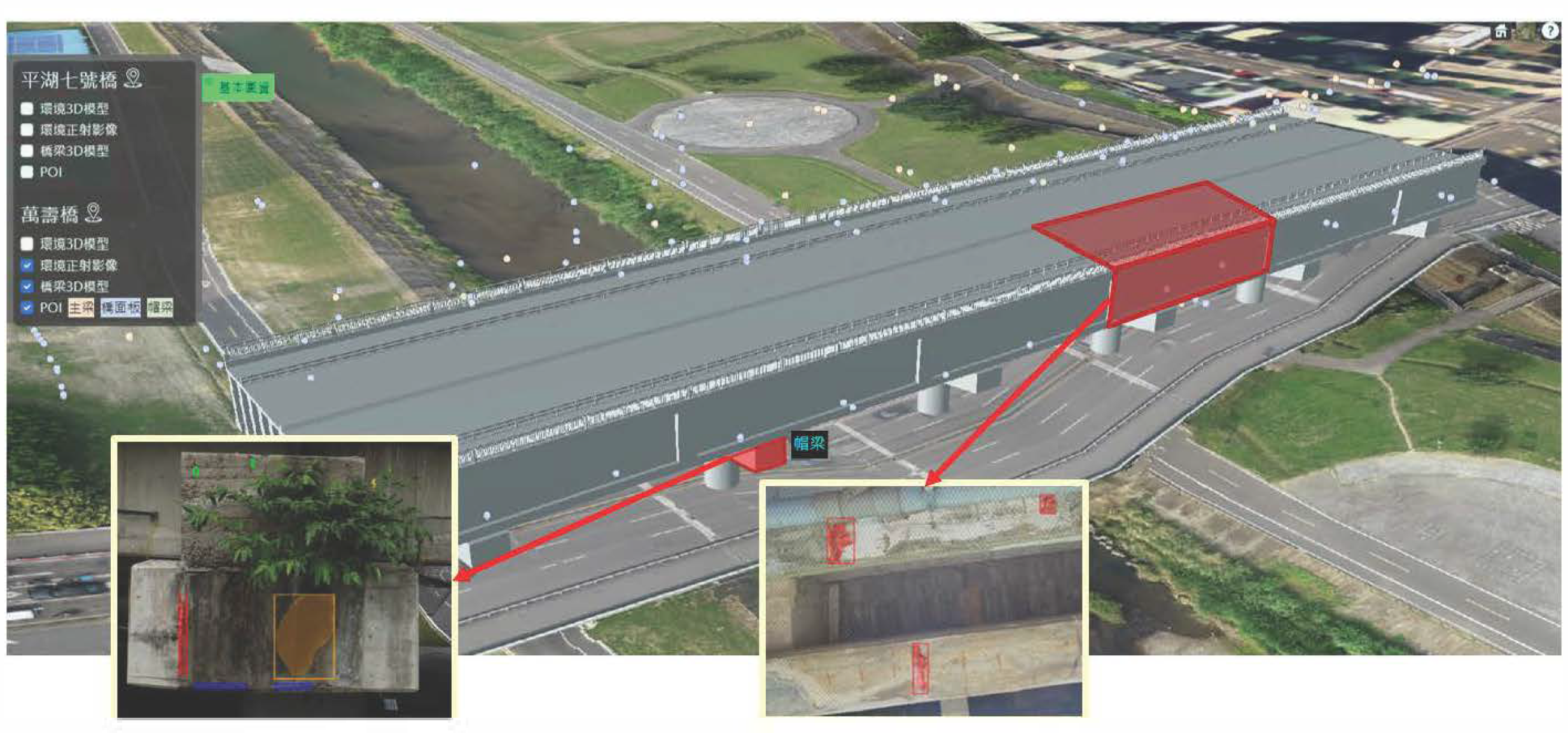

2.4. Build a Detection Management System

3. Positioning by Anchors Handover and SVD-Enhanced Method

3.1. Statement of the Handover Problem

3.1.1. Handover Mechanism

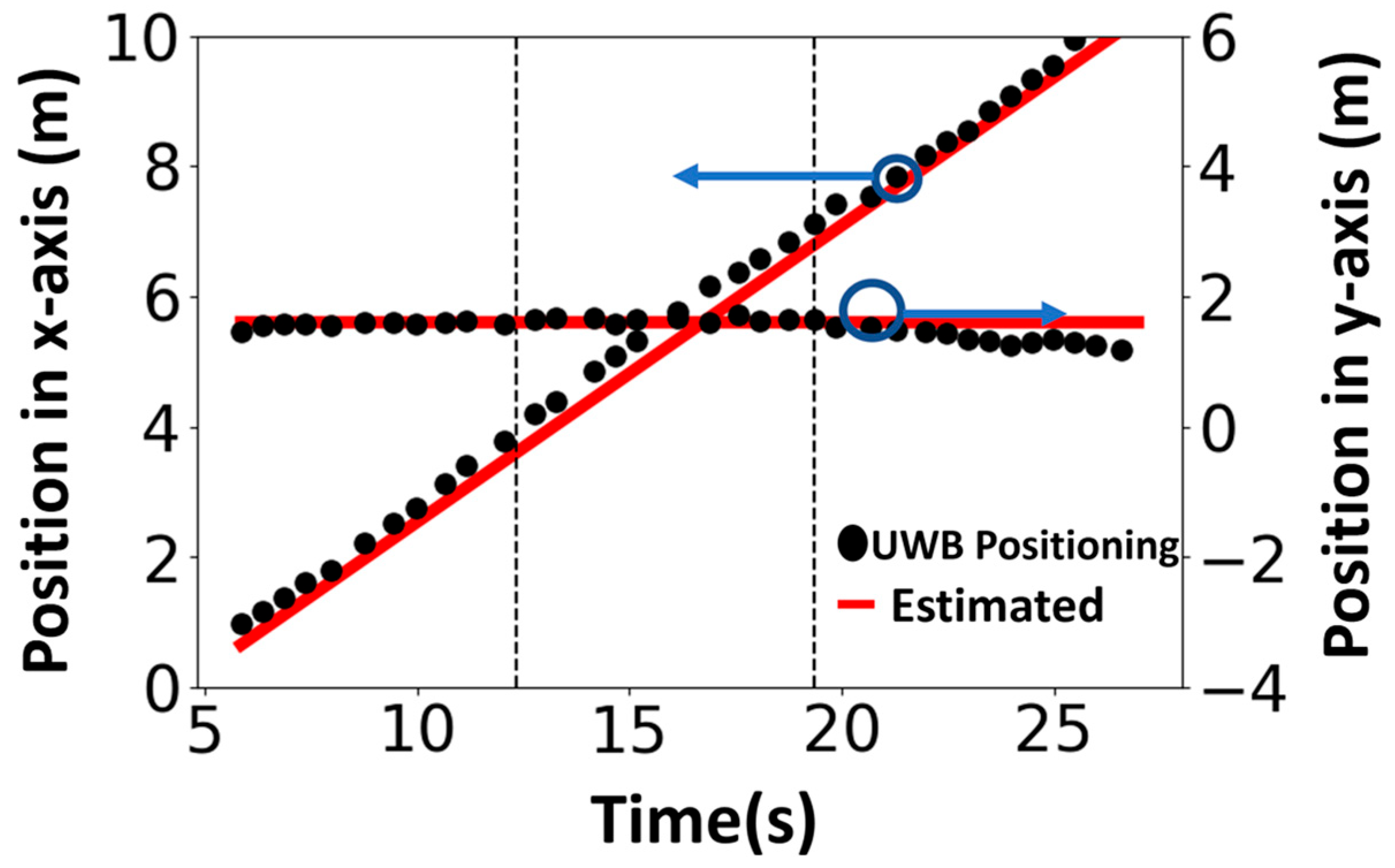

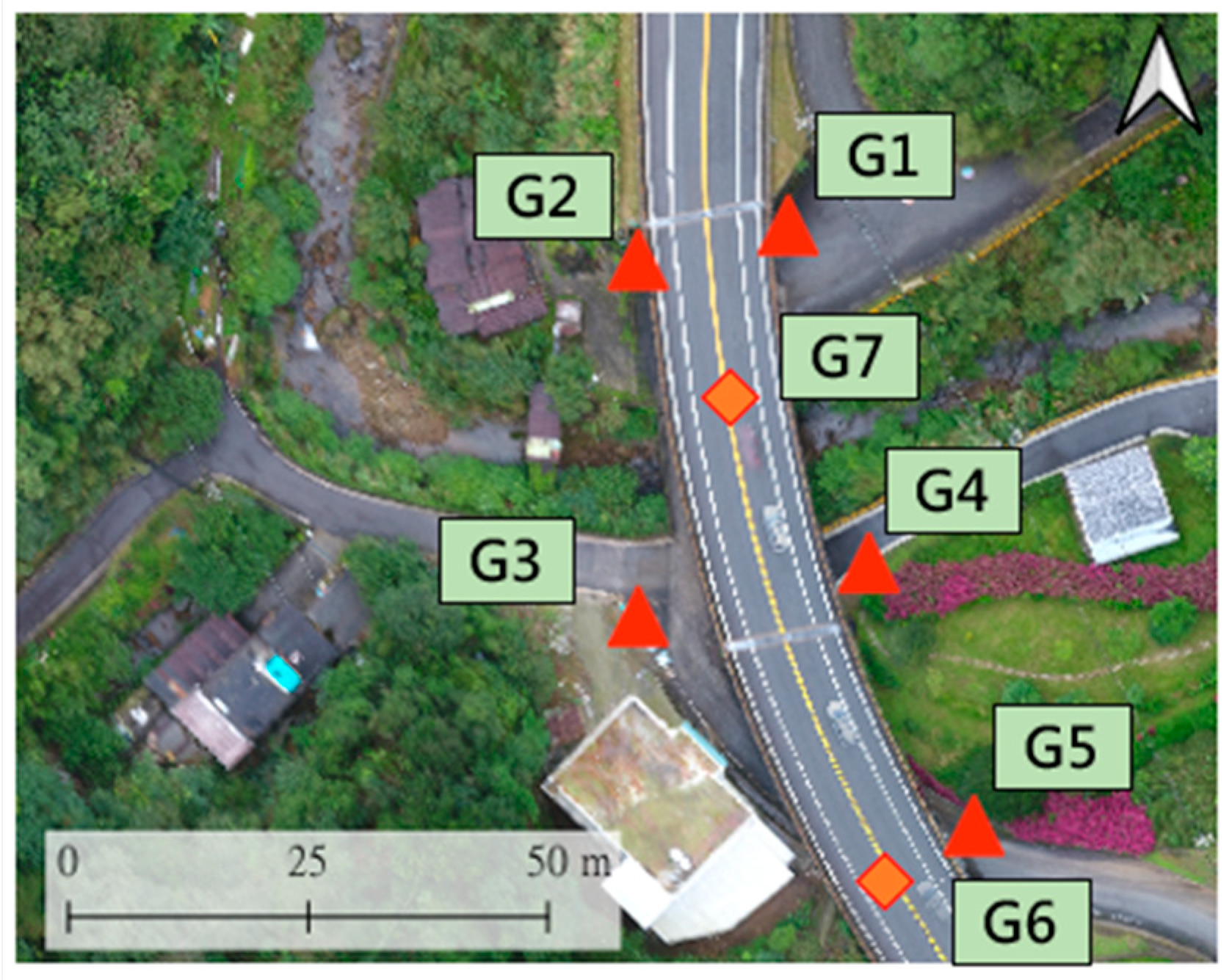

3.1.2. Experiment for Handover

3.2. SVD-Enhanced Positioning in Slant Terrains

3.2.1. Enhanced Two-Stage Algorithm

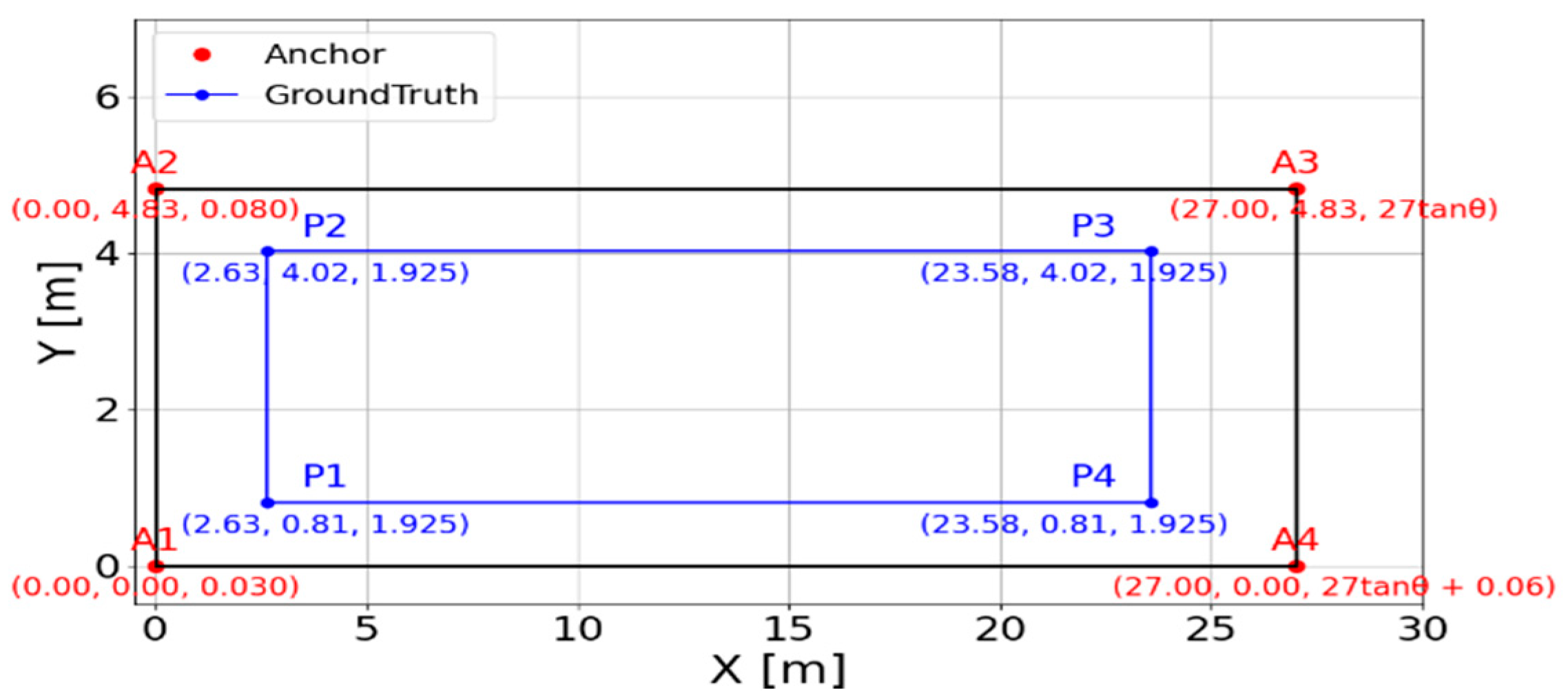

3.2.2. Experiment for Positioning

4. Bridge Inspection Experiment

4.1. Experiment Settings

4.1.1. Hardware

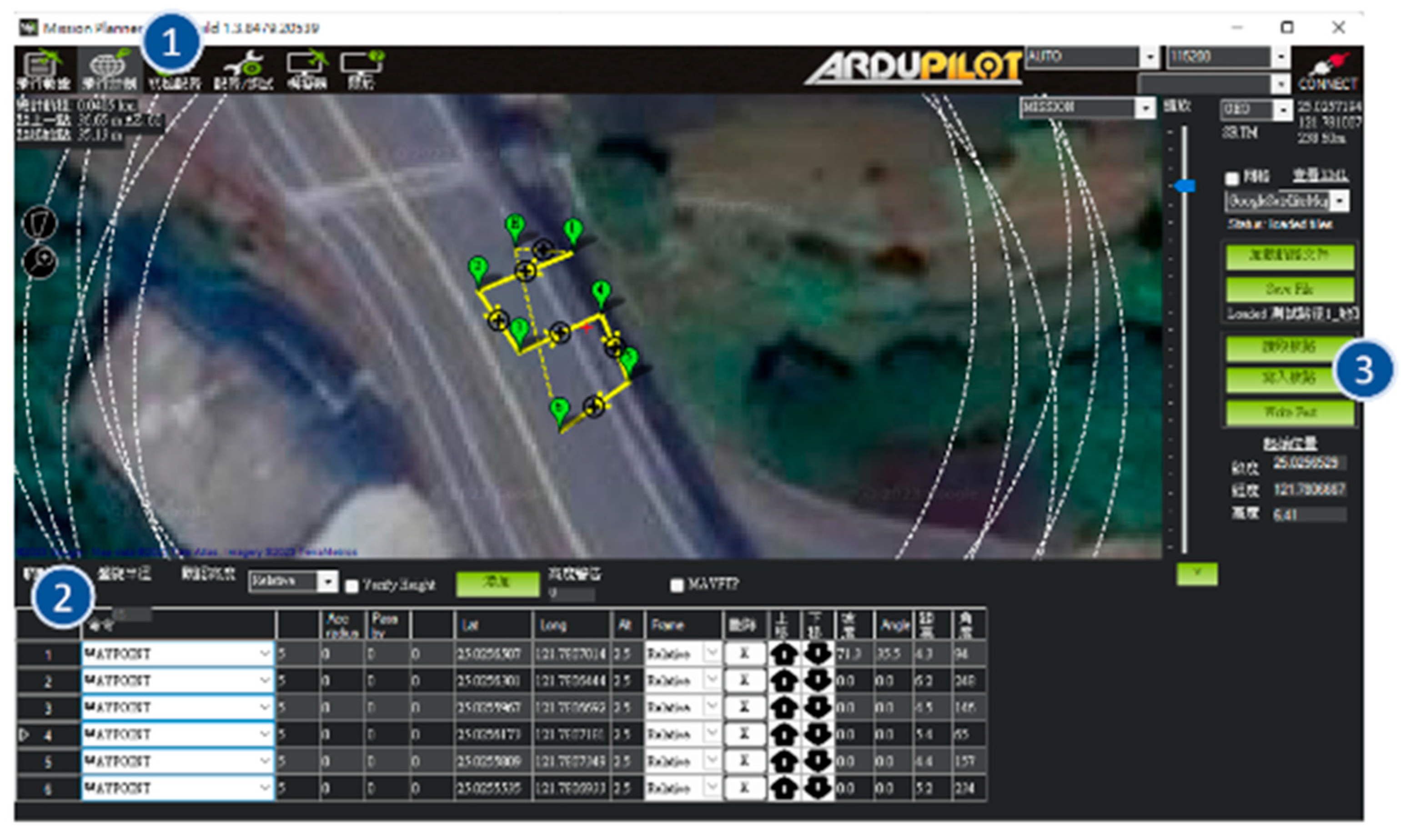

4.1.2. Software

4.2. Selection of Validation Bridges

4.3. Image Acquisition

5. Conclusions and Discussion

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Method | RMSE X-Axis (m) | RMSE Y-Axis (m) | RMSE Z-Axis (m) | Condition Number | Per-Point CPU Time (ms) |
|---|---|---|---|---|---|
| Two-stage (original) | 1.0191 | 0.2003 | 1.358 | 606 | 0.083 |
| Two-stage (SVD) | 0.0485 | 0.1894 | 0.521 | 6.52 | 0.078 |
| Taylor-series algorithm | 0.0820 | 0.1521 | 0.3128 | NA | 0.16 |
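
The table does not define its metrics. The expressions below give the standard definitions, which are assumed to be what the table reports. The symbols are introduced here for illustration: $\hat{x}_i$ is the estimated x-coordinate at test point $i$, $x_i$ the reference coordinate, $N$ the number of test points, and $A$ the coefficient matrix of the linearized positioning equations with largest and smallest singular values $\sigma_{\max}$ and $\sigma_{\min}$. The paper may use a different matrix norm for the condition number.

```latex
\mathrm{RMSE}_x = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(\hat{x}_i - x_i\right)^2},
\qquad
\kappa(A) = \frac{\sigma_{\max}(A)}{\sigma_{\min}(A)}
```

The y- and z-axis RMSE values follow by replacing $x$ with $y$ or $z$. A large $\kappa(A)$ means small ranging errors can be strongly amplified in the position estimate.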

| Location (Case) | Type | Dimensions (m²) | Number of UWB Anchors | Achieved Accuracy | Remarks |
|---|---|---|---|---|---|
| Bridge A | Small bridge | 30 × 10 | 7 (G1–G7) | High (sub-meter level) | 5 anchors placed around the perimeter (GPS-based), plus 2 beneath the bridge to enhance UAV stability during under-bridge flights; G5–G6 only 9 m apart due to site constraints. |
| Bridge B | Long-span bridge | 166 × 29.5 (urban area) | 27 | High (sub-meter level) | Anchors distributed along the entire span; additional temporary anchors placed on riverbanks to maintain network stability across large water gaps (>40 m between piers). |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bai, J.-H.; Hsu, C.-R.; Han, J.-Y.; Wu, R.-B. A GPS-Free Bridge Inspection Method Tailored to Bridge Terrain with High Positioning Stability. Drones 2025, 9, 678. https://doi.org/10.3390/drones9100678
