An Innovative New Approach to Light Pollution Measurement by Drone

Abstract

1. Introduction

- A completely new, custom design for an unmanned platform for light pollution measurements, adapted to carry non-standard sensors (not originally designed for mounting on a UAV) and to record in both the nadir and zenith directions.

- Adaptation of traditional photometric sensors, such as a spectrometer and a sky quality meter (SQM), for use in a new configuration on board the UAV.

- Use of a multispectral camera for nighttime measurements.

- Use of a calibrated visible light camera on a drone for nighttime measurements.

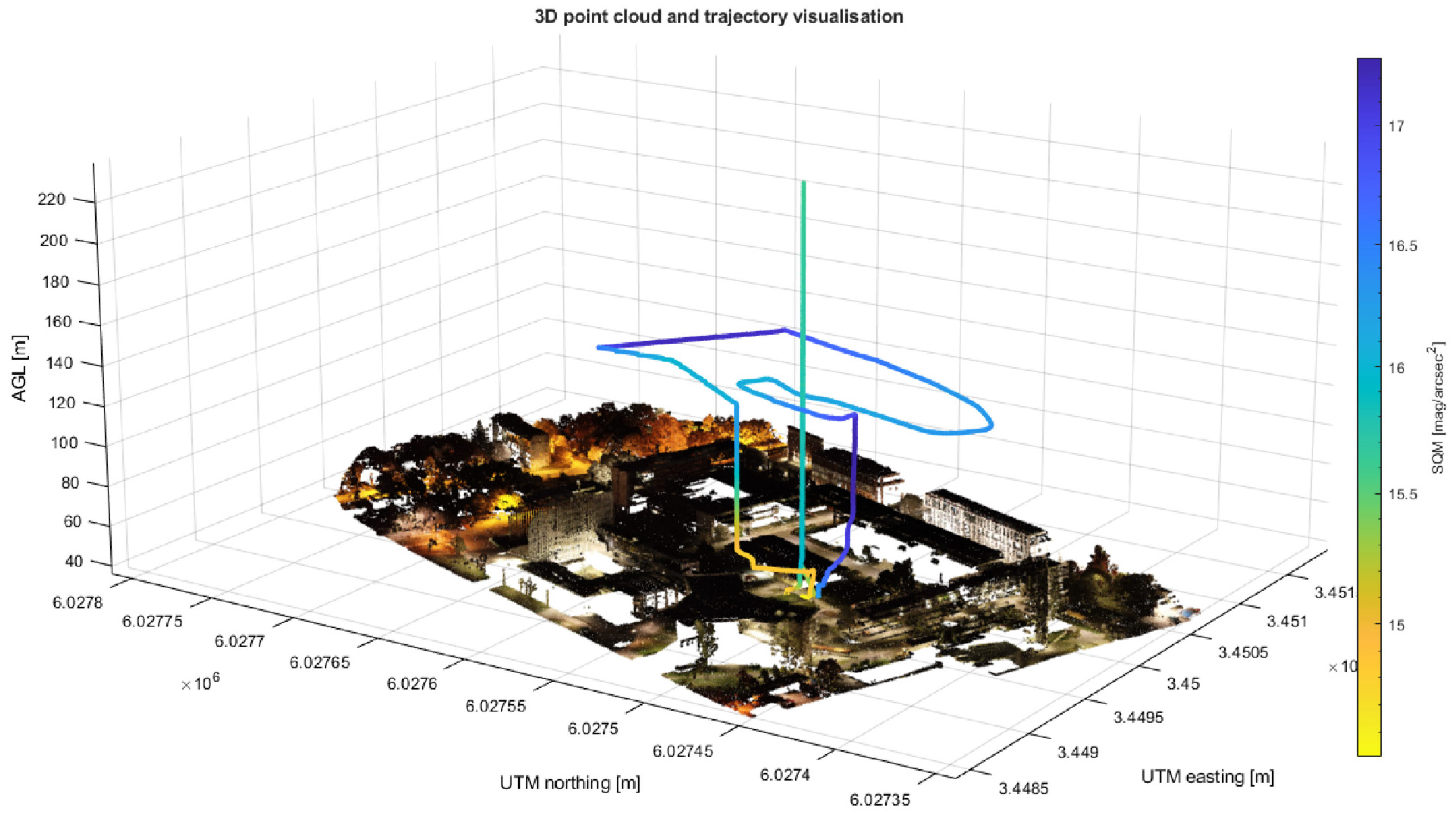

- Generation of products that allow multimodal photometric data to be visualised while preserving the geographic coordinate system.

- Development and validation of a new methodology for nighttime measurements of light pollution from UAVs.

2. Materials and Methods

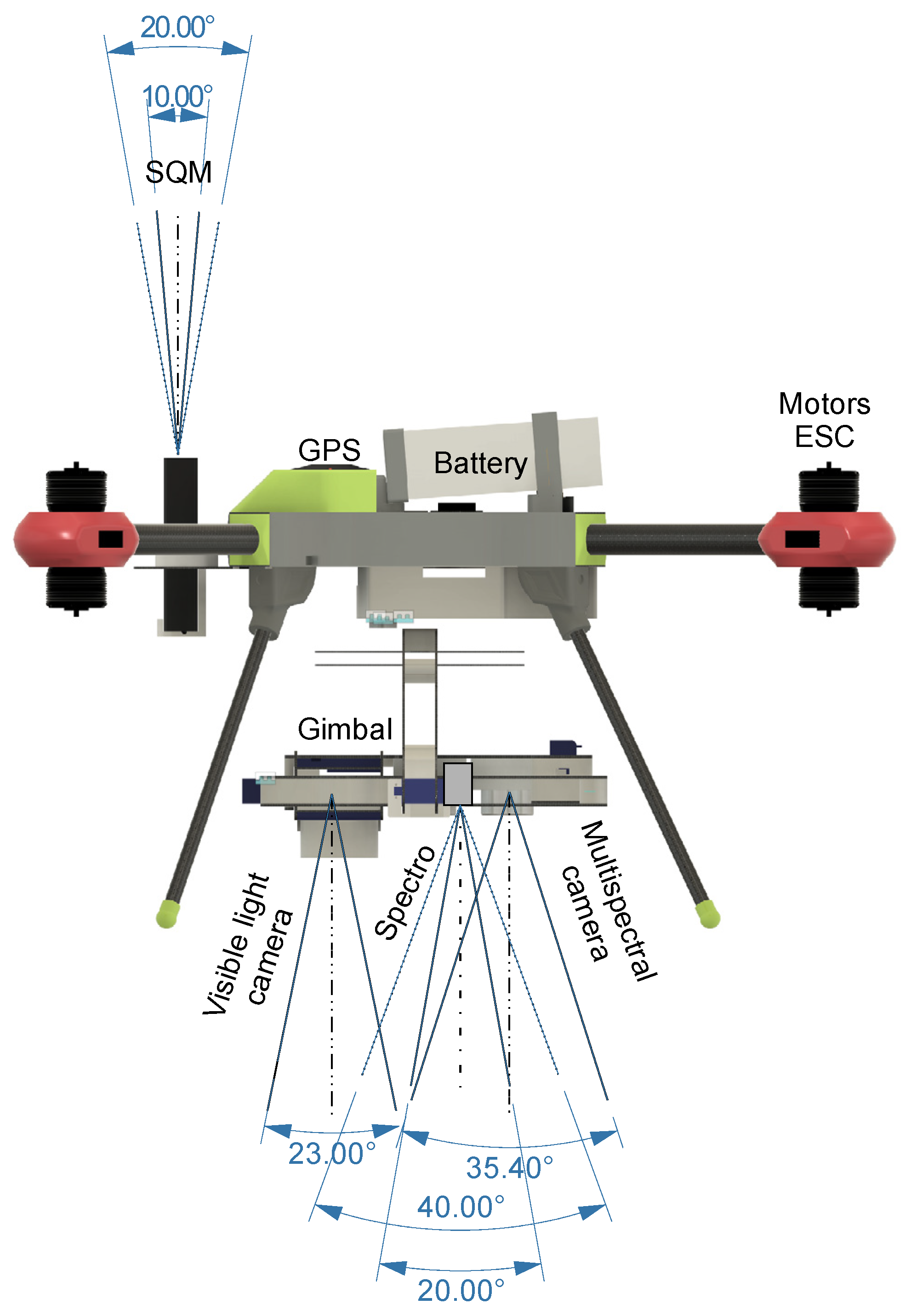

2.1. UAV Construction

2.2. Measurement Equipment

- $L$ is the spectral radiance in W/m²/sr/nm,

- $p$ is the normalised RAW pixel value,

- $p_{BL}$ is the normalised black level value,

- $a_1$, $a_2$, $a_3$ are the radiometric calibration coefficients,

- $V(x, y)$ is the vignette polynomial function for pixel location $(x, y)$,

- $t_e$ is the image exposure time,

- $g$ is the sensor gain setting (available in the image metadata tags),

- $x$, $y$ are the pixel column and row numbers, respectively.
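The variables above match the radiometric calibration model that MicaSense publishes for its RedEdge sensors, $L = V(x,y)\,\frac{a_1}{g}\,\frac{p - p_{BL}}{t_e + a_2 y - a_3 t_e y}$, where $p_{BL}$ is the normalised black level, $a_1$ to $a_3$ are the calibration coefficients and $t_e$ is the exposure time. The following is a minimal NumPy sketch of that conversion, assuming the paper applies the manufacturer's model unchanged. The function name `micasense_radiance` is hypothetical, and in practice the coefficients, black level, exposure time, gain and vignette map would be read from each image's metadata.

```python
import numpy as np


def micasense_radiance(p, p_bl, a1, a2, a3, te, g, V):
    """Convert normalised RAW pixel values to spectral radiance (W/m^2/sr/nm).

    Hypothetical helper following the MicaSense radiometric calibration model:
        L = V(x, y) * (a1 / g) * (p - p_bl) / (te + a2*y - a3*te*y)

    p          : 2-D array of normalised RAW values (rows = y, columns = x)
    p_bl       : normalised black level
    a1, a2, a3 : radiometric calibration coefficients
    te         : exposure time in seconds
    g          : sensor gain
    V          : vignette factor, scalar or array shaped like p
    """
    p = np.asarray(p, dtype=float)
    # y is the pixel row number; as a column vector it broadcasts across columns
    y = np.arange(p.shape[0], dtype=float)[:, np.newaxis]
    # Row-dependent exposure term of the model
    denominator = te + a2 * y - a3 * te * y
    return V * (a1 / g) * (p - p_bl) / denominator
```

With `a2 = a3 = 0` the row term vanishes, and the result reduces to the black-level-corrected pixel value normalised by gain and exposure time and scaled by `a1`.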

- $I_{corrected}(x, y)$ is the corrected intensity of the pixel at $(x, y)$,

- $I(x, y)$ is the original intensity of the pixel at $(x, y)$,

- $k$ is the correction factor by which the raw pixel value is divided to correct for vignetting,

- $r$ is the distance, in pixels, of the pixel $(x, y)$ from the vignette centre,

- $(x, y)$ is the coordinate of the pixel being corrected,

- $(c_x, c_y)$ is the position of the principal point (vignette centre).
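In the MicaSense documentation, the vignette correction described by these variables is a sixth-order radial polynomial, $k = 1 + k_0 r + k_1 r^2 + \dots + k_5 r^6$ with $r = \sqrt{(x - c_x)^2 + (y - c_y)^2}$, followed by $I_{corrected}(x, y) = I(x, y)/k$. In MicaSense's own processing code, the factor $V(x, y)$ of the radiometric model is $1/k$. The sketch below assumes that form. `vignette_correct` and the coefficient names `k0` to `k5` are illustrative, and real values would come from the image metadata.

```python
import numpy as np


def vignette_correct(I, cx, cy, k_coeffs):
    """Divide each pixel by the radial vignette factor k(r).

    Hypothetical helper: k(r) = 1 + k0*r + k1*r**2 + ... + k5*r**6, where r is
    the distance in pixels of pixel (x, y) from the vignette centre (cx, cy).
    k_coeffs lists the coefficients in increasing order: [k0, k1, ..., k5].
    """
    I = np.asarray(I, dtype=float)
    rows, cols = I.shape
    # y holds row indices and x holds column indices for every pixel
    y, x = np.mgrid[0:rows, 0:cols]
    r = np.hypot(x - cx, y - cy)
    # np.polyval expects the highest power first, so reverse and append the constant 1
    poly = list(reversed(k_coeffs)) + [1.0]
    k = np.polyval(poly, r)
    return I / k
```

The pixel at the vignette centre has $r = 0$ and $k = 1$, so it is left unchanged. Pixels further out are brightened to compensate for lens fall-off.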

- denotes the corrected SQM values,

- denotes the measured SQM values,

- $T$ is the temperature measured at the measurement point.
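The functional form of the temperature correction is not reproduced in this section. The sketch below assumes a linear dependence of the SQM reading on temperature. The coefficient `alpha` and reference temperature `t_ref` are hypothetical placeholders that would have to be fitted from calibration data; they are not values from this study. The second function applies the commonly used approximate conversion from sky brightness in mag/arcsec² to luminance, $L \approx 10.8 \times 10^{4} \cdot 10^{-0.4\,m}$ cd/m². This conversion also shows that a lower SQM reading corresponds to a brighter sky.

```python
def sqm_temperature_correct(sqm_measured, temperature_c, alpha, t_ref):
    """Hypothetical linear temperature correction of an SQM reading (mag/arcsec^2).

    ASSUMPTION: corrected = measured - alpha * (T - t_ref). Neither the linear
    form nor alpha or t_ref come from the paper; fit them to calibration data.
    """
    return sqm_measured - alpha * (temperature_c - t_ref)


def sqm_to_luminance(sqm_value):
    """Approximate luminance (cd/m^2) from sky brightness in mag/arcsec^2."""
    return 10.8e4 * 10.0 ** (-0.4 * sqm_value)
```

For example, a reading of 22.0 mag/arcsec² corresponds to roughly 1.7 × 10⁻⁴ cd/m², and each decrease of 1 mag/arcsec² multiplies the luminance by about 2.5.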

2.3. Product Specification

2.4. Experiment

3. Results

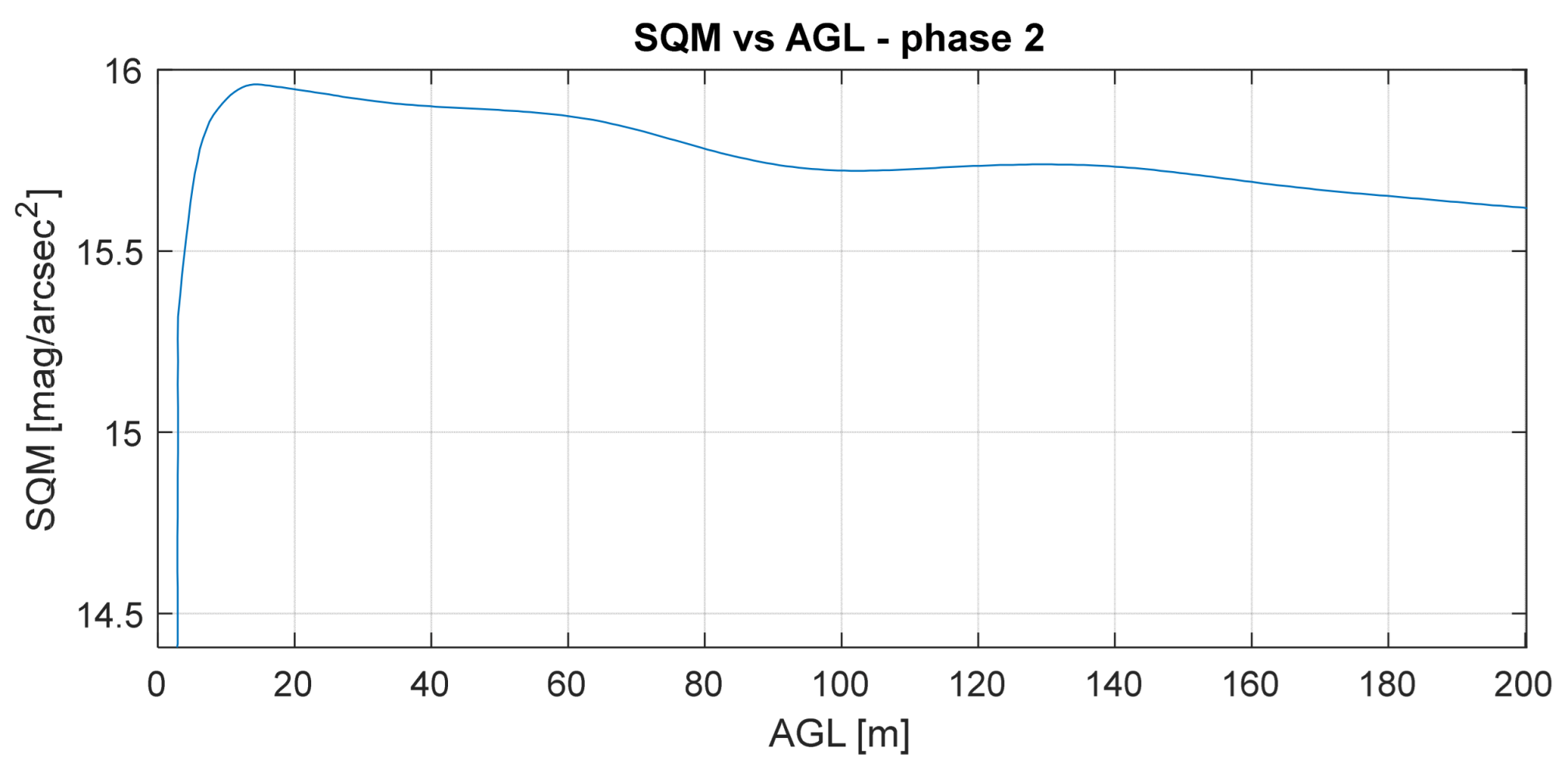

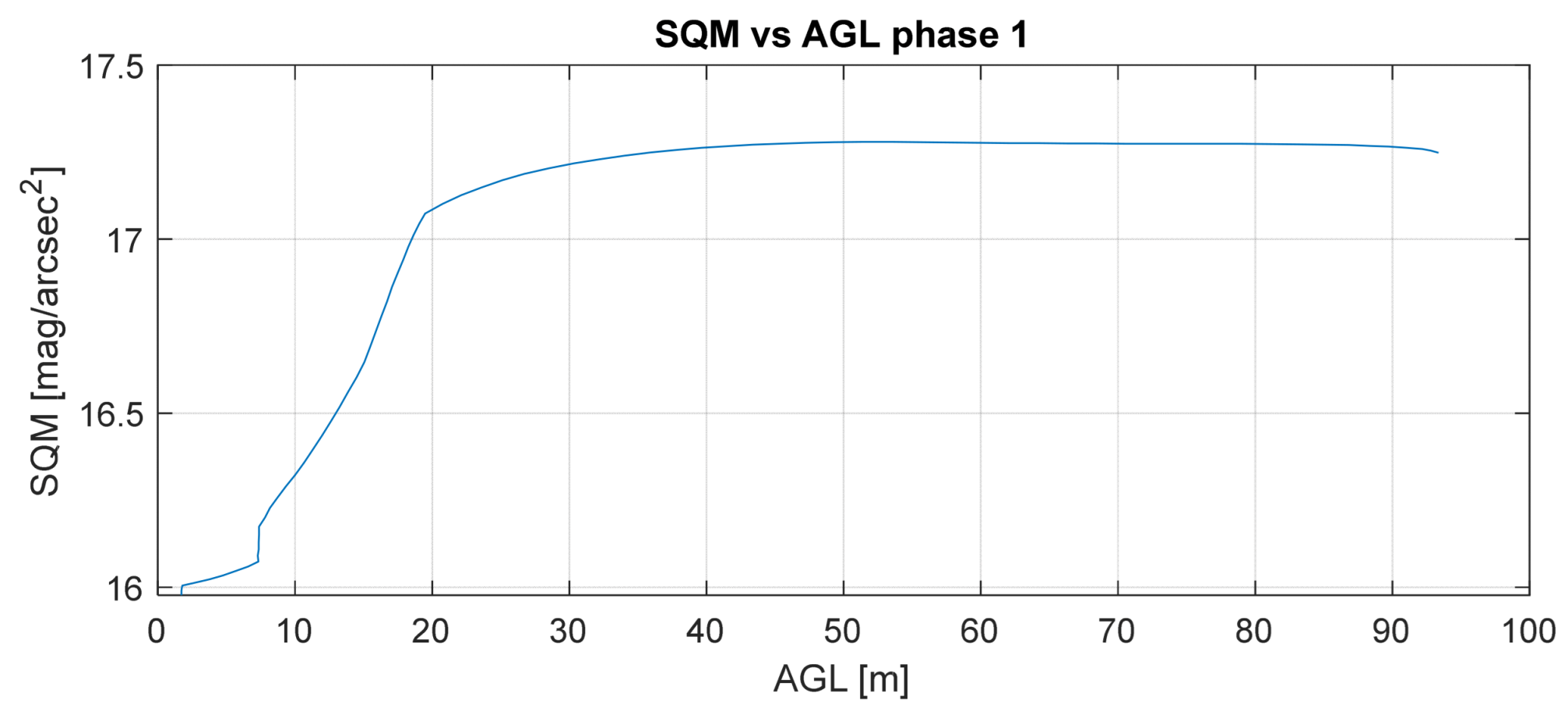

3.1. SQM

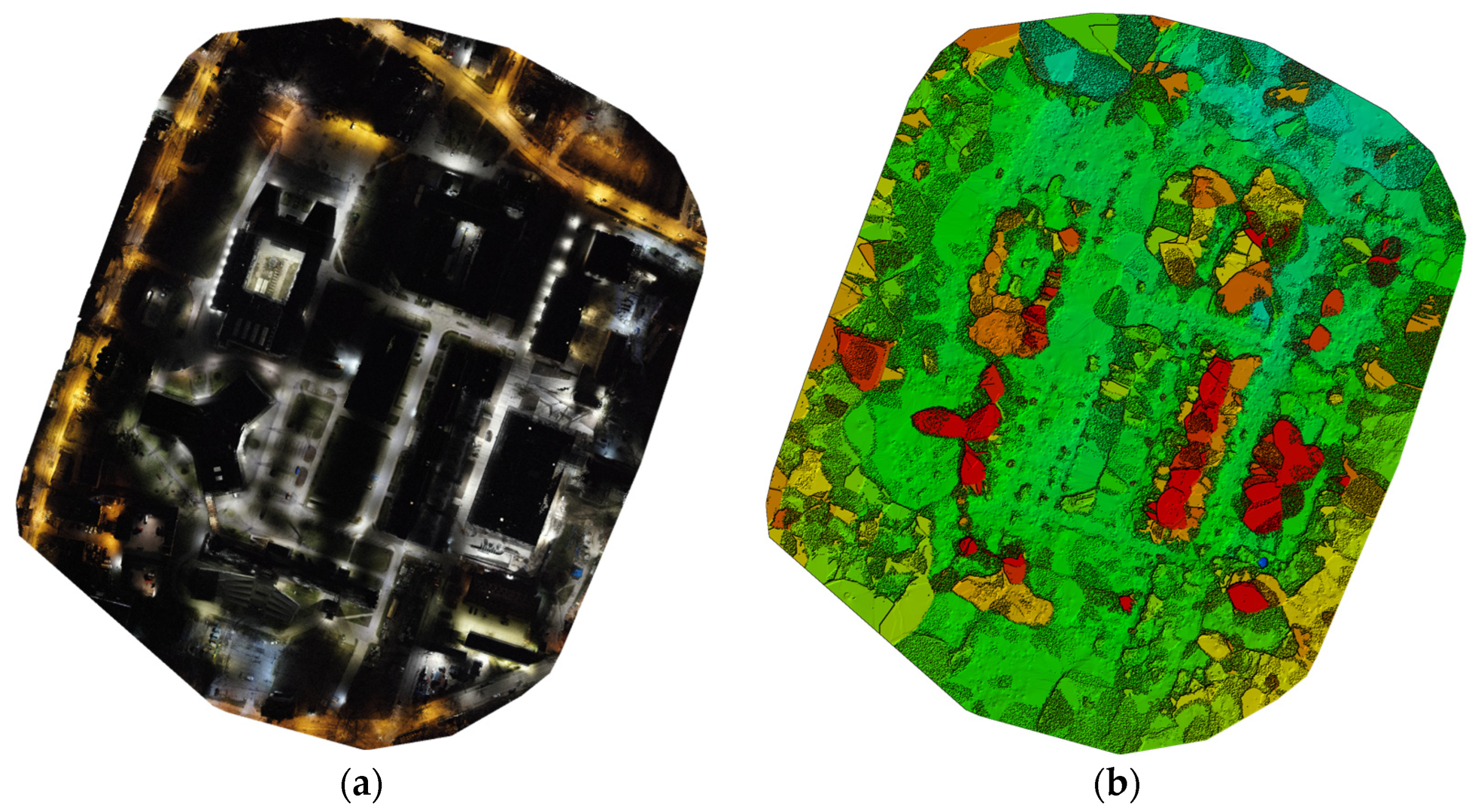

3.2. Nighttime Orthophoto Map

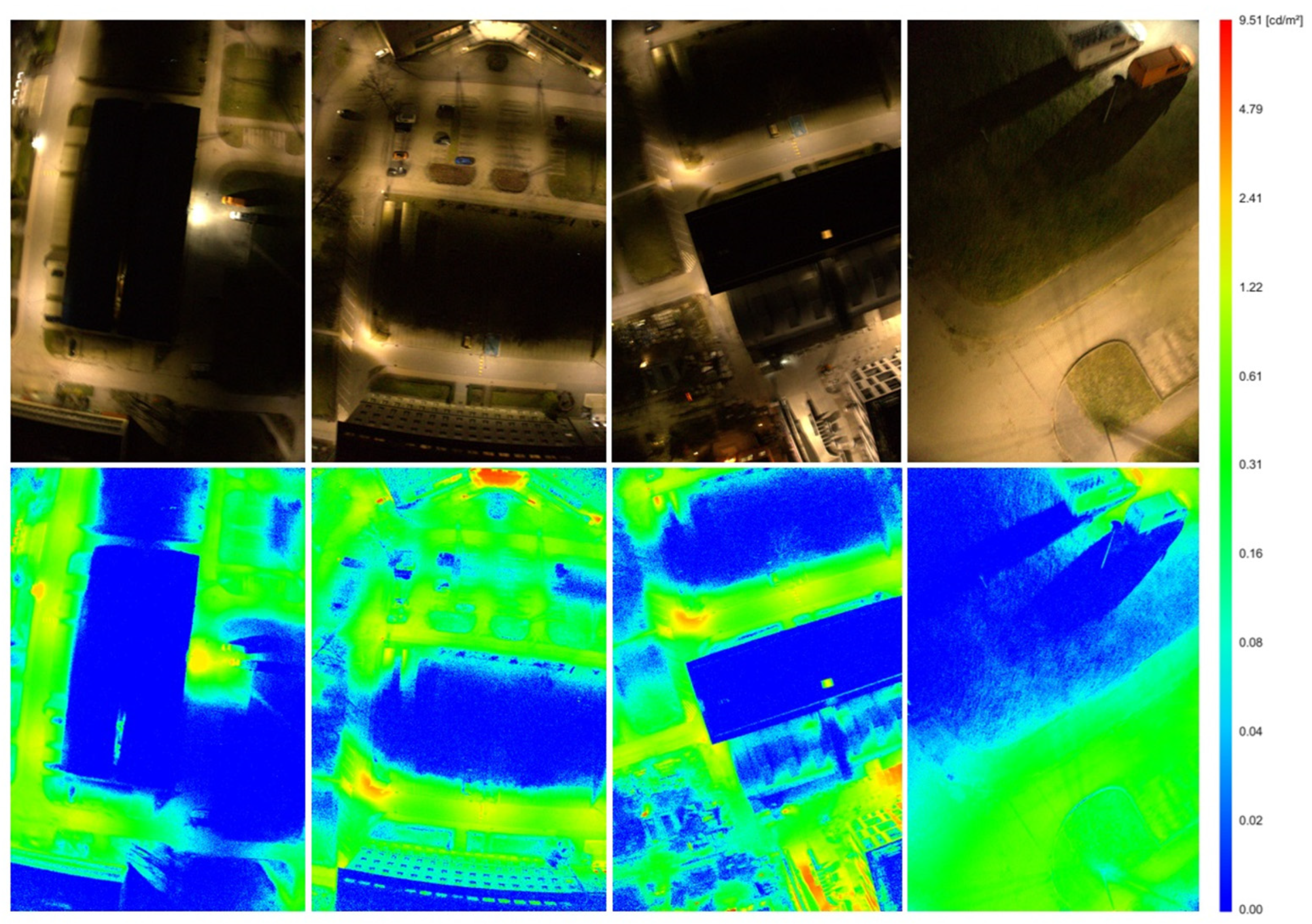

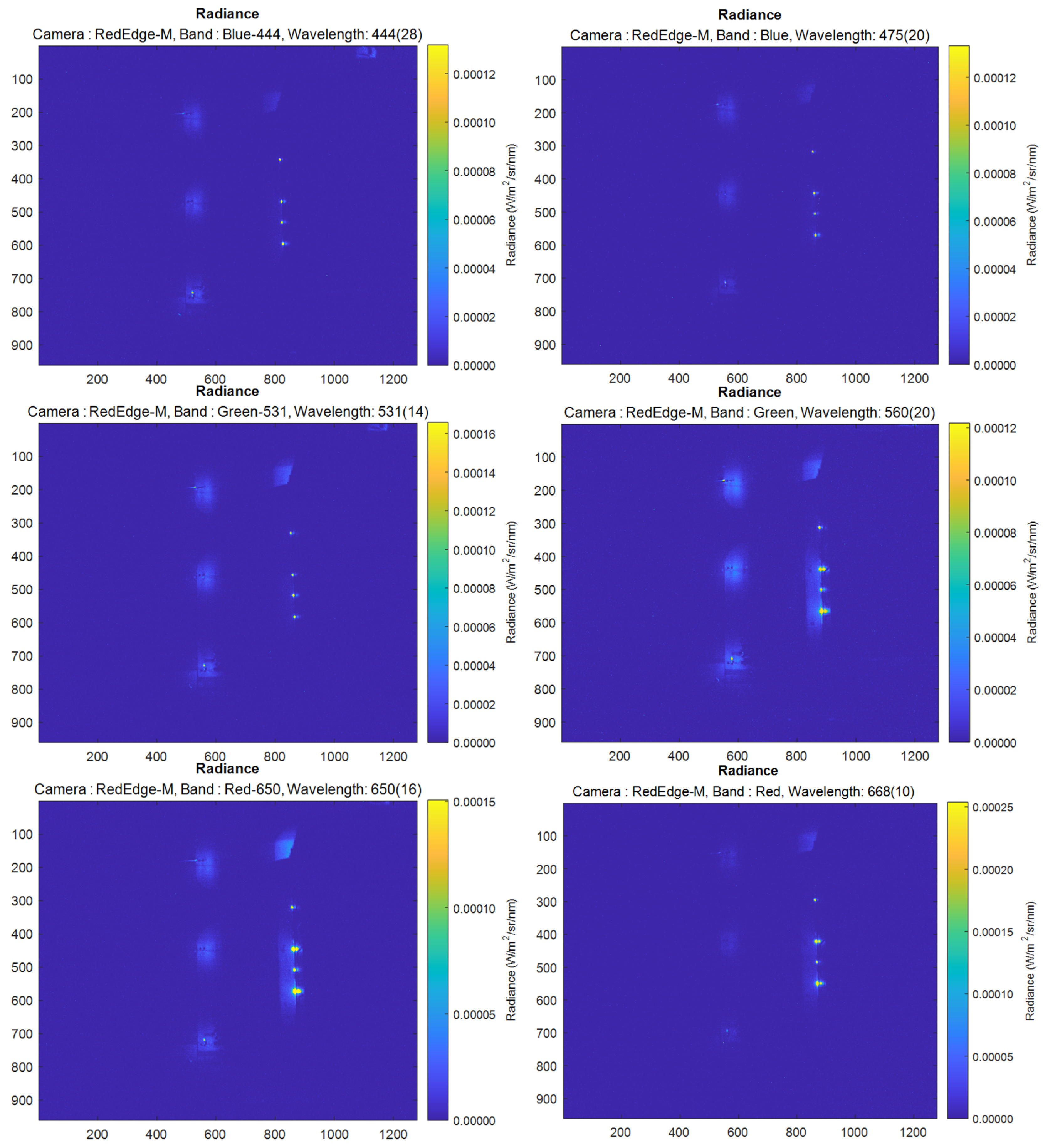

3.3. Nighttime Multispectral Images

4. Discussion and Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Type | Horizontal FOV (deg) | Vertical FOV (deg) | Resolution (pix) | Spectral Range (Bandwidth) (nm) | Data Type | Result Data | Units | Sensor Weight |
|---|---|---|---|---|---|---|---|---|
| Visible light camera | 36° | 23° | 6000 × 4000 | – | Image (RAW) | Spectral radiance | W/m²/nm/sr | 344 g (body), 116 g (lens) |
| Spectrometer | ~20° (HWHM *), ~40° (FWHM **) | ~20° (HWHM *), ~40° (FWHM **) | – | 380 to 780 nm (1 nm) | Text (CSV) | Illuminance, spectral power distribution | lux, mW/m² | 70 g |
| Sky Quality Meter | ~10° (HWHM *), ~20° (FWHM **) | ~10° (HWHM *), ~20° (FWHM **) | – | – | Text (CSV) | Sky brightness | mag/arcsec² | 110 g |
| Multispectral camera | 47.2° | 35.4° | 1280 × 960 | coastal blue 444 (28), blue 475 (32), green 531 (14), green 560 (27), red 650 (16), red 668 (14), red edge 705 (10), red edge 717 (12), red edge 740 (18), NIR 842 (57) | Image, 12 bit (TIFF) | Spectral radiance | W/m²/sr/nm | 460 g |

\* HWHM, half width at half maximum; \*\* FWHM, full width at half maximum.
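The field-of-view and resolution figures in the table determine how large an area each image covers and how finely it samples the ground. The following is a minimal sketch for a nadir view over flat terrain, using a pinhole camera model. The function name and the 100 m altitude are illustrative assumptions, since the flight altitude is not given here.

```python
import math


def footprint_and_gsd(altitude_m, fov_deg, pixels):
    """Return (ground footprint in m, ground sampling distance in m/pixel).

    Assumes a nadir-pointing pinhole camera over flat terrain:
        footprint = 2 * altitude * tan(FOV / 2),  GSD = footprint / pixels
    """
    footprint = 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)
    return footprint, footprint / pixels


# Horizontal FOV and image width from the table, at a hypothetical 100 m altitude
for name, fov, width in [("Visible light camera", 36.0, 6000),
                         ("Multispectral camera", 47.2, 1280)]:
    fp, gsd = footprint_and_gsd(100.0, fov, width)
    print(f"{name}: footprint {fp:.1f} m, GSD {gsd * 100:.2f} cm/pixel")
```

At 100 m, this gives a swath of roughly 65 m at about 1.1 cm per pixel for the visible light camera, and roughly 87 m at about 6.8 cm per pixel for the multispectral camera. The wider field of view covers more ground per image, but the lower pixel count yields coarser detail.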

| Product | Data Type | Source Sensor | Values/Units | Processing (Raw or Software Used) |
|---|---|---|---|---|
| RGB images | Raster | Sony Alpha 6000 with E PZ 16–50 mm F3.5-5.6 OSS lens | DN [-] | Raw file |
| RGB images | Raster | Sony Alpha 6000 with E PZ 16–50 mm F3.5-5.6 OSS lens | Surface brightness [-] | MATLAB R2024a |
| Images (luminance values) | Raster | Sony Alpha 6000 with E PZ 16–50 mm F3.5-5.6 OSS lens | Surface luminance [cd/m²] | iQ Luminance 3.1.0 |
| RGB daytime orthomosaics | Raster | Sony Alpha 6000 with E PZ 16–50 mm F3.5-5.6 OSS lens | Surface brightness [-] | Agisoft Metashape Professional 2.1.3 |
| Sky brightness | Point | SQM LU-DL | Sky brightness [mag/arcsec²] | Raw file |
| Photometric data from the spectrometer | Point | UPRtek MK350D | Illuminance [lux], CCT [K], CIE chromaticity coordinates [-], CRI, percent flicker [%], spectral power distribution (SPD) [mW/m²], λp [nm], blue light weighted irradiance (Eb) [W/m²], blue light hazard efficacy of luminous radiation (Kbv) [W/lm], blue light hazard blue-ray percentage (BL%), blue light hazard risk group (RG) | Raw file |
| Multispectral images (night) | Raster | MicaSense Dual RedEdge-MX, RedEdge-MX Blue | DN [-] | Raw file/processed |
| Multispectral images (night) | Raster | MicaSense Dual RedEdge-MX, RedEdge-MX Blue | Radiance [W/m²/sr/nm] | MATLAB R2024a/Python 2.12.6/OpenCV 4.10.0 |
| Orthomosaics for 9 spectral channels | Raster | MicaSense Dual RedEdge-MX, RedEdge-MX Blue | – | Agisoft Metashape Professional 2.1.3 |
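Among the spectrometer outputs, the correlated colour temperature (CCT) is tied to the CIE chromaticity coordinates. The MK350D computes CCT internally, and its method is not described here. As an illustration only, McCamy's cubic approximation, a widely used shortcut for CIE 1931 $(x, y)$ chromaticities close to the Planckian locus, can be sketched as follows. The function name is hypothetical, and this is not presented as the instrument's algorithm.

```python
def mccamy_cct(x, y):
    """Approximate correlated colour temperature (K) from CIE 1931 (x, y).

    McCamy (1992): n = (x - 0.3320) / (0.1858 - y)
                   CCT = 449 n^3 + 3525 n^2 + 6823.3 n + 5520.33
    Intended for chromaticities close to the Planckian locus.
    """
    n = (x - 0.3320) / (0.1858 - y)
    return 449.0 * n**3 + 3525.0 * n**2 + 6823.3 * n + 5520.33


# CIE standard illuminants A and D65 as sanity checks
print(round(mccamy_cct(0.44757, 0.40745)))  # close to 2856 K
print(round(mccamy_cct(0.3127, 0.3290)))    # close to 6504 K
```

The two standard illuminants are reproduced to within a few kelvin. This makes the approximation a convenient cross-check on reported CCT values, though not a substitute for the instrument's own computation.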


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bobkowska, K.; Burdziakowski, P.; Tysiac, P.; Pulas, M. An Innovative New Approach to Light Pollution Measurement by Drone. Drones 2024, 8, 504. https://doi.org/10.3390/drones8090504
