Review of Crop Phenotyping in Field Plot Experiments Using UAV-Mounted Sensors and Algorithms

Abstract

1. Introduction

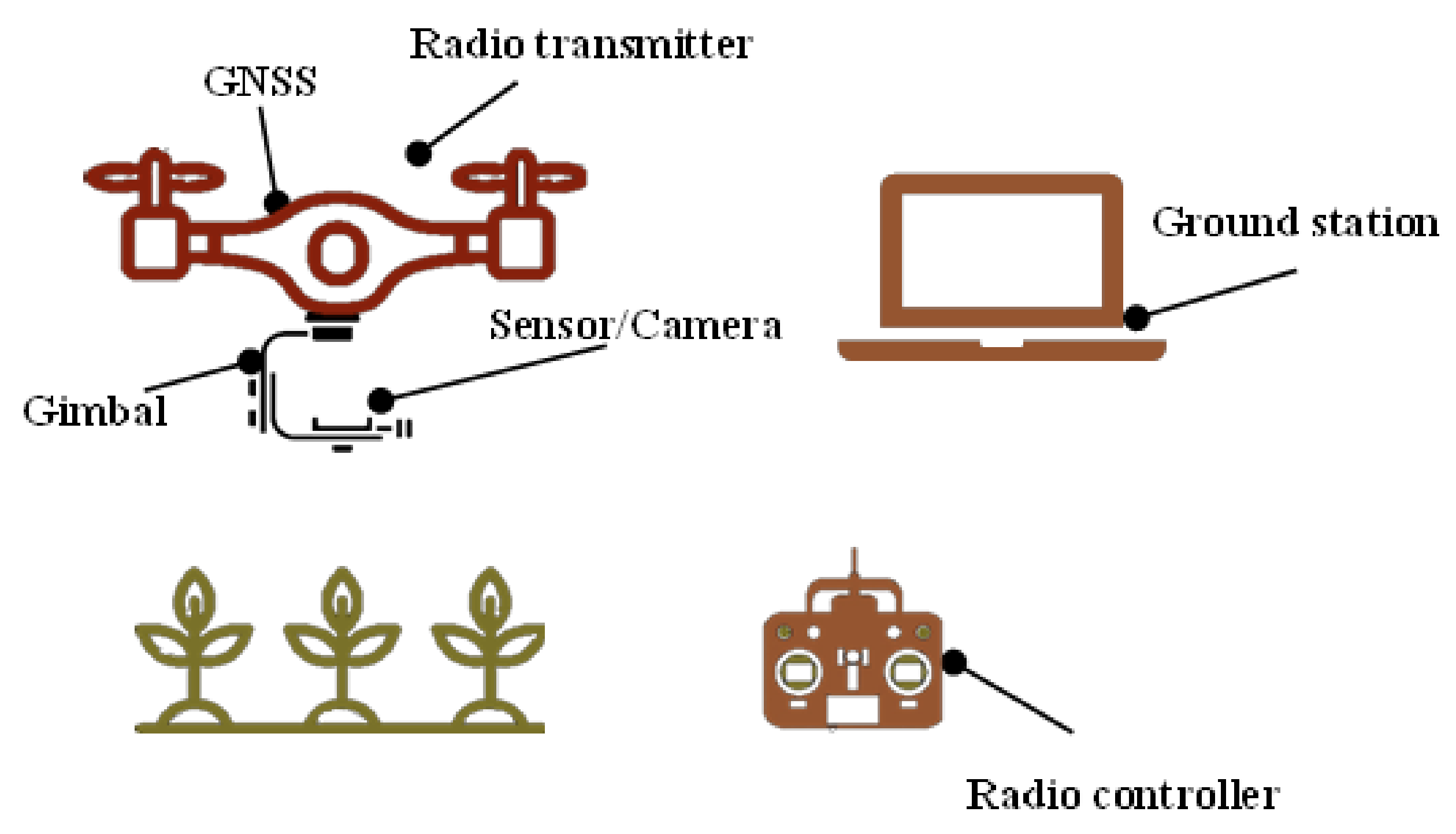

2. Breeding and Field Phenotyping

3. UAV and Sensors

| Type of UAV | Advantages | Limitations | References |
|---|---|---|---|
| Rotary-wing | Highly maneuverable, suitable for small or irregularly shaped fields, high-resolution imaging, detailed mapping of crops | Limited flight time, less efficient for large-scale mapping, vulnerable to windy conditions | [9,18,19] |
| Fixed-wing | Fast, long flight time, ideal for covering large areas quickly | Cannot hover in place, needs a large open space | [20,21] |
| Gas helicopter | Stable in windy conditions, can operate for long periods, can carry a heavier payload | High cost, complexity, loud noise | [6,22] |
| Hybrid | Combines the advantages of rotary-wing and fixed-wing UAVs: takes off and lands in rotary-wing mode and flies long-distance surveys in fixed-wing mode | High cost, complexity, requires professional pilots | [23] |

3.1. RGB Camera

3.2. Multispectral Camera

3.3. Hyperspectral Camera

3.4. Thermal Camera

3.5. LiDAR

3.6. Microwave Sensors

4. Crop Reflectance and Chemical Information

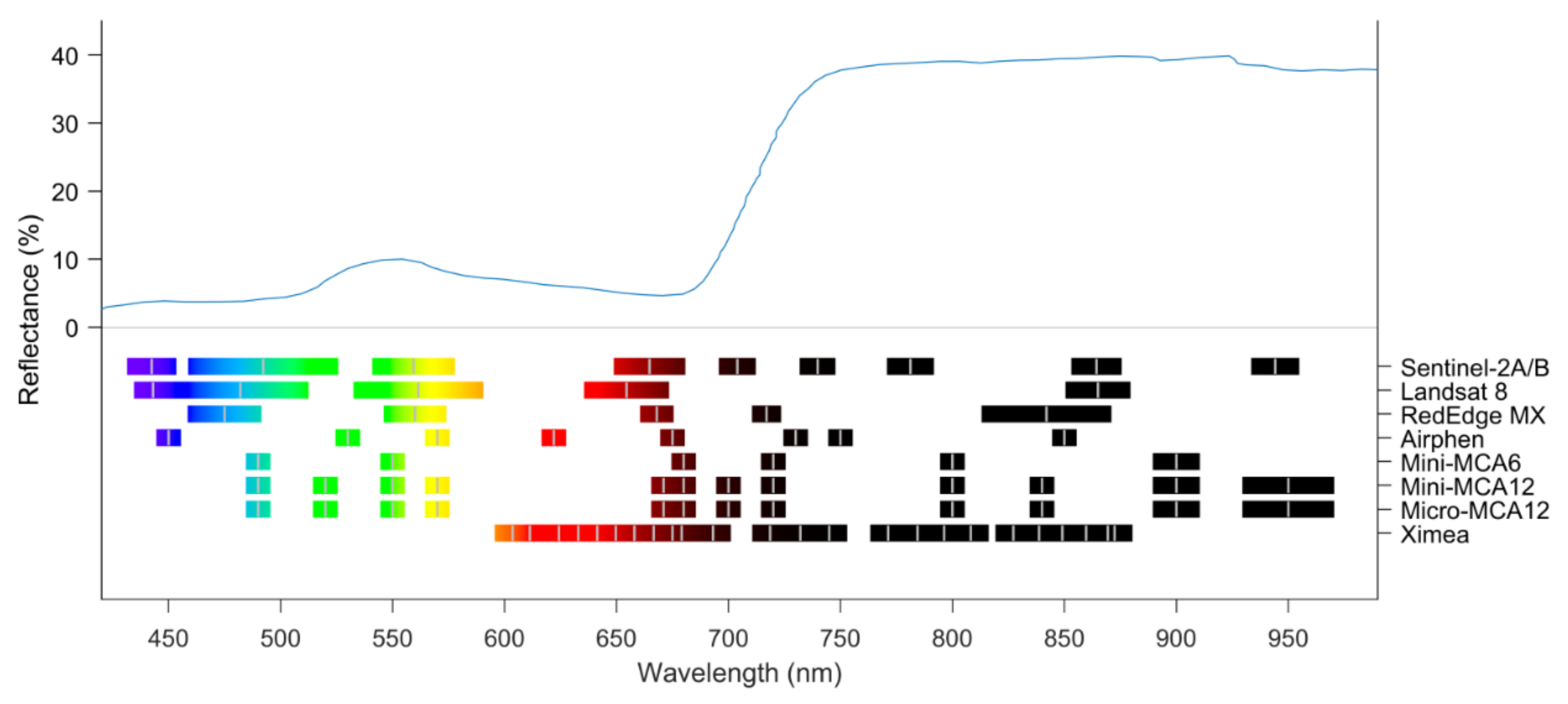

4.1. Crop Reflectance Figure

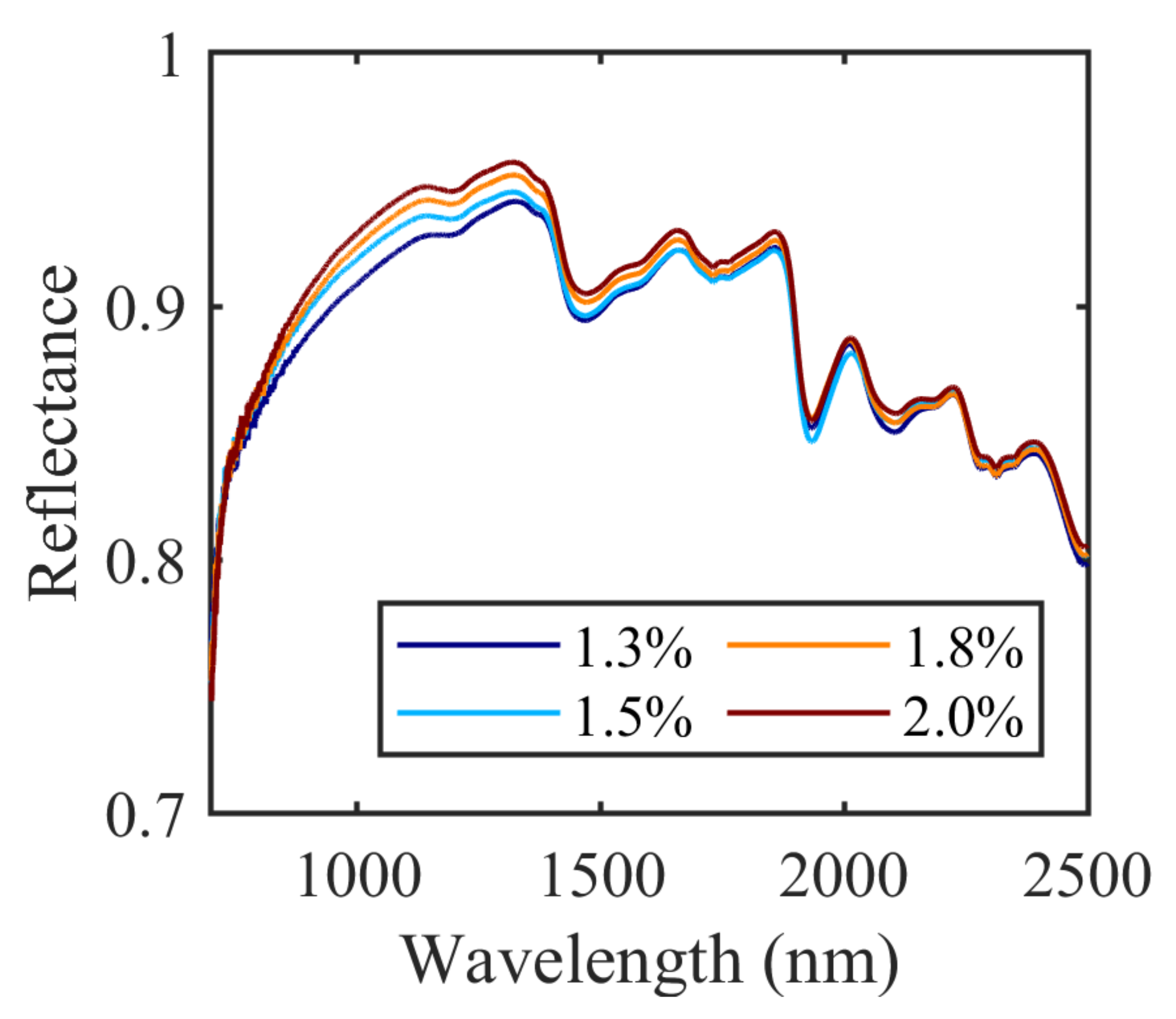

4.2. Plant Spectrum Figure

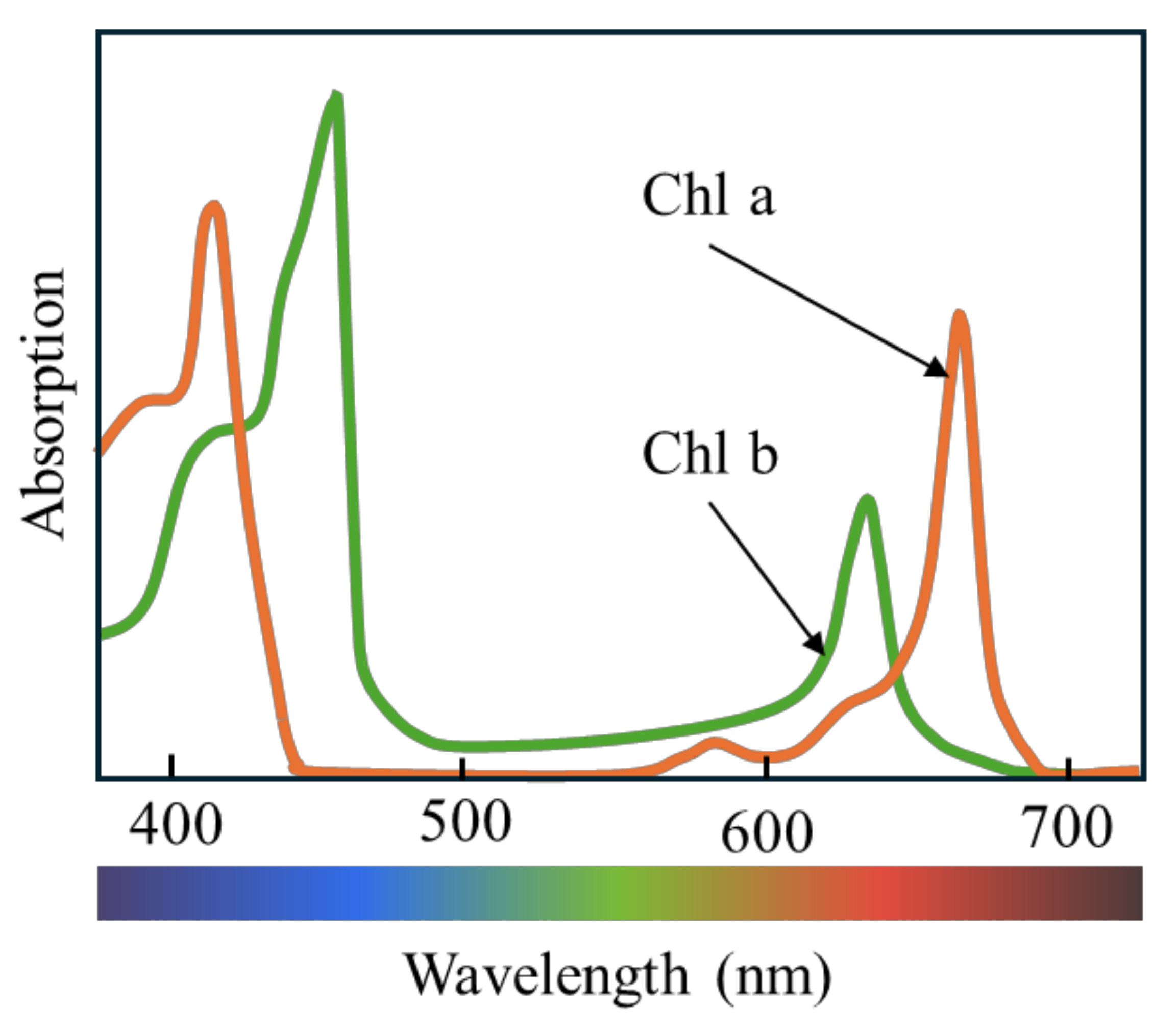

4.3. Relationship between Chl and N in Crops

4.4. N and Biomass

5. Extracting Phenotypic Traits from UAV Image

5.1. Georeferencing, Structure-from-Motion, and Radiometric Calibration

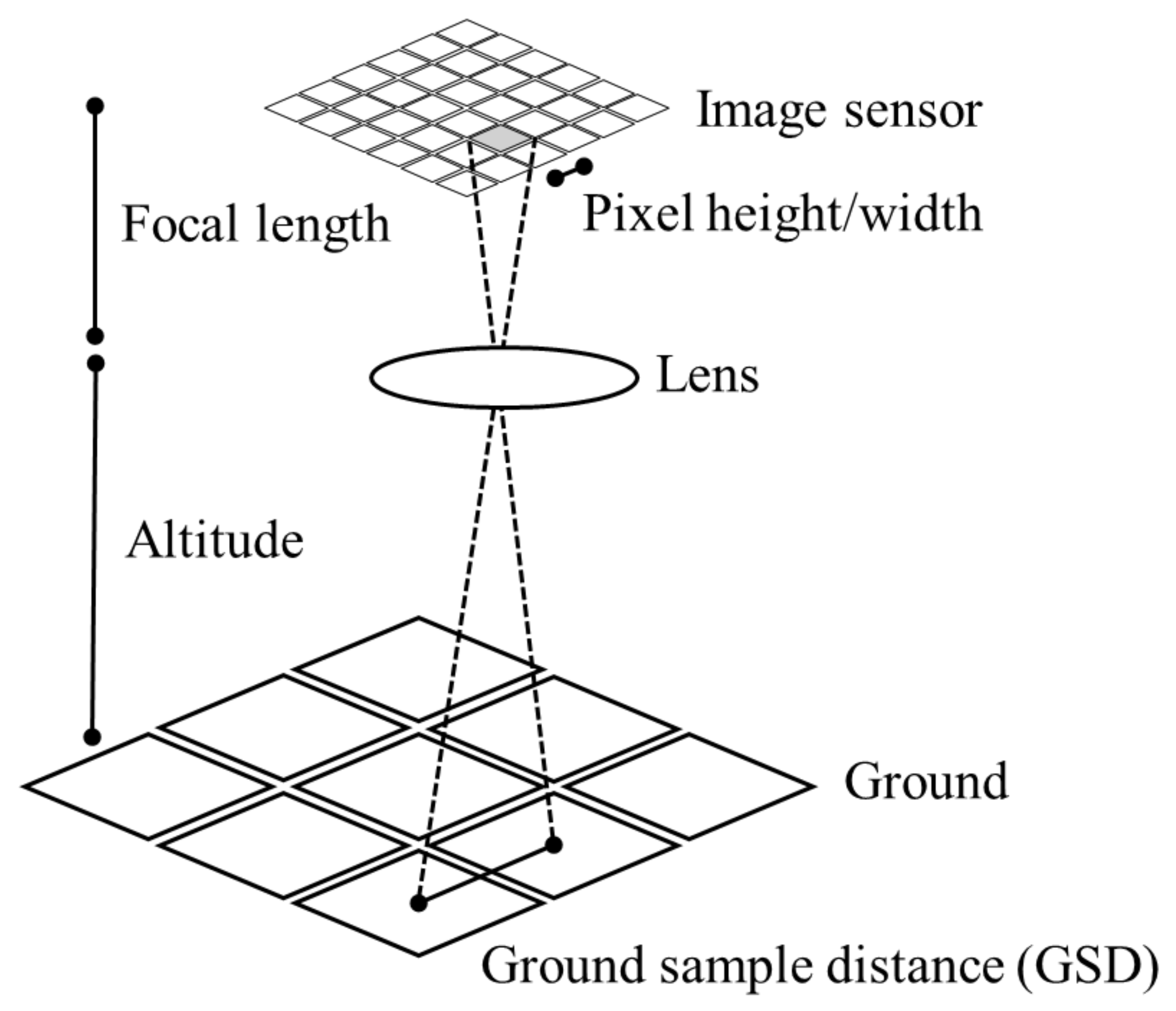

5.2. Ground Sampling Distance
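
As a minimal sketch of the quantity named in this heading: for a nadir-pointing frame camera, the ground sampling distance (GSD) follows from similar triangles as sensor width times flight altitude, divided by focal length times image width in pixels. The function names and the camera values in the usage lines are illustrative assumptions, not values taken from this review.

```python
def ground_sampling_distance_cm(sensor_width_mm, focal_length_mm,
                                altitude_m, image_width_px):
    """Return the GSD in cm per pixel for a nadir-pointing frame camera.

    Hypothetical helper: assumes flat terrain and a pinhole camera model.
    """
    values = (sensor_width_mm, focal_length_mm, altitude_m, image_width_px)
    if min(values) <= 0:
        raise ValueError("all inputs must be positive")
    # The mm units cancel, leaving metres on the ground per pixel.
    gsd_m = (sensor_width_mm * altitude_m) / (focal_length_mm * image_width_px)
    return gsd_m * 100.0  # metres -> centimetres


def altitude_for_gsd_m(sensor_width_mm, focal_length_mm,
                       image_width_px, target_gsd_cm):
    """Invert the GSD relation: flight altitude (m) that gives the target GSD."""
    if min(sensor_width_mm, focal_length_mm, image_width_px, target_gsd_cm) <= 0:
        raise ValueError("all inputs must be positive")
    return (target_gsd_cm / 100.0) * focal_length_mm * image_width_px / sensor_width_mm


# Illustrative (assumed) camera: 13.2 mm sensor width, 8.8 mm focal length,
# 5472 px image width, flown at 30 m above the canopy.
gsd = ground_sampling_distance_cm(13.2, 8.8, 30.0, 5472)
print(f"GSD: {gsd:.2f} cm per pixel")  # roughly 0.82 cm per pixel
```

Because GSD scales linearly with altitude under these assumptions, halving the flight height halves the pixel footprint, at the cost of more images and longer flights for the same plot area.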

5.3. Region of Interest and Extracted Features

5.4. Data Analysis

6. Phenotypic Applications

6.1. Chl and N

6.2. Height and Lodging

6.3. Biomass and Yield

6.4. LAI

6.5. Plant Number and Area Cover

7. Discussions

8. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Tilman, D.; Balzer, C.; Hill, J.; Befort, B.L. Global food demand and the sustainable intensification of agriculture. Proc. Natl. Acad. Sci. USA 2011, 108, 20260–20264.
2. Araus, J.L.; Cairns, J.E. Field high-throughput phenotyping: The new crop breeding frontier. Trends Plant Sci. 2014, 19, 52–61.
3. Araus, J.L.; Kefauver, S.C. Breeding to adapt agriculture to climate change: Affordable phenotyping solutions. Curr. Opin. Plant Biol. 2018, 45, 237–247.
4. Reynolds, M.; Chapman, S.; Crespo-Herrera, L.; Molero, G.; Mondal, S.; Pequeno, D.N.L.; Pinto, F.; Pinera-Chavez, F.J.; Poland, J.; Rivera-Amado, C.; et al. Breeder friendly phenotyping. Plant Sci. 2020, 295, 110396.
5. Wang, S.; Garcia, M.; Ibrom, A.; Bauer-Gottwein, P. Temporal interpolation of land surface fluxes derived from remote sensing—Results with an unmanned aerial system. Hydrol. Earth Syst. Sci. 2020, 24, 3643–3661.
6. Sankaran, S.; Khot, L.R.; Espinoza, C.Z.; Jarolmasjed, S.; Sathuvalli, V.R.; Vandemark, G.J.; Miklas, P.N.; Carter, A.H.; Pumphrey, M.O.; Knowles, N.R.; et al. Low-altitude, high-resolution aerial imaging systems for row and field crop phenotyping: A review. Eur. J. Agron. 2015, 70, 112–123.
7. Feng, L.; Chen, S.; Zhang, C.; Zhang, Y.; He, Y. A comprehensive review on recent applications of unmanned aerial vehicle remote sensing with various sensors for high-throughput plant phenotyping. Comput. Electron. Agric. 2021, 182, 106033.
8. Yang, G.; Liu, J.; Zhao, C.; Li, Z.; Huang, Y.; Yu, H.; Xu, B.; Yang, X.; Zhu, D.; Zhang, X.; et al. Unmanned Aerial Vehicle Remote Sensing for Field-Based Crop Phenotyping: Current Status and Perspectives. Front. Plant Sci. 2017, 8, 1111.
9. Xie, C.; Yang, C. A review on plant high-throughput phenotyping traits using UAV-based sensors. Comput. Electron. Agric. 2020, 178, 105731.
10. Priyanka, G.; Choudhary, S.; Anbazhagan, K.; Naresh, D.; Baddam, R.; Jarolimek, J.; Parnandi, Y.; Rajalakshmi, P.; Kholova, J. A step towards inter-operable Unmanned Aerial Vehicles (UAV) based phenotyping; A case study demonstrating a rapid, quantitative approach to standardize image acquisition and check quality of acquired images. ISPRS Open J. Photogramm. Remote Sens. 2023, 9, 100042.
11. Das, S.; Chapman, S.; Christopher, J.; Choudhury, M.R.; Menzies, N.W.; Apan, A.; Dang, Y.P. UAV-thermal imaging: A technological breakthrough for monitoring and quantifying crop abiotic stress to help sustain productivity on sodic soils—A case review on wheat. Remote Sens. Appl. Soc. Environ. 2021, 23, 100583.
12. Wen, T.; Li, J.H.; Wang, Q.; Gao, Y.Y.; Hao, G.F.; Song, B.A. Thermal imaging: The digital eye facilitates high-throughput phenotyping traits of plant growth and stress responses. Sci. Total Environ. 2023, 899, 165626.
13. Arya, S.; Sandhu, K.S.; Singh, J.; Kumar, S. Deep learning: As the new frontier in high-throughput plant phenotyping. Euphytica 2022, 218, 47.
14. Bazrafkan, A.; Delavarpour, N.; Oduor, P.G.; Bandillo, N.; Flores, P. An Overview of Using Unmanned Aerial System Mounted Sensors to Measure Plant Above-Ground Biomass. Remote Sens. 2023, 15, 3543.
15. Naito, H.; Ogawa, S.; Valencia, M.O.; Mohri, H.; Urano, Y.; Hosoi, F.; Shimizu, Y.; Chavez, A.L.; Ishitani, M.; Selvaraj, M.G.; et al. Estimating rice yield related traits and quantitative trait loci analysis under different nitrogen treatments using a simple tower-based field phenotyping system with modified single-lens reflex cameras. ISPRS J. Photogramm. 2017, 125, 50–62.
16. Mahlein, A.K. Plant Disease Detection by Imaging Sensors—Parallels and Specific Demands for Precision Agriculture and Plant Phenotyping. Plant Dis. 2016, 100, 241–251.
17. Zhang, C.; Kovacs, J.M. The application of small unmanned aerial systems for precision agriculture: A review. Precis. Agric. 2012, 13, 693–712.
18. Kim, J.; Kim, S.; Ju, C.; Son, H.I. Unmanned Aerial Vehicles in Agriculture: A Review of Perspective of Platform, Control, and Applications. IEEE Access 2019, 7, 105100–105115.
19. Boon, M.A.; Drijfhout, A.P.; Tesfamichael, S. Comparison of a Fixed-Wing and Multi-Rotor Uav for Environmental Mapping Applications: A Case Study. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, XLII-2/W6, 47–54.
20. Rahman, M.F.F.; Fan, S.; Zhang, Y.; Chen, L. A Comparative Study on Application of Unmanned Aerial Vehicle Systems in Agriculture. Agriculture 2021, 11, 22.
21. del Cerro, J.; Cruz Ulloa, C.; Barrientos, A.; de León Rivas, J. Unmanned Aerial Vehicles in Agriculture: A Survey. Agronomy 2021, 11, 203.
22. Reddy Maddikunta, P.K.; Hakak, S.; Alazab, M.; Bhattacharya, S.; Gadekallu, T.R.; Khan, W.Z.; Pham, Q.-V. Unmanned Aerial Vehicles in Smart Agriculture: Applications, Requirements, and Challenges. IEEE Sens. J. 2021, 21, 17608–17619.
23. Bandini, F.; Lopez-Tamayo, A.; Merediz-Alonso, G.; Olesen, D.; Jakobsen, J.; Wang, S.; Garcia, M.; Bauer-Gottwein, P. Unmanned aerial vehicle observations of water surface elevation and bathymetry in the cenotes and lagoons of the Yucatan Peninsula, Mexico. Hydrogeol. J. 2018, 26, 2213–2228.
24. Barbosa, B.D.S.; Ferraz, G.A.S.; Gonçalves, L.M.; Marin, D.B.; Maciel, D.T.; Ferraz, P.F.P.; Rossi, G. RGB vegetation indicies applied to grass monitoring: A qualitative analysis. Agron. Res. 2019, 17, 349–357.
25. Francesconi, S.; Harfouche, A.; Maesano, M.; Balestra, G.M. UAV-Based Thermal, RGB Imaging and Gene Expression Analysis Allowed Detection of Fusarium Head Blight and Gave New Insights Into the Physiological Responses to the Disease in Durum Wheat. Front. Plant Sci. 2021, 12, 628575.
26. Volpato, L.; Pinto, F.; Gonzalez-Perez, L.; Thompson, I.G.; Borem, A.; Reynolds, M.; Gerard, B.; Molero, G.; Rodrigues, F.A., Jr. High Throughput Field Phenotyping for Plant Height Using UAV-Based RGB Imagery in Wheat Breeding Lines: Feasibility and Validation. Front. Plant Sci. 2021, 12, 591587.
27. Wilke, N.; Siegmann, B.; Klingbeil, L.; Burkart, A.; Kraska, T.; Muller, O.; van Doorn, A.; Heinemann, S.; Rascher, U. Quantifying Lodging Percentage and Lodging Severity Using a UAV-Based Canopy Height Model Combined with an Objective Threshold Approach. Remote Sens. 2019, 11, 515.
28. Tan, S.Y.; Mortensen, A.K.; Ma, X.; Boelt, B.; Gislum, R. Assessment of grass lodging using texture and canopy height distribution features derived from UAV visual-band images. Agric. For. Meteorol. 2021, 308, 108541.
29. Gilliot, J.M.; Michelin, J.; Hadjard, D.; Houot, S. An accurate method for predicting spatial variability of maize yield from UAV-based plant height estimation: A tool for monitoring agronomic field experiments. Precis. Agric. 2020, 22, 897–921.
30. Taniguchi, S.; Sakamoto, T.; Imase, R.; Nonoue, Y.; Tsunematsu, H.; Goto, A.; Matsushita, K.; Ohmori, S.; Maeda, H.; Takeuchi, Y.; et al. Prediction of heading date, culm length, and biomass from canopy-height-related parameters derived from time-series UAV observations of rice. Front. Plant Sci. 2022, 13, 998803.
31. Roth, L.; Barendregt, C.; Bétrix, C.-A.; Hund, A.; Walter, A. High-throughput field phenotyping of soybean: Spotting an ideotype. Remote Sens. Environ. 2022, 269, 112797.
32. Buchaillot, M.L.; Gracia-Romero, A.; Vergara-Diaz, O.; Zaman-Allah, M.A.; Tarekegne, A.; Cairns, J.E.; Prasanna, B.M.; Araus, J.L.; Kefauver, S.C. Evaluating Maize Genotype Performance under Low Nitrogen Conditions Using RGB UAV Phenotyping Techniques. Sensors 2019, 19, 1815.
33. del Pozo, A.; Espinoza, S.; Barahona, V.; Inostroza, L.; Gerding, M.; Humphries, A.; Lobos, G.; Cares, J.; Ovalle, C. Aerial and ground-based phenotyping of an alfalfa diversity panel to assess adaptation to a prolonged drought period in a Mediterranean environment of central Chile. Eur. J. Agron. 2023, 144, 126751.
34. Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring Vegetation Systems in the Great Plains with ERTS. In Proceedings of the Third Earth Resources Technology Satellite-1 Symposium, Washington, DC, USA, 10–14 December 1973; pp. 309–317. Available online: https://ntrs.nasa.gov/citations/19740022614 (accessed on 10 May 2024).
35. Gitelson, A.A.; Kaufman, Y.J.; Merzlyak, M.N. Use of a green channel in remote sensing of global vegetation from EOS-MODIS. Remote Sens. Environ. 1996, 58, 289–298.
36. Barnes, E.M.C.; Richards, S.E.; Colaizzi, P.D.; Haberland, J.; Kostrzewski, M.; Waller, P.; Choi, C.; Riley, E.; Thompson, T. Coincident detection of crop water stress, nitrogen status and canopy density using ground based multispectral data. In Proceedings of the Fifth International Conference on Precision Agriculture, Bloomington, MN, USA, 16–19 July 2000.
37. Gracia-Romero, A.; Kefauver, S.C.; Fernandez-Gallego, J.A.; Vergara-Díaz, O.; Nieto-Taladriz, M.T.; Araus, J.L. UAV and Ground Image-Based Phenotyping: A Proof of Concept with Durum Wheat. Remote Sens. 2019, 11, 1244.
38. Wan, L.; Zhang, J.; Dong, X.; Du, X.; Zhu, J.; Sun, D.; Liu, Y.; He, Y.; Cen, H. Unmanned aerial vehicle-based field phenotyping of crop biomass using growth traits retrieved from PROSAIL model. Comput. Electron. Agric. 2021, 187, 106304.
39. Candiago, S.; Remondino, F.; De Giglio, M.; Dubbini, M.; Gattelli, M. Evaluating Multispectral Images and Vegetation Indices for Precision Farming Applications from UAV Images. Remote Sens. 2015, 7, 4026–4047.
40. Saric, R.; Nguyen, V.D.; Burge, T.; Berkowitz, O.; Trtilek, M.; Whelan, J.; Lewsey, M.G.; Custovic, E. Applications of hyperspectral imaging in plant phenotyping. Trends Plant Sci. 2022, 27, 301–315.
41. Lu, B.; Dao, P.; Liu, J.; He, Y.; Shang, J. Recent Advances of Hyperspectral Imaging Technology and Applications in Agriculture. Remote Sens. 2020, 12, 2659.
42. Baluja, J.; Diago, M.P.; Balda, P.; Zorer, R.; Meggio, F.; Morales, F.; Tardaguila, J. Assessment of vineyard water status variability by thermal and multispectral imagery using an unmanned aerial vehicle (UAV). Irrig. Sci. 2012, 30, 511–522.
43. Giménez-Gallego, J.; González-Teruel, J.D.; Soto-Valles, F.; Jiménez-Buendía, M.; Navarro-Hellín, H.; Torres-Sánchez, R. Intelligent thermal image-based sensor for affordable measurement of crop canopy temperature. Comput. Electron. Agric. 2021, 188, 106319.
44. Lin, Y. LiDAR: An important tool for next-generation phenotyping technology of high potential for plant phenomics? Comput. Electron. Agric. 2015, 119, 61–73.
45. Christiansen, M.P.; Laursen, M.S.; Jorgensen, R.N.; Skovsen, S.; Gislum, R. Designing and Testing a UAV Mapping System for Agricultural Field Surveying. Sensors 2017, 17, 2703.
46. ten Harkel, J.; Bartholomeus, H.; Kooistra, L. Biomass and Crop Height Estimation of Different Crops Using UAV-Based Lidar. Remote Sens. 2019, 12, 17.
47. Acharya, B.S.; Bhandari, M.; Bandini, F.; Pizarro, A.; Perks, M.; Joshi, D.R.; Wang, S.; Dogwiler, T.; Ray, R.L.; Kharel, G.; et al. Unmanned Aerial Vehicles in Hydrology and Water Management: Applications, Challenges, and Perspectives. Water Resour. Res. 2021, 57, e2021WR029925.
48. Huang, X.D.; Reba, M.; Coffin, A.; Runkle, B.R.K.; Huang, Y.B.; Chapman, B.; Ziniti, B.; Skakun, S.; Kraatz, S.; Siqueira, P.; et al. Cropland mapping with L-band UAVSAR and development of NISAR products. Remote Sens. Environ. 2021, 253, 112180.
49. Nobel, P.S. Light. In Physicochemical and Environmental Plant Physiology; Nobel, P.S., Ed.; Academic Press: Cambridge, MA, USA, 2009.
50. Bec, K.B.; Huck, C.W. Breakthrough Potential in Near-Infrared Spectroscopy: Spectra Simulation. A Review of Recent Developments. Front. Chem. 2019, 7, 48.
51. Kuska, M.T.; Behmann, J.; Mahlein, A.K. Potential of hyperspectral imaging to detect and identify the impact of chemical warfare compounds on plant tissue. Pure Appl. Chem. 2018, 90, 1615–1624.
52. Roca, M.; Chen, K.; Pérez-Gálvez, A. Chlorophylls. In Handbook on Natural Pigments in Food and Beverages: Industrial Applications for Improving Food Color; Carle, R.S.R.M., Ed.; Woodhead Publishing: Cambridge, UK, 2016; pp. 125–158.
53. Gholizadeh, M.H.; Melesse, A.M.; Reddi, L. A Comprehensive Review on Water Quality Parameters Estimation Using Remote Sensing Techniques. Sensors 2016, 16, 1298.
54. Maheswari, M.; Murthy, A.N.G.; Shanker, A.K. Nitrogen Nutrition in Crops and Its Importance in Crop Quality. In The Indian Nitrogen Assessment: Sources of Reactive Nitrogen, Environmental and Climate Effects, Management Options, and Policies; Abrol, Y.P., Adhya, T.K., Aneja, V.P., Raghuram, N., Pathak, H., Kulshrestha, U., Sharma, C., Singh, B., Eds.; Elsevier: Amsterdam, The Netherlands, 2017; pp. 175–186.
55. Chen, M. Photosynthesis: Chlorophylls. In Encyclopedia of Biological Chemistry; Lennarz, W.J., Lane, M.D., Eds.; Academic Press: Cambridge, MA, USA, 2021; Volume 2, pp. 157–162.
56. Quemada, M.; Gabriel, J.L.; Zarco-Tejada, P. Airborne Hyperspectral Images and Ground-Level Optical Sensors As Assessment Tools for Maize Nitrogen Fertilization. Remote Sens. 2014, 6, 2940–2962.
57. Wang, S.; Guan, K.; Wang, Z.H.; Ainsworth, E.A.; Zheng, T.; Townsend, P.A.; Liu, N.F.; Nafziger, E.; Masters, M.D.; Li, K.Y.; et al. Airborne hyperspectral imaging of nitrogen deficiency on crop traits and yield of maize by machine learning and radiative transfer modeling. Int. J. Appl. Earth Obs. Geoinf. 2021, 105, 102617.
58. Li, X.Y.; Ata-Ul-Karim, S.T.; Li, Y.; Yuan, F.; Miao, Y.X.; Yoichiro, K.; Cheng, T.; Tang, L.; Tian, X.S.; Liu, X.J.; et al. Advances in the estimations and applications of critical nitrogen dilution curve and nitrogen nutrition index of major cereal crops. A review. Comput. Electron. Agric. 2022, 197, 106998.
59. Zhang, K.; Ma, J.F.; Wang, Y.; Cao, W.X.; Zhu, Y.; Cao, Q.; Liu, X.J.; Tian, Y.C. Key variable for simulating critical nitrogen dilution curve of wheat: Leaf area ratio-driven approach. Pedosphere 2022, 32, 463–474.
60. Turner, D.; Lucieer, A.; Wallace, L. Direct Georeferencing of Ultrahigh-Resolution UAV Imagery. IEEE Trans. Geosci. Remote Sens. 2014, 52, 2738–2745.
61. Chawade, A.; van Ham, J.; Blomquist, H.; Bagge, O.; Alexandersson, E.; Ortiz, R. High-Throughput Field-Phenotyping Tools for Plant Breeding and Precision Agriculture. Agronomy 2019, 9, 258.
62. Jeziorska, J. Flight Planning GIS/MEA 584: Mapping and Analysis Using UAS. Available online: https://ncsu-geoforall-lab.github.io/uav-lidar-analytics-course/lectures/2017_Flight_planning.html#/ (accessed on 13 May 2024).
63. Wang, F.M.; Yi, Q.X.; Hu, J.H.; Xie, L.L.; Yao, X.P.; Xu, T.Y.; Zheng, J.Y. Combining spectral and textural information in UAV hyperspectral images to estimate rice grain yield. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102397.
64. Yamaguchi, T.; Sasano, K.; Katsura, K. Improving efficiency of ground-truth data collection for UAV-based rice growth estimation models: Investigating the effect of sampling size on model accuracy. Plant Prod. Sci. 2024, 27, 1–13.
65. Gano, B.; Dembele, J.S.B.; Ndour, A.; Luquet, D.; Beurier, G.; Diouf, D.; Audebert, A. Using UAV Borne, Multi-Spectral Imaging for the Field Phenotyping of Shoot Biomass, Leaf Area Index and Height of West African Sorghum Varieties under Two Contrasted Water Conditions. Agronomy 2021, 11, 850.
66. Wilke, N.; Siegmann, B.; Postma, J.A.; Muller, O.; Krieger, V.; Pude, R.; Rascher, U. Assessment of plant density for barley and wheat using UAV multispectral imagery for high-throughput field phenotyping. Comput. Electron. Agric. 2021, 189, 106380.
67. Ostos-Garrido, F.J.; de Castro, A.I.; Torres-Sanchez, J.; Piston, F.; Pena, J.M. High-Throughput Phenotyping of Bioethanol Potential in Cereals Using UAV-Based Multi-Spectral Imagery. Front. Plant Sci. 2019, 10, 948.
68. Sharma, P.; Leigh, L.; Chang, J.; Maimaitijiang, M.; Caffe, M. Above-Ground Biomass Estimation in Oats Using UAV Remote Sensing and Machine Learning. Sensors 2022, 22, 601.
69. Simões, I.O.P.S.; Rios do Amaral, L. Uav-Based Multispectral Data for Sugarcane Resistance Phenotyping of Orange and Brown Rust. Smart Agric. Technol. 2023, 4, 100144.
70. Yang, Y.; Wei, X.B.; Wang, J.; Zhou, G.S.; Wang, J.; Jiang, Z.T.; Zhao, J.; Ren, Y.L. Prediction of Seedling Oilseed Rape Crop Phenotype by Drone-Derived Multimodal Data. Remote Sens. 2023, 15, 3951.
71. Shu, M.Y.; Fei, S.P.; Zhang, B.Y.; Yang, X.H.; Guo, Y.; Li, B.G.; Ma, Y.T. Application of UAV Multisensor Data and Ensemble Approach for High-Throughput Estimation of Maize Phenotyping Traits. Plant Phenomics 2022, 2022, 9802585.
72. Vong, C.N.; Conway, L.S.; Zhou, J.F.; Kitchen, N.R.; Sudduth, K.A. Early corn stand count of different cropping systems using UAV-imagery and deep learning. Comput. Electron. Agric. 2021, 186, 106214.
73. Habibi, L.N.; Watanabe, T.; Matsui, T.; Tanaka, T.S.T. Machine Learning Techniques to Predict Soybean Plant Density Using UAV and Satellite-Based Remote Sensing. Remote Sens. 2021, 13, 2548.
74. Wang, X.; Yang, W.; Lv, Q.; Huang, C.; Liang, X.; Chen, G.; Xiong, L.; Duan, L. Field rice panicle detection and counting based on deep learning. Front. Plant Sci. 2022, 13, 966495.
75. Hashemi-Beni, L.; Gebrehiwot, A.; Karimoddini, A.; Shahbazi, A.; Dorbu, F. Deep Convolutional Neural Networks for Weeds and Crops Discrimination From UAS Imagery. Front. Remote Sens. 2022, 3, 755939.
76. Schreiber, L.V.; Amorim, J.G.A.; Guimaraes, L.; Matos, D.M.; da Costa, C.M.; Parraga, A. Above-ground Biomass Wheat Estimation: Deep Learning with UAV-based RGB Images. Appl. Artif. Intell. 2022, 36, 2055392.
77. Tanabe, R.; Matsui, T.; Tanaka, T.S.T. Winter wheat yield prediction using convolutional neural networks and UAV-based multispectral imagery. Field Crops Res. 2023, 291, 108786.
78. Nevavuori, P.; Narra, N.; Lipping, T. Crop yield prediction with deep convolutional neural networks. Comput. Electron. Agric. 2019, 163, 104859.
79. Herrmann, I.; Bdolach, E.; Montekyo, Y.; Rachmilevitch, S.; Townsend, P.A.; Karnieli, A. Assessment of maize yield and phenology by drone-mounted superspectral camera. Precis. Agric. 2019, 21, 51–76.
80. Tirado, S.B.; Hirsch, C.N.; Springer, N.M. UAV-based imaging platform for monitoring maize growth throughout development. Plant Direct 2020, 4, e00230.
81. Wan, L.; Zhu, J.; Du, X.; Zhang, J.; Han, X.; Zhou, W.; Li, X.; Liu, J.; Liang, F.; He, Y.; et al. A model for phenotyping crop fractional vegetation cover using imagery from unmanned aerial vehicles. J. Exp. Bot. 2021, 72, 4691–4707.
82. Bhadra, S.; Sagan, V.; Skobalski, J.; Grignola, F.; Sarkar, S.; Vilbig, J. End-to-end 3D CNN for plot-scale soybean yield prediction using multitemporal UAV-based RGB images. Precis. Agric. 2023, 25, 834–864.
83. Zhou, J.; Beche, E.; Vieira, C.C.; Yungbluth, D.; Zhou, J.; Scaboo, A.; Chen, P. Improve Soybean Variety Selection Accuracy Using UAV-Based High-Throughput Phenotyping Technology. Front. Plant Sci. 2021, 12, 768742.
84. Aharon, S.; Peleg, Z.; Argaman, E.; Ben-David, R.; Lati, R.N. Image-Based High-Throughput Phenotyping of Cereals Early Vigor and Weed-Competitiveness Traits. Remote Sens. 2020, 12, 3877.
85. Jenal, A.; Hüging, H.; Ahrends, H.E.; Bolten, A.; Bongartz, J.; Bareth, G. Investigating the Potential of a Newly Developed UAV-Mounted VNIR/SWIR Imaging System for Monitoring Crop Traits—A Case Study for Winter Wheat. Remote Sens. 2021, 13, 1697.
86. Jenal, A.; Bareth, G.; Bolten, A.; Kneer, C.; Weber, I.; Bongartz, J. Development of a VNIR/SWIR Multispectral Imaging System for Vegetation Monitoring with Unmanned Aerial Vehicles. Sensors 2019, 19, 5507.
87. Jiang, Z.Y.; Huete, A.R.; Kim, Y.; Didan, K. 2-band enhanced vegetation index without a blue band and its application to AVHRR data. Proc. SPIE 2007, 6679, 45–53.
88. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.
89. Dhal, S.; Wyatt, B.M.; Mahanta, S.; Bhattarai, N.; Sharma, S.; Rout, T.; Saud, P.; Acharya, B.S. Internet of Things (IoT) in digital agriculture: An overview. Agron. J. 2023, 116, 1144–1163.
90. Yamaguchi, T.; Katsura, K. A novel neural network model to achieve generality for diverse morphologies and crop science interpretability in rice biomass estimation. Comput. Electron. Agric. 2024, 218, 108653.
91. Tataridas, A.; Kanatas, P.; Chatzigeorgiou, A.; Zannopoulos, S.; Travlos, I. Sustainable Crop and Weed Management in the Era of the EU Green Deal: A Survival Guide. Agronomy 2022, 12, 589.

| Crops | Camera Type | Camera Model | GSD (cm pixel⁻¹) | Explanatory Variables ¹ | Predicted Traits | Data Analysis ² | Ref. |
|---|---|---|---|---|---|---|---|
| Alfalfa | RGB | DJI Zenmuse XT2 | 0.19 | Saturation, a*, b*, Canopy temperature | LAI, Forage yield (FY) | LME | [33] |
| | Thermal | | | | | | |
| Barley | RGB | Sony Alpha 6000 | 0.85 | DSM | Plant height, lodging percentage | Average lodging severity, weighted average lodging severity | [27] |
| Barley, wheat | RGB | Sony Alpha 6000 | 0.2–0.59 | ExGR, NDVI | Plant density | ANOVA, LRM | [66] |
| | Multispectral | MicaSense RedEdge | 0.69–1.36 | | | | |
| Barley, wheat | RGB, Multispectral | Parrot Sequoia | 31.25 | Resized RGB images, Resized NDVI images | Yield | CNN | [78] |
| Barley, Wheat, Triticale | Multispectral | Mini-MCA6 Tetracam | 0.54 | NDVI, ExG, GNDVI | Total dry biomass, Sugar release, Theoretical ethanol yield, Bioethanol potential | ANOVA, LRM | [67] |
| Maize | RGB | Lumix GX7 Panasonic | 0.94 | GGA, Hue, NDLab, TGI, NGRDI, CSI | Grain yield | ANOVA, LSD, LRM | [32] |
| Maize | RGB | Canon IXUS 127 HS | 2 | DSM | Biomass, Grain yield | LRM, exponential regression, power regression, GAM | [29] |
| Maize | RGB | DJI Phantom 4 Advanced | 0.3 | Resized RGB images | Seedlings | CNN | [72] |
| Maize | RGB | Sony A5100 | 1.96, 20.87 | GNDVI, NDVI, NDREI, REIP, SIPI | Grain yield, Canopy cover, LAI, Relative water content, Ear weight | PLS-R, PLS-DA | [79] |
| | Multispectral | Mini MCA12 Tetracam | | | | | |
| Maize | RGB | DJI Phantom 4 Advanced | 0.82 | DSM | Plant height | LRM, ANOVA | [80] |
| Oat | Multispectral | MicaSense RedEdge-MX | 1.74 | GNDVI, NDVI, NGRDI, RVI, DVI, EVI, CVI, TVI, PSRI, BGI, VARI, GLI | Aboveground biomass | PLSR, SVM, RF, ANN | [68] |
| Oilseed rape, rice, wheat, cotton | RGB | Sony NEX 7 | 0.6 | Canopy reflectance | Fractional vegetation cover | PROSAIL-GP, RF | [81] |
| | Multispectral | MQ022HG-IM-SM5X5-NIR2 Ximea | | | | | |
| Red fescue, Perennial ryegrass, Tall fescue | RGB | Canon ELPH 110 HS, S.O.D.A senseFly | 1.38–2.27 | Texture, Height | Lodging | SVM | [28] |
| Rice | RGB | DJI Phantom 4 Pro | 0.2 | DSM | Biomass, Heading date, Culm length, Grain weight, Panicle number, Panicle length | LME | [30] |
| Rice | RGB | FUJIFILM GFX 100 camera | 0.2 | Resized RGB images | Rice panicle detection | CNN | [74] |
| Rice, Oilseed rape | Multispectral | MQ022HG-IM-SM5X5-NIR2 Ximea | 1.12 | Canopy reflectance, fractional vegetation cover | LAI, Leaf/canopy Chl content, Biomass | PROSAIL, RF | [38] |
| Soybean | RGB | Sony α9 ILCE-9 | 0.6 | DSM | Plant height, canopy cover, LAI | Fitted P-splines | [31] |
| Soybean | RGB | DJI Phantom 4 Pro | 3.4 | Resized RGB images | Yield | CNN | [82] |
| Soybean | RGB | DJI Phantom 4 Pro | 0.35 | Resized RGB images | Seedling detection | CNN | [73] |
| Soybean | Multispectral | MicaSense RedEdge-M | 2.08 | DSM, 36 vegetation indices (e.g., CiGreen, GNDVI, TGI) | Selected or non-selected superior breeding lines by breeders | ANOVA, LASSO, PCA | [83] |
| Sugarcane, weed | RGB | Canon G9X camera | 5 | Resized RGB images | Weed/crop classification | CNN | [75] |
| Sugarcane | Multispectral | MicaSense RedEdge-MX | 1.77 | NDVI, GNDVI, NDREI, RVI, CiGreen, CiRE, DVI, EVI, CVI, TVI, PSRI, BGI, VARI, GLI | Orange and brown rust resistance | SVM, KNN, RF, ANN, DT | [69] |
| Triticale | RGB | DJI Phantom 4 Pro | 0.6 | ExG, PSA, DSM | Early vigor and weed competitiveness | Three-parameter sigmoid equation | [84] |
| Wheat | RGB | DJI Zenmuse X3 | 2.14 | Resized RGB images | Biomass | CNN | [76] |
| Wheat | Multispectral | Parrot Sequoia, MicaSense RedEdge Altum | 1.4–7.1 | Resized images for each band, EVI2 | Yield | LRM, CNN | [77] |
| Wheat | RGB | DJI Zenmuse X5 | 0.5 | VEG, GLI, Spike temperature | Fusarium Head Blight detection | ANOVA, PCA, HSD | [25] |
| | Thermal | DJI Zenmuse XT | | | | | |
| Wheat | RGB | Lumix GX7 Panasonic | 0.94 | GA, GGA, NGRDI, TGI, NDVI | Grain yield | ANOVA, LSD, Bivariate Pearson correlation | [37] |
| | Multispectral | Tetracam Micro MCA12 | | | | | |
| | Thermal | FLIR Tau2 640 | | | | | |
| Wheat | RGB | Canon Powershot 110, Sony NEX5, DJI Zenmuse X5 | 0.7–1.7 | DSM | Plant height | LME | [26] |
| Wheat | RGB | DJI Phantom 4 Pro | 0.7 | NRI, GnyLi, DSM | Biomass, moisture, N concentration, N uptake | LRM, power regression | [85] |
| | VNIR/SWIR | Prototype [86] | 1.3 | | | | |
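
Several of the explanatory variables listed in the table above (NDVI, GNDVI, ExG) are simple band combinations. The sketch below shows one common way to compute them per pixel with NumPy. It is an illustration under stated assumptions rather than the procedure of any cited study: the function names are hypothetical, the inputs are assumed to be co-registered reflectance or digital-number arrays of equal shape, and ExG is taken in its usual chromatic-coordinate form, 2g − r − b.

```python
import numpy as np


def normalized_difference(band_a, band_b):
    """(a - b) / (a + b) per pixel; NaN where the denominator is zero."""
    a = np.asarray(band_a, dtype=float)
    b = np.asarray(band_b, dtype=float)
    denom = a + b
    out = np.full(denom.shape, np.nan)
    np.divide(a - b, denom, out=out, where=denom != 0)
    return out


def ndvi(nir, red):
    """Normalized difference vegetation index: (NIR - Red) / (NIR + Red)."""
    return normalized_difference(nir, red)


def gndvi(nir, green):
    """Green NDVI: (NIR - Green) / (NIR + Green)."""
    return normalized_difference(nir, green)


def excess_green(red, green, blue):
    """Excess green index 2g - r - b on chromatic coordinates.

    With r = R / (R + G + B) and likewise for g and b, this equals
    (2G - R - B) / (R + G + B); fully black pixels give NaN.
    """
    r, g, b = (np.asarray(c, dtype=float) for c in (red, green, blue))
    total = r + g + b
    out = np.full(total.shape, np.nan)
    np.divide(2.0 * g - r - b, total, out=out, where=total != 0)
    return out


# Tiny synthetic example (made-up reflectance values, not study data).
nir = np.array([[0.50, 0.45], [0.30, 0.00]])
red = np.array([[0.10, 0.08], [0.20, 0.00]])
plot_mean_ndvi = np.nanmean(ndvi(nir, red))  # plot-level summary, ignoring NaN
print(round(float(plot_mean_ndvi), 3))
```

Averaging the per-pixel index over a plot's region of interest, as in the last lines, is one simple way to obtain a single explanatory variable per plot; masking soil pixels first is a common refinement that this sketch omits.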

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Tanaka, T.S.T.; Wang, S.; Jørgensen, J.R.; Gentili, M.; Vidal, A.Z.; Mortensen, A.K.; Acharya, B.S.; Beck, B.D.; Gislum, R. Review of Crop Phenotyping in Field Plot Experiments Using UAV-Mounted Sensors and Algorithms. Drones 2024, 8, 212. https://doi.org/10.3390/drones8060212