Drone High-Rise Aerial Delivery with Vertical Grid Screening

Abstract

1. Introduction

2. System Design and Architecture

2.1. System Goals, Challenges, and Model Assumptions

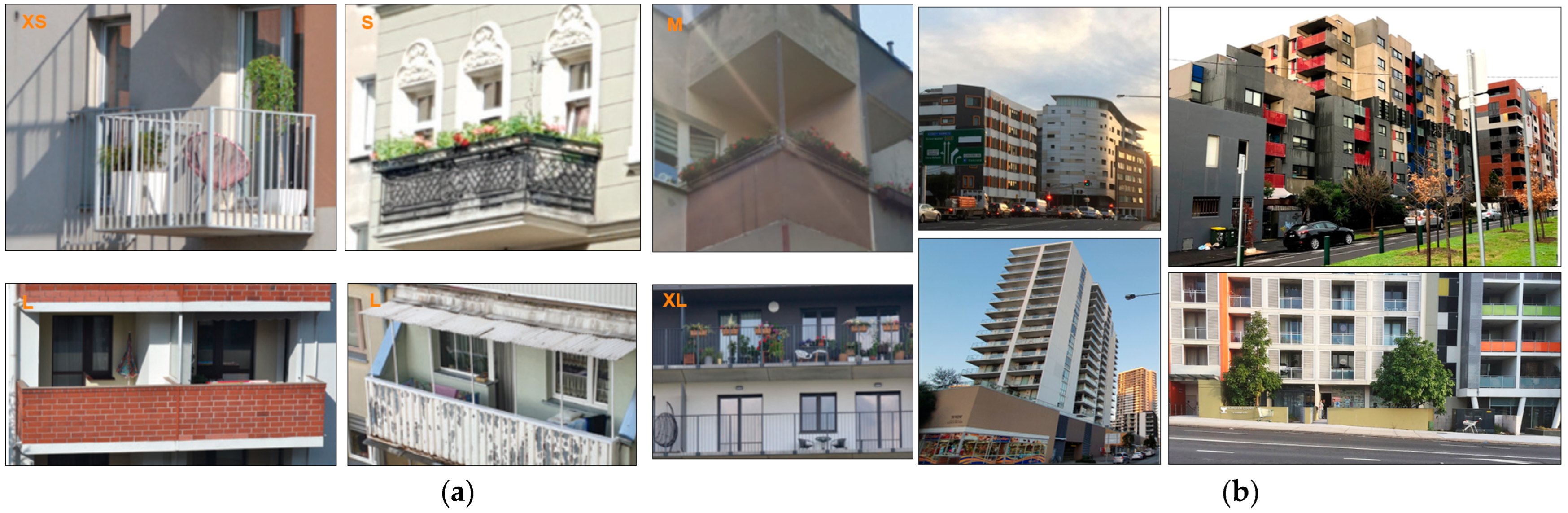

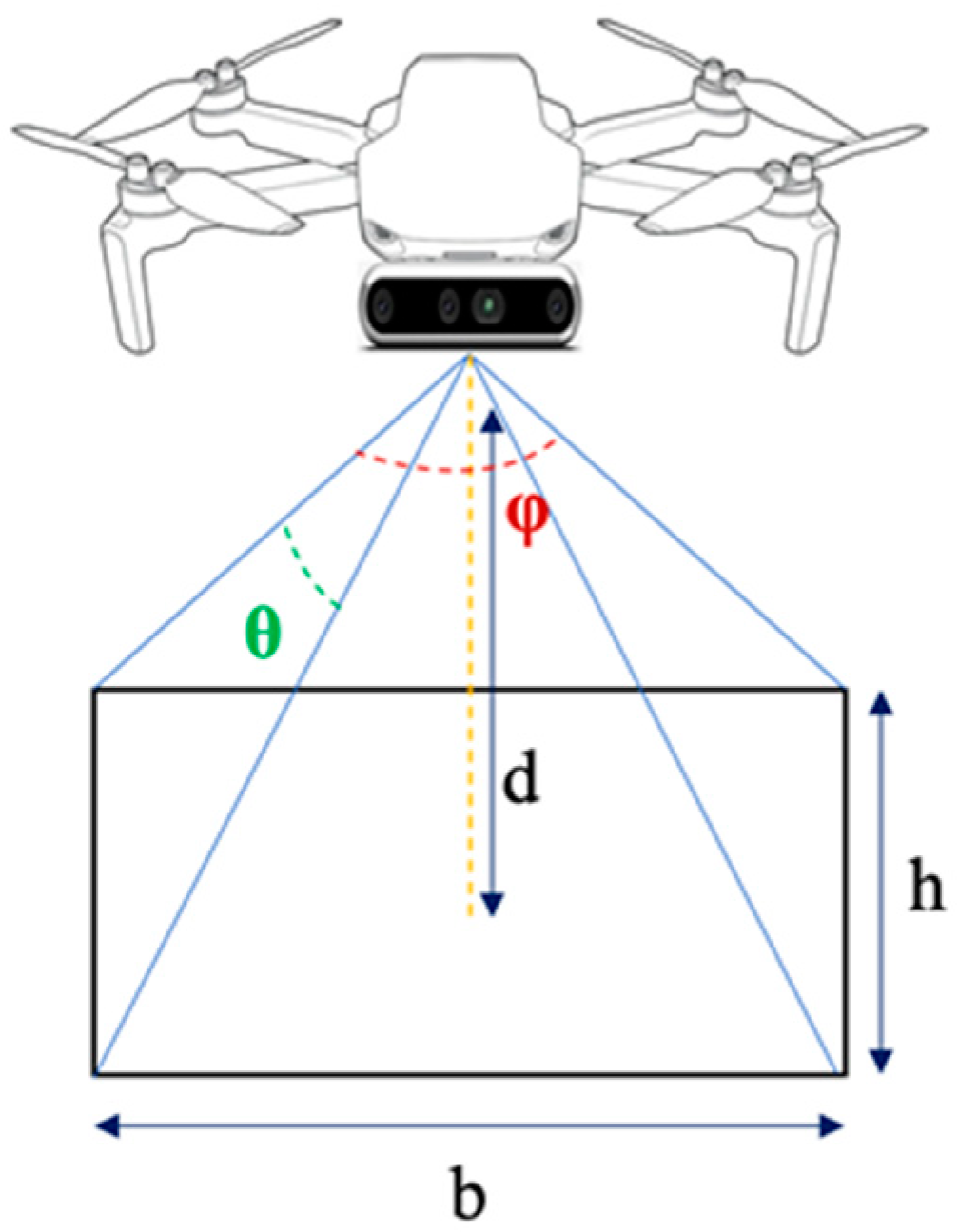

2.2. Vertical Grid Screening

2.3. Marker Detection

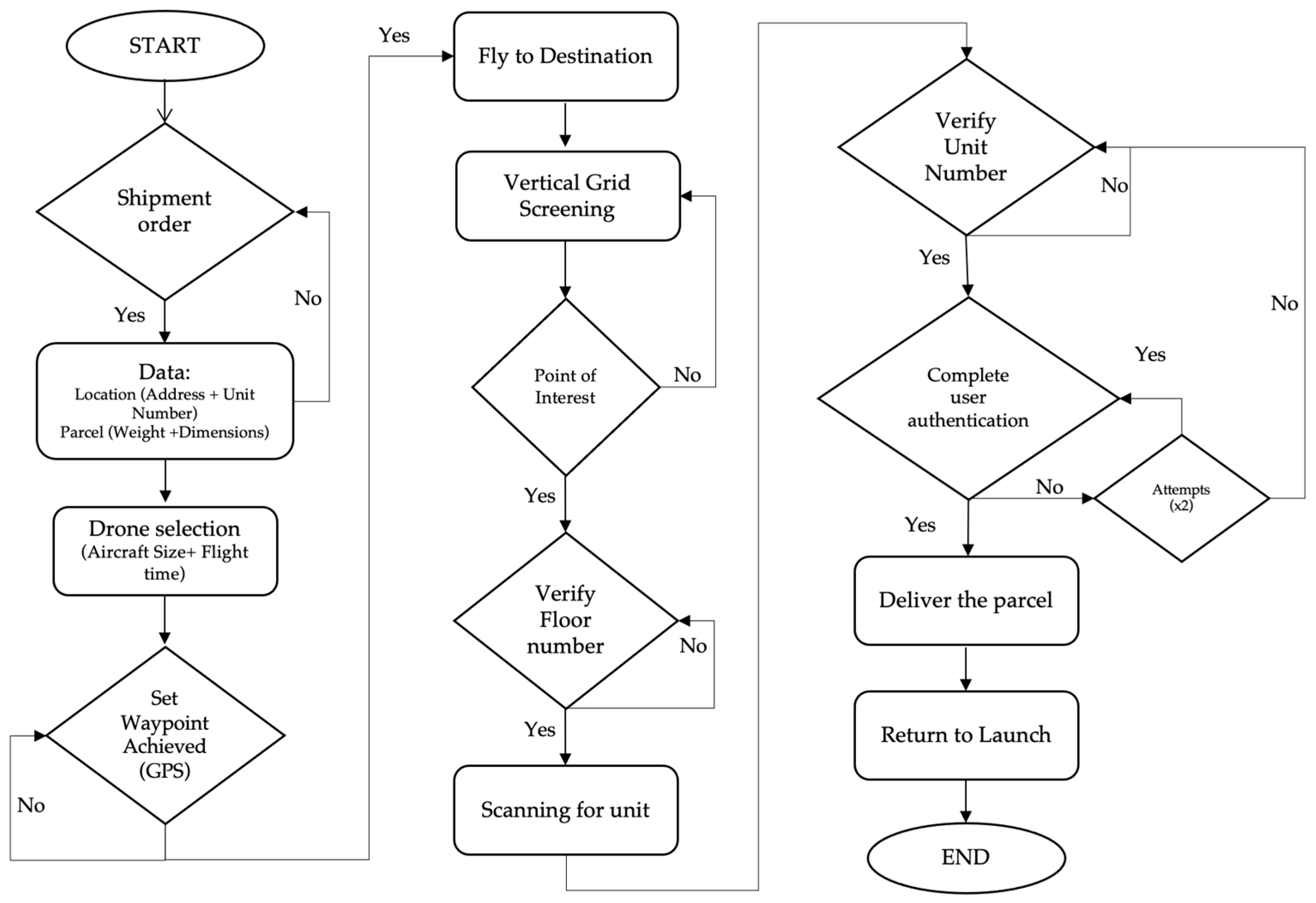

3. End-to-End Drone Vertical Delivery System

3.1. Block Diagram of the Delivery System

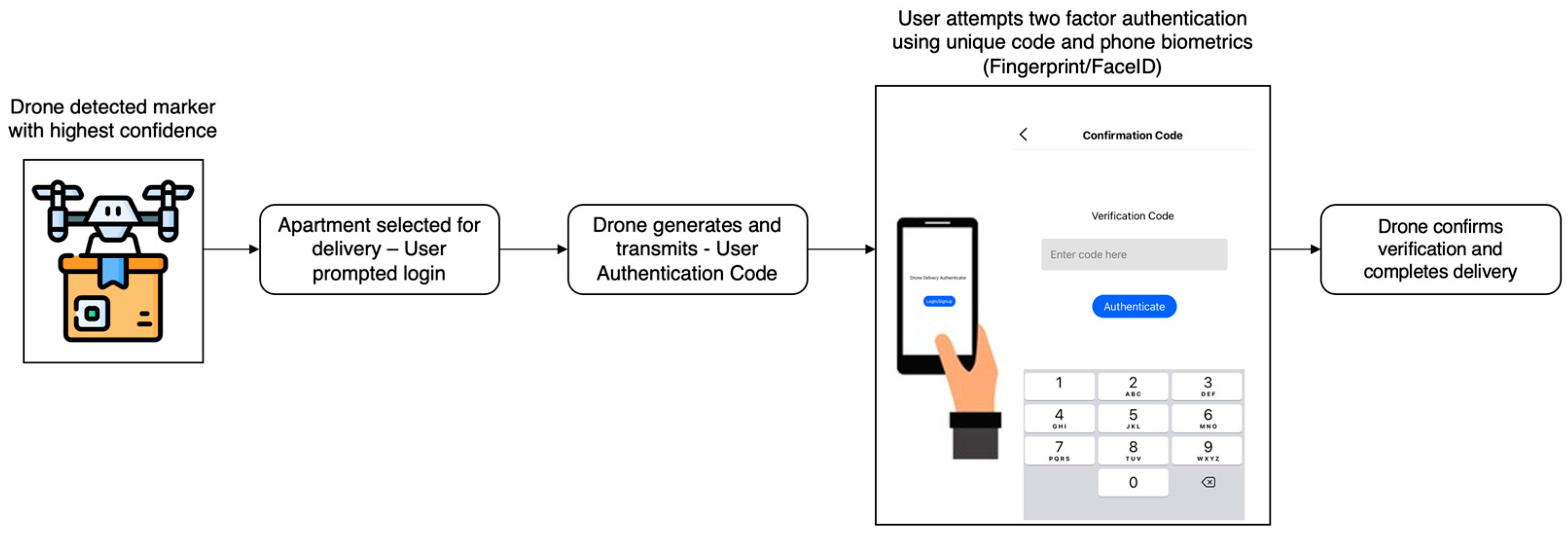

3.2. Verification

4. Drone Vertical Delivery System Implementation

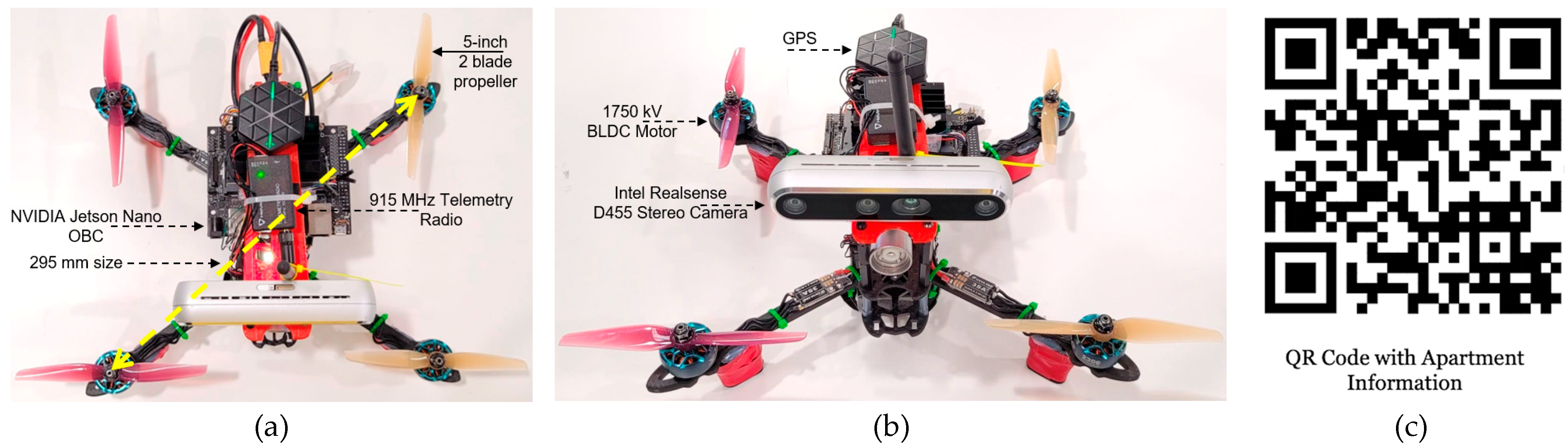

4.1. Drone Specifications

4.2. Types of Onboard Computer

4.3. Marker Detection Subsystem

4.4. Drone Controller Software

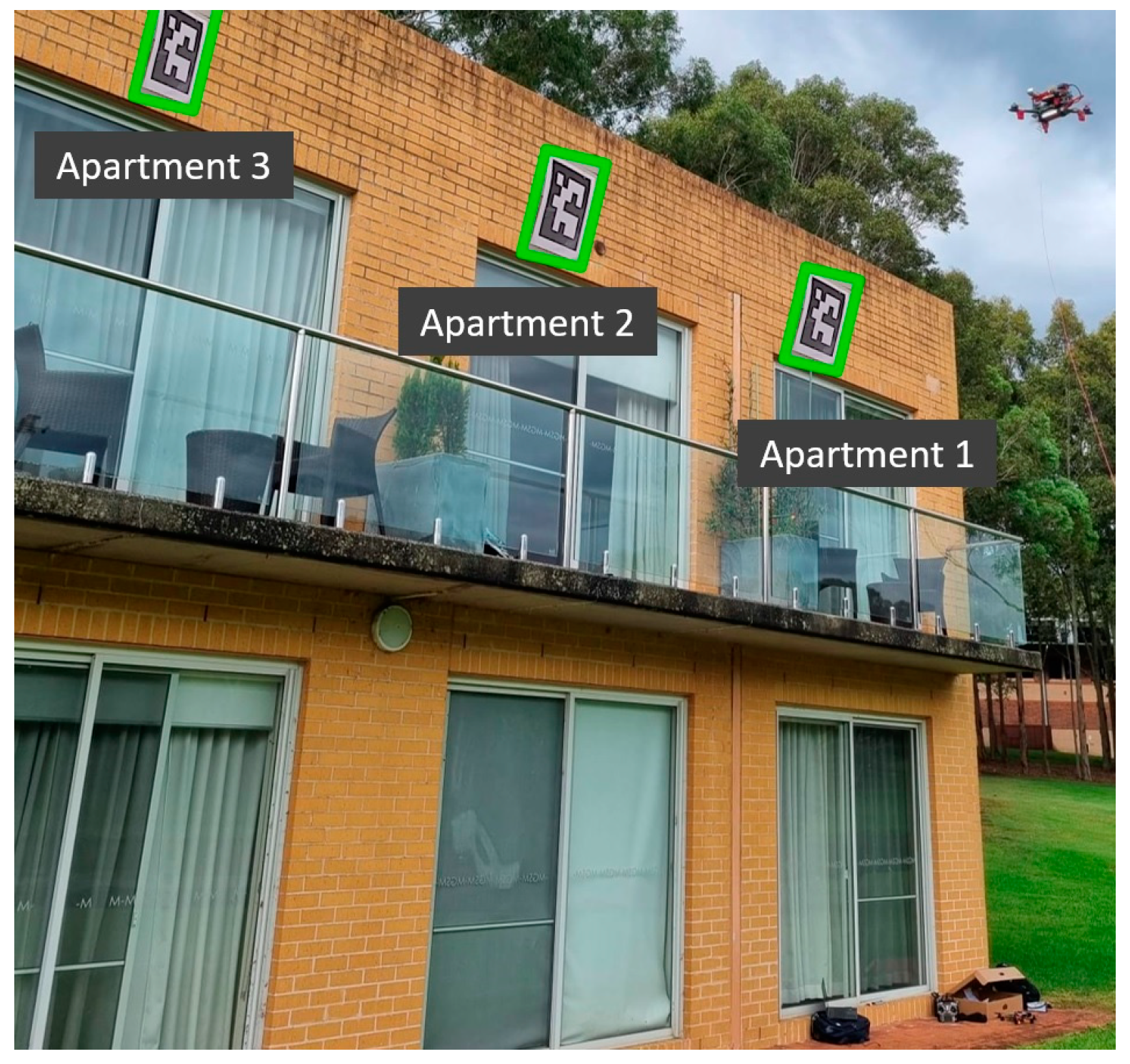

5. Apartment Marker Detection

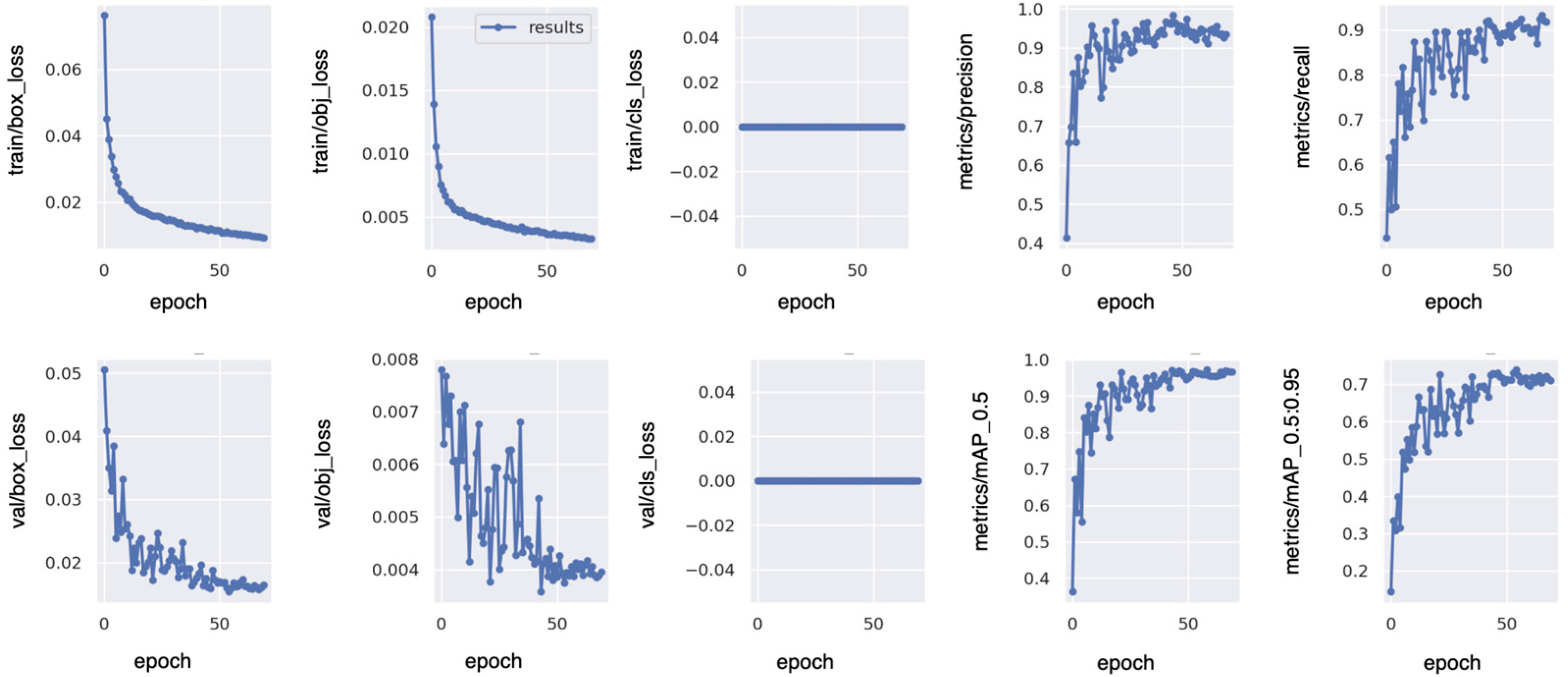

5.1. Model Comparison

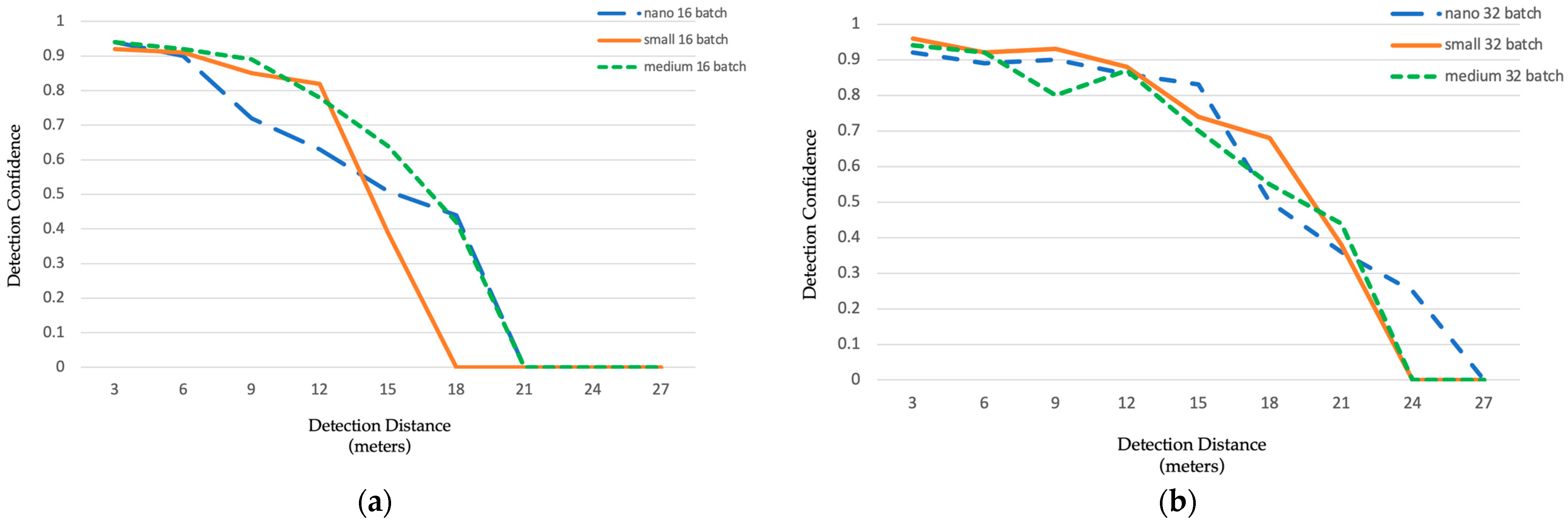

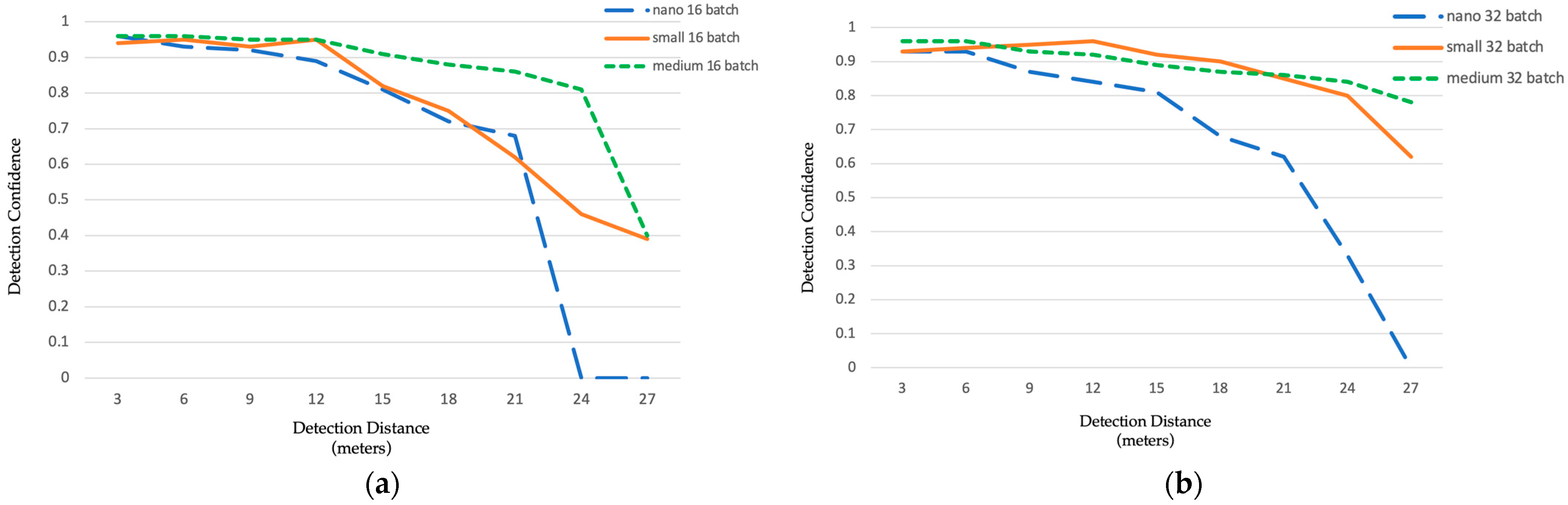

5.2. Marker Detection Performance

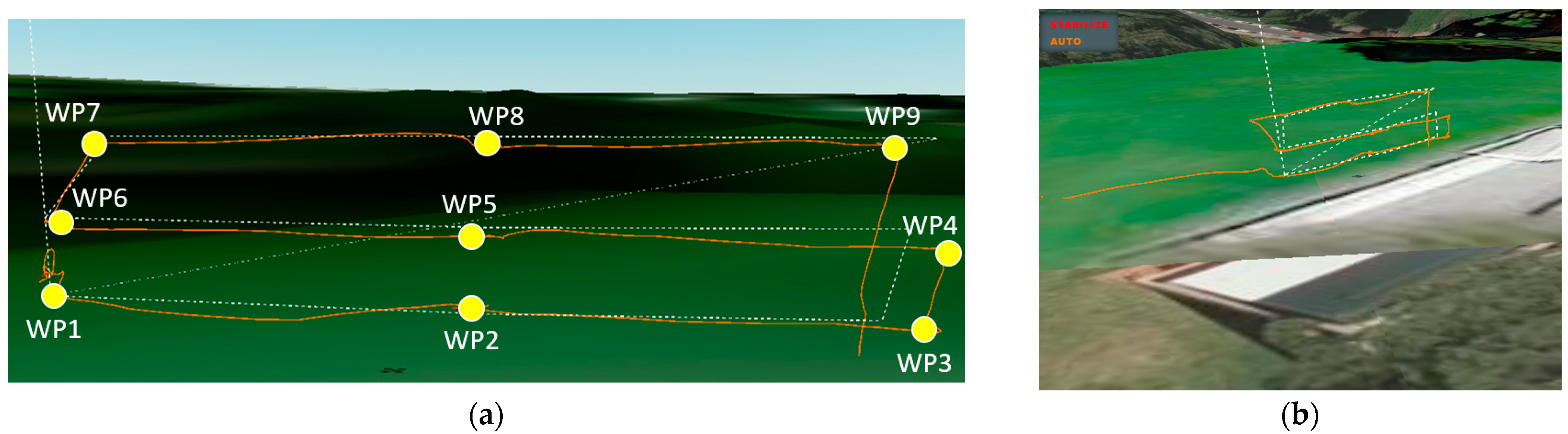

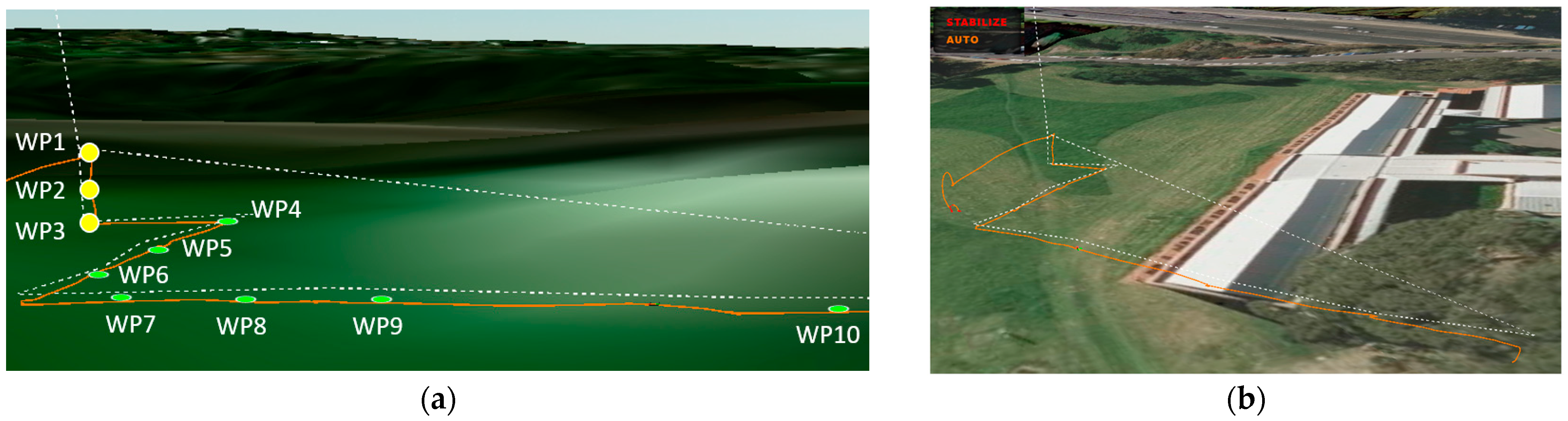

6. Drone Vertical Search Performance

6.1. Formatting of Mathematical Components

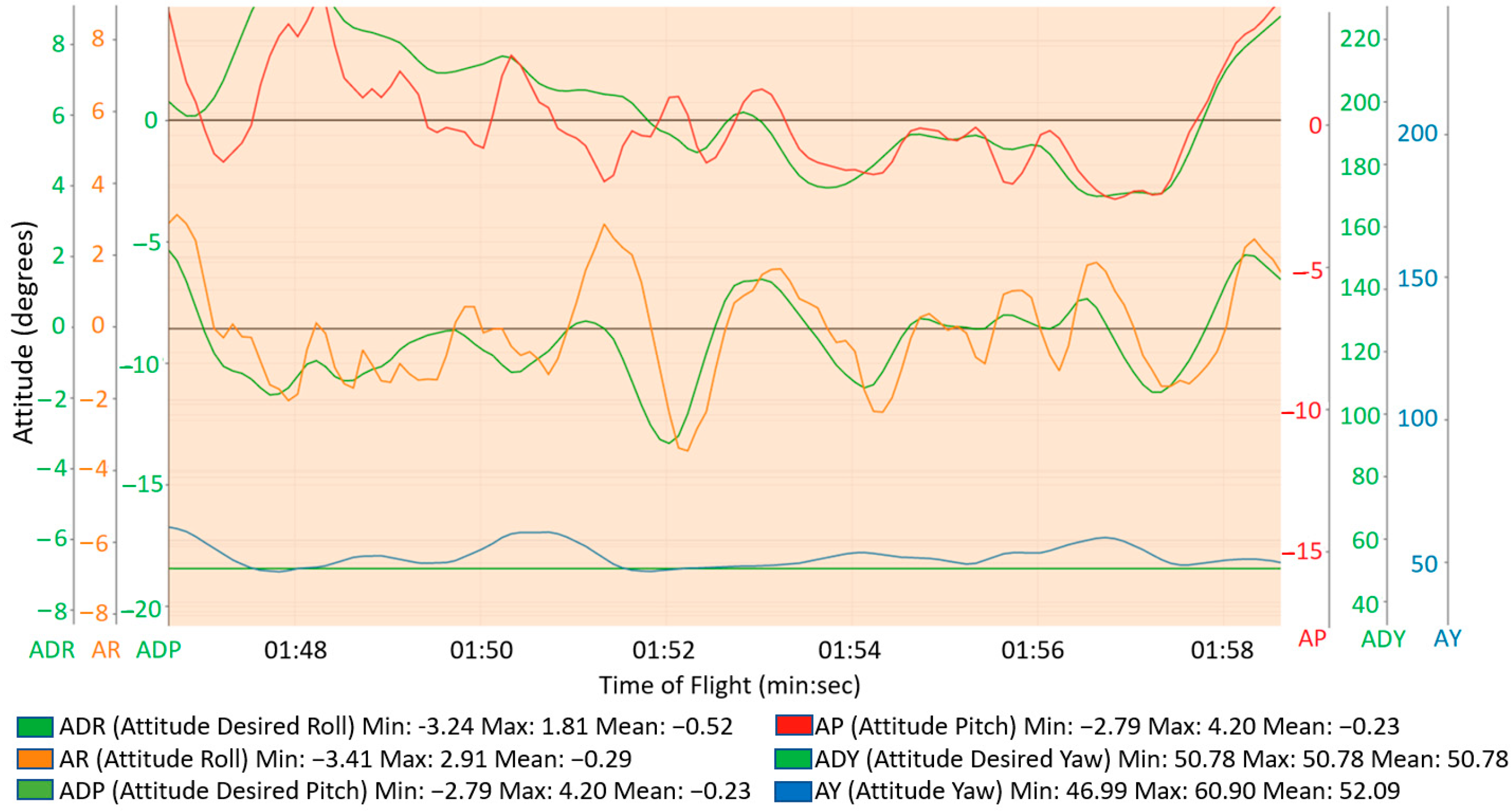

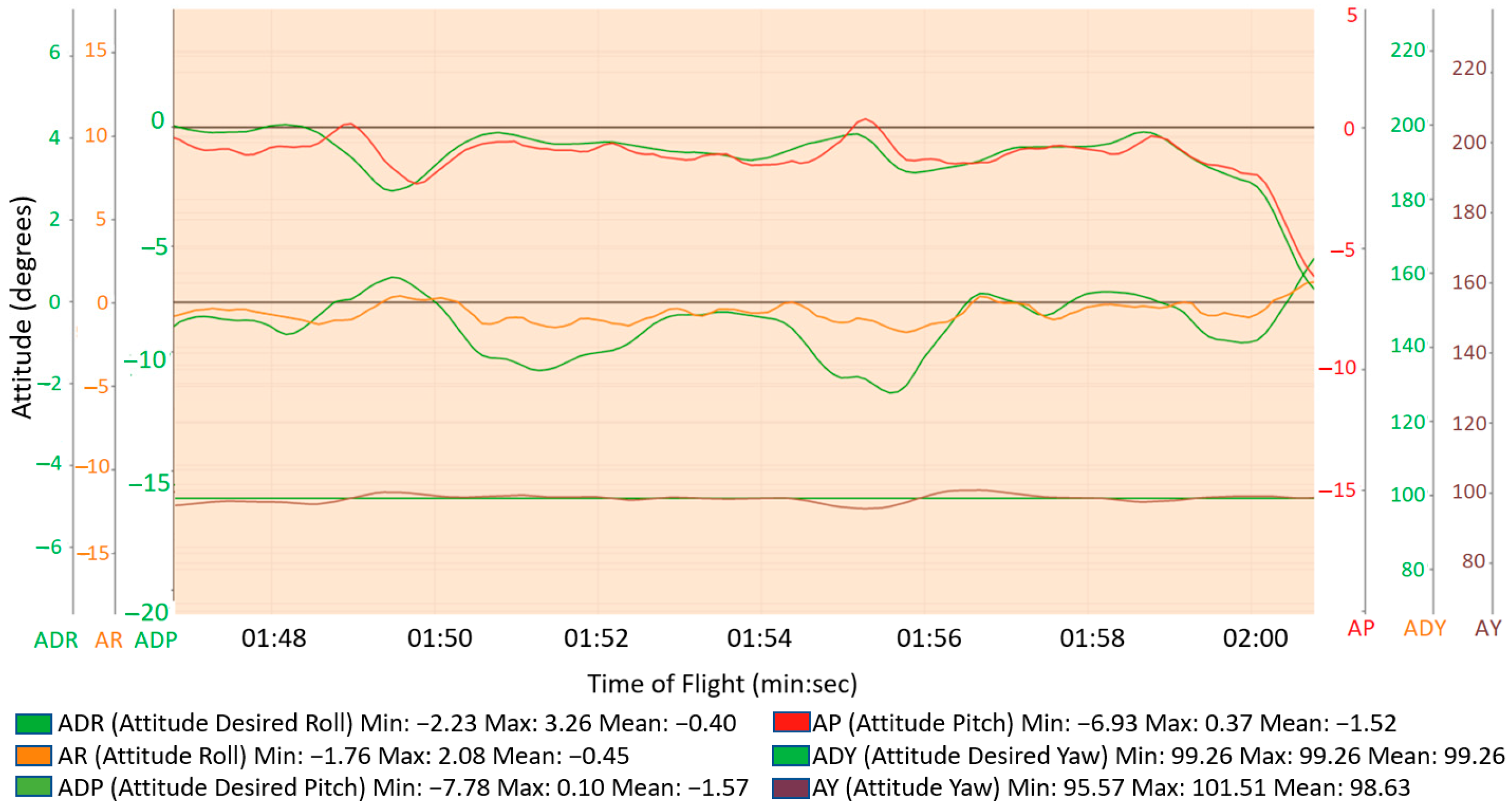

6.2. Outdoor Flight Test Results

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| VGS | Vertical Grid Screening |
| GS | Grid Screening |
| SS | Square Screening |
| YOLO | You Only Look Once (Real-Time Object Detection) |
| UAV | Unmanned Aerial Vehicle |
| ACT | Australian Capital Territory |
| TDRA | Truck Drone Routing Algorithm |
| MILP | Mixed Integer Linear Program |
| GPS | Global Positioning System |
| FoV | Field of View |
| AI | Artificial Intelligence |
| GA | Genetic Algorithm |
| CPP | Coverage Path Planning |
| ArUco | Augmented Reality University of Cordoba |
| mAP | mean Average Precision |
| ROS | Robot Operating System |
| FC | Flight Controller |
| RTL | Return to Launch |
| LiDAR | Light Detection and Ranging |
| OBC | Onboard Computer |
| DoF | Degree of Freedom |
| IMU | Inertial Measurement Unit |
| BLDC | Brushless Direct Current |
| SSH | Secure Shell |
| USB | Universal Serial Bus |
| UART | Universal Asynchronous Receiver-Transmitter serial communication |
| ESC | Electronic Speed Controller |
| ADC | Air Drone Control |

Appendix A. Additional Information

| Specification | Drone System |
|---|---|
| Frame material | Carbon fiber |
| Frame size | 295 mm (width) × 295 mm (length) × 55 mm (height) |
| Propeller size | 5 inches |
| Motor (KV) | 1750 |
| Flight controller | Pixhawk 1 M |
| Telemetry | RadioLink 915 MHz |
| Firmware | ArduPilot 4.3.4 |
| GPS | GPS M8N with compass |
| Max carrying load | 1100 g |
| Onboard computer | NVIDIA Jetson Nano |
| Camera | Intel RealSense D455 |
| Ranging sensor | TF Mini LiDAR |

| Feature | NVIDIA Jetson Nano | Raspberry Pi 4B |
|---|---|---|
| CPU | ARM Cortex-A57 (64-bit) | ARM Cortex-A72 (64-bit) |
| GPU and cores | Maxwell, 128 CUDA cores | VideoCore VI 3D (0 CUDA cores) |
| Memory | 4 GB LPDDR4 | 4 GB LPDDR4 |
| Storage | Micro SD (8 GB to 1 TB) | Micro SD or 16 GB eMMC |
| Power requirements (under load) | 2.56 W to 7.30 W (620 mA to 1430 mA) | 10 W to 20 W (3 A to 6 A) |
| Wireless connectivity | None | Wi-Fi and Bluetooth |
| Board dimensions | 100 × 79 mm | 65 × 56 mm |

| YOLOv5 Model | Avg. Precision (Batch Size 16) | F1 Score (Batch Size 16) | Avg. Precision (Batch Size 32) | F1 Score (Batch Size 32) |
|---|---|---|---|---|
| Nano | 0.798 | 0.82 | 0.85 | 0.872 |
| Small | 0.905 | 0.91 | 0.916 | 0.923 |
| Medium | 0.92 | 0.929 | 0.935 | 0.94 |

| YOLOv5 Model | Detection Time (ms), 480 × 640 Pixels, Batch Size 16 | Detection Time (ms), 480 × 640 Pixels, Batch Size 32 | Detection Time (ms), 960 × 1280 Pixels, Batch Size 16 | Detection Time (ms), 960 × 1280 Pixels, Batch Size 32 |
|---|---|---|---|---|
| Nano | 53 | 54 | 171 | 173 |
| Small | 122 | 123 | 400 | 389 |
| Medium | 287 | 289 | 989 | 995 |
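To put the detection times above in terms of frame rate, the following minimal Python sketch converts the batch-size-16 times into an approximate upper bound on frames per second. It assumes that marker detection is the only per-frame cost and that frames are processed one after another; neither assumption is stated in the table, and the names `DETECTION_MS`, `frames_per_second`, and `throughput` are illustrative only.

```python
# Detection times in milliseconds for batch size 16, copied from the table
# above (first and third data columns). Keys are model name, then resolution.
DETECTION_MS = {
    "Nano": {"480x640": 53, "960x1280": 171},
    "Small": {"480x640": 122, "960x1280": 400},
    "Medium": {"480x640": 287, "960x1280": 989},
}


def frames_per_second(latency_ms: float) -> float:
    """Convert a per-frame latency in milliseconds to frames per second.

    Assumption: one frame is processed at a time and detection dominates
    the per-frame cost, so this is an upper bound, not a measured rate.
    """
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / latency_ms


def throughput(table):
    """Apply frames_per_second to every (model, resolution) entry."""
    return {
        model: {res: frames_per_second(ms) for res, ms in by_res.items()}
        for model, by_res in table.items()
    }


if __name__ == "__main__":
    for model, by_res in throughput(DETECTION_MS).items():
        for res, fps in by_res.items():
            print(f"{model:<6} {res:<9} {fps:5.1f} frames/s")
```

Under these assumptions, the Nano model at 480 × 640 pixels stays below 20 frames per second, and the Medium model at 960 × 1280 pixels processes roughly one frame per second.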

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Seth, A.; James, A.; Kuantama, E.; Mukhopadhyay, S.; Han, R. Drone High-Rise Aerial Delivery with Vertical Grid Screening. Drones 2023, 7, 300. https://doi.org/10.3390/drones7050300
