Vision-Based Navigation Techniques for Unmanned Aerial Vehicles: Review and Challenges

Abstract

1. Introduction

1.1. Contributions of This Study

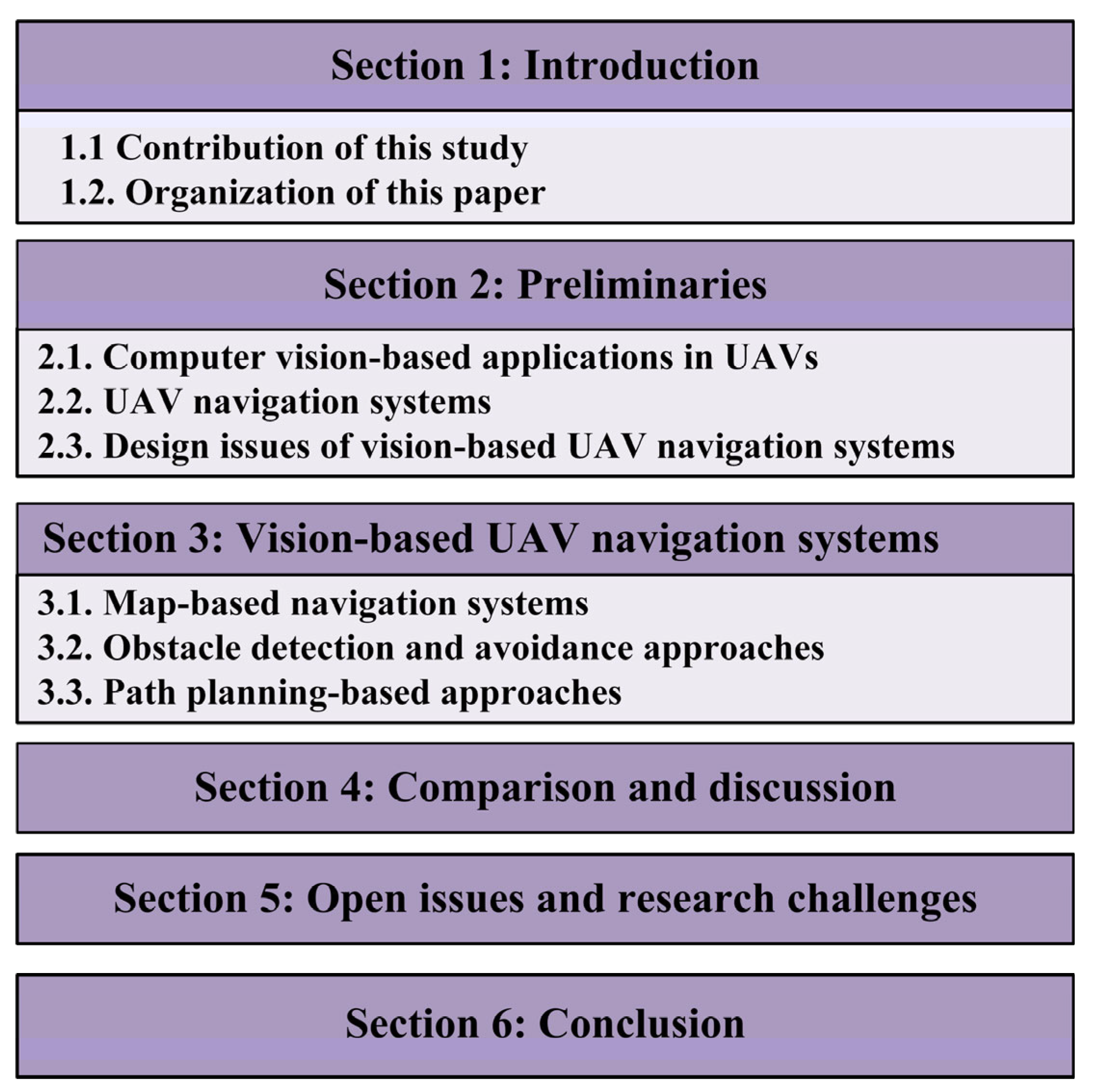

1.2. Organization of This Paper

2. Preliminaries

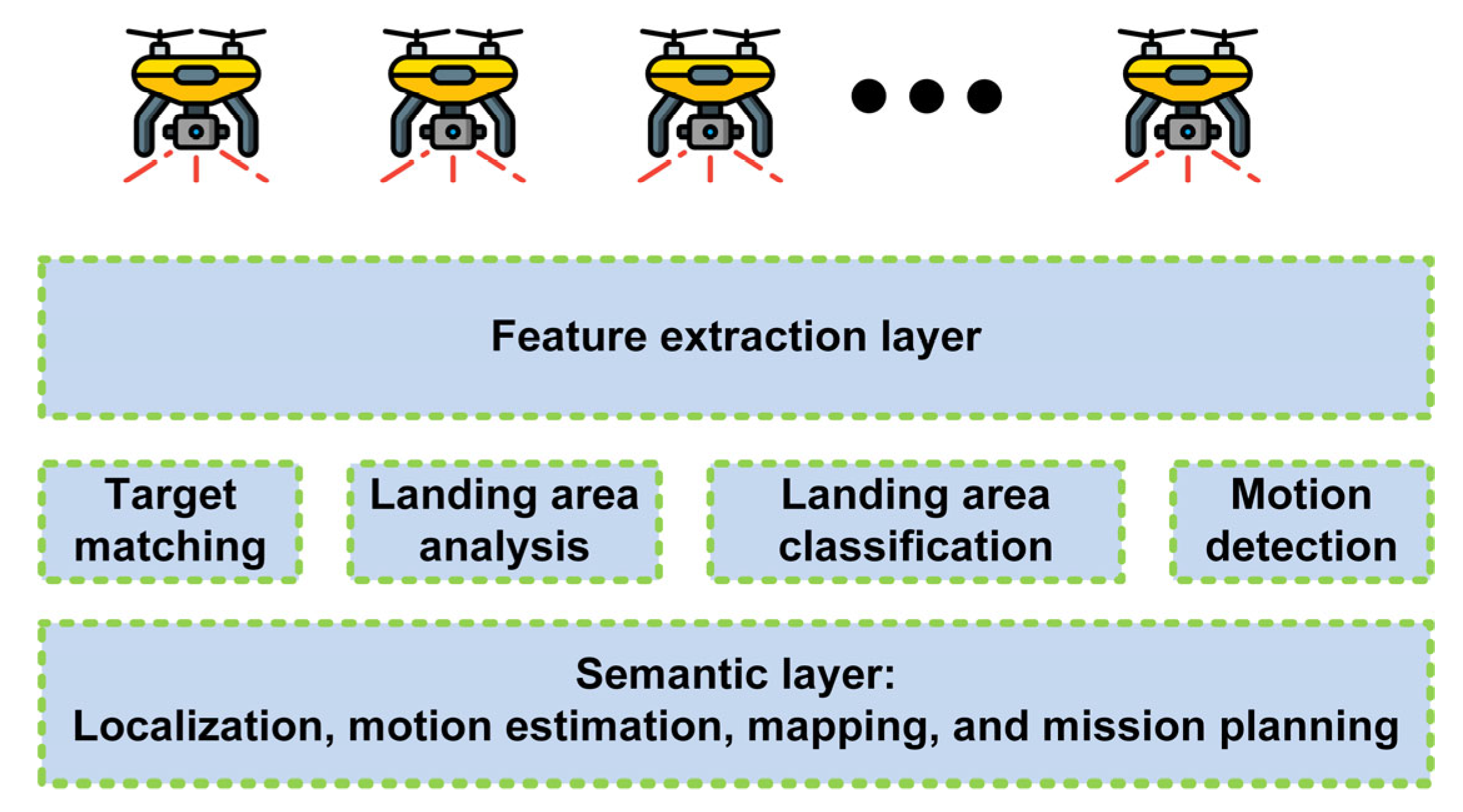

2.1. Computer Vision-Based Applications in UAVs

2.2. UAV Navigation Systems

2.2.1. Pose Estimation

GPS

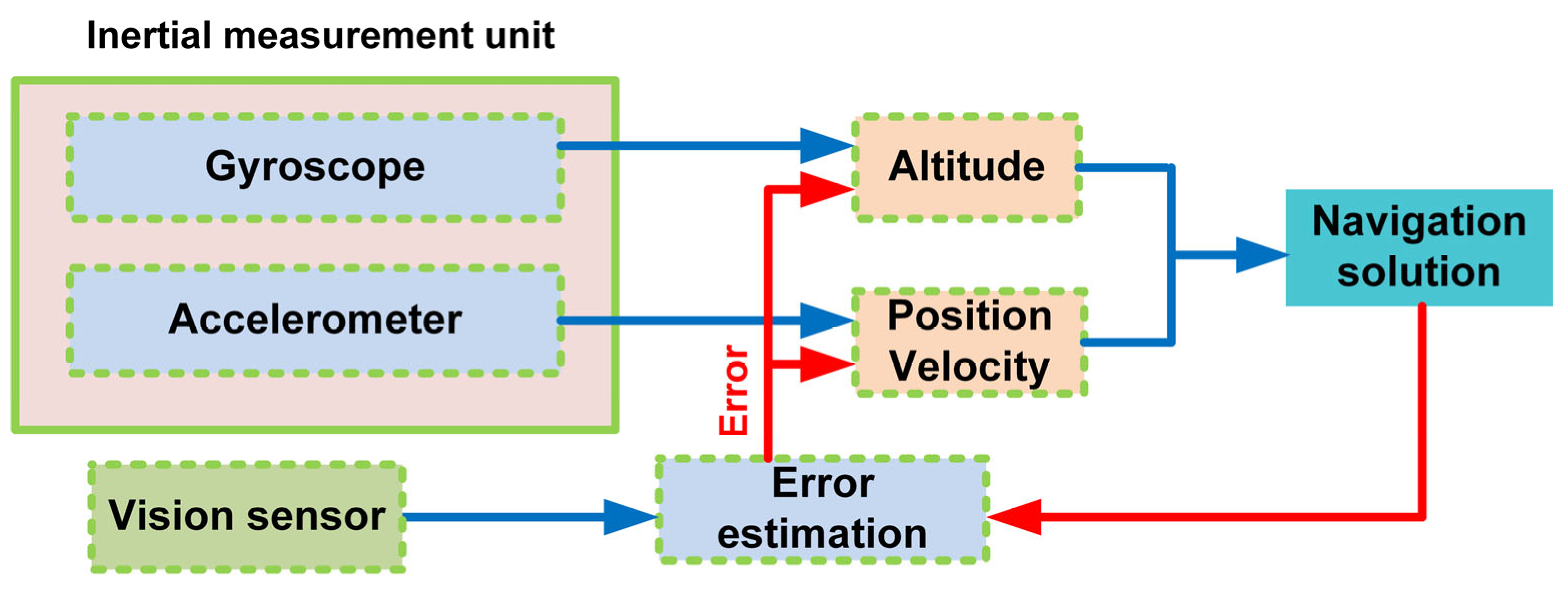

GPS-Aided Systems

Vision-Based Systems

2.2.2. Visual Obstacle Detection and Avoidance

2.2.3. Visual Servoing

2.3. Design Issues of Vision-Based UAV Navigation Systems

2.3.1. Accuracy

2.3.2. Availability

2.3.3. Complexity and Cost

2.3.4. Generalization

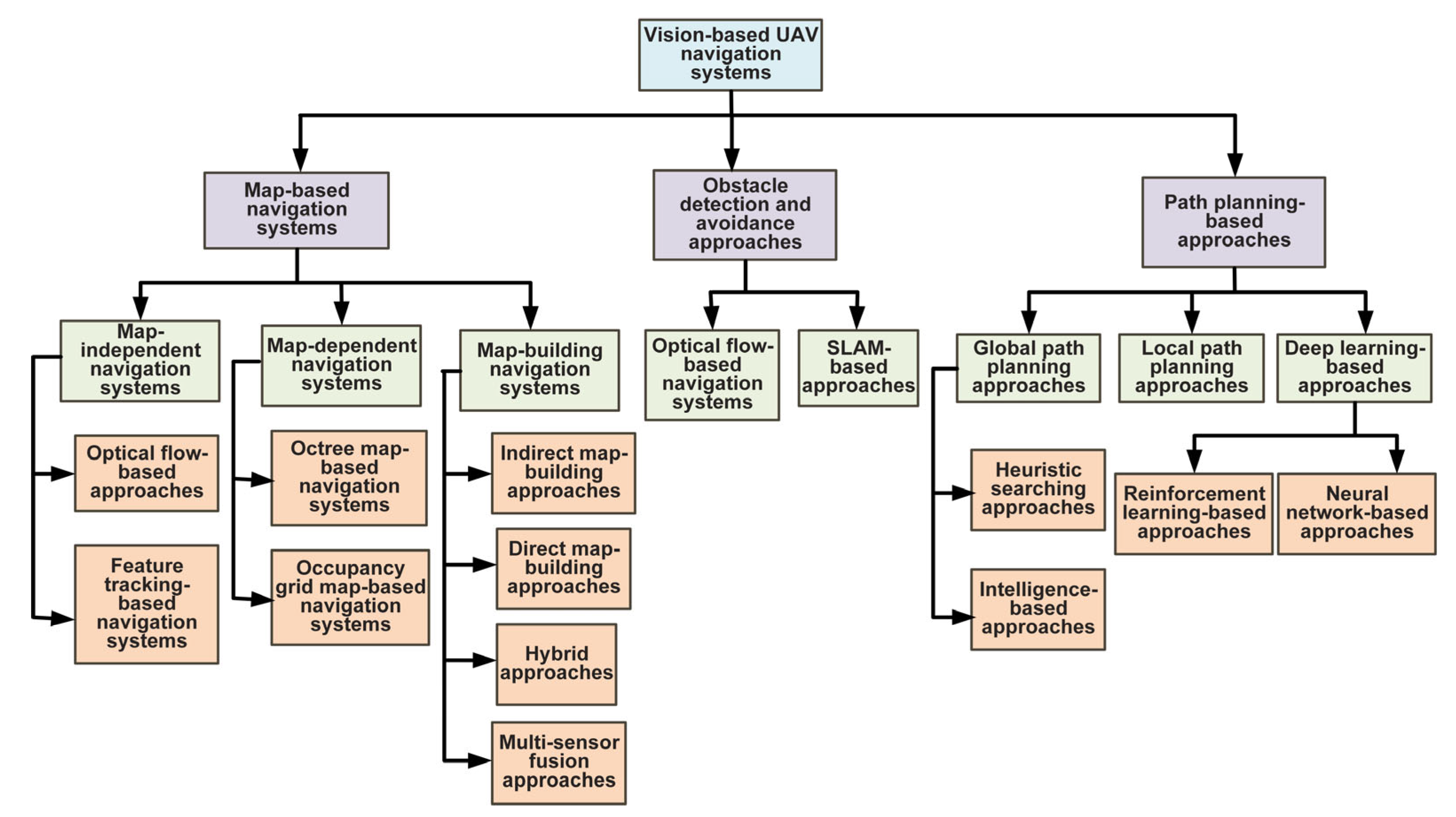

3. Vision-Based UAV Navigation Systems

3.1. Map-Based Navigation Systems

3.1.1. Map-Independent Navigation System

3.1.2. Map-Dependent Navigation Systems

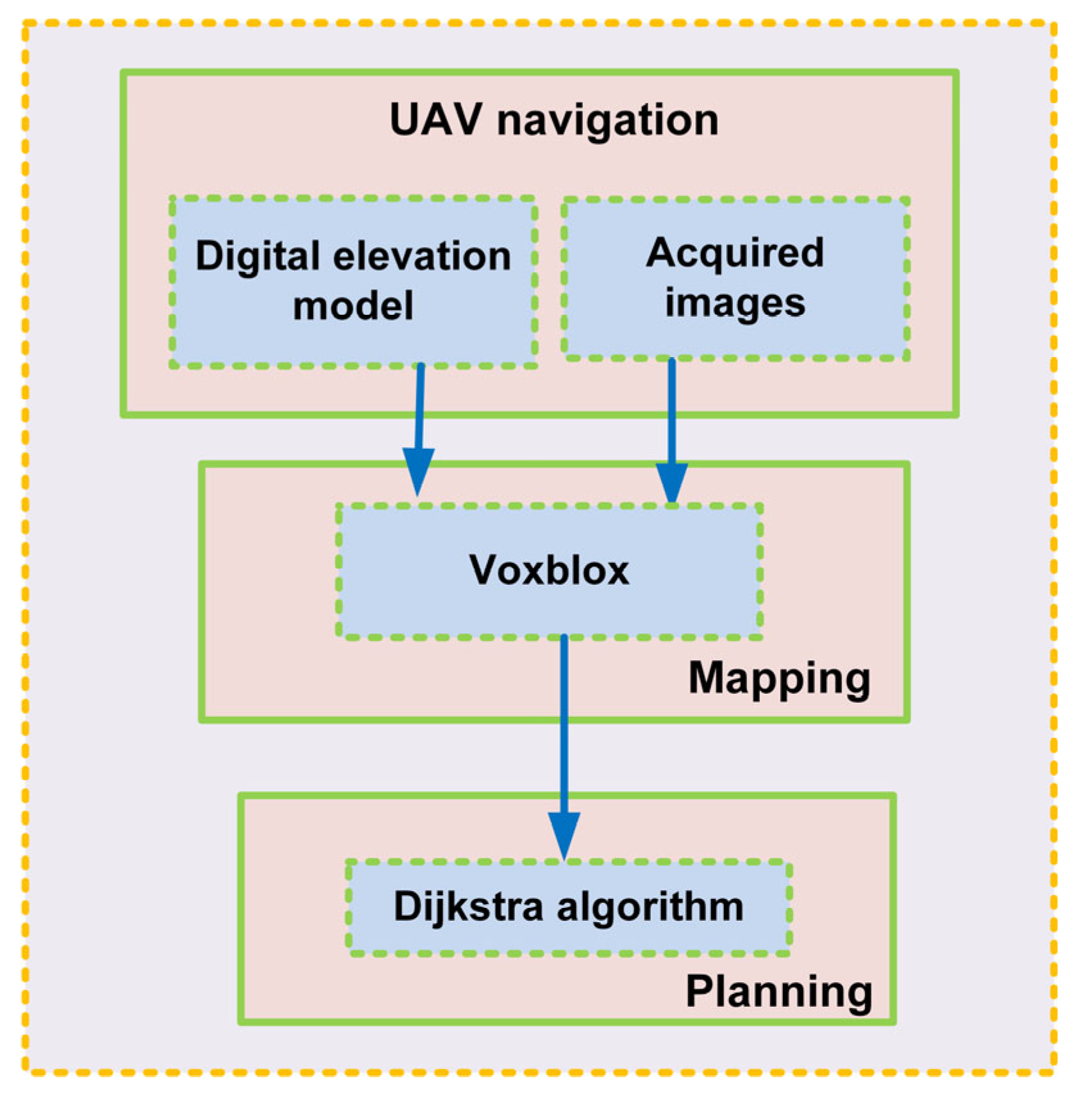

3.1.3. Map-Building-Based Navigation Systems

Indirect Map-Building Approaches

Direct Map-Building-Based Approaches

Hybrid Approaches

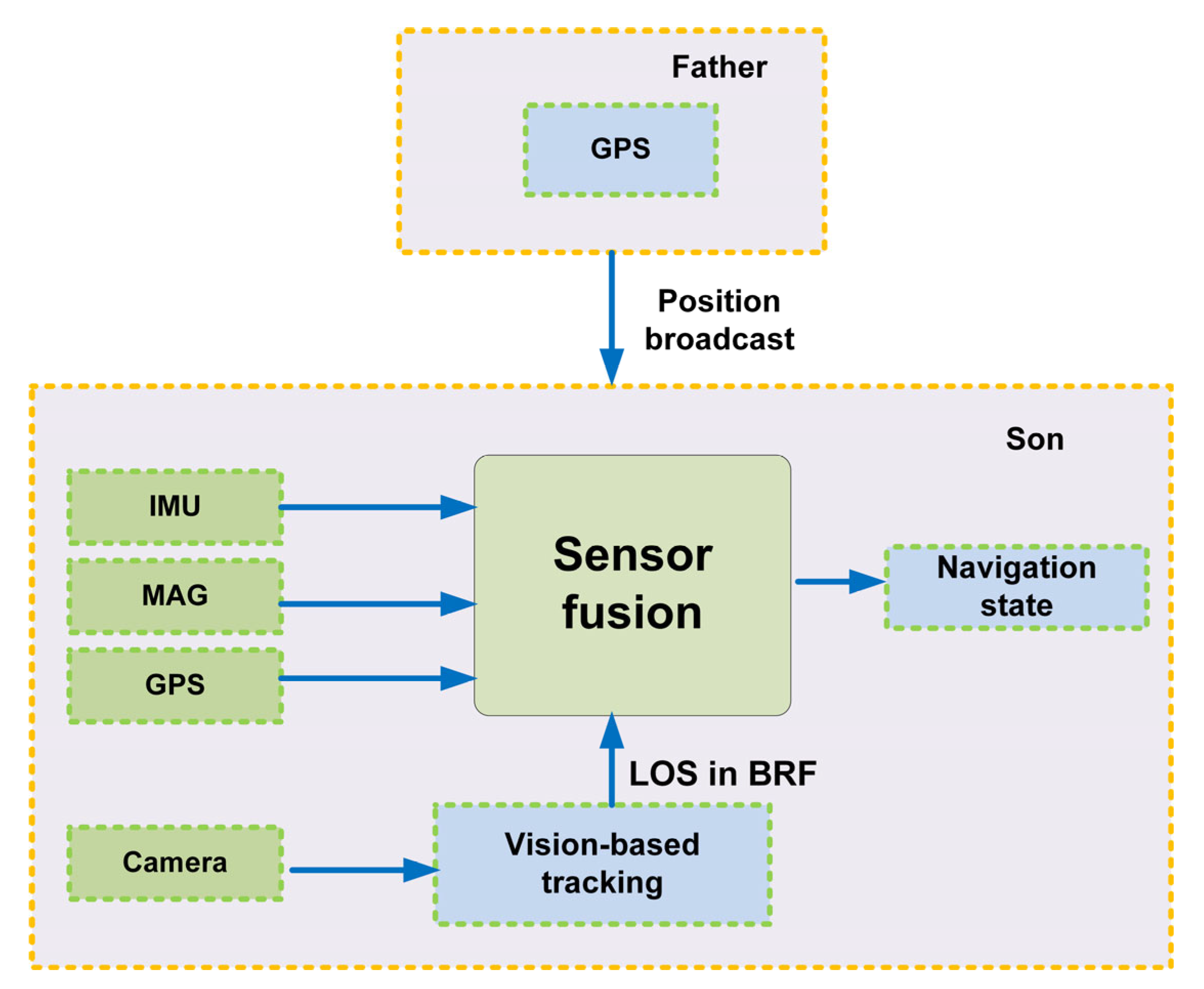

Multi-Sensor Fusion Approaches

3.2. Obstacle Detection and Avoidance Approaches

3.2.1. Optical Flow-Based Approaches

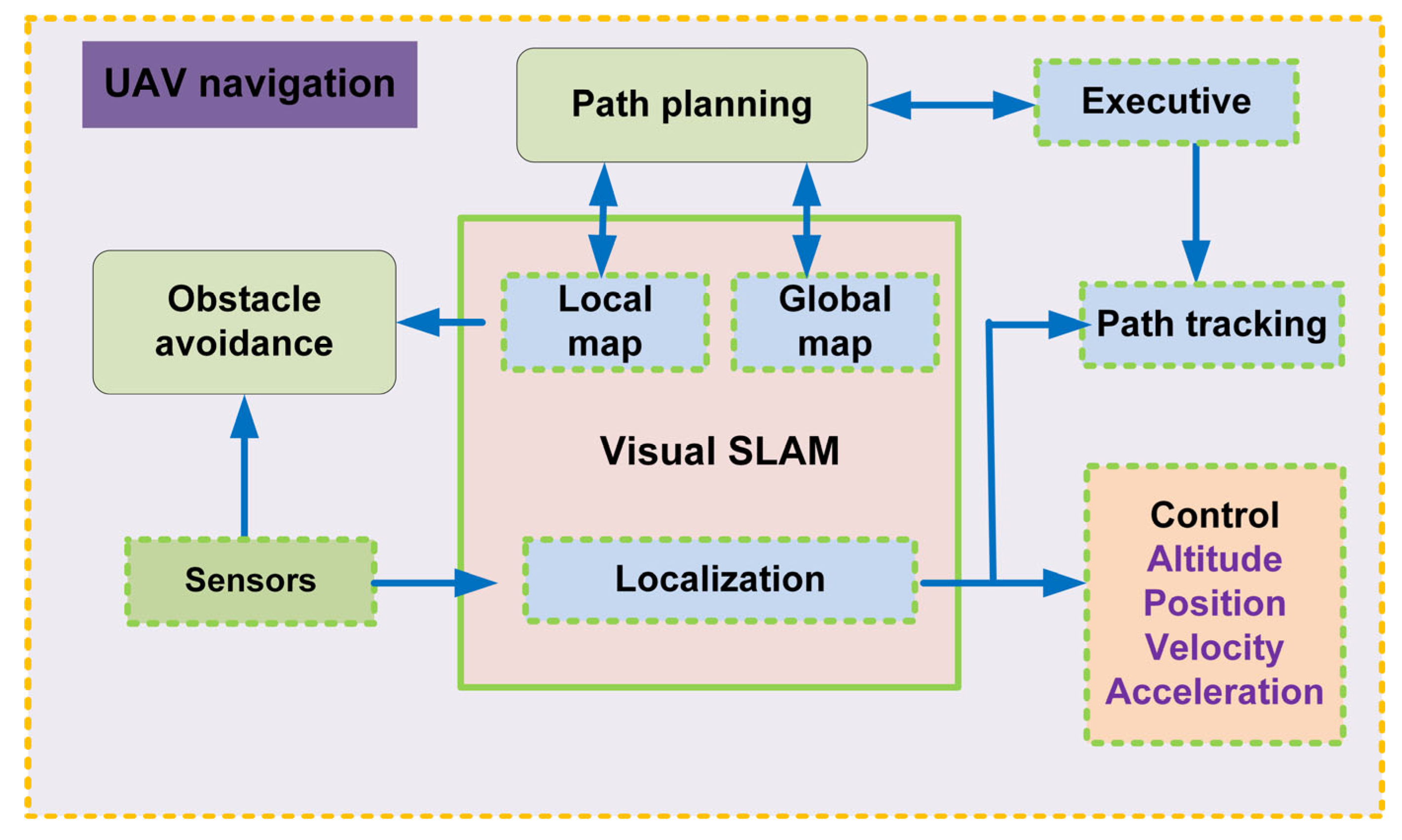

3.2.2. SLAM-Based Approaches

3.3. Path-Planning-Based Approaches

3.3.1. Global Path-Planning Approaches

Heuristic Searching Methods

Intelligence-Based Approaches

3.3.2. Local Path-Planning Approaches

3.3.3. Deep Learning-Based Approaches

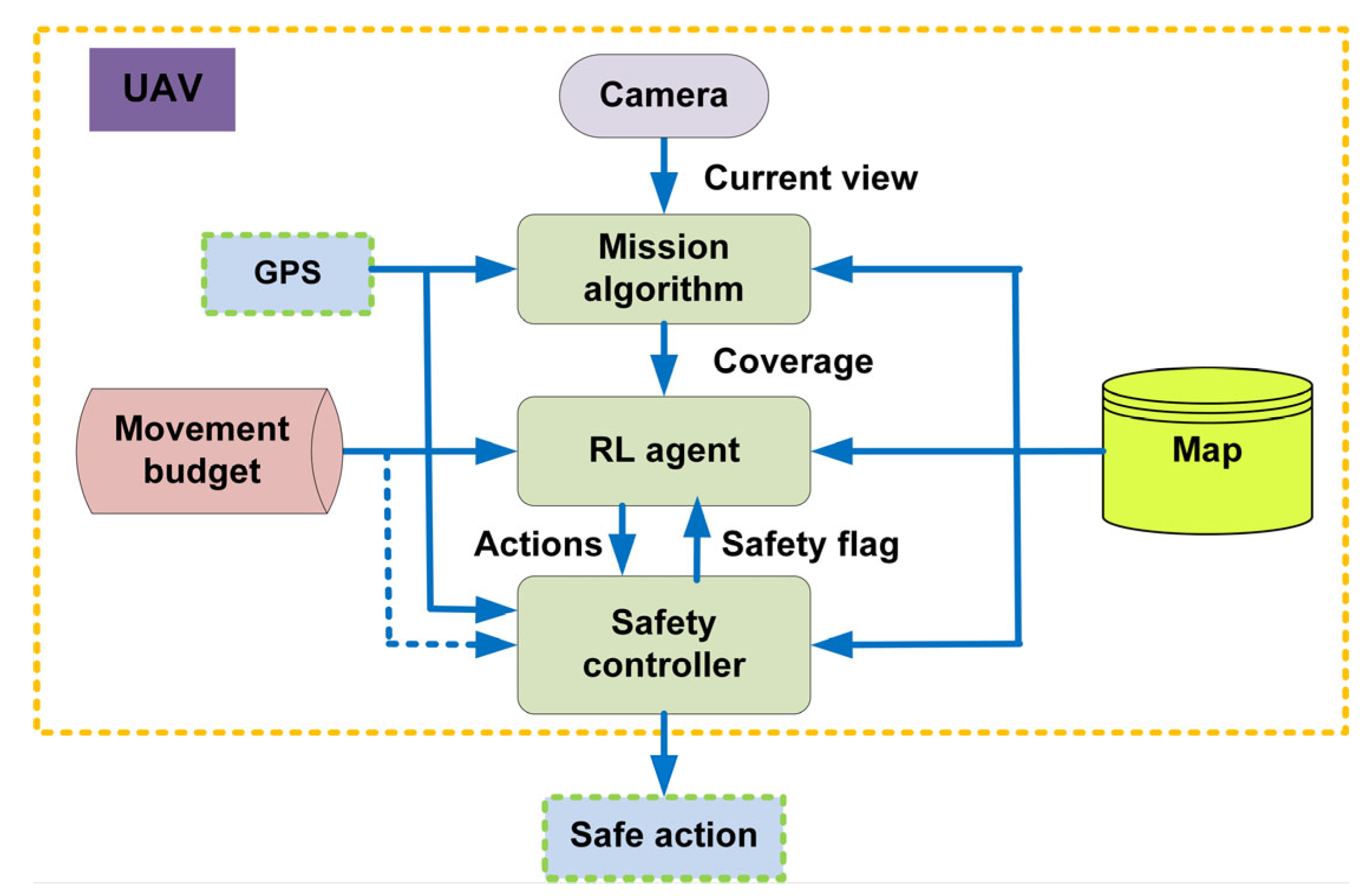

Reinforcement Learning (RL)-Based Approaches

Neural Network-Based Approaches

4. Comparison and Discussion

5. Open Issues and Research Challenges

5.1. Scalability

5.2. Computational Power

5.3. Reliability

5.4. Robustness

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Wei, Z.; Zhu, M.; Zhang, N.; Wang, L.; Zou, Y.; Meng, Z.; Wu, H.; Feng, Z. UAV-assisted data collection for internet of things: A survey. IEEE Internet Things J. 2022, 9, 15460–15483. [Google Scholar] [CrossRef]

- Arafat, M.Y.; Moh, S. Routing protocols for Unmanned Aerial Vehicle Networks: A survey. IEEE Access 2019, 7, 99694–99720. [Google Scholar] [CrossRef]

- Alam, M.M.; Arafat, M.Y.; Moh, S.; Shen, J. Topology control algorithms in multi-unmanned aerial vehicle networks: An extensive survey. J. Netw. Comput. Appl. 2022, 207, 103495. [Google Scholar] [CrossRef]

- Poudel, S.; Moh, S. Task assignment algorithms for Unmanned Aerial Vehicle Networks: A comprehensive survey. Veh. Commun. 2022, 35, 100469. [Google Scholar] [CrossRef]

- Sonkar, S.; Kumar, P.; George, R.C.; Yuvaraj, T.P.; Philip, D.; Ghosh, A.K. Real-time object detection and recognition using fixed-wing Lale VTOL UAV. IEEE Sens. J. 2022, 22, 20738–20747. [Google Scholar] [CrossRef]

- Arafat, M.Y.; Moh, S. Localization and clustering based on swarm intelligence in UAV Networks for Emergency Communications. IEEE Internet Things J. 2019, 6, 8958–8976. [Google Scholar] [CrossRef]

- Alam, M.M.; Moh, S. Joint Topology Control and routing in a UAV swarm for crowd surveillance. J. Netw. Comput. Appl. 2022, 204, 103427. [Google Scholar] [CrossRef]

- Kanellakis, C.; Nikolakopoulos, G. Survey on computer vision for uavs: Current developments and trends. J. Intell. Robot. Syst. 2017, 87, 141–168. [Google Scholar] [CrossRef]

- Al-Kaff, A.; Martín, D.; García, F.; de Escalera, A.; María Armingol, J. Survey of computer vision algorithms and applications for unmanned aerial vehicles. Expert Syst. Appl. 2018, 92, 447–463. [Google Scholar] [CrossRef]

- Arafat, M.Y.; Moh, S. Bio-inspired approaches for energy-efficient localization and clustering in UAV networks for monitoring wildfires in remote areas. IEEE Access 2021, 9, 18649–18669. [Google Scholar] [CrossRef]

- Wang, Y.; Wang, H.; Liu, B.; Liu, Y.; Wu, J.; Lu, Z. A visual navigation framework for the aerial recovery of uavs. IEEE Trans. Instrum. Meas. 2021, 70, 5019713. [Google Scholar] [CrossRef]

- Arafat, M.Y.; Moh, S. Location-aided delay tolerant routing protocol in UAV networks for Post-Disaster Operation. IEEE Access 2018, 6, 59891–59906. [Google Scholar] [CrossRef]

- Miclea, V.-C.; Nedevschi, S. Monocular depth estimation with improved long-range accuracy for UAV environment perception. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5602215. [Google Scholar] [CrossRef]

- Zhao, X.; Pu, F.; Wang, Z.; Chen, H.; Xu, Z. Detection, tracking, and geolocation of moving vehicle from UAV using monocular camera. IEEE Access 2019, 7, 101160–101170. [Google Scholar] [CrossRef]

- Wilson, A.N.; Kumar, A.; Jha, A.; Cenkeramaddi, L.R. Embedded Sensors, Communication Technologies, computing platforms and Machine Learning for uavs: A Review. IEEE Sens. J. 2022, 22, 1807–1826. [Google Scholar] [CrossRef]

- Yang, T.; Li, Z.; Zhang, F.; Xie, B.; Li, J.; Liu, L. Panoramic UAV surveillance and recycling system based on structure-free camera array. IEEE Access 2019, 7, 25763–25778. [Google Scholar] [CrossRef]

- Arafat, M.Y.; Moh, S. A q-learning-based topology-aware routing protocol for flying ad hoc networks. IEEE Internet Things J. 2022, 9, 1985–2000. [Google Scholar] [CrossRef]

- Tang, Y.; Hu, Y.; Cui, J.; Liao, F.; Lao, M.; Lin, F.; Teo, R.S. Vision-aided multi-uav autonomous flocking in GPS-denied environment. IEEE Trans. Ind. Electron. 2019, 66, 616–626. [Google Scholar] [CrossRef]

- Qian, J.; Pei, L.; Zou, D.; Liu, P. Optical flow-based gait modeling algorithm for pedestrian navigation using smartphone sensors. IEEE Sens. J. 2015, 15, 6797–6804. [Google Scholar] [CrossRef]

- Qian, J.; Chen, K.; Chen, Q.; Yang, Y.; Zhang, J.; Chen, S. Robust visual-lidar simultaneous localization and mapping system for UAV. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6502105. [Google Scholar] [CrossRef]

- Arafat, M.Y.; Moh, S. A survey on cluster-based routing protocols for Unmanned Aerial Vehicle Networks. IEEE Access 2019, 7, 498–516. [Google Scholar] [CrossRef]

- De Lucena, A.N.; Da Silva, B.M.; Goncalves, L.M. Double hybrid tailsitter unmanned aerial vehicle with vertical takeoff and landing. IEEE Access 2022, 10, 32938–32953. [Google Scholar] [CrossRef]

- Diels, L.; Vlaminck, M.; De Wit, B.; Philips, W.; Luong, H. On the optimal mounting angle for a spinning lidar on a UAV. IEEE Sens. J. 2022, 22, 21240–21247. [Google Scholar] [CrossRef]

- Arafat, M.Y.; Moh, S. JRCS: Joint Routing and charging strategy for logistics drones. IEEE Internet Things J. 2022, 9, 21751–21764. [Google Scholar] [CrossRef]

- Shakhatreh, H.; Sawalmeh, A.H.; Al-Fuqaha, A.; Dou, Z.; Almaita, E.; Khalil, I.; Othman, N.S.; Khreishah, A.; Guizani, M. Unmanned Aerial Vehicles (uavs): A survey on civil applications and key research challenges. IEEE Access 2019, 7, 48572–48634. [Google Scholar] [CrossRef]

- Cho, O.-H.; Ban, K.-J.; Kim, E.-K. Stabilized UAV flight system design for Structure Safety Inspection. In Proceedings of the 16th International Conference on Advanced Communication Technology, PyeongChang, Republic of Korea, 16–19 February 2014. [Google Scholar] [CrossRef]

- Arafat, M.Y.; Poudel, S.; Moh, S. Medium access control protocols for flying Ad Hoc Networks: A Review. IEEE Sens. J. 2021, 21, 4097–4121. [Google Scholar] [CrossRef]

- Li, B.; Mu, C.; Wu, B. A survey of vision based autonomous aerial refueling for unmanned aerial vehicles. In Proceedings of the 2012 Third International Conference on Intelligent Control and Information Processing, Dalian, China, 15–17 July 2012. [Google Scholar] [CrossRef]

- Dong, J.; Ren, X.; Han, S.; Luo, S. UAV Vision aided INS/odometer integration for Land Vehicle Autonomous Navigation. IEEE Trans. Veh. Technol. 2022, 71, 4825–4840. [Google Scholar] [CrossRef]

- Alam, M.M.; Moh, S. Survey on Q-learning-based position-aware routing protocols in flying ad hoc networks. Electronics 2022, 11, 1099. [Google Scholar] [CrossRef]

- Hui, Y.; Xhiping, C.; Shanjia, X.; Shisong, W. An unmanned air vehicle (UAV) GPS location and Navigation System. ICMMT’98. In Proceedings of the 1998 International Conference on Microwave and Millimeter Wave Technology, (Cat. No.98EX106), Beijing, China, 18–20 August 1998. [Google Scholar] [CrossRef]

- Gomes, L.L.; Leal, L.; Oliveira, T.R.; Cunha, J.P.V.S.; Revoredo, T.C. Unmanned Quadcopter control using a motion capture system. IEEE Lat. Am. Trans. 2016, 14, 3606–3613. [Google Scholar] [CrossRef]

- Alarcón, F.; García, M.; Maza, I.; Viguria, A.; Ollero, A. A Precise and GNSS-Free Landing System on Moving Platforms for Rotary-Wing UAVs. Sensors 2019, 19, 886. [Google Scholar] [CrossRef]

- Hao, Y.; Xu, A.; Sui, X.; Wang, Y. A Modified Extended Kalman Filter for a Two-Antenna GPS/INS Vehicular Navigation System. Sensors 2018, 18, 3809. [Google Scholar] [CrossRef] [PubMed]

- Pavel, M.I.; Tan, S.Y.; Abdullah, A. Vision-Based Autonomous Vehicle Systems Based on Deep Learning: A Systematic Literature Review. Appl. Sci. 2022, 12, 6831. [Google Scholar] [CrossRef]

- Lin, J.; Wang, Y.; Miao, Z.; Zhong, H.; Fierro, R. Low-complexity control for vision-based landing of quadrotor UAV on unknown moving platform. IEEE Trans. Ind. Inform. 2022, 18, 5348–5358. [Google Scholar] [CrossRef]

- González-Sieira, A.; Cores, D.; Mucientes, M.; Bugarín, A. Autonomous Navigation for uavs managing motion and sensing uncertainty. Robot. Auton. Syst. 2020, 126, 103455. [Google Scholar] [CrossRef]

- Bresson, G.; Alsayed, Z.; Yu, L.; Glaser, S. Simultaneous localization and mapping: A survey of current trends in autonomous driving. IEEE Trans. Intell. Veh. 2017, 2, 194–220. [Google Scholar] [CrossRef]

- Wang, S.; Lv, X.; Li, J.; Ye, D. Coarse semantic-based motion removal for robust mapping in dynamic environments. IEEE Access 2020, 8, 74048–74064. [Google Scholar] [CrossRef]

- Davison, A.J.; Reid, I.D.; Molton, N.D.; Stasse, O. MonoSLAM: Real-time single camera slam. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 1052–1067. [Google Scholar] [CrossRef]

- Blösch, M.; Weiss, S.; Scaramuzza, D.; Siegwart, R. Vision based MAV navigation in unknown and unstructured environments. In Proceedings of the 2010 IEEE International Conference on Robotics and Automation 2010, Anchorage, AK, USA, 3–7 May 2010. [Google Scholar] [CrossRef]

- Xie, X.; Yang, T.; Ning, Y.; Zhang, F.; Zhang, Y. A Monocular Visual Odometry Method Based on Virtual-Real Hybrid Map in Low-Texture Outdoor Environment. Sensors 2021, 21, 3394. [Google Scholar] [CrossRef]

- Nistér, D.; Naroditsky, O.; Bergen, J. Visual odometry for ground vehicle applications. J. Field Robot. 2006, 23, 3–20. [Google Scholar] [CrossRef]

- Harris, C.G.; Stephens, M.J. A Combined Corner and Edge Detector. In Proceedings of the Alvey Vision Conference, Citeseer, Manchester, UK, 31 August–2 September 1988. [Google Scholar]

- Jiao, Y.; Wang, Y.; Ding, X.; Fu, B.; Huang, S.; Xiong, R. 2-entity random sample consensus for robust visual localization: Framework, methods, and verifications. IEEE Trans. Ind. Electron. 2021, 68, 4519–4528. [Google Scholar] [CrossRef]

- Muhovic, J.; Mandeljc, R.; Bovcon, B.; Kristan, M.; Pers, J. Obstacle tracking for unmanned surface vessels using 3-D Point Cloud. IEEE J. Ocean. Eng. 2020, 45, 786–798. [Google Scholar] [CrossRef]

- Fabrizio, F.; De Luca, A. Real-time computation of distance to dynamic obstacles with multiple depth sensors. IEEE Robot. Autom. Lett. 2017, 2, 56–63. [Google Scholar] [CrossRef]

- Keipour, A.; Pereira, G.A.S.; Bonatti, R.; Garg, R.; Rastogi, P.; Dubey, G.; Scherer, S. Visual Servoing Approach to Autonomous UAV Landing on a Moving Vehicle. Sensors 2022, 22, 6549. [Google Scholar] [CrossRef] [PubMed]

- Chen, C.-W.; Hung, H.-A.; Yang, P.-H.; Cheng, T.-H. Visual Servoing of a Moving Target by an Unmanned Aerial Vehicle. Sensors 2021, 21, 5708. [Google Scholar] [CrossRef] [PubMed]

- Altug, E.; Ostrowski, J.P.; Mahony, R. Control of a quadrotor helicopter using visual feedback. In Proceedings of the 2002 IEEE International Conference on Robotics and Automation (Cat. No.02CH37292), Washington, DC, USA, 11–15 May 2002. [Google Scholar] [CrossRef]

- Horn, B.K.P.; Schunck, B.G. Determining optical flow. Artif. Intell. 1981, 17, 185–203. [Google Scholar] [CrossRef]

- Lucas, B.D.; Kanade, T. An Iterative Image Registration Technique with an Application to Stereo Vision. In Proceedings of the 7th International Joint Conference on Artificial Intelligence—Volume 2, Vancouver, Canada, 24–28 August 1981; pp. 674–679. [Google Scholar] [CrossRef]

- Santos-Victor, J.; Sandini, G.; Curotto, F.; Garibaldi, S. Divergent stereo for robot navigation: Learning from bees. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, New York, NY, USA, 15–17 June 1993. [Google Scholar] [CrossRef]

- Herissé, B.; Hamel, T.; Mahony, R.; Russotto, F.-X. Landing a VTOL unmanned aerial vehicle on a moving platform using optical flow. IEEE Trans. Robot. 2012, 28, 77–89. [Google Scholar] [CrossRef]

- Maier, J.; Humenberger, M. Movement detection based on dense optical flow for unmanned aerial vehicles. Int. J. Adv. Robot. Syst. 2013, 10, 146. [Google Scholar] [CrossRef]

- Zhang, J.; Wu, Y.; Liu, W.; Chen, X. Novel approach to position and orientation estimation in vision-based UAV navigation. IEEE Trans. Aerosp. Electron. Syst. 2010, 46, 687–700. [Google Scholar] [CrossRef]

- Zhang, J.; Liu, W.; Wu, Y. Novel technique for vision-based UAV navigation. IEEE Trans. Aerosp. Electron. Syst. 2011, 47, 2731–2741. [Google Scholar] [CrossRef]

- ESRI Inc. ArcView 8.1 and ArcInfo 8.1. 2004. Available online: http://www.esri.com/ (accessed on 1 June 2022).

- USGS National Map Seamless Server. 2010. Available online: http://seamless.usgs.gov (accessed on 1 June 2022).

- Khansari-Zadeh, S.M.; Saghafi, F. Vision-based navigation in autonomous close proximity operations using Neural Networks. IEEE Trans. Aerosp. Electron. Syst. 2011, 47, 864–883. [Google Scholar] [CrossRef]

- Cho, D.-M.; Tsiotras, P.; Zhang, G.; Holzinger, M. Robust feature detection, acquisition and tracking for relative navigation in space with a known target. In Proceedings of the AIAA Guidance, Navigation, and Control (GNC) Conference, Boston, MA, USA, 8–11 August 2013. [Google Scholar] [CrossRef]

- Li, J.; Allinson, N.M. A comprehensive review of current local features for Computer Vision. Neurocomputing 2008, 71, 1771–1787. [Google Scholar] [CrossRef]

- Szenher, M.D. Visual Homing in Dynamic Indoor Environments. Available online: http://hdl.handle.net/1842/3193 (accessed on 15 November 2022).

- Cesetti, A.; Frontoni, E.; Mancini, A.; Zingaretti, P.; Longhi, S. A Vision-based guidance system for UAV navigation and safe landing using natural landmarks. J. Intell. Robot. Syst. 2009, 57, 233–257. [Google Scholar] [CrossRef]

- Wertz, J.R. Spacecraft Attitude Determination and Control; Kluwer Academic Publishers: Dordrecht, The Netherlands, 1978. [Google Scholar]

- Vetrella, A.R.; Fasano, G. Cooperative UAV navigation under nominal GPS coverage and in GPS-challenging environments. In Proceedings of the 2016 IEEE 2nd International Forum on Research and Technologies for Society and Industry Leveraging a better tomorrow (RTSI) 2016, Bologna, Italy, 7–9 September 2016. [Google Scholar] [CrossRef]

- Fournier, J.; Ricard, B.; Laurendeau, D. Mapping and exploration of complex environments using persistent 3D model. In Proceedings of the Fourth Canadian Conference on Computer and Robot Vision (CRV ‘07) 2007, Montreal, QC, Canada, 28–30 May 2007. [Google Scholar] [CrossRef]

- Gutmann, J.-S.; Fukuchi, M.; Fujita, M. 3D perception and Environment Map Generation for humanoid robot navigation. Int. J. Robot. Res. 2008, 27, 1117–1134. [Google Scholar] [CrossRef]

- Dryanovski, I.; Morris, W.; Xiao, J. Multi-volume occupancy grids: An efficient probabilistic 3D Mapping Model for Micro Aerial Vehicles. In Proceedings of the 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems 2010, Taipei, Taiwan, 18–22 October 2010. [Google Scholar] [CrossRef]

- Saranya, K.C.; Naidu, V.P.; Singhal, V.; Tanuja, B.M. Application of vision based techniques for UAV position estimation. In Proceedings of the 2016 International Conference on Research Advances in Integrated Navigation Systems (RAINS) 2016, Bangalore, India, 6–7 May 2016. [Google Scholar] [CrossRef]

- Gupta, A.; Fernando, X. Simultaneous Localization and Mapping (SLAM) and Data Fusion in Unmanned Aerial Vehicles: Recent Advances and Challenges. Drones 2022, 6, 85. [Google Scholar] [CrossRef]

- Moravec, H.P. The stanford CART and the CMU Rover. Proc. IEEE 1983, 71, 872–884. [Google Scholar] [CrossRef]

- Davison Real-time simultaneous localisation and mapping with a single camera. In Proceedings of the Ninth IEEE International Conference on Computer Vision 2003, Nice, France, 13–16 October 2003. [CrossRef]

- Klein, G.; Murray, D. Parallel Tracking and mapping for small AR workspaces. In Proceedings of the 2007 6th IEEE and ACM International Symposium on Mixed and Augmented Reality 2007, Nara, Japan, 13–16 November 2007. [Google Scholar] [CrossRef]

- Mahon, I.; Williams, S.B.; Pizarro, O.; Johnson-Roberson, M. Efficient view-based slam using visual loop closures. IEEE Trans. Robot. 2008, 24, 1002–1014. [Google Scholar] [CrossRef]

- Celik, K.; Chung, S.-J.; Clausman, M.; Somani, A.K. Monocular Vision Slam for indoor aerial vehicles. 2009 IEEE/RSJ International Conference on Intelligent Robots and Systems 2009, St. Louis, MO, USA, 10–15 October 2009. [Google Scholar] [CrossRef]

- Han, J.; Chen, Y.Q. Multiple UAV formations for cooperative source seeking and contour mapping of a radiative signal field. J. Intell. Robot. Syst. 2013, 74, 323–332. [Google Scholar] [CrossRef]

- Valgaerts, L.; Bruhn, A.; Mainberger, M.; Weickert, J. Dense versus sparse approaches for estimating the Fundamental Matrix. Int. J. Comput. Vis. 2011, 96, 212–234. [Google Scholar] [CrossRef]

- Ranftl, R.; Vineet, V.; Chen, Q.; Koltun, V. Dense monocular depth estimation in complex dynamic scenes. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2016, Las Vegas, NV, USA, 27–30 June 2016. [Google Scholar] [CrossRef]

- Bavle, H.; De La Puente, P.; How, J.P.; Campoy, P. VPS-SLAM: Visual planar semantic slam for aerial robotic systems. IEEE Access 2020, 8, 60704–60718. [Google Scholar] [CrossRef]

- Oleynikova, H.; Taylor, Z.; Fehr, M.; Siegwart, R.; Nieto, J. Voxblox: Incremental 3D Euclidean signed distance fields for on-board MAV Planning. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) 2017, Vancouver, BC, Canada, 24–28 September 2017. [Google Scholar] [CrossRef]

- Chen, S.; Chen, H.; Chang, C.-W.; Wen, C.-Y. Multilayer mapping kit for autonomous UAV navigation. IEEE Access 2021, 9, 31493–31503. [Google Scholar] [CrossRef]

- Zhang, B.; Zhu, D. A new method on motion planning for mobile robots using Jump Point Search and bezier curves. Int. J. Adv. Robot. Syst. 2021, 18, 172988142110192. [Google Scholar] [CrossRef]

- Silveira, G.; Malis, E.; Rives, P. An efficient direct approach to visual slam. IEEE Trans. Robot. 2008, 24, 969–979. [Google Scholar] [CrossRef]

- Newcombe, R.A.; Lovegrove, S.J.; Davison, A.J. DTAM: Dense tracking and mapping in real-time. In Proceedings of the 2011 International Conference on Computer Vision 2011, Barcelona, Spain, 6–13 November 2011; pp. 2320–2327. [Google Scholar] [CrossRef]

- Engel, J.; Schöps, T.; Cremers, D. LSD-slam: Large-scale direct monocular slam. In Proceedings of the Computer Vision—ECCV 2014; Springer: Cham, Switzerland, 2014; pp. 834–849. [Google Scholar] [CrossRef]

- Kummerle, R.; Grisetti, G.; Strasdat, H.; Konolige, K.; Burgard, W. G2O: A general framework for graph optimization. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011. [Google Scholar] [CrossRef]

- Lan, H.; Jianmei, S. Research of autonomous vision-based absolute navigation for Unmanned Aerial Vehicle. In Proceedings of the 2016 14th International Conference on Control, Automation, Robotics and Vision (ICARCV) 2016, Phuket, Thailand, 13–15 November 2016. [Google Scholar] [CrossRef]

- Forster, C.; Pizzoli, M.; Scaramuzza, D. SVO: Fast semi-direct monocular visual odometry. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 15–22. [Google Scholar] [CrossRef]

- Desouza, G.N.; Kak, A.C. Vision for Mobile Robot Navigation: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 237–267. [Google Scholar] [CrossRef]

- Lynen, S.; Achtelik, M.W.; Weiss, S.; Chli, M.; Siegwart, R. A robust and modular multi-sensor fusion approach applied to MAV Navigation. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013. [Google Scholar] [CrossRef]

- Magree, D.; Johnson, E.N. Combined laser and vision-aided inertial navigation for an indoor unmanned aerial vehicle. In Proceedings of the American Control Conference 2014, Portland, OR, USA, 4–6 June 2014. [Google Scholar] [CrossRef]

- Gosiewski, Z.; Ciesluk, J.; Ambroziak, L. Vision-based obstacle avoidance for unmanned aerial vehicles. In Proceedings of the 2011 4th International Congress on Image and Signal Processing 2011, Shanghai, China, 15–17 October 2011. [Google Scholar] [CrossRef]

- Ameli, Z.; Aremanda, Y.; Friess, W.A.; Landis, E.N. Impact of UAV Hardware Options on Bridge Inspection Mission Capabilities. Drones 2022, 6, 64. [Google Scholar] [CrossRef]

- Strübbe, S.; Stürzl, W.; Egelhaaf, M. Insect-inspired self-motion estimation with dense flow fields—An adaptive matched filter approach. PLoS ONE 2015, 10, e0128413. [Google Scholar] [CrossRef] [PubMed]

- Haag, J.; Denk, W.; Borst, A. Fly Motion Vision is based on Reichardt detectors regardless of the signal-to-noise ratio. Proc. Natl. Acad. Sci. USA 2004, 101, 16333–16338. [Google Scholar] [CrossRef]

- Ruffier, F.; Viollet, S.; Amic, S.; Franceschini, N. Bio-inspired optical flow circuits for the visual guidance of Micro Air Vehicles. In Proceedings of the 2003 International Symposium on Circuits and Systems, ISCAS ‘03, Bangkok, Thailand, 25–28 May 2003. [Google Scholar] [CrossRef]

- Bertrand, O.J.; Lindemann, J.P.; Egelhaaf, M. A bio-inspired collision avoidance model based on spatial information derived from motion detectors leads to common routes. PLoS Comput. Biol. 2015, 11, e1004339. [Google Scholar] [CrossRef]

- Moreno-Armendariz, M.A.; Calvo, H. Visual slam and obstacle avoidance in real time for Mobile Robots Navigation. In Proceedings of the 2014 International Conference on Mechatronics, Electronics and Automotive Engineering 2014, Cuernavaca, Mexico, 18–21 November 2014. [Google Scholar] [CrossRef]

- Zhihai, H.; Iyer, R.V.; Chandler, P.R. Vision-based UAV flight control and obstacle avoidance. In Proceedings of the 2006 American Control Conference 2006, Minneapolis, MN, USA, 14–16 June 2006. [Google Scholar] [CrossRef]

- Lin, H.-Y.; Peng, X.-Z. Autonomous quadrotor navigation with vision based obstacle avoidance and path planning. IEEE Access 2021, 9, 102450–102459. [Google Scholar] [CrossRef]

- Peng, X.-Z.; Lin, H.-Y.; Dai, J.-M. Path planning and obstacle avoidance for vision guided quadrotor UAV navigation. In Proceedings of the 2016 12th IEEE International Conference on Control and Automation (ICCA) 2016, Kathmandu, Nepal, 1–3 June 2016. [Google Scholar] [CrossRef]

- Farnebäck, G. Two-frame motion estimation based on polynomial expansion. In Image Analysis; Springer: Berlin/Heidelberg, Germany, 2003; pp. 363–370. [Google Scholar] [CrossRef]

- Bai, G.; Xiang, X.; Zhu, H.; Yin, D.; Zhu, L. Research on obstacles avoidance technology for UAV based on improved PTAM algorithm. In Proceedings of the 2015 IEEE International Conference on Progress in Informatics and Computing (PIC) 2015, Nanjing, China, 18–20 December 2015. [Google Scholar] [CrossRef]

- Esrafilian, O.; Taghirad, H.D. Autonomous Flight and obstacle avoidance of a quadrotor by Monocular Slam. In Proceedings of the 2016 4th International Conference on Robotics and Mechatronics (ICROM) 2016, Tehran, Iran, 26–28 October 2016. [Google Scholar] [CrossRef]

- Potena, C.; Nardi, D.; Pretto, A. Joint Vision-based navigation, control and obstacle avoidance for uavs in Dynamic Environments. In Proceedings of the 2019 European Conference on Mobile Robots (ECMR) 2019, Prague, Czech Republic, 4–6 September 2019. [Google Scholar] [CrossRef]

- Yang, L.; Xiao, B.; Zhou, Y.; He, Y.; Zhang, H.; Han, J. A robust real-time vision based GPS-denied navigation system of UAV. In Proceedings of the 2016 IEEE International Conference on Cyber Technology in Automation, Control, and Intelligent Systems (CYBER) 2016, Chengdu, China, 19–22 June 2016. [Google Scholar] [CrossRef]

- Vachtsevanos, G.; Kim, W.; Al-Hasan, S.; Rufus, F.; Simon, M.; Shrage, D.; Prasad, J.V.R. Autonomous vehicles: From flight control to mission planning using Fuzzy Logic Techniques. In Proceedings of the 13th International Conference on Digital Signal Processing, Santorini, Greece, 2–4 July 1997. [Google Scholar] [CrossRef]

- Rouse, D.M. Route planning using pattern classification and search techniques. In Proceedings of the IEEE National Aerospace and Electronics Conference, Dayton, OH, USA, 22–26 May 1989. [Google Scholar] [CrossRef]

- Szczerba, R.J.; Galkowski, P.; Glicktein, I.S.; Ternullo, N. Robust algorithm for real-time route planning. IEEE Trans. Aerosp. Electron. Syst. 2000, 36, 869–878. [Google Scholar] [CrossRef]

- Stentz, A. Optimal and efficient path planning for partially-known environments. In Proceedings of the 1994 IEEE International Conference on Robotics and Automation, San Diego, CA, USA, 8–13 May 1994. [Google Scholar] [CrossRef]

- Belge, E.; Altan, A.; Hacıoğlu, R. Metaheuristic Optimization-Based Path Planning and Tracking of Quadcopter for Payload Hold-Release Mission. Electronics 2022, 11, 1208. [Google Scholar] [CrossRef]

- Zhang, Q.; Ma, J.; Liu, Q. Path planning based Quadtree representation for mobile robot using hybrid-simulated annealing and ant colony optimization algorithm. In Proceedings of the 10th World Congress on Intelligent Control and Automation 2012, Beijing, China, 6–8 July 2012. [Google Scholar] [CrossRef]

- Andert, F.; Adolf, F. Online world modeling and path planning for an unmanned helicopter. Auton. Robot. 2009, 27, 147–164. [Google Scholar] [CrossRef]

- Wang, X.; Tan, G.-z.; Lu, F.-L.; Zhao, J.; Dai, Y.-s. A Molecular Force Field-Based Optimal Deployment Algorithm for UAV Swarm Coverage Maximization in Mobile Wireless Sensor Network. Processes 2020, 8, 369. [Google Scholar] [CrossRef]

- Souza, R.M.J.A.; Lima, G.V.; Morais, A.S.; Oliveira-Lopes, L.C.; Ramos, D.C.; Tofoli, F.L. Modified Artificial Potential Field for the Path Planning of Aircraft Swarms in Three-Dimensional Environments. Sensors 2022, 22, 1558. [Google Scholar] [CrossRef] [PubMed]

- Shen, Y.; Zhu, Y.; Kang, H.; Sun, X.; Chen, Q.; Wang, D. UAV Path Planning Based on Multi-Stage Constraint Optimization. Drones 2021, 5, 144. [Google Scholar] [CrossRef]

- Liu, Y.; Xu, W. Application of improved Hopfield Neural Network in path planning. J. Phys. Conf. Ser. 2020, 1544, 012154. [Google Scholar] [CrossRef]

- Yue, L.; Chen, H. Unmanned vehicle path planning using a novel Ant Colony algorithm. EURASIP J. Wirel. Commun. Netw. 2019, 2019, 136. [Google Scholar] [CrossRef]

- Yang, L.; Fan, S.; Yu, B.; Jia, Y. A Coverage Sampling Path Planning Method Suitable for UAV 3D Space Atmospheric Environment Detection. Atmosphere 2022, 13, 1321. [Google Scholar] [CrossRef]

- Liang, H.; Bai, H.; Sun, R.; Sun, R.; Li, C. Three-dimensional path planning based on Dem. 2017 36th Chinese Control Conference (CCC) 2017, Dalian, China, 26–28 July 2017. [Google Scholar] [CrossRef]

- Mittal, M.; Mohan, R.; Burgard, W.; Valada, A. Vision-based autonomous UAV navigation and landing for urban search and rescue. Springer Proc. Adv. Robot. 2022, 20, 575–592. [Google Scholar] [CrossRef]

- Autoland. Available online: http://autoland.cs.uni-freiburg.de./ (accessed on 16 September 2022).

- Li, Z.; Zhao, J.; Zhou, X.; Wei, S.; Li, P.; Shuang, F. RTSDM: A Real-Time Semantic Dense Mapping System for UAVs. Machines 2022, 10, 285. [Google Scholar] [CrossRef]

- Chen, S.; Zhou, W.; Yang, A.-S.; Chen, H.; Li, B.; Wen, C.-Y. An End-to-End UAV Simulation Platform for Visual SLAM and Navigation. Aerospace 2022, 9, 48. [Google Scholar] [CrossRef]

- Lu, S.; Ding, B.; Li, Y. Minimum-jerk trajectory planning pertaining to a translational 3-degree-of-freedom parallel manipulator through piecewise quintic polynomials interpolation. Adv. Mech. Eng. 2020, 12, 168781402091366. [Google Scholar] [CrossRef]

- Maciel-Pearson, B.G.; Marchegiani, L.; Akcay, S.; Abarghouei, A.; Garforth, J.; Breckon, T.P. Online deep reinforcement learning for autonomous UAV navigation and exploration of outdoor environments. arXiv 2019, arXiv:1912.05684. [Google Scholar]

- He, L.; Aouf, N.; Whidborne, J.; Song, B. Deep reinforcement learning based local planner for UAV obstacle avoidance using demonstration data. arXiv 2020, arXiv:2008.02521. [Google Scholar]

- Yu, J.; Sun, H.; Sun, J. Improved Twin Delayed Deep Deterministic Policy Gradient Algorithm Based Real-Time Trajectory Planning for Parafoil under Complicated Constraints. Appl. Sci. 2022, 12, 8189. [Google Scholar] [CrossRef]

- Theile, M.; Bayerlein, H.; Nai, R.; Gesbert, D.; Caccamo, M. UAV coverage path planning under varying power constraints using deep reinforcement learning. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), IEEE, Las Vegas, NV, USA, 24 October–24 January 2021. [Google Scholar]

- Theile, M.; Bayerlein, H.; Nai, R.; Gesbert, D.; Caccamo, M. UAV path planning using global and local map information with deep reinforcement learning. In Proceedings of the UAV Path Planning Using Global and Local Map Information with Deep Reinforcement Learning, Ljubljana, Slovenia, 6–10 December 2021. [Google Scholar]

- Chhikara, P.; Tekchandani, R.; Kumar, N.; Chamola, V.; Guizani, M. DCNN-ga: A deep neural net architecture for navigation of UAV in indoor environment. IEEE Internet Things J. 2021, 8, 4448–4460. [Google Scholar] [CrossRef]

- Menfoukh, K.; Touba, M.M.; Khenfri, F.; Guettal, L. Optimized Convolutional Neural Network Architecture for UAV navigation within Unstructured Trail. In Proceedings of the 2020 1st International Conference on Communications, Control Systems and Signal Processing (CCSSP) 2020, El Oued, Algeria, 16–17 May 2020. [Google Scholar] [CrossRef]

- Silvestrini, S.; Lavagna, M. Deep Learning and Artificial Neural Networks for Spacecraft Dynamics, Navigation and Control. Drones 2022, 6, 270. [Google Scholar] [CrossRef]

- Tullu, A.; Endale, B.; Wondosen, A.; Hwang, H.-Y. Machine Learning Approach to Real-Time 3D Path Planning for Autonomous Navigation of Unmanned Aerial Vehicle. Appl. Sci. 2021, 11, 4706. [Google Scholar] [CrossRef]

| UAV Category | Type | Weight (Kg) | Flight Altitude (m) | Range (km) | Endurance (Hour) | Applications |

|---|---|---|---|---|---|---|

| Rotary wings | Nano | <0.5 | 100 | <1 | 0.2 to 0.5 | Surveying and mapping |

| Micro | <5 | 250 | <5 to 10 | 1 | Environmental monitoring | |

| Mini | <20 to 30 | 150 to 300 | <10 | <1 | Aerial photography | |

| Fixed wings | Close-range | 25 to 150 | 3000 | 10 to 30 | 2 to 4 | Surveillance tasks |

| Short-range | 50 to 250 | 3000 | 30 to 70 | 3 to 6 | Aerial mapping | |

| Medium-range (MR) | 150 to 500 | 5000 | 70 to 200 | 6 to 10 | Professional applications | |

| MR endurance | 500 to 1500 | 8000 | >500 | 10 to 18 | Civil applications | |

| Low-altitude UAV | Low-altitude deep-penetration | 350 to 2500 | 50 to 9000 | >250 | 0.5 to 1 | Coverage |

| Low-altitude long-endurance | 15 to 25 | 3000 | >500 | >24 | Large-scale surveillance | |

| Medium-altitude long-endurance | 1000 to 1500 | 3000 | >500 | 24 to 48 | Weather tracking | |

| High-altitude UAV | High-altitude long-endurance | 2500 to 5000 | 20,000 | >2000 | 24 to 48 | Military surveillance and espionage |

| Stratospheric | >2500 | >2000 | >20,000 | >48 | Carrying advanced intelligence | |

| Exo-stratospheric | 1000 to 1500 | 2500 | >30,000 | 24 to 48 | Data collection | |

| Special task | Unmanned combat UAV | >1000 | 12,000 | 1500 | 2 | Military combat and surveillance |

| Lethal | >800 | 4000 | 300 | 3 to 4 | Drone strikes and battlefield intelligence | |

| Decoys | 150 to 250 | 50 to 50,000 | 0 to 500 | >4 | Long-range cruise missiles |

| Application Domain | Application Details | Application Areas |

|---|---|---|

| Autonomous landing | UAV takeoff and landing | VTOL and Fixed-wing UAVs |

| Autonomous surveillance | Using aerial photography for surveillance and observation | Smart city traffic monitoring and smart farming |

| Mapping | Topographical and geospatial data collection | 3D Semantic Mapping |

| Search and rescue operation | Information collection in a disaster area | Object detection in drone image or video |

| Aerial refueling | Refueling commercial aircraft by tanker aircraft during flight | Refueling systems: Boom and receptacle, and probe and drogue |

| Inspection | Public and private property inspection, remote monitoring, and maintenance | Power lines, wind turbines, and oil/gas pipelines monitoring |

| Subsystem | Description | Approach |

|---|---|---|

| Pose estimation (Localization) | Estimate the UAV’s orientation and position in 2D and 3D | Visual odometry and simultaneous localization and mapping (SLAM)-based |

| Obstacle detection and avoidance | Making the appropriate decisions to avoid obstacles and collision zones | Stereo and monocular camera-based |

| Visual servoing | By using visual data, maintain the stability of the UAV and its flying maneuvers | Visual image-based |

| Ref. | Type | Method | Main Theme | Functions | Sensing | Advantages | Limitations |

|---|---|---|---|---|---|---|---|

| [51] | Map-independent | Optical flow | Brightness constancy, small motion, and smooth flow | Able to handle image sequences | Single camera and global optical flow | The brightness level and additive noise are also not affected | Performance can be affected due to image motion |

| [52] | Map-independent | Optical flow | Method of differences and constant flow for all pixels | Image registration | Single camera and local optical flow | Less expensive and faster method with compared traditional image registration | Can be affected by image noise |

| [53] | Map-independent | Optical flow | Based on a divergent stereo approach | Reflex-type control of motion | Stereo camera and computation of optical flow | Adjust with forward velocity control and flexible approach | Sample size have effect on performance |

| [54] | Map-independent | Optical flow | Non-linear controller for optical flow measurement | Scene changes detection and description | Multi-sensor and spherical optical flow | Higher system stability | System complexity can be high |

| [55] | Map-independent | Optical flow | The deviation of all pixels from the anticipated geometry | Human detection in disaster | Multi-sensor and dense optical Flow | Great performance in high mobility | System complexity can be high |

| [56] | Map-independent | Optical flow | UAVs position and Orientation estimation and filter date of terrain using EKF | Tracking | Multi-camera and extended Kalman filter | Low control estimation error | Data of DEM may not extract during flight |

| [57] | Map-independent | Optical flow | UAVs position estimation and filtered data of terrain using particle filter | Tracking | Multi-camera and state vector augmentation for error control | Low positional error | Data of DEM may not extract during flight |

| [60] | Map-independent | Optical flow | UAV estimation and navigation | Flight formation and aerial refueling | Single camera and vision-based neural network algorithm | Great performance in terms of position accuracy and orientation estimation | Low data rate to locate the image |

| [61] | Map-independent | Feature tracking | Feature selection/filtering and a feature-pattern matching algorithm | Detection of the features and movements of any moving object | Single camera | Higher location accuracy | High computational cost |

| [62] | Map-independent | Feature tracking | A behavioral navigation method | A behavioral navigation method Fuzzy-based obstacle avoidance | Single camera | Local features detection and descriptor | Poor data quality and high time complexity |

| [63] | Map-independent | Feature tracking | Image-based visual homing | Image-based visual homing observation of the invariant features of the environment from different perspectives | Single camera | Does not require GPS | Topological visual homing has system complexity |

| [64] | Map-independent | Feature tracking | A feature-based image-matching algorithm is used to find the natural landmarks | Guidance and safe landing of UAV | Multi-camera | Do not require artificial landmark during flight | High computational power required |

| [66] | Map-independent | Feature tracking | Can leverage both strong GPS and nominal GPS | UAV navigation and control | Single camera | Suitable for GPS challenges situation | Requires additional sensors |

| [67] | Map-dependent | Octree map | Ray tracing technique | Mapping and surveillance | Depth camera and 3D volumetric sensor | Low cost and easy to use | Specular reflections can happen in 3D systems |

| [68] | Map-dependent | Occupancy grid map | Precise segmentation of range data | 3D mapping and obstacle detection | Stereo camera and vision sensor | High accuracy in segmentation | Expensive solution |

| [69] | Map-dependent | Octree map | An extended scan line grouping approach and precise segmentation of the range data into planar segments | Localization and path planning for mini-UAVs | Depth camera | Applicable to both indoor and outdoor environments | Costly |

| [70] | Map-dependent | Occupancy grid map | Estimate the position of UAV using RANSAC feature detection and normalized cross correlation with prior edge detection | Position estimation of UAV | Single camera | Easy to develop and use | Higher computation cost in terms of iteration number |

| [73] | Map-building | Indirect | A top-down Bayesian Network | Localization and mapping | Single camera | Real-time feature extraction-based localization | Real-time map has uncertainty effect |

| [74] | Map-building | Indirect | Parallel tracking and mapping method | Tracking and mapping | Single camera | Higher accuracy in tracking | Need high computational power |

| [75] | Map-building | Indirect | Cholesky factorization modification-based method | Localization and mapping | Single camera | High localization accuracy | High computational complexity |

| [76] | Map-building | Indirect | Monocular vision navigation-based method | Indoor navigation for UAV | Single camera | High accuracy in indoor environment | Data organization is difficult |

| [77] | Map-building | Indirect | Contour mapping strategy and the formation control utilized | Mapping | Single camera | Useful for formation control | High mobility can reduce performance |

| [78] | Map-building | Indirect | Dense energy-based method | Estimation of the fundamental matrix | Single camera | Automated featured detection | High complexity system |

| [79] | Map-building | Indirect | Segmented optical flow field method | A dense depth map estimation | Single camera | Useful for complex situation | Error may happen on depth estimation |

| [80] | Map-building | Indirect | Lightweight and real-time visual semantic SLAM | Indoor navigation | Multi-camera | High accuracy in navigation | Very high computational cost |

| [84] | Map-building | Direct | Based on image alignment or registration | Object position estimation | Single camera | Less computational time and easy to use | Require large amount of image data |

| [85] | Map-building | Direct | Dense tracking and mapping-based method | Tracking and mapping | Single camera | High tracking accuracy in high dynamic mobility | System complexity high |

| [86] | Map-building | Direct | Large-scale direct monocular SLAM method | Map optimization | Single camera | Higher tracking accuracy | Scale independent |

| [89] | Map-building | Hybrid | Semi-direct monocular visual odometry method | Motion estimation | Single camera | Useful in GPS-denied environment | Feature attraction may face difficulties in high mobility |

| [91] | Map-building | Multi-sensor fusion | Dubbed multi-sensor fusion EKF method | UAV outdoor navigation | Multi-sensors | Fast and easy to use | Expensive and requires additional sensors |

| [92] | Map-building | Multi-sensor fusion | Laser-based SLAM method | UAV indoor navigation | Multi-sensors | Useful in indoor environment | Expensive |

| Ref. | Type | Method | Main Theme | Functions | Advantages | Limitations |

|---|---|---|---|---|---|---|

| [95] | Optical flow | Matched filter approach | Koenderink and van Doorn (KvD) method | Motion estimation | Adaptive approach in high motion | Not suitable for complex environment |

| [96] | Optical flow | Bionic insect vision | SNR level detection | Motion control and estimation | Can operate in noisy environment | Additional sensor required |

| [97] | Optical flow | Bionic insect vision | Fly elementary motion detection-based UAV controlling | UAV altitude control | Visual motion system removes motion ambiguity | High complexity |

| [98] | Optical flow | Bionic insect vision | Geometrically determined optic flow for UAV collision detection | UAV collision avoidance | Avoid collision during flight | Higher computational complexity |

| [99] | Optical flow | Artificial potential field | Artificial potential field method for obstacle detection | UAV obstacle detection | Fast response | Unreachable near obstacles |

| [100] | Optical flow | Motion field estimation | Motion field information method | Flight control and obstacle avoidance | Less computation cost | Unreachable near obstacles |

| [101] | Optical flow | Map-based offline path-planning method | Optical flow is used for obstacle detection and avoidance, and map-based offline path planning is used for navigation | Obstacle avoidance and path planning | Easy to operate and deploy | Expensive |

| [103] | Optical flow | Polynomial expansion transform method | Two frame-based motion estimation | Motion estimation | Effective and reduce the estimation error | High computation cost |

| [104] | SLAM | An improved PTAM method | Author used PTAM algorithm for UAV ground control | Indoor environment | Provide self-localization and works in indoor environment | PTAM algorithm more suitable for large environment |

| [105] | SLAM | ORB-SLAM and potential field | Reconstructed map with Kalman filter for UAV flight control | UAV obstacle avoidance | High accuracy in obstacle detection | High iteration is required for algorithm convergence |

| [106] | SLAM | Coupling vision-based navigation systems | Utilized NMPC controller to improve the navigation performance with static and dynamic obstacle avoidance | UAV navigation, flight control, and obstacle avoidance | High accuracy in obstacle detection | High iteration is required for algorithm convergence |

| Ref. | Type | Method | Main Theme | Functions | Advantages | Limitations |

|---|---|---|---|---|---|---|

| [108] | Global | Fuzzy logic approach | Created an orographic database to make a digital map and then applied heuristic A-star algorithm | UAV navigation and route planner | User friendly and flexible | May face problems in processing the imprecise data |

| [109] | Global | A-star algorithm on square grid | Apply pattern classifier to obtain the low-cost optimal path | UAV route planning | Able to find low-cost path | High computation cost |

| [110] | Global | A-star search (SAS) algorithm | Reduces the complexity by applying constraints | UAV real-time route planning | Minimize route path | High complexity |

| [111] | Global | Dynamic A-star search | Created dynamic A-star algorithm for partially or unknown environment | Path optimization | Optimize the path in unknown environment | Complex search algorithm |

| [112] | Global | Hybrid algorithm based on HHO and GWO | Avoid failure when no prior information is provided | Path planning and obstacle avoidance | Optimal path with minimal energy and time consumption | Using UAVs in difficult environments without exploring other possible applications |

| [113] | Global | Simulate anneal arithmetic | Genetic algorithm and simulate anneal arithmetic (SAA) algorithm | Using the crossover and mutation operations in the genetic algorithm with the Metropolis criteria to enhance path planning efficiency | Suitable for multi-object optimization | Long search time |

| [114] | Global | Simulated annealing | The improved simulated annealing algorithm and the conjugate direction method | Keep its knowledge of obstacles up-to-date and constantly renew it | Able to work for 3D environment perception | High complexity system |

| [115] | Local | Artificial potential force field method | A novel molecular force field-based method | UAV swarm coverage | Suitable for real-time obstacle avoidance | Unable to avoid trap area |

| [116] | Local | Artificial potential field | Using force field to avoid collision during flight | UAV path planning | More accurate result | Expensive system |

| [118] | Local | Hopfield neural network | A-star algorithm is applied to choose the nodes in the search area then Hopfield network is used for network stability | UAV path planning | Ability to parallel computing | High computation cost |

| [120] | Local | Sampling-based | Sampling-based 3D path planning | UAV path planning | Energy efficient path planning | Complex collision-detection geometries may not possibly use sampling-based approach |

| [127] | RL-based | Double state-input strategy | Extended double deep Q-network for the unknown environment including harsh environment | UAV navigation | Able to solve very complex problems | High computation cost and higher power required |

| [128] | RL-based | DRL-based | DRL-based obstacle detection for unknown environment | UAV obstacle avoidance | Higher performance | High computation cost and higher power required |

| [130] | RL-based | DDQN-based | DDQN-based coverage path planning for the unknown environment including harsh environment | UAV path planning | Proposed approach is useful for complex environment | Extremely expensive to train dataset |

| [132] | Neural network-based | Convolutional neural network with genetic algorithms | Genetic algorithm is used with neural network for hyperparameters tuning | UAV indoor navigation | Able to work in both indoor and outdoor environment | High computation cost and higher power required |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Arafat, M.Y.; Alam, M.M.; Moh, S. Vision-Based Navigation Techniques for Unmanned Aerial Vehicles: Review and Challenges. Drones 2023, 7, 89. https://doi.org/10.3390/drones7020089

Arafat MY, Alam MM, Moh S. Vision-Based Navigation Techniques for Unmanned Aerial Vehicles: Review and Challenges. Drones. 2023; 7(2):89. https://doi.org/10.3390/drones7020089

Chicago/Turabian StyleArafat, Muhammad Yeasir, Muhammad Morshed Alam, and Sangman Moh. 2023. "Vision-Based Navigation Techniques for Unmanned Aerial Vehicles: Review and Challenges" Drones 7, no. 2: 89. https://doi.org/10.3390/drones7020089

APA StyleArafat, M. Y., Alam, M. M., & Moh, S. (2023). Vision-Based Navigation Techniques for Unmanned Aerial Vehicles: Review and Challenges. Drones, 7(2), 89. https://doi.org/10.3390/drones7020089