UAV Path Planning in Multi-Task Environments with Risks through Natural Language Understanding

Abstract

1. Introduction

- (1) We propose a novel interactive framework for automatic path planning with a multi-task UAV through the understanding of compound natural language commands.

- (2) We propose a multi-task command understanding method using RNN-based tagging and semantic annotation, which can extract keywords that describe the task types and the task requirements instructed by the human operator.

- (3) We propose a novel algorithm to efficiently select the start and the exit waypoints for each task zone from a small set of candidate waypoints according to the tasks.

2. Problem Statement

3. Method

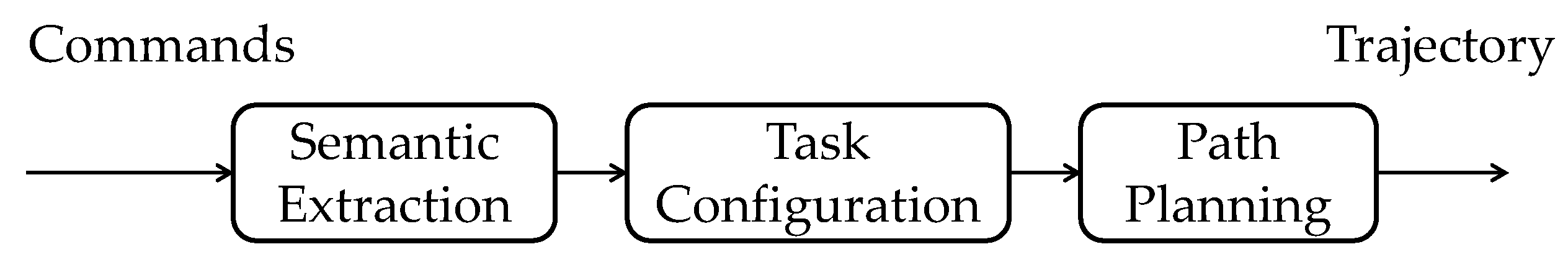

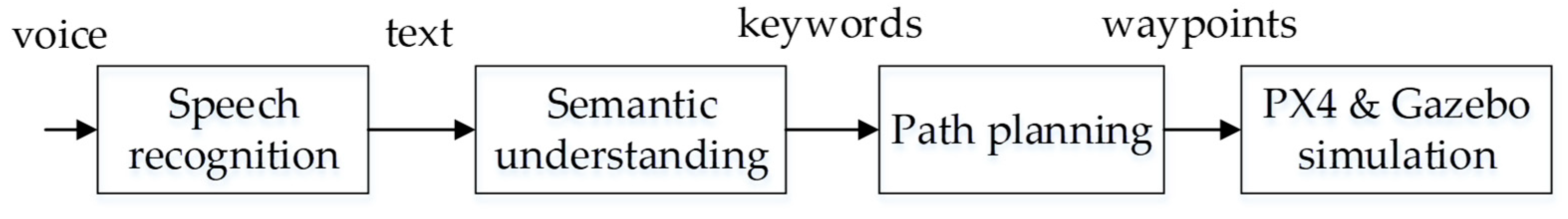

3.1. System Framework

3.2. Task Zone Modeling

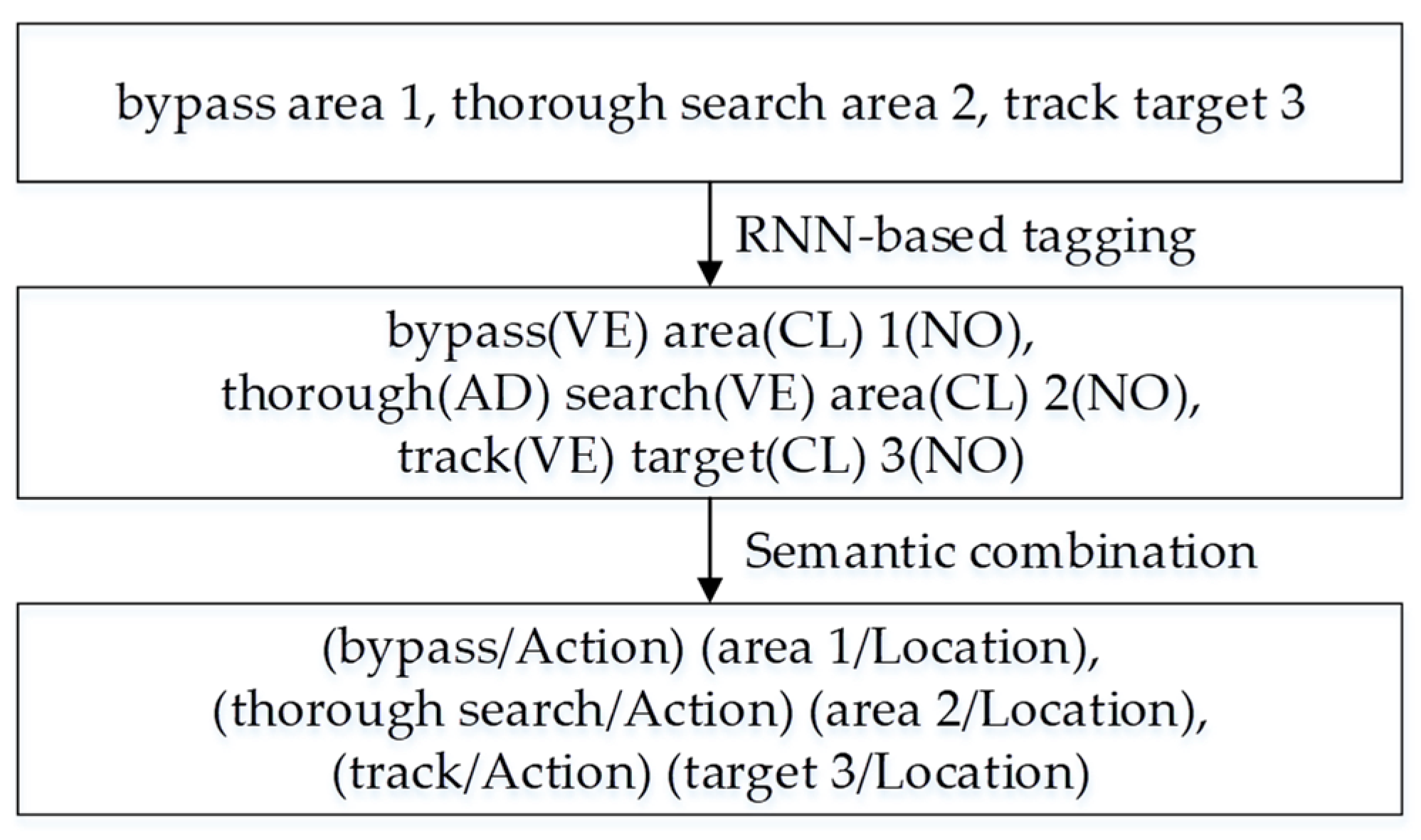

3.3. RNN-Based NLU for UAV Path Planning

3.4. Path Planning with RRT and Dubins Curves

| Algorithm 1: Waypoints generation and selection based on NLU results |
|---|
| Input: structured commands {Act_i, Loc_i} (i = 1, 2, …, n); start point S_0 and end point E_{n+1}. |
| Output: waypoints {WP_j} and connections. |
| 1: Obtain the sequence of tasks {Task_i} (i = 1, 2, …, n); |
| 2: For 1 ≤ i ≤ n |
| 3: Locate the corresponding risky zone Z_i; |
| 4: Generate a set of candidate waypoints {WP_k(Z_i)}; |
| 5: Select a path planning algorithm Alg_i; |
| 6: Select the start point S_i ∈ {WP_k(Z_i)} closest to E_{i-1} or S_0; |
| 7: Select the end point E_i ∈ {WP_k(Z_i)} closest to O_{i+1} or E_{n+1}; |
| 8: End |
| 9: Connect {S_0, S_1, E_1, …, S_n, E_n, E_{n+1}}. |
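The selection loop of Algorithm 1 can be illustrated with a minimal Python sketch. It is not the authors' implementation: the `Zone` class, the circular zone shape, the evenly spaced boundary candidates, and the reading of O_{i+1} as the center of the next zone are all assumptions made for illustration, and step 5 (choosing a planner per zone) is left out.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass
class Zone:
    """Hypothetical circular task zone with center O_i (an assumption)."""
    center: Point
    radius: float


def candidate_waypoints(zone: Zone, k: int = 8) -> List[Point]:
    """Step 4 (assumed scheme): k evenly spaced points on the zone boundary."""
    cx, cy = zone.center
    return [
        (cx + zone.radius * math.cos(2 * math.pi * j / k),
         cy + zone.radius * math.sin(2 * math.pi * j / k))
        for j in range(k)
    ]


def closest(points: List[Point], target: Point) -> Point:
    """Return the candidate with the smallest Euclidean distance to target."""
    return min(points, key=lambda p: math.dist(p, target))


def select_waypoints(zones: List[Zone], s0: Point, e_final: Point) -> List[Point]:
    """Steps 2-9: pick S_i and E_i for each zone and chain them in order."""
    route = [s0]
    prev_exit = s0
    for i, zone in enumerate(zones):
        cands = candidate_waypoints(zone)
        # Step 6: entry point closest to the previous exit (or S_0).
        start = closest(cands, prev_exit)
        # Step 7: exit point closest to the next zone center (or E_{n+1}).
        nxt = zones[i + 1].center if i + 1 < len(zones) else e_final
        end = closest(cands, nxt)
        route += [start, end]
        prev_exit = end
    route.append(e_final)  # Step 9: S_0, S_1, E_1, ..., S_n, E_n, E_{n+1}
    return route


zones = [Zone((10.0, 0.0), 2.0), Zone((20.0, 0.0), 2.0)]
route = select_waypoints(zones, s0=(0.0, 0.0), e_final=(30.0, 0.0))
```

Because each zone contributes only a handful of candidates, the selection costs a few distance evaluations per zone; the segments between consecutive waypoints would still need to be planned by whichever algorithm step 5 selects.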

4. Simulations and Results

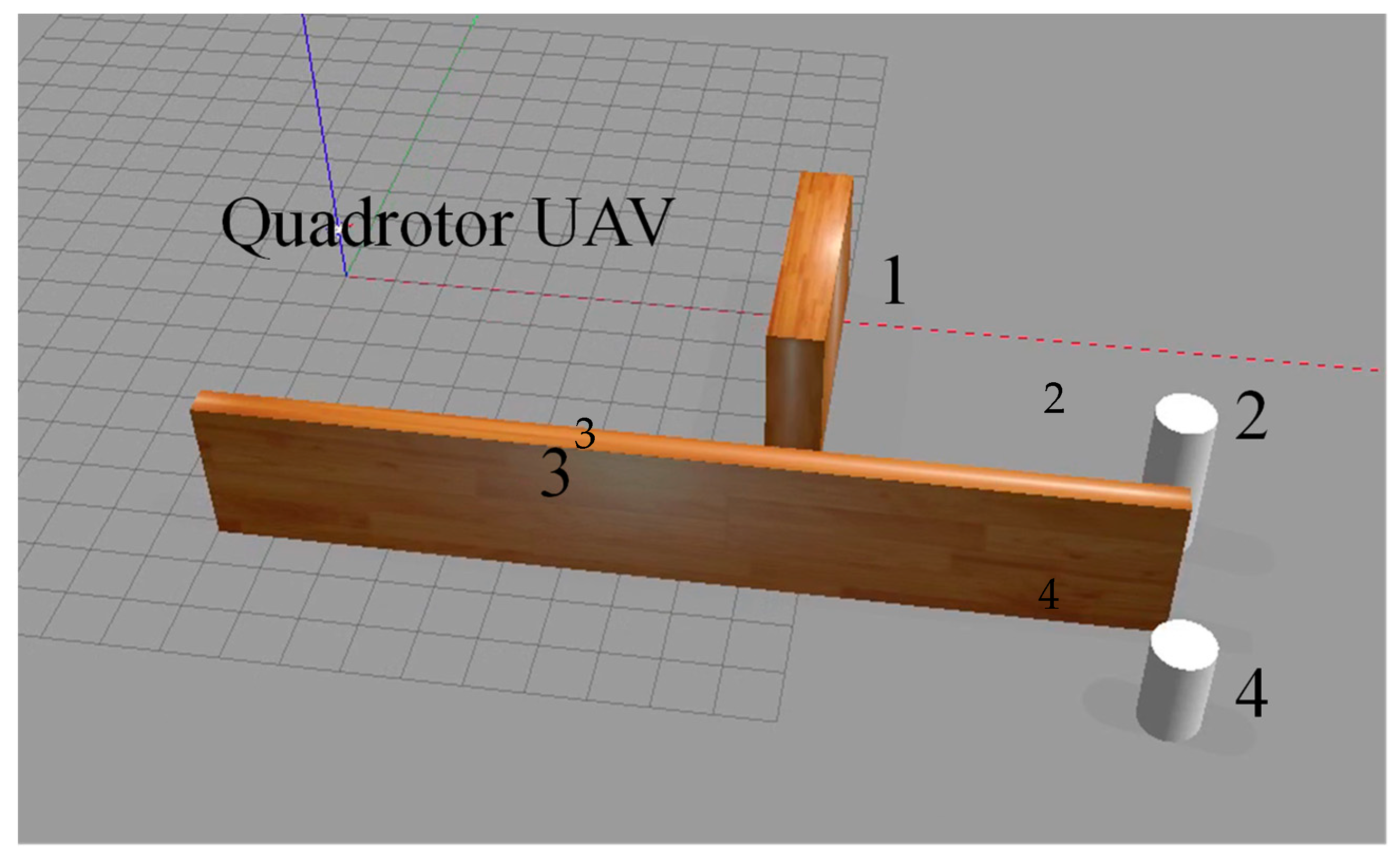

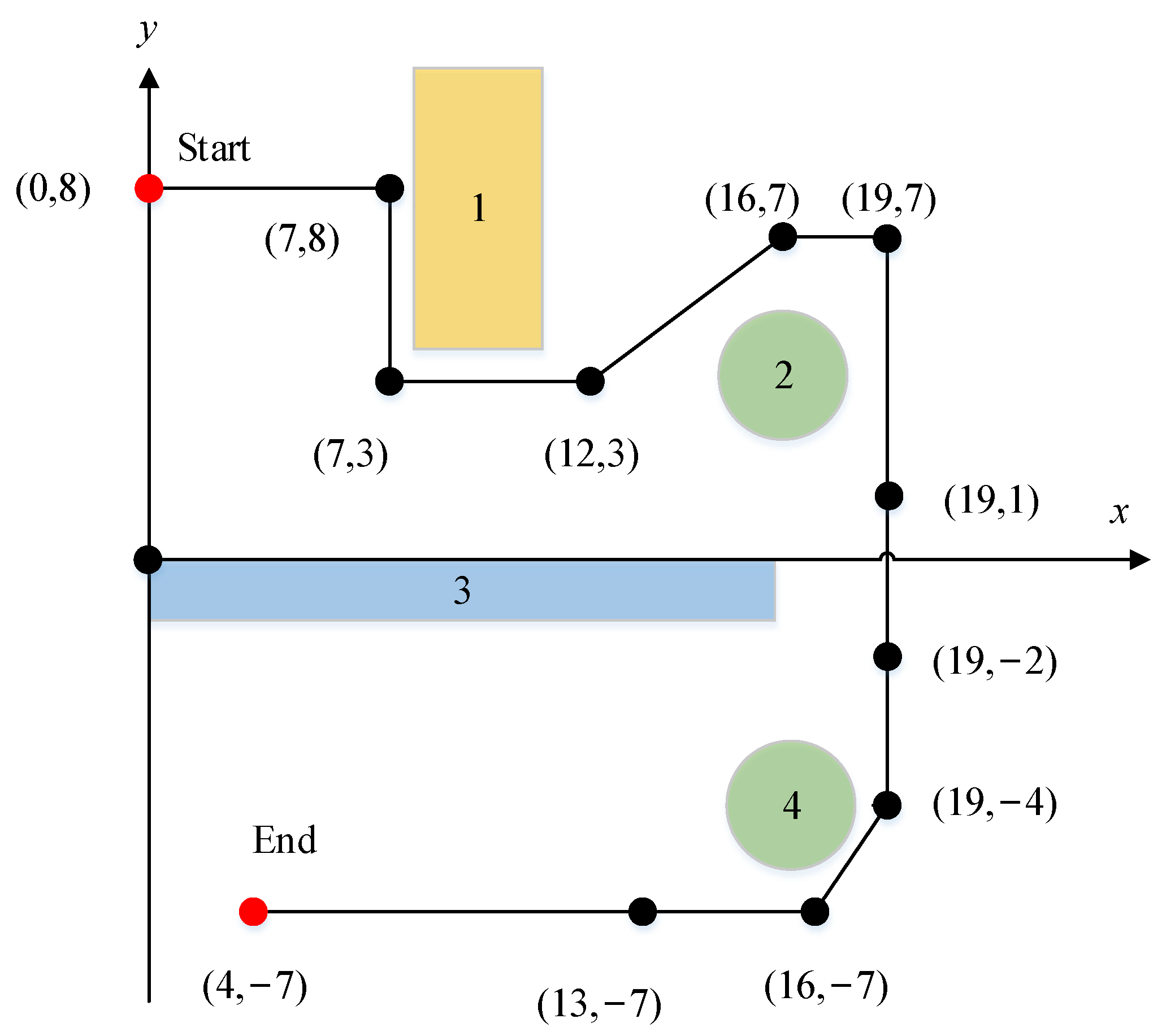

4.1. Environmental Settings

4.2. Simulation Results

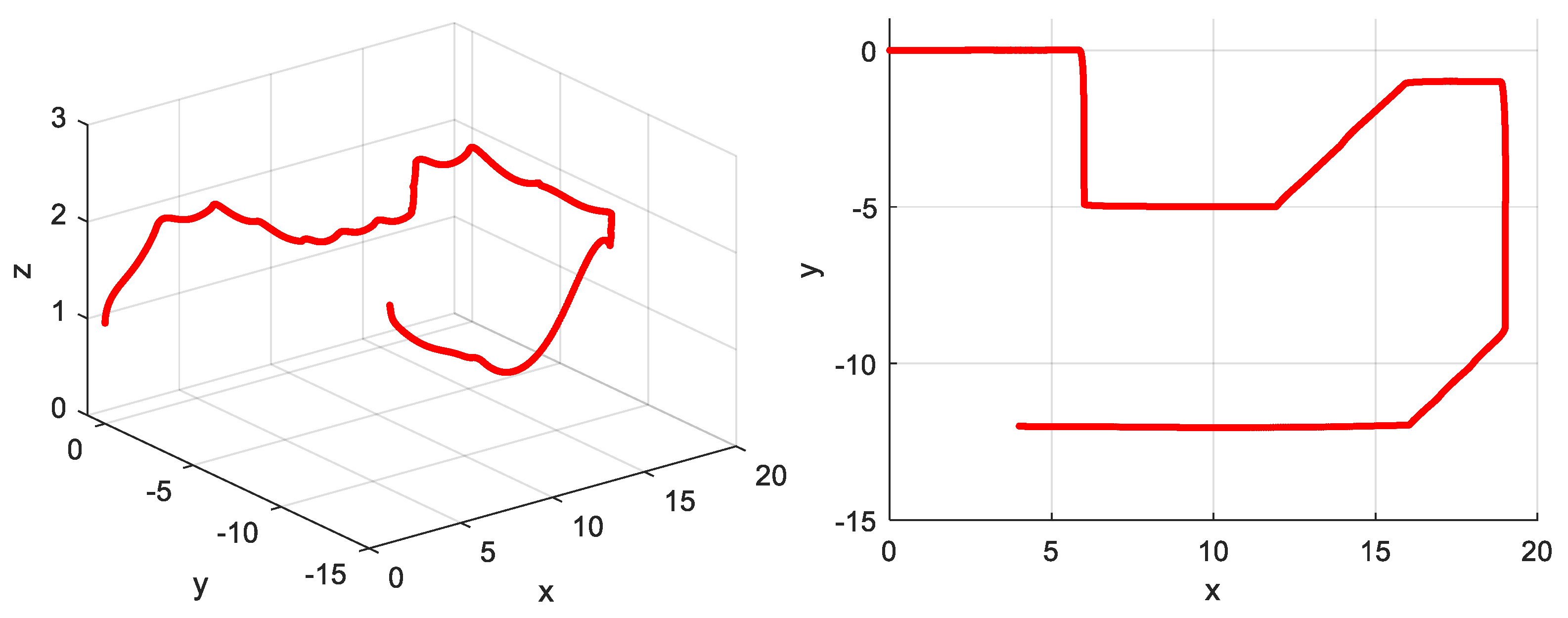

4.2.1. Simulation 1: Obstacle Avoidance

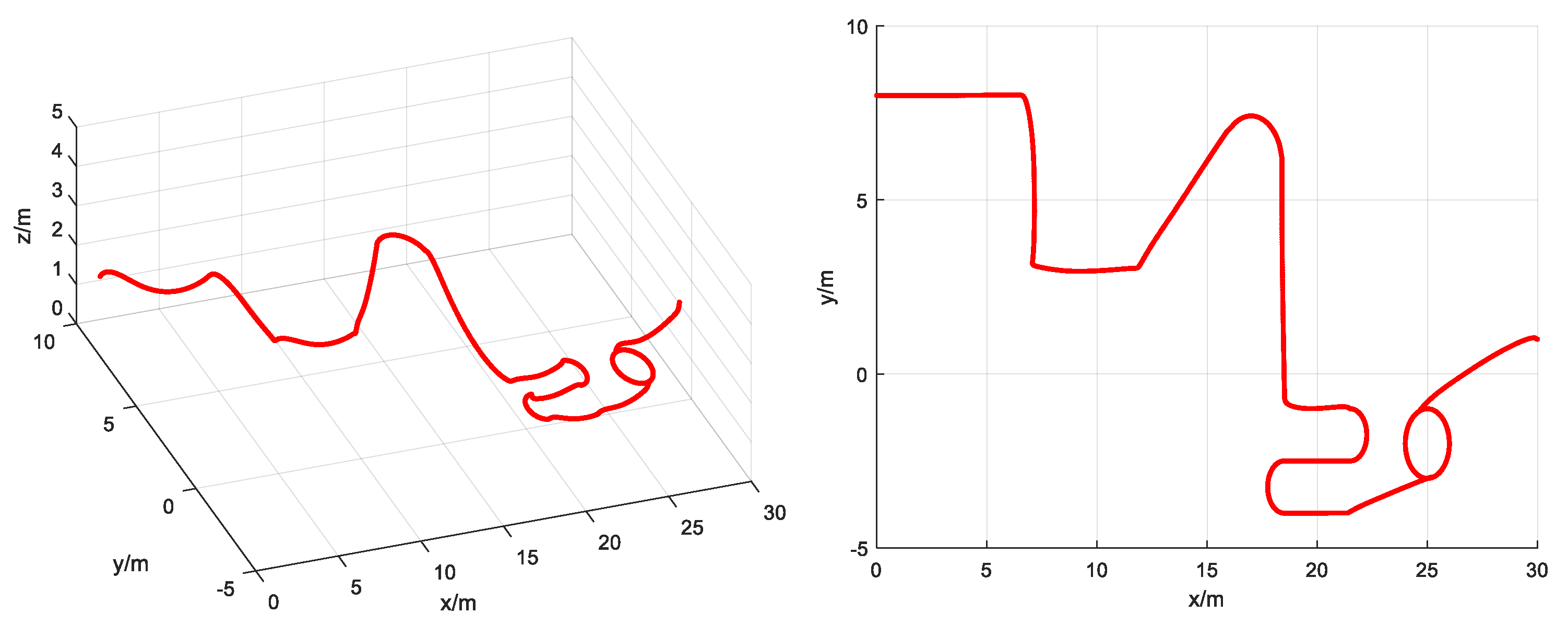

4.2.2. Simulation 2: Reconnaissance and Surveillance

4.2.3. Operational Time

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Task Type | Task Zone Modeling | Solution |
|---|---|---|
| Avoid obstacles | Cylinder, Cuboid | Bypass closely |
| Avoid radar or missile | Hemisphere | Bypass far enough |
| Reconnaissance | Rectangle, Circle | Coverage search |
| Surveillance | Circle | Hover tracking |
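The table above reads naturally as a lookup from a recognized task type to its zone model and planning strategy. The following is a minimal Python sketch of such a lookup; the `TaskType` enum, the `TASK_TABLE` dictionary, and the `zone_and_solution` helper are hypothetical names introduced here, and only the table entries themselves come from the paper.

```python
from enum import Enum
from typing import Dict, Tuple


class TaskType(Enum):
    """Task types listed in the table (enum names are illustrative)."""
    AVOID_OBSTACLE = "avoid obstacles"
    AVOID_RADAR_OR_MISSILE = "avoid radar or missile"
    RECONNAISSANCE = "reconnaissance"
    SURVEILLANCE = "surveillance"


# Task type -> (admissible zone shapes, planning solution), copied from the table.
TASK_TABLE: Dict[TaskType, Tuple[Tuple[str, ...], str]] = {
    TaskType.AVOID_OBSTACLE: (("cylinder", "cuboid"), "bypass closely"),
    TaskType.AVOID_RADAR_OR_MISSILE: (("hemisphere",), "bypass far enough"),
    TaskType.RECONNAISSANCE: (("rectangle", "circle"), "coverage search"),
    TaskType.SURVEILLANCE: (("circle",), "hover tracking"),
}


def zone_and_solution(task: TaskType) -> Tuple[Tuple[str, ...], str]:
    """Look up the zone shapes and the solution strategy for a task type."""
    return TASK_TABLE[task]


shapes, solution = zone_and_solution(TaskType.RECONNAISSANCE)
```

Keeping the mapping in one table-like structure means a new task type can be added without touching the code that consumes it.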

| Simulation | Method | Average Time (s) |
|---|---|---|
| Simulation 1 | Manual waypoints selection | 9.39 |
| Simulation 1 | Ours | 4.62 |
| Simulation 2 | Manual waypoints selection | 17.66 |
| Simulation 2 | Ours | 6.91 |
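To put the averages above in relative terms, the short Python snippet below computes the percentage of operational time saved compared with manual waypoint selection. The four timings are taken from the table; the `time_saved_percent` helper and the dictionary layout are illustrative assumptions.

```python
# Average operational times in seconds, as reported in the table.
TIMES = {
    "Simulation 1": {"manual": 9.39, "ours": 4.62},
    "Simulation 2": {"manual": 17.66, "ours": 6.91},
}


def time_saved_percent(manual: float, ours: float) -> float:
    """Relative reduction in operational time, in percent of the manual time."""
    return 100.0 * (manual - ours) / manual


savings = {
    name: round(time_saved_percent(t["manual"], t["ours"]), 1)
    for name, t in TIMES.items()
}
```

With these figures the reduction is roughly 50.8% for Simulation 1 and 60.9% for Simulation 2.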


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, C.; Zhong, Z.; Xiang, X.; Zhu, Y.; Wu, L.; Yin, D.; Li, J. UAV Path Planning in Multi-Task Environments with Risks through Natural Language Understanding. Drones 2023, 7, 147. https://doi.org/10.3390/drones7030147
