Demystifying the Differences between Structure-from-Motion Software Packages for Pre-Processing Drone Data

Abstract

1. Introduction

2. Methods

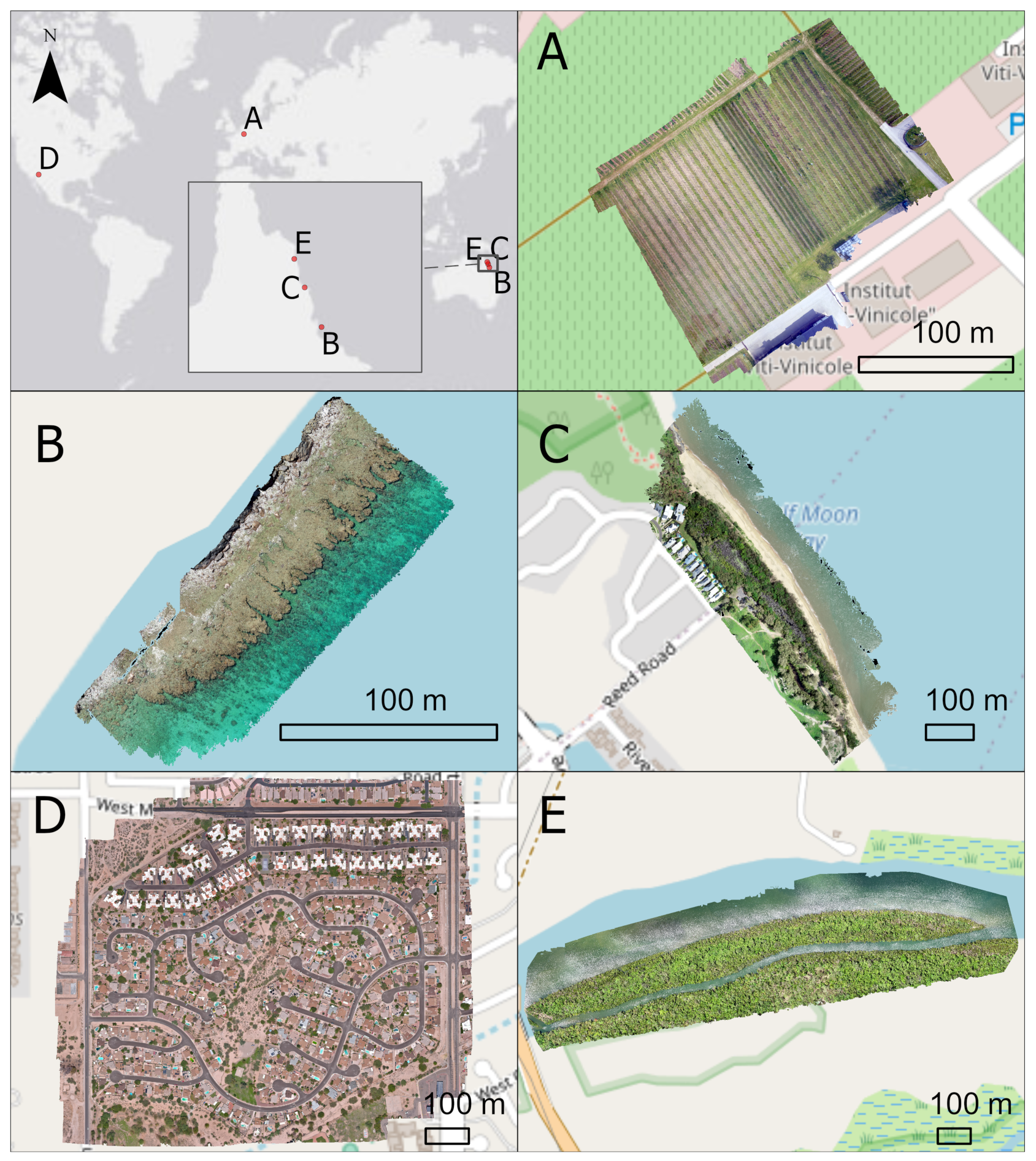

2.1. Study Sites and Input Data

2.2. Software Packages

2.3. Comparing Output File Dimensions and Specifications

2.4. Comparing Orthomosaics

- a

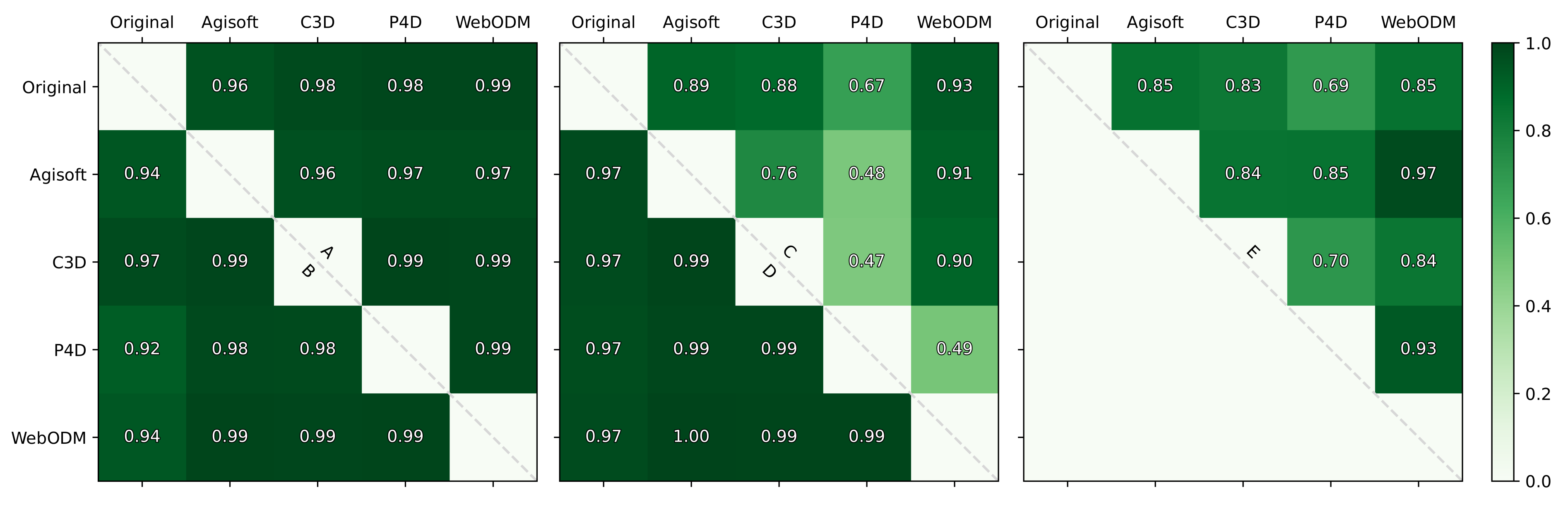

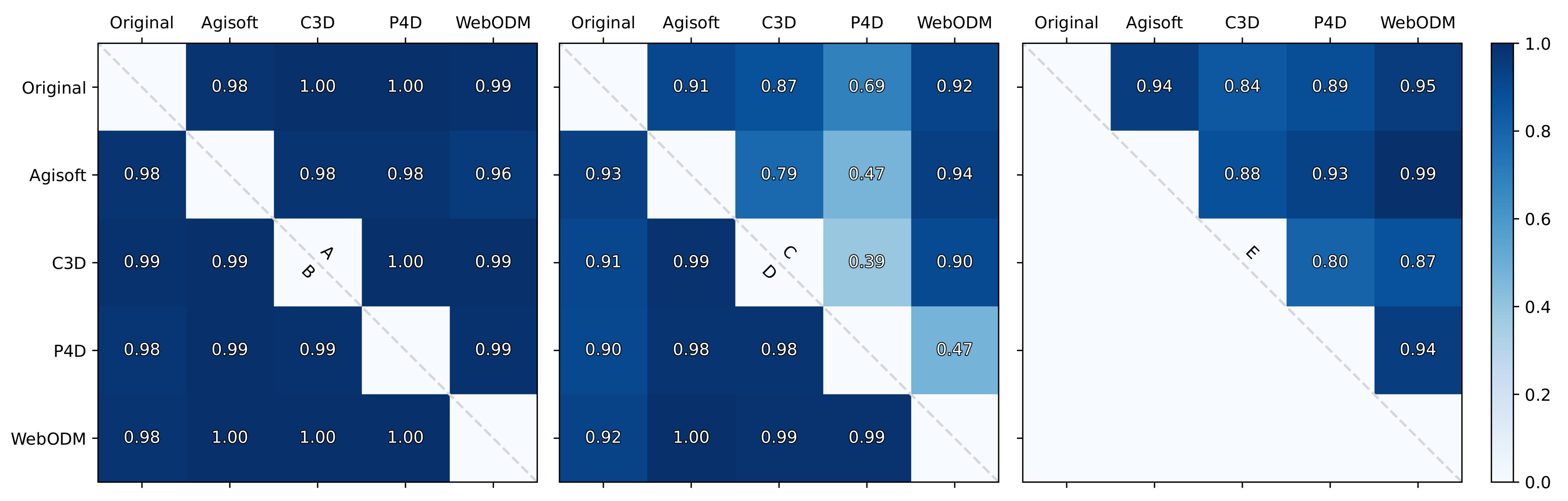

- Colour correlation score: The luminance value of each pixel was extracted from each colour channel (red, green, and blue) from the original drone images, as well as the output orthomosaic. A density histogram was subsequently plotted to visualise the similarity between the unprocessed and the processed image of each colour band. A correlation score [56] was also calculated to quantify the resemblance of each histogram with each other using the equation below:where and are the colour density histograms of any two out of five sources (original drone images and outputs from four software) being compared,and N is the total number of histogram bins (256 for 8 bit true colour images). A correlation score close to one indicates high similarity between the colour density of the input images and that of the orthomosaic, while a score approaching zero indicates low similarity;

- b

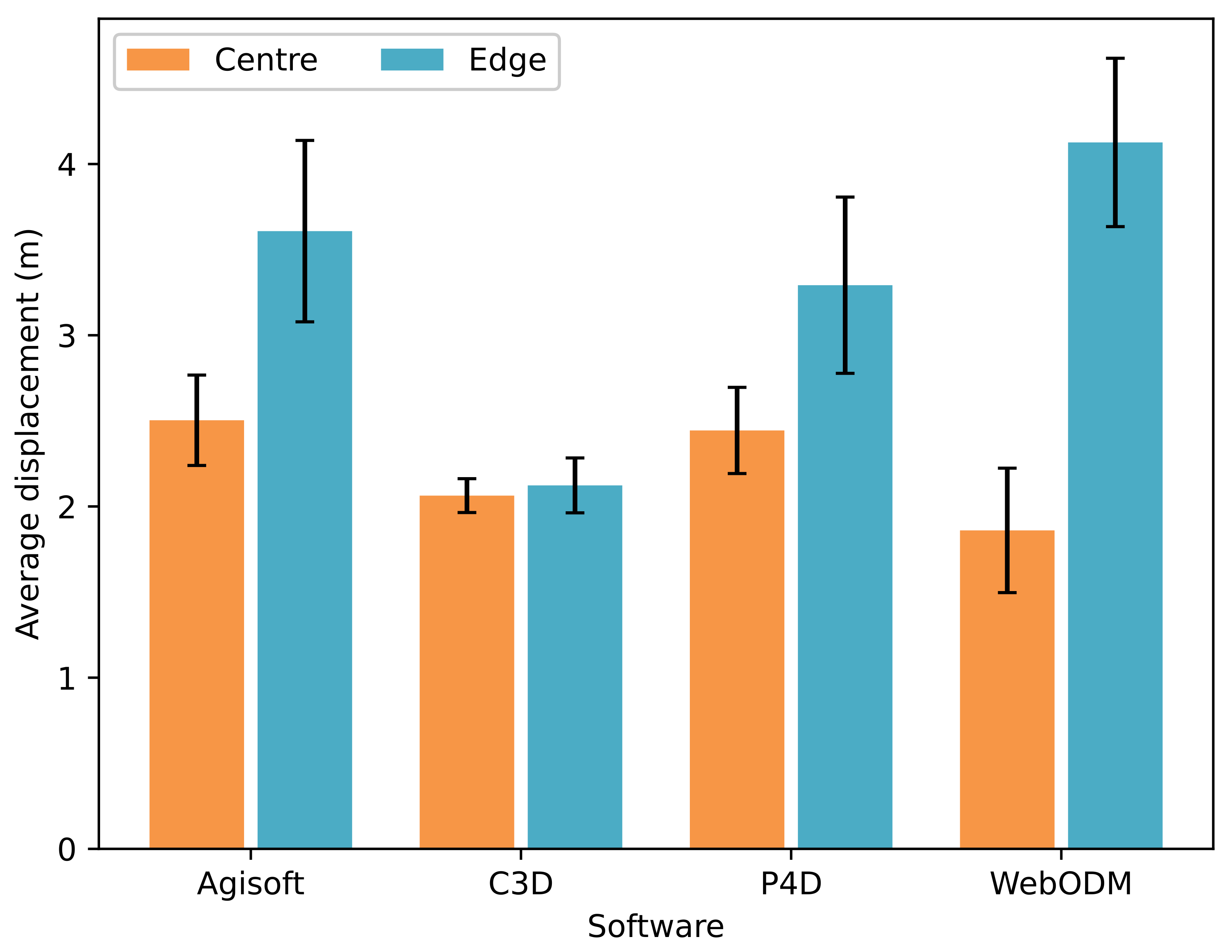

- Geographic shift: As no GCPs were available, traditional horizontal and vertical accuracy assessments (i.e., [37]) could not be conducted; instead, Dataset D had very clear and identifiable features within an urban environment, and we used it to calculate the geographic shift resulting after processing with the different software packages. We digitised the polygon boundaries of 20 identifiable features across each of the four orthomosaics, plus a reference satellite image available within the Esri ArcGIS Pro base maps [57]. We ensured that 50% of identifiable features were outlined in the centre region (within 150 m of the orthomosaic centre) and 50% around the edge (within 150 m of the orthomosaic edge). We then calculated the centroid coordinates of each polygon and the distance between feature locations in each software orthomosaic in relation to the same feature location within the satellite base map. Averages (±SE) of the distance from satellite features were calculated for each software at both the centre and edge of orthomosaics;

- c

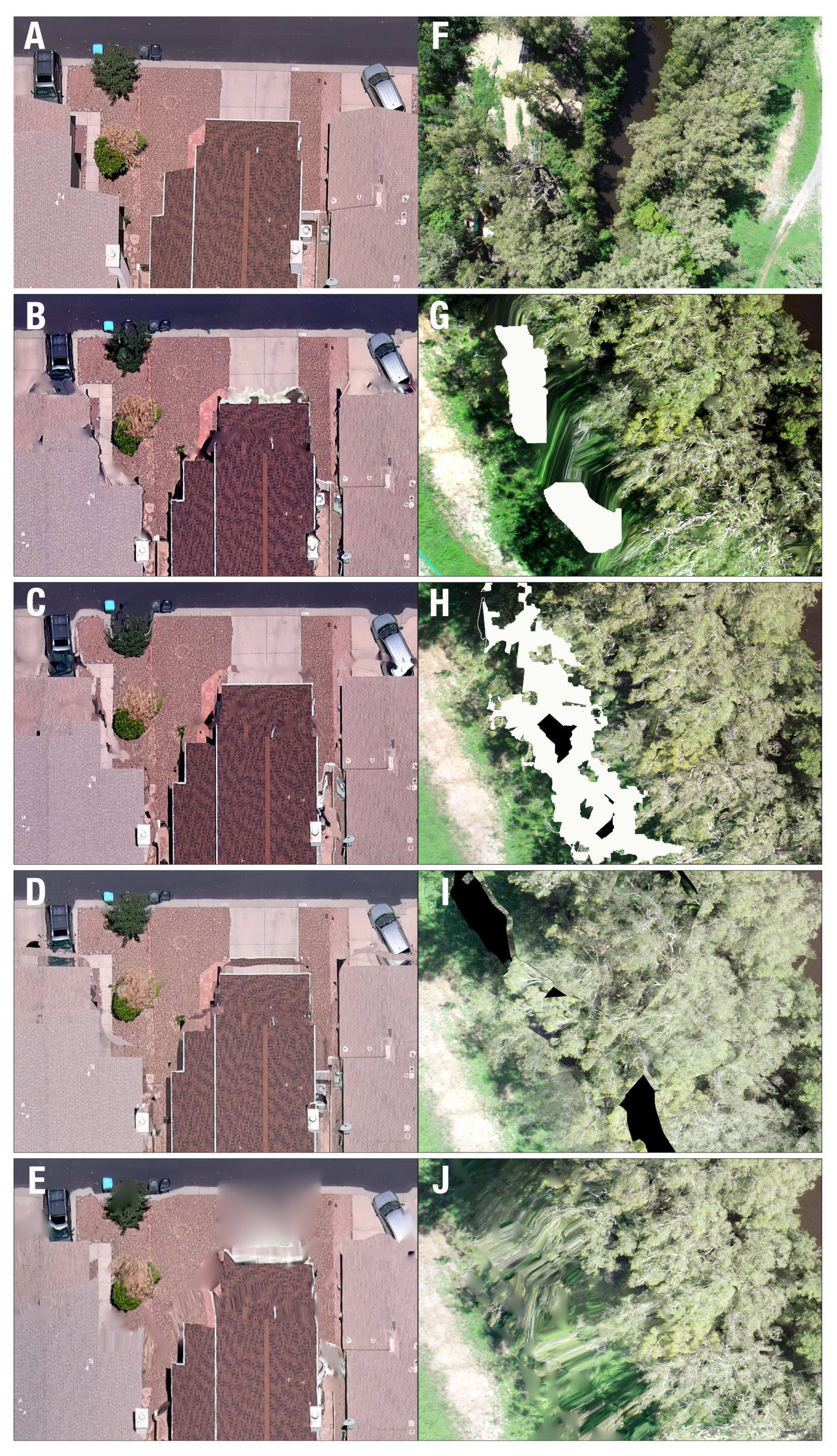

- Visible artefacts: All orthomosaic outputs were visually scanned through to select obvious distortion and artefacts in the map, ensuring both the middle and edges of the datasets were evaluated.
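The colour correlation score described in this section can be sketched in a few lines of Python. This is a minimal illustration, not the authors' script: it assumes the score is the standard histogram correlation (the same quantity OpenCV exposes as `cv2.compareHist` with `cv2.HISTCMP_CORREL`), reimplements it with NumPy so the example has no other dependencies, and uses synthetic pixel values in place of real drone imagery. The function names `density_histogram` and `correlation_score` are hypothetical.

```python
import numpy as np


def density_histogram(channel, bins=256):
    """Normalised histogram of 8-bit pixel values for one colour channel."""
    hist, _ = np.histogram(channel, bins=bins, range=(0, 256))
    return hist / hist.sum()


def correlation_score(h1, h2):
    """Correlation between two histograms that share the same bins."""
    d1 = h1 - h1.mean()  # deviation of each bin from the mean bin value
    d2 = h2 - h2.mean()
    return float((d1 * d2).sum() / np.sqrt((d1 ** 2).sum() * (d2 ** 2).sum()))


# Synthetic stand-ins (assumption): one channel of an input image and of a
# processed output whose brightness has been shifted and clipped.
rng = np.random.default_rng(0)
original = rng.integers(0, 256, size=(100, 100))
brighter = np.clip(original + 40, 0, 255)

h_orig = density_histogram(original)
h_bright = density_histogram(brighter)

print(correlation_score(h_orig, h_orig))    # identical histograms
print(correlation_score(h_orig, h_bright))  # altered colour distribution
```

Comparing a histogram with itself gives a score of one, while the brightness-shifted channel scores lower. In practice the same comparison would be repeated for the red, green, and blue channels of every pair of sources.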

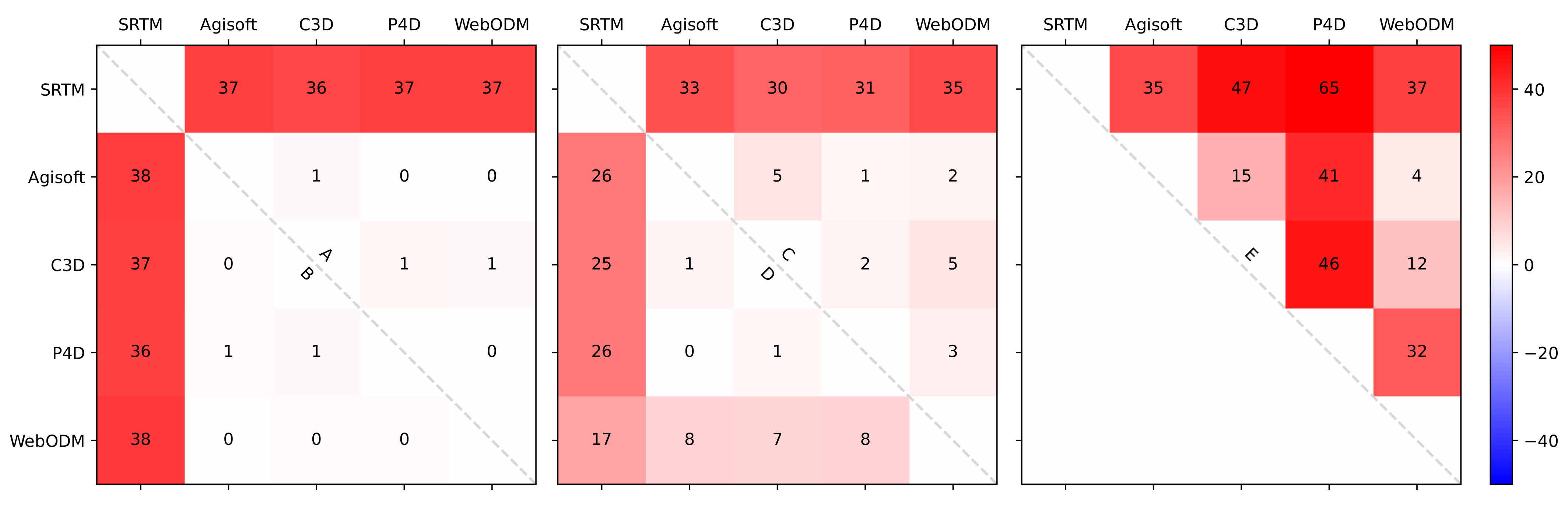

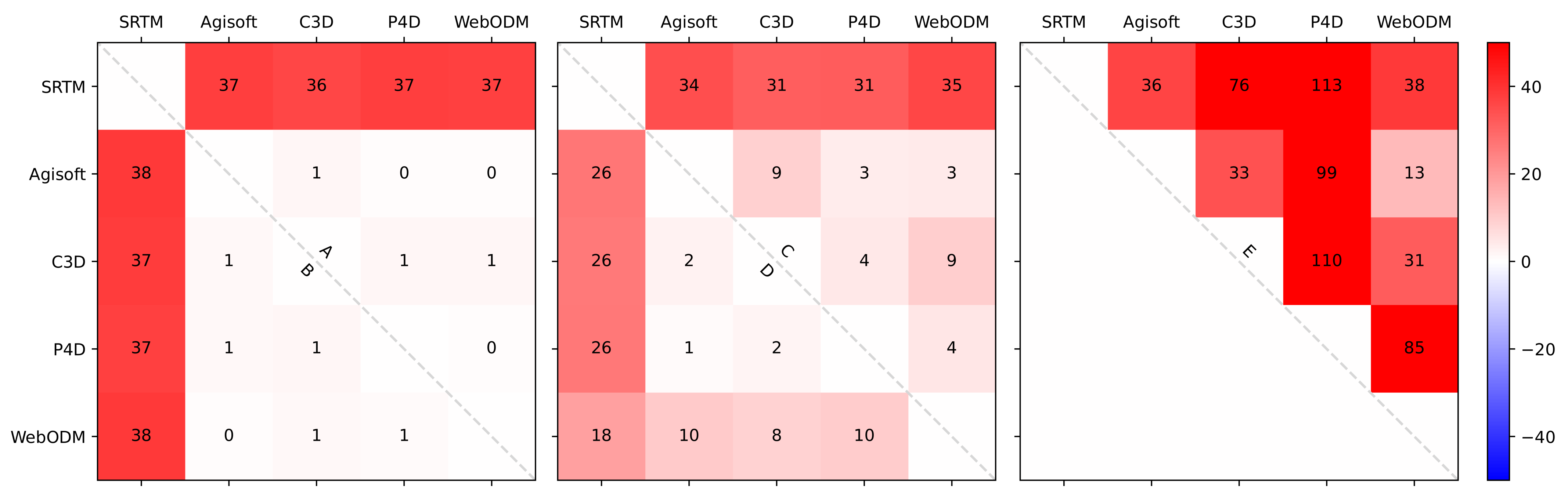

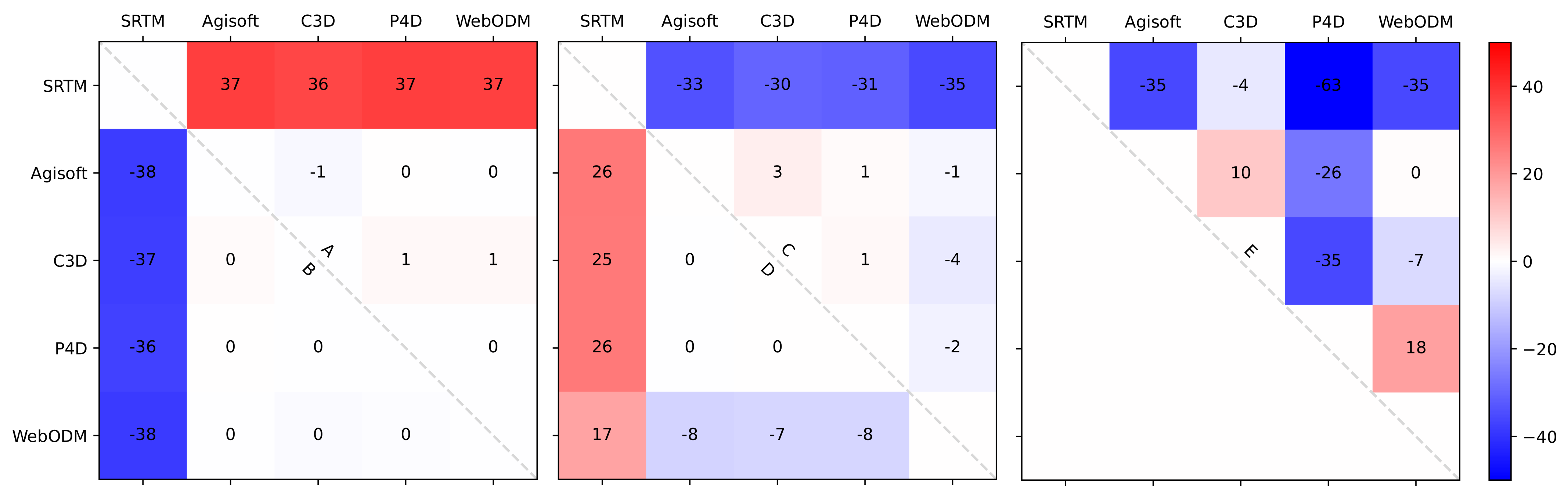
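The geographic shift calculation described in this section (polygon centroids, centroid-to-centroid distances, then a mean ± SE) can be sketched as follows. This is an illustrative sketch under stated assumptions: coordinates are taken to be in a projected system with metre units, the centroid is computed with the shoelace formula rather than with ArcGIS Pro, and the polygons are made-up squares. The names `polygon_centroid` and `mean_shift_with_se` are hypothetical.

```python
import numpy as np


def polygon_centroid(vertices):
    """Area-weighted centroid of a simple polygon (shoelace formula)."""
    pts = np.asarray(vertices, dtype=float)
    x, y = pts[:, 0], pts[:, 1]
    x_next, y_next = np.roll(x, -1), np.roll(y, -1)
    cross = x * y_next - x_next * y
    area = cross.sum() / 2.0  # signed area; the sign cancels below
    cx = ((x + x_next) * cross).sum() / (6.0 * area)
    cy = ((y + y_next) * cross).sum() / (6.0 * area)
    return np.array([cx, cy])


def mean_shift_with_se(ortho_polygons, reference_polygons):
    """Mean and standard error of the distances between paired centroids."""
    distances = np.array([
        np.linalg.norm(polygon_centroid(p) - polygon_centroid(r))
        for p, r in zip(ortho_polygons, reference_polygons)
    ])
    se = distances.std(ddof=1) / np.sqrt(len(distances))
    return distances.mean(), se


# Made-up example: two reference features and their shifted counterparts.
square = [(0, 0), (2, 0), (2, 2), (0, 2)]
reference = [square, square]
ortho = [
    [(x + 3, y + 4) for x, y in square],  # shifted by 5 m
    [(x, y + 1) for x, y in square],      # shifted by 1 m
]

mean_shift, se_shift = mean_shift_with_se(ortho, reference)
print(f"shift = {mean_shift:.2f} ± {se_shift:.2f} m")
```

Running the function separately on the centre features and on the edge features would give the two averages described in the text.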

2.5. Comparing Digital Surface Models

- (a) The mean bias error (MBE) measures the average signed difference (i.e., error) between any two DSM outputs, so it retains the direction of the error (Equation (3));

- (b) The mean absolute error (MAE) measures the average of the absolute differences between two DSM layers, where all individual differences have equal weight (Equation (4));

- (c) The root-mean-squared error (RMSE) is a quadratic scoring rule that also measures the average magnitude of the error: it is the square root of the average of the squared differences between two observations (Equation (5)).

Combining the MBE and MAE demonstrates the magnitude and direction (i.e., higher or lower) of the difference between any two DSM datasets. Combining the MAE and RMSE, on the other hand, indicates the variance of the difference (i.e., whether or not all pixels differ by a relatively uniform amount) between two DSMs.

$$\mathrm{MBE} = \frac{1}{N} \sum_{i=1}^{N} \left(x_i - y_i\right) \tag{3}$$

$$\mathrm{MAE} = \frac{1}{N} \sum_{i=1}^{N} \left|x_i - y_i\right| \tag{4}$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{N} \sum_{i=1}^{N} \left(x_i - y_i\right)^2} \tag{5}$$

where $x_i$ and $y_i$ are the pixel values (i.e., elevation) at the same location in the paired DSMs and $N$ is the total number of overlapping pixels.
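The three DSM difference metrics described above can be computed together once two DSMs share the same grid. The sketch below is a hedged illustration rather than the authors' code: it assumes both rasters have already been resampled and aligned to the same pixel grid, that missing pixels are stored as NaN, and it uses tiny made-up arrays. The function name `dsm_difference_metrics` is hypothetical.

```python
import numpy as np


def dsm_difference_metrics(dsm_a, dsm_b):
    """MBE, MAE and RMSE over the pixels that are valid in both DSMs."""
    a = np.asarray(dsm_a, dtype=float)
    b = np.asarray(dsm_b, dtype=float)
    valid = ~np.isnan(a) & ~np.isnan(b)  # overlapping pixels only
    diff = a[valid] - b[valid]
    return {
        "MBE": diff.mean(),                   # signed: keeps error direction
        "MAE": np.abs(diff).mean(),           # magnitude, equal weights
        "RMSE": np.sqrt((diff ** 2).mean()),  # penalises large differences
    }


# Made-up elevations (metres); NaN marks a pixel missing from one DSM.
dsm_a = np.array([[10.0, 12.0], [14.0, np.nan]])
dsm_b = np.array([[9.0, 13.0], [11.0, 20.0]])

metrics = dsm_difference_metrics(dsm_a, dsm_b)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Here the MBE is positive, so the first DSM sits higher on average, and the RMSE exceeds the MAE, which signals that the per-pixel differences are not uniform.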

3. Results and Discussion

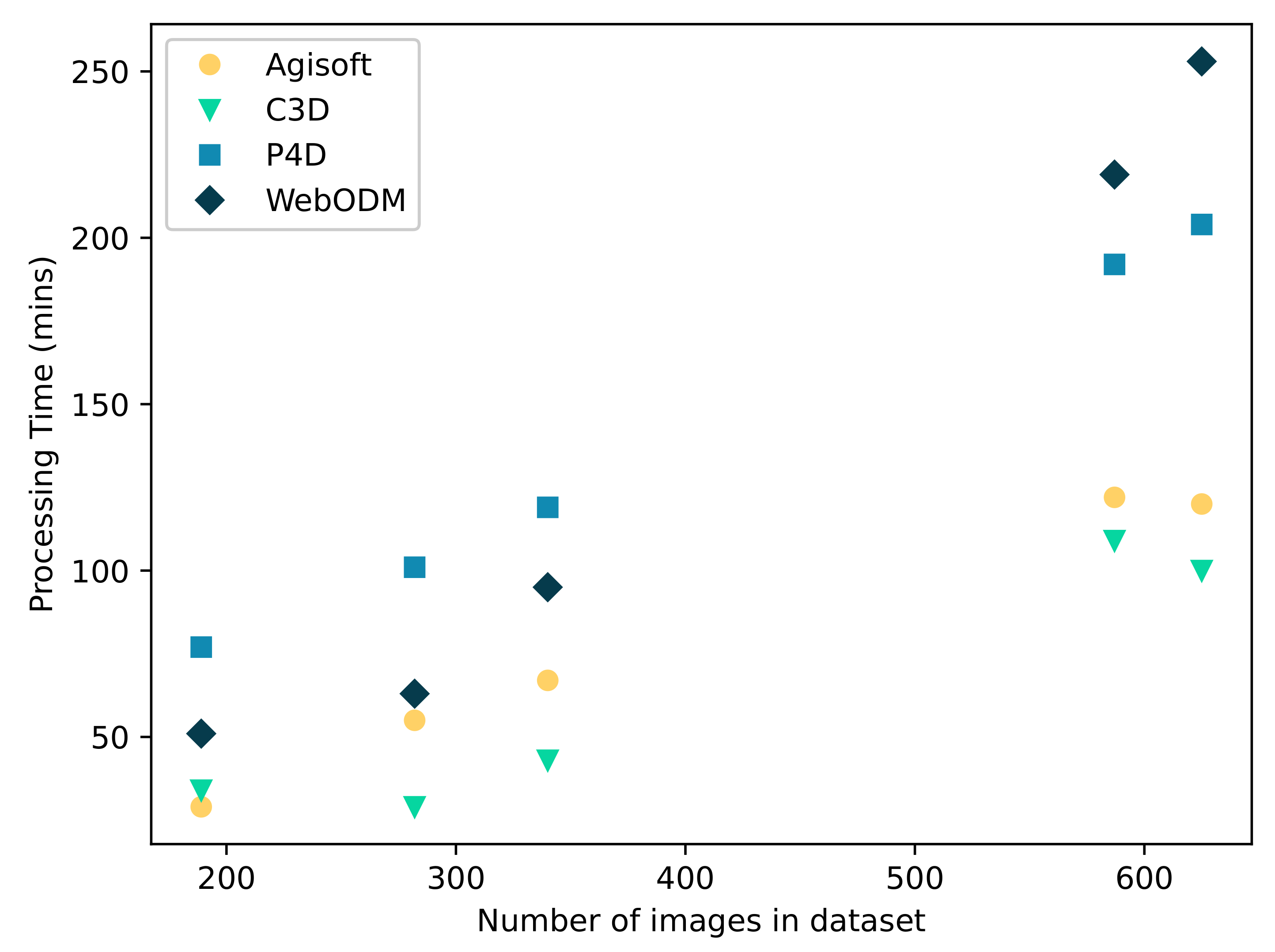

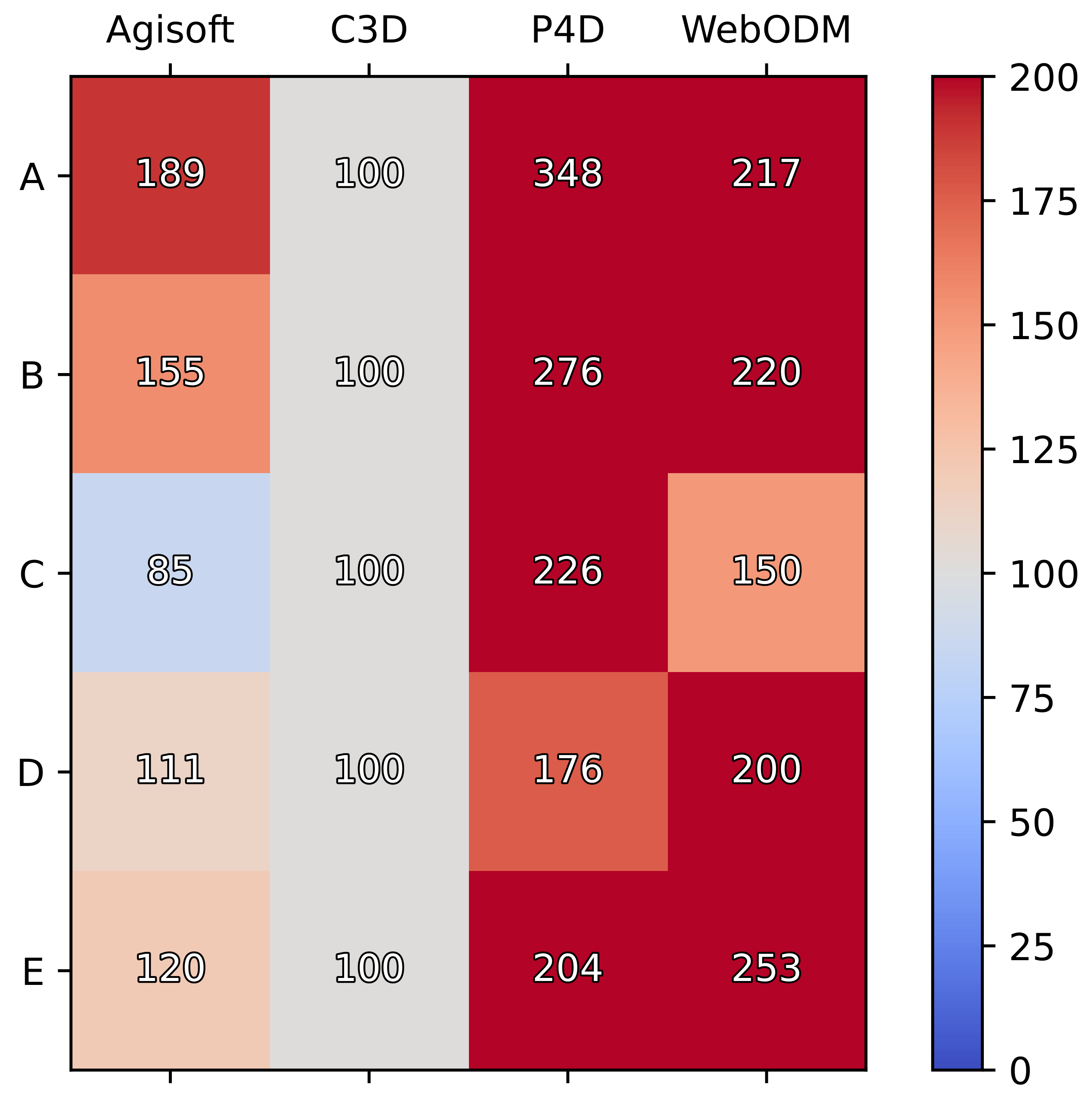

3.1. Comparing Output File Dimensions and Specifications

3.2. Comparing Orthomosaics

3.2.1. Colour Density Correlation Score

3.2.2. Geographic Shift

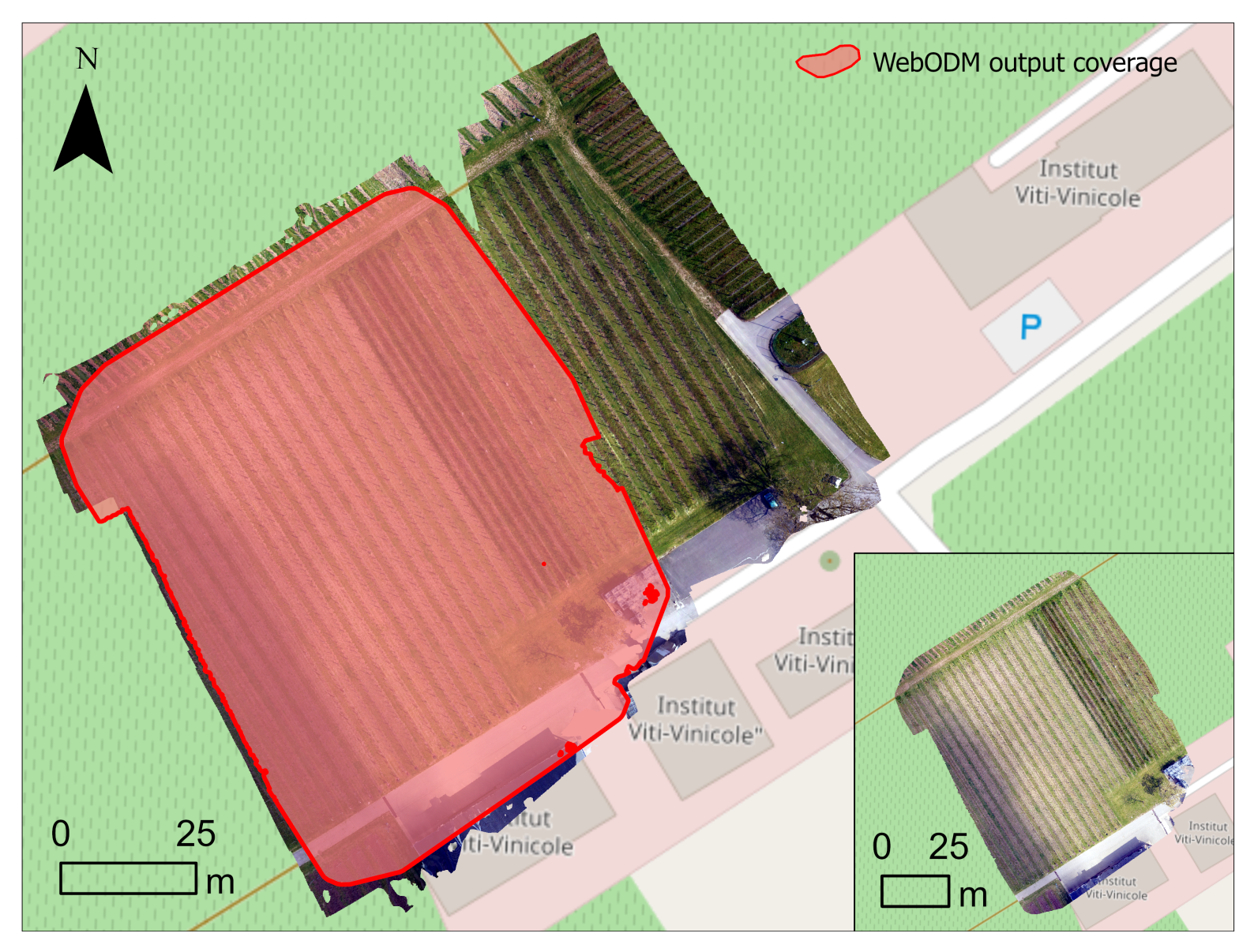

3.2.3. Visual Artefacts

3.3. Comparing Digital Surface Models

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AgiSoftMS | AgiSoft Metashape |
| C3D | Correlator3D |
| DEM | Digital elevation model |
| DSM | Digital surface model |
| DTM | Digital terrain model |
| ODM | OpenDroneMap |
| OS | Operating system |
| P4D | Pix4Dmapper |
| GCP | Ground control point |
| GNSS | Global Navigation Satellite System |
| GPS | Global Positioning System |
| GSD | Ground sampling distance |
| RTK | Real-time kinematic positioning |
| SfM | Structure-from-Motion |
| UAV | Unmanned aerial vehicle |

Appendix A

| Features | Dataset | AgiSoft | C3D | P4D | WebODM |
|---|---|---|---|---|---|
| File size (MB) | A | 63 | 134 | 482 | 3 |
| | B | 93 | 119 | 617 | 13 |
| | C | 106 | 145 | 410 | 84 |
| | D | 207 | 171 | 1590 | 242 |
| | E | 284.4 | 372.1 | 930 | 365.4 |
| X resolution (cm) | A | 3.7 | 4 | 0.76 | 5 |
| | B | 2.5 | 3.3 | 0.6 | 5 |
| | C | 8.6 | 10 | 2.1 | 5 |
| | D | 10.7 | 12.5 | 2.4 | 5 |
| | E | 10 | 12.5 | 2.55 | 5 |
| Y resolution (cm) | A | 3.7 | 4 | 0.76 | 5 |
| | B | 2.5 | 3.3 | 0.6 | 5 |
| | C | 8.2 | 10 | 2.1 | 5 |
| | D | 10.7 | 12.5 | 2.4 | 5 |
| | E | 10 | 12.5 | 2.55 | 5 |
| Coverage (m²) | A | 13,541 | 12,286 | 14,437 | 8850 |
| | B | 12,507 | 12,591 | 9043 | 11,626 |
| | C | 149,168 | 151,489 | 99,248 | 139,317 |
| | D | 537,804 | 531,526 | 534,683 | 551,744 |
| | E | 544,650 | 517,100 | 322,233 | 465,383 |
| Relative coverage (%) | A | 100 | 91 | 107 | 65 |
| | B | 100 | 101 | 72 | 93 |
| | C | 100 | 102 | 67 | 93 |
| | D | 100 | 99 | 99 | 103 |
| | E | 100 | 95 | 59 | 85 |
| Projected coordinate system | A | WGS 1984 UTM Zone 32N | WGS 1984 UTM Zone 32N | WGS 1984 UTM Zone 32N | WGS 1984 UTM Zone 32N |
| | B | WGS 1984 UTM Zone 55S | WGS 1984 UTM Zone 55S | WGS 1984 UTM Zone 55S | WGS 1984 UTM Zone 55S |
| | C | NA | WGS 1984 UTM Zone 55S | WGS 1984 UTM Zone 55S | WGS 1984 UTM Zone 55S |
| | D | WGS 1984 UTM Zone 12N | WGS 1984 UTM Zone 12N | WGS 1984 UTM Zone 12N | WGS 1984 UTM Zone 12N |
| | E | WGS 1984 UTM Zone 55S | WGS 1984 UTM Zone 55S | WGS 1984 UTM Zone 55S | WGS 1984 UTM Zone 55S |
| Geographic coordinate system | A | WGS 1984 | WGS 1984 | WGS 1984 | WGS 1984 |
| | B | WGS 1984 | WGS 1984 | WGS 1984 | WGS 1984 |
| | C | WGS 1984 | WGS 1984 | WGS 1984 | WGS 1984 |
| | D | WGS 1984 | WGS 1984 | WGS 1984 | WGS 1984 |
| | E | WGS 1984 | WGS 1984 | WGS 1984 | WGS 1984 |
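The relative coverage rows in the table above appear to express each package's coverage as a percentage of the AgiSoft coverage for the same dataset; that reading is an assumption, based on AgiSoft being 100% in every row. A short check with the Dataset A coverage values from the table reproduces the tabulated percentages:

```python
# Coverage values for Dataset A, copied from the table above.
coverage = {"AgiSoft": 13541, "C3D": 12286, "P4D": 14437, "WebODM": 8850}

# Assumption: AgiSoft is the baseline, since it is 100% in every row.
baseline = coverage["AgiSoft"]
relative = {name: round(100 * area / baseline) for name, area in coverage.items()}

print(relative)  # {'AgiSoft': 100, 'C3D': 91, 'P4D': 107, 'WebODM': 65}
```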

| Features | Dataset | AgiSoft | C3D | P4D | WebODM |
|---|---|---|---|---|---|
| File size (MB) | A | 1110 | 1520 | 617 | 12 |
| | B | 1510 | 2920 | 752 | 16 |
| | C | 1280 | 2810 | 620 | 150 |
| | D | 3270 | 3540 | 2160 | 565 |
| | E | 4290 | 6450 | 1310 | 674.2 |
| X resolution (cm) | A | 0.9 | 0.8 | 0.76 | 5 |
| | B | 0.6 | 0.6 | 0.6 | 5 |
| | C | 2.1 | 2 | 2 | 5 |
| | D | 2.7 | 0.25 | 2.4 | 5 |
| | E | 2.6 | 2.6 | 2.55 | 5 |
| Y resolution (cm) | A | 0.6 | 0.8 | 0.76 | 5 |
| | B | 0.6 | 0.6 | 0.6 | 5 |
| | C | 2.1 | 2 | 2 | 5 |
| | D | 2.3 | 2.5 | 2.4 | 5 |
| | E | 2.5 | 2.6 | 2.55 | 5 |
| Coverage (m²) | A | 13,439 | 12,180 | 13,558 | 8640 |
| | B | 12,500 | 11,186 | 8833 | 11,584 |
| | C | 148,473 | 146,799 | 99,288 | 138,452 |
| | D | 536,672 | 532,164 | 532,849 | 549,017 |
| | E | 542,947 | 510,800 | 304,165 | 461,166 |
| Relative coverage (%) | A | 100 | 91 | 101 | 64 |
| | B | 100 | 89 | 71 | 93 |
| | C | 100 | 99 | 67 | 93 |
| | D | 100 | 99 | 99 | 102 |
| | E | 100 | 94 | 56 | 85 |
| Projected coordinate system | A | WGS 1984 UTM Zone 32N | WGS 1984 UTM Zone 32N | WGS 1984 UTM Zone 32N | WGS 1984 UTM Zone 32N |
| | B | WGS 1984 UTM Zone 55S | WGS 1984 UTM Zone 55S | WGS 1984 UTM Zone 55S | WGS 1984 UTM Zone 55S |
| | C | NA | WGS 1984 UTM Zone 55S | WGS 1984 UTM Zone 55S | WGS 1984 UTM Zone 55S |
| | D | WGS 1984 UTM Zone 12N | WGS 1984 UTM Zone 12N | WGS 1984 UTM Zone 12N | WGS 1984 UTM Zone 12N |
| | E | WGS 1984 UTM Zone 55S | WGS 1984 UTM Zone 55S | WGS 1984 UTM Zone 55S | WGS 1984 UTM Zone 55S |
| Geographic coordinate system | A | WGS 1984 | WGS 1984 | WGS 1984 | WGS 1984 |
| | B | WGS 1984 | WGS 1984 | WGS 1984 | WGS 1984 |
| | C | WGS 1984 | WGS 1984 | WGS 1984 | WGS 1984 |
| | D | WGS 1984 | WGS 1984 | WGS 1984 | WGS 1984 |
| | E | WGS 1984 | WGS 1984 | WGS 1984 | WGS 1984 |

References

1. Anderson, K.; Westoby, M.J.; James, M.R. Low-Budget Topographic Surveying Comes of Age: Structure from Motion Photogrammetry in Geography and the Geosciences. Prog. Phys. Geogr. Earth Environ. 2019, 43, 163–173.

2. Joyce, K.; Duce, S.; Leahy, S.; Leon, J.; Maier, S. Principles and Practice of Acquiring Drone-Based Image Data in Marine Environments. Mar. Freshw. Res. 2019, 70, 952–963.

3. Anderson, K.; Gaston, K.J. Lightweight Unmanned Aerial Vehicles Will Revolutionize Spatial Ecology. Front. Ecol. Environ. 2013, 11, 138–146.

4. Barnetson, J.; Phinn, S.; Scarth, P. Mapping Woody Vegetation Cover across Australia’s Arid Rangelands: Utilising a Machine-Learning Classification and Low-Cost Remotely Piloted Aircraft System. Int. J. Appl. Earth Obs. Geoinf. 2019, 83, 101909.

5. Almeida, A.; Gonçalves, F.; Silva, G.; Mendonça, A.; Gonzaga, M.; Silva, J.; Souza, R.; Leite, I.; Neves, K.; Boeno, M.; et al. Individual Tree Detection and Qualitative Inventory of a Eucalyptus Sp. Stand Using UAV Photogrammetry Data. Remote Sens. 2021, 13, 3655.

6. Talucci, A.C.; Forbath, E.; Kropp, H.; Alexander, H.D.; DeMarco, J.; Paulson, A.K.; Zimov, N.S.; Zimov, S.; Loranty, M.M. Evaluating Post-Fire Vegetation Recovery in Cajander Larch Forests in Northeastern Siberia Using UAV Derived Vegetation Indices. Remote Sens. 2020, 12, 2970.

7. Furukawa, F.; Laneng, L.A.; Ando, H.; Yoshimura, N.; Kaneko, M.; Morimoto, J. Comparison of RGB and Multispectral Unmanned Aerial Vehicle for Monitoring Vegetation Coverage Changes on a Landslide Area. Drones 2021, 5, 97.

8. Lam, O.H.Y.; Dogotari, M.; Prüm, M.; Vithlani, H.N.; Roers, C.; Melville, B.; Zimmer, F.; Becker, R. An Open Source Workflow for Weed Mapping in Native Grassland Using Unmanned Aerial Vehicle: Using Rumex Obtusifolius as a Case Study. Eur. J. Remote Sens. 2021, 54, 71–88.

9. Hsu, A.J.; Kumagai, J.; Favoretto, F.; Dorian, J.; Guerrero Martinez, B.; Aburto-Oropeza, O. Driven by Drones: Improving Mangrove Extent Maps Using High-Resolution Remote Sensing. Remote Sens. 2020, 12, 3986.

10. Cohen, M.C.L.; de Souza, A.V.; Liu, K.B.; Rodrigues, E.; Yao, Q.; Ryu, J.; Dietz, M.; Pessenda, L.C.R.; Rossetti, D. Effects of the 2017–2018 Winter Freeze on the Northern Limit of the American Mangroves, Mississippi River Delta Plain. Geomorphology 2021, 394, 107968.

11. Cohen, M.C.L.; de Souza, A.V.; Liu, K.B.; Rodrigues, E.; Yao, Q.; Pessenda, L.C.R.; Rossetti, D.; Ryu, J.; Dietz, M. Effects of Beach Nourishment Project on Coastal Geomorphology and Mangrove Dynamics in Southern Louisiana, USA. Remote Sens. 2021, 13, 2688.

12. Windle, A.E.; Poulin, S.K.; Johnston, D.W.; Ridge, J.T. Rapid and Accurate Monitoring of Intertidal Oyster Reef Habitat Using Unoccupied Aircraft Systems and Structure from Motion. Remote Sens. 2019, 11, 2394.

13. Fallati, L.; Saponari, L.; Savini, A.; Marchese, F.; Corselli, C.; Galli, P. Multi-Temporal UAV Data and Object-Based Image Analysis (OBIA) for Estimation of Substrate Changes in a Post-Bleaching Scenario on a Maldivian Reef. Remote Sens. 2020, 12, 2093.

14. David, C.G.; Kohl, N.; Casella, E.; Rovere, A.; Ballesteros, P.; Schlurmann, T. Structure-from-Motion on Shallow Reefs and Beaches: Potential and Limitations of Consumer-Grade Drones to Reconstruct Topography and Bathymetry. Coral Reefs 2021, 40, 835–851.

15. Laporte-Fauret, Q.; Marieu, V.; Castelle, B.; Michalet, R.; Bujan, S.; Rosebery, D. Low-Cost UAV for High-Resolution and Large-Scale Coastal Dune Change Monitoring Using Photogrammetry. J. Mar. Sci. Eng. 2019, 7, 63.

16. Fabbri, S.; Grottoli, E.; Armaroli, C.; Ciavola, P. Using High-Spatial Resolution UAV-Derived Data to Evaluate Vegetation and Geomorphological Changes on a Dune Field Involved in a Restoration Endeavour. Remote Sens. 2021, 13, 1987.

17. Rende, S.F.; Bosman, A.; Di Mento, R.; Bruno, F.; Lagudi, A.; Irving, A.D.; Dattola, L.; Giambattista, L.D.; Lanera, P.; Proietti, R.; et al. Ultra-High-Resolution Mapping of Posidonia Oceanica (L.) Delile Meadows through Acoustic, Optical Data and Object-based Image Classification. J. Mar. Sci. Eng. 2020, 8, 647.

18. Benjamin, A.R.; Abd-Elrahman, A.; Gettys, L.A.; Hochmair, H.H.; Thayer, K. Monitoring the Efficacy of Crested Floatingheart (Nymphoides Cristata) Management with Object-Based Image Analysis of UAS Imagery. Remote Sens. 2021, 13, 830.

19. Higgisson, W.; Cobb, A.; Tschierschke, A.; Dyer, F. Estimating the Cover of Phragmites Australis Using Unmanned Aerial Vehicles and Neural Networks in a Semi-Arid Wetland. River Res. Appl. 2021, 37, 1312–1322.

20. Papp, L.; van Leeuwen, B.; Szilassi, P.; Tobak, Z.; Szatmári, J.; Árvai, M.; Mészáros, J.; Pásztor, L. Monitoring Invasive Plant Species Using Hyperspectral Remote Sensing Data. Land 2021, 10, 29.

21. Drever, M.C.; Chabot, D.; O’Hara, P.D.; Thomas, J.D.; Breault, A.; Millikin, R.L. Evaluation of an Unmanned Rotorcraft to Monitor Wintering Waterbirds and Coastal Habitats in British Columbia, Canada. J. Unmanned Veh. Syst. Virtual Issue 2016, 1, 256–267.

22. Oosthuizen, W.C.; Krüger, L.; Jouanneau, W.; Lowther, A.D. Unmanned Aerial Vehicle (UAV) Survey of the Antarctic Shag (Leucocarbo Bransfieldensis) Breeding Colony at Harmony Point, Nelson Island, South Shetland Islands. Polar Biol. 2020, 43, 187–191.

23. Mustafa, O.; Braun, C.; Esefeld, J.; Knetsch, S.; Maercker, J.; Pfeifer, C.; Rümmler, M.C. Detecting Antarctic Seals and Flying Seabirds by UAV. ISPRS Ann. Photogramm. Remote Sens. Spatial Inf. Sci. 2019, IV-2/W5, 141–148.

24. Mhango, J.K.; Harris, E.W.; Green, R.; Monaghan, J.M. Mapping Potato Plant Density Variation Using Aerial Imagery and Deep Learning Techniques for Precision Agriculture. Remote Sens. 2021, 13, 2705.

25. Tsouros, D.C.; Terzi, A.; Bibi, S.; Vakouftsi, F.; Pantzios, V. Towards a Fully Open-Source System for Monitoring of Crops with UAVs in Precision Agriculture. In Proceedings of the 24th Pan-Hellenic Conference on Informatics, Athens, Greece, 20–22 November 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 322–326.

26. Gallardo-Salazar, J.L.; Pompa-García, M. Detecting Individual Tree Attributes and Multispectral Indices Using Unmanned Aerial Vehicles: Applications in a Pine Clonal Orchard. Remote Sens. 2020, 12, 4144.

27. Kucharczyk, M.; Hugenholtz, C.H. Pre-Disaster Mapping with Drones: An Urban Case Study in Victoria, British Columbia, Canada. Nat. Hazards Earth Syst. Sci. 2019, 19, 2039–2051.

28. Jiménez-Jiménez, S.I.; Ojeda-Bustamante, W.; Ontiveros-Capurata, R.E.; Marcial-Pablo, M.d.J. Rapid Urban Flood Damage Assessment Using High Resolution Remote Sensing Data and an Object-Based Approach. Geomat. Nat. Hazards Risk 2020, 11, 906–927.

29. Berra, E.F.; Peppa, M.V. Advances and Challenges of UAV SfM MVS Photogrammetry and Remote Sensing: Short Review. In The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences; Copernicus GmbH: Göttingen, Germany, 2020; Volume XLII-3-W12-2020, pp. 267–272.

30. Xin, Y.; Li, J.; Cheng, Q. Automatic Generation of Remote Sensing Image Mosaics for Mapping Large Natural Hazards Areas. In Geomatics Solutions for Disaster Management; Li, J., Zlatanova, S., Fabbri, A.G., Eds.; Lecture Notes in Geoinformation and Cartography; Springer: Berlin/Heidelberg, Germany, 2007; pp. 61–73.

31. Turner, D.; Lucieer, A.; Watson, C. An Automated Technique for Generating Georectified Mosaics from Ultra-High Resolution Unmanned Aerial Vehicle (UAV) Imagery, Based on Structure from Motion (SfM) Point Clouds. Remote Sens. 2012, 4, 1392–1410.

32. James, M.; Robson, S.; d’Oleire-Oltmanns, S.; Niethammer, U. Optimising UAV Topographic Surveys Processed with Structure-from-Motion: Ground Control Quality, Quantity and Bundle Adjustment. Geomorphology 2017, 280, 51–66.

33. Tmušić, G.; Manfreda, S.; Aasen, H.; James, M.R.; Gonçalves, G.; Ben-Dor, E.; Brook, A.; Polinova, M.; Arranz, J.J.; Mészáros, J.; et al. Current Practices in UAS-based Environmental Monitoring. Remote Sens. 2020, 12, 1001.

34. Smith, M.; Carrivick, J.; Quincey, D. Structure from Motion Photogrammetry in Physical Geography. Prog. Phys. Geogr. Earth Environ. 2016, 40, 247–275.

35. Brach, M.; Chan, J.C.W.; Szymanski, P. Accuracy Assessment of Different Photogrammetric Software for Processing Data from Low-Cost UAV Platforms in Forest Conditions. iForest Biogeosci. For. 2019, 12, 435.

36. Gross, J.W.; Heumann, B.W. A Statistical Examination of Image Stitching Software Packages for Use with Unmanned Aerial Systems. Photogramm. Eng. Remote Sens. 2016, 82, 419–425.

37. Casella, V.; Chiabrando, F.; Franzini, M.; Manzino, A.M. Accuracy Assessment of a UAV Block by Different Software Packages, Processing Schemes and Validation Strategies. ISPRS Int. J. Geo-Inf. 2020, 9, 164.

38. Jiang, S.; Jiang, C.; Jiang, W. Efficient Structure from Motion for Large-Scale UAV Images: A Review and a Comparison of SfM Tools. ISPRS J. Photogramm. Remote Sens. 2020, 167, 230–251.

39. Chen, P.F.; Xu, X.G. A Comparison of Photogrammetric Software Packages for Mosaicking Unmanned Aerial Vehicle (UAV) Images in Agricultural Application. Acta Agron. Sin. 2020, 46, 1112–1119.

40. Pix4D. Pix4Dmapper; Pix4D: Denver, CO, USA, 2017; Available online: https://support.pix4d.com/hc/en-us/articles/202557839-Interface (accessed on 13 December 2021).

41. AgiSoft LLC. AgiSoft Metashape; AgiSoft LLC: St. Petersburg, Russia, 2021; Available online: http://agisoft.ca/ (accessed on 13 December 2021).

42. SimActive. Correlator 3D; SimActive: Montreal, QC, Canada, 2021; Available online: https://www.simactive.com/ (accessed on 13 December 2021).

43. OpenDroneMap. Web Open Drone Map (ODM). Available online: https://www.opendronemap.org/ (accessed on 13 December 2021).

44. Geonadir. Available online: https://data.geonadir.com/ (accessed on 13 December 2021).

45. AgiSoft LLC. AgiSoft Metashape User Manual—Professional Edition, Version 1.5; AgiSoft LLC: St. Petersburg, Russia, 2019.

46. SimActive. Correlator3D™ User Manual, Version 8.3.0; SimActive: Montréal, QC, Canada, 2019.

47. Pix4D. Pix4Dmapper User Manual, Version 4.1; Pix4D: Denver, CO, USA, 2017; Available online: https://support.pix4d.com/hc/en-us/articles/204272989-Offline-Getting-Started-and-Manual-pdf (accessed on 13 December 2021).

48. OpenDroneMap. ODM-A Command Line Toolkit to Generate Maps, Point Clouds, 3D Models and DEMs from Drone, Balloon or Kite Images; OpenDroneMap: Gurugram, India, 2020; Available online: https://opendronemap.org (accessed on 13 December 2021).

49. Bruscolini, M. Vineyard in Luxembourg. Available online: https://data.geonadir.com/project-details/341 (accessed on 13 December 2021).

50. Joyce, K.E.; Koci, J.; Duce, S. SE Pelorus March 2021 Part 1. Available online: https://data.geonadir.com/project-details/139 (accessed on 13 December 2021).

51. Joyce, K.E. Trinity Park January 2021. Available online: https://data.geonadir.com/project-details/98 (accessed on 13 December 2021).

52. Rogers, D. Tucson Arizona. Available online: https://data.geonadir.com/project-details/353 (accessed on 13 December 2021).

53. Hale, M. Lung Island Annan River Yuku Baja. Available online: https://data.geonadir.com/project-details/523 (accessed on 13 December 2021).

54. Esri Inc. ArcGIS Pro; Esri Inc.: Redlands, CA, USA, 2019.

55. Van Rossum, G.; Drake, F.L. Python 3 Reference Manual; CreateSpace: Scotts Valley, CA, USA, 2009.

56. Bradski, G. The OpenCV Library. Dr. Dobb’s J. Softw. Tools 2000, 25, 120–123.

57. Esri. World Imagery—Overview. Available online: https://www.arcgis.com/home/item.html?id=10df2279f9684e4a9f6a7f08febac2a9 (accessed on 13 December 2021).

58. Wechsler, S.P. Uncertainties Associated with Digital Elevation Models for Hydrologic Applications: A Review. Hydrol. Earth Syst. Sci. Discuss. 2007, 11, 1481–1500.

59. Earth Resources Observation and Science (EROS) Center. Shuttle Radar Topography Mission (SRTM) 1 Arc-Second Global. 2017. Available online: https://www.usgs.gov/centers/eros/science/usgs-eros-archive-digital-elevation-shuttle-radar-topography-mission-srtm-1?qt-science_center_objects=0#qt-science_center_objects (accessed on 13 December 2021).

60. Szypuła, B. Quality Assessment of DEM Derived from Topographic Maps for Geomorphometric Purposes. Open Geosci. 2019, 11, 843–865.

61. Sanz-Ablanedo, E.; Chandler, J.H.; Rodríguez-Pérez, J.R.; Ordóñez, C. Accuracy of Unmanned Aerial Vehicle (UAV) and SfM Photogrammetry Survey as a Function of the Number and Location of Ground Control Points Used. Remote Sens. 2018, 10, 1606.

62. Tonkin, T.N.; Midgley, N.G. Ground-Control Networks for Image Based Surface Reconstruction: An Investigation of Optimum Survey Designs Using UAV Derived Imagery and Structure-from-Motion Photogrammetry. Remote Sens. 2016, 8, 786.

63. Agüera-Vega, F.; Carvajal-Ramírez, F.; Martínez-Carricondo, P. Accuracy of Digital Surface Models and Orthophotos Derived from Unmanned Aerial Vehicle Photogrammetry. J. Surv. Eng. 2017, 143, 04016025.

64. Hutton, J.J.; Lipa, G.; Baustian, D.; Sulik, J.; Bruce, R.W. High Accuracy Direct Georeferencing of the Altum Multi-Spectral UAV Camera and Its Application to High Throughput Plant Phenotyping. In The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences; Copernicus GmbH: Göttingen, Germany, 2020; Volume XLIII-B1-2020, pp. 451–456.

65. Hugenholtz, C.; Brown, O.; Walker, J.; Barchyn, T.; Nesbit, P.; Kucharczyk, M.; Myshak, S. Spatial Accuracy of UAV-Derived Orthoimagery and Topography: Comparing Photogrammetric Models Processed with Direct Geo-Referencing and Ground Control Points. Geomatica 2016, 70, 21–30.

66. Turner, D.; Lucieer, A.; Wallace, L. Direct Georeferencing of Ultrahigh-Resolution UAV Imagery. IEEE Trans. Geosci. Remote Sens. 2014, 52, 2738–2745.

67. Mandlburger, G.; Pfennigbauer, M.; Schwarz, R.; Flöry, S.; Nussbaumer, L. Concept and Performance Evaluation of a Novel UAV-Borne Topo-Bathymetric LiDAR Sensor. Remote Sens. 2020, 12, 986.

68. Vélez-Nicolás, M.; García-López, S.; Barbero, L.; Ruiz-Ortiz, V.; Sánchez-Bellón, Á. Applications of Unmanned Aerial Systems (UASs) in Hydrology: A Review. Remote Sens. 2021, 13, 1359.

69. Liao, J.; Zhou, J.; Yang, W. Comparing LiDAR and SfM Digital Surface Models for Three Land Cover Types. Open Geosci. 2021, 13, 497–504.

70. Rogers, S.R.; Manning, I.; Livingstone, W. Comparing the Spatial Accuracy of Digital Surface Models from Four Unoccupied Aerial Systems: Photogrammetry Versus LiDAR. Remote Sens. 2020, 12, 2806.

71. Ekaso, D.; Nex, F.; Kerle, N. Accuracy Assessment of Real-Time Kinematics (RTK) Measurements on Unmanned Aerial Vehicles (UAV) for Direct Geo-Referencing. Geo-Spat. Inf. Sci. 2020, 23, 165–181.

| Dataset | Common Features | No. of Images | Drone | Sensor | Array Size |
|---|---|---|---|---|---|
| A | Agricultural crops, road | 282 | DJI Phantom 3 Standard | 1/2.3″ CMOS | 4000 × 3000 |
| B | Water, coral reef | 340 | DJI Phantom 4 Pro | 1″ CMOS | 5472 × 3648 |
| C | Mangroves, tree, beach, water, road, residential buildings | 189 | DJI Phantom 4 Pro | 1″ CMOS | 4864 × 3648 |
| D | Road, cars, residential buildings | 587 | Autel Robotics Evo II Pro | 1″ CMOS | 5472 × 3648 |
| E | River, tree, forest | 625 | DJI Phantom 4 Pro | 1″ CMOS | 5472 × 3648 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Pell, T.; Li, J.Y.Q.; Joyce, K.E. Demystifying the Differences between Structure-from-Motion Software Packages for Pre-Processing Drone Data. Drones 2022, 6, 24. https://doi.org/10.3390/drones6010024
