A 3D Vision Cone Based Method for Collision Free Navigation of a Quadcopter UAV among Moving Obstacles

Abstract

1. Introduction

- (1)

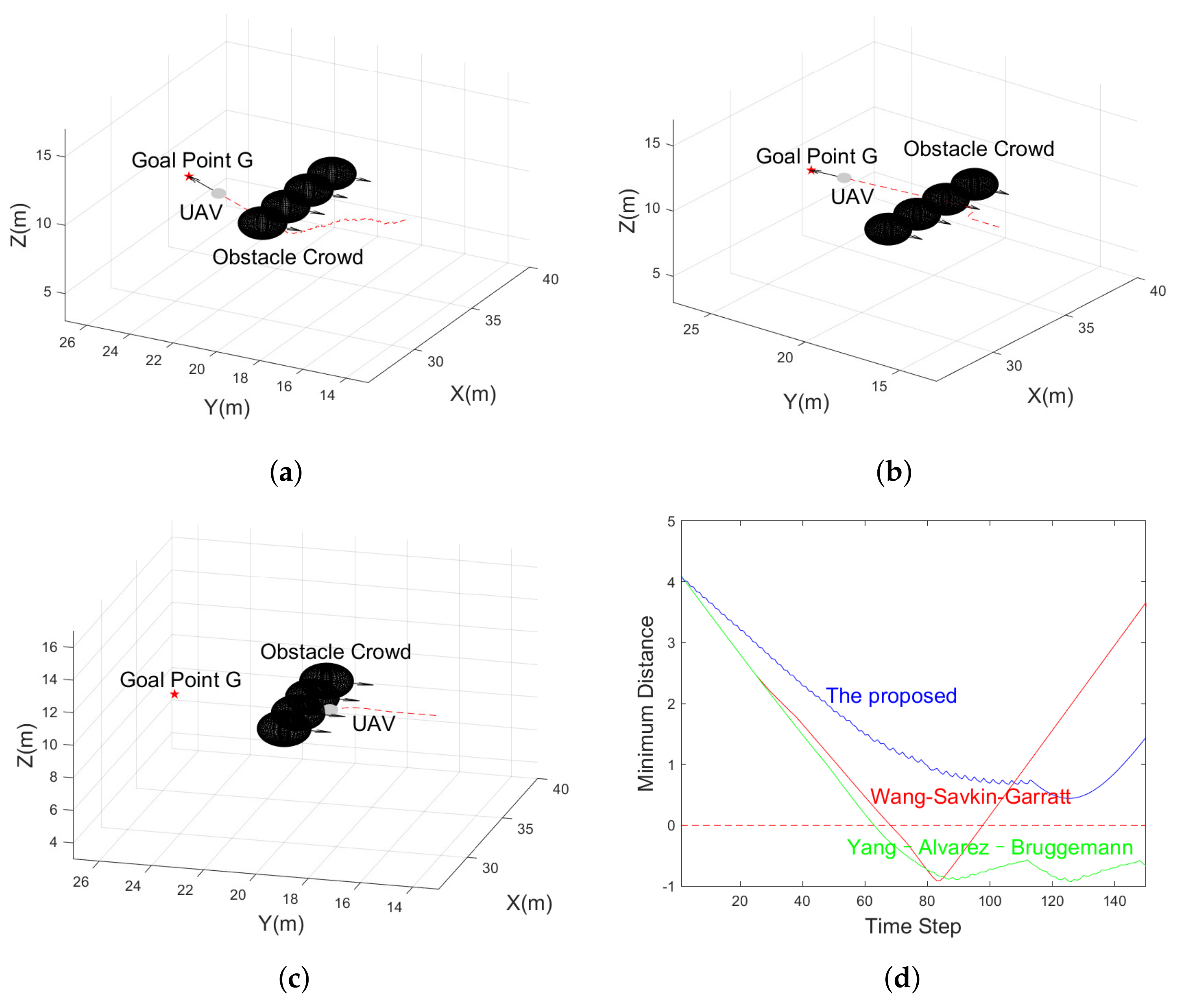

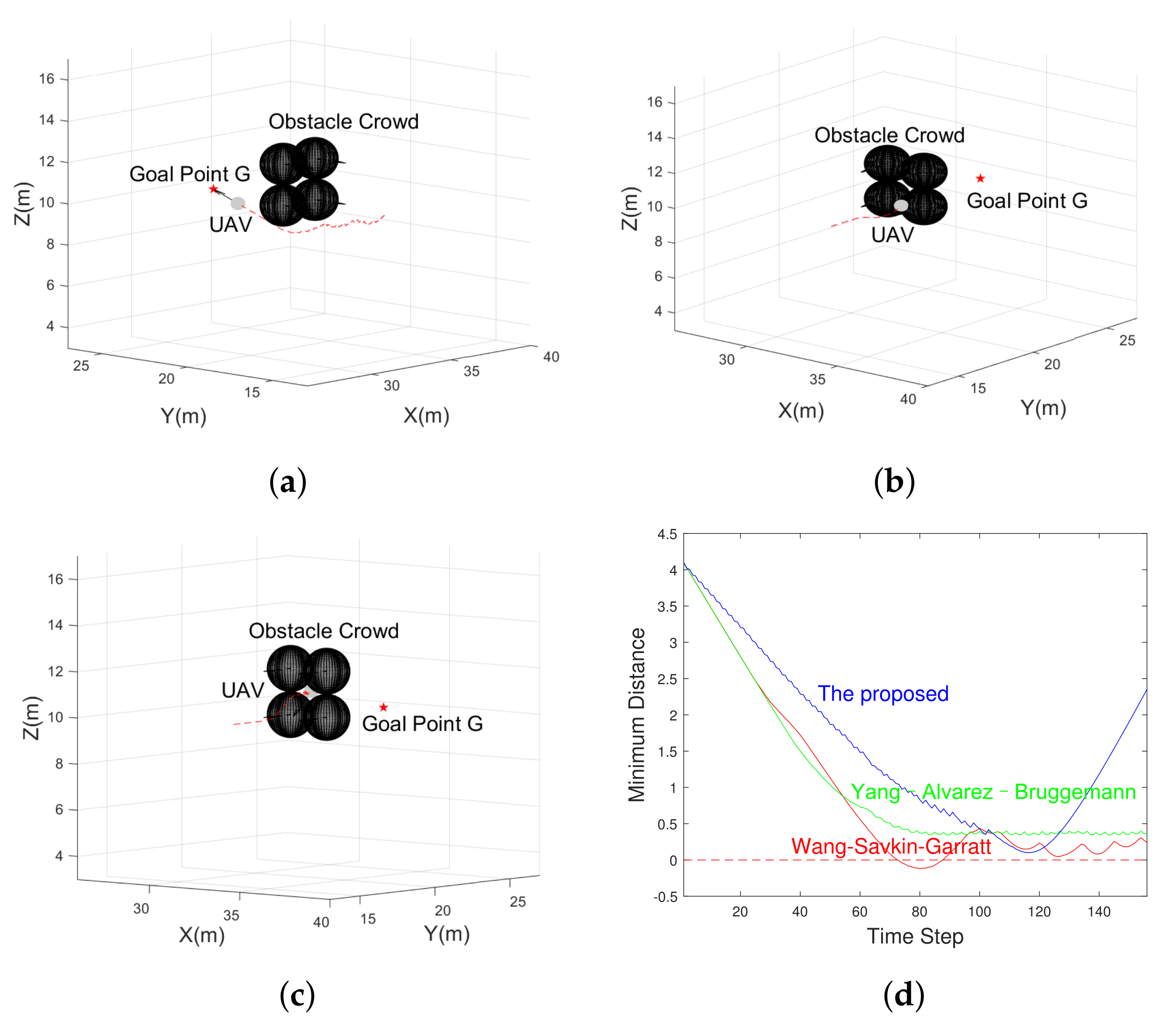

- Two existing 3D navigation algorithms are simulated in different obstacles settings, and their drawbacks are pointed out.

- (2)

- A 3D vision cone-based navigation algorithm is proposed, enabling the UAV to seek a path through crowd-spaced obstacles in the unknown dynamic environments and non-cooperative scenarios.

- (3)

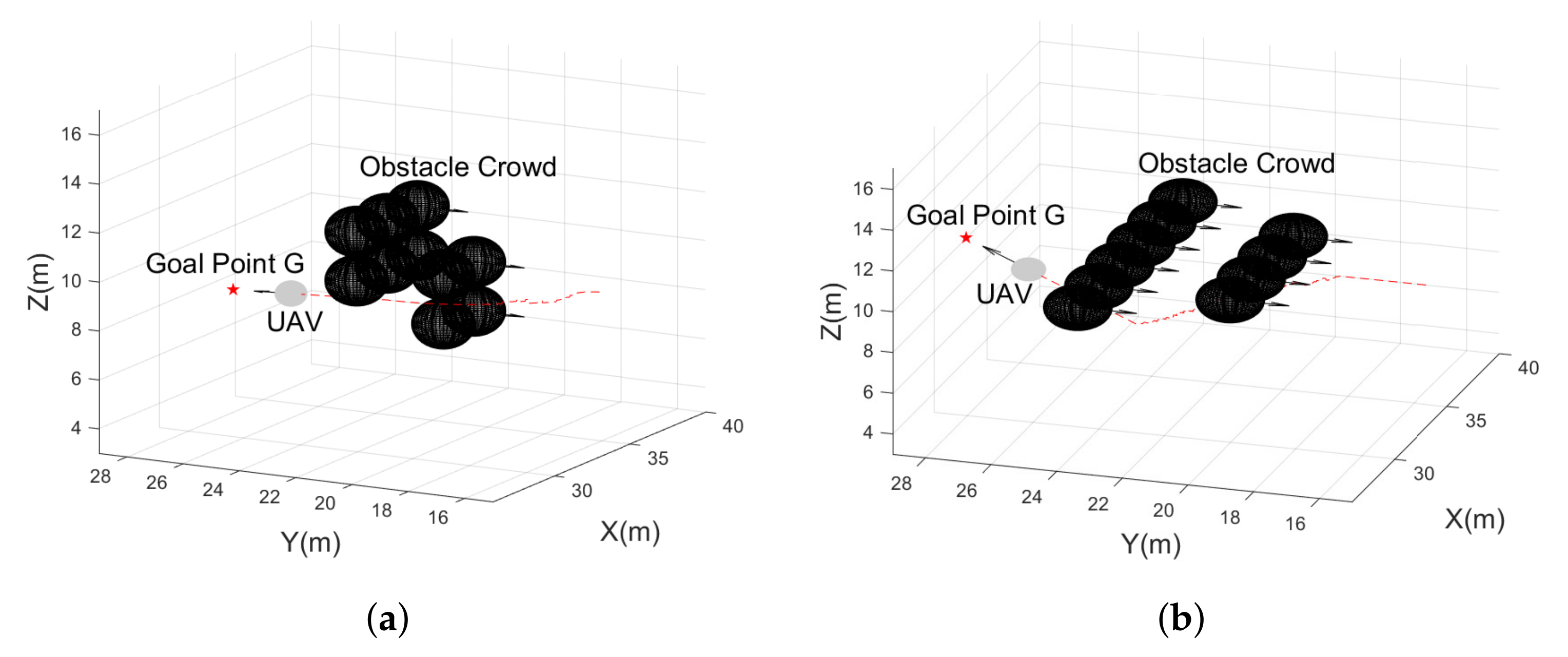

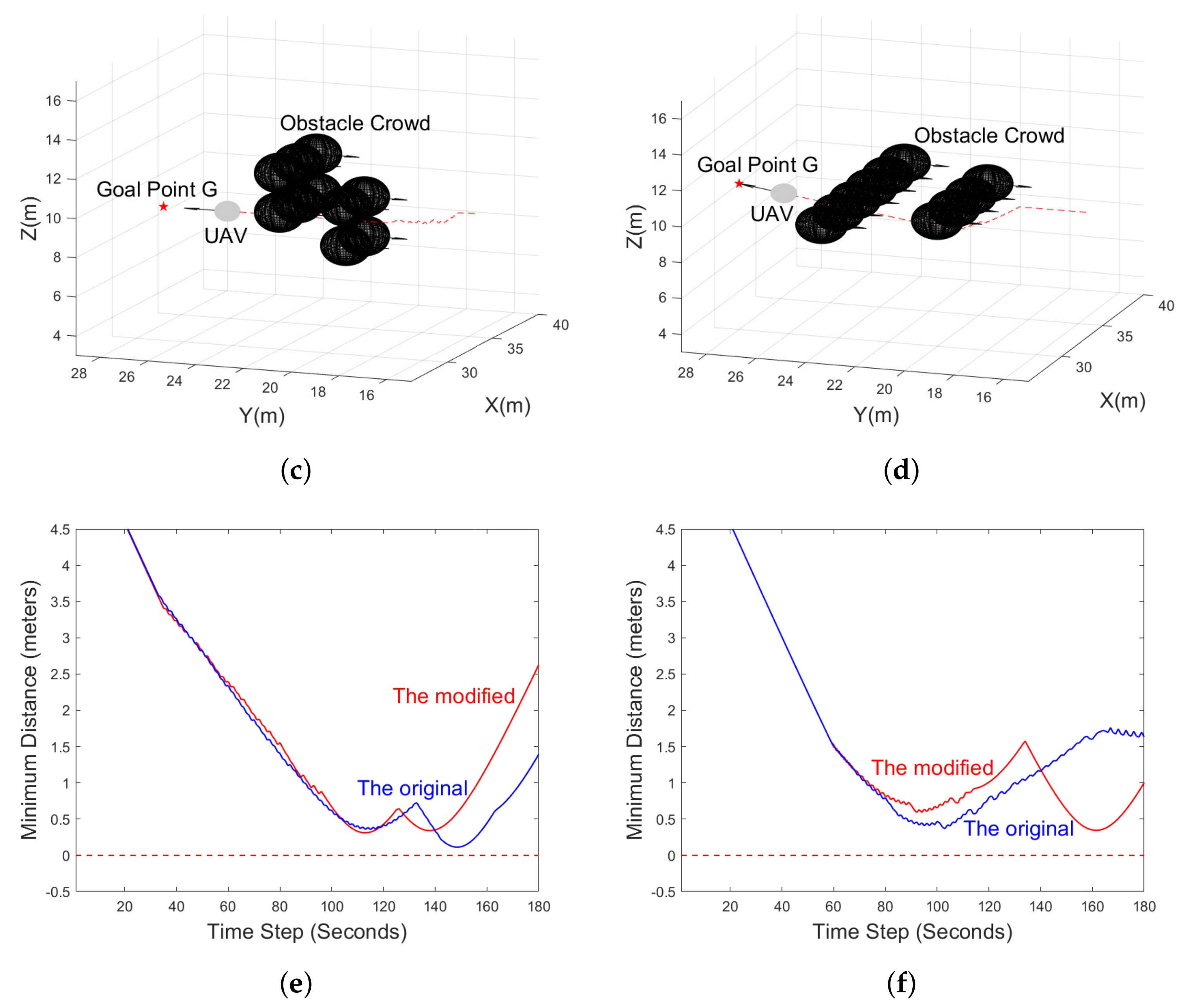

- Several simulations are conducted in MATLAB to compare the proposed algorithm with the other two state-of-the-art navigation algorithms in different unknown dynamic environments. As a result, the feasibility and superiority of the proposed 3D navigation algorithm are verified.

- (4)

- A modified idea of the proposed navigation algorithm is studied to improve the algorithm’s navigation performance further.
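The contributions name a 3D vision cone-based algorithm, but its geometry is not spelled out here. The Python sketch below shows one generic way a vision-cone test can be used to pick a collision-free heading. It is not the authors' control law and rests on stated assumptions: obstacles are spheres inflated by a safety margin, each obstacle is taken at its current position (a snapshot with no motion prediction), a cone is defined by its apex at the UAV, a unit axis and a half-angle, and candidate cone axes are sampled at random. The names `cone_blocked` and `pick_direction` and all parameters are hypothetical.

```python
import numpy as np


def cone_blocked(apex, axis, half_angle, center, radius):
    """Hypothetical helper: True if a sphere (center, radius) intersects the
    infinite cone with the given apex, unit axis and half-angle (radians)."""
    d = np.asarray(center, dtype=float) - np.asarray(apex, dtype=float)
    dist = np.linalg.norm(d)
    if dist <= radius:
        return True  # the apex (UAV) is already inside the inflated obstacle
    # Angle between the cone axis and the line of sight to the sphere centre.
    cos_theta = np.dot(d, axis) / dist
    theta = np.arccos(np.clip(cos_theta, -1.0, 1.0))
    # Angular radius of the sphere as seen from the apex.
    alpha = np.arcsin(radius / dist)
    # The cone and the sphere's visual cap overlap iff their angular
    # distance is at most the sum of their angular radii.
    return theta <= half_angle + alpha


def pick_direction(pos, goal, obstacles, half_angle, margin,
                   n_samples=2000, seed=0):
    """Hypothetical heading selection: among the goal direction and randomly
    sampled unit directions, return the unobstructed one closest to the goal
    direction, or None if every candidate cone is blocked.
    `obstacles` is a list of (center, radius) pairs."""
    pos = np.asarray(pos, dtype=float)
    to_goal = np.asarray(goal, dtype=float) - pos
    to_goal /= np.linalg.norm(to_goal)
    rng = np.random.default_rng(seed)
    samples = rng.normal(size=(n_samples, 3))
    samples /= np.linalg.norm(samples, axis=1, keepdims=True)
    candidates = np.vstack([to_goal, samples])  # goal direction is tried first
    free = [c for c in candidates
            if not any(cone_blocked(pos, c, half_angle, center, r + margin)
                       for center, r in obstacles)]
    if not free:
        return None
    free = np.array(free)
    return free[np.argmax(free @ to_goal)]  # largest cosine to the goal direction
```

For example, with a sphere of radius 1 centred 5 units ahead on the straight line to the goal, a 10° half-angle and a 0.5 margin, the goal direction is rejected and the returned heading is deflected just outside the blocked angular region. Random sampling keeps the sketch short; a structured set of cone axes would give more uniform angular coverage.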

2. Related Work

3. Problem Statement

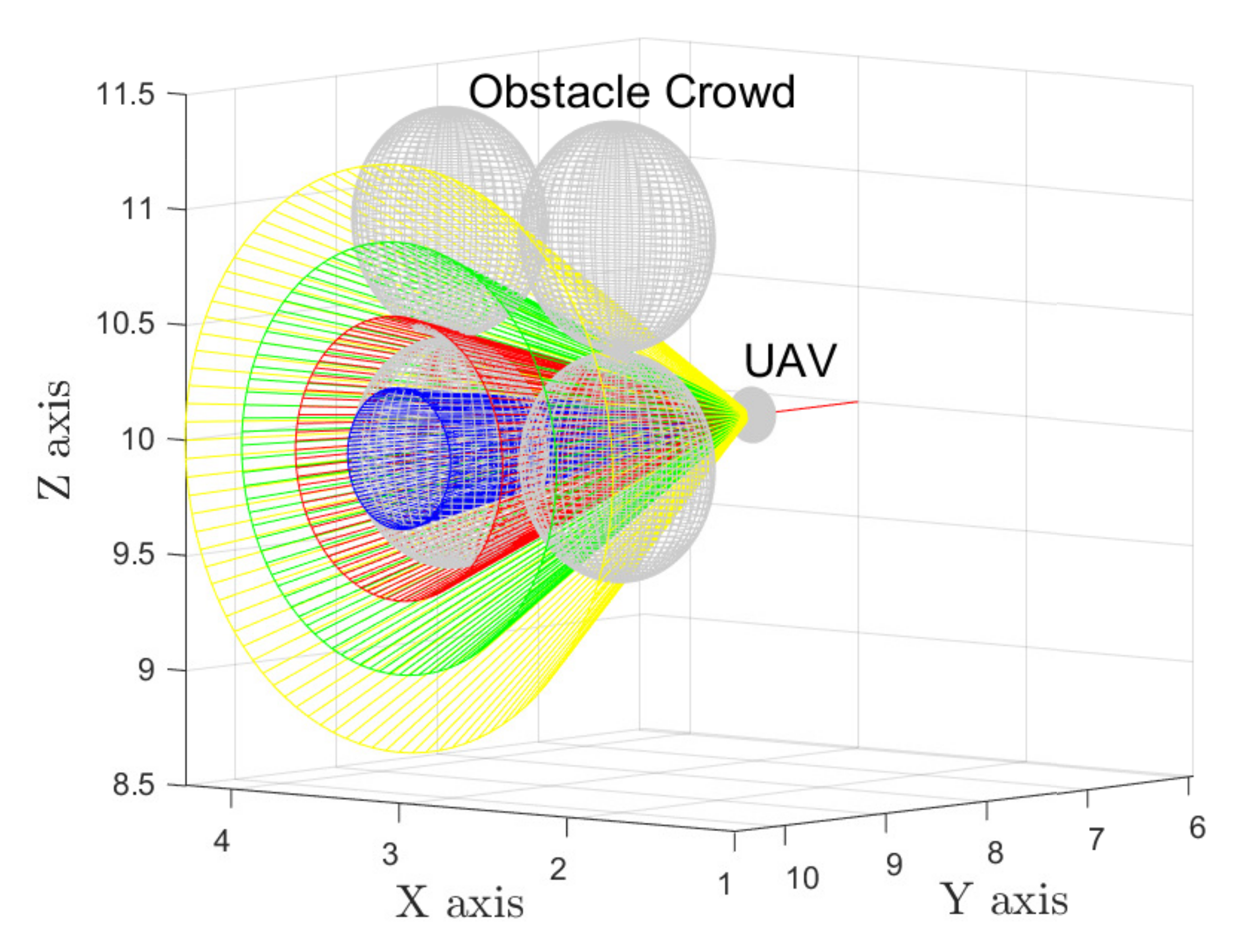

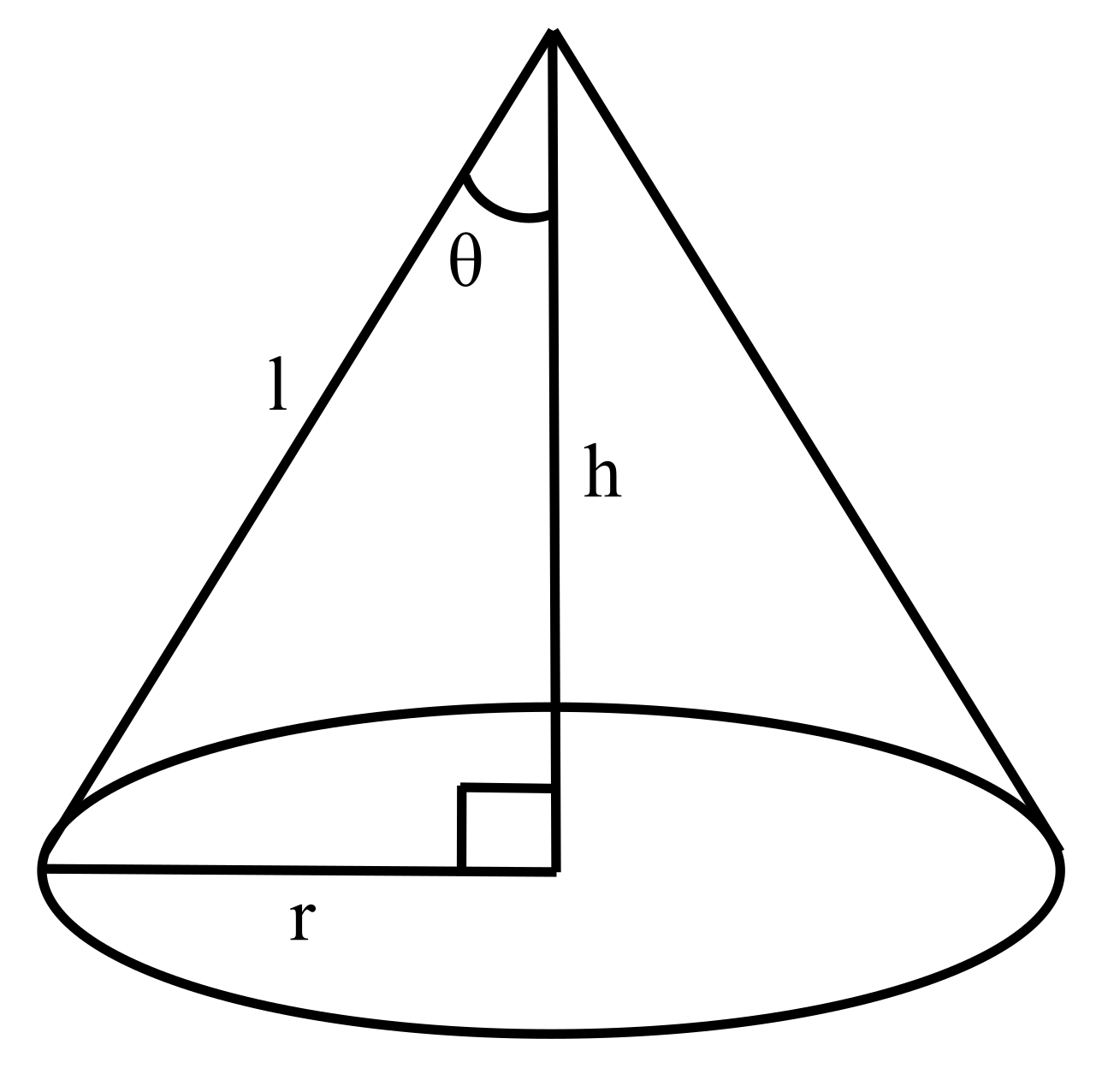

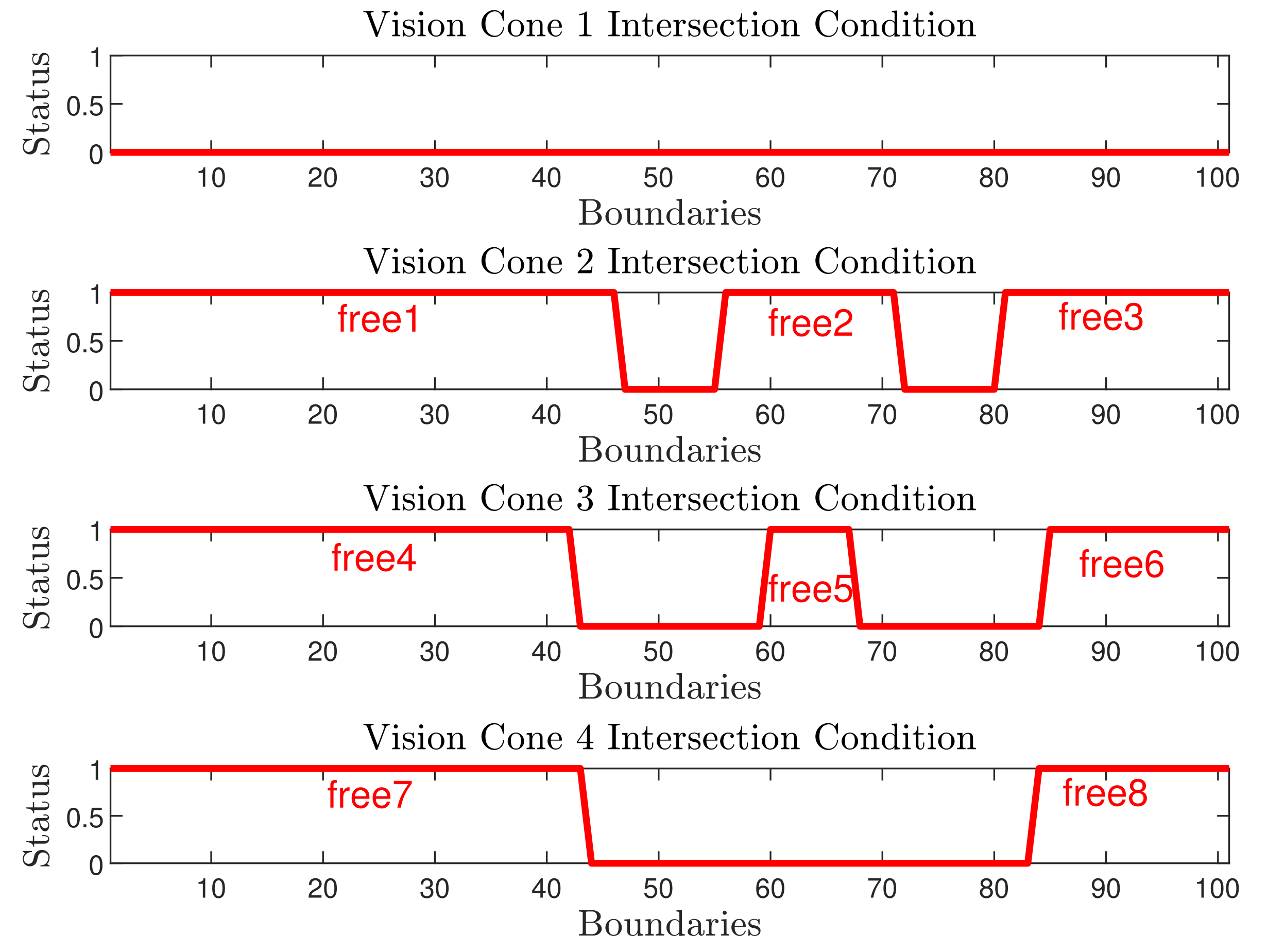

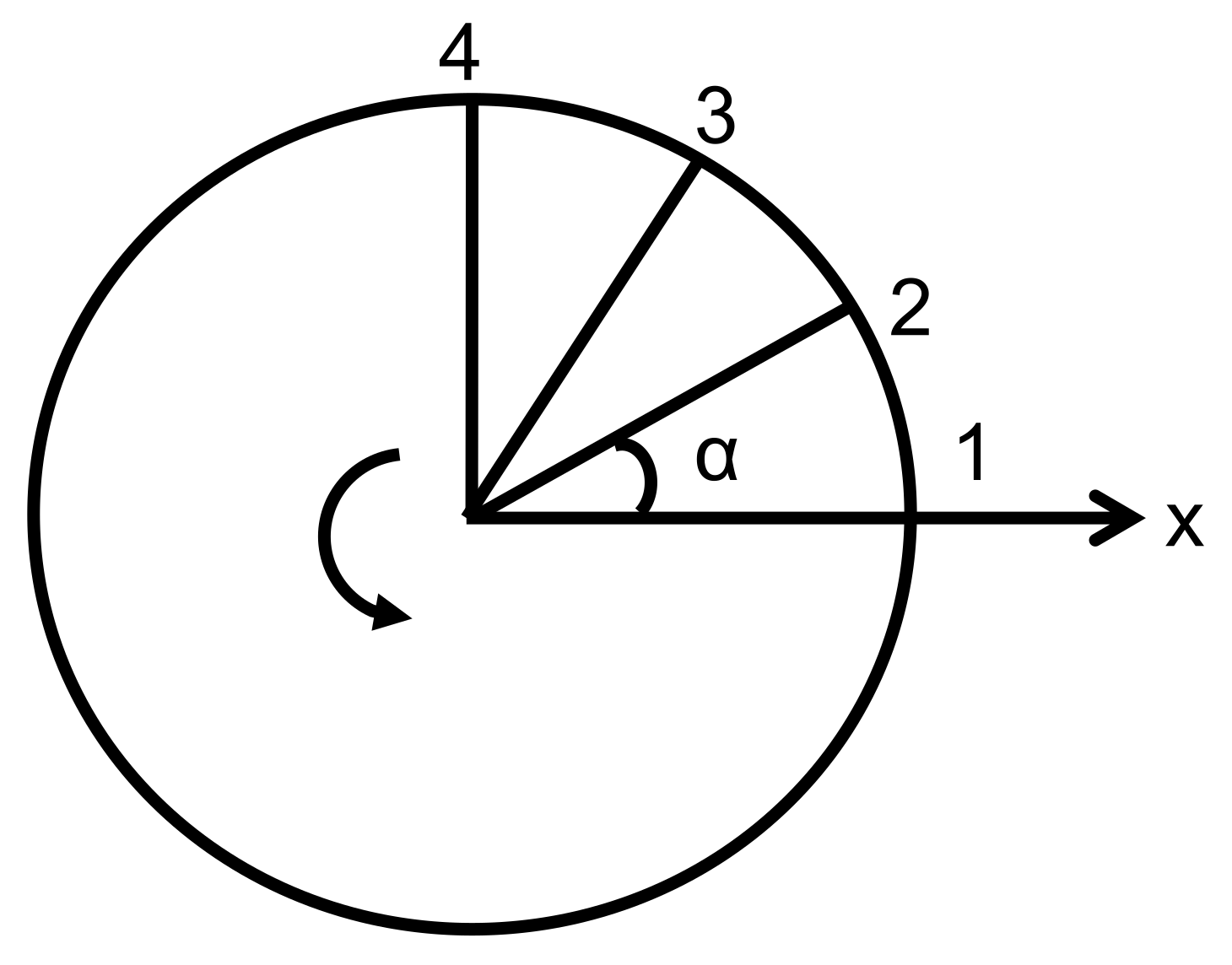

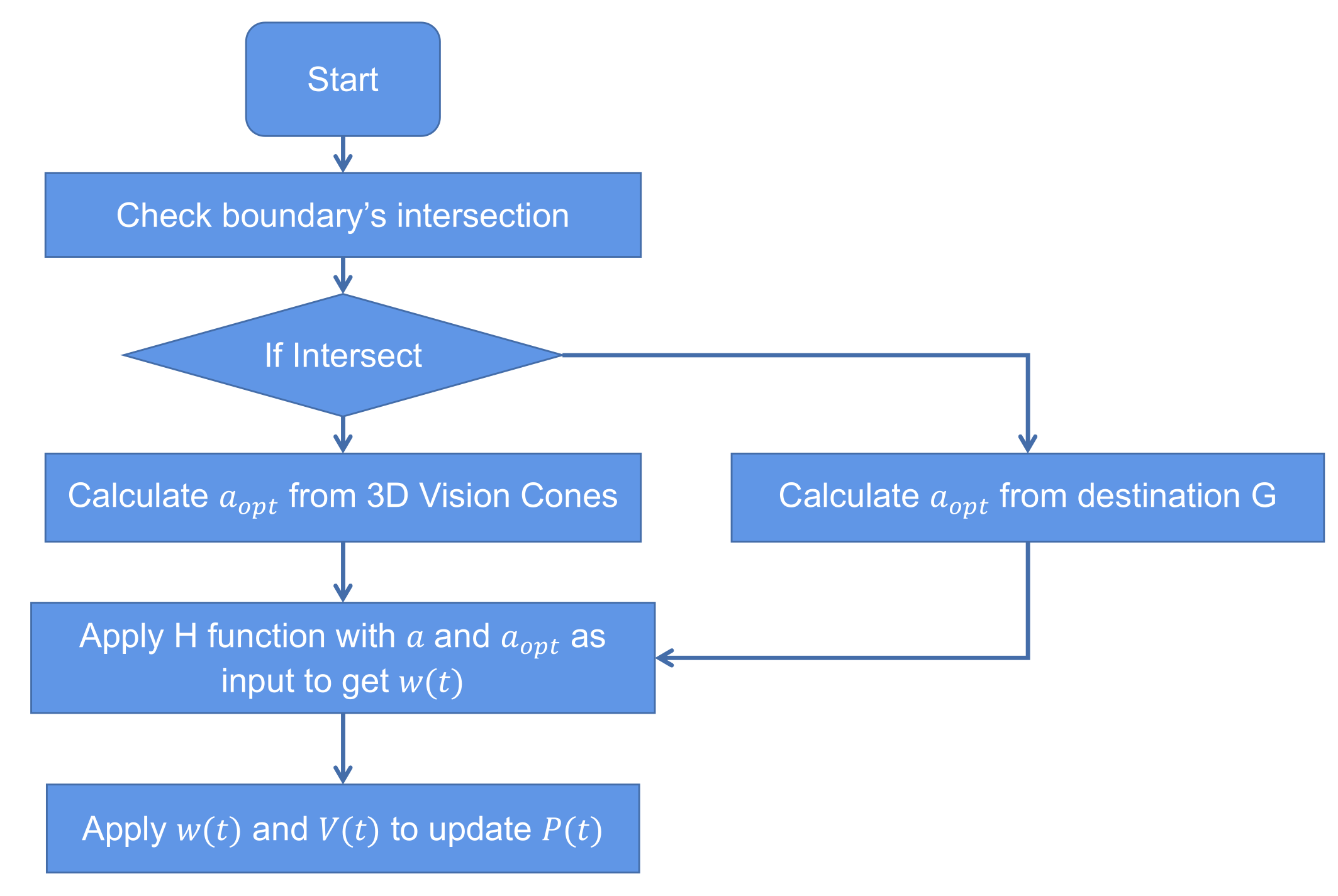

4. 3D Vision Cone-Based UAV Navigation Algorithm

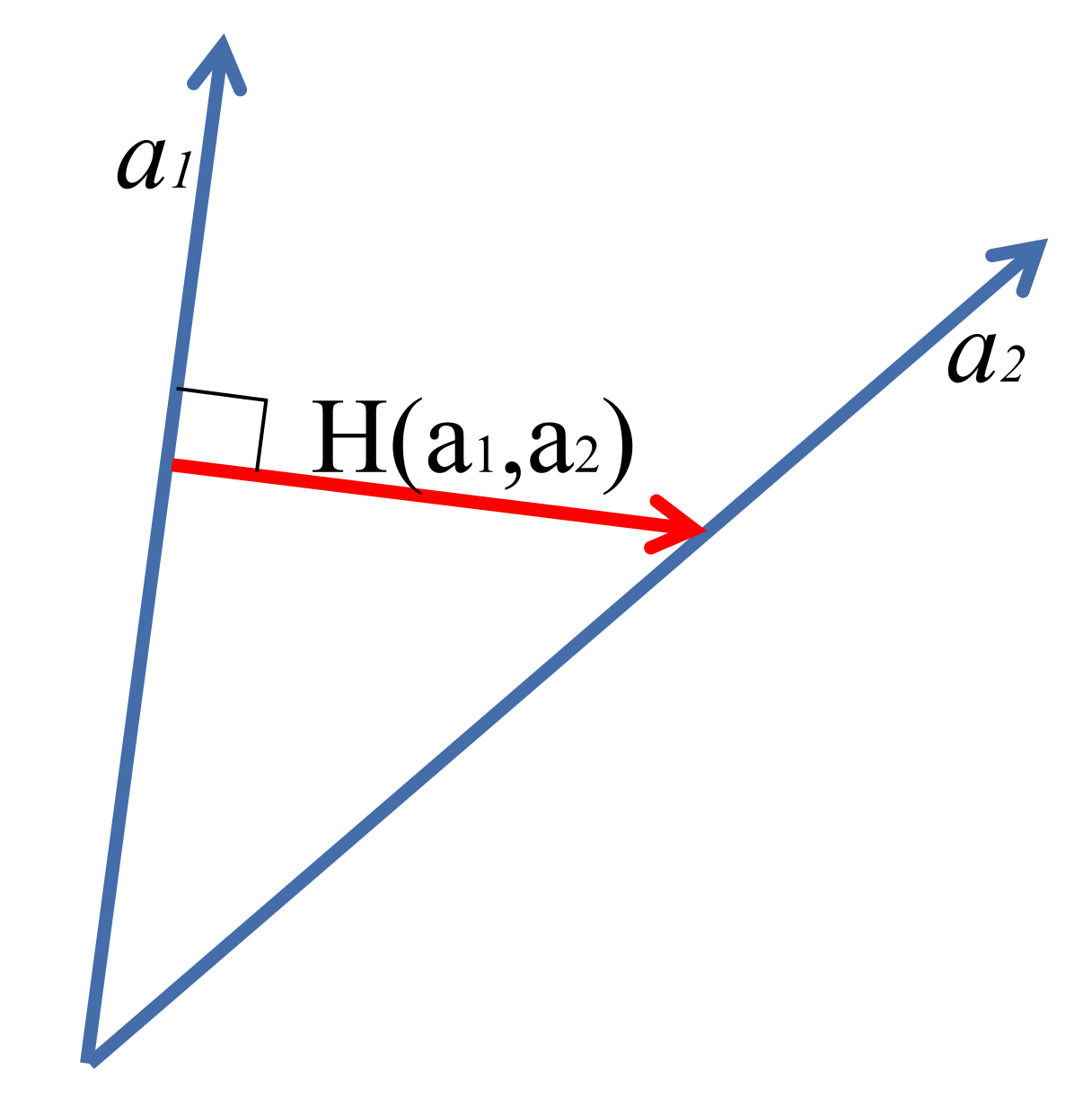

4.1. 3D Vision Cone-Based Obstacle Avoidance Control Law

4.2. Destination Reaching Control Law

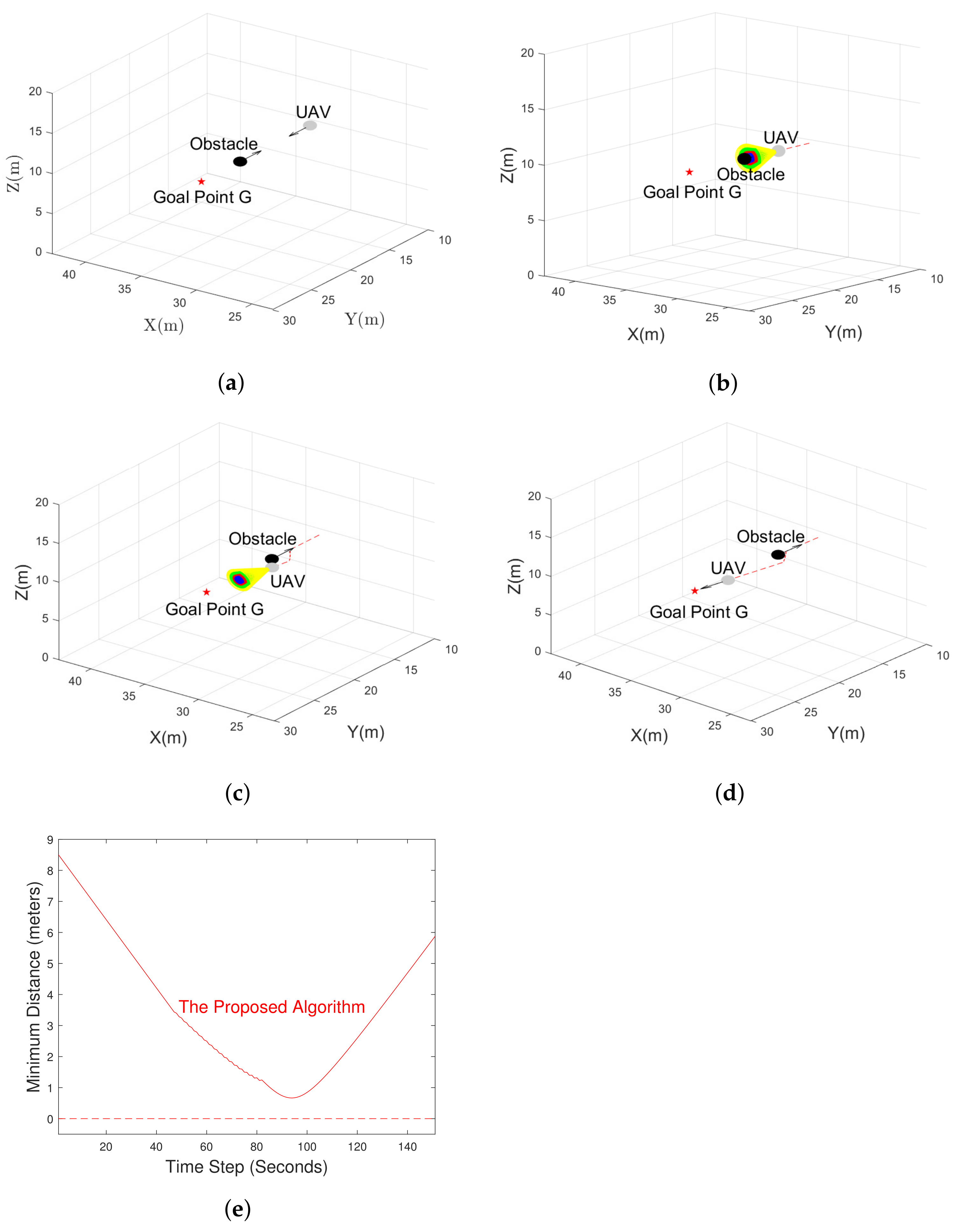

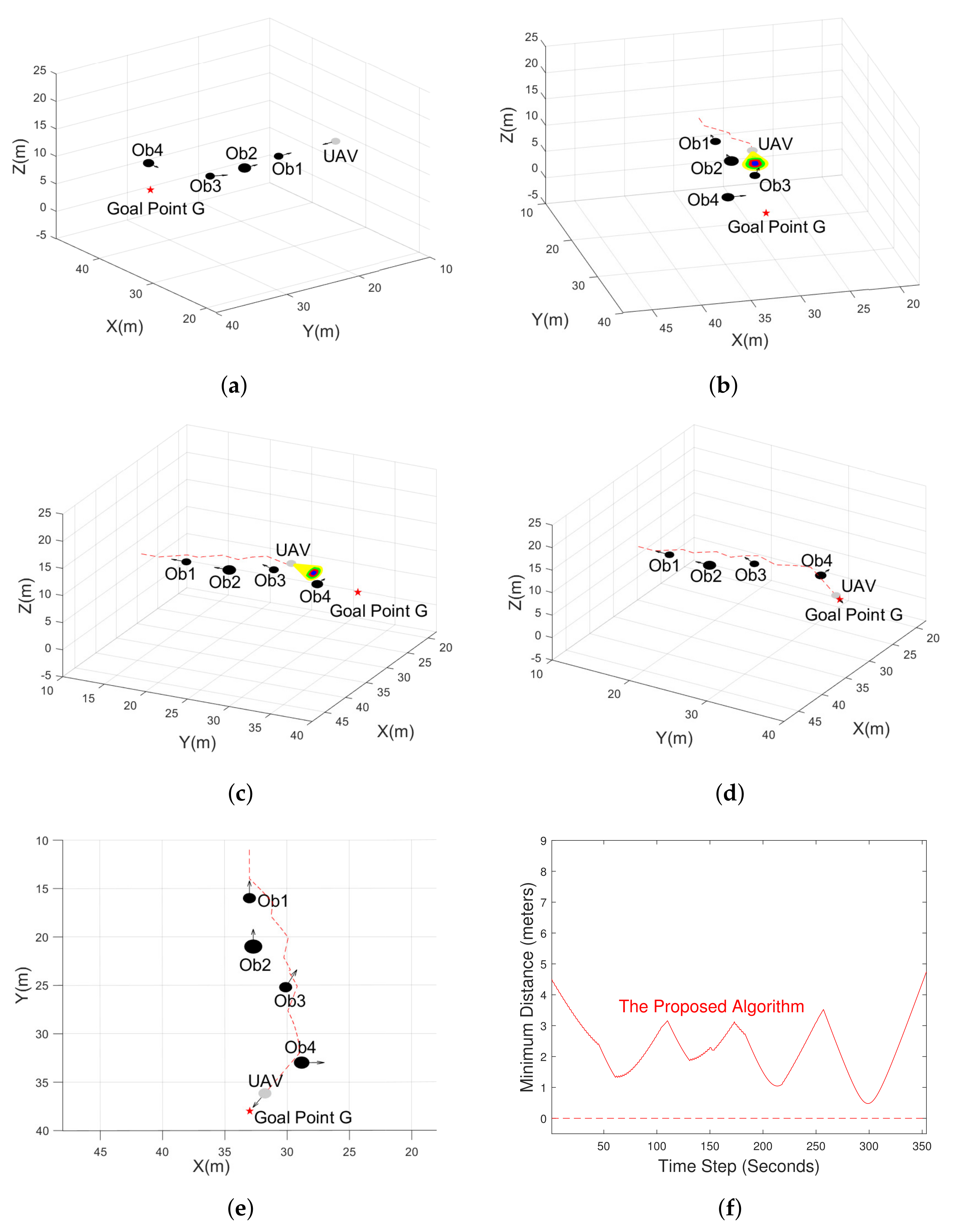

5. Computer Simulation Results

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Nomenclature

| Description | Symbol |
| --- | --- |
| UAV’s current motion direction. | |
| UAV’s optimal motion direction generated from the 3D vision cones. | |
| UAV’s Cartesian coordinates. | |
| Scalar linear velocity of the UAV. | |
| 3D velocity vector of the UAV. | |
| Linear velocity of the i-th obstacle. | |
| Maximum linear velocity, determined by the performance of the UAV. | |
| Minimum linear velocity, determined by the performance of the UAV. | |
| Maximum linear velocity that obstacles can reach. | |
| Control signal applied to change the value of . | |
| Maximum control effort, determined by the performance of the UAV. | |
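The nomenclature lists the quantities of a 3D kinematic model (position, unit motion direction, bounded scalar speed, bounded control effort) without stating the equations. As an assumption not taken from this text, a model commonly used for this kind of nonholonomic 3D navigation is dp/dt = v·a and da/dt = u, where a is a unit vector, u is orthogonal to a, |u| ≤ u_max and v_min ≤ v ≤ v_max; this presumes the control signal acts on the motion direction. The Python sketch below advances such a model by one explicit Euler step while enforcing these bounds. The function `step` and its parameter names are hypothetical.

```python
import numpy as np


def step(p, a, v, u, dt, v_min, v_max, u_max):
    """One explicit Euler step of the assumed model
    dp/dt = v * a,  da/dt = u,  |a| = 1,  u orthogonal to a,  |u| <= u_max."""
    p = np.asarray(p, dtype=float)
    a = np.asarray(a, dtype=float)
    u = np.asarray(u, dtype=float)
    v = float(np.clip(v, v_min, v_max))  # enforce the speed bounds
    u = u - np.dot(u, a) * a             # keep only the part of u orthogonal to a
    norm_u = np.linalg.norm(u)
    if norm_u > u_max:
        u = u * (u_max / norm_u)         # saturate the control effort
    p_next = p + dt * v * a              # position moves along the current direction
    a_next = a + dt * u                  # direction turns under the control
    a_next /= np.linalg.norm(a_next)     # re-normalise to keep a unit direction
    return p_next, a_next, v
```

Calling `step` repeatedly with a control produced by a navigation law yields a simulated trajectory. The orthogonal projection reflects the assumption that the control only rotates the direction, while the speed is set separately through v.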

Abbreviations

| Abbreviation | Meaning |
| --- | --- |
| UAV | Unmanned Aerial Vehicle |
| MPC | Model Predictive Control |
| PID | Proportional-Integral-Derivative |
| SMC | Sliding Mode Control |
| RL | Reinforcement Learning |
| CQL | Classic Q-Learning |
| BPN | Backpropagation Neural Network |
| DNN | Deep Neural Network |
| CNN | Convolutional Neural Network |
| RCNN | Region-Based Convolutional Neural Network |
| NQL | Neural Q-Learning |
| DQN | Deep Q-Network |
| ODN | Object Detection Network |
| DRL | Deep Reinforcement Learning |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ming, Z.; Huang, H. A 3D Vision Cone Based Method for Collision Free Navigation of a Quadcopter UAV among Moving Obstacles. Drones 2021, 5, 134. https://doi.org/10.3390/drones5040134