Abstract

Recent developments in technology and data processing for Unoccupied Aerial Vehicles (UAVs) have revolutionized the scope of ecosystem monitoring, providing novel pathways to fill the critical gap between limited-scope field surveys and limited-customization satellite and piloted aerial platforms. These advances are especially ground-breaking for supporting management, restoration, and conservation of landscapes with limited field access and vulnerable ecological systems, particularly wetlands. This study presents a scoping review of the current status and emerging opportunities in wetland UAV applications, with particular emphasis on ecosystem management goals and remaining research, technology, and data needs to even better support these goals in the future. Using 122 case studies from 29 countries, we discuss which wetland monitoring and management objectives are most served by this rapidly developing technology, and what workflows were employed to analyze these data. This review showcases many ways in which UAVs may help reduce or replace logistically demanding field surveys and can help improve the efficiency of UAV-based workflows to support longer-term monitoring in the face of wetland environmental challenges and management constraints. We also highlight several emerging trends in applications, technology, and data and offer insights into future needs.

Keywords:

wetland; unoccupied aerial vehicle; UAV; UAS; drone; management; conservation; restoration; monitoring; high spatial resolution

1. Introduction

Unoccupied aerial vehicles (UAVs) have emerged in the global remote sensing community as small, flying robots that can access dangerous or remote regions, capture high-resolution imagery, and facilitate environmental monitoring and research ranging from broad applications in agricultural management [1] to specialized marine mammal behavioral ecology [2]. UAVs are beneficial to environmental monitoring because they bridge the constraints in complex, dynamic, limited-access environments that historically have been challenging to survey [3]. Furthermore, they reduce the amount of time and labor expended on surveying and sampling on the ground [4,5,6,7], providing time for targeted managerial activities that may otherwise be overlooked, such as restoration assessments [8,9,10,11]. In recent years, technological advances in UAV instruments such as greater spectral complexity via LiDAR and multispectral sensors and increased volumetric estimations from Structure from Motion (SfM) photogrammetric techniques have transformed applications of UAVs in environmental research [12]. Newer UAV applications in environmental research involving hyperspectral sensing have also expanded species-related analyses such as invasive plant evaluations [13] and mangrove species detection [14,15], although sensor costs and storage capacity limitations impact the opportunities for hyperspectral studies to become ubiquitous in current research [16]. While many UAV-based environmental projects center on terrestrial analyses (e.g., [17]), aquatic research has become a recent frontier in UAV studies (e.g., [18]). As hydrological regimes continue to shift with climate change [19], UAVs will prove to be critical tools in the monitoring and management of freshwater and marine ecosystems around the world.

Wetland ecosystems present a particularly important and interesting case for UAV applications. Globally threatened and disappearing at alarming rates, wetlands provide critical ecosystem services such as hydrological regulation, sequestration of carbon and mitigation of sea level rise, and support of biodiversity and critical habitats at land-water interfaces [20,21,22]. Protecting and amplifying these services via restoration, conservation and management measures often requires studying and monitoring wetlands at a landscape scale; however, the scope of wetland field surveys is frequently limited by difficult access, hazardous field conditions and the risk of disturbing sensitive plant and animal species [23,24,25]. These challenges are amplified in diverse and heterogeneous wetlands spanning complex topographic and hydrological gradients, which may require intensive surveys with large numbers of sampling locations [26,27,28,29].

UAVs are uniquely positioned to cost-effectively overcome these challenges at local observation scales via spatially comprehensive coverage, customizable flight schedules, and diverse sensor instruments for specific applications [18,30,31,32,33]. The high spatial resolution of UAV-derived imagery makes them an especially valuable surrogate for field assessments by enabling visual recognition of landscape elements and evaluation of multiple indicators of wetland habitat and ecological status (e.g., [7,34,35,36]). At the same time, some essential aspects of UAV workflows pertaining to in situ infrastructure—e.g., positioning of launching and landing sites, installation of georeferencing markers, among others—may be challenged by wetland landscape properties and accessibility similarly to ground surveys. These considerations may affect the scope of research questions and application goals that can be supported by UAV technology in a given wetland context. Understanding these opportunities and constraints is thus highly important for guiding decisions about UAV use, selecting the appropriate instruments and optimizing their application workflows to maximize their informative value, efficiency, and safety.

In response to these needs, the overarching goal of this review is to assess current scope and emerging directions in UAV applications in wetlands with particular emphasis on research applications relevant to ecosystem management and monitoring. Other researchers have explored similar topics involving the use of UAVs in aquatic ecology, wetland identification, and hydrologic modeling [2,30,37]. Vélez-Nicolás et al. [38] conducted a literature review on UAV applications in hydrology and selected 122 research papers for analysis, while Jeziorska [37] explored UAV sensors and cameras for a broad array of applications, and coupled this with a focus on 20 highlighted research papers pertaining to wetland and hydrological modeling. Mahdianpari et al. [30] examined wetland classification studies from 1980–2019 across North America using a variety of remote sensing techniques, and found only four UAV-based studies, all of which took place in Florida. Our study both complements and expands on these findings by reviewing research in management categories and detailing methods specific to wetland mapping, modeling, change detection, and new methods. We also provide insight on environmental management applications, future research opportunities, and data replicability. We focus on the following specific questions: 1) What the current state of UAV applications in wetlands is and what types of management goals and needs they respond to; 2) What emerging opportunities in approach, technology, and data are evident and what frontiers these opportunities open for wetland science, restoration, conservation and management; and 3) What barriers and wetland-specific constraints limit the scope of UAV use and what considerations and future research could strengthen the ability of these tools to support wetland monitoring and management in the face of field challenges.

2. Scoping Literature Review

2.1. Literature Selection

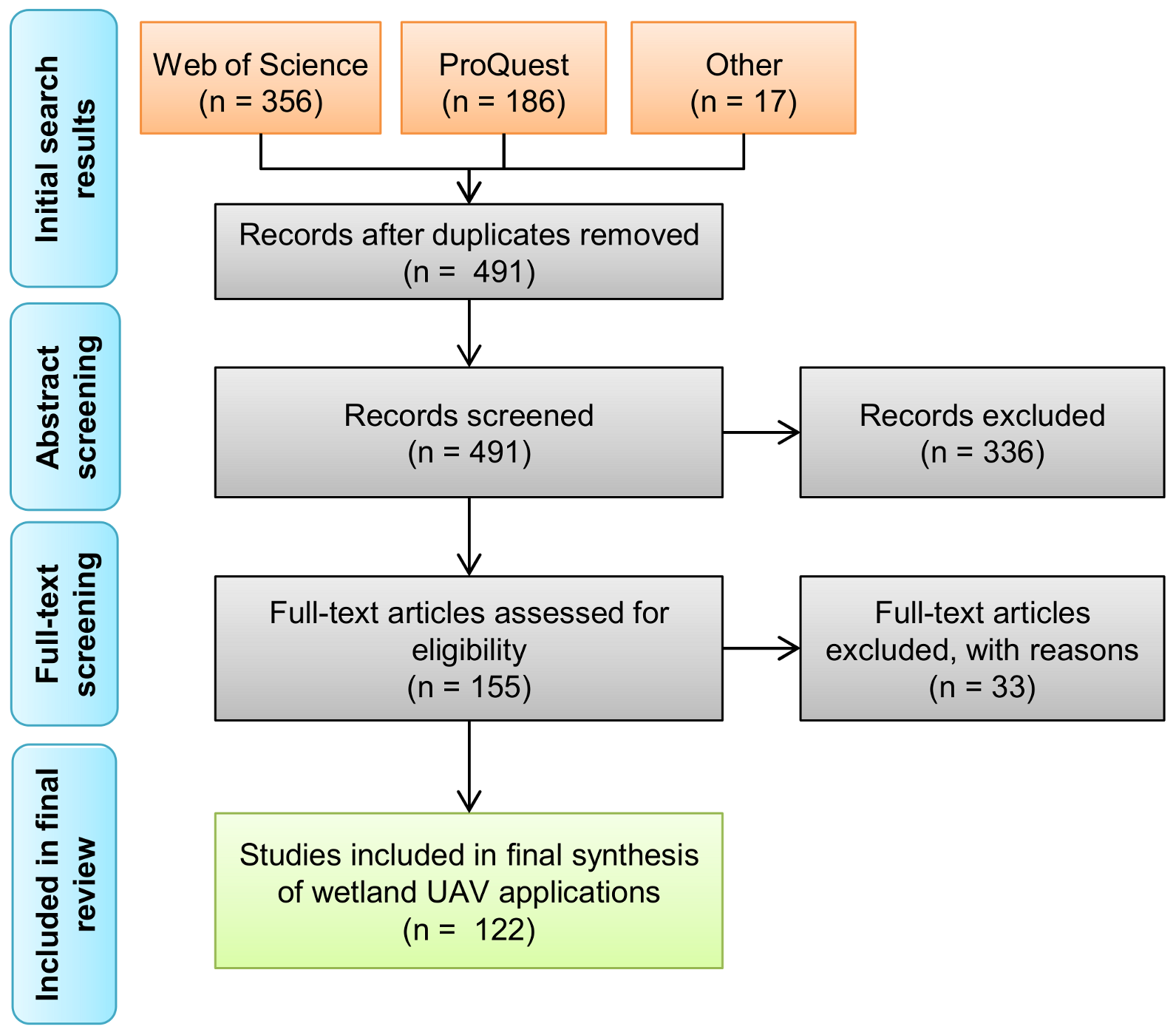

Our scoping synthesis focused on peer-reviewed studies published before 6 March 2021. We performed a literature search on the Thomson Reuters Web of Science and ProQuest databases using topic keyword combinations such as ("unmanned aerial" or "uninhabited aerial" or "unoccupied aerial" or UAV* or UAS or drone*) AND (wetland* or marsh* or swamp or estuary or estuarine or coastal or riparian or floodplain* or bog) AND (restoration or conservation or management), and included 17 additional papers identified via a Google Scholar search and other literature. After removing duplicates among the search engines, we screened paper abstracts, titles, and keywords to include research that represented case studies involving UAV applications in wetland settings, considering both natural wetland types [30,39] and man-made wetlands. At this stage, we excluded review, opinion-style, and other papers that did not present UAV applications as case studies, leading to a pool of 155 candidate papers (Figure 1). However, we retained the papers in which the abstract, title, and/or keywords did not provide sufficient information about case study specifics and reviewed those via full-text assessment.

Figure 1.

Structure and workflow of the scoping literature review, following the PRISMA Group recommendations (Liberati et al., 2009, doi:10.1371/journal.pmed1000097).

Next, we performed a full-text screening of the remaining papers and further excluded studies in which UAVs were mentioned but not used, as well as one study that used UAVs to support other remote sensing data analyses without providing any specific detail on the UAV instrument, its operation, or data processing. We also excluded papers in which the studied landscapes potentially included wetlands by description, but the UAV-related analyses and information extraction did not cover wetlands (e.g., studies of non-wetland coastal geomorphology). Finally, we reviewed instances in which multiple papers were published by the same leading author or team based on the same UAV data acquisitions. Such papers were treated as one broader study in our reviewed pool, unless they followed different research objectives and accordingly applied different methodologies of data processing or extracted different types of information from the data. This led us to select a final pool of 122 papers (Figure 1). From these selected studies, we extracted information about wetland type, study location, and targets of UAV surveys, as well as information about UAV platform and sensor instruments, flight logistics, and data processing, when applicable or available.

2.2. Geographic and Technical Characteristics of the Reviewed UAV Applications

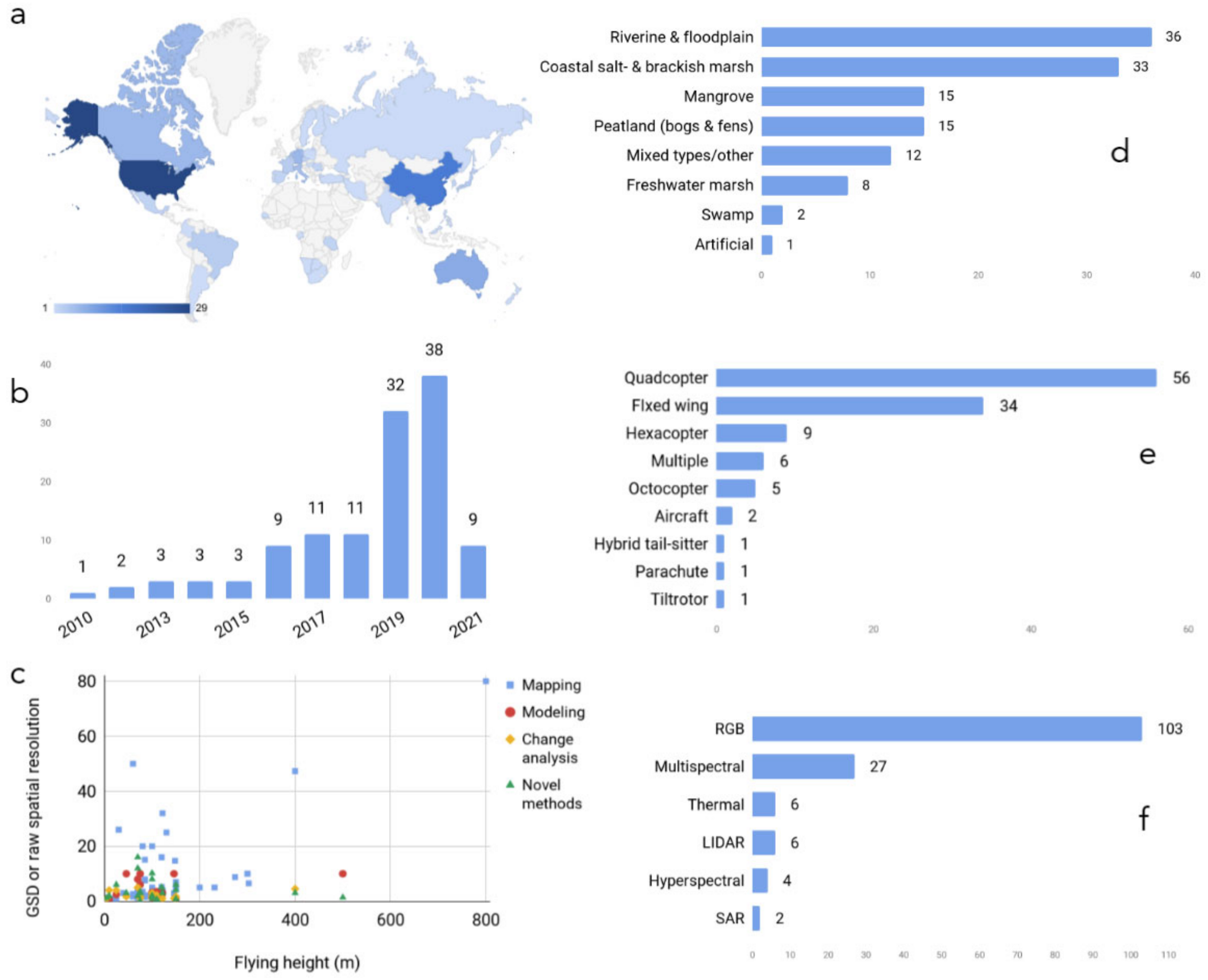

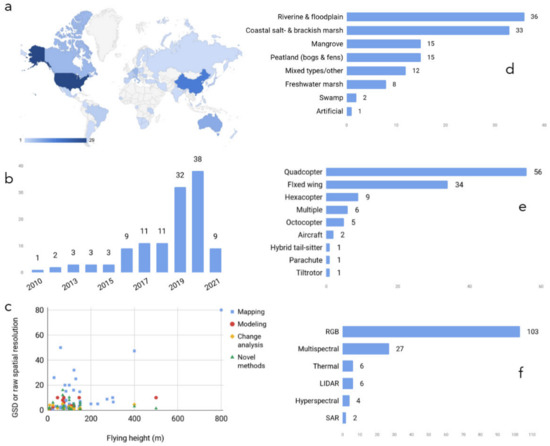

Of the 122 studies we analyzed, most were conducted in riparian environments using RGB imagery on quadcopter UAVs. The studies came from six continents and focused on a wide range of wetland environments (Figure 2). The annual number of publications has increased over the main part of the study period (January 2010 to December 2020). The majority of studies took place in riverine and floodplain (n = 36), coastal salt and brackish marsh (n = 33), mangrove (n = 15), and peatland (bogs and fens) (n = 15) ecosystems. The most represented countries were the United States (n = 29), China (n = 15), Australia (n = 8), Germany (n = 6), Canada (n = 6), and Italy (n = 5). There was a clear dominance of RGB sensors used alone or with other sensors (n = 103), followed by multispectral sensors used alone or in combination with other sensors (n = 27), and most studies used quadcopters (n = 56), followed by fixed-wing vehicles (n = 34). Study areas, which were derived from the study area map when not stated directly in the text, ranged from 0.2 ha to 44,447 ha, with a median of 40 ha. Flights were conducted at altitudes between 5 and 800 m, with 100 m above ground level as both the median and the most commonly flown altitude. Ground sampling distance (GSD) varied between 0.55 cm and 80 cm; however, several studies aggregated UAV data to pixel sizes larger than GSD, often to match other coarser-resolution datasets used in their analyses.
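The dependence of GSD on flying height noted above follows from the standard pinhole-camera relation for nadir imagery over flat terrain. The following is a minimal sketch of that relation, not a procedure taken from any reviewed study; the function name and the camera parameters in the example (13.2 mm sensor width, 8.8 mm focal length, 5472-pixel image width) are hypothetical values chosen only for illustration.

```python
def ground_sampling_distance_cm(altitude_m, sensor_width_mm,
                                focal_length_mm, image_width_px):
    """Approximate GSD in cm/pixel for a nadir-pointing frame camera.

    Assumes flat terrain and altitude measured above ground level.
    """
    inputs = (altitude_m, sensor_width_mm, focal_length_mm, image_width_px)
    if min(inputs) <= 0:
        raise ValueError("all inputs must be positive")
    # The millimetre units cancel, leaving metres of ground per pixel.
    gsd_m = (altitude_m * sensor_width_mm) / (focal_length_mm * image_width_px)
    return gsd_m * 100.0  # convert metres to centimetres


# Hypothetical camera flown at 100 m above ground level (about 2.7 cm/pixel).
example_gsd = ground_sampling_distance_cm(100.0, 13.2, 8.8, 5472)
```

Because the relation is linear in altitude, halving the flying height halves the GSD for the same camera, which is one reason low-altitude flights are attractive where fine spatial detail matters.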

Figure 2.

Summary of reviewed studies: (a) map of publications; (b) publication year, (c) flying height and ground sampling distance (GSD), (d) survey type, (e) type of UAV, and (f) UAV sensor.

RGB sensors were the most commonly used sensors throughout the reviewed studies (Figure 2f), either alone or in combination with another sensor (n = 103). These sensors are popular because they are inexpensive and typically provided as the native camera on a UAV; RGB orthophotographs were used in numerous vegetation mapping cases (e.g., [40,41,42,43,44,45]). Multispectral sensors were also prevalent in wetland studies (n = 27), as they are useful in mapping shallow environments (down to about 1 m underwater) [46] and for vegetation health assessments [27,47,48]. Only six cases used thermal data, six used LiDAR, and four used hyperspectral sensors, although this will likely change as these sensors become smaller and less expensive. Among other instruments, synthetic aperture radar (SAR) is common in remote sensing of wetlands involving satellite and aircraft data [49], particularly for flood mapping and in regions with high cloud density [50,51]. However, SAR was not as common within wetland UAV studies (n = 2) because it is expensive, is more complex to process than optical imagery, and does not have as high a spatial resolution as other sensors such as RGB, multispectral, or LiDAR [52].

One of the most common methods used throughout the reviewed papers is Structure from Motion (SfM) using RGB imagery. This photogrammetric technique can be employed to estimate the volume or height of vegetation in the study area [29,48,53,54,55,56,57,58]. SfM is similar to LiDAR in that it generates point clouds for volumetric estimations, although the cameras it relies on are cheaper and lighter than current LiDAR counterparts [59]. However, as LiDAR is an active technology with crown-penetrating capability, the resulting point clouds are denser and provide more volumetric structure than do those from SfM [60]. A potential solution to this tradeoff is combining LiDAR data with SfM data [61]. Combined LiDAR and SfM methods can be used not only in vegetation inventory studies, as demonstrated in this review, but also in surface water and flood detection analyses [38] and in characterizing morphological features [35].

Several flight planning apps and processing software packages were used throughout the reviewed studies. These include mission planning apps such as Pix4DCapture [62,63], Map Pilot App [64,65], Litchi [58,66], UgCS [11], and Autopilot for DJI [67]. Most researchers used AgiSoft PhotoScan processing software for SfM and orthomosaic stitching [11,25,61,65,68]; other tools included Pix4DMapper, ArcGIS, QGIS, eCognition, R, Python, MATLAB, LAStools, and LiDAR360. The most common data products derived from these apps and software packages were orthomosaics (n = 105), which can serve multiple purposes and users and can then be post-processed through a variety of methods including manual digitization, simple classification, and machine learning algorithms. Generally, software falls into two categories: desktop and cloud-based options. Desktop tools provide more options and customizability but require powerful computing hardware. Cloud-based options remain more limited, but in addition to computing power, they offer advantages in ease of file sharing and collaboration, file storage, and data archiving [8].

Image classification techniques often involved using Esri or AgiSoft software, with occasional classifications made in Python [56], Google Earth Engine [36], eCognition [10,14,24,27,29,42,63,69,70,71,72], and ENVI [13,14,73] software. Vegetation classification often used Random Forest algorithms [23,27,55,69,74,75,76], and there was a sizable cluster of studies using object-based image analysis (OBIA) [23,24,29,69,70,77,78]. A recent study used deep learning algorithms such as convolutional neural network architectures to classify coastal wetland land cover [79]. Other common data products relevant to the scope of this paper involved derived outputs from SfM: digital terrain models (DTMs), digital surface models (DSMs), canopy height models (CHMs), and aboveground biomass (AGB) of vegetation (n = 72 studies).
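The relationship among the SfM-derived products listed above can be illustrated with the common definition of a canopy height model as the cell-by-cell difference between a DSM and a DTM. The sketch below is an assumption-laden illustration in plain Python rather than the workflow of any reviewed study: the function name is hypothetical, rasters are represented as nested lists of elevations in meters, `None` marks missing cells, and clipping negative differences to zero is a simplifying choice.

```python
def canopy_height_model(dsm, dtm):
    """Return CHM = DSM - DTM per cell, with negatives clipped to 0.

    dsm, dtm: equally sized nested lists of elevations (m); None = no data.
    """
    if len(dsm) != len(dtm):
        raise ValueError("DSM and DTM must have the same number of rows")
    chm = []
    for surface_row, terrain_row in zip(dsm, dtm):
        if len(surface_row) != len(terrain_row):
            raise ValueError("DSM and DTM rows must have the same length")
        chm_row = []
        for surface, terrain in zip(surface_row, terrain_row):
            if surface is None or terrain is None:
                chm_row.append(None)  # propagate missing data
            else:
                # Small negative heights usually reflect model noise
                # (an assumption), so they are clipped to zero here.
                chm_row.append(max(surface - terrain, 0.0))
        chm.append(chm_row)
    return chm


# Toy 2 x 2 rasters with made-up elevations.
toy_chm = canopy_height_model([[2.5, 3.0], [1.0, None]],
                              [[1.0, 1.0], [1.2, 0.5]])
```

In practice both rasters would need to share the same grid, extent, and vertical datum before differencing; those alignment steps are omitted here.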

3. Wetland Management Applications and Goals

3.1. Broad Management Goals in Wetland UAV Applications

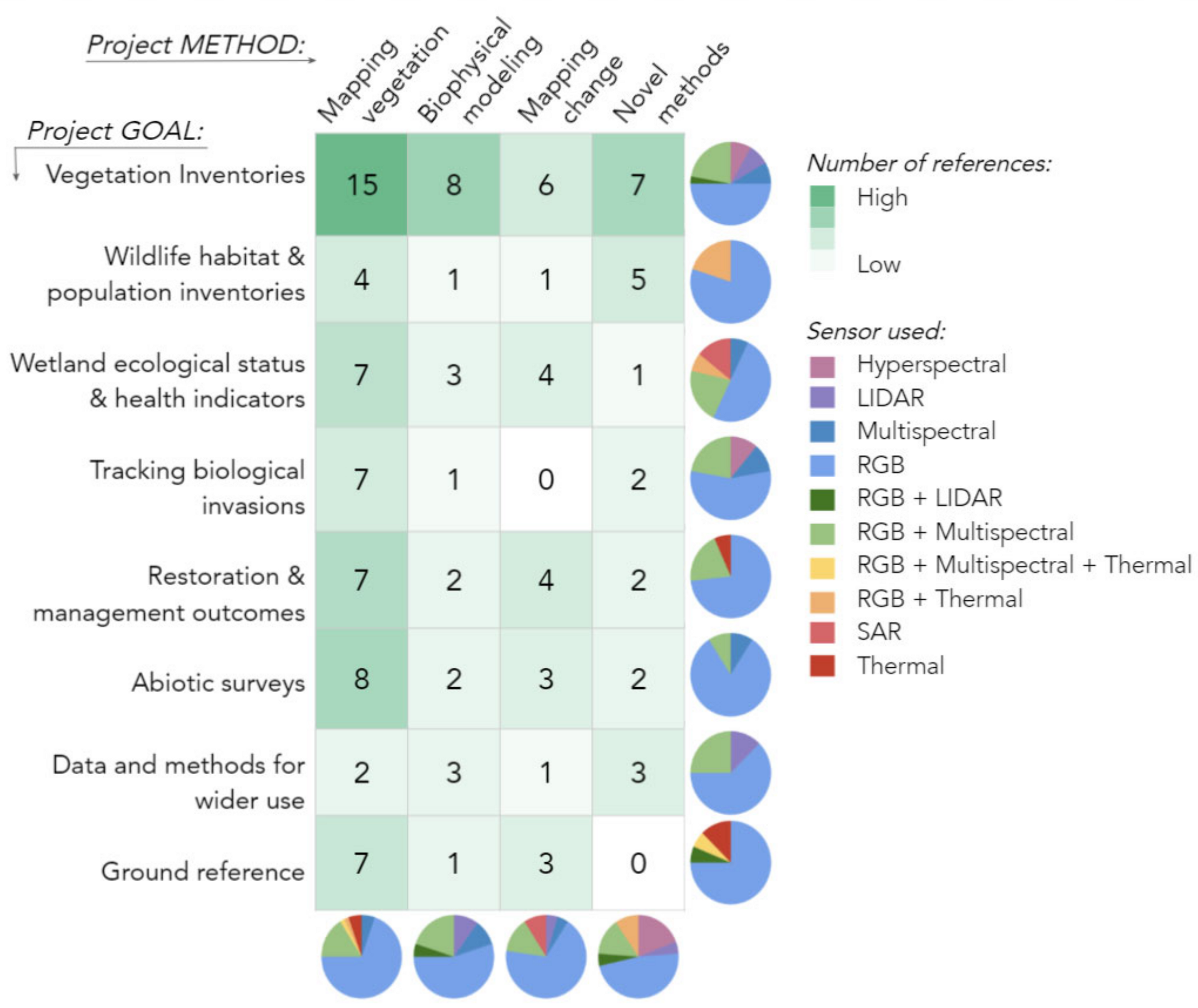

After selecting 122 studies for review, we further categorized the papers by their primary project goal (Figure 3, Table 1) and application of UAV technology to better understand the choice of UAV instruments, survey logistics, and methods of data analysis. Specifically, we differentiated baseline inventories of vegetation from studies focusing on invasive plant species and UAV-derived indicators of wetland ecological status and health that could focus on cover types beyond vegetation (Table 1A). We also distinguished studies of restoration and management outcomes because specific goals of such interventions could affect both the choice of UAV workflows and the information extracted from their data. Wildlife habitat and population inventories were also treated as a separate group because landscape targets assessed in such applications (e.g., nesting sites, animal individuals) often differed from land cover or floristic targets. As another form of inventory, we also distinguished studies focusing on abiotic factors such as hydrological or geomorphological characteristics.

Figure 3.

Matrix showing project goal and method used, shaded by the number of references reviewed; pie charts show sensor types used for each goal and method category (references for the matrix are provided in Table 1 below).

Table 1.

Distribution of reviewed studies by the primary goal of wetland unoccupied aerial vehicle (UAV) application and primary method category.

Another group included studies producing UAV surveying and/or image analysis methods for wider use, without clearly attributing such data or methods to any of the other goal types and in some cases using their study site as a case study rather than the underlying reason for the UAV application. Finally, we also distinguished the studies using UAV data as a reference for broader-scale analyses with satellite or airborne imagery. Although UAVs were typically not the focal tool in such analyses, their applications were often described in detail as a case for a cost-effective source of training and validation datasets for other remote sensing products.

Given that many of the papers in our pool included assessment of wetland land cover, vegetation, and geomorphology (e.g., terrain) in some form, a degree of overlap among the categories in Table 1A was expected. In cases where the paper fit in more than one category, we assigned it a lead category and addressed the overlap in the text. To understand goal-specific analyses in more detail, we further subdivided the studies for each goal by their method: (1) mapping, focusing predominantly on plant delineation, species identification, or habitat classification from UAV imagery; (2) modeling, i.e., studies predicting environmental or biophysical wetland properties (e.g., vegetation biomass) based on UAV data and empirical measurements (typically from temporally matching field surveys); (3) change analysis over time, and (4) novel methods, which included studies developing UAV survey protocols and ecosystem monitoring workflows without an explicit goal of mapping, modeling, or change analysis.

The overall distribution of studies among project goals and methods shows a strong prevalence of vegetation inventory applications, most of which focused on mapping (Figure 3). Similarly, mapping was the leading type of analysis in abiotic inventories, restoration and management assessments, and studies of wetland ecological health, which were the next three most prevalent goals (Figure 3). Interestingly, despite the obvious appeal of UAVs for monitoring wetland change, such applications were relatively few, accounting for less than one-third of studies for any of the major goals. Nevertheless, many assessments of single-date imagery acknowledged the importance of change detection in their discussion sections, commenting on the utility of monitoring over time. Modeling of vegetation or ecosystem parameters from UAV data was also less common than mapping; however, our pool included examples of modeling studies for every major goal except abiotic factor inventories (Figure 3). A similar number of studies focused on UAV surveying workflows and logistics, which were present in almost all goals except the use of UAVs as reference data. Specific applications focusing on these project goals and analysis types are discussed in Sections 3.2 through 3.9.

3.2. Vegetation Inventories

Not surprisingly, vegetation mapping dominated the reviewed literature. Nearly one-third of the papers reviewed (n = 36) focused on the use of UAVs for wetland mapping, modeling, change detection, and novel method development. These inventory studies used the largest variety of sensors: RGB, multispectral, hyperspectral, LiDAR, and combinations of these (Figure 3). Thirteen papers focused on case studies mapping wetlands from coarse to fine scales. Rupasinghe et al. [82] and Castellanos-Galindo et al. [80] each highlighted the use of green vegetation indices (e.g., Visible Atmospherically Resistant Index (VARI) and Green Leaf Index) developed from RGB imagery along with a DSM to classify general shoreline land cover and wetland habitat. Two early examples of UAVs for wetland mapping [15,61] showed that the spectral and spatial resolution provided by an RGB orthophoto was sufficient to identify key wetland vegetation features. Zweig et al. [40] were able to use a more automated approach to classify freshwater wetland community types in the Florida Everglades using an RGB orthophoto, and Morgan et al. [41] reported a similar approach for mapping riparian wetland community types. Other case studies incorporated DSM information in classification. For instance, Palace et al. [81] used artificial neural networks (ANN) to classify peatland vegetative cover types in Sweden, while Corti Meneses et al. [83] used a novel RGB vegetation index (i.e., excess green and excess red) and the point geometry from a DSM to classify the density, vitality, and shape of aquatic reed beds in a lake in southern Germany. One paper [85] incorporated thermal bands with RGB for mapping riparian vegetation in the Yongding River Basin in China.
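For readers unfamiliar with the RGB-only indices named above, the sketch below computes VARI, the Green Leaf Index, excess green, and excess red for a single pixel using commonly published formulations. It is illustrative only: the reviewed studies may have used variants of these formulas, the function names are hypothetical, and the reflectance values in the example are made up.

```python
NAN = float("nan")


def _ratio(numerator, denominator):
    """Divide safely, returning NaN where the index is undefined."""
    return numerator / denominator if denominator != 0 else NAN


def vari(r, g, b):
    """Visible Atmospherically Resistant Index: (G - R) / (G + R - B)."""
    return _ratio(g - r, g + r - b)


def green_leaf_index(r, g, b):
    """Green Leaf Index: (2G - R - B) / (2G + R + B)."""
    return _ratio(2 * g - r - b, 2 * g + r + b)


def _chromatic(r, g, b):
    """Normalize bands so that r + g + b = 1 (chromatic coordinates)."""
    total = r + g + b
    if total == 0:
        return NAN, NAN, NAN
    return r / total, g / total, b / total


def excess_green(r, g, b):
    """Excess green on chromatic coordinates: 2g - r - b."""
    rn, gn, bn = _chromatic(r, g, b)
    return 2 * gn - rn - bn


def excess_red(r, g, b):
    """Excess red on chromatic coordinates: 1.4r - g."""
    rn, gn, _ = _chromatic(r, g, b)
    return 1.4 * rn - gn


# Made-up reflectances for one green, vegetated pixel.
pixel = (0.2, 0.5, 0.1)
indices = {
    "VARI": vari(*pixel),
    "GLI": green_leaf_index(*pixel),
    "ExG": excess_green(*pixel),
    "ExR": excess_red(*pixel),
}
```

Applied to every pixel of an orthomosaic, such indices yield additional raster layers that can be passed to a classifier alongside the DSM, which is the general pattern the studies above describe.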

Five papers focused on the use of OBIA to capture multi-scale attributes of wetland mosaics. OBIA is a frequently used set of techniques for extracting individual features from high spatial resolution imagery [147]. The method uses spatial and spectral information to segment an image into semantically and ecologically meaningful multi-scale objects, which are then classified based on numerous decision rules. Because wetlands can be very spatially heterogeneous, often displaying multi-scale patterns of vegetation [148,149], the OBIA framework can be very helpful [150]. Four papers embraced an OBIA workflow to map wetland features. For example, the complex vegetation mosaics found in peatlands and heathlands were mapped using OBIA techniques applied to RGB orthomosaics by Bertacchi et al. [69] and Díaz-Varela et al. [70], respectively. Shang et al. [77] used an OBIA method to classify coastal wetland community types in China. Durgan et al. [29] and Broussard et al. [24] included the DSM product in their OBIA classification of floodplain and coastal marsh vegetation, respectively. Both found that the OBIA method improved classification accuracy, and each reported accuracies up to 85%.
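Classification accuracies such as those quoted above are typically derived from a confusion matrix that compares mapped classes with reference data. The following sketch shows one conventional way to compute overall, user's, and producer's accuracy. The convention that rows hold mapped classes and columns hold reference classes is an assumption, the function names are hypothetical, and the counts are invented for illustration rather than taken from any reviewed study.

```python
def overall_accuracy(matrix):
    """Share of all samples that fall on the diagonal."""
    total = sum(sum(row) for row in matrix)
    if total == 0:
        raise ValueError("confusion matrix is empty")
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total


def users_accuracy(matrix):
    """Per mapped class (row): correct / all samples mapped as that class."""
    return [row[i] / sum(row) if sum(row) else float("nan")
            for i, row in enumerate(matrix)]


def producers_accuracy(matrix):
    """Per reference class (column): correct / all reference samples."""
    size = len(matrix)
    column_totals = [sum(matrix[r][c] for r in range(size))
                     for c in range(size)]
    return [matrix[c][c] / column_totals[c] if column_totals[c]
            else float("nan") for c in range(size)]


# Invented counts for three hypothetical cover classes.
# Rows = mapped class, columns = reference class (assumed convention).
confusion = [
    [48, 4, 3],
    [5, 24, 1],
    [2, 4, 9],
]
accuracy = overall_accuracy(confusion)
```

Reporting user's and producer's accuracy alongside the overall figure helps reveal whether a high overall accuracy masks poor performance on rare classes, which is a common concern in heterogeneous wetland mosaics.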

Because wetland vegetation exhibits high rates of net primary productivity [151,152], and wetland aboveground biomass is an important indicator of carbon storage, productivity, and health, many of the reviewed papers evaluated the use of UAVs for AGB mapping. Several focused on using UAV data to estimate aboveground biomass and on linking spatial AGB estimates to models that helped to scale field data to larger landscapes. Two studies focused on the use of SfM from RGB data [53,87] to estimate biomass. Others linked targeted UAV data with satellite imagery to map biomass, including combining NDVI from multispectral UAV imagery and Landsat imagery [33], UAV-LiDAR and Sentinel-2 imagery [86], and RGB UAV imagery with Sentinel-1 and Sentinel-2 imagery [62]. Two studies [54,67] used SfM with RGB imagery to capture detailed models of riparian vegetation in order to reconstruct physical models of structure and shading properties, while another riverine study [23] used RGB and multispectral orthophotos with an OBIA approach. The latter study first mapped spectrally similar riparian objects and then applied in situ carbon stock estimates to the objects to estimate the entire riparian forest carbon reservoir.
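The general idea of scaling field biomass measurements with a UAV-derived index can be sketched as a simple empirical model: compute NDVI at field plots, fit a line relating NDVI to measured AGB, and apply it elsewhere. This is a deliberately minimal illustration and not the model used in any reviewed study; the function names are hypothetical, the plot values are synthetic, and real applications often use nonlinear or multivariate models with independent validation.

```python
def ndvi(nir, red):
    """Normalized Difference Vegetation Index: (NIR - Red) / (NIR + Red)."""
    denominator = nir + red
    return (nir - red) / denominator if denominator != 0 else float("nan")


def fit_line(x, y):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Synthetic plot data: NDVI at four plots and field-measured AGB (g/m^2).
plot_ndvi = [0.2, 0.4, 0.6, 0.8]
plot_agb = [200.0, 300.0, 400.0, 500.0]
slope, intercept = fit_line(plot_ndvi, plot_agb)


def predict_agb(ndvi_value):
    """Apply the fitted line to a new NDVI value (hypothetical helper)."""
    return slope * ndvi_value + intercept
```

The fitted relationship is only as transferable as the field sample is representative, which is why the studies above pair UAV surveys with temporally matching ground measurements.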

Monitoring vegetation dynamics is critical for management, and six papers described analysis of multi-date UAV missions. Two studies mapped vegetation change as a response to an external stressor [88,92] and four mapped seasonal changes at the community level [89,90,91] or at the species level [93]. Vegetation change was revealed through spectral indices [92,93], via DSMs derived from multispectral cameras [89,90,91], or LiDAR [88].

The use of UAVs in wetland science is growing, as are novel methods for analyzing data. Several papers discussed new uses of sensors common in remote sensing but less tested with UAVs, such as hyperspectral [94] or LiDAR [95], or new sensor combinations [5,71]. These cases suggested that data other than RGB imagery can be powerful for wetland vegetation mapping. For example, Zhu et al. [71] showed how the combination of SAR, optical imagery (from the Gaofen-2 satellite (GF-2)), and a DSM from a fixed-wing UAV could be used to map mangrove biomass in a plantation in China. Pinton et al. [5] developed a new method for correcting the impact of slope on vegetation characteristics using UAV-borne LiDAR instrumentation. Other papers discussed the use of machine learning algorithms to characterize vegetation. Among those, Cao et al. [14] found support vector machines (SVMs) to be the best classifier of hyperspectral imagery of mangrove species; Li et al. [15] transformed hyperspectral, RGB, and DSM data prior to classification of 13 mangrove species with SVM; and Pashaei et al. [79] evaluated a range of convolutional neural network (CNN) architectures to map coastal saltmarsh with RGB imagery. Collectively, these novel applications suggest that the success of vegetation inventories with UAVs can be greatly boosted by joint use of richer datasets and more sophisticated algorithms. Finally, such analyses are becoming increasingly feasible and accessible to a wider range of users thanks to novel open-source computing tools, such as Weka software used in [14], free Amazon Web Services cloud computing in [79], and CloudCompare software in [5].

3.3. Wildlife Habitat and Population Inventories

Spatially comprehensive and customizable UAV observations can tremendously support monitoring of wildlife populations and habitats, while greatly reducing the scope and duration of human presence compared to direct field surveys. This capacity becomes invaluable in wetlands with severe field access limitations or in applications with large numbers of animal individuals or nesting sites, where field surveys can be severely compromised by physical view obstructions and limited vantage points [36,63,101]. At the same time, the success of UAV-based wildlife applications also relies heavily on the detectability of such targets from the aerial view, which may increase with larger size and conspicuousness of individual species or their habitat features [63,101].

Most wildlife-related wetland UAV studies in our pool focused on birds (seven out of ten papers); two others considered mammals [100,102], and one considered crustaceans (crabs) in relation to illegal trapping [97]. One study assessed a broader suite of coastal habitats, with salt marshes and mudflats among those [104]. Among less directly habitat-focused studies, one paper from the wetland health category focused on a rare wetland plant species that is susceptible to, and thus an indicator of, deer activity in a wetland with an increasing deer population [108], while another application discussed an abiotic transformation of the landscape induced by American beaver (Castor canadensis) [127]. Most frequently, such analyses targeted: 1) animal populations or colonies [36,63,101,103]; 2) habitat components [99,100,101]; and 3) evidence of wildlife activity on the landscape [96,100,108,127]. The majority of these UAV applications used RGB cameras or a combination of RGB and thermal sensors (Figure 3).

Inventories of bird colonies are especially well facilitated by UAVs when targeting larger waterbird species or their nests located on top of plant canopies [36,101,103], through both manual [101] and automated machine-learning [36,63] image recognition. Wildlife detection potential can be substantially amplified by the use of thermal cameras that enhance identification of animals based on the contrast between their body temperatures and the environment [102]. In avian studies, unique thermal properties of nesting material can also help identify nest features from thermal data even when the birds are not present [101]. A "dual-camera" approach combining RGB with thermal sensing is thus highly appealing for animal inventory applications [101].

Importantly, by their nature, UAV observations of wildlife can often be a form of disturbance and, as such, require a particular level of procedural control and an elevated degree of supervision during flights. This issue was manifested in bird inventories as an important tradeoff between enhanced spatial detail and increased disturbance to nesting colonies with lower flight altitudes [101,103]. While assessing and minimizing animal disturbance during UAV observations is a recommended practice [63,101], establishing a specific relationship between altitude and disturbance may not always be feasible. Instead, other proxies can be used, such as the number of birds leaving the nests during the survey [101].

UAV-induced disturbance becomes less concerning in studies focusing on detecting habitat elements [99,104] or impacts of wildlife activity on wetland landscapes [96,108], where flights can be conducted outside of the primary breeding and nesting seasons. Specific uses of UAV data in such habitat-oriented assessments are versatile, including both visual assessments of recognizable features, such as beaver dams [100], and classifications of UAV imagery into habitat features of specific relevance to species, such as tussocks in [99], or mudflats, oyster reefs, and salt marshes in [104]. Notably, modifications of the landscape by wildlife can be seen both as indicators of conservation success, e.g., when signifying the outcome of reintroduction [100], and as drivers of disturbance and ecosystem change, e.g., in cases of grazing and trampling [96,108]. Given the indirect nature of such evidence, UAV surveys should ideally be accompanied or validated with other assessments, such as in situ camera traps, to provide local nuances on animal movement and habitat use for more robust and comprehensive conclusions about wildlife population status and activity [108].

3.4. Wetland Ecological Status and Health Indicators

Wetland monitoring at the whole-site and regional scale often aims to make diagnostic assessments of ecological status and health and, ideally, to detect early warning signals of degradation, decline, or disturbance that may lead to irreversible long-term disruptions such as losses of water quality, critical habitat, or similar issues [34,47,138,144]. Emerging applications of UAVs are extremely promising for informing cost-effective yet spatially comprehensive indicators of wetland health at the landscape scale and for supporting long-term monitoring needs. Our pool of studies in this category included seven mapping, three modeling, three change analysis, and one novel method application that either targeted a particular aspect of wetland ecological health such as water quality (e.g., [105]) and vegetation indicators (e.g., [42,108,111]) or assessed the overall ecological status using different indicators (e.g., [7,34]). These studies employed RGB, multispectral, hyperspectral, and SAR sensors, as well as combinations of RGB and multispectral and of RGB and thermal sensors (Figure 3). Multi-sensor combinations especially benefit holistic assessments targeting multiple health indicators by helping to strategically optimize surveying workload between less demanding and easily replicable tasks (such as visual recognition from passive RGB imagery) and more intensive data processing endeavors (such as LiDAR-derived modeling of geomorphology and vegetation structure) [7,34,35].

A common, notable characteristic among these studies was the focus on disturbance and stress factors leaving a sizable fingerprint on wetland ecosystems that can be detected with the help of UAVs more comprehensively and cost-effectively than with field surveys [34,111]. Certain assessments are almost uniquely made possible by the customizable aspects of UAV surveying, such as monitoring wetland body volumes and water budgets based on the 3D information enabled by image overlap [107]. At the same time, a relatively novel history of such applications clearly shows the need for comparative assessments, equipment use trials, and field verifications to optimize workflows for a given set of objectives. For instance, Cândido et al. [105] tested multiple spectral indices of water quality derived from RGB information, which can be computed from a wide array of camera sensors. A comprehensive coastal ecosystem assessment in [34] used different instrument types and altitudes for different indicators. For example, they used RGB cameras at lower altitudes for monitoring pollution, littering, and shoreline position, and employed a thermal sensor at higher altitudes to detect the structure of wetlands and signals of vegetation decay. Vegetation-based indicators play an especially prominent role in such assessments, including the presence of characteristic [27,35,42,48,106] or special status [64,108] native plant species or signals of vegetation mortality and stress [27,34,42]. Among abiotic indicators, several geomorphological and hydrological characteristics can be informed by UAV data, including shoreline microtopography and slope gradients, water quality, surface roughness, and evidence of anthropogenic impacts [7,34,35,47,107].

Interestingly, only a small fraction of such studies focused on wetland change using UAV data from more than one time frame [108,109,110,111]. Two studies focusing on coastal wetland change and signals of marsh dieback in the Southeastern USA [109,110] performed change analysis using UAVSAR data provided and managed by the U.S. National Aeronautics and Space Administration (NASA). Another change study in this category [108] tracked the spatial distribution of an endangered native plant species in a wetland with an expanding deer population and increasing vegetation disturbance. Finally, the study of a characteristic disturbance-sensitive wet floodplain plant species highlighted the importance of inter-annual fluctuations in patch dynamics in response to various landscape stressors even in sustainable populations [111]. Together, these studies highlight an important twofold value of multi-temporal UAV information: (1) the ability to assess site-scale changes in species coverage more accurately than with field surveys, particularly for rare species that may be missed by discrete plots, and (2) the capacity to cover the time span commensurate with the scale of the processes and detectable impact, which is critical for unveiling mechanistic drivers of change.

3.5. Tracking Biological Invasions

Invasive plants are some of the most widespread biological threats to wetland environments, causing rapid ecological and economic damage throughout these biomes [13,25,116]. In our review, there were nine studies involving invasive plant identification, consisting of six mapping, one modeling, and two novel method papers (Figure 3). Issues caused by invasive species include reduced habitat for native populations [74,112,113], modified nutrient cycling [113], and negative impacts on livestock [55,116]. Although some invasive aquatic species have beneficial traits, such as increased carbon capture and food for fish [55], aquatic managers typically aim to remove these species from the environment. Management goals pertaining to invasive species largely involve plant identification and remediation, and UAVs allow for on-the-ground immediate assessment and response. Specific objectives for these studies include plant identification [55,68,74,112], biomass and plant height estimates [116], and herbicide application assessments [116]. Invasive plants that are often targeted for removal include species such as wild rice (Zizania latifolia) [116], hogweed (Heracleum mantegazzianum), Himalayan balsam (Impatiens glandulifera), Japanese knotweed (Fallopia japonica and F. sachalinensis) [25], sea couch grass (Elymus athericus) [55], blueberry hybrids (Vaccinium corymbosum x angustifolium) [114], saltmarsh cordgrass (Spartina alterniflora) [74], water hyacinth (Eichhornia crassipes), water primrose (Ludwigia spp.) [13], glossy buckthorn (Frangula alnus) [115], and common reed (Phragmites sp.) [68,112,115], which is one of the most “problematic invasive species in wetlands in North America” [113].

Aerial imagery is a useful tool in the management of invasives, and aerial photography has been employed for this purpose since the beginning of the twentieth century [153]. UAVs have expanded the spatial and temporal capabilities of vegetation mapping, and this increased resolution allows for more fine-scale detection of individual species [55]. Spectral indices such as the normalized difference vegetation index (NDVI) [55,112] and the normalized difference water index (NDWI) [55] are common for invasive species detection, while texture-based analysis [68], deep learning [114], and object-based image analysis (OBIA) are successful methods used for invasive species classification [55,74]. As many of the UAV-based invasive species studies we reviewed used RGB (n = 7) and multispectral (n = 3) imagery, orthophotos [25,74,112] and digital surface models [55,116] were common data products. To overcome spectral limitations of such data, some studies developed sophisticated mapping approaches using machine learning algorithms; for example, Cohen and Lewis [115] proposed a monitoring system for two common invasive plants in the Great Lakes coastal ecosystems with CNN-based software for automated recognition of these species. A more recent study demonstrates that imaging spectroscopy through aerial hyperspectral sensors can increase vegetation classification accuracy [13]. While mapping invasive plants is relatively easy to learn and produces outputs that can be shared with managers and stakeholders [116], limitations of this method include difficulties identifying submerged or emergent aquatic plants due to visibility issues from turbid water and solar reflection on the water’s surface [154]. With reduced sensor costs and increased data storage capacity, hyperspectral sensors on UAVs will pave the way for more accurate identification of invasive species [13].
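As a minimal illustration of the two spectral indices named above, the following Python sketch computes NDVI and NDWI for individual pixels. It assumes the green/near-infrared formulation of NDWI, which may differ from the variant used in the cited studies, and the reflectance values are hypothetical rather than taken from any reviewed paper.

```python
def normalized_difference(a, b):
    """Return (a - b) / (a + b); return 0.0 when both bands are zero."""
    total = a + b
    return (a - b) / total if total != 0 else 0.0


def ndvi(nir, red):
    """Normalized difference vegetation index from NIR and red reflectance."""
    return normalized_difference(nir, red)


def ndwi(green, nir):
    """Normalized difference water index (assumed green/NIR formulation)."""
    return normalized_difference(green, nir)


# Hypothetical surface reflectances (0-1) for two illustrative pixels.
pixels = {
    "dense vegetation": {"green": 0.08, "red": 0.05, "nir": 0.45},
    "open water": {"green": 0.06, "red": 0.04, "nir": 0.02},
}

for label, p in pixels.items():
    print(label,
          "NDVI =", round(ndvi(p["nir"], p["red"]), 2),
          "NDWI =", round(ndwi(p["green"], p["nir"]), 2))
```

In this toy case the vegetated pixel has a high NDVI and a negative NDWI, while the water pixel shows the reverse pattern; real UAV imagery would first need conversion from digital numbers to reflectance.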

3.6. Restoration and Management Outcomes

Restoration projects provide ecosystem services such as habitat for aquatic flora and fauna, improved water quality and quantity [120], and increased carbon storage [56]. In our review, we found fifteen papers that focused on restoration goals, involving seven mapping, two modeling, four change over time, and two novel method studies (Figure 3). Although restoration monitoring is a vital component of ecological management, it is rarely prioritized due to time and personnel constraints associated with ground surveys, lags between project implementation and data analysis, and the need for high-resolution data [26,120]. UAVs are tools that enable restoration assessment at the whole-site level and allow for cost-effective change analysis of restored environments [8,9,121,122] due to their flexibility in flight times and heights and lack of disturbance to sensitive restoration areas [56]. Furthermore, repeat aerial surveys facilitate adaptive management techniques [118] of iteratively examining and modifying projects, and this continual decision-making capability helps ensure long-term success of restoration projects.

UAV-based restoration monitoring involves a variety of objectives, including biomass estimates [120], hydrological mapping [8,9,11,123], morphological evaluations [43,57], and classification of plant communities in peatlands [10,56,119,122] and coastal regions [44,117,118,121]. While many researchers leverage RGB aerial imagery for restoration monitoring [8,9,120,122,123], multispectral imagery, and specifically the near-infrared wavelength, can detect species and topography in shallow wetland environments [155]. This is particularly useful in invasive species identification [121] and peatland biodiversity assessments [56] at restoration sites. Additionally, thermal aerial imagery can be used to locate groundwater discharge in restored peatlands, greatly reducing time spent conducting ground-based seep detection studies [11]. The capacity for vertical takeoff and landing in constricted or topographically complex areas makes quadcopter UAVs popular for small restoration study regions [8,9,10,11,120,122], while fixed-wing UAVs are preferred when mapping larger restoration projects [56,118,121,123]. UAVs prove to be efficient tools to track human-induced ecological modifications [9,11,44,118,122,123], and cheaper and lighter multispectral sensors for quadcopter and fixed-wing UAVs will help advance future restoration assessments and analysis.

3.7. Abiotic Surveys

The mapping and modeling of abiotic environmental features such as land cover classes [4,58,65,124,129] and water [32,45,66,127,131] are important for understanding surface dynamics and how landscapes evolve, which complements the multitude of UAV wetland vegetation studies by providing information on the structure and habitat of these species. We reviewed fifteen papers pertaining to abiotic surveys, which was the second largest number of papers in one category, following the vegetation inventory group (Figure 3). A common theme in abiotic mapping and change detection is SfM analysis derived from RGB imagery [4,58,127,129]. In these studies, point clouds are generated to construct digital surface models that estimate volumetric compositions of the landscape, similar to LiDAR data [4,58]. This is a useful technique in abiotic UAV research that pertains to modeling shoreline losses and habitat destruction [4,130], floodplain connectivity [127], carbon storage estimates [129], and topobathymetry [58,128]. Real-time kinematic (RTK) GPS can greatly enhance the precision of such studies [4,5,45,58,65,124,131] and ground control points (GCPs) [4,45,58,65,66,124,129] can generate greater horizontal and vertical accuracy in landscape modeling and change detection studies. In particular, Correll and colleagues [28] rigorously employed RTK to measure elevation in a tidal marsh. Their study reported that raw UAS data “do not” work well for predicting ground-level elevation of tidal marshes, likely because a DSM is not equivalent to a DEM [28]. Other projects in the realm of abiotic mapping involve RGB analysis of soil structure and infiltration [131], delineations of riparian zones [125], and saltmarsh shoreline deposition [126], in addition to thermal analysis of river to floodplain connectivity [127], river temperature heterogeneity in fish habitats [45], and peatland groundwater seepage detection [32].
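To make the volumetric use of SfM-derived surface models more concrete, the sketch below sums the volume between a gridded DSM and a horizontal reference plane. The grid values, cell size, and base elevation are hypothetical, and the function is only an illustration of the general idea, not the workflow of any cited study. As noted above, a DSM includes vegetation and other above-ground features, so such estimates describe the surface rather than the bare ground.

```python
def volume_above_base(dsm, cell_size, base_elevation):
    """Approximate the volume (m^3) between a DSM and a horizontal base plane.

    dsm            -- list of rows of elevations in metres; None marks no-data cells
    cell_size      -- ground size of one square cell in metres
    base_elevation -- elevation of the reference plane in metres
    """
    cell_area = cell_size * cell_size
    volume = 0.0
    for row in dsm:
        for z in row:
            # Skip no-data cells and cells at or below the reference plane.
            if z is not None and z > base_elevation:
                volume += (z - base_elevation) * cell_area
    return volume


# Hypothetical 2 x 3 DSM tile with 0.5 m cells and one no-data cell.
dsm_tile = [
    [1.0, 1.5, None],
    [2.0, 0.5, 1.0],
]
print(volume_above_base(dsm_tile, cell_size=0.5, base_elevation=1.0))
```

Differencing two such volumes from repeat surveys gives a simple estimate of net surface change, provided both DSMs share the same grid and vertical datum.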

UAVs are particularly important in abiotic mapping and modeling studies because the hydrodynamic complexity and tidal fluxes characteristic to many of these environments can leave little time for ground surveys [58] and sites like blanket bogs are often difficult to access because they are remote [129]. UAVs are therefore helpful in these studies because they reduce the cost of ground surveys and in situ sensor deployment [4,66], can access regions that are difficult to reach [58], and are relatively easy to deploy and use [129]. UAVs are successful in achieving abiotic management goals of reduced erosion [4,124], flood monitoring [58], and carbon and peatland conservation [129]. Future abiotic mapping and modeling techniques will benefit from increased UAV and in situ paired sampling methods, as demonstrated by Pinton et al. [66] who used dye tracing coupled with RGB imagery to measure saltmarsh surface velocities and Isokangas et al. [32] who used stable isotopes paired with RGB, multispectral, and thermal imaging to evaluate peatland water purifying processes.

3.8. Ground Reference Applications

Applications of UAVs as a source of reference information demonstrate their special potential to support broader-scale analyses from satellite and other (piloted) aerial imagery [72,75,138,139,140,141,142,143,144,145,146]. Our pool included 12 papers in this category, mostly using RGB but also RGB + Thermal, RGB + Multi + Thermal, and Thermal instruments (Figure 3). Most commonly, such applications use UAV images to infer the identity and location of wetland land cover and vegetation types [72,138,139,140,142], sometimes up to individual plant species level [146], to generate training and/or validation samples for landscape-scale wetland classifications. Assignment of reference categories can range from manual delineation of specific class extents or smaller samples based on their visual recognition from the ‘raw’ UAV imagery [139,140] to more automated classifications using computer-based algorithms [72,138]. High spatial detail and recognizability of landscape features from UAVs increase the analyst’s confidence in selection of such samples compared to, e.g., coarser-resolution imagery, while whole-site coverage allows obtaining a much larger number of samples compared to field surveys given site access constraints [29,139,146]. In addition to hard classifications, UAV-derived high-resolution maps can also support fuzzy analyses from coarser-resolution data, such as spectral unmixing of pixels into fractions of contributing cover types, both as a source of “endmember” examples [138] and as a basis for validating endmember fractions estimated from satellite imagery [75,138].

Reference information from UAVs can include not only thematic identities of wetland surface components but also their quantitative properties that can be assessed visually [141,143] or via statistical summary of the mapped imagery [145]. For example, a study of an invasive wetland grass [143] relied on visual interpretation of its percent cover from image portions matching the field survey plots, while a study of mangrove ecosystems [141] took advantage of high spatial detail in UAVs to identify areas of different tree density to calibrate the analyses based on satellite data. Similarly, a study focusing on a forested bog in the Czech Republic [135] developed a UAV-based workflow for tree inventories based on the UAV-derived CHMs. Such applications also highlight important opportunities for regional up-scaling of ecosystem properties with remote sensing indicators using models derived from UAV data [145], since the latter can be more accurately matched with fine-scale ground measurements in both space and time than from satellite image pixels.

Finally, in some applications, UAVs were used to complement other satellite, piloted aerial, and historical geospatial data sources by helping to characterize landscape qualities, composition, and status from a unique spatial perspective not possible with these other sources [144,146]. For instance, a historical analysis of a river floodplain change [144] found UAV orthoimagery and DEM valuable for measuring terrain geomorphology, vegetation distribution, and signatures of human land use to complement the analyses with historical maps and satellite imagery (Landsat and Sentinel-2). In such applications, UAV datasets can both fill a gap in historical image series by complementing piloted aerial photography archives, and elucidate certain landscape characteristics at a great level of interpretation fidelity, especially within regions unreachable by field surveys [144]. Notably, the informative richness offered by very high UAV resolution can be significant even in studies working with already fairly high spatial resolution of 1–4 meters, such as Pleiades [138], RapidEye, and Worldview satellites [140]; Spot-6 at 1.5 m [141]; or USA national aerial imagery products [146], in addition to medium-resolution products such as Landsat and Sentinel-2 [72,75,139].

At the same time, several important technical factors have been mentioned as potential limitations in these applications, such as mismatches between spectral values of UAV and satellite products for a given electromagnetic band or index and limitations of recognition at fine semantic levels [138,139,146]. It may also be difficult to derive automated classifications of spectrally rich and heterogeneous UAV data for mapping reference classes, particularly with a limited number of spectral bands, which may lead to manual corrections of classified maps or even hand digitization of the key land cover types from the raw data [75,146]. Finally, in a number of cases, UAV-derived field information may not be sufficient or may require additional validation with field surveys and ground-level photographs of sampling locations [139,146]. Addressing these challenges may be possible by performing flights at different stages of the plant growing season or hydrological cycle [139,146] to capture unique phenological aspects of classes. Spectrally limited UAV data may be strategically applied to workflow steps where they are likely to be most useful, e.g., for a fine-scale mapping of easily distinguishable classes, to facilitate more detailed classifications within these categories from other sources [146].

3.9. Data and Methods for Wider Use

A smaller group of studies (n = 8) in our pool applied UAVs towards developing generalizable methodologies for image processing, information extraction, and/or field surveying [6,76,78,132,133,134,136,137] using RGB, RGB and Multispectral, and LiDAR sensors (Figure 3). Such studies often emphasized methodology-building efforts more than site-specific ecological questions and discussed the relevance of the proposed analyses and workflows to wetland management, conservation, or research beyond their specific case study sites. Four of these applications focused on UAV data processing for mapping and quantitative assessments of vegetation [76,133,134] and hydrological properties [132], while four other studies concentrated primarily on UAV surveying workflows [6,136,137], with one study proposing a full workflow for both surveying and subsequent image processing for riverine and estuarine landscape change assessment [78].

Overall, despite the small size of this literature pool, the versatility of its topics highlights the importance of this emerging methodology-developing trend for a wide array of wetland management and monitoring needs. Furthermore, it is obvious that some of these needs can be uniquely facilitated by the special nature of UAV data and surveying protocols. For instance, methodology to generate multi-angular landscape images from overlapping UAV image tiles showed a strong potential to improve object-based classification of complex landscapes at land-water ecotones [76] and a powerful strategy to overcome the limitations of low spectral richness in RGB orthoimagery mosaics. Among non-vegetation applications, a method to map groundwater table in peatlands proposed by Rahman et al. [132] relies on UAV-derived orthophotographs to detect surface water bodies and on photogrammetric point clouds to extract landscape samples of water elevation, which together enable a continuous interpolation of groundwater levels across a broader landscape. Similarly, the UAV-borne LiDAR surveying workflow together with a customized software system developed by Guo et al. [133] holds a strong promise to alleviate the challenge of characterizing 3D vegetation structure as critical determinant of habitat and ecosystem function in complex, limited-access sites such as mangrove forests. Another example of vegetation structure modeling involved prediction of mangrove leaf area index (LAI) based on different NDVI measures computed from UAV and satellite data [134]. Notably, in the latter study, UAV indices tended to correlate with field-measured LAI less strongly than satellite-based ones, except a well-performing “scaled” UAV-based form of NDVI which included adjustments for the greenness of pure vegetation and pure non-vegetated background. 
Not surprisingly, the role of field-collected validation data, such as direct vegetation measurements [133,134] or groundwater well data [132], was important in these studies even though it was not always required for the ultimate use of the developed methods.
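The “scaled” NDVI mentioned above can be illustrated with a commonly used rescaling between a bare-background value and a full-vegetation value. The sketch below assumes this linear form; the exact formulation in [134] is not given here and may differ, and the endmember values are hypothetical.

```python
def scaled_ndvi(ndvi, ndvi_bare, ndvi_full):
    """Rescale NDVI to 0-1 between bare-background and full-vegetation values.

    Assumed form: (NDVI - NDVI_bare) / (NDVI_full - NDVI_bare), clamped to [0, 1].
    """
    if ndvi_full == ndvi_bare:
        raise ValueError("bare and full-vegetation NDVI must differ")
    scaled = (ndvi - ndvi_bare) / (ndvi_full - ndvi_bare)
    return min(1.0, max(0.0, scaled))


# Hypothetical endmembers: exposed mud (0.1) and closed canopy (0.9).
for value in (0.05, 0.5, 0.95):
    print(value, "->", round(scaled_ndvi(value, ndvi_bare=0.1, ndvi_full=0.9), 2))
```

Because the endmembers are chosen per sensor, this kind of rescaling can reduce systematic offsets between UAV-based and satellite-based NDVI values before they are related to field-measured LAI.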

In turn, studies prioritizing the development of field survey workflows were often motivated by the need to generate data and methods serving potentially different users and management questions [6,78,136,137]. Examples of mentioned ongoing or potential wetland applications supported by UAV data included monitoring changes in aquatic and emergent habitats and landscape structure [6,78,137], tracking invasive and native plant species [137], delineating different types of wetlands for wetland management [137], and geomorphological assessments [78], involving very practical purposes such as decisions on wetland reclamation [136]. Not surprisingly, both orthoimagery and SfM play critical roles in the anticipated data uses and receive close attention in field surveying workflows. Another notable consideration for UAV surveying workflows discussed by Thamm et al. [6] involved ground truthing specifically of satellite-based SAR products, which can be difficult to interpret visually due to the nature of this active remote sensing, but which are critical in humid, cloudy regions such as their study area in Tanzania.

Versatility of potential data uses from such efforts also raises important considerations about the optimal timing of data collection, including multi-seasonal acquisitions [6,137], as well as practical measures to maximize the efficiency of time in the field to optimize the monitoring routines [6]. The latter issue may also require innovative strategies around UAV instrumentation: for instance, Kim et al. [136] discussed the advantages of a fixed/rotary hybrid UAV system to meet multiple challenges of coastal assessment including the large survey area requiring longer flights, high wind requiring resistance, and the ability to hover, among other features. Relatedly, development of surveying workflows for universal wetland management uses needs to pay close attention to positioning error and both horizontal and vertical accuracy [78,136], and understand which levels of error are acceptable for specific applications [136].

4. Discussion

4.1. Technological Opportunities and Strengths in Wetland Applications of UAVs

Several important strengths of the expanding UAV technology are evident across the pool of wetland applications reviewed in this synthesis. First, UAVs provide spatially comprehensive coverage that enables researchers and managers to view the wetland as an entire entity. This large spatial extent differs from field surveys that capture discrete rather than continuous data at smaller spatial and temporal resolutions, although certain types of data necessarily must come from ground observations, such as soil samples [117,131]. High-resolution imagery from a UAV enables a high degree of visual recognition, providing an array of opportunities from basic surveying (e.g., plant cover, vegetation types with distinct morphological properties, visual texture and color) [55,63,68,81] to mapping, modeling, and quantifying wetland characteristics. Also, the customizable timing of UAV flights allows users to avoid cloud issues, which are prevalent in wetland environments [156], and to track wetland vegetation phenology, which is critical at early stages after disturbances and restoration. UAV customization permits users to select the sensor (e.g., RGB, multispectral, LiDAR, thermal, hyperspectral) best suited to the study’s objective, whether it pertains to the morphological changes of a saltmarsh environment or to the aboveground biomass estimations in a mangrove ecosystem. User-defined flights are also advantageous in wetland studies because they enable repeat missions (e.g., [92,93]) at various flying heights (some even over 400 m above ground level) (e.g., [35,71,74]), which produce correspondingly different ground sampling distances suited to the research question at hand. Finally, UAVs are highly beneficial in wetland environments because they help overcome challenges of site access [157] and provide cost-effective alternatives to traditional surveying methods that require ample labor and time [53].
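The link between flying height and ground sampling distance (GSD) noted above follows from standard nadir camera geometry. The sketch below uses the usual pinhole approximation; the camera parameters are hypothetical values for a small consumer-grade sensor, not specifications reported by any reviewed study.

```python
def ground_sampling_distance(sensor_width_mm, focal_length_mm,
                             image_width_px, flight_height_m):
    """Return the nadir GSD in metres per pixel for a frame camera.

    Pinhole approximation:
    GSD = (sensor width x flight height) / (focal length x image width).
    """
    return (sensor_width_mm * flight_height_m) / (focal_length_mm * image_width_px)


# Hypothetical camera: 13.2 mm sensor width, 8.8 mm lens, 5472 px image width.
for height_m in (30, 60, 120):
    gsd_cm = 100 * ground_sampling_distance(13.2, 8.8, 5472, height_m)
    print(f"{height_m} m above ground -> about {gsd_cm:.2f} cm per pixel")
```

GSD scales linearly with flying height, so doubling the altitude doubles the pixel size while roughly quadrupling the ground area covered by each image.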

4.2. Field Operations in Wetland Setting

Several important considerations may affect feasibility of flight surveys even when UAVs of desirable type are available to achieve a given management goal. Regulations on UAV surveying often affect spatial scope, timing, and feasibility of wetland surveys [25,40], and may differ among countries as discussed by earlier reviews [37,158,159]. Not surprisingly, previous efforts (e.g., [3,136]) highlight regulations as one necessary improvement to make UAVs more ubiquitous in science applications. For example, reduced restrictions on lighter remote-piloted aircraft operations in Australia were mentioned as an important way to facilitate novel ecological research [53,160]. A study performed in the Czech Republic cited drone traffic restrictions as a limiting factor in the flight altitude [8]. In the USA, multiple regulations have been developed for various aspects of field UAV operations, including field crew size, flying altitudes, proximity to airports, and other considerations (see, for example, summaries of key regulations by the Federal Aviation Administration (FAA) in Zweig et al. [40] and Jeziorska [37]). A riparian study in the UK [54] mentioned UAV flight regulations as a reason for dividing the study area into sections and acquiring their images separately. In addition to common protocols related to weather, airspace regulations, and proximity to specific land uses [24,40], wetlands may invoke additional regulatory constraints due to presence of sensitive species and habitats [101]. For instance, wildlife inventories may often require permitting for both field surveys of animal populations and on-site UAV use and may demand that researchers and managers formally assess and minimize the disturbance during field sessions [63,101,103]. Such restrictions not only can become direct obstacles to data collection in certain contexts [5,24], but also affect user perceptions about the adoption of UAVs as a monitoring tool [161].

The dynamic and heterogeneous environmental setting of wetlands creates multiple challenges for both spatial scope and timing of UAV applications. Site entry issues prohibitive for field surveys may also significantly reduce access for efficient UAV operation due to lack of suitable landing and launching sites [24,144] and low mobile network coverage prohibiting real-time georeferencing [25,27]. A vivid example of these challenges was noted by [24], where the use of an airboat was necessary to maintain radio communication when directing the instrument to a landing location due to the lack of suitable sites close to the target wetland area. Special constraints arise around the establishment and maintenance of ground control points (GCPs), since wetland environments significantly limit the capacity to make GCPs “permanent” and to keep them static given wind, flooding, and movement of sediment [35,99,104,116,124,126]. Not surprisingly, studies often relied on a small number of GCPs (median number of GCPs among reporting studies was ~11 per site) and developed various temporary solutions to facilitate ground referencing. For example, Howell et al. [116] used inflatable party balloons for a study of wetland plant biomass; however, strong winds could cause the balloons to deflate or shift, reducing the precision of their positioning. Similarly, the discussion of georeferencing in avian colony inventories [101] proposed buoys as reference features to support image mosaicking in submerged areas near nesting sites. Finally, it may be necessary to avoid site disturbance during GCP installation and upkeep; for instance, one application [5,66] deployed GCPs on metal poles by boat during the high tide to both ensure their elevation above the water and reduce the impact on marsh vegetation.
As real-time positioning technologies such as RTK are becoming more accessible, the labor-intensive task of GCP placement and maintenance may become less relevant over time (e.g., [7,28,131,144]).

More generally, studies showed a substantial variation in reporting positional accuracies and errors both for image mosaicking and for the field georeferencing. Approximately half of the studies (56 papers) mentioned using GCPs and 49 of them provided some information on the number of points, and even fewer (~40 studies) made comments about GCP positional accuracy. Only 55 studies (~46% of the pool) reported some form of survey and image mosaicking error. Since implications of mosaicking and positioning errors are likely to substantially vary among management goals (e.g., [28,119,128,133]), the lack of clear accuracy benchmarks as well as standards for accuracy and error reporting in UAV applications presents an important action item for the wetland UAV community in the future.

The timing of UAV operations may be significantly constrained by weather conditions and airspace restrictions, which may limit the number of flights in a given field session and affect data collection consistency across different sites [25,27,104]. Such impacts are especially strong in coastal tidal wetlands where a targeted phase in the seasonal ecosystem cycle (e.g., plant biomass) must be monitored at a low tide stage and with the appropriate field conditions, such as low wind speed for operational safety and high solar elevation for minimizing shadows [25,28,65]. Difficulties to recover the UAV instrument from a wetland in the case of malfunction or failure place a special importance on UAV flight time, battery, data storage capacity, and other factors affecting flight duration and the success of landing [24,144], and underscore the need for efficient use of time and logistical resources [6]. Prioritizing shorter operation times is also critical for reducing UAV disturbance to wetland wildlife and exposure of human operators to potential field hazards. An added benefit from this perspective is selecting easily replaceable instruments (i.e., different camera and sensor types compatible with the same carrier platform) to assess multiple indicators and meet various application goals concurrently [133].

4.3. Considerations in UAV Data Processing and Management

Several important aspects of UAV data processing can also be challenged by wetland environments and thus need to be considered early in application workflows. For instance, three-dimensional spatial accuracy in UAV-derived products is dictated both by the field operational factors (e.g., positioning system in the instrument, precision of GCPs, and other reference locations) and data post-processing (e.g., additional geo-rectification to correct for spatial shifts in image mosaics [119]). Positional inaccuracies may accumulate and propagate across workflows, eroding the quality and reliability of the final products [162]. Such concerns are especially problematic in studies heavily relying on 3D information, such as assessments of site elevation change in response to restoration treatments or sea level rise [28,35,43,57,88,118,130].

Reported georeferencing and positioning challenges make it obvious that spatial accuracies around ~0.1–1 m, which are common, for example, in ground-truthing for satellite data analyses, become too coarse relative to very small (<0.1 m) pixel sizes possible with modern UAVs. Simultaneously, the dynamic nature of wetland environments inherently imposes a fundamental degree of positional uncertainty due to wind-induced movement of vegetation, fluctuations in water extent, and changes in mudflat, sediment, and ground debris. Effectively, this means that even very high levels of positional accuracy may not always guarantee the “true” match between wetland surface elements and the spatial location of a given small pixel. This issue has not been extensively discussed; however, it raises an important need to better understand what minimum spatial resolution is likely to realistically represent landscape features in a given wetland setting and what spatial errors are acceptable in aligning the imagery with field reference locations. This also raises a question of how much spatial accuracy is really necessary for a given study objective and what levels of error may lead to similar analysis outcomes without substantially impacting the quality of inference from UAV data.
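The mismatch between positional accuracy and pixel size can be expressed simply as a positional error measured in pixel widths. The short sketch below uses hypothetical error and pixel-size values chosen only to span the ranges discussed above.

```python
def error_in_pixels(positional_error_m, pixel_size_m):
    """Express a horizontal positional error as a number of pixel widths."""
    if pixel_size_m <= 0:
        raise ValueError("pixel size must be positive")
    return positional_error_m / pixel_size_m


# Hypothetical cases: a 0.5 m positional error against three pixel sizes.
for label, pixel_size_m in (("UAV, 0.02 m", 0.02),
                            ("UAV, 0.05 m", 0.05),
                            ("satellite, 10 m", 10.0)):
    print(label, "->", round(error_in_pixels(0.5, pixel_size_m), 2), "pixels")
```

A displacement that is a small fraction of a coarse satellite pixel spans many UAV pixels, which is why field reference points that are adequate for satellite analyses may not align with individual UAV pixels.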

Very high spatial detail in UAV imagery can also become a massive challenge for automated computer-based algorithms in mapping and change detection [25,27,72,75,78]. Small pixel size amplifies wetland spectral heterogeneity by highlighting local variability in illumination, shadows, vegetation stress, background flooding, and similar factors. This may decrease the ability to successfully distinguish landscape classes [42,139,141], especially from spectrally limited RGB imagery [75,76,104]. Although multiple techniques to navigate these challenges have been proposed, such as image texture measures summarizing local heterogeneity at the pixel or local moving window scales and OBIA tools [29,55,69,76,78,104], their use among wetland UAV studies remains somewhat limited and calls for more guiding recommendations. Image texture metrics were used with mixed success in the reviewed studies and showed strong sensitivity to spectral artifacts caused by topographic breaks, orthomosaicking distortions, and similar factors [27,55,68,140,143]. For OBIA, similar to previous applications of satellite and piloted aerial imagery [150], studies reported challenges in choosing among different options for segmentation parameters [25,27,78], as well as disagreements among different classification algorithms [29], sometimes causing the analysts to resort to fully manual delineation [75]. Such mapping limitations can be aggravated by challenges to accuracy assessment, such as the lack of standardized assessment protocols [24], variability in accuracy among different seasons [145], and the implications of human error in field visits for accuracy assessment in UAV images [68].

A notable remaining challenge for wetland management is the limited guidance on developing efficient long-term monitoring plans. Among the papers that frame their work as specifically relevant to monitoring, the actual published case study was often limited to single-date analysis. As a result, there are few insights into potential opportunities and challenges of change analysis with data from UAVs. Potential opportunities include the ability to capture fine-scale changes in wetland plant communities at relevant temporal scales. Challenges arise because multi-temporal fine-scale imagery can be susceptible to environmental conditions at the time of data acquisition, requiring radiometric calibration between images or normalization between multi-date DSMs. Some studies circumvented this need by performing a visual photointerpretation at different time frames within a single wetland site (e.g., [108]); however, this can become a demanding task for larger wetland regions or multiple time frames. Notably, none of the papers investigating change mentioned radiometric calibration, and only nine papers overall discussed it [14,23,29,55,56,62,65,71,91]. Although in single-date and single-site applications radiometric calibration can be automatically facilitated by relevant software packages and mosaicking tools, longer-term and regional applications would require a systematic inter-calibration for discrete mosaics created for isolated sites or different image dates [24,138].
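One generic way to inter-calibrate mosaics from different dates is relative radiometric normalization: a linear gain and offset are fitted on pixels assumed to be spectrally stable between dates (pseudo-invariant features) and then applied to the whole band. The sketch below illustrates this general technique only; it is not the procedure of any reviewed study, and the function names and sample values are hypothetical.

```python
def fit_gain_offset(subject_values, reference_values):
    """Least-squares line mapping subject-date values to reference-date values.

    Both inputs hold band values sampled at the same pseudo-invariant
    locations (assumed unchanged between the two dates).
    """
    n = len(subject_values)
    if n < 2 or n != len(reference_values):
        raise ValueError("need two equally sized samples with at least 2 values")
    mean_s = sum(subject_values) / n
    mean_r = sum(reference_values) / n
    var_s = sum((s - mean_s) ** 2 for s in subject_values)
    if var_s == 0:
        raise ValueError("subject values must not all be identical")
    cov = sum((s - mean_s) * (r - mean_r)
              for s, r in zip(subject_values, reference_values))
    gain = cov / var_s
    offset = mean_r - gain * mean_s
    return gain, offset


def normalize_band(band, gain, offset):
    """Apply the fitted gain and offset to every pixel of a 2D band (list of rows)."""
    return [[gain * value + offset for value in row] for row in band]


if __name__ == "__main__":
    # Hypothetical values at four invariant targets (e.g., bare ground, boardwalk).
    subject = [10.0, 20.0, 30.0, 40.0]
    reference = [25.0, 45.0, 65.0, 85.0]
    gain, offset = fit_gain_offset(subject, reference)
    print(gain, offset)
    print(normalize_band([[10.0, 20.0], [30.0, 40.0]], gain, offset))
```

In dynamic wetlands the choice of invariant targets is itself uncertain, so any such fit would need to be checked against independent reference panels or field measurements.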

Finally, while several papers discussed the need to perform reproducible scientific studies and acknowledged the need for data sharing, only nine studies had some mention of data accessibility (i.e., data and/or scripts), and only two [36,139] explicitly provided links to an accessible repository (e.g., GitHub, ArcGIS Online). A few others mentioned that data were available upon request or were available in a third-party collection. For example, two papers by Ramsey et al. [109,110] used NASA’s UAVSAR data, which are collected, processed, and hosted by NASA for wider use by other parties.

4.4. Perspectives for UAV Use in Long-Term Wetland Monitoring

The collective experience offered by the reviewed applications suggests several insights for optimizing the use of UAV workflows for wetland management needs. Overall, UAVs hold a strong appeal for replacing human observers on the ground [9,31,57] and fill an important information gap between limited-scope field surveys and non-customizable satellite observations [53,138]. At the same time, it is clear that a full replacement of human potential is not yet possible, nor is it necessarily desirable. First, many wetland applications require some amount of field data collection [138,140], such as measurements of vegetation biomass, height, and other parameters used in modeling efforts (e.g., [62,87,116,133,134]), as well as characteristics of soil and water chemical properties that cannot be observed from above-ground aerial platforms [105,117,131]. Second, certain targets, such as rare, endangered, or problematic plant species, may be too fine-scale in their spatial distribution even for small UAV pixels and thus require field verification [35,55,108].

These issues highlight an important need to better understand the sufficiency of UAV-enabled opportunities for a given management goal and strategically apply this understanding to maximize both cost-effectiveness and informative value of data acquisitions. Given common budget limitations, the tradeoff between growing sophistication of rapidly advancing UAV instruments and high-risk wetland environmental setting may favor robust and, ideally, less expensive sensors and workflows. Furthermore, developing or investing in a long-term monitoring approach using UAVs is complicated by the fact that the technology is changing rapidly and/or the cost of hardware and software is falling quickly. Beyond the instrument expenses, however, wetland applications inevitably necessitate other costs, such as training and labor of personnel, post-flight data processing and management, travel to and from the sites, field logistics, and GCP management [5,24,29,116], all of which can be probed for improving workflow efficiency.

On the field operations side, a critical precursor of such efficiency lies in navigating the tradeoff among spatial coverage, timing of operations, and informative content of the data. For a given camera field of view, reducing flight altitude increases spatial resolution but also the flight time needed to cover a study site [34,65,67,69,115,133]. Similarly, expanding the overlap among images at a given altitude may improve the quality of photogrammetric products such as DSMs [57,95], but escalates both the time of data collection and the post-processing computational workload [13,65]. For these reasons, efforts pursuing complex, multi-faceted monitoring indicators may especially benefit from multiple flights and/or instruments with different specifications [7,34].
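The altitude and overlap tradeoffs can be made concrete with standard nadir frame-camera geometry, in which ground sampling distance (GSD) and image footprint both scale linearly with flight altitude. The sketch below is illustrative only: the camera parameters, site dimensions, overlaps, and function names are assumptions, and the estimate ignores turns, wind, terrain relief, and lens distortion.

```python
import math


def ground_sampling_distance(altitude_m, focal_length_mm,
                             sensor_width_mm, image_width_px):
    """GSD in meters per pixel for a nadir-pointing frame camera."""
    return (altitude_m * sensor_width_mm) / (focal_length_mm * image_width_px)


def images_needed(site_width_m, site_length_m, altitude_m, focal_length_mm,
                  sensor_width_mm, sensor_height_mm,
                  front_overlap, side_overlap):
    """Approximate image count to cover a rectangular site at given overlaps."""
    # Ground footprint of a single image at this altitude.
    footprint_w = altitude_m * sensor_width_mm / focal_length_mm
    footprint_h = altitude_m * sensor_height_mm / focal_length_mm
    # Spacing between flight lines and between exposures along a line.
    line_spacing = footprint_w * (1.0 - side_overlap)
    photo_spacing = footprint_h * (1.0 - front_overlap)
    n_lines = math.ceil(site_width_m / line_spacing)
    n_per_line = math.ceil(site_length_m / photo_spacing)
    return n_lines * n_per_line


if __name__ == "__main__":
    # Hypothetical camera: 13.2 x 8.8 mm sensor, 8.8 mm lens, 5472 px wide.
    for altitude in (20, 60):
        gsd = ground_sampling_distance(altitude, 8.8, 13.2, 5472)
        count = images_needed(300, 200, altitude, 8.8, 13.2, 8.8, 0.8, 0.7)
        print(f"{altitude} m: GSD {gsd * 100:.2f} cm/px, about {count} images")
```

Because footprint area grows with the square of altitude, the image count (and hence flight and processing time) rises steeply as altitude is lowered, while GSD improves only linearly.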

Importantly, however, a number of wetland applications might be severely constrained by flight altitudes that are not low enough, limiting the ability to recognize specific landscape features or perform visual assessments of water coverage and similar indicators [34,43,69,97,106,115]. Among the few studies operating at ≤20 m altitudes in our pool, Bertacchi et al. [69] concluded that the most accurate taxonomic identification of plants at the species/genus level was possible only with the images taken from 5 m altitude, compared to 10 and 25 m tested in the same study, especially for smaller species with more cryptic distribution. Cohen and Lewis [115] found 20 m to be superior compared to 30 and 60 m for detecting and mapping density of somewhat more conspicuous invasive shrub and reed plant species. Some applications may necessitate extremely low, near-surface surveying; for example, monitoring of a coastal marsh breach in Pontee and Serato [43] had to fly UAVs “as near low water as was possible” to observe rapid changes in water volume, and even this strategy could not fully resolve some of the view challenges. This evidence suggests that near-surface observations at very low altitudes could provide extremely useful surrogates for ground-level photographs and some other data collected by field surveyors [139]. Besides regulatory constraints such as the risk of wildlife disturbance [101,103], limitations on flying heights were not always clearly stated, and could stem, for example, from practical considerations related to battery life and efficient time use [115], or the intent to optimize the total number of photos [100]. In some contexts, altitude choices were also driven by characteristics of plant cover and observation goals; for instance, to enable photogrammetric modeling of tree biomass from UAV data, Navarro et al. [62] used 25 m altitude in a mangrove plantation with trees 0.35–3.4 m in height to facilitate their crown observations from different view angles. Overall, such considerations clearly highlight the need to better understand the implications of flying height limitations for both the data quality and efficiency of post-processing and develop more specific guidelines for different management goals.

It is also critical to anticipate and minimize the effects of potential field survey shortcomings on data post-processing and information extraction. For example, sharpness and exposure in visible and thermal camera settings may strongly affect the quality of images and ease of visual interpretation of wetland targets [34,101,102], which means that failure to select the appropriate settings can render the ultimate datasets much less usable. Relatedly, allocation of ground surveying units (e.g., vegetation plots) should anticipate potential challenges in their co-location or identification from UAV data and, when possible, record assisting information, such as cost-effective ground photographs [139,146]. Constraints on GCP installation and management can be alleviated by combining a smaller number of “formal” control points with additional field checkpoints for validation and quality assessments [8,9,29,48,107,136]. For example, in a coastal assessment for reclamation purposes, Kim et al. [136] used 426 checkpoints surveyed with the total station. When applicable, it is also possible to incorporate reference data and additional control points from non-field sources, such as high-resolution Google Earth imagery in [11] and distinctive terrain objects visible in the imagery in [10].
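Independent checkpoints are typically summarized as root-mean-square errors (RMSE) between surveyed coordinates and the coordinates read from the UAV product. The following is a minimal sketch of that calculation; the function name and the sample coordinates are hypothetical, and coordinates are assumed to be in a projected system with units of meters.

```python
import math


def checkpoint_rmse(surveyed, estimated):
    """Return (horizontal RMSE, vertical RMSE) for paired (x, y, z) points.

    surveyed:  field-measured checkpoint coordinates
    estimated: coordinates of the same points read from the UAV product
    """
    n = len(surveyed)
    if n == 0 or n != len(estimated):
        raise ValueError("need two equally sized, non-empty point lists")
    sum_sq_h = 0.0
    sum_sq_v = 0.0
    for (xs, ys, zs), (xe, ye, ze) in zip(surveyed, estimated):
        sum_sq_h += (xe - xs) ** 2 + (ye - ys) ** 2
        sum_sq_v += (ze - zs) ** 2
    return math.sqrt(sum_sq_h / n), math.sqrt(sum_sq_v / n)


if __name__ == "__main__":
    # Two hypothetical checkpoints (meters).
    field = [(0.0, 0.0, 0.0), (10.0, 0.0, 1.0)]
    uav = [(0.03, 0.04, 0.10), (10.0, 0.0, 0.90)]
    rmse_h, rmse_v = checkpoint_rmse(field, uav)
    print(f"horizontal RMSE {rmse_h:.3f} m, vertical RMSE {rmse_v:.3f} m")
```

Keeping checkpoints separate from the control points used in georeferencing is what makes these values an independent estimate of product accuracy.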

From the data processing perspective, it is important to evaluate the potential implications of uncertainties in spatial resolution and positional accuracy for project goals in order to decide on the optimal minimum mapping units (MMUs) and the appropriate strategies in navigating such uncertainties. An MMU choice should ideally balance the benefits of high spatial resolution with the needs to improve signal-to-noise ratio and avoid mixed pixels, both of which may increase with greater pixel size and also vary with electromagnetic aspects of data and instruments [13,109,119]. This means that data analyses, especially computer-based routines, may not always require the highest “achievable” spatial resolution and may, in fact, benefit from some degree of data aggregation and filtering to reduce noise [14,96]. Interestingly, several studies used MMUs substantially exceeding the original ground sampling distance of their UAV data (e.g., [14,35,56,67,75,87]), often in order to match the coarser scales of other relevant datasets. Rather than simply representing local spectral variation, texture measures can be strategically used to accentuate certain forms of heterogeneity most relevant to class differences, including more sophisticated filters that can be more easily computed with modern tools [68]. Importantly, OBIA can also be approached as a strategy for “smart” pixel aggregation and for overcoming spectral richness limitations based on texture differences at the object level, as well as object size, shape, and context (e.g., adjacency to other object types), in addition to spectral values [27,104,147,148,150,163]. Challenges in selecting OBIA segmentation parameters in complex wetland surfaces can be alleviated by focusing on smaller “primitive” objects that increase signal-to-noise ratio, do not have to capture the full extents of landscape entities [150], and can be more easily matched with ground-surveyed locations [29,139]. Emerging tools enable more automated selection of segmentation parameters to generate such primitives (e.g., [164,165,166]), while the full-sized class patches can be recovered via their classification and merging [150].
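The simplest form of aggregating fine pixels to a coarser MMU is block averaging, in which each output cell is the mean of a square group of input pixels. The sketch below shows only this basic idea, as a far simpler alternative to object-based segmentation; the function name and the sample raster are hypothetical.

```python
def aggregate_to_mmu(raster, factor):
    """Average non-overlapping factor x factor blocks of a 2D raster.

    raster: list of equal-length rows of numeric pixel values
    factor: number of input pixels per output cell along each axis
    """
    rows = len(raster)
    cols = len(raster[0]) if rows else 0
    if factor < 1 or rows == 0 or rows % factor or cols % factor:
        raise ValueError("raster dimensions must be non-zero multiples of factor")
    aggregated = []
    for r0 in range(0, rows, factor):
        out_row = []
        for c0 in range(0, cols, factor):
            block = [raster[r][c]
                     for r in range(r0, r0 + factor)
                     for c in range(c0, c0 + factor)]
            out_row.append(sum(block) / len(block))
        aggregated.append(out_row)
    return aggregated


if __name__ == "__main__":
    # Hypothetical 4 x 4 band aggregated to 2 x 2 cells.
    band = [[1, 3, 10, 10],
            [5, 7, 10, 10],
            [0, 0, 2, 2],
            [0, 0, 2, 6]]
    print(aggregate_to_mmu(band, 2))
```

Averaging suppresses pixel-level noise from shadows and illumination but also blends distinct cover types within a block, which is the mixed-pixel cost that has to be weighed when choosing an MMU.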

Finally, a broader path towards cost-effectiveness may lie in co-aligning UAV applications with systematic regional monitoring efforts and creating wider opportunities for data use beyond individual case studies [6,7,78,137]. Opportunities to reduce operational and travel expenses may arise, for example, when UAVs are hosted in permanent research stations and can be operated by long-term personnel, or when flights can be logistically aligned with other environmental field survey campaigns to help reduce travel cost and synchronize collection of complementary data.

5. Emerging Trends

5.1. Emerging Technologies