1. Introduction

Managing shark–human interactions is a key social and environmental challenge that requires balancing the responsibility to keep beachgoers safe against the responsibility to maintain shark populations and a healthy marine ecosystem. Sharks pose some limited risk to public safety [1]; however, there is a recognized social and environmental responsibility to develop strategies that effectively keep people safe and ensure the least harm to the environment [2]. This process is still evolving, particularly in countries with yearly shark interactions. For instance, in Australia, the Shark Meshing (Bather Protection) Program NSW [3] is a hazard mitigation strategy in place since 1937 which is known to cause harm to vulnerable species [4] and is listed as a Key Threatening Process by the NSW Scientific Committee, with ecological and economic costs [5]. With the range of emerging technologies offering new potential to mitigate harm to people and sharks alike, there are new ways to manage interactions without such high costs.

Aerial surveillance has historically been used to help identify sharks in beach zones and warn beachgoers in order to avoid shark incidents. Australia started one of the earliest known “shark patrols” through the Australian Aerial Patrol in the Illawarra in 1957 [6]; currently, across the country, thousands of kilometres are patrolled by airplanes and helicopters on any weekend. For decades, aerial patrols have helped mitigate shark interactions, predominantly by alerting lifeguards/lifesavers to enact beach closures [7], and they remain essential for a range of safety and rescue purposes. However, a recent study suggested that observers in planes and helicopters detect sharks with limited reliability, with only 12.5% and 17.1% of shark analogues spotted by fixed-wing and helicopter observers, respectively [8]. Though the assessment of such studies has been debated [9], there is agreement that new technologies could assist expansion of aerial shark spotting and communication of threats to lead to better outcomes for humans and sharks alike.

The recent expansion of unmanned aerial vehicles (UAVs), often known as drones, offers new abilities to enhance traditional aerial surveillance. In recent years alone, drones have been used to evaluate beaches and coastal waters [10,11,12] and monitor and study wildlife [13,14,15], from jellyfish [16], sea turtles [17,18,19], salt-water crocodiles [20], dolphins and whales [21,22,23,24], and manatees [25] to rays [26] and sharks [27,28,29,30]. This expansion in surveillance is likely related to the market release of consumer drones with advanced camera imaging; for instance, the DJI Phantom introduced in 2016. Whether rotor-copter or fixed wing, rapid development of commercial drones means more flying “eyes” can be deployed in more locations for conservation and management [31]. Using these methods for shark detection again shows great promise; drones are now a part of several lifeguard programs in Australia in multiple states [32] and are continuously being evaluated for reliability [33]. Other UAVs are also being considered [34]; a review by Bryson and Williamson (2015) into the use of UAVs for Marine Surveys highlighted the potential of unmanned tethered platforms such as balloons with imaging especially over fixed locations [35], and such platforms were successfully tested for shark spotting applications by Adams et al. [36]. These platforms may be able to overcome current issues facing drones: restrictions resulting from limited battery life, limited flight areas per air safety regulations (in Australia this is facilitated by Civil Aviation Safety Authority (CASA) [37]), and the need for pilot training and equipment expertise. Regardless of platform, the use of piloted and, critically, autonomous UAVs in wildlife management will almost certainly expand and offer new opportunities, particularly to complement the use of conventional drones.

One critical limitation in traditional aerial surveillance is the need for human engagement with the technology, principally for visual detection. However, again the recent expansion of machine learning/artificial intelligence and in particular Deep Learning tools [38] in the last decade has offered significant advantages as an approach to image classification as well as the detection and localization of objects from images and video sequences. While traditional image analysis relies on human-engineered signatures or features to localize objects of interest in images and performance depends on how well the features are defined, Deep Learning provides an effective way to automatically learn or teach a detector (algorithm) the features from a large amount of sample images with the known location of the objects of interest. This concept of using automated identification from photos/videos has been explored for years (especially from images gathered from camera traps); with the expansion of available drones, automated detection has bloomed as a tool for wildlife monitoring [39,40]. Thus Deep Learning detection algorithms for sharks [41,42] (as well as other marine wildlife and beachgoers) are expanding and offer superior tools to be integrated with aerial surveillance for automated detection.

Combining UAVs and machine learning with smart wearables like smartwatches creates an additional opportunity to build scalable systems to manage shark interactions with individuals. Again, a recent technology expansion (mainly in 2017) has created an era of available smartwatches with cellular communication technology, offering the potential to link surveillance with personalized alerting. Our project was designed to overcome the gap between these emerging techniques for real-time personal shark detection by integrating aerial reconnaissance, smart image recognition, and wireless wearable technology. Sharkeye had two main objectives: (1) bridge data from aerial monitoring to alert smartwatches and (2) test the system as a pilot on location. Objective 1 required technical integration from the aerial acquisition of data to the app along the architecture stack including validating key components of hardware, software, and services (connectivity). To execute, our team developed an improved detection algorithm by training the system using machine learning based on aerial footage of real sharks, rays and surfers collected at local beaches; determined how to host and deploy the algorithm in the cloud; and expanded a shark app to run on smartwatches and push alerts to beachgoers in the water. For Objective 2, field trials were conducted at Surf Beach Kiama over a period of three days using methods for testing continuous aerial surveillance. To test detection accuracy, we deployed moving shark analogues as well as swimmers, surfers, and paddleboarders while recording the response through the use of multiple smartphones and three smartwatches simultaneously. In addition to the main objectives and related work, a backup emergency communication system between a swimmer in danger and a lifeguard was also tested, as well as deployment of a communication relay on the blimp to further demonstrate the potential of the Sharkeye platform.

The system was successfully deployed in a trial with over 350 detection events recorded with an accuracy of 68.7%. This is a significant achievement considering that our algorithm was developed using <1 h of training footage, highlighting the potential of the system given more training data to improve confidence. Overall, Sharkeye showed potential in the convergence of drones, Internet of Things, machine learning, and smart wearables for human–animal management. We advocate continued investment to leverage existing technology and utilize emerging technology to tackle both shark and human safety objectives. Further investment would significantly improve accuracy and prediction and expand identification. This capability to expand to other beach and water-based objects suggests the platform can be used to develop tools for effective and affordable beach management. A common tool that allows for real-time superior shark detection as well as monitoring beach usage, swimmer safety, pollution, etc., would help de-risk investment in R&D and deployment. Ultimately, because the Sharkeye system is platform agnostic and has potential to assist cross-agency objectives of data collection and analysis for consumer safety, efficiency gains, market competitiveness, and even development for export, we strongly recommend allocating funds to expand such work.

2. Materials and Methods

The Sharkeye project developed an interface between two previous initiatives: Project Aerial Inflatable Remote Shark–Human Interaction Prevention (Project AIRSHIP), a blimp-based megafauna monitoring system, and SharkMate, a predictive app based on the likelihood of shark presence. We optimized the equipment and process (i.e., our platform) required to automate video collection from the blimp, send the feed to a cloud-hosted algorithm, determine shark presence, and send alerts via smartwatches. The components of the system are described below.

2.1. Aerial Imaging from UAVs

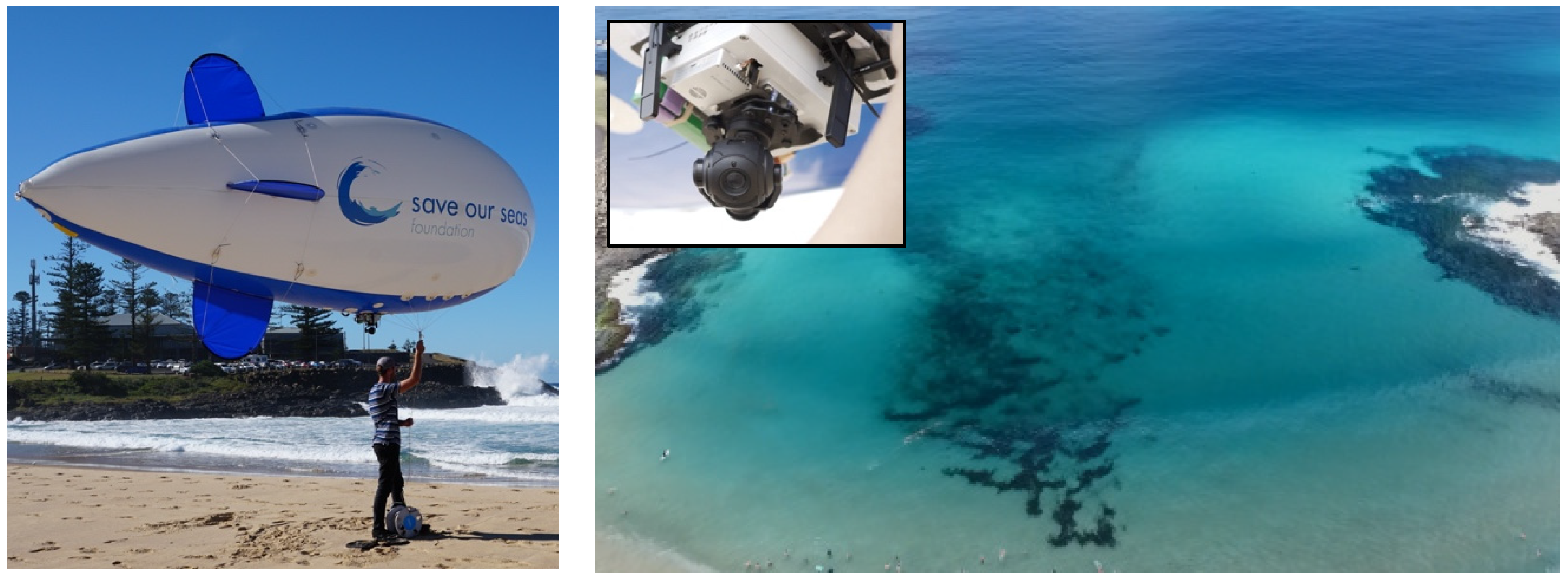

A blimp-based system, Project Aerial Inflatable Remote Shark–Human Interaction Prevention (Project AIRSHIP), was used for the aerial surveillance component of the Sharkeye project. Project AIRSHIP was developed with the intent to help mitigate the risk of shark–human interactions with zero by-catch; the blimp system was developed and used for several years before the Sharkeye project was initiated. The blimp uses a high-definition mounted camera, designed to provide continuous aerial coverage of swimming and surfing areas [36].

As a first step of Sharkeye, we collected new footage of wildlife from the blimp to help train the detection algorithm. To collect new footage, the blimp-mounted camera system was tethered above the surf zone of Surf Beach in Kiama, NSW. For context on the observation area, Kiama Surf Beach is described as an east-facing embayed beach, 270 m in length, that is exposed to most swell with average wave heights of 1–1.5 m [43]. Footage of the surf zone was generally collected from 11:00 am until 4:00 pm during a six-week period (excluding weekends and public holidays) from December 2017 to January 2018. Deployment was coordinated around the work hours of the professional lifeguard service at Kiama. Once the algorithm was trained, the blimp was redeployed (May 2018) to test the effectiveness of real-time detection.

Images were collected via a live streaming camera with 10x zoom on a 3-axis gimbal (Tarot Peeper camera with custom modifications). This setup provided a relatively stable feed even in windy conditions (>30 km/h), with footage transmitted to a ground station at a resolution of 1080p. To focus the collection of images, the direction and zoom of the camera were controlled by an operator on the ground and visually monitored on an HD screen. The blimp itself is 5 m in length and 1.5 m in diameter (Airship Solutions Pty Ltd., Australia) and served as a stable platform for the camera. The blimp enabled an 8-hour collection period, limited only by the camera battery. The blimp was launched in the morning and taken down at night. When not in use, it was stored fully inflated to minimize helium usage, taking 9000 L of helium for initial inflation. The blimp loses helium at a rate of <1% a day, requiring a small top up every few days (100–200 L). Images of the system are provided in Figure 1.

Sharkeye utilized the ability of blimps to detect sharks at ocean beaches. Sharks, and other large marine species including stingrays and seals, were frequently spotted and tracked by observers monitoring the blimp feed [36]. This work validates the ability of humans to observe and identify fauna from this aerial platform and provided a benchmark against which the performance of the algorithm could be compared.

2.2. Detection Algorithm Development

The project employed a Deep Learning Neural Network (DNN) for the detection algorithm for various animals as well as people in the water (sharks, rays, and surfers). The following is a summary of the dataset development, the network architecture, the algorithm training and evaluation.

To create the algorithm, raw footage from the blimp-mounted camera was collected over several weeks at a specific beach. This footage was brought back to the laboratory to be manually screened for the presence of marine life. The spliced footage was then used to extract images for algorithm creation and testing and divided into training and testing datasets. One of every five frames of the video selections was annotated with the locations and types of objects, and each frame was divided into 448 × 448 blocks with 50% overlap. The training datasets were then processed in a DNN developed by our labs.
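The frame sampling and block division described above can be sketched as follows. This is only an illustration under stated assumptions: the 1920 × 1080 frame size is inferred from the 1080p feed, and shifting the last block back so it stays inside the frame is a choice made here, not a detail given in the text. The function names are hypothetical.

```python
from typing import Iterator, List, Tuple

TILE = 448          # block edge length in pixels (from the text)
STRIDE = TILE // 2  # 50% overlap between neighbouring blocks
FRAME_STEP = 5      # one of every five frames is annotated


def axis_starts(length: int, tile: int = TILE, stride: int = STRIDE) -> List[int]:
    """Start offsets of blocks along one image axis."""
    if length <= tile:
        return [0]  # frame smaller than a block: a single block starting at 0
    starts = list(range(0, length - tile + 1, stride))
    if starts[-1] != length - tile:
        # Assumption: shift the final block back so it ends at the image edge.
        starts.append(length - tile)
    return starts


def tile_boxes(width: int, height: int) -> Iterator[Tuple[int, int, int, int]]:
    """Yield (left, top, right, bottom) boxes that cover a frame."""
    for top in axis_starts(height):
        for left in axis_starts(width):
            yield (left, top, left + TILE, top + TILE)


def sampled_frame_indices(n_frames: int, step: int = FRAME_STEP) -> range:
    """Indices of the frames that would be passed on for annotation."""
    return range(0, n_frames, step)


# Assumed frame size for a 1080p feed.
boxes = list(tile_boxes(1920, 1080))
```

With these assumptions a single 1080p frame yields 8 columns by 4 rows of blocks, so each annotated frame contributes 32 overlapping crops.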

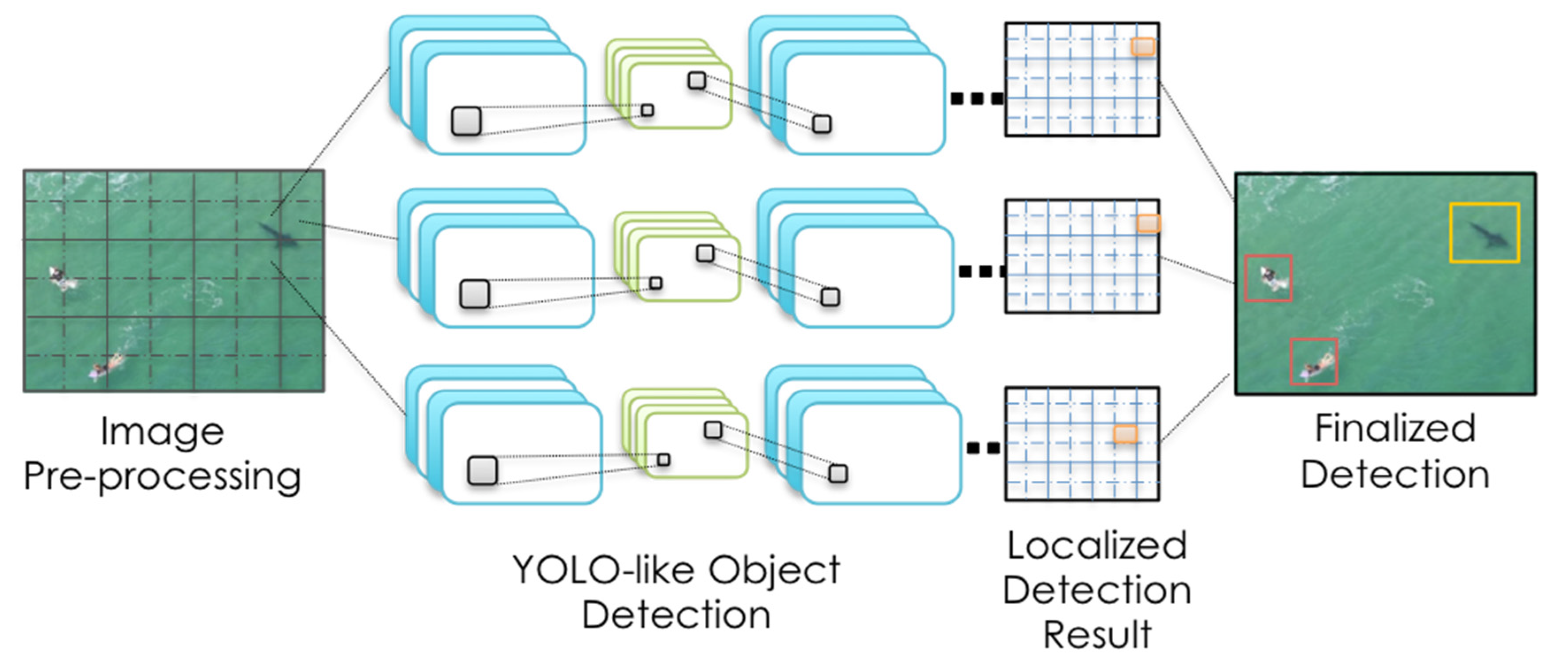

The network architecture was based on You Only Look Once (YOLO) as described in Redmon and Farhadi 2017 [44,45]. It consisted of nineteen convolutional layers and five max-pooling layers with a softmax classification output. It used 3 × 3 filters to extract features and doubled the number of channels after every pooling step, with twelve 3 × 3 convolutional layers in total. Between the 3 × 3 convolutional layers, 1 × 1 filters were used to compress the feature representation, giving seven 1 × 1 convolutional layers. For detection, three extra 3 × 3 convolutional layers with 1024 filters each were added at the end of the network, followed by a final 1 × 1 convolutional layer with the number of outputs required for detection. The network was augmented with a preprocessing component to process the video frames before they were taken for training and detection in real-time. A visualization of the process is outlined in Figure 2.
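The layer counts quoted above match the Darknet-19 backbone from the cited YOLO work. The sketch below tallies that layout as a consistency check; the layer table is assumed from the published Darknet-19 design rather than taken from the authors' code. The output-size helper uses the YOLOv2 convention of `anchors × (5 + classes)` with five anchors, which is also an assumption about this project.

```python
from collections import Counter

POOL = "maxpool"

# (kind, kernel size, filters). Layout assumed from the published Darknet-19 design.
BACKBONE = [
    ("conv", 3, 32), POOL,
    ("conv", 3, 64), POOL,
    ("conv", 3, 128), ("conv", 1, 64), ("conv", 3, 128), POOL,
    ("conv", 3, 256), ("conv", 1, 128), ("conv", 3, 256), POOL,
    ("conv", 3, 512), ("conv", 1, 256), ("conv", 3, 512),
    ("conv", 1, 256), ("conv", 3, 512), POOL,
    ("conv", 3, 1024), ("conv", 1, 512), ("conv", 3, 1024),
    ("conv", 1, 512), ("conv", 3, 1024),
    ("conv", 1, None),  # final 1x1 classifier; width depends on the class count
]


def summarize(layers):
    """Count convolutional layers by kernel size, plus pooling layers."""
    convs = [layer for layer in layers if layer != POOL]
    kernels = Counter(kernel for _, kernel, _ in convs)
    return {
        "conv": len(convs),
        "conv3x3": kernels[3],
        "conv1x1": kernels[1],
        "maxpool": layers.count(POOL),
    }


def stage_widths(layers):
    """Filter count of the first convolution in each stage (after each pooling step)."""
    widths, take_next = [], True
    for layer in layers:
        if layer == POOL:
            take_next = True
        elif take_next:
            widths.append(layer[2])
            take_next = False
    return widths


def detection_outputs(num_classes: int, num_anchors: int = 5) -> int:
    """Output channels of the last 1x1 layer under the YOLOv2 convention (assumed)."""
    return num_anchors * (5 + num_classes)
```

For the three classes used here (shark, ray, surfer) and five assumed anchors, `detection_outputs(3)` gives 40 output channels.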

The commonly used mini-batch strategy [44] was used to train the network, with a batch size of 64, a weight decay of 0.0005 and a momentum of 0.9. The learning rate was set to 0.00001 and the maximum number of iterations to 45,000, with the threshold used for detection set to 0.6. For training, frequently used data augmentation techniques, including random crops, rotations, and hue, saturation, and exposure shifts, were applied [44]. Once the algorithm was created, it was tested against the testing dataset in the laboratory. Once validated, the final algorithm was ready for hosting and deployment in the cloud-based architecture. Results from the algorithm could then be pushed to additional alerting components of the Sharkeye system.
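For reference, the reported training settings can be collected in one place, together with a minimal sketch of how the 0.6 detection threshold might be applied to model outputs. The class and function names are hypothetical, and treating the threshold as inclusive (`>=`) is an assumption.

```python
from dataclasses import dataclass
from typing import List, NamedTuple


@dataclass(frozen=True)
class TrainingConfig:
    """Hyperparameters as reported in the text."""
    batch_size: int = 64
    weight_decay: float = 0.0005
    momentum: float = 0.9
    learning_rate: float = 0.00001
    max_iterations: int = 45_000
    detection_threshold: float = 0.6


class Detection(NamedTuple):
    label: str         # e.g. "shark", "ray", "surfer"
    confidence: float  # model score in [0, 1]


def keep_confident(detections: List[Detection],
                   config: TrainingConfig = TrainingConfig()) -> List[Detection]:
    """Drop detections scoring below the threshold (inclusive comparison assumed)."""
    return [d for d in detections if d.confidence >= config.detection_threshold]


# Hypothetical model outputs for one frame.
candidates = [Detection("shark", 0.82), Detection("ray", 0.41), Detection("surfer", 0.60)]
confident = keep_confident(candidates)
```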

2.3. Personal Alerting via an App

We expanded on the previously developed SharkMate app to provide the user interface (UI) to facilitate real-time alerting accessible for beachgoers in the water. For context, the SharkMate app was designed as a proof-of-principle demonstration, developed as a means to aggregate data contributing to a range of risk factors surrounding shark incidents. Analysis of data from the Australian Shark Attack File (ASAF, Taronga Zoo [46]) and International Shark Attack File (ISAF [47]) and a range of correlation studies including Fisheries Occasional Publication No. 109, 2012 [48] found statistically significant risk factors contributing to shark incidents such as proximity to river mouths and recent incidents or sightings. It is important to acknowledge that sharks are wild animals and their movements and actions are not completely predictable. However, historical data does present factors that are significant in contributing to the risk of shark incidents. It is also important to distinguish factors that are purely correlative and are not causal.

The app derives its location-specific data from a range of sources. Climate data was sourced from the Australian Bureau of Meteorology (BOM). Geographic data was used to map and identify proximity of river mouths, outfalls and seal colonies. This was combined with data such as the presence of mitigation strategies (including the presence of blimp) and lifeguards/lifesavers. Historical incidents and sightings were sourced from ASAF and ISAF. Real-time sightings of sharks and bait balls from across Australia were scraped from verified Twitter accounts. This data was then aggregated to a centralised database.

The app used a series of cloud-based programming steps to provide predictions. Information from the various sources is updated every hour. Scripts are used to retrieve information such as water temperature and the presence of lifeguards at the beach. These values are then run through a series of conditional statements, each individually scaled to reflect the respective impact they have on risk. The output of these conditions is a likelihood score, a number out of ten, designed to be easily compared to other beaches. The higher the number, the higher the risk. Finally, after the impact of all factors has been processed to generate an aggregate score, this value is then sent to the cloud, where it can be accessed from the SharkMate app.
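The scoring step described above could look roughly like the sketch below. Every weight, the baseline, and the temperature band are hypothetical placeholders chosen for illustration; the text does not state the actual values or the direction of each factor. Only the overall shape (conditional statements producing a score out of ten) follows the description.

```python
from dataclasses import dataclass


@dataclass
class BeachConditions:
    near_river_mouth: bool
    recent_sighting: bool
    lifeguards_present: bool
    blimp_present: bool
    water_temp_c: float


def likelihood_score(c: BeachConditions) -> float:
    """Aggregate individually scaled conditions into a score out of ten.

    All numeric weights below are hypothetical, not the app's real values.
    """
    score = 3.0  # hypothetical baseline
    if c.near_river_mouth:
        score += 2.0
    if c.recent_sighting:
        score += 3.0
    if c.lifeguards_present:
        score -= 1.0
    if c.blimp_present:
        score -= 1.0
    if 18.0 <= c.water_temp_c <= 24.0:  # hypothetical temperature band
        score += 1.0
    # Clamp so the result stays comparable across beaches.
    return max(0.0, min(10.0, score))


high = likelihood_score(BeachConditions(True, True, False, False, 20.0))
low = likelihood_score(BeachConditions(False, False, True, True, 15.0))
```

Keeping each factor as its own conditional makes it easy to rescale one factor without touching the others, which fits the description of conditions that are individually scaled.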

The functionality of the app was later expanded to facilitate real-time alerts from the blimp via Apple Watch. Prior to this work, the SharkMate iPhone app (using iOS) was near completion. However, the SharkMate smartwatch app (using watchOS) needed further development. Swift 4 and Xcode 9 were used to create and program both the iPhone and Apple Watch applications. As smartwatch functions differ from those of smartphones, the smartphone app could not simply be converted. Regardless, push notifications were developed to alert the user of the presence of a shark, displayed in the Apple Watch app. This was achieved by using manual and automated triggers of cloud functions that processed the data and pushed it over the cloud to the watch. With the app and watch validated, the components were complete to integrate the end-to-end alerting system.

2.4. Integration Across the Stack

Integration was required to connect the various pieces of hardware and discrete custom and commercial software to execute real-time notifications of automatically detected sharks in the ocean via the smartwatches. To integrate these features, our team created the interface between:

The camera mounted to the blimp (or the video feed from a separate drone),

The receiving ground station,

The machine learning model (algorithm),

The smartwatches, and

The SharkMate app (iOS phone/watchOS).

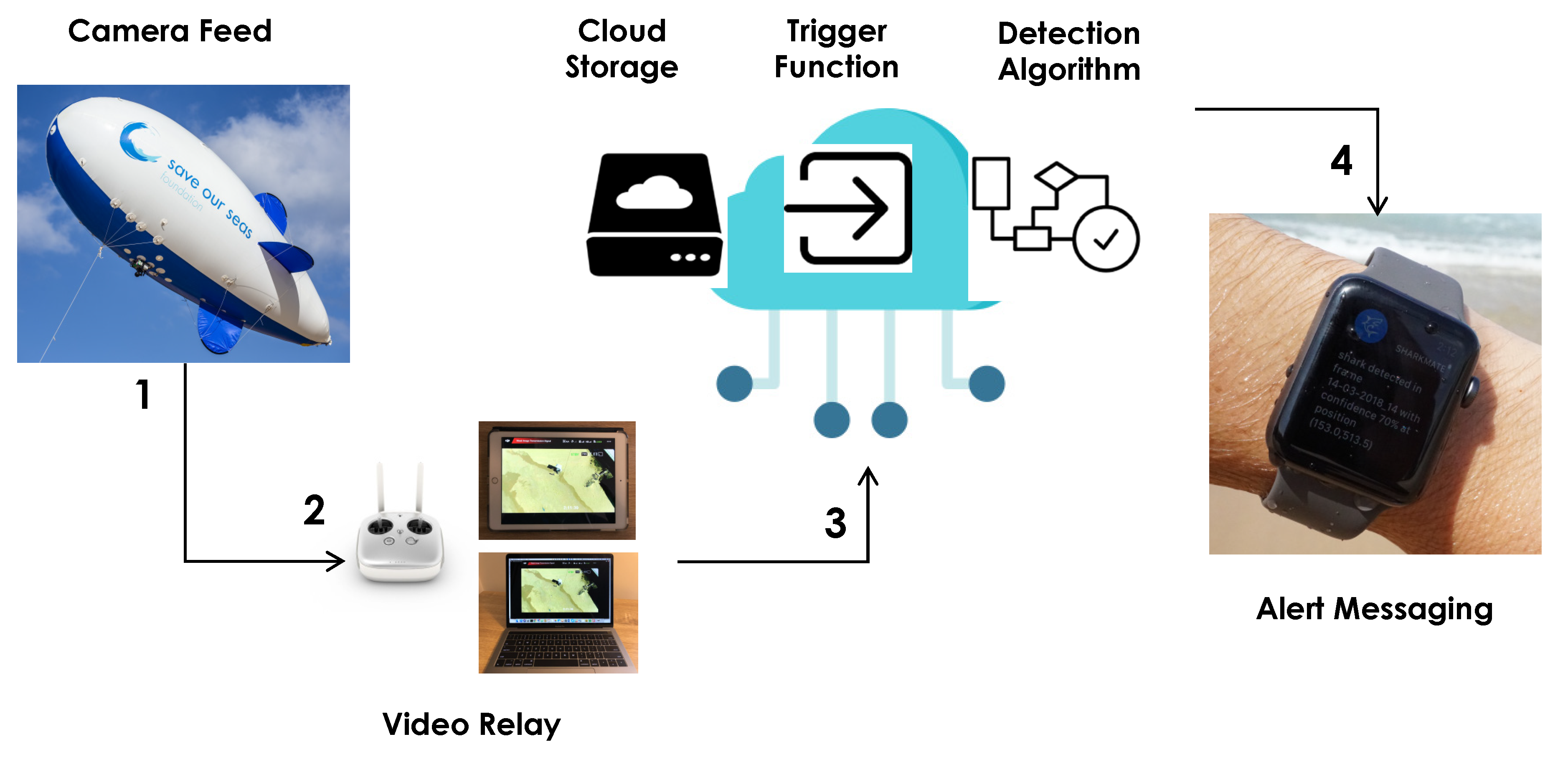

Several additional pieces of software were required to facilitate integration. Firstly, a program was developed to initiate processing using the algorithm; this software transferred images captured from the live video feed and uploaded them to the cloud for processing. Secondly, once the images were processed from the algorithm, an additional script was created to process the outputs of the machine learning model and send appropriate push notification messages via Amazon Web Services SNS (simple notification service pub/sub messaging) integration with the Apple Push Notification Service. The data flow is outlined in Figure 3. To test the system before deployment, the camera was removed from the blimp, and aerial shark images from the internet and from our training set were placed in front to simulate a live feed. This was done on a local beach to take into account environmental conditions that might be observed during field testing. Although simplistic, the test run showed the integrated components worked; alerts were triggered, and notifications received on the smartwatches.
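The second script in this data flow, which turns model outputs into a push notification, might be sketched as below. The detection dictionary format, the alert wording, and the choice to alert only on the "shark" class are assumptions for illustration. The message layout follows the Amazon SNS per-platform JSON format, in which the `APNS` entry is itself a JSON-encoded string holding an Apple `aps` payload; the call that actually publishes it is omitted.

```python
import json
from typing import Dict, List, Optional

ALERT_CLASSES = {"shark"}  # assumption: only shark detections trigger a push alert


def build_sns_message(detections: List[Dict], threshold: float = 0.6) -> Optional[str]:
    """Return an SNS JSON message for the strongest alert-worthy detection, or None."""
    hits = [d for d in detections
            if d["label"] in ALERT_CLASSES and d["confidence"] >= threshold]
    if not hits:
        return None
    best = max(hits, key=lambda d: d["confidence"])
    body = f"Shark detected with confidence {best['confidence']:.0%}. Please leave the water."
    apns_payload = {
        "aps": {
            "alert": {"title": "Shark alert", "body": body},
            "sound": "default",
        }
    }
    # SNS expects each platform entry as a string, so the APNS payload is encoded twice.
    # The result would be published with the message structure set to "json".
    return json.dumps({"default": body, "APNS": json.dumps(apns_payload)})


# Hypothetical model output for one frame.
message = build_sns_message([
    {"label": "shark", "confidence": 0.82},
    {"label": "ray", "confidence": 0.90},
])
```

Returning `None` when nothing qualifies keeps the publishing step simple: the caller only sends when there is a message.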

2.5. Field Testing and Additional Drone-Based Hosting

Once the individual Sharkeye components had been completed and validated, the system was deployed for field testing. Initially, the blimp was inflated, and the camera attached to the underside mounting. The live camera feed was then established to laptops on the beach and monitors in the surf club. As before, to provide a preliminary check of communication in the system, aerial images of sharks (via printouts) were placed in front of the camera, the feed was sent to the cloud to be processed by the detection algorithm, and alerts were monitored on several smartphones and smartwatches.

Once the check was complete, the blimp was launched to a height of approximately 60 m via a tether to the beach. A shark analogue (plywood cutout) was then placed on the sand and successfully detected as a verification to establish the system was working. The analogue was then deployed in the surf zone and detection monitored on screens, on 3 smartwatches and on various phones with the app installed. Detection rates were recorded in the cloud. Alerts on multiple smartwatches were then tested while in the water near the shore with success. Images of the field testing are seen in Figure 4. For environmental context, the weather conditions on the testing days were average, partially cloudy, while the surf was higher than normal (see Supplementary Files for videos of the conditions and setup in action).

To test the system on a mobile platform, the camera feed was successfully changed to a drone operated by a certificated drone pilot (DJI Phantom 4, Opterra Australia). A surfer wearing a smartwatch was deployed, the drone was then set to observe, and send the feed to the cloud as with the blimp. In that case, the surfer received alerts at 150 m from shore. Additionally, while filming, a stingray was observed from the drone. The team took advantage of the situation and was able to detect the native wildlife in the field and with alerts. Finally, we deployed a stand up paddleboarder (SUP) with a smartwatch and observed detection via the system simultaneously on the beach and in the water.

3. Results and Discussion

Overall, our Sharkeye system was successfully deployed during the field trial, demonstrating that automated real-time personal shark detection is possible using existing technologies: aerial imaging, cloud-based machine learning analysis, and alerting via smartwatches.

3.1. Creating the Detection Algorithm

In order to develop the dataset to train the algorithm, the blimp system captured over 100 h of raw footage over a six-week period. As mentioned, the footage was then manually screened at the laboratory for the presence of marine life. Upon review, multiple sharks were sighted during approximately 30 min of total time. Other marine animals were far more commonly observed, with stingrays spotted at least 50 times, as well as numerous seals and baitfish schools. It should be noted that during the data collection, the monitored video feed also provided a beneficial vantage point for lifeguards. One rescue was also observed from the blimp during the original footage collection. Additionally, lifeguards monitoring the video feed identified sharks in real time on several occasions. On one of these occasions, the blimp operator spotted a shark in close proximity to a body boarder and alerted lifeguards who evacuated swimmers from the water.

Four video sequences, one hour and seven minutes in total, containing sharks, stingrays and surfers were chosen from the video footage collected by the blimp overlooking the beach and ocean. After analysis this led to a dataset of 10,805 annotated images. The dataset was then divided randomly into three subsets: 6915 images for training, 1730 images for validation, and 2160 images for off-line performance evaluation. This training set was extremely limited, particularly for sharks, being based on <30 min of footage of sharks seen in the beach area during operation of the blimp. However, even with this limited training data the system was able to detect various objects from the images set aside for testing with confidence. Using a standard accuracy calculation ((number of correctly detected objects + number of correctly detected "no" objects)/total instances), evaluation in the lab showed an accuracy of 91.67%, 94.52% and 86.27% for sharks, stingrays, and surfers, respectively, using the testing dataset.
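The accuracy calculation and the dataset split can be written out as a short sketch. The subset sizes are those reported above; the counts passed to `accuracy` in the example are made up purely to show the arithmetic and are not results from the study.

```python
# Subset sizes as reported in the text.
SPLIT = {"training": 6915, "validation": 1730, "evaluation": 2160}
TOTAL_IMAGES = sum(SPLIT.values())


def accuracy(true_positives: int, true_negatives: int, total: int) -> float:
    """(correctly detected objects + correctly detected "no" objects) / total instances."""
    if total <= 0:
        raise ValueError("total must be positive")
    return (true_positives + true_negatives) / total


def split_fractions(split):
    """Share of the whole dataset held by each subset."""
    whole = sum(split.values())
    return {name: count / whole for name, count in split.items()}


# Illustrative counts only (not from the study): 40 hits, 50 correct rejections, 100 cases.
example = accuracy(40, 50, 100)
fractions = split_fractions(SPLIT)
```

The three subsets sum to 10,805 images, which corresponds to a split of roughly 64% training, 16% validation, and 20% evaluation.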

The authors acknowledge the limitations of the algorithm in the context of deployment for shark spotting. The utility of the training and testing datasets was directly linked to the conditions seen during the recording of the subject at hand: whether shark, ray, or surfer. This resulting environment and activity during the sighting, including weather, wave activity, glare (time of day), animal depth, etc., will all have an impact on the ability and accuracy of the resulting algorithm to detect a subject in different conditions. For the footage used for the algorithm training, the conditions would be described as average and mild for periods of the Australian summer in the Illawarra region, ranging from overcast to partially cloudy.

On reflection, developing the detection algorithm for sharks, stingrays and surfers proved more challenging than for conventional objects for a number of reasons. First, these objects are typically underwater, causing significant deformation of their outlines that makes defining borders difficult. Second, the colour of the objects can vary with depth in the water, meaning the algorithm must be able to distinguish an object under multiple conditions. Third, when collecting video from aerial platforms, the objects can be too small to use due to the distance from the camera. Fourth, it can be challenging to collect enough footage to effectively train a deep neural network with accuracy and precision (e.g., footage of sharks at Kiama), and sharks are too scarce to validate a trained algorithm reliably (hence the need to deploy shark analogues for testing). With access to further training data and access to sharks in the wild, in a variety of environmental conditions, we anticipate that the accuracy can be greatly improved.

3.2. Design and Testing the Smartwatch User Interface

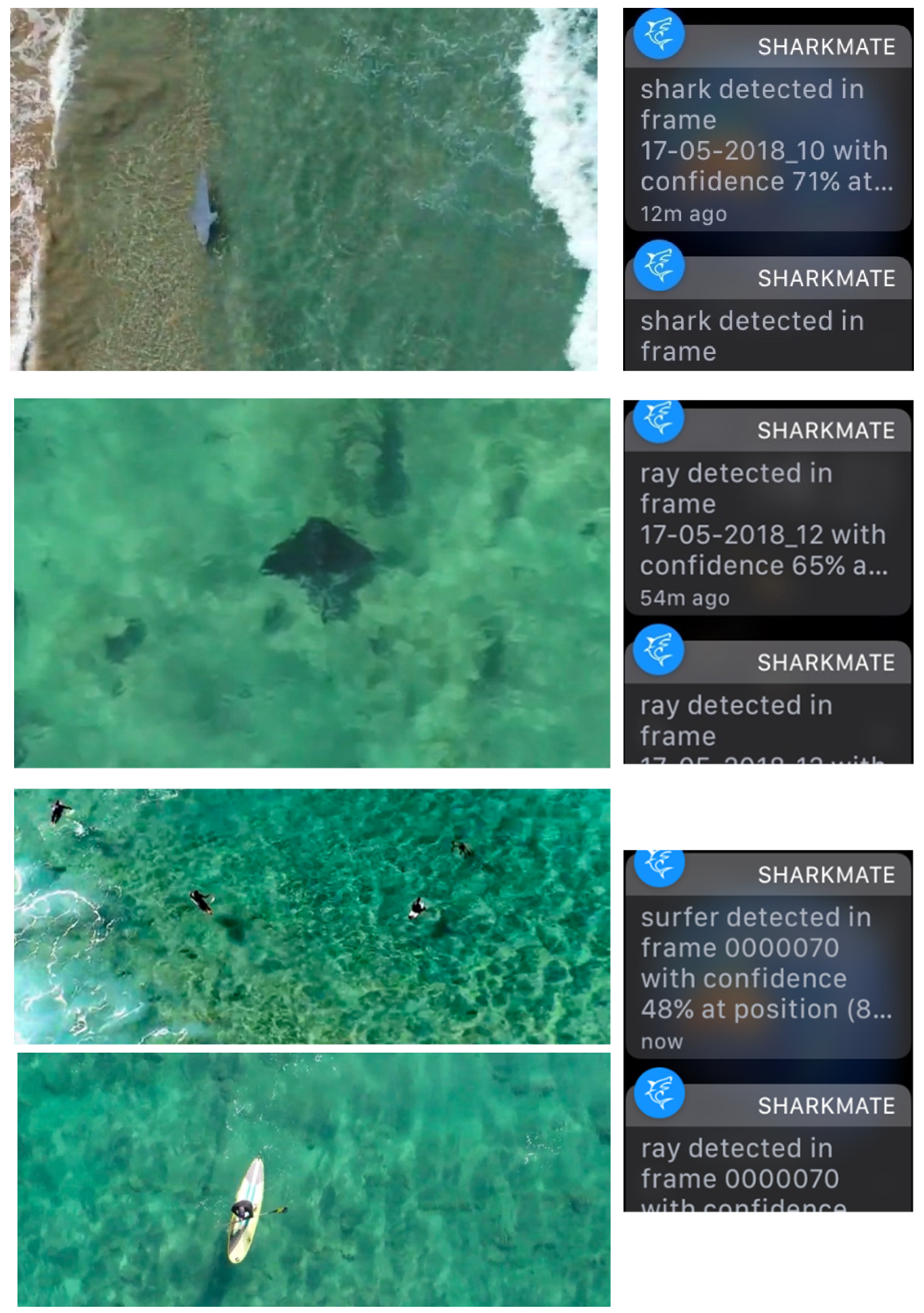

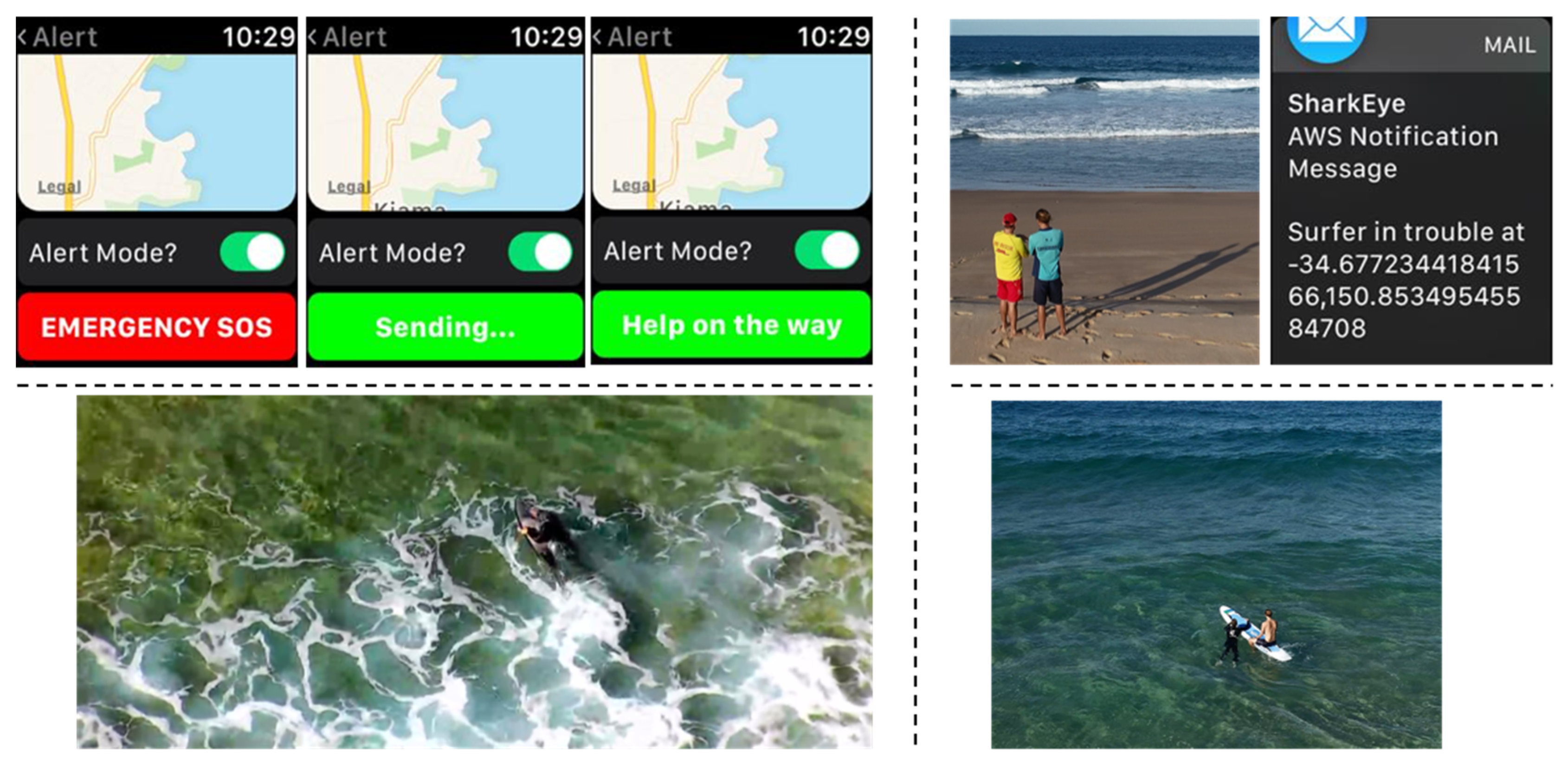

The user interface and design of the watch app was identified as a crucial element of the Sharkeye system. Users in the water have limited ability to control the watch due to moisture on the glass when in the surf. Because of this, the UI and experience were designed to be accessible and responsive in an aquatic environment. The smartwatch app was set up to receive the message that a shark has been spotted and that the user should exit the water. This was presented in the form of attention-grabbing actions across three senses (sight, sound, and feel) that were performed by the watch. Besides a visual notification, the speakers in the watch also produced a sound. Vibration was also utilised to further ensure that someone wearing the watch was alerted to the potential danger. Additional alerts were simultaneously sent to the smartphone (examples of alerts are seen in Figure 5).

Overall connectivity of new wearables was an important factor in app development. The Apple Watch used in the demonstration was one of the only smartwatches that was waterproof and also boasted GPS and cellular capabilities at the time of demonstration; this ultimately allowed for use in the surf and the required long distance connectivity for the system to receive alerts from the cloud. However, there were difficulties in translating the iPhone app to the Apple Watch app due to the network limitations with the wearable; communication with the database is sometimes limited when the user is not connected to an internet source or SIM card. The user interface is completely different on the two devices due to the different dimensions that required additional development in creating the UI. Certainly, the potential of the system is not limited to Apple products and other wearables and operating systems could be used in the future.

3.3. Real-Time Aerial Shark Detection and Alerting

During the deployment trial period, 373 detection events were recorded. A detection event was defined as an automatic alert from the system onto the smartwatch and/or smartphone, which was then validated by a researcher against the truth/falsity of the AI detection. These alerts occurred with a latency of less than 1 min from image collection to notification. The detection events could be broken down into detected sharks, rays, or surfers (as seen in Figure 6). To analyse the events, we examined whether there was an actual shark analogue, ray or surfer being imaged and also detected by the algorithm. The output of the detection event was manually annotated as falling into one of the categories (shark analogue, ray, surfer) as the ground truth. The data was then run through a classifier, and we compared the ground truth for both raw counts and for binary presence/absence classification.

This arrangement was successfully able to detect shark analogues, surfers, and rays with an accuracy of 68.7% when considering a simple no presence of an animal versus presence of an animal. While a suitable accuracy for a demonstration, our testing showed ample room for improvement. The system had trouble distinguishing between sharks, rays and humans with perfect confidence and most often mis-classified objects as rays. Further, at times, artefacts causing false positives were observed; the system thought shadows of waves were animals, or shadows from the blimp or drone itself. However, from the context of a proof of principle, once trained the algorithm performed sufficiently well in a different situation than it had been taught and effectively picked up objects darker than surroundings.

The reduction in accuracy between the laboratory evaluation and the field data could be due in part to the differing conditions seen between the footage collection periods. The demonstration took place in autumn, 5 months after the training data was collected in the summer. Further, while the weather was somewhat comparable, mild and partially sunny, the swell was notably bigger during the testing period. With further training, especially using more footage from on-beach locations in varied conditions, the algorithm would be improved for both the ability to pick up real objects and the ability to distinguish between them. Further work in the post-image capture analysis could also improve the system significantly, particularly methodologies that evaluate the detection in context. For instance, techniques like those used by Hodgson et al. [49] and replicated by other groups [19], where the observations are related to parameters like sea visibility, glare and glitter, and sea state, would not only help to understand the accuracy, but could also potentially be used to further improve the detection algorithm.

In terms of differences between aerial platforms, there was little difference in image quality between the blimp-mounted camera and the quadcopter drone camera. The ground station used over the last few seasons at the beach had an effect on the image quality and had some reception issues, highlighting the need to consider a casing for this equipment to prevent corrosion from the sea air. The area of coverage is dependent on the height of the blimp (restricted to 120 m or 400 ft). We deployed the blimp at 70 m to provide complete coverage of a beach that is 270 m in length to a distance of 250 m offshore. This ensures both the flagged swimming area and the surf banks were covered by the blimp. On longer beaches, the blimp may be deployed higher (to a limit of 120 m) to achieve a larger area of coverage. However, this may reduce the accuracy of observers/algorithms, so the area of coverage and accuracy would need to be assessed and optimized.

3.4. Platform for Additional Lifesaving Technology

In addition to the notification system of sharks and rays to surfers and swimmers, our team was able to use the smartwatch app to provide a secondary emergency notification system. In an emergency, surfers and swimmers who need help could use the watch app to send a notification to life savers with the fact they need help as well as the location reported by the watch app. To achieve this, we added a simple web API that the smartwatch app could call, which would then pass on the details via email. We integrated this functionality to the SharkMate app on the smartwatch by adding a map and alert button to an alert section in the watch app UI to send out that alert using the web API. The alerting system is shown in

Figure 7, followed by the response email as displayed on a lifesaver’s watch shown below. To demonstrate the system, two tests were carried out—one with a surfer in trouble and another with a swimmer in trouble—ending with a mock rescue. The demonstration proved the viability of using the platform for in-water emergencies, including potential use during a shark interaction.
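The relay step described above (watch app calls a web API, which forwards the details by email) can be sketched as follows. This is a minimal illustration only: the JSON field names (`lat`, `lon`, `kind`), the function name, and the message wording are hypothetical and do not reflect the actual SharkMate API.

```python
import json
from email.message import EmailMessage


def build_alert_email(payload_json: str, sender: str, recipient: str) -> EmailMessage:
    """Turn a JSON help request from the watch app into an email for lifesavers.

    The payload schema used here is an assumption made for illustration.
    """
    payload = json.loads(payload_json)
    lat = float(payload["lat"])
    lon = float(payload["lon"])
    # Reject impossible coordinates before alerting anyone.
    if not (-90.0 <= lat <= 90.0 and -180.0 <= lon <= 180.0):
        raise ValueError("coordinates out of range")
    kind = payload.get("kind", "unknown")  # e.g. "surfer" or "swimmer"

    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = f"Help requested: {kind}"
    msg.set_content(
        f"A {kind} has requested help.\n"
        f"Reported location: {lat:.5f}, {lon:.5f}\n"
    )
    return msg
```

The returned message could then be handed to the standard library's `smtplib.SMTP.send_message` by whatever HTTP handler receives the request; the mail server configuration is deployment-specific and is left out of the sketch.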

3.5. Overcoming Deadzones—Creating an On-Demand IoT Network

The emergence of the Internet of Things (IoT) has led to new types of wireless data communication, offering long-range, low-power and low-bandwidth connectivity. These technologies use unlicensed radio frequencies to communicate. With these new protocols, devices can last for years on a single battery, while communicating over distances of more than 100 km. A major open source initiative in this space is The Things Network, which offers a fully distributed IoT data infrastructure based on LoRa (short for Long Range) technology [

50]. The University of Wollongong and the SMART Infrastructure Facility have deployed multiple gateways offering free access to The Things Network infrastructure in the Illawarra area [

51]. An advantage of this infrastructure is that the network can be easily expanded by connecting new gateways, increasing the coverage of the radio network. Ultimately, such networks could be used to power and collect data from sensors and other instruments across local beaches.

The use of the blimp allowed us to show the possibility of extending the network even over the ocean by integrating a nano-gateway. The nano-gateway, designed by the SMART IoT hub, is composed of a LoRa gateway module (RAK831) coupled to a Raspberry Pi Zero. The LoRa gateway module demodulates LoRa signals received from sensors, while the Raspberry Pi Zero uses 4G connectivity to push data to the cloud. The nano-gateway is battery-powered, which makes it possible to expand the network to isolated areas easily and at very low cost. Embedded in the blimp, the gateway offers radio coverage over a large area. To map the signal, we used a mobile device that sends messages containing GPS coordinates through the LoRa network. When the gateway receives those signals, metadata can be extracted from the message, so that the position of the sensor and the strength of the signal can be displayed on a map. Positions are displayed live on the website

https://ttnmapper.org/, which is a crowd-sourced coverage map of The Things Network [

52]. The mobile device was taken into the sea by a lifeguard on a paddleboard to test the coverage of the nano-gateway. As shown in the experiments in

Figure 8, locations were reported even at a distance of 1 km, which was the extent of the experiment. For beach surveillance, the demonstration shows the potential to quickly deploy an autonomous solution where a conventional internet connection is not available.
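The coverage test described above pairs each reported GPS position with the signal strength seen at the gateway. A minimal sketch of that pairing is given below, using the haversine formula for distance. The message fields (`lat`, `lon`, `rssi_dbm`) and function names are hypothetical, chosen for illustration; they are not the payload format of The Things Network or TTN Mapper.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def coverage_points(gateway: tuple, messages: list) -> list:
    """Pair each received message with its distance from the gateway.

    `gateway` is (lat, lon); each message is a dict with the assumed keys
    "lat", "lon" and "rssi_dbm". Results are sorted by distance.
    """
    points = []
    for m in messages:
        d = haversine_m(gateway[0], gateway[1], m["lat"], m["lon"])
        points.append({"distance_m": d, "rssi_dbm": m["rssi_dbm"]})
    return sorted(points, key=lambda p: p["distance_m"])
```

Sorting by distance makes it straightforward to read off the farthest point at which a message was still received, which corresponds to the 1 km extent reported in the experiment.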

3.6. Considerations for Future Deployment

The system has the potential to improve on the more traditional approach to aerial surveillance, which requires operators to analyse images and, more critically, requires lifeguards to audibly alert beachgoers. Conceptualizing future deployment to a large number of beaches, we would recommend streamlining the hardware on the beach with a view to minimizing setup requirements and cost. Critically, we would explore where it makes sense to process data “at the edge” (on the beach), sending only essential data or notifications via the cloud, given the bandwidth constraints (which may vary from beach to beach) as well as operating costs.
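One way to picture the edge-processing idea is a small filter running on the beach that inspects per-frame detections locally and sends only a compact notification once a sighting persists. The sketch below is an illustration under assumed values: the class name, the confidence threshold, and the frame window are hypothetical and are not parameters of the Sharkeye system.

```python
from collections import deque


class EdgeNotifier:
    """Emit one small notification when a shark detection persists.

    All thresholds are illustrative assumptions, not tuned values.
    """

    def __init__(self, min_confidence: float = 0.8, window: int = 5, min_hits: int = 3):
        self.min_confidence = min_confidence
        self.min_hits = min_hits
        self.recent = deque(maxlen=window)  # hit/miss flags for recent frames
        self.alert_active = False

    def process_frame(self, detections):
        """`detections` is a list of (label, confidence) pairs for one frame.

        Returns a notification dict to send to the cloud, or None.
        """
        hit = any(label == "shark" and conf >= self.min_confidence
                  for label, conf in detections)
        self.recent.append(hit)
        confirmed = sum(self.recent) >= self.min_hits
        if confirmed and not self.alert_active:
            self.alert_active = True
            return {"event": "shark_sighted"}
        if not confirmed:
            # Re-arm so that a later sighting triggers a new notification.
            self.alert_active = False
        return None
```

With a filter of this kind, the video stream stays on the beach and only a few bytes per confirmed sighting cross the network, which is the sort of saving that would matter on beaches with limited bandwidth.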

To expand the algorithm for other beaches and scenarios, more video footage would need to be collected, under a wide range of weather and beach conditions, and used to train the algorithm. Further research on the network architecture is needed so that it can deal with varied conditions and improve the detection accuracy. Generally, the network and training procedure can be easily and efficiently scaled to multiple objects; we demonstrated the use of detection for sharks, rays, and surfers simultaneously. Scaling would again require sufficient video footage to train the algorithm, and deployment to multiple sites would require more cloud processing resources. The system is also algorithm “agnostic”, i.e., capable of hosting new independent algorithms developed by external groups.

With regard to the coverage of the Sharkeye system and the alerting potential of the SharkMate app, future work could include expanding the set of beaches covered by the likelihood score (currently, there are approximately 100 beaches, predominantly in NSW, Australia) and further research into specific risk factors. As a proof of principle, in its current form, the SharkMate app has both provided a platform for users to develop a greater understanding of the local risk factors contributing to shark incidents and acted as a means to broadcast real-time shark sightings by the blimp. Expansion of the work may see the introduction of machine learning into the risk models, as it has proved successful in the other elements of this project. Further, as wearable technology continues to improve and its capabilities extend, there is potential to enhance the communication between lifeguards and beachgoers through a range of mediums other than audio/text alerts. Improvements in connectivity could allow lifeguards to communicate at a significant distance through video and audio in real-time through wearables, a possible enhancement for the SharkMate app. Such improvements extend beyond shark incident mitigation into other aspects of beach safety.

The alerting aspect of the Sharkeye platform could also integrate into other existing or future apps utilized for shark monitoring. The authors would like to add a word of caution that users should never depend solely on an app, SharkMate or otherwise, as the basis for deciding on the risk of being in the water. Technology will never replace common sense, particularly in areas that sharks are known to frequent and especially when detection conditions are poor.

Although tested and trained using footage from a novel blimp-based aerial platform, the system is cross-compatible with any video-based aerial surveillance method and is potentially the only automated system proven on both blimps and drones. Given the advantages and shortcomings of these various surveillance methods for shark safety applications [

14,

36], it is likely that a mosaic approach will be most effective, whereby blimps and balloons, drones and traditional aircraft are used alongside each other to provide appropriate coverage. Therefore, by designing the system to be compatible with any method of image capture, we allow for increased uptake potential and a broader pool of application.

4. Conclusions

Through limited training (via self-collected footage), we were able to develop a detection algorithm that could identify several distinct objects (sharks, rays, surfers, paddleboarders) and report to various smart devices. However, as described in the discussion, with more training and optimization, the prediction accuracy of the algorithm could be significantly improved. There is also the possibility to expand to a host of other beach- and water-based objects. With the basis of the system in place, the algorithm could be expanded to look at a variety of relevant aspects of shark management, including rapid identification of various species, behavioral patterns for specific shark species, and interspecies relationships (e.g., following bait balls), potentially offering new ways of identifying (and ultimately preventing) what triggers attacks on humans.

The Sharkeye system brings Industry 4.0 to shark spotting and sits at the flourishing nexus of drones, sensors, the Internet of Things (IoT), AI, edge and cloud computing. However, our system has the potential to go well beyond looking at specific animals; it can be used as an effective tool for identifying other threats to assist in beach management. Further data collection and algorithm development could enable a system that could monitor beach usage and safety (such as swimmers in trouble), identify rips and other conditions, and then warn beachgoers, as well as monitor pollution, water and air quality, all the while simultaneously offering a platform for real-time alerting for the presence of sharks. We believe widespread deployment is truly feasible if the platform provides a range of enhancements for public safety and integrates as a tool for lifeguards/lifesavers, councils, and other stakeholders alike. This strategy would reduce investment risk and provide greater returns than resourcing various discrete, piecemeal shark-specific technologies at individual beaches. We strongly caution that although automation shows promise for providing safer beaches, it should be seen as a tool for lifeguards/lifesavers rather than as a replacement. If a shark is sighted from the blimp, professionals must be notified via smartwatch alerts to verify and respond. We would also like to see detection algorithms tested in continuous and varied real-life conditions to verify their reliability; this is essential to ensure that the public has confidence in an automated system that would work with human observers.