Abstract

The current study sets out to develop an empirical line method (ELM) radiometric calibration framework for the reduction of atmospheric contributions in unmanned aerial systems (UAS) imagery and for the production of scaled remote sensing reflectance imagery. Using a MicaSense RedEdge camera flown on a custom-built octocopter, the research reported herein finds that atmospheric contributions have an important impact on UAS imagery. Data collected over the Lower Pearl River Estuary in Mississippi during five week-long missions covering a wide range of environmental conditions were used to develop and test an ELM radiometric calibration framework designed for the reduction of atmospheric contributions from UAS imagery in studies with limited site accessibility or data acquisition time constraints. The ELM radiometric calibration framework was developed specifically for water-based operations and the efficacy of using generalized study area calibration equations averaged across variable illumination and atmospheric conditions was assessed. The framework was effective in reducing atmospheric and other external contributions in UAS imagery. Unique to the proposed radiometric calibration framework is the radiance-to-reflectance conversion conducted externally from the calibration equations which allows for the normalization of illumination independent from the time of UAS image acquisition and from the time of calibration equation development. While image-by-image calibrations are still preferred for high accuracy applications, this paper provides an ELM radiometric calibration framework that can be used as a time-effective calibration technique to reduce errors in UAS imagery in situations with limited site accessibility or data acquisition constraints.

1. Introduction

Unmanned aerial systems (UAS) offer a new era of local-scale environmental monitoring where, in certain applications, UAS provide a cost-effective and timely approach for collection of environmental data [1,2,3]. UAS can be configured with a variety of multispectral and hyperspectral imaging sensors that can be flown at flexible temporal frequencies producing imagery with very high spatial resolution [4]. This provides the opportunity to vastly expand our ability to detect and measure the impacts of hazards [5,6,7,8,9,10,11], monitor environmental conditions [12,13], track species propagation [14,15], manage resources [16,17,18,19,20], monitor changes in surface conditions [21,22,23,24,25,26], and cross-validate existing platforms [27,28]. UAS offer the opportunity to collect data during various environmental conditions and during inclement weather conditions in a rapid response configuration where there is valuable data to be collected, such as the extent of flooding [29,30]; however, these new opportunities bring new challenges. For example, UAS data collection will occur at different flight altitudes, throughout different times of day, and under various environmental conditions which will have important impacts on the UAS remotely sensed imagery [31,32,33]. It is, therefore, important to understand how atmospheric contributions to UAS imagery vary with changes in UAS flight configuration, and to develop an effective approach to obtain scaled remote sensing reflectance data from UAS imagery.

The flight altitudes of small UAS (sUAS) typically do not exceed 250 m due to governmental restrictions on UAS operations [34]. As a result, numerous UAS studies have implemented an empirical line method (ELM) for radiometric calibration of UAS imagery [35,36,37,38,39,40,41,42,43,44,45,46,47]. The foundation of an ELM is the regression of ground-based spectroradiometer measurements and airborne remotely sensed measurements of a spectrally stable calibration target to develop calibration equations that convert image digital numbers (DNs) to physical at-surface reflectance units [48]. Due to the nonlinearity observed between consumer-grade digital cameras and surface reflectance [44], UAS radiometric calibrations may require more than two calibration targets to be present in the imagery to address any nonlinearity of the sensor [4]. For example, using nine near-Lambertian calibration targets, Wang and Myint [40] found an exponential relationship between their consumer-grade sensor-recorded digital numbers (DNs) and ground-based spectroradiometer reflectance. Following the simplified ELM radiometric calibration framework recommended by Wang and Myint [40], Mafanya et al. [45] found that using a calibration target with six grey levels could quantify this nonlinear relationship and produced an accurate image calibration.

Some requirements that must be met when selecting calibration targets include: (1) the target should be large enough to fill the sensor’s instantaneous field of view (IFOV) and to minimize adjacency effects; (2) the target should be orthogonal to the sensor; (3) the target should have Lambertian reflectance properties; and (4) the target should be free from vegetation [49]. Chemically-treated and laboratory-calibrated tarps make for great calibration targets for applications using the ELM [50]; however, these can be impractical depending on the size of the sensor’s IFOV. Some studies have relied on targets such as black asphalt and concrete for use with remotely-sensed satellite and manned aircraft systems [48,51]. There are problems with using these man-made surfaces because of the lack of control that may translate into noise in the data, such as light-coloured compounds in certain asphalts [48]. For UAS applications, the size of the calibration tarp should not be an issue because of the high image spatial resolution; rather, recent studies have prioritized the cost of the calibration tarp construction because the benefit of using UAS in the first place was the relatively low operating costs to implement a remote sensing system with high spatial resolution and flexible sampling frequency [40,45]. To develop a more cost-effective calibration target, Wang and Myint [40] tested 10 different materials for use as calibration targets and found that Masonite hardboard painted with a nine-level grey gradient worked best. However, it is impractical to use Masonite hardboard in UAS operations based over water or in complex terrain where the panel integrity could be compromised during transportation and deployment in unforgiving workspace conditions.

Wang and Myint [40] demonstrated the efficacy of a simplified ELM radiometric calibration framework to calibrate UAS imagery on an image-by-image approach, where a calibration target is required in each image prior to the mosaicking of the images to produce the final multi-band orthomosaic; however, this approach is not feasible for relatively large-scale studies (> 90 ha) due to the high spatial resolution and relatively small footprint of UAS images [52]. Instead, Mafanya et al. [45] proposed the potential for calibrating a large uniform area with calibration equations derived from a single point. While their proposed large-scale simplified ELM radiometric calibration presents a cost-effective and time-efficient framework for the conversion of UAS DNs to reflectance units, Mafanya et al. [45] mention that there is a need to test this method on larger areas. Furthermore, the framework developed by Mafanya et al. [45] does not address the unique needs of water-based UAS image collection missions where there is a need for a waterproof calibration target that can be stowed within a small compartment and rapidly deployed orthogonally to the airborne sensor in a limited space (e.g., top of the boat canopy).

There has been a considerable increase in the adoption of UAS in a variety of academic and industry operations. Hence, there is a need to better define the implications of atmospheric contributions on UAS imagery and a need to work toward a universal radiometric calibration framework for the reduction of atmospheric contributions in UAS imagery. This study takes a qualitative approach to assess the magnitude of atmospheric contributions on UAS-derived imagery at multiple UAS flight altitudes and provides an ELM radiometric calibration framework focused on the reduction of atmospheric contributions in UAS imagery collected in complex sampling environments requiring rapid deployment and processing of imagery. As such, the objective of the current study is to develop a radiometric calibration framework for the reduction of atmospheric contributions and obtain scaled remote sensing reflectance data from UAS imagery collected in complex and limited sampling environments.

2. Materials and Methods

2.1. Calibration Tarp

A portable fabric calibration target was constructed to provide a spectrally consistent target in the UAS imagery that could be deployed in both land and water operations. Three spectrally distinct fabric panels were used to balance the amount of equipment carried to the sample sites against the need to quantify any non-linearity in the UAS-spectroradiometer relationship. The darkest panel had a reflectivity of 6%, the medium panel 22%, and the lightest panel 44%; the lightest value was kept at 44% to avoid overexposure of the sensor. Considerations while constructing the target included:

- The targets should be large enough to fill the IFOV of multiple sensors and to minimize adjacency effects, yet small enough to be portable and used on a boat.

- The targets should lie orthogonal to the sensor.

- The targets should have near-Lambertian reflectance properties.

- The targets should be durable and washable.

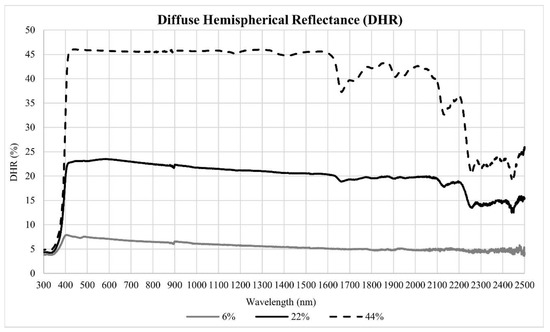

The final calibration target was composed of three 60.96 cm × 1.22 m grey strips of coated Type 822 fabric sewn together using a 2.54 cm overlapping seam and a 5.08 cm folded hem around the perimeter. The Type 822 fabric (Group 8 Technology, Inc., Provo, UT) is a durable, high-strength woven polyester fabric with an Oxford weave that provides near-Lambertian reflectance properties. The coating of the panels was a pigmented acrylic/silicone polymer that was neutral in hue and devoid of spectral content from 420 to 1600 nm. The targets were laboratory-calibrated using a Perkin-Elmer 1050 Spectrophotometer with an integrating sphere so that the band average diffuse hemispherical reflectivity (DHR) of the individual fabric webs was ~6%, ~22%, and ~44% (R ± 0.05R) (Figure 1; Table 1).

Figure 1.

Laboratory-measured diffuse hemispherical reflectance (DHR) from 300–2500 nm for the 6%, 22%, and the 44% calibration reference panels.

Table 1.

Laboratory-measured band average reflectance for each calibration reference panel.

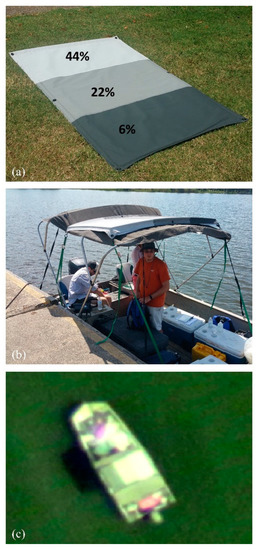

The panels were sewn onto a larger 1.22 m × 1.83 m piece of uncoated Type 822 fabric. This backing piece included a pouch with a Velcro opening and six size-four reinforced clawgrip grommets. The grommets allowed the tarp to be securely affixed to a boat for water operations. The pouch held an insert (e.g., plywood or other rigid material) so that the calibration target would lie flat on the ground (Figure 2a) or on the top of a boat canopy (Figure 2b). The greatest care was taken to prevent creasing while deploying the calibration target.

Figure 2.

Constructed calibration targets deployed on land during North Farm experiments (a) and on a boat canopy during Lower Pearl River missions (b). Tie downs are loosened during flights in the Lower Pearl River study area to allow the calibration reference panels to lie orthogonal to unmanned aerial systems (UAS) when deployed on top of boat canopy (c). The darkest panel is 6% reflectance, the medium panel is 22% reflectance, and the lightest panel is 44% reflectance.

2.2. Study Site Configurations

2.2.1. Mississippi State University North Farm

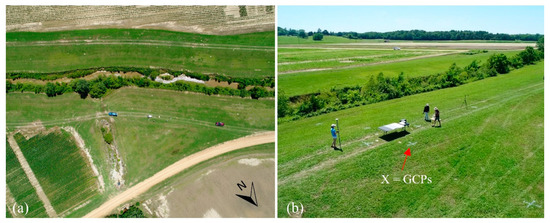

An experiment to quantify the magnitude of atmospheric contributions to UAS imagery at various flight altitudes was conducted at the R. R. Foil Plant Science Research Center (North Farm) on the campus of Mississippi State University (Figure 3). Research teams routinely fly here for precision agriculture applications, and a certificate of authorization (CoA) allowed for an operating altitude of 244 m. A preliminary flight to identify best practices (North Farm 1.0) was conducted. In this flight, it was determined that the calibration reference panels should be placed on a dark table to create a buffer between the tarp and vegetation pixels and to ensure the fabric tarp could be pulled tight. It was also determined that 30-meter flight altitude intervals would provide a balance between battery power and the loiter time required at each altitude to collect 30 images of the reference target from each viewing angle. Images were collected from seven viewing angles (one set at nadir followed by six additional locations distributed regularly around the calibration target) while maintaining a northward orientation, which allowed for the assessment of the Lambertian properties of the constructed calibration tarp. Conditions with minimal horizontal and vertical winds are preferred to limit the amount of battery required to move between flight altitudes.

Figure 3.

(a) R. R. Foil Plant Science Research Center (North Farm) study area on the campus of Mississippi State University. (b) View of the North Farm 2.0 research site configuration where Xs represent the eight ground control points (GCPs).

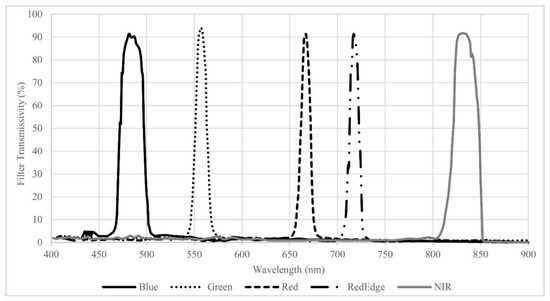

North Farm 2.0 (NF2) was conducted in May 2017 during clear sky conditions with light, variable winds. The UAS flown in the North Farm 2.0 experiment was an X8 octocopter carrying a five-band MicaSense RedEdge® (MicaSense Inc., Seattle, WA, USA) multi-spectral imager with a horizontal resolution of 8 cm when flown 122 m above ground level (AGL) and a footprint of 1280 × 960 pixels (Figure 4, Table 2). The MicaSense camera settings were left fully automatic; however, the imagery was radiometrically calibrated and normalized for exposure time and gain following a customized MicaSense RedEdge image processing workflow, based on MicaSense image processing scripts, that is explained further in Section 2.4. The experiment was carried out over a one-hour period from 13:45–14:45 local time (LT). A Windsonde UAS meteorological sensor flown on the UAS recorded consistent relative humidity values of about 45% throughout the flight column.

Figure 4.

MicaSense RedEdge laboratory-measured spectral response functions.

Table 2.

Specifications of the components making up the Unmanned Aerial Systems (UAS) flown during the North Farm experiment and during the Lower Pearl River missions.

2.2.2. Lower Pearl River

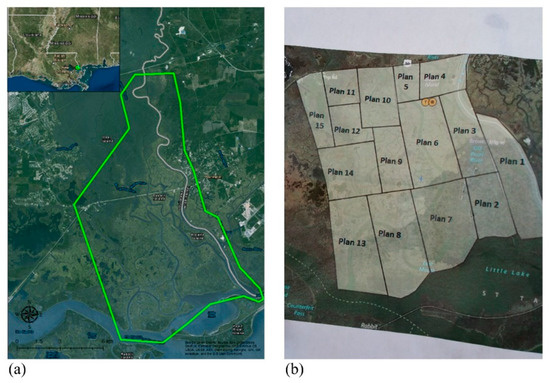

UAS imagery collected over the Lower Pearl River was used to develop and test the simplified ELM radiometric calibration framework. The Lower Pearl River study area spanned a 90 km2 area over the Lower Pearl River Estuary southeast of Slidell, Louisiana (Figure 5a). The region is a complex coastal estuary with a mix of low marsh grasses, braided streams, dense forests, man-made structures, and prominent aquatic vegetation. The area is subject to high humidity and sometimes strong sea breeze, often initiating sporadic precipitation events during the warm season. The flight range was limited by the Federal Aviation Administration (FAA) line of sight requirements in place at the time of the study. The changing vegetation, from marsh grass in the southern portion to dense forest in the northern portion, limited the northern extent of flight coverage because line of sight with the UAS became a challenge as vegetation converted to a forested landscape. Accessibility challenges throughout the Lower Pearl River also made it impossible to capture the calibration reference panels in every flight mosaic. Additionally, the north-south orientation of the river network limited the east-west extent of flight coverage to keep the UAS within sight from a boat in the river.

Figure 5.

(a) Lower Pearl River Estuary study area and (b) image of the flight plans conducted during the August 2015 mission. Flight lines were determined on a per-flight basis to account for wind conditions to optimize flight time; long flight lines were typically preferred. For example, Plan 8 would typically fly in a north-northwest to south-southeast flight line orientation.

The Lower Pearl River UAS was composed of the Nova Block 3 fixed-wing unmanned aerial vehicle (UAV) flying with a modified Canon EOS Rebel SL1 DSLR camera (Figure 6, Table 2). The Nova Block 3 was capable of flying for 80 minutes on a single battery charge. The Canon EOS Rebel SL1 DSLR collected data in three spectral wavebands at a 2.62 cm spatial resolution when flown 122 m above ground level (AGL), with a 24-bit depth and a footprint of 5184 × 3456 pixels. Only imagery from the August 2015 and December 2015 Lower Pearl River missions was used to develop the study area calibration equations because the calibration reference panels were not constructed until July 2015. Camera settings manually configured for the August 2015 mission were an exposure time of 1/4000 s, an exposure bias of −0.7 step, and a focal length of 20 mm. ISO and f-stop were not manually configured and varied across the sampled area. Camera settings for the December 2015 mission were an exposure time of 1/4000 s, an exposure bias of −1.0 step, and a focal length of 20 mm. Again, ISO and f-stop were not manually configured and varied across the sampled area. It is important to note that the variations in camera settings across the scene were normalized during the flight mosaicking process explained in Section 2.4.

Figure 6.

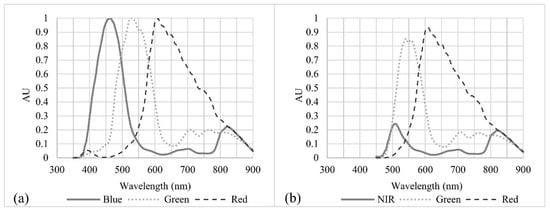

Spectral response functions of (a) the original Canon DSLR camera without the yellow filter and (b) the Canon DSLR camera with the Wratten #12 yellow filter. The modified Canon DSLR (b) was flown on the UAS during Lower Pearl River missions.

The original camera collected data from 300–1000 nm (Figure 6a); however, the sensor was retrofitted with a Wratten #12 yellow filter that blocks wavelengths less than 450 nm (Figure 6b). The purpose of this filter was to convert the blue sensor to a surrogate near-infrared (NIR) sensor. By blocking the response at blue visible wavelengths, the camera was converted to a green-red-NIR colour-infrared (CIR) camera; however, the resulting NIR spectral band had a rather noisy and weak signature.

2.3. Image Collection Procedures

2.3.1. Atmospheric Contribution Experiment (North Farm)

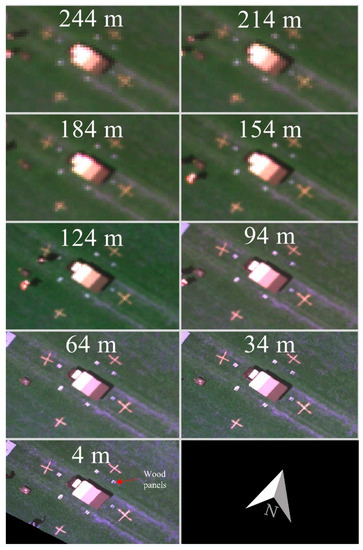

Ground control points (GCPs) were collected using a handheld differential GPS unit (Trimble Geo-7x) at eight locations, marked as “X” in Figure 3b and Figure 7, and postprocessed to obtain a 1 cm horizontal accuracy. This accuracy allowed for both georeferencing of the imagery and the creation of polygons over the calibration reference panels, which were used to extract the panel image values of the calibration tarp in each image. Flights were conducted from 4 to 244 m above ground level (AGL) at 30-meter intervals with a constant north heading. A minimum of 30 images of the three greyscale (6%, 22%, 44%) spectrally homogeneous reference panels were collected at seven viewing angles, one set at nadir followed by six additional locations distributed regularly around the calibration reference panels, to assess the Lambertian properties of the calibration tarp.

Figure 7.

Example MicaSense RedEdge true color composite images from each flight altitude during the North Farm experiments. During image collection, the UAS heading stayed fixed facing toward the north. A minimum of 30 images were collected at seven viewing angles, one set at nadir then six additional sets while hovering over numbered wood panels distributed evenly around the tarp.

2.3.2. Radiometric Calibration Framework Development (Lower Pearl River)

Flight data collection began in December 2014 with follow-up missions at a near two-month interval (Table 3). While regular operations began in December 2014, the deployment of the calibration reference panels started during the August 2015 mission. The primary goal of the UAS deployments was to image the greatest areal extent possible for the mapping of the river system to support the National Weather Service (NWS) Lower Mississippi River Forecast Center (LMRFC) operations; therefore, flight lines varied during each deployment to account for wind direction and to optimize flight time (Figure 5b). This combination of water-based operations covering a wide range of environmental conditions and variable flight characteristics made for an ideal dataset to develop and examine the performance of a simplified ELM calibration framework for the reduction of atmospheric contributions in UAS imagery and production of scaled remote sensing reflectance imagery with UAS data collected in a rapid deployment configuration with limited site accessibility. However, it is important to note that the framework was tested in a coastal estuary when sky conditions were clear, with scattered clouds, or mostly cloudy; therefore, storms and other atmospheric conditions beyond typical, non-extreme weather conditions were not included in the data.

Table 3.

Dates of the Lower Pearl River missions, sensors flown, and image resolution based on a flight altitude of 244 m. Note that the calibration reference panel deployment began during the August 2015 mission.

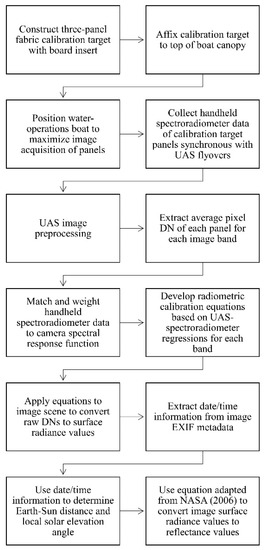

Missions lasted four to six days and imagery was collected between 08:00–16:00 LT with four to five flights per day producing eight to fifteen image acquisition instances of the calibration target per day (32–90 per mission). Regular operations involved two boats, one dedicated to flight operations and the other dedicated to water operations. The flight operations boat was tasked with the flight of the UAS and the download and storage of flight data. The water operations boat worked with the flight operations boat so that in-situ water quality information was collected each time the UAS flew directly overhead the water operations boat. The water operations boat also carried the calibration reference panels and the handheld spectroradiometer. Ground reference data of the calibration reference panels were collected in synchrony with UAS flyovers of the water operations boat using a GER 1500 spectroradiometer (Spectravista Inc., Poughkeepsie, NY, USA). Three GER 1500 spectroradiometer hyperspectral samples of each calibration reference panel were collected and averaged to produce an average spectral signature for each grey value. The workflow from field operations to the final image calibration is summarized in Figure 8.

Figure 8.

Workflow of Lower Pearl River data collection and radiometric calibration procedure of unmanned aerial system (UAS) imagery.

2.4. Image Processing

2.4.1. MicaSense RedEdge Imagery (North Farm)

The Geospatial Data Abstraction Library (GDAL) was used to stack the bands and to assign the appropriate WGS84 projection. Minor manual georeferencing corrections were made to ensure the imagery aligned with the ground control points collected by a differential GPS unit. Any images that had obvious artifacts were removed from the analysis. Rectangular shapefiles were manually created and edited to ensure only those pixels representative of the calibration reference panels would be included in the analysis. Imagery was organized by the flight altitude at which the imagery was collected using information from the onboard GPS.

The exposure time was not held constant during the experiment; therefore, as the scene footprint increased with higher flight altitudes, the MicaSense would dynamically adjust exposure settings to compensate for changes in returned signal from new targets in the scene (Table 4).

Table 4.

Average exposure times of the MicaSense RedEdge cameras used in the North Farm images collected at each altitude. Note the shift to consistent exposure settings at 124 m.

These variations were normalized by extracting exposure time and gain information from the image metadata and applying the calibration procedure to the orthomosaics as outlined in the MicaSense tutorial [53]. This procedure converted the raw pixel values to absolute spectral radiance values (W/m²/nm/sr). A slight modification was made to the MicaSense postprocessing procedure by removing vignette correction (Equation (1)) because darkening at the corners of the scene had little effect on the pixels representing the calibration tarp at the centre of the scene.

$$L = \frac{a_1}{g} \cdot \frac{p - p_{BL}}{t_e + a_2 y - a_3 t_e y} \tag{1}$$

where $p$ is the normalized raw pixel value, $p_{BL}$ is the normalized black level value, $a_1$, $a_2$, $a_3$ are the radiometric calibration coefficients, $t_e$ is the image exposure time, $g$ is the sensor gain setting, $x, y$ are the pixel column and row number, and $L$ is the spectral radiance in W/m²/nm/sr.
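As an illustration of the radiance conversion described above, the following minimal sketch applies the MicaSense-style radiometric model with the vignette term omitted. The function name, argument names, and the 16-bit normalization are assumptions made for this example; the coefficient values in the usage lines are placeholders, not the calibration coefficients used in the study.

```python
def dn_to_radiance(dn, black_level, a1, a2, a3, exposure_s, gain, row, bit_depth=16):
    """Convert one raw pixel value to spectral radiance (W/m^2/nm/sr).

    Vignette correction is deliberately left out, mirroring the modification
    described in the text. All names here are hypothetical.
    """
    scale = float(2 ** bit_depth)
    p = dn / scale                # normalized raw pixel value
    p_bl = black_level / scale    # normalized black level value
    # Row-dependent term: exposure differs slightly from row to row.
    denominator = exposure_s + a2 * row - a3 * exposure_s * row
    return (a1 / gain) * (p - p_bl) / denominator


# Placeholder coefficients, for illustration only.
radiance = dn_to_radiance(dn=20000, black_level=4800, a1=2.0e-4, a2=1.0e-7,
                          a3=2.0e-5, exposure_s=1.0e-3, gain=1.0, row=480)
print(radiance)
```

Because exposure time and gain enter the conversion explicitly, images acquired with different automatic exposure settings are placed on a common radiance scale.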

All image rasters and panel polygons were projected to an Albers equal-area conic projection (EPSG:5070). This is an imperative step because geographic information systems (GIS) require that data are assigned a relevant projected coordinate system (PCS) for the accurate implementation of spatial analysis tools. The pixel values of each panel were extracted and averaged based on the panel polygon delineation using the ArcGIS 10.3 Spatial Analyst Zonal Statistics tool. The outcome from this analysis was two distributions, one of the uncorrected digital number image values and one of the MicaSense corrected radiance image values.

To further investigate patterns in the MicaSense distributions, a bootstrap resampling technique was applied to the post-processed radiance mean values and the median of the bootstrapped mean values was plotted. This procedure increased the robustness of the sample dataset by reducing the impact of outliers on the plotted median values for each waveband.
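The bootstrap step can be sketched as follows. This is a minimal illustration using only the Python standard library; the number of resamples, the fixed seed, and the function name are assumptions, since the text does not state the settings that were used.

```python
import random
import statistics


def bootstrap_median_of_means(values, n_resamples=1000, seed=0):
    """Median of the means of bootstrap resamples drawn with replacement."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    n = len(values)
    means = []
    for _ in range(n_resamples):
        resample = [values[rng.randrange(n)] for _ in range(n)]
        means.append(statistics.fmean(resample))
    return statistics.median(means)


# Hypothetical panel-mean radiances for one waveband at one altitude,
# including a single outlier.
panel_means = [0.041, 0.043, 0.042, 0.040, 0.044, 0.090]
print(bootstrap_median_of_means(panel_means))
```

Taking the median of the resampled means, rather than a single sample mean, limits how far one anomalous image can pull the plotted value.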

2.4.2. Canon EOS Rebel SL1 DSLR Imagery (Lower Pearl River)

Flight mosaics were generated for each mission using a camera alignment technique in the Agisoft Photoscan Pro software. This technique used the orientation of the camera at the time of the image registration and common points in overlapping images to generate tie points to triangulate the camera’s position. A point cloud was generated from this alignment procedure and was examined for any anomalies. The final point cloud was used to generate a mesh on which a GeoTIFF orthomosaic was exported. Variations in camera settings were normalized across the scene in this mosaic processing. All post-processed imagery can be retrieved online (http://www.gri.msstate.edu/geoportal/).

2.5. Empirical Line Calibration

This section provides a breakdown of the method used to convert the imagery with DN values to calibrated remote sensing reflectance values. The water operations boat would regularly change location after each ground-reference spectroradiometer measurement to reposition to a location that would be orthogonal to a future UAS flight line. During each sampling session, the water operations boat documented the time and location (latitude and longitude) of the sampling site. Upon downloading the UAS imagery, the water operations boat location was manually tagged whenever it was visible in the uncorrected UAS imagery and the calibration reference panels were delineated and stored as rectangular polygons. Because the water operations boat was regularly changing location, the boat location tags included both “sampling” and “in-transit” situations. To prevent inclusion of the “in-transit” locations in the analysis, the UAS DN pixel values were only extracted and averaged across the pixels within each of the delineated calibration reference panels when the manually tagged location information of the boat matched the location information at the time of ground-reference spectroradiometer collection. Hence, the final dataset used for the regression was a series of UAS-weighted ground spectroradiometer radiance values and extracted UAS DN data pairs.

A semi-automated quality control procedure was applied to carefully remove erroneous samples. This procedure included an algorithm that scanned all samples and flagged outliers. Those outliers were then manually interrogated and either retained or discarded. To increase the robustness of the radiometric calibration equations for the study area, the remaining samples from August 2015 and December 2015 missions were combined into a single dataset.

A necessary step was to convert the ground-reference hyperspectral spectroradiometer dataset to the multispectral UAS data by applying the Canon DSLR spectral response function to the handheld GER 1500 spectroradiometer data. This was accomplished by weighting spectroradiometer data values collected during the August 2015 and December 2015 missions with the relative spectral response function of each of the bands of the CIR camera:

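$$L_{band} = \frac{\int_{\lambda_i}^{\lambda_j} L(\lambda)\, S(\lambda)\, d\lambda}{\int_{\lambda_i}^{\lambda_j} S(\lambda)\, d\lambda} \tag{2}$$

where $L_{band}$ is the radiance computed for each of the CIR spectral bands, $L(\lambda)$ is the hyperspectral radiance measured using the hand-held spectroradiometer, $\lambda_i$ and $\lambda_j$ are the lower and upper limits of the bands, and $S(\lambda)$ is the relative spectral response function of the CIR bands [54,55].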
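The spectral weighting described above can be sketched numerically as a response-weighted average of the hyperspectral radiance. The trapezoidal integration, the function names, and the sample spectra below are assumptions made for illustration; they are not the GER 1500 data or the measured Canon response functions.

```python
def trapezoid(x, y):
    """Trapezoidal-rule integral of y over x (x may be unevenly spaced)."""
    return sum(0.5 * (y[i] + y[i + 1]) * (x[i + 1] - x[i])
               for i in range(len(x) - 1))


def band_weighted_radiance(wavelengths_nm, radiance, response):
    """Weight a hyperspectral radiance spectrum by a band's relative
    spectral response and normalize by the integrated response."""
    weighted = [l * s for l, s in zip(radiance, response)]
    return trapezoid(wavelengths_nm, weighted) / trapezoid(wavelengths_nm, response)


# Hypothetical samples across a green band.
wavelengths = [500, 520, 540, 560, 580, 600]
radiance = [0.050, 0.055, 0.060, 0.058, 0.052, 0.048]
response = [0.05, 0.40, 1.00, 0.80, 0.30, 0.05]
print(band_weighted_radiance(wavelengths, radiance, response))
```

Normalizing by the integrated response keeps the band value in the same units as the input spectrum, so a spectrally flat radiance is returned unchanged.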

The limited site accessibility across the Lower Pearl River study area prevented the deployment of the calibration reference panels during every UAS image reconnaissance flight; therefore, image-by-image radiometric calibrations were not possible. While alternatives included radiometric calibrations on a diurnal basis or seasonal basis, exploratory analyses (not shown) indicated that combining data from multiple missions produced more robust calibration equations. This allowed for a single set of radiometric calibration equations to be applied to the full study area independent from sampling time, thereby decreasing the time impacts of the radiometric calibration procedure on rapid UAS deployment operations. Therefore, spectroradiometer–UAS image pairs were combined from multiple flights and conditions to develop robust calibration equations to minimize errors across all UAS missions and atmospheric conditions. Using these spectroradiometer–UAS image pairs, regressions between the UAS weighted spectroradiometer radiance values and the UAS DN values of the calibration reference panels were developed for each spectral band. It is important to note that both radiance and remote sensing reflectance values were computed from the spectroradiometer data; however, spectroradiometer radiance values were used for developing the calibration equations rather than the remote sensing reflectance in regressions so that solar illumination could be applied later to the radiance data to obtain more accurate remote sensing reflectance relative to when the image acquisition took place. Two-thirds of the available site data from August 2015 and December 2015 were randomly selected and used to develop the calibration equations. The remaining third of data was used to verify the performance of the calibration equations. The calibration equations were then applied to each associated image band across the full mission mosaics.
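The regression and hold-out procedure described above can be sketched as below. A simple linear form is assumed purely for illustration, since the functional form of the calibration equations is not stated at this point in the text; the function names and the synthetic DN and radiance pairs are hypothetical.

```python
import random


def split_pairs(pairs, train_fraction=2 / 3, seed=0):
    """Randomly split (DN, radiance) pairs into development and verification sets."""
    shuffled = list(pairs)
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


def fit_line(dn_values, radiances):
    """Ordinary least-squares fit: radiance = slope * DN + intercept."""
    n = len(dn_values)
    mean_x = sum(dn_values) / n
    mean_y = sum(radiances) / n
    sxx = sum((x - mean_x) ** 2 for x in dn_values)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(dn_values, radiances))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Synthetic pairs for one spectral band (illustrative values only).
pairs = [(dn, 0.002 * dn + 0.01) for dn in range(20, 200, 20)]
train, test = split_pairs(pairs)
slope, intercept = fit_line([dn for dn, _ in train], [rad for _, rad in train])
predicted = [slope * dn + intercept for dn, _ in test]
print(slope, intercept, predicted)
```

One such equation would be fitted per spectral band, with the held-out third used only to check the fit.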

Once the imagery was calibrated and the UAS DNs were converted to radiance, the imagery was normalized for illumination by converting from radiance to remote sensing reflectance in each waveband using the following equation adapted from NASA [56]:

$$R_{rs} = \frac{\pi\, L_{\lambda}\, d^{2}}{E_{\lambda}\, \sin\theta_{SE}} \tag{3}$$

where $L_{\lambda}$ is the spectral radiance, $d$ is the Earth–Sun distance in astronomical units, $\theta_{SE}$ is the local solar elevation angle [which is equivalent to 90° minus the local solar zenith angle ($\theta_{SZ}$)], and $E_{\lambda}$ is the average spectral global irradiance at the surface for each band based on the American Society for Testing and Materials (ASTM) G-173-03 direct normal spectra.
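The illumination normalization can be sketched as follows, assuming the familiar Landsat-style form in which radiance is scaled by π and the squared Earth–Sun distance and divided by the band irradiance times the sine of the solar elevation angle. That form, the function name, and the numeric inputs are assumptions for this example rather than values taken from the study.

```python
import math


def radiance_to_reflectance(radiance, earth_sun_distance_au,
                            solar_elevation_deg, band_irradiance):
    """Normalize band radiance for illumination (assumed Landsat-style form).

    radiance and band_irradiance must share the same spectral units
    (e.g., W/m^2/nm/sr and W/m^2/nm).
    """
    sin_elevation = math.sin(math.radians(solar_elevation_deg))
    return (math.pi * radiance * earth_sun_distance_au ** 2) / (
        band_irradiance * sin_elevation)


# Same calibrated radiance under two hypothetical sun elevations.
for elevation in (65.0, 35.0):
    print(elevation, radiance_to_reflectance(0.05, 1.0, elevation, 1.3))
```

Because the solar geometry enters only at this stage, the same radiance calibration equations can be reused for imagery acquired at any time of day or year.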

This method of conducting the radiometric calibration for obtaining radiance, then the use of average surface irradiance and the solar illumination geometry for obtaining remote sensing reflectance was selected because the solar illumination conditions in past and future UAS applications will be different than the solar illumination conditions experienced during the development of the calibration equations. For example, some constraints of the current study included: (1) UAS images were collected in some areas of the Lower Pearl River estuary that were inaccessible preventing the deployment of the calibration reference panels; (2) illumination conditions changed between UAS flights conducted at different times of the day and during different times of the year; and (3) three flight campaigns (December 2014, March 2015, and May 2015) were conducted prior to the development and deployment of the calibration reference panels. Therefore, this was the best approach for reducing errors in that previously collected imagery. Furthermore, rather than developing new calibration equations for each time of the year to account for the prevailing illumination conditions, this approach allows for the application of the calibration equations to the data from the imaging sensor throughout the year to obtain radiance first, and then use the solar irradiance of the corresponding day to produce precise remote sensing reflectance imagery.

Performance of the calibration equations was assessed using the normalized root mean square error (NRMSE), the normalized mean absolute error (NMAE), goodness of fit (R²), and a Mann–Whitney U test. The NRMSE and NMAE were calculated by dividing the root mean square error (RMSE) and the mean absolute error (MAE), respectively, by the range of the observed spectroradiometer data. This normalization facilitates the comparison of results with future datasets of different scales. The Mann–Whitney U test is a nonparametric test that assesses whether the distributions of two datasets are equal; it was therefore used to test the null hypothesis (H0) that the distributions of the predicted and observed datasets are equal.
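The verification metrics described above can be sketched in a few lines of standard-library Python. The function names are illustrative, and the Mann–Whitney U implementation shown uses a normal approximation with tie correction and no continuity correction, which is an assumption; the exact variant of the test used in the study is not specified here.

```python
import math


def range_normalized_errors(observed, predicted):
    """Return (NRMSE, NMAE): RMSE and MAE divided by the observed range."""
    if len(observed) != len(predicted) or len(observed) < 2:
        raise ValueError("need two equal-length samples of at least 2 values")
    obs_range = max(observed) - min(observed)
    if obs_range == 0:
        raise ValueError("observed data have zero range")
    residuals = [p - o for o, p in zip(observed, predicted)]
    n = len(residuals)
    rmse = math.sqrt(sum(r * r for r in residuals) / n)
    mae = sum(abs(r) for r in residuals) / n
    return rmse / obs_range, mae / obs_range


def mann_whitney_u(x, y):
    """Return (U, two-sided p) using midranks and a normal approximation."""
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y])
    n1, n2 = len(x), len(y)
    n = n1 + n2
    rank_sum_x = 0.0
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j < n and pooled[j][0] == pooled[i][0]:
            j += 1
        # Tied values share the average of ranks i+1 .. j.
        midrank = (i + 1 + j) / 2.0
        rank_sum_x += midrank * sum(1 for k in range(i, j) if pooled[k][1] == 0)
        t = j - i
        tie_term += t ** 3 - t
        i = j
    u1 = rank_sum_x - n1 * (n1 + 1) / 2.0
    u = min(u1, n1 * n2 - u1)
    mean_u = n1 * n2 / 2.0
    var_u = n1 * n2 / 12.0 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_u <= 0:
        return u, 1.0  # all values tied: no evidence of a difference
    z = (u - mean_u) / math.sqrt(var_u)
    return u, math.erfc(abs(z) / math.sqrt(2.0))
```

A non-significant p-value from `mann_whitney_u(observed, predicted)` would mean that the null hypothesis of equal distributions is not rejected, which is the desired outcome for a calibration equation.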

3. Results and Discussion

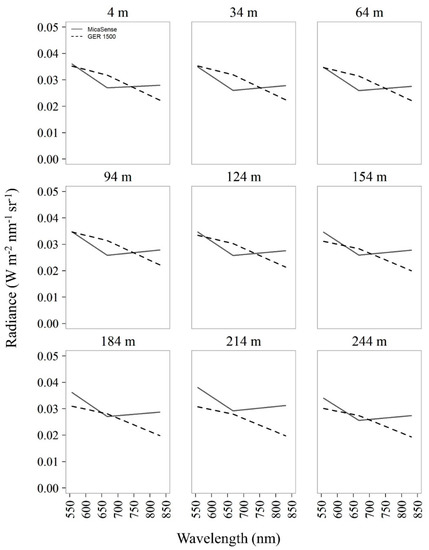

From the NF2 experiment, it was clear that an ELM radiometric calibration would be required for the Lower Pearl River UAS imagery. This is illustrated by comparing the GER 1500 ground spectroradiometer radiance values with the airborne green, red, and NIR MicaSense radiance values at each flight altitude (Figure 9). Atmospheric contributions to the UAS imagery showed a clear increasing trend with altitude, especially at flight altitudes higher than 124 m. Compared to the ground-based spectroradiometer radiance values, the MicaSense green and NIR bands recorded greater radiance at all flight altitudes. The MicaSense red band did not show radiance values as high as the green and NIR bands because this specific band had a lower spectral response than is shown in the manufacturer's spectral response function in Figure 4, indicating that it needs calibration. This suggests that coefficients derived in the lab and applied to this imagery in Equation (1) may not perform with the same accuracy in the field [57]. Nevertheless, the consistently higher radiance in the green band with increased altitude confirms that the imagery was impacted by atmospheric contributions. In addition to atmospheric scattering, there were challenges in obtaining representative spectral information from the 22% and 44% calibration reference panels because of saturated pixels (i.e., DN values ≥ 65,520). This saturation was caused by the automated MicaSense exposure and ISO settings, and it reduced the effectiveness of the prescribed MicaSense radiometric calibration procedures.
Overall, the outcomes from the North Farm experiment suggested that an ELM radiometric calibration was required prior to obtaining biophysical information from the Lower Pearl River UAS imagery in order to develop new calibration coefficients to adjust for: (1) errors emanating from applying lab-derived coefficients to field imagery, (2) changes in sensor spectral response that occur after laboratory calibrations, and (3) atmospheric contributions to the imagery.
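The panel saturation problem noted above can be screened for before panel statistics are computed. The following is a minimal sketch, not the procedure used in this study: it treats any DN at or above 65,520 as saturated, and the function name and the `max_saturated_fraction` cutoff are hypothetical choices made for illustration.

```python
SATURATION_DN = 65520  # DN threshold for saturated pixels, as stated in the text


def panel_mean_dn(panel_dns, saturation_dn=SATURATION_DN,
                  max_saturated_fraction=0.05):
    """Mean DN over unsaturated panel pixels (hypothetical helper).

    Returns (mean_dn, saturated_fraction). mean_dn is None when the panel
    is too saturated to be representative; the 5% default cutoff is an
    illustrative assumption, not a value from the study.
    """
    if not panel_dns:
        raise ValueError("panel_dns must not be empty")
    valid = [dn for dn in panel_dns if dn < saturation_dn]
    saturated_fraction = 1.0 - len(valid) / len(panel_dns)
    if not valid or saturated_fraction > max_saturated_fraction:
        return None, saturated_fraction
    return sum(valid) / len(valid), saturated_fraction
```

Rejecting a heavily saturated panel outright, rather than averaging its remaining pixels, avoids biasing the panel value toward the darker unsaturated pixels.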

Figure 9.

Airborne MicaSense median of bootstrapped mean radiance values (solid line) plotted against ground-based GER 1500 spectroradiometer median radiance values collected over the 6% panel at each flight altitude. Ground-based GER 1500 spectroradiometer data were collected just after MicaSense image acquisition, while the UAS climbed to the next sampling altitude, to reduce human interference during UAS data collection.
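The "median of bootstrapped mean" statistic named in the Figure 9 caption can be sketched as follows. The number of resamples, the fixed seed, and the function name are assumptions made for illustration; the resampling settings used in the study are not given here.

```python
import random
import statistics


def median_of_bootstrapped_means(values, n_resamples=1000, seed=0):
    """Median of the means of bootstrap resamples drawn with replacement.

    n_resamples and seed are illustrative defaults (assumptions).
    """
    if not values:
        raise ValueError("values must not be empty")
    rng = random.Random(seed)  # local generator keeps results reproducible
    n = len(values)
    means = []
    for _ in range(n_resamples):
        # Draw n values with replacement and record the resample mean.
        resample = [values[rng.randrange(n)] for _ in range(n)]
        means.append(sum(resample) / n)
    return statistics.median(means)
```

Applied to the radiance values of the panel pixels in each image, this yields one robust value per flight altitude that is less sensitive to a few outlying pixels than a single mean.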

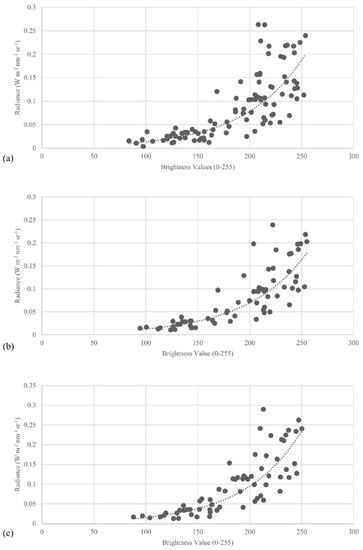

The Canon DSLR flown in the Lower Pearl River missions exhibited an exponential relationship between the raw Canon DNs and the radiance values measured by the GER 1500 ground spectroradiometer (Figure 10). These exponential relationships agree with past research [40,44,45,48]. The goodness of fit for each band (R² green = 0.77, R² red = 0.79, R² NIR = 0.77) is relatively high considering that the current study was conducted over water and incorporated spectroradiometer–UAS DN pairs that spanned multiple missions to develop the radiometric calibration equations. The NRMSE and NMAE are also within acceptable ranges for each spectral band (Table 5). The Mann–Whitney U test results were not significant, indicating that the distributions of the predicted and measured datasets are not significantly different for any spectral band. This is consistent with the outcomes from Wang and Myint [40], confirming that the simplified ELM radiometric calibration framework developed in the current study effectively reduces external factors influencing UAS imagery, including atmospheric contributions, while also producing scaled remote sensing reflectance imagery for all Lower Pearl River missions.
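To illustrate how an exponential DN-to-radiance calibration of this kind can be derived, the sketch below fits a model of the assumed form radiance = a · exp(b · DN) by ordinary least squares on the logarithm of radiance. Both the functional form and the log-linear fitting approach are assumptions chosen for simplicity; the study's fitted equations are those reported in Table 5, and the function names are hypothetical.

```python
import math


def fit_exponential(dns, radiances):
    """Fit radiance = a * exp(b * DN) via least squares on ln(radiance)."""
    if len(dns) != len(radiances) or len(dns) < 2:
        raise ValueError("need two equal-length samples of at least 2 pairs")
    if any(r <= 0 for r in radiances):
        raise ValueError("radiances must be positive to take logarithms")
    logs = [math.log(r) for r in radiances]
    n = len(dns)
    mean_x = sum(dns) / n
    mean_y = sum(logs) / n
    sxx = sum((x - mean_x) ** 2 for x in dns)
    if sxx == 0:
        raise ValueError("DN values must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(dns, logs))
    b = sxy / sxx                       # slope in log space
    a = math.exp(mean_y - b * mean_x)   # intercept mapped back from log space
    return a, b


def apply_calibration(dn, a, b):
    """Convert a raw DN to radiance with the fitted coefficients."""
    return a * math.exp(b * dn)
```

Fitting in log space weights relative rather than absolute errors, so a nonlinear least-squares fit on the untransformed radiance values may give slightly different coefficients.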

Figure 10.

Lower Pearl River UAS-weighted ground spectroradiometer–UAS image pairs for Canon DSLR (a) green band, (b) red band, and (c) NIR band from the August 2015 and December 2015 missions.

Table 5.

Resulting calibration equations and verification metrics from the Lower Pearl River radiometric calibration.

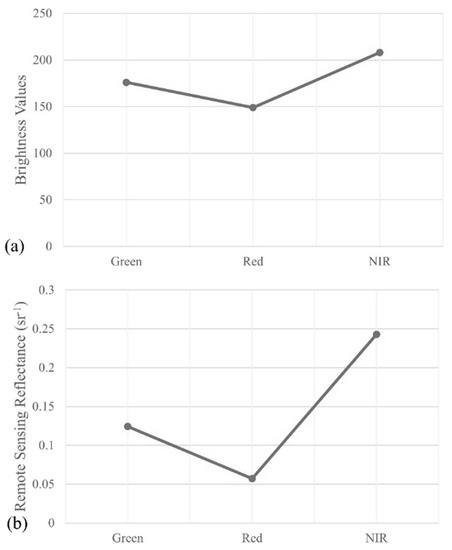

To further illustrate the performance of the presented ELM radiometric calibration framework, 2000 vegetation pixels were randomly selected and their median spectral response was plotted for the August 2015 mission imagery (Figure 11). Healthy vegetation should return a much stronger NIR signal than green and red. However, the brightness values in the green and red bands were quite high, as observed in Figure 11a. These high values are due to atmospheric contributions to the imagery and to errors emanating from inherent differences in the spectral responses of the sensor bands (as shown in Figure 6). After applying the calibration equations, the green and red signals decreased, resulting in a typical vegetation spectral response. Subsequently dividing by solar irradiance to obtain remote sensing reflectance helped to minimize additional error due to variations in solar conditions throughout the image collection period (Figure 11b). A unique aspect of the proposed framework is this separation of the radiance calibration from the normalization of solar irradiance, which allows the calibration equations to be applied to any imagery collected with the same Canon DSLR camera, independent of the time of collection.
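The pixel sampling described above amounts to drawing a random subset of vegetation pixels and taking the per-band median. A minimal sketch follows, assuming the vegetation pixels are already available as a list of (green, red, NIR) tuples; how vegetation pixels were identified is not covered here, and the function name and seed are illustrative.

```python
import random
import statistics


def median_spectrum(pixels, sample_size=2000, seed=0):
    """Per-band median of a random sample of pixels (hypothetical helper).

    pixels is a list of equal-length tuples, e.g. (green, red, nir).
    If fewer than sample_size pixels are available, all are used.
    """
    if not pixels:
        raise ValueError("pixels must not be empty")
    rng = random.Random(seed)
    k = min(sample_size, len(pixels))
    sample = rng.sample(pixels, k)  # sampling without replacement
    # zip(*sample) regroups the sampled pixels into one sequence per band.
    return tuple(statistics.median(band) for band in zip(*sample))
```

Running this once on the uncorrected values and once on the calibrated reflectance values gives the two spectra needed for a before-and-after comparison of the kind shown in Figure 11.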

Figure 11.

Spectra of 2000 manually selected vegetation pixels in the Canon DSLR Lower Pearl River August 2015 imagery for the (a) uncorrected imagery, and (b) corrected and scaled remote sensing reflectance imagery.

If irradiance can be measured during every UAS flight, then using those measurements should be the goal of every study, because they provide the most accurate conversion from radiance to remote sensing reflectance. In the event that these irradiance measurements are unavailable due to study site accessibility or missing reference panels during data acquisition, this framework offers a viable alternative for reducing errors in UAS imagery. In the current study, developing the calibration equations from numerous sampling areas across multiple missions and under various environmental conditions created robust operational calibration equations. While this framework produced a lower goodness-of-fit value than recent land-based studies, the calibration equations better represent the varied conditions of the study area and are effective in reducing atmospheric contributions and other external factors across the full study area without requiring ground-reference spectroradiometer data in every flight mosaic. However, it is imperative to acknowledge that some error will persist because the calibration equations were developed using information collected under a variety of conditions, such as changes in illumination across the scene due to scattered clouds.

Maintaining consistent illumination conditions across the flight mosaics was one of the greatest challenges, because sampling rarely coincided with an entirely clear-sky day in the coastal estuary environment. This created local illumination variations, leading to situations where the UAS flyover of the boat and the ground reference spectroradiometer sampling occurred under sunny conditions while other portions of the scene were influenced by cloud shadows when sampled by the UAS. Some UAS flyovers of the boat and ground reference spectroradiometer sampling occurred under a cloud shadow, so those cloudy local illumination effects were captured to some degree by the calibration equations. Ideally, however, the future integration of automated techniques that normalize illumination by collecting upward irradiance data onboard the UAS during flights would help to overcome these cloud shadow effects.

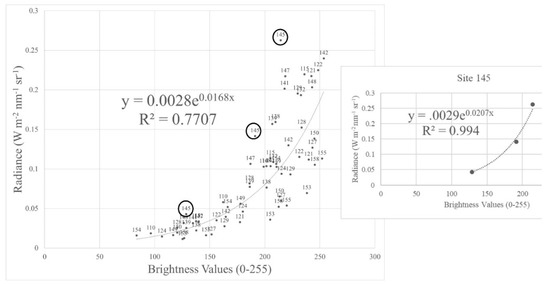

It is likely that the accuracy of the Lower Pearl River radiometric calibrations would be further improved with the incorporation of a bidirectional reflectance distribution function (BRDF) correction [58,59]. Furthermore, an image-by-image calibration could potentially help achieve the level of accuracy reported in previous UAS-based ELM radiometric calibration studies (Figure 12); however, image-by-image calibration equations were not feasible in this study because the UAS was often flying over inaccessible areas (e.g., forested wetlands or salt marshes) where it was not possible to deploy the calibration reference panels or collect hand-held spectroradiometer data.

Figure 12.

Example of a site-specific calibration developed from a single image compared to the multi-site calibration equation for the green band. Labels at each point indicate the sample site where the data were obtained. The three circles in the left graph denote the site data extracted to generate the image-by-image calibration (right graph). Equations in each graph correspond to the multi-site calibration based on all points in the dataset (left graph) and the single-site calibration (right graph).

This points to the importance of identifying mission spectral accuracy requirements prior to the execution of any UAS field operation. If greater spectral accuracy is required, then image-by-image calibrations may be preferred; however, image-by-image calibrations will only be applicable to missions sampling a relatively small area. A recent approach by Mafanya et al. [45] proposes an effective method for improving calibration accuracy for larger scenes by implementing a statistical assessment to ensure that the scene being corrected is not significantly different from the scene from which the calibration equations were developed. While this approach has been shown to be effective for land-based studies, future research is required to assess its performance in water-based studies. Furthermore, it remains uncertain how corrections should be applied to scenes that are significantly different from the scene from which the calibration equations were developed. Hence, the proposed ELM radiometric calibration framework and subsequent calculation of remote sensing reflectance offer a method for developing and applying calibration equations to large study areas independent of diurnal and seasonal variations. The proposed framework is best suited to studies with limited site accessibility or to rapid response configurations where temporal constraints limit the ability to deploy and sample calibration reference panels within the area of interest.

4. Conclusions

The objective of the current study was to develop a simplified empirical line method (ELM) radiometric calibration framework for the reduction of atmospheric contributions in unmanned aerial system (UAS) imagery and for the production of scaled remote sensing reflectance imagery for UAS operations with limited site accessibility and data acquisition time constraints. Experiments were conducted at the R. R. Foil Plant Science Research Center (North Farm) on the campus of Mississippi State University to quantify the degree to which atmospheric contributions affect UAS imagery. Imagery collected over this experimental agricultural facility indicated that atmospheric contributions to UAS imagery have the greatest impacts on shorter wavelength bands (e.g., green band), and that these atmospheric contributions increase gradually within a relatively small flight column (4–244 m above ground level). A simplified ELM radiometric calibration was proposed to reduce the impacts of these atmospheric contributions on UAS imagery. Unique to the current study was the water-based nature of regular UAS imagery reconnaissance missions. This required the modification of existing UAS radiometric calibration frameworks and led to the development of a rapidly deployable calibration target and a cost- and time-effective radiometric calibration framework for correcting UAS imagery across various applications.

Results agreed with previous studies that an exponential relationship exists between the UAS-recorded DNs and spectroradiometer radiance values. Calibration equations developed from these ground spectroradiometer–UAS relationships performed well, indicating that the proposed radiometric calibration framework effectively reduces atmospheric contributions in UAS imagery. A benefit of the proposed framework is that the calibration equations were developed independently of the solar illumination normalization so that the calibration equations can be applied to any past or future imagery collected over the study area with the same imaging sensor. This greatly extends the capacity to improve UAS image accuracy in a variety of complex and rapid response sampling conditions where highly controlled calibration target deployment and intensive radiometric calibration procedures are not feasible. As such, the current study helps pave the way for future research exploring UAS-based remote sensing applications. The figures presented can be used as a reference to understand the varied implications of atmospheric contributions on UAS imagery relative to flight altitude; however, additional experiments would be useful to further examine these atmospheric contributions to UAS imagery across additional locations and conditions. Follow-up experiments involving a hyperspectral imaging sensor, advanced on-board meteorological instrumentation, and higher flight altitudes could further distinguish planetary boundary layer factors impacting UAS imagery.

Author Contributions

Project conceptualization, C.M.Z., P.D., J.L.D., and R.M.; Methodology, C.M.Z. and P.D.; Software, L.H.; Validation, C.M.Z., P.D. and L.H.; Formal analysis, C.M.Z.; Investigation, C.M.Z., P.D. and L.H.; Resources, P.D., R.M., and L.H.; Data curation, C.M.Z. and L.H.; Writing—original draft preparation, C.M.Z. and P.D.; Writing—review and editing, C.M.Z., P.D., J.L.D., R.M. and L.H.; Visualization, C.M.Z.; Supervision, P.D., J.L.D., R.M.; Project administration, R.M.; Funding acquisition, P.D., J.L.D., and R.M. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Oceanic and Atmospheric Administration (NOAA) project “Sensing hazards with operational unmanned technology for the river forecasting centers (SHOUT4Rivers)” [award number NA11OAR4320199] through the Northern Gulf Institute, a NOAA Cooperative Institute at the Mississippi State University.

Acknowledgments

The authors would like to thank the anonymous reviewers for their constructive feedback which led to substantial improvements to the article. We thank the UAS pilots David Young and Sean Meacham and those who assisted with fieldwork including Gray Turnage, Louis Wasson, Saurav Silwal, John Van Horn, Landon Sanders, and Preston Stinson. In addition, we would like to thank Madhur Devkota and Wondimagegn Beshah for their help in collecting and processing spectroradiometer data.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

References

- Reuder, J.; Brisset, P.; Jonassen, M.M.; Mayer, S. The Small Unmanned Meteorological Observer SUMO: A new tool for atmospheric boundary layer research. Meteorol. Z. 2009, 18, 141–147.

- Hardin, P.J.; Hardin, T.J. Small-Scale Remotely Piloted Vehicles in Environmental Research. Geogr. Compass 2010, 4, 1297–1311.

- Milas, A.S.; Cracknell, A.P.; Warner, T.A. Drones-the third generation source of remote sensing data. Int. J. Remote Sens. 2018, 39, 7125–7137.

- Aasen, H.; Honkavaara, E.; Lucieer, A.; Zarco-Tejada, P.J. Quantitative Remote Sensing at Ultra-High Resolution with UAV Spectroscopy: A Review of Sensor Technology, Measurement Procedures, and Data Correction Workflows. Remote Sens. 2018, 10, 1091.

- Martínez-de Dios, J.R.; Merino, L.; Caballero, F.; Ollero, A.; Viegas, D.X. Experimental results of automatic fire detection and monitoring with UAVs. For. Ecol. Manag. 2006, 234, S232.

- Ollero, A.; Martínez-de-Dios, J.R.; Merino, L. Unmanned aerial vehicles as tools for forest-fire fighting. For. Ecol. Manag. 2006, 234, S263.

- Aanstoos, J.V.; Hasan, K.; O’Hara, C.G.; Prasad, S.; Dabbiru, L.; Mahrooghy, M.; Nobrega, R.; Lee, M.; Shrestha, B. Use of remote sensing to screen earthen levees. In Proceedings of the 2010 IEEE 39th Applied Imagery Pattern Recognition Workshop (AIPR), Washington, DC, USA, 13–15 October 2010; pp. 1–6.

- Adams, S.M.; Friedland, C.J. A Survey of Unmanned Aerial Vehicle (UAV) Usage for Imagery Collection in Disaster Research and Management. In Proceedings of the 9th International Workshop on Remote Sensing for Disaster Response, Stanford, CA, USA, 15–16 September 2011.

- Chou, T.; Yeh, M.; Chen, Y.; Chen, Y. Disaster monitoring and management by the unmanned aerial vehicle technology. ISPRS TC VII Symp. 2010, XXXVIII, 137–142.

- Li, Y.; Gong, J.H.; Zhu, J.; Ye, L.; Song, Y.Q.; Yue, Y.J. Efficient dam break flood simulation methods for developing a preliminary evacuation plan after the Wenchuan Earthquake. Nat. Hazards Earth Syst. Sci. 2012, 12, 97–106.

- Messinger, M.; Silman, M. Unmanned aerial vehicles for the assessment and monitoring of environmental contamination: An example from coal ash spills. Environ. Pollut. 2016, 218, 889–894.

- Hardin, P.J.; Jensen, R.R. Small-Scale Unmanned Aerial Vehicles in Environmental Remote Sensing: Challenges and Opportunities. GIScience Remote Sens. 2011, 48, 99–111.

- Frew, E.W.; Elston, J.; Argrow, B.; Houston, A.; Rasmussen, E. Sampling severe local storms and related phenomena: Using unmanned aircraft systems. IEEE Robot. Autom. Mag. 2012, 19, 85–95.

- Zaman, B.; Jensen, A.M.; McKee, M. Use of high-resolution multispectral imagery acquired with an autonomous unmanned aerial vehicle to quantify the spread of an invasive wetlands species. Int. Geosci. Remote Sens. Symp. 2011, 803–806.

- Anderson, K.; Gaston, K.J. Lightweight unmanned aerial vehicles will revolutionize spatial ecology. Front. Ecol. Environ. 2013, 11, 138–146.

- Hunt, E.R.; Dean Hively, W.; Fujikawa, S.J.; Linden, D.S.; Daughtry, C.S.T.; McCarty, G.W. Acquisition of NIR-green-blue digital photographs from unmanned aircraft for crop monitoring. Remote Sens. 2010, 2, 290–305.

- Wallace, L.; Lucieer, A.; Watson, C.; Turner, D. Development of a UAV-LiDAR system with application to forest inventory. Remote Sens. 2012, 4, 1519–1543.

- Torres-Sánchez, J.; López-Granados, F.; De Castro, A.I.; Peña-Barragán, J.M. Configuration and Specifications of an Unmanned Aerial Vehicle (UAV) for Early Site Specific Weed Management. PLoS ONE 2013, 8.

- Gómez-Candón, D.; De Castro, A.I.; López-Granados, F. Assessing the accuracy of mosaics from unmanned aerial vehicle (UAV) imagery for precision agriculture purposes in wheat. Precis. Agric. 2014, 15, 44–56.

- Erena, M.; Montesinos, S.; Portillo, D.; Alvarez, J.; Marin, C.; Fernandez, L.; Henarejos, J.M.; Ruiz, L.A. Configuration and specifications of an unmanned aerial vehicle for precision agriculture. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, XLI-B1, 809–816.

- Barreiro, A.; Domínguez, J.M.; Crespo, A.J.; Gonzalez-Jorge, H.; Roca, D.; Gomez-Gesteira, M. Integration of UAV photogrammetry and SPH modelling of fluids to study runoff on real terrains. PLoS ONE 2014, 9.

- Casella, E.; Rovere, A.; Pedroncini, A.; Stark, C.P.; Casella, M.; Ferrari, M.; Firpo, M. Drones as tools for monitoring beach topography changes in the Ligurian Sea (NW Mediterranean). Geo-Mar. Lett. 2016, 36, 151–163.

- Feng, Q.; Liu, J.; Gong, J. UAV Remote sensing for urban vegetation mapping using random forest and texture analysis. Remote Sens. 2015, 7, 1074–1094.

- Tamminga, A.D.; Eaton, B.C.; Hugenholtz, C.H. UAS-based remote sensing of fluvial change following an extreme flood event. Earth Surf. Process. Landf. 2015, 40, 1464–1476.

- Messinger, M.; Asner, G.P.; Silman, M. Rapid Assessments of Amazon Forest Structure and Biomass Using Small Unmanned Aerial Systems. Remote Sens. 2016, 8, 615.

- Husson, E.; Ecke, F.; Reese, H. Comparison of manual mapping and automated object-based image analysis of non-submerged aquatic vegetation from very-high-resolution UAS images. Remote Sens. 2016, 8, 724.

- Mesas-Carrascosa, F.J.; Notario-García, M.D.; Meroño de Larriva, J.E.; Sánchez de la Orden, M.; García-Ferrer Porras, A. Validation of measurements of land plot area using UAV imagery. Int. J. Appl. Earth Obs. Geoinf. 2014, 33, 270–279.

- Padula, F.; Pearlman, A.J.; Cao, C.; Goodman, S. Towards Post-Launch Validation of GOES-R ABI SI Traceability with High-Altitude Aircraft, Small near Surface UAS, and Satellite Reference Measurements; Butler, J.J., Xiong, X. (Jack), Gu, X., Eds.; SPIE: Bellingham, WA, USA, 2016; p. 99720V.

- De Cubber, G.; Balta, H.; Doroftei, D.; Baudoin, Y. UAS deployment and data processing during the Balkans flooding. In Proceedings of the 2014 IEEE International Symposium on Safety, Security, and Rescue Robotics (2014), Hokkaido, Japan, 27–30 October 2014; pp. 1–4.

- Wang, Y. Advances in Remote Sensing of Flooding. Water 2015, 7, 6404–6410.

- Zhang, C.; Kovacs, J.M. The application of small unmanned aerial systems for precision agriculture: A review. Precis. Agric. 2012, 13, 693–712.

- Honkavaara, E.; Saari, H.; Kaivosoja, J.; Pölönen, I.; Hakala, T.; Litkey, P.; Mäkynen, J.; Pesonen, L. Processing and Assessment of Spectrometric, Stereoscopic Imagery Collected Using a Lightweight UAV Spectral Camera for Precision Agriculture. Remote Sens. 2013, 5, 5006–5039.

- Yang, G.; Liu, J.; Zhao, C.; Li, Z.; Huang, Y.; Yu, H.; Xu, B.; Yang, X.; Zhu, D.; Zhang, X.; et al. Unmanned Aerial Vehicle Remote Sensing for Field-Based Crop Phenotyping: Current Status and Perspectives. Front. Plant. Sci. 2017, 8, 1111.

- Cracknell, A.P. UAVs: Regulations and law enforcement. Int. J. Remote Sens. 2017, 38, 3054–3067.

- Berni, J.; Zarco-Tejada, P.J.; Suarez, L.; Fereres, E. Thermal and Narrowband Multispectral Remote Sensing for Vegetation Monitoring From an Unmanned Aerial Vehicle. IEEE Trans. Geosci. Remote Sens. 2009, 47, 722–738.

- Kelcey, J.; Lucieer, A. Sensor correction and radiometric calibration of a 6-Band multispectral imaging sensor for UAV remote sensing. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, XXXIX-B1, 393–398.

- Xu, K.; Gong, Y.; Fang, S.; Wang, K.; Lin, Z.; Wang, F. Radiometric Calibration of UAV Remote Sensing Image with Spectral Angle Constraint. Remote Sens. 2019, 11, 1291.

- Lucieer, A.; Malenovský, Z.; Veness, T.; Wallace, L. HyperUAS-Imaging Spectroscopy from a Multirotor Unmanned Aircraft System. J. Field Robot. 2014, 31, 571–590.

- Torres-Sánchez, J.; Peña, J.M.; de Castro, A.I.; López-Granados, F. Multi-temporal mapping of the vegetation fraction in early-season wheat fields using images from UAV. Comput. Electron. Agric. 2014, 103, 104–113.

- Wang, C.; Myint, S. A Simplified Empirical Line Method of Radiometric Calibration for Small Unmanned Aircraft Systems-Based Remote Sensing. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 1876–1885.

- Lu, B.; He, Y. Species classification using Unmanned Aerial Vehicle (UAV)-acquired high spatial resolution imagery in a heterogeneous grassland. ISPRS J. Photogramm. Remote Sens. 2017, 128, 73–85.

- Yang, G.; Li, C.; Wang, Y.; Yuan, H.; Feng, H.; Xu, B.; Yang, X. The DOM Generation and Precise Radiometric Calibration of a UAV-Mounted Miniature Snapshot Hyperspectral Imager. Remote Sens. 2017, 9, 642.

- Coburn, C.A.; Smith, A.M.; Logie, G.S.; Kennedy, P. Radiometric and spectral comparison of inexpensive camera systems used for remote sensing. Int. J. Remote Sens. 2018, 39, 4869–4890.

- Logie, G.S.J.; Coburn, C.A. An investigation of the spectral and radiometric characteristics of low-cost digital cameras for use in UAV remote sensing. Int. J. Remote Sens. 2018, 39, 4891–4909.

- Mafanya, M.; Tsele, P.; Botai, J.O.; Manyama, P.; Chirima, G.J.; Monate, T. Radiometric calibration framework for ultra-high-resolution UAV-derived orthomosaics for large-scale mapping of invasive alien plants in semi-arid woodlands: Harrisia pomanensis as a case study. Int. J. Remote Sens. 2018, 39, 1–22.

- Miyoshi, G.T.; Imai, N.N.; Tommaselli, A.M.G.; Honkavaara, E.; Näsi, R.; Moriya, É.A.S. Radiometric block adjustment of hyperspectral image blocks in the Brazilian environment. Int. J. Remote Sens. 2018, 39, 4910–4930.

- Jeong, Y.; Yu, J.; Wang, L.; Shin, H.; Koh, S.M.; Park, G. Cost-effective reflectance calibration method for small UAV images. Int. J. Remote Sens. 2018, 39, 7225–7250.

- Smith, G.M.; Milton, E.J. The use of the empirical line method to calibrate remotely sensed data to reflectance. Int. J. Remote Sens. 1999, 20, 2653–2662.

- Che, N.; Price, J.C. Survey of radiometric calibration results and methods for visible and near infrared channels of NOAA-7, -9, and -11 AVHRRs. Remote Sens. Environ. 1992, 41, 19–27.

- Moran, M.S.; Bryant, R.; Thome, K.; Ni, W.; Nouvellon, Y.; Gonzalez-Dugo, M.P.; Qi, J.; Clarke, T.R. A refined empirical line approach for reflectance factor retrieval from Landsat-5 TM and Landsat-7 ETM+. Remote Sens. Environ. 2001, 78, 71–82.

- Karpouzli, E.; Malthus, T. The empirical line method for the atmospheric correction of IKONOS imagery. Int. J. Remote Sens. 2003, 24, 1143–1150.

- Hakala, T.; Suomalainen, J.; Peltoniemi, J.I. Acquisition of Bidirectional Reflectance Factor Dataset Using a Micro Unmanned Aerial Vehicle and a Consumer Camera. Remote Sens. 2010, 2, 819–832.

- MicaSense RedEdge and Altum Image Processing Tutorials. Available online: https://github.com/micasense/imageprocessing (accessed on 10 December 2019).

- Kidder, S.Q.; Vonder Haar, T.H. Satellite Meteorology: An Introduction; Academic Press: Cambridge, MA, USA, 1995; ISBN 9780080572000.

- Schowengerdt, R.A. Remote Sensing: Models and Methods for Image Processing; Elsevier: Amsterdam, The Netherlands, 2006; ISBN 9781493300723.

- NASA. Landsat 7 Science Data Users Handbook. Available online: https://landsat.gsfc.nasa.gov/wp-content/uploads/2016/08/Landsat7_Handbook.pdf (accessed on 1 May 2020).

- Cao, S.; Danielson, B.; Clare, S.; Koenig, S.; Campos-Vargas, C.; Sanchez-Azofeifa, A. Radiometric calibration assessments for UAS-borne multispectral cameras: Laboratory and field protocols. ISPRS J. Photogramm. Remote Sens. 2019, 149, 132–145.

- Tu, Y.-H.; Phinn, S.; Johansen, K.; Robson, A. Assessing Radiometric Correction Approaches for Multi-Spectral UAS Imagery for Horticultural Applications. Remote Sens. 2018, 10, 1684.

- Stow, D.; Nichol, C.J.; Wade, T.; Assmann, J.J.; Simpson, G.; Helfter, C. Illumination Geometry and Flying Height Influence Surface Reflectance and NDVI Derived from Multispectral UAS Imagery. Drones 2019, 3, 55.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).