Fractal Dimension-Based Multi-Focus Image Fusion via Coupled Neural P Systems in NSCT Domain

Abstract

1. Introduction

- (1) Coupled neural P systems (CNP) are used to process the low-frequency components in order to better preserve background information;

- (2) A fractal dimension-based focus measure (FDFM) combined with spatial frequency (SF) is used to process the high-frequency components, thereby retaining more detailed image information;

- (3) Extensive qualitative and quantitative experiments on three datasets show that our method outperforms state-of-the-art (SOTA) techniques on most evaluation metrics.

2. Related Works

2.1. Spatial Domain-Based Image Fusion

2.2. Transform Domain-Based Image Fusion

2.3. Deep Learning-Based Image Fusion

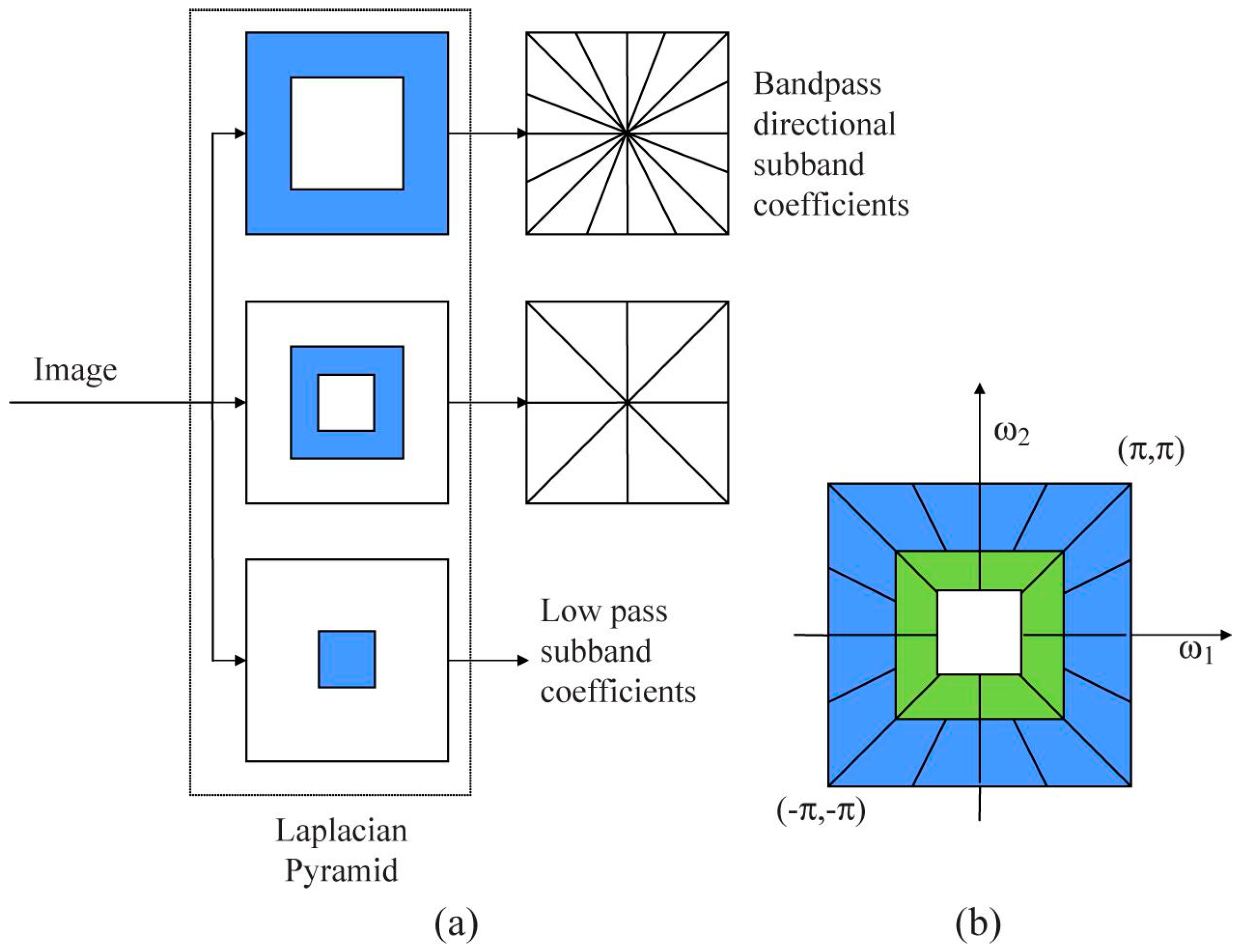

3. Nonsubsampled Contourlet Transform

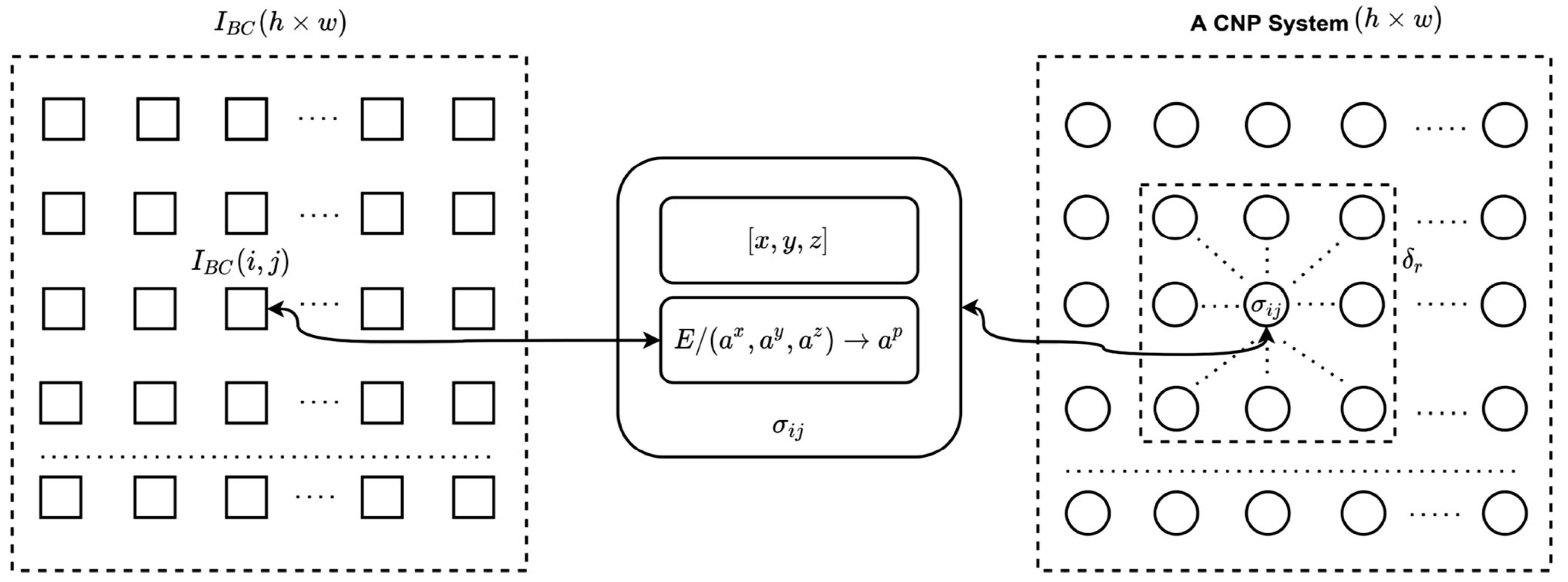

4. Coupled Neural P Systems

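- (1) $O = \{a\}$ is an alphabet (the object $a$ is known as the spike);

- (2) $\sigma_{11}, \ldots, \sigma_{mn}$ are an array of coupled neurons of the form $\sigma_{ij} = (u_{ij}, v_{ij}, \tau_{ij}, R_{ij})$, where

- (a) $u_{ij}$ is the value of spikes in the feeding input unit of neuron $\sigma_{ij}$;

- (b) $v_{ij}$ is the value of spikes in the linking input unit of neuron $\sigma_{ij}$;

- (c) $\tau_{ij}$ is the value of spikes in the dynamic threshold unit of neuron $\sigma_{ij}$;

- (d) $R_{ij}$ denotes the finite set of spiking rules of neuron $\sigma_{ij}$, each of which is guarded by a firing condition $E$;

- (3) $syn = \{((i,j),(k,l)) \mid |k-i| \le r,\ |l-j| \le r,\ (k,l) \ne (i,j)\}$, where $r$ is the neighborhood radius.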
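The following minimal Python sketch makes the neighborhood in item (3) concrete by enumerating the synapse set of an m × n neuron array. It assumes that each neuron connects to every other neuron whose row and column offsets are both at most r. This is a (2r+1) × (2r+1) window with the neuron itself excluded. The function name `synapses` is hypothetical, and indices are 0-based rather than 1-based.

```python
from typing import List, Tuple

Coord = Tuple[int, int]


def synapses(m: int, n: int, r: int) -> List[Tuple[Coord, Coord]]:
    """Enumerate directed synapses ((i, j), (k, l)) of an m x n neuron array.

    Assumption: neuron (i, j) is linked to every other neuron (k, l) with
    |k - i| <= r and |l - j| <= r; neighbours outside the array are skipped.
    """
    syn = []
    for i in range(m):
        for j in range(n):
            # Clip the (2r+1) x (2r+1) window to the array borders.
            for k in range(max(0, i - r), min(m, i + r + 1)):
                for l in range(max(0, j - r), min(n, j + r + 1)):
                    if (k, l) != (i, j):  # no self-synapse
                        syn.append(((i, j), (k, l)))
    return syn
```

In a 3 × 3 array with r = 1, each corner neuron has 3 neighbors, each edge neuron has 5 and the center neuron has 8. This gives 40 directed synapses. With r = 2, every neuron reaches the other 8, which gives 72.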

5. The Proposed Method

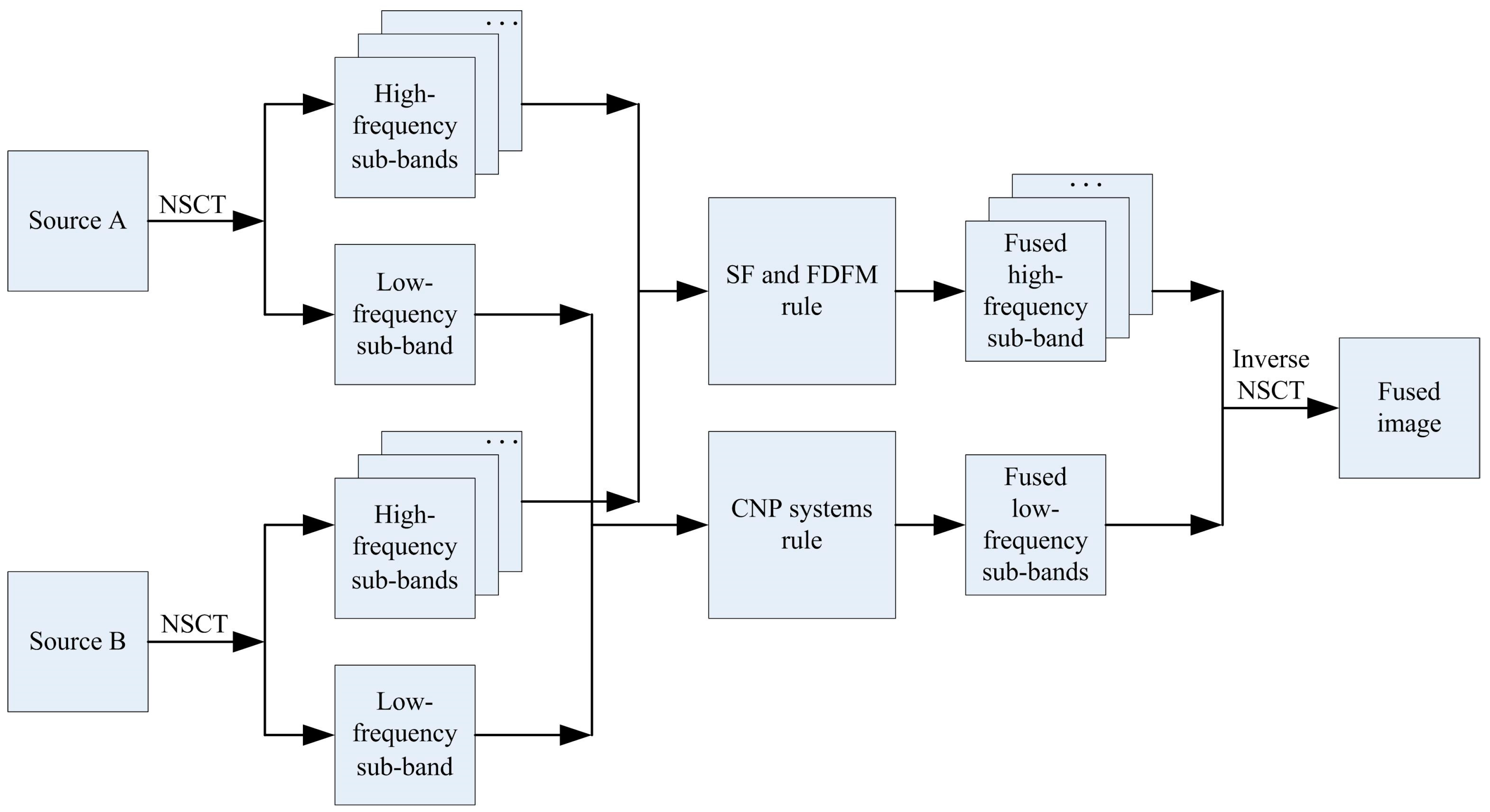

5.1. NSCT Decomposition

5.2. Low-Frequency Coefficient Fusion
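Step 2 of Algorithm 1 fuses the low-frequency sub-bands using CNP responses (Equations (1)–(8)), and those equations are not reproduced here. As a rough illustration only, the sketch below swaps in a simplified PCNN-style neuron grid rather than the actual CNP spiking rules. It works as follows:

- Each neuron is fed the absolute coefficient value.
- Each neuron is linked to the neighbors that spiked in the previous step.
- Each neuron has an exponentially decaying threshold.
- At each position, the coefficient whose neuron fires more often is kept.

The function names and all parameter values (`iters`, `beta`, `decay`, `v_theta`, `r`) are hypothetical and are not the paper's settings.

```python
import math
from typing import List

Grid = List[List[float]]


def fire_counts(stim: Grid, iters: int = 30, beta: float = 0.2,
                decay: float = 0.2, v_theta: float = 20.0,
                r: int = 1) -> List[List[int]]:
    """Count how often each neuron fires in a simplified PCNN-style grid.

    Hypothetical stand-in for the CNP dynamics: feeding input = |coefficient|,
    linking input = number of neighbours (radius r) that spiked last step,
    threshold decays by exp(-decay) and jumps by v_theta after a spike.
    """
    m, n = len(stim), len(stim[0])
    spikes = [[0] * n for _ in range(m)]      # outputs of the previous step
    theta = [[1.0] * n for _ in range(m)]     # dynamic thresholds
    counts = [[0] * n for _ in range(m)]
    for _ in range(iters):
        new_spikes = [[0] * n for _ in range(m)]
        for i in range(m):
            for j in range(n):
                link = sum(spikes[k][l]
                           for k in range(max(0, i - r), min(m, i + r + 1))
                           for l in range(max(0, j - r), min(n, j + r + 1))
                           if (k, l) != (i, j))
                activity = abs(stim[i][j]) * (1.0 + beta * link)
                if activity > theta[i][j]:
                    new_spikes[i][j] = 1
                    counts[i][j] += 1
                theta[i][j] = (math.exp(-decay) * theta[i][j]
                               + v_theta * new_spikes[i][j])
        spikes = new_spikes
    return counts


def fuse_low(a: Grid, b: Grid, iters: int = 30) -> Grid:
    """Keep, at each position, the coefficient whose neuron fired more often."""
    ca, cb = fire_counts(a, iters), fire_counts(b, iters)
    return [[a[i][j] if ca[i][j] >= cb[i][j] else b[i][j]
             for j in range(len(a[0]))]
            for i in range(len(a))]
```

A zero-valued input never exceeds the positive threshold, so `fuse_low(a, zeros)` and `fuse_low(zeros, a)` both return `a`. This gives a quick sanity check of the choose-max logic.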

5.3. High-Frequency Coefficient Fusion
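Step 3 of Algorithm 1 computes the spatial frequency (SF) of each high-frequency sub-band with Equations (9) and (10). The sketch below assumes the classic definition, SF = sqrt(RF² + CF²). Here RF and CF are the root-mean-square horizontal and vertical first differences, each normalized by the number of samples M·N. The paper's equations may use a different window or normalization, and `spatial_frequency` is a hypothetical name.

```python
import math
from typing import List


def spatial_frequency(block: List[List[float]]) -> float:
    """Classic spatial frequency SF = sqrt(RF^2 + CF^2) of a 2-D block.

    RF / CF: root-mean-square first differences along rows / columns,
    both normalised by M * N (assumed normalisation).
    """
    m, n = len(block), len(block[0])
    # Horizontal differences I(i, j) - I(i, j-1).
    row_sq = sum((block[i][j] - block[i][j - 1]) ** 2
                 for i in range(m) for j in range(1, n))
    # Vertical differences I(i, j) - I(i-1, j).
    col_sq = sum((block[i][j] - block[i - 1][j]) ** 2
                 for i in range(1, m) for j in range(n))
    rf = math.sqrt(row_sq / (m * n))
    cf = math.sqrt(col_sq / (m * n))
    return math.sqrt(rf ** 2 + cf ** 2)
```

A flat block has SF = 0, and doubling the contrast of an edge doubles SF. For example, SF is sqrt(0.5) for `[[0, 1], [0, 1]]` and sqrt(2) for `[[0, 2], [0, 2]]`. This is why a larger SF is read as sharper, better-focused content.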

5.4. Inverse NSCT

| Algorithm 1 Proposed MFIF method |
|---|
| Input: the source images A and B |
| Parameters: the number of NSCT decomposition levels and the number of directions at each decomposition level |
| Step 1: NSCT decomposition. For each source image, perform NSCT decomposition to generate its low-frequency sub-band and its high-frequency directional sub-bands. |
| Step 2: Low-frequency components fusion. For each source image, calculate the CNP response of its low-frequency sub-band using Equations (1)–(7); then merge the two low-frequency sub-bands using Equation (8) to generate the fused low-frequency sub-band. |
| Step 3: High-frequency components fusion. For each decomposition level and each direction: for each source image, calculate the SF of its high-frequency sub-band using Equations (9) and (10) and the FDFM using Equations (11) and (12); then merge the two high-frequency sub-bands using Equation (13). |
| Step 4: Inverse NSCT. Perform the inverse NSCT on the fused low- and high-frequency sub-bands to generate the fused image. |
| Output: the fused image |
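The FDFM in Step 3 of Algorithm 1 (Equations (11) and (12)) is built on a fractal dimension estimate. Differential box counting (DBC) is a common estimator for grayscale data, and the sketch below assumes DBC on a square block of side M, as follows:

- The box sizes s are those that divide M.
- The box height is h = s·G/M for G gray levels.
- The dimension is the least-squares slope of log N_s against log(M/s).

This sketch does not reproduce how the paper turns the estimate into a focus measure or combines it with SF in Equation (13). Applying DBC to absolute high-frequency coefficients would be our own assumption, and `dbc_fractal_dimension` is a hypothetical name.

```python
import math
from typing import List


def dbc_fractal_dimension(img: List[List[float]],
                          gray_levels: float = 256.0) -> float:
    """Differential box-counting (DBC) fractal dimension of a square block.

    For each box size s dividing the side M (2 <= s <= M // 2), the block is
    tiled by s x s cells; a cell needs floor(max/h) - floor(min/h) + 1 boxes
    of height h = s * gray_levels / M. The dimension is the least-squares
    slope of log(N_s) against log(M / s).
    """
    size = len(img)
    if any(len(row) != size for row in img):
        raise ValueError("expected a square block")
    xs, ys = [], []
    for s in range(2, size // 2 + 1):
        if size % s:
            continue  # only box sizes that tile the block exactly
        h = s * gray_levels / size
        total = 0
        for bi in range(0, size, s):
            for bj in range(0, size, s):
                cell = [img[i][j] for i in range(bi, bi + s)
                        for j in range(bj, bj + s)]
                total += math.floor(max(cell) / h) - math.floor(min(cell) / h) + 1
        xs.append(math.log(size / s))
        ys.append(math.log(total))
    if len(xs) < 2:
        raise ValueError("block too small: need at least two box sizes")
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den
```

On a 16 × 16 block, a flat image gives a dimension of 2 and a 0/255 checkerboard gives 3. Rougher, more detailed content therefore yields a larger estimate, which is what makes the fractal dimension usable as a focus cue.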

6. Experimental Results and Discussion

6.1. Experimental Setup

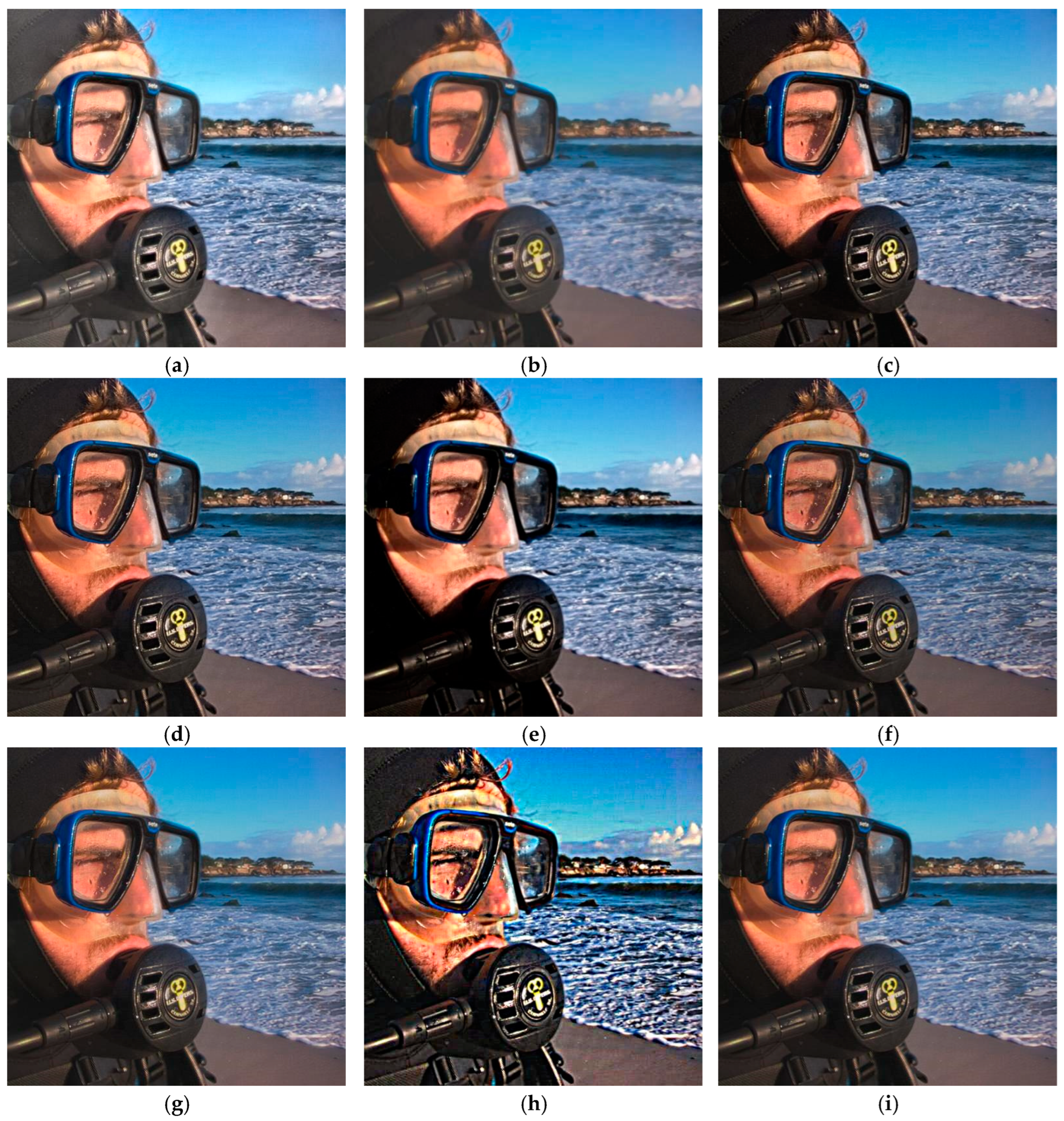

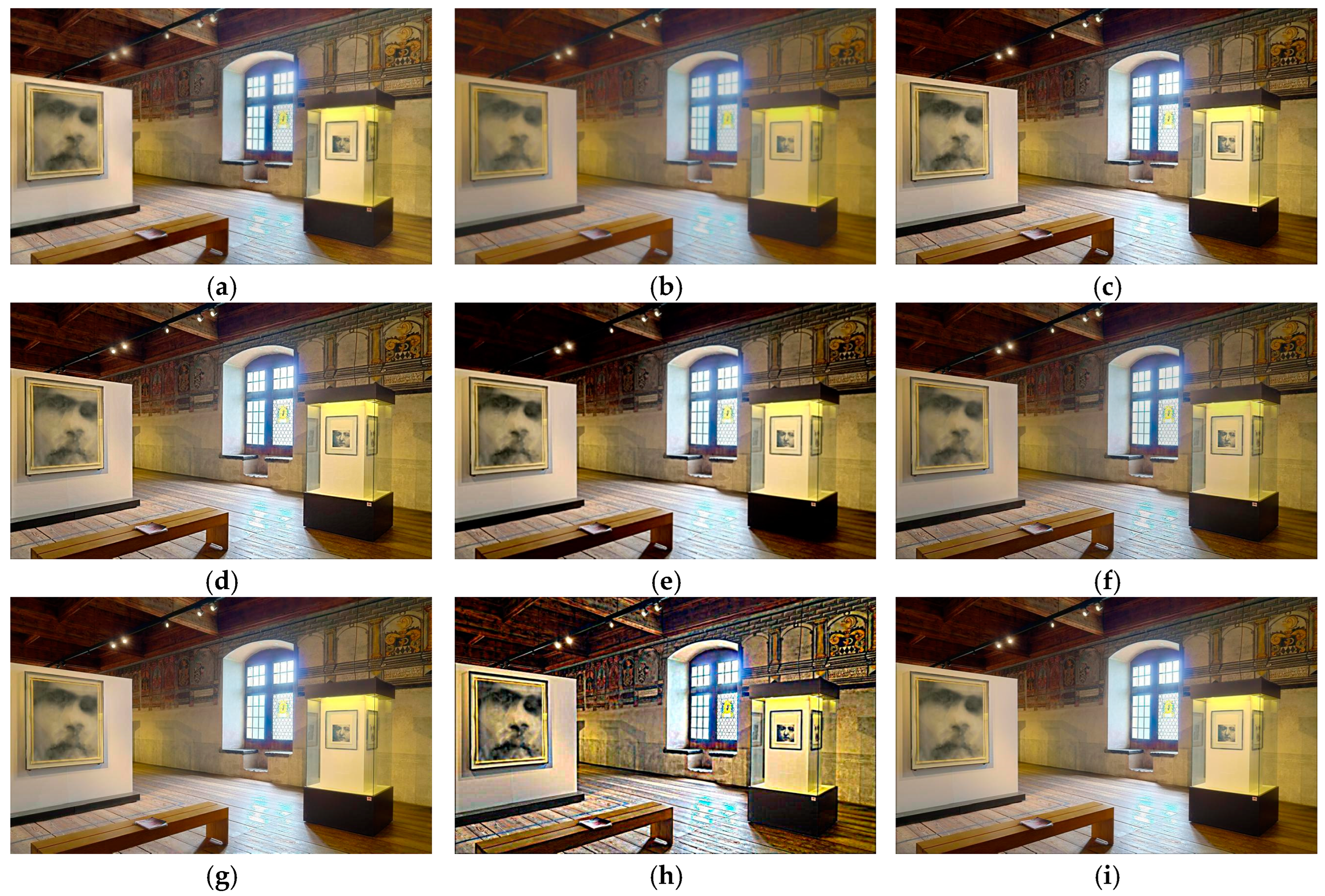

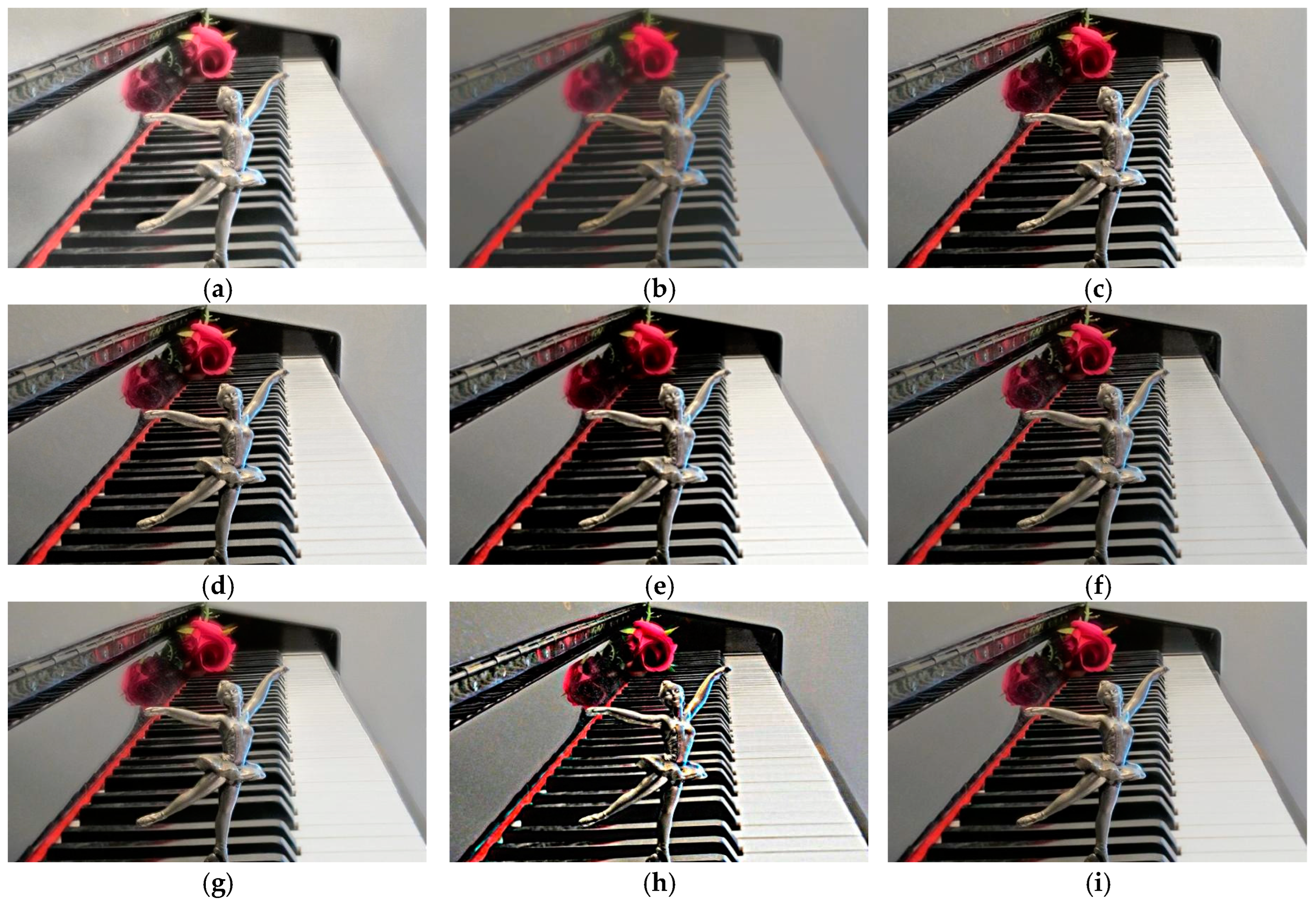

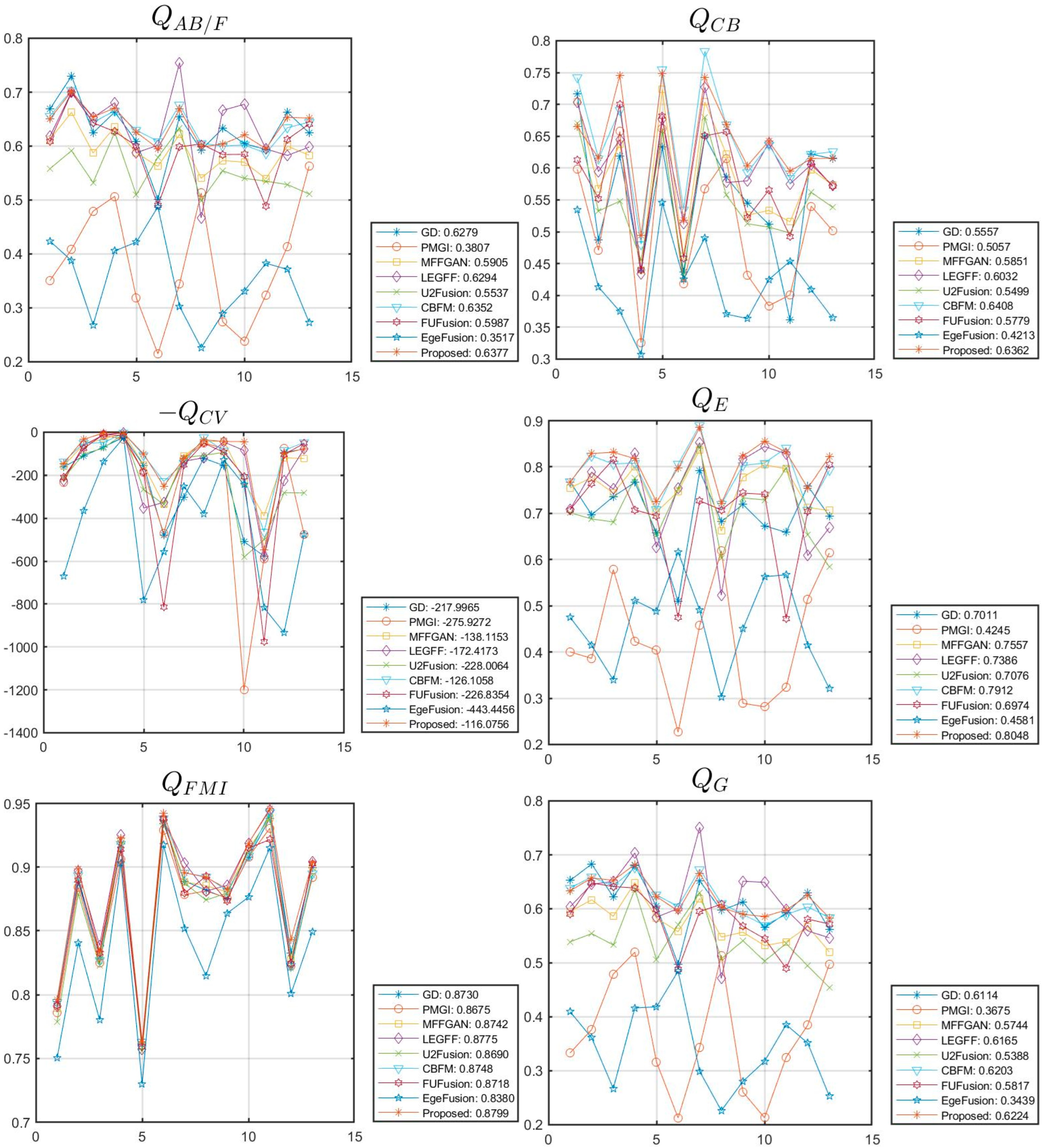

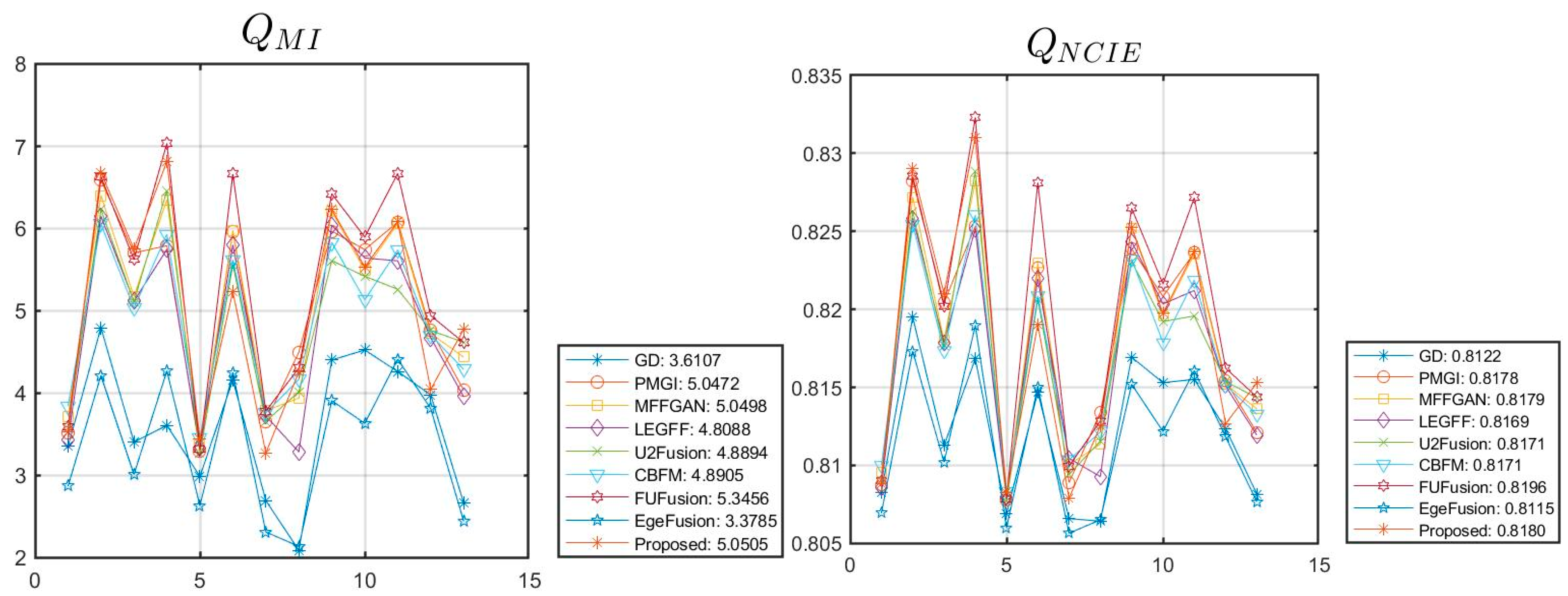

6.2. Fusion Results and Discussion

- (1)

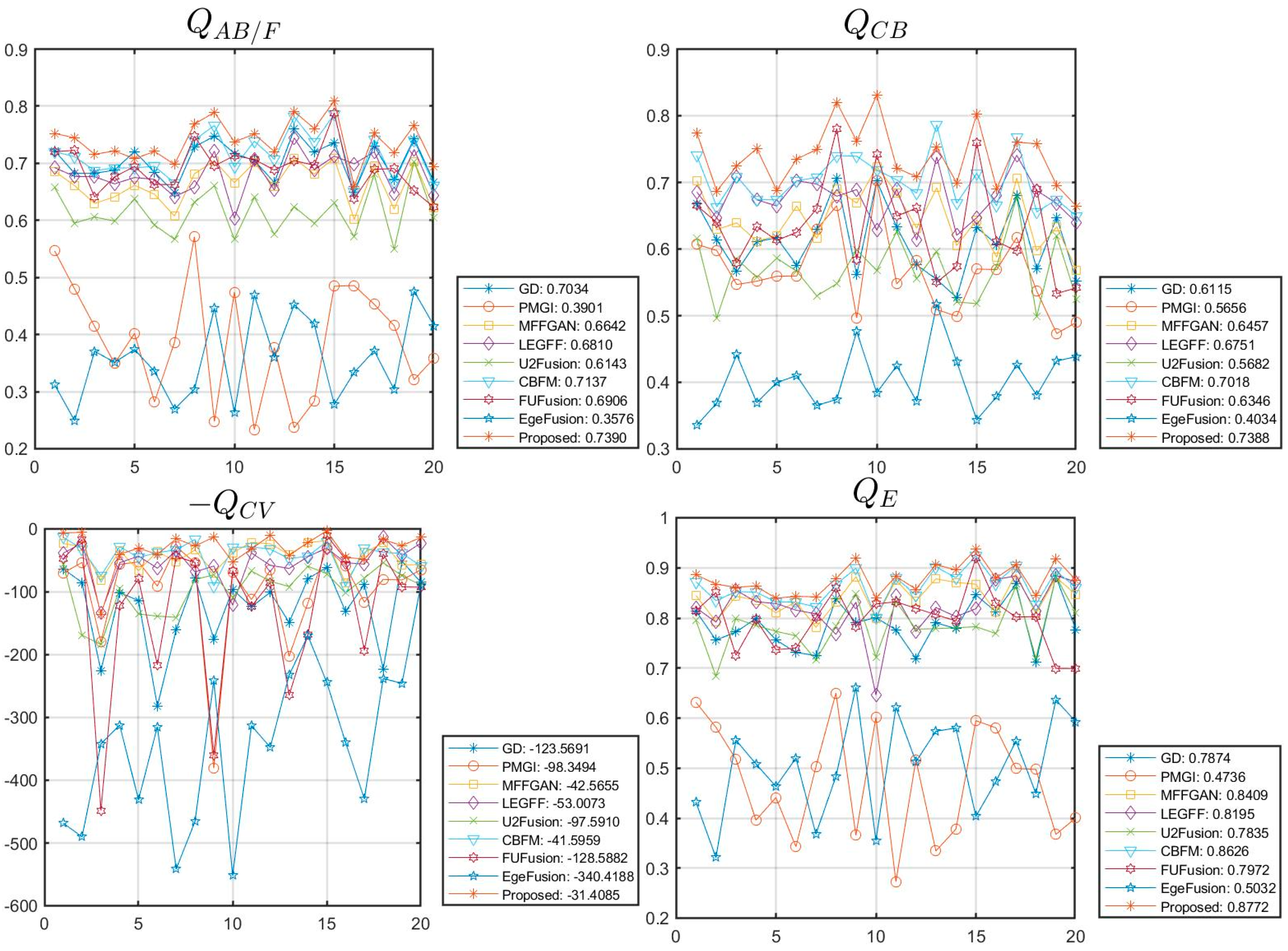

- Results on the Lytro Dataset

- (2)

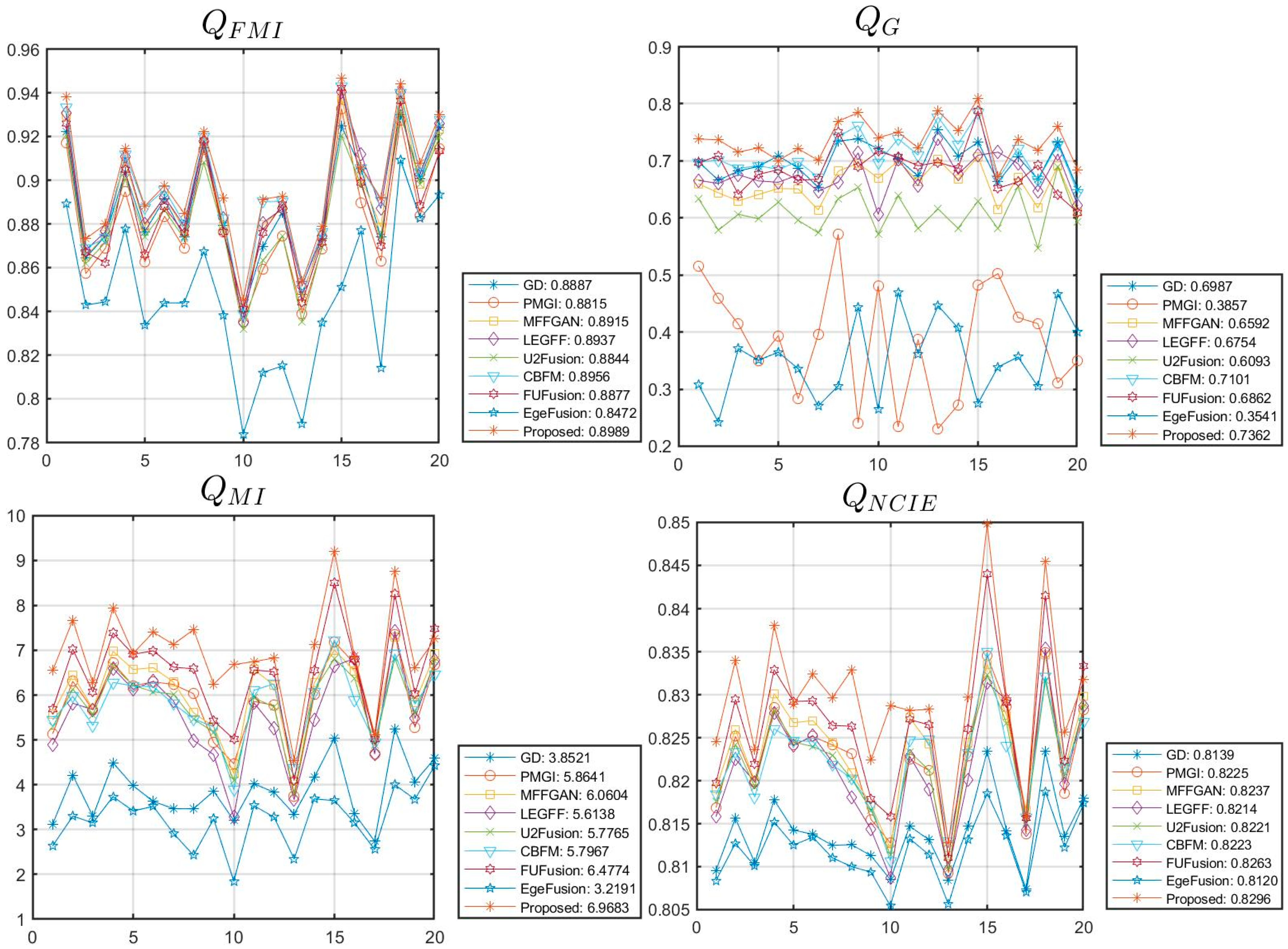

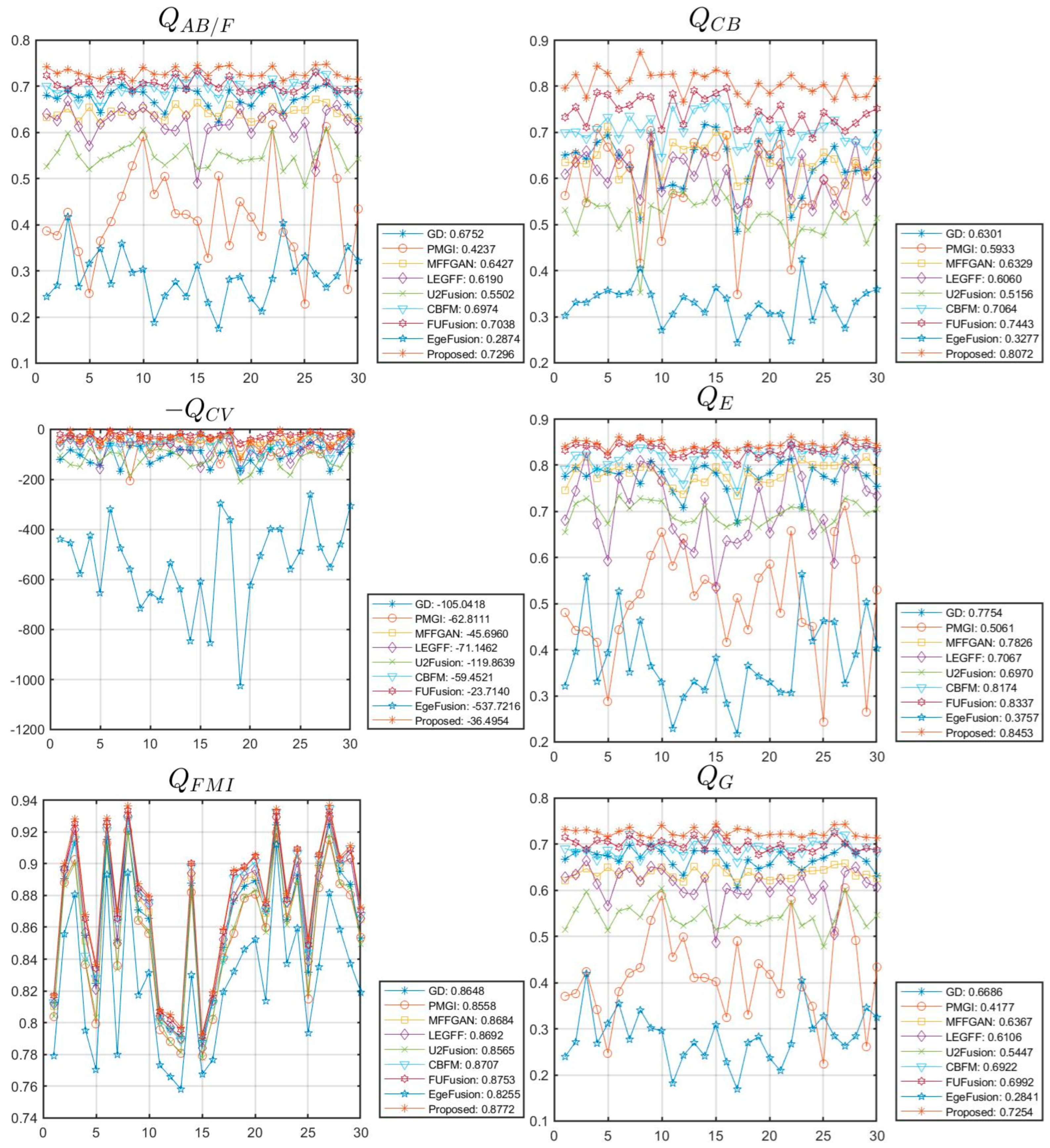

- Results on the MFI-WHU Dataset

- (3)

- Results on the MFFW Dataset

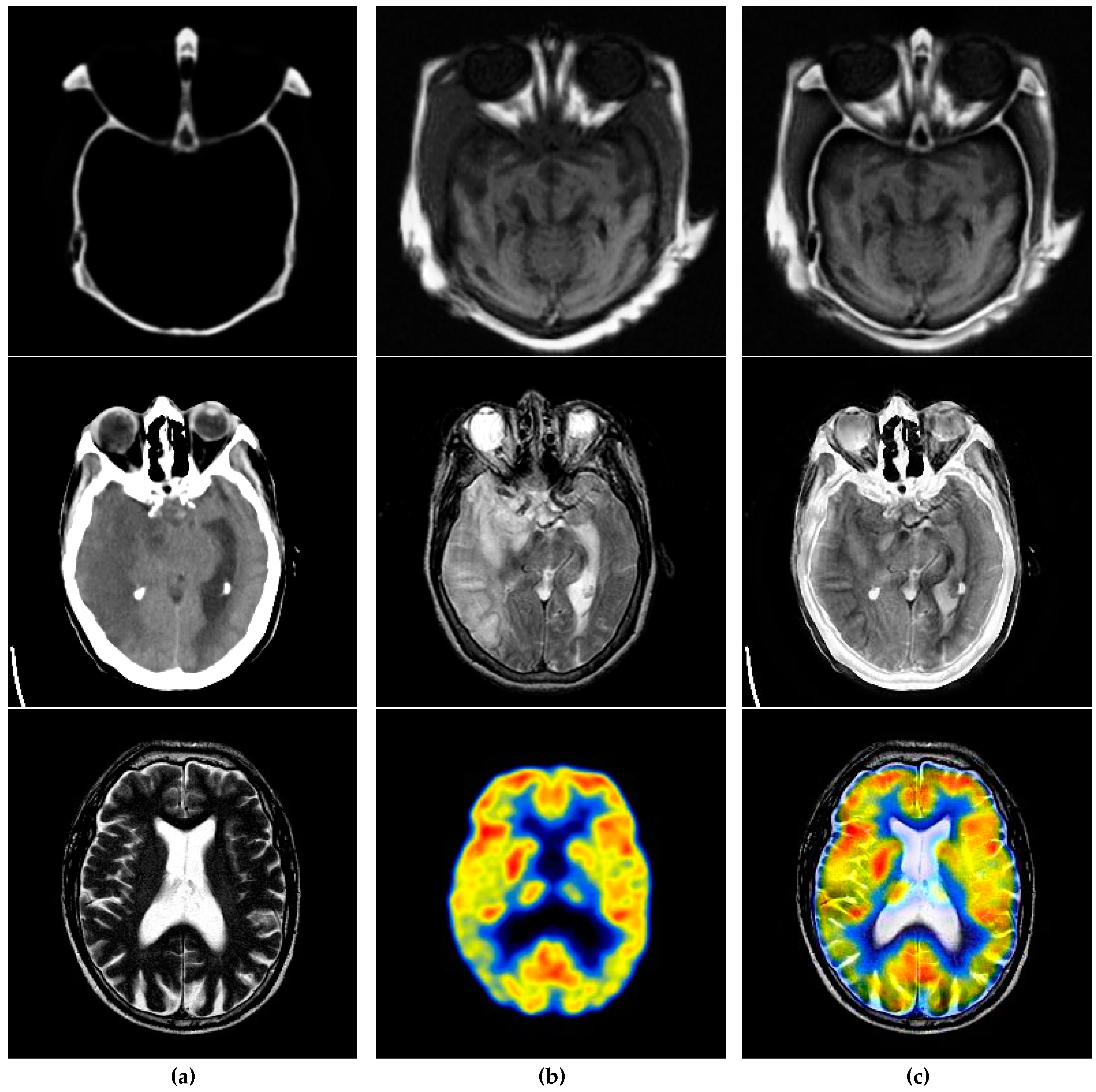

6.3. Application Extension

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Method | Year | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| GD | 2016 | 0.7220 | 0.6684 | 63.5814 | 0.8144 | 0.9222 | 0.6985 | 3.1161 | 0.8096 |
| PMGI | 2020 | 0.5466 | 0.6070 | 70.2785 | 0.6316 | 0.9169 | 0.5156 | 5.1347 | 0.8169 |
| MFFGAN | 2021 | 0.6860 | 0.7026 | 23.3439 | 0.8451 | 0.9296 | 0.6599 | 5.5783 | 0.8190 |
| LEGFF | 2022 | 0.6923 | 0.6857 | 38.6156 | 0.8205 | 0.9306 | 0.6658 | 4.8919 | 0.8158 |
| U2Fusion | 2022 | 0.6575 | 0.6164 | 56.5810 | 0.7952 | 0.9206 | 0.6338 | 5.2894 | 0.8176 |
| CBFM | 2023 | 0.7201 | 0.7403 | 13.3863 | 0.8720 | 0.9334 | 0.6974 | 5.4462 | 0.8184 |
| FUFusion | 2024 | 0.7202 | 0.6652 | 47.2751 | 0.8146 | 0.9259 | 0.6967 | 5.6856 | 0.8197 |
| EgeFusion | 2024 | 0.3120 | 0.3356 | 468.3896 | 0.4318 | 0.8892 | 0.3080 | 2.6294 | 0.8084 |
| Proposed | | 0.7524 | 0.7745 | 6.5508 | 0.8862 | 0.9380 | 0.7382 | 6.5466 | 0.8245 |

| Method | Year | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| GD | 2016 | 0.6823 | 0.6135 | 85.4217 | 0.7559 | 0.8645 | 0.6660 | 4.2116 | 0.8156 |
| PMGI | 2020 | 0.4798 | 0.5977 | 53.3298 | 0.5816 | 0.8573 | 0.4592 | 6.3071 | 0.8251 |
| MFFGAN | 2021 | 0.6609 | 0.6291 | 28.2393 | 0.7931 | 0.8651 | 0.6440 | 6.4491 | 0.8260 |
| LEGFF | 2022 | 0.6770 | 0.6466 | 22.7596 | 0.7920 | 0.8680 | 0.6603 | 5.8173 | 0.8225 |
| U2Fusion | 2022 | 0.5951 | 0.4969 | 168.9820 | 0.6838 | 0.8619 | 0.5786 | 6.1325 | 0.8242 |
| CBFM | 2023 | 0.7116 | 0.6634 | 31.8620 | 0.8348 | 0.8690 | 0.7019 | 5.9728 | 0.8234 |
| FUFusion | 2024 | 0.7226 | 0.6400 | 16.7184 | 0.8511 | 0.8671 | 0.7088 | 7.0218 | 0.8294 |
| EgeFusion | 2024 | 0.2492 | 0.3688 | 490.2211 | 0.3210 | 0.8429 | 0.2419 | 3.2940 | 0.8127 |
| Proposed | | 0.7445 | 0.6870 | 5.3153 | 0.8672 | 0.8732 | 0.7369 | 7.6750 | 0.8339 |

| Method | Year | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| GD | 2016 | 0.7034 | 0.6115 | 123.5691 | 0.7874 | 0.8887 | 0.6987 | 3.8521 | 0.8139 |
| PMGI | 2020 | 0.3901 | 0.5656 | 98.3494 | 0.4736 | 0.8815 | 0.3857 | 5.8641 | 0.8225 |
| MFFGAN | 2021 | 0.6642 | 0.6457 | 42.5655 | 0.8409 | 0.8915 | 0.6592 | 6.0604 | 0.8237 |
| LEGFF | 2022 | 0.6810 | 0.6751 | 53.0073 | 0.8195 | 0.8937 | 0.6754 | 5.6138 | 0.8214 |
| U2Fusion | 2022 | 0.6143 | 0.5682 | 97.5910 | 0.7835 | 0.8844 | 0.6093 | 5.7765 | 0.8221 |
| CBFM | 2023 | 0.7137 | 0.7018 | 41.5959 | 0.8626 | 0.8956 | 0.7101 | 5.7967 | 0.8223 |
| FUFusion | 2024 | 0.6906 | 0.6346 | 128.5882 | 0.7972 | 0.8877 | 0.6862 | 6.4774 | 0.8263 |
| EgeFusion | 2024 | 0.3576 | 0.4034 | 340.4188 | 0.5032 | 0.8472 | 0.3541 | 3.2191 | 0.8120 |
| Proposed | | 0.7390 | 0.7388 | 31.4085 | 0.8772 | 0.8989 | 0.7362 | 6.9683 | 0.8296 |

| Method | Year | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| GD | 2016 | 0.6739 | 0.6571 | 82.3636 | 0.7953 | 0.8906 | 0.6829 | 4.1802 | 0.8180 |
| PMGI | 2020 | 0.3769 | 0.6528 | 25.6514 | 0.4425 | 0.8883 | 0.3772 | 7.0843 | 0.8320 |
| MFFGAN | 2021 | 0.6371 | 0.6336 | 37.0591 | 0.7936 | 0.8950 | 0.6337 | 6.9928 | 0.8315 |
| LEGFF | 2022 | 0.6253 | 0.6348 | 37.4732 | 0.7451 | 0.8959 | 0.6345 | 6.2296 | 0.8271 |
| U2Fusion | 2022 | 0.5571 | 0.4815 | 140.7225 | 0.7173 | 0.8867 | 0.5574 | 6.2829 | 0.8273 |
| CBFM | 2023 | 0.6846 | 0.7014 | 28.2899 | 0.8218 | 0.8963 | 0.6835 | 6.5658 | 0.8290 |
| FUFusion | 2024 | 0.7024 | 0.7546 | 24.4700 | 0.8452 | 0.8970 | 0.7039 | 8.0555 | 0.8390 |
| EgeFusion | 2024 | 0.2691 | 0.3308 | 453.2295 | 0.3956 | 0.8557 | 0.2720 | 3.1227 | 0.8148 |
| Proposed | | 0.7281 | 0.8256 | 6.4076 | 0.8534 | 0.9000 | 0.7288 | 9.0964 | 0.8476 |

| Method | Year | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| GD | 2016 | 0.6876 | 0.5795 | 137.2797 | 0.7862 | 0.8655 | 0.6842 | 3.9284 | 0.8140 |
| PMGI | 2020 | 0.5906 | 0.4640 | 97.4159 | 0.6549 | 0.8559 | 0.5880 | 5.1975 | 0.8190 |
| MFFGAN | 2021 | 0.6536 | 0.5945 | 64.3859 | 0.7661 | 0.8695 | 0.6497 | 5.3730 | 0.8198 |
| LEGFF | 2022 | 0.6546 | 0.5709 | 58.2280 | 0.7660 | 0.8744 | 0.6462 | 4.8070 | 0.8173 |
| U2Fusion | 2022 | 0.6053 | 0.5280 | 86.2756 | 0.7221 | 0.8578 | 0.6035 | 5.1287 | 0.8187 |
| CBFM | 2023 | 0.7031 | 0.6458 | 59.4391 | 0.8212 | 0.8757 | 0.7015 | 5.3351 | 0.8196 |
| FUFusion | 2024 | 0.7070 | 0.7063 | 30.6077 | 0.8411 | 0.8784 | 0.7061 | 5.9021 | 0.8224 |
| EgeFusion | 2024 | 0.3031 | 0.2703 | 653.9751 | 0.3296 | 0.8315 | 0.2953 | 2.8712 | 0.8110 |
| Proposed | | 0.7408 | 0.8250 | 38.0683 | 0.8550 | 0.8795 | 0.7409 | 7.8104 | 0.8348 |

| Method | Year | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| GD | 2016 | 0.6752 | 0.6301 | 105.0418 | 0.7754 | 0.8648 | 0.6686 | 3.6940 | 0.8136 |
| PMGI | 2020 | 0.4237 | 0.5933 | 62.8111 | 0.5061 | 0.8558 | 0.4177 | 5.4884 | 0.8210 |
| MFFGAN | 2021 | 0.6427 | 0.6329 | 45.6960 | 0.7826 | 0.8684 | 0.6367 | 5.6832 | 0.8222 |
| LEGFF | 2022 | 0.6190 | 0.6060 | 71.1462 | 0.7067 | 0.8692 | 0.6106 | 4.8291 | 0.8183 |
| U2Fusion | 2022 | 0.5502 | 0.5156 | 119.8639 | 0.6970 | 0.8565 | 0.5447 | 5.1498 | 0.8194 |
| CBFM | 2023 | 0.6974 | 0.7064 | 59.4521 | 0.8174 | 0.8707 | 0.6922 | 5.6757 | 0.8224 |
| FUFusion | 2024 | 0.7038 | 0.7443 | 23.7140 | 0.8337 | 0.8753 | 0.6992 | 6.6901 | 0.8284 |
| EgeFusion | 2024 | 0.2874 | 0.3277 | 537.7216 | 0.3757 | 0.8255 | 0.2841 | 2.8055 | 0.8111 |
| Proposed | | 0.7296 | 0.8072 | 36.4954 | 0.8453 | 0.8772 | 0.7254 | 7.8107 | 0.8371 |

| Method | Year | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| GD | 2016 | 0.7297 | 0.4875 | 110.5392 | 0.6970 | 0.8882 | 0.6829 | 4.7958 | 0.8195 |
| PMGI | 2020 | 0.4084 | 0.4709 | 61.0451 | 0.3863 | 0.8842 | 0.3766 | 6.5896 | 0.8282 |
| MFFGAN | 2021 | 0.6626 | 0.5677 | 57.0983 | 0.7777 | 0.8923 | 0.6165 | 6.3993 | 0.8272 |
| LEGFF | 2022 | 0.7001 | 0.5947 | 60.1930 | 0.7886 | 0.8968 | 0.6457 | 6.1499 | 0.8258 |
| U2Fusion | 2022 | 0.5914 | 0.5325 | 101.9227 | 0.6879 | 0.8790 | 0.5539 | 6.2297 | 0.8262 |
| CBFM | 2023 | 0.7037 | 0.6146 | 51.8537 | 0.8237 | 0.8933 | 0.6588 | 6.0571 | 0.8254 |
| FUFusion | 2024 | 0.6992 | 0.5522 | 76.2735 | 0.7652 | 0.8897 | 0.6479 | 6.6305 | 0.8285 |
| EgeFusion | 2024 | 0.3877 | 0.4132 | 365.6116 | 0.4142 | 0.8406 | 0.3611 | 4.2145 | 0.8173 |
| Proposed | | 0.7013 | 0.6155 | 33.3908 | 0.8290 | 0.8987 | 0.6571 | 6.6801 | 0.8290 |

| Method | Year | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| GD | 2016 | 0.6279 | 0.5557 | 217.9965 | 0.7011 | 0.8730 | 0.6114 | 3.6107 | 0.8122 |
| PMGI | 2020 | 0.3807 | 0.5057 | 275.9272 | 0.4245 | 0.8675 | 0.3675 | 5.0472 | 0.8178 |
| MFFGAN | 2021 | 0.5905 | 0.5851 | 138.1153 | 0.7557 | 0.8742 | 0.5744 | 5.0498 | 0.8179 |
| LEGFF | 2022 | 0.6294 | 0.6032 | 172.4173 | 0.7386 | 0.8775 | 0.6165 | 4.8088 | 0.8169 |
| U2Fusion | 2022 | 0.5537 | 0.5499 | 228.0064 | 0.7076 | 0.8690 | 0.5388 | 4.8894 | 0.8171 |
| CBFM | 2023 | 0.6352 | 0.6408 | 126.1058 | 0.7912 | 0.8748 | 0.6203 | 4.8905 | 0.8171 |
| FUFusion | 2024 | 0.5987 | 0.5779 | 226.8354 | 0.6974 | 0.8718 | 0.5817 | 5.3456 | 0.8196 |
| EgeFusion | 2024 | 0.3517 | 0.4213 | 443.4456 | 0.4581 | 0.8380 | 0.3439 | 3.3785 | 0.8115 |
| Proposed | | 0.6377 | 0.6362 | 116.0756 | 0.8048 | 0.8799 | 0.6224 | 5.0505 | 0.8180 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, L.; Zhao, X.; Hou, H.; Zhang, X.; Lv, M.; Jia, Z.; Ma, H. Fractal Dimension-Based Multi-Focus Image Fusion via Coupled Neural P Systems in NSCT Domain. Fractal Fract. 2024, 8, 554. https://doi.org/10.3390/fractalfract8100554
