1. Introduction

Determining the roots of nonlinear equations of the form

$$f(x) = 0 \qquad (1)$$

is among the oldest problems in science and engineering, dating back to at least 2000 BC, when the Babylonians discovered a general solution to quadratic equations. In 1079, Omar Khayyam developed a geometric method for solving cubic equations. In 1545, in his book Ars Magna, Gerolamo Cardano published a general solution to the cubic polynomial equation. Cardano was one of the first authors to use complex numbers, but only as a means of deriving real solutions of generic polynomials. In 1824, Niels Henrik Abel [1] proved the impossibility theorem that bears his name: there is no solution in radicals to the general polynomial equation of degree five or higher with arbitrary coefficients. The fact, first asserted in the 17th century, that every generic polynomial equation of positive degree has a solution, possibly non-real, was fully proved at the beginning of the 19th century and is known as the fundamental theorem of algebra [2].

From the beginning of the 16th century to the end of the 19th century, one of the main problems in algebra was to find a formula that computed the solutions of a generic polynomial equation of degree five or higher with arbitrary coefficients. Because no such general closed-form formula exists, we must rely on numerical techniques to approximate the roots of higher-degree polynomials. These numerical algorithms fall into two categories: those that estimate one root at a time and those that approximate all roots simultaneously. Work in this area began in 1970, with the primary goal of developing iterative techniques that could locate polynomial roots on contemporary, state-of-the-art computers with optimal speed and efficiency [3]. Iterative approaches are currently used to find polynomial roots in a variety of disciplines. In signal processing, polynomial roots correspond to the frequencies of signals representing sounds, images, and movies. In control theory, they characterize the behavior of control systems and can be used to improve system stability or performance. In finance, the prices of options and futures contracts are determined by estimating the roots of (1); it is therefore critical to compute these roots accurately so that the contracts can be priced precisely.

In recent years, many iterative techniques for estimating the roots of nonlinear equations have been proposed. These methods use quadrature rules, interpolation techniques, error analysis, and other tools to raise the convergence order of previously known single-root-finding procedures [4,5,6]. In this paper, we focus on iterative approaches that solve single-variable nonlinear equations one root at a time, and we then generalize them to locate all distinct roots of nonlinear equations simultaneously. A sequential approach to finding all the zeros of a polynomial requires repeated deflation, which can produce significantly inaccurate results because rounding errors propagate in finite-precision floating-point arithmetic. We therefore employ simultaneous approaches, which are more accurate, efficient, and stable. The literature is vast and dates back to 1891, when Weierstrass introduced the single-step, derivative-free simultaneous method [7] for finding all polynomial roots; this method was later rediscovered by Kerner [8], Durand [9], Dochev [10], and Presic [11]. Gauss–Seidel [12] and Petkovic et al. [13] introduced second-order methods for approximating all roots simultaneously; Börsch-Supan [14] and Mir [15] presented third-order methods; Provinic et al. [16] introduced a fourth-order method; and Zhang et al. [17] a fifth-order method. Further gains in efficiency were demonstrated by Ehrlich [18] and Milovanovic et al. [19]; Nourein proposed a fourth-order method [20]; and Petkovic et al. proposed a sixth-order simultaneous method with derivatives [21]. Former [22] introduced the percentage efficacy of simultaneous methods in 2014. Later, in 2015, Proinov et al. [23] presented a general convergence theorem for simultaneous methods and applied it to the Weierstrass root-approximation method. In 2016, Nedzhibov [24] developed a modified version of the Weierstrass method, and in 2020, Marcheva et al. [25] presented a local convergence theorem. Shams et al. presented computational efficiency ratios for simultaneous approaches with respect to the initial vectors used to locate all polynomial roots in 2020 [26,27], and studied their global convergence in 2022 [28]. Further advances in the field can be found in [29,30,31,32,33] and the references therein.
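To make the simultaneous idea concrete, the classical Weierstrass (Durand–Kerner) iteration cited above can be sketched in a few lines. This is a minimal sketch of the classical method only, not of the FINS schemes proposed in this paper; it assumes coefficients given in descending order, uses the common starting values (0.4 + 0.9i)^k, updates all approximations together (total-step form), and stops when every correction falls below a tolerance or after 5000 iterations.

```python
import numpy as np

def weierstrass(coeffs, tol=1e-12, max_iter=5000):
    """Approximate all roots of a polynomial simultaneously (Weierstrass/Durand-Kerner).

    coeffs: coefficients in descending order, e.g. [1, -6, 11, -6] for x^3 - 6x^2 + 11x - 6.
    Returns (approximations, iterations used).
    """
    a = np.asarray(coeffs, dtype=complex)
    a = a / a[0]                              # normalize to a monic polynomial
    n = len(a) - 1
    # Classical starting values: powers of a non-real number that is not a root of unity.
    x = (0.4 + 0.9j) ** np.arange(n)
    for k in range(max_iter):
        # Weierstrass correction W_i = f(x_i) / prod_{j != i} (x_i - x_j)
        diff = x[:, None] - x[None, :]
        np.fill_diagonal(diff, 1.0)           # exclude the j == i factor from the product
        w = np.polyval(a, x) / diff.prod(axis=1)
        x = x - w                             # all approximations updated at once
        if np.max(np.abs(w)) < tol:
            return x, k + 1
    return x, max_iter
```

For example, `weierstrass([1, -6, 11, -6])` returns approximations of 1, 2, and 3 together with the number of iterations used. No deflation is performed, so each root is refined directly from the original coefficients.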

The primary goal of this study is to develop Caputo-type fractional inverse simultaneous schemes that are more robust, more stable, computationally cheaper, and more CPU-efficient than existing methods. The theory and analysis of inverse fractional parallel Caputo-type numerical schemes, as well as their practical implementation using artificial neural networks (ANNs) to approximate all roots of (1), are examined in detail. Classical simultaneous schemes, Caputo-type fractional inverse simultaneous schemes, and simultaneous schemes based on ANNs are discussed and compared. The main contributions of this study are as follows:

Two novel fractional inverse simultaneous Caputo-type methods are introduced in order to locate all the roots of (

1).

A local convergence analysis is presented for the parallel fractional inverse numerical schemes that are proposed.

A rigorous complexity analysis is provided to demonstrate the increased efficiency of the new method.

The Levenberg–Marquardt Algorithm is utilized to compute all of the roots using ANNs.

The global convergence behavior of the proposed inverse fractional parallel root-finding method with random initial estimate values is illustrated.

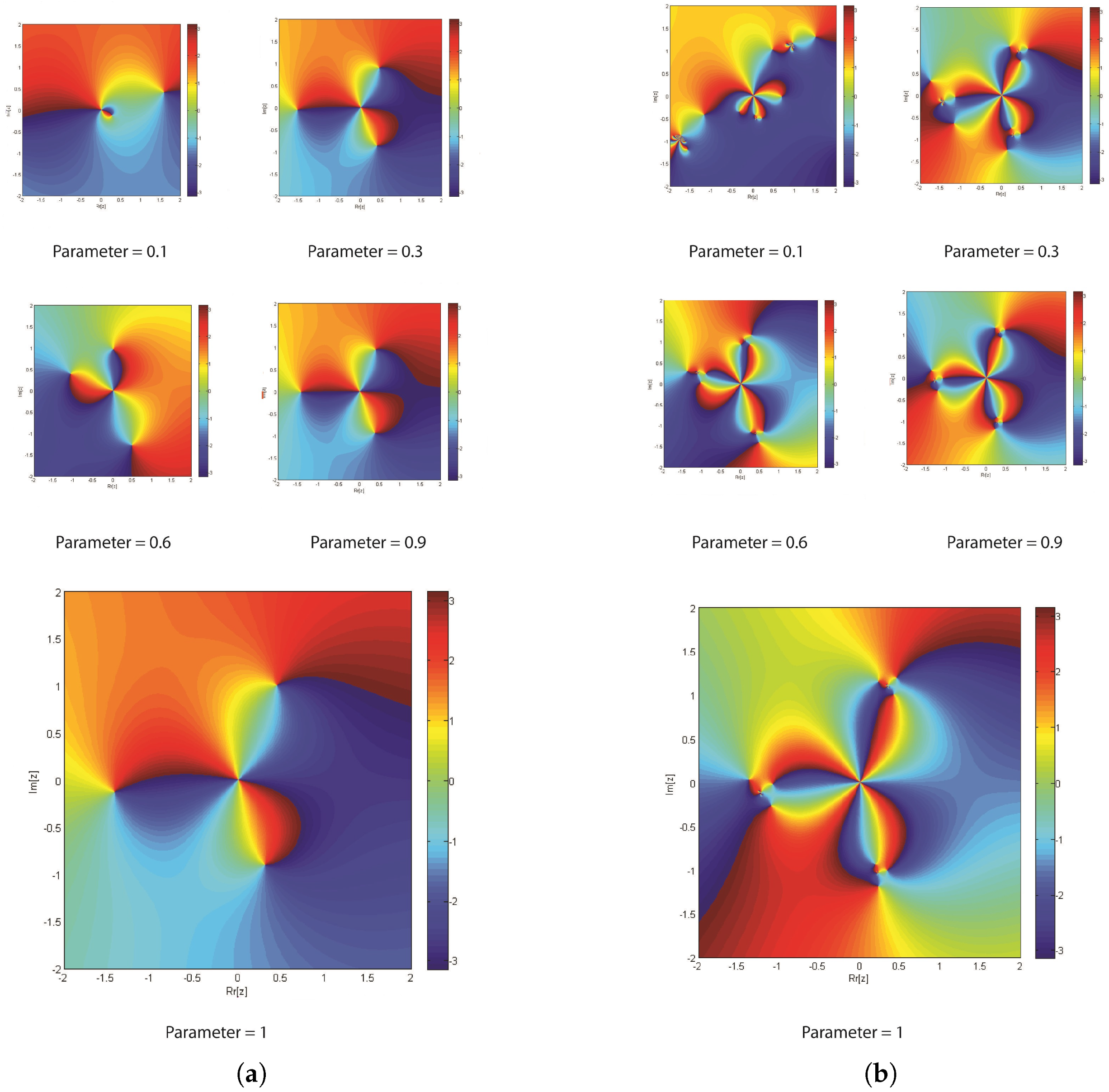

The efficiency and stability of the new method are numerically assessed using dynamical planes.

The general applicability of the method for various nonlinear engineering problems is thoroughly studied using different stopping criteria and random initial guesses.

To the best of our knowledge, this contribution is novel: a review of the existing literature indicates that research on fractional parallel numerical methods for simultaneously locating all roots of (1) is extremely limited. This paper is organized as follows. Section 2 gives a number of fundamental definitions. Section 3 covers the construction, analysis, and assessment of the inverse fractional algorithms. Section 4 compares the artificial neural network aspects of the newly developed parallel methods with those of existing schemes. Section 5 details a cost analysis of classical and fractional parallel schemes. Section 6 presents a dynamical analysis of global convergence. To evaluate the newly developed techniques against the parallel algorithms currently documented in the literature, Section 7 solves a number of nonlinear engineering applications and reports the numerical results; it also compares the global convergence behavior of the inverse parallel scheme with that of a simultaneous neural-network-based method. Finally, Section 8 draws some conclusions from this work.

7. Analysis of Numerical Results

In this section, we present several numerical experiments that compare the performance of our two proposed simultaneous FINS methods of order ten with that of the ANN on some real-world applications. The calculations were carried out in quadruple-precision (128-bit) floating-point arithmetic using Maple 18. The algorithm was terminated based on the stopping criterion:

where

represents the absolute error. The stopping criteria for both the fractional inverse numerical simultaneous method and the ANN training were 5000 iterations and

. The elapsed times were measured on a laptop equipped with a third-generation Intel Core i3 CPU and 4 GB of RAM. In our experiments, we compare the results of the newly developed fractional numerical schemes FINS–FINS and FINS–FINS with those of the Weierstrass method (WDKM), defined as

the convergent method by Zhang et al. (ZPHM), defined as

and the Petkovic method (MPM), defined as

where

and

We generate random starting values using Algorithms 1 and 2, as shown in Table A1, Table A2, Table A3, Table A4, Table A5, and Table A6. The parameter values used in the numerical results are reported below.

| The ANN parameter values used in Examples 1–4. |
|---|
| Epochs: |
| MSE: |
| Gradient: |
| Mu: |

Real-World Applications

In this section, we apply our two new inverse FINS methods to solve several real-world applications.

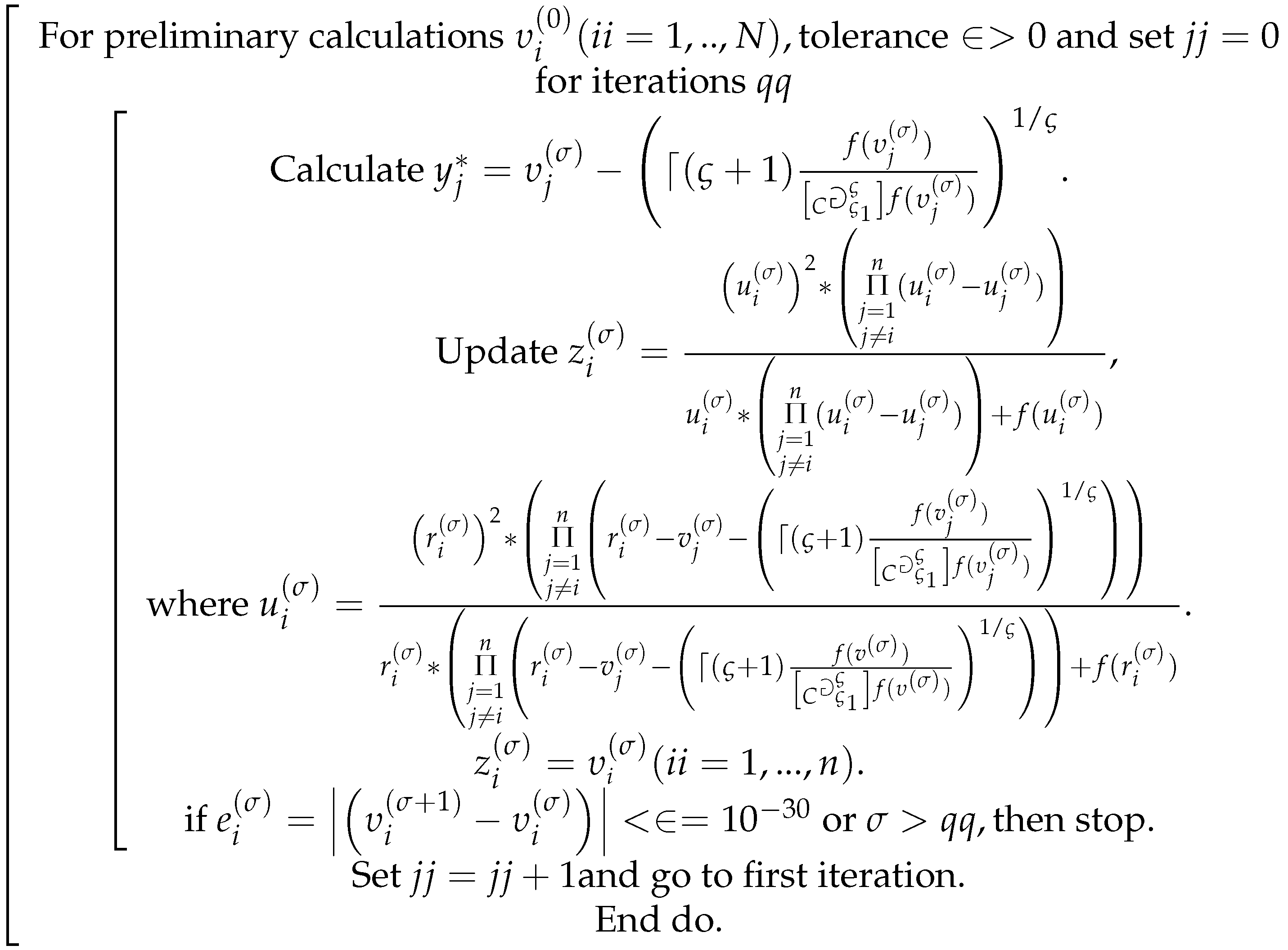

Algorithm 1: Inverse fractional numerical scheme (FINS).

Algorithm 2: Finding the random coefficients of the polynomial.
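The idea behind Algorithm 2 (random polynomial coefficients, here drawn from [0, 1] as described for the ANN data sets) together with a random starting vector for a simultaneous iteration can be sketched as follows. This is a hypothetical illustration, not the authors' algorithm: the helper names, the seed, the small floor on the leading coefficient, and the way complex initial guesses are formed are all assumptions.

```python
import numpy as np

def random_polynomial(degree, rng):
    """Hypothetical helper: coefficients (descending order) drawn uniformly from [0, 1)."""
    coeffs = rng.random(degree + 1)
    coeffs[0] = max(coeffs[0], 1e-3)   # keep the leading coefficient away from zero
    return coeffs

def random_initial_guess(degree, rng, scale=1.0):
    """Hypothetical helper: random complex starting vector, one entry per root."""
    return scale * (rng.random(degree) + 1j * rng.random(degree))

rng = np.random.default_rng(seed=0)
c = random_polynomial(4, rng)
x0 = random_initial_guess(4, rng)
exact = np.roots(c)                    # reference roots (eigenvalues of the companion matrix)
```

Complex starting values are used because real starting values cannot reach non-real roots of a real polynomial under a simultaneous iteration with real arithmetic.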

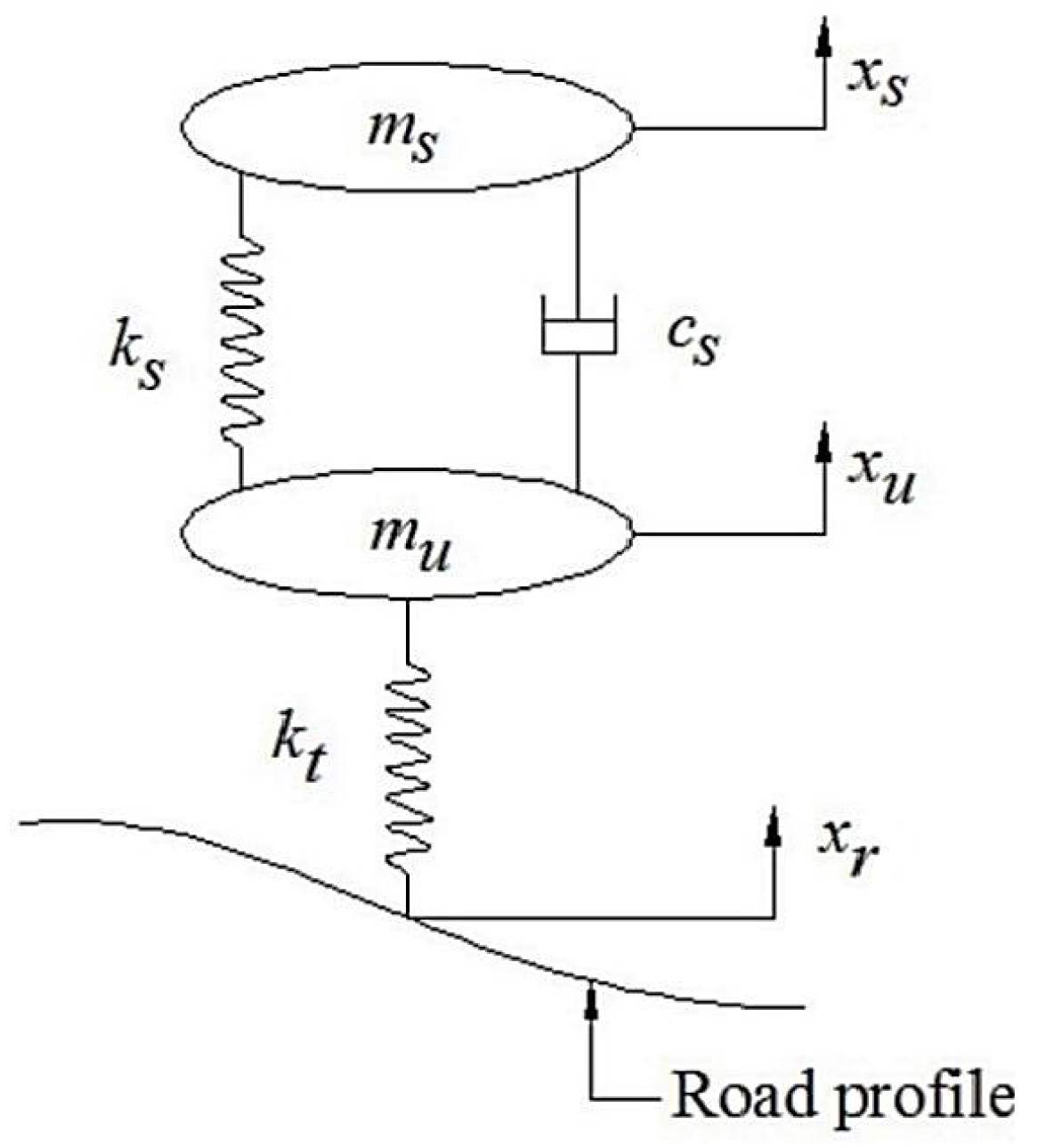

Example 1 (Quarter-car suspension model).

The shock absorber, or damper, is the component of the suspension system that controls the transient behavior of the vehicle mass and the suspension mass (see Pulvirenti [70] and Konieczny [71]). Because of its nonlinear behavior, it is one of the most complicated suspension system components. The damping force of the damper is characterized by an asymmetric nonlinear hysteresis loop (Liu [72]). In this example, the vehicle's characteristics are simulated using a quarter-car model with two degrees of freedom, and the damper effect is investigated using both linear and nonlinear damping characteristics. Simpler models, such as linear and independently linear ones, fall short of explaining the damper's behavior. The equations of motion of the masses are as follows:

where

and

are the suspension spring stiffness and tire stiffness coefficients;

and

are the sprung and unsprung masses; and

and

are the displacements of the sprung and unsprung masses.

The damping force coefficient F in (43) is approximated by the polynomial [73]:

measuring the displacement, velocity, and acceleration of a mass over time.

Figure 4 illustrates how the model can be used to develop and optimize vehicle systems for a range of driving situations, including ride comfort, handling, and stability.

The Caputo-type derivative of (44) is given as:

The exact roots of Equation (44) are

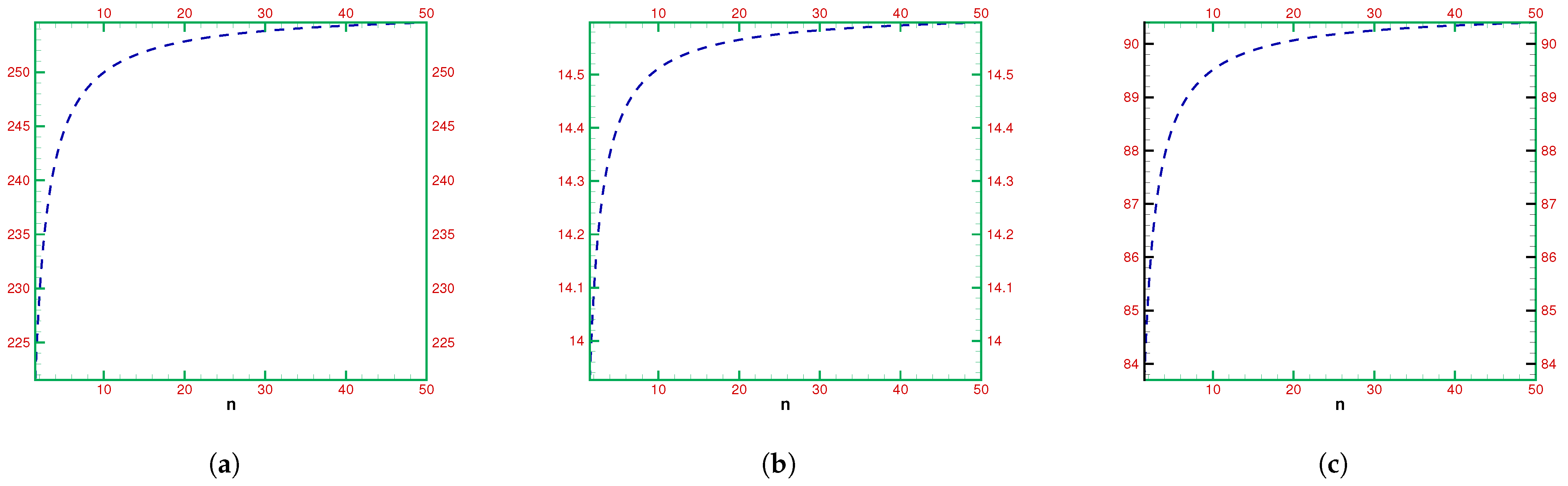
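For polynomials such as (44), (46), (50), and (52), a Caputo derivative of order α ∈ (0, 1] with lower terminal 0 can be applied term by term using the standard power rule $D^{\alpha} x^{k} = \frac{\Gamma(k+1)}{\Gamma(k+1-\alpha)} x^{k-\alpha}$ for $k \ge 1$, with constants mapped to zero. The sketch below implements only that rule; it assumes lower terminal 0 and evaluation at x > 0 (real powers of negative or complex arguments need a branch choice), and it is an illustration rather than the exact operator used inside the FINS schemes.

```python
from math import gamma

def caputo_poly(coeffs, alpha, x):
    """Caputo derivative (lower terminal 0) of order alpha in (0, 1] of
    p(x) = sum_k coeffs[k] * x**k, evaluated at a real point x > 0.

    coeffs are in ascending order: coeffs[k] multiplies x**k.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must lie in (0, 1]")
    if x <= 0:
        raise ValueError("this sketch only evaluates at x > 0")
    total = 0.0
    for k, a_k in enumerate(coeffs):
        if k == 0:
            continue  # the Caputo derivative of a constant is zero
        total += a_k * gamma(k + 1) / gamma(k + 1 - alpha) * x ** (k - alpha)
    return total
```

Setting `alpha = 1.0` recovers the ordinary derivative: for p(x) = 1 + 2x + 3x², `caputo_poly([1, 2, 3], 1.0, 2.0)` gives 2 + 6·2 = 14.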

Next, we determine the convergence rate and computational order of the numerical schemes FINS–FINS. To quantify the global convergence rate of the inverse parallel fractional scheme, a random initial guess vector [0.213, 0.124, 1.02, 1425] is generated using the built-in MATLAB rand() function. With this random initial estimate, FINS converges to the exact roots after 9, 8, 7, and 7 iterations and requires, respectively, 0.04564, 0.07144, 0.07514, 0.01247, and 0.045451 s to converge for the fractional parameters 0.1, 0.3, 0.5, 0.8, and 1.0. The results in Table 4 indicate that the rate of convergence of FINS increases as the fractional parameter grows from 0.1 to 1.0. Unlike the ANNs, our newly developed algorithm converges to the exact roots for a range of random initial guesses, confirming global convergence behavior.
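The computational order of convergence mentioned above is commonly estimated from three consecutive error values as $\rho \approx \ln(e_{k+1}/e_k) / \ln(e_k/e_{k-1})$. A minimal sketch, assuming the errors are successive correction norms such as $e_k = \|x^{(k+1)} - x^{(k)}\|$ (the exact error definition behind the tables may differ):

```python
import math

def coc(errors):
    """Estimate the computational order of convergence from the last three errors."""
    if len(errors) < 3:
        raise ValueError("need at least three error values")
    e0, e1, e2 = errors[-3:]
    return math.log(e2 / e1) / math.log(e1 / e0)

# Idealized quadratically convergent sequence: e_{k+1} = e_k ** 2
errs = [1e-1, 1e-2, 1e-4, 1e-8]
print(round(coc(errs), 6))  # 2.0
```

In practice the estimate is only meaningful once the iteration is in its asymptotic regime and before the errors reach the working precision.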

When the initial approximation is close to the exact roots, the rate of convergence increases significantly, as illustrated in Table 5 and Table 6.

The following initial estimate values increase the convergence rates:

The outcomes of the ANN-based inverse simultaneous schemes (ANNFN

–ANNFN

) are shown in

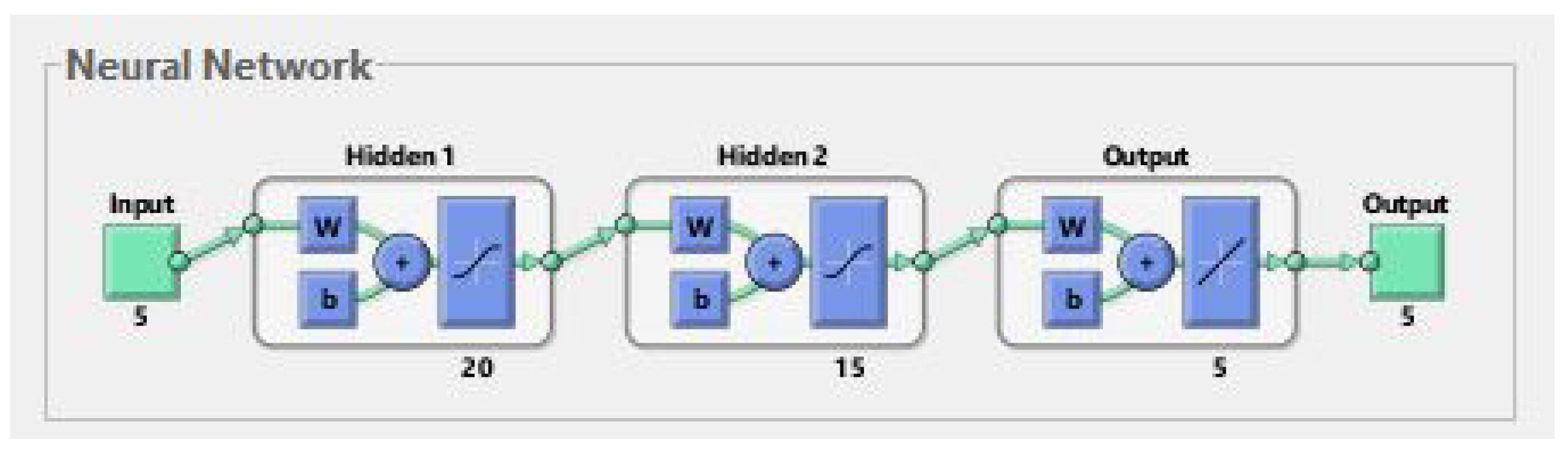

Table 7. The real coefficients of the nonlinear equations utilized in engineering application 1 were fed into the ANNs, and the output was the exact roots of the relevant nonlinear equations, as shown in

Figure 1. The head of the data set utilized in the ANNs is shown in

Table A1 and

Table A5, which provides the approximate root of the nonlinear equation used in engineering application 1 as an output. To generate the data sets, polynomial coefficients were produced at random in the interval [0, 1], and the exact roots were calculated using Matlab. According to

Appendix A Table A1, the ANNs are currently unaware of which roots are real and which roots are complex; therefore, the ANNs were trained using

of the samples from these data sets. The remaining

was utilized to assess the ANNs’ generalization skills by computing a performance metric on the samples that were not used to train the ANNs. For a polynomial of degree 4, the ANN required 5 input data points, two hidden layers, and 10 output data points (the real and imaginary parts of the calculated root). In order to represent all the roots of engineering application 1,

Figure 5a,

Figure 6a,

Figure 7a,

Figure 8a,

Figure 9a, display the error histogram (EPH), mean square error (MSE), regression plot (RP), transition statistics (TS), and fitness overlapping graphs of the target and outcomes of the LMA-ANN for each instance’s training, testing, and validation.
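The data-set construction described above (random coefficients in [0, 1] as inputs, the real and imaginary parts of the roots as targets, and a split into training and held-out samples) can be sketched as follows. This is a hypothetical reconstruction: the function name, the sorting of roots, the seed, and the split fraction passed in the usage line are assumptions, since the training percentage is not given here. In this particular layout, a degree-n polynomial yields n + 1 inputs and 2n targets.

```python
import numpy as np

def build_root_dataset(degree, n_samples, train_fraction, seed=0):
    """Hypothetical sketch: random-coefficient polynomials mapped to their roots.

    Inputs X: the degree + 1 coefficients (descending order), drawn from [0, 1).
    Targets Y: real parts followed by imaginary parts of the roots (2 * degree values).
    Roots are sorted (by real part, then imaginary part) so that each target
    column always refers to a consistently ordered root.
    """
    rng = np.random.default_rng(seed)
    X = rng.random((n_samples, degree + 1))
    X[:, 0] = np.maximum(X[:, 0], 1e-3)          # avoid a vanishing leading coefficient
    Y = np.empty((n_samples, 2 * degree))
    for s in range(n_samples):
        r = np.sort_complex(np.roots(X[s]))
        Y[s, :degree] = r.real
        Y[s, degree:] = r.imag
    idx = rng.permutation(n_samples)             # shuffle before splitting
    n_train = int(round(train_fraction * n_samples))
    train, test = idx[:n_train], idx[n_train:]
    return (X[train], Y[train]), (X[test], Y[test])

# 0.7 is an arbitrary illustrative split, not the paper's value.
(X_tr, Y_tr), (X_te, Y_te) = build_root_dataset(degree=4, n_samples=100, train_fraction=0.7)
```

Sorting the roots matters because a polynomial's roots have no natural order; without a fixed convention, the same root could appear in different target columns for similar inputs, which makes the regression task harder.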

Table 7 summarizes the performance of ANNFN–ANNFN in terms of the mean square error (MSE), percentage effectiveness (Per-E), execution time in seconds (Ex-time), and iteration number (Error-it).

The numerical results of the simultaneous schemes with initial guess values close to the exact roots are shown in Table 8. In terms of the residual error, CPU time, and maximum error (Max-Error), our new methods perform better than the existing methods after the same number of iterations.
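The comparison metrics used in Table 8 can be computed along the following lines. The definitions below are common choices and are assumed here, since the exact formulas are not stated in this section: Max-Error is taken as the largest distance from an approximation to its nearest exact root, and the residual error as the largest value of |f(x_i)|.

```python
import numpy as np

def max_error(approx, exact):
    """Max over i of |x_i - nearest exact root| (assumed definition of Max-Error)."""
    approx = np.asarray(approx, dtype=complex)
    exact = np.asarray(exact, dtype=complex)
    dist = np.abs(approx[:, None] - exact[None, :])
    return dist.min(axis=1).max()

def max_residual(coeffs, approx):
    """Max over i of |f(x_i)| for a polynomial with coefficients in descending order."""
    return np.abs(np.polyval(coeffs, np.asarray(approx, dtype=complex))).max()

exact = [1.0, 2.0, 3.0]
approx = [1.0 + 1e-10, 2.0 - 2e-10, 3.0]
print(max_error(approx, exact))                # about 2e-10
print(max_residual([1, -6, 11, -6], approx))
```

Reporting both quantities is useful because a small residual does not guarantee a small root error when roots are clustered or ill-conditioned.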

The proposed ANN-based root-finding method, represented in Figure 1, is trained with the Levenberg–Marquardt algorithm. The “nftool” fitting tool, included in MATLAB's neural network toolbox, is used to approximate the roots of polynomials with randomly generated coefficients.

For training, testing, and validation, the input and output results of the LMA-ANNs’ fitness overlapping graphs are shown in

Figure 5a. According to the histogram, the error is

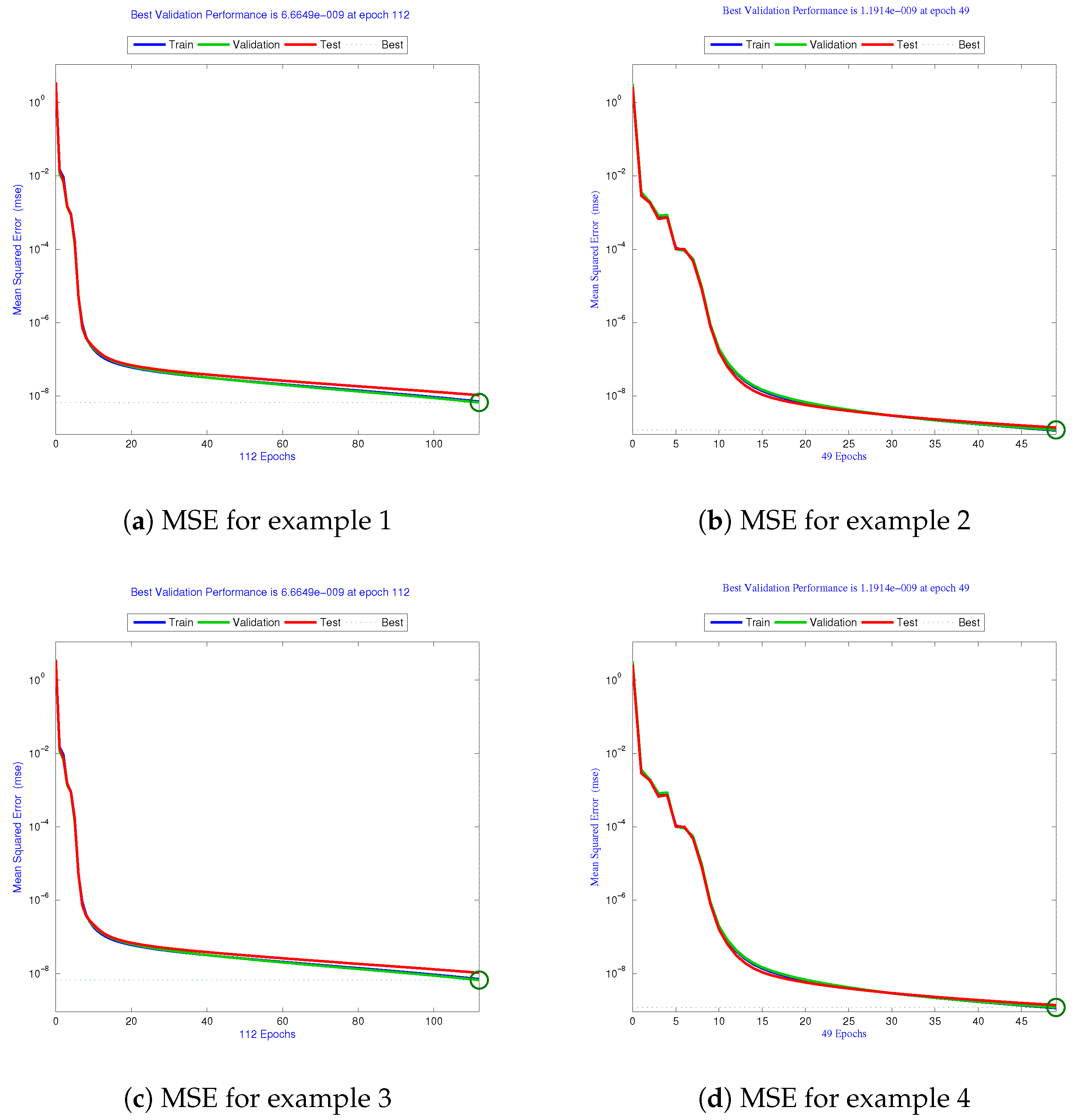

, demonstrating the consistency of the suggested solver. For engineering application 1, the MSE of the LMA-ANNs when comparing the expected outcome to the target solution is

at epoch 112, as shown in

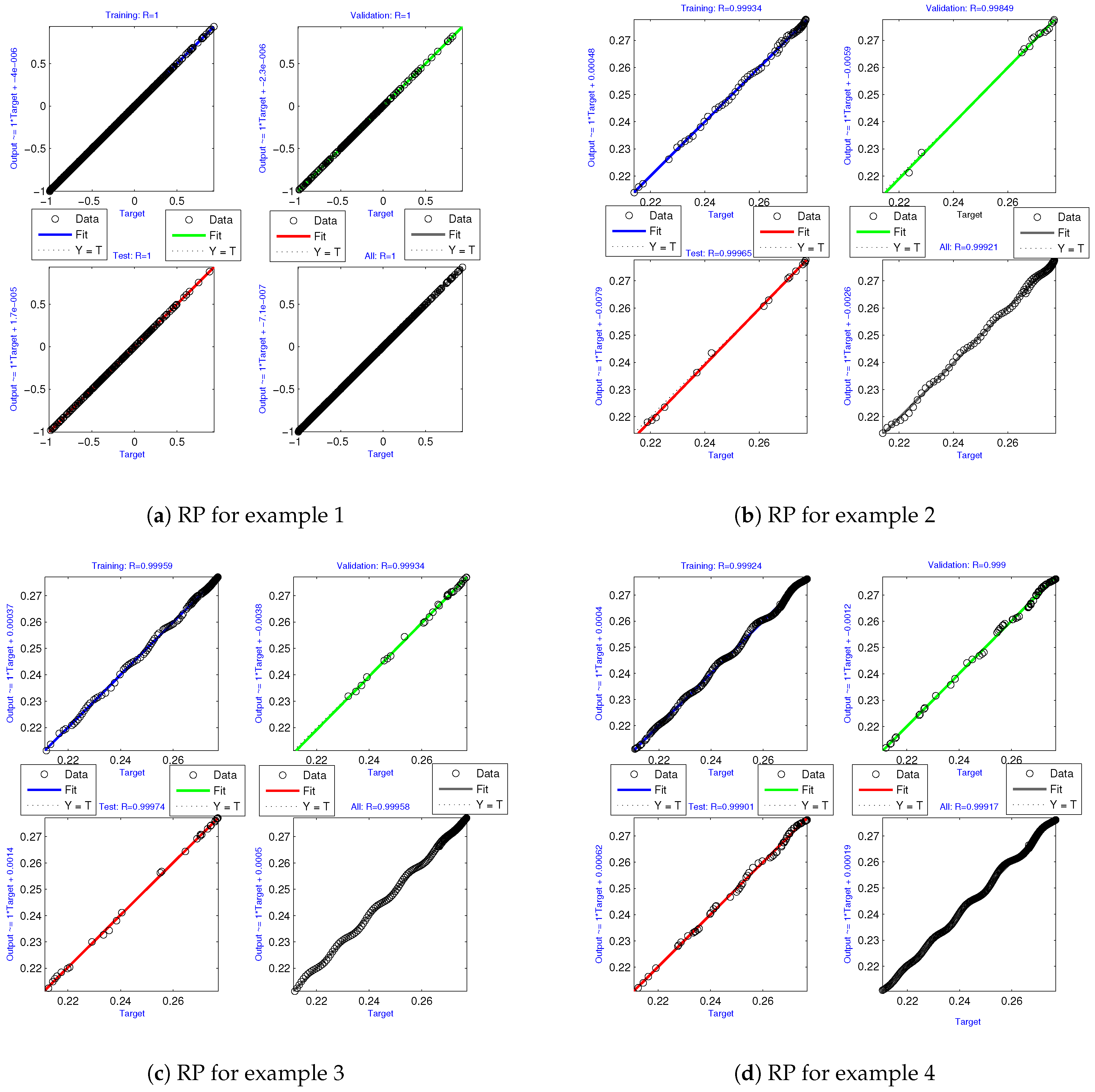

Figure 6a. The expected and actual results of the LMA-ANNs are linearly related, as shown in

Figure 7a.

Figure 8a illustrates the efficiency, consistency, and reliability of the engineering application 1 simulation, where Mu is the adaptive damping parameter of the Levenberg–Marquardt algorithm used to train the LMA-ANNs. The choice of Mu directly affects the error convergence, and its value is kept in the range [0, 1]. For engineering application 1, the gradient value is

with a Mu parameter of

.
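Mu is the damping parameter in the Levenberg–Marquardt update $\Delta\theta = -(J^{\top}J + \mu I)^{-1}J^{\top}r$: a large μ gives a short, gradient-descent-like step, and a small μ gives a Gauss–Newton step. The sketch below fits a two-parameter exponential model to show how μ is decreased after an accepted step and increased after a rejected one. The model, the factor-of-10 schedule, and the starting μ are illustrative assumptions, not the settings MATLAB's `trainlm` used for the LMA-ANNs.

```python
import numpy as np

def levenberg_marquardt(residual, jacobian, theta, mu=1e-3, max_iter=200, tol=1e-12):
    """Minimize 0.5 * ||residual(theta)||^2 with an adaptively damped Gauss-Newton step."""
    theta = np.asarray(theta, dtype=float)
    r = residual(theta)
    cost = 0.5 * r @ r
    for _ in range(max_iter):
        J = jacobian(theta)
        g = J.T @ r                                  # gradient of the cost
        if np.linalg.norm(g) < tol:
            break
        A = J.T @ J + mu * np.eye(theta.size)        # damped normal-equations matrix
        step = np.linalg.solve(A, -g)
        r_new = residual(theta + step)
        cost_new = 0.5 * r_new @ r_new
        if cost_new < cost:
            # Accepted step: trust the quadratic model more (Gauss-Newton-like).
            theta, r, cost = theta + step, r_new, cost_new
            mu *= 0.1
        else:
            # Rejected step: increase damping (shorter, gradient-descent-like step).
            mu *= 10.0
    return theta, mu

# Illustrative problem: fit y = a * exp(b * t) to noise-free data with a = 2, b = -1.5.
t = np.linspace(0.0, 2.0, 20)
y = 2.0 * np.exp(-1.5 * t)

def residual(p):
    return p[0] * np.exp(p[1] * t) - y

def jacobian(p):
    e = np.exp(p[1] * t)
    return np.column_stack([e, p[0] * t * e])

params, final_mu = levenberg_marquardt(residual, jacobian, theta=[1.0, 0.0])
```

In ANN training the parameter vector holds the network weights and the residuals are the output errors, but the accept/reject logic for μ follows the same pattern.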

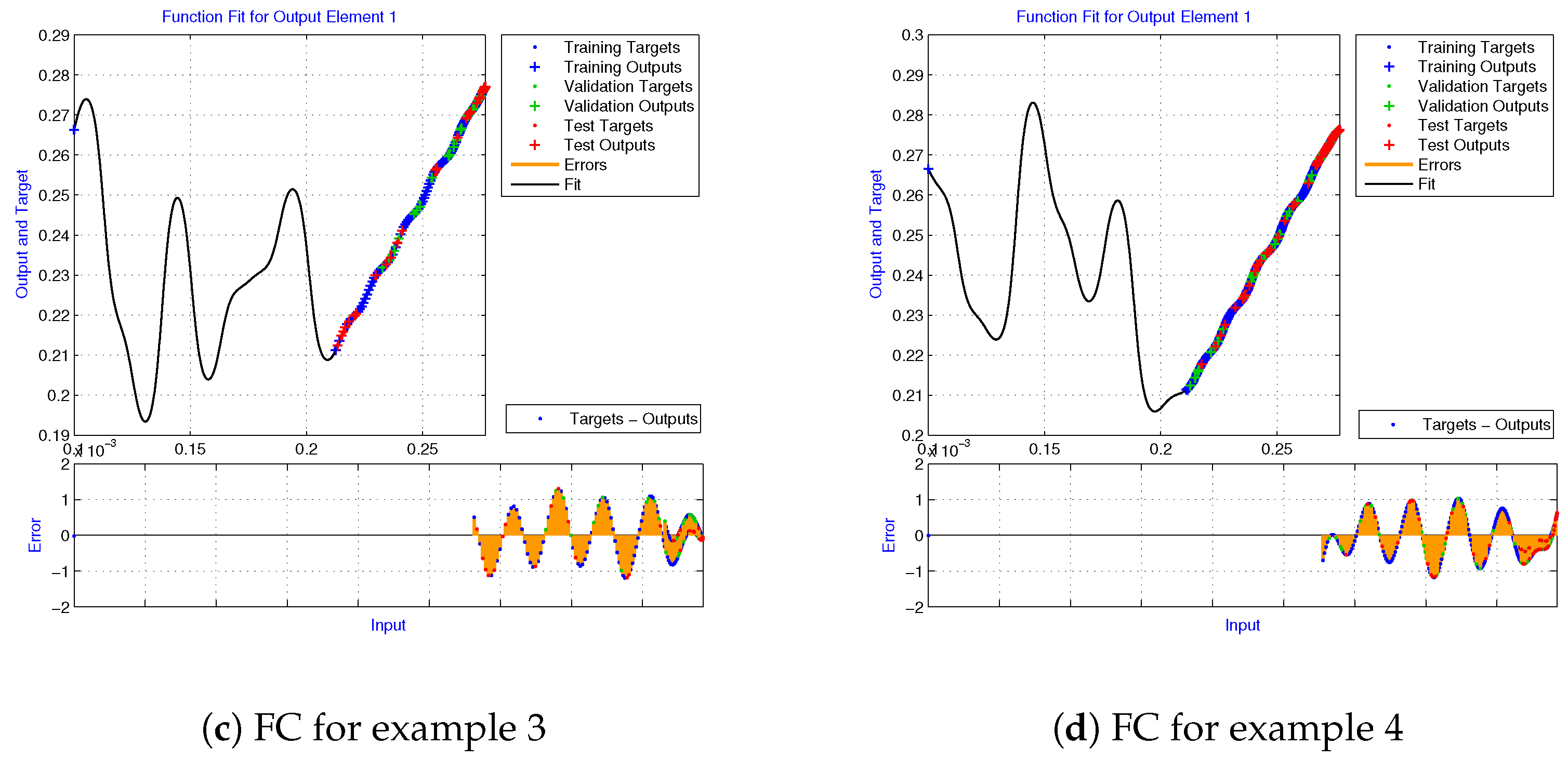

Figure 8a shows how the minimal Mu and gradient values converge as the network becomes more efficient at training and testing. The fitness curve and regression analysis simulations are displayed in Figure 9a. When R is near 1, the correlation between outputs and targets is strong; when R approaches 0, the fit becomes unreliable. A lower MSE corresponds to a shorter response time.
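The MSE and the regression coefficient R discussed above can be computed directly from the network targets and outputs. A minimal sketch, assuming flattened arrays and taking R to be the Pearson correlation coefficient between targets and outputs (the quantity commonly shown on regression plots):

```python
import numpy as np

def mse(targets, outputs):
    """Mean squared error between targets and network outputs."""
    t = np.ravel(targets)
    o = np.ravel(outputs)
    return np.mean((t - o) ** 2)

def regression_r(targets, outputs):
    """Pearson correlation coefficient R between targets and outputs."""
    return np.corrcoef(np.ravel(targets), np.ravel(outputs))[0, 1]

targets = np.array([0.1, 0.4, 0.5, 0.9])
outputs = np.array([0.12, 0.38, 0.52, 0.88])
print(mse(targets, outputs))           # approximately 4e-4
print(regression_r(targets, outputs))  # close to 1
```

Note that R measures linear association only: outputs that are all shifted by a constant still give R = 1, which is why R is reported together with the MSE.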

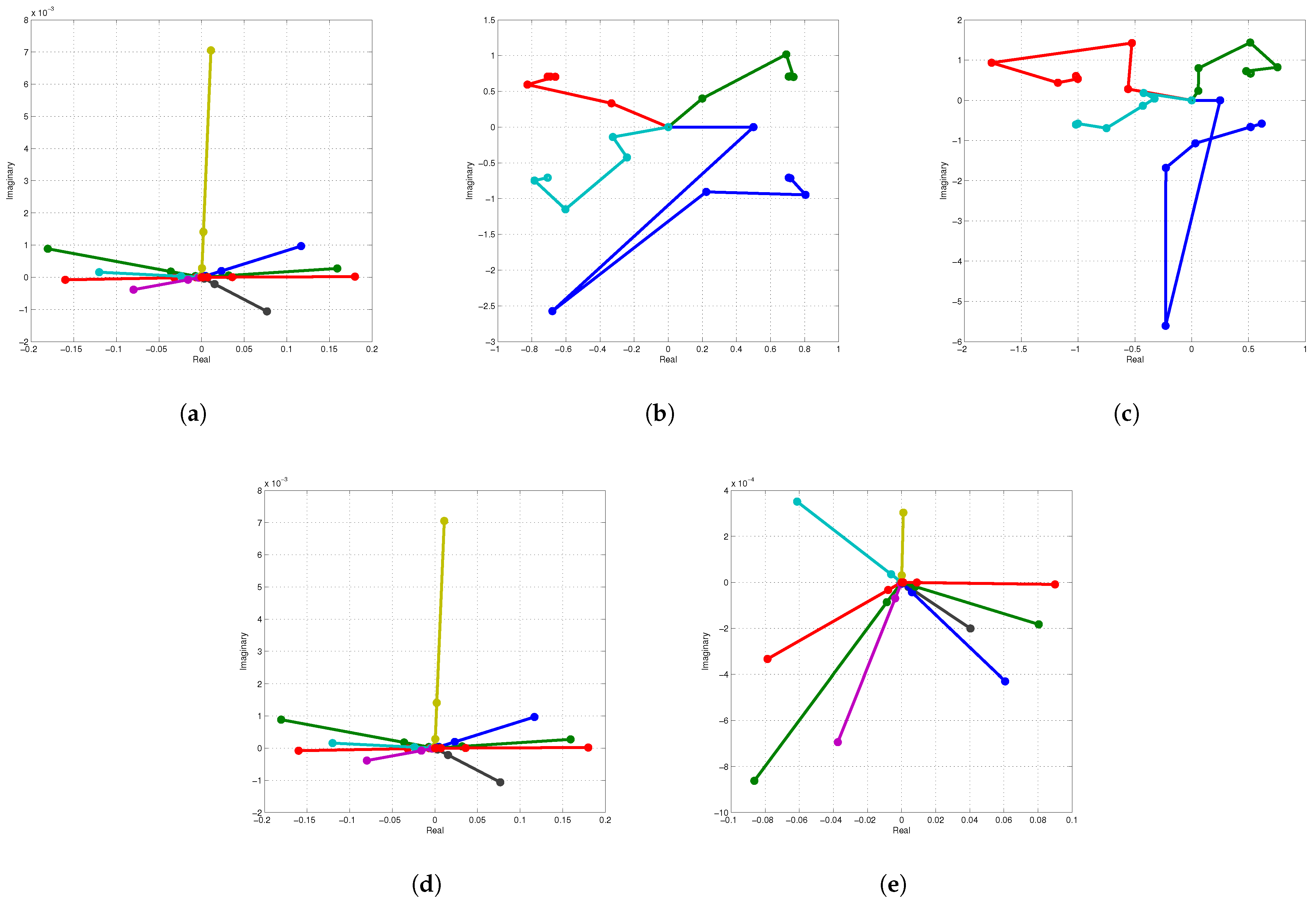

Figure 10a–e depict the root trajectories for various initial estimate values.

Example 2 (Blood Rheology Model [75]).

Nanofluids are engineered fluids consisting of nanoparticles, typically less than 100 nanometers in size, dispersed in a base liquid such as water or oil. These nanoparticles can improve the heat transfer capabilities or other properties of the base fluid and are frequently chosen for their particular thermal, electrical, or optical characteristics. Casson nanofluids, like other nanofluids, can be used in a variety of contexts, such as heat-transfer systems, the cooling of electronics, and even medical applications. Introducing nanoparticles into a fluid can enhance its thermal conductivity and other characteristics, potentially improving heat exchange or other intended results in specific applications. According to the Casson fluid model, a base fluid such as water or plasma flowing in a tube moves so that its central core travels as a plug with very little deflection, while the velocity varies toward the tube wall. In our experiment, the plug flow of Casson fluids was described as:

Using

in Equation (45), we have:

The Caputo-type derivative of (46) is given as:

The exact roots of Equation (46) are:

To quantify the global convergence rate of the inverse parallel fractional schemes FINS–FINS, a random initial guess vector [12.01, 14.56, 4.01, 45.5, 3.45, 78.9, 14.56, 47.89] is generated by the built-in MATLAB rand() function. With this random initial estimate, FINS converges to the exact roots after 9, 8, 7, 6, and 5 iterations and requires, respectively, 0.04564, 0.07144, 0.07514, 0.01247, and 0.045451 s to converge for the fractional parameters 0.1, 0.3, 0.5, 0.8, and 1.0. The results in Table 9 indicate that the rate of convergence of FINS increases as the fractional parameter grows from 0.1 to 1.0. Unlike the ANNs, our newly developed algorithm converges to the exact roots for a range of random initial guesses, confirming global convergence behavior.

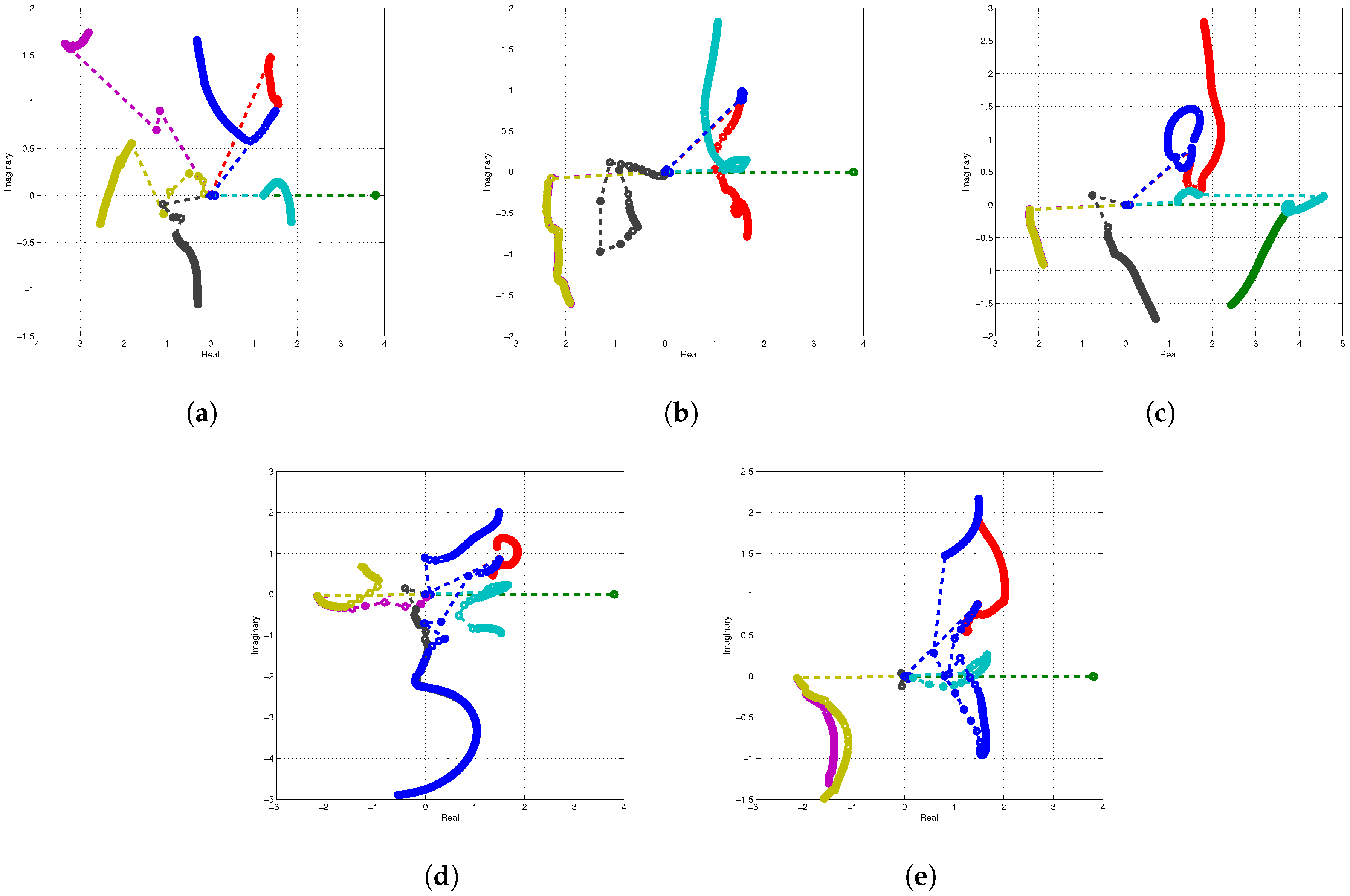

Figure 11a–e depict the root trajectories for various initial estimate values. When the initial approximation is close to the exact roots, the rate of convergence increases significantly, as illustrated in Table 10 and Table 11.

The following initial estimate value results in an increase in the convergence rates:

Table 12 displays the results of the inverse simultaneous methods based on artificial neural networks. The ANNs were trained using

of the data set samples, with the remaining

used to assess their ability to generalize using a performance metric. For a polynomial of degree 8, the ANN required 9 input data points, two hidden layers, and 18 output data points. For all the roots of engineering application 2, Figure 5b, Figure 6b, Figure 7b, Figure 8b, and Figure 9b display the EPH, MSE, RP, TS, and fitness overlapping graphs of the targets and outputs of the LMA-ANN algorithm for training, testing, and validation.

Table 12 summarizes the performance of ANNFN–ANNFN in terms of the MSE, Per-E, Ex-time, and Error-it.

For training, testing, and validation, the fitness overlapping graphs of the inputs and outputs of the LMA-ANNs are shown in Figure 5b. According to the histogram, the error is 0.51, demonstrating the consistency of the suggested solver. For engineering application 2, Figure 6b compares the expected outcomes of the LMA-ANNs with the target solution in terms of the MSE. The MSE for example 2 is

at epoch 49. The expected and actual results of the LMA-ANNs are linearly related, as shown in Figure 7b.

Figure 8b illustrates the efficiency, consistency, and reliability of the engineering application 2 simulation. For engineering application 2, the gradient value is

with a Mu parameter of

.

Figure 8b shows how the minimal Mu and gradient values converge as the network becomes more efficient at training and testing. The fitness curve and regression analysis results are displayed in Figure 9b. When R is near 1, the correlation is strong; when R is near 0, the fit becomes unreliable. A lower MSE corresponds to a shorter response time.

The ANNs for the fractional parameter values 0.1, 0.3, 0.5, 0.7, 0.8, and 1.0 are shown in Table 12 as ANNFN through ANNFN.

The numerical results of the simultaneous schemes with initial guess values close to the exact roots are shown in Table 13. In terms of the residual error, CPU time, and maximum error (Max-Error), our newly developed strategies outperform the existing methods for the same number of iterations.

Example 3 (Hydrogen atom's Schrödinger wave equation [76]).

The Schrödinger wave equation is a fundamental equation of quantum mechanics, formulated in 1925 by the Austrian physicist Erwin Schrödinger, that specifies how the quantum state of a physical system changes over time. It is used to predict the behavior of particles such as electrons in atomic and molecular systems. For a single particle of mass m moving in a central potential, the equation reads:

where r is the distance of the electron from the nucleus and ε is the energy. In spherical coordinates, (47) has the following form:

The general solution can be obtained by separating the resulting equation into angular and radial components. The angular component can be further reduced to two equations (see, e.g., [77]), one of which leads to the Legendre equation:

In the case of azimuthal symmetry,

, the solution of (49) can be expressed using Legendre polynomials. In our example, we computed all the zeros of members of the family of polynomials in (49) simultaneously. Specifically, we used

The Caputo-type derivative of (50) is given as:

To quantify the global convergence rate of the inverse parallel fractional schemes FINS–FINS, a random initial guess vector [2.32, 5.12, 2.65, 4.56, 2.55, 2.36, 9.35, 5.12, 5.23, 4.12] is generated by the built-in MATLAB rand() function. With this random initial estimate, FINS converges to the exact roots after 9, 8, 7, 7, and 6 iterations and requires, respectively, 0.04564, 0.07144, 0.07514, 0.01247, and 0.045451 s to converge for the fractional parameters 0.1, 0.3, 0.5, 0.8, and 1.0. The results in Table 14 indicate that the rate of convergence of FINS increases as the fractional parameter grows from 0.1 to 1.0. Unlike the ANNs, our newly developed algorithm converges to the exact roots for a range of random initial guesses, confirming global convergence behavior.
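Reference zeros for Example 3 can be cross-checked independently of any simultaneous scheme. The sketch below assumes that the polynomial in (50) is a Legendre polynomial P_n (for instance n = 10, matching the degree-10 ANN configuration reported for this example); that identification is an assumption. It uses NumPy's Legendre utilities, relying on the fact that the Gauss–Legendre nodes are exactly the zeros of P_n, all of which are real, simple, and lie in (−1, 1).

```python
import numpy as np
from numpy.polynomial import legendre as L

def legendre_zeros(n):
    """Zeros of the Legendre polynomial P_n, in ascending order."""
    nodes, _weights = L.leggauss(n)    # Gauss-Legendre nodes = zeros of P_n
    return np.sort(nodes)

def legendre_power_coeffs(n):
    """Coefficients of P_n in the power basis, descending order (for np.polyval / np.roots)."""
    return L.leg2poly([0] * n + [1])[::-1]

z = legendre_zeros(10)
max_res = np.abs(np.polyval(legendre_power_coeffs(10), z)).max()
```

The power-basis coefficients returned by `legendre_power_coeffs` are the form a simultaneous root-finder would take as input, so the computed approximations can be compared directly with `z`.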

Figure 12a–e depict the root trajectories for various initial estimate values. When the initial approximation is close to the exact roots, the rate of convergence increases significantly, as illustrated in Table 15 and Table 16.

The ANNs for the fractional parameter values 0.1, 0.3, 0.5, 0.7, 0.8, and 1.0 are shown in Table 17 as ANNFN through ANNFN. The following initial guess value results in an increase in convergence rates:

Table 17 displays the results of the inverse simultaneous methods based on artificial neural networks. The ANNs were trained using

of the data set samples, with the remaining

used to assess their ability to generalize using a performance metric. For a polynomial of degree 10, the ANN required 11 input data points, two hidden layers, and 22 output data points. For all the roots of engineering application 3, Figure 5c, Figure 6c, Figure 7c, Figure 8c, and Figure 9c display the EPH, MSE, RP, TS, and fitness overlapping graphs of the targets and outputs of the LMA-ANN algorithm for training, testing, and validation.

Table 17 summarizes the performance of ANNFN–ANNFN in terms of the MSE, Per-E, Ex-time, and Error-it.

For training, testing, and validation, the fitness overlapping graphs of the inputs and outputs of the LMA-ANNs are shown in Figure 5c. According to the histogram, the error is

, demonstrating the consistency of the suggested solver. For engineering application 3, Figure 6c compares the expected outcomes of the LMA-ANNs with the target solution in terms of the MSE. The MSE for example 3 is

at epoch 112. The expected and actual results of the LMA-ANNs are linearly related, as shown in Figure 7c.

Figure 8c illustrates the efficiency, consistency, and reliability of the engineering application 3 simulation. The gradient value is

with a Mu parameter of

.

Figure 8c shows how the minimal Mu and gradient values converge as the network becomes more efficient in training and testing. The fitness curve and regression analysis results are displayed in Figure 9c. When R is near 1, the correlation is strong; when R is near 0, the fit becomes unreliable. A lower MSE corresponds to a shorter response time.

The ANNs for the fractional parameter values 0.1, 0.3, 0.5, 0.7, 0.8, and 1.0 are shown in Table 17 as ANNFN through ANNFN.

The numerical results of the simultaneous schemes with initial guess values close to the exact roots are shown in Table 18. In terms of the residual error, CPU time, and maximum error (Max-Error), our newly developed strategies outperform the existing methods for the same number of iterations.

Example 4 (Mechanical Engineering Application).

Mechanical engineering, like most other sciences, makes extensive use of thermodynamics [78]. The temperature of dry air is related to its zero-pressure specific heat, denoted as

, through the following polynomial:

To calculate the temperature at which the heat capacity is, say, 1.2 kJ/(kg·K), we substitute

into the equation above and obtain the following polynomial:

with the exact roots

The Caputo-type derivative of (52) is given as:

To quantify the global convergence rate of the inverse parallel fractional schemes FINS–FINS, a random initial guess vector

is generated by the built-in MATLAB rand() function. With this random initial estimate, FINS converges to the exact roots after 9, 8, 7, 5, and 4 iterations and requires, respectively, 0.04164, 0.07144, 0.02514, 0.012017, and 0.015251 s to converge for the fractional parameters 0.1, 0.3, 0.5, 0.8, and 1.0. The results in Table 19 indicate that the rate of convergence of FINS increases as the fractional parameter grows from 0.1 to 1.0. Unlike the ANNs, our newly developed algorithm converges to the exact roots for a range of random initial guesses, confirming global convergence behavior.
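The substitution step in Example 4 simply moves the prescribed heat capacity into the constant term of the polynomial. As an illustration only, the sketch below assumes the widely used textbook form of this relation (Chapra and Canale), $c_p = 0.99403 + 1.671\times10^{-4}T + 9.7215\times10^{-8}T^2 - 9.5838\times10^{-11}T^3 + 1.9520\times10^{-14}T^4$; these coefficients are an assumption and are not confirmed by this section. With $c_p = 1.2$ kJ/(kg·K), the constant term becomes $0.99403 - 1.2 = -0.20597$.

```python
import numpy as np

# Assumed textbook coefficients of c_p(T), ascending powers of T (not taken from this paper).
CP_COEFFS = [0.99403, 1.671e-4, 9.7215e-8, -9.5838e-11, 1.9520e-14]

def heat_capacity_polynomial(cp_target):
    """Coefficients (descending order) of f(T) = c_p(T) - cp_target."""
    asc = list(CP_COEFFS)
    asc[0] -= cp_target                 # move the prescribed c_p into the constant term
    return np.array(asc[::-1])

f = heat_capacity_polynomial(1.2)
roots = np.roots(f)
# Only real, positive roots are physically meaningful temperatures (in kelvin).
real_roots = np.sort(roots[np.abs(roots.imag) < 1e-9].real)
```

Under these assumed coefficients, f changes sign between T = 1000 K and T = 1200 K, so one physically meaningful root lies in that interval.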

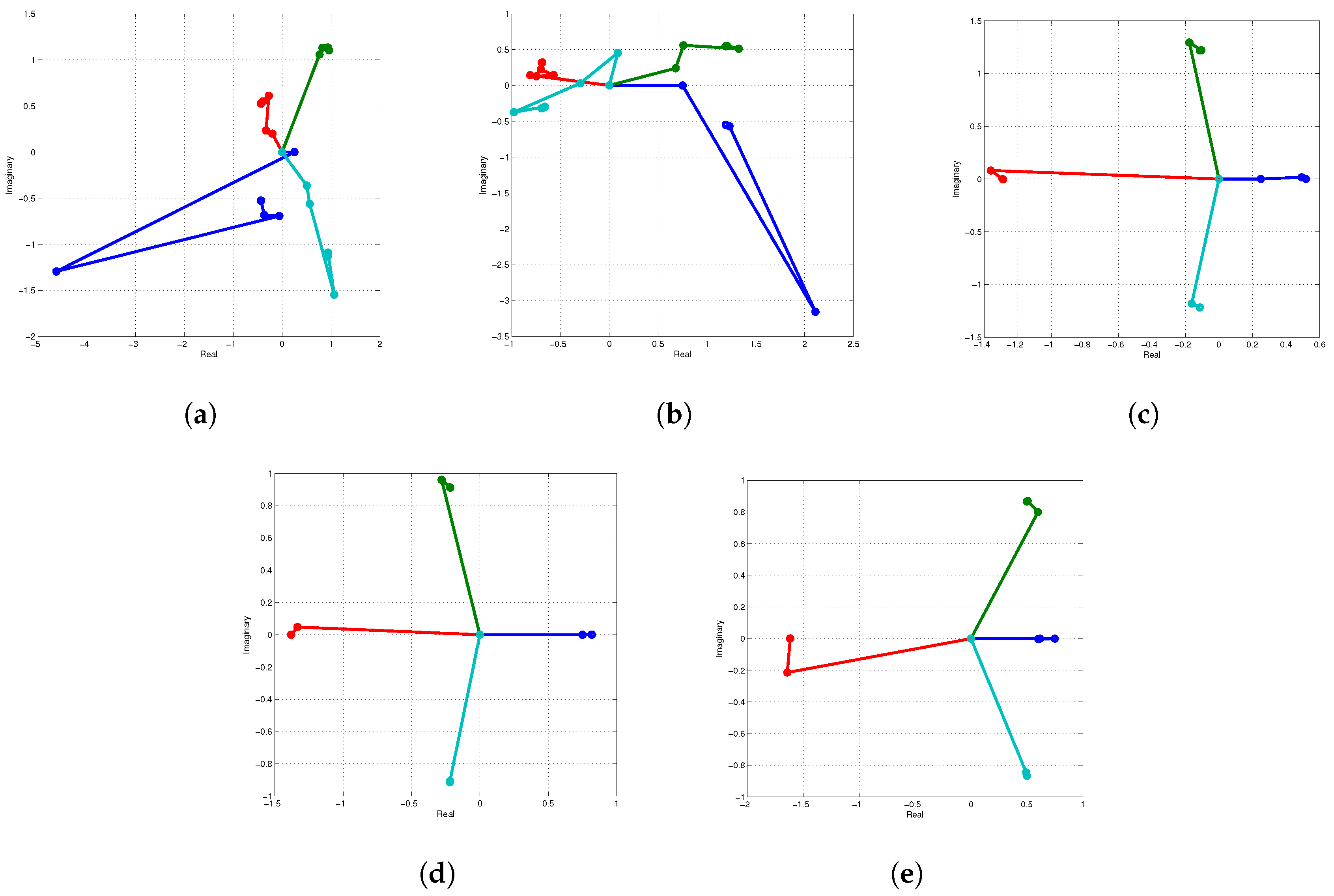

Figure 13a–e depict the root trajectories for various initial estimate values. When the initial approximation is close to the exact roots, the rate of convergence increases significantly, as illustrated in Table 20 and Table 21.

The following initial guess value results in an increase in the convergence rates:

The initial estimates of (40) are as follows:

Table 22 displays the results of the inverse simultaneous methods based on artificial neural networks. The ANNs were trained using

of the data set samples, with the remaining

used to assess their ability to generalize using a performance metric. For a polynomial of degree 4, the ANN required 5 input data points, two hidden layers, and 10 output data points. For all the roots of engineering application 4, Figure 5d, Figure 6d, Figure 7d, Figure 8d, and Figure 9d display the EPH, MSE, RP, TS, and fitness overlapping graphs of the targets and outputs of the LMA-ANN algorithm for training, testing, and validation.

Table 22 summarizes the performance of ANNFN–ANNFN in terms of the MSE, Per-E, Ex-time, and Error-it.

For training, testing, and validation, the fitness overlapping graphs of the inputs and outputs of the LMA-ANNs are shown in Figure 5d. According to the histogram, the error is 1.08

, demonstrating the consistency of the suggested solver. For engineering application 4, Figure 6d compares the expected outcomes of the LMA-ANNs with the target solution in terms of the MSE. The MSE for example 4 is

at epoch 49. The expected and actual results of the LMA-ANNs are linearly related, as shown in Figure 7d.

Figure 8d illustrates the efficiency, consistency, and reliability of the engineering application 4 simulation. The gradient value is 3.0416

with a Mu parameter of 1.0

.

Figure 8d shows how the minimal Mu and gradient values converge as the network becomes more efficient in training and testing. The fitness curve and regression analysis results are displayed in Figure 9d. When R is near 1, the correlation is strong; when R is near 0, the fit becomes unreliable. A lower MSE corresponds to a shorter response time.

The ANNs for the fractional parameter values 0.1, 0.3, 0.5, 0.7, 0.8, and 1.0 are shown in Table 22 as ANNFN through ANNFN.

Table 23 presents the numerical results of the simultaneous schemes when the initial guess values are close to the exact roots. For the same number of iterations, our newly devised strategies outperform the existing methods in terms of the maximum error (Max-Error), residual error, and CPU time.

The root trajectories of the nonlinear equations arising from engineering applications 1–4 demonstrate that our FINS–FINS schemes converge to the exact roots from random initial guesses, and that the rate of convergence increases as the fractional parameter increases from 0.1 to 1.0.