Abstract

This paper considers the parameter estimation problem for several types of differential equations governed by linear operators, which may be partial differential, integro-differential or fractional-order operators. Following a data-driven approach, we construct algorithms based on Gaussian processes to solve the inverse problem: the distribution information of the data is encoded into the kernels, yielding an efficient data learning machine. We then estimate the unknown parameters of partial differential equations (PDEs), including high-order partial differential equations, partial integro-differential equations, fractional partial differential equations and a system of partial differential equations. Finally, several numerical tests are provided. The results show that the data-driven methods based on Gaussian processes not only estimate the parameters of the considered PDEs with high accuracy but also simultaneously approximate the latent solutions and the inhomogeneous terms of the PDEs.

1. Introduction

In the era of big data, data-driven and probabilistic machine-learning methods have attracted increasing attention from researchers [1,2,3]. Using such methods to solve forward and inverse problems of partial differential equations (PDEs) has received considerable interest [4,5,6,7,8,9,10,11], with (deep) neural networks being the most popular choice [12,13,14,15,16,17,18,19,20].

Compared with other machine-learning methods, such as regularized least-squares classifiers (RLSCs) [21] and support vector machines (SVMs) [22], Gaussian processes rest on a rigorous mathematical foundation and are, in essence, equivalent to Bayesian estimation [23]. Raissi et al. (2017) [24] proposed a new algorithm, based on Gaussian process regression, for the inverse problem of PDEs governed by linear operators. Unlike classical approaches such as Tikhonov regularization [25], Gaussian processes treat the inverse problems of PDEs from the perspective of statistical inference.

In the Gaussian process framework, the Bayesian method encodes the distribution information of the data into structured prior information [26]. This yields an efficient data learning machine that estimates the unknown parameters of the PDEs, infers the solution of the considered equations and quantifies the uncertainty of the predicted solution. Solving forward and inverse problems of PDEs with Gaussian processes is a new branch of probabilistic numerics within numerical analysis [27,28,29,30,31,32,33,34].

In this paper, the inverse problem consists of estimating the unknown parameters of the considered equations from (noisy) observation data. We extend the Gaussian process method to the inverse problems of several types of PDEs: high-order partial differential equations, partial integro-differential equations, fractional partial differential equations and systems of partial differential equations.

Finally, several numerical tests are provided. They show that the data-driven methods based on Gaussian processes not only estimate the parameters of the considered PDEs with high accuracy but also simultaneously approximate the latent solutions and the inhomogeneous terms of the PDEs.

This paper is organized as follows. Section 2 presents the basic workflow of Gaussian processes for solving inverse problems of PDEs. Section 3 describes in detail the Gaussian-process-based estimation algorithms for fractional PDEs and for systems of linear PDEs. In Section 4, numerical experiments are performed to demonstrate the validity of the proposed methodology. Finally, conclusions are given in Section 5.

2. Mathematical Model and Methodology

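The following partial differential equations are considered in this paper,

$$\mathcal{L}_x^{\phi}\, u(x) = f(x), \tag{1}$$

where $x$ is a $D$-dimensional vector, $u(x)$ is the latent solution of (1), $f(x)$ is the inhomogeneous term, $\mathcal{L}_x^{\phi}$ is a linear operator, and $\phi$ denotes the unknown parameter. For simplicity, consider the heat equation as an example,

$$\mathcal{L}_x^{\phi}\, u(t, x) = \frac{\partial u(t, x)}{\partial t} - \alpha \frac{\partial^2 u(t, x)}{\partial x^2} = f(t, x), \tag{2}$$

where the heat diffusivity $\alpha$ is the unknown parameter $\phi$.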

Assume that we have observations $\{X_u, y_u\}$ of the latent solution $u(x)$ and $\{X_f, y_f\}$ of the inhomogeneous term $f(x)$. From the posterior results we can then estimate the unknown parameter $\phi$ and approximate the latent solution of the forward problem. Moreover, one advantage of the method used in this paper is that the initial and boundary conditions need not be considered explicitly, because the (noisy) observations of the latent solution and the inhomogeneous term provide sufficient distribution information about these functions.

Like other machine-learning methods, Gaussian processes can be applied to both regression and classification problems, and they belong to the class of methods known as kernel machines [35]. However, compared with other kernel machines, such as SVMs and relevance vector machines (RVMs) [36], the rigorous probabilistic formulation of Gaussian processes has limited their adoption in industry. Another drawback of Gaussian processes is that their computational cost can be high.

2.1. Gaussian Process Prior

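We place a Gaussian process prior on the latent solution,

$$u(x) \sim \mathcal{GP}\big(0,\, k_{uu}(x, x'; \theta)\big), \tag{3}$$

and assume that the covariance function $k_{uu}$ has the squared exponential form

$$k_{uu}(x, x'; \theta) = \sigma^2 \exp\Big(-\frac{1}{2}\sum_{d=1}^{D} w_d\,(x_d - x'_d)^2\Big), \tag{4}$$

where $\theta$ denotes the hyper-parameters of the kernel (covariance function) $k_{uu}$; for Equation (4), $\theta = (\sigma, w_1, \ldots, w_D)$. Any prior information about $u(x)$, such as monotonicity or periodicity, can be encoded into the kernel $k_{uu}$.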
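As a concrete illustration of the squared exponential kernel (4), here is a minimal NumPy sketch. It assumes the inputs are stored as an $N \times D$ array and treats the weights $w_d$ as inverse squared length scales; the function name `se_kernel` and the numerical values are illustrative, not taken from the paper.

```python
import numpy as np


def se_kernel(X, Xp, sigma2, w):
    """Squared exponential kernel
    k(x, x') = sigma2 * exp(-0.5 * sum_d w_d * (x_d - x'_d)^2).

    X: (N, D) array, Xp: (M, D) array, w: (D,) inverse squared length scales.
    Returns the (N, M) covariance matrix.
    """
    X = np.atleast_2d(X)
    Xp = np.atleast_2d(Xp)
    w = np.asarray(w, dtype=float)
    diff = X[:, None, :] - Xp[None, :, :]          # (N, M, D) pairwise differences
    return sigma2 * np.exp(-0.5 * np.sum(w * diff**2, axis=-1))


# Gram matrix on 20 random points in (t, x) space, i.e. D = 2
rng = np.random.default_rng(0)
X = rng.uniform(0.0, 1.0, size=(20, 2))
K = se_kernel(X, X, sigma2=1.5, w=np.array([2.0, 5.0]))
print(K.shape, K[0, 0])
```

Larger $w_d$ means a shorter correlation length along coordinate $d$, so these weights are the hyper-parameters that training adjusts.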

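Since linear transformations of Gaussian processes, such as differentiation and integration, are still Gaussian, we obtain

$$f(x) = \mathcal{L}_x^{\phi} u(x) \sim \mathcal{GP}\big(0,\, k_{ff}(x, x'; \theta, \phi)\big), \tag{5}$$

where the covariance function is $k_{ff}(x, x'; \theta, \phi) = \mathcal{L}_x^{\phi} \mathcal{L}_{x'}^{\phi} k_{uu}(x, x'; \theta)$.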

Moreover, the covariance function between $u(x)$ and $f(x')$ is $k_{uf}(x, x'; \theta, \phi) = \mathcal{L}_{x'}^{\phi} k_{uu}(x, x'; \theta)$, and the covariance function between $f(x)$ and $u(x')$ is $k_{fu}(x, x'; \theta, \phi) = \mathcal{L}_{x}^{\phi} k_{uu}(x, x'; \theta)$. The derivation of Equation (5) is the core of estimating the unknown parameters of PDEs with Gaussian processes [24]: in this step, the parameter information of the PDE is encoded into the kernels $k_{ff}$, $k_{uf}$ and $k_{fu}$. The joint density of $y_u$ and $y_f$ can then be used for maximum likelihood estimation of the parameters $\phi$. The key contribution of this Gaussian process regression approach is that it turns the unknown parameters of the linear operator into hyper-parameters of the kernels $k_{ff}$, $k_{uf}$ and $k_{fu}$.
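To see how the operator parameter enters the kernels, the sketch below works out $k_{uf}$, $k_{fu}$ and $k_{ff}$ in closed form for one assumed case: the heat operator $\partial_t - \alpha\,\partial_{xx}$ acting on a squared exponential $k_{uu}$ in $(t, x)$. The closed forms are checked against central finite differences. The helper names and parameter values are hypothetical; this is a worked instance of the construction, not the authors' code.

```python
import numpy as np


def heat_kernels(t, x, tp, xp, alpha, sigma2, wt, wx):
    """Kernels induced by L = d/dt - alpha * d^2/dx^2 acting on
    k_uu = sigma2 * exp(-0.5*wt*(t-t')^2 - 0.5*wx*(x-x')^2).

    Returns (k_uu, k_uf, k_fu, k_ff) with
    k_uf = L_{x'} k_uu, k_fu = L_x k_uu and k_ff = L_x L_{x'} k_uu.
    """
    tau, r = t - tp, x - xp
    k = sigma2 * np.exp(-0.5 * wt * tau**2 - 0.5 * wx * r**2)
    d2 = wx**2 * r**2 - wx                            # (d^2 k/dx^2) / k, same for x'
    d4 = wx**4 * r**4 - 6 * wx**3 * r**2 + 3 * wx**2  # (d^4 k/dx^2 dx'^2) / k
    k_uf = (wt * tau - alpha * d2) * k
    k_fu = (-wt * tau - alpha * d2) * k
    # the mixed time/space terms cancel in L_x L_{x'} k_uu
    k_ff = (wt - wt**2 * tau**2 + alpha**2 * d4) * k
    return k, k_uf, k_fu, k_ff


def fd_errors(t, x, tp, xp, alpha=0.7, sigma2=1.0, wt=1.0, wx=1.5, h=1e-3):
    """Absolute differences between the closed forms and central finite differences."""
    def k(a, b, c, d):
        return heat_kernels(a, b, c, d, alpha, sigma2, wt, wx)[0]

    def L_second(g, a, b, c, d):   # heat operator on the primed arguments (c, d)
        dt = (g(a, b, c + h, d) - g(a, b, c - h, d)) / (2 * h)
        dxx = (g(a, b, c, d + h) - 2 * g(a, b, c, d) + g(a, b, c, d - h)) / h**2
        return dt - alpha * dxx

    def L_first(g, a, b, c, d):    # heat operator on the unprimed arguments (a, b)
        dt = (g(a + h, b, c, d) - g(a - h, b, c, d)) / (2 * h)
        dxx = (g(a, b + h, c, d) - 2 * g(a, b, c, d) + g(a, b - h, c, d)) / h**2
        return dt - alpha * dxx

    kuf_fd = L_second(k, t, x, tp, xp)
    kfu_fd = L_first(k, t, x, tp, xp)
    kff_fd = L_first(lambda a, b, c, d: L_second(k, a, b, c, d), t, x, tp, xp)
    _, kuf, kfu, kff = heat_kernels(t, x, tp, xp, alpha, sigma2, wt, wx)
    return abs(kuf - kuf_fd), abs(kfu - kfu_fd), abs(kff - kff_fd)


print(fd_errors(0.3, 0.2, 0.1, 0.6))   # all three differences should be small
```

The unknown $\alpha$ now appears only as a coefficient inside $k_{uf}$, $k_{fu}$ and $k_{ff}$, which is exactly what allows it to be learned like any other hyper-parameter.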

By Mercer’s theorem [37], a positive definite covariance function can be decomposed as $k(x, x') = \sum_{i=1}^{\infty} \lambda_i \psi_i(x) \psi_i(x')$, where $\lambda_i$ and $\psi_i$ are eigenvalues and eigenfunctions, respectively, satisfying $\int k(x, x') \psi_i(x)\, d\mu(x) = \lambda_i \psi_i(x')$. The eigenfunctions $\{\psi_i\}$ serve as an orthogonal basis from which a reproducing kernel Hilbert space (RKHS) is constructed [38]; this can be viewed as the kernel trick [38]. Note, however, that the covariance function in Equation (4) has no finite decomposition. Different covariance functions, such as rational quadratic and Matérn covariance functions, should be chosen for different prior information [23]; most importantly, the chosen kernel should reflect the available prior information [24].
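A finite-sample analogue of Mercer's expansion can be computed by eigen-decomposing a Gram matrix. The sketch below does this for a one-dimensional squared exponential kernel on a grid (the grid size and weight $w$ are illustrative choices). Although the exact expansion of the SE kernel is infinite, its eigenvalues decay very quickly, so a few terms already reproduce the matrix closely.

```python
import numpy as np

# Gram matrix of a 1D squared exponential kernel on a uniform grid
x = np.linspace(0.0, 1.0, 200)
w = 5.0                                    # inverse squared length scale (illustrative)
K = np.exp(-0.5 * w * (x[:, None] - x[None, :])**2)

lam, V = np.linalg.eigh(K)                 # eigh returns ascending eigenvalues
lam, V = lam[::-1], V[:, ::-1]             # reorder to descending


def truncated(m):
    """Rank-m reconstruction sum_{i<m} lam_i v_i v_i^T (discrete Mercer sum)."""
    return (V[:, :m] * lam[:m]) @ V[:, :m].T


for m in (2, 5, 10, 20):
    rel = np.linalg.norm(K - truncated(m)) / np.linalg.norm(K)
    print(f"rank {m:2d}: relative Frobenius error {rel:.2e}")
```

The eigenvectors play the role of the eigenfunctions $\psi_i$ sampled on the grid.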

2.2. Data Training

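From the properties of Gaussian processes [23], we have

$$y = \begin{bmatrix} y_u \\ y_f \end{bmatrix} \sim \mathcal{N}(0, K), \qquad K = \begin{bmatrix} k_{uu}(X_u, X_u; \theta) & k_{uf}(X_u, X_f; \theta, \phi) \\ k_{fu}(X_f, X_u; \theta, \phi) & k_{ff}(X_f, X_f; \theta, \phi) \end{bmatrix}, \tag{6}$$

where $y_u = u(X_u)$ and $y_f = f(X_f)$.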

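According to (6), we train the parameters $\phi$ and the hyper-parameters $\theta$ by minimizing the negative log marginal likelihood

$$-\log p(y \mid \phi, \theta) = \frac{1}{2} y^{\top} K^{-1} y + \frac{1}{2}\log |K| + \frac{N}{2}\log (2\pi), \tag{7}$$

where $N$ is the length of $y$; a quasi-Newton optimizer (L-BFGS-B) is used for training [39].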

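When noise is added to the observed data in (6), we write $y_u = u(X_u) + \epsilon_u$ and $y_f = f(X_f) + \epsilon_f$, where $\epsilon_u \sim \mathcal{N}(0, \sigma_{n_u}^2 I)$ and $\epsilon_f \sim \mathcal{N}(0, \sigma_{n_f}^2 I)$ are mutually independent additive noise terms. The noise variances then enter the diagonal blocks of $K$, which become $k_{uu}(X_u, X_u) + \sigma_{n_u}^2 I$ and $k_{ff}(X_f, X_f) + \sigma_{n_f}^2 I$.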

The training procedure is the core of the algorithm and reveals the “regression nature” of Gaussian processes. It is also worth noting that the negative log marginal likelihood (7) does more than fit the model: it automatically trades off data fit against model complexity. The term $\frac{1}{2} y^{\top} K^{-1} y$ measures the data fit, while the term $\frac{1}{2}\log|K|$ penalizes model complexity [23]. This regularization-like mechanism, a key property of Gaussian process regression, helps prevent overfitting.

In practice, model training means finding the parameters $\phi$ and hyper-parameters $\theta$ that minimize Equation (7). This is a non-convex optimization problem of the kind routinely handled in machine learning, and heuristic algorithms such as the whale optimization algorithm [40] and the ant colony optimization algorithm [41] can also be applied. Note that the computational cost of training grows cubically with the amount of training data, i.e., as $\mathcal{O}(N^3)$, because of the Cholesky decomposition of the covariance matrix $K$ in (7); this difficulty is addressed in [42,43,44].
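The training objective can be sketched end to end for an assumed concrete case. The operator is taken to be the heat operator $\partial_t - \alpha\,\partial_{xx}$ with a squared exponential $k_{uu}$ in $(t, x)$, the data come from a hypothetical exact solution $u = e^{-t}\sin(\pi x)$, and the hyper-parameters are fixed by hand. The sketch assembles the block covariance of (6) with noise variances on the diagonal blocks, and it evaluates (7) through a Cholesky factor, which is the $\mathcal{O}(N^3)$ step. It reports the data-fit and complexity terms separately. It only evaluates the objective. A full implementation would pass it, as a function of $\alpha$ and $\theta$, to a quasi-Newton optimizer such as L-BFGS-B.

```python
import numpy as np


def heat_kernels(X, Xp, alpha, sigma2, wt, wx):
    """(k_uu, k_uf, k_fu, k_ff) for L = d/dt - alpha * d^2/dx^2 and the SE kernel.
    X: (N, 2) and Xp: (M, 2) arrays of (t, x) points; each block is (N, M)."""
    tau = X[:, :1] - Xp[:, 0]                          # time differences t - t'
    r = X[:, 1:] - Xp[:, 1]                            # space differences x - x'
    k = sigma2 * np.exp(-0.5 * wt * tau**2 - 0.5 * wx * r**2)
    d2 = wx**2 * r**2 - wx
    d4 = wx**4 * r**4 - 6 * wx**3 * r**2 + 3 * wx**2
    return (k,
            (wt * tau - alpha * d2) * k,               # k_uf = L_{x'} k_uu
            (-wt * tau - alpha * d2) * k,              # k_fu = L_x k_uu
            (wt - wt**2 * tau**2 + alpha**2 * d4) * k) # k_ff = L_x L_{x'} k_uu


def joint_covariance(Xu, Xf, alpha, sigma2, wt, wx, noise_u, noise_f):
    """Block covariance of (y_u, y_f) with noise variances on the diagonal blocks."""
    kuu = heat_kernels(Xu, Xu, alpha, sigma2, wt, wx)[0]
    kuf = heat_kernels(Xu, Xf, alpha, sigma2, wt, wx)[1]
    kff = heat_kernels(Xf, Xf, alpha, sigma2, wt, wx)[3]
    # k_fu(Xf, Xu) equals k_uf(Xu, Xf) transposed
    return np.block([[kuu + noise_u * np.eye(len(Xu)), kuf],
                     [kuf.T, kff + noise_f * np.eye(len(Xf))]])


def neg_log_marginal_likelihood(y, K):
    """Negative log marginal likelihood (7) via Cholesky.
    Returns (total, data_fit, complexity)."""
    L = np.linalg.cholesky(K)                          # the O(N^3) step
    v = np.linalg.solve(L, y)                          # v = L^{-1} y, so v @ v = y^T K^{-1} y
    data_fit = 0.5 * v @ v
    complexity = np.sum(np.log(np.diag(L)))            # equals 0.5 * log|K|
    total = data_fit + complexity + 0.5 * len(y) * np.log(2.0 * np.pi)
    return total, data_fit, complexity


def u_exact(X):
    """Hypothetical exact solution; it gives f = (alpha * pi^2 - 1) * u."""
    return np.exp(-X[:, 0]) * np.sin(np.pi * X[:, 1])


rng = np.random.default_rng(1)
alpha_true = 0.7
Xu = rng.uniform(0.0, 1.0, size=(30, 2))
Xf = rng.uniform(0.0, 1.0, size=(30, 2))
y = np.concatenate([u_exact(Xu), (alpha_true * np.pi**2 - 1.0) * u_exact(Xf)])

for alpha in (0.35, 0.7, 1.4):
    K = joint_covariance(Xu, Xf, alpha, 1.0, 1.0, 4.0, 1e-4, 1e-4)
    total, fit, comp = neg_log_marginal_likelihood(y, K)
    print(f"alpha={alpha}: NLML={total:.3f} (data fit {fit:.3f}, complexity {comp:.3f})")
```

Computing the log-determinant from the Cholesky diagonal avoids forming $K^{-1}$ explicitly and reuses the same factor for the data-fit term.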

2.3. Gaussian Process Posterior

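By the conditional distribution of Gaussian processes [23], the posterior distribution of the prediction $u(x^*)$ at a new point $x^*$ can be written directly as

$$u(x^*) \mid y \sim \mathcal{N}\big(q_u^{\top} K^{-1} y,\; k_{uu}(x^*, x^*) - q_u^{\top} K^{-1} q_u\big), \tag{8}$$

where $q_u^{\top} = \big[\,k_{uu}(x^*, X_u),\; k_{uf}(x^*, X_f)\,\big]$.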

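Following the derivation in [11], the posterior distribution of the prediction $f(x^*)$ at the point $x^*$ can be written as

$$f(x^*) \mid y \sim \mathcal{N}\big(q_f^{\top} K^{-1} y,\; k_{ff}(x^*, x^*) - q_f^{\top} K^{-1} q_f\big), \tag{9}$$

where $q_f^{\top} = \big[\,k_{fu}(x^*, X_u),\; k_{ff}(x^*, X_f)\,\big]$.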

The posterior means in (8) and (9) serve as the predicted solutions for $u$ and $f$, respectively. The posterior variance, a direct by-product of the Bayesian approach, measures the reliability of the predicted solution.
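The posterior formulas can be sketched for the same assumed setting: the heat operator $\partial_t - \alpha\,\partial_{xx}$, a squared exponential $k_{uu}$, the hypothetical exact solution $u = e^{-t}\sin(\pi x)$ and hand-picked hyper-parameters, with $\alpha$ taken as known, i.e. as if training had already converged. Both means and variances reuse a single Cholesky factor of $K$. The function names are illustrative.

```python
import numpy as np


def heat_kernels(X, Xp, alpha, sigma2, wt, wx):
    """(k_uu, k_uf, k_fu, k_ff) for L = d/dt - alpha * d^2/dx^2 and the SE kernel.
    X: (N, 2) and Xp: (M, 2) arrays of (t, x) points; each block is (N, M)."""
    tau = X[:, :1] - Xp[:, 0]
    r = X[:, 1:] - Xp[:, 1]
    k = sigma2 * np.exp(-0.5 * wt * tau**2 - 0.5 * wx * r**2)
    d2 = wx**2 * r**2 - wx
    d4 = wx**4 * r**4 - 6 * wx**3 * r**2 + 3 * wx**2
    return (k, (wt * tau - alpha * d2) * k, (-wt * tau - alpha * d2) * k,
            (wt - wt**2 * tau**2 + alpha**2 * d4) * k)


def posterior(Xs, Xu, Xf, y, alpha, sigma2, wt, wx, noise_u, noise_f):
    """Posterior means and variances of u and f at test points Xs, as in (8)-(9)."""
    kuu = heat_kernels(Xu, Xu, alpha, sigma2, wt, wx)[0]
    kuf = heat_kernels(Xu, Xf, alpha, sigma2, wt, wx)[1]
    kff = heat_kernels(Xf, Xf, alpha, sigma2, wt, wx)[3]
    K = np.block([[kuu + noise_u * np.eye(len(Xu)), kuf],
                  [kuf.T, kff + noise_f * np.eye(len(Xf))]])
    L = np.linalg.cholesky(K)
    Ly = np.linalg.solve(L, y)                            # L^{-1} y
    s_u = heat_kernels(Xs, Xu, alpha, sigma2, wt, wx)     # blocks against X_u
    s_f = heat_kernels(Xs, Xf, alpha, sigma2, wt, wx)     # blocks against X_f
    Qu = np.hstack([s_u[0], s_f[1]])                      # rows: q_u^T = [k_uu, k_uf]
    Qf = np.hstack([s_u[2], s_f[3]])                      # rows: q_f^T = [k_fu, k_ff]
    Vu = np.linalg.solve(L, Qu.T)                         # L^{-1} q_u for every test point
    Vf = np.linalg.solve(L, Qf.T)
    mean_u, mean_f = Vu.T @ Ly, Vf.T @ Ly                 # q^T K^{-1} y
    var_u = sigma2 - np.sum(Vu**2, axis=0)                # k_uu(x*, x*) - q_u^T K^{-1} q_u
    var_f = sigma2 * (wt + 3.0 * alpha**2 * wx**2) - np.sum(Vf**2, axis=0)
    return mean_u, var_u, mean_f, var_f


def u_exact(X):
    """Hypothetical exact solution used to generate the data."""
    return np.exp(-X[:, 0]) * np.sin(np.pi * X[:, 1])


alpha = 0.7
rng = np.random.default_rng(1)
Xu = rng.uniform(0.0, 1.0, size=(30, 2))
Xf = rng.uniform(0.0, 1.0, size=(30, 2))
y = np.concatenate([u_exact(Xu), (alpha * np.pi**2 - 1.0) * u_exact(Xf)])

grid = np.linspace(0.1, 0.9, 9)
Xs = np.array([[t, x] for t in grid for x in grid])
mean_u, var_u, mean_f, var_f = posterior(Xs, Xu, Xf, y, alpha, 1.0, 1.0, 4.0, 1e-4, 1e-4)
rel_u = np.linalg.norm(mean_u - u_exact(Xs)) / np.linalg.norm(u_exact(Xs))
print(f"relative error of u: {rel_u:.2e}")
print(f"largest posterior std of u: {np.sqrt(np.clip(var_u, 0.0, None)).max():.2e}")
```

The posterior standard deviation printed at the end is the quantity the numerical section compares against the prediction error.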

3. Inverse Problem for Fractional PDEs and the System of Linear PDEs

This section explains in detail how Gaussian processes are used to estimate the unknown parameters of fractional PDEs and of systems of linear PDEs. These two types require more elaborate processing than the other two types of PDEs considered in this paper.

3.1. Processing of Fractional PDEs

The following fractional partial differential equations are considered,

where the fractional order $\alpha$ is a given parameter, and $u$ is a continuous, absolutely integrable real function whose partial derivatives of arbitrary order are likewise continuous and absolutely integrable. The fractional partial derivative in (10) is defined in the Caputo sense [45] as follows

where $n$ is a positive integer ($n - 1 < \alpha < n$, $n \in \mathbb{N}$), and $\Gamma(\cdot)$ is the gamma function.
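For a numerical feel of the Caputo derivative, the sketch below uses the Grünwald–Letnikov scheme. This is a standard discretization swapped in for illustration, not part of the paper's kernel-based method. For $0 < \alpha < 1$ and a function with $g(0) = 0$, the Caputo and Riemann–Liouville derivatives coincide, so the scheme can be checked against the closed form $\Gamma(3)/\Gamma(3-\alpha)\, t^{2-\alpha}$ for $g(t) = t^2$. The orders tested match those used later in Example 2. The function names are hypothetical.

```python
import math


def gl_derivative(g, t, alpha, n_steps=20000):
    """Grunwald-Letnikov approximation (lower terminal 0) of the order-alpha
    derivative of g at t. For 0 < alpha < 1 and g(0) = 0 it agrees with the
    Caputo derivative, since Caputo equals Riemann-Liouville when g(0) = 0."""
    h = t / n_steps
    weight = 1.0                             # GL weight w_0 = 1
    total = g(t)
    for j in range(1, n_steps + 1):
        weight *= (j - 1 - alpha) / j        # w_j = w_{j-1} * (j - 1 - alpha) / j
        total += weight * g(t - j * h)
    return total / h**alpha


def caputo_power(p, alpha, t):
    """Exact Caputo derivative of t**p for p > 0 and 0 < alpha < 1."""
    return math.gamma(p + 1) / math.gamma(p + 1 - alpha) * t**(p - alpha)


for alpha in (0.25, 0.5, 0.75):
    approx = gl_derivative(lambda s: s**2, 1.0, alpha)
    print(alpha, approx, caputo_power(2, alpha, 1.0))
```

The scheme is first-order accurate in the step size, so halving `h` roughly halves the error.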

The key step in solving (10) is deriving the kernels associated with the fractional-order operator. Following [46,47], we apply a four-dimensional Fourier transform to $k_{uu}$ with respect to the temporal and spatial variables to obtain $\hat{k}_{uu}$. The favorable properties of the squared exponential covariance function ensure that the Fourier transforms of the derivatives of $k_{uu}$ exist, since $k_{uu}$ and its partial derivatives decay to zero at infinity. This yields the following intermediate kernels,

We then apply the inverse Fourier transform to $\hat{k}_{ff}$, $\hat{k}_{uf}$ and $\hat{k}_{fu}$ to obtain the kernels $k_{ff}$, $k_{uf}$ and $k_{fu}$, respectively. The remaining steps are exactly the same as described in Section 2.
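The transform, multiply and invert idea can be illustrated in one dimension. The sketch assumes the Liouville–Weyl convention, in which a fractional derivative of order $\alpha$ acts as the multiplier $(i\omega)^\alpha$ on the Fourier transform. It applies this multiplier to the spectral density of the 1D squared exponential kernel, which is Gaussian, and inverts numerically with a midpoint rule. It is not the paper's four-dimensional computation; the function name and quadrature settings are illustrative. For integer orders it reproduces ordinary derivatives of the kernel, which serves as a check.

```python
import numpy as np


def fractional_se_kernel(tau, alpha, sigma2=1.0, w=1.0, L=40.0, n=8000):
    """Order-alpha derivative (in the first argument) of the 1D SE kernel
    k(tau) = sigma2 * exp(-0.5 * w * tau**2), tau = x - x', obtained by
    multiplying its spectral density by (i*omega)**alpha and inverting the
    Fourier transform with a midpoint rule on [-L, L]."""
    d_omega = 2.0 * L / n
    omega = -L + (np.arange(n) + 0.5) * d_omega
    # spectral density S, with k(tau) = integral of S(omega) * exp(i*omega*tau)
    S = sigma2 / np.sqrt(2.0 * np.pi * w) * np.exp(-omega**2 / (2.0 * w))
    # principal branch of (i*omega)**alpha, written out explicitly
    mult = np.abs(omega)**alpha * np.exp(0.5j * np.pi * alpha * np.sign(omega))
    tau = np.atleast_1d(np.asarray(tau, dtype=float))
    integrand = np.exp(1j * np.outer(tau, omega)) * (S * mult)
    return integrand.sum(axis=1).real * d_omega


tau = np.linspace(-2.0, 2.0, 9)
k = np.exp(-0.5 * tau**2)
print(np.max(np.abs(fractional_se_kernel(tau, 1.0) - (-tau * k))))  # alpha = 1 gives k'(tau)
print(fractional_se_kernel(tau, 0.5))                               # an intermediate order
```

Because $(i\omega)^\alpha$ at $-\omega$ is the complex conjugate of its value at $\omega$ and the spectral density is even, the inverse transform is real, which is why only the real part is kept.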

3.2. Processing of the System of Linear PDEs

Consider the following system of linear partial differential equations,

where x is a D-dimensional vector, , , and are linear operators, and denotes the unknown parameters of (13).

Assume the following prior hypotheses

to be two mutually independent Gaussian processes whose covariance functions have the squared exponential form (4). With noise added to the observed data, we introduce the notations , , and , where , , and .

According to the prior hypotheses, we find

where ,

According to (15), parameters and hyper-parameters can be trained by minimizing the following negative log marginal likelihood

where N is the length of .
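The block structure of the joint covariance for a system can be illustrated with a deliberately simple, hypothetical system in one spatial dimension, $f = \partial_x u + a v$ and $g = b u + \partial_x v$, with $u$ and $v$ independent squared exponential Gaussian processes. These operators are an assumption made for illustration, not the system (13) itself. Independence makes the $(u, v)$ block zero, and every other block is an operator applied to $k_{uu}$ or $k_{vv}$.

```python
import numpy as np


def se_1d(x, xp, s2, w):
    """1D SE kernel and the pairwise differences r = x - x'."""
    r = x[:, None] - xp[None, :]
    return s2 * np.exp(-0.5 * w * r**2), r


def system_covariance(x, a, b, s2u=1.0, wu=4.0, s2v=1.0, wv=9.0):
    """Joint covariance of (u, v, f, g) at points x for the HYPOTHETICAL system
    f = du/dx + a*v,  g = b*u + dv/dx,  with u, v independent SE Gaussian processes."""
    ku, r = se_1d(x, x, s2u, wu)
    kv, _ = se_1d(x, x, s2v, wv)
    dku_dx, dku_dxp = -wu * r * ku, wu * r * ku        # d/dx and d/dx' of k_u
    dkv_dxp = wv * r * kv                              # d/dx' of k_v
    d2ku = (wu - wu**2 * r**2) * ku                    # d^2 k_u / (dx dx')
    d2kv = (wv - wv**2 * r**2) * kv
    Z = np.zeros_like(ku)                              # cov(u, v) = 0 by independence
    K_uf, K_ug = dku_dxp, b * ku
    K_vf, K_vg = a * kv, dkv_dxp
    K_ff = d2ku + a**2 * kv
    K_fg = b * dku_dx + a * dkv_dxp
    K_gg = b**2 * ku + d2kv
    return np.block([
        [ku,     Z,      K_uf,   K_ug],
        [Z,      kv,     K_vf,   K_vg],
        [K_uf.T, K_vf.T, K_ff,   K_fg],
        [K_ug.T, K_vg.T, K_fg.T, K_gg],
    ])


x = np.linspace(0.0, 1.0, 15)
K = system_covariance(x, a=1.0, b=2.0)
eig = np.linalg.eigvalsh(K)
print(K.shape, eig.min() / eig.max())
```

The unknown coefficients $a$ and $b$ appear only inside the blocks, so, as in the scalar case, they can be trained by minimizing the negative log marginal likelihood of the stacked observations.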

After the training step, we can write the posterior distribution of , , and as follows,

where

4. Numerical Tests

This section presents four examples to demonstrate the validity of the proposed methodology. We consider the inverse problems of four types of PDEs: high-order partial differential equations, partial integro-differential equations, fractional partial differential equations and a system of partial differential equations.

To measure the prediction error of the algorithm, we use the relative error between the exact solution and the predicted solution,

where represents a prediction point, is the exact solution at this point, and is the corresponding predicted solution.
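A small helper for this metric is sketched below. It assumes the relative error is the ratio of Euclidean norms taken over all prediction points, which is the usual choice in this setting. The exact definition in (18) is not recoverable from the text above, so treat this as an assumption. The function name is hypothetical.

```python
import numpy as np


def relative_l2_error(u_exact, u_pred):
    """||u_exact - u_pred||_2 / ||u_exact||_2 over all prediction points."""
    u_exact = np.asarray(u_exact, dtype=float)
    u_pred = np.asarray(u_pred, dtype=float)
    return np.linalg.norm(u_exact - u_pred) / np.linalg.norm(u_exact)


print(relative_l2_error([3.0, 4.0], [3.0, 5.0]))
```

Normalizing by the norm of the exact solution makes errors comparable across quantities of different magnitude, such as $u$ and $f$.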

4.1. Simulation for a High-Order Partial Differential Equation

Example 1.

where , . The exact solution is and the inhomogeneous term is .

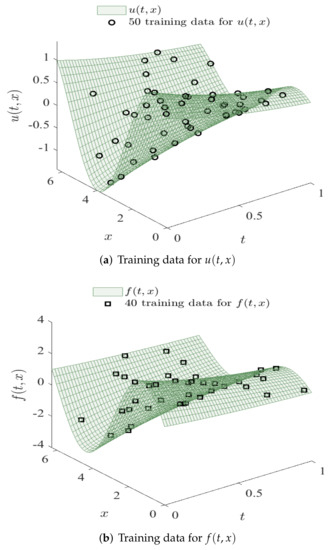

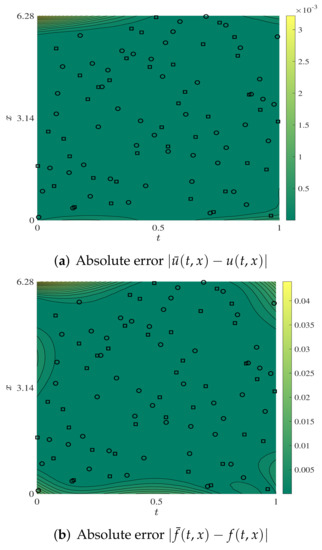

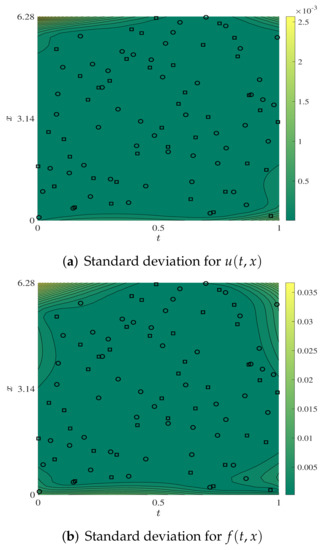

Numerical experiments are performed with the noiseless data of Example 1. We denote the amount of training data of $u$ by and that of $f$ by . Take and in this subsection. Fix to be (1, 1); the estimated value is (1.014005, 1.014634). Figure 1 shows the distribution of the training data of $u$ and $f$. Figure 2 shows the estimation errors and of $u$ and $f$, respectively, and Figure 3 shows the posterior standard deviation of the corresponding predicted solutions. Figures 2 and 3 show that the posterior standard deviation is positively correlated with the prediction error. The posterior distribution of the Gaussian process returns a satisfactory numerical approximation to $u$ and $f$.

Figure 1.

High-order partial differential equation: training data for $u$ and $f$.

Figure 2.

High-order partial differential equation: prediction error for $u$ and $f$.

Figure 3.

High-order partial differential equation: standard deviation for $u$ and $f$.

Table 1 shows the estimated values of and the relative errors for $u$ and $f$, where is fixed to be (1, 1), (1, 2), (2, 1) and (2, 2), in turn. The results indicate that Gaussian-process-based parameter estimation achieves relatively high accuracy for high-order PDEs.

Table 1.

High-order partial differential equation: estimated values of and the relative error for and , where , , and is fixed to be (1, 1), (1, 2), (2, 1) and (2, 2) in turn.

4.2. Simulation for a Fractional Partial Differential Equation

Example 2.

where and the fractional order α is a given parameter. Assume , and the fractional partial derivative in (20) is defined in the Caputo sense as the following,

The exact solution is and the inhomogeneous term is .

Experiments are performed with the noiseless data of Example 2. Table 2 shows the estimated values of Q and the relative errors for $u$ and $f$, where is fixed to be (0.25, 1), (0.5, 1), (0.75, 1), (0.25, 2), (0.5, 2) and (0.75, 2), in turn. The results indicate that Gaussian-process-based parameter estimation achieves relatively high accuracy for fractional partial differential equations. Furthermore, the posterior distribution of the Gaussian process returns a satisfactory numerical approximation to $u$ and $f$.

Table 2.

Fractional partial differential equation: estimated values of Q and the relative error for and where , , is fixed to be (0.25, 1), (0.5, 1), (0.75, 1), (0.25, 2), (0.5, 2) and (0.75, 2), in turn.

4.3. Simulation for a Partial Integro-Differential Equation

Example 3.

where , , and . The exact solution is , and the inhomogeneous term is .

Experiments are performed with the noiseless data of Example 3. Table 3 shows the estimated values of and the relative errors for $u$ and $f$, where is fixed to be (1, 1), (1, 2), (2, 1) and (2, 2), in turn. The results indicate that Gaussian-process-based parameter estimation achieves relatively high accuracy for partial integro-differential equations. Furthermore, the posterior distribution of the Gaussian process returns a satisfactory numerical approximation to $u$ and $f$.

Table 3.

Partial integro-differential equation: estimated values of and the relative error for and , where , , is fixed to be (1, 1), (1, 2), (2, 1) and (2, 2), in turn.

Moreover, we investigate how the amount of training data and the noise level affect the estimation accuracy, where is taken as (1, 1). Table 4 shows the estimated values of and the relative errors for $u$ and $f$, where and are fixed to be 10, 20, 30, 40 and 50, in turn. The results show that, in general, the more training data are used, the higher the prediction accuracy. Table 5 shows the estimated values and the relative errors when the training data are corrupted by additive noise of different levels.

Table 4.

Numerical results for Example 3. Impact of the amount of training data: estimated values of and the relative error for and , where is fixed to be (1,1) and noise-free data is used.

Table 5.

Numerical results for Example 3. Impact of the levels of additive noise: estimated values of and the relative error for and where , is fixed to be (1,1).

According to Table 5, the method still estimates the parameters with reasonable accuracy when noise-free data cannot be obtained, although the estimation accuracy is sensitive to noise in $u$, which is likely due to the high complexity of the PDEs considered in this paper. This high sensitivity to noise in the latent solution $u$ considerably limits the applicability of Gaussian processes, and the problem deserves further research.

4.4. Simulation for a System of Partial Differential Equations

Example 4.

where , . , , and satisfy Equation (23).

Experiments are performed with noiseless data of Example 4. Denote that the amount of training data of is , the amount of training data is , the amount of training data is , and the amount of training data is . Take and in the experiments.

Table 6 shows the estimated values of (a, b, c, d) and the corresponding prediction errors for u, v, and , where (a, b, c, d) is fixed to be (1, 1, 1, 1), (1, 1, 2, 2), (2, 2, 1, 1) and (2, 2, 2, 2), in turn. The results show that Gaussian-process-based parameter estimation achieves high accuracy for the system of linear PDEs, and that the posterior distribution of the Gaussian process returns a satisfactory numerical approximation to u, v, and .

Table 6.

A system of partial differential equations: estimated values of (a, b, c, d) and the relative error for u, v, and , where , , and (a, b, c, d) is fixed to be (1, 1, 1, 1), (1, 1, 2, 2), (2, 2, 1, 1) and (2, 2, 2, 2), in turn.

5. Conclusions

In this paper, we explored the use of Gaussian processes to solve inverse problems of complex linear partial differential equations, including high-order partial differential equations, partial integro-differential equations, fractional partial differential equations and a system of partial differential equations. The main steps of the Gaussian process method are to encode the distribution information of the data into the kernels (covariance functions) of the Gaussian process priors, to transform the unknown parameters of the linear operators into hyper-parameters of the kernels, to train the parameters and hyper-parameters by minimizing the negative log marginal likelihood, and to infer the solution of the considered equations.

Numerical experiments showed that the data-driven method based on Gaussian processes estimated the unknown parameters of the considered PDEs with high accuracy, indicating that Gaussian processes perform well on linear problems. Furthermore, the posterior distribution of the Gaussian process returns a satisfactory numerical approximation to the latent solution and the inhomogeneous term of the PDEs. However, the estimation accuracy of the unknown parameters was sensitive to noise in the latent solution, which deserves further research. In future work, we may focus on using Gaussian processes to solve inverse problems of nonlinear PDEs and on multi-parameter estimation for fractional partial differential equations.

Author Contributions

All the authors contributed equally to this paper. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by National Natural Science Foundation of China (No. 71974204).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Rudy, S.H.; Brunton, S.L.; Proctor, J.L.; Kutz, J.N. Data-driven discovery of partial differential equations. Sci. Adv. 2017, 3, e1602614.

2. Ghahramani, Z. Probabilistic machine learning and artificial intelligence. Nature 2015, 521, 452–459.

3. Chen, I.Y.; Joshi, S.; Ghassemi, M.; Ranganath, R. Probabilistic machine learning for healthcare. Annu. Rev. Biomed. Data Sci. 2021, 4, 393–415.

4. Maslyaev, M.; Hvatov, A.; Kalyuzhnaya, A.V. Partial differential equations discovery with EPDE framework: Application for real and synthetic data. J. Comput. Sci. 2021, 53, 101345.

5. Lorin, E. From structured data to evolution linear partial differential equations. J. Comput. Phys. 2019, 393, 162–185.

6. Arbabi, H.; Bunder, J.E.; Samaey, G.; Roberts, A.J.; Kevrekidis, I.G. Linking machine learning with multiscale numerics: Data-driven discovery of homogenized equations. JOM 2020, 72, 4444–4457.

7. Chang, H.; Zhang, D. Machine learning subsurface flow equations from data. Comput. Geosci. 2019, 23, 895–910.

8. Martina-Perez, S.; Simpson, M.J.; Baker, R.E. Bayesian uncertainty quantification for data-driven equation learning. Proc. R. Soc. A 2021, 477, 20210426.

9. Dal Santo, N.; Deparis, S.; Pegolotti, L. Data driven approximation of parametrized PDEs by reduced basis and neural networks. J. Comput. Phys. 2020, 416, 109550.

10. Raissi, M.; Perdikaris, P.; Karniadakis, G.E. Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. J. Comput. Phys. 2019, 378, 686–707.

11. Kaipio, J.; Somersalo, E. Statistical and Computational Inverse Problems; Springer Science & Business Media: New York, NY, USA, 2006.

12. Kremsner, S.; Steinicke, A.; Szölgyenyi, M. A deep neural network algorithm for semilinear elliptic PDEs with applications in insurance mathematics. Risks 2020, 8, 136.

13. Guo, Y.; Cao, X.; Liu, B.; Gao, M. Solving partial differential equations using deep learning and physical constraints. Appl. Sci. 2020, 10, 5917.

14. Chen, Z.; Liu, Y.; Sun, H. Physics-informed learning of governing equations from scarce data. Nat. Commun. 2021, 12, 6136.

15. Gelbrecht, M.; Boers, N.; Kurths, J. Neural partial differential equations for chaotic systems. New J. Phys. 2021, 23, 43005.

16. Cheung, K.C.; See, S. Recent advance in machine learning for partial differential equation. CCF Trans. High Perform. Comput. 2021, 3, 298–310.

17. Omidi, M.; Arab, B.; Rasanan, A.H.; Rad, J.A.; Par, K. Learning nonlinear dynamics with behavior ordinary/partial/system of the differential equations: Looking through the lens of orthogonal neural networks. Eng. Comput. 2021, 38, 1635–1654.

18. Lagergren, J.H.; Nardini, J.T.; Lavigne, G.M.; Rutter, E.M.; Flores, K.B. Learning partial differential equations for biological transport models from noisy spatio-temporal data. Proc. R. Soc. A 2020, 476, 20190800.

19. Koyamada, K.; Long, Y.; Kawamura, T.; Konishi, K. Data-driven derivation of partial differential equations using neural network model. Int. J. Model. Simul. Sci. Comput. 2021, 12, 2140001.

20. Kalogeris, I.; Papadopoulos, V. Diffusion maps-aided Neural Networks for the solution of parametrized PDEs. Comput. Methods Appl. Mech. Eng. 2021, 376, 113568.

21. Rifkin, R.; Yeo, G.; Poggio, T. Regularized least-squares classification. Nato Sci. Ser. Sub Ser. III Comput. Syst. Sci. 2003, 190, 131–154.

22. Drucker, H.; Wu, D.; Vapnik, V.N. Support vector machines for spam categorization. IEEE Trans. Neural Netw. 2002, 10, 1048–1054.

23. Williams, C.K.; Rasmussen, C.E. Gaussian Processes for Machine Learning; MIT Press: Cambridge, MA, USA, 2006.

24. Raissi, M.; Perdikaris, P.; Karniadakis, G.E. Machine learning of linear differential equations using Gaussian processes. J. Comput. Phys. 2017, 348, 683–693.

25. Yang, S.; Xiong, X.; Nie, Y. Iterated fractional Tikhonov regularization method for solving the spherically symmetric backward time-fractional diffusion equation. Appl. Numer. Math. 2021, 160, 217–241.

26. Bernardo, J.M.; Smith, A.F. Bayesian Theory; John Wiley & Sons: Hoboken, NJ, USA, 2009.

27. Oates, C.J.; Sullivan, T.J. A modern retrospective on probabilistic numerics. Stat. Comput. 2019, 29, 1335–1351.

28. Hennig, P.; Osborne, M.A.; Girolami, M. Probabilistic numerics and uncertainty in computations. Proc. R. Soc. A Math. Phys. Eng. Sci. 2015, 471, 20150142.

29. Conrad, P.R.; Girolami, M.; Särkkä, S.; Stuart, A.; Zygalakis, K. Statistical analysis of differential equations: Introducing probability measures on numerical solutions. Stat. Comput. 2017, 27, 1065–1082.

30. Hennig, P. Fast probabilistic optimization from noisy gradients. In Proceedings of the International Conference on Machine Learning PMLR, Atlanta, GA, USA, 17–19 June 2013; pp. 62–70.

31. Kersting, H.; Sullivan, T.J.; Hennig, P. Convergence rates of Gaussian ODE filters. Stat. Comput. 2020, 30, 1791–1816.

32. Raissi, M.; Karniadakis, G.E. Hidden physics models: Machine learning of nonlinear partial differential equations. J. Comput. Phys. 2018, 357, 125–141.

33. Raissi, M.; Perdikaris, P.; Karniadakis, G.E. Inferring solutions of differential equations using noisy multi-fidelity data. J. Comput. Phys. 2017, 335, 736–746.

34. Raissi, M.; Perdikaris, P.; Karniadakis, G.E. Numerical Gaussian processes for time-dependent and nonlinear partial differential equations. SIAM J. Sci. Comput. 2018, 40, A172–A198.

35. Scholkopf, B.; Smola, A.J.; Bach, F. Learning with Kernels: Support Vector Machines, Regularization, Optimization, and Beyond; MIT Press: Cambridge, MA, USA, 2002.

36. Tipping, M.E. Sparse Bayesian learning and the relevance vector machine. J. Mach. Learn. Res. 2001, 1, 211–244.

37. Konig, H. Eigenvalue Distribution of Compact Operators; Birkhäuser: Basel, Switzerland, 2013.

38. Berlinet, A.; Thomas-Agnan, C. Reproducing Kernel Hilbert Spaces in Probability and Statistics; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2011.

39. Zhu, C.; Byrd, R.H.; Lu, P.; Nocedal, J. Algorithm 778: L-BFGS-B: Fortran subroutines for large-scale bound-constrained optimization. ACM Trans. Math. Softw. (TOMS) 1997, 23, 550–560.

40. Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

41. Dorigo, M.; Stützle, T. Ant colony optimization: Overview and recent advances. In Handbook of Metaheuristics; Springer: Cham, Switzerland, 2019.

42. Raissi, M.; Babaee, H.; Karniadakis, G.E. Parametric Gaussian process regression for big data. Comput. Mech. 2019, 64, 409–416.

43. Snelson, E.; Ghahramani, Z. Sparse Gaussian processes using pseudo-inputs. In Advances in Neural Information Processing Systems 18 (NIPS 2005); MIT Press: Cambridge, MA, USA, 2005.

44. Liu, H.; Ong, Y.S.; Shen, X.; Cai, J. When Gaussian process meets big data: A review of scalable GPs. IEEE Trans. Neural Netw. Learn. Syst. 2020, 31, 4405–4423.

45. Milici, C.; Draganescu, G.; Machado, J.T. Introduction to Fractional Differential Equations; Springer: Cham, Switzerland, 2018.

46. Podlubny, I. Fractional Differential Equations; Academic Press: San Diego, CA, USA, 1998.

47. Povstenko, Y. Linear Fractional Diffusion-Wave Equation for Scientists and Engineers; Birkhäuser: New York, NY, USA, 2015.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).