Everything is now in place to prove the main results of this paper.

5.1. Proof of Theorem 2

Here, the eigenproblem is . The proof proceeds in five steps. In the first four steps, is imposed temporarily; this condition is removed in Remark 3.
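For orientation, the eigenproblem can also be explored numerically. The following is a minimal sketch and not part of the proof. It assumes the standard sfBm covariance C_H(s,t) = s^(2H) + t^(2H) - ((s+t)^(2H) + |s-t|^(2H))/2 on [0,1], which is not displayed in this section. It discretizes the integral operator with a midpoint (Nyström) rule and estimates the largest eigenvalue by power iteration. The function names, the grid size and the iteration count are illustrative choices.

```python
import math


def sfbm_cov(s: float, t: float, hurst: float) -> float:
    """Assumed sfBm covariance: s^2H + t^2H - ((s+t)^2H + |s-t|^2H) / 2."""
    p = 2.0 * hurst
    return s**p + t**p - 0.5 * ((s + t) ** p + abs(s - t) ** p)


def top_eigenvalue(hurst: float, n: int = 100, iters: int = 60) -> float:
    """Largest eigenvalue of the midpoint-rule discretization on [0, 1]."""
    h = 1.0 / n
    nodes = [(i + 0.5) * h for i in range(n)]
    # Quadrature-weighted kernel matrix; symmetric because all weights equal h.
    mat = [[h * sfbm_cov(s, t, hurst) for t in nodes] for s in nodes]
    # Unit-norm starting vector for the power iteration.
    vec = [1.0 / math.sqrt(n)] * n
    lam = 0.0
    for _ in range(iters):
        new = [sum(a * x for a, x in zip(row, vec)) for row in mat]
        # For a unit vector vec, the norm of mat @ vec converges to the top eigenvalue.
        lam = math.sqrt(sum(x * x for x in new))
        vec = [x / lam for x in new]
    return lam


if __name__ == "__main__":
    for hurst in (0.5, 0.75):
        print(hurst, top_eigenvalue(hurst))
```

For H = 1/2 the assumed kernel reduces to min(s, t), the Brownian covariance, whose largest eigenvalue on [0,1] is 4/π²; this gives a simple check of the discretization.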

Step 1. Obviously, for the operator

in

, there exists a linear operator

in

with the same operator norm, whose element is as follows:

It is easy to see that for every

, there holds the following:

by integration by parts, since

. Splitting the right hand side of (

36) into two integrals, the linear operator

has a decomposition

, where the corresponding elements satisfy the same relation, i.e.,

for every

.
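At the level of covariance kernels, a splitting of this kind can be sketched as follows. The display assumes the standard sfBm covariance, written here as C_H, and the standard fBm covariance, written as R_H. Both symbols are illustrative, since this section does not display them, and the splitting of (36) itself is carried out after the integration by parts.

```latex
% Assumed notation: C_H = sfBm covariance, R_H = fBm covariance (illustrative symbols).
\[
C_H(s,t) = s^{2H} + t^{2H} - \tfrac12\left[(s+t)^{2H} + |s-t|^{2H}\right]
         = \underbrace{\tfrac12\left[s^{2H} + t^{2H} - |s-t|^{2H}\right]}_{R_H(s,t)}
         + \tfrac12\left[s^{2H} + t^{2H} - (s+t)^{2H}\right].
\]
```

Only the first piece involves |s - t|; this is presumably the fractional Brownian part treated in [5].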

Step 2. Consider the linear operator

in

with elements of the form

, which was discussed in [

5]. There exists a decomposition

, where the linear operators D and O correspond to infinite-dimensional matrices whose elements are, respectively, the leading-order diagonal piece and the higher-order off-diagonal piece of

. More precisely, according to the proof of Theorem 1 in Appendix A of [

5], there holds the following:

for

, where

for every

.

Remark 2. Applying Lemma 3 above, one finds that the order of the remainder of in [5] (see also (38) above) is not correct; the correct one is given by (56). In order to obtain the exact rate of convergence of

O, (

39) could be rewritten as follows:

It is sufficient to discuss the case of

because of the symmetry with respect to the subscripts

m and

n in (

37). Regardless of whether

is even, it is clear that the following holds:

which leads to the following (the notation

means

f and g are of the same order of magnitude):

by noticing the boundedness of

in

.

Step 3. Next, consider the linear operator

, whose element is as follows:

Simply decompose into as done in Step 2, where , and .

Step 3.1. To calculate the elements of

, first divide the square

into two sub-domains

,

(see

Figure 1), where

represents the triangle enclosed by the lines

,

and

;

is the triangle enclosed by

,

and

. It leads to the following:

Through the change of variables,

it maps

and

to

and

respectively. Changing variables in the double integral gives the following:

It is convenient to denote the two integral terms on the right-hand side of (

46) by

and

.

Combining this with the standard trigonometric identities gives the following:

Substituting the above identities into

, the following can be deduced:

Step 3.2. Since the only singularity of the integrands in occurs at , the contribution of should be much greater than that of . To see this, the following integral identities are needed; they are mainly based on Lemma 2 and Lemma 3.

To calculate

, the order of

needs to be estimated. By setting

and

, Lemma 2 gives the following:

for

. Based on the same idea, the order of the remainder term can be improved. For example, the following is true for

:

Moreover, there holds the following:

for

.

By setting

and

, the two identities in Lemma 3 are turned into the following:

for

, in order to calculate

.

On the one hand, it is mentioned in Remark 2 that the asymptotics in (

38) are not correct. As a matter of fact, using Lemma 3, the correct ones can be deduced. That is, the diagonal part of the matrix corresponding to fractional Brownian motion can be revised as follows:

for

.

On the other hand, by integration by parts (see the proof of Lemma 3) and the second mean value theorem for Riemann integrals, the following is valid:

for

. Furthermore, there holds the following:

for

.

Step 3.3. Calculate

and

in the case of

. First, applying the fundamental theorem of calculus in (

47) yields the following:

which gives the following:

in terms of Lemma 3 (see (

55) and (

58)). Observing that for

,

it means that

i.e., the order of

is the same as

.

Next, the goal is to calculate

. It is clear that the following holds:

which leads to the following:

in terms of Lemma 2 (see (

51) and (

53)). Altogether, this gives the following:

which verifies that the contribution of

is smaller than that of

.

Since

for

,

is of the same order as

for

.

Step 3.4. Calculate

and

in the case of

. First, (

47) can be transformed into the following:

which gives the following:

by virtue of (

57) and (

55). Secondly, the following is valid:

which implies the following:

by using (

50)–(

52). Since

it implies the following:

i.e.,

is of the same order as

for

.

Step 4. Summarize all asymptotic information for . Noting that and , also has a decomposition , just like the linear operator A in Step 2, if and are set.

The orders of the elements of

are as follows. For the diagonal piece, combining (56) with (72) gives the following:

for

. For the off-diagonal piece, (42) and (66) imply the following:

for

.

Indeed, it is easily seen (see Remark 3) that the results for the orders of the elements of

are still true for

since every function on both sides of (

73) and (

74) is holomorphic in (0,1).
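The continuation argument rests on the identity theorem from complex analysis, recalled here for convenience. The symbols f, g, D and S are illustrative and are not the paper's notation; how the theorem is applied to (73) and (74) is described in Remark 3.

```latex
% Identity theorem (standard complex analysis); f, g, D, S are illustrative names.
\text{Let } D \subset \mathbb{C} \text{ be open and connected, and let }
f, g : D \to \mathbb{C} \text{ be holomorphic.} \\
\text{If } f(H) = g(H) \text{ for all } H \in S \subset D,
\text{ where } S \text{ has an accumulation point in } D,
\text{ then } f \equiv g \text{ on } D.
```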

Step 5. It is clear that

is self-adjoint, positive and compact in

. For any fixed

,

is well-defined by the spectral decomposition theorem. Hence,

can be turned into the following:

where

The order of the elements of

is

for

, so the order of the elements of

is

for

. If

, the elements of

are square summable. Therefore,

is a Hilbert–Schmidt operator (and thus compact). The eigenvalues of

are square summable, and thus (arranged in order of decreasing magnitude) satisfy the following:
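For reference, the standard facts behind a step of this kind can be written as follows. Here K, K_{mn} and λ_n are illustrative symbols, and self-adjointness of K is an assumption made for the sketch; the precise bound used in the proof is the one stated in the paper.

```latex
% Illustrative notation: K is a self-adjoint Hilbert--Schmidt operator on \ell^2
% with matrix elements K_{mn} and eigenvalues \lambda_n = \lambda_n(K).
\[
\|K\|_{\mathrm{HS}}^2 = \sum_{m,n} |K_{mn}|^2 = \sum_{n} \lambda_n^2 < \infty .
\]
% If |\lambda_1| \ge |\lambda_2| \ge \cdots, each of the at least n/2 terms
% with n/2 < k \le n dominates \lambda_n^2, and the tail of a convergent series vanishes:
\[
\frac{n}{2}\,\lambda_n^2 \;\le\; \sum_{n/2 < k \le n} \lambda_k^2 \;\longrightarrow\; 0
\quad (n \to \infty)
\qquad\Longrightarrow\qquad
\lambda_n = o\!\left(n^{-1/2}\right).
\]
```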

Given any

, by setting

, the following is true:

in terms of Lemma 4. Since

is arbitrarily chosen, the above inequality can be rewritten as follows:

Now, Lemma 5 yields the following:

by setting

,

and

. Letting

(i.e.,

) and making use of the arbitrariness of

, it implies the following:

There are two error terms in the above inequality, and they need to be merged. Comparing their orders leads to the following two cases:

1. If

(i.e.,

), there holds the following:

2. If

(i.e.,

), there holds the following:

Repeating the above argument with , gives the following:

1. If

, there holds the following:

2. If

, there holds the following:

The proof is complete. □

Remark 3. In the proof of Theorem 2, is imposed. In fact, (see (35)) is holomorphic with respect to the variable H in , and so are and . Moreover, the first three terms on the right-hand side of (73) are holomorphic in , and so is the remaining term in (73). By the principle of analytic continuation, (73) is still valid for . The same argument works for the off-diagonal piece in the case of .

5.2. Proof of Theorem 3

Theorem 3 can be proved along the lines of the proof of Theorem 2. Only a sketch is given here, with emphasis on the parts that differ from the proof of Theorem 2. The analogue of Step 3.2 in the proof of Theorem 2 is skipped, since the technical lemmas have already been presented there.

Formally speaking, the covariance function

is the “mixed partial derivative” of

. From the point of view of the general white noise theory (cf. [

4]), the sfBm is the integral process of the one related to

in a rigorous sense since the following holds:

if

. Hence, it is reasonable to study the eigenproblem

.
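The "mixed partial derivative" relation can be checked numerically. The sketch below assumes the standard sfBm covariance, which is not displayed in this section, together with the closed form H(2H-1)(|s-t|^(2H-2) - (s+t)^(2H-2)) obtained by differentiating that covariance once in each variable for s ≠ t. All function names and the step size are illustrative.

```python
def sfbm_cov(s: float, t: float, hurst: float) -> float:
    """Assumed sfBm covariance: s^2H + t^2H - ((s+t)^2H + |s-t|^2H) / 2."""
    p = 2.0 * hurst
    return s**p + t**p - 0.5 * ((s + t) ** p + abs(s - t) ** p)


def noise_kernel(s: float, t: float, hurst: float) -> float:
    """Formal mixed partial of sfbm_cov for s != t and s, t > 0."""
    q = 2.0 * hurst - 2.0
    return hurst * (2.0 * hurst - 1.0) * (abs(s - t) ** q - (s + t) ** q)


def mixed_difference(s: float, t: float, hurst: float, step: float = 1e-3) -> float:
    """Central finite-difference approximation of d^2 C / (ds dt)."""
    c = sfbm_cov
    return (
        c(s + step, t + step, hurst)
        - c(s + step, t - step, hurst)
        - c(s - step, t + step, hurst)
        + c(s - step, t - step, hurst)
    ) / (4.0 * step * step)


if __name__ == "__main__":
    s, t, hurst = 0.3, 0.7, 0.75
    print(mixed_difference(s, t, hurst), noise_kernel(s, t, hurst))
```

The comparison is made away from the diagonal s = t, where the closed form is singular, and for H > 1/2, where the singularity is integrable.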

Step 1. The matrix element related to the linear operator

becomes the following:

By splitting the right hand side of the above identity into two integrals,

has a decomposition

, where

corresponds to the part of fractional Brownian noise (cf. [

5,

6]).

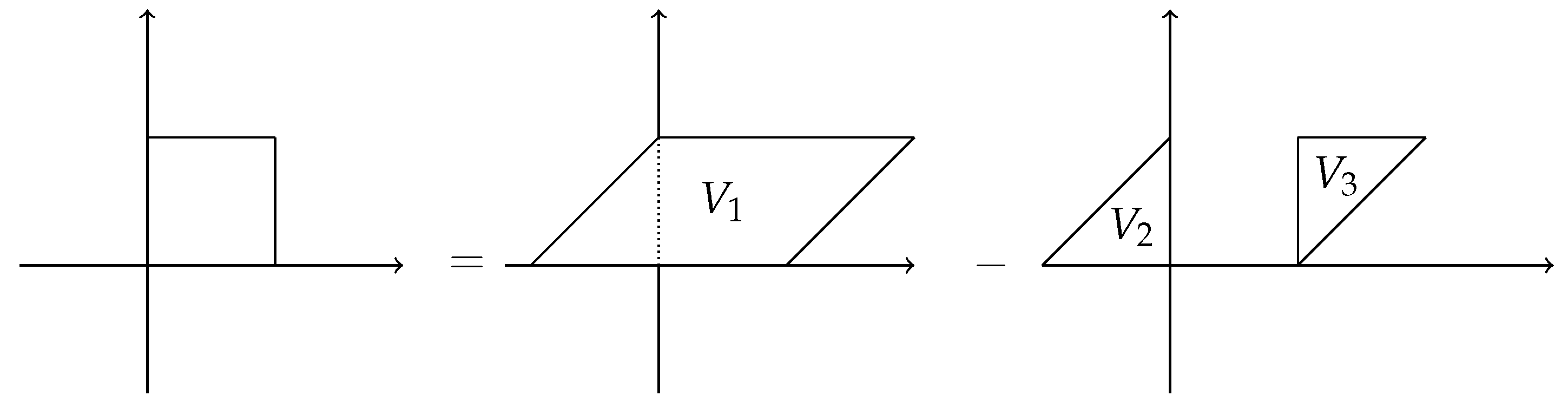

To calculate

, following the method in [

5],

can be represented by a parallelogram

minus two triangles

and

(see

Figure 2), where

is enclosed by the lines

,

,

and

;

is enclosed by

,

and

; and

is enclosed by

,

and

.

By denoting

it is clear that

By changing the variables

,

in the double integral, the following can be deduced:

To calculate

, first of all, perform the mapping

to

by changing variables

, and

. Then, the integral over this region is greatly simplified under the change of variables

,

, which gives the following:

To calculate

, two sub-domains

,

are chosen as in Step 3.1 of

Section 5.1. Designating

it gives the following:

Step 2. The next task is to extract diagonal and off-diagonal information from

. By setting

, and

, a decomposition

is obtained. Moreover, the following hold:

The details for handling the part are given, while those for are omitted, except for the conclusions.

Step 2.1. Calculate

and

in the case of

. Simple calculations show the following:

Proceeding as in Step 3 of Section 5.1, it is straightforward to verify the following:

Using (

51), (

53), (

55) and (

58), one obtains the following:

Using the same techniques as Step 3.3 with Lemma 3, it is obvious that

Step 2.2. Calculate

and

in the case of

. It is easy to check the following:

Following a procedure similar to Step 3 of Section 5.1, the following holds:

Using (

50)–(

52) and (

57), one arrives at the following:

Step 2.3. Summarize the asymptotic information for the matrix elements of

. The asymptotics for the diagonal piece of

are as follows:

if

, and the ones for the off-diagonal piece are as follows:

if

.

Step 3. Noticing that

is self-adjoint and positive, given any

,

can also be written as

with

. Since the order of the elements of

is

when

, the order of the ones of

is

when

. If

, it is easy to verify that the elements of

are square summable. In fact,

The square summability of

is verified since

when

. Therefore,

is a Hilbert–Schmidt operator (and thus compact). Lemma 4 immediately gives the following:

Setting

,

and

in Lemma 5, the following can be deduced:

Choosing

(i.e.,

), it implies the following:

Repeating the argument with

,

gives the following:

The proof is complete. □