5. Numerical Experiments with Discussion

Various types of test problems are considered from different sources including [3, 19, 22]. The approximate solutions

up to 50 decimal places are shown against each test function. The error tolerance

used to terminate the iterations is set to

, whereas the precision is chosen to be as large as 4000, and

N shows the total number of iterations taken by the method to achieve the required tolerance. In addition, physically applicable nonlinear models such as the Van der Waals equation, the Shockley ideal diode electric circuit model, the conversion of substances in a batch reactor, and the Lorenz equations in meteorology are taken into consideration to demonstrate the applicability of the proposed method

. Obtained numerical results are tabulated for further analysis. Each table contains different initial guesses, numbers of iterations required by a method to achieve the preset error tolerance, function evaluations needed for each method, approximated computational orders of convergence

where

, absolute errors, absolute values of functions at the last iteration, and the execution (CPU) time in seconds.
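The quantities reported in the tables can be illustrated with a short sketch. It assumes the commonly used definition of the approximate computational order of convergence, ACOC ≈ ln|(x_{k+1} − x_k)/(x_k − x_{k−1})| / ln|(x_k − x_{k−1})/(x_{k−1} − x_{k−2})|, which may differ in detail from the formula used in this paper. For brevity it uses plain Newton iteration on a hypothetical test equation x² − 2 = 0 rather than the proposed method, and a working precision of 400 digits instead of 4000; the function names are illustrative only.

```python
from decimal import Decimal, getcontext

getcontext().prec = 400  # working precision in digits (an assumption; the paper uses 4000)

def newton(f, df, x0, tol, max_iter=50):
    """Plain Newton iteration; returns the full list of iterates."""
    xs = [x0]
    for _ in range(max_iter):
        xs.append(xs[-1] - f(xs[-1]) / df(xs[-1]))
        # Stop once two successive iterates agree to within the tolerance.
        if abs(xs[-1] - xs[-2]) < tol:
            break
    return xs

def acoc(xs):
    """Approximate computational order of convergence from the last four iterates."""
    e2 = abs(xs[-1] - xs[-2])
    e1 = abs(xs[-2] - xs[-3])
    e0 = abs(xs[-3] - xs[-4])
    return (e2 / e1).ln() / (e1 / e0).ln()

# Hypothetical test equation, not one of the paper's test problems.
f = lambda x: x * x - 2
df = lambda x: 2 * x

iterates = newton(f, df, Decimal(1), Decimal(10) ** -100)
order = acoc(iterates)
n_iterations = len(iterates) - 1          # column N in the tables
abs_function_value = abs(f(iterates[-1]))  # |f(x_N)| at the last iterate
```

For Newton's method the computed `order` should be close to 2; for a method of order p the same estimate should approach p, provided the working precision is large enough to resolve the last few differences.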

For the test problem 2 (

, two initial guesses are chosen for simulations, as can be seen in

Table 3. For the initial guess

, it is observed that the minimum number of iterations is taken by the methods

and

to achieve the error tolerance; however, the smallest error is given by

while consuming an equivalent amount of CPU time. The method

takes the maximum number of iterations at

. Under the second initial guess

, although many methods including

take the equal number of iterations to achieve

, the smallest absolute error and thus smallest absolute functional value is achieved by

. This shows that if an initial guess lying near to the solution of

is passed to

, then the method yields the smallest error.

For the test problem 2 (

, two initial guesses are chosen for simulations as can be seen in

Table 4. One of them is taken far away from the approximate solution of the quintic equation

. For

, the smallest possible absolute error is yielded by

, but at the cost of the maximum number of iterations and largest amount of CPU time. Next comes

with an absolute error of 9.2097

and greater time efficiency, but it requires one more iteration when compared with

, which achieves an absolute error of 1.9515

with only four iterations while consuming a reasonable amount of CPU time. When an initial guess lying near to the root is chosen, the method

achieves the smallest error, but it requires one extra iteration when compared with

and

. The most expensive methods (in terms of N, FV, and CPU time) for this particular test problem seem to be

and

.

For the transcendental problem 2 (

, two initial guesses are chosen for simulations, as can be seen in

Table 5. Under both of the initial guesses, the maximum numbers of function evaluations are taken by

followed by

to achieve the desired error tolerance. The smallest absolute functional values are obtained with

and

, where

seems to be the most expensive method in terms of the number of iterations. Although the method

consumes the least CPU time, its ACOC is only eight, contradicting the theoretical order of convergence found in [21].

For the transcendental problem 2 (

, two initial guesses are chosen for simulations as can be seen in

Table 6. One of the guesses is taken far away from the approximate solution of

. Under both initial guesses, it is observed that the method

takes the fewest iterations, fewest function evaluations, and fewest CPU seconds with unsatisfactory absolute errors under

. The method

diverges for the second initial guess

, whereas

is the most expensive method concerning

N and

, in particular. The proposed method

performs reasonably well under the initial guesses and does not diverge under any situation.

For the test problem 2 (

, three initial guesses are chosen for simulations as can be seen in

Table 7. Two of the guesses lie far away from the approximate root of

. It is easy to observe that the method

performs better than other methods, even when the initial guesses are not near to the approximate root, since the number of iterations to attain the required accuracy is the smallest with

Once again, the most expensive method regarding

N and

proves to be

, whereas

fails to converge to the desired root when the initial guesses are taken far from the root. The absolute error achieved by

with

is the smallest when compared with the results of other methods.

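Example 3. Volume from Van der Waals’ Equation [37]. The Van der Waals equation is represented by the following model:

$$\left(P + \frac{a n^{2}}{V^{2}}\right)(V - n b) = n R T. \tag{43}$$

After some simplifications, one obtains the following polynomial of nonlinear form:

$$P V^{3} - (n b P + n R T)\,V^{2} + a n^{2} V - a b n^{3} = 0. \tag{44}$$

The Van der Waals equation of state was formulated in 1873, with two constants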

a and

b (Van der Waals constants) determined from the behavior of a substance at the critical point. The equation is based on two effects, which are the molecular size and attractive force between the molecules. The model (43) is a modified version of the ideal gas equation

where

n shows the number of moles,

R stands for the universal gas constant (0.0820578),

T is the absolute temperature,

V shows the volume, and

P denotes the absolute pressure. If

moles of benzene vapor form under

atm with

and

, then one has

The approximate solution up to 50 dp is given as:

Being cubic, Equation (45) possesses at least one real root. Here, we aim to show the performance of

on this model. Therefore, the model is numerically solved by

and the other six methods chosen for comparison. It can be observed in

Table 8 that

achieves the smallest possible error

along with functional values nearest to 0 in a reasonable amount of time, irrespective of the initial guesses.

Example 4. The Shockley Ideal Diode Electric Circuit Model.

The Shockley diode model giving the voltage across the diode is represented by the following equation: where stands for the saturation current, n is the emission coefficient, is the thermal voltage, and J is the diode current. Using Kirchhoff’s second law () and Ohm’s law (), a root-finding model can be found. The final structure for the model would be as follows: Assuming values of parameters from [38], we obtain the following equation that is nonlinear in the variable J: The approximate solution of the above equation correct to 50 dp is as follows:

Table 9 presents numerical simulations for (47) with two different initial conditions of 0.5 and 1.8, under which the smallest absolute error seems to lie under the column of the proposed method

. Further analysis can easily be conducted by the careful examination of the results tabulated therein.

Example 5. Conversion of Species within a Chemical Reactor [39]. The following nonlinear equation arises in chemical engineering during the conversion of species in a chemical reactor: where x stands for the fractional conversion of the species; thus, it must lie in (0, 1). The approximate solution of the above equation correct to 50 dp is as follows: Numerical results can be found in

Table 10, where it is shown that

outperforms all other methods under consideration in terms of absolute error for two different initial conditions.

Example 6. The Two-Dimensional Bratu Model [40]. The two-dimensional Bratu system is given by the following partial differential equation: subject to the following boundary conditions The two-dimensional Bratu system has two bifurcated exact solutions for , a unique solution for , and no solutions for . The exact solution to (49) is determined as follows: where θ is an undetermined constant satisfying the boundary conditions and assumed to be the solution of (49). Using the procedure described in [41], one obtains Differentiating (52) with respect to θ and setting , the critical value satisfies By eliminating λ from (52) and (53), we have the value of for the critical satisfying and . We then obtain from (53). The numerical simulations reported in Table 11 show that the proposed three-step method takes fewer iterations and produces considerably smaller absolute errors with a reasonable amount of CPU time.

Now, we consider five different kinds of nonlinear multidimensional equations and numerically solve them with

,

, and

since the methods

,

, and

were either divergent or not applicable on systems of nonlinear equations. For the systems considered, various types of initial guesses are used, and for comparison purposes, the approximate solution and the normed error

having the same tolerance

and CPU time are taken into consideration. It can be observed in Table 12, Table 13, Table 14, Table 15 and Table 16 that the smallest absolute error is achieved only with the proposed method, within a reasonable amount of CPU time.

Example 7. The nonlinear system of two equations from [3,41] is given as: The exact solution of the system (55) is . The numerical results for this system are shown in Table 12 for the proposed method and the three other methods.

Example 8. Another nonlinear system of three equations taken from [42] is shown below: where its approximate solution up to 50 dp is shown below: The numerical results for the system (56) are shown in Table 13.

Example 9. A three-dimensional nonlinear system is taken from [3] as given below: where its approximate solution up to 50 dp is as follows: The numerical results for the system (58) are shown in Table 14.

Example 10. (Catenary curve and the ellipse ([43], p. 83)): Given below is a nonlinear system of two equations that describe trajectories for the catenary and the ellipse, respectively. We are interested in finding their intersection point that lies in the first quadrant of the Cartesian plane. The system has been solved with the proposed method and the other methods under consideration. The performance of each method is shown in Table 15, whereas an approximate solution of the system (60), correct to 50 dp, is given alongside it.

Example 11. Steady-State Lorenz Equations ([44], p. 816). In this problem, we consider a system developed by Edward Lorenz, an American meteorologist who studied atmospheric convection around the Earth’s surface. Lorenz’s nonlinear system is a set of three ordinary differential equations, as given below: In order to study the steady-state behavior of the system (62), we take

and

to obtain the following nonlinear algebraic system:

The approximate solution for the system (63) correct to 50 dp is given as

The nonlinear steady-state system (63) has been solved numerically, and the results are reported in Table 16.
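The steady-state computation for a Lorenz-type system can be sketched as follows. The sketch is only illustrative: it assumes the classical form of the Lorenz equations, dx/dt = σ(y − x), dy/dt = x(ρ − z) − y, dz/dt = xy − βz, with the textbook parameter values σ = 10, ρ = 28, β = 8/3, which are not necessarily the values used for system (63). It also uses plain Newton's method for systems in double precision rather than the proposed tenth-order scheme, and all function names are hypothetical.

```python
SIGMA, RHO, BETA = 10.0, 28.0, 8.0 / 3.0  # classical values, assumed for illustration

def lorenz_rhs(v):
    """Right-hand side of the (assumed) Lorenz system; zero at a steady state."""
    x, y, z = v
    return [SIGMA * (y - x), x * (RHO - z) - y, x * y - BETA * z]

def lorenz_jacobian(v):
    """Analytic Jacobian of lorenz_rhs."""
    x, y, z = v
    return [[-SIGMA, SIGMA, 0.0],
            [RHO - z, -1.0, -x],
            [y, x, -BETA]]

def solve_linear(a, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x

def newton_system(v0, tol=1e-10, max_iter=50):
    """Newton's method for systems: solve J(v) s = -F(v), then update v by s."""
    v = list(v0)
    for k in range(1, max_iter + 1):
        step = solve_linear(lorenz_jacobian(v), [-r for r in lorenz_rhs(v)])
        v = [vi + si for vi, si in zip(v, step)]
        if max(abs(s) for s in step) < tol:
            return v, k
    raise RuntimeError("Newton iteration did not converge")

steady, iterations = newton_system([8.0, 8.0, 26.0])
residual = max(abs(r) for r in lorenz_rhs(steady))
```

With these assumed parameters the nontrivial steady states are x = y = ±√(β(ρ − 1)), z = ρ − 1, so the iteration started at (8, 8, 26) should approach (√72, √72, 27).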

6. Concluding Remarks

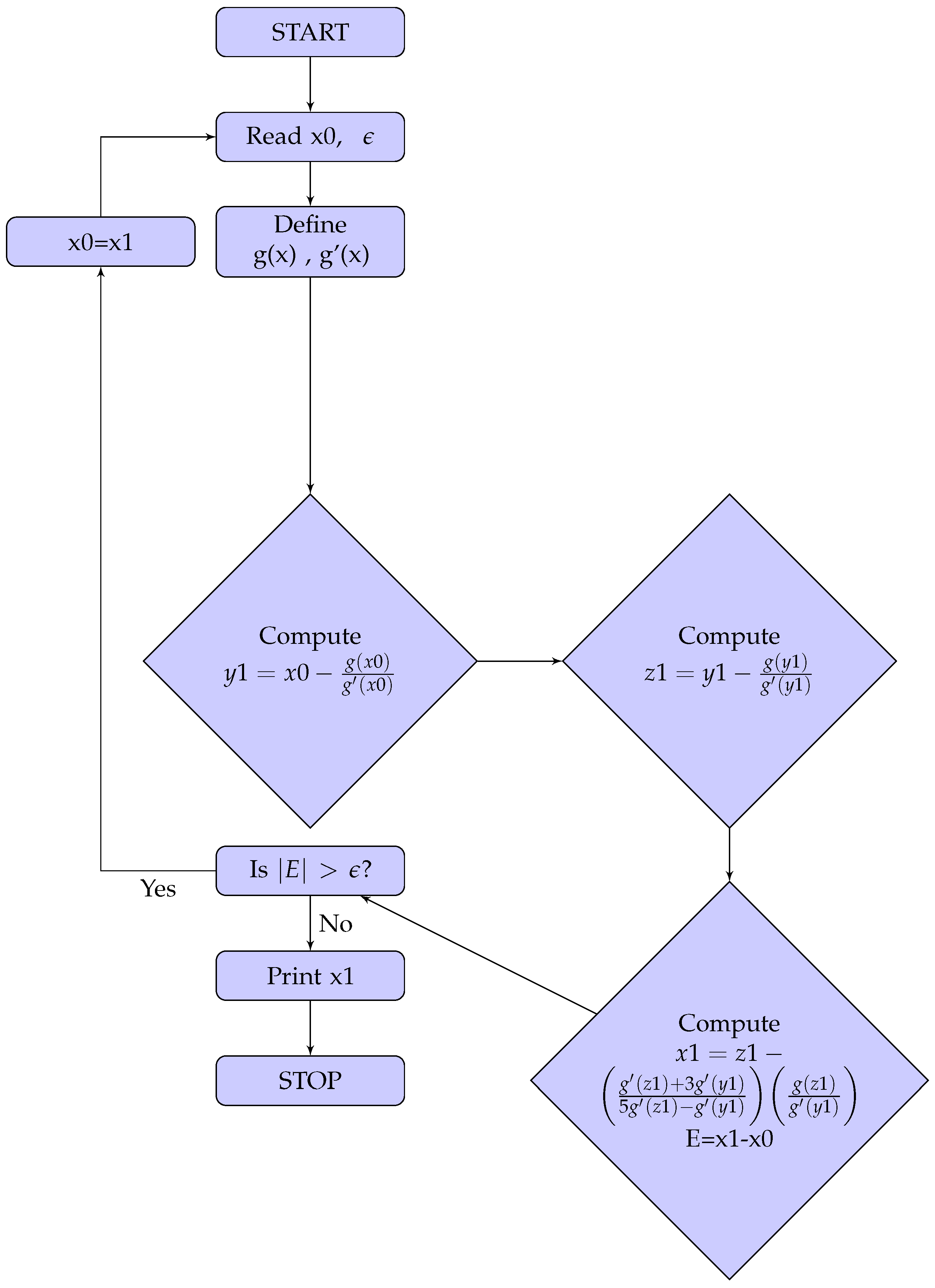

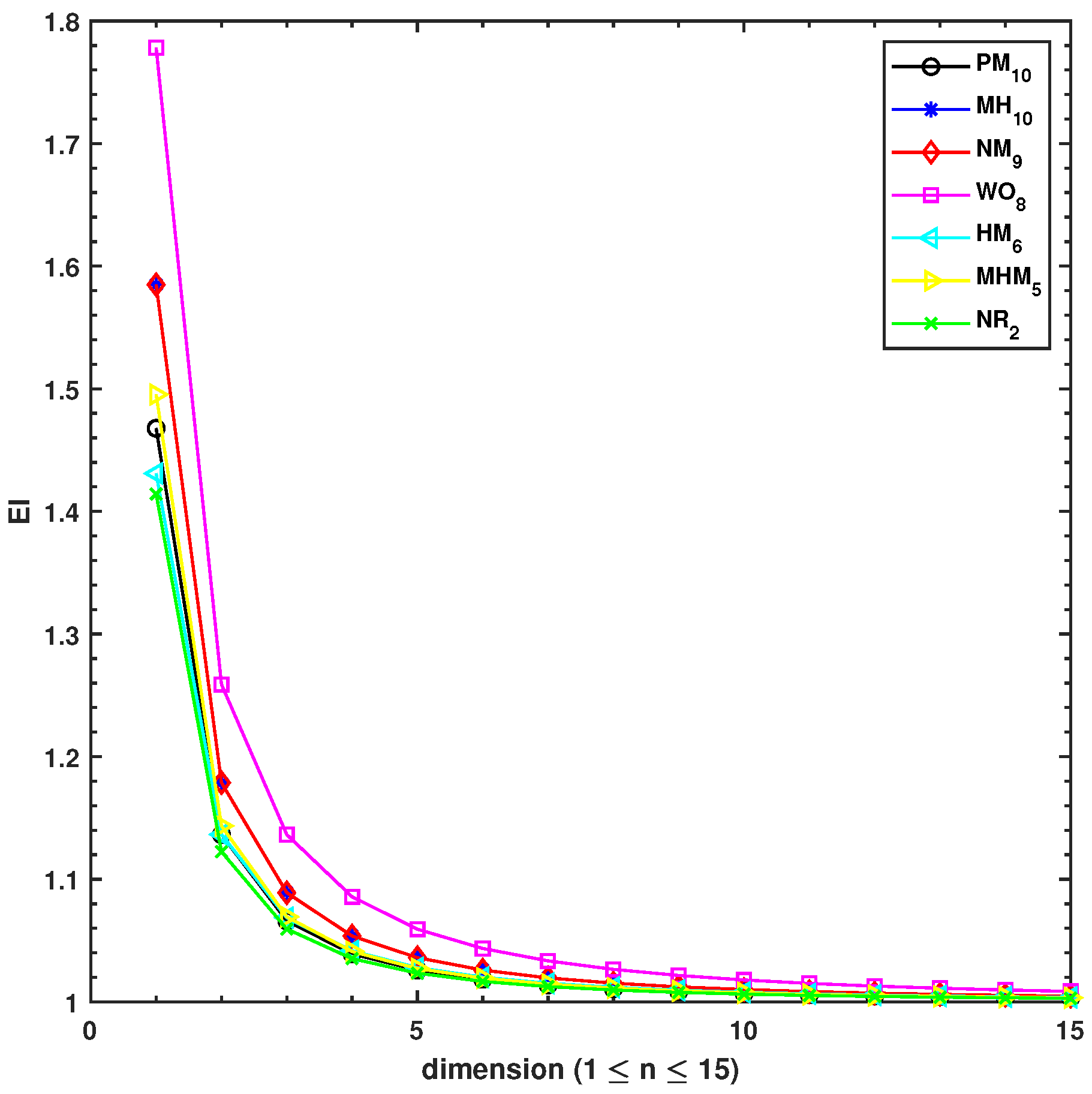

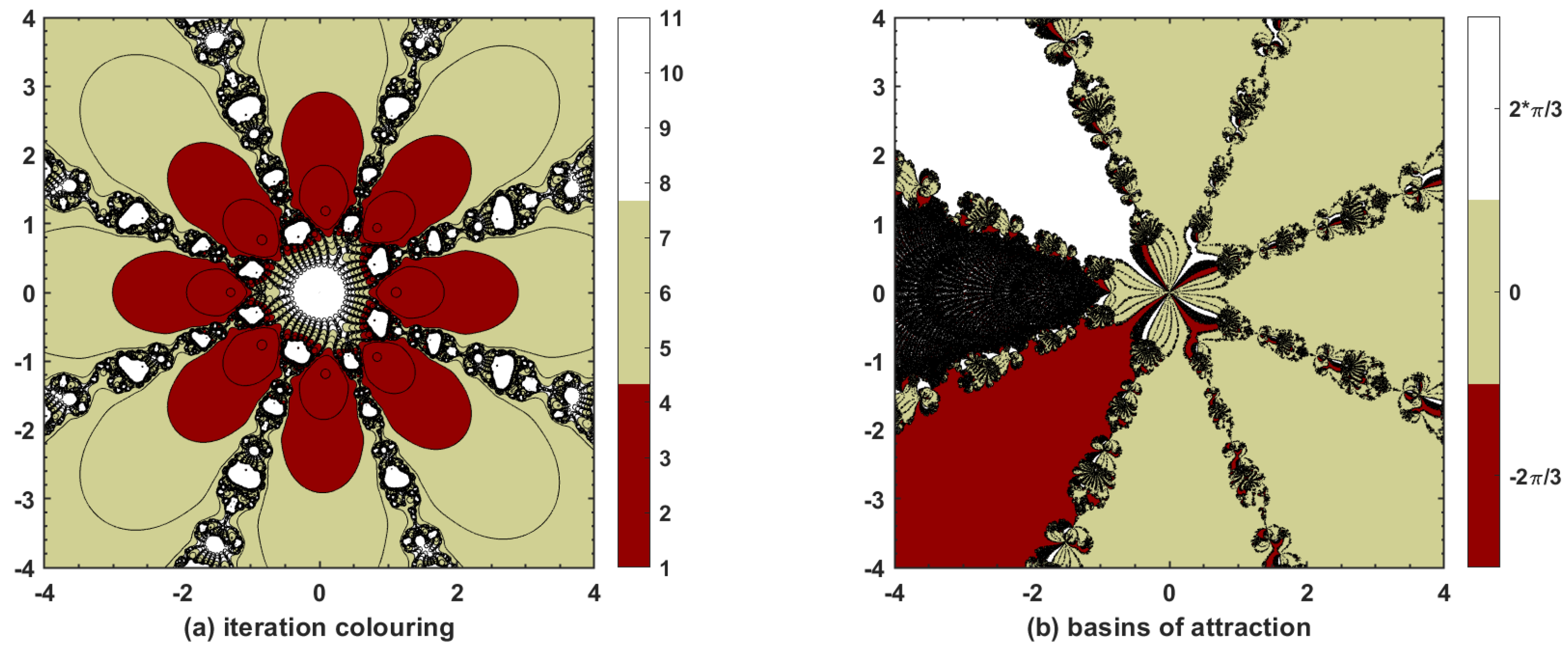

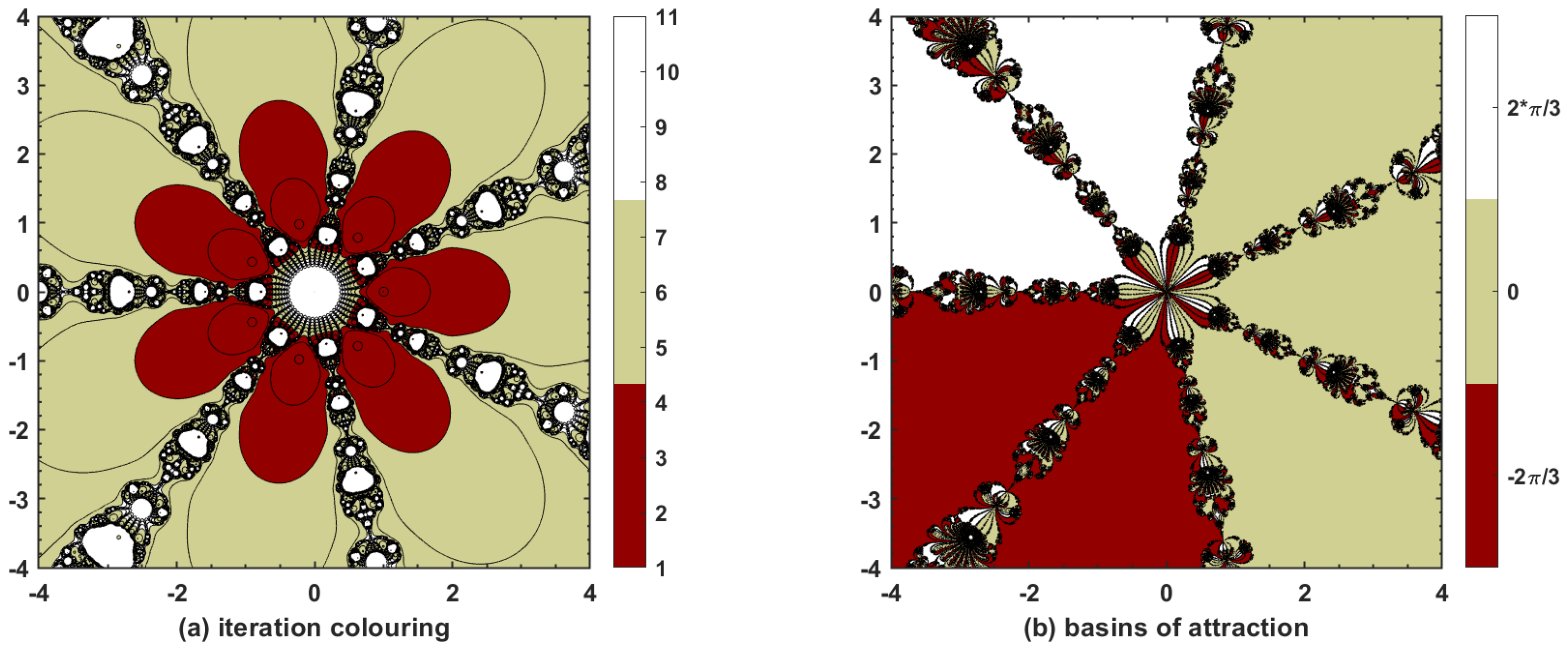

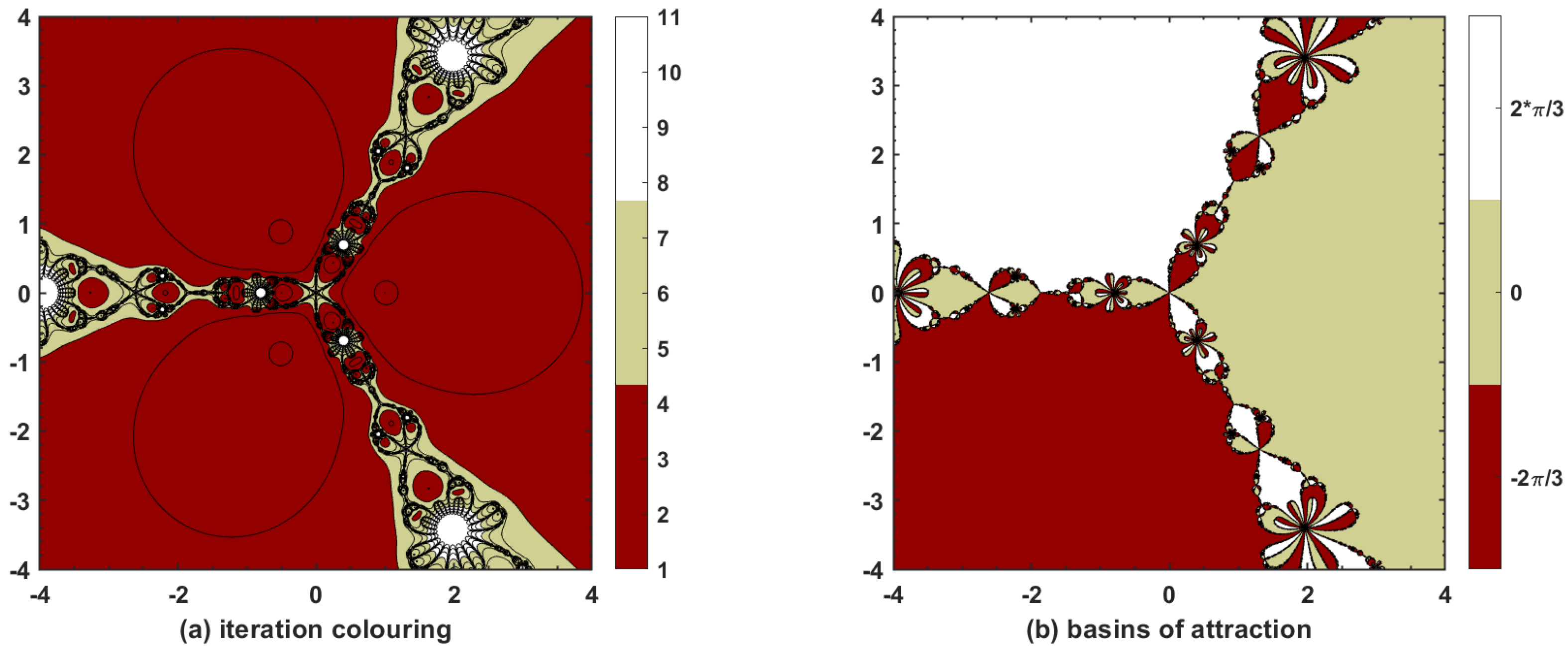

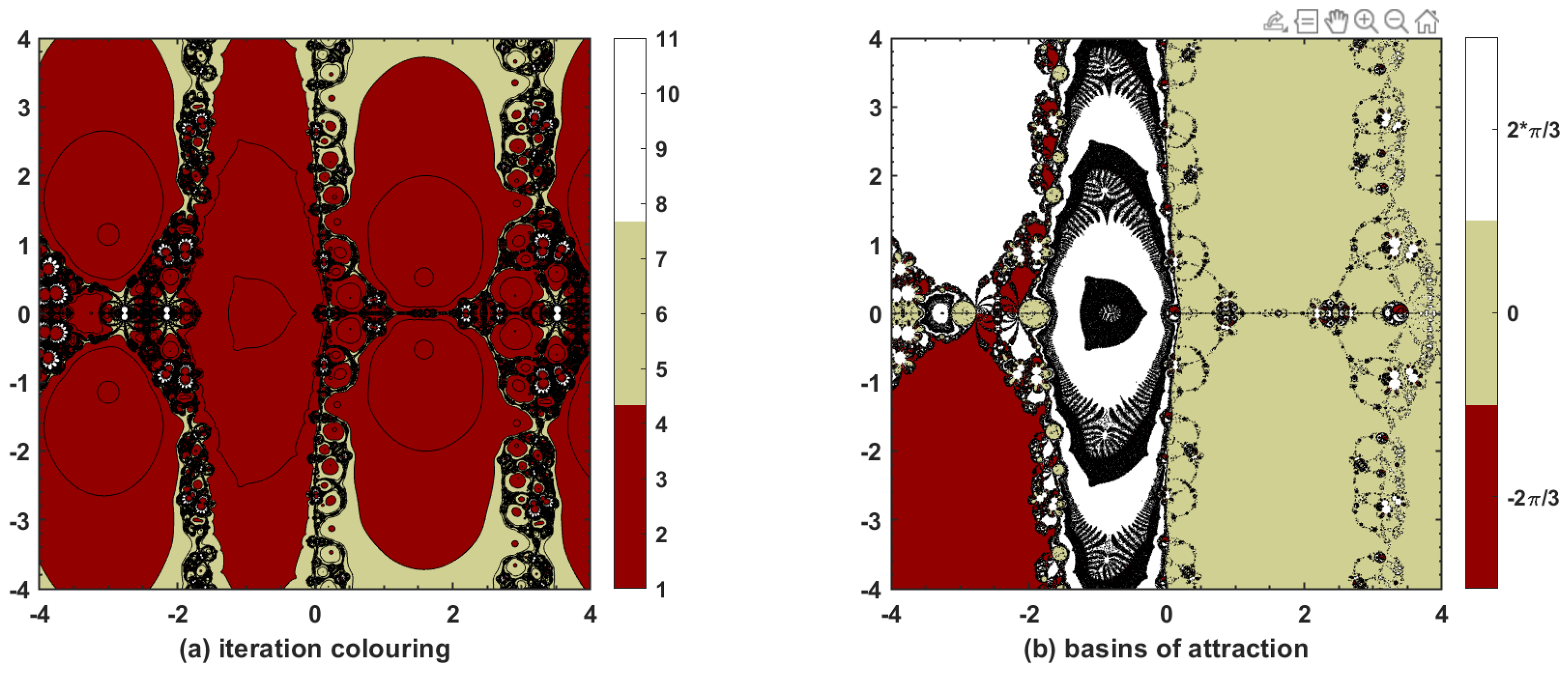

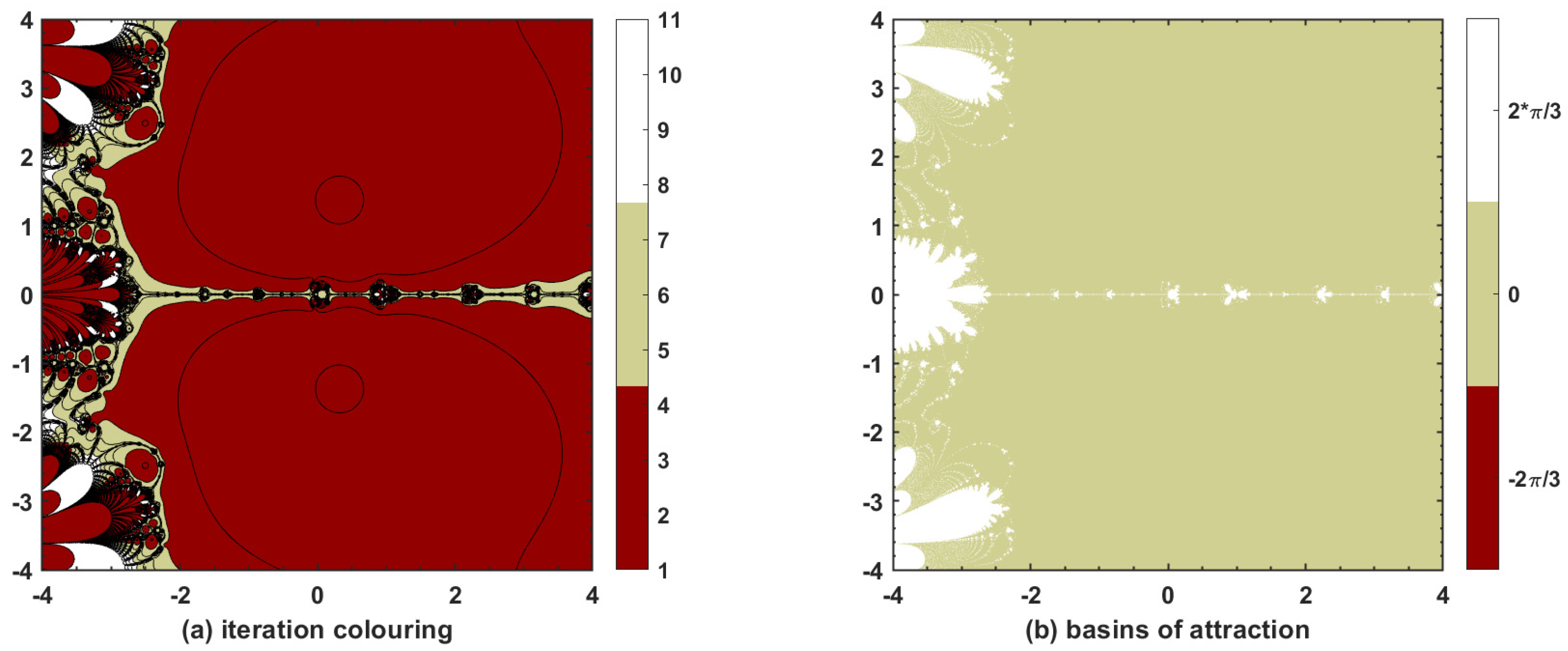

This study devises a new, highly effective three-step iterative method with tenth-order convergence. The convergence is proved theoretically via Taylor’s series expansion for single and multi-variable nonlinear equations, and the approximate computational order of convergence confirms these findings. Thus, the proposed method is applicable not only to single nonlinear equations but also to nonlinear systems. Moreover, dynamical aspects of the proposed method are explored with basins of attraction, which yield quite esthetic phase plane diagrams when the method is applied to complex-valued functions and indicate its stability when initial guesses are taken in the vicinity of the solution of the underlying nonlinear model. Finally, different types of nonlinear equations and systems, including those used in physical and natural sciences, are tested with the proposed method and with various well-known optimal and non-optimal methods in the sense of King–Traub. In most cases, the proposed method gives better results, particularly with respect to the number of iterations N needed to achieve the required accuracy, the ACOC, the absolute error, and the absolute functional value. It is also worth noting that the proposed method converged in every experiment reported here, irrespective of whether the initial guess lay near to or far from the approximate solution. Hence, the proposed method is a competitive iterative method with tenth-order convergence for solving nonlinear equations and systems.

We understand that methods of very high order are only of academic interest since approximations to the solutions of very high accuracy are not needed in practice. On the other hand, such methods are, to some extent, complicated and do not offer much of an increase in computational efficiency. Moreover, the method proposed in this article lies in the family of methods without memory, requiring the evaluation of three Jacobian matrices, and thereby becomes computationally expensive. To avoid computational complexity, we will propose, in future studies, a modification of the proposed method by replacing the first-order derivative with a suitable finite-difference approximation. In addition, the proposed method will also be analyzed for its semi-local convergence.
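The idea of replacing the first-order derivative with a finite-difference approximation can be illustrated, in the scalar case, with the classical Steffensen iteration, in which f'(x) is replaced by the forward difference (f(x + f(x)) − f(x)) / f(x). This is only a sketch of the general idea under that substitution, not the modification planned for the proposed method; the function name and the test equation x² − 2 = 0 are hypothetical.

```python
def steffensen(f, x0, tol=1e-12, max_iter=50):
    """Derivative-free Newton-type iteration (Steffensen's method)."""
    x = x0
    for k in range(max_iter):
        fx = f(x)
        if abs(fx) < tol:
            return x, k
        # Forward difference with step h = f(x) stands in for f'(x).
        slope = (f(x + fx) - fx) / fx
        if slope == 0:
            raise ZeroDivisionError("finite-difference slope vanished")
        x = x - fx / slope
    raise RuntimeError("iteration did not converge")

# Hypothetical scalar test equation with root sqrt(2).
root, steps = steffensen(lambda x: x * x - 2.0, 1.5)
```

Each step costs two function evaluations and no derivative evaluation, which is the kind of saving a Jacobian-free variant would aim for in the multidimensional setting.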