Abstract

Online learning has become increasingly prevalent in real-world applications, where data streams often comprise heterogeneous feature types (both nominal and numerical) and labels may not arrive synchronously with features. However, most existing online learning methods assume homogeneous data types and synchronous arrival of features and labels, which makes them insufficient for heterogeneous streams with asynchronous label feedback. To address these challenges, we propose a novel algorithm, termed Online Asynchronous Learning over Streaming Nominal Data (OALN), which maps heterogeneous data into a continuous latent space and leverages a model pool alongside a hint mechanism to manage asynchronous labels. Specifically, OALN is grounded in three core principles: (1) It utilizes a Gaussian mixture copula in the latent space to preserve class structure and numerical relationships, thereby addressing the encoding and relational learning challenges posed by mixed feature types. (2) It performs adaptive imputation through conditional covariance matrices to handle random missing values and feature drift, while incrementally updating copula parameters to accommodate dynamic changes in the feature space. (3) It incorporates a model pool and hint mechanism to efficiently process asynchronous label feedback. We evaluate OALN on twelve real-world datasets; under missing rates of 10% and 50%, its average cumulative error rates are 23.31% and 28.28%, respectively, and its average AUC scores are 0.7895 and 0.7433, the best results among the compared algorithms. Both theoretical analyses and extensive empirical studies confirm the effectiveness of the proposed method.

1. Introduction

Online learning [1,2,3,4,5] has achieved significant success in recent years across a wide range of domains, particularly in scenarios involving continuously arriving data streams. Unlike traditional batch learning methods, which require access to the entire dataset upfront, online learning incrementally updates models in real time as new data become available [6]. This characteristic substantially reduces computational and storage overheads, while providing notable adaptability to complex real-world applications, such as natural disaster monitoring in ecological systems [7], real-time credit card fraud detection [8], and skin disease diagnosis [9,10]. As a result, online learning has emerged as a pivotal technique for data stream analytics in the era of big data.

Existing online learning techniques are generally categorized into two paradigms: online static learning [11] and online dynamic learning [12]. Online static methods, having been developed earlier, have accumulated a substantial body of research and are valued for their simplicity, computational efficiency, and ease of deployment. By leveraging incremental gradient updates and regularization, these approaches effectively handle stable data distributions, particularly in resource-constrained environments [11]. In contrast, online dynamic learning emphasizes adaptability to non-stationary data distributions and evolving feature spaces [13]. Through feature mapping and embedding strategies, these methods dynamically adjust model parameters, thereby maintaining high predictive performance even as feature dimensions or data sources grow increasingly complex [14]. Furthermore, real-world data streams often contain mixed-type variables [15,16], introducing additional challenges to the learning process.

Nevertheless, despite their individual strengths, existing methods face significant limitations in complex real-world environments, particularly in addressing two key challenges. First, the dynamic evolution of mixed feature spaces—comprising both numerical and nominal attributes—often leads to changes in feature dimensionality [17,18], making it difficult for traditional methods to maintain predictive performance. Second, the asynchronous nature of data streams, in which features and labels arrive at irregular intervals and from diverse sources, frequently results in incomplete or delayed information that adversely affects model training [19,20,21]. Accordingly, the development of an effective online learning algorithm capable of simultaneously managing mixed-type data, evolving feature spaces, and asynchronous arrivals of features and labels has become a critical and urgent research challenge—one that motivates the primary objective of this study.

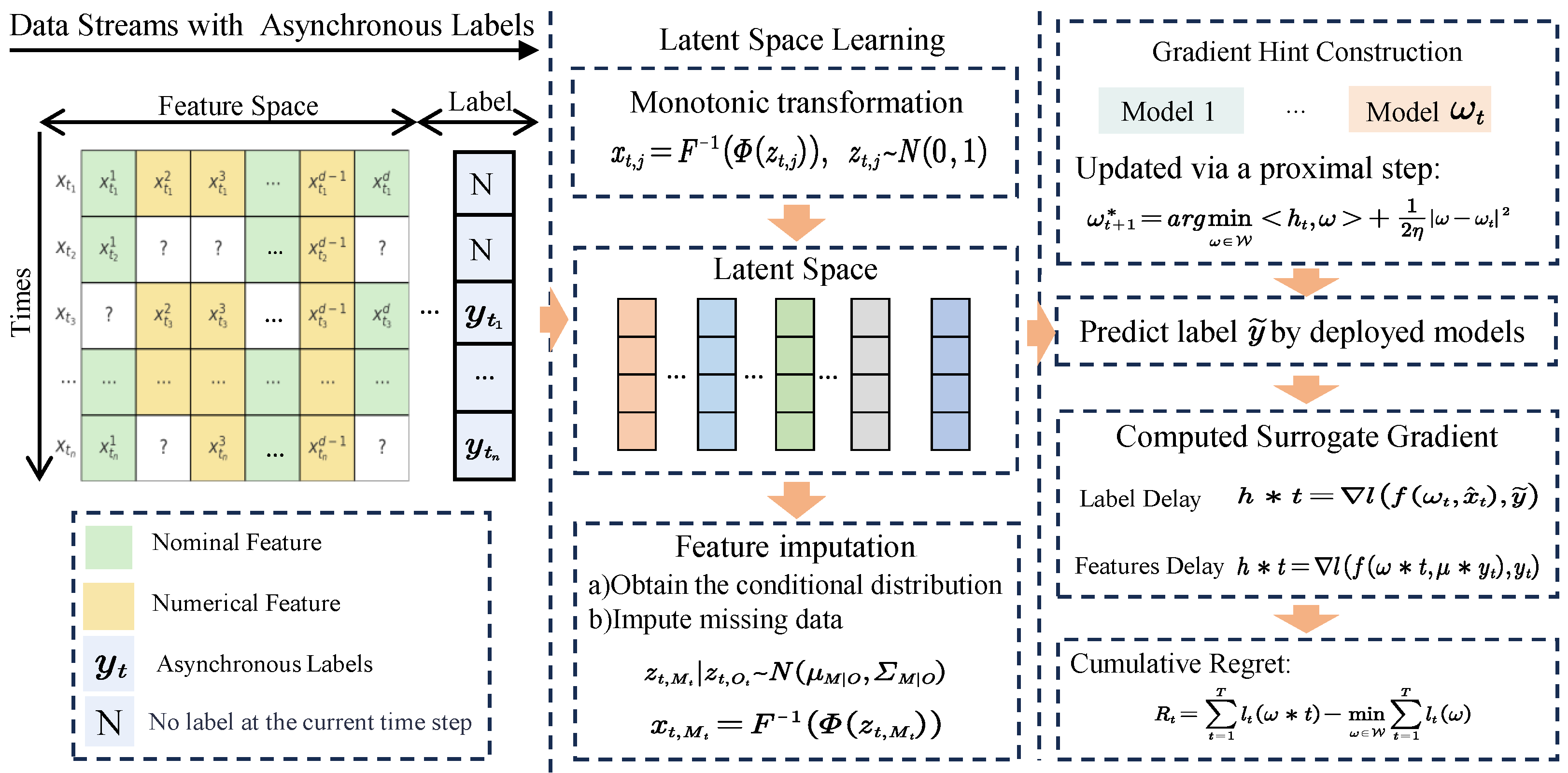

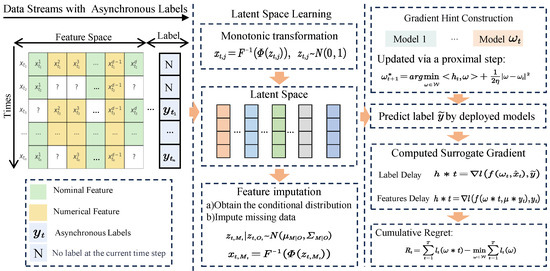

Our proposed method, Online Asynchronous Learning over Streaming Nominal Data (OALN), presents a unified framework designed to address missing data and asynchronous supervision in dynamic data streams through the integration of three key components. First, it incorporates a real-time imputation module that combines a Gaussian Copula model with an online expectation-maximization algorithm to jointly estimate feature distributions and impute missing values for both nominal and numerical attributes, thereby ensuring statistical consistency and completeness across heterogeneous feature spaces. Second, to maintain predictive robustness under evolving data characteristics, OALN trains multiple classifiers on the imputed feature space and integrates their predictions using adaptively updated ensemble weights. Third, it introduces a simulated delay mechanism to model the asynchronous arrival of features and labels, substantially enhancing the model’s resilience in real-world scenarios characterized by irregular and non-synchronized inputs. An overview of the proposed framework is presented in Figure 1.

Figure 1.

Flowchart of our OALN model.

To achieve the aforementioned advantages, this paper makes the following key contributions:

- (1) We formally identify and unify the challenges of evolving feature spaces, heterogeneous data types, and asynchronous label arrivals within a coherent online learning framework.

- (2) We propose a novel algorithm, termed OALN, which integrates real-time estimation of mixed-type feature distributions via an Extended Gaussian Copula model, coupled with an adaptive and incremental learning strategy tailored to dynamic and asynchronous data streams.

- (3) We design a comprehensive evaluation framework that jointly simulates evolving feature dimensions, mixed-type data, and asynchronous label availability, thereby enabling a rigorous assessment of OALN's robustness and practical applicability.

The remainder of this paper is organized as follows. Section 2 reviews the related work. Section 3 formalizes the problem setting. Section 4 introduces the construction of the latent feature space and the handling of asynchronous labels. Section 5 presents the experimental results and discussion. Finally, Section 6 concludes the paper and outlines directions for future research.

2. Related Work

This work is related to online static learning [11], online dynamic learning [12], and asynchronous learning methods [19,22,23]. As shown in Table 1, although extensive research has been conducted in the field of online learning [24,25,26,27], few studies have addressed scenarios involving incomplete feature spaces and the asynchronous arrival of mixed-type data streams. In real-world applications, data streams often arrive asynchronously with varying delays [21,28], leading to incomplete and dynamically evolving feature spaces, where conventional online learning methods demonstrate limited effectiveness. To tackle these challenges, this study introduces a novel online learning framework, termed OALN, which integrates the incremental updating capability of online static learning, the adaptability of online dynamic learning for continuously evolving feature spaces, and the robustness of asynchronous learning methods for handling irregularly arriving data. The subsequent sections will provide a comprehensive review of existing studies on online static learning, online learning in dynamic feature spaces, and asynchronous learning methods, highlighting their limitations in practical scenarios.

Table 1.

Comparison of previous methods with OALN. Blank cell: not mentioned; ✓: mentioned but no details or weak; ✓✓: mentioned with details or strong.

2.1. Online Static Learning

Online static learning is one of the earliest and most extensively studied paradigms in the field of online learning [11]. It assumes that data arrive sequentially over time while the underlying distribution remains relatively stable. Based on this assumption, existing methods employ incremental gradient updates and regularization techniques, allowing efficient updates to model parameters upon receiving each new data instance, with minimal computational overhead. For example, in scenarios such as embedded systems or real-time analytics platforms, online static learning algorithms are well-suited for delivering low-latency predictions and high throughput due to their inherently incremental nature.

Nonetheless, these methods assume that the data distribution remains unchanged and that the feature space is fixed and complete, conditions that are often violated in real-world settings [24,32]. In practice, data may arrive with irregular delays [28,33,34], exhibit random feature omissions, or involve dynamically evolving feature spaces with mixed data types [35]. Under such circumstances, traditional online static learning models struggle to adapt, leading to significant declines in predictive performance, which prevents them from meeting the stringent stability and accuracy requirements of complex real-world scenarios. In contrast, OALN integrates mixed-feature modeling and real-time imputation with incremental updates, thereby maintaining stable predictive accuracy even in incomplete or evolving feature spaces.

2.2. Online Learning in Dynamic Feature Space

Online learning in dynamic feature spaces focuses on adapting models to feature sets that expand or contract as new data arrive [36]. Such methods typically employ mapping or embedding strategies to reconcile discrepancies between old and new feature dimensions, allowing the learned parameters to be shared or transferred [37]. Some approaches use linear transformations to align features, while others adopt nonlinear or kernel-based strategies to handle complex mappings more effectively [12,14]. By dynamically adjusting model parameters as the feature space evolves [15], these methods aim to maintain strong predictive accuracy despite shifting or expanding feature sets.

Although current approaches to dynamic feature space learning better accommodate the variability in incoming data, they often focus primarily on numerical features or assume a relatively stable marginal distribution [38]. When applied to more heterogeneous contexts (e.g., nominal features or missing data), these methods may fail to preserve critical structural information that cannot be captured by linear or simplified mappings [20,28]. Moreover, balancing model complexity with adaptation frequency remains a challenge: overly frequent model updates can incur high computational costs, while infrequent updates may degrade accuracy as the feature space evolves. In contrast, OALN leverages real-time estimation of mixed-feature distributions and adaptive incremental updates, enabling efficient and accurate learning even under frequent changes in feature dimensionality.

2.3. Asynchronous Learning Methods

Asynchronous learning methods are designed to handle data streams that arrive at irregular intervals or with unpredictable delays, allowing the model to update independently of a fixed scheduling mechanism [22,39]. These methods often adopt decentralized or distributed architectures, in which partial updates are accumulated and subsequently integrated into a global model once the data becomes available [40,41]. By decoupling training from a synchronized data arrival schedule, asynchronous approaches offer robustness against real-world contingencies such as network latencies, sensor malfunctions, or uneven data rates [19,42].

Despite their robustness to timing irregularities, many asynchronous learning methods still assume a relatively complete and stable feature set and expect labels to be available in tandem with the data [43,44]. In practice, delayed or missing labels, along with mixed data types such as nominal and numerical features, can significantly degrade the performance of these methods [31,39,45]. Moreover, coordination overhead may become a bottleneck in large-scale scenarios, where numerous asynchronous model updates must be merged without losing critical information or introducing inconsistencies [40]. In contrast, our OALN algorithm integrates asynchronous updates with mixed-feature adaptive modeling, allowing the model to learn from partial data without requiring full synchronization, while maintaining predictive robustness under unpredictable conditions.

3. Problem Statement

OALN focuses on binary classification and extends straightforwardly to multiclass scenarios via One-vs-Rest [46] or One-vs-One [47] strategies. Define the input sequence $\{(\mathbf{x}_t, y_t)\}_{t=1}^{T}$ as instances arriving in real time, where $\mathbf{x}_t \in \mathbb{R}^{d_t}$ denotes the $d_t$-dimensional feature vector recorded in instance $t$, accompanied by a corresponding label $y_t \in \{-1, +1\}$. Moreover, due to practical constraints in data collection and feedback, the label corresponding to $\mathbf{x}_t$ may not be revealed until time $t + \tau_t$, where $\tau_t \geq 0$. For mixed-type streaming data, we write $\mathbf{x}_t = [\mathbf{x}_t^{\mathrm{nom}}, \mathbf{x}_t^{\mathrm{num}}]$, where $\mathbf{x}_t^{\mathrm{nom}}$ and $\mathbf{x}_t^{\mathrm{num}}$ represent the nominal and numerical variables, respectively. At each round $t$, the online learner makes a prediction with its current weights $\mathbf{w}_t$ based on the currently available features. Once the label $y_t$ arrives at time $t + \tau_t$, the loss is computed, and the model is updated accordingly. Our objective is to identify a sequence of functions $f_1, \ldots, f_T$ that can effectively handle both the evolving feature space and the asynchronous arrival of labels. Formally, we define our empirical risk minimization (ERM) objective as:

$$\min_{f_1, \ldots, f_T} \; \frac{1}{T} \sum_{t=1}^{T} \ell\big(f_t(\mathbf{x}_t),\, y_t\big),$$

where $\ell(\cdot, \cdot)$ denotes the loss function, measuring the discrepancy between the predicted output and the ground-truth label; $y_t$ is the true label corresponding to $\mathbf{x}_t$, which is collected at time step $t + \tau_t$; and $\mathbf{x}_t$ is the corresponding input feature vector. By continually updating the online learner, this framework aims to accommodate the dynamic feature space and asynchronous feedback in data streams, ultimately improving predictive performance on evolving data sequences.
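The protocol above (predict at round $t$, reveal $y_t$ only at $t + \tau_t$, then update) can be simulated with a small priority queue. The sketch below is a minimal illustration under our own assumptions: a linear model, the logistic loss, plain gradient steps, and a caller-supplied list of integer delays. The names `run_delayed_stream` and `logistic_grad` are hypothetical and not part of OALN; the sketch contains none of OALN's imputation or hint machinery and only shows where delayed labels enter the update loop.

```python
import heapq
import math


def logistic_grad(w, x, y):
    """Gradient of log(1 + exp(-y * <w, x>)) with respect to w, for y in {-1, +1}."""
    margin = y * sum(wi * xi for wi, xi in zip(w, x))
    margin = max(-50.0, min(50.0, margin))  # clamp to keep math.exp finite
    coef = -y / (1.0 + math.exp(margin))
    return [coef * xi for xi in x]


def run_delayed_stream(stream, delays, eta=0.1):
    """Predict at time t with the current model; learn from y_t only at t + tau_t.

    stream: list of (x_t, y_t) pairs; delays: list of non-negative integers tau_t.
    Returns the final weights and the cumulative error rate of the predictions.
    """
    w = [0.0] * len(stream[0][0])
    pending = []  # min-heap of (arrival_time, t, x_t, y_t)
    mistakes = 0
    for t, ((x, y), tau) in enumerate(zip(stream, delays)):
        # 1) Predict with whatever model is available right now.
        score = sum(wi * xi for wi, xi in zip(w, x))
        y_hat = 1 if score >= 0 else -1
        mistakes += int(y_hat != y)  # bookkeeping only; the learner has not seen y yet
        # 2) Schedule the label to become visible tau steps later.
        heapq.heappush(pending, (t + tau, t, x, y))
        # 3) Apply one gradient step for every label that has arrived by time t.
        while pending and pending[0][0] <= t:
            _, _, x_old, y_old = heapq.heappop(pending)
            g = logistic_grad(w, x_old, y_old)
            w = [wi - eta * gi for wi, gi in zip(w, g)]
    return w, mistakes / len(stream)
```

Labels whose arrival time falls after the last round are simply never used, which mirrors the case where a label is never received.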

4. The Proposed Approach

To effectively address the challenges posed by mixed-type, incomplete input features and delayed label feedback in online learning, our approach is composed of two key modules. First, we propose a latent feature encoding and imputation mechanism (Section 4.1) based on the Extended Gaussian Copula, which transforms partially observed, heterogeneous inputs into complete and statistically consistent representations. Second, we design an online learning algorithm (Section 4.2) tailored for asynchronous labels, which utilizes hint-based updates to adaptively learn even when full supervision is not immediately available. Together, these components ensure robustness under both input uncertainty and delayed feedback.

4.1. Latent Feature Encoding and Imputation

In real-time machine learning systems, data often arrive as incomplete streams. Such data typically contain mixed-type features, encompassing nominal (categorical), ordinal, and continuous variables [17,30]. Compounding this challenge, data collection in practice is imperfect, frequently leading to missing entries due to latency, hardware failure, user interruption, or privacy-preserving protocols. These missing values can occur both in the structured input $\mathbf{x}_t$ and in the associated labels $y_t$.

Our learning objective for OALN is to train an online model that maps a sequence of input instances to predictions, even when such instances are incomplete or partially observed. To support accurate and robust model training, we must therefore construct a feature representation module that can handle heterogeneous feature types; missing values in arbitrary dimensions; and statistical dependencies across features.

To address this need, we propose a probabilistic latent encoding framework based on the Extended Gaussian Copula (EGC) model, which serves as a front-end encoder for the online learner. We select the Extended Gaussian Copula for three reasons: it formally separates the marginal distributions from the underlying dependence structure; its closed-form CDF transformations (bijective for continuous features) yield statistically coherent and semantically consistent imputations; and its covariance estimation is robust and amenable to low-rank or diagonal approximations, which substantially reduce storage and computational overhead in high-dimensional settings. Together, these properties provide a theoretically sound and practically efficient encoding mechanism for online learning. This module takes partially observed mixed-type feature vectors $\mathbf{x}_t$ as input and outputs fully specified, semantically consistent, and distribution-aware representations $\hat{\mathbf{x}}_t$, which can be safely used in any downstream predictive task.

- Latent Mapping of Mixed-Type Features. To enable unified modeling of heterogeneous input data $\mathbf{x}_t$, which may consist of categorical, ordinal, and continuous variables, we adopt a latent variable framework in which each observed feature is represented as a transformation of a latent Gaussian variable. The core assumption is that there exists a latent vector $\mathbf{z}$ from which the observed values are generated via a decoding function specific to the type of each variable.

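For a categorical feature $x_j$ with $K_j$ categories, we define a latent sub-vector $\mathbf{z}_j = (z_{j,1}, \ldots, z_{j,K_j})$ and apply the Gaussian-max transformation:

$$x_j = \arg\max_{k \in \{1, \ldots, K_j\}} \big(z_{j,k} + \mu_{j,k}\big),$$

where $\boldsymbol{\mu}_j = (\mu_{j,1}, \ldots, \mu_{j,K_j})$ is a learnable shift vector. This operation retains the unordered nature of nominal variables without imposing an artificial structure, while also allowing the modeling of arbitrary categorical distributions through the learned biases $\boldsymbol{\mu}_j$.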
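To see how the shift vector shapes the categorical distribution under the Gaussian-max transformation, the following sketch decodes latent draws with an argmax over $z_{j,k} + \mu_{j,k}$ and estimates the resulting category frequencies by Monte Carlo. For illustration only, it assumes the latent sub-vector is standard normal with identity covariance; in the EGC model the sub-vector is part of the joint latent vector. `gaussian_max_decode` and `category_frequencies` are hypothetical helper names.

```python
import random


def gaussian_max_decode(z, mu):
    """Return the 0-based category k that maximizes z_k + mu_k."""
    scores = [zk + mk for zk, mk in zip(z, mu)]
    return max(range(len(scores)), key=scores.__getitem__)


def category_frequencies(mu, n_samples=20000, seed=0):
    """Monte Carlo estimate of P(x = k) when z ~ N(0, I) (illustrative assumption)."""
    rng = random.Random(seed)
    counts = [0] * len(mu)
    for _ in range(n_samples):
        z = [rng.gauss(0.0, 1.0) for _ in mu]
        counts[gaussian_max_decode(z, mu)] += 1
    return [c / n_samples for c in counts]
```

With a zero shift every category receives roughly one third of the mass; raising one entry of `mu` moves mass toward that category without imposing any ordering on the others.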

For ordinal or continuous features $x_j$, we adopt a monotonic transformation based on the Gaussian Copula [48]:

$$x_j = F_j^{-1}\big(\Phi(z_j)\big),$$

where $F_j$ is the empirical cumulative distribution function (CDF) of the observed data and $\Phi$ is the standard normal CDF [49]. In an offline setting, $F_j$ can be obtained by sorting the full dataset and directly accumulating quantiles; however, in an online streaming environment where it is infeasible to retain all historical samples, one typically discretizes the variable's domain into bins and maintains the count per bin along with the total sample count. Upon arrival of each new sample, only the corresponding bin count and the overall count are incrementally updated, and the empirical CDF at any value is estimated by dividing the cumulative bin counts below that value by the current total. This scheme supports constant- or logarithmic-time updates, satisfying the real-time requirements of high-throughput data streams while approximately preserving the original marginal distribution. The transformation thus embeds data into a latent Gaussian space without altering its marginal properties.
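The streaming estimate of $F_j$ described above (fixed-width bins, per-bin counts, a running total) and the normal-score map $z_j = \Phi^{-1}(F_j(x_j))$ can be sketched as follows. The bin range, the number of bins, the clamping of out-of-range values into the edge bins, and the clipping constant `eps` are our assumptions; `StreamingBinnedCDF` is a hypothetical name. Python's standard `statistics.NormalDist` supplies $\Phi^{-1}$.

```python
from statistics import NormalDist


class StreamingBinnedCDF:
    """Fixed-width histogram approximation of a marginal CDF F_j (sketch)."""

    def __init__(self, lo, hi, n_bins=50):
        self.lo, self.hi, self.n_bins = lo, hi, n_bins
        self.width = (hi - lo) / n_bins
        self.counts = [0] * n_bins
        self.total = 0

    def _bin(self, x):
        # Values outside [lo, hi] are clamped into the first or last bin.
        b = int((x - self.lo) / self.width)
        return min(max(b, 0), self.n_bins - 1)

    def update(self, x):
        # O(1): increment one bin counter and the running total.
        self.counts[self._bin(x)] += 1
        self.total += 1

    def cdf(self, x):
        # Fraction of samples in bins up to and including the bin of x.
        # This prefix sum is O(n_bins); a Fenwick tree would make it O(log n_bins).
        if self.total == 0:
            return 0.5
        return sum(self.counts[: self._bin(x) + 1]) / self.total

    def to_latent(self, x, eps=1e-3):
        # z = Phi^{-1}(F(x)); clipping keeps inv_cdf away from 0 and 1 (our assumption).
        p = min(max(self.cdf(x), eps), 1.0 - eps)
        return NormalDist().inv_cdf(p)
```

The update touches a single counter, so it is constant time; only the CDF query depends on the number of bins, which is where a tree-structured counter would bring the logarithmic bound mentioned in the text.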

By applying these transformations across all variables, we obtain a full latent representation $\mathbf{z} \in \mathbb{R}^{D}$, where $D$ is the sum of the dimensions required for each variable's encoding. This provides a consistent and information-rich representation of the instance, regardless of missing values.

- Joint Latent Modeling and Dependency Structure. After constructing the latent representation for all input variables, we assume that the complete latent vector follows a multivariate Gaussian distribution, $\mathbf{z} \sim \mathcal{N}(\mathbf{0}, \Sigma)$, where $\Sigma \in \mathbb{R}^{D \times D}$ captures all pairwise dependencies among the latent sub-components. This joint distribution enables the model to learn global correlations between variables of different types, including cross-type dependencies such as correlations between categorical and continuous variables.

Unlike heuristic encoding methods that treat features independently or separately model each type, the EGC framework leverages the joint covariance structure to support statistical coupling between all dimensions. This is particularly important in the presence of missing data, as the unobserved variables can be inferred based on their conditional relationship to the observed ones. Furthermore, the use of empirical marginals in the decoding process ensures that the generated values maintain fidelity to the original data distribution, even when imputed.

This formulation bridges the gap between traditional multivariate Gaussian models (which are not suitable for categorical data) and purely non-parametric imputation methods (which lack a principled latent structure). The result is a model that is both expressive and grounded in probabilistic inference, making it especially well-suited for online learning tasks with noisy, sparse, or mixed-type inputs.

- Probabilistic Inference and Feature Imputation. Given an instance $\mathbf{x}_t$ with observed entries indexed by $\mathcal{O}$ and missing entries indexed by $\mathcal{M}$, the goal is to infer the missing values using the posterior distribution of the corresponding latent variables $\mathbf{z}_{\mathcal{M}}$, conditioned on the observed latent components $\mathbf{z}_{\mathcal{O}}$.

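The first step is to map the observed variables into the latent space. For ordinal and continuous features, this is achieved by applying the inverse transformation $z_j = \Phi^{-1}\big(F_j(x_j)\big)$. For a categorical variable, the observed value $x_j = c$ corresponds to an inequality constraint region in $\mathbf{z}_j$, defined by

$$\big\{\mathbf{z}_j : z_{j,c} + \mu_{j,c} > z_{j,k} + \mu_{j,k} \ \text{for all } k \neq c\big\}.$$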

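This results in a partially observed latent vector $\mathbf{z}_{\mathcal{O}}$, from which we compute the posterior of the missing latent dimensions using standard Gaussian conditioning:

$$\mathbf{z}_{\mathcal{M}} \mid \mathbf{z}_{\mathcal{O}} \sim \mathcal{N}\big(\Sigma_{\mathcal{M}\mathcal{O}} \Sigma_{\mathcal{O}\mathcal{O}}^{-1} \mathbf{z}_{\mathcal{O}},\; \Sigma_{\mathcal{M} \mid \mathcal{O}}\big),$$

where $\Sigma_{\mathcal{M} \mid \mathcal{O}} = \Sigma_{\mathcal{M}\mathcal{M}} - \Sigma_{\mathcal{M}\mathcal{O}} \Sigma_{\mathcal{O}\mathcal{O}}^{-1} \Sigma_{\mathcal{O}\mathcal{M}}$.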

After obtaining the conditional distribution, we perform imputation by drawing samples (for multiple imputation) or using the conditional mean (for single imputation), and then mapping the latent variables back to the data space. For continuous and ordinal features, this is achieved via $\hat{x}_j = F_j^{-1}\big(\Phi(z_j)\big)$; for categorical variables, the imputed class is determined by the argmax operation $\hat{x}_j = \arg\max_{k} \big(z_{j,k} + \mu_{j,k}\big)$.

The EGC model thus supports two practical modes: (1) Single imputation, which is efficient and appropriate for real-time prediction tasks where one consistent input is required. (2) Multiple imputation, which is valuable when capturing uncertainty is important, such as in Bayesian learning or ensemble settings.
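A minimal NumPy sketch of the conditioning step and the two imputation modes follows. It assumes $\mathbf{z} \sim \mathcal{N}(\mathbf{0}, \Sigma)$ and that every observed coordinate has already been mapped to a point value in latent space (as for continuous and ordinal features); it does not model the inequality regions used for observed categorical entries. The helper names `condition_gaussian` and `impute_latent` are hypothetical.

```python
import numpy as np


def condition_gaussian(sigma, z, observed):
    """Conditional law of z_M given z_O when z ~ N(0, sigma).

    Returns (missing indices, conditional mean, conditional covariance).
    """
    z = np.asarray(z, dtype=float)
    observed = np.asarray(observed, dtype=bool)
    o = np.flatnonzero(observed)
    m = np.flatnonzero(~observed)
    s_oo = sigma[np.ix_(o, o)]
    s_mo = sigma[np.ix_(m, o)]
    s_mm = sigma[np.ix_(m, m)]
    # K = Sigma_MO Sigma_OO^{-1}, obtained with a linear solve (Sigma_OO is symmetric).
    k = np.linalg.solve(s_oo, s_mo.T).T
    mean = k @ z[o]
    cov = s_mm - k @ s_mo.T  # Sigma_MM - Sigma_MO Sigma_OO^{-1} Sigma_OM
    return m, mean, cov


def impute_latent(sigma, z, observed, n_draws=0, seed=0):
    """Single imputation (n_draws == 0) or multiple imputation (n_draws > 0)."""
    m, mean, cov = condition_gaussian(sigma, z, observed)
    filled = np.array(z, dtype=float)
    if n_draws == 0:
        filled[m] = mean  # conditional mean
        return filled
    rng = np.random.default_rng(seed)
    draws = rng.multivariate_normal(mean, cov, size=n_draws)
    out = np.tile(filled, (n_draws, 1))
    out[:, m] = draws  # one posterior draw per row
    return out
```

Imputed latent coordinates would then be decoded with $F_j^{-1}(\Phi(\cdot))$ for continuous and ordinal features or with the argmax rule for categorical ones. Using `np.linalg.solve` avoids forming $\Sigma_{\mathcal{O}\mathcal{O}}^{-1}$ explicitly, which is cheaper and numerically more stable.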

By combining expressive latent modeling with exact Gaussian inference, our approach provides a principled and effective mechanism for handling missing features in online learning scenarios. This imputed feature vector will serve as the input to the label-delayed learning module described in the next section.

4.2. Handling Asynchronous Labels

The central challenge in asynchronous online learning lies in the absence of immediate supervision [31]. Instead of deferring updates until true labels arrive, we propose a paradigm shift: enable timely model updates by constructing informative surrogate gradients using whatever partial information is currently available. This simple but powerful idea—learning through approximate signals—forms the foundation of our OALN and enables provably effective learning even under severely delayed or missing labels.

- Hint-Driven Online Learning under Delayed Supervision. In many practical online learning scenarios, full instance–label pairs are not observed synchronously. Specifically, at time step $t$, the learner receives a (possibly imputed) feature vector $\mathbf{x}_t$, while the corresponding ground-truth label $y_t$ may only arrive after an unknown delay $\tau_t$, or may be missing entirely due to supervision dropout. This asynchronous label setting violates the standard assumption of immediate feedback, rendering conventional update rules such as $\mathbf{w}_{t+1} = \mathbf{w}_t - \eta \nabla_{\mathbf{w}} \ell\big(f(\mathbf{x}_t; \mathbf{w}_t), y_t\big)$ inapplicable when $y_t$ is unavailable [50]. Here, $\mathbf{w}_t$ denotes the model parameter at time $t$, $\eta$ is the learning rate, $f$ is the prediction function, and $\ell$ is a convex loss function, such as the logistic or hinge loss.

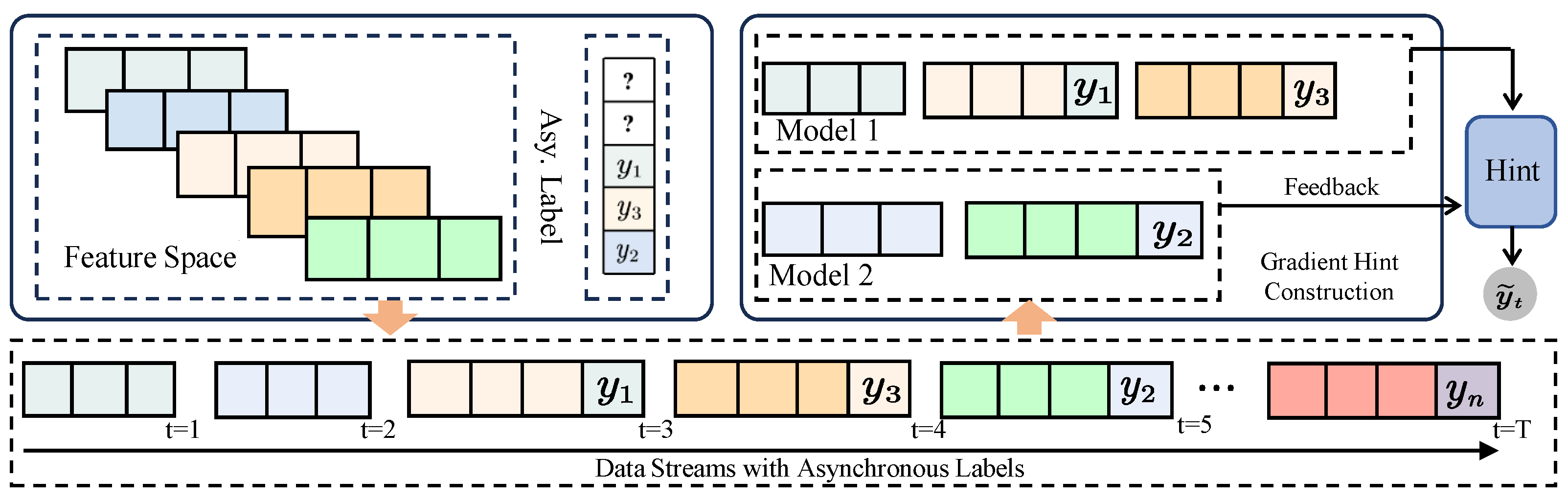

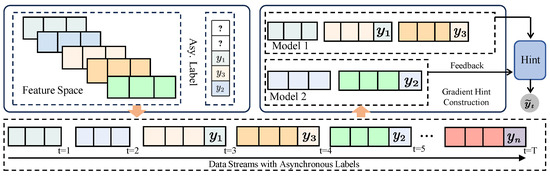

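To overcome this limitation, we propose a general-purpose solution based on gradient hint construction, whose general principle is shown in Figure 2. At each time $t$, the learner forms a surrogate gradient vector $\mathbf{h}_t$, which approximates the true gradient $\mathbf{g}_t = \nabla_{\mathbf{w}} \ell\big(f(\mathbf{x}_t; \mathbf{w}_t), y_t\big)$ using partially observed information. The model is then updated via a proximal step:

$$\mathbf{w}_{t+1} = \arg\min_{\mathbf{w} \in \mathcal{W}} \Big\{ \eta \langle \mathbf{h}_t, \mathbf{w} \rangle + \tfrac{1}{2} \|\mathbf{w} - \mathbf{w}_t\|_2^2 \Big\},$$

where $\mathcal{W}$ is a compact convex feasible set, and $\langle \cdot, \cdot \rangle$ denotes the standard inner product.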

Figure 2.

Handling asynchronous labels via hint-driven base learners in OALN. The data stream is divided into sub-sequences based on the availability of features and labels. Each base learner updates using surrogate gradients constructed from partial observations, enabling timely learning under delayed or missing supervision.

Because the surrogate loss is linearized, this update has a closed form: the minimizer is the Euclidean projection $\mathbf{w}_{t+1} = \Pi_{\mathcal{W}}(\mathbf{w}_t - \eta \mathbf{h}_t)$, so each step is a projected gradient step that balances the descent direction $\mathbf{h}_t$ against proximity to the current model $\mathbf{w}_t$. When the actual label $y_t$ becomes available, the learner can retroactively refine the model or use the label to improve future hints. This mechanism naturally interpolates between different feedback regimes: when $\mathbf{h}_t = \mathbf{g}_t$, it recovers full-information (projected) gradient descent; when $\mathbf{h}_t = \mathbf{0}$, it performs no update. Such flexibility enables learning under irregular, delayed, and partial supervision, which is common in streaming and edge-intelligence settings.
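Completing the square, $\eta\langle \mathbf{h}_t, \mathbf{w}\rangle + \tfrac{1}{2}\|\mathbf{w} - \mathbf{w}_t\|^2 = \tfrac{1}{2}\|\mathbf{w} - (\mathbf{w}_t - \eta\mathbf{h}_t)\|^2 + \text{const}$, so the proximal step reduces to projecting $\mathbf{w}_t - \eta\mathbf{h}_t$ onto $\mathcal{W}$. The sketch below makes this concrete for one assumed feasible set, an $\ell_2$ ball of radius `radius`; the text does not fix $\mathcal{W}$, and `project_l2_ball` and `proximal_hint_step` are hypothetical names.

```python
import math


def project_l2_ball(w, radius):
    """Euclidean projection onto {w : ||w||_2 <= radius}."""
    norm = math.sqrt(sum(wi * wi for wi in w))
    if norm <= radius:
        return list(w)
    return [wi * radius / norm for wi in w]


def proximal_hint_step(w, h, eta, radius):
    """argmin over the L2 ball of eta * <h, w'> + 0.5 * ||w' - w||^2.

    Equivalent to a projected gradient step taken along the hint h.
    """
    return project_l2_ball([wi - eta * hi for wi, hi in zip(w, h)], radius)
```

Passing a zero hint leaves an in-set model unchanged, and passing the true gradient gives an ordinary projected gradient step, matching the two regimes discussed above.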

- Construction and Regret Analysis of Hint Gradients. The construction of the hint vector $\mathbf{h}_t$ is the core mechanism that enables learning under delayed supervision in the OALN algorithm. At each time step $t$, the learner must determine how to approximate the true loss gradient $\mathbf{g}_t = \nabla_{\mathbf{w}} \ell\big(f(\mathbf{x}_t; \mathbf{w}_t), y_t\big)$, despite potentially missing parts of the instance–label pair $(\mathbf{x}_t, y_t)$. The strategy for computing $\mathbf{h}_t$ depends on which elements are currently available.

When the feature vector $\mathbf{x}_t$ is observed but the label $y_t$ has not yet arrived, we estimate a soft pseudo-label $\tilde{y}_t$ using an ensemble of previously deployed models $\{f_s\}_{s=1}^{S}$, for example via confidence-weighted majority voting or exponential decay averaging [51]. Concretely, each model $f_s$ is assigned a confidence weight:

$$\alpha_{s,t} = \exp\big(-\beta L_{s,t}\big),$$

where $L_{s,t}$ denotes the cumulative loss of model $s$ over a sliding window up to time $t$, and $\beta > 0$ is a decay parameter. These weights are then normalized, $\hat{\alpha}_{s,t} = \alpha_{s,t} / \sum_{s'=1}^{S} \alpha_{s',t}$, and updated online after each labeled feedback via exponential smoothing:

$$\bar{\alpha}_{s,t} = \lambda\, \bar{\alpha}_{s,t-1} + (1 - \lambda)\, \hat{\alpha}_{s,t},$$

where $\lambda \in [0, 1]$ is a smoothing coefficient that governs the trade-off between the prior confidence and the newly observed loss-based weight. This scheme ensures that higher-performing models contribute more strongly to $\tilde{y}_t$, while poor performers are gradually down-weighted.

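The surrogate gradient is then computed as

$$\mathbf{h}_t = \nabla_{\mathbf{w}} \ell\big(f(\mathbf{x}_t; \mathbf{w}_t), \tilde{y}_t\big),$$

where $f$ is the prediction function, and $\ell$ is a convex loss.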
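The following sketch strings the pseudo-label route together under explicit assumptions of our own: confidence weights proportional to $\exp(-\beta L_s)$ from each model's windowed loss, normalization followed by exponential smoothing with coefficient $\lambda$, a soft pseudo-label formed as the weighted average of the pool's estimates of $P(y = +1 \mid \mathbf{x}_t)$, and a logistic model whose hint gradient is the cross-entropy gradient against that soft target. The soft-target cross-entropy is our choice of convex loss, and all function names are hypothetical.

```python
import math


def confidence_weights(window_losses, beta):
    """alpha_s proportional to exp(-beta * L_s), normalized to sum to 1."""
    # Subtracting the minimum loss avoids underflow; normalized ratios are unchanged.
    base = min(window_losses)
    raw = [math.exp(-beta * (loss - base)) for loss in window_losses]
    total = sum(raw)
    return [r / total for r in raw]


def smooth_weights(prev, new, lam):
    """Exponential smoothing: lam * previous weight + (1 - lam) * new weight."""
    mixed = [lam * p + (1.0 - lam) * n for p, n in zip(prev, new)]
    total = sum(mixed)
    return [m / total for m in mixed]


def soft_pseudo_label(weights, model_probs):
    """Weighted vote of the pool's P(y = +1 | x_t) estimates; a value in [0, 1]."""
    return sum(a * p for a, p in zip(weights, model_probs))


def hint_gradient(w, x, p_tilde):
    """Gradient of cross-entropy between sigmoid(<w, x>) and the soft target p_tilde."""
    s = sum(wi * xi for wi, xi in zip(w, x))
    s = max(-50.0, min(50.0, s))  # clamp to keep math.exp finite
    prob = 1.0 / (1.0 + math.exp(-s))
    return [(prob - p_tilde) * xi for xi in x]
```

A model with lower windowed loss receives a larger weight, so its estimate dominates the pseudo-label, and the resulting hint vanishes when the current model already agrees with that soft target.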

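Alternatively, when only the label $y_t$ is received and the corresponding features are missing, we approximate the input using a class-wise prototype:

$$\bar{\mathbf{x}}_{y} = \frac{1}{|\mathcal{I}_y|} \sum_{i \in \mathcal{I}_y} \mathbf{x}_i,$$

where $\mathcal{I}_y$ denotes the historical index set for label $y$. The hint gradient is computed as

$$\mathbf{h}_t = \nabla_{\mathbf{w}} \ell\big(f(\bar{\mathbf{x}}_{y_t}; \mathbf{w}_t), y_t\big).$$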

In the case where both $\mathbf{x}_t$ and $y_t$ are unavailable at time $t$, the learner defers the update entirely, or adopts a temporal smoothing strategy such as reusing the previous update direction $\mathbf{h}_{t-1}$. This ensures continuity in learning while awaiting supervision.
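The label-only and nothing-available cases can be handled by one small stateful helper. It keeps running class-wise sums, so the prototype (the mean of historical feature vectors with label $y$) costs $O(d)$ per labeled instance, and it falls back to reusing the previous hint when neither a full pair nor a prototype is usable. `PrototypeHint` and the `grad_fn` callback are hypothetical; the feature-only case (soft pseudo-label) is outside this sketch, so a call with features but no label also falls back to the previous hint here.

```python
class PrototypeHint:
    """Class-wise running means plus the fallback rules (illustrative sketch)."""

    def __init__(self, dim):
        self.dim = dim
        self.sums = {}    # label -> running sum of feature vectors
        self.counts = {}  # label -> number of instances seen with that label
        self.prev_hint = [0.0] * dim

    def observe(self, x, y):
        # O(dim) incremental update for a fully labeled instance.
        s = self.sums.setdefault(y, [0.0] * self.dim)
        for i, xi in enumerate(x):
            s[i] += xi
        self.counts[y] = self.counts.get(y, 0) + 1

    def prototype(self, y):
        n = self.counts.get(y, 0)
        if n == 0:
            return None
        return [si / n for si in self.sums[y]]

    def hint(self, grad_fn, w, x=None, y=None):
        """grad_fn(w, x, y) is any loss gradient, e.g. a logistic-loss gradient."""
        if x is not None and y is not None:
            h = grad_fn(w, x, y)  # full information: the true gradient
        elif y is not None and self.prototype(y) is not None:
            h = grad_fn(w, self.prototype(y), y)  # label only: class prototype as input
        else:
            h = list(self.prev_hint)  # nothing usable: reuse the last direction
        self.prev_hint = h
        return h
```

Storing sums and counts rather than the index sets themselves keeps memory at $O(d)$ per class, independent of stream length.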

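To formally analyze the performance impact of hint-based updates, we consider the cumulative regret after $T$ steps:

$$R_T = \sum_{t=1}^{T} \delta_t\, \ell\big(f(\mathbf{x}_t; \mathbf{w}_t), y_t\big) - \min_{\mathbf{w} \in \mathcal{W}} \sum_{t=1}^{T} \delta_t\, \ell\big(f(\mathbf{x}_t; \mathbf{w}), y_t\big),$$

where $\delta_t = 1$ if the label $y_t$ is eventually received ($\delta_t = 0$ otherwise), and $\mathcal{W}$ is a convex, bounded parameter space.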

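We assume that (i) the loss $\ell$ is convex and $L$-Lipschitz in $\mathbf{w}$; (ii) each hint satisfies $\mathbb{E}\big[\|\mathbf{h}_t - \mathbf{g}_t\|_2\big] \leq \epsilon_t$; and (iii) the hypothesis space $\mathcal{W}$ has diameter

$$\Delta = \sup_{\mathbf{w}, \mathbf{w}' \in \mathcal{W}} \|\mathbf{w} - \mathbf{w}'\|_2.$$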

Then, the expected regret is bounded by

Choosing and yields , confirming that the OALN algorithm achieves no-regret learning even with approximate supervision.
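As a hedged illustration of how a sublinear bound of this kind typically arises, the textbook argument for projected online gradient descent with inexact gradients runs as follows. It is a sketch under simplifying assumptions of our own, not necessarily the paper's exact statement or constants: every label eventually arrives ($\delta_t = 1$ for all $t$), the update is $\mathbf{w}_{t+1} = \Pi_{\mathcal{W}}(\mathbf{w}_t - \eta\mathbf{h}_t)$ (the closed form of the proximal step), $\|\mathbf{h}_t\| \le G$, $\mathbb{E}\|\mathbf{h}_t - \mathbf{g}_t\| \le \epsilon_t$, and $\Delta$ is the diameter of $\mathcal{W}$.

```latex
\begin{aligned}
&\text{Let } \ell_t(\mathbf{w}) = \ell\big(f(\mathbf{x}_t;\mathbf{w}), y_t\big),\;
  \mathbf{g}_t = \nabla \ell_t(\mathbf{w}_t),\;
  \mathbf{w}^\star \in \arg\min_{\mathbf{w}\in\mathcal{W}} \textstyle\sum_{t} \ell_t(\mathbf{w}). \\
&\text{Convexity: } \ell_t(\mathbf{w}_t) - \ell_t(\mathbf{w}^\star)
  \le \langle \mathbf{h}_t, \mathbf{w}_t - \mathbf{w}^\star \rangle
  + \langle \mathbf{g}_t - \mathbf{h}_t, \mathbf{w}_t - \mathbf{w}^\star \rangle. \\
&\text{Projection is non-expansive: }
  \langle \mathbf{h}_t, \mathbf{w}_t - \mathbf{w}^\star \rangle
  \le \frac{\|\mathbf{w}_t - \mathbf{w}^\star\|^2 - \|\mathbf{w}_{t+1} - \mathbf{w}^\star\|^2}{2\eta}
  + \frac{\eta}{2}\|\mathbf{h}_t\|^2. \\
&\text{Cauchy--Schwarz: }
  \langle \mathbf{g}_t - \mathbf{h}_t, \mathbf{w}_t - \mathbf{w}^\star \rangle
  \le \Delta\,\|\mathbf{g}_t - \mathbf{h}_t\|. \\
&\text{Summing, telescoping, taking expectations: }
  \mathbb{E}[R_T] \le \frac{\Delta^2}{2\eta} + \frac{\eta G^2 T}{2} + \Delta \sum_{t=1}^{T} \epsilon_t. \\
&\text{With } \eta = \frac{\Delta}{G\sqrt{T}} \text{ and } \epsilon_t \le \frac{c}{\sqrt{t}}
  \;\big(\text{so } \textstyle\sum_{t} \epsilon_t \le 2c\sqrt{T}\big):\quad
  \mathbb{E}[R_T] \le \Delta G\sqrt{T} + 2c\Delta\sqrt{T} = O(\sqrt{T}).
\end{aligned}
```

Under these assumptions the bias term $\Delta \sum_t \epsilon_t$ is what must stay sublinear: if the hint error does not decay over time, this term, and hence the bound, grows linearly in $T$.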

This result demonstrates that as long as hint gradients approximate the true gradients with bounded error, the model remains competitive. Coupled with the latent feature imputation from Section 4.1, our framework provides a principled approach to online learning under dual uncertainty: incomplete inputs and delayed feedback.

4.3. Discussion

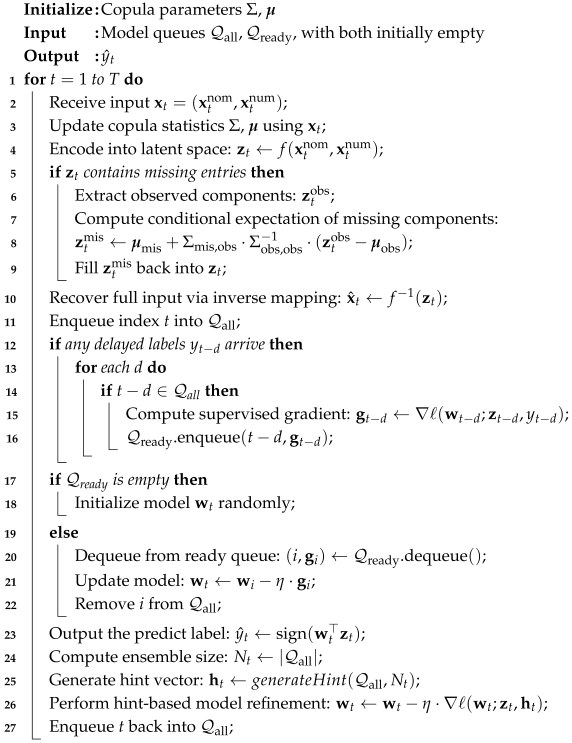

- Algorithm Design and Analysis. The OALN algorithm addresses incomplete mixed nominal–numeric inputs and asynchronous label feedback in real-time streams by combining Gaussian Copula-based latent encoding, conditional imputation, adaptive gradient updates, and hint-guided model refinement within a unified streaming framework, as summarized in Algorithm 1. At each time step $t$, a partially observed instance $\mathbf{x}_t$ is mapped into a latent representation $\mathbf{z}_t$. Missing entries are filled via conditional expectation under the current copula parameters, and the completed vector is mapped back to the original feature space. OALN maintains two queues: a model pool and a queue of ready-to-update models. Upon arrival of delayed labels, the corresponding historical models execute supervised proximal gradient updates; otherwise, OALN synthesizes surrogate gradients from an ensemble-derived hint vector, ensuring uninterrupted learning despite feedback latency. Our theoretical analysis bounds per-step time complexity by and memory by . In practical settings where the number of missing values , imputation cost reduces to . Scalability is further enhanced through rank-one copula updates, low-rank () or diagonal copula approximations that reduce storage to , dynamic pruning of stale models to bound , and parallel hint generation across multi-core resources. Low-rank copula approximation compresses the full covariance matrix by keeping only its principal directions, cutting both memory and computation costs while retaining the essential correlation structure; this makes online copula updates much faster and more scalable without sacrificing accuracy. These optimizations render OALN both memory-efficient and computationally tractable in high-dimensional, irregularly delayed streaming environments. For more details on algorithm design and analysis, please refer to Appendix B.

- Edge Deployment and Model Slimming. To support deployment on resource-constrained edge devices, we apply a combination of techniques: compressing the copula covariance via low-rank or diagonal approximations to reduce storage from to ; pruning stale models to limit the model pool memory to ; performing incremental rank-one updates at per step instead of full matrix recomputations; and tuning the delayed-label buffer to a small window , yielding overhead. With and aggressively pruned , total memory can shrink to , making OALN feasible within typical edge budgets. We believe this concrete guidance will facilitate real-world, on-device deployment of OALN.
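The rank-one copula update and low-rank-plus-diagonal compression mentioned above can be sketched in NumPy. The exponentially weighted form with forgetting factor `gamma`, the rescaling to unit diagonal, and the eigendecomposition-based truncation to rank `k` are our assumptions about one reasonable realization, not OALN's documented procedure; all names are hypothetical. Each update costs $O(D^2)$ for a $D$-dimensional latent vector, and the compressed form stores $Dk + D$ numbers instead of $D^2$.

```python
import numpy as np


def rank_one_update(sigma, z, gamma):
    """Exponentially weighted update (1 - gamma) * Sigma + gamma * z z^T; O(D^2)."""
    return (1.0 - gamma) * sigma + gamma * np.outer(z, z)


def to_correlation(sigma):
    """Rescale to unit diagonal, as a Gaussian copula correlation matrix requires."""
    d = np.sqrt(np.diag(sigma))
    return sigma / np.outer(d, d)


def low_rank_plus_diagonal(sigma, k):
    """Keep the top-k eigen-directions and move leftover variance to a diagonal term.

    Returns a D x k factor U and a length-D vector so that Sigma ~ U U^T + diag(d).
    """
    vals, vecs = np.linalg.eigh(sigma)  # eigenvalues in ascending order
    idx = np.argsort(vals)[::-1][:k]    # indices of the k largest eigenvalues
    u = vecs[:, idx] * np.sqrt(np.clip(vals[idx], 0.0, None))
    diag = np.clip(np.diag(sigma) - np.sum(u * u, axis=1), 1e-8, None)
    return u, diag


def reconstruct(u, diag):
    return u @ u.T + np.diag(diag)
```

In a streaming loop one would call `rank_one_update` once per imputed latent vector and re-compress only periodically, since the eigendecomposition itself costs $O(D^3)$. The diagonal correction keeps the reconstructed variances exact, so the compressed matrix remains a valid correlation matrix after truncation.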

Algorithm 1.

The OALN Algorithm.

- Algorithm Limitation. OALN still relies on fixed-width bins to incrementally update the empirical CDF and to estimate the Gaussian Copula correlation matrix. Under high missingness or when most feature values are zero, many bins remain empty, producing a coarse, step-like CDF and highly noisy correlation estimates. This noise undermines the stability of the latent variables and degrades the accuracy of subsequent online model updates. Moreover, once bin boundaries are fixed they cannot adapt to distributional drift, limiting OALN’s effectiveness on high-cardinality or rapidly changing sparse features. OALN’s model pool scheduling and weighting depend solely on the “old” labels that have arrived. When label delays are heavy-tailed, bursty, or excessively long, the noise in performance estimates rises sharply, causing incorrect model switches and accumulated prediction errors. At the same time, feedback latency severely impairs the algorithm’s ability to detect and adapt to concept drift, constraining real-time responsiveness and robustness in live streaming environments.

5. Experiments

This section presents the experiments conducted to address the following research questions, which collectively evaluate the effectiveness of the OALN algorithm:

- RQ1: Under varying missing rates, does OALN outperform other methods?

- RQ2: How does the performance of OALN and baseline algorithms change as the missing rate increases?

- RQ3: Under different levels of asynchronous label arrival, how does OALN perform, and what performance trends emerge in comparison to other methods?

5.1. General Setting

- Dataset. We evaluate OALN on twelve real-world datasets spanning a wide range of application domains [52]. To simulate dynamic feature spaces, we introduce random missingness at varying rates. Table 2 summarizes the key characteristics of these datasets.

Table 2. Characteristics of the studied datasets (Inst. and Feat. abbreviate Instance and Feature; Nominal Feat. and Numerical Feat. denote Nominal Feature and Numerical Feature; Class Ratios gives the ratio of positive to negative labels in each dataset).

- Evaluation Metrics. We adopt the cumulative error rate (CER), which is widely used in prior work [17,35], to quantify classification errors in online learning. It is defined as

$$\mathrm{CER} = \frac{1}{N}\sum_{t=1}^{N} \langle \hat{y}_t \neq y_t \rangle,$$

where N denotes the total number of samples, $\hat{y}_t$ and $y_t$ are the predicted and true labels of the t-th sample, and the angle-bracket notation evaluates to 1 if the condition inside is true and to 0 otherwise. A lower CER indicates better model performance.
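The CER definition translates directly into code. The sketch below is minimal, and its function names are hypothetical. The running variant reports the error rate after each step, which is how a cumulative error is usually tracked along a stream.

```python
def cumulative_error_rate(y_true, y_pred):
    """CER = (1/N) * sum_t <y_hat_t != y_t>: the fraction of mistakes."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("need two non-empty sequences of equal length")
    mistakes = sum(1 for y, y_hat in zip(y_true, y_pred) if y != y_hat)
    return mistakes / len(y_true)

def running_cer(y_true, y_pred):
    """CER after each time step t, i.e. mistakes so far divided by t."""
    out, mistakes = [], 0
    for t, (y, y_hat) in enumerate(zip(y_true, y_pred), start=1):
        mistakes += y != y_hat
        out.append(mistakes / t)
    return out

print(cumulative_error_rate([1, 0, 1, 1], [1, 1, 1, 0]))  # 0.5
```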

We use the Area Under the Receiver Operating Characteristic Curve (AUC) to evaluate the ability of a binary classifier to distinguish between positive and negative samples [53]. AUC is widely applied in medical diagnosis, credit scoring, and other decision-critical tasks [54]. It is defined as

$$\mathrm{AUC} = \int_{0}^{1} \mathrm{TPR}\left(\mathrm{FPR}\right)\, d\,\mathrm{FPR},$$

where FPR (false-positive rate) is the horizontal axis of the ROC curve and TPR (true-positive rate) is its vertical axis. AUC equals the area under this curve; values closer to 1 indicate stronger discriminative power and better model performance.
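The area under the ROC curve equals the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one, with ties counting one half. AUC can therefore be computed without tracing the curve explicitly. The quadratic-time sketch below relies on that equivalence. It treats label 1 as positive and any other label (0 or -1) as negative, which is an assumption about the label encoding. The function name is hypothetical.

```python
def auc_score(y_true, scores):
    """AUC as P(score of a random positive > score of a random negative),
    counting ties as one half; equal to the area under the ROC curve."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y != 1]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))

# Three of the four positive/negative pairs are ranked correctly.
print(auc_score([1, 1, 0, 0], [0.9, 0.4, 0.5, 0.1]))  # 0.75
```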

- Compared Methods. We compare OALN with five related algorithms. Brief descriptions of each are provided below:

- OLI2DS [14]: An online learning algorithm that operates over varying feature subsets by adaptively weighting incomplete subspaces. It integrates dynamic cost strategies and sparse online updates to enhance model performance on complex data streams.

- OLD3S [55]: A deep learning-based method that employs parallel dual models, dynamic fusion, and autoencoder-based regularization to improve robustness and stability under streaming conditions.

- OLD3S-L [55]: A lightweight variant of OLD3S that retains the dynamic fusion mechanism while reducing encoding depth and fusion dimensionality, significantly decreasing model size and online update complexity for resource-constrained environments.

- OGD [56]: A classical online learning algorithm that updates model weights based on the gradient of each incoming sample, using a time-decaying step size to ensure simplicity and efficiency.

- FOBOS [57]: An online method that combines gradient descent with regularization, enabling incremental updates while encouraging model sparsity through penalization of irrelevant features.
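The two simplest baselines differ by a single step. The sketch below assumes a logistic loss, an L1 penalty, and a step size η_t = η_0/√t. The loss, the penalty, and the default values are illustrative assumptions rather than the settings used in [56,57] or in our experiments. OGD takes a plain gradient step. FOBOS follows the same step with a proximal soft-thresholding step, which sets small weights exactly to zero.

```python
import math

def _sigmoid_neg(m):
    """Numerically stable 1 / (1 + exp(m))."""
    if m >= 0:
        e = math.exp(-m)
        return e / (1.0 + e)
    return 1.0 / (1.0 + math.exp(m))

def logistic_grad(w, x, y):
    """Gradient of log(1 + exp(-y * w.x)) with respect to w, for y in {-1, +1}."""
    margin = y * sum(wi * xi for wi, xi in zip(w, x))
    coef = -y * _sigmoid_neg(margin)
    return [coef * xi for xi in x]

def ogd_step(w, x, y, t, eta0=0.1):
    """OGD: w <- w - eta_t * grad, with a time-decaying eta_t = eta0 / sqrt(t)."""
    eta = eta0 / math.sqrt(t)
    g = logistic_grad(w, x, y)
    return [wi - eta * gi for wi, gi in zip(w, g)]

def fobos_step(w, x, y, t, eta0=0.1, lam=0.01):
    """FOBOS with an L1 penalty: the same gradient step, followed by a
    proximal (soft-thresholding) step with threshold eta_t * lam."""
    eta = eta0 / math.sqrt(t)
    half = ogd_step(w, x, y, t, eta0)
    thr = eta * lam
    return [math.copysign(max(abs(v) - thr, 0.0), v) for v in half]

w_ogd = ogd_step([0.0, 0.0], [1.0, 0.0], 1, t=1)
w_fobos = fobos_step([0.0, 0.0], [1.0, 0.0], 1, t=1)
```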

- Implementation Details. To ensure fairness, we adopt a random-removal strategy that independently removes 10% and 50% of the feature values from each of the twelve datasets. We set the learning rate to 0.3, the number of training epochs to 30, and the optimizer’s weight factors to and . The batch size is set to one-thirtieth of the feature dimensionality, the test set size to 1024, and the number of solver iterations to 200, and we fix the random seed to 2025. This procedure generates nonstationary data streams while ensuring that all models receive identical input sequences. We assume a Missing Completely At Random (MCAR) mechanism for all removals [48].
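The sketch below illustrates MCAR removal. It assumes that each feature value is hidden independently with probability equal to the missing rate. The function name `mcar_mask` is hypothetical, and the exact removal procedure used in the experiments may differ. Because the mask is drawn without looking at the data, missingness is independent of both observed and unobserved values. Fixing the seed makes every model see the same masked stream.

```python
import numpy as np

def mcar_mask(X, missing_rate, seed=2025):
    """Hide each entry independently with probability missing_rate (MCAR).
    The mask never depends on X, so missingness is unrelated to any value."""
    rng = np.random.default_rng(seed)
    mask = rng.random(X.shape) < missing_rate   # True marks a removed entry
    X_obs = X.astype(float)                     # float copy so NaN can mark gaps
    X_obs[mask] = np.nan
    return X_obs, mask

X = np.ones((1000, 20))
X_obs, mask = mcar_mask(X, 0.5)
```

Drawing entries independently produces a realized missing rate close to, but not exactly equal to, the target. Shuffling a fixed number of indices would hit the target exactly.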

5.2. Performance Comparison (RQ1)

Tables 3–6 report the cumulative error rate (CER) and AUC of each algorithm under 10% and 50% missing rates (with label delay T = 5 for OALN). Based on these results, we perform statistical analyses using win/loss counts and the Wilcoxon signed-rank test (p-value) [58]. First, OALN performs consistently well. The average CER across the twelve datasets is 23.31% at a 10% missing rate and 28.28% at a 50% missing rate, both lower than the corresponding averages of the baseline methods. Moreover, across the 60 dataset–baseline comparisons (12 datasets × 5 baselines), OALN achieves a lower CER in 57 comparisons at 10% missingness and in 52 at 50% missingness. Second, in the same 60 comparisons, OALN achieves a higher AUC in 57 comparisons at 10% missingness and in 58 at 50% missingness. Its mean AUC values, 0.7895 at 10% missingness and 0.7433 at 50% missingness, are likewise higher than the corresponding averages of the comparison methods. These results indicate that OALN maintains high predictive accuracy and stability under nonstationary data streams and asynchronous label feedback by constructing a hybrid feature space that combines nominal and numerical data. They further suggest that the mixed Gaussian Copula substantially improves predictive performance under various missingness patterns, through online estimation of joint nominal–numerical distributions and conditional imputation of missing values.
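The statistics behind the win/loss counts and the Wilcoxon p-values can be sketched as follows. This is an illustration under stated assumptions. The function names are hypothetical. The p-value uses the normal approximation with average ranks for ties and no tie-variance correction. Library implementations such as `scipy.stats.wilcoxon` may instead use the exact null distribution for small samples, and would then report different values.

```python
import math

def win_loss(ours, theirs, lower_is_better=True):
    """Count datasets where our mean metric beats or loses to a baseline (ties skipped)."""
    wins = losses = 0
    for a, b in zip(ours, theirs):
        if a == b:
            continue
        better = a < b if lower_is_better else a > b
        wins += better
        losses += not better
    return wins, losses

def wilcoxon_signed_rank(ours, theirs):
    """Two-sided Wilcoxon signed-rank test via the normal approximation.
    Zero differences are dropped; tied |differences| get average ranks."""
    d = [a - b for a, b in zip(ours, theirs) if a != b]
    n = len(d)
    if n == 0:
        return 0.0, 1.0
    order = sorted(range(n), key=lambda i: abs(d[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:                                   # average ranks over ties
        j = i
        while j + 1 < n and abs(d[order[j + 1]]) == abs(d[order[i]]):
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    w_plus = sum(r for r, di in zip(ranks, d) if di > 0)
    w_minus = sum(r for r, di in zip(ranks, d) if di < 0)
    w = min(w_plus, w_minus)
    mu = n * (n + 1) / 4
    sigma = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    p = math.erfc(abs((w - mu) / sigma) / math.sqrt(2))  # two-sided tail
    return w, p

# Usage: a method that is better on all 12 datasets by distinct margins.
ours = list(range(12))
theirs = [x + k + 1 for k, x in enumerate(ours)]
w, p = wilcoxon_signed_rank(ours, theirs)
```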

Table 3.

Comparison of cumulative error rate (CER) on 12 datasets at a missing rate of 10%. Each experiment was repeated 5 times per dataset with label delay T = 5; entries report the mean CER ± standard deviation over the 5 runs. The best results are in bold. • marks the cases in which OALN loses the comparison; * gives the total numbers of wins and losses for OALN.

Table 4.

Comparison of Area Under Curve (AUC) on 12 datasets at a missing rate of 10%. Each experiment was repeated 5 times per dataset with label delay T = 5; entries report the mean AUC ± standard deviation over the 5 runs. The best results are in bold. • marks the cases in which OALN loses the comparison; * gives the total numbers of wins and losses for OALN.

Table 5.

Comparison of cumulative error rate (CER) on 12 datasets at a missing rate of 50%. Each experiment was repeated 5 times per dataset with label delay T = 5; entries report the mean CER ± standard deviation over the 5 runs. The best results are in bold. • marks the cases in which OALN loses the comparison; * gives the total numbers of wins and losses for OALN.

Table 6.

Comparison of Area Under Curve (AUC) on 12 datasets at a missing rate of 50%. Each experiment was repeated 5 times per dataset with label delay T = 5; entries report the mean AUC ± standard deviation over the 5 runs. The best results are in bold. • marks the cases in which OALN loses the comparison; * gives the total numbers of wins and losses for OALN.

5.3. Trend Comparison (RQ2)

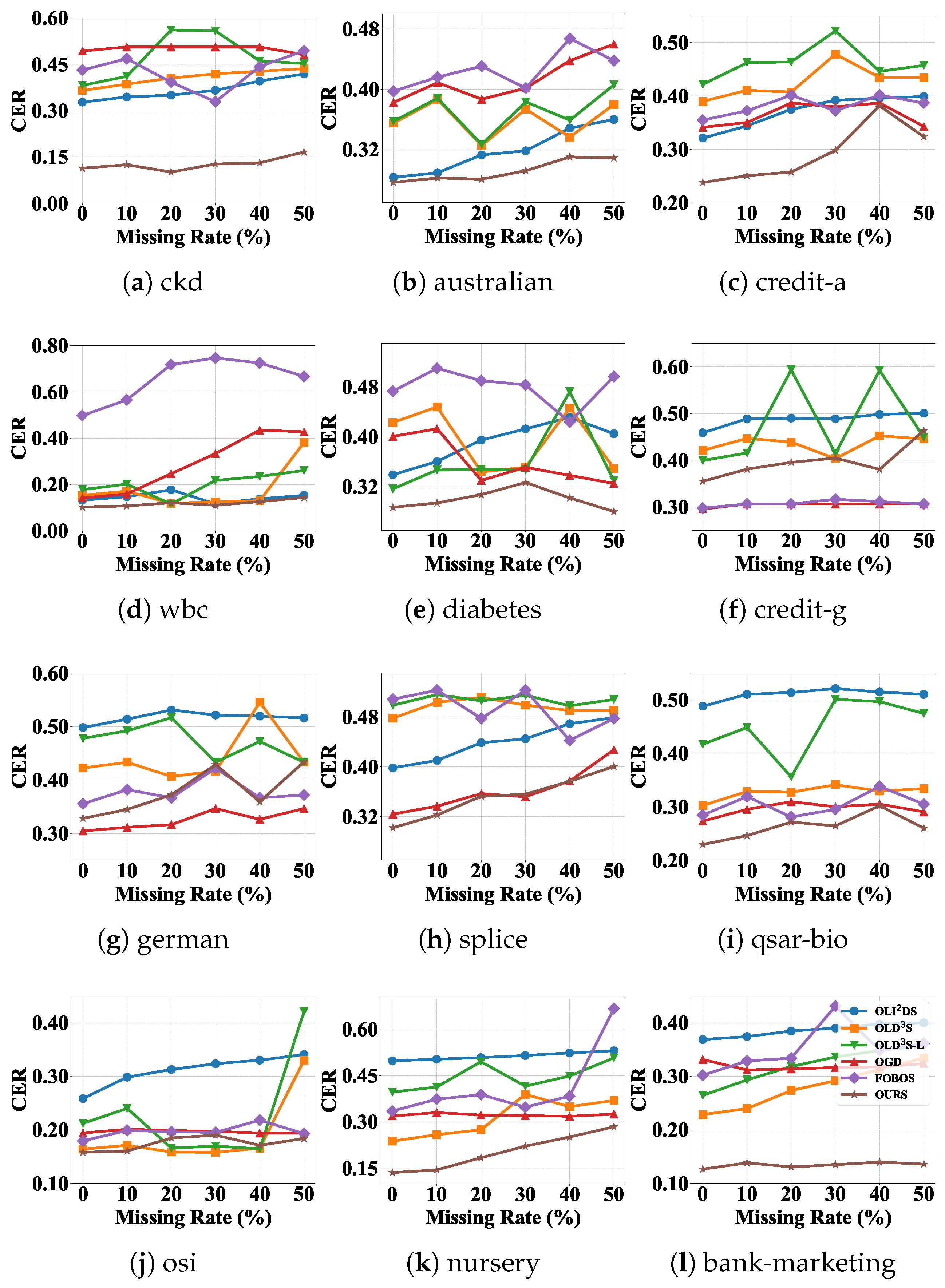

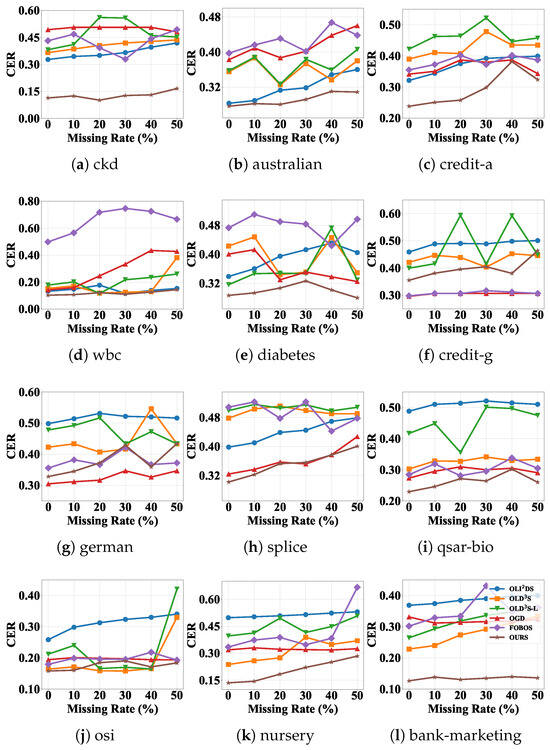

To analyze how each algorithm’s performance varies with the missing rate, Figure 3 presents the CER curves of OALN, OLI2DS, OLD3S, OLD3S-L, OGD, and FOBOS under the nonstationary setting, with label delay T = 5 for OALN. From these curves, we draw the following conclusions.

Figure 3.

Cumulative error rate (CER) of each algorithm on 12 datasets under different missing rates, with label delay T = 5. As the missing rate increases, the CER of all algorithms shows an overall upward trend; OALN maintains a lower average CER than the other methods.

As the missing rate increases, OALN’s CER remains lower than that of the other algorithms in most cases; in Figure 3a, OALN outperforms its competitors in over 55% of the scenarios. Overall, the CER of every method tends to increase with the missing rate. Figure 3e,f,j further reveal large CER oscillations for OLD3S-L on certain datasets as the missing rate grows. This behavior arises because OLD3S-L employs only a shallow autoencoder, which can partially recover the major features at low missing rates. Once the missing rate reaches 30% or higher, however, essential feature information is severely lost, and the shallow network fails to reconstruct or denoise the data accurately. Noise in the imputed features then propagates directly to the classifier, producing pronounced fluctuations in CER. In Figure 3d, FOBOS’s CER is markedly higher than that of the other methods. FOBOS assumes a fixed and complete feature space and lacks a mechanism for adapting to dynamically changing feature dimensions. When features are missing, its parameter updates therefore rely on incomplete or erroneous input distributions, and errors accumulate rapidly. Moreover, effective imputation in missing-data scenarios depends on the covariance structure among the observed features. FOBOS, however, applies regularization only in the parameter space and disregards feature-level correlations entirely, so it cannot infer missing values from the available features. Similarly, in Figure 3j–k, OLI2DS exhibits a higher CER than the other algorithms in most cases, suggesting that its accuracy degrades on larger or more complex datasets.

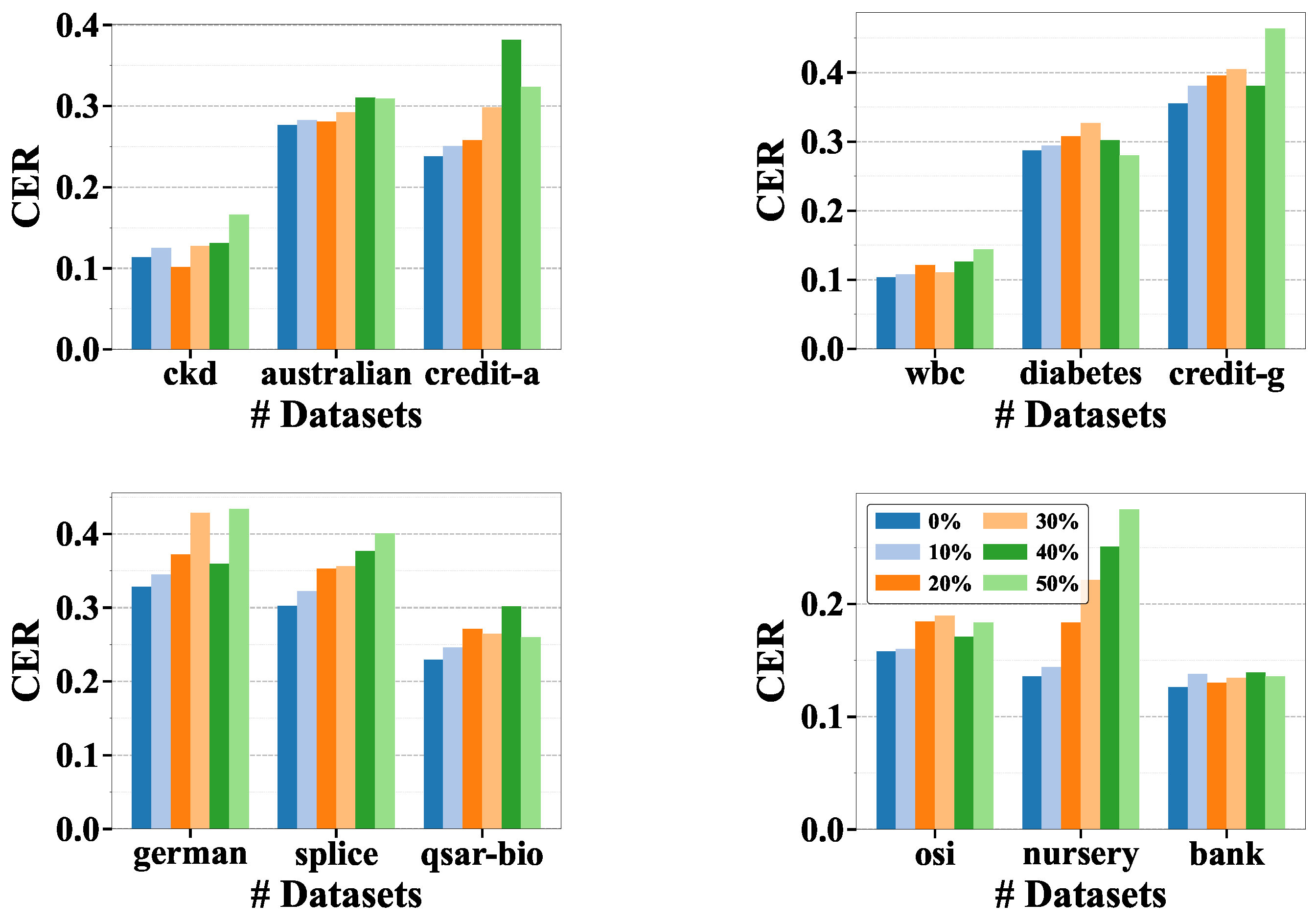

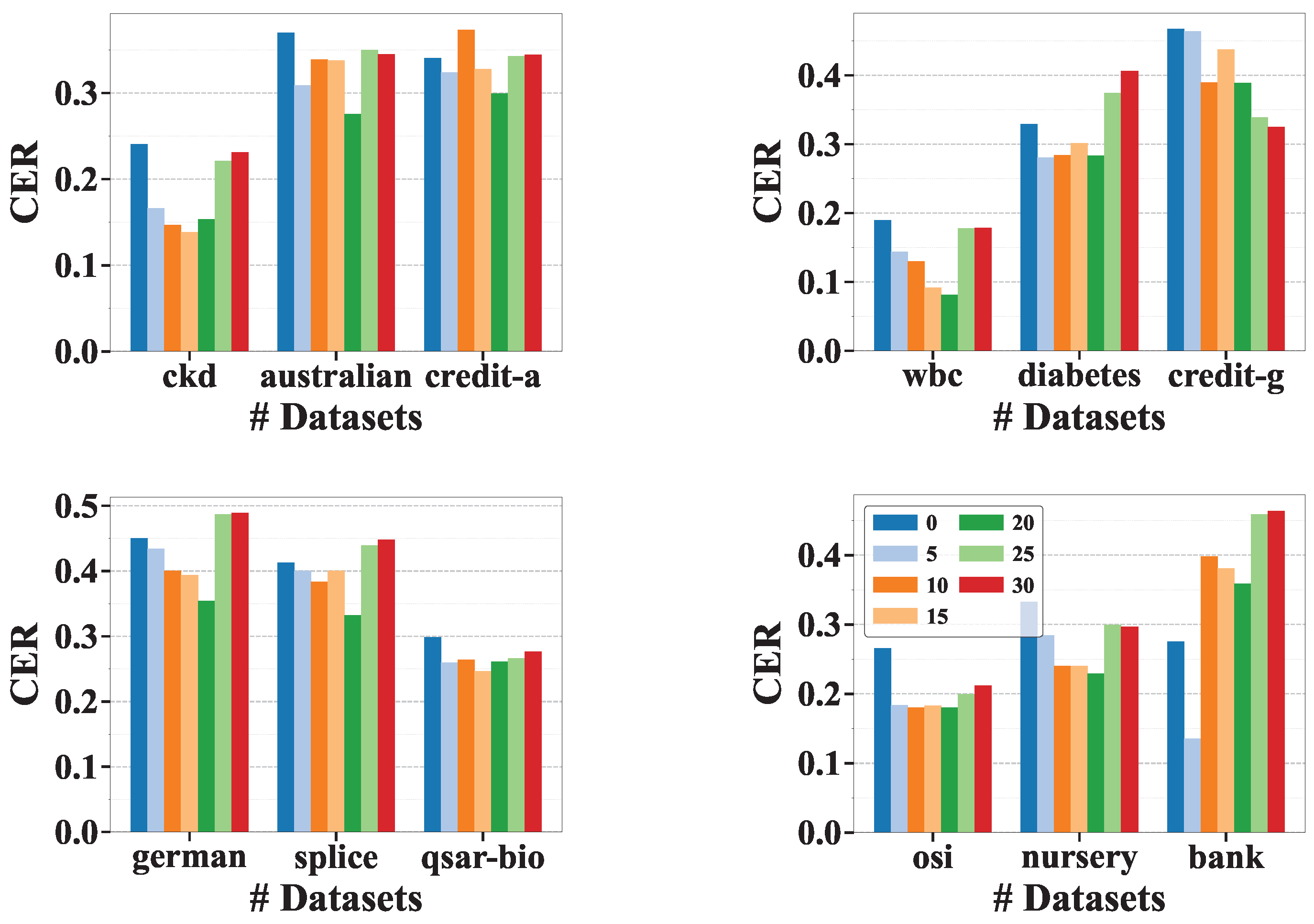

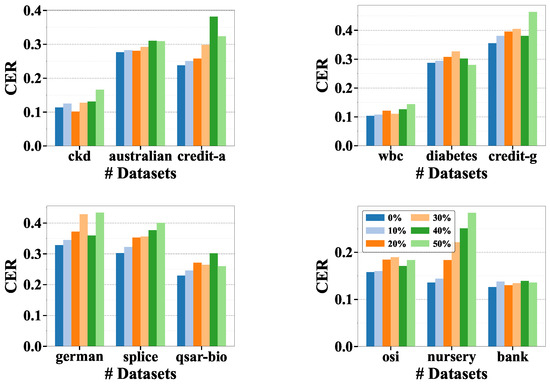

To assess whether OALN suffers from similar issues, Figure 4 shows bar charts of OALN’s CER at different missing rates (with label delay T = 5), allowing a closer analysis of its robustness under increasing missingness. As shown in Figure 4, the CER on every dataset increases steadily with the missing rate but remains relatively stable, without significant oscillations, and stays below that of the competing methods in most cases. Notably, OALN maintains consistently strong performance on large-scale datasets such as osi and nursery. These findings indicate that the conditional covariance-based adaptive imputation mechanism, combined with incremental copula parameter updates for dynamic feature space adaptation, effectively handles both feature missingness and large-sample scenarios, supporting the model’s accuracy and robustness.

Figure 4.

Cumulative error rate (CER) of OALN on each dataset under varying missing rates at delay T = 5 (“bank” abbreviates the dataset “bank-marketing”). The CER generally increases with the missing rate.

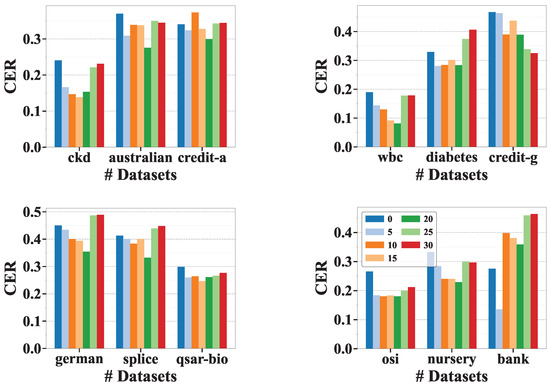

5.4. Asynchronous Label Processing (RQ3)

To answer RQ3, we fix the missing rate at 50% and simulate asynchronous label feedback by varying the delay intensity T. Figure 5 shows that as the label delay T increases, the cumulative error rate (CER) first decreases and then increases, with a turning point at approximately T = 20. When labels are delayed, OALN continues to receive and process additional feature samples before the true label becomes available, incrementally updating the copula parameters (the mean vector and the covariance matrix) at each step. Longer delays incorporate more samples into covariance estimation and feature imputation. This yields more stable estimates of both the covariance matrix and the mean vector, as well as higher-quality imputations. The classifier therefore receives more accurate input features, which reduces misclassification and lowers the overall CER. Furthermore, while its true label is pending, each incoming sample triggers a hint update: the ensemble of existing submodels in the model pool generates a pseudo-label, which is followed by a gradient update. With longer delays, the number of hint-based updates increases, giving the model more opportunities to smooth the ensemble weights and correct predictive biases. By the time the true label arrives, the model has already moved to a better parameter region, which makes subsequent gradient updates more robust.
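The interaction between delayed labels and hint updates can be sketched as follows. This is a hypothetical, heavily simplified illustration, not OALN itself. The pool holds linear logistic models that differ only in step size, and the pseudo-label is the sign of the weighted ensemble score. Ensemble weights are updated multiplicatively on mistakes, and the label of step t is assumed to arrive exactly T steps later. All names and default values are assumptions.

```python
import math

def predict(w, x):
    """Linear score of model w on features x."""
    return sum(wi * xi for wi, xi in zip(w, x))

def sigmoid(m):
    """Numerically stable logistic function 1 / (1 + exp(-m))."""
    if m >= 0:
        return 1.0 / (1.0 + math.exp(-m))
    e = math.exp(m)
    return e / (1.0 + e)

def sgd_update(w, x, y, eta):
    """One gradient step on log(1 + exp(-y * w.x)) for y in {-1, +1}."""
    coef = eta * y * sigmoid(-y * predict(w, x))
    return [wi + coef * xi for wi, xi in zip(w, x)]

class DelayedHintLearner:
    """Hypothetical sketch: a model pool with ensemble weights, a buffer of
    samples with pending labels, hint updates driven by pool pseudo-labels,
    and supervised updates plus reweighting when a true label arrives."""

    def __init__(self, dim, etas=(0.05, 0.1, 0.2), hint_eta=0.01, beta=0.5):
        self.pool = [[0.0] * dim for _ in etas]
        self.etas = list(etas)
        self.weights = [1.0 / len(etas)] * len(etas)
        self.pending = {}                 # time step -> features awaiting a label
        self.hint_eta, self.beta = hint_eta, beta

    def observe(self, t, x):
        """Unlabeled sample at step t: predict, buffer it, apply a hint update."""
        score = sum(a * predict(w, x) for a, w in zip(self.weights, self.pool))
        pseudo = 1 if score >= 0 else -1  # pseudo-label from the weighted pool
        self.pending[t] = x
        self.pool = [sgd_update(w, x, pseudo, self.hint_eta) for w in self.pool]
        return pseudo

    def receive_label(self, t, y):
        """Delayed true label for step t: reweight each model by its current
        mistake on the buffered sample, then take a supervised step."""
        x = self.pending.pop(t)
        for i, w in enumerate(self.pool):
            wrong = (1 if predict(w, x) >= 0 else -1) != y
            self.weights[i] *= math.exp(-self.beta * wrong)
        total = sum(self.weights)
        self.weights = [a / total for a in self.weights]
        self.pool = [sgd_update(w, x, y, eta) for w, eta in zip(self.pool, self.etas)]

def run_stream(stream, T, dim):
    """Feed (x, y) pairs; the label of step t is revealed at step t + T."""
    learner, mistakes = DelayedHintLearner(dim), 0
    for t, (x, y) in enumerate(stream):
        mistakes += learner.observe(t, x) != y
        if t >= T:
            learner.receive_label(t - T, stream[t - T][1])
    return mistakes / len(stream), learner

stream = [([1.0, 1.0], 1), ([1.0, -1.0], -1)] * 50
cer, learner = run_stream(stream, T=5, dim=2)
```

At the end of the stream, the buffer still holds the last T samples, whose labels have not yet arrived.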

Figure 5.

Cumulative error rates (CERs) of OALN across datasets under various label delays at a 50% missing rate (“bank” abbreviates the dataset “bank-marketing”). As the delay intensity increases, the CER generally first decreases and then increases, with the turning point at T = 20.

However, as the delay intensity T continues to increase, its adverse impact on the model gradually outweighs the mechanisms described above, causing the cumulative error rate (CER) to halt its decline and begin to increase.

In summary, moderate label delays act as a “warm-up” mechanism for OALN, enabling more thorough covariance estimation, improved imputation, and pseudo-label refinement before true-label feedback arrives. Beyond a certain delay, however, these benefits are outweighed by the negative effects of the delay itself. There is thus an “equilibrium point” between model performance and delay intensity: below it, OALN maintains a low cumulative error rate (CER) once the true labels arrive; above it, the CER gradually increases.

6. Conclusions

In this study, we address the modeling challenges posed by nominal and numerical features under conditions of feature missingness and asynchronous label feedback in online learning. Data streams arrive in real time with heterogeneous and dynamically evolving feature types, which can significantly impair model accuracy and robustness. To mitigate these issues, we propose the Online Asynchronous Learning over Streaming Nominal Data (OALN) algorithm. OALN leverages a mixed Gaussian Copula to dynamically capture correlations among features and incorporates a model pool with a hint mechanism to ensure data integrity, stability, and accuracy in the presence of missing values, heterogeneous features, and delayed labels. Experimental results show that OALN consistently achieves high predictive accuracy across varying missing rates and delay intensities, outperforming competing methods. These advances highlight OALN’s potential for effectively handling heterogeneous, incomplete, and asynchronously labeled data in real-time online learning scenarios.

By virtue of its robust handling of incomplete mixed-type data and asynchronous feedback, OALN is well suited for real-time streaming applications such as industrial IoT, smart grids, energy management, and online financial risk control. OALN handles moderate missingness and fixed delays, but it has two weaknesses. Its reliance on static, bin-based CDF updates produces step-like distributions and noisy copula correlations under high feature sparsity. Its sole dependence on delayed labels for model selection introduces estimation noise and impairs concept-drift adaptation. In this work, we simulate feature dropout under the MCAR assumption, where missingness is independent of both observed and unobserved data. This assumption simplifies the evaluation but may lead to overestimating imputation and learning performance. Future work will therefore consider MAR and MNAR settings and design robust, adaptive techniques for such non-MCAR missing data. We will also investigate adaptive distribution summaries (e.g., sketch-based or dynamic binning) and develop delay-aware weighting schemes with integrated drift detection. Together, these directions aim to improve robustness and real-time responsiveness in highly sparse, irregularly delayed streaming environments.

Author Contributions

Conceptualization, H.L. and S.Z.; methodology, S.Z. and L.L.; software, J.C. and T.W.; validation, H.L., S.Z. and S.L.; formal analysis, S.Z.; investigation, H.L. and J.C.; resources, S.Z.; data curation, L.L.; writing—original draft preparation, S.Z.; writing—review and editing, H.L., J.T. and S.H.; visualization, T.W.; supervision, S.H.; project administration, S.H.; funding acquisition, S.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by Jinan University, the National Natural Science Foundation of China (No.62272198), Guangdong Key Laboratory of Data Security and Privacy Preserving (No. 2023B1212060036), Guangdong–Hong Kong Joint Laboratory for Data Security and Privacy Preserving (No. 2023B1212120007), Guangdong Basic and Applied Basic Research Foundation under Grant (No. 2024A1515010121), and the Special Funds for the Cultivation of Guangdong College Students’ Scientific and Technological Innovation (Climbing Program Special Funds) under Grant pdjh2025ak028.

Data Availability Statement

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Appendix A

As shown in Tables A1–A8, OALN also delivers superior performance in both cumulative error rate (CER) and AUC at missing rates of 0%, 20%, 30%, and 40% with label delay T = 5. Compared with all baseline methods, OALN achieves the lowest average CER and the highest average AUC across these missingness levels.

Table A1.

Cumulative error rate (CER) under the missing rate 0% with label delay T = 5. The best results are in bold. • indicates the cases in our method that lose the comparison. * shows the total number of wins and losses for OALN.


| Dataset | OLI2DS | OLD3S | OLD3S-L | OGD | FOBOS | OALN |
|---|---|---|---|---|---|---|
| ckd | | | | | | |
| australian | | | | | | |
| credit-a | | | | | | |
| wbc | | | | | | |
| diabetes | | | | | | |
| credit-g | | | | | | |
| german | | | | | | |
| splice | | | | | | |
| qsar-bio | | | | | | |
| osi | | | | | | |
| nursery | | | | | | |
| bank-marketing | | | | | | |
| loss/win | | | | | | |
| p-value | | | | | | |
| F-rank | | | | | | |

Table A2.

AUC under missing rate 0% with label delay T = 5. The best results are in bold. • indicates the cases in our method that lose the comparison. * shows the total number of wins and losses for OALN.


| Dataset | OLI2DS | OLD3S | OLD3S-L | OGD | FOBOS | OALN |
|---|---|---|---|---|---|---|
| ckd | | | | | | |
| australian | | | | | | |
| credit-a | | | | | | |
| wbc | | | | | | |
| diabetes | | | | | | |
| credit-g | | | | | | |
| german | | | | | | |
| splice | | | | | | |
| qsar-bio | | | | | | |
| osi | | | | | | |
| nursery | | | | | | |
| bank-marketing | | | | | | |
| loss/win | | | | | | |
| p-value | | | | | | |
| F-rank | | | | | | |

Table A3.

Cumulative error rate (CER) under missing rate 20% with label delay T = 5. The best results are in bold. • indicates the cases in our method that lose the comparison. * shows the total number of wins and losses for OALN.


| Dataset | OLI2DS | OLD3S | OLD3S-L | OGD | FOBOS | OALN |
|---|---|---|---|---|---|---|
| ckd | | | | | | |
| australian | | | | | | |
| credit-a | | | | | | |
| wbc | | | | | | |
| diabetes | | | | | | |
| credit-g | | | | | | |
| german | | | | | | |
| splice | | | | | | |
| qsar-bio | | | | | | |
| osi | | | | | | |
| nursery | | | | | | |
| bank-marketing | | | | | | |
| loss/win | | | | | | |
| p-value | | | | | | |
| F-rank | | | | | | |

Table A4.

AUC under missing rate 20% with label delay T = 5. The best results are in bold. • indicates the cases in our method that lose the comparison. * shows the total number of wins and losses for OALN.


| Dataset | OLI2DS | OLD3S | OLD3S-L | OGD | FOBOS | OALN |
|---|---|---|---|---|---|---|
| ckd | | | | | | |
| australian | | | | | | |
| credit-a | | | | | | |
| wbc | | | | | | |
| diabetes | | | | | | |
| credit-g | | | | | | |
| german | | | | | | |
| splice | | | | | | |
| qsar-bio | | | | | | |
| osi | | | | | | |
| nursery | | | | | | |
| bank-marketing | | | | | | |
| loss/win | | | | | | |
| p-value | | | | | | |
| F-rank | | | | | | |

Table A5.

Cumulative error rate (CER) under missing rate 30% with label delay T = 5. The best results are in bold. • indicates the cases in our method that lose the comparison. * shows the total number of wins and losses for OALN.


| Dataset | OLI2DS | OLD3S | OLD3S-L | OGD | FOBOS | OALN |
|---|---|---|---|---|---|---|
| ckd | | | | | | |
| australian | | | | | | |
| credit-a | | | | | | |
| wbc | | | | | | |
| diabetes | | | | | | |
| credit-g | | | | | | |
| german | | | | | | |
| splice | | | | | | |
| qsar-bio | | | | | | |
| osi | | | | | | |
| nursery | | | | | | |
| bank-marketing | | | | | | |
| loss/win | | | | | | |
| p-value | | | | | | |
| F-rank | | | | | | |

Table A6.

AUC under missing rate 30% with label delay T = 5. The best results are in bold. • indicates the cases in our method that lose the comparison. * shows the total number of wins and losses for OALN.


| Dataset | OLI2DS | OLD3S | OLD3S-L | OGD | FOBOS | OALN |
|---|---|---|---|---|---|---|
| ckd | | | | | | |
| australian | | | | | | |
| credit-a | | | | | | |
| wbc | | | | | | |
| diabetes | | | | | | |
| credit-g | | | | | | |
| german | | | | | | |
| splice | | | | | | |
| qsar-bio | | | | | | |
| osi | | | | | | |
| nursery | | | | | | |
| bank-marketing | | | | | | |
| loss/win | | | | | | |
| p-value | | | | | | |
| F-rank | | | | | | |

Table A7.

Cumulative error rate (CER) under missing rate 40% with label delay T = 5. The best results are in bold. • indicates the cases in our method that lose the comparison. * shows the total number of wins and losses for OALN.


| Dataset | OLI2DS | OLD3S | OLD3S-L | OGD | FOBOS | OALN |
|---|---|---|---|---|---|---|
| ckd | | | | | | |
| australian | | | | | | |
| credit-a | | | | | | |
| wbc | | | | | | |
| diabetes | | | | | | |
| credit-g | | | | | | |
| german | | | | | | |
| splice | | | | | | |
| qsar-bio | | | | | | |
| osi | | | | | | |
| nursery | | | | | | |
| bank-marketing | | | | | | |
| loss/win | | | | | | |
| p-value | | | | | | |
| F-rank | | | | | | |

Table A8.

AUC under missing rate 40% with label delay T = 5. The best results are in bold. • indicates the cases in our method that lose the comparison. * shows the total number of wins and losses for OALN.


| Dataset | OLI2DS | OLD3S | OLD3S-L | OGD | FOBOS | OALN |
|---|---|---|---|---|---|---|
| ckd | | | | | | |
| australian | | | | | | |
| credit-a | | | | | | |
| wbc | | | | | | |
| diabetes | | | | | | |
| credit-g | | | | | | |
| german | | | | | | |
| splice | | | | | | |
| qsar-bio | | | | | | |
| osi | | | | | | |
| nursery | | | | | | |
| bank-marketing | | | | | | |
| loss/win | | | | | | |
| p-value | | | | | | |
| F-rank | | | | | | |

Appendix B

At each time step t, a mixed-type instance is first encoded into via a Gaussian Copula. Missing entries are imputed by the conditional expectation under the current copula parameters and then mapped back to the original feature space. OALN maintains two queues: stores all models, and holds models awaiting label feedback. When a delayed label arrives, the corresponding historical model is retrieved to compute the supervised gradient , which is applied via a stochastic proximal update. If no label is available, OALN generates a surrogate gradient from an ensemble of models in , using a hint vector to continue learning. Complexity per iteration includes for copula updates, for encoding and imputation, for supervised updates, and plus for hint generation and queue operations, totaling . Memory usage combines , reducible to with low-rank copula approximations and further optimized by rank-one updates, model pruning, and parallel hint computation.
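For numerical features, the encode, impute, and decode steps above can be sketched as follows. The sketch assumes a zero-mean latent Gaussian with correlation matrix C. Under that assumption, the conditional expectation of the missing coordinates m given the observed coordinates o is C_mo C_oo⁻¹ z_o. The marginal transform z = Φ⁻¹(F(x)) uses a rescaled empirical CDF, and the inverse map uses the empirical quantile. The function names and the rescaling constant are hypothetical. Nominal features would additionally need rounding to a category, which is omitted here.

```python
import numpy as np
from statistics import NormalDist

_STD_NORMAL = NormalDist()

def to_latent(value, history):
    """z = Phi^{-1}(F(x)) with F a rescaled empirical CDF of past values,
    kept strictly inside (0, 1) so the normal quantile is finite."""
    hist = np.asarray(history, dtype=float)
    u = (np.sum(hist <= value) + 0.5) / (hist.size + 1.0)
    return _STD_NORMAL.inv_cdf(float(u))

def impute_latent(z, corr):
    """Fill NaN entries of z with E[z_m | z_o] = C_mo C_oo^{-1} z_o."""
    z = np.asarray(z, dtype=float).copy()
    m = np.isnan(z)
    o = ~m
    if m.any() and o.any():
        c_mo = corr[np.ix_(m, o)]
        c_oo = corr[np.ix_(o, o)]
        z[m] = c_mo @ np.linalg.solve(c_oo, z[o])
    elif m.any():
        z[m] = 0.0                 # nothing observed: use the marginal mean
    return z

def from_latent(z_value, history):
    """Map back to the data scale: the empirical quantile of past values at Phi(z)."""
    return float(np.quantile(np.asarray(history, dtype=float), _STD_NORMAL.cdf(z_value)))

corr = np.array([[1.0, 0.8], [0.8, 1.0]])
z_filled = impute_latent([1.0, np.nan], corr)   # second entry becomes 0.8
```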

References

- Bhatia, K.; Sridharan, K. Online learning with dynamics: A minimax perspective. In Proceedings of the 34th Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 6–12 December 2020; pp. 15020–15030.

- Wu, X.; Zhu, X.; Wu, G.Q.; Ding, W. Data mining with Big Data. IEEE Trans. Knowl. Data Eng. 2014, 26, 97–107.

- Mitra, S.; Gopalan, A. On adaptivity in information-constrained online learning. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 5199–5206.

- Makris, C.; Simos, M.A. OTNEL: A Distributed Online Deep Learning Semantic Annotation Methodology. Big Data Cogn. Comput. 2020, 4, 31.

- Mohandas, R.; Southern, M.; O’Connell, E.; Hayes, M. A Survey of Incremental Deep Learning for Defect Detection in Manufacturing. Big Data Cogn. Comput. 2024, 8, 7.

- Ma, W. Projective quadratic regression for online learning. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 5093–5100.

- Fu, X.; Seo, E.; Clarke, J.; Hutchinson, R.A. Link prediction under imperfect detection: Collaborative filtering for ecological networks. IEEE Trans. Knowl. Data Eng. 2021, 33, 3117–3128.

- Phadke, A.; Kulkarni, M.; Bhawalkar, P.; Bhattad, R. A review of machine learning methodologies for network intrusion detection. In Proceedings of the IEEE 3rd International Conference on Computational Methodologies and Communications, Erode, India, 27–29 March 2019; pp. 272–275.

- Zhang, D.; Jin, M.; Cao, P. ST-MetaDiagnosis: Meta learning with spatial transformer for rare skin disease diagnosis. In Proceedings of the International Conference on Bioinformatics and Biomedicine (BIBM), Seoul, Republic of Korea, 16–19 December 2020; pp. 2153–2160.

- Alkhnibashi, O.S.; Mohammad, R.; Hammoudeh, M. Aspect-Based Sentiment Analysis of Patient Feedback Using Large Language Models. Big Data Cogn. Comput. 2024, 8, 167.

- Millan-Giraldo, M.; Sanchez, J.S. Online Learning Strategies for Classification of Static Data Streams. In Proceedings of the 8th WSEAS International Conference on Multimedia, Internet and Video Technologies/8th WSEAS International Conference on Distance Learning and Web Engineering, Stevens Point, WI, USA, 23 September 2008.

- Yan, H.; Liu, J.; Xiao, J.; Niu, S.; Dong, S.; You, D.; Shen, L. Online learning for data streams with bi-dynamic distributions. Inf. Sci. 2024, 676, 120796.

- Song, Y.; Lu, J.; Lu, H.; Zhang, G. Learning data streams with changing distributions and temporal dependency. IEEE Trans. Neural Netw. Learn. Syst. 2023, 34, 3952–3956.

- You, D.; Xiao, J.; Wang, Y.; Yan, H.; Wu, D.; Chen, Z.; Shen, L.; Wu, X. Online Learning From Incomplete and Imbalanced Data Streams. IEEE Trans. Knowl. Data Eng. 2023, 35, 10650–10665.

- Beyazit, E.; Alagurajah, J.; Wu, X. Online learning from data streams with varying feature spaces. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 3232–3239.

- Qiu, J.; Zhuo, S.; Yu, P.S.; Wang, C.; Huang, S. Online Learning for Noisy Labeled Streams. ACM Trans. Knowl. Discov. Data 2025, 19, 1–29.

- He, Y.; Dong, J.; Hou, B.; Wang, Y.; Wang, F. Online Learning in Variable Feature Spaces with Mixed Data. In Proceedings of the 21st IEEE International Conference on Data Mining (ICDM), Auckland, New Zealand, 7–10 December 2021.

- Zhuo, S.; Wu, D.; He, Y.; Huang, S.; Wu, X. Online Learning from Mix-typed, Drifted, and Incomplete Streaming Features. ACM Trans. Knowl. Discov. Data 2025.

- Meidan, Y.; Bohadana, M.; Mathov, Y.; Mirsky, Y.; Shabtai, A.; Breitenbacher, D.; Elovici, Y. N-Baiot—Network-Based Detection of IoT Botnet Attacks Using Deep Autoencoders. IEEE Pervasive Comput. 2018, 17, 12–22.

- Krawczyk, B.; Minku, L.L.; Gama, J.; Stefanowski, J.; Wozniak, M. Ensemble learning for data stream analysis: A survey. Inf. Fusion 2017, 37, 132–156.

- Wu, X.; Yu, K.; Ding, W.; Wang, H.; Zhu, X. Online feature selection with streaming features. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 1178–1192.

- Zhang, Z.; Qian, Y.; Zhang, Y.; Jiang, Y.; Zhou, Z. Adaptive Learning for Weakly Labeled Streams. In Proceedings of the 28th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Washington, DC, USA, 14–18 August 2022; pp. 2556–2564.

- Zhao, P.; Cai, L.; Zhou, Z. Handling concept drift via model reuse. Mach. Learn. 2020, 109, 533–568.

- Lian, H.; Wu, D.; Hou, B.; Wu, J.; He, Y. Online Learning from Evolving Feature Spaces with Deep Variational Models. IEEE Trans. Knowl. Data Eng. 2023, 36, 4144–4162.

- Chapelle, O. Modeling delayed feedback in display advertising. In Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, New York, NY, USA, 24–27 August 2014; pp. 1097–1105.

- Joulani, P.; György, A.; Szepesvári, C. Online Learning under Delayed Feedback. In Proceedings of the 30th International Conference on Machine Learning (ICML), Atlanta, GA, USA, 16–21 June 2013; pp. 1453–1461.

- Zhuo, S.D.; Qiu, J.J.; Wang, C.D.; Huang, S.Q. Online feature selection with varying feature spaces. IEEE Trans. Knowl. Data Eng. 2024, 36, 4806–4819.

- Weinberger, M.J.; Ordentlich, E. On delayed prediction of individual sequences. IEEE Trans. Inform. Theory 2002, 48, 1959–1976.

- You, D.; Yan, H.; Xiao, J.; Chen, Z.; Wu, D.; Shen, L.; Wu, X. Online Learning for Data Streams With Incomplete Features and Labels. IEEE Trans. Knowl. Data Eng. 2024, 36, 4820–4834.

- Zhou, P.; Zhang, S.; Mu, L.; Yan, Y. Online Learning from Capricious Data Streams via Shared and New Feature Spaces. Appl. Intell. 2024, 54, 9429–9445.

- Qian, Y.Y.; Zhang, Z.Y.; Zhao, P.; Zhou, Z.H. Learning with Asynchronous Labels. ACM Trans. Knowl. Discov. Data 2024, 18, 1–27.

- He, Y.; Wu, B.; Wu, D.; Beyazit, E.; Chen, S.; Wu, X. Toward Mining Capricious Data Streams: A Generative Approach. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 1228–1240.

- Bedi, A.S.; Koppel, A.; Rajawat, K. Asynchronous online learning in multi-agent systems with proximity constraints. IEEE Trans. Signal Inf. Process. Netw. 2019, 5, 479–494.

- Quanrud, K.; Khashabi, D. Online Learning with Adversarial Delays. In Proceedings of the 29th Conference on Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; Volume 28, pp. 1270–1278.

- Wu, D.; Zhuo, S.; Wang, Y.; Chen, Z.; He, Y. Online Semi-Supervised Learning with Mix-Typed Streaming Features. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37, pp. 4720–4728.

- Hoi, S.C.; Sahoo, D.; Lu, J.; Zhao, P. Online learning: A comprehensive survey. Neurocomputing 2021, 459, 249–289.

- Singh, C.; Sharma, A. A review of online supervised learning. Evol. Syst. 2023, 14, 343–364.

- Wang, C.; Sahebi, S. Continuous Personalized Knowledge Tracing: Modeling Long-Term Learning in Online Environments. In Proceedings of the 32nd ACM International Conference on Information and Knowledge Management, Birmingham, UK, 21–25 October 2023; pp. 2616–2625.

- Saito, Y.; Morishita, G.; Yasui, S. Dual Learning Algorithm for Delayed Conversions. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual, China, 25–30 July 2020; pp. 1849–1852.

- Su, Y.; Zhang, L.; Dai, Q.; Zhang, B.; Yan, J.; Wang, D.; Bao, Y.; Xu, S.; He, Y.; Yan, W. An Attention-Based Model for Conversion Rate Prediction with Delayed Feedback via Post-Click Calibration. In Proceedings of the 29th International Joint Conference on Artificial Intelligence (IJCAI), Yokohama, Japan, 7–15 January 2021; pp. 3522–3528.

- Wang, Y.; Zhang, J.; Da, Q.; Zeng, A. Delayed Feedback Modeling for the Entire Space Conversion Rate Prediction. arXiv 2020, arXiv:2011.11826.

- Grzenda, M.; Gomes, H.M.; Bifet, A. Delayed labelling evaluation for data streams. Data Min. Knowl. Discov. 2020, 34, 1237–1266.

- Héliou, A.; Mertikopoulos, P.; Zhou, Z. Gradient-free Online Learning in Continuous Games with Delayed Rewards. In Proceedings of the 37th International Conference on Machine Learning (ICML), Virtual, 13–18 July 2020; pp. 4172–4181.

- Hsieh, Y.; Iutzeler, F.; Malick, J.; Mertikopoulos, P. Multi-Agent Online Optimization with Delays: Asynchronicity, Adaptivity, and Optimism. J. Mach. Learn. Res. 2022, 23, 1–49.

- Zhang, X.; Jia, H.; Su, H.; Wang, W.; Xu, J.; Wen, J. Counterfactual Reward Modification for Streaming Recommendation with Delayed Feedback. In Proceedings of the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR), Virtual, Canada, 11–15 July 2021; pp. 41–50.

- Xu, J. An extended one-versus-rest support vector machine for multi-label classification. Neurocomputing 2011, 74, 3114–3124.

- Sáez, J.A.; Galar, M.; Luengo, J.; Herrera, F. Analyzing the presence of noise in multi-class problems: Alleviating its influence with the one-vs-one decomposition. Knowl. Inf. Syst. 2014, 38, 179–206.

- Zhao, Y.; Udell, M. Missing Value Imputation for Mixed Data via Gaussian Copula. In Proceedings of the 26th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, Virtual, CA, USA, 23–27 August 2020; pp. 636–646.

- Kouhanestani, M.A.; Zamanzade, E.; Goli, S. Statistical Inference on the Cumulative Distribution Function Using Judgment Post Stratification. J. Comput. Appl. Math. 2025, 458, 116340.

- Rakhlin, A.; Sridharan, K. Online Learning with Predictable Sequences. In Proceedings of the 26th Annual Conference on Computational Learning Theory (COLT), Princeton, NJ, USA, 12–14 June 2013; pp. 993–1019.

- Zhou, Z.H. Rehearsal: Learning from Prediction to Decision. Front. Comput. Sci. 2022, 16, 164352.

- Dua, D.; Taniskidou, E.K. UCI Machine Learning Repository. 2017. Available online: http://archive.ics.uci.edu/ml (accessed on 29 June 2025).

- Fawcett, T. An Introduction to ROC Analysis. Pattern Recognit. Lett. 2006, 27, 861–874.

- Hajian-Tilaki, K.O. Receiver Operating Characteristic (ROC) Curve Analysis for Medical Diagnostic Test Evaluation. Casp. J. Intern. Med. 2013, 4, 627–635.

- Lian, H.; Atwood, J.S.; Hou, B.; Wu, J.; He, Y. Online Deep Learning from Doubly-Streaming Data. arXiv 2022, arXiv:2204.11793.

- Zinkevich, M. Online Convex Programming and Generalized Infinitesimal Gradient Ascent. In Proceedings of the 20th International Conference on Machine Learning (ICML), Washington, DC, USA, 21–24 August 2003.

- Singer, Y.; Duchi, J.C. Efficient learning using forward-backward splitting. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 7–10 December 2009.

- Demšar, J. Statistical Comparisons of Classifiers over Multiple Data Sets. J. Mach. Learn. Res. 2006, 7, 1–30.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).