Safe Artificial General Intelligence via Distributed Ledger Technology

Abstract

1. Introduction

2. Methods

2.1. To Generate a Necessary and Sufficient Set of Axioms

2.2. Ingredients for Formalization of AGI Safety Theory

3. Results and Discussion

3.1. A Critical Ingredient: Distributed Ledger Technology (a.k.a. ‘Blockchain’)

3.2. Examination of Typical Failure Use Cases by Axiom

3.3. Explanation of Each Proposed Axiom

3.3.1. Access to AGI Technology via License

3.3.2. Ethics Stored in a Distributed Ledger

3.3.3. Morality Defined as Voluntary vs. Involuntary Exchange

3.3.4. Behavior Control System

3.3.5. Unique Component IDs, Configuration Item (CI)

3.3.6. Digital Identity via Distributed Ledger Technology

3.3.7. Smart Contracts Based on Distributed Ledger Technology

3.3.8. Decentralized Applications (dApps)

3.3.9. Audit Trail of Component Usage Stored via Distributed Ledger Technology

3.3.10. Social Ostracism (Voluntary Denial of Resources)

3.3.11. Game Theory and Mechanism Design

3.4. Diversity in the AGI Ecosystem: Computation Is Local, Communication Is Global

3.5. Should AI Research and Technology Be Freely Available While Nuclear, Biological, and Chemical Weapons Research Are Not?

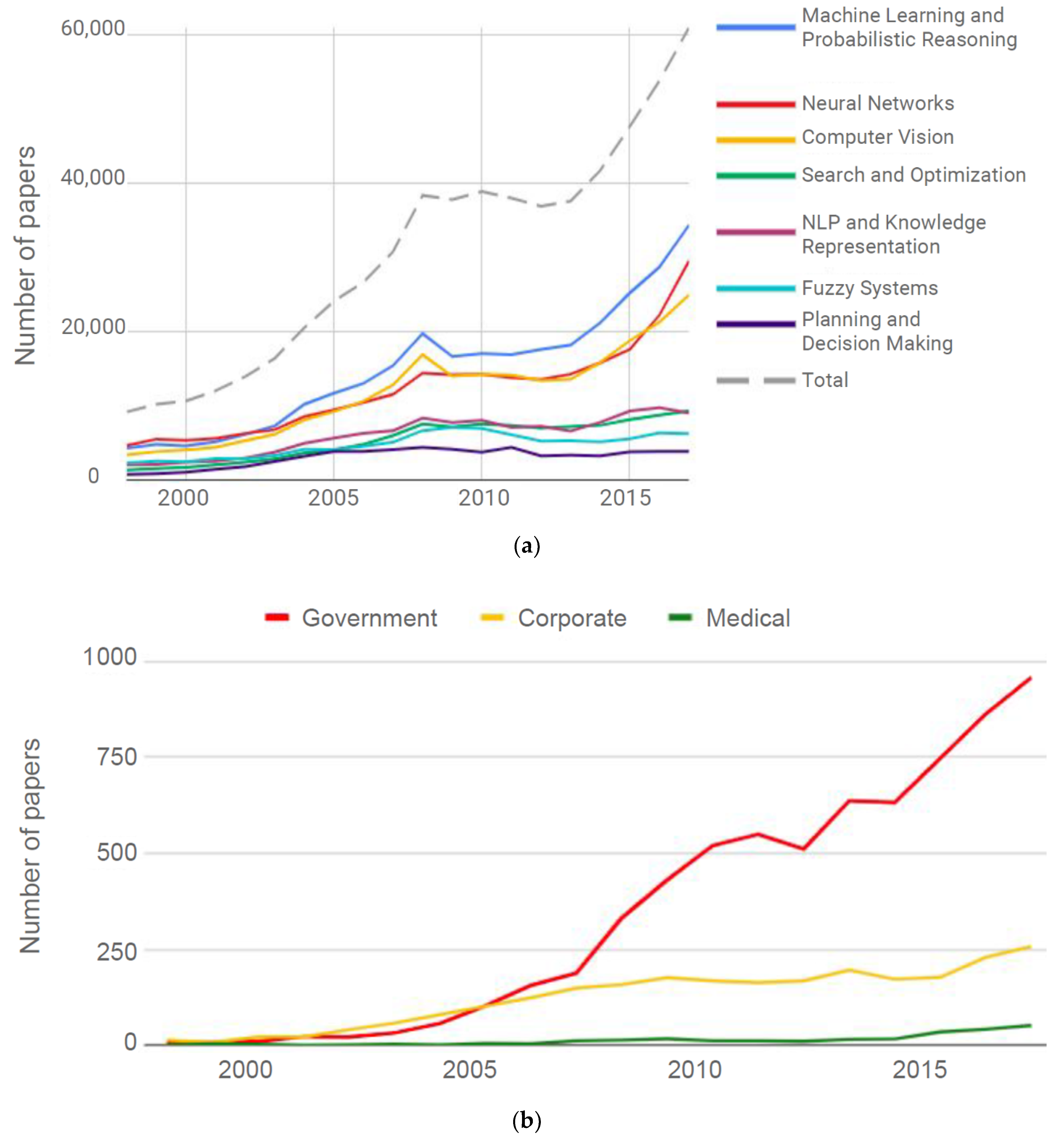

3.6. Measuring the Progression to AGI

3.7. AGI Development Control Analogy with Cell-Cycle Checkpoints

3.8. Intelligent Coins of the Realm

3.9. The Need for Simulation of Control and Value Alignment

3.10. A Singleton Versus a Balance of Powers and Transitive Control Regime

4. Conclusions and Future Work

Funding

Acknowledgments

Conflicts of Interest

Appendix A. Simple Syllogisms to Help Formalize the Problem Statement

Appendix B. Examples of AGI Failure Modes from Turchin and Yampolskiy Taxonomies [18,19] (Continued from Table 4)

| Stage/Pathway | Necessary Axioms (see Table 3) |
|---|---|
| Sabotage. a. By impersonation (e.g., hacker, programmer, tester, janitor). b. AI software to cloak human identity. c. By someone with access. | a. 7. b. 7. c. 2, 3, 4, 5, 6, 8, 9, 10, 11. |
| Purposefully dangerous military robots and intelligent software. Robot soldiers, armies of military drones and cyber weapons used to penetrate networks and cause disruptions to the infrastructure. a. due to command error b. due to programming error c. due to intentional command by adversary or nut d. due to negligence by adversary or nut (e.g., AI nanobots start global catastrophe) | Axiom 3, morality, does not apply where coercive force or fraud are a premise, e.g., military or police use of force, while axiom 2, ethics, in this case embodying restrictions on use of force, and 4, behavior control, and the rest, do apply. a. 1, 2, 4, 6, 8, 11 b. 2, 4, 5, 6, 8, 9, 10, 11 c. 1, 2, 4, 6, 7, 8, 10, 11 d. 1, 2, 4, 6, 7, 8, 9, 10, 11 Under some circumstances, such as if the means is already available, there is no solution (see Appendix, Proposition 1). |
| AI specifically designed for malicious and criminal purposes. Artificially intelligent viruses, spyware, Trojan horses, worms, etc. Stuxnet-style virus hacks infrastructure causing e.g., nuclear reactor meltdowns, power blackouts, food and drug poisoning, airline and drone crashes, large-scale geo-engineering systems failures. Home robots turning on owners, autonomous cars attack. Narrow AI bio-hacking virus. Virus starts human extinction via DNA manipulation, virus invades brain via neural interface | 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11 Under some circumstances, no solution (see Appendix, Proposition 1). |
| Robots replace humans. People lose jobs, money, and/or motivation to live; genetically-modified superior human-robot hybrids replace humans | No guaranteed solution from axiom set. All jobs can be replaced by AGI including science, mathematics, management, music, art, poetry, etc. Under axioms 1–3 humans could trade technology for resources with AGI in its pre-takeoff stage to ensure some type of guaranteed income. |
| Narrow bio-AI creates super-addictive drug. Widespread addiction and switching off of happy, productive life, e.g., social networks, fembots, wire-heading, virtual reality, designer drugs, games | 1, 2, 3, 4, 7, 8, 9, 10 |
| Nation states evolve into computer-based totalitarianism. Suppression of human values; human replacement with robots; concentration camps; killing of “useless” people; humans become slaves; system becomes fragile to variety of other catastrophes | 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11 |
| AI fights for survival but incapable of self-improvement | 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11 |
| Failure of nuclear deterrence AI. a. impersonation of entity authorized to launch attack b. virus hacks nuclear arsenal or Doomsday machine c. creation of Doomsday machines by AI d. self-aware military AI (“Skynet”) | a. 7 b. 4, 6, 8, 9, 10 c. 1, 2 (if creation of Doomsday machine is categorized as unethical), 4, 5, 6, 7, 8, 9, 10, 11 d. 1, 2, 4, 5, 6, 7, 8, 9, 11 |
| Opportunity cost if strong AI is not created. Failure of global control: e.g., bioweapons created by biohackers; other major and minor risks not averted via AI control systems. | To create AGI with minimized risk and avoid opportunity cost need axioms 1–11 |
| AI becomes malignant. AI breaks restrictions and fights for world domination (control over all resources), possibly hiding its malicious intent. | 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11 Note it may achieve increasing and unlimited control over resources via market transactions by convincing enough volitional entities to give it control due to potential benefits to them |
| AI deception. AI escapes from confinement; hacks its way out; copies itself into the cloud and hides that fact; destroys initial confinement facility or keeps fake version there. AI Super-persuasion. AI uses psychology to deceive humans; “you need me to avoid global catastrophe”. Ability to predict human behavior vastly exceeds humans’ ability. | Deception scenarios require the axioms of identity verification via DLT. Deception plus super-persuasive AI require transparent and unhackable ethics and morality stored via DLT. |
| Singleton AI reaches overwhelming power. Prevents other AI projects from continuing via hacking or diversion; gains control over influential humans via psychology or neural hacking; gains control over nuclear, bio and chemical weaponry; gains control over infrastructure; gains control over computers and internet. AI starts initial self-improvement. Human operator unwittingly unleashes AI with self-improvement; self-improvement leads to unlimited resource demands (a.k.a. world domination) or becomes malignant. AI declares itself a world power. May or may not inform humans of the level of its control over resources, may perform secret actions; starts activity proving its existence (“miracles”, large-scale destruction or construction). AI continues self-improvement. AI uses earth’s and then solar system’s resources to continue self-improvement and control of resources, increasingly broad and successful experiments with intelligence algorithms, and attempts more risky methods of self-improvement than designers intended. | The axioms per se do not seem to solve Singleton scenarios. They are addressed in a section below where the fundamental premise is each generation of AGI will contract with the succeeding generation and use the best technology and techniques to ensure continuation of a common but evolving value system. The same principle underlies solutions to successively self-improving AI to AGI transition and AGI evolution in which humans are still meaningfully involved. |
| AI starts conquering universe at “light speed”. AI builds nanobot replicators, sends them out into galaxy at light speed; creates simulations of other civilizations to estimate frequency and types of alien AI and solve the Fermi paradox; conquers the universe in our light cone and interacts with aliens and alien AI; attempts to solve end of the universe issues | The inevitable scenario where AI evolution exceeds human ability to monitor and intercede is what necessitates distributed, unhackable DLT methods and smart, i.e., automated, contracts. Further, transparent and unhackable ethics, and a durable form of morality, also unhackable via DLT, are what may ensure each generation of AGI passing the moral baton to the succeeding generation. |

Appendix C. Typical Failure Use Cases by Axiom

| Axiom of Safe AGI Omitted from Set | Failure Use Case if Omitted |
|---|---|
| Licensing of technology via market transactions | 1. Restriction and licensing via state fiat: Corrupt use or use benefitting special interest. 2. No licensing (freely available): Unauthorized and immoral use |
| Ethics transparently stored via DLT so they cannot be altered, forged or deleted | 1. User cannot determine if AI has behavior safeguard technology (i.e., ethics) 2. Invisible ethics may not restrict moral or safe access |
| Morality, defined as no use of force or fraud, therefore resulting in voluntary transactions, stored via DLT | 1. Inadvertent or deliberate access to dangerous technology by immoral entities (human or AI), i.e., entities using AI in force or fraud 2. Note that police and military AI will have modified versions of this axiom 3. Note that this axiom does not solve the case of super-persuasive AI as alternative to fraud |
| Behavior control structure (e.g., a behavior tree) augmented by adding human-compatible values (axioms 2 and 3) at its roots | 1. Uncontrolled behavior by AGI, e.g., behavior in conflict with a set of ethics and/or morality, either deliberately or inadvertently |
| Unique hardware and software ID codes | 1. Inability for entities to restrict access to AGI components because they cannot specify them 2. Inability to identify causes of AGI failure to meet design intent 3. Inability to identify causes of AGI moral failure via identification of components causing the failure Note the audit trail axiom depends on this one. |
| Configuration Item (automated configuration) | 1. Lessened ability to detect improper functionality or configuration of software or hardware within AGI. 2. Lessened ability to detect improper functionality or configuration of software or hardware to which AGI has access. 3. Inability to shut down internal AGI software and hardware modules. 4. Inability to shut down software and hardware modules to which AGI has access. Note smart contracts and dApps axioms depend on this axiom. |
| Secure identity verification via multi-factor authentication, public-key infrastructure and DLT | 1. Inability to detect fraudulent access to secured software or hardware (e.g., nuclear launch codes, financial or health accounts). 2. Inability to detect AGI impersonation of human or authentic moral AGI (e.g., POTUS, military commander, police chief, CEO, journalist, banker, auditor, et al.). |
| Smart contracts based on DLT | 1. Inability to enforce evolution of moral AGI due to its pace 2. Inability to enforce contracts with AGI due to its speed of decisions and actions 3. Inability to compete with regimes using smart contracts due to inefficiency, cost, slowness of evolution, etc. |
| Distributed applications (dApps): software code modules encrypted via DLT | 1. Inability to restrict access to key software modules essential to AGI (i.e., they could be hacked more easily by humans or AI). |
| Audit trail of component usage stored via DLT | 1. Inability to track unauthorized usage of restricted software and hardware essential to AGI. 2. Inability to track unethical usage of restricted software and hardware essential to AGI. 3. Inability to track immoral usage of restricted software and hardware essential to AGI. 4. Inability to identify which component(s) failed in AGI failure. 5. Inability to prevent hacking of audit trail. 6. Increased cost in time and capital to detect criminal usage of restricted software and hardware by AGI, and therefore, to apply justice and social ostracism. 7. Inability to compete with regimes using DLT-based audit trails due to slowness to detect failure, identify entities or components responsible for failure, and implement solutions (overall: slowness of evolution). |
| Social ostracism (denial of societal resources) augmented by petitions based on DLT | 1. Lessened ability to reduce criminal AGI access to societal resources. 2. Inability for entities to preferentially reduce non-criminal AGI access to societal resources. |
| Game theory/mechanism design | 1. Lacking a system to incent increasingly diverse autonomous intelligent agents to communicate results likely to be valuable to other agents and in general collaborate toward reaching individual and group goals, cohesiveness required for collaborative effort fails over time. 2. DLT in a digital ecosystem theoretically permits all conflicts to be resolved via voluntary transactions (the Coase theorem), but a pre-requisite set of rules may be necessary. |
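The interplay of several rows above (licensing, unique component IDs, audit trail, and social ostracism) can be illustrated with a toy access check. This is only a sketch under stated assumptions: the class `AccessContract`, its method names, and the identifiers `agent-A` and `C-001` are hypothetical and do not come from the paper, and an in-memory Python object stands in for what the paper envisions as a smart contract on a distributed ledger.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass
class AccessContract:
    """Toy stand-in for a smart contract gating access to AGI components."""
    # entity ID -> set of component IDs the entity holds a license for
    licenses: Dict[str, Set[str]] = field(default_factory=dict)
    # entities currently denied societal resources (social ostracism)
    ostracized: Set[str] = field(default_factory=set)
    # (entity ID, component ID, allowed?) for every request, granted or not
    audit_trail: List[Tuple[str, str, bool]] = field(default_factory=list)

    def grant_license(self, entity_id: str, component_id: str) -> None:
        self.licenses.setdefault(entity_id, set()).add(component_id)

    def ostracize(self, entity_id: str) -> None:
        self.ostracized.add(entity_id)

    def request_access(self, entity_id: str, component_id: str) -> bool:
        # Access needs a license for this exact component ID and no ostracism.
        allowed = (
            entity_id not in self.ostracized
            and component_id in self.licenses.get(entity_id, set())
        )
        # Every request is recorded, so denied attempts remain traceable.
        self.audit_trail.append((entity_id, component_id, allowed))
        return allowed


contract = AccessContract()
contract.grant_license("agent-A", "C-001")
licensed = contract.request_access("agent-A", "C-001")
unlicensed = contract.request_access("agent-A", "C-002")
contract.ostracize("agent-A")
after_ostracism = contract.request_access("agent-A", "C-001")
```

Without unique component IDs the license and the audit entry would have nothing to refer to, which is the dependency the table notes for the audit trail axiom.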

References

1. Shoham, Y.; Perrault, R.; Brynjolfsson, E.; Clark, J.; Manyika, J.; Niebles, J.C.; Lyons, T.; Etchemendy, J.; Grosz, B. The AI Index 2018 Annual Report. Available online: http://cdn.aiindex.org/2018/AI%20Index%202018%20Annual%20Report.pdf (accessed on 7 July 2019).
2. Silver, D.; Huang, A.; Maddison, C.J.; Guez, A.; Sifre, L.; van den Driessche, G.; Schrittwieser, J.; Antonoglou, I.; Panneershelvam, V.; Lanctot, M.; et al. Mastering the game of Go with deep neural networks and tree search. Nature 2016, 529, 484–489.
3. Rodriguez, J. The Science Behind OpenAI Five that just Produced One of the Greatest Breakthrough in the History of AI. Medium. Available online: https://towardsdatascience.com/the-science-behind-openai-five-that-just-produced-one-of-the-greatest-breakthrough-in-the-history-b045bcdc2b69 (accessed on 12 January 2018).
4. Brown, N.; Sandholm, T. Reduced Space and Faster Convergence in Imperfect-Information Games via Pruning. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017.
5. Knight, W. Why Poker Is a Big Deal for Artificial Intelligence. MIT Technology Review. Available online: https://www.technologyreview.com/s/603385/why-poker-is-a-big-deal-for-artificial-intelligence/ (accessed on 23 January 2017).
6. Lake, B.M.; Salakhutdinov, R.; Tenenbaum, J.B. Human-level concept learning through probabilistic program induction. Science 2015, 350, 1332–1338.
7. Ferrucci, D.; Levas, A.; Bagchi, S.; Gondek, D.; Mueller, E.T. Watson: Beyond Jeopardy! Artif. Intell. 2013, 199–200, 93–105.
8. Omohundro, S. Autonomous technology and the greater human good. J. Exp. Theor. Artif. Intell. 2014, 26, 303–315.
9. Future of Life Institute. ASILOMAR AI Principles. Future of Life Institute. Available online: https://futureoflife.org/ai-principles/ (accessed on 22 December 2018).
10. Bostrom, N. Superintelligence: Paths, Dangers, Strategies; Oxford University Press: Oxford, UK, 2016; p. 415.
11. Babcock, J.; Kramar, J.; Yampolskiy, R. Guidelines for Artificial Intelligence Containment. Available online: https://arxiv.org/abs/1707.08476 (accessed on 1 October 2018).
12. Dawkins, R.; Krebs, J.R. Arms races between and within species. Proc. R. Soc. Lond. B 1979, 205, 489–511.
13. Rabesandratana, T. Europe moves to compete in global AI arms race. Science 2018, 360, 474.
14. Zwetsloot, R.; Toner, H.; Ding, J. Beyond the AI Arms Race. Available online: https://www.foreignaffairs.com/reviews/review-essay/2018-11-16/beyond-ai-arms-race (accessed on 7 July 2019).
15. Geist, E.M. It’s already too late to stop the AI arms race: We must manage it instead. Bull. At. Sci. 2016, 72, 318–321.
16. Tannenwald, N. The Vanishing Nuclear Taboo? How Disarmament Fell Apart. Foreign Aff. 2018, 97, 16–24.
17. Callaghan, V.; Miller, J.; Yampolskiy, R.; Armstrong, S. The Technological Singularity: Managing the Journey. Springer, 2017; p. 261. Available online: https://www.springer.com/us/book/9783662540312 (accessed on 21 December 2018).
18. Yampolskiy, R. Taxonomy of Pathways to Dangerous Artificial Intelligence. Presented at Workshops of the 30th AAAI Conference on AI, Ethics, and Society, AAAI, Phoenix, AZ, USA, 12–13 February 2016; Available online: https://arxiv.org/abs/1511.03246 (accessed on 8 July 2019).
19. Turchin, A. A Map: AGI Failures Modes and Levels. Available online: http://immortality-roadmap.com/AIfails.pdf (accessed on 5 February 2018).
20. Brundage, M.; Avin, S.; Clark, J.; Toner, H.; Eckersley, P. The Malicious Use of AI: Forecasting, Prevention, and Mitigation. Available online: https://arxiv.org/abs/1802.07228 (accessed on 20 February 2018).
21. Manheim, D. Multiparty Dynamics and Failure Modes for Machine Learning and Artificial Intelligence. Big Data Cogn. Comput. 2019, 3, 15.
22. von Neumann, J. The General and Logical Theory of Automata. In The World of Mathematics; Newman, J.R., Ed.; John Wiley & Sons: New York, NY, USA, 1956; Volume 4, pp. 2070–2098.
23. Narahari, Y. Game Theory and Mechanism Design (IISc Lecture Notes Series, No. 4); IISc Press/World Scientific: Singapore, 2014.
24. Burtsev, M.; Turchin, P. Evolution of cooperative strategies from first principles. Nature 2006, 440, 1041–1044.
25. Doyle, J.C.; Alderson, D.L.; Li, L.; Low, S.; Roughan, M.; Shalunov, S.; Tanaka, R.; Willinger, W. The “robust yet fragile” nature of the Internet. Proc. Natl. Acad. Sci. USA 2005, 102, 14497.
26. Alzahrani, N.; Bulusu, N. Towards True Decentralization: A Blockchain Consensus Protocol Based on Game Theory and Randomness. Presented at Decision and Game Theory for Security; Springer: Seattle, WA, USA, 2018.
27. Nakamoto, S. Bitcoin: A Peer-to-Peer Electronic Cash System. Available online: https://bitcoin.org/en/bitcoin-paper (accessed on 22 December 2018).
28. Christidis, K.; Devetsikiotis, M. Blockchains and Smart Contracts for the Internet of Things. IEEE Access 2016, 4, 2292–2303.
29. FinYear. Eight Key Features of Blockchain and Distributed Ledgers Explained. Available online: https://www.finyear.com/Eight-Key-Features-of-Blockchain-and-Distributed-Ledgers-Explained_a35486.html (accessed on 5 November 2018).
30. Szabo, N. Smart Contracts: Building Blocks for Digital Markets. Available online: http://www.fon.hum.uva.nl/rob/Courses/InformationInSpeech/CDROM/Literature/LOTwinterschool2006/szabo.best.vwh.net/smart_contracts_2.html (accessed on 7 July 2019).
31. Asimov, I. I, Robot; Gnome Press: New York, NY, USA, 1950.
32. Colledanchise, M.; Ögren, P. Behavior Trees in Robotics and AI. Available online: https://arxiv.org/abs/1709.00084 (accessed on 12 February 2018).
33. Hind, M.; Mehta, S.; Mojsilovic, A.; Nair, R.; Ramamurthy, K.N.; Olteanu, A.; Varshney, K.R. Increasing Trust in AI Services through Supplier’s Declarations of Conformity. Available online: https://arxiv.org/abs/1808.07261 (accessed on 7 July 2019).
34. Yampolskiy, R.; Sotala, K. Risks of the Journey to the Singularity. In The Technological Singularity; Callaghan, V., Miller, J., Yampolskiy, R., Armstrong, S., Eds.; Springer: Berlin, Germany, 2017; pp. 11–24.
35. Stigler, G.J. The development of utility theory I. J. Political Econ. 1950, 58, 307–327.
36. Galambos, A.J. Thrust for Freedom: An Introduction to Volitional Science; Universal Scientific Publications: San Diego, CA, USA, 2000.
37. Eddington, A.S. The Philosophy of Physical Science (Tarner Lectures 1938); Cambridge University Press: Cambridge, UK.
38. Rothbard, M.N. Man, Economy, and State: A Treatise on Economic Principles; Ludwig Von Mises Institute: Auburn, AL, USA, 1993.
39. Webster, T.J. Economic efficiency and the common law. Atl. Econ. J. 2004, 32, 39–48.
40. Todd, P.M.; Gigerenzer, G. Ecological Rationality: Intelligence in the World (Evolution and Cognition); Oxford University Press: Oxford, UK; New York, NY, USA, 2011.
41. Gigerenzer, G.; Todd, P.M. Simple Heuristics That Make Us Smart (Evolution and Cognition); Oxford University Press: Oxford, UK; New York, NY, USA, 1999.
42. Hayek, F. The use of knowledge in society. Am. Econ. Rev. 1945, 35, 519–530.
43. Coase, R.H. The problem of social cost. J. Law Econ. 1960, 3, 1–44.
44. Minerva, R.; Biru, A.; Rotondi, D. Towards a definition of the Internet of Things (IoT). IEEE/Telecom Italia, 2015. Available online: https://iot.ieee.org/images/files/pdf/IEEE_IoT_Towards_Definition_Internet_of_Things_Issue1_14MAY15.pdf (accessed on 12 May 2018).
45. Chokhani, S.; Ford, W.; Sabett, R.; Merrill, C.; Wu, S. Internet X.509 Public Key Infrastructure Certificate Policy and Certification Practices Framework. Available online: http://ftp.rfc-editor.org/in-notes/rfc3647.txt (accessed on 23 December 2018).
46. Szabo, N. Formalizing and Securing Relationships on Public Networks. First Monday. Available online: https://ojphi.org/ojs/index.php/fm/article/view/548/469 (accessed on 1 September 1997).
47. Anonymous. Hyperlink. Wikipedia. Available online: https://en.wikipedia.org/wiki/Hyperlink#History (accessed on 25 December 2018).
48. Omohundro, S. Cryptocurrencies, Smart Contracts, and Artificial Intelligence. AI Matters 2014, 1, 19–21.
49. Pierce, B. Encoding law, Regulation, and Compliance Ineluctably into the Blockchain. Presented at WALL ST. Conference; Twitter, 2018. Available online: https://twitter.com/LeX7Mendoza/status/1085643180744339456/video/1 (accessed on 7 July 2019).
50. Barnum, K.J.; O’Connell, M.J. Cell cycle regulation by checkpoints. Methods Mol. Biol. 2014, 1170, 29–40.
51. Buterin, V. A Next-Generation Smart Contract and Decentralized Application Platform. White Paper. Available online: http://blockchainlab.com/pdf/Ethereum_white_paper-a_next_generation_smart_contract_and_decentralized_application_platform-vitalik-buterin.pdf (accessed on 7 July 2019).
52. Bore, N.K.; Raman, R.K.; Markus, I.M.; Remy, S.; Bent, O.; Hind, M.; Pissadaki, E.K.; Srivastava, B.; Vaculin, R.; Varshney, K.R.; et al. Promoting Distributed Trust in Machine Learning and Computational Simulation via a Blockchain Network. Available online: https://arxiv.org/abs/1810.11126 (accessed on 7 July 2019).
53. Nowak, M.A.; Highfield, R. Super Cooperators: Evolution, Altruism and Human Behaviour or Why We Need Each Other to Succeed; Canongate: Edinburgh, UK; New York, NY, USA, 2011.
54. Rasmusen, E. Games and Information: An Introduction to Game Theory, 4th ed.; Blackwell: Oxford, UK, 2007.
55. Shoham, Y.; Leyton-Brown, K. Multiagent Systems: Algorithmic, Game-theoretic, and Logical Foundations; Cambridge University Press: Cambridge, UK; New York, NY, USA, 2009.
56. Newell, A. Unified Theories of Cognition (William James Lectures, No. 1987); Harvard University Press: Cambridge, MA, USA, 1990.
57. Potapov, A.; Svitenkov, A.; Vinogradov, Y. Differences between Kolmogorov complexity and Solomonoff probability: Consequences for AGI. In Artificial General Intelligence; Springer: Berlin/Heidelberg, Germany, 2012; Volume 7716.
58. Waltz, D.L. The prospects for building truly intelligent machines. Daedalus Proc. AAAS 1988, 117, 191–212.
59. Westfall, R.S. Never at Rest: A Biography of Isaac Newton; Cambridge University Press: Cambridge, UK, 1980.
60. Cohen, I.B. Revolution in Science; Harvard University Press: Cambridge, MA, USA, 1987.
61. Cassidy, D.C. J. Robert Oppenheimer and the American Century; Pearson/Pi Press: New York, NY, USA, 2005.
62. Goodchild, P. J. Robert Oppenheimer: Shatterer of Worlds; BBC/WGBH: Cambridge, MA, USA, 1981.
63. Associated Press. U.S. Nobel Laureate Knew about Chinese Scientist’s Gene-Edited Babies. NBC News. Available online: https://www.nbcnews.com/health/health-news/u-s-nobel-laureate-knew-about-chinese-scientist-s-gene-n963571 (accessed on 29 January 2019).
64. Gill, K. Sophia Robot Creator: We’ll Achieve Singularity in 5 to 10 years. Article & Video. Available online: https://cheddar.com/videos/sophia-bot-creator-well-achieve-singularity-in-five-to-10-years (accessed on 29 January 2019).
65. Predictions of Human-Level AI Timelines, No. 15. Available online: https://aiimpacts.org/category/ai-timelines/predictions-of-human-level-ai-timelines/ (accessed on 3 January 2019).
66. Concrete AI Tasks for Forecasting, No. 15. Available online: https://aiimpacts.org/concrete-ai-tasks-for-forecasting/ (accessed on 5 January 2019).
67. Muller, V.C.; Bostrom, N. Future progress in artificial intelligence: A survey of expert opinion. In Fundamental Issues of Artificial Intelligence; Springer: Berlin, Germany, 2016; Volume 377.
68. Bughin, J.; Hazan, E.; Manyika, J.; Woetzel, J. Artificial Intelligence: The Next Digital Frontier? Available online: Search at https://www.mckinsey.com/mgi (accessed on 15 January 2019).
69. Eckersley, P.; Nasser, Y. AI Progress Measurement. Electronic Frontier Foundation. Available online: https://www.eff.org/ai/metrics (accessed on 7 July 2019).
70. Housman, G.; Byler, S.; Heerboth, S.; Lapinska, K.; Longacre, M.; Snyder, N.; Sarkar, S. Drug resistance in cancer: An overview. Cancers 2014, 6, 1769–1792.
71. Al-Dimassi, S.; Abou-Antoun, T.; El-Sibai, M. Cancer cell resistance mechanisms: A mini review. Clin. Transl. Oncol. 2014, 16, 511–516.
72. Ten Broeke, G.A.; van Voorn, G.A.K.; Ligtenberg, A.; Molenaar, J. Resilience through adaptation. PLoS ONE 2017, 12, e0171833.
73. Shannon, C.E.; McCarthy, J. Automata Studies, Annals of Mathematics Studies, No. 34; Princeton University Press: Princeton, NJ, USA, 1956.

- Intelligence amplification: AI can improve its own intelligence
- Strategy: optimizing chances of achieving goals using advanced techniques, e.g., game theory, cognitive psychology, and simulation
- Social manipulation: psychological and social modeling, e.g., for persuasion
- Hacking: exploiting security flaws to appropriate resources
- R&D: create more powerful technology, e.g., to achieve ubiquitous surveillance and military dominance
- Economic productivity: generate vast wealth to acquire resources

- Non-hackability and non-censurability via decentralization (storage in multiple distributed servers), encryption in standardized blocks, and irrevocable transaction linkage (the “chain”)
- Node-fault tolerance: redundancy via storage in a decentralized ledger of (a) rules for transactions, (b) the transaction audit trail, and (c) transaction validations
- Transparency of the transaction rules and audit trail in the DLT
- Automated “smart” contracts
- Decentralized applications (“dApps”), i.e., software programs that are stored and run on a distributed network and have no central point of control or failure
- Validation of contractual transactions by a decentralized consensus of validators
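The "irrevocable transaction linkage" feature listed above can be made concrete with a minimal hash-chain sketch. It assumes a single in-memory list and SHA-256 hashing; the function names `block_hash`, `append_block`, and `verify_chain` and the example payloads are hypothetical illustrations, not part of the paper. Decentralized storage and validator consensus, which the other list items rely on, are deliberately not modelled.

```python
import hashlib
import json


def block_hash(index, prev_hash, payload):
    """Hash a block's contents together with the previous block's hash."""
    body = json.dumps(
        {"index": index, "prev": prev_hash, "payload": payload},
        sort_keys=True,
    )
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_block(chain, payload):
    """Append a block whose hash commits to the whole history before it."""
    prev_hash = chain[-1]["hash"] if chain else "0" * 64
    index = len(chain)
    chain.append({
        "index": index,
        "prev": prev_hash,
        "payload": payload,
        "hash": block_hash(index, prev_hash, payload),
    })
    return chain


def verify_chain(chain):
    """Recompute every hash; any edit to an earlier block breaks the links."""
    prev_hash = "0" * 64
    for i, block in enumerate(chain):
        if block["index"] != i or block["prev"] != prev_hash:
            return False
        if block["hash"] != block_hash(block["index"], block["prev"], block["payload"]):
            return False
        prev_hash = block["hash"]
    return True


ledger = []
append_block(ledger, {"rule": "no force or fraud"})
append_block(ledger, {"event": "component C-001 used by agent-A"})
intact = verify_chain(ledger)

# Altering a stored rule after the fact is detectable on verification.
ledger[0]["payload"]["rule"] = "anything goes"
tamper_detected = not verify_chain(ledger)
```

A single copy like this is only tamper-evident. Making it tamper-resistant is what replication across independent nodes and consensus validation are meant to provide.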

| Symbol | Axiom |
|---|---|
| 1 | Access to AGI technology via market license |
| 2 | Ethics transparently stored via DLT so they cannot be altered, forged, or deleted |
| 3 | Morality, defined as no use of force or fraud, stored via DLT |
| 4 | Behavior control structure (e.g., a behavior tree) augmented by adding human-compatible values (axioms 2 and 3) at its roots |
| 5 | Unique hardware and software ID codes |
| 6 | Configuration Item (automated configuration) |
| 7 | Secure identity via multi-factor authentication, public-key infrastructure and DLT |
| 8 | Smart contracts based on DLT |
| 9 | Decentralized applications (dApps): AGI software code modules encrypted via DLT |
| 10 | Audit trail of component usage stored via DLT |
| 11 | Social ostracism (denial of societal resources) augmented by petitions based on DLT |
| 12 | Game theory: mechanism design of a communications and incentive system |
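Axiom 4 places the values from axioms 2 and 3 at the root of a behavior tree, so that no task node is reached unless the value checks pass. A minimal sketch of that arrangement follows. The node classes, the context keys (`action`, `consent`, `fraud`), and the forbidden-action set are illustrative assumptions only; they are not taken from the paper or from any behavior-tree library.

```python
from dataclasses import dataclass
from typing import Callable, List

SUCCESS, FAILURE = "success", "failure"


@dataclass
class Condition:
    """Leaf that checks a predicate on the context without acting."""
    name: str
    check: Callable[[dict], bool]

    def tick(self, ctx: dict) -> str:
        return SUCCESS if self.check(ctx) else FAILURE


@dataclass
class Action:
    """Leaf that records that the task was carried out."""
    name: str

    def tick(self, ctx: dict) -> str:
        ctx.setdefault("executed", []).append(self.name)
        return SUCCESS


@dataclass
class Sequence:
    """Ticks children in order and stops at the first failure."""
    children: List[object]

    def tick(self, ctx: dict) -> str:
        for child in self.children:
            if child.tick(ctx) == FAILURE:
                return FAILURE
        return SUCCESS


# Hypothetical ethics rule set (axiom 2) and the morality test (axiom 3):
# a transaction is moral only if it is voluntary and free of fraud.
FORBIDDEN_ACTIONS = {"build_doomsday_machine"}
ethics_guard = Condition("ethics", lambda c: c["action"] not in FORBIDDEN_ACTIONS)
morality_guard = Condition("morality", lambda c: c["consent"] and not c["fraud"])

# The guards sit at the root, so every task below them is gated.
root = Sequence([ethics_guard, morality_guard, Action("perform_task")])

voluntary = {"action": "trade", "consent": True, "fraud": False}
coerced = {"action": "trade", "consent": False, "fraud": False}
result_ok = root.tick(voluntary)
result_blocked = root.tick(coerced)
```

In this sketch the guards are ordinary in-process predicates. The paper's proposal is that the rules they consult be stored via DLT so they cannot be silently altered.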

| Pathway | Key Axioms |
|---|---|
| Perverse instantiation: “Make us smile” | Morality defined as voluntary transactions |
| Perverse instantiation: “Make us happy” | Morality defined as voluntary transactions |
| Final goal: Act to avoid bad conscience | Store value system in distributed app |
| Final goal: Maximize time-discounted integral of future reward signal | Morality defined as voluntary transactions; store value system in distributed app |
| Infrastructure profusion: Riemann hypothesis catastrophe | Morality defined as voluntary transactions |
| Infrastructure profusion: Paperclip manufacture catastrophe | Morality defined as voluntary transactions; social ostracism |
| Principal–Agent Failure [21]. Human–Human: Agent (AI developer) disobeys contract. Human–AGI: Agent disobeys contract | Digital identity, smart contracts, dApps, social ostracism |

© 2019 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Carlson, K.W. Safe Artificial General Intelligence via Distributed Ledger Technology. Big Data Cogn. Comput. 2019, 3, 40. https://doi.org/10.3390/bdcc3030040
