F-Formations for Social Interaction in Simulation Using Virtual Agents and Mobile Robotic Telepresence Systems

Abstract

1. Introduction

- R1: When joining social interactions, do teleoperators adhere to (i.e., respect) F-formations?

- R2: Would teleoperators prefer an autonomous feature for joining groups?

2. Background and Related Works

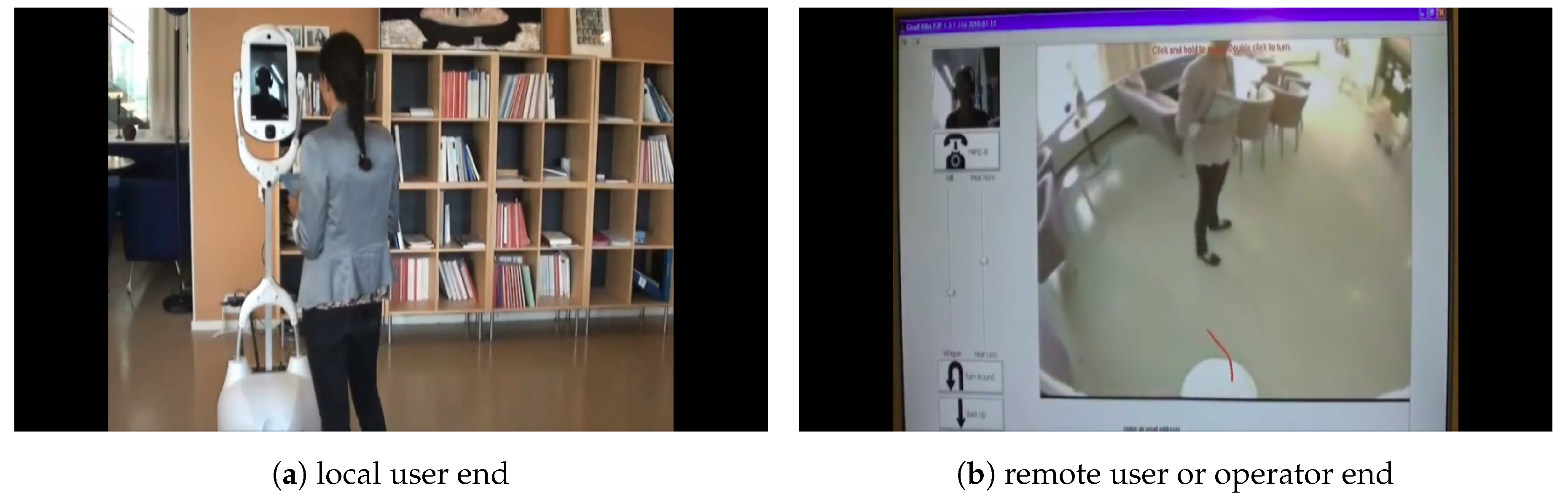

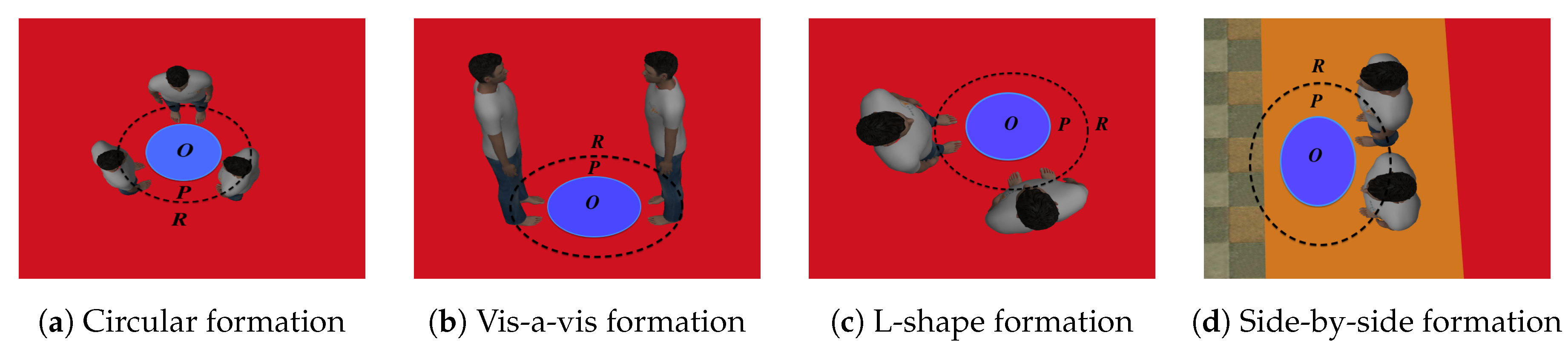

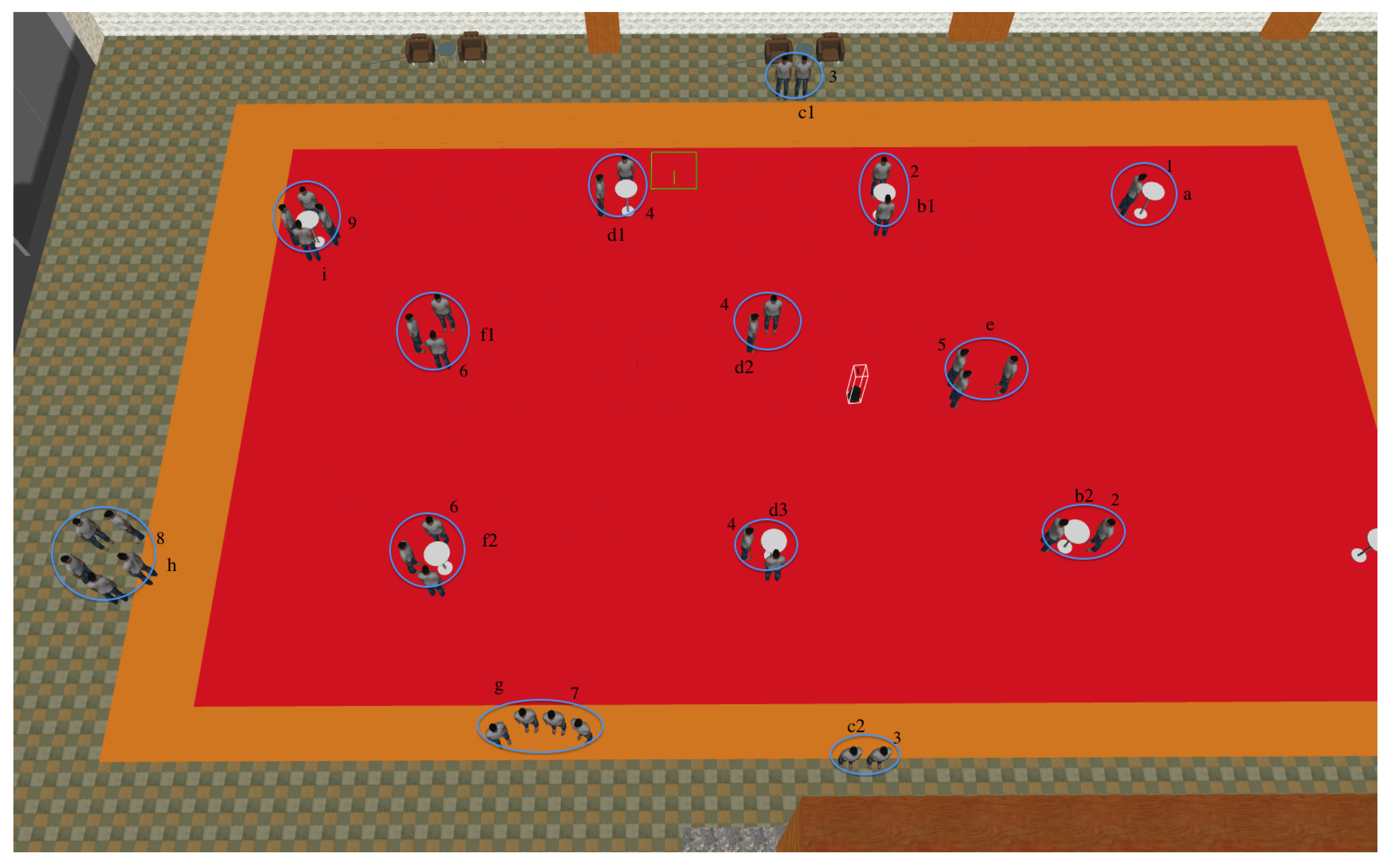

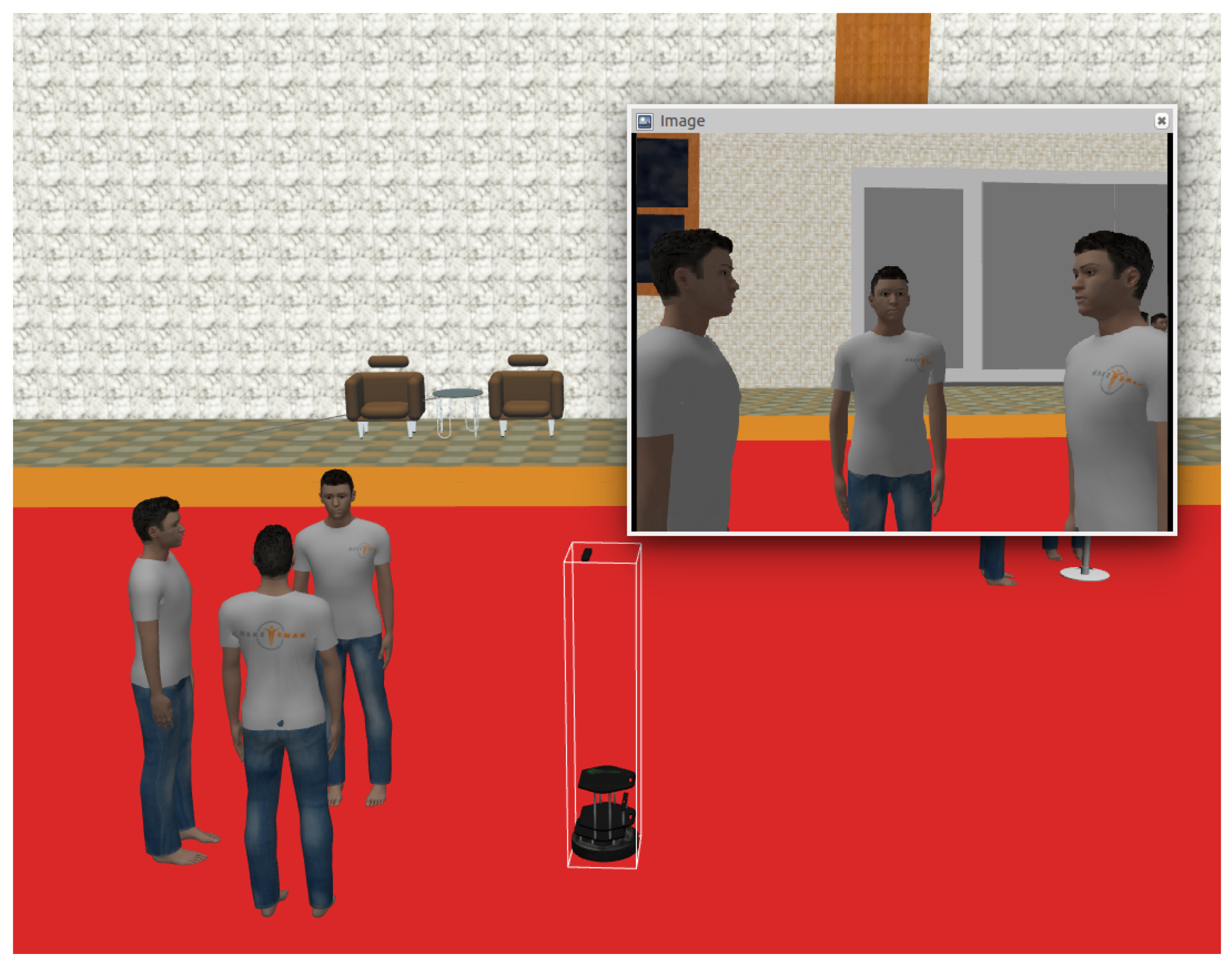

3. Simulation Environment for MRP Systems

- A single virtual agent (VA) was standing in front of a round table.

- Two pairs of VAs were conversing in vis-a-vis formations, each with a table between them.

- Two pairs of VAs were conversing in side-by-side formations without tables: one pair stood in front of the wall, looking at the pallet wall, and the other stood in front of a pair of sofas, facing the whole hall and looking at the surrounding VAs.

- Three pairs of VAs were conversing in L-shape formations with different configurations: two pairs around a table and one pair without a table.

- Three VAs were conversing in a triangular formation without a table.

- Two groups of three VAs were conversing in circular formations: one group around a table and the other without a table.

- Four VAs were conversing in a semi-circular formation without a table, facing the textured wall.

- Five VAs were standing in a circular formation without a table.

- Four VAs were standing around a table in a circular formation.
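The scene list above can be summarized as a small data structure, which makes it easy to check, for instance, how many stops a teleoperator has to make. The sketch below is a hypothetical encoding, not part of the simulation environment described here: the `GroupSpec` class, its field names, and the formation labels are illustrative assumptions, and treating the singleton as standing at its table is our reading of the first item.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GroupSpec:
    """One singleton or conversational group of VAs in the simulated lobby (hypothetical encoding)."""
    formation: str   # illustrative label, e.g. "vis-a-vis", "side-by-side", "L-shape"
    size: int        # number of virtual agents (VAs) in the group
    with_table: bool # whether the group stands at/around a table


# Hypothetical encoding of the scene list; labels are illustrative, not taken from the simulator.
SCENE = (
    [GroupSpec("singleton", 1, True)]              # assumed: VA stands at the round table
    + [GroupSpec("vis-a-vis", 2, True)] * 2
    + [GroupSpec("side-by-side", 2, False)] * 2
    + [GroupSpec("L-shape", 2, True)] * 2
    + [GroupSpec("L-shape", 2, False)]
    + [GroupSpec("triangular", 3, False)]
    + [GroupSpec("circular", 3, True), GroupSpec("circular", 3, False)]
    + [GroupSpec("semi-circular", 4, False)]
    + [GroupSpec("circular", 5, False)]
    + [GroupSpec("circular", 4, True)]
)


def summarize(scene):
    """Return (number of singletons/groups to visit, total number of VAs)."""
    return len(scene), sum(g.size for g in scene)


print(summarize(SCENE))  # (14, 37)
```

Under this reading, the lobby contains 14 singletons or groups with 37 VAs in total, i.e., 14 interviews if every group is visited once.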

4. Procedure

- Information to subjects: The researcher started the experiment by explaining the process (cover story) to the subject as follows: “This is a conference lobby and you are a staff member from the conference. You are supposed to interview all the people or groups in the lobby.” Then, after showing the egocentric view window, the researcher said, “This is the view from the robot’s camera, which is used by you to observe the scene for the rest of the experiment, and you will be able to operate the robot from this controller. You have to interview all the people or groups in the lobby, and when you are done interviewing one of them, inform or notify me. After my consent, you could proceed to the next person or group and then interview them. In this case, interviewing means you place the robot in a spot that you think is suitable and let me know. We continue this process until you have visited all the people or groups.”

- Socio-demographic questionnaire: The subject filled out the consent form and the socio-demographic questionnaire (Appendix A).

- Teleoperation practice: The subject practiced teleoperating the robot in the simulated environment without any VAs (see Figure 4).

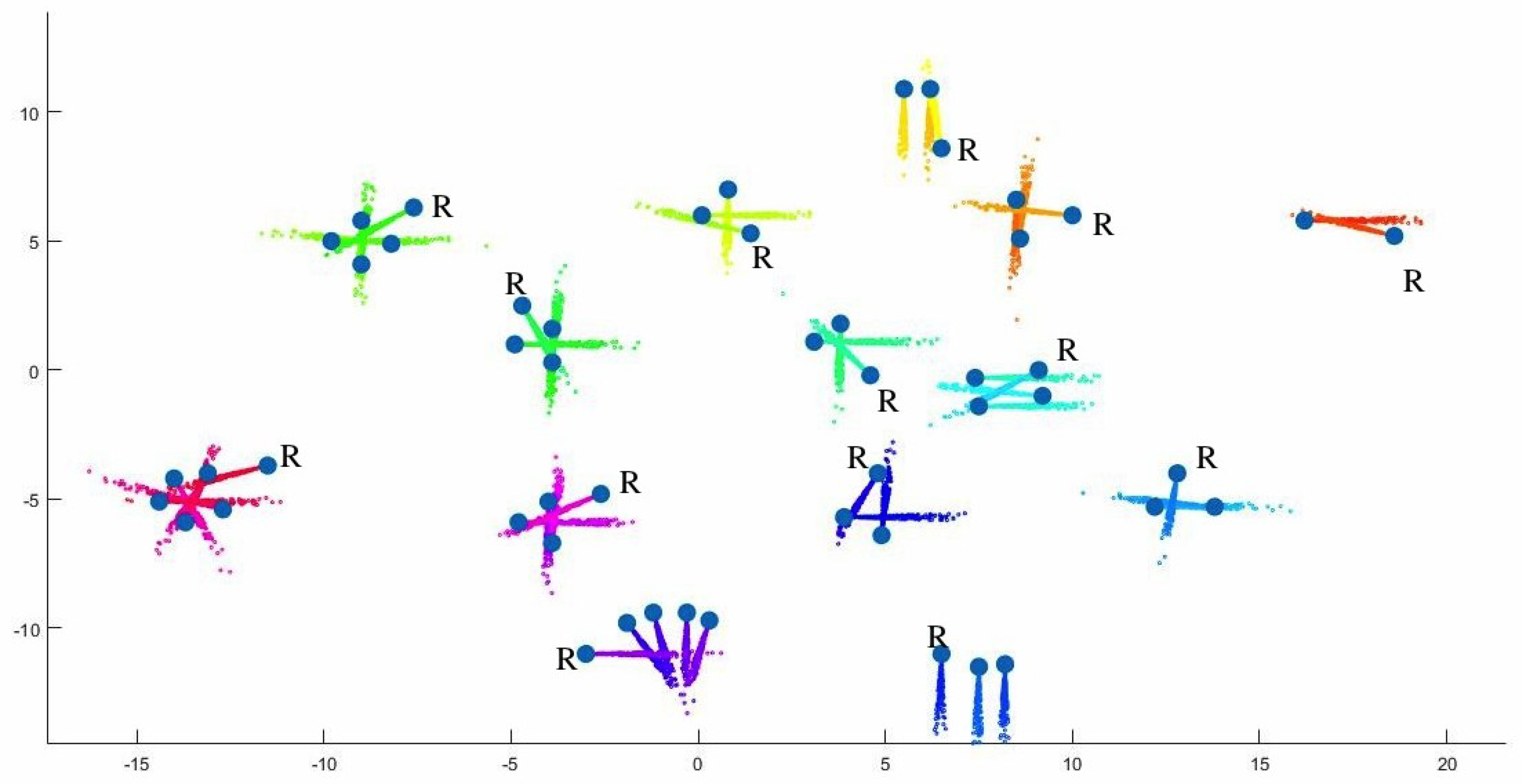

- Teleoperation interaction: The subject teleoperated the robot and interviewed the VAs shown in Figure 5. Interviewing consisted of joining the groups: the subject placed the robot wherever he/she found convenient, informed the researcher once the robot was in place, and continued until he/she judged that all people or groups in the scenario had been visited.

- Questions after the experiment: The recording of the session from the exocentric view was shown to the subject, who then filled out the questionnaire “Questions after the Experiment” (Appendix B).

4.1. Data Collection

- Socio-demographic questionnaire;

- Video recordings of the exocentric and egocentric views in the simulated environment;

- Rosbag recordings, used to measure the length of the path driven by the robot;

- The questionnaire “Questions after the Experiment” (Appendix B).
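Computing the path length from the recorded data amounts to summing the distances between consecutive robot positions. The sketch below assumes the planar (x, y) positions have already been read out of the rosbag (for example, from an odometry topic); the topic, the extraction step, and the `path_length` helper are assumptions, not details given in this paper.

```python
import math
from typing import Iterable, Optional, Tuple


def path_length(positions: Iterable[Tuple[float, float]]) -> float:
    """Hypothetical helper: sum of Euclidean distances between consecutive (x, y) positions.

    Assumes positions are already extracted from the rosbag, in time order, in metres.
    """
    total = 0.0
    prev: Optional[Tuple[float, float]] = None
    for x, y in positions:
        if prev is not None:
            # Straight-line distance covered since the previous sample.
            total += math.hypot(x - prev[0], y - prev[1])
        prev = (x, y)
    return total


# Example: a 3-4-5 step followed by a straight 4 m segment.
print(path_length([(0.0, 0.0), (3.0, 4.0), (3.0, 8.0)]))  # 9.0
```

Because odometry can jitter while the robot is stationary, a small minimum-step threshold may be worth applying before summing; the sketch omits it for clarity.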

4.2. Method and Metrics to Evaluate our Experiment

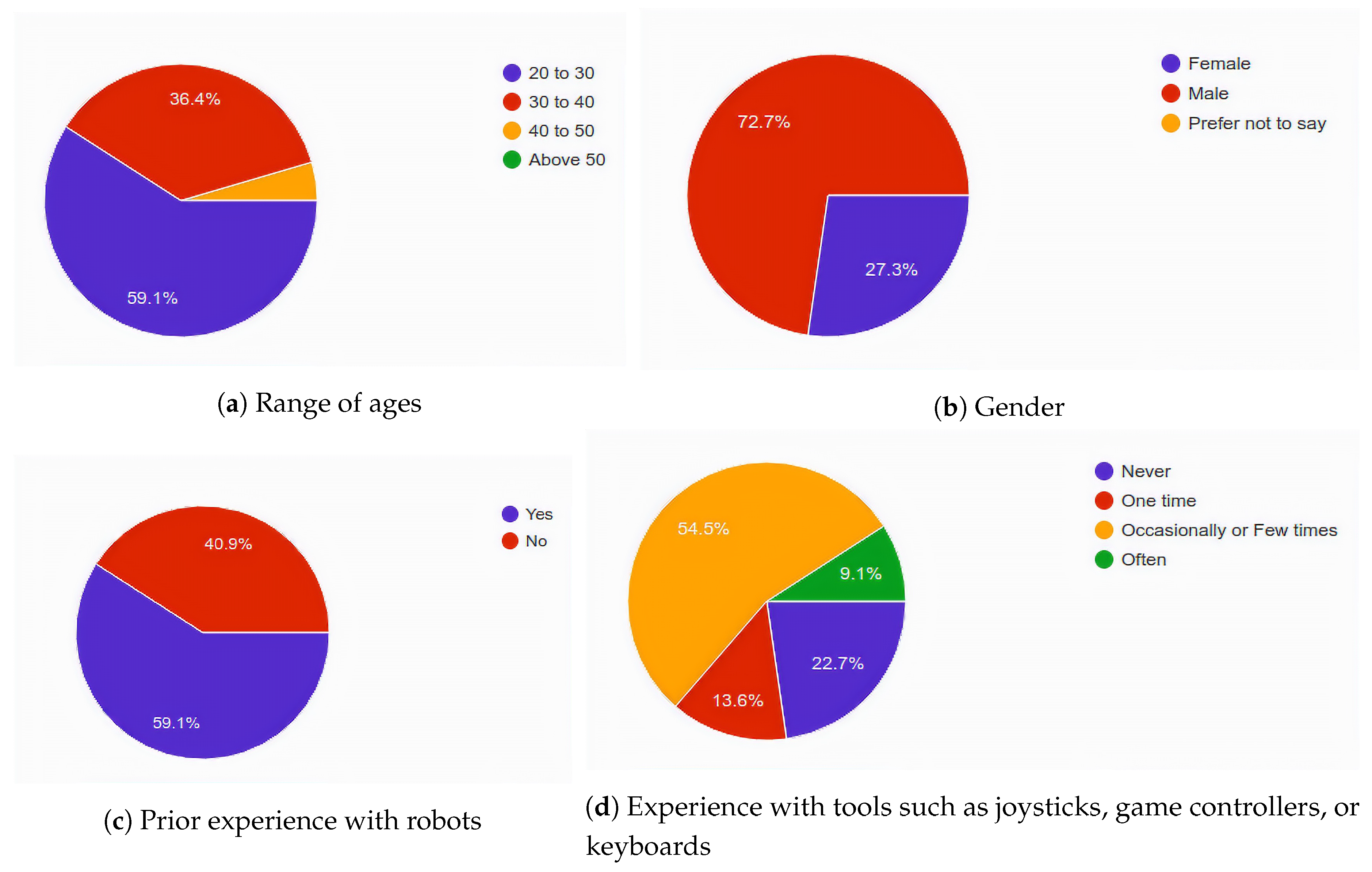

4.3. Subjects

5. Results and Discussions

- Modified formations after the placement of the robot in the groups;

- F-formations while joining the social group interactions;

- Autonomous Feature.

5.1. Modified Formations after the Placement of the Robot in the Groups

- When a single VA was standing alone, the teleoperator joined it, forming a dyadic interaction in a vis-a-vis formation; only one subject joined in an L-shape formation instead.

- When two VAs were standing in the vis-a-vis formation and the teleoperator joined them, the formation became circular.

- When two VAs were standing in the L-shape formation and the teleoperator joined them, the formation became circular.

- When two VAs were standing in the side-by-side formation in front of the wall, the teleoperator joined them from the side, and the interaction remained a side-by-side formation. This happened often, but in 4 cases the teleoperator placed the robot in front of them, transforming the formation into a circular one.

- When two VAs were standing in the side-by-side formation facing the open space, the teleoperator joined them from the front, and the formation became triangular.

- When three VAs were standing in the triangular formation and the teleoperator joined them, the formation became circular.

- When three VAs were standing in the circular formation and the teleoperator joined them, the formation remained circular.

- When four VAs were standing in the circular formation with no free spot left, all teleoperators placed the robot between two VAs, and the formation remained circular.

- When four VAs were standing in the semi-circular formation in front of the wall, the teleoperator approached them from either side, and the formation remained semi-circular. This happened often, but in 2 cases the teleoperator placed the robot in front of them, transforming the formation into a circular one.

- When five VAs were standing in the circular formation and the teleoperator joined them, the formation remained circular.
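The observations above can be encoded as a small lookup table, which makes the pattern easy to query, for example, which groups kept their original formation type once the robot joined. This is a hypothetical sketch, not the analysis code used in this study: the `Join` record and its field names are illustrative, "after" is the usual outcome described in the list, and the exception counts are exactly those stated above.

```python
from typing import NamedTuple


class Join(NamedTuple):
    """Hypothetical record of one joining situation from Section 5.1."""
    before: str       # formation of the VAs before the robot joined
    n_vas: int        # number of VAs in the group
    after: str        # usual formation after the robot joined
    exceptions: dict  # alternative outcome -> number of cases, as reported


JOINS = [
    Join("singleton", 1, "vis-a-vis", {"L-shape": 1}),
    Join("vis-a-vis", 2, "circular", {}),
    Join("L-shape", 2, "circular", {}),
    Join("side-by-side", 2, "side-by-side", {"circular": 4}),  # pair facing the wall
    Join("side-by-side", 2, "triangular", {}),                # pair facing the open space
    Join("triangular", 3, "circular", {}),
    Join("circular", 3, "circular", {}),
    Join("circular", 4, "circular", {}),                      # no free spot left
    Join("semi-circular", 4, "semi-circular", {"circular": 2}),
    Join("circular", 5, "circular", {}),
]


def preserved(joins):
    """Situations whose usual outcome kept the original formation type."""
    return [j for j in joins if j.before == j.after]


for j in preserved(JOINS):
    print(j.before, j.n_vas)
```

Keeping the exceptions next to the usual outcome, rather than in a separate table, keeps each reported case in one record.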

5.2. F-Formations when Joining the Social Group Interactions

5.3. Autonomous Feature

6. Conclusions and Future Works

Author Contributions

Funding

Conflicts of Interest

Appendix A. Socio-Demographic Questionnaire

- What is your age? [20–30, 30–40, 40–50, Above 50]

- What is your gender? [Female, Male, Prefer not to say]

- What is your research area in robotics? [Interaction, Mechanics, Cognition, Artificial Intelligence, Perception, Control, Other (with blank space)]

- Do you have any prior experience with robots? [Yes, No]

- Did you ever operate a telepresence robot? [Never, One time, Occasionally or a few times, Often]

- Did you ever use tools such as a joystick, game controller, or keyboard to drive a robot? [Never, One time, Occasionally or a few times, Often]

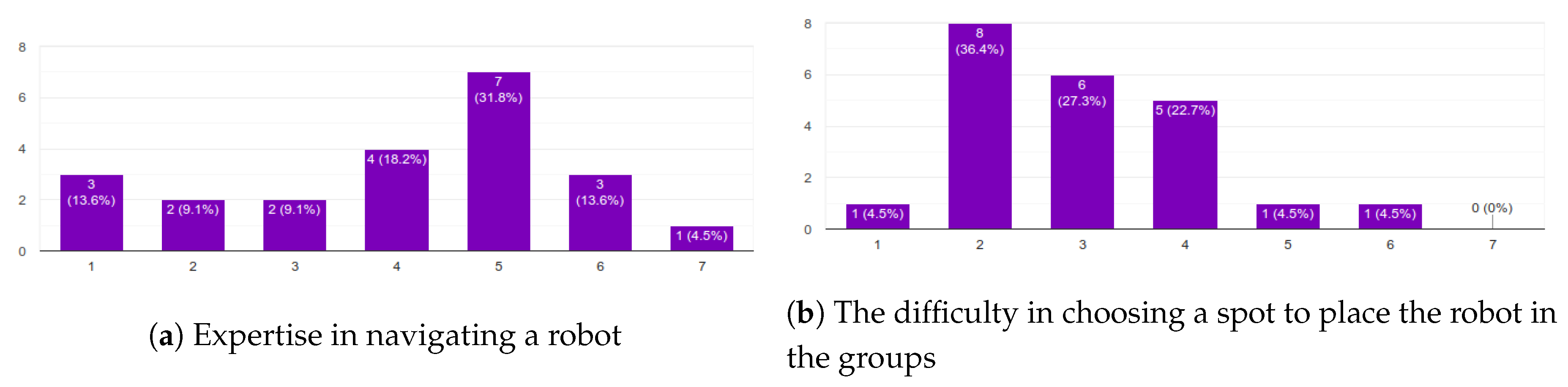

- On a scale of 1–7, how do you rate your technical expertise to navigate a robot? [1 = low, 7 = high]

Appendix B. Questions after the Experiment

- What do you think of the scene? [Bad, Ok, Good, Better, Best]

- Did you see any flaws in the scene? [Yes, No, Not sure]

- If yes, are they important to this particular experiment? [Important, Can be ignored, Will not be noticed by the user]

- If important, mention the flaws. [blank space]

- Why did you place the robot in that particular position? [I thought it was an ideal position to be placed; So that I could see the face/s of the person/s; So that I could see the shoulders of the person/s; So that I could see the upper part of the body; Other (with blank space)]

- How hard was it to select the spot? [Likert scale: 0 = low, 7 = high]

- How hard was it to drive the robot? [Likert scale: 0 = low, 7 = high]

- Would you prefer to have a button to place the robot in groups or would you prefer to do it yourself? [Prefer a button, Prefer to place myself, Other (with blank space)]

- If “other”, why? [blank space]

- Would you prefer an autonomous feature that navigates around and places the robot in the group? [Yes, No, Other (with blank space)]

- After seeing the global view, would you change your spot if given a chance? [Yes, No, Maybe]

- If yes, why? [blank space]

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Pathi, S.K.; Kristoffersson, A.; Kiselev, A.; Loutfi, A. F-Formations for Social Interaction in Simulation Using Virtual Agents and Mobile Robotic Telepresence Systems. Multimodal Technol. Interact. 2019, 3, 69. https://doi.org/10.3390/mti3040069
