Using Sensory Wearable Devices to Navigate the City: Effectiveness and User Experience in Older Pedestrians

Abstract

1. Introduction

2. Related Work

2.1. Sensory Navigation Instructions and Wearable Devices

2.2. User Experience with Navigation Aids

3. Materials and Methods

3.1. Implementation of the Navigation Aids

3.1.1. Wearable Devices Used

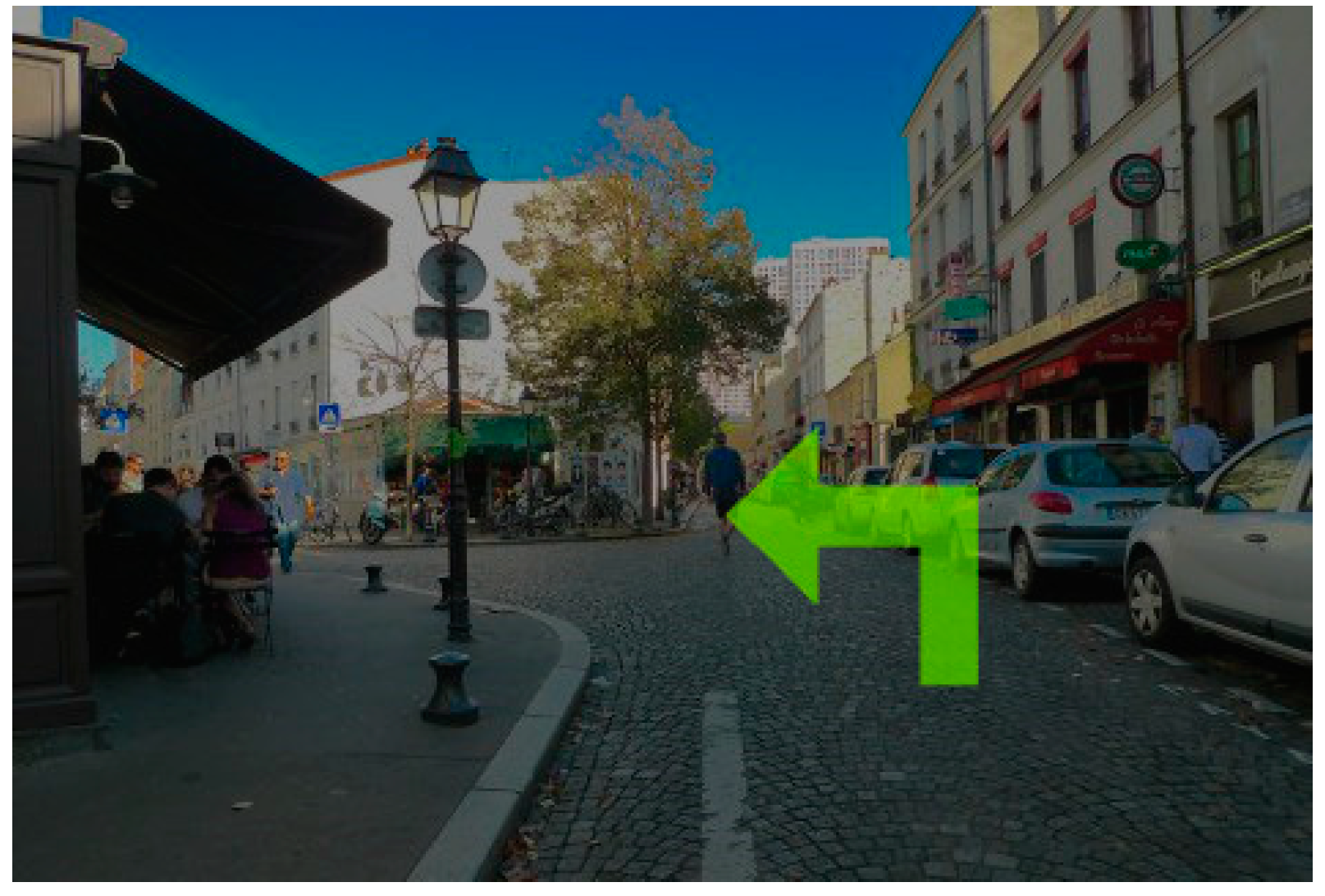

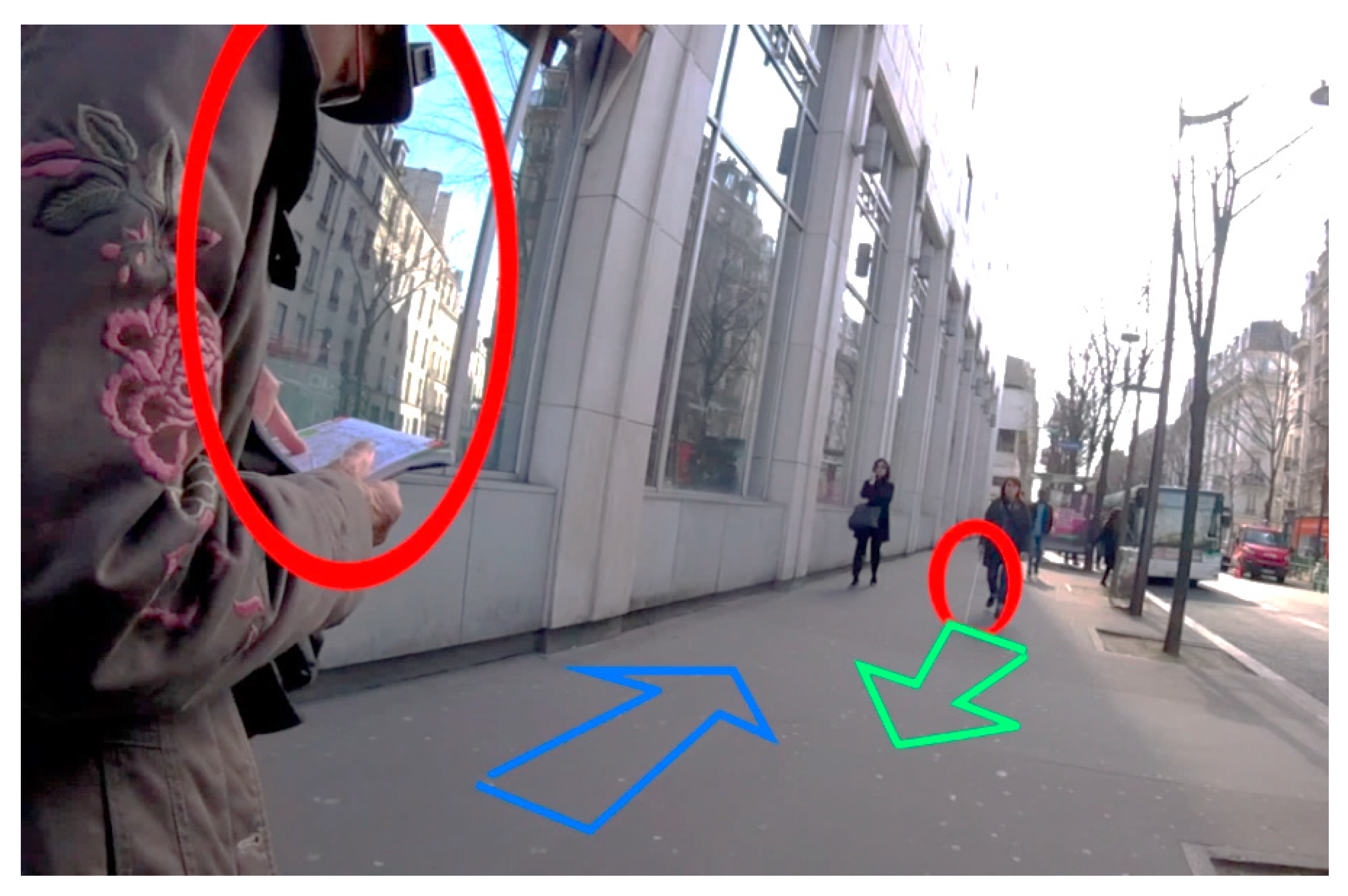

- The Optinvent ORA-2 AR glasses, aiming to provide participants with arrows overlaid on their field of view (Figure 1); they were a high-quality device by the standards of the technology available at the time (cost: €800).

- The Sainsonic BM-7 bone conduction headphones, aiming to provide participants with spatialized sounds (Figure 2).

- The Huawei Watch Active smartwatch, aiming to provide participants with a warning vibration and an arrow displayed on the screen (Figure 3).

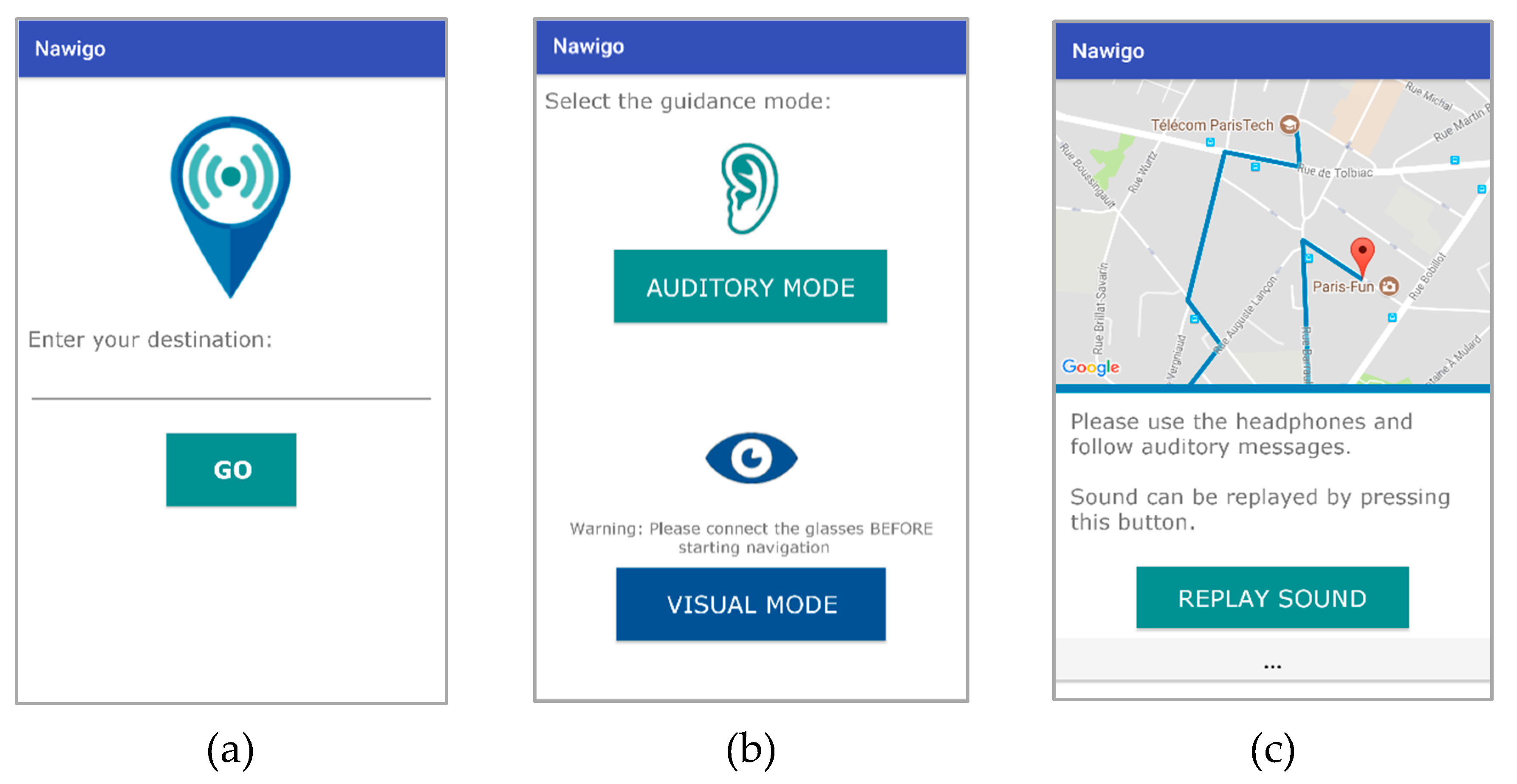

3.1.2. Application Design

3.1.3. Instructions Used

3.2. Methodology for Data Collection

3.2.1. Population

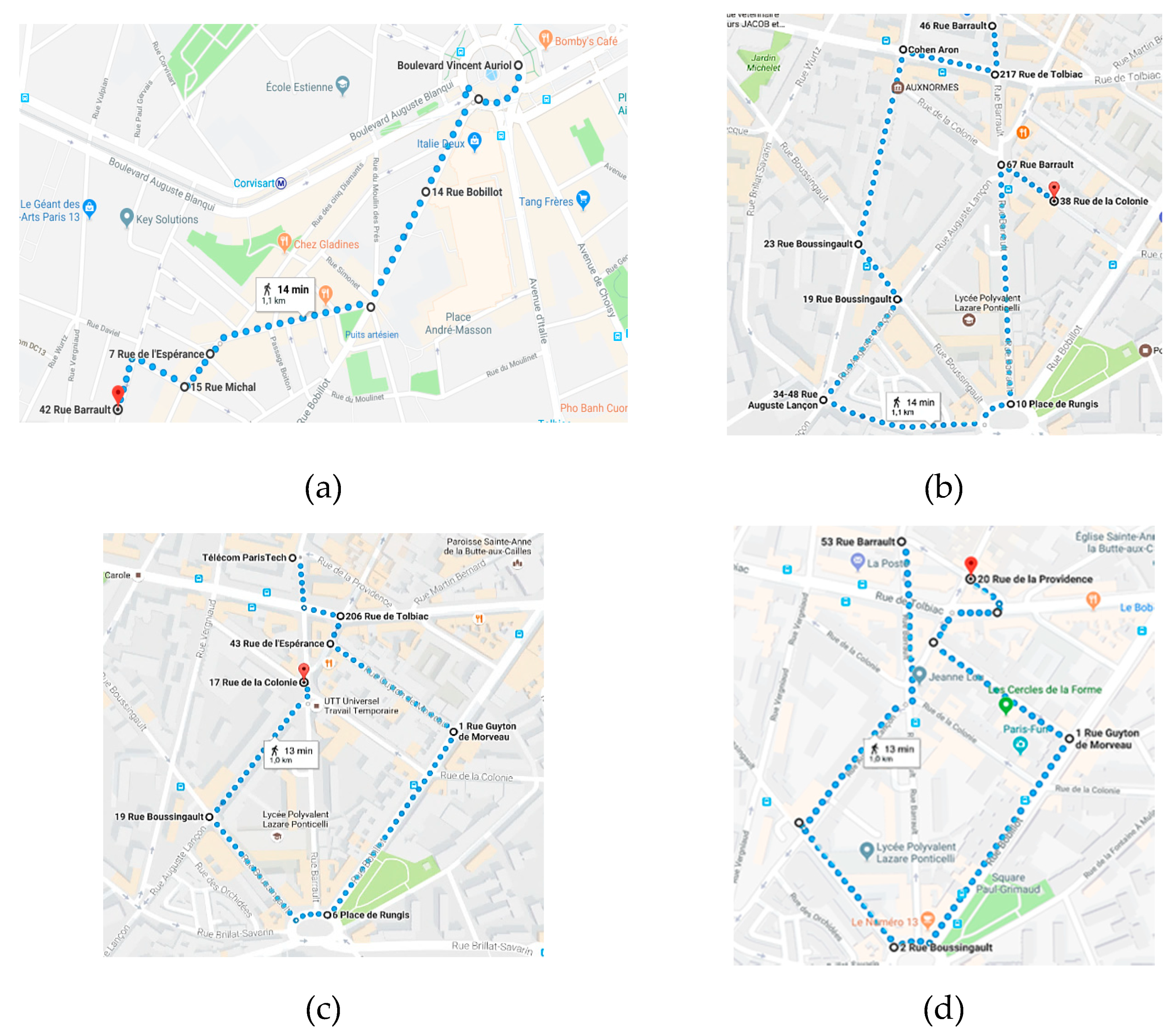

3.2.2. Navigation Task

3.2.3. Explicitation Interview

3.3. Methodology for Data Analysis

3.3.1. Efficiency Analysis

3.3.2. UX Analysis

3.3.3. Risky Inattention Analysis

4. Results

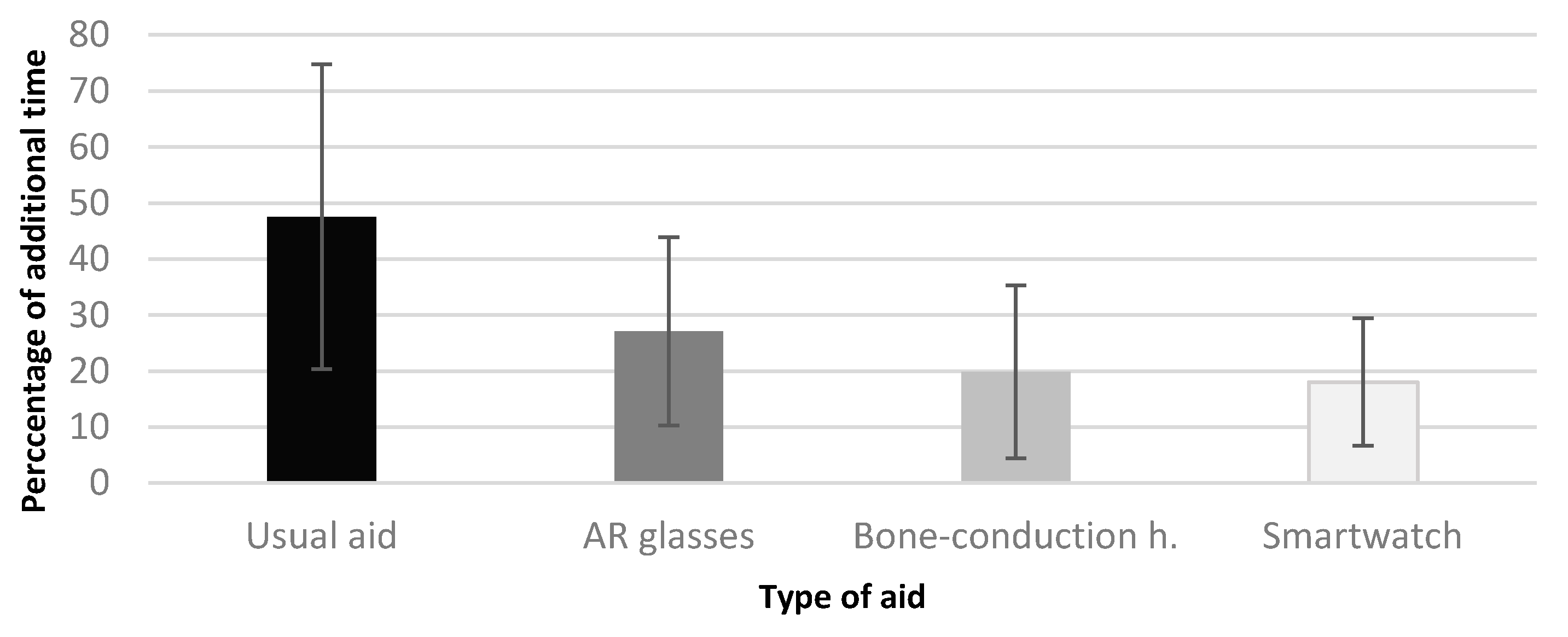

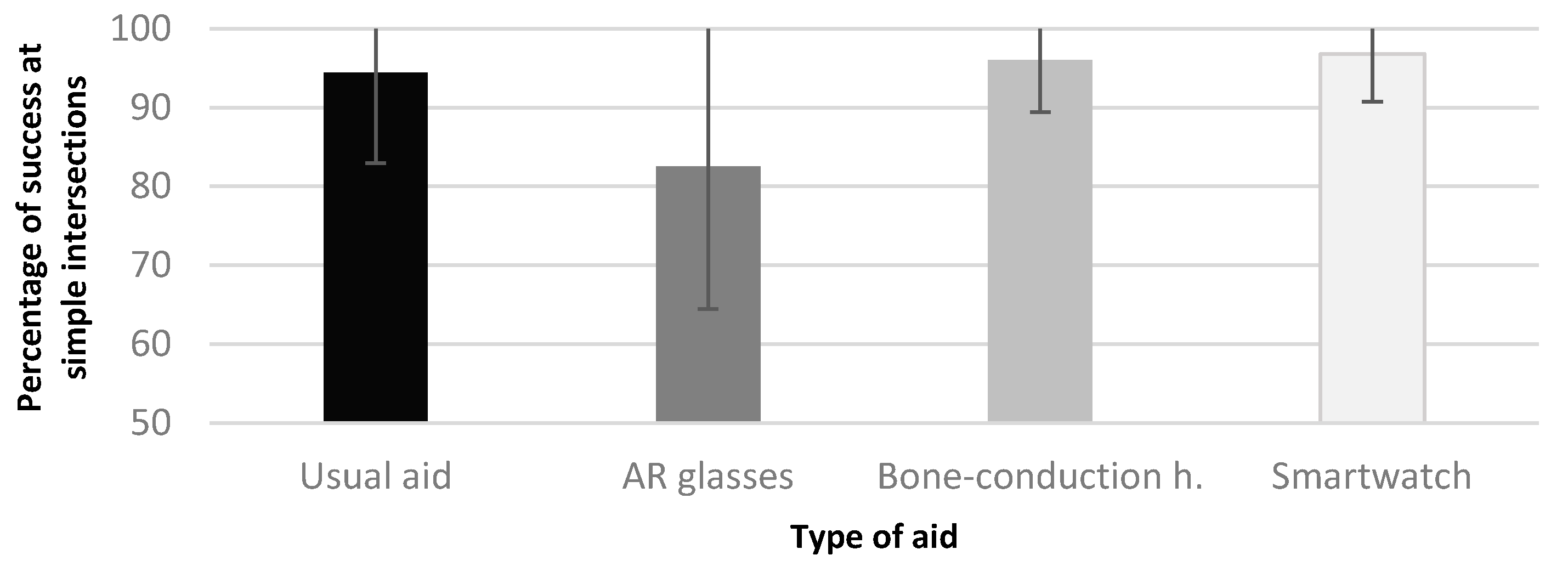

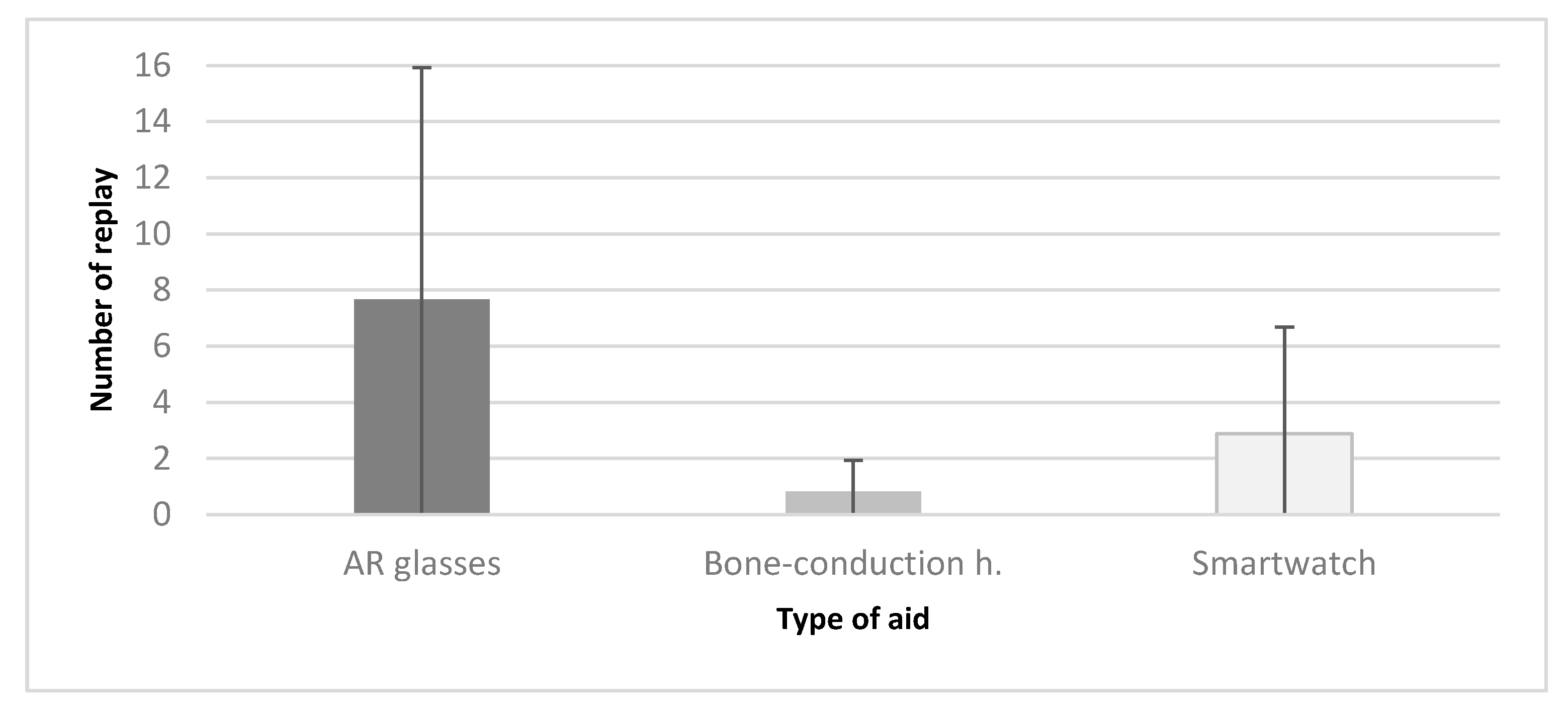

4.1. Efficiency of the Navigation Aids

4.2. User Experience with the Navigation Aids

4.2.1. Shifts in Attention

4.2.2. Understanding the Situation

4.2.3. Affective and Aesthetic Feelings

4.2.4. Prospective Evaluations

4.3. Observed Risky Inattentions

5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

| UX Dimensions | Phenomena Impacting UX Over Time |
|---|---|
| Perceptibility of the instructions | Shifts in attention |
| Mental workload and attention to the instructions | Shifts in attention |
| Attention to the surroundings and feeling of safety | Shifts in attention |
| Ease of learning of the instructions | Understanding of the situation |
| Understandability of the instructions in urban context | Understanding of the situation |
| Trustworthiness | Affective and aesthetic feelings |
| Aesthetics and discretion | Affective and aesthetic feelings |
| Other feelings | Affective and aesthetic feelings |
| Perceived usefulness | Prospective evaluations |
| Participants’ expectations about navigation aid | Prospective evaluations |

| | Usual Aid | AR Glasses | Bone Conduction Headphones | Smartwatch |
|---|---|---|---|---|
| Bad perceptibility of the instructions | 44% (8) | 72% (13) | 22% (4) | 22% (4) |
| High mental workload and attention to the instructions | 22% (4) | 56% (10) | 5% (1) | 22% (4) |
| Lack of attention to the surroundings | 17% (3) | 61% (11) | 0% (0) | 28% (5) |
| Difficulty in learning the instructions | NA | 11% (2) | 33% (6) | 0% (0) |
| Doubts about understanding the instructions in urban context | 61% (11) | 44% (8) | 11% (2) | 17% (3) |
| Weak trustworthiness | NA | 61% (11) | 22% (4) | 11% (2) |
| Lack of aesthetics and discretion | NA | 67% (12) | 5% (1) | 0% (0) |
| Other negative feelings | NA | 67% (12) | 33% (6) | 28% (5) |
| Lack of usefulness for oneself | NA | 89% (16) | 44% (8) | 39% (7) |

| Inattentions | Usual Aid | AR Glasses | Bone Conduction Headphones | Smartwatch |
|---|---|---|---|---|
| Due to the aid | 20 | 1 | 3 | 4 |
| Due to the surroundings | 2 | 8 | 6 | 0 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Montuwy, A.; Cahour, B.; Dommes, A. Using Sensory Wearable Devices to Navigate the City: Effectiveness and User Experience in Older Pedestrians. Multimodal Technol. Interact. 2019, 3, 17. https://doi.org/10.3390/mti3010017