1. Introduction

Over the last two decades, Building Information Modeling (BIM) has emerged as the fundamental paradigm for the digitization of the broadly understood construction industry, AECOO (Architecture, Engineering, Construction, Owners & Operators) [1]. BIM combines geometric data, semantics (high-level relationships), and non-graphical data into coherent, shared models that support decision-making from the concept and design stage, through project implementation, to the maintenance and operation stage [2,3]. At the same time, there has been a strong evolution in artificial neural networks [4]. Convolutional Neural Networks (CNNs) have become the standard in spatial-visual tasks [5], and Recurrent Neural Networks (RNNs) in sequence and time series modeling [6]. The convergence of the relative maturity of BIM ecosystems with the maturity of CNNs/RNNs has triggered a wave of applications that promise automation, scalability, and greater efficiency of engineering processes. In recent years, the notion of “active BIM” has emerged to describe BIM environments augmented with optimization and decision-making capabilities that dynamically update project information during design and construction.

Despite significant progress, the evolution of Artificial Intelligence (AI) is still ongoing, and new obstacles are being overcome [7]. However, there is a lack of standardized benchmarks dedicated to BIM tasks [8], the cost of annotation in native environments is high, and the differences between synthetic data (generated from models) and real data (from construction sites, scans, photos) make it difficult to transfer new solutions into practice [9]. In addition, the lack of seamless interoperability and the coexistence of different versions of the IFC (Industry Foundation Classes) standard complicate the replicability and comparability of results [10], and the limited “explainability” of AI models undermines confidence in applications that affect costs or schedules [11], the two factors that matter most in the construction investment process. At the same time, the single source of truth, the so-called CDE (Common Data Environment), does not always function well in investment processes [12], which hinders the development of SaaS (Software as a Service). These barriers point to the need for work that will not only present existing solutions but also organize the evolution and ask questions to which we still do not know the answers.

The aim of this article is to provide a synthetic review of the literature on the evolution of CNNs and RNNs in BIM applications and to organize the current state of knowledge from three complementary perspectives: (1) computer vision coupled with BIM models (detection, segmentation, and quality verification in images, videos, and point clouds), (2) sequence and time series modeling (prediction of costs, energy, work progress, and risk), and (3) integration of deep learning results with the semantics and topology of IFC models. In each of these areas, the dominant architectures, typical data flows (including the use of synthetic data and learning methods), and the evaluation metrics used in practice were identified. At the same time, limitations and gaps that hinder the large-scale implementation of BIM–AI were pointed out.

The article is not only review-oriented but also discussion-oriented. Several fundamental questions are posed that will determine the direction of research and practice in the coming years. The research questions are as follows:

When are classic CNNs/RNNs sufficient for BIM tasks, and when do models that explore relationships and attention (transformers) give an advantage?

How can model predictions be linked to IFC elements and relationships so that they are operationalizable in workflows and CDE systems?

What balance between synthetic and real data minimizes the domain gap while reducing the already high cost of annotation?

What XAI (Explainable AI) mechanisms are practically useful for engineers and how can their value be measured?

What are the minimum requirements (data schemas, APIs, metrics) needed to make results comparable and transferable between tools?

The contribution of this work is threefold. (1) We provide a structured synthesis of scattered results on the evolution of CNN and RNN approaches for BIM-related tasks, highlighting typical data flows, model architectures and integration patterns. (2) We describe Bimetria as a concrete implementation of these ideas in an industrial setting, showing how AI-based perception can be linked with IFC structures and quantity takeoff in a repeatable way. (3) We adapt and extend the concept of “active BIM”, previously used mainly in the context of optimization-based construction management and logistics, to an AI-perception-driven workflow in which CNN/RNN models automatically construct and update IFC-based building models and bills of quantities from 2D drawings. The structure of the article is as follows:

Section 1 covers the Introduction.

Section 2 presents theoretical background and terminology, as well as the origins and evolution of CNN/RNN.

Section 3 discusses the new Bimetria (CNN) tool—its operation and pipeline.

Section 4 discusses future research directions, identifies limitations, gaps, and challenges, and attempts to answer the fundamental questions posed.

Section 5 presents recommendations and conclusions.

2. Evolution of CNN/RNN

2.1. Literature Search Protocol

To make the review reproducible, a transparent literature search protocol was applied. Two major bibliographic databases, Scopus and Web of Science, were queried, focusing on original research articles at the intersection of BIM and deep learning. The core query combined BIM-related terms with CNN/RNN-related terms, such as (“Building Information Modeling” OR BIM OR “Industry Foundation Classes” OR IFC) AND (“convolutional neural network” OR CNN OR “recurrent neural network” OR RNN OR “deep learning”).

The time window was restricted to 2012–2025, corresponding to the period in which deep learning methods became practically relevant for BIM applications. The search was limited to peer-reviewed journal and conference papers in English. In the first screening stage, titles and abstracts were checked against the following inclusion criteria: (i) explicit use of BIM or IFCs in the problem formulation or data structures; (ii) use of CNNs, RNNs or closely related deep architectures; (iii) focus on AECOO-relevant tasks such as detection, segmentation, quality control, forecasting or model integration. Papers that only mentioned BIM tangentially, purely theoretical AI work without a BIM context, and non-archival publications such as position papers or theses were excluded.

In the second stage, full texts were assessed to confirm that BIM/IFC structures were effectively used and that quantitative evaluation was reported. Methodologically, the paper is based on an in-depth narrative review rather than a fully protocol-driven systematic review.

For each paper identified in the search, the primary task (e.g., object detection, semantic segmentation, defect detection, progress estimation, cost or energy forecasting), model family (e.g., 2D/3D CNN, U-Net/HR-Net, LSTM/GRU, graph neural networks, transformers), data type (raster drawings, 3D point clouds, IFC graphs, time series, video) and main evaluation metrics (e.g., IoU/Dice, mAP, accuracy/F1, MAE/RMSE) were noted. On this basis, papers were assigned to one or more of the three analytical lenses used in the article: computer vision coupled with BIM models, sequence and time series modeling, and integration of deep learning outputs with IFC semantics and topology. The synthesis presented in Section 2 follows this structure.
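To make the metric families named above concrete, the following minimal sketch computes IoU and Dice for a binary segmentation mask and MAE and RMSE for a forecast. The function names and the flat-list mask representation are illustrative assumptions and are not taken from any of the reviewed papers.

```python
import math


def iou_and_dice(pred, truth):
    """Return (IoU, Dice) for two binary masks given as flat lists of 0/1."""
    inter = sum(1 for p, t in zip(pred, truth) if p and t)
    pred_sum = sum(1 for p in pred if p)
    truth_sum = sum(1 for t in truth if t)
    union = pred_sum + truth_sum - inter
    if union == 0:
        # Both masks are empty: treated here as perfect agreement (a convention).
        return 1.0, 1.0
    return inter / union, 2 * inter / (pred_sum + truth_sum)


def mae_and_rmse(pred, truth):
    """Return (MAE, RMSE) for two equally long sequences of numbers."""
    errors = [p - t for p, t in zip(pred, truth)]
    mae = sum(abs(e) for e in errors) / len(errors)
    rmse = math.sqrt(sum(e * e for e in errors) / len(errors))
    return mae, rmse
```

A mean IoU (mIoU) is then the average of the per-class IoU values, with each class evaluated as its own binary mask.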

2.2. Three Eras of Evolution

The first concepts of neural networks dominated until the 1990s, but computational limitations and the vanishing gradient problem hampered the development of deep architectures. Convolutions became standard in images (LeCun/LeNet-5 ideas) [13], but their potential only fully revealed itself in the era of GPUs and big data. In 2012, a major milestone was reached with the “AlexNet” network by Krizhevsky, Sutskever, and Hinton [14]. Their model outperformed the competition in ImageNet, demonstrating that deep CNNs radically improve image classification quality through scaling. Since then, we have seen an avalanche of similar architectures. The following can be considered further milestones in the development of CNNs and RNNs:

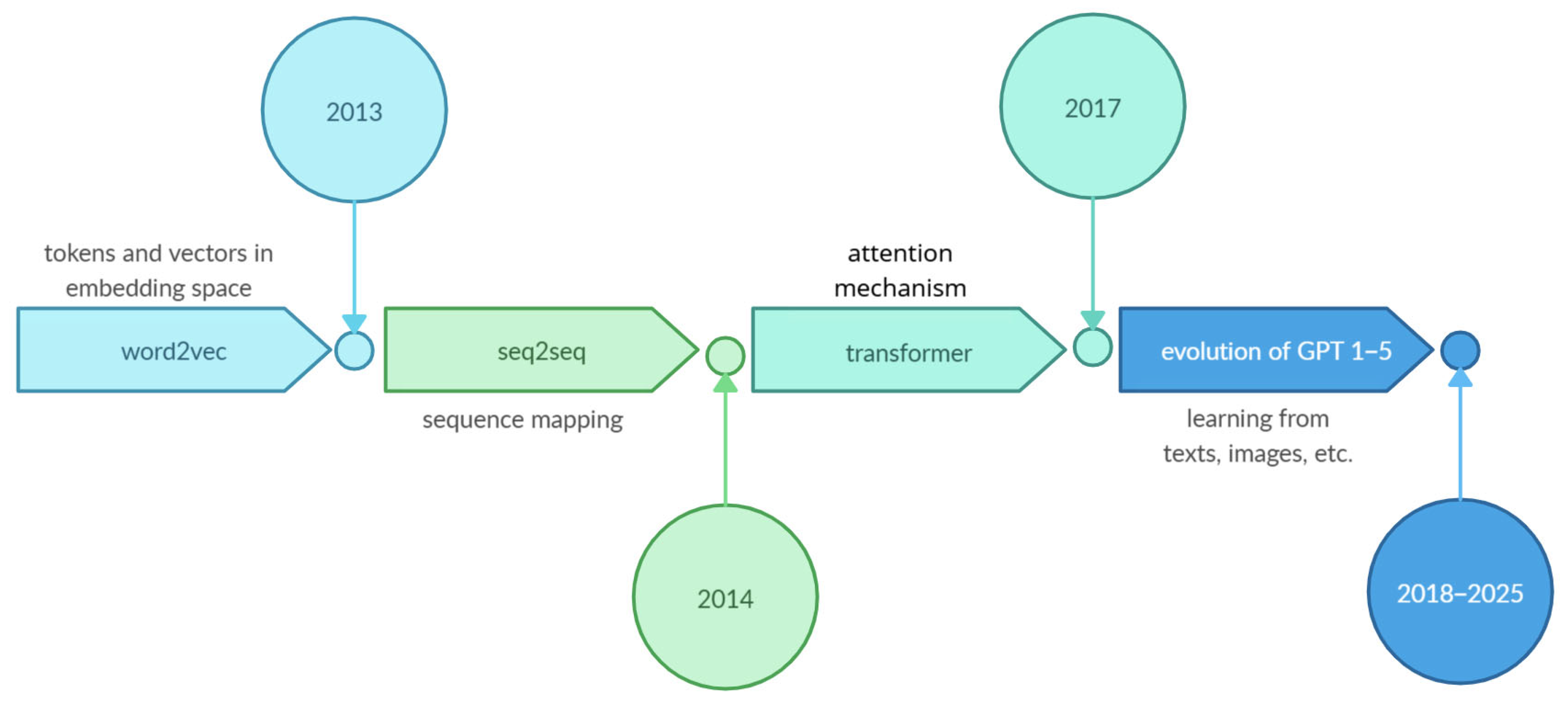

2013: word2vec by Tomas Mikolov et al. [15];

2014: seq2seq by Sutskever, Vinyals, and Le [16];

2016: AlphaGo (DeepMind) [17];

2017: Transformer (“Attention Is All You Need”), a milestone in the architecture behind GPT-x [18];

2018: GPT-1, the first LLM from OpenAI [19];

2019: GPT-2 (described in the media as “too dangerous to release”) [20];

2022: GPT-3.5, with the public debut of ChatGPT on 30 November 2022 [21];

2023: GPT-4 (“Sparks of Artificial General Intelligence”) [22];

2025: GPT-5, with a built-in “thinking” mode, whose approach corresponds to the concept of System 2 (Kahneman) [23] used in the description of reasoning models.

Key events are mapped on the timeline in Figure 1.

For BIM practice, the most direct implications of these milestones are the emergence of attention-based architectures and transformers for point clouds and graphs, enabling richer reasoning over IFC topology, as well as the use of large language models to support tasks such as code compliance checking, automated documentation and conversational access to project data. In this article, we focus on CNN/RNN architectures but briefly discuss how attention and transformer-based models can complement them in Section 2 and Section 4.

As a result, BIM has access to CNN components that enable the detection and segmentation of structural elements, quality control in images or videos, and the integration of results with IFC models. At the same time, RNNs were also developing. For example, LSTM (1997) and GRU (2014) models established the standard for modeling time series and event sequences, and seq2seq (2014) showed how to map variable-length sequences to other sequences. The introduction of the attention mechanism (2014) shifted the emphasis to dynamic weighting of time steps, which improved performance and stability. For BIM, this translated into forecasting delays, costs, and energy, and into the analysis of operational logs and records throughout the life cycle of a building.

With the advent of AlphaGo (a system combining deep CNN with reinforcement learning and tree search, which defeated champion Lee Sedol), it was proven that the combination of perception (CNN), memory/planning, and expert data scales to tasks with astronomical state space. AlphaGo Zero went even further, learning “from scratch” solely through self-play. Although Go has nothing to do with BIM, these milestones have changed the mental model of system design, as instead of solely supervised pipelines, the role of feedback, simulation, and decision policies is growing, which in turn translates into planning, scheduling, and active learning on synthetic data in AECOO.

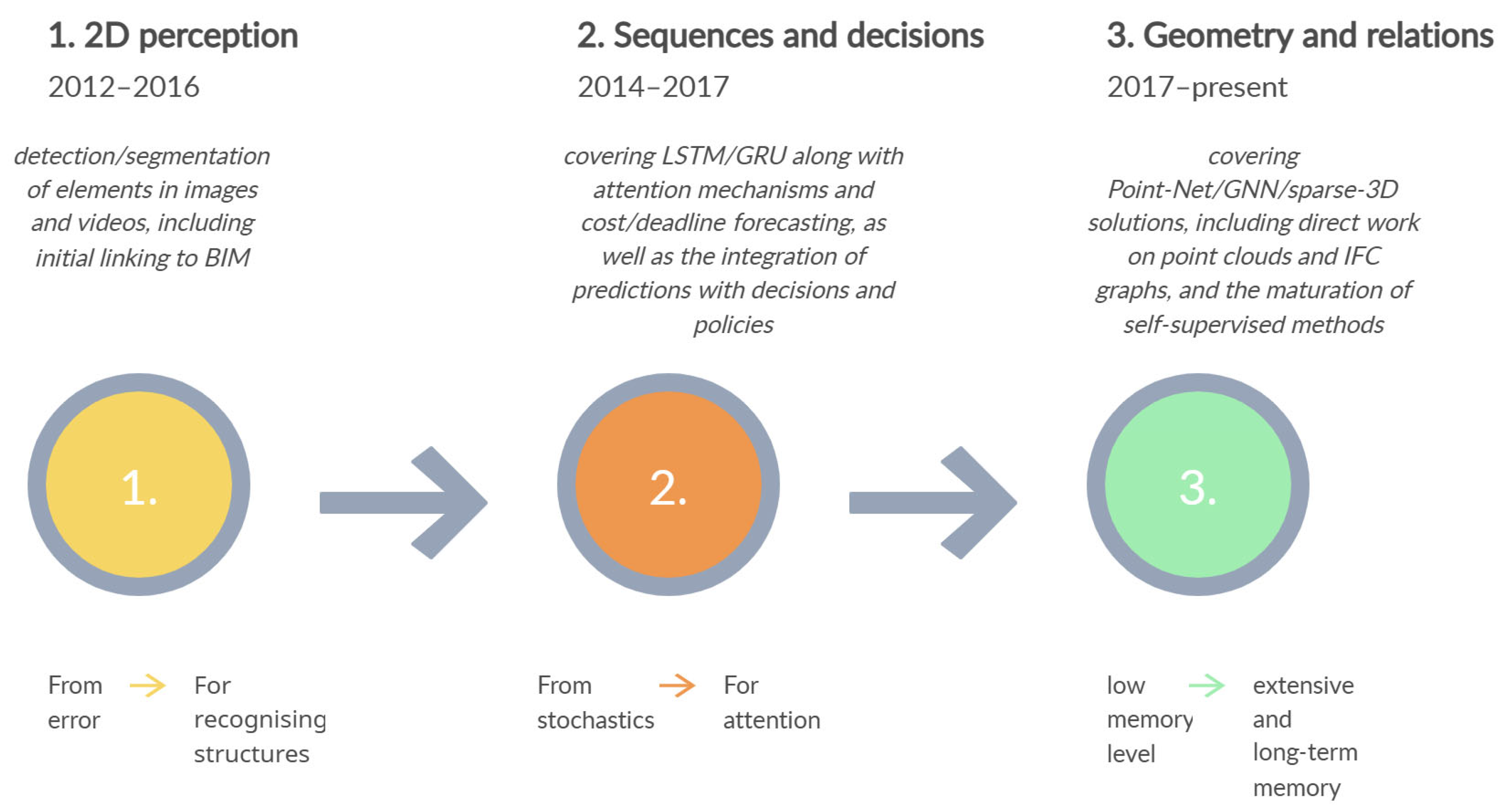

The evolution can be seen as three eras in the development of BIM-AI (Figure 2):

2D perception (2012–2016) including CNNs (AlexNet→ResNet, U-Net) and detection/segmentation of elements in images and videos, including the first linking to BIM.

Sequences and decisions (2014–2017) including LSTM/GRU with attention mechanisms and cost/deadline forecasting, as well as the integration of predictions with decisions and policies.

Geometry and relations (2017 to present) covering PointNet/GNN/sparse-3D solutions and the important HR-Net [24], including direct work on point clouds and IFC graphs, and the maturation of self-supervised methods.

In many current industrial deployments, CNNs remain the backbone choice for visual tasks, especially where stability and low latency are important, although systematic comparisons with transformer-based alternatives are still limited. RNNs (LSTM/GRU) are still practical for time series and event logs, although some tasks are being taken over by attention architectures. Geometric deep learning (GNN/3D) is crucial when predictions must be topologically and semantically consistent with IFC. Combining self-supervised or semi-supervised learning with synthetic data is currently the most effective response to benchmark deficiencies and high annotation costs.

3. Example of Bimetria

This section presents a practical implementation of the previously discussed concepts of deep learning in the context of BIM, namely the Bimetria system. The aim is to show how the combination of convolutional neural networks (CNNs) and optical character recognition (OCR), supported by domain rules and a geometric reconstruction stage, can automate the transition from 2D drawings in the form of scans or PDF files to structured information about a building object and to an IFC model ready for use in AECOO processes. This example complements the literature review with a practical dimension, highlighting the trade-offs between accuracy and stability, limitations due to data quality and diversity, and architectural decisions dictated by engineering design requirements. Conceptually, Bimetria can be seen as a specific form of “active BIM”. In existing research, active BIM usually refers to BIM environments enriched with optimization or decision-making capabilities that dynamically update project information, for example to optimize crane locations, site logistics or building energy performance [25,26,27]. In our case, the “activeness” of BIM is driven primarily by AI-based perception: CNN/RNN models interpret 2D drawings, construct and update an IFC-based model, and populate quantitative attributes that feed downstream cost estimation and model management. This shifts active BIM towards workflows where automated recognition and model generation are tightly coupled with BIM/IFC structures and quantity takeoff.

At a high level, Bimetria implements a processing chain that includes preliminary cleaning and normalization of drawing sheets, detection and segmentation of graphic elements such as symbols, lines, and dimensions using CNN models, extraction of descriptive content and dimensional numbers using OCR methods, merging of geometric and semantic information, reconstruction of three-dimensional elements along with their parameterization, mapping to IFCs and relations, followed by data integrity validation and export of results. The system in question takes a pragmatic approach that includes transfer learning, semi-supervised labeling, and consistency checking rules, which together allow for repeatable results in production conditions.

3.1. Input Data

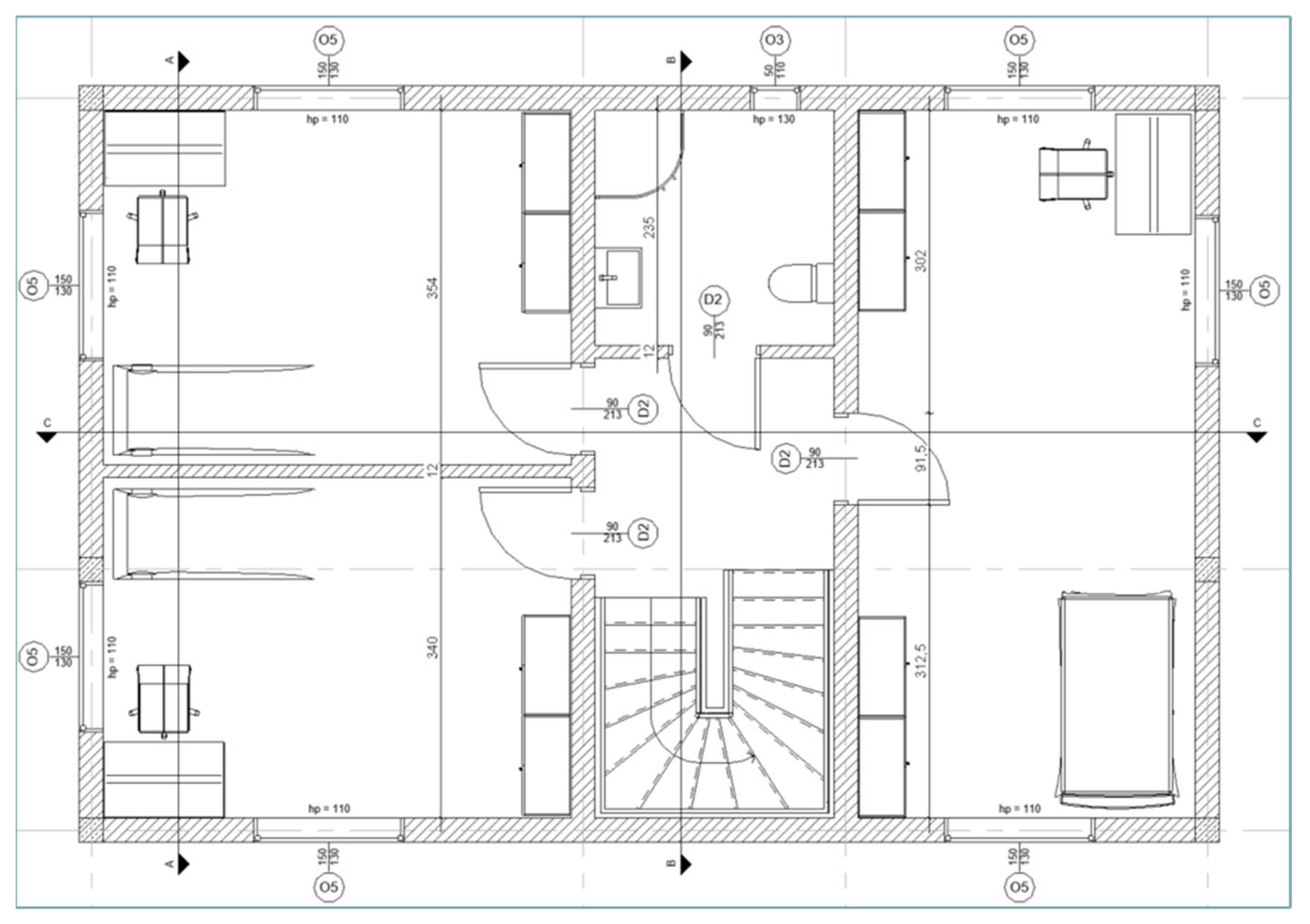

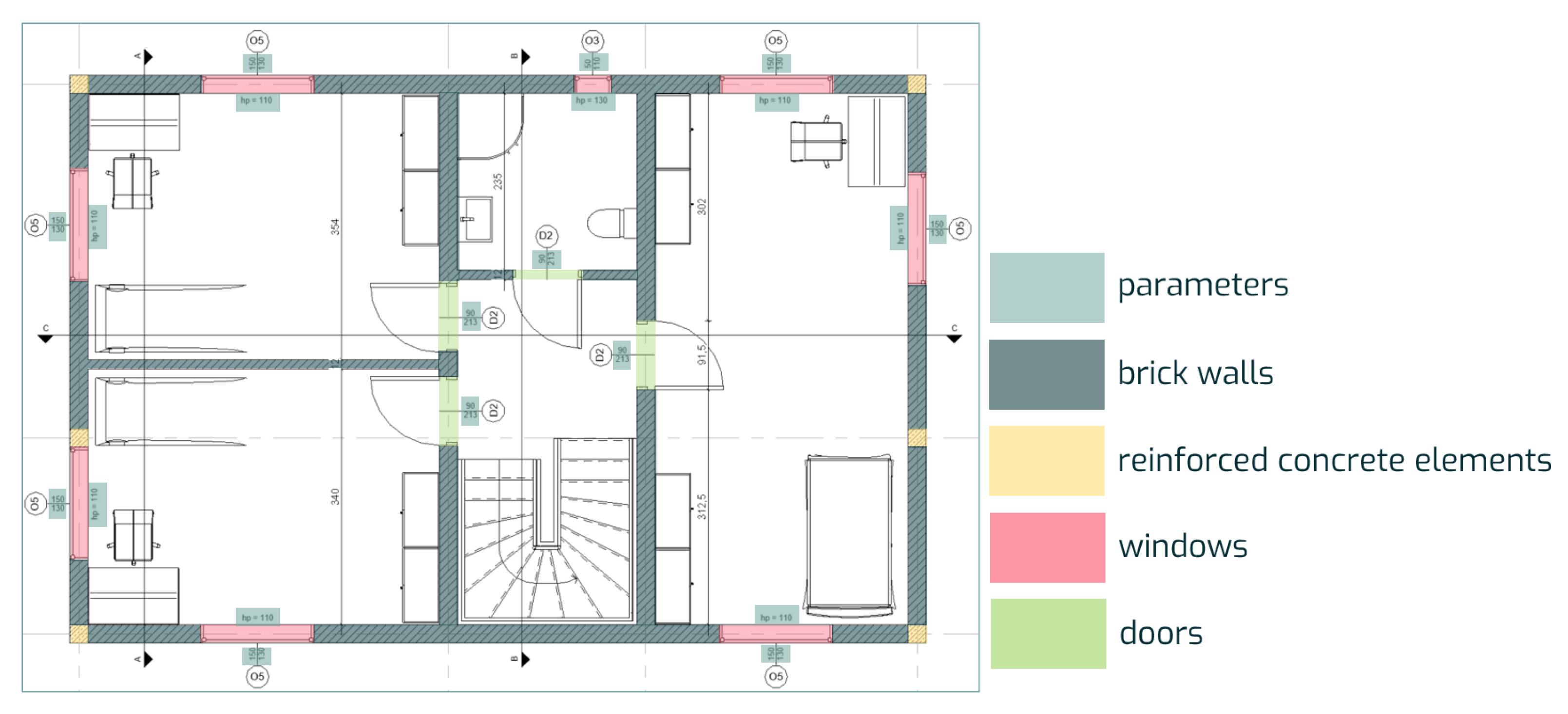

The starting point for the Bimetria pipeline is a single 2D drawing sheet (Figure 3), which is the source of geometric and semantic information used to reconstruct the 3D model. A 2D drawing usually contains layers of a mixed nature: construction graphics, installation symbols, dimensions, text descriptions, legends, and title blocks. In practice, both vector PDF files and raster PDF, PNG, and JPEG files (including scans and photos of sheets) can be fed into the system. The goal of the actions embedded in the system is to obtain an image with stable visual properties, in which the topological relationships between lines, symbols, and text are not blurred.

To obtain results that are as close to reality as possible at the outset, the user defines information about the storeys by adding or subtracting records and entering the necessary data. For each storey to be analyzed, the ordinates of the base and top of the structural and partition walls should be assigned.

In the circulation of documentation, it often happens that projections are transmitted in the form of scans or photographs of printed sheets, which are captured in a perspective that distorts the drawing. Therefore, the first step is to straighten the image. This is done using a dedicated straightening tool, which, after the user has marked four corners of a known rectangle, gives the projection an appearance like the original drawing.
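The straightening step can be illustrated with a planar homography estimated from the four marked corners. The sketch below is an assumption about how such a tool could work, not a description of Bimetria's implementation: it solves the standard eight-equation linear system in pure Python and maps individual points, whereas a production tool would typically resample the whole image with an imaging library. All function names are hypothetical.

```python
def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate corner configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def homography(src, dst):
    """Estimate the 3x3 homography mapping four src corners onto four dst corners."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # Two linear equations per correspondence, with the last entry fixed to 1.
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def apply_homography(h, point):
    """Map a single (x, y) point through the homography h."""
    x, y = point
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return (
        (h[0][0] * x + h[0][1] * y + h[0][2]) / w,
        (h[1][0] * x + h[1][1] * y + h[1][2]) / w,
    )
```

Here `src` would hold the four corners clicked by the user in the distorted photo and `dst` the corners of the target rectangle. No three of the four points may be collinear.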

The next step in preparing the image is to improve its readability using ESRGAN [28], a machine-trained model for improving the quality of raster images. This stage involves correcting the sharpness of lines and characters, and for scans or photos with uneven lighting, brightness adjustment is used.

To later map to IFC, it is crucial to preserve information about units and scales, so the user first assigns a scale to the drawing by calibrating the design using dimensions in two perpendicular axes.
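As a minimal sketch of such a two-axis calibration, the scale can be derived from one known dimension per axis and checked for consistency. The `Calibration` type, the function name, and the 2% tolerance are illustrative assumptions; the source does not specify how Bimetria handles a mismatch between the axes.

```python
from dataclasses import dataclass


@dataclass
class Calibration:
    pixels: float  # length of the dimension line measured on the image, in pixels
    metres: float  # real-world length stated on the drawing, in metres


def metres_per_pixel(horizontal, vertical, tolerance=0.02):
    """Return (scale_x, scale_y) in metres per pixel for the two perpendicular axes.

    Raises ValueError when the two axes disagree by more than `tolerance`
    (relative), which would suggest residual distortion or a misread dimension.
    """
    scale_x = horizontal.metres / horizontal.pixels
    scale_y = vertical.metres / vertical.pixels
    mismatch = abs(scale_x - scale_y) / max(scale_x, scale_y)
    if mismatch > tolerance:
        raise ValueError(f"axis scales differ by {mismatch:.1%}")
    return scale_x, scale_y
```

Calibrating in both axes rather than one makes anisotropic distortion visible before any quantity is computed from the drawing.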

This set of input data is standardized, described with metadata, and enriched with reliable annotations, thus providing CNN models and the OCR component with the conditions for stable detection of 2D elements and for subsequent deterministic transfer of information to 3D space in the BIM environment and IFC structures. The resulting representation can be mapped to an IFC-based parametric model that follows the Industry Foundation Classes (IFC) schema (ISO 16739-1:2018) [29] and related standards for BIM-based data exchange. As a result, a single 2D drawing becomes a fully fledged knowledge carrier that can be transformed into a coherent model used in further stages of the design process.

From a methodological perspective, the data used to train and evaluate Bimetria come from 250 real design projects, involving buildings with reinforced concrete, masonry, or mixed wall structures. A representative floor plan in raster format with a resolution of 96 to 300 DPI was selected from each project. A segmentation mask was created for each drawing using a consistent labeling protocol that distinguishes between classes of elements, e.g., masonry walls, reinforced concrete walls, doors, windows. The annotations serve as ground truth for training and validation of the image recognition model. In the next step, an extensive system of image augmentation and corresponding masks was prepared in terms of scale, rotation, deformation, and blurring. After the class labeling stage, this was the most crucial stage in terms of the final quality of the model being built.

The images prepared in this way, together with the corresponding masks, were divided into tiles of a size that allowed for efficient learning on limited hardware resources while still covering enough context for the classes sought. After tiling, tiles containing only the background class were removed. The final number of projection fragments prepared in this way and used for model training exceeded 16,000 images. For the entire model, an mIoU of approximately 85% was obtained, and no class achieved an IoU below 72%.
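The tiling and background filtering described above can be sketched as follows. The mask is assumed to be a 2D list of integer class labels with 0 as background; the tile size and function names are hypothetical and do not reflect the values used in Bimetria.

```python
def split_into_tiles(mask, tile):
    """Yield (top, left, tile_mask) for non-overlapping square tiles of a 2D mask."""
    height, width = len(mask), len(mask[0])
    for top in range(0, height, tile):
        for left in range(0, width, tile):
            rows = mask[top:top + tile]
            yield top, left, [row[left:left + tile] for row in rows]


def has_foreground(tile_mask, background=0):
    """True if at least one pixel in the tile belongs to a non-background class."""
    return any(value != background for row in tile_mask for value in row)


def keep_informative_tiles(mask, tile):
    """Return the (top, left) origins of tiles that contain any non-background pixel."""
    return [
        (top, left)
        for top, left, tile_mask in split_into_tiles(mask, tile)
        if has_foreground(tile_mask)
    ]
```

The same origins can then be used to crop the input image, so that image tiles and mask tiles stay aligned.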

3.2. Image Recognition Model

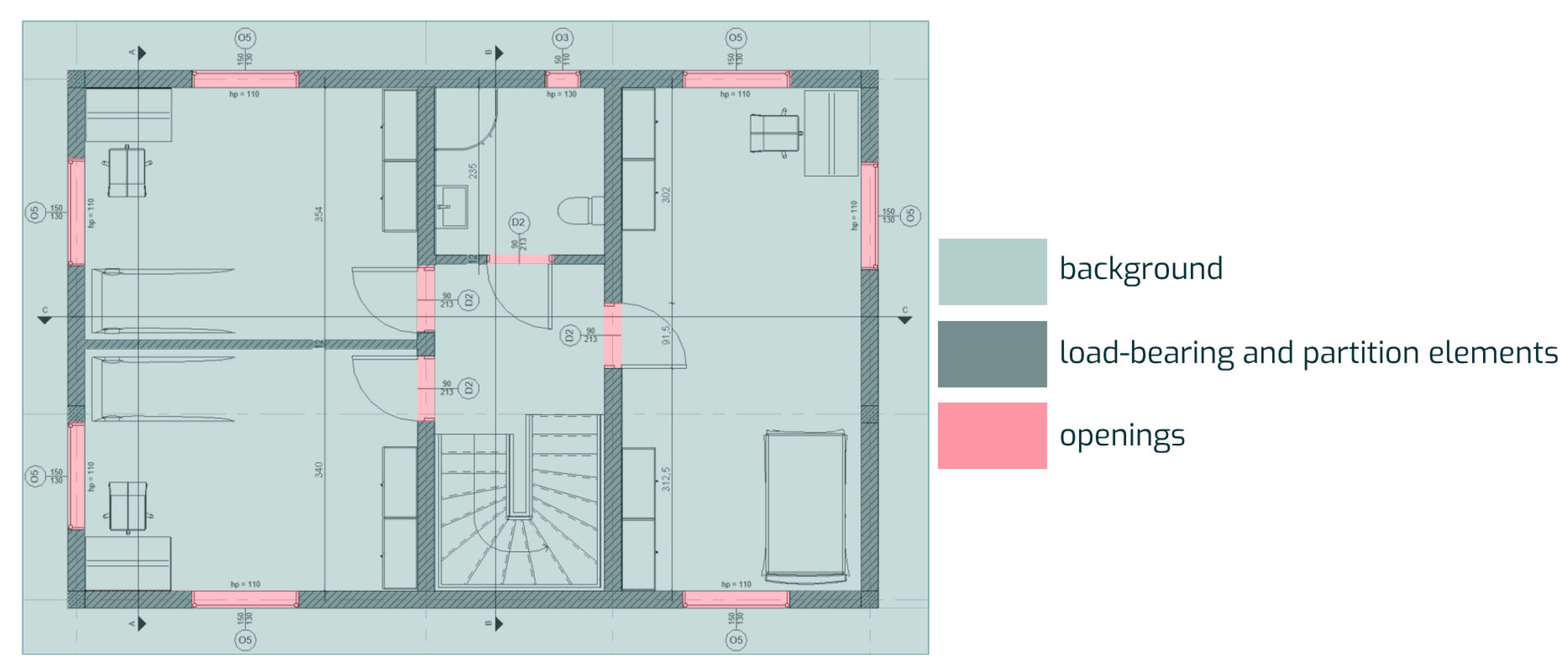

The image recognition process begins with dividing the image into square tiles, which optimize the model’s performance and also serve as the unit for metering how much the user uses the application. From this point on, the processes related to the recognition of elements in images take place on separate tiles, thus minimizing the risk that the operation fails because of the large size of the input data. The core of the system is an encoder–decoder convolutional network with skip connections, which predicts a semantic segmentation map of the drawing together with auxiliary masks needed for post-processing. The model is trained to classify each pixel into 5 classes: the four object categories used in Bimetria (masonry walls, reinforced concrete walls, doors, windows) and the background. The loss function combines a standard cross-entropy term with a further term that handles the class imbalance between large background regions and relatively small structural elements.

Bimetria is a two-stage image recognition model tailored to the specifics of technical drawings. The first part is segmentation based on a network that allows for the stable separation of three types of regions: walls with columns, openings, and the background constituting the rest of the drawing. The direct results of image recognition have imperfections resulting from the prediction process, which is why they are immediately subjected to automatic analysis and correction to generate output data in the form of rectangles with appropriate dimensions and angles while maintaining the relationships between elements (Figure 4).

The model was trained in a multi-task scheme and designed for production use. It uses quantization and agile convolution blocks, which enable the processing of large sheets in a time acceptable to the user using appropriate GPU (Graphics Processing Unit) cloud resources. Stability between drawing versions was achieved through input normalization and color calibration mechanisms. In the second stage of image recognition, the model separates masonry walls from reinforced concrete elements and distinguishes between windows and doors (Figure 5). The network is implemented in Python with a deep-learning framework and trained on a standard GPU-equipped workstation.

The third stage of data recognition uses OCR, which analyses the image for text data. The model recognizes letters and numbers that carry information and then selects those that are relevant to the recognized elements. Information is filtered based on both content and geometric position. Ultimately, parameters such as the height and width of openings and the height of windowsills are selected.
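A minimal sketch of filtering OCR tokens by content and by geometric position is given below. It assumes that dimension values appear as plain numeric tokens and that a token belongs to the nearest recognized element within a fixed radius. Both rules, as well as all names, are simplifying assumptions made for illustration and are not Bimetria's actual selection logic.

```python
import math
import re

# Content filter: plain numbers, with either a dot or a comma as decimal separator.
NUMERIC = re.compile(r"^\d+(?:[.,]\d+)?$")


def assign_tokens(tokens, elements, max_distance):
    """Attach numeric OCR tokens to the nearest element within max_distance.

    tokens:   list of (text, x, y) in sheet coordinates
    elements: dict mapping element id -> (x, y) reference point
    Returns a dict mapping element id -> list of parsed float values.
    """
    assigned = {element_id: [] for element_id in elements}
    for text, x, y in tokens:
        if not NUMERIC.match(text):
            continue  # descriptive text such as room names is ignored here
        nearest_id, nearest = None, max_distance
        for element_id, (ex, ey) in elements.items():
            distance = math.hypot(x - ex, y - ey)
            if distance <= nearest:
                nearest_id, nearest = element_id, distance
        if nearest_id is not None:
            assigned[nearest_id].append(float(text.replace(",", ".")))
    return assigned
```

Deciding which of the attached values is a width, a height, or a sill height would require further domain rules that are outside the scope of this sketch.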

After the data in the images have been recognized, the element coordinates (the start and end of each axis) and the element thicknesses are derived from the raster data on walls, reinforced concrete elements, windows, and doors. The elements are assigned the parameters read by OCR, and a JSON file is created to store all this information in a specific structure.

3.3. Verification of Results

After completing all stages related to the recognition of elements and information on the input data, the user has the option to verify the results. This is done based on vector data displayed on the input image. At this stage, the user has the option to add missing elements, remove redundant elements, and edit parameters. Once the user approves the design, the data in the JSON file is updated.

3.4. Intermediate JSON Layer and IFC Mapping

All recognition results are stored in an intermediate JSON representation that decouples perception outputs from the target BIM model. Each JSON record corresponds to a single building element and contains at least the following fields:

‘id’ (string): unique identifier of the element within a project;

‘type’ (string): semantic category, e.g., ‘wall’, ‘opening’, ‘column’, ‘door’, ‘window’;

‘geom_2d’ (object): 2D geometry in sheet coordinates, given as endpoints or polygon vertices;

‘level_id’ (string): identifier of the storey/level the element belongs to;

‘params’ (object): dictionary of scalar parameters such as thickness, height, sill elevation, material code;

‘text_refs’ (array): references to OCR tokens that provided the parameter values;

‘confidence’ (float): model confidence score for the detection/segmentation of the element.
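For illustration, a record with the fields listed above might look as follows. The concrete values, the inner layout of ‘geom_2d’ and ‘params’, and the `validate` helper are hypothetical; only the seven top-level field names and their types come from the list.

```python
import json

# Hypothetical example record; only the top-level field names follow the text.
record = {
    "id": "wall-0001",
    "type": "wall",
    "geom_2d": {"kind": "axis", "points": [[120.0, 340.0], [920.0, 340.0]]},
    "level_id": "level-00",
    "params": {"thickness": 0.24, "height": 2.8, "material_code": "MAS"},
    "text_refs": ["ocr-17"],
    "confidence": 0.93,
}

REQUIRED_FIELDS = {
    "id": str,
    "type": str,
    "geom_2d": dict,
    "level_id": str,
    "params": dict,
    "text_refs": list,
    "confidence": float,
}


def validate(rec):
    """Return a list of problems; an empty list means the record is well formed."""
    problems = []
    for name, expected in REQUIRED_FIELDS.items():
        if name not in rec:
            problems.append(f"missing field: {name}")
        elif not isinstance(rec[name], expected):
            problems.append(f"wrong type for field: {name}")
    return problems


serialized = json.dumps(record, indent=2)
```

A structural check of this kind, run before IFC export, is one simple way to keep the JSON layer auditable when the perception models change.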

During IFC export, each JSON record is mapped to an IFC entity according to a set of deterministic rules. For example, elements with ‘type = wall’ and a non-zero thickness are converted to ‘IfcWall’ or ‘IfcWallStandardCase’; openings in walls are mapped to ‘IfcOpeningElement’; doors and windows are represented as ‘IfcDoor’ and ‘IfcWindow’ inserted into the corresponding openings. Quantitative attributes such as length, area and volume are computed from the 2D geometry and level information and stored in quantity sets (e.g., ‘Qto_WallBaseQuantities’), while descriptive attributes such as material and fire rating are stored in property sets (e.g., ‘Pset_WallCommon’). The use of a JSON layer makes the pipeline robust to changes in perception models and facilitates exporting to different BIM authoring tools that consume IFC.
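The deterministic rules can be sketched as a small lookup plus a quantity calculation. This is an illustrative reading of the rules quoted above rather than Bimetria's code: the sketch always chooses ‘IfcWall’ (the text also allows ‘IfcWallStandardCase’), it returns `None` for types for which the text gives no rule, and the record layout and quantity key names are hypothetical.

```python
import math

# Direct type-to-entity rules stated in the text; walls are handled separately.
DIRECT_RULES = {
    "opening": "IfcOpeningElement",
    "door": "IfcDoor",
    "window": "IfcWindow",
}


def ifc_entity_for(record):
    """Return the IFC entity name for a JSON record, or None if no rule applies."""
    kind = record["type"]
    if kind == "wall":
        thickness = record.get("params", {}).get("thickness", 0)
        return "IfcWall" if thickness > 0 else None
    return DIRECT_RULES.get(kind)


def wall_quantities(record, metres_per_pixel):
    """Compute length, gross side area and gross volume for an axis-defined wall.

    Assumes geom_2d["points"] holds the two axis endpoints in pixels and that
    params carries height and thickness in metres (hypothetical layout).
    """
    (x0, y0), (x1, y1) = record["geom_2d"]["points"]
    length = math.hypot(x1 - x0, y1 - y0) * metres_per_pixel
    height = record["params"]["height"]
    thickness = record["params"]["thickness"]
    return {
        "length": length,
        "gross_side_area": length * height,
        "gross_volume": length * height * thickness,
    }
```

Writing the resulting values into an actual quantity set such as ‘Qto_WallBaseQuantities’ would be done with an IFC toolkit and is deliberately left out here.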

3.5. Quantity Takeoff

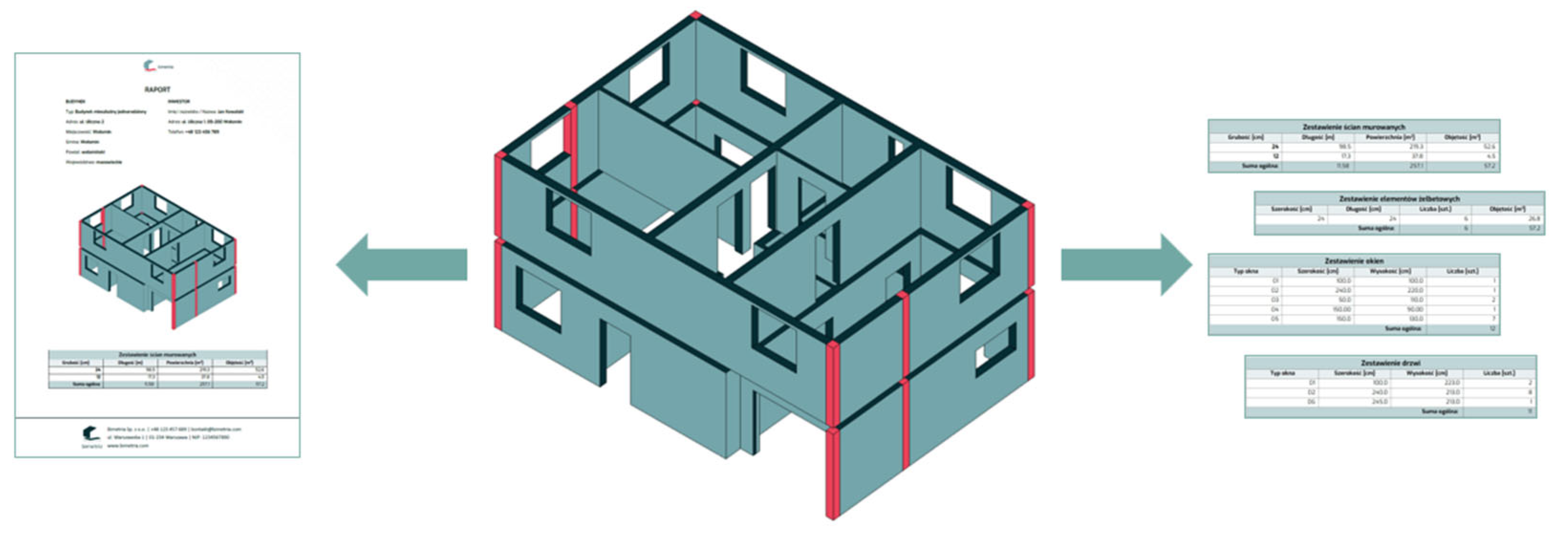

Based on the verification data, an IFC model containing all recognized elements is generated. The model is displayed in the application for better visual verification.

Quantities are calculated based on the approved data contained in the JSON file. The values that are calculated are areas, volumes, and numbers of elements. They are exported to tables in CSV/XLSX format, which can be downloaded and used for any calculations and analyses.

The application also generates a downloadable PDF report, allowing for quick printing and transfer of information if paper documentation is used in the process. It contains a 3D view of the BIM model and tables with quantities (Figure 6). Quantity surveying in Bimetria is the stage of converting image recognition results into measurement values used for cost estimation and planning purchases or construction works. The starting point is a structured graph of elements obtained from masks and object detection with neighborhood relations and links to OCR readings. On this basis, the system determines the base quantities (lengths, areas, volumes) in accordance with a set of measurement rules, then converts them to target units and combines them with the classification of elements.

The process begins with the normalization of units and versioning of the sheet: all dimensions are converted to SI units, and the sheet is given an identifier and scale signature, which guarantees the repeatability of calculations between successive revisions. Recording relationships allows for the distinction between gross and net values, which is crucial for most standard catalogs (subtracting openings, including/excluding recesses, treating columns in floors, etc.).

For masonry and reinforced concrete walls, the system calculates lengths from alignment and developed areas from thickness determined based on the legend or layer caption; if the thickness is not explicitly stated, inference rules based on symbols and line scale are used, and the result is marked with a certain degree of uncertainty. Openings are subtracted from the net areas according to a minimum area threshold to avoid interference from small marker recognition artifacts. The measurement values are accompanied by a margin of uncertainty resulting from error propagation: the system aggregates segmentation deviations, OCR reading quality, and scale calibration accuracy.
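The net-area rule and the uncertainty margin can be illustrated with the sketch below. The opening threshold is a free parameter, and the root-sum-of-squares combination of relative uncertainties is an assumption that holds for independent error sources; the text does not state which aggregation formula Bimetria actually uses.

```python
import math


def net_wall_area(gross_area, opening_areas, min_opening_area):
    """Subtract openings from the gross area, ignoring those below the threshold.

    Openings smaller than min_opening_area are treated as recognition
    artifacts and are not deducted.
    """
    deducted = sum(area for area in opening_areas if area >= min_opening_area)
    return gross_area - deducted


def combined_relative_uncertainty(*relative_terms):
    """Combine independent relative uncertainties by root-sum-of-squares.

    ASSUMPTION: the error sources (segmentation, OCR, scale calibration)
    are treated as independent; this is not confirmed by the source.
    """
    return math.sqrt(sum(term * term for term in relative_terms))
```

For example, a wall with a net area of 6.61 m² and a combined relative uncertainty of 5% would be reported as roughly 6.61 ± 0.33 m².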

The result of this stage is a bill of quantities organized according to the classification of elements. At the BIM level, the data is transferred to IFC structures. At the same time, CSV/XLSX reports and filter views are generated, enabling the mapping of items to cost estimate items, with the system able to use assignments to industry dictionaries and codes used in cost estimation. This integration allows for direct feeding into 5D processes, and thanks to input versioning and deterministic measurement rules, the lists are comparable between project revisions and suitable for tracking changes.
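A bill of quantities grouped by element class and exported as CSV can be sketched with the standard library alone. The column names and the input row layout are hypothetical, and the mapping to cost estimate items or industry codes is not shown.

```python
import csv
import io
from collections import defaultdict


def bill_of_quantities(rows):
    """Aggregate per-element rows into totals per element type."""
    totals = defaultdict(lambda: {"count": 0, "area_m2": 0.0, "volume_m3": 0.0})
    for row in rows:
        entry = totals[row["type"]]
        entry["count"] += 1
        entry["area_m2"] += row.get("area_m2", 0.0)
        entry["volume_m3"] += row.get("volume_m3", 0.0)
    return dict(totals)


def to_csv(totals):
    """Render the totals as CSV text, sorted by type so the output is repeatable."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["type", "count", "area_m2", "volume_m3"])
    for kind in sorted(totals):
        entry = totals[kind]
        writer.writerow([
            kind,
            entry["count"],
            f"{entry['area_m2']:.2f}",
            f"{entry['volume_m3']:.2f}",
        ])
    return buffer.getvalue()
```

Sorting the rows and fixing the number format keeps the exported lists directly comparable between project revisions, for example with a plain text diff.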

3.6. Scope and Limitations of the Case Study

It should be noted that no formal, large-scale quantitative evaluation or systematic ablation study of the Bimetria model is reported in this paper. The case study is intended to illustrate a feasible integration of CNN-based perception with BIM/IFC workflows in an industrial setting, rather than to provide a comprehensive benchmark of model performance.

4. Discussion

In interpreting the results, a distinction is made between observations that are directly grounded in the described pipeline and case study, and broader reflections informed by experience and the reviewed literature. Statements that refer to specific mechanisms or design choices in Bimetria should be understood as practice-oriented and illustrative, whereas more general claims about trends in BIM–AI are based on the narrative review rather than on new quantitative experiments.

The U-Net network was the starting point for the development of Bimetria and, in general, should be considered a breakthrough. In turn, thanks to the HR-Net network, i.e., its application to the analysis of documentation and details, much better results (accuracy) were obtained than in the case of U-Net. Due to its high resolution throughout the network, only HR-Net allows for the determination of, among other things, wall polygons in a manner acceptable from the point of view of quantity surveying.

The results of the literature review and the Bimetria case study allow us to look at the evolution of CNNs/RNNs in BIM from a more nuanced perspective than a simple opposition between “old” networks and “new” transformers. On the one hand, there is a clear shift in the focus of research towards attention-based architectures, graphs, and point clouds, which better capture 3D geometry and topological relationships in IFC models. On the other hand, practice shows that mature and well-understood components are still crucial for many critical tasks: convolutional networks segmenting 2D images, classic RNNs/LSTM for time series, and simple language/embedding models that can be relatively easily integrated into existing engineering processes. Bimetria fits into this logic of “reasonable conservatism”: instead of chasing the latest benchmarks, it uses the proven HR-Net network and OCR modules to solve a specific, business-critical problem, namely the transition from 2D drawings to BIM models and bills of quantities.

An analysis of Bimetria’s pipeline provides a partial answer to the question posed in the introduction about when classic CNNs/RNNs are “sufficient.” For a highly structured domain such as technical drawings, with well-designed pre-processing (scale normalization, distortion correction, resolution improvement) and a limited but precise object dictionary, convolutional networks still provide stable segmentation and element detection. The key is not so much the architecture itself, but rather its integration with domain rules (e.g., inferring missing wall thickness based on context) and rigorous user-level quality control woven into the verification process. From this point of view, more complex graph models or transformers could improve some marginal cases (complex details, non-standard symbols), but they are not a prerequisite for achieving production usability in typical building designs.

An important conclusion that emerges from a comparison of literature and the Bimetria example is the central role of the intermediate layer between prediction and the BIM/IFC world. In the proposed solution, this function is performed by a structured description in JSON format, which stores both the coordinates and semantics of the recognized elements. It is this layer that enables the mapping of CNN/OCR results to IFC objects, their subsequent use in quantity surveying processes, and tracking changes between revisions. The literature often assumes that an “intelligent” model will find the right representation on its own, but from an AECOO practice perspective, explicit, auditable bridges between input data, prediction, and the BIM model are key. This approach also promotes explainability: the designer or user not only receives a numerical result, but also the ability to trace which parts of the drawing generated specific IFC objects and what the quantity survey values are.

The balance between synthetic and real data and the problem of the domain gap manifest themselves in Bimetria somewhat differently than in typical scientific research. Instead of building huge synthetic corpora, the system relies on so-called dirty documentation from real projects; the diversity of drawing styles, formats, scanning artifacts, and human errors is treated as an inherent part of the problem. While this limits the possibility of precise comparison with other solutions (owing to the lack of public benchmarks), it better reflects the actual conditions of implementation. In the broader context of BIM-AI, this suggests that it may make sense to build smaller, “local” data ecosystems around specific applications and organizations, rather than striving for a single, universal reference set.

The final topic of discussion concerns explainability and trust in AI systems in BIM. In the literature, XAI is often reduced to general techniques for visualizing attention or feature importance, while experience with Bimetria indicates that, from the user’s perspective, simple but well-integrated mechanisms are key: overlaying segmentation results on the original drawing, the ability to correct results manually and immediately recalculate measurements, and versioning of input data and results. “Operational explainability” understood in this way may be less impressive than advanced XAI methods, but it better addresses the real needs of designers and cost estimators. It seems that in the coming years, it will be precisely such pragmatic solutions, combining mature CNN/RNN architectures with well-designed data semantics and user interfaces, that will determine whether AI becomes an integral part of everyday BIM workflows or remains mainly the domain of research experiments. This observation is consistent with experiential learning approaches in BIM education, where direct, hands-on interaction with BIM and AI-augmented tools is seen as a prerequisite for developing trust in, and a deep understanding of, digital workflows [30].

Given the current lack of public benchmarks dedicated to BIM-specific tasks, it seems useful to outline at least a minimal open protocol aligned with the three lenses used in this article. As a starting point, such a protocol could include a set of varied 2D sheets with ground-truth segmentation masks and quantities for key structural and architectural elements, a small number of annotated site videos capturing construction progress, and several time-series datasets (e.g., cost, schedule, energy) with standard train/validation/test splits. For each lens, a core set of baseline metrics (e.g., IoU/mAP for perception, MAE/RMSE for forecasting, and topology-aware measures for IFC integration) should be agreed upon. The typology and case study presented here are intended as a conceptual blueprint for designing such community benchmarks rather than as a complete specification.
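As an illustration of the baseline metrics named above, the following self-contained Python sketch computes IoU for binary segmentation masks and MAE/RMSE for forecasts. The function names and the toy inputs are assumptions made for this example; mAP and topology-aware measures are omitted because they require further protocol decisions (matching thresholds, graph definitions) that the text leaves open.

```python
import math


def iou(pred, truth):
    """Intersection over union of two binary masks given as nested 0/1 lists."""
    inter = union = 0
    for row_p, row_t in zip(pred, truth):
        for p, t in zip(row_p, row_t):
            inter += 1 if (p and t) else 0
            union += 1 if (p or t) else 0
    # Convention assumed here: two empty masks count as a perfect match.
    return inter / union if union else 1.0


def mae(pred, truth):
    """Mean absolute error between two equally long sequences."""
    return sum(abs(p - t) for p, t in zip(pred, truth)) / len(truth)


def rmse(pred, truth):
    """Root mean squared error between two equally long sequences."""
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, truth)) / len(truth))


# Toy perception example: 2 pixels overlap, 4 pixels are in the union.
pred_mask = [[1, 1, 0], [0, 1, 0]]
true_mask = [[1, 0, 0], [0, 1, 1]]
print(iou(pred_mask, true_mask))  # 0.5

# Toy forecasting example (e.g. weekly cost in arbitrary units).
forecast = [10, 12, 9]
actual = [11, 10, 9]
print(mae(forecast, actual))  # 1.0
print(round(rmse(forecast, actual), 3))  # 1.291
```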

5. Conclusions

The article synthesizes the evolution of convolutional and recurrent neural networks in BIM applications against the backdrop of the broader development of deep learning methods, showing how the community has progressed from simple 2D perception tasks to models that operate directly on point clouds, IFC graphs, and event sequences in the asset lifecycle. The authors organize the current state of knowledge into three complementary perspectives: computer vision coupled with BIM models (detection, segmentation, and quality control in images, videos, and point clouds), sequence and time series modeling (cost, energy, work progress, and risk forecasts), and the integration of deep learning results with the semantics and topology of IFC models and CDE environments. Based on a review of the literature from the last two decades, the article identifies the dominant architectures (including the U-Net and HR-Net families, LSTM/GRU, 3D and graph networks, and transformers), typical pipelines, and the main implementation barriers. The key barriers are the lack of standardized benchmarks dedicated to BIM tasks, the high cost of annotation in native environments, the domain gap between synthetic and real data, the limited interoperability of the IFC standard, and the insufficient explainability of AI models in cost- and schedule-critical applications. The literature review is supplemented by a case study of the Bimetria system, which uses a hybrid processing chain based on CNNs, OCR, domain rules, an intermediate representation in JSON format, and geometric reconstruction to largely automate the transition from 2D drawings (scans, PDFs) to an IFC model integrated with quantity takeoff and cost estimation processes. The case study illustrates that mature and well-understood CNN/RNN architectures can be “sufficient” in many tasks if they are embedded in a carefully designed workflow with user verification mechanisms.
Conceptually, Bimetria can be seen as an example of “active BIM”. In such workflows, AI models are not limited to recognizing objects in drawings; they actively construct and update a BIM/IFC model and its quantitative attributes. This means that the output of perception directly drives quantity takeoff, cost estimation, and model management, instead of remaining a separate analytical layer detached from the BIM environment. The need to align advanced CNN/RNN-based workflows with the broader digital transformation of the AEC sector is also emphasized by Zawada et al. [31], who argue that the potential of BIM and 4.0 technologies, including AI, is still far from being fully exploited in everyday engineering practice [31]. Thus, the article proposes a framework typology of BIM-AI tasks and methods and formulates a set of fundamental questions concerning, among other things, the role of classical CNN/RNN models in relation to attention-based models, the balance between synthetic and real data, practically useful explainability, and minimum interoperability requirements. It also indicates directions for further development aimed at building scalable, explainable, and comparable tools that can become a permanent part of everyday practice in the broadly understood construction industry (AECOO).