Using Eye Movements to Understand how Security Screeners Search for Threats in X-Ray Baggage

Abstract

1. Introduction

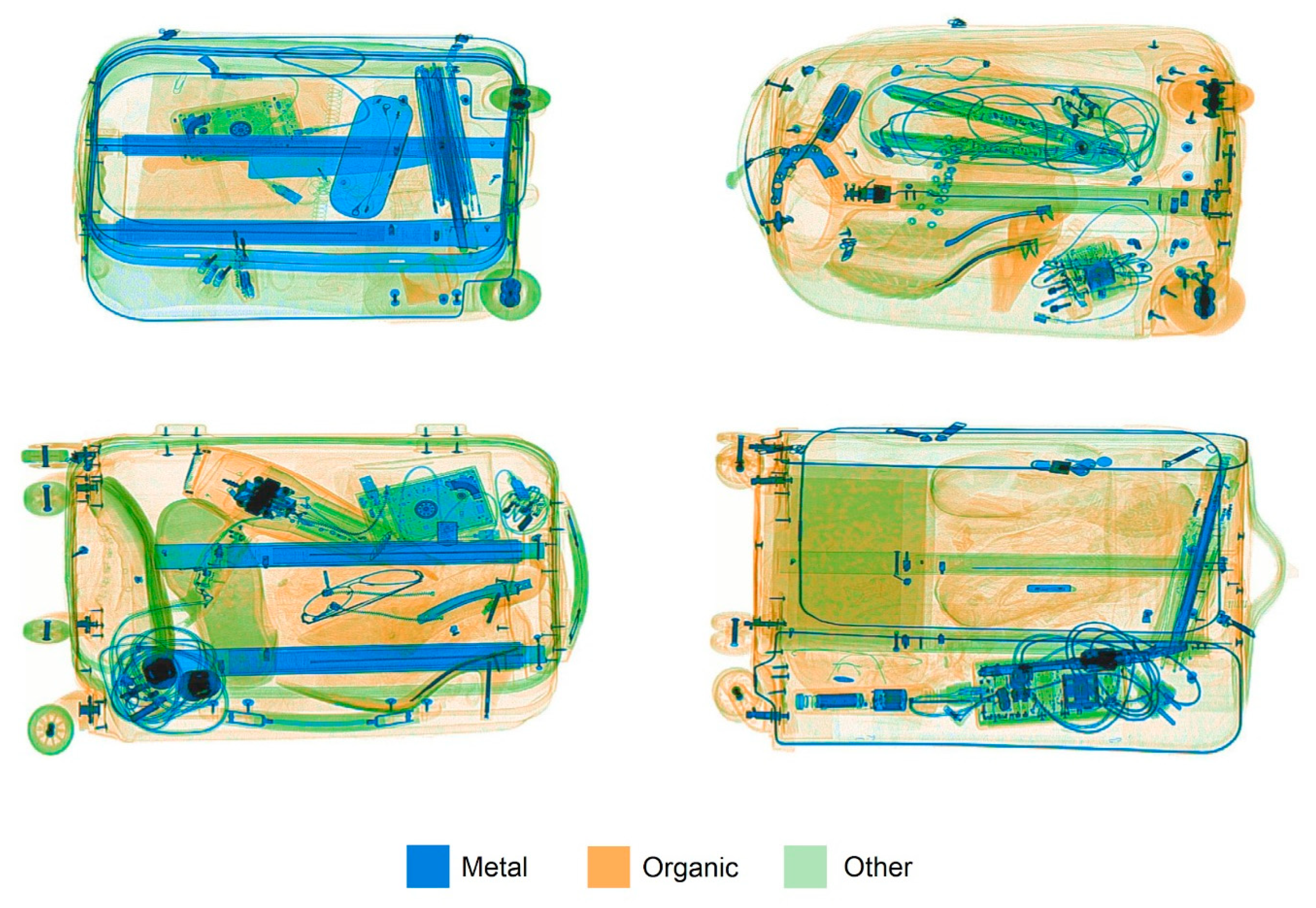

2. What Are the Images Used in Baggage Screening?

3. What Are the Decisions Made by Baggage Screeners?

4. The Security Search Task

5. Identification of Complex, Overlapping Transparent Objects

6. The Problem of Low Prevalence

7. Differences in Individual Screeners

8. Working Memory Capacity and Attentional Control

9. Setting Conservative Decision Thresholds

10. Searching through Three-Dimensional Volumes

11. General Discussion and Summary

Funding

Conflicts of Interest

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Citation

Donnelly, N.; Muhl-Richardson, A.; Godwin, H.J.; Cave, K.R. Using Eye Movements to Understand how Security Screeners Search for Threats in X-Ray Baggage. Vision 2019, 3, 24. https://doi.org/10.3390/vision3020024
