Hands Ahead in Mind and Motion: Active Inference in Peripersonal Hand Space

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

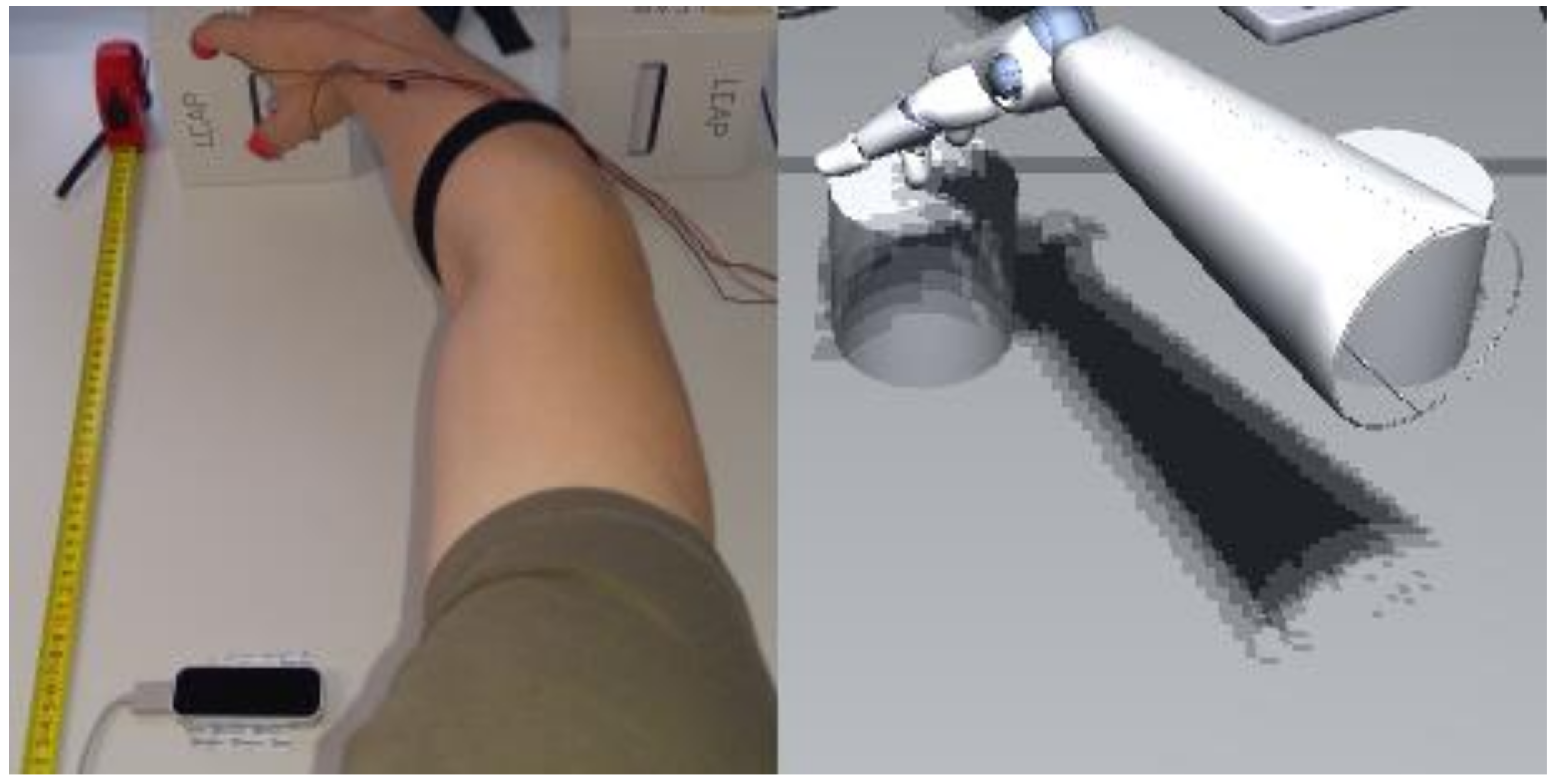

2.2. Apparatus

2.3. Virtual Reality Setup

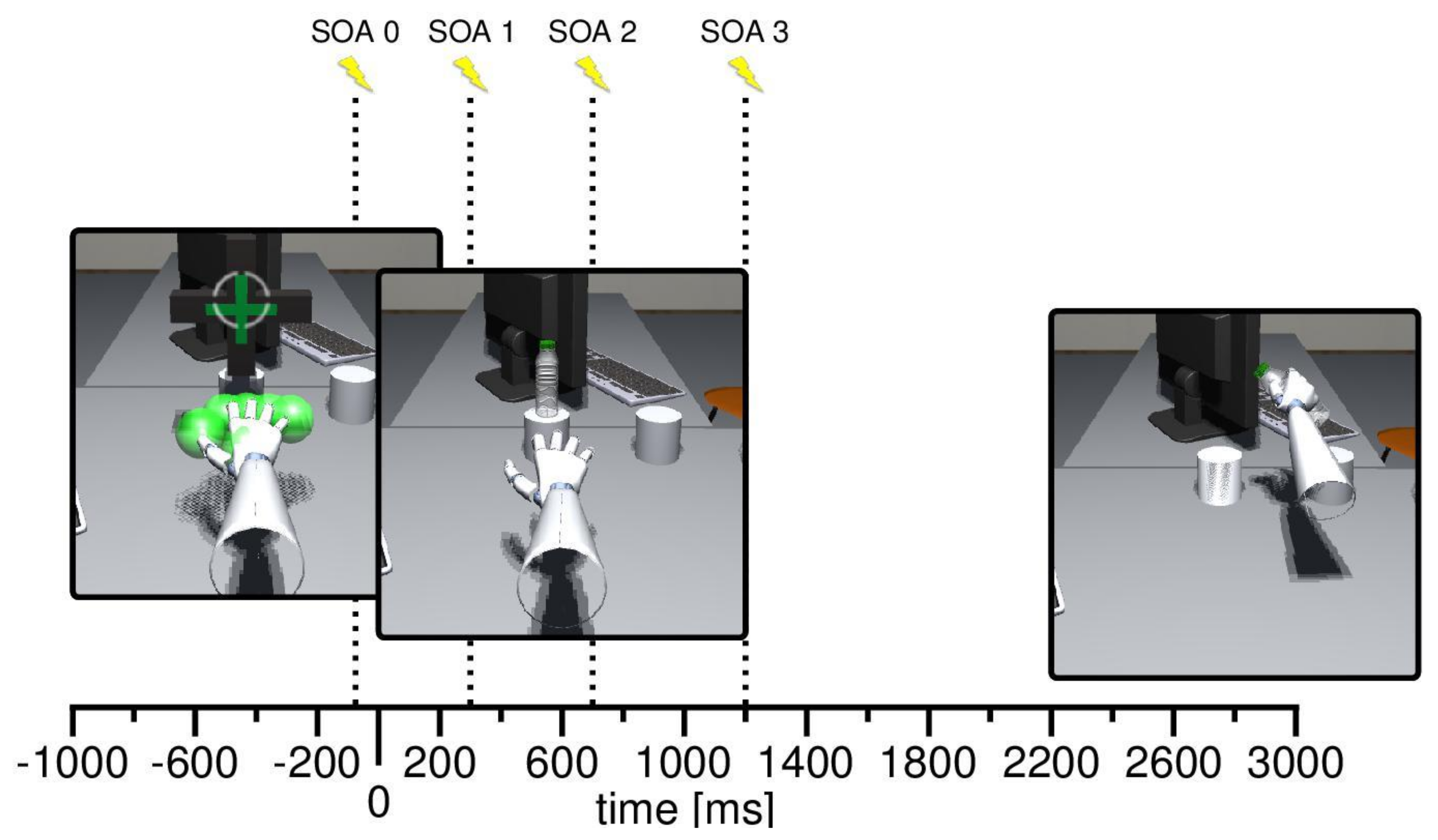

2.4. Procedure

2.5. Data Analysis

2.6. Congruency

3. Results

3.1. Experiment 1

3.2. Experiment 2

4. Discussion

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lohmann, J.; Belardinelli, A.; Butz, M.V. Hands Ahead in Mind and Motion: Active Inference in Peripersonal Hand Space. Vision 2019, 3, 15. https://doi.org/10.3390/vision3020015
