Abstract

We present a Riemannian geometry theory to examine the systematically warped geometry of perceived visual space attributable to the size–distance relationship of retinal images associated with the optics of the human eye. Starting with the notion of a vector field of retinal image features over cortical hypercolumns endowed with a metric compatible with that size–distance relationship, we use Riemannian geometry to construct a place-encoded theory of spatial representation within the human visual system. The theory draws on the concepts of geodesic spray fields, covariant derivatives, geodesics, Christoffel symbols, curvature tensors, vector bundles and fibre bundles to produce a neurally-feasible geometric theory of visuospatial memory. The characteristics of perceived 3D visual space are examined by means of a series of simulations around the egocentre. Perceptions of size and shape are elucidated by the geometry, as are the removal of occlusions and the generation of 3D images of objects. Predictions of the theory are compared with experimental observations in the literature. We hold that the variety of reported geometries is accounted for by cognitive perturbations of the invariant physically-determined geometry derived here. When combined with a previous description of the Riemannian geometry of human movement, this work promises to account for the non-linear dynamical invertible visual-proprioceptive maps and selection of task-compatible movement synergies required for the planning and execution of visuomotor tasks.

1. Introduction

From the time of Euclid (300 BC) onwards builders and surveyors and the like have found the three-dimensional (3D) world in which they function to be adequately described by the theorems of Euclidean geometry. The shortest path between two points is a straight line, parallel lines never meet, the square on the hypotenuse equals the sum of squares on the other two sides, and so forth. When we look at the world about us we see a 3D world populated with 3D objects of various shapes and sizes. However, it is easy to show that what we see is a distorted or warped version of the Euclidean world that is actually out there. Hold the left and right index fingers close together about 10 cm in front of the eyes. They appear to be the same size. Fix the gaze on the right finger and move it out to arm’s length. The right finger now looks smaller than the left while, with the gaze fixed on the right finger, the left finger looks blurred and double. If the left finger is moved from side to side, neither of the double images occludes the smaller right finger; both images of the left finger appear transparent. The same phenomenon occurs regardless of which direction along the line of gaze the right finger is moved. Clearly, our binocular perception of 3D visual space is a distorted or warped version of the 3D Euclidean space actually out there.

Van Lier [1], in his thesis on visual perception in golf putting, reviewed many studies indicating that the perceived visual space is a warped version of the actual world. He likened this to the distorted image of the world reflected in a sphere as depicted in M. C. Escher’s 1935 lithograph “Hand with Reflecting Sphere”. In Escher’s warped reflection straight lines have become curved, parallel lines are no longer parallel, and lengths and directions are altered. The exact nature of this warping can be defined by Riemannian geometry, where the constant curvature of the sphere can be computed from the known Riemannian metric on a sphere. We mention Escher’s reflecting sphere simply to illustrate how the image of the 3D Euclidean world can be warped by the curvature of visual space. We are not claiming that the warped image of the Euclidean environment seen by humans is the same as a reflection in a sphere; indeed it is unlikely to be so. Nevertheless it provides a fitting analogy to introduce the Riemannian concept.

Luneburg [2] appears to have been first to argue that the geometry of the perceived visual space is best described as Riemannian with constant negative curvature. He showed that the geometry of any manifold can be derived from its metric which suggested that the problem is “to establish a metric for the manifold of visual sensation”. This is the approach we adopt here. We contend that the appropriate metric is that defined by the size–distance relationship introduced by the geometrical optics of the eye, as will be detailed later in this section. It is due to this relationship that objects are perceived to change in size without changing their infinitesimal shape as they recede along the line of gaze. A small ball, for example, appears to shrink in size as it recedes but it still looks like a ball. It does not appear to distort into an ellipsoid or a cube or any other shape. This gives an important clue to the geometry of 3D perceived visual space. For objects to appear to shrink in size without changing their infinitesimal shape as they recede along the line of gaze, the 3D perceived visual space has to shrink equally in all three dimensions as a function of Euclidean distance along the line of gaze. If it did not behave in this way objects would appear to shrink in size unequally in their perceived width, height and depth dimensions and, consequently, not only their size but also their infinitesimal shape would appear to change. (We use the term “infinitesimal shape” because, as explained in Section 4.1, differential shrinking in all three dimensions as a function of Euclidean distance causes a contraction in the perceived depth direction and so distorts the perceived shape of macroscopic objects in the depth direction as described by Gilinsky [3].)

The perceived change in size of objects causes profound distortion. Perceived depths, lengths, areas, volumes, angles, velocities and accelerations all are transformed from their true values. It is those transformations that are encapsulated in the metric from which we can define the warping of perceived visual space. The terms “perceived visual space” and “perceived visual manifold” occur throughout this paper and, since in Riemannian geometry a manifold is simply a special type of topological space, we apply these terms synonymously. Moreover, the use of “perceived” should be interpreted philosophically from the point of view of indirect realism as opposed to direct realism, something we touch on in Section 8. In other words, in our conceptualization, perceived visual space and the perceived visual manifold are expressions of the neural processing and mapping that form the physical representation of visual perception in the brain.

Since at least the eighteenth century, philosophers, artists and scientists have theorized on the nature of perceived visual space and various geometries have been proposed [4,5]. Beyond the simple demonstration above there has long been a wealth of formal experimental evidence to demonstrate that what we perceive is a warped transformation of physical space [2,3,6,7,8,9,10,11,12,13,14]. In some cases a Riemannian model has seemed appropriate but, as we shall see from recent considerations, the mathematics of the distorted transformation is currently thought to depend on the experiment. Prominent in the field has been the work of Koenderink and colleagues, who were first to make direct measurements of the curvature of the horizontal plane in perceived visual space using the novel method of exocentric pointing [15,16,17]. Results showed large errors in the direction of pointing that varied systematically from veridical, depending on the exocentric locations of the pointer and the target. The curvature of the horizontal plane derived from these data revealed that the horizontal plane in perceived visual space is positively curved in the near zone and negatively curved in the far zone. Using alternative tasks requiring judgements of parallelity [18] and collinearity [19] this same group further measured the warping of the horizontal plane in perceived visual space. Results from the collinearity experiment were similar to those from the previous exocentric pointing experiment but, compared with the parallelity results, the deviations from veridical had a different pattern of variation and were much smaller.

Using the parallelity data, Cuijpers et al. [20] derived the Riemannian metric and the Christoffel symbols for the perceived horizontal plane. They found that the Riemannian metric for the horizontal plane was conformal, that is, the angles between vectors defined by the metric are equal to the angles defined by a Euclidean metric. They computed the components of the curvature tensor and found them to be zero (i.e., flat) for every point in the horizontal plane. This was not consistent with their finding of both positive and negative curvature in the earlier pointing experiment. Meanwhile, despite the similarity of the experimental setups, these authors had concluded that their collinearity results could not be described by the same Riemannian geometry that applied to their parallelity results [19]. The implication was that the geometry of the perceived visual space is task-dependent. From the continued work of this group [21,22,23,24,25,26,27,28,29,30,31] along with that of other researchers, it is now apparent that experimental measures of the geometry of perceived visual space are not just task-dependent. They vary according to the many contextual factors that affect the spatial judgements that provide those measures [4,5,32]. Along with the nature of the task these can include what is contained in the visual stimuli, the availability of external reference frames, the setting (indoors vs. outdoors), cue conditions, judgement methods, instructions, observer variables such as age, and the presence of illusions.
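The conformal property mentioned above can be illustrated numerically. The following is a minimal sketch, assuming the simplest conformal metric, namely a Euclidean inner product multiplied by the square of a positive scale factor at a given point; the function names and the `scale` parameter are hypothetical and are not taken from Cuijpers et al. [20]. Under such a metric lengths change with the scale factor but angles between vectors do not.

```python
import math


def inner(u, v, scale=1.0):
    """Inner product under the metric scale**2 * (Euclidean dot product).

    `scale` is an assumed local conformal factor, used here for illustration only.
    """
    return scale ** 2 * sum(a * b for a, b in zip(u, v))


def length(u, scale=1.0):
    """Length of u measured with the scaled metric."""
    return math.sqrt(inner(u, u, scale))


def angle(u, v, scale=1.0):
    """Angle in radians between u and v measured with the scaled metric."""
    cos_angle = inner(u, v, scale) / (length(u, scale) * length(v, scale))
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, cos_angle)))


if __name__ == "__main__":
    u, v = (1.0, 0.0), (1.0, 1.0)
    for s in (1.0, 0.5, 0.25):
        print(f"scale={s}: length(v)={length(v, s):.4f}, angle={angle(u, v, s):.4f} rad")
```

The scale factor cancels in the ratio that defines the cosine of the angle, which is why a conformal metric can rescale perceived lengths while leaving perceived angles equal to their Euclidean values.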

The inconsistency of results in the many attempts to measure perceptual visual space has led some to question or even abandon the concept of such a space [19,33]. This is unnecessary. Wagner and Gambino [4] draw attention to researchers who argue that there really is only one visual space in our perceptual experience but that it has a cognitive overlay in which observers supplement perception with their knowledge of how distance affects size [11,34,35,36,37,38,39]. We agree. However, Wagner [32] argues that separation into sensory and cognitive components is meaningless unless the sensory component is reportable under some experimental condition. While there is, unfortunately, no unambiguous way to determine such a condition, mathematical models and simulations of sensory processes may provide a possible way around the dilemma. Rather than rejecting the existence of a geometrically invariant perceived visual space we suggest that the various measured geometries are accounted for by top-down cognitive mechanisms perturbing the underlying invariant geometry derivable mathematically from the size–distance relationship between the size of the image on the retina and the Euclidean distance between the nodal point of the eye and the object in the environment. This relationship is attributable to the anatomy of the human eye functioning as an optical system. For simplicity in what follows we will refer to this size–distance relationship as being attributable to the geometric optics of the eye. We now consider what determines that geometry.

The human visual system has evolved to take advantage of a frontal-looking, high-acuity central (foveal), low-acuity peripheral, binocular anatomy but at the same time it has had to cope with the inevitable size–distance relationship of retinal images that the geometric optics of such eyes impose. To allow survival in a changing and uncertain 3D Euclidean environment it would seem important for the visual system to have evolved so that the perceived 3D visual space matches as closely as possible the Euclidean structure of the actual 3D world. We contend that in order to achieve this, the visual system has to model the ever-present warping introduced by the geometrical optics of the eye and that this warping can be described by an invariant Riemannian geometry. Accordingly, this paper focuses on that geometry and on the way it can be incorporated into a realistic neural substrate.

A simple pinhole camera model of the human eye [40,41] shows that the size of the image on the retina of an object in the environment changes in inverse proportion to the Euclidean distance between the pinhole and the object in the environment. Modern schematic models of the eye are far more complex, with multiple refracting surfaces needed to emulate a full range of optical characteristics. However, as set out by Katz and Kruger [42], object–image relationships can be determined by simple calculations using the optics of the reduced human eye due to Listing. They state:

[Listing] reduced the eye model to a single refracting surface, the vertex of which corresponds to the principal plane and the nodal point of which lies at the centre of curvature. The justification for this model is that the two principal points that lie midway in the anterior chamber are separated only by a fraction of a millimetre and hardly shift during accommodation. Similarly, the two nodal points lie equally close together and remain fixed near the posterior surface of the lens. In the reduced model the two principal points and the two nodal points are combined into a single principal point and a single nodal point. Retinal image sizes may be determined very easily because the nodal point is at the centre of curvature of this single refractory surface. A ray from the tip of an object directed toward the nodal point will go straight to the retina without bending, therefore object and image subtend the same angle. The retinal image size is found by multiplying the distance from the nodal point to the retina (17.2 mm) by the angle in radians subtended by the object [42] (see Figure 18).
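The calculation described in the quotation can be sketched in a few lines. This is an illustrative sketch only: the 17.2 mm nodal-point-to-retina distance is the value quoted above, while the function names and the assumption of an object centred on, and perpendicular to, the line of gaze are ours.

```python
import math

# Distance from the nodal point to the retina in the reduced eye, as quoted above [42].
NODAL_TO_RETINA_MM = 17.2


def subtended_angle_rad(object_size_m: float, distance_m: float) -> float:
    """Angle subtended at the nodal point by an object centred on the line of gaze.

    Assumes the object is perpendicular to the line of gaze (illustrative assumption).
    """
    return 2.0 * math.atan(object_size_m / (2.0 * distance_m))


def retinal_image_size_mm(object_size_m: float, distance_m: float) -> float:
    """Retinal image size: nodal-to-retina distance times the subtended angle in radians."""
    return NODAL_TO_RETINA_MM * subtended_angle_rad(object_size_m, distance_m)


if __name__ == "__main__":
    # A 2 cm object viewed at increasing distances along the line of gaze.
    for distance in (1.0, 2.0, 4.0):
        size = retinal_image_size_mm(0.02, distance)
        print(f"{distance:.0f} m -> {size:.4f} mm")
```

For objects that are small relative to their distance, the subtended angle is close to the ratio of object size to distance, so doubling the distance very nearly halves the retinal image size.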

Thus the geometry of the eye determines that the size of the retinal image varies in proportion to the angle subtended by the object at the nodal point of the eye. Or stated equivalently, the geometry of the eye determines that the size of the image changes in inverse proportion to the Euclidean distance between the object and the nodal point of the eye. For the perceived sizes of objects in the perceived 3D visual space to change in inverse proportion to Euclidean distance along the line of gaze in the outside world without changing their perceived infinitesimal shape, the perceived 3D visual space has to shrink by equal amounts in all three dimensions in inverse proportion to the Euclidean distance. From this assertion, the Riemannian metric for the 3D perceived visual space can be deduced, and from the metric the geometry of the 3D perceived visual space can be computed and compared with the geometry measured experimentally.
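As a hedged sketch of the kind of deduction referred to here, suppose that perceived infinitesimal lengths at Euclidean distance r from the observer are the corresponding Euclidean lengths scaled by a factor c/r, equally in all three directions. The Cartesian coordinates (x, y, z) centred on the observer, the positive constant c and the symbol g are assumptions introduced for illustration; the metric derived later in the paper may differ in coordinates or normalization.

```latex
% Sketch only: c > 0, the coordinates (x, y, z) and the symbol g are illustrative assumptions.
g = \frac{c^{2}}{r^{2}} \left( dx^{2} + dy^{2} + dz^{2} \right),
\qquad r = \sqrt{x^{2} + y^{2} + z^{2}}
% Perceived length of a small Euclidean displacement of length ds located at distance r:
\lvert ds \rvert_{g} = \frac{c}{r} \, ds
```

A metric of this form is conformal to the Euclidean metric, so it changes perceived size in inverse proportion to distance while leaving angles, and hence infinitesimal shape, unchanged.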

Our principal aim is to present a mathematical theory of the information processing required within the human brain to account for the ability to form 3D images of the outside world as we move about within that world. As such, the theory developed is about the computational processes and not about the neural circuits that implement those computations. Nevertheless, the theory builds on established knowledge of the visual cortex (Section 2) as well as on the place maps that have been shown to exist in hippocampal and parahippocampal regions of the brain [43,44,45,46,47,48,49,50]. Throughout this paper we take it as given that the place and orientation of the head, measured with respect to an external Cartesian reference frame (X,Y,Z), are encoded by neural activity in hippocampal and parahippocampal regions of the brain and that these regions act as a portal into visuospatial memory. We focus on the computational processes required within the brain to form a cognitive model of the 3D visual world experienced when moving about within that world. In that sense, the resulting Riemannian theory can be seen as an extension of the view-based theory of spatial representation in human vision proposed by Glennerster and colleagues [51].

The theory is presented in a series of steps starting in the periphery and moving centrally as described in Section 2 through Section 7. To provide a road map and to illustrate how the various sections relate to each other, we provide here a brief overview.

Section 2: It is well known that images on the retinas are encoded into neural activity by photoreceptors and transmitted via retinal ganglion cells and cells in the lateral geniculate nuclei to cortical columns (hypercolumns) in the primary visual cortex. We define left and right retinal hyperfields and hypercolumns and describe the retinotopic connections between them. We treat hyperfields and hypercolumns as basic modules of image processing. We describe extraction of orthogonal features of images on corresponding left and right retinal hyperfields during each interval of fixed gaze by minicolumns within each hypercolumn. We further describe how the coordinates of image points in the 3D environment projecting on to left and right retinal hyperfields can be computed stereoscopically and encoded within each hypercolumn.

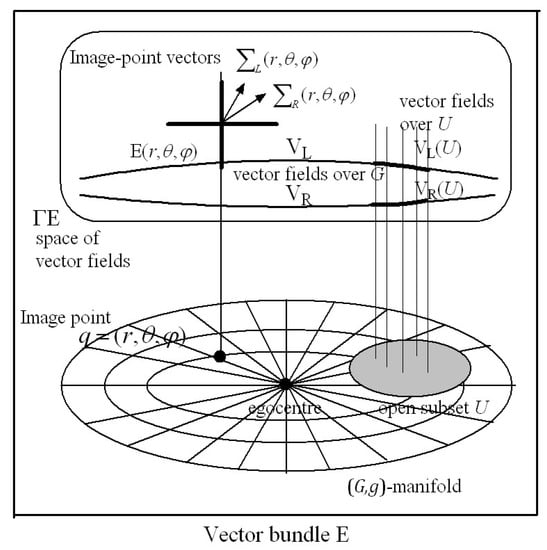

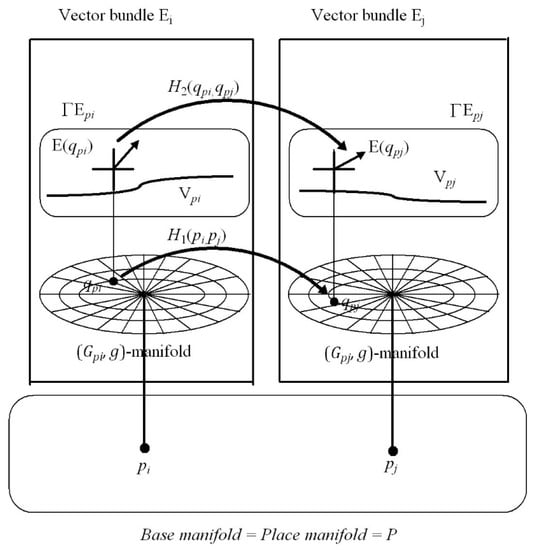

Section 3: Here we describe a means of accumulating an overall image of the environment seen from a fixed place. This depends on visual scanning of the environment via a sequence of fixed gaze points. We argue that at the end of each interval of fixed gaze, before the gaze is shifted and the information within the hypercolumns lost, the vectors of corresponding left and right retinal hyperfield image features encoded within each hypercolumn are pasted into a visuospatial gaze-based association memory network (G-memory) in association with their cyclopean coordinates. The resulting gaze-based G-memory forms an internal representation of the perceived 3D outside world with each ‘memory site’ accessed by the cyclopean coordinates of the corresponding image point in the 3D outside world. The 3D G-space with image-point vectors stored in vector-spaces at each image point in the G-space has the structure of a vector bundle in Riemannian (or affine) geometry. We also contend in Section 3 that the nervous system can adaptively model the size–distance relationship between the size of the 2D retinal image on the fovea and the Euclidean distance in the outside world between the eye and the object. From this relationship the Riemannian metric describing the perceived size of an object at each image point in the environment can be deduced and stored at the corresponding image point in G-memory. The G-memory is then represented geometrically as a 3D Riemannian manifold (G, g) with metric tensor field g. The geometry of the 3D perceived visual manifold can be computed from the way the metric varies from image point to image point in the manifold.

Section 4: Applying the metric deduced in Section 3, we use Riemannian geometry to quantify the warped geometry of the 3D perceived visual space.

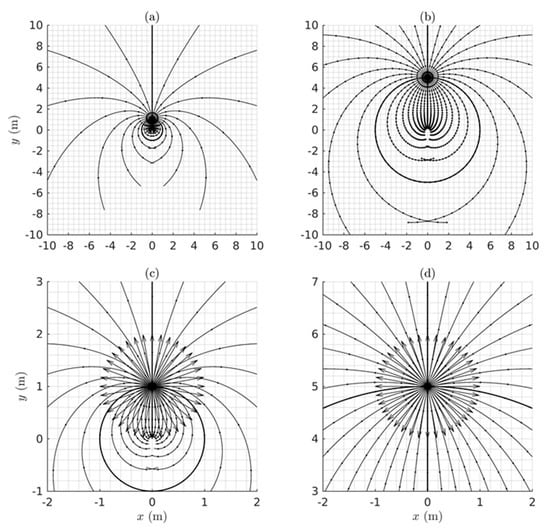

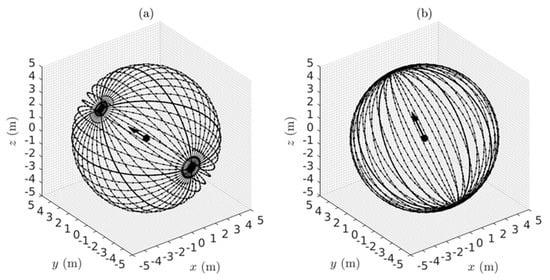

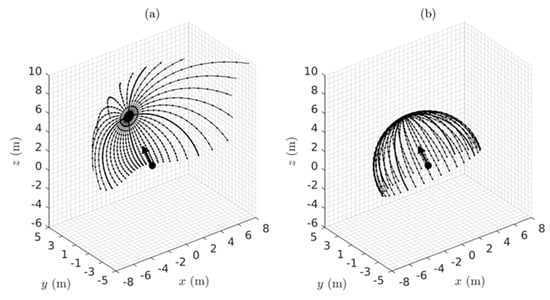

Section 5: By simulating families of geodesic trajectories we illustrate the warping of the perceived visual space relative to the Euclidean world.

Section 6: Here we describe the computations involved in perceiving the size and shape of objects in the environment. When viewing from a fixed place, occlusions restrict us to seeing only curved 2D patches on the surfaces of 3D objects in the environment. The perceived 2D surfaces can be regarded as 2D submanifolds with boundary embedded in the ambient 3D perceived visual manifold (G, g). We show how the size and shape of the submanifolds can be computed using Riemannian geometry. In particular, we compute the way the warped geometry of the 3D ambient perceived visual manifold (G, g) causes the perceived size and shape of embedded surfaces to change as a function of position and orientation of the object in the environment relative to the observer.

Section 7: This introduces the notion of place-encoded visual images represented geometrically by a structure in Riemannian geometry known as a fibre bundle. We propose that the place of the head in the environment, encoded by neural activity in the hippocampus, is represented geometrically as a point in a 3D base manifold called the place map. Each point in the base manifold acts as an accession code for a partition of visuospatial memory (i.e., a vector bundle). As the person moves about in the local environment, the vectors of visual features acquired through visual scanning at each place are stored into the vector bundle accessed by the point (place) in the base manifold. Thus place-encoded images of the surfaces of objects as seen from different places in the environment are accumulated over time in different vector bundle partitions of visuospatial memory. We show that adaptively tuned maps between each and every partition (vector bundle) of visuospatial memory, known in Riemannian geometry as vector-bundle morphisms, can remove occlusions and generate a 3D cognitive model of the local environment as seen in the correct perspective from any place in the environment.

Section 8: In this discussion section, material in the previous sections is pulled together and compared with experimental findings and with other theories of visuospatial representation.

Section 9: This section points to areas for future research development.

Riemannian geometry is concerned with curved spaces and the calculus of processes taking place within those curved spaces. This, we claim, is the best computational approach for analysis of visual processes within the curved geometry of 3D perceived visual space. A Riemannian geometry approach will reveal novel aspects of visual processing and allow the theory of 3D visual perception to be expressed in the language of modern mathematical physics. Our intention is to develop the groundwork for a theory of visual information processing able to account for the non-linearities and dynamics involved. The resulting theory will be integrated with our previous theory on the Riemannian geometry of human movement [52] but in this paper we focus entirely on vision. We do not attempt to justify in a rigorous fashion the theorems and propositions that we draw from Riemannian geometry. For that we rely on several excellent texts on the subject and we direct readers to these at the appropriate places. However, for those unfamiliar with the mathematics involved, we have sought to provide intuitive descriptions of the geometrical concepts. We trust that these, together with similar descriptions in our previous paper [52], can assist in making the power and the elegance of this remarkable geometry accessible to an interdisciplinary readership.

2. Preliminaries

Constructing a Riemannian geometry theory of visuospatial representation requires us to build bridges between well-established elements in the known science of the visual system and abstract objects in the Riemannian geometry of curved spaces. These bridges can be taken as definitions that link the real-world structure of the visual system with the abstract but deductively logical structure of Riemannian geometry. Thus, the descriptions in this section are crafted in a form that facilitates the application of Riemannian geometry to visual processing. While these bridges are clearly important, we see them as preliminary and not the main focus of the geometrical theory developed in subsequent sections. We therefore give only an abbreviated description of these preliminaries, and rely on the reader to refer as needed to texts such as Seeing: The Computational Approach to Biological Vision [40], Perceiving in Depth [53,54,55], Sensation and Perception [56] and papers referenced therein.

Sections 2.1 to 2.7 outline proposals concerning binocular vision and the encoding of retinal hyperfield images by the hypercolumns of V1. We define corresponding left and right retinal hyperfields and the cortical hypercolumn to which they connect as the basic computational module for extracting visual image features during an interval of fixed gaze. We propose that the parallel processing of many such modules provides the structure of vector fields over a Riemannian manifold. Then, in Sections 2.8 to 2.10, we address mechanisms of depth perception and the computation of cyclopean coordinates that provide the Riemannian manifold upon which the vector fields of image features sit.

2.1. Retinal Coordinates

The straight-line ray passing through the nodal point of the eye and impinging on the centre of the fovea is called the visual axis of the eye. The horizontal angle and the vertical angle measured relative to the visual axis of all other straight-line rays passing through the nodal point of the eye and impinging on the retina define a set of coordinates (,) on the retina. The angles (,) can be related to the homogeneous coordinates of the real projective plane defined in Riemannian geometry [57] but we do not explore this further here. For simplicity, we refer to the open subset of the projective plane corresponding to all the straight-line rays passing through the nodal point and impinging on the retina as the retinal plane and we use the angles (,) as a coordinate system for points on this retinal plane. We use the ‘hat’ notation to distinguish the retinal coordinates (,) from the coordinates used later to represent direction of gaze. We define corresponding points in the left and right retinas to be points having the same retinal coordinates (,). Retinal coordinates are important because, via projective geometry, they provide the only link between events in the Euclidean outside world with neural encoding of those visual events within the nervous system.

An advantage of using angles (,) as coordinates for each retina is that, as mentioned in Section 1, the size of the image on the retina of an object in the environment is proportional to the angle subtended by the object at the nodal point of the eye. We claim that the perceived size of an object in the 3D environment is also proportional to the angle subtended by the object at the nodal point of the eye. Later we will show that this is a property of the Riemannian geometry of the perceived visual space.

2.2. Hyperfields

A hyperfield is a collection of overlapping ganglion cell receptive fields in the retina. We assume that the number of ganglion cells making up a hyperfield varies from small (25) in the fovea to large (300–500) in the periphery. For descriptive simplicity we assume that a hyperfield typically involves about 100–200 overlapping ganglion cell receptive fields. The instantaneous frequency of ganglion-cell action potentials is modulated by the Laplacian , sometimes called edge detection, of the intensity (,) of light across the ganglion cell receptive field [58]. The response of a hyperfield to the spatial pattern of light (,) falling on the hyperfield is encoded by the temporospatial pattern of action potentials of the collection of ganglion cells whose overlapping ganglion cell receptive fields make up the hyperfield.
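The Laplacian operation mentioned above can be illustrated with a standard discrete approximation. This is a minimal sketch, assuming light intensity sampled on a regular grid and the common five-point stencil; it is not the model of ganglion-cell responses used in this paper, it omits the spatial smoothing that usually accompanies a Laplacian in models of receptive fields, and the function name is hypothetical.

```python
def discrete_laplacian(intensity):
    """Five-point discrete Laplacian of a 2D grid given as a list of equal-length rows.

    Only interior points are computed; border values are left at 0.0.
    """
    rows, cols = len(intensity), len(intensity[0])
    out = [[0.0] * cols for _ in range(rows)]
    for i in range(1, rows - 1):
        for j in range(1, cols - 1):
            out[i][j] = (
                intensity[i - 1][j] + intensity[i + 1][j]
                + intensity[i][j - 1] + intensity[i][j + 1]
                - 4.0 * intensity[i][j]
            )
    return out


if __name__ == "__main__":
    # Vertical step edge: dark on the left, bright on the right.
    image = [[0.0, 0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]
    for row in discrete_laplacian(image):
        print(row)
```

On the step edge in the usage example the output is zero in the uniform regions and changes sign across the edge, which is why the Laplacian is commonly associated with edge detection.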

2.3. Retinotopic Connections between Hyperfields and Hypercolumns

Ganglion cells from corresponding hyperfields in the left and right retinas (i.e., hyperfields centred on the same retinal coordinates (,) in the left and right retinas) project in a retinotopic fashion via the lateral geniculate nucleus (LGN) to local clusters of cortical columns in V1. These local clusters of about 100–200 minicolumns can be defined as hypercolumns [40]. Each pair of nearby hyperfields in each retina is mapped to nearby hypercolumns in V1. Importantly, corresponding hyperfields in the left and right retinas map to the same hypercolumn in V1. Stimulation of a left-eye retinal hyperfield activates a subset of minicolumns within a hypercolumn while stimulation of the corresponding right-eye hyperfield activates another overlapping subset of minicolumns in the same hypercolumn. Thus we can define left ocular dominance minicolumns, right ocular dominance minicolumns, and binocular minicolumns within a hypercolumn.

2.4. Hypercolumns

We regard each hypercolumn in V1 together with the corresponding left and right retinal hyperfields connecting to it as a functional visual processing module. The response of any single cortical cell is too ambiguous for it to serve as a reliable feature detector on its own [40]. Instead, we see minicolumns within a hypercolumn as the feature detectors for the different spatial patterns of light (, ) falling during a fixed-gaze interval on corresponding left and right retinal hyperfields that project retinotopically to the hypercolumn. This is consistent with long-established work concerning (i) the functional organization of the neocortex into a columnar arrangement [59,60,61,62]; (ii) the reciprocal columnar organization between the thalamus and the cerebral cortex [63]; (iii) the existence of networks of interconnected columns within widely separated regions of the cortex [64]; and (iv) the computational modelling of cortical columns [65,66,67]. Microelectrode recordings as well as other methods for visualizing the activity of cortical columns in V1 show that cells within a cortical column respond to the same feature of the light pattern on the retina. This has led to terms such as orientation columns, ocular dominance columns, binocular columns and color blobs.

2.5. Visual Features Extracted by Cortical Columns

The synaptic connections to cortical columns in V1 are well known to be plastic. They tune slowly over several weeks [68,69,70]. The features extracted by minicolumns in V1 are, therefore, stochastic features averaged over the tens of thousands of different patterns of light falling on the retinal hyperfields over the several weeks needed to tune the synaptic weights.

The stochastic properties of natural scenes averaged over long time windows possess statistical regularities at multiple scales [71,72]. Amplitude spectra averaged over tens of thousands of natural images are maximal at low spatial frequencies and decrease linearly with increasing frequency. This reveals the presence of correlations between neighbouring points in the images that persist in the averaged image [71]. Recent studies have revealed that micro movements of the head and eyes during each interval of fixed gaze modify the averaged spectra. The resulting averaged amplitude spectra are flat up to a spatial frequency of about 10 cycles per degree, after which they decrease rapidly with increasing spatial frequency [72,73,74].

While this information is valuable, it depends on linear analysis where amplitude spectra are derived from second-order statistics without taking higher-order moments into account. Yet the probability distribution of natural scenes is known to be non-Gaussian, with the stochastic structure revealing the existence of persistent higher-order moments [75,76]. The visual system, therefore, has to deal with these non-linearities. It is established [71,75,77,78] that the behaviour of simple cells in V1 can be described by Gabor functions [79] whose responses extend in both spatial and temporal frequency. Indeed, Olshausen and Field [77] have shown that maximizing the non-Gaussianity (sparseness) of image components is enough to explain the emergence of Gabor-like filters resembling the receptive fields of simple cells in V1. Likewise, Hyvärinen and Hoyer [80,81] have shown that the technique of independent components analysis can explain the emergence of invariant features characteristic of both simple and complex cells in V1. In reviewing the statistics of natural images and the processes by which they might efficiently be neurally encoded, Simoncelli and Olshausen [76] discussed independent components analysis as equivalent to a two-stage process involving first a linear principal components decomposition followed by a second rotation to take non-Gaussianity (non-linearity) into account. Our description of non-linear singular value decomposition as a two-stage process for visual feature extraction (Section 2.7, Appendix A) parallels the two-stage process described by Simoncelli and Olshausen [76].

2.6. Gaze and Focus Control

High acuity vision derives from the foveal region of the retina where the density of photoreceptors is greatest (160,000 cones per sq mm). The central region of the foveal pit is only about 100 microns across and subtends an angle of only about 0.3 degrees [40] while the rod-free fovea covers only 1.7 degrees of the visual field leaving 99.9% in the periphery [82]. Consequently, with the gaze fixed, it is only possible to obtain a high resolution image for a relatively small patch centred about the gaze point on the surface of an object in the environment. To build a high resolution image of the environment as seen from a fixed place the gaze has to be shifted from point to point via a sequence of head rotations and/or saccades with fixations of the eyes allowing the left and right retinal images for each gaze point to be accumulated in visuospatial memory. The interval of fixed gaze can vary from as short as 100 ms up to many thousands of milliseconds.

To perform such visual scanning, the nervous system requires a precision movement control system able to control the place and orientation of the head in the environment as well as the rotation of the eyes in the head and the thicknesses of the lenses in the eyes. Given a required gaze point in the environment, the gaze control system has to plan and execute coordinated movements of the head and eyes. Once the gaze point has been acquired the control system has to hold the images of the gaze point fixed on the foveas. Simultaneously the focus control system has to adjust the thicknesses of the lenses to maximize the sharpness of the images on the foveas.

Eye-movement control has wired-in synergies for conjugate, vergence, vertical and roll movements of the two eyes in the head and has a vestibulo-ocular reflex (feedforward) system able to generate movements of the eyes to compensate for perturbations of the place and orientation of the head. However, on their own, these wired-in synergies are insufficient to account for the coordinations required for accurate gaze and focus control. The required coupling of conjugate, vergence, vertical and roll movements of the eyes with each other, and with focus control and with movements of the head differs from one shift of gaze to another depending on the initial and final gaze points. The wired-in synergies themselves have to be coordinated by a more comprehensive, overriding, non-linear, multivariable, adaptive, optimal, predictive, feedforward/feedback movement control system. Much of our previous work has been concerned with developing a systems theory description of how such a movement control can be achieved [83].

2.7. Singular Value Decomposition as a Model for Visual Feature Extraction

We have long emphasized the importance of orthogonalization (and deorthogonalization) in the central processing of sensory and motor signals [52,83,84,85]. Indeed, we have argued that orthogonalization is ubiquitous at all levels throughout all sensory and motor systems of the brain. Not only does it ensure that sensory information is encoded centrally in the most efficient way by removing redundancy, as argued by Barlow [86], but it is necessary for the nervous system to be able to form forward and inverse adaptive models of the non-linear dynamical relationships within and between sensory and motor signals. Previously we have described a network of neural adaptive filters able to extract independently-varying (orthogonal) feature signals using a non-linear, dynamical, Gram–Schmidt orthogonalization algorithm [83]. The same process can be described mathematically by non-linear, dynamical, QR factorization or by non-linear singular value decomposition (SVD).
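The equivalence between these descriptions can be illustrated in the purely linear, static case. The following is a minimal sketch only: it does not implement the non-linear, dynamical algorithm of [83], and the data matrix `X`, the mixing matrix and the helper `gram_schmidt` are hypothetical names introduced for illustration. It shows that Gram–Schmidt and QR factorization yield the same orthonormal signals up to sign, and that SVD yields another orthonormal basis for the same signal space.

```python
import numpy as np

def gram_schmidt(X):
    """Modified Gram-Schmidt on the columns of X (assumed linearly independent)."""
    Q = np.zeros_like(X, dtype=float)
    for j in range(X.shape[1]):
        v = X[:, j].astype(float)
        for i in range(j):
            # Remove the component of v along each earlier orthonormal column.
            v = v - (Q[:, i] @ v) * Q[:, i]
        Q[:, j] = v / np.linalg.norm(v)
    return Q

rng = np.random.default_rng(0)
# Hypothetical data: 500 time samples of 4 correlated (redundant) signals.
mixing = rng.normal(size=(4, 4))
X = rng.normal(size=(500, 4)) @ mixing

Q_gs = gram_schmidt(X)                             # Gram-Schmidt orthogonalization
Q_qr, _ = np.linalg.qr(X)                          # QR factorization
U, s, Vt = np.linalg.svd(X, full_matrices=False)   # singular value decomposition

# Columns of Q_gs and Q_qr agree up to sign; columns of U are also orthonormal.
print(np.round(np.abs(Q_gs.T @ Q_qr), 6))
print(np.round(U.T @ U, 6))
```

In this linear setting the columns of `U` scaled by `s` are mutually uncorrelated signals ordered by variance, which is the sense in which orthogonalization removes redundancy.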

In Appendix A we give a theoretical description of a two-stage extraction within a hypercolumn of linear and non-linear stochastic orthogonal visual-image feature signals from corresponding left and right retinal hyperfields during an interval of fixed gaze using non-linear SVD. We propose that non-linear SVD provides a useful computational model for the extraction of image-point vectors by the slowly tuning synaptic connectivity of minicolumns within the hypercolumns of V1. As described in Appendix A, the resulting 30 (ball-park figure) orthogonal non-linear stochastic feature signals extracted from the image (,) on a left retinal hyperfield during an interval of a fixed gaze are represented by the temporospatial patterns of activity induced in 30 left ocular dominance minicolumns in a hypercolumn. Similarly, the 30 orthogonal non-linear stochastic feature signals extracted from the image (,) on the corresponding right retinal hyperfield during the same interval of fixed gaze are encoded by the temporospatial patterns of neural activity induced in 30 right ocular dominance minicolumns in the same hypercolumn.

The orthogonal feature signals are extracted from images on corresponding hyperfields across the left and right retinas and so, together, the hypercolumns provide an encoding of both central and peripheral visual fields. As stated by Rosenholtz [82], peripheral vision most likely supports a variety of visual tasks including peripheral recognition, visual search, and getting the “gist” of a scene. Incorporating the work of others [87,88,89], she models the encoding of peripheral vision with parameters that include luminance autocorrelations, correlations of magnitudes of oriented V1-like wavelets (Gabor filters) across different orientations, neighbouring positions and scales, and phase correlation across scale. She states that, when pooled over sparse local image regions that grow linearly with eccentricity, these provide a rich, high-dimensional, efficient, compressed encoding of retinal images. Given the change in size of hyperfields and in the density of rods and cones therein as a function of eccentricity, this description of the encoding of retinal images is consistent with the extraction by non-linear SVD of vectors of orthogonal image features and from hyperfields across the left and right retinas during each interval of fixed gaze.

For subsequent simplicity we refer to and as image-point vectors. As described in Appendix A, during an interval of fixed gaze they encode the 30-dimensional vectors of orthogonal non-linear stochastic features extracted from the images that project respectively on to left and right retinal hyperfields from small neighbourhoods of points on the surfaces of objects in the environment. The image-point vectors across all the left hyperfields form a 30-dimensional vector field VL over all the left ocular dominance minicolumns in the hypercolumns of V1. Similarly, the image-point vectors from all the corresponding right hyperfields form a 30-dimensional vector field VR over all the right ocular dominance minicolumns in the hypercolumns of V1. Due to the retinotopic projections between retinal hyperfields and cortical hypercolumns, the vector fields VL and VR can also be thought of as vector fields over the left and right retinal hyperfields, respectively.

Representing the extracted orthogonal visual feature vector fields VL and VR over hypercolumns and over retinal hyperfields in this way facilitates a mathematical framework appropriate for development of a Riemannian geometry theory of binocular vision. But this requires a mechanism for quantifying the depth of objects perceived. These depth measures then provide a coordinate system for the Riemannian manifold on which the above vector fields are defined.

2.8. Depth Perception

As can be quickly verified by closing one eye or by seeing depth in a flat two-dimensional (2D) picture, stereopsis is not the only mechanism in the brain for perception of depth. Indeed, a variety of mechanisms have evolved for depth perception. We refer to these as top-down cognitive mechanisms and they work in parallel to estimate the depth of points in the visual world [40,41,56,90]. Top-down cognitive processes employ information derived from occlusions, relative size, texture gradients, shading, height in the visual field, aerial perspective and perspective to estimate depth [91] and they depend on memorized experience [92]. Whenever an estimate of depth is altered by one or other top-down mechanism (e.g., as in the Ames room or the virtual expanding room described below) the geometry of the perceived visual space will change giving rise to an inhomogeneous geometry. This applies particularly to monocular and pictorial visual space but is not to deny that there exists an underlying visual space with a stable Riemannian geometry attributable to the size–distance relationship introduced by the eye.

Usually the depths estimated by the various depth-estimation modules are in agreement, but circumstances can arise where they disagree. Sometimes the contradictions are reconciled into a single coherent perception such as when seeing depth in a picture while at the same time seeing the flat plane of the picture. In other circumstances, one or more of the depth estimates is overruled leading to illusions and the analysis of illusions is important in perceptual science. Artists and magicians often take advantage of this phenomenon to trick the visual systems of their observers. When looking through the peep hole of a trapezoidal-shaped Ames room, for example, a normal room with parallel walls, horizontal floor and rectangular windows is seen rather than the actual distorted trapezoidal shape because experience tells us that rooms are normally shaped this way [93,94,95,96]. The Ames room illusion is compelling even to the extent of seeing people change size as they walk about in the room. Similarly, in the expanding virtual room experiment of Glennerster et al. [97], estimates of depth derived from convergence of the eyes, retinal disparity and optical flow as a person moves about in the virtual room are overruled in favour of a cognitive estimate based on the experience that rooms do not expand as we walk about within them.

Clearly there are many cues that can influence the perception of depth and, as argued by Gilinsky [3], changes in perceived depth can influence the perceived size of an object independently of the angle subtended by the object at the eye. Foley et al. [10] argued that while the retinal image decreases in size in proportion to object distance, the perceived size changes much less. The principal assumption in their model is that, in the computation of perceived extent, the visual angle undergoes a magnifying transformation. Given the variety of cues and cognitive mechanisms that can contribute to the perception of depth, it should not be surprising that experimental conditions have a strong influence on experimental results, particularly on the experimentally measured geometry of 3D perceived visual space.

While not underestimating the importance of top-down cognitive mechanisms, we focus in this paper on binocular depth perception as the only means of obtaining an absolute measure of Euclidean depth. For the vector fields of left and right retinal images encoded during an interval of fixed gaze to have meaning in terms of events in the outside world, the vectors of corresponding left and right hyperfield image features encoded within each hypercolumn during an interval of fixed gaze have to be associated with coordinates of the points and in the outside world projecting on to corresponding left and right hyperfields respectively. In Section 2.9 and Section 2.10 we outline processes able to compute those coordinates.

2.9. Cyclopean Gaze Coordinates

The nervous system has only one bottom-up mechanism for obtaining an absolute measure of the Euclidean distance to gaze points in the environment [91,98]. This module uses afferent information encoding the place and orientation of the head in the environment as well as proprioceptive and vestibular information encoding the angles of the eyes within the head [98]. By minimizing disparity between images on the left and right foveas, the gaze control system adjusts the orientation of the head in the environment as well as the angles of the eyes in the head so that the visual axes of the eyes intersect accurately at the gaze point. The geometry is shown in Figure 1, and is based on the reduced model of the human eye [42] which takes into account the fact that the visual axis does not pass through the centre of rotation of the eye.

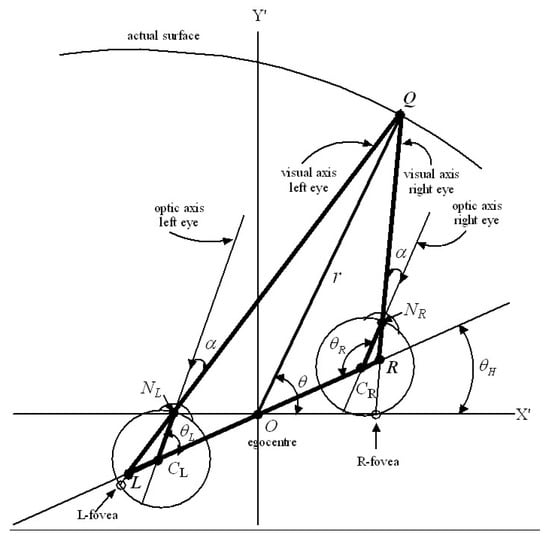

Figure 1.

A schematic 2D diagram based on the reduced model of the human eye [42]. CL and CR are the centres of rotation of the left and right eye, respectively, and NL and NR are the nodal points. The optic axis for each eye connects the centre of rotation to the nodal point. The visual axis for each eye connects the fovea to the nodal point. The distance between CL and CR is known as d. Its midpoint O is known as the egocentre and marks the position of a hypothetical cyclopean eye. The distances CLNL and CRNR are the same for each eye and are known as rE. The angle between the optic axis and the visual axis is also the same for each eye and is typically about 5 degrees in adults. gives the angle of the head relative to a translated external reference frame (, ) and and give the angles of the left and right eyes relative to the head when gaze is fixed on a surface point Q in the environment. The diagram shows the cyclopean gaze vector OQ in relation to the above geometry.

The position of the egocentre O measured with respect to the external reference frame (X,Y,Z) provides a measure of the egocentric place of the head in the environment. Since O is the point where a cyclopean eye would be located (if we had one) we define the line OQ connecting O to the point of fixed gaze Q to be the cyclopean gaze vector. The distances d and rE and the angle are anatomical parameters that change with growth of the head and eye. Since these parameters influence the geometrical optics of images projected on to the retinas it seems reasonable to suggest that the nervous system is able to model them adaptively through experience, for example, by modelling the relationship between the depth of an object and the size of its image on the retina, and by sensing the change in place of the head required to match the image on one retina with the memorized image on the other. The place and orientation of the head in the environment are encoded by neural activity in the hippocampus and parahippocampus so, referring to Figure 1, the angle of the head relative to the translated external coordinates (,) is known, and the angles of rotation and of the left and right eye within the head are sensed proprioceptively. Using the geometry of Figure 1, it can be shown that these known variables , , , d, rE and completely determine the Euclidean distance and angle from each eye to the gaze point as well as the length and direction of the cyclopean gaze vector OQ. This can be demonstrated by basic trigonometry (sine rule and cosine rule) applied to the three triangles NLLCL, NRLCR, and LQR. Importantly, this is not to say that the nervous system ‘does’ trigonometry in the same way we do. It is simply to establish that the information available to it is sufficient to determine uniquely the length and direction of the cyclopean gaze vector.
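The sufficiency argument can be illustrated with a deliberately simplified 2D triangulation. This is a sketch under stated assumptions, not the full geometry of Figure 1: it assumes that the nodal point coincides with the centre of rotation of each eye (rE = 0) and that the visual and optic axes coincide, so that each visual axis is a ray from the centre of rotation. The function name `cyclopean_gaze` and its parameter names are hypothetical. Angles are measured in radians from the straight-ahead direction of the head, positive to the right.

```python
import math

def cyclopean_gaze(d, alpha_left, alpha_right, head_angle=0.0):
    """Length and direction of the cyclopean gaze vector OQ (simplified 2D case).

    Assumptions (not from the paper): eyes at (-d/2, 0) and (+d/2, 0) in a head
    frame whose straight-ahead direction is +y; nodal point at the centre of
    rotation; each eye angle lies strictly between -pi/2 and pi/2.
    """
    denom = math.tan(alpha_left) - math.tan(alpha_right)
    if denom <= 0.0:
        raise ValueError("visual axes do not converge in front of the head")
    y = d / denom                              # forward distance of gaze point Q
    x = -d / 2.0 + y * math.tan(alpha_left)    # lateral offset of Q
    distance = math.hypot(x, y)                # length of OQ
    direction = math.atan2(x, y) + head_angle  # direction of OQ in the external frame
    return distance, direction

# Example: eye angles generated from a known gaze point Q = (0.1, 0.5) metres.
d = 0.065
a_left = math.atan2(0.1 + d / 2.0, 0.5)
a_right = math.atan2(0.1 - d / 2.0, 0.5)
print(cyclopean_gaze(d, a_left, a_right))
```

Including the offsets rE and the angle between the optic and visual axes adds two further triangles to the calculation but does not change the conclusion that the known variables determine OQ.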

Thus we propose that during each interval of fixed gaze the nervous system is able to compute the cyclopean gaze coordinates of the fixed gaze point Q and hold them in working memory within the hypercolumn(s) receiving retinotopic input from the left and right foveal hyperfields. The length of the cyclopean gaze vector OQ provides an egocentric measure of the Euclidean distance (depth) of the gaze point Q while the angles provide a measure of the cyclopean direction of gaze relative to the external reference frame (X,Y,Z). A third angle equal to a rotation about the cyclopean gaze vector OQ is needed to completely specify the cyclopean gaze coordinates. Rolling of the head in the environment as well as internal and external rotation of the eyes in the head introduce a roll angle about the cyclopean gaze vector . However, while this roll angle is important in that it leads to rotation of the perceived visual image (e.g., when lying down or standing on one’s head), we will ignore it temporarily. We show in Section 5.2 that a roll about the gaze vector transforms the positions of all retinal image points in the 3D perceived visual space in an isometric fashion without changing the size or shape of the local image.

2.10. Cyclopean Coordinates of Peripheral Image Points

When gaze is fixed, points q other than the fixed gaze point Q on the surface of an object project with different angles into the two eyes and impinge on different retinal coordinates in the left and right eyes. This creates disparity between the left and right peripheral retinal images. Put alternatively, with the gaze point fixed, corresponding hyperfields on the left and right retinas receive projections from different points and () on the surface of an object in the environment. This is illustrated in Figure 2.

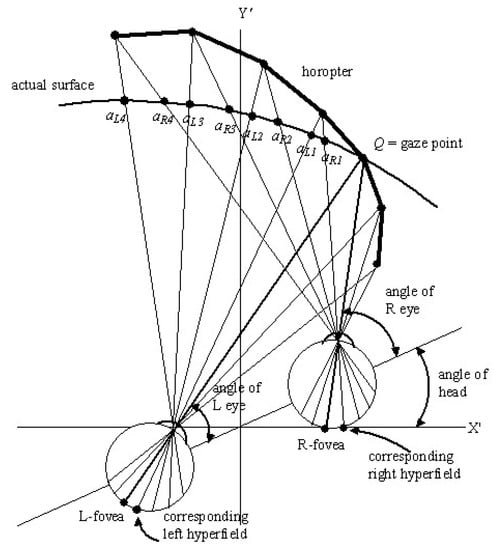

Figure 2.

A schematic 2D diagram illustrating the angle of the head relative to a translated external reference frame (, ) and the angles of the left and right eyes relative to the head when gaze is fixed on a surface point Q in the environment. The left and right eye visual axes are straight lines connecting the fovea through the nodal point of the eye to the gaze point Q. The fan-shaped grids of straight lines passing through the nodal point of each eye connect corresponding left and right retinal hyperfields to points and , respectively, on the surface. The image point projecting to a left retinal hyperfield is translated by a small amount relative to the image point projecting to the corresponding right retinal hyperfield. Thus the points and induce a disparity between the images projected to the corresponding left and right retinal hyperfields. The diagram also includes the hypothetical surface known as an horopter. This contains the points which induce no disparity between the images projected to corresponding left and right hyperfields.

We now propose that the differences between image-point vectors within each hypercolumn are computed and held on-line in binocular minicolumns within the same hypercolumns. In other words, we suggest that the high-dimensional statistics extracted in the form of image-point vectors from hyperfields across the retinas provide a rich encoding of disparity between images on the left and right retinas. Since certain ganglion cells respond to motion in retinal images, this can include disparity of velocity fields as proposed by Cormack et al. [99]. These difference vectors encode the disparity between the images from the points and () on corresponding left and right hyperfields. The image-disparity vectors form a disparity vector field over the hypercolumns. It has been established [100,101] that the geometry of visual disparity fields can be expressed in terms of four differential components; viz., expansion or dilation, curl or rotation, and two components of deformation or shear. An algebraic combination of these operators allows one eye image to be mapped on to the other. Likewise, in the Riemannian geometry theory presented here, we see the gradients, translations, rotations, dilations, and shear of the components of the image-disparity vector field over the hypercolumns as providing detailed measures of the local disparity between images on corresponding left and right retinal hyperfields.
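The four differential components can be made concrete for a two-dimensional positional disparity field. The sketch below is illustrative only and assumes that the local disparity field is summarized by its 2 × 2 Jacobian `J` (the matrix of partial derivatives of the two disparity components with respect to the two retinal coordinates); the function names are hypothetical. It applies the standard first-order decomposition of a 2D vector field, not the 30-dimensional image-disparity vectors proposed here.

```python
import numpy as np

def differential_components(J):
    """Split a 2x2 Jacobian into dilation, rotation and two shear components."""
    J = np.asarray(J, dtype=float)
    dilation = J[0, 0] + J[1, 1]   # expansion (divergence)
    rotation = J[1, 0] - J[0, 1]   # curl
    shear_1 = J[0, 0] - J[1, 1]    # deformation along the coordinate axes
    shear_2 = J[0, 1] + J[1, 0]    # deformation along the diagonals
    return dilation, rotation, shear_1, shear_2

def jacobian_from_components(dilation, rotation, shear_1, shear_2):
    """Algebraic recombination of the four components into the Jacobian."""
    return 0.5 * np.array([
        [dilation + shear_1, shear_2 - rotation],
        [shear_2 + rotation, dilation - shear_1],
    ])

# Hypothetical local disparity gradient.
J = np.array([[0.03, -0.01],
              [0.02, 0.01]])
components = differential_components(J)
print(components)
print(jacobian_from_components(*components))  # recovers J
```

The round trip shows that the four components carry exactly the information in the local gradient of the field, which is why an algebraic combination of them suffices to map one eye's image locally on to the other.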

The image-point vectors and provide sufficient information to accurately reconstruct the left and right hyperfield images. Hence the vectors between corresponding and neighbouring hyperfields in left and right eyes provide sufficient information to accurately reconstruct the difference between the images giving a measure of the shifts , , and () between them. With ‘knowledge’ of these shifts, the grid of overlapping triangles formed by the straight lines emanating from corresponding left and right retinal hyperfields via the nodal point of each eye (Figure 2) allows the cyclopean coordinates and for a line drawn from the egocentre O to each of the points and () to be computed relative to the cyclopean gaze coordinates of the fixed gaze point Q.

We propose that, along with the image-point vectors of all the points and , the cyclopean coordinates and () are also held on line in working memory by minicolumns within the hypercolumns receiving retinotopic projections from the corresponding left and right retinal hyperfields. In other words, during each interval of fixed gaze each hypercolumn encodes the two 30-dimensional vectors and of orthogonal image features extracted from the images on corresponding left and right retinal hyperfields and the cyclopean coordinates and of the points and () projecting the images on to those corresponding left and right hyperfields. To emphasize the fact that the coordinates and () are different even though they are encoded within the same hypercolumn, we use the term cyclopean gaze coordinates for the fixed gaze point Q and the term cyclopean coordinates for all other points and in the peripheral visual field.

Figure 2 also shows the horopter, defined in the Oxford Dictionary to be “a line or surface containing all those points in space of which images fall on corresponding points of the retinae; the aggregate of points of which are seen single in any given position of the eyes” [102]. As can be seen in the figure, this hypothetical curved line/surface contains the gaze point Q, and is constructed by plotting the intersections of all the straight lines that come from corresponding left and right hyperfields and pass through the nodal point of each eye. Because every point on the horopter projects to corresponding left and right retinal hyperfields, an actual surface that mimics it would generate a zero-disparity image. From Figure 2 it can be seen that the shape of the horopter depends on the cyclopean gaze coordinates that in turn depend on the angles of the eyes in the head and the angle of the head relative to an external reference frame. It becomes less curved as the Euclidean distance to the fixed gaze point increases. With the gaze point Q and the orientation of the head fixed, the disparity between points on any actual surface in the environment projecting on to left and right retinal hyperfields increases in a non-linear way as the distance between the actual surface and the horopter increases. Because of this, the disparity between points on any actual surface varies in a non-linear fashion across the retinas depending on how its distance and shape vary from those of the horopter.

Despite this complicated variation of the image-disparity field across the retinas, its non-linear dependence on the location of the gaze point Q, the orientation of the head in the environment, and on the location of actual surfaces in the outside world relative to the horopter, it is well known from random-dot stereogram experiments [90,103,104] that the visual system can extract depth and direction of points on the surfaces of objects in the peripheral visual fields. Random-dot stereograms provide compelling evidence that disparity between left and right retinal images plays an important role in peripheral depth perception. Marr [90] defined disparity to mean the angular discrepancy in the positions of the images of an object in the environment in the two eyes. Marr and Poggio [105] developed a computer algorithm to detect disparities in computer-generated random-dot stereograms. Our method for measuring disparity based on differences between image-point vectors extracted from corresponding retinal hyperfields is slightly different and less demanding on neural resources in that it obviates the need for the stereo correspondence neural network proposed by Marr and Poggio or for local pattern matching (local correlation) mechanisms. Differencing 30 or so left and right hyperfield image features encoded in ocular dominance columns within a hypercolumn pools activity from an extended region of V1 and involves multiple image features. This is not inconsistent with the detailed picture of neural architectures for stereo vision in V1 described by Parker et al. [106].

3. The Three-Dimensional Perceived Visual Space

The size–distance relationship introduced into retinal images by the optics of the eye is independent of the scene being viewed and of the position of the head in the environment. While the retinal image itself changes from one viewpoint to another, the geometry of the 3D perceived visual space derived from stereoscopic vision with estimates of Euclidean depth based on triangulation remains the same regardless of the scene and of the place of the head in the environment. In this section we present theory that describes a means for the visual system to form an internal representation of the 3D perceived visual space and of the visual images in that space viewed from a fixed place.

3.1. Gaze-Based Visuospatial Memory

When the head is in a fixed place and gaze is shifted from one gaze point to another the vector fields VL and VR of image-point vectors and over the hypercolumns described in Section 2.10 are replaced by new image-point vectors and by new vector fields associated with the next gaze point in the scanning sequence. To build a visuospatial memory of an environment through scanning we argue that the information encoded by the vector fields VL and VR during a current interval of fixed gaze must be stored before the gaze is shifted and the information lost. Such memory is accumulated over time and scanning of an environment from a fixed place does not have to occur in one continuous sequence. Images associated with different gaze points from a fixed place can be acquired (and if necessary overwritten) in a piecemeal fashion every time the person passes through that given place.

We propose that, at the end of each interval of fixed gaze, the 30-dimensional image-point vectors encoding left-hyperfield images within each hypercolumn are stored in a gaze-based association memory or G-memory in association with their cyclopean coordinates . Similarly, the 30-dimensional image-point vectors encoding right-hyperfield images within each hypercolumn are stored in the same G-memory in association with their cyclopean coordinates . In other words, the cyclopean coordinates for each point ‘’ in the 3D Euclidean outside space provide an accession code for the G-memory. This concept of an accession code for memory stems from an earlier proposal of ours (see [83] Section 8.3) and is analogous to the way the accession code in a library catalogue points to a book in the library. A particular cyclopean coordinate gives the ‘site’ in the G-memory where two 30-dimensional image-point vectors and are stored. In practice, such a ‘site’ in an association memory network is distributed across the synapses of a large number of neurons in the network and the information is retrieved by activating the network with an associated pattern of neural activity encoding the cyclopean coordinate , as in a Kohonen association neural network (see [83] for further description).

The ‘library accession code’ analogy provides a simplified metaphor for the storage and retrieval of information in an association memory network and is used throughout the rest of the paper. Each site in the G-memory corresponds to a cyclopean image point in the Euclidean environment. As shown in Appendix A the left and right hyperfield image-point vectors and extrapolated from the same image point in the outside space require 30 components (features) to adequately encode the non-linear stochastic characteristics of images on the hyperfields during each interval of fixed gaze. Thus each memory site can be thought of geometrically as a 30-dimensional vector space able to store two 30-dimensional image-point vectors. Because of disparity between left and right retinal images, the two 30-dimensional image-point vectors and stored at the image-point site in the G-memory derive from different locations on the left and right retinas and are encoded within different hypercolumns. Thus, the storage of individual left and right image-point vectors and into the G-memory in association with their respective cyclopean coordinates and performs the task of linking disparate sites on left and right retinas receiving an image from the same image point in the environment.

For a fixed gaze point , the left and right image-point vectors associated with foveal hyperfields are fused by the gaze control system into a single hyperfield image-point vector. However, as the point in the environment moves away from the fixed gaze point , the difference between the left and right image-point vectors and increases because the size of retinal hyperfields and the densities of rods and cones change with retinal eccentricity. The superimposed left and right hyperfield images stored at the same site in the G-memory become less well fused and, consequently, appear more fuzzy.

We define the functional region of central vision to be an area containing all those points in the Euclidean environment where the fuzziness and imprecision of location of the superimposed left and right hyperfield images and stored at the same site in G-memory is acceptable for the visual task at hand. The size of this region varies with the visual resolution required for the particular task and with the Euclidean depth of gaze . It varies with the extent of cluttering in the peripheral visual field [82]. Points in peripheral visual fields where the image-point vectors and stored at the same site in G-memory are so different they cannot be fused into a single vector, give rise to the perception of double images, one from the left eye and one from the right eye, that may appear fuzzy.

As gaze is shifted from one point in the environment to another, large regions of the peripheral visual fields for the various gaze points overlap. Consequently, the sites in G-memory where the peripheral image-point vectors associated with a current point of fixed gaze are to be stored may overlap with sites where image-point vectors associated with previous gaze points are already stored. We propose the following rule for determining whether or not image-point vectors already stored in G-memory are overwritten by the image-point vectors associated with the current gaze point: Image-point vectors at sites in the peripheral visual field are only overwritten if the absolute difference between the left and right image-point vectors already stored at the site is larger than the absolute difference between the two image-point vectors for the same site associated with the current gaze point. Using this rule, the images accumulated in G-memory from any given scanning pattern will consist of those image points that are closest to their regions of functional vision. As the number of gaze points in the scanning pattern increases, the above rule causes the acuity of the accumulated peripheral image to improve. In the extreme case, with an infinite number of gaze points in the scanning pattern, gaze is shifted to every point in the environment and only fused foveal images are stored at every site in an infinite G-memory.
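The overwrite rule can be sketched as a simple keyed store. This is a minimal illustration under several assumptions that are not part of the theory: the class `GMemory` and its method `store` are hypothetical names, a site is addressed by a hashable (for example quantized) cyclopean coordinate, each site holds the left and right vectors as a pair, and the ‘absolute difference’ is taken to be the Euclidean norm of the difference between the two vectors. It makes no claim about how a distributed association network would realize the rule.

```python
import numpy as np

class GMemory:
    """Toy gaze-based memory: one (left, right) pair of vectors per site."""

    def __init__(self):
        self.sites = {}  # coordinate -> (left_vec, right_vec, left-right difference)

    def store(self, coord, left_vec, right_vec):
        """Store the pair unless a better-fused pair already occupies the site.

        Returns True if the site was written, False if the existing pair was kept.
        """
        left_vec = np.asarray(left_vec, dtype=float)
        right_vec = np.asarray(right_vec, dtype=float)
        new_diff = np.linalg.norm(left_vec - right_vec)  # assumed difference measure
        stored = self.sites.get(coord)
        # Overwrite only if the stored pair differs more than the new pair.
        if stored is None or stored[2] > new_diff:
            self.sites[coord] = (left_vec, right_vec, new_diff)
            return True
        return False

memory = GMemory()
site = (10, 3, -2)  # hypothetical quantized cyclopean coordinate
print(memory.store(site, [1.0, 0.0], [1.0, 2.0]))  # empty site: written
print(memory.store(site, [0.0, 0.0], [0.0, 3.0]))  # larger difference: kept as is
print(memory.store(site, [0.5, 0.5], [0.5, 1.5]))  # smaller difference: overwritten
```

Under this rule the stored difference at any site can only decrease as more gaze points are visited, which corresponds to the improvement in acuity of the accumulated peripheral image described above.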

This is consistent with the evidence reviewed by Hulleman and Olivers [107] showing that it is the fixation of gaze that is the fundamental unit underlying the way the cognitive system scans the visual environment for relevant information. The finer the detail required in the search task, the smaller the functional region of central vision and the greater the number of fixations required. The notion of a variable functional region of central vision provides a link between visual attention and peripheral vision and attributes a more important role to peripheral vision as argued by Rosenholtz [82].

3.2. A Riemannian Metric for the G-Memory

As indicated in Section 1, the size of the 2D image on the retina varies in inverse proportion to the Euclidean distance between the nodal point of the eye and the object. A key proposal of the present theory is that the nervous system can model adaptively the relationship between the Euclidean distance to an object in the outside world (sensed by triangulation) and the size of its image on the retina. The notion that this modelling is adaptive is consistent with the observation that the visual system can adapt to growth of the eye and to wearing multifocal glasses. We hypothesize that the modelled size–distance relationship can be applied to three dimensions and encoded in the form of a Riemannian metric stored at every site in the 3D cyclopean G-memory (for simplicity here and in what follows we have dropped the subscript for peripheral points). In other words, we hypothesize that the perceived size of a 3D object in the outside world varies in inverse proportion to the cyclopean Euclidean distance between the egocentre and the object, this being simply a reflection of the 2D size–distance relationship introduced by the optics of the eye.

When the G-memory is endowed with the metric $g$ it can be represented geometrically as a Riemannian manifold $(G, g)$. At each point $p$ in the $(G, g)$ manifold there exists a 3D tangent space $T_pG$ spanned by coordinate basis vectors. Any tangential velocity vector $v$ in the tangent space can be expressed as a linear combination of the coordinate basis vectors spanning that space. The metric inner product of any two vectors $v$ and $w$ in the tangent vector space at the point $p$ is denoted by $\langle v, w \rangle_g$ and the angle $\alpha$ between the two vectors is computed using $\cos\alpha = \langle v, w \rangle_g / (\lVert v \rVert_g \lVert w \rVert_g)$. When the angle between the two vectors is $\pi/2$ rad the two vectors are said to be g-orthogonal. We use this terminology throughout the paper.

The metric distance between any two points $p$ and $q$ in the $(G, g)$ manifold is obtained by integrating the metric speed (i.e., metric norm or g-norm $\lVert v \rVert_g$) of the tangential velocity vector $v$ along the geodesic path connecting the two points. This provides the visual system with a type of measure that can be used to compute distances, lengths and sizes in the perceived visual manifold $(G, g)$. Since the metric $g$ changes from point to point in the manifold, and the metric speed depends on the metric, it follows that the metric distance between any two points $p$ and $q$ depends on where those points are located in the $(G, g)$ manifold. In other words, the metric $g$ stretches or compresses (warps) the perceived visual manifold relative to the 3D Euclidean outside space.
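In standard Riemannian notation, the length of a curve and the resulting metric distance can be written as follows. This is a sketch only: the symbols $\gamma$, $a$, $b$, $L_g$ and $d_g$ are assumed here for illustration and are not taken from the paper.

```latex
L_g(\gamma) = \int_a^b \lVert \dot{\gamma}(t) \rVert_g \, dt
            = \int_a^b \sqrt{ g_{\gamma(t)}\big(\dot{\gamma}(t), \dot{\gamma}(t)\big) } \, dt ,
\qquad
d_g(p, q) = \inf_{\gamma} L_g(\gamma)
```

Here the infimum is taken over piecewise-smooth curves $\gamma$ with $\gamma(a) = p$ and $\gamma(b) = q$; when a minimizing geodesic exists, it attains the infimum.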

In discussing the perception of objects, Frisby and Stone [40] suggest that it would be helpful to dispense with the confusing term size constancy and instead concentrate on the issue of the nature and function of the size representations that are built by our visual system. In keeping with this, we propose that, consistent with the size–distance relationship introduced by the optics of the eye, the Riemannian metric $g$ on the perceived visual manifold varies inversely with the square of the cyclopean Euclidean distance $r$ and is independent of the cyclopean direction. This causes metric distances between neighbouring points in the perceived visual manifold $(G, g)$ to vary in all three dimensions in inverse proportion to cyclopean Euclidean distance $r$ in the outside world. As a result, the perceived size of 3D objects in the outside world varies in inverse proportion to the cyclopean Euclidean distance $r$ without changing their perceived infinitesimal shape. We use the term “infinitesimal shape” because, in attempting to define and establish a measure of subjective distance, Gilinsky states that “the depth dimension becomes perceptively compressed at greater distances” [3] (p. 463). Consequently, as a macroscopic object recedes its shape appears to change because its contraction is greater in the depth extent than in height or width.

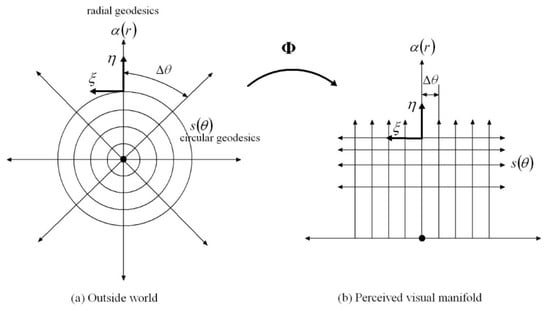

The proposal that the Riemannian metric $g(r)$ varies only as a function of cyclopean Euclidean distance $r$ and is independent of cyclopean direction implies that the metric is constant on concentric spheres in the outside world centred on the egocentre. These concentric spheres play an important role in describing the geometry of the perceived visual manifold and from now on we refer to them simply as the visual spheres.

For the size of an object to be perceived as changing in all three dimensions in inverse proportion to the cyclopean Euclidean distance $r$ without changing its infinitesimal shape, the Riemannian metric $g(r)$ must be such that the g-norm $\lVert v \rVert_g$ of the tangential velocity vector $v$ at each point in the perceived visual manifold is equal to the norm $\lVert v \rVert_{g_E}$ of the velocity vector in Euclidean space (where $g_E$ is the metric of the 3D Euclidean space) divided by the cyclopean Euclidean distance $r$, that is, $\lVert v \rVert_g = \lVert v \rVert_{g_E} / r$ at each point.
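The requirement above can be illustrated numerically. The sketch below assumes egocentric Cartesian coordinates with the egocentre at the origin; the function name `g_norm` and the sample values are hypothetical and chosen only for the example.

```python
import numpy as np

def g_norm(vector, point):
    """g-norm of a tangent vector at `point`: Euclidean norm divided by r."""
    r = np.linalg.norm(point)  # cyclopean Euclidean distance from the egocentre
    if r == 0.0:
        raise ValueError("the metric is undefined at the egocentre (r = 0)")
    return np.linalg.norm(vector) / r

# A small vertical extent of 0.1 (arbitrary units) viewed at increasing distance.
extent = np.array([0.0, 0.0, 0.1])
distances = [1.0, 2.0, 4.0]
sizes = [g_norm(extent, np.array([d, 0.0, 0.0])) for d in distances]
```

Each doubling of the Euclidean distance halves the g-norm of the same small extent, which is the inverse-proportional size relationship the metric is meant to encode.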

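If egocentric Cartesian coordinates $(x, y, z)$ are employed, the Euclidean metric for the outside world is:

$$g_E = dx^2 + dy^2 + dz^2 \tag{1}$$

and the required Riemannian metric for the perceived visual manifold $(G, g)$ is:

$$g = \frac{1}{r^2}\left(dx^2 + dy^2 + dz^2\right), \qquad r^2 = x^2 + y^2 + z^2 \tag{2}$$

That is,

$$g = \frac{1}{r^2}\begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix} \tag{3}$$
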
However, the depth and direction of gaze are best described using spherical coordinates. Thus we require the Riemannian metric of the perceived visual manifold to be expressed in terms of those coordinates. The transformation between spherical coordinates and Cartesian coordinates in Euclidean space is given by:

When the Euclidean metric in Equation (1) is pulled back to spherical coordinates using Equation (4) we get:

Consequently, the Riemannian metric for the perceived visual manifold is:

That is,

where:

In Euclidean space, using spherical coordinates, tangential velocities are related to angular velocities by:

If the inverses of the relationships in Equation (9) are used to express the angular velocities in terms of the tangential velocities, then the coordinate-dependent coefficients of the angular terms in Equation (5) cancel and we obtain:

In other words, to compute the norm of the Euclidean tangential velocity vector in Euclidean spherical coordinates we require the Euclidean metric:

Thus the Riemannian metric matrix required to compute the g-norm of the tangential velocity vector at any point in the perceived visual manifold is:

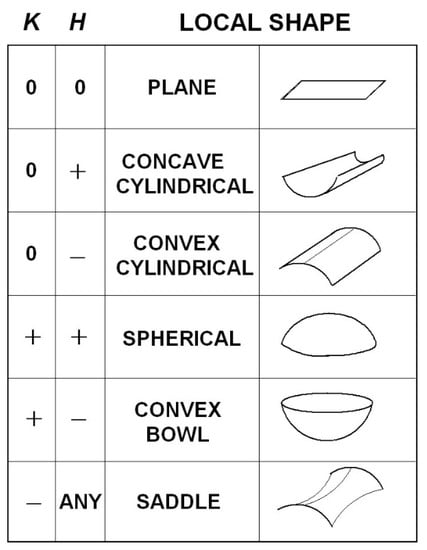
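As a worked sketch of the derivation in this section, assume an azimuth-elevation convention with azimuth $\theta$ and elevation $\phi$. This convention is an assumption made for illustration; the convention used in Equation (4) may differ, which would change only the trigonometric factor on the azimuthal term. Under that assumption:

```latex
\begin{aligned}
x &= r\cos\phi\cos\theta, \qquad y = r\cos\phi\sin\theta, \qquad z = r\sin\phi, \\
g_E &= dr^2 + r^2\cos^2\!\phi \, d\theta^2 + r^2 \, d\phi^2, \\
g &= \frac{1}{r^2}\, g_E = \frac{dr^2}{r^2} + \cos^2\!\phi \, d\theta^2 + d\phi^2, \\
v_r &= \dot{r}, \qquad v_\theta = r\cos\phi \, \dot{\theta}, \qquad v_\phi = r \, \dot{\phi}, \\
\lVert v \rVert_{g_E}^2 &= v_r^2 + v_\theta^2 + v_\phi^2, \qquad
\lVert v \rVert_{g}^2 = \frac{1}{r^2}\left( v_r^2 + v_\theta^2 + v_\phi^2 \right).
\end{aligned}
```

In the tangential-velocity components the Euclidean metric matrix is the identity and the metric matrix of the perceived visual manifold is the identity scaled by $1/r^2$, which is the cancellation described in the text.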

4. Quantifying the Geometry of the Perceived Visual Manifold

Given the metric $g$ in Equation (7) and/or Equation (12) depending on the coordinates employed, the theorems of Riemannian geometry can be applied to compute measures of the warping of the perceived visual manifold $(G, g)$. In this section we use the geometry to quantify: (i) the relation between perceived depth and Euclidean distance in the outside world; (ii) the illusory accelerations associated with an object moving at constant speed in a straight line in the outside world; (iii) the perceived curvatures and accelerations of lines in the outside world; (iv) the curved accelerating trajectories (geodesic trajectories) in the outside world perceived as constant speed straight lines; (v) the Christoffel symbols describing the change of coordinate basis vectors from point to point in the perceived visual manifold; and (vi) the curvature at every point in the perceived visual manifold. Together, these Riemannian measures provide a detailed quantitative description of the warped geometry of the perceived visual manifold that can be compared with measures obtained experimentally.

4.1. The Relationship Between Perceived Depth and Euclidean Distance

As described by Lee [57] (Chapter 3), two metrics $g_1$ and $g_2$ on a Riemannian manifold $M$ are said to be conformal if there is a positive, real-valued, smooth function $f$ on the manifold such that $g_2 = f g_1$. Two Riemannian manifolds $(M, g)$ and $(\tilde{M}, \tilde{g})$ are said to be conformally equivalent if there is a diffeomorphism $\varphi : M \to \tilde{M}$ (i.e., one-to-one, onto, smooth, invertible map) between them such that the pull back $\varphi^{*}\tilde{g}$ is conformal to $g$. Conformally equivalent manifolds have the same angles between tangent vectors at each point but the norms of the vectors are different and the lengths of curves and distances between points are different. Conformal mappings between conformally equivalent manifolds preserve both the angles and the infinitesimal shape of objects but not their size or curvature. For example, a conformal transformation of a Euclidean plane with Cartesian rectangular coordinates intersecting at right angles produces a compact plane with curvilinear coordinates that nevertheless still intersect at right angles [108].
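The angle-preserving property of conformal metrics can be checked with a few lines of code. This is a minimal sketch at a single point, where the conformal function reduces to one positive number; the names `inner`, `angle` and `scale` are assumptions introduced for the example.

```python
import numpy as np

def inner(u, v, scale=1.0):
    """Inner product for the metric scale * g_E at one point (scale > 0)."""
    return scale * float(np.dot(u, v))

def angle(u, v, scale=1.0):
    """Angle between u and v measured with the metric scale * g_E."""
    cos_a = inner(u, v, scale) / np.sqrt(inner(u, u, scale) * inner(v, v, scale))
    return float(np.arccos(np.clip(cos_a, -1.0, 1.0)))

u = np.array([1.0, 0.0, 0.0])
v = np.array([1.0, 1.0, 0.0])
f = 0.25  # example value of the conformal function at this point

euclid_angle = angle(u, v)       # angle under the Euclidean metric
scaled_angle = angle(u, v, f)    # angle under the conformally scaled metric
norm_ratio = np.sqrt(inner(v, v, f) / inner(v, v))  # equals sqrt(f)
```

The scale factor cancels in the cosine, so the two angles agree, while the norm of every vector is multiplied by the square root of the conformal function.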

Let us now consider two spaces, one being $(\mathbb{R}^3, g_E)$, corresponding to the Euclidean outside world with Euclidean metric $g_E$ (Equation (5)) and Euclidean distance $r$, and the other being $(G, g)$, corresponding to the perceived visual manifold with metric $g$ (Equations (7) or (12)) and its own metric distance. The Riemannian manifold $(G, g)$ is, by definition, locally Euclidean and can be mapped diffeomorphically to the Euclidean space $\mathbb{R}^3$. The symbol $r$ can be used, therefore, to represent both the distance from the origin in $\mathbb{R}^3$ and the distance from the egocentre in $G$. We use this convention throughout the paper; however, in this section (and only this section) we distinguish the Euclidean distance from the perceived distance because we are interested in the relationship between them. Comparing Equation (5) and Equation (7), it can be deduced that $g = g_E / r^2$. This shows that the 3D Euclidean outside world and the 3D perceived visual manifold are conformally equivalent with conformal metrics $g_E$ and $g$ and with $1/r^2$ equalling the positive, smooth, real-valued function mentioned above.

Thus, from the theory of conformal geometry the following properties of the perceived visual manifold $(G, g)$ follow: (a) Objects appear to change in size in inverse proportion to the Euclidean distance $r$ in the Euclidean outside world without changing their apparent infinitesimal shape. (b) The perceived visual manifold is isotropic at the egocentre; i.e., the apparent change in size with Euclidean distance is the same in all directions radiating out from the egocentric origin. (c) There exists a diffeomorphic map between the two manifolds but it does not preserve the metric; i.e., the map is not an isometry. While there is a one-to-one mapping between points in the outside space and points in the perceived visual manifold, the two spaces are not isometric so distances between points are not preserved. (d) Angles between vectors in corresponding tangent spaces of the two manifolds are preserved. (e) As the Euclidean distance $r$ increases towards infinity in the outside world the perceived distance in $(G, g)$ converges uniformly to a limit point. Stars in the night sky, for example, appear as dots of light in the dome of the sky. (f) If $r$ is the Euclidean distance from O to a point in the outside world, the perceived distance $\rho(r)$ from O to the corresponding point in $(G, g)$ can be computed using Equation (2) by integrating the metric norm of the unit radial velocity vector along the radial path to obtain:

$$\rho(r) = \int_{1}^{r} \frac{1}{s} \, ds \tag{13}$$

The lower limit has been fixed to unity to remove ambiguity about the arbitrary constant of integration and, consequently, the integral is given by the following function of its upper limit:

$$\rho(r) = \ln r \tag{14}$$

as illustrated graphically in Figure 3. The perceived distance is foreshortened in all radial directions in the perceived visual manifold relative to the corresponding distance in the Euclidean outside space. The amount of foreshortening increases with increasing $r$.

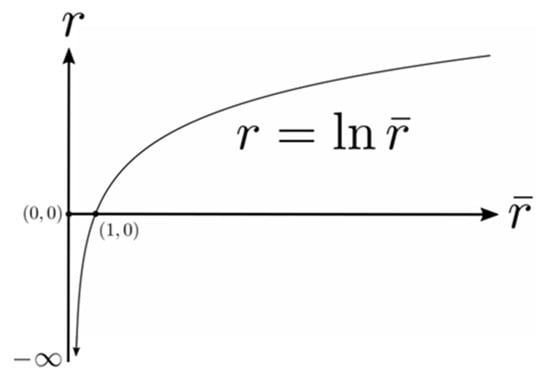

Figure 3.

A graph showing the logarithmic relationship between the perceived distance and the actual Euclidean distance $r$. See text for details.

It is interesting to notice in Figure 3 that according to the logarithmic relationship the perceived distance is negative for values of $r$ less than one. This does not immediately make intuitive sense because perceived distance should always be a positive number. In fact it implies an anomaly, the existence of a hole about the egocentric origin in $(G, g)$ that cannot be perceived. This does make intuitive sense because we cannot see our own head let alone our own ego. The existence of a hole at the origin has consequences for the perception of areas and volumes containing the origin, but we will not explore that further here.
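The logarithmic relationship, including the sign change at unit distance, can be reproduced with a short numerical check. The sketch below assumes the radial integrand is $1/s$ with lower limit one, as described in the text; the function names are hypothetical.

```python
import numpy as np

def perceived_distance(r):
    """Closed form of the radial integral of 1/s from 1 to r."""
    return np.log(r)

def perceived_distance_numeric(r, n=100_001):
    """Trapezoidal approximation of the same integral (sanity check)."""
    s = np.linspace(1.0, r, n)
    f = 1.0 / s
    return float(np.sum(0.5 * (f[1:] + f[:-1]) * np.diff(s)))

for r in (0.5, 1.0, 2.0, 10.0, 100.0):
    print(f"r = {r:6.1f}  perceived = {perceived_distance(r):+.4f}")
```

Values of `r` below one give a negative result, zero is reached at `r = 1`, and the growth beyond that is increasingly slow, which is the foreshortening shown in Figure 3.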

4.2. The Geodesic Spray Field

As defined in Riemannian geometry [109] (Chapter IV), the geodesic spray field,

is a second-order vector field in the double-tangent bundle $TTG$ over the tangent bundle $TG$, defined at each point $p$ in the perceived visual manifold $(G, g)$ and at each velocity $v$ in the tangent vector space $T_pG$ at $p$. The geodesic spray field is well known in Riemannian geometry and we have previously given a detailed description of it [52]. At each point $p$ in the manifold $(G, g)$, there exists a tangent space $T_pG$ containing the velocity vector (or the direction vector) $v$, and at each point $(p, v)$ in the tangent space, there exists a tangent space on the tangent space; that is, a double tangent space. The double tangent space contains the geodesic spray field. It is not a tensor field, so it depends on the chosen coordinates, and since it can be precomputed as described below it can be regarded as an inherent part of the perceived visual manifold $(G, g)$. It has two parts, known as the horizontal part and the vertical part. The horizontal part equals the tangential velocity $v$ in the 3D tangent vector space at each point $p$. The vertical part provides a measure of the negative of the illusory acceleration perceived by a person looking at an object that is actually moving relatively in the outside world at constant speed (the negative sign is explained in the next section).

To illustrate, telegraph poles observed from a car moving at constant speed along a straight road appear not only to loom in size but also to accelerate as they approach. This common example shows that our perceptions of the position, velocity and acceleration of moving objects are distorted in ways consistent with the proposed warped geometry of the visual system. The illusory acceleration can be attributed to the metric $g$ in Equations (7) and (12) causing the apparent distance between neighbouring points in $(G, g)$ to appear to increase as the distance $r$ in the Euclidean outside world decreases. Consequently, an object appears to travel through greater distances per unit time as it gets closer to the observer and, therefore, appears to accelerate as it approaches.
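The telegraph-pole example can be simulated directly from the proposed metric. In the sketch below the observer sits at the egocentre and the pole moves past at constant Euclidean velocity; the lateral offset, speed and time samples are hypothetical values chosen for illustration, and the perceived speed is taken to be the Euclidean speed divided by the distance $r$.

```python
import numpy as np

def perceived_speed(position, velocity):
    """Metric speed of a moving point: Euclidean speed divided by r."""
    r = np.linalg.norm(position)
    return np.linalg.norm(velocity) / r

# Pole approaching along the x-axis at 20 units/s, offset 5 units to the side.
velocity = np.array([-20.0, 0.0, 0.0])
times = np.linspace(0.0, 4.0, 9)
positions = np.array([[100.0 - 20.0 * t, 5.0, 0.0] for t in times])
speeds = np.array([perceived_speed(p, velocity) for p in positions])

# Finite-difference estimate of the illusory acceleration between samples.
illusory_accel = np.diff(speeds) / np.diff(times)
```

Although the Euclidean speed is constant, the perceived speed rises at every step of the approach, so the finite-difference acceleration is positive throughout.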

The velocity $v$ at each point $p$ is measured relative to the cyclopean coordinate basis vectors spanning the 3D tangent vector space $T_pG$. However, warping of the perceived visual manifold causes these basis vectors to change relative to each other from point to point in the manifold. As a result, since the velocity at each point is measured with respect to these basis vectors, their changes from point to point give rise to apparent changes in the velocity vector $v$, thereby inducing illusory accelerations. These illusory accelerations do not happen in flat Euclidean space. The negative of the geodesic spray vector provides a measure of this illusory acceleration at each position and velocity $(p, v)$ in the tangent bundle $TG$ (i.e., union of all the tangent spaces over $G$). The geodesic spray field on the tangent bundle can be precomputed and can, therefore, be regarded as an inherent part of the perceived visual manifold $(G, g)$.

As shown by Lang [109] and by Marsden and Ratiu [110], the acceleration part of the geodesic spray field is given by the equation: