Audio’s Impact on Deep Learning Models: A Comparative Study of EEG-Based Concentration Detection in VR Games

Abstract

1. Introduction

1.1. Motivation

1.2. Literature Review

1.3. Contribution and Article Structure

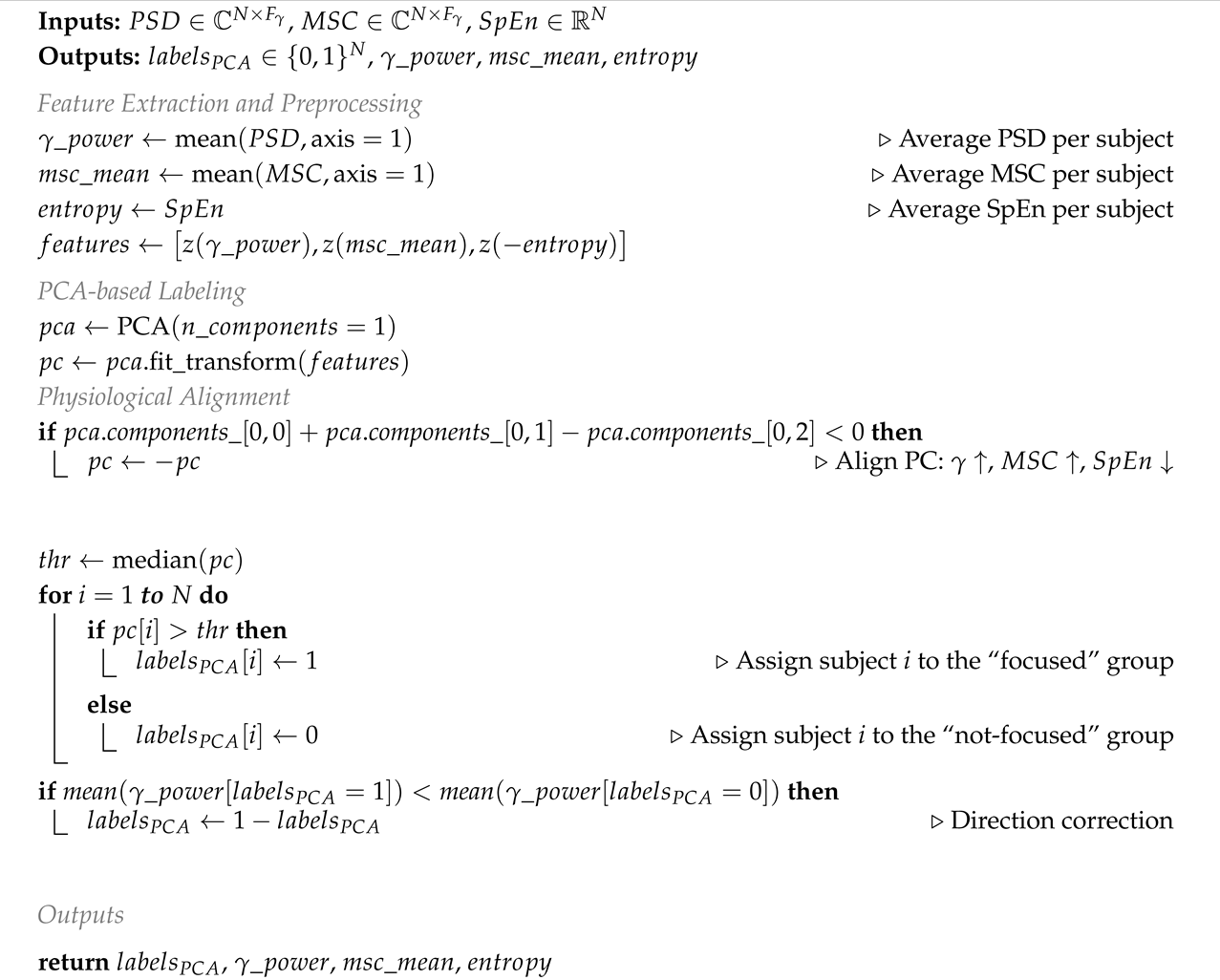

- A preprocessing stage for EEG signals was developed, specifically adapted for training deep learning models. This stage includes the normalization of neurophysiological features and the automatic labeling of cognitive states (focused/not-focused) through a proposed algorithm based on Principal Component Analysis (PCA).

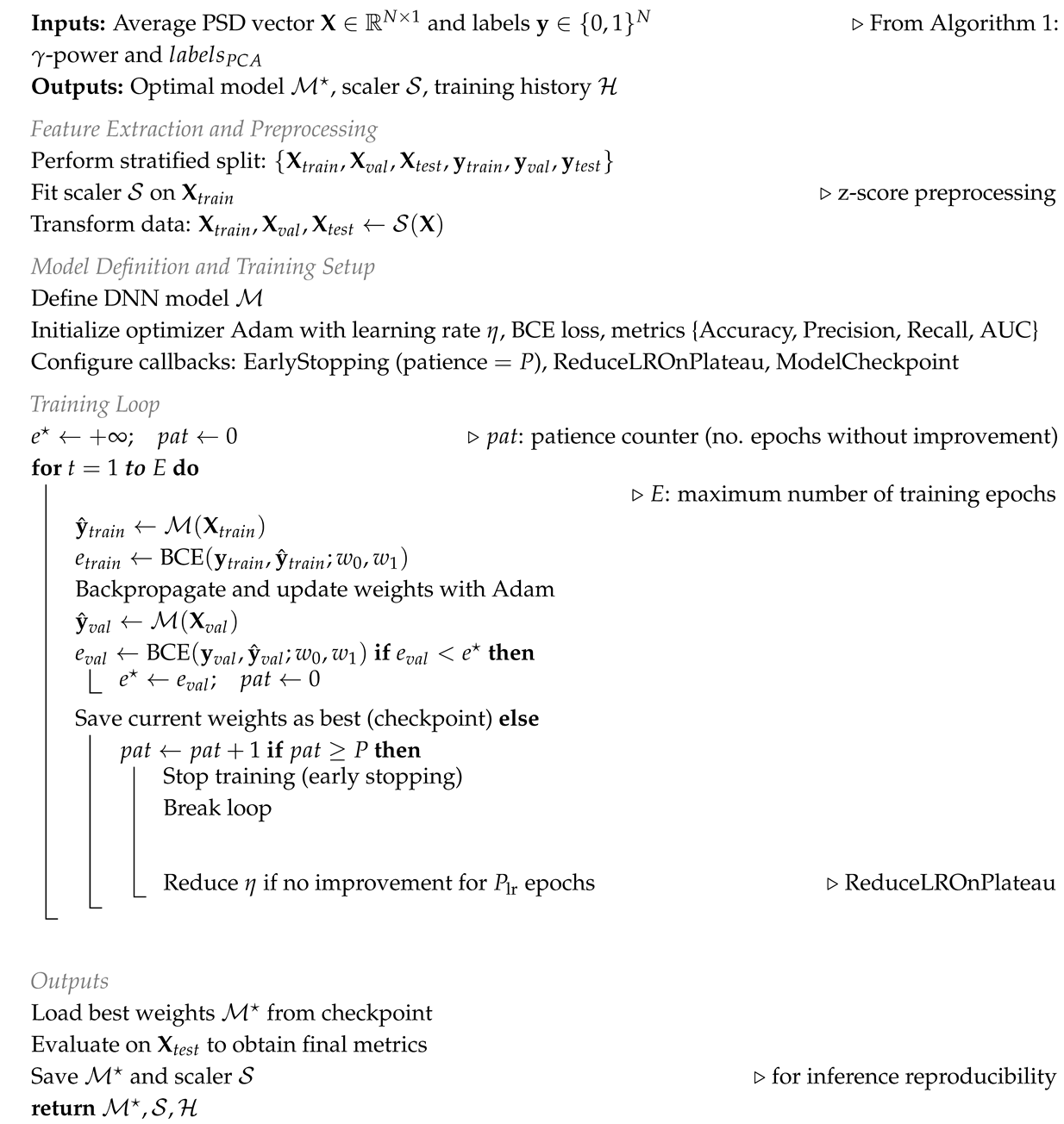

- The detection of cognitive concentration was formalized as a supervised classification problem, using a Deep Neural Network (DNN) trained on neuroscientific metrics extracted from EEG signals.

- An optimized DNN training scheme was proposed, achieving higher classification accuracy than the state-of-the-art baseline models against which it was compared.

- Different configurations of the experimental dataset were evaluated, considering scenarios with and without audio during VR gaming sessions, which allowed the identification of the most suitable conditions for robust DNN training.

2. Materials and Methods

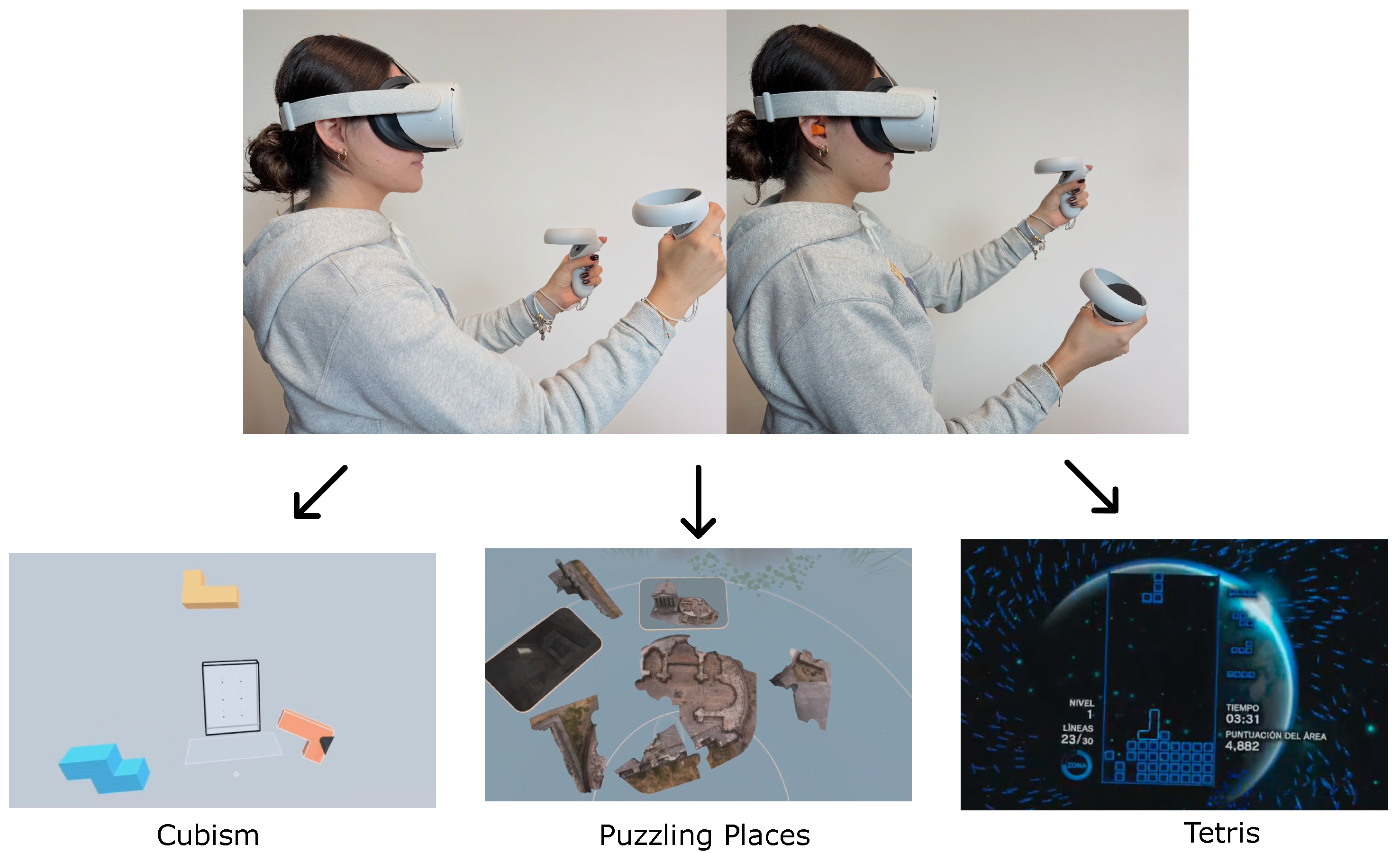

2.1. EEG Dataset Description

2.1.1. Subject Recruitment

- Calibration of the VR equipment (emphasizing comfort and correct visualization).

- 15 min gameplay session with audio + continuous EEG recording.

- 60 min resting period (to minimize cognitive fatigue).

- Switch to the next VR game title.

2.1.2. Experimental Setup

2.2. EEG Signal Analysis

2.3. Frequency Domain Analysis

2.4. Neuroscientific Metrics

2.4.1. Magnitude-Squared Coherence

2.4.2. Spectral Entropy

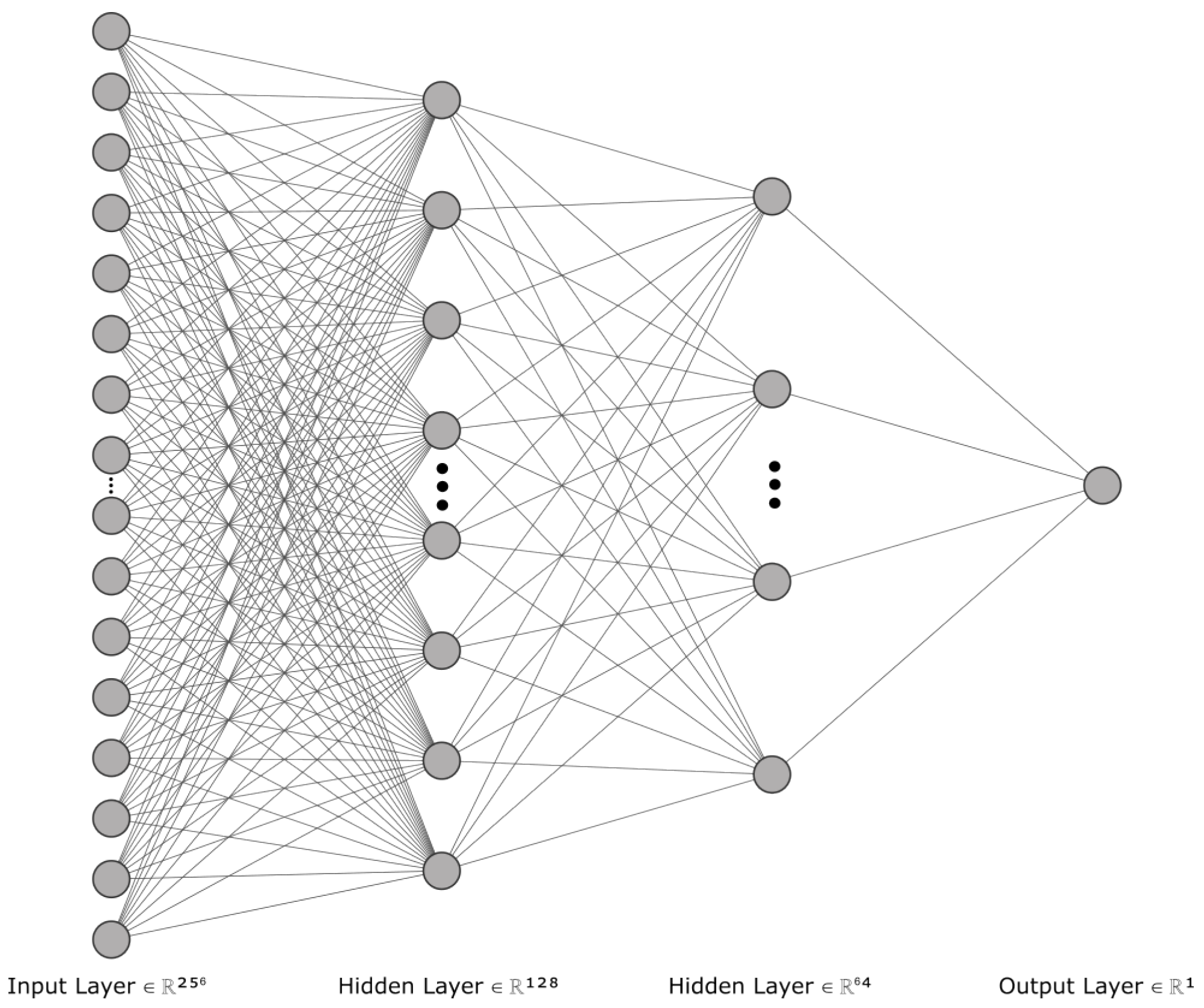

2.5. Deep Neural Network Model

2.5.1. Signal Preprocessing

Algorithm 1: Automatic Labeling of Focused State using PCA
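Only the caption of Algorithm 1 survives here. As a rough illustration of PCA-based automatic labeling, the sketch below assumes a per-window feature matrix with columns [PSD, MSC, SpEn], projects the standardized features onto the first principal component, orients that component using the label table (Focused: high PSD, high MSC, low SpEn), and splits at the median score. The column order, the orientation step, and the median threshold are assumptions made for illustration, not the authors' published rule.

```python
import numpy as np

def pca_focus_labels(features: np.ndarray) -> np.ndarray:
    """Label each window as focused (1) or not focused (0).

    features: shape (n_windows, 3), columns assumed to be [PSD, MSC, SpEn].
    Hypothetical rule: first-principal-component score, split at the median.
    """
    # Standardize each metric (z-score) so no feature dominates by scale
    z = (features - features.mean(axis=0)) / features.std(axis=0)
    # Principal axes are the right singular vectors of the standardized data
    _, _, vt = np.linalg.svd(z, full_matrices=False)
    pc1 = vt[0]
    # PCA sign is arbitrary: orient PC1 so it grows with PSD and MSC and
    # shrinks with SpEn, matching the "Focused" row of the label table
    expected = np.array([1.0, 1.0, -1.0])
    if pc1 @ expected < 0:
        pc1 = -pc1
    scores = z @ pc1
    # Median split (an assumption) yields two balanced classes
    return (scores > np.median(scores)).astype(int)
```

Because the median split forces balanced classes, it matches the 15/15 test supports reported in the appendix, but it may not reflect how the original algorithm sets its threshold.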

2.5.2. Deep Learning Model

Algorithm 2: DNN Training for Focused-State Classification
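The configuration table lists a 70%/15%/15% train/validation/test split with NumPy and TensorFlow seeds of 42, and every classification report shows 15 test samples per class. A minimal stratified split consistent with those numbers is sketched below. The stratification itself, the use of `np.random.default_rng(42)` rather than the legacy global `np.random.seed(42)`, and the 200-window example size are assumptions.

```python
import numpy as np

def stratified_split(labels, train_frac=0.70, val_frac=0.15, seed=42):
    """Return (train_idx, val_idx, test_idx), preserving class proportions."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    train, val, test = [], [], []
    for cls in np.unique(labels):
        # Shuffle the indices of this class reproducibly
        idx = rng.permutation(np.flatnonzero(labels == cls))
        n_train = int(round(train_frac * idx.size))
        n_val = int(round(val_frac * idx.size))
        train.append(idx[:n_train])
        val.append(idx[n_train:n_train + n_val])
        test.append(idx[n_train + n_val:])  # remainder (about 15%) goes to test
    return np.concatenate(train), np.concatenate(val), np.concatenate(test)
```

With 100 windows per class (a hypothetical size), this yields 140/30/30 windows and a test set of 15 focused and 15 not-focused samples.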

2.5.3. DNN Architecture

2.5.4. Performance Metrics

3. Results

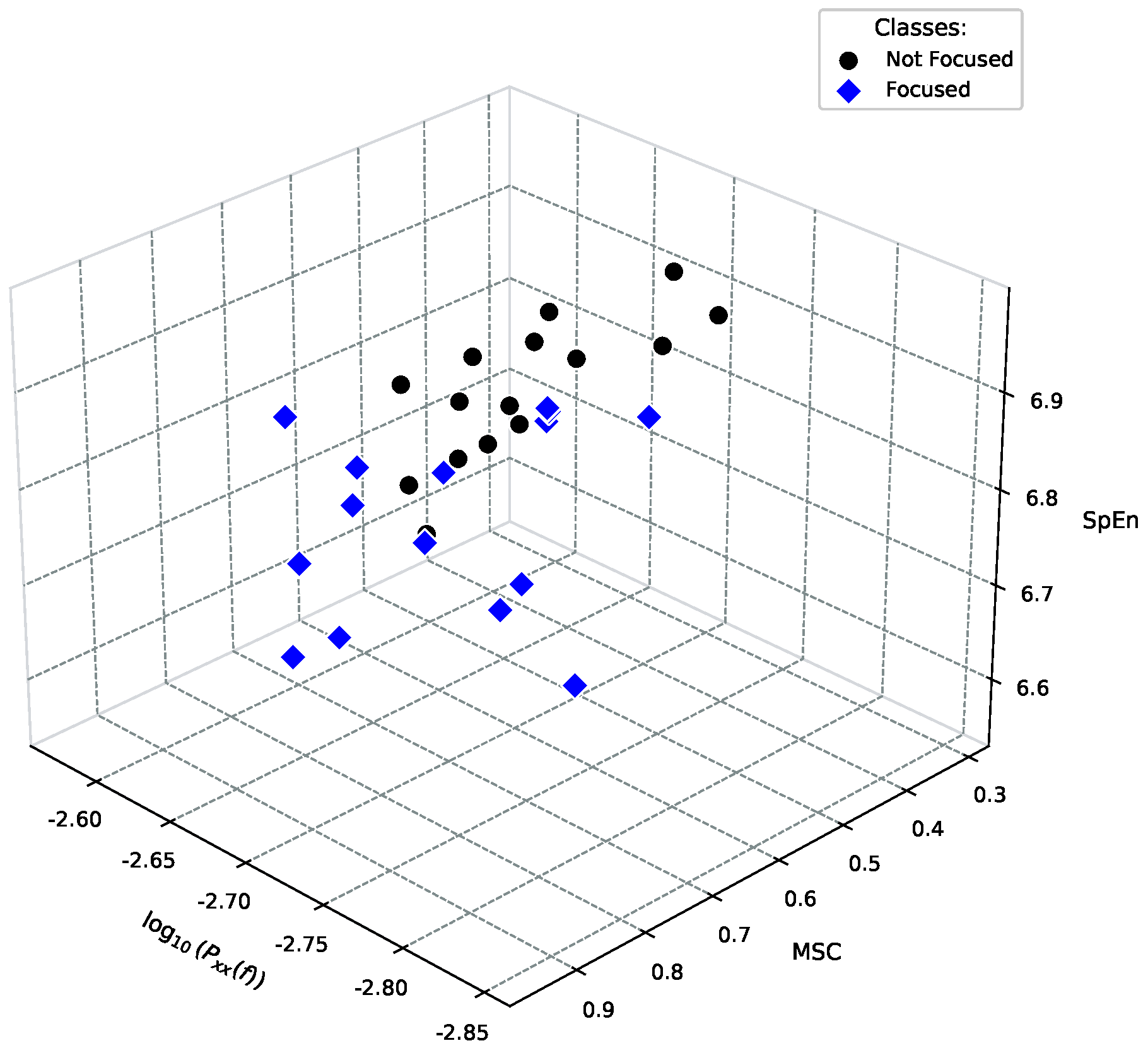

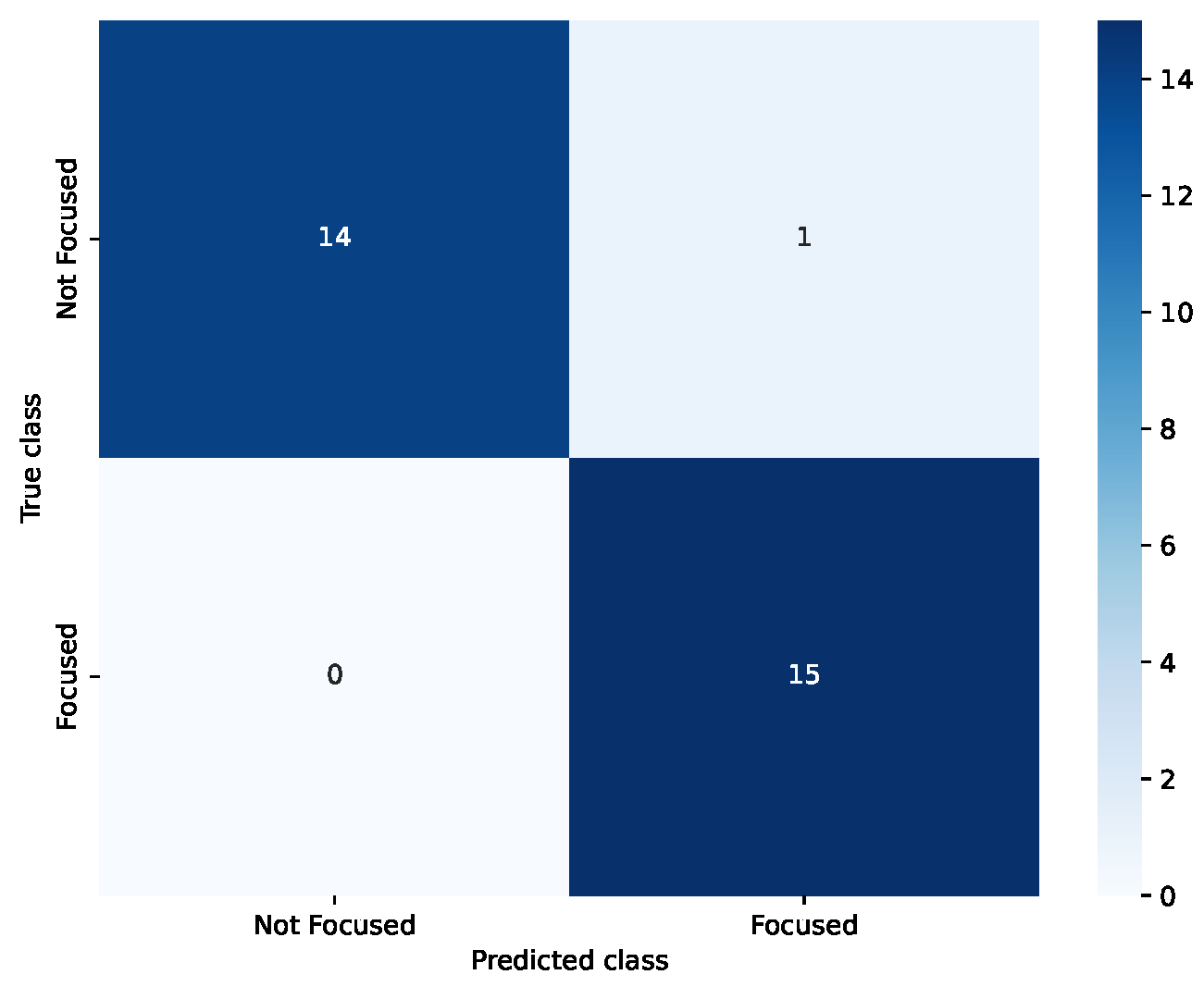

3.1. Experiment One

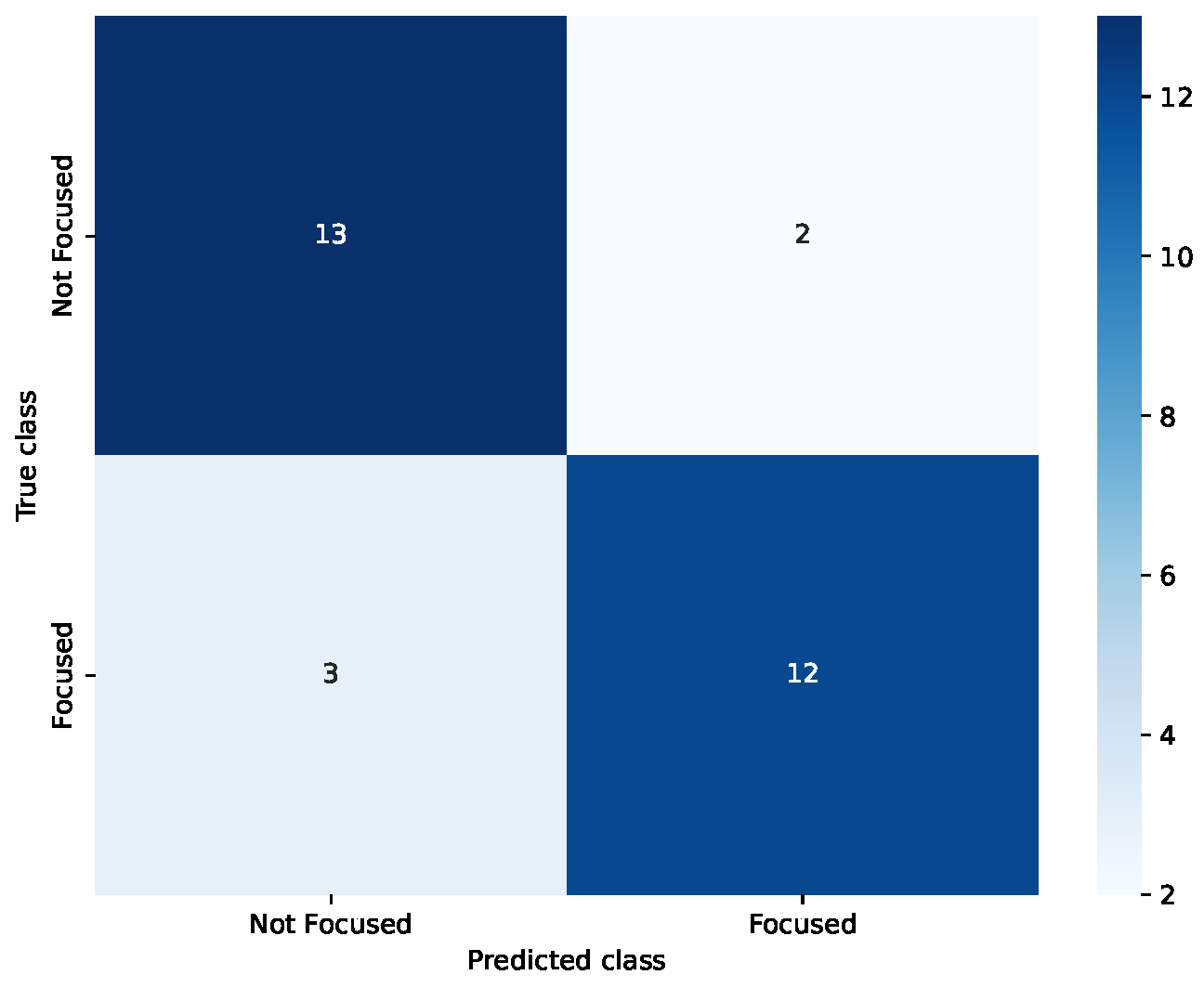

3.2. Experiment Two

3.3. Experiment Three

3.4. Experiment Four

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix A.1

| Run | Class | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|---|
| 10 | Not Focused | 0.82 | 0.93 | 0.88 | 15 |
| | Focused | 0.92 | 0.80 | 0.86 | 15 |
| | Accuracy | | | 0.87 | 30 |
| | Macro avg | 0.87 | 0.87 | 0.87 | 30 |
| | Weighted avg | 0.87 | 0.87 | 0.87 | 30 |
| 13 | Not Focused | 0.82 | 0.93 | 0.88 | 15 |
| | Focused | 0.92 | 0.80 | 0.86 | 15 |
| | Accuracy | | | 0.87 | 30 |
| | Macro avg | 0.87 | 0.87 | 0.87 | 30 |
| | Weighted avg | 0.87 | 0.87 | 0.87 | 30 |
| 17 | Not Focused | 0.82 | 0.93 | 0.88 | 15 |
| | Focused | 0.92 | 0.80 | 0.86 | 15 |
| | Accuracy | | | 0.87 | 30 |
| | Macro avg | 0.87 | 0.87 | 0.87 | 30 |
| | Weighted avg | 0.87 | 0.87 | 0.87 | 30 |
| 18 | Not Focused | 1.00 | 0.93 | 0.97 | 15 |
| | Focused | 0.94 | 1.00 | 0.97 | 15 |
| | Accuracy | | | 0.97 | 30 |
| | Macro avg | 0.97 | 0.97 | 0.97 | 30 |
| | Weighted avg | 0.97 | 0.97 | 0.97 | 30 |
| 25 | Not Focused | 0.67 | 0.93 | 0.78 | 15 |
| | Focused | 0.89 | 0.53 | 0.67 | 15 |
| | Accuracy | | | 0.73 | 30 |
| | Macro avg | 0.78 | 0.73 | 0.72 | 30 |
| | Weighted avg | 0.78 | 0.73 | 0.72 | 30 |
| 34 | Not Focused | 0.79 | 0.73 | 0.76 | 15 |
| | Focused | 0.75 | 0.80 | 0.77 | 15 |
| | Accuracy | | | 0.77 | 30 |
| | Macro avg | 0.77 | 0.77 | 0.77 | 30 |
| | Weighted avg | 0.77 | 0.77 | 0.77 | 30 |
| 35 | Not Focused | 0.90 | 0.60 | 0.72 | 15 |
| | Focused | 0.70 | 0.93 | 0.80 | 15 |
| | Accuracy | | | 0.77 | 30 |
| | Macro avg | 0.80 | 0.77 | 0.76 | 30 |
| | Weighted avg | 0.80 | 0.77 | 0.76 | 30 |
| 39 | Not Focused | 0.82 | 0.93 | 0.88 | 15 |
| | Focused | 0.92 | 0.80 | 0.86 | 15 |
| | Accuracy | | | 0.87 | 30 |
| | Macro avg | 0.87 | 0.87 | 0.87 | 30 |
| | Weighted avg | 0.87 | 0.87 | 0.87 | 30 |
| 48 | Not Focused | 0.70 | 0.93 | 0.80 | 15 |
| | Focused | 0.90 | 0.60 | 0.72 | 15 |
| | Accuracy | | | 0.77 | 30 |
| | Macro avg | 0.80 | 0.77 | 0.76 | 30 |
| | Weighted avg | 0.80 | 0.77 | 0.76 | 30 |
| 49 | Not Focused | 0.88 | 0.93 | 0.90 | 15 |
| | Focused | 0.93 | 0.87 | 0.90 | 15 |
| | Accuracy | | | 0.90 | 30 |
| | Macro avg | 0.90 | 0.90 | 0.90 | 30 |
| | Weighted avg | 0.90 | 0.90 | 0.90 | 30 |

Appendix A.2

| Run | Class | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|---|
| 11 | Not Focused | 0.56 | 1.00 | 0.71 | 15 |
| | Focused | 1.00 | 0.20 | 0.33 | 15 |
| | Accuracy | | | 0.60 | 30 |
| | Macro avg | 0.78 | 0.60 | 0.52 | 30 |
| | Weighted avg | 0.78 | 0.60 | 0.52 | 30 |
| 22 | Not Focused | 0.65 | 0.87 | 0.74 | 15 |
| | Focused | 0.80 | 0.53 | 0.64 | 15 |
| | Accuracy | | | 0.70 | 30 |
| | Macro avg | 0.73 | 0.70 | 0.69 | 30 |
| | Weighted avg | 0.72 | 0.70 | 0.69 | 30 |
| 23 | Not Focused | 0.59 | 0.87 | 0.70 | 15 |
| | Focused | 0.75 | 0.40 | 0.52 | 15 |
| | Accuracy | | | 0.63 | 30 |
| | Macro avg | 0.67 | 0.63 | 0.61 | 30 |
| | Weighted avg | 0.67 | 0.63 | 0.61 | 30 |
| 46 | Not Focused | 0.80 | 0.53 | 0.64 | 15 |
| | Focused | 0.65 | 0.87 | 0.74 | 15 |
| | Accuracy | | | 0.70 | 30 |
| | Macro avg | 0.73 | 0.70 | 0.69 | 30 |
| | Weighted avg | 0.72 | 0.70 | 0.69 | 30 |
| 48 | Not Focused | 0.56 | 1.00 | 0.71 | 15 |
| | Focused | 1.00 | 0.20 | 0.33 | 15 |
| | Accuracy | | | 0.60 | 30 |
| | Macro avg | 0.78 | 0.60 | 0.52 | 30 |
| | Weighted avg | 0.78 | 0.60 | 0.52 | 30 |
| 63 | Not Focused | 0.70 | 0.47 | 0.56 | 15 |
| | Focused | 0.60 | 0.80 | 0.69 | 15 |
| | Accuracy | | | 0.63 | 30 |
| | Macro avg | 0.65 | 0.63 | 0.62 | 30 |
| | Weighted avg | 0.65 | 0.63 | 0.62 | 30 |
| 72 | Not Focused | 0.81 | 0.87 | 0.84 | 15 |
| | Focused | 0.86 | 0.80 | 0.83 | 15 |
| | Accuracy | | | 0.83 | 30 |
| | Macro avg | 0.83 | 0.83 | 0.83 | 30 |
| | Weighted avg | 0.83 | 0.83 | 0.83 | 30 |
| 81 | Not Focused | 0.50 | 1.00 | 0.67 | 15 |
| | Focused | 0.00 | 0.00 | 0.00 | 15 |
| | Accuracy | | | 0.50 | 30 |
| | Macro avg | 0.25 | 0.50 | 0.33 | 30 |
| | Weighted avg | 0.25 | 0.50 | 0.33 | 30 |
| 94 | Not Focused | 0.00 | 0.00 | 0.00 | 15 |
| | Focused | 0.50 | 1.00 | 0.67 | 15 |
| | Accuracy | | | 0.50 | 30 |
| | Macro avg | 0.25 | 0.50 | 0.33 | 30 |
| | Weighted avg | 0.25 | 0.50 | 0.33 | 30 |
| 96 | Not Focused | 0.50 | 0.07 | 0.12 | 15 |
| | Focused | 0.50 | 0.93 | 0.65 | 15 |
| | Accuracy | | | 0.50 | 30 |
| | Macro avg | 0.50 | 0.50 | 0.38 | 30 |
| | Weighted avg | 0.50 | 0.50 | 0.38 | 30 |

References

1. GomezRomero-Borquez, J.; Del-Valle-Soto, C.; Del-Puerto-Flores, J.A.; Briseño, R.A.; Varela-Aldás, J. Neurogaming in Virtual Reality: A Review of Video Game Genres and Cognitive Impact. Electronics 2024, 13, 1683.

2. Chuah, Y.K.; Toa, C.K.; Goh, S.K.; Chan, C.K.; Takada, H. Emotion Classification Through EEG Signals: The EmoSense Model. In Proceedings of the 2024 International Conference on Computing Innovation, Intelligence, Technologies and Education, Sepang, Selangor, Malaysia, 5–7 September 2024; pp. 1–6.

3. Fernandes, J.V.M.R.; Alexandria, A.R.d.; Marques, J.A.L.; Assis, D.F.d.; Motta, P.C.; Silva, B.R.D.S. Emotion Detection from EEG Signals Using Machine Deep Learning Models. Bioengineering 2024, 11, 782.

4. Attallah, O.; Mamdouh, M.; Al-Kabbany, A. Cross-Context Stress Detection: Evaluating Machine Learning Models on Heterogeneous Stress Scenarios Using EEG Signals. AI 2025, 6, 79.

5. Li, G.; Khan, M.A. Deep Learning on VR-Induced Attention. In Proceedings of the 2019 IEEE International Conference on Artificial Intelligence and Virtual Reality, San Diego, CA, USA, 9–11 December 2019; pp. 163–1633.

6. Bello, K.; Aqlan, F.; Harrington, W. Extended reality for neurocognitive assessment: A systematic review. J. Psychiatr. Res. 2025, 184, 473–487.

7. Bhargava, Y.; Kottapalli, A.; Baths, V. Validation and comparison of virtual reality and 3D mobile games for cognitive assessment against ACE-III in 82 young participants. Sci. Rep. 2024, 14, 23918.

8. Park, J.H.; Jeon, H.S.; Kim, J.H. Effectiveness of non-immersive virtual reality exercises for balance and gait improvement in older adults: A meta-analysis. Technol. Health Care 2024, 32, 1223–1238.

9. Ribeiro, N.; Tavares, P.; Ferreira, C.; Coelho, A. Melanoma prevention using an augmented reality-based serious game. Patient Educ. Couns. 2024, 123, 108226.

10. You, D.; Ramli, S.H.b.; Ibrahim, R.; Chen, L.; Lin, Y.; Zhang, M. A thematic review on therapeutic toys and games for the elderly with Alzheimer’s disease. Disabil. Rehabil. Assist. Technol. 2024, 20, 1–13.

11. Sridhar, S.; Romney, A.; Manian, V. A Deep Neural Network for Working Memory Load Prediction from EEG Ensemble Empirical Mode Decomposition. Information 2023, 14, 473.

12. Datta, P.; Kaur, A.; Sassi, N.; Singh, R.; Kaur, M. An evaluation of intelligent and immersive digital applications in eliciting cognitive states in humans through the utilization of Emotiv Insight. MethodsX 2024, 12, 102748.

13. Shah, S.M.A.; Usman, S.M.; Khalid, S.; Rehman, I.U.; Anwar, A.; Hussain, S.; Ullah, S.S.; Elmannai, H.; Algarni, A.D.; Manzoor, W. An Ensemble Model for Consumer Emotion Prediction Using EEG Signals for Neuromarketing Applications. Sensors 2022, 22, 9744.

14. GomezRomero-Borquez, J.; Puerto-Flores, J.A.D.; Del-Valle-Soto, C. Mapping EEG Alpha Activity: Assessing Concentration Levels during Player Experience in Virtual Reality Video Games. Future Internet 2023, 15, 264.

15. Gireesh, E.D.; Gurupur, V.P. Information Entropy Measures for Evaluation of Reliability of Deep Neural Network Results. Entropy 2023, 25, 573.

16. Panachakel, J.T.; Ganesan, R.A. Decoding Imagined Speech from EEG Using Transfer Learning. IEEE Access 2021, 9, 135371–135383.

17. Mazher, M.; Abd Aziz, A.; Malik, A.S.; Ullah Amin, H. An EEG-Based Cognitive Load Assessment in Multimedia Learning Using Feature Extraction and Partial Directed Coherence. IEEE Access 2017, 5, 14819–14829.

18. Sun, J.; Hong, X.; Tong, S. Phase Synchronization Analysis of EEG Signals: An Evaluation Based on Surrogate Tests. IEEE Trans. Biomed. Eng. 2012, 59, 2254–2263.

19. Hu, S.; Stead, M.; Dai, Q.; Worrell, G.A. On the Recording Reference Contribution to EEG Correlation, Phase Synchorony, and Coherence. IEEE Trans. Syst. Man Cybern. Part B 2010, 40, 1294–1304.

20. GomezRomero-Borquez, J.; Del-Valle-Soto, C.; Del-Puerto-Flores, J.A.; Castillo-Soria, F.R.; Maciel-Barboza, F.M. Implications for Serious Game Design: Quantification of Cognitive Stimulation in Virtual Reality Puzzle Games through MSC and SpEn EEG Analysis. Electronics 2024, 13, 2017.

21. Ruiz-Gómez, S.J.; Gómez, C.; Poza, J.; Gutiérrez-Tobal, G.C.; Tola-Arribas, M.A.; Cano, M.; Hornero, R. Automated Multiclass Classification of Spontaneous EEG Activity in Alzheimer’s Disease and Mild Cognitive Impairment. Entropy 2018, 20, 35.

22. Wang, K.; Tian, F.; Xu, M.; Zhang, S.; Xu, L.; Ming, D. Resting-State EEG in Alpha Rhythm May Be Indicative of the Performance of Motor Imagery-Based Brain–Computer Interface. Entropy 2022, 24, 1556.

23. Zarjam, P.; Epps, J.; Chen, F. Spectral EEG features for evaluating cognitive load. In Proceedings of the 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Boston, MA, USA, 30 August–3 September 2011; pp. 3841–3844.

24. Vulpe, A.; Zamfirache, M.; Caranica, A. Analysis of Spectral Entropy and Maximum Power of EEG as Authentication Mechanisms. In Proceedings of the 2023 International Conference on Speech Technology and Human-Computer Dialogue (SpeD), Bucharest, Romania, 25–27 October 2023; pp. 111–115.

25. Padma Shri, T.K.; Sriraam, N. EEG based detection of alcoholics using spectral entropy with neural network classifiers. In Proceedings of the 2012 International Conference on Biomedical Engineering (ICoBE), Penang, Malaysia, 27–28 February 2012; pp. 89–93.

26. Rusnac, A.L.; Grigore, O. Intelligent Seizure Prediction System Based on Spectral Entropy. In Proceedings of the 2019 International Symposium on Signals, Circuits and Systems (ISSCS), Iasi, Romania, 11–12 July 2019; pp. 1–4.

27. Xi, X.; Ding, J.; Wang, J.; Zhao, Y.B.; Wang, T.; Kong, W.; Li, J. Analysis of Functional Corticomuscular Coupling Based on Multiscale Transfer Spectral Entropy. IEEE J. Biomed. Health Inform. 2022, 26, 5085–5096.

28. Zheng, W.L.; Zhu, J.Y.; Lu, B.L. Identifying Stable Patterns over Time for Emotion Recognition from EEG. IEEE Trans. Affect. Comput. 2019, 10, 417–429.

29. Song, T.; Zheng, W.; Song, P.; Cui, Z. EEG Emotion Recognition Using Dynamical Graph Convolutional Neural Networks. IEEE Trans. Affect. Comput. 2020, 11, 532–541.

30. Meta. Cubism. 2020. Available online: https://www.meta.com/experiences/cubism/2264524423619421 (accessed on 14 July 2025).

31. Meta. Puzzling Places. 2021. Available online: https://www.meta.com/experiences/3931148300302917 (accessed on 14 July 2025).

32. Meta. Tetris. 2020. Available online: https://www.meta.com/experiences/tetris-effect-connected/3386618894743567 (accessed on 14 July 2025).

33. STEAM. 9 Years of Shadows. 2024. Available online: https://store.steampowered.com/app/1402120/9_Years_of_Shadows/ (accessed on 14 July 2025).

| Band | Frequency Range (Hz) | Functional Description |
|---|---|---|
| Theta | 4–8 | Associated with working memory, cognitive control, and planning; often linked to drowsiness and early stages of sleep. |
| Alpha | 8–12 | Characterized by relaxed wakefulness; typically decreases during states of focused attention or cognitive engagement. |
| Beta | 12–30 | Related to active concentration, alertness, and higher-order cognitive processes; also involved in motor control. |
| Gamma | 30–40 | Associated with higher-level cognitive functions, including feature binding, attentional control, and information integration across brain regions. |
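The band table defines the frequency ranges used for spectral features. The sketch below computes, for a single channel and a hypothetical sampling rate `fs`, the absolute band power from a Hann-windowed periodogram and a normalized spectral entropy: the Shannon entropy of the in-band PSD divided by its maximum, so 0 means a single spectral peak and 1 a flat spectrum. The windowing and normalization choices are assumptions; the exact PSD estimator used in the study is not specified in this text.

```python
import numpy as np

# Frequency bands from the band table (Hz); lower edge inclusive, upper exclusive
BANDS = {"theta": (4, 8), "alpha": (8, 12), "beta": (12, 30), "gamma": (30, 40)}

def band_features(x, fs):
    """Return {band: (absolute power, normalized spectral entropy)} for one channel."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    win = np.hanning(x.size)
    # One-sided PSD from a Hann-windowed periodogram (units: signal^2 / Hz)
    psd = np.abs(np.fft.rfft(x * win)) ** 2 / (fs * np.sum(win ** 2))
    psd[1:-1] *= 2  # double all bins except DC and Nyquist (even-length input assumed)
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    df = freqs[1] - freqs[0]
    features = {}
    for name, (lo, hi) in BANDS.items():
        p = psd[(freqs >= lo) & (freqs < hi)]
        power = np.sum(p) * df
        pn = p / np.sum(p)  # in-band PSD treated as a probability distribution
        entropy = -np.sum(pn * np.log2(pn + 1e-12)) / np.log2(pn.size)
        features[name] = (power, entropy)
    return features
```

For a pure 10 Hz sine, nearly all power falls in the alpha band and the alpha entropy is low; broadband noise spreads power evenly and pushes the entropy toward 1, which is the sense in which the label table associates low SpEn with the focused state.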

| Label | PSD | MSC | SpEn |
|---|---|---|---|
| Focused (1) | High | High | Low |
| Not Focused (0) | Low | Low | High |
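The labeling table uses MSC alongside PSD and SpEn. Magnitude-squared coherence between two channels x and y is C_xy(f) = |P_xy(f)|² / (P_xx(f) · P_yy(f)), where the cross- and auto-spectra must be averaged over several segments; with a single segment the estimate is identically 1. The NumPy sketch below averages over non-overlapping Hann-windowed segments, a simplified form of Welch averaging. The segment length and the absence of overlap are assumptions, not settings taken from the study.

```python
import numpy as np

def msc(x, y, fs, nperseg=256):
    """Magnitude-squared coherence |Pxy|^2 / (Pxx * Pyy) per frequency bin."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n_seg = min(x.size, y.size) // nperseg
    if n_seg < 2:
        # With one segment |Pxy|^2 == Pxx * Pyy, so the estimate would be 1 everywhere
        raise ValueError("need at least two segments for a meaningful MSC estimate")
    win = np.hanning(nperseg)
    n_bins = nperseg // 2 + 1
    pxx = np.zeros(n_bins)
    pyy = np.zeros(n_bins)
    pxy = np.zeros(n_bins, dtype=complex)
    for k in range(n_seg):
        seg = slice(k * nperseg, (k + 1) * nperseg)
        fx = np.fft.rfft((x[seg] - x[seg].mean()) * win)
        fy = np.fft.rfft((y[seg] - y[seg].mean()) * win)
        # Accumulate auto- and cross-spectra across segments
        pxx += np.abs(fx) ** 2
        pyy += np.abs(fy) ** 2
        pxy += fx * np.conj(fy)
    freqs = np.fft.rfftfreq(nperseg, d=1.0 / fs)
    # Scaling constants cancel in the ratio, so no PSD normalization is needed
    return freqs, np.abs(pxy) ** 2 / (pxx * pyy)
```

A band-level MSC value can then be taken as the mean of the returned coherence over the bins of a band from the band table. If SciPy is available, `scipy.signal.coherence` provides a standard Welch-based estimate with overlapping segments.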

| Item | Value |
|---|---|
| Architecture | Dense–ReLU–BN–Dropout × 3 hidden layers; Sigmoid output |
| Dropout rates | [0.30, 0.30, 0.20] |
| Batch size | 32 |
| Epochs (max) | 100 |
| Optimizer | Adam (learning_rate = 1 ) |
| LR schedule | ReduceLROnPlateau (factor = 0.5, patience = 5, min_lr = 1 ) |
| Early stopping | patience = 10, restore_best_weights = True |
| Checkpoint | save_best_only = True (monitor = val_loss) |
| Weight init | GlorotUniform |
| Train/Val/Test split | 70%/15%/15% |
| Random seeds | numpy = 42, tensorflow = 42 |
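The configuration combines a Keras-style ReduceLROnPlateau schedule (factor 0.5, patience 5) with early stopping (patience 10, best weights restored). The pure-Python replay below is a simplified re-implementation of that interaction, not Keras code: it treats any strict decrease in validation loss as an improvement (Keras additionally offers `min_delta` and `cooldown` options), and it takes the initial learning rate `lr0` and `min_lr` as parameters because their values are not legible in this copy of the table.

```python
def simulate_schedule(val_losses, lr0, factor=0.5, lr_patience=5,
                      min_lr=0.0, stop_patience=10):
    """Replay a val_loss history through simplified plateau/early-stop rules.

    Returns (learning rate used at each epoch, best epoch, stop epoch).
    """
    lr, lrs = lr0, []
    best, best_epoch = float("inf"), -1
    lr_wait = stop_wait = 0
    for epoch, loss in enumerate(val_losses):
        lrs.append(lr)  # LR in effect during this epoch
        if loss < best:  # improvement: record it and reset both counters
            best, best_epoch = loss, epoch
            lr_wait = stop_wait = 0
            continue
        lr_wait += 1
        stop_wait += 1
        if stop_wait >= stop_patience:
            # Training halts; weights would be restored from best_epoch
            return lrs, best_epoch, epoch
        if lr_wait >= lr_patience:
            lr = max(lr * factor, min_lr)  # halve the LR, never below min_lr
            lr_wait = 0
    return lrs, best_epoch, len(val_losses) - 1
```

In a history that improves for three epochs and then stalls, the learning rate is halved after five stagnant epochs and training stops ten epochs after the best one, so the restored weights come from epoch 2.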

| Layer | Number of Parameters |
|---|---|
| Dense (1, 256, bias = True) | (1 + 1) ∗ 256 = 512 |
| BatchNormalization (256) | 2 ∗ 256 = 512 |
| Dense (256, 128, bias = True) | (256 + 1) ∗ 128 = 32,896 |
| BatchNormalization (128) | 2 ∗ 128 = 256 |
| Dense (128, 64, bias = True) | (128 + 1) ∗ 64 = 8256 |
| Dense (64, 1, bias = True) | (64 + 1) ∗ 1 = 65 |
| Sigmoid | 0 |
| Total | 42,497 parameters ≈ 0.16 MB (float32) |
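The parameter counts can be checked with plain arithmetic. The sketch below follows the table's layer list exactly and, like the table, counts only the trainable γ and β of each BatchNormalization layer; a Keras `model.summary()` would additionally report 2·n non-trainable moving statistics per BatchNormalization layer (768 for the two layers listed).

```python
def dense_params(n_in, n_out):
    """Weights plus one bias per output unit."""
    return (n_in + 1) * n_out

def bn_trainable_params(n):
    """Trainable gamma and beta per feature (moving mean/variance excluded)."""
    return 2 * n

# Layer order as listed in the parameter table
layers = [
    dense_params(1, 256),
    bn_trainable_params(256),
    dense_params(256, 128),
    bn_trainable_params(128),
    dense_params(128, 64),
    dense_params(64, 1),  # the sigmoid activation adds no parameters
]
total = sum(layers)
size_bytes = total * 4  # float32 = 4 bytes per parameter
size_mib = size_bytes / 2 ** 20
print(total, size_bytes, round(size_mib, 3))  # 42497 169988 0.162
```

The reported ≈ 0.16 MB therefore corresponds to binary megabytes: 169,988 bytes ≈ 0.162 MiB (about 0.17 MB in decimal units).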

| Class | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| Not Focused | 1.00 | 0.93 | 0.97 | 15 |
| Focused | 0.94 | 1.00 | 0.97 | 15 |
| Accuracy | | | 0.97 | 30 |
| Macro Avg. | 0.97 | 0.97 | 0.97 | 30 |
| Weighted Avg. | 0.97 | 0.97 | 0.97 | 30 |
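The per-class scores in this report, which match run 18 in Appendix A.1, follow from a 2 × 2 confusion matrix. Assuming 15 test samples per class, the reported values imply one not-focused window misclassified as focused and no other errors; this is an inference, not something stated in the source. The sketch below computes precision, recall, F1, accuracy, and macro averages from such a matrix, returning 0 when a class is never predicted, which also reproduces degenerate rows such as run 81 in Appendix A.2.

```python
def classification_metrics(cm):
    """cm[i][j] = number of samples of true class i predicted as class j.

    Returns ({class: (precision, recall, f1, support)}, accuracy,
             (macro precision, macro recall, macro f1)).
    """
    n_classes = len(cm)
    total = sum(sum(row) for row in cm)
    report = {}
    for c in range(n_classes):
        tp = cm[c][c]
        fp = sum(cm[r][c] for r in range(n_classes)) - tp  # predicted c, wrong
        fn = sum(cm[c]) - tp                                # true c, missed
        precision = tp / (tp + fp) if tp + fp else 0.0  # 0 if c is never predicted
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        report[c] = (precision, recall, f1, sum(cm[c]))
    accuracy = sum(cm[c][c] for c in range(n_classes)) / total
    # Macro average: unweighted mean over classes of each metric
    macro = tuple(sum(report[c][k] for c in report) / n_classes for k in range(3))
    return report, accuracy, macro

# Class 0 = Not Focused, class 1 = Focused (inferred matrix for run 18)
report, accuracy, macro = classification_metrics([[14, 1], [0, 15]])
```

Because both classes have 15 test samples, the weighted averages coincide with the macro averages, which is why the two rows are identical (up to rounding) throughout the appendix.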

| Model | Accuracy (%) / Std. Dev. (%) |
|---|---|
| LR | 67.09/15.92 |
| KNN | 61.56/20.8 |
| SVM | 71.96/15.02 |
| SVM [28] | 71.24/16.38 |
| DNN | 78.94/12.40 |
| DBN [28] | 63.42/19.22 |
| DGCNN | 75.87/18.33 |
| DGCNN [29] | 76.60/11.83 |
| Proposed model with audio | 97/9.24 |
| Proposed model without audio | 83/11.02 |

| Class | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| Not Focused | 0.81 | 0.87 | 0.84 | 15 |
| Focused | 0.86 | 0.80 | 0.83 | 15 |
| Accuracy | | | 0.83 | 30 |
| Macro Avg | 0.83 | 0.83 | 0.83 | 30 |
| Weighted Avg | 0.83 | 0.83 | 0.83 | 30 |

| Validation Strategy | Accuracy (±) | Precision (±) | Recall (±) | F1-Score (±) | ROC-AUC (±) |
|---|---|---|---|---|---|
| Group K-Fold (K = 5) | | | | | |
| LOSO (N = 30) | – | | | | |

| Validation Strategy | Accuracy (±) | Precision (±) | Recall (±) | F1-Score (±) | ROC-AUC (±) |
|---|---|---|---|---|---|
| Group K-Fold (K = 5) | | | | | |
| LOSO (N = 30) | – | | | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


GomezRomero-Borquez, J.; Del-Valle-Soto, C.; Del-Puerto-Flores, J.A.; López-Pimentel, J.-C.; Castillo-Soria, F.R.; Ibarra-Hernández, R.F.; Betancur Agudelo, L. Audio’s Impact on Deep Learning Models: A Comparative Study of EEG-Based Concentration Detection in VR Games. Inventions 2025, 10, 97. https://doi.org/10.3390/inventions10060097
