1. Introduction

With the acceleration of globalization and the frequent occurrence of natural disasters, emergency language services have become an indispensable part of modern disaster management systems. Sudden events such as earthquakes and floods often cause a surge in multilingual communication needs, while traditional emergency language services face delayed responses, resource shortages, and uneven service provision. Numerous studies have shown that individual language competence tends to decline significantly in emergencies. People with limited proficiency in the lingua franca are affected most: they face greater obstacles in accessing timely, accurate, and useful disaster information and are therefore more vulnerable to disasters [1,2,3].

China is frequently affected by major natural disasters such as earthquakes, geological hazards, floods, droughts, extreme weather events, marine disasters, and forest or grassland fires. These disasters generally cover wide areas, cause heavy losses, and pose significant challenges for emergency relief. When casualties occur and language assistance is needed, emergency language services should be deployed rapidly [4]. Local efforts alone are insufficient for language assistance; social resources must be integrated and contingency plans prepared in advance [5]. In China, “emergency language awareness” is still lacking in the handling of public emergencies, and the capacity for emergency language support remains weak [6]. Both in China and abroad, existing rescue systems, academic capacity, and social mobilization often fall short of achieving “one person, one policy” or “one person, one language” granularity in many emergency scenarios [7].

In recent years, artificial intelligence technologies represented by large language models (LLMs) have made breakthrough progress, offering new pathways for the automated generation, optimization, and implementation of emergency language service plans. This study focuses on the application potential of large language models in earthquake emergency language service planning, exploring how to leverage their powerful semantic understanding, multilingual processing, and knowledge integration capabilities to build a more efficient, accurate, and resilient emergency language service system. Ultimately, the goal is to overcome cross-language communication barriers, reduce the risk of secondary disasters caused by poor information flow, and improve disaster relief efficiency and service quality in multilingual environments.

As the construction and practice of emergency language service plans in China have gradually matured, the importance of emergency language services has become increasingly prominent. State ministries and commissions, among other departments, are major actors in language and writing work and bear the essential responsibility of implementing national language policies within their respective sectors [8]. On 10 January 2022, the “14th Five-Year Plan for the Construction of the National Emergency Response System” explicitly called for strengthening emergency language service capacity. On 1 December 2021, the “14th Five-Year Development Plan for Language and Writing Work” emphasized the construction of an emergency language service system and promoted emergency language service mechanisms covering minority languages, dialects, sign language, and Braille. The “Standard for English Translation in the Public Service Sector”, completed in 2022, standardized the use of foreign languages in scenarios such as emergency signage and public indicator boards, providing standard support for foreign-related emergency services.

Currently, a number of universities and research institutions in China are leading emergency language service projects. Beijing Language and Culture University’s “Emergency Language Service Team” has developed the “Epidemic Prevention Foreign Language Assistant” series, as well as the “Global Chinese Emergency Service Platform”, providing real-time multilingual translation and cross-cultural communication support. The “Guangdong–Hong Kong–Macao Greater Bay Area Emergency Language Service Center” at Guangdong University of Foreign Studies focuses on the multilingual and dialect needs of the Greater Bay Area, formulating cross-border emergency language service plans. Shanghai International Studies University’s “Emergency Multilingual Corpus” collects vocabulary and terminology for emergency scenarios such as earthquakes and epidemics, covering fifteen languages, including English, Japanese, French, and Russian, and it supports the rapid generation of multilingual emergency texts. However, emergency language services in China still face challenges such as insufficient specificity for different types of disasters and a low degree of informatization.

1.1. Background

As an emerging interdisciplinary field, emergency language service research still has many aspects that require further development, among which establishing scenario- and region-based emergency language service systems is a key component [8]. During emergencies, standardized procedures and multilingual resource databases allow emergency information to be generated rapidly and accurately, shortening the information transmission chain and avoiding rescue delays caused by language barriers. By ensuring equitable access to information and meeting diverse needs, secondary risks caused by “information silos” can be reduced. Stronger cross-cultural communication capabilities lower the risk of cultural misunderstandings when emergency instructions are delivered and improve the efficiency of rescue cooperation. Optimizing resource allocation and clarifying the deployment rules for language service teams and technological tools can prevent redundant investment and the neglect of critical areas. Ultimately, these measures can support social stability, curb the spread of rumors and public panic, strengthen public trust in and cooperation with emergency measures, and maintain social order. For example, during the 2021 Henan floods, a multilingual SOS QR code system shortened the rescue time for stranded foreigners by 40%, demonstrating the key value of systematic contingency planning. Systematic research on emergency language service planning therefore helps improve response efficiency and strengthens emergency language service capabilities.

First, earthquake-affected areas often involve multilingual communities (for example, users of Tibetan, Chinese, and English in China), requiring rapid translation of rescue information [9]. Second, because disaster situations change rapidly, real-time integration of multi-source data from seismic networks, social media, and on-site feedback is essential. Third, language services must avoid cultural taboos, such as inappropriate use of religious terminology in Tibetan. Thus, multilingual communication, dynamic information integration, and cultural sensitivity are the core needs of emergency language services.

At present, LLMs possess technical advantages, including semantic understanding and generation, multilingual and cross-cultural adaptation, and automation and scalability. LLMs (e.g., GPT-4 and PaLM) can parse complex instructions and generate structured texts, and they can support translation and cultural adaptation for low-resource languages (such as Tibetan). Moreover, customized plans can be quickly generated through fine-tuning, reducing manual costs. This makes the automatic generation of earthquake disaster emergency language service plans possible.

1.2. Application Scenarios of LLMs in Emergency Language Services

Given the challenges and core needs of China’s emergency language services outlined above, LLMs can be applied to automated plan generation, real-time cross-lingual support, and dynamic optimization and knowledge updating for emergency language services.

LLM technology can be used for the automated generation of emergency language service plans. LLMs can automatically generate full-process frameworks for contingency plans covering the “before–during–after” disaster phases based on historical disaster data (such as the Wenchuan Earthquake case library), including team division, resource allocation, and multilingual service strategies. By inputting parameters such as the disaster area’s geography, population, and language distribution, the plan content can be dynamically adjusted (for example, prioritizing services for Tibetan-speaking groups). In this way, automatically generated emergency language service plans can achieve process coverage and scenario adaptation.
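The parameter-driven adjustment described above can be sketched in Python. This is a minimal illustration under stated assumptions, not the study's implementation: the `DisasterArea` schema, the `prioritize_languages` and `build_plan_request` helpers, and the rule of ranking language groups purely by the number of residents who rely on each language are all hypothetical.

```python
from dataclasses import dataclass, field

PHASES = ("before", "during", "after")


@dataclass
class DisasterArea:
    """Hypothetical input schema for a plan-generation request."""
    name: str
    terrain: str
    population: int
    # language -> number of residents who rely on that language
    language_groups: dict[str, int] = field(default_factory=dict)


def prioritize_languages(area: DisasterArea, top_k: int = 3) -> list[str]:
    """Return up to top_k language groups, largest first."""
    ranked = sorted(area.language_groups.items(), key=lambda kv: kv[1], reverse=True)
    return [lang for lang, _ in ranked[:top_k]]


def build_plan_request(area: DisasterArea) -> str:
    """Assemble a prompt asking for a plan that covers all three disaster phases."""
    lines = [
        f"Draft an earthquake emergency language service plan for {area.name}.",
        f"Terrain: {area.terrain}; population: {area.population}.",
        "Priority language groups: " + ", ".join(prioritize_languages(area)) + ".",
    ]
    for phase in PHASES:
        lines.append(f"- {phase}-disaster phase: team division, resource allocation, "
                     "multilingual service strategy.")
    return "\n".join(lines)


# Illustrative values only.
area = DisasterArea("County X", "high-altitude plateau", 50000,
                    {"Tibetan": 30000, "Chinese": 18000, "English": 200})
print(build_plan_request(area))
```

A real system would likely weight groups by vulnerability as well as by size; ranking by headcount alone is kept here only for brevity.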

Real-time cross-lingual support is a practical requirement in the emergency language service process. With LLM technology, multilingual translation can be achieved and cultural risks can be prevented and managed in a timely manner. LLMs can be deployed as real-time translation tools to help rescue workers and disaster victims communicate (for example, through Chinese–Tibetan mutual translation). A taboo-word screening function integrated with the LLM can prevent linguistic conflicts (for example, by filtering vocabulary that is sensitive in Tibetan culture).
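A taboo-screening step of the kind mentioned above could sit between the LLM and the user as a post-processing filter. The sketch below assumes a curated mapping from flagged terms to approved alternatives (the function name and the placeholder terms are hypothetical); it uses plain case-insensitive substring matching because scripts such as Tibetan and Chinese do not mark word boundaries with spaces.

```python
import re


def screen_taboo_terms(text: str, taboo_terms: dict[str, str]) -> tuple[str, list[str]]:
    """Replace flagged terms with approved alternatives and report which were found.

    taboo_terms maps a flagged term to its culturally appropriate replacement.
    Matching is case-insensitive substring matching, which also works for
    scripts that do not separate words with spaces.
    """
    hits: list[str] = []
    # Longest terms first, so a long phrase is handled before a shorter term inside it.
    for term in sorted(taboo_terms, key=len, reverse=True):
        pattern = re.compile(re.escape(term), re.IGNORECASE)
        if pattern.search(text):
            hits.append(term)
            replacement = taboo_terms[term]
            # A function replacement avoids re's backslash escapes in the substitute text.
            text = pattern.sub(lambda _m: replacement, text)
    return text, hits


# Placeholder terms: a real list would be curated with Tibetan-culture experts.
cleaned, found = screen_taboo_terms("Notice: TERM_A near the shelter", {"term_a": "term_b"})
print(cleaned, found)
```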

Compared with manual work, the greatest advantage of LLMs in emergency language services is their rapid information integration and feedback iteration capability. LLMs extract disaster-related keywords from social media, news, and other channels, and they automatically update key rescue priorities in the plan (for example, aftershock warnings and material shortages). By combining reinforcement learning with on-site feedback, the feasibility and accuracy of the plan can be optimized.
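The keyword-driven update of rescue priorities can be illustrated with simple counting. In this sketch the lexicon, its keywords, and the priority labels are invented for illustration; a deployed system would more plausibly use an LLM or a trained classifier to extract the signals, with aggregation like this applied afterwards.

```python
from collections import Counter

# Hypothetical lexicon: keyword -> plan priority it signals (illustrative only).
LEXICON = {
    "aftershock": "aftershock warning",
    "tremor": "aftershock warning",
    "no water": "material shortage",
    "no food": "material shortage",
    "translator": "language assistance",
}


def update_priorities(messages: list[str], lexicon: dict[str, str] = LEXICON) -> list[tuple[str, int]]:
    """Rank plan priorities by how many messages signal each one."""
    counts = Counter()
    for msg in messages:
        text = msg.lower()
        # A message counts at most once per priority, even if several keywords match.
        signalled = {prio for kw, prio in lexicon.items() if kw in text}
        counts.update(signalled)
    # Most-signalled priority first; ties broken alphabetically for stable output.
    return sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))


msgs = [
    "Strong aftershock just now",
    "We have no water and no food",
    "Need a Tibetan translator",
    "Another tremor, still no water",
]
print(update_priorities(msgs))
```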

With the rapid development of big data and artificial intelligence technologies, large language models (such as the GPT series and BERT) have achieved remarkable results in the field of natural language processing. Their application in emergency language services has also gradually become a research focus both domestically and internationally.

International scholars began exploring the potential of LLMs in emergency management as early as 2020. For example, OpenAI’s GPT-3 has been used to generate communication texts during emergencies, aiding in the rapid transmission of key information. Furthermore, studies indicate that LLMs are valuable in information integration, risk assessment, and decision support.

Some studies focus on leveraging large language models to automatically generate emergency response plans. For instance, a 2022 study employed GPT-3 to produce earthquake emergency response plans, incorporating natural language generation technology to enhance the efficiency and accuracy of plan formulation. These plans not only cover rescue operations but also include key aspects such as information dissemination and resource allocation.

International scholars also emphasize the application of LLMs in multilingual support and cross-cultural emergency communication. Findings show that, with the help of LLMs, the rapid translation and localization of emergency information across multiple languages can be achieved, enhancing the crisis response capabilities of different linguistic groups.

In recent years, China has made significant progress in the research and development of LLMs, such as DeepSeek, ChatGLM, Tongyi Qianwen, and iFlytek Spark. These models have shown outstanding performance in text generation and information processing, laying a technical foundation for their application in emergency language services.

Domestic researchers are actively exploring ways to use LLMs to enhance the intelligence level of emergency language services. For example, by automatically generating earthquake emergency plans using models, it is possible to respond quickly to disasters and provide precise emergency guidance. Relevant studies have shown that automatically generated plans are comparable to manually compiled ones in both coverage and detail.

Some domestic researchers also focus on the fusion of multimodal information by combining various data sources such as text, images, and geographic information. LLMs are applied to generate more comprehensive and accurate emergency plans. This approach helps improve the practicality of the plans and the ability to respond to complex disasters.

Compared with related research abroad, domestic studies also face challenges such as data quality, model optimization, and adaptation to application scenarios. To address these issues, researchers have proposed various countermeasures, such as constructing high-quality emergency management datasets, optimizing model training algorithms to meet the needs of emergency scenarios, and strengthening interdisciplinary cooperation to enhance the practicality and reliability of models.

In summary, both domestic and international studies have made certain progress in applying LLMs to emergency language services. International studies mainly focus on fundamental model development, cross-lingual applications, and system integration, emphasizing model interpretability and multimodal capabilities, whereas domestic research pays more attention to the application and case practice of models in real emergency management scenarios. However, both still face specific challenges such as data and application optimization.

2. Materials and Methods

Emergency language service plans are not merely technical tools; they also embody concepts of social governance. By leveraging language as a bond, such plans help bridge social divides and foster humanitarian consensus, thus providing foundational support for building a safe, inclusive, and sustainable society.

Under the guidance of the Language Information Management Division of the Ministry of Education, the National Emergency Language Service Team began research on compiling national emergency language service plans in 2022 and, in 2025, proposed China’s first emergency language service plan. The plan was developed on the basis of disaster scenario construction theory and follows an integrated “risk–scenario–demand–capability–plan” research framework. Its main contents include plan positioning, working principles, the emergency plan system, the emergency organization structure and command mechanism, the emergency response mechanism, and support measures.

Based on an analysis of the content structure of China’s first emergency language service plan and the improvements it suggests, we observe that a good emergency language service plan should, as a whole, form a complete closed loop of “data-driven decision-making–intelligent resource matching–culturally secure outputs–continuous iterative upgrading”, enabling a genuine leap “from linguistic communication to safeguarding lives”. Such a plan must balance policy and legal requirements, theoretical and scientific rigor, practical feasibility, technological iteration, and social inclusiveness. With systematic and quantifiable standards, emergency language services can shift from “passive response” to “proactive governance” and achieve the dual goals of risk prevention and control and humanistic care. Specifically, this is reflected in the following aspects:

Policy and Legal Support. Drawing on the experience of developed countries and guided by documents such as the “National Emergency Plan for Public Emergencies” and the “14th Five-Year Plan for the National Emergency System”, the indicators treat “language accessibility” as a fundamental requirement for building emergency capabilities and incorporate measures such as information coverage and response timeliness into plan design. Among five developed countries that frequently experience public emergencies (Japan, the UK, New Zealand, Ireland, and the US), the United States is the only one with a dedicated emergency language act, which was developed gradually.

Linguistic Theoretical Framework. Based on the sociolinguistic principle of “linguistic equity” and emergency discourse analysis theory, the indicators should reflect multimodal (text, voice, and sign language) and multilingual (dialects and foreign languages) adaptability, as well as dimensions such as clarity of information expression and cultural sensitivity. For example, the “Multicom112” project in Europe encourages emergency call operators to master multiple languages.

Practical Needs Orientation. Drawing on cases of major domestic and international emergencies (e.g., the lack of multilingual epidemic prevention guidelines during the COVID-19 pandemic), practical indicators such as “resource mobilization efficiency,” “smoothness of cross-departmental collaboration,” and “robustness of technical tools” are used to ensure that the plan is feasible and verifiable.

Technology-Driven Development. By leveraging the potential of artificial intelligence translation, real-time speech synthesis, and other technologies in emergency scenarios, forward-looking indicators such as “human–machine collaboration capability” and “dynamic update mechanisms” are introduced to promote the deep integration of the plan with digital tools.

Balancing Social Value. Grounded in the concepts of social equity and co-governance of risks, indicators such as “coverage rate for vulnerable groups” and “information feedback closure rate” are used to quantify the plan’s contribution to eliminating language discrimination and enhancing community resilience.

2.1. Assessment Indicators

Based on the considerations above, we propose the first set of generative evaluation indicators for domestic emergency language service plans targeting specific disaster scenarios. Each item is scored as follows: 0 points for no relevant content; 1 point for relevant content without further elaboration; 2 points for relevant and elaborated content without specific operational measures; and 3 points for relevant, elaborated, and specifically actionable content. The full indicator design is given in Table 1.
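Because the four levels are cumulative (each higher score requires everything the lower one does), the rubric reduces to a short decision rule. The function below is a hypothetical encoding of that rule; the three boolean inputs would come from a human or model-assisted judgment of each plan item.

```python
def score_item(relevant: bool, elaborated: bool, actionable: bool) -> int:
    """Map the Table 1 criteria for one indicator item to a 0-3 score.

    The levels are treated as cumulative: content that is not relevant scores 0
    regardless of the other flags, relevant but unelaborated content scores 1,
    and so on.
    """
    if not relevant:
        return 0
    if not elaborated:
        return 1
    if not actionable:
        return 2
    return 3


print(score_item(relevant=True, elaborated=True, actionable=False))
```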

This evaluation table is closely based on existing international standards, national-level programs, and widely recognized organizational norms, giving it a robust institutional foundation. Its core institutional basis is the authoritative international standard ISO/TC 232, “Guidelines for Language Services in Crisis Communication,” which provides fundamental benchmarks for cross-border cooperation and service quality. The table also draws on mature national-level programs such as the U.S. National Language Service Corps, which demonstrates the feasibility and effectiveness of maintaining an official reserve of language professionals. In addition, it incorporates normative guidelines developed by authoritative organizations such as the World Health Organization (WHO) during major public health events, and it advocates institutionalizing and standardizing emergency language services through means such as interdepartmental mutual aid agreements and multi-party review mechanisms with third-party participation from organizations like the International Red Cross. In summary, the table is grounded in internationally recognized standards and successful national practices and aims to promote more comprehensive legal authorization and collaborative systems, so that its evaluation indicators are both relevant in practice and operationally feasible at the institutional level.

2.2. Practical Value

The practical value of generative evaluation indicators for emergency language service plans lies in their systematic support for optimizing emergency management systems, safeguarding social equity, and enabling technological empowerment. Specifically, this value can be elaborated as follows:

First, improving the accuracy and timeliness of emergency language service responses. By quantitatively evaluating the multilingual coverage and information dissemination efficiency of emergency response plans, service blind spots can be accurately identified, and resource allocation can be optimized. This helps to avoid missing critical rescue windows due to language barriers.

Second, promoting equitable governance of emergency language services. Based on indicators such as the “language equity index” (the coverage rate of language services for vulnerable groups) and the “cultural sensitivity threshold” (the accuracy rate of taboo language recognition), government departments and technology service providers can embed inclusive mechanisms into plan design. This effectively reduces the disaster exposure risk of “linguistically vulnerable groups”.
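Both indicators are ratios and can be computed directly. The sketch assumes that the language equity index is the number of vulnerable-group members served divided by the number identified, and that the cultural sensitivity threshold is classification accuracy over a labeled test set of expressions (taboo or not); these operational definitions and the function names are assumptions rather than the paper's formal specification.

```python
def language_equity_index(served: int, identified: int) -> float:
    """Coverage rate of language services for vulnerable groups (0.0 to 1.0)."""
    if identified <= 0 or not 0 <= served <= identified:
        raise ValueError("need 0 <= served <= identified and identified > 0")
    return served / identified


def cultural_sensitivity_threshold(predicted: list[bool], actual: list[bool]) -> float:
    """Accuracy of taboo recognition: share of expressions classified correctly.

    predicted[i] is True if the system flagged expression i as taboo;
    actual[i] is the expert label for the same expression.
    """
    if not actual or len(predicted) != len(actual):
        raise ValueError("need equal-length, non-empty label lists")
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)


# Illustrative numbers only.
print(language_equity_index(45, 60))
print(cultural_sensitivity_threshold([True, False, True, True], [True, False, False, True]))
```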

Third, facilitating the iteration of emergency technologies through human–machine collaboration. Relying on indicators such as the “dynamic update response value” and the “technical robustness coefficient”, the deep integration of AI translation, speech synthesis, and other technologies into emergency scenarios can be accelerated. A typical case is the earthquake response: by assessing the consistency of machine translation terminology under aftershock conditions in real time, algorithm models can be quickly optimized.
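Terminology consistency of the kind mentioned above can be measured against an approved bilingual glossary. In this hypothetical sketch, every occurrence of a glossary source term counts as one check, and the check passes if the approved target term appears in the translation (case-insensitive); the glossary entries and sentence pairs are illustrative only.

```python
from typing import Optional


def terminology_consistency(pairs: list[tuple[str, str]], glossary: dict[str, str]) -> Optional[float]:
    """Share of glossary-term occurrences rendered with the approved target term.

    Each source sentence containing a glossary source term counts as one check;
    the check passes if the approved target term appears in the translation
    (case-insensitive). Returns None when no glossary term occurs at all.
    """
    checked = consistent = 0
    for source, translation in pairs:
        target_text = translation.lower()
        for src_term, tgt_term in glossary.items():
            if src_term in source:
                checked += 1
                if tgt_term.lower() in target_text:
                    consistent += 1
    return consistent / checked if checked else None


glossary = {"余震": "aftershock", "避难所": "shelter"}  # illustrative entries
pairs = [
    ("注意余震", "Beware of aftershocks"),
    ("前往避难所", "Go to the refuge"),  # inconsistent: "refuge" instead of "shelter"
    ("余震预警", "Aftershock alert"),
]
print(terminology_consistency(pairs, glossary))  # 2 of 3 checks pass
```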

Fourth, building a long-term monitoring mechanism for a resilient society. Through the “plan vitality index” (the compliance rate in cross-period drills) and the “social feedback closure rate” (the coverage rate of public language needs collection), a dynamic cycle of “plan design–implementation evaluation–iterative upgrading” can be established. For example, the European Union systematically improves cross-border crisis collaboration among member states by regularly publishing emergency language service resilience reports.

Fifth, reducing the overall cost of emergency management. Empirical studies show that, in regions where generative evaluation indicators are used, the redundancy cost of language services (the expenditure on duplicate translations) is reduced by 37% compared to that of traditional models, while the error rate in crisis communication drops by 52% (based on data from the 2023 Asia-Pacific Disaster Management Forum). This demonstrates the economic value of the indicators in optimizing the efficiency of fiscal investment.

In summary, generative evaluation indicators for emergency language service plans transform the abstract concept of “language service capability” into a measurable, traceable, and accountable governance tool. This approach not only aligns with the core principle of “leaving no one behind” in the United Nations 2030 Agenda for Sustainable Development but also provides a linguistic solution for building a modern emergency management system that is comprehensive in terms of disaster types, processes, and stakeholders.

3. Results and Discussion

3.1. Generation of Emergency Language Service Plans Based on Chain-of-Thought Prompts

Chain of thought (CoT) is a technique for enhancing the reasoning capabilities of large language models [10,11]. Its core idea is to break a complex problem down into multiple smaller, more easily understood steps, requiring the model to express the intermediate steps and reasoning logic in natural language [12]. By making the reasoning process explicit, the model can better understand the problem, reduce errors, and generate more reliable answers [13,14]. We propose a strategy for the rapid generation of earthquake emergency plans based on CoT prompts. This strategy keeps the content consistent with emergency logic, allows prompts to be quickly adapted to new scenarios or languages, and ensures that the emergency plans strictly follow authoritative emergency guidelines. The overall design is shown in Table 2.
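A CoT prompt of this kind can be assembled programmatically so that the same reasoning scaffold is reused across scenarios and languages. The step list below is an illustrative assumption; the study's actual prompt structure is the one given in Table 2, and the function name is hypothetical.

```python
# Illustrative reasoning steps (assumed, not taken from Table 2).
COT_STEPS = [
    "Assess the disaster: magnitude, depth, affected area, and time since the main shock.",
    "Identify affected language groups, including minority languages, dialects, "
    "sign language, and foreign residents.",
    "Determine language service needs for each phase: before, during, and after the disaster.",
    "Allocate language resources: teams, translation tools, and communication channels.",
    "Check every instruction against cultural taboos and authoritative emergency guidelines.",
    "Assemble the final plan, noting which step justifies each measure.",
]


def build_cot_prompt(task: str, steps: list[str] = COT_STEPS) -> str:
    """Wrap a task in explicit, numbered reasoning steps before the final answer."""
    numbered = "\n".join(f"Step {i}: {s}" for i, s in enumerate(steps, start=1))
    return (
        f"Task: {task}\n"
        "Reason through the following steps in order, writing out your "
        "intermediate conclusions for each step before giving the final plan.\n"
        f"{numbered}\n"
        "Final plan:"
    )


print(build_cot_prompt("Draft an earthquake emergency language service plan."))
```

Keeping the steps as data rather than hard-coding them in the prompt text is one way to support the quick adaptation to new scenarios or languages described above.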

The CoT-based prompt design follows three principles: priority control, where warning prompts are assigned the highest priority (response time < 1 s); context injection, where real-time data are dynamically inserted during API calls (such as [Magnitude] = 7.2, [Depth] = 10 km); and ethical safety, where disclaimers are automatically added to all generated content (e.g., “Final decision-making authority rests with local emergency authorities”). The key hyperparameter configuration for model training is listed in Table 3. Through step-by-step CoT reasoning, the system can convert complex emergency requirements into actionable steps while integrating real-time data for dynamic optimization, thereby enhancing the practicality and response speed of the plans [15]. The final output must be reviewed manually to ensure compliance with actual emergency procedures [16]. Specific examples of the CoT-based prompt design are provided in Table 4.
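The three design principles above map onto small, testable functions. In this sketch the placeholder syntax `[Name]` follows the examples in the text, while the priority table, the function names, and the choice to raise an error on a missing real-time value (rather than silently leaving a placeholder in a published plan) are assumptions.

```python
import re

DISCLAIMER = "Final decision-making authority rests with local emergency authorities."
# Hypothetical priority table: lower value = processed first; warnings always lead.
PRIORITY = {"warning": 0, "rescue": 1, "information": 2}


def inject_context(template: str, context: dict[str, object]) -> str:
    """Fill [Name] placeholders with real-time values at call time."""
    def fill(match: re.Match) -> str:
        key = match.group(1)
        if key not in context:
            # Fail loudly rather than publish a plan with an unfilled placeholder.
            raise KeyError(f"no real-time value for placeholder [{key}]")
        return str(context[key])
    return re.sub(r"\[([A-Za-z_]+)\]", fill, template)


def order_requests(requests: list[tuple[str, str]]) -> list[tuple[str, str]]:
    """Sort (kind, prompt) pairs so that warning prompts are processed first."""
    return sorted(requests, key=lambda r: PRIORITY.get(r[0], len(PRIORITY)))


def finalize(output: str) -> str:
    """Append the mandatory disclaimer to every piece of generated content."""
    return f"{output.rstrip()}\n\n{DISCLAIMER}"


prompt = inject_context("Magnitude [Magnitude] earthquake at a depth of [Depth].",
                        {"Magnitude": 7.2, "Depth": "10 km"})
print(finalize(prompt))
```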

3.2. Evaluation of Large Language Model Performance

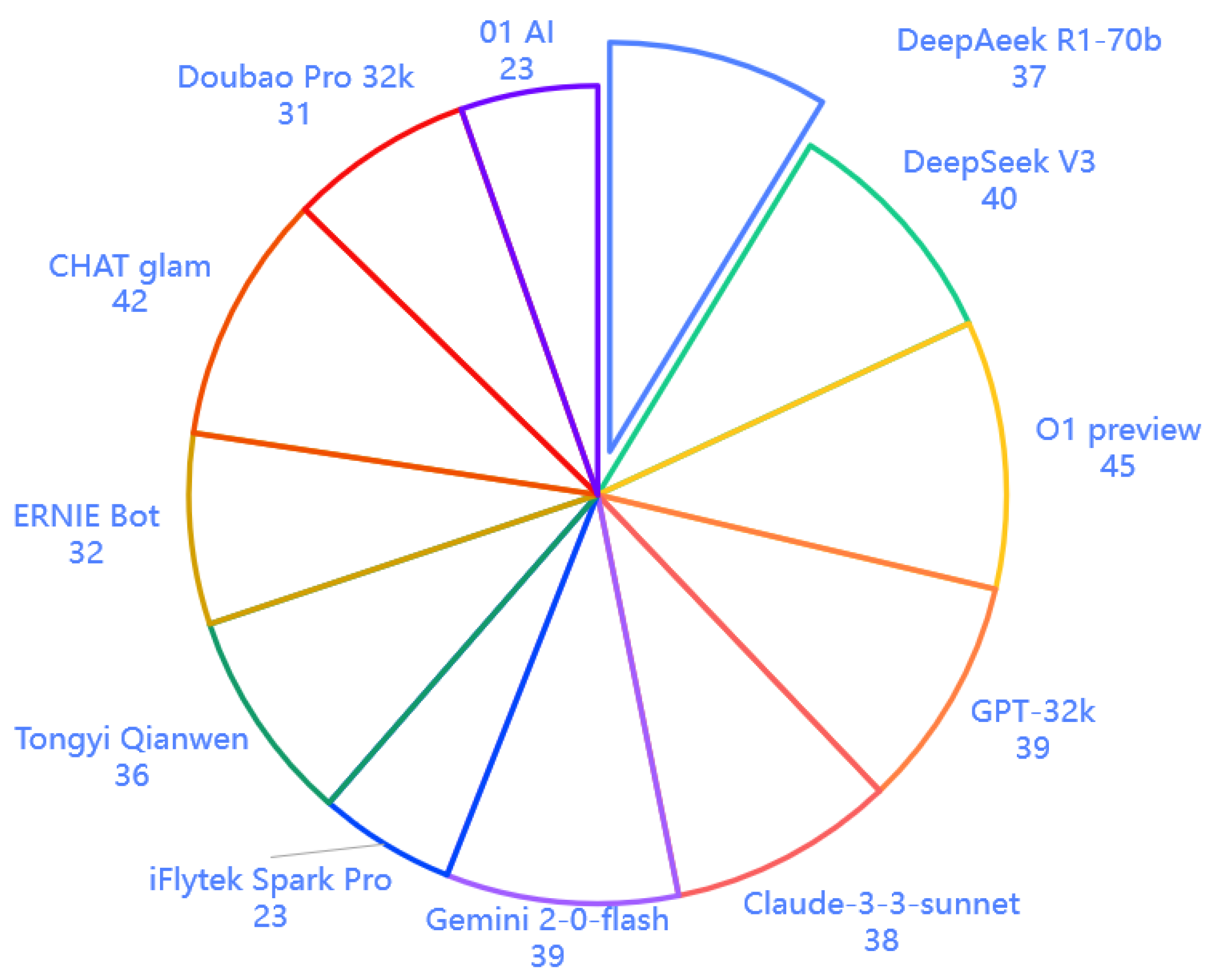

Twelve common large models, including DeepSeek R1-70b, DeepSeek V3, and O1 preview, were used to generate emergency language service plans, and the plans were scored against Table 1, “Generative evaluation indicators for emergency language service plans”. The resulting performance evaluation is presented below.

Table 5 lists the scores obtained by the plans generated by each of the twelve models across the evaluation dimensions (see Figure 1 for details), based on the scoring criteria in Table 1. Each dimension in Table 5 contains three sub-items, each scored from 0 to 3 according to Table 1. Each score in Table 5 is therefore the sum of three sub-item scores, giving a range of 0 to 9 per dimension.
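The aggregation rule above (three sub-items scored 0 to 3, summed to a 0 to 9 dimension score) can be expressed as follows. The function names and example values are hypothetical placeholders, not data from Table 5.

```python
def dimension_scores(sub_scores: dict[str, list[int]]) -> dict[str, int]:
    """Sum the three 0-3 sub-item scores of each dimension into a 0-9 score."""
    result = {}
    for dim, items in sub_scores.items():
        if len(items) != 3 or any(s not in (0, 1, 2, 3) for s in items):
            raise ValueError(f"{dim}: expected three sub-item scores in 0..3, got {items}")
        result[dim] = sum(items)
    return result


def total_score(sub_scores: dict[str, list[int]]) -> int:
    """Total across all dimensions for one model's plan."""
    return sum(dimension_scores(sub_scores).values())


# Placeholder sub-item scores for one hypothetical plan.
plan = {"Targeted services": [3, 3, 3], "Legal and regulatory framework": [0, 1, 0]}
print(dimension_scores(plan), total_score(plan))
```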

Based on the current language capabilities of major AI models, no single model can generate emergency language service plans that achieve optimal scores across all evaluation dimensions.

DeepSeek V3 demonstrates strong performance in process coverage, service stratification, and cultural adaptation. However, it needs significant improvement in technical empowerment and redundant system construction, as well as stronger legal authorization frameworks and dynamic update mechanisms, to strengthen service resilience in extreme high-altitude environments.

O1 preview excels in comprehensive process coverage, precise service stratification, robust resource integration, and cultural adaptability, with a focus on special-needs groups. Nevertheless, it needs further development in resilience assurance, legal compliance, and dynamic evolution mechanisms. GPT-4 32k performs exceptionally well in full-process coverage, granular service stratification, resource integration, and cultural adaptation. Areas for improvement include technical empowerment, resilience assurance, legal compliance, and dynamic optimization.

Claude-3.5-Sonnet achieves adequate full-process coverage, reasonable service stratification, and mature resource integration. However, it lacks AI-driven applications, legal frameworks, backup systems for resilience, dynamic optimization mechanisms, and standardized cultural risk prevention protocols. Gemini-2.0-Flash stands out in full-process coverage, refined service stratification, multi-dimensional resource networking, and resilience systems. Its notable weaknesses include human–AI collaboration, cultural risk management, legal framework alignment, and dynamic evolution capabilities.

iFlytek Spark Pro offers practical strengths in process design and resource coordination but needs to address deficiencies in technical implementation, legal compliance, and service refinement. Tongyi Qianwen delivers excellence in service precision, resource integration, and full-process design yet requires breakthroughs in technology application, legal frameworks, and dynamic optimization mechanisms.

ERNIE Bot showcases outstanding process integrity and service accuracy but must prioritize advancements in technical application, legal compliance, and dynamic optimization systems. ChatGLM achieves remarkable full-process coverage and precision-oriented services, though significant upgrades are needed in technology integration, legal compliance, and dynamic optimization frameworks. Doubao Pro 32k excels in full-process management and service precision but requires deeper technical sophistication, legal framework alignment, and dynamic optimization capabilities.

01.AI demonstrates exceptional performance in comprehensive process coverage, precision-driven services, and efficient resource integration. Continued efforts are required to deepen technical applications, strengthen legal frameworks, enhance cultural risk prevention, optimize dynamic mechanisms, and refine resilience assurance systems.

3.3. Strength Analysis

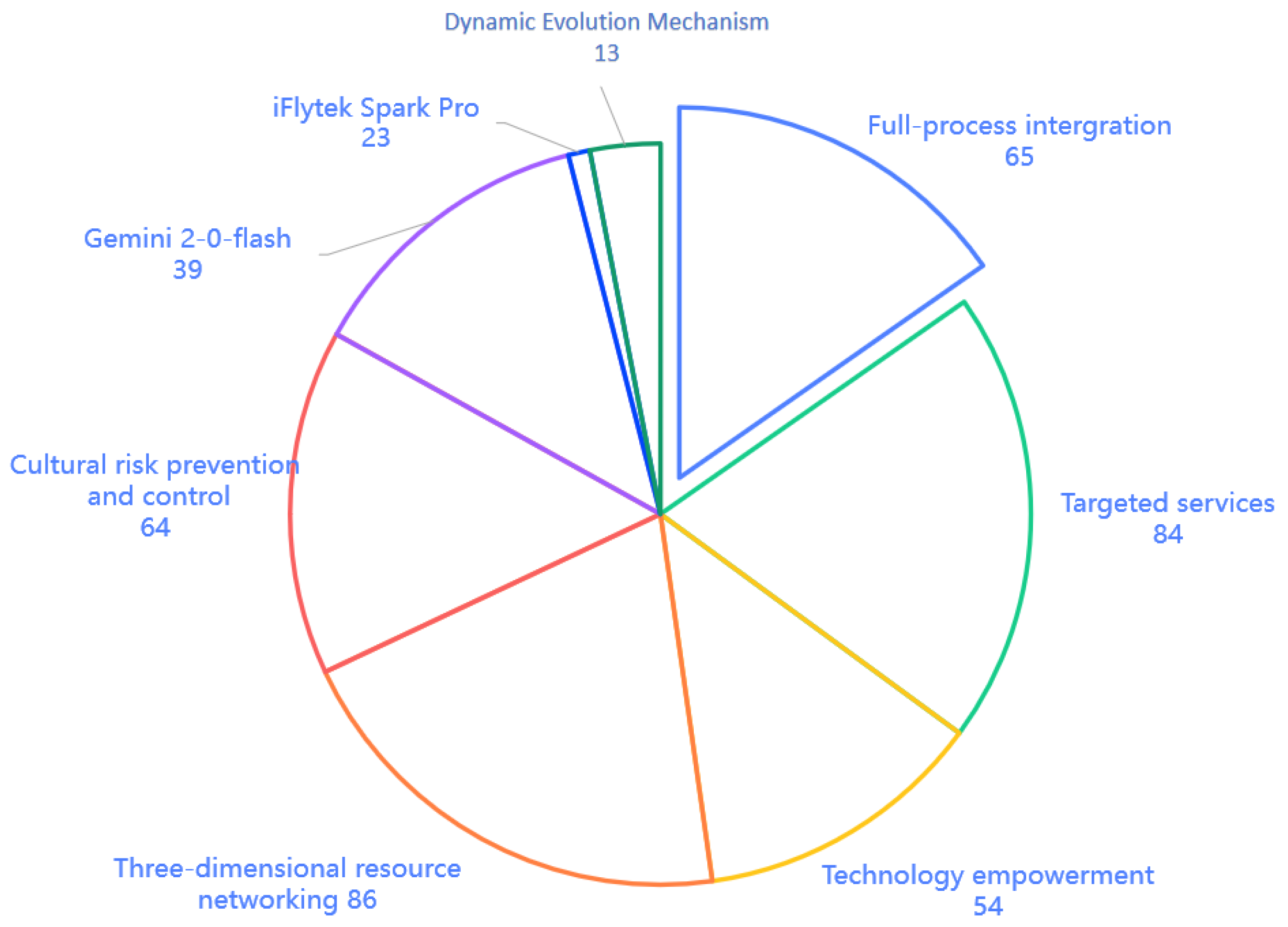

As the data distribution in Figure 2 shows, the emergency language service plans automatically generated by large AI models demonstrate strong advantages (scores exceeding 60 points) in targeted services, full-process integration, three-dimensional resource networking, and cultural risk prevention and control. The total scores for plan generation by the different models are summarized in Table 6. The proportion of scores for each evaluation dimension is shown in Figure 3, and the specific scores are provided in Table 7.
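Assuming the dimension totals in Figure 2 are sums of the 0 to 9 dimension scores across the twelve models (so each total can reach at most 12 × 9 = 108 points), the dimension-level view can be reproduced by summing across models, computing each dimension's share of the grand total, and flagging dimensions above the 60-point mark. The inputs in the sketch are toy values, not the study's results.

```python
def dimension_totals(model_scores: dict[str, dict[str, int]]) -> dict[str, int]:
    """Sum each dimension's 0-9 score across all models."""
    totals: dict[str, int] = {}
    for scores in model_scores.values():
        for dim, score in scores.items():
            totals[dim] = totals.get(dim, 0) + score
    return totals


def dimension_shares(totals: dict[str, int]) -> dict[str, float]:
    """Each dimension's share of the grand total (basis for a proportion chart)."""
    grand = sum(totals.values())
    if grand == 0:
        raise ValueError("all scores are zero; shares are undefined")
    return {dim: t / grand for dim, t in totals.items()}


def strong_dimensions(totals: dict[str, int], threshold: int = 60) -> list[str]:
    """Dimensions whose summed score exceeds the threshold, alphabetically."""
    return sorted(dim for dim, t in totals.items() if t > threshold)


# Toy input for two hypothetical models (not the study's data).
toy = {"Model A": {"x": 9, "y": 0}, "Model B": {"x": 8, "y": 2}}
totals = dimension_totals(toy)
print(totals, dimension_shares(totals), strong_dimensions(totals, threshold=10))
```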

Most large models excel in targeted services.

The DeepSeek series (R1-70b and V3), O1 preview, and GPT-4 32k all achieved perfect scores of 9 points. This indicates that these models can accurately identify demand differences across languages and dialects, adapt communication styles to user characteristics (e.g., age, cultural background, and expertise), deliver precise and clear language services in emergencies, and convert between professional terminology and colloquial expressions to ensure seamless information delivery.

Claude-3.5-Sonnet (8 points) and Gemini-2.0-Flash (7 points) also performed well, suggesting that leading international models have reached a high level of proficiency in precision services. This strength likely stems from the diversity and richness of their training data and from deep optimization of their multilingual processing capabilities.

In full-process integration, GPT-4 32k scored the highest (9 points), demonstrating its ability to cover all phases from early warning to plan formulation, implementation, and evaluation; provide tailored language support at each stage; and maintain coherence and consistency across processes.

ChatGLM ranked second (7 points), while other Chinese models generally underperformed (e.g., iFlytek Spark Pro scored only 3 points). This suggests that international models may benefit from broader training data and case studies related to emergency management, whereas most Chinese models lack systematic expertise in handling emergency workflows.

In three-dimensional resource networking, DeepSeek V3 and GPT-4 32k tied for the top score (9 points), highlighting their ability to identify and integrate multi-source resources, develop collaborative resource allocation strategies, and manage complex cross-departmental and cross-regional resource networks.

Chinese models such as ERNIE Bot (8 points) and Tongyi Qianwen (7 points) performed well in this dimension, approaching international benchmarks. This indicates that large models inherently excel in information integration and networked thinking, while Chinese models also show competitiveness in local resource network comprehension.

In cultural risk prevention and control, DeepSeek V3 and O1 preview performed best (8 points), demonstrating strong recognition of cultural sensitivities, proactive identification of potential cultural conflict points, and the ability to provide culturally adaptive solutions.

Chinese models such as Doubao (3 points) and 01.AI (2 points) lagged significantly in this area. This gap likely reflects the international models' access to more culturally diverse training data and their greater emphasis on anticipating cultural conflicts through targeted accumulation of relevant experience.

3.4. Weakness Analysis

The shortcomings of the emergency language service protocols generated by the eleven large models are most pronounced in two dimensions: legal and regulatory frameworks, and dynamic evolution mechanisms. Legal and regulatory frameworks scored the lowest of all dimensions, with a combined total of only 4 points across all models.

All models performed poorly on legal and regulatory frameworks. Most scored 0 points, including all Chinese models (e.g., Doubao and 01.AI) and some international models. The highest scores were 2 points (DeepSeek V3) and 1 point (GPT-32k).

This widespread gap likely reflects several key issues: emergency language service laws and regulations tend to be specialized and fragmented, making them difficult for models to learn comprehensively; significant regional and national differences in legal frameworks complicate a unified understanding; training data probably lack systematic legal materials specific to emergency services; and legal interpretation requires rigorous reasoning, not just pattern recognition.

Dynamic evolution mechanisms were the second major weakness. Most models struggled to adapt to changing conditions: the highest score was 3 points (DeepSeek R1-70b and O1 preview), and most models scored 0 or 1 point.

This suggests that current large language models have the following limitations: a weak grasp of temporal sequences and event evolution patterns, an inability to adjust protocols dynamically as new information emerges, limited capacity to anticipate and adapt to unforeseen scenarios, and the absence of feedback loops for continuous improvement through practice.

In resilience assurance, international models performed better, with Gemini-2.0-Flash scoring 8 points and Claude-3-3-Sonnet scoring 7 points. Chinese models showed moderate results, with Tongyi Qianwen scoring 8 points and iFlytek Spark Pro scoring 5 points. The DeepSeek models scored extremely poorly, with both DeepSeek R1-70b and DeepSeek V3 receiving 0 points.

This gap may reflect the following shortcomings: some models lack an understanding of system resilience concepts, Chinese and Western approaches diverge in their philosophies of resilience assurance, and model training focuses unevenly on extreme scenarios and stress testing.

In technology empowerment, the DeepSeek models (7 points) and O1 preview (6 points) outperformed the others. Chinese models generally lagged behind, with iFlytek Spark Pro scoring 1 point and 01.AI scoring 2 points.

This differentiation likely reflects a limited grasp of emerging technologies (e.g., AI and IoT) in emergency services, a lack of training data combining cutting-edge technology with emergency scenarios in domestic models, and a gap between the models' technology integration capabilities and the pace of technological advances.

3.5. Analysis of Model Performance Variations

3.5.1. International Model vs. Chinese Model

International models show superior precision service capabilities across the board, more comprehensive end-to-end process coverage, and a deeper understanding of technology empowerment.

Among the Chinese models, Tongyi Qianwen excels in resilience assurance systems (8 points), and ERNIE Bot leads in multi-dimensional resource networks (8 points). Their overall weaknesses, in legal frameworks and dynamic evolution mechanisms, mirror those of the international models.

3.5.2. Cluster Analysis of Model Performance

Based on the overall scores, the models fall into three categories:

Comprehensive Leaders: GPT-32k is top-tier in process coverage (9 points), precision services (9 points), and resource networks (9 points) but weak in resilience (3 points) and legal frameworks (1 point). ChatGLM offers balanced performance with no major weaknesses, excelling in process coverage (7 points) and resilience (8 points).

Specialized Performers: DeepSeek V3 is outstanding in precision services (9 points), resource networks (9 points), and cultural risk mitigation (8 points) but fails in resilience (0 points). O1 preview is strong in precision services (9 points) and cultural risk mitigation (8 points).

Baseline Models: Most Chinese models (e.g., iFlytek Spark Pro and 01.AI) score 3–5 points across dimensions. They have no standout strengths or critical weaknesses.

4. Conclusions

Wang Hui pointed out that emergency language services require heightened awareness, the establishment of related laws and regulations, the development of intelligent emergency language service systems, more pre-incident drills, and greater emphasis on the reserve and training of emergency language service professionals [19]. Building on these foundations, this section proposes strategies for strengthening the legal and regulatory framework and the dynamic evolution mechanisms of earthquake emergency language service plans generated through chain-of-thought prompt engineering with large models, together with recommendations for model selection, hybrid application, and system integration, with the aim of improving both plan generation and quality assessment.

4.1. Legal and Regulatory Framework Capability Enhancement Strategies

Increase domain-specific data and build a professional knowledge base of laws and regulations for emergency language services. Integrate international, national, and local emergency regulatory systems, for instance, the glossary published in 2019 by Germany's Federal Office of Civil Protection and Disaster Assistance (https://www.bbk.bund.de), which explains key concepts in civil defense work, and the Crisis Communication Guide released by Germany's Federal Ministry of the Interior (https://www.bmi.bund.de). Develop a case base of real-world legal applications for model fine-tuning.
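The knowledge base described above could be organized along the three jurisdiction levels the text names. The following is a minimal sketch, assuming each entry carries a jurisdiction level and topic keywords and that lookups should return the most specific level first; the `RegulationEntry` and `RegulationKnowledgeBase` names, the keywords, and the local-before-national-before-international ordering are illustrative assumptions, not a schema proposed by the study. The two real entries are the German sources cited above; the local entry is an explicit placeholder.

```python
from dataclasses import dataclass, field
from typing import Optional

# Jurisdiction levels, ordered from most to least specific (assumed ordering).
LEVELS = ("local", "national", "international")


@dataclass
class RegulationEntry:
    title: str
    level: str                        # one of LEVELS
    source_url: Optional[str] = None  # None for placeholder entries
    year: Optional[int] = None        # None when no year is given
    keywords: set[str] = field(default_factory=set)


class RegulationKnowledgeBase:
    """Hypothetical multi-level store of emergency-language regulations."""

    def __init__(self) -> None:
        self.entries: list[RegulationEntry] = []

    def add(self, entry: RegulationEntry) -> None:
        if entry.level not in LEVELS:
            raise ValueError(f"unknown jurisdiction level: {entry.level!r}")
        self.entries.append(entry)

    def lookup(self, keyword: str) -> list[RegulationEntry]:
        """Return entries tagged with `keyword`, most specific level first."""
        hits = [e for e in self.entries if keyword in e.keywords]
        return sorted(hits, key=lambda e: LEVELS.index(e.level))


kb = RegulationKnowledgeBase()
# The two German sources cited in the text; keywords are illustrative.
kb.add(RegulationEntry("BBK glossary of civil defense concepts", "national",
                       "https://www.bbk.bund.de", 2019, {"terminology"}))
kb.add(RegulationEntry("BMI Crisis Communication Guide", "national",
                       "https://www.bmi.bund.de", None, {"crisis communication"}))
# Placeholder for a local rule; not a real regulation.
kb.add(RegulationEntry("Placeholder municipal interpreting rule", "local",
                       keywords={"terminology"}))
```

Ordering results by specificity reflects a common assumption that local rules refine national ones; a real system would need legal experts to confirm precedence case by case.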

Strengthen reasoning capabilities by developing specialized training methods for understanding legal texts, and introduce a legal expert review mechanism to optimize the model's performance in legal interpretation. Establish a mechanism for updating laws and regulations so that the model's regulatory knowledge stays current.
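One way to realize such an update mechanism is a registry that keeps only the newest version of each regulation and records what it replaced. This is a minimal sketch, assuming every regulation has a stable identifier and an effective date; the `RegulationRegistry` class, the identifiers, and the idea of exporting a snapshot for retrieval or fine-tuning are hypothetical and not taken from the study.

```python
from datetime import date


class RegulationRegistry:
    """Hypothetical registry that keeps only the newest version of each regulation."""

    def __init__(self) -> None:
        self._current: dict[str, tuple[date, str]] = {}
        self.superseded: list[tuple[str, date]] = []  # audit trail of replaced versions

    def update(self, reg_id: str, effective: date, text: str) -> bool:
        """Record a version; return True if it becomes the current one."""
        existing = self._current.get(reg_id)
        if existing is not None and existing[0] >= effective:
            return False  # stale or duplicate version: ignore it
        if existing is not None:
            self.superseded.append((reg_id, existing[0]))
        self._current[reg_id] = (effective, text)
        return True

    def current_text(self, reg_id: str) -> str:
        return self._current[reg_id][1]

    def snapshot(self) -> dict[str, str]:
        """Current texts only, e.g. for building a retrieval index or fine-tuning set."""
        return {rid: text for rid, (_, text) in self._current.items()}


reg = RegulationRegistry()
reg.update("reg-001", date(2020, 1, 1), "version 1")
reg.update("reg-001", date(2023, 6, 1), "version 2")
reg.update("reg-001", date(2021, 3, 1), "stale")  # ignored: older than the current version
```

Comparing effective dates rather than arrival order means a late-arriving older text cannot overwrite a newer rule.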

Integrate cross-disciplinary knowledge, combining legal knowledge with emergency management know-how. Develop a legal consultation support system that provides professional assistance at critical junctures.

4.2. Dynamic Evolutionary Mechanism Enhancement Plan

Reinforce event sequencing training by constructing a time-series dataset, thus enhancing the model’s understanding of how events evolve.
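A time-series dataset of this kind can be built by sorting a time-stamped event log and pairing each event with the events that preceded it. The sketch below is a minimal illustration under stated assumptions: timestamps are hours since the main shock, the `window` parameter limits how much history each example carries, and the event log is hypothetical rather than drawn from the study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    hour: float         # hours since the main shock (assumed time axis)
    description: str


def make_sequence_examples(events: list[Event], window: int = 3) -> list[tuple[list[str], str]]:
    """Convert a time-stamped event log into (history, next event) pairs.

    Events are sorted chronologically; each example pairs up to `window`
    preceding events with the event that follows them.
    """
    ordered = sorted(events, key=lambda e: e.hour)
    examples = []
    for i in range(1, len(ordered)):
        history = [e.description for e in ordered[max(0, i - window):i]]
        examples.append((history, ordered[i].description))
    return examples


# Hypothetical event log (deliberately out of order).
log = [
    Event(0.0, "main shock"),
    Event(2.5, "shelters opened"),
    Event(1.0, "strong aftershock"),
    Event(6.0, "requests for information in several languages"),
]
pairs = make_sequence_examples(log, window=2)
```

Each pair asks the model to anticipate what comes next given recent history, which targets the weak grasp of event evolution reported in the evaluation.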

Develop dynamic scenario simulation training to boost the model's adaptability. Establish a real-time feedback loop that enables the model to adjust the plan based on new information. Develop a plan execution evaluation system to facilitate continuous model optimization.
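The feedback loop and execution evaluation above can be sketched as two small steps: revise the plan when field reports reveal uncovered languages, and measure how many language-assistance requests were actually served. This is a minimal sketch; the `LanguagePlan` structure, the coverage metric, and the sample languages are hypothetical choices made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class LanguagePlan:
    languages: set[str]
    version: int = 1
    changelog: list[str] = field(default_factory=list)


def apply_field_report(plan: LanguagePlan, reported: set[str]) -> LanguagePlan:
    """Feedback step: add languages reported in the field but missing from the plan."""
    missing = reported - plan.languages
    if missing:
        plan.languages |= missing
        plan.version += 1
        plan.changelog.append(f"v{plan.version}: added {', '.join(sorted(missing))}")
    return plan


def coverage_rate(served: int, requested: int) -> float:
    """Evaluation step: share of language-assistance requests that were served.

    With no requests there is nothing unmet, so the rate is defined as 1.0.
    """
    return 1.0 if requested == 0 else served / requested


plan = LanguagePlan({"Mandarin", "English"})
apply_field_report(plan, {"English", "Tibetan"})
```

Versioning each revision keeps a record of how the plan evolved, which the execution evaluation could later compare against coverage rates.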

Enhance contextual adaptability by training the model to identify key variables and triggers, and develop a multi-solution preparation mechanism to improve the ability to handle uncertainties.
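Key variables, triggers, and pre-prepared alternatives can be expressed as condition and action pairs that are checked against the current situation. The sketch below assumes a situation is a dictionary of numeric variables; the variable names, thresholds, and contingency actions are hypothetical, since the study does not specify any.

```python
from typing import Callable

State = dict[str, float]

# Hypothetical key variables and thresholds; the study does not specify any.
CONTINGENCIES: list[tuple[str, Callable[[State], bool], str]] = [
    ("strong aftershock",
     lambda s: s.get("aftershock_magnitude", 0.0) >= 6.0,
     "re-broadcast evacuation notices in all plan languages"),
    ("interpreter shortage",
     lambda s: s.get("interpreters_available", 0) < s.get("interpreters_needed", 0),
     "switch to remote interpreting and machine-translation fallback"),
    ("communications outage",
     lambda s: s.get("network_up", 1.0) == 0.0,
     "use printed multilingual notices and loudspeakers"),
]

DEFAULT_ACTION = "continue the baseline plan"


def select_actions(state: State) -> list[str]:
    """Return the pre-prepared actions whose trigger conditions hold."""
    fired = [action for _, trigger, action in CONTINGENCIES if trigger(state)]
    return fired or [DEFAULT_ACTION]
```

Several triggers can fire at once, so the function returns every matching action rather than only the first.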

4.3. Model Selection and Application Recommendations

For comprehensive emergency plan generation, GPT-32k or ChatGLM is recommended as the first choice, given their strong end-to-end capabilities and balanced performance across multiple dimensions. Human review of legal and regulatory content is still advisable.

For scenarios requiring precise multilingual services, the DeepSeek series or O1 preview is recommended, as they excel in accurate service delivery, making them suitable for multi-language, multi-cultural emergency services.

To build a localized emergency service system, Tongyi Qianwen or Wenxin Yiyan (ERNIE Bot) is recommended, given their strengths in resilience support and local resource networks, which make them suitable for region-specific domestic emergency language service systems.

In culture-sensitive scenarios, DeepSeek V3 or O1 preview is recommended due to their notable strengths in cultural risk prevention and control, making them well-suited for international emergency cooperation with frequent cross-cultural exchanges.
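The recommendations above can be encoded as a lookup table. When an operation spans several scenarios, one plausible (assumed, not proposed by the study) way to combine them is to rank models by how many of the required scenarios they are recommended for. The `shortlist` function and the scenario keys are hypothetical; the model names follow the recommendations in the text.

```python
from collections import Counter

# Scenario-to-model recommendations as stated in Section 4.3.
RECOMMENDATIONS: dict[str, tuple[str, ...]] = {
    "comprehensive plan generation": ("GPT-32k", "ChatGLM"),
    "precise multilingual services": ("DeepSeek series", "O1 preview"),
    "localized emergency service system": ("Tongyi Qianwen", "Wenxin Yiyan"),
    "culture-sensitive scenarios": ("DeepSeek V3", "O1 preview"),
}


def shortlist(scenarios: list[str]) -> list[str]:
    """Rank recommended models by how many requested scenarios they cover.

    Raises KeyError for a scenario not in RECOMMENDATIONS. Models with equal
    counts keep the order in which they were first encountered.
    """
    counts: Counter = Counter()
    for scenario in scenarios:
        counts.update(RECOMMENDATIONS[scenario])
    return [model for model, _ in counts.most_common()]


ranked = shortlist(["precise multilingual services", "culture-sensitive scenarios"])
```

For a multilingual, culture-sensitive operation, O1 preview ranks first here because it is recommended for both scenarios.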

4.4. Hybrid Application and System Integration Strategies

Given the limitations of any single model, a multi-model collaboration approach is recommended to ensure the quality of the generated plans. Use GPT-32k (strong end-to-end coverage) in the plan formulation phase, the DeepSeek series (high-precision services) to design multilingual services, DeepSeek V3 or Wenxin Yiyan (strong resource-network capabilities) for resource deployment plans, and Gemini-2.0-Flash or Tongyi Qianwen for resilience support design.

Build a three-layer model application architecture consisting of a foundation layer, a processing layer, and an evaluation layer. The foundation layer can employ lightweight models for information gathering and preliminary processing; the processing layer can use comprehensive large models for core plan generation; and the evaluation layer can carry out quality control and optimization, drawing on specialized models for supplementary tasks.
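The multi-model routing and three-layer architecture described above can be sketched as a small pipeline in which each layer is a function and model access is injected as a callable. This is a minimal sketch under stated assumptions: `ModelCall`, `fake_model`, the deduplication step, and the quality check are hypothetical, and no real model API is invoked; only the task-to-model routing follows the text.

```python
from typing import Callable

# Task-to-model routing from Section 4.4; the first listed model is used here.
TASK_MODELS: dict[str, tuple[str, ...]] = {
    "plan formulation": ("GPT-32k",),
    "multilingual services": ("DeepSeek series",),
    "resource deployment": ("DeepSeek V3", "Wenxin Yiyan"),
    "resilience support": ("Gemini-2.0-Flash", "Tongyi Qianwen"),
}

ModelCall = Callable[[str, str], str]  # (model name, prompt) -> text; hypothetical


def foundation_layer(reports: list[str]) -> str:
    """Preliminary processing: drop blank and duplicate field reports."""
    cleaned = (r.strip() for r in reports)
    return "\n".join(dict.fromkeys(r for r in cleaned if r))


def processing_layer(context: str, call: ModelCall) -> dict[str, str]:
    """Core generation: send each plan section to its routed model."""
    return {task: call(models[0], f"{task}:\n{context}")
            for task, models in TASK_MODELS.items()}


def evaluation_layer(sections: dict[str, str], passes: Callable[[str], bool]) -> list[str]:
    """Quality control: list the sections that fail the supplied check."""
    return [task for task, text in sections.items() if not passes(text)]


def fake_model(model: str, prompt: str) -> str:
    """Stand-in for a real model API; no model is actually called."""
    return f"[{model}] draft for {prompt.splitlines()[0]}"


context = foundation_layer(["Shelter A requests English notices",
                            "Shelter A requests English notices", "   "])
sections = processing_layer(context, fake_model)
```

Injecting the model call keeps the layers independent of any particular vendor, so the routing table can change without touching the pipeline.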

Establish a human–machine collaborative support system. In areas where models are generally weak (e.g., laws and regulations, and dynamic evolution), introduce human review and adopt a "model-assisted + expert-led decision" workflow. Develop professional domain knowledge bases as supplementary resources for model reasoning.
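A "model-assisted + expert-led decision" workflow can be sketched as a routing rule: model drafts in weak domains require expert sign-off, while other drafts are accepted. This is a minimal sketch; the `Section` structure, the reviewer callback returning an approval flag and note, and the domain labels are hypothetical, with only the two weak domains taken from the evaluation results.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Domains where the evaluated models were generally weak (Section 3.4).
EXPERT_LED_DOMAINS = {"legal and regulatory", "dynamic evolution"}


@dataclass
class Section:
    domain: str
    draft: str                          # model-generated draft
    approved: bool = False
    reviewer_note: Optional[str] = None


# Expert callback: returns (approved, note). Hypothetical interface.
Reviewer = Callable[[Section], tuple[bool, str]]


def review_plan(sections: list[Section], expert: Reviewer) -> list[Section]:
    """Route weak-domain drafts to the expert; return sections still needing revision."""
    for s in sections:
        if s.domain in EXPERT_LED_DOMAINS:
            s.approved, s.reviewer_note = expert(s)
        else:
            s.approved = True  # model draft accepted without expert sign-off
    return [s for s in sections if not s.approved]


def strict_expert(section: Section) -> tuple[bool, str]:
    """Example reviewer that rejects every draft it sees."""
    return False, "cite the applicable regulation"


plan_sections = [Section("multilingual services", "draft A"),
                 Section("legal and regulatory", "draft B")]
pending = review_plan(plan_sections, strict_expert)
```

Keeping the final decision with the expert in weak domains, while letting the model handle the rest, mirrors the division of labor the text recommends.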