The Principle of Shared Utilization of Benefits Applied to the Development of Artificial Intelligence

Abstract

1. Definition and Origin of the BSP Principle in Biomedical and Biogenetic Contexts

1.1. Structural Inequalities and Profit Concentration in AI Development

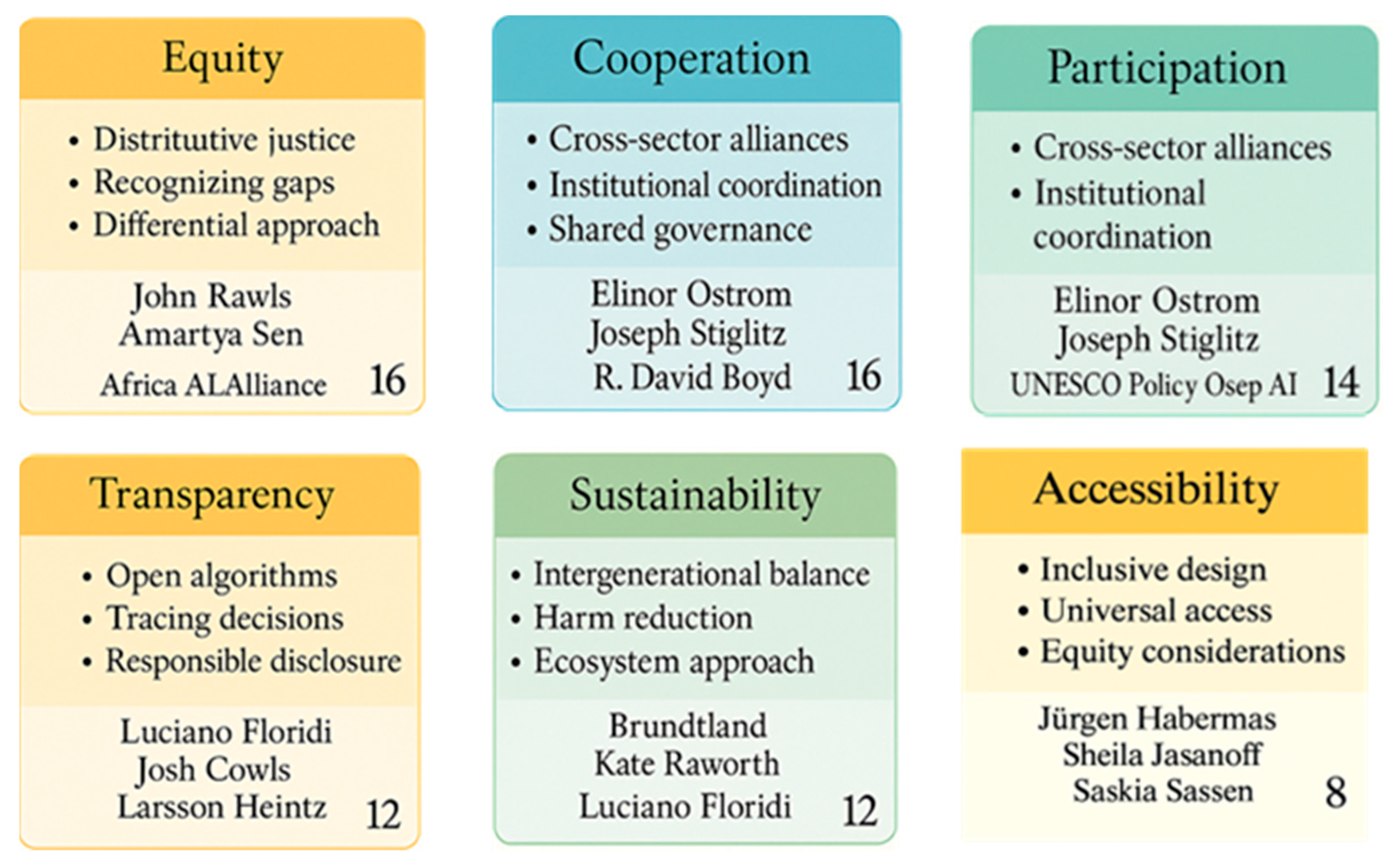

1.2. Normative and Ethical Bases of Fair Distribution in Science and Technology

1.3. Innovation, Unequal Access and Private Appropriation of Knowledge

1.4. International Regulatory Frameworks and Inclusive Governance Mechanisms

Theoretical and Critical Approaches to Distributive Justice and Emerging Technologies

1.5. Ethical Risks of Unregulated AI Development

1.5.1. Evolution of the Principle of Profit-Sharing in International Frameworks

1.5.2. Legal Instruments and Implementation Challenges

1.5.3. Bioprospecting, Innovation, and the Risks of Neocolonial Exploitation

1.6. The BSP Applied to Artificial Intelligence

1.6.1. Inclusive Governance and Equitable Access

1.6.2. Inclusion of the Global South in Technological Governance

1.7. Ethical and Participatory Governance of Artificial Intelligence

1.7.1. Structural Risks of Automation and Political Manipulation

1.7.2. Technocolonialism and Digital Sovereignty from the Global South

1.7.3. An AI Focused on Human Well-Being and the Common Good

1.7.4. Posthumanism, Artificial Sovereignty and Algorithmic Biopolitics

1.8. Obstacles and Tensions in the Implementation of the Principle

1.8.1. Digital Colonialism and Structural Inequalities

1.8.2. Multidimensional Impacts of the AI Deficit in the Global South

1.8.3. Ethical and Political Foundations of Fair AI

1.8.4. Proposals for Inclusive and Sustainable Artificial Intelligence

1.9. Participatory Governance and Equity in Benefit Distribution

Common Infrastructures and Digital Redistributive Justice

2. Current Context of AI Development

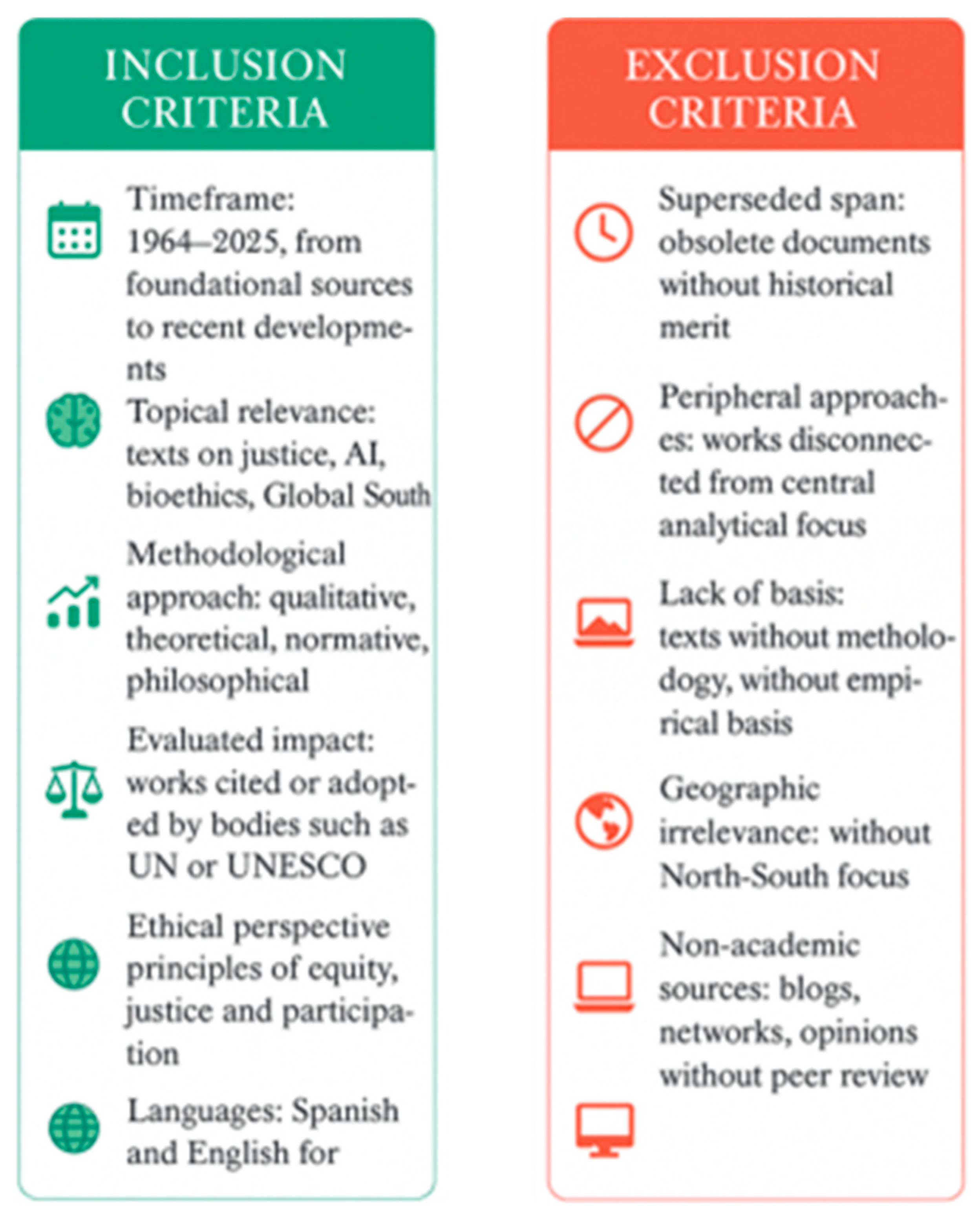

3. Research Design

4. Results

5. Discussion

5.1. Interpretation of Prior Positions and Results

5.2. Reflection on the Results

5.3. Proposal for a Global Regulatory Architecture

6. Central Findings and Problem Solution

Orientation of Future Research

7. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| AI | Artificial intelligence |

| 1 | The bioethical principle of the shared use of benefits is expressed in Article 15 of the Universal Declaration on Bioethics and Human Rights, adopted by UNESCO in 2005. It promotes the fair and equitable distribution of the benefits derived from the use of knowledge, data or recovery actors. |

| 2 | Distributive justice is an ethical doctrine that seeks to allocate resources and opportunities equitably, prioritizing the well-being of historically disadvantaged groups. Rawls, J., in his 1971 work “A Theory of Justice”, argues that a fair society is one that structurally improves the situation of its least favored members. |

| 3 | The Global North is a geopolitical concept that refers to countries with high technological, economic and political capacity, which lead the global dynamics of production, innovation and governance. Although its delimitation does not depend on geographical location, it commonly includes Western Europe, the United States, Canada, Japan and certain advanced economies of the Asia-Pacific region. In this regard, Escobar, A., in his 1995 book “Encountering Development: The Making and Unmaking of the Third World”, analyzes in depth the power relationship between the “First World” (North) and the “Third World” (South). |

| 4 | Co-responsibility is an ethical approach that requires the equitable participation of all actors involved in the generation, control and distribution of technological benefits. In this field, the German philosopher Karl-Otto Apel is widely recognized as the main theorist who systematized this concept within the framework of his universalist discourse ethics. |

| 5 | The Global South is an analytical category that identifies the historically relegated countries and communities within the international system, characterized by low levels of industrialization, technological dependence and limited influence on global decision-making processes. Although it is not strictly geographical, the concept points to structural inequalities in access to resources, knowledge and power. |

| 6 | Algorithmic sovereignty is described as the institutional and legal capacity of states to regulate the design, implementation and supervision of algorithmic systems that affect their populations. The body of this article indicates citations where the concept is explored in more depth. |

| 7 | Digital colonialism is a contemporary form of domination that operates through data extraction, surveillance and information control exercised by corporations of the Global North over peripheral countries. Kwet, M., in his work “Digital Colonialism: US Empire and the New Imperialism in the Global South”, develops a proposal that expands on this concept. |

References

- Vargas, C. Tendencias y principios en las corrientes bioéticas. Rev. Colomb. Bioét. 2021, 16, 1–22. Available online: http://www.scielo.org.co/scielo.php?script=sci_arttext&pid=S1900-68962021000200119 (accessed on 16 May 2025).

- Sulasa, T.; Kumar, R. Redefining the impetus of opulence and artificial intelligence on human productivity and work ethos. Russ. Law J. 2023, 11, 2628–2635. Available online: https://www.russianlawjournal.org/index.php/journal/article/view/3119/1914 (accessed on 9 May 2025).

- O’Sullivan, S.; Nevejans, N.; Allen, C.; Blyth, A.; Leonard, S.; Pagallo, U.; Holzinger, K.; Holzinger, A.; Sajid, M.I.; Ashrafian, H. Legal, regulatory, and ethical frameworks for development of standards in artificial intelligence (AI) and autonomous robotic surgery. Int. J. Med. Robot. Comput. Assist. Surg. 2019, 15, 1–19.

- Bernal, D. Bioderecho internacional. Derecho y Realidad 2015, 13, 33–54.

- UN. Convention on Biological Diversity; Secretary-General of the United Nations: New York, NY, USA; Available online: https://treaties.un.org/doc/treaties/1992/06/19920605%2008-44%20pm/ch_xxvii_08p.pdf (accessed on 29 June 2024).

- UNEP. Bonn Guidelines on Access to Genetic Resources and Fair and Equitable Sharing of the Benefits Arising out of their Utilization; Secretariat of the Convention on Biological Diversity, United Nations Environment Programme: Montreal, QC, Canada; Available online: https://www.cbd.int/doc/publications/cbd-bonn-gdls-en.pdf (accessed on 19 September 2024).

- Rheeder, A. Benefit-sharing as a global bioethical principle: A participating dialogue grounded on a Protestant perspective on fellowship. Sabinet Afr. J. 2019, 51, 1–11. Available online: https://hdl.handle.net/10520/EJC-1b39c2c633 (accessed on 21 May 2025).

- CNB. El Concepto de Justicia en Bioética. Volnei Garrafa. In Secretaría de Salud de los Estados Unidos Mexicanos, Comisión Nacional de Bioética, México D.F. Available online: https://www.gob.mx/salud%7Cconbioetica/videos/el-concepto-de-justicia-en-bioetica-dr-volnei-garrafa (accessed on 31 July 2024).

- UNESCO. Universal Declaration on Bioethics and Human Rights: Background, Principles and Application; UNESCO: Paris, France; Available online: https://unesdoc.unesco.org/ark:/48223/pf0000179844 (accessed on 24 October 2024).

- CEPAL. Protocolo de Nagoya sobre Acceso a los Recursos Genéticos y Participación Justa y Equitativa en los Beneficios que se Deriven de su Utilización al Convenio sobre la Diversidad Biológica. Montreal, Quebec, Canadá: Comisión Económica para América Latina y el Caribe. Available online: https://observatoriop10.cepal.org/es/media/148 (accessed on 10 July 2024).

- UNESCO. Recommendation on the Ethics of Artificial Intelligence; General Conference of the United Nations Educational, Scientific and Cultural Organization: Paris, France; Available online: https://unesdoc.unesco.org/ark:/48223/pf0000380455 (accessed on 24 November 2021).

- OECD. OECD AI Principles; Recommendation of the Council on Artificial Intelligence (OECD/LEGAL/0449); Organisation for Economic Co-operation and Development: Paris, France; Available online: https://www.oecd.org/en/topics/ai-principles.html (accessed on 12 May 2019).

- Khazieva, N.; Pauliková, A.; Chovanová, H. Maximising synergy: The benefits of a joint implementation of knowledge management and artificial intelligence system standards. Mach. Learn. Knowl. Extr. 2024, 6, 2282–2302.

- ISO/IEC 42001:2023; Information Technology—Artificial Intelligence—Management System. International Organization for Standardization: Geneva, Switzerland, 2023. Available online: https://www.iso.org/standard/81296.html (accessed on 12 July 2025).

- United Nations. Sustainable Development Goals: Transforming Our World, 2030; United Nations: New York, NY, USA, 2014; Available online: https://www.undp.org/sites/g/files/zskgke326/files/migration/gh/SDGs-Booklet_Final.pdf (accessed on 12 January 2025).

- Vallejo, F.; Álvares, D. Distribución justa y equitativa de los beneficios derivados del uso de los recursos genéticos y los conocimientos tradicionales. Revisión de algunas propuestas normativas y doctrinarias para su implementación. Rev. Ius Praxis 2023, 29, 184–203.

- Garrafa, V.; Porto, D. Bioética de intervención. In Diccionario Latinoamericano de Bioética; Tealdi, J., Ed.; UNESCO: Bogotá, Colombia, 2008; p. 161. Available online: https://unesdoc.unesco.org/ark:/48223/pf0000161848 (accessed on 16 May 2025).

- Rawls, J. A Theory of Justice; Harvard University Press: Cambridge, MA, USA, 1999; pp. 1–560.

- Von Schomberg, R. A vision of responsible research and innovation. In Responsible Innovation: Managing the Responsible Emergence of Science and Innovation in Society; Owen, R., Bessant, J., Heintz, M., Eds.; John Wiley & Sons: Hoboken, NJ, USA, 2013; pp. 51–74.

- Henk, T.; Do Céu, M. Dictionary of Global Bioethics; Springer Nature: Cham, Switzerland, 2021; pp. 1–688.

- Ten, H. Justice and global health: Planetary considerations. In Environmental/Ecological Considerations and Planetary Health; Benatar, S., Brock, G., Eds.; Cambridge University Press: Cambridge, UK, 2021; pp. 316–325.

- UNCTAD. United Nations Conference on Trade and Development; United Nations: New York, NY, USA, 1964; Available online: https://unctadstat.unctad.org/EN/FromHbsToDataInsights.html (accessed on 1 July 2024).

- Hinkelammert, F. Crítica a la Razón Utópica; Desclée de Brouwer: Bilbao, Spain, 2002; pp. 1–406. Available online: http://repositorio.uca.edu.sv/jspui/bitstream/11674/1756/1/Cr%C3%ADtica%20de%20la%20raz%C3%B3n%20ut%C3%B3pica.pdf (accessed on 5 July 2025).

- Hinkelammert, F. El Huracán de la Globalización: La Exclusión y la Destrucción del Medio Ambiente Vistos Desde la Teoría de la Dependencia, 1st ed.; Universidad Centroamericana José Simeón Cañas: San Salvador, El Salvador, 1999; pp. 17–33. Available online: http://hdl.handle.net/11674/1006 (accessed on 4 July 2025).

- Hinkelammert, F. Aprovechamiento compartido de los beneficios del progreso. In Proceedings of the VI Congreso Internacional de la Red Bioética-UNESCO, 1st ed.; UNESCO: Panama City, Panama, 2016; pp. 1–7. Available online: http://repositorio.uca.edu.sv/jspui/bitstream/11674/824/1/Aprovechamiento%20compartido%20de%20los%20beneficios%20del%20progreso.pdf (accessed on 5 July 2025).

- Escobar, A. Una Minga Para el Postdesarrollo: Lugar, Medio Ambiente y Movimientos Sociales en las Transformaciones Globales; Programa Democracia y Transformación Global, Fundación Ford: Lima, Peru, 2010; pp. 1–222. Available online: http://bdjc.iia.unam.mx/items/show/46 (accessed on 1 July 2025).

- Shiva, V. Biopiracy: The Plunder of Nature and Knowledge; South End Press: Boston, MA, USA, 1997; pp. 127–133. ISBN 9780896085553.

- De Campos Rudinsky, T. Multilateralism and the global co responsibility of care in times of a pandemic: The legal duty to cooperate. Ethics Int. Aff. 2023, 37, 206–231.

- Rourke, M. Access and Benefit Sharing in Practice: Fostering Equity Between Users and Providers of Genetic Resources. J. Sci. Policy Gov. 2016, 13, 1–20. Available online: https://www.sciencepolicyjournal.org/uploads/5/4/3/4/5434385/rourke.pdf (accessed on 5 July 2025).

- Lavelle, J.; Wynberg, R. Benefit Sharing Under the BBNJ Agreement in Practice; Decoding Marine Genetic Resource Governance Under the BBNJ Agreement; Springer Nature: Cham, Switzerland, 2025; pp. 271–281.

- United Nations. Nagoya Protocol on Access to Genetic Resources and the Fair and Equitable Sharing of Benefits Arising from their Utilization to the Convention on Biological Diversity; United Nations: New York, NY, USA, 2010; Available online: https://treaties.un.org/pages/ViewDetails.aspx?src=TREATY&mtdsg_no=XXVII-8-b&chapter=27 (accessed on 4 July 2025).

- Craik, N. Equitable marine carbon dioxide removal: The legal basis for interstate benefit sharing. In Climate Policy; Taylor & Francis: Abingdon, UK, 2025; pp. 1–5.

- Kamau, E.; Winter, G. Equity and Innovation in International Biodiversity Law. Common Pools of Genetic Resources; Routledge: London, UK, 2013; pp. 1–456.

- Morgera, E. Corporate Environmental Accountability in International Law; Oxford University Press: Oxford, UK, 2020; pp. 1–352. ISBN 0198738048.

- Aubertin, C. Biopiraterie. In L’Encyclopédie du Développement Durable; Piéchaud, J., Ed.; Association 4D: Paris, France, 2006; pp. 117–120. Available online: https://horizon.documentation.ird.fr/exl-doc/pleins_textes/divers17-03/010069240.pdf (accessed on 5 July 2025).

- Ribeiro, S. Biopiraterie und geistiges Eigentum—Zur Privatisierung von gemeinschaftlichen Bereichen. In Mythen Globalen Umweltmanagements; Görg, C., Brand, U., Eds.; Westfälisches Dampfboot: Münster, Germany, 2002; pp. 118–136. Available online: https://www.ufz.de/export/data/2/81980_596-goerg-brand.pdf (accessed on 2 July 2025).

- Gitter, D. International conflicts over patenting human DNA sequences in the United States and the European Union: An argument for compulsory licensing and a fair use exemption. N. Y. Univ. Law Rev. 2001, 16, 1623–1691. Available online: https://heinonline.org/HOL/LandingPage?handle=hein.journals/nylr76&div=54&id=&page= (accessed on 5 July 2025).

- Ostrom, E. Governing the Commons; Cambridge University Press: Cambridge, UK, 1990; pp. 1–181.

- International Telecommunication Union (ITU). AI for Good. Available online: https://aiforgood.itu.int/ (accessed on 4 February 2025).

- ADBI Institute. The APAC State of Open Banking and Open Finance Report; Cambridge Judge Business School, Cambridge Centre for Alternative Finance & Asian Development Bank Institute: Cambridge, UK, 2025; p. 105. Available online: https://www.adb.org/sites/default/files/publication/1065246/apac-state-open-banking-and-open-finance-report.pdf (accessed on 3 June 2025).

- Forbes India. More Data Access, and a New Way of Lending. Forbes India [Online] 16 May 2025. Available online: https://www.forbesindia.com/article/take-one-big-story-of-the-day/more-data-access-and-a-new-way-of-lending/95985/1 (accessed on 3 June 2025).

- Brynjolfsson, E.; McAfee, A. The Second Machine Age: Work, Progress, and Prosperity in a Time of Brilliant Technologies; W. W. Norton & Company: New York, NY, USA, 2014; pp. 1–86. Available online: http://digamo.free.fr/brynmacafee2.pdf (accessed on 20 June 2025).

- Stiglitz, J. Globalization and Its Discontents; W. W. Norton & Company: New York, NY, USA, 2002; pp. 1–142.

- Floridi, L.; Cowls, J. A unified framework of five principles for AI in society. Harv. Data Sci. Rev. 2019, 1, 1–14.

- Hintze, A.; Adami, C. Artificial intelligence for social good. arXiv 2019, arXiv:2412.05450.

- Floridi, L. Ethics of Artificial Intelligence; Oxford University Press: Oxford, UK, 2019; pp. 1–414.

- Taherdoost, H.; Madanchian, M. Artificial intelligence and knowledge management: Impacts, benefits, and implementation. Computers 2023, 12, 72.

- Conn, A. AI Should Provide a Shared Benefit for as Many People as Possible. Future of Life Inst. 2018, pp. 1–5. Available online: https://futureoflife.org/recent-news/shared-benefit-principle/ (accessed on 20 June 2025).

- Lin, P. Why ethics matters for robots. In Robot Ethics: The Ethical and Social Implications of Robotics; Lin, P., Abney, K., Bekey, G., Eds.; MIT Press: Cambridge, MA, USA, 2014; ISBN 978-0-262-01666-7.

- Future of Life Institute (FLI). Asilomar AI principles. Available online: https://futureoflife.org/ (accessed on 21 December 2024).

- Morandín-Ahuerma, F. Twenty-three Asilomar principles for artificial intelligence and the future of life. In Principios Normativos Para una Ética de la Inteligencia Artificial; Center for Open Science: Charlottesville, VA, USA, 2023; pp. 1–25.

- Cotino, L. Riesgos e impactos del big data, la inteligencia artificial y la robótica y enfoques, modelos y principios de la respuesta del Derecho. Rev. Gen. Derecho Adm. 2019, 50, 1–37. Available online: https://dialnet.unirioja.es/servlet/articulo?codigo=6823516 (accessed on 18 June 2025).

- Suárez, F. Inteligencia artificial: Una mirada crítica al concepto de personalidad electrónica de los robots. SADIO Electron. J. Inform. Oper. Res. 2024, 32, 214–229.

- European Commission (EC). Making artificial intelligence available to all—How to avoid big tech’s monopoly on AI? Eur. Comm. 2024, 1–2. Available online: https://europa.eu/newsroom/ecpc-failover/pdf/speech-24-931_en.pdf (accessed on 20 October 2024).

- Bartlett, B. Towards accountable, legitimate and trustworthy AI in healthcare: Enhancing AI ethics with effective data stewardship. New Bioeth. 2025, 1–25.

- Acemoglu, D.; Restrepo, P. Artificial intelligence, automation, and work. In The Economics of Artificial Intelligence; Agrawal, A., Gans, J., Goldfarb, A., Eds.; University of Chicago Press: Chicago, IL, USA, 2018; pp. 197–236. Available online: http://www.nber.org/books/agra-1 (accessed on 20 June 2025).

- Yampolskiy, R. Artificial Intelligence Safety and Security; Taylor & Francis: New York, NY, USA, 2018; ISBN 9780815369820.

- Ávila, R. ¿Soberanía digital o colonialismo digital? Sur Rev. Int. Derechos Hum. 2018, 15, 15–28. Available online: https://sur.conectas.org/wp-content/uploads/2018/07/sur-27-espanhol-renata-avila-pinto.pdf (accessed on 12 June 2025).

- Nur, A.; Muntasir, W. The de-democratization of AI: Deep learning and the compute divide in artificial intelligence research. arXiv 2020, arXiv:2010.15581.

- Latonero, M. Governing Artificial Intelligence: Upholding Human Rights & Dignity; Data & Society: New York, NY, USA, 2018; pp. 1–37. Available online: https://datasociety.net/wp-content/uploads/2018/10/DataSociety_Governing_Artificial_Intelligence_Upholding_Human_Rights.pdf (accessed on 21 June 2025).

- Allam, Z.; Jones, D. On the coronavirus (COVID-19) outbreak and the smart city network: Universal data sharing standards coupled with artificial intelligence to benefit urban health monitoring and management. Healthcare 2020, 8, 46.

- Villagra, J. Robótica e inteligencia artificial más humanas y sostenibles. Pap. Econ. Esp. 2021, 169, 165–177. Available online: https://www.funcas.es/wp-content/uploads/2021/09/PEE-169_11.pdf (accessed on 20 June 2025).

- Orose, L. The potential impacts of artificial intelligence on the happiness of human beings. Asian J. Econ. Financ. Manag. 2021, 3, 151–159. Available online: https://www.journaleconomics.org/index.php/AJEFM/article/view/98 (accessed on 20 June 2025).

- Ünver, A. The Role of Technology: New Methods of Information Manipulation and Disinformation; Centre for Economics and Foreign Policy Studies: Istanbul, Turkey, 2023; pp. 1–211.

- Andrade, R. Problemas filosóficos de la inteligencia artificial general: Ontología, conflictos éticos-políticos y astrobiología. Argum. Razón Téc. 2023, 26, 275–302.

- Filippi, E.; Bannò, M.; Trento, S. Automation technologies and their impact on employment: A review, synthesis and future research agenda. Technol. Forecast. Soc. Change 2023, 191, 1–21.

- Kamande, J.; Stanworth, M. AI and Data Monopolies: Navigating the legal and ethical implications of antitrust regulations; Researchgate: Boston, MA, USA, 2024; pp. 1–8. Available online: https://www.researchgate.net/publication/384628540 (accessed on 25 June 2025).

- Tully, R. Who owns artificial intelligence? In Proceedings of the 26th International Conference on Engineering and Product Design Education, Birmingham, UK, 5–6 September 2024; Technological University Dublin: Dublin, Ireland; Aston University: Birmingham, UK, 2024; Volume 131, pp. 1–6. Available online: https://www.designsociety.org/1353/E%26PDE+2024+-+26th+International+Conference+on+Engineering+%26+Product+Design+Education (accessed on 20 June 2025).

- Krasodomski, A.; Gwagwa, A.; Jackson, B.; Jones, E.; King, S.; Lane, M.; Mantegna, M.; Schneider, T.; Siminyu, K.; Tarkowski, A. Artificial Intelligence and the Challenge for Global Governance; Chatham House: London, UK, 2024; pp. 1–70. Available online: https://www.chathamhouse.org/sites/default/files/2024-06/2024-06-07-ai-challenge-global-governance-krasodomski-et-al.pdf (accessed on 29 June 2025).

- Fjeld, J.; Achten, N.; Hilligoss, H.; Nagy, A.; Srikumar, M. Principled Artificial Intelligence: Mapping Consensus in Ethical and Rights-Based Approaches to Principles for AI; Berkman Klein Center: Cambridge, MA, USA, 2024; Available online: https://cyber.harvard.edu/publication/2020/principled-ai (accessed on 20 June 2025).

- Benkler, Y. The Wealth of Networks: How Social Production Transforms Markets and Freedom; Yale University Press: New Haven, CT, USA, 2006; Available online: https://www.benkler.org/Benkler_Wealth_Of_Networks.pdf (accessed on 10 June 2025).

- Raymond, E. The cathedral and the bazaar. Know. Techn. Pol. 1999, 12, 23–49.

- Sen, A. Development as Freedom; Oxford University Press: Oxford, UK, 1999.

- Kallot, K. The Global South Needs to Own Its AI Revolution. Available online: https://www.project-syndicate.org/commentary/global-south-must-use-ai-to-build-better-world-by-kate-kallot-2025-02 (accessed on 12 February 2025).

- Nadisha, A.; Kester, L.; Yampolskiy, R. Transdisciplinary AI observatory—Retrospective analyses and future-oriented contradistinctions. Philosophies 2021, 6, 6.

- Korinek, A.; Stiglitz, J. Artificial intelligence and its implications for income distribution and unemployment. In The Economics of Artificial Intelligence; Agrawal, A., Gans, J., Goldfarb, A., Eds.; University of Chicago Press: Chicago, IL, USA, 2018; pp. 349–390.

- Manvi, R.; Khanna, S.; Burke, M.; Lobell, D.; Ermon, S. Large language models are geographically biased. arXiv 2024.

- Silva, V. The global governance of artificial intelligence and the future of work. In Handbook on the Global Governance of AI; Edward Elgar: Cheltenham, UK, 2024; pp. 1–18.

- Warr, M.; Rather, S. Sociological analysis of artificial intelligence, benefits, concerns and its future implications. Int. J. Indian Psychol. 2024, 12, 1825–1830.

- Muldoon, J.; Cant, C.; Graham, M.; Spilda, F.U. The poverty of ethical AI: Impact sourcing and AI supply chains. AI Soc. 2023, 40, 529–543.

- Couldry, N.; Mejias, U. Data colonialism: Rethinking big data’s relation to the contemporary subject. Televis. New Media 2019, 20, 336–349.

- Montero, A. Contexto histórico del origen de la ética de la investigación científica y su fundamentación filosófica. Ethika+ 2020, 1, 11–29.

- Rodrigues, R. Legal and human rights issues of AI: Gaps, challenges and vulnerabilities. J. Responsib. Technol. 2020, 4, 1–12.

- Vega, L. Conocimiento, diferencia y equidad. In Políticas Educativas, Diferencia y Equidad; Fuentes, L., Jiménez, B., Eds.; Universidad Central: Bogotá, Colombia, 2015; pp. 32–42. Available online: https://repositorio.unal.edu.co/bitstream/handle/unal/56990/9789582602277.pdf?sequence=1#page=32 (accessed on 20 June 2025).

- Ciruzzi, M. La “Cenicienta Bioética”: Justicia distributiva en un mundo injusto e inequitativo. In Anuario de Bioética y Derechos Humanos; Tinan, E., Ed.; Instituto Internacional de Derechos Humanos: Buenos Aires, Argentina, 2020; pp. 99–116. Available online: https://www.iidhamerica.org/pdf/16039938717206anuario-de-bioetica-2020-final609c3be0d60ae.pdf (accessed on 26 June 2025).

- Larsson, S.; Heintz, F. Transparency in artificial intelligence. Internet Policy Rev. 2020, 9, 1–16.

- Mounkaila, O. Artificial Intelligence and Ethics; Institut d’électronique et d’informatique Gaspard Monge, Université Gustave Eiffel: Paris, France, 2024; pp. 1–8. Available online: https://www.researchgate.net/publication/381574628 (accessed on 13 June 2025).

- Raymond, E. Cultivando la Noosfera. Available online: http://sindominio.net/biblioweb/telematica/noosfera.html (accessed on 12 June 2000).

- Forti, M. A legal identity for all through artificial intelligence: Benefits and drawbacks in using AI algorithms to accomplish SDG 16.9. In The Ethics of Artificial Intelligence for the Sustainable Development Goals; Mazzi, F., Floridi, L., Eds.; Springer: Berlin/Heidelberg, Germany, 2023; pp. 253–267.

- Bhattacharya, P.; Kumar, V.; Verma, A.; Gupta, D.; Sapsomboon, A.; Viriyasitavat, W.; Dhiman, G. Demystifying ChatGPT: An in-depth survey of OpenAI’s robust large language models. Arch. Comput. Methods Eng. 2024, 31, 4557–4600.

- Ramakrishnan, R.; Pillai, S. Open innovation and crowd sourcing: Harnessing collective intelligence. In Evolving Landscapes of Research and Development: Trends, Challenges, and Opportunities; Datta, D., Jain, V., Halder, B., Raychaudhuri, U., Kumar, S., Eds.; IGI Global: Hershey, PA, USA, 2025; pp. 235–260.

- Broekhuizen, T.; Dekker, H.; Faria, P.; Firk, S.; Khoi, D.; Sofka, W. AI for managing open innovation: Opportunities, challenges, and a research agenda. J. Bus. Res. 2023, 167, 1–14.

- Fasnacht, D. Open innovation ecosystems. In Open Innovation Ecosystems: Creating New Value Constellations in the Financial Services; Springer International Publishing: Cham, Switzerland, 2018; pp. 131–172. Available online: https://link.springer.com/chapter/10.1007/978-3-319-76394-1_5 (accessed on 20 June 2025).

- Calzada, I. Artificial intelligence for social innovation: Beyond the noise of algorithms and datafication. Sustainability 2024, 16, 8638.

- Gao, J.; Wang, D. Quantifying the use and potential benefits of artificial intelligence in scientific research. Nat. Hum. Behav. 2024, 8, 2281–2292.

- World Economic Forum (WEF). Emerging Technologies. To Fully Appreciate AI Expectations, Look to the Trillions Being Invested. Available online: https://www.weforum.org/stories/2024/04/appreciate-ai-expectations-trillions-invested/ (accessed on 3 April 2024).

- Tech Giants Are Paying Huge Salaries for Scarce A.I. Talent. The New York Times, 2017; 1–3. Available online: https://www.nytimes.com/2017/10/22/technology/artificial-intelligence-experts-salaries.html (accessed on 2 September 2024).

- Lynch, S. AI Index: State of AI in 13 Charts. Stanford Institute for Human-Centered Artificial Intelligence (HAI); Stanford University: Stanford, CA, USA, 2024; pp. 1–14. Available online: https://hai.stanford.edu/news/ai-index-state-ai-13-charts (accessed on 3 February 2025).

- CNBC. China wants to be a $150 billion world leader in AI in less than 15 years. CNBC, 2017; 1–8. Available online: https://www.cnbc.com/2017/07/21/china-ai-world-leader-by-2030.html (accessed on 10 February 2025).

- Cusson, S.; Shea, P. AI and Private Capital in Canada—Context and Legal Outlook; McCarthy Law Firm: Toronto, Canada, 2025; pp. 1–3. Available online: https://www.mccarthy.ca/en/insights/blogs/spotlight-can-asia/ai-and-private-capital-canada-context-and-legal-outlook (accessed on 8 January 2025).

- Rodríguez, L.; Trujillo, G.; Egusquiza, J. Revolución industrial 4.0: La brecha digital en Latinoamérica. Rev. Interdiscip. Koin. 2021, 6, 147–162.

- Ade-Ibijola, A.; Okonkwo, C. Artificial intelligence in Africa: Emerging challenges. In Responsible AI in Africa: Challenges and Opportunities; Springer International Publishing: Cham, Switzerland, 2023; pp. 101–117.

- African Union (AU). Continental Artificial Intelligence Strategy; African Union: Addis Ababa, Ethiopia, 2024; Available online: https://au.int/en/documents/20240809/continental-artificial-intelligence-strategy (accessed on 5 February 2025).

- Padmashree, G. Governing artificial intelligence in an age of inequality. Glob. Policy 2021, 12, 21–31.

- Coleman, B. Human–machine communication, artificial intelligence, and issues of data colonialism. In The SAGE Handbook of Human-Machine Communication; Guzmán, A., McEwen, R., Jones, S., Eds.; Sage: New York, NY, USA, 2023; pp. 350–356.

| Code ID | Label | Definition | Sample Quote |
|---|---|---|---|
| C03 | Human-centred AI | Systems designed for human flourishing | “AI must be human-centred…” UNESCO [9,11] |
| C11 | Transparency duty | Obligation to disclose algorithmic logic | “Stakeholders should ensure transparency…” OECD [12] |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Vargas-Machado, C.; Bedoya, A.R. The Principle of Shared Utilization of Benefits Applied to the Development of Artificial Intelligence. Philosophies 2025, 10, 87. https://doi.org/10.3390/philosophies10040087
