1. Introduction

Quantum computing (QC) has become a popular topic in recent years due to its ability to handle complex computations faster than traditional methods [

1]. Despite the limited practical applications of QC, it holds great promise for computation. The concept of “quantum supremacy” has been demonstrated twice in recent years, first in 2019 [

2] and again in 2021 [

3].

Quantum computers can be implemented with different physical principles despite utilizing the same underlying quantum mechanics. One widely recognized model is the gate-based model, as described in [

1]. Other approaches include measurement-based, adiabatic quantum computing, as outlined in [

4], and topological quantum computing.

We are making use of a D-Wave-produced adiabatic quantum computer, although it currently falls short of adhering to the adiabatic principles. As a result, the current implementation of the quantum computer is not universal. To overcome the limitations of the current quantum computer, we employ the use of hybrid solvers provided by D-Wave, which integrate both classical and quantum computing capabilities, as discussed in [

5].

In this study, we explore the application of quantum computing in tomographic image reconstruction; see the graphical abstract in

Figure 1. Tomography, which refers to the imaging of cross-sectional views of an object, is a crucial tool in various fields, such as radiology, materials science, and astrophysics. In particular, we developed the method with an application in emission tomography (ET) [

6] in mind. However, our tomographic model in this manuscript is in principle extendable to any tomographic imaging technique with a matrix-based system model. For simplicity, we may use the term tomography throughout this work.

The central challenge in tomographic imaging is to reconstruct the inner sections of an object. This constitutes an inverse problem, which is ill-posed. More to the point, loss of information or an increase in noise makes the problem hard to solve, as outlined in [

6]. In the past, significant advancements in classical computing hardware were required to achieve the current level of image resolution of

in ET. With the continued development of quantum computing hardware, we anticipate that it will play a significant role in enhancing the performance of tomographic image reconstruction.

In the subsequent sections, we will provide an overview of quantum computing, with a specific focus on the D-Wave quantum computer used in our study. We will also delve into the basics of ET imaging and explain the inverse problem in tomographic reconstruction and current state-of-the-art methods for solving it. Then, we will elaborate on our approach to discrete tomographic reconstruction using hybrid quantum annealing (QA). Finally, we present results from our experiments, including small-scale reconstructed images with binary and integer values, test them in a variety of settings, and discuss the results.

2. Quantum Computing

QC is a new and innovative computing paradigm. Instead of using classical electronic bits, quantum computers utilize quantum bits (qubits) to exploit the quantum mechanical principles of superposition, entanglement, and interference. Using these paradigms, a quantum computer with

N qubits can be in

states simultaneously, compared to one state for a classical computer [

7]. This advantage ultimately leads to a benefit in terms of runtime speed-up while enabling computations that are impossible on a classical computer. The concept of universal QC is accomplishable by several models. The total number of qubits is currently limited to 5640 qubits on the QA-based D-Wave Advantage2 system. For gate-based systems, the current record is set by the IBM Eagle system with 127 superconducting qubits [

8].

2.1. Gate-Based Quantum Computing

Gate-based quantum computing utilizes a sequence or circuit of single-qubit and multiple-qubit gates to operate on the initial state of several qubits, similar to how classical gates operate on classical bits. Due to the exponential increase in Hilbert space, our Hilbert space doubles with every qubit that we add; we can achieve exponential speed-ups in particular algorithms [

1].

2.2. Adiabatic Quantum Computing

The fundamental assumption of adiabatic quantum computing (AQC) is that a physical system constantly evolves to its lowest energy over time [

2,

9]—associated with the global minimum of an optimization landscape. In contrast to gate-based QC, AQC does not perform unitary operations through gates on single or multiple qubits [

10]. Instead, we map the problem to the quantum computer with a problem-specific Hamiltonian [

11]. The Hamiltonian describes the energy spectrum of the system and the set of viable solutions. In theory, AQC is equivalent to gate-based QC and, therefore, universal [

4].

The adiabatic theorem states that if we initialize a quantum system with a ground state

and let it evolve with time

t for a fixed duration

T, we will end up in the ground state

, which is associated with the lowest energy solution [

12]:

The main limitation of AQC is the

gap. The

-gap refers to the minimum spectral gap of the problem’s Hamiltonian, which is the difference between the lowest and second-lowest energy levels. The

-gap inherently bounds the runtime of the adiabatic evolution. For further information, we guide the reader to [

13]. The speed limit

T is calculated as:

2.3. Quantum Annealing

QA is the current realization of AQC. The QA system is initialized in a superposition state. Subsequently, the problem formulation is embedded in the hardware such that the system’s ground state is the solution to the problem [

14]. However, how does QA overcome the

gap?

In short, it does not. Physical realizations of AQC usually let the system evolve multiple times for a specified time [

15]. After initialization, the system repeatedly anneals for a specified annealing time

. This way, a sample set is formed containing the samples, the associated energy level (lowest is best), and the number of times the solution occurred [

16].

QA builds upon the Hamiltonian of the Ising model:

The variables

of the Ising model, of

n variables, are either spin-up or spin-down

[

1]. Two variables

and

can have quadratic interactions

, known as the coupling strength. Furthermore, one variable can have a linear bias

. Every Ising model is translatable to a Quadratic Unconstrained Binary Optimization (QUBO) problem, with variables

being binary 0, 1, and vice-versa. QUBO problems can be NP-hard and are hard to solve using classical computers [

17]. We can describe a QUBO using an

upper-triangular matrix with the bias term on its diagonal and the quadratic interaction as the upper-triangular values:

The goal is to minimize QUBO’s objective. In matrix notation, this results in the following:

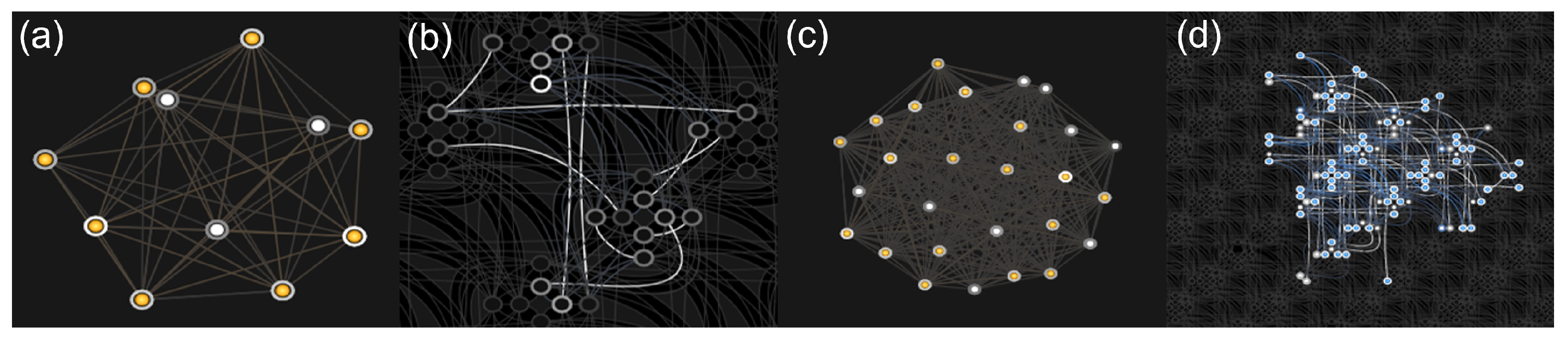

The connectivity of the qubits on the annealer’s topology limits the interaction between qubits; see

Figure 2. D-Wave has proposed different topologies over recent years, such as the Chimaera [

18,

19] or Pegasus graph. Using D-Wave’s Ocean interface [

20], we can embed and run the problem on the quantum annealer using the Leap cloud service [

21].

3. Emission Tomography

ET is a subset of tomographic imaging that encompasses two main modalities: Single Photon Emission Computed Tomography and Positron Emission Tomography [

6]. In contrast to transmission tomography (TT), where the radiation source is external, and the radiation passes through the object, in ET, the object of interest emits radiation from within. In a medical scenario, this typically refers to the injection of a radioactive tracer into the patient’s body, which accumulates in a target destination and emits radiation.

The mathematical foundation of TT is rooted in the Radon transform, which is an integral transform that maps a continuous object to continuous measurements [

22,

23]. In ET, the basic Radon transform is extended to incorporate object-specific attenuation. The solution to the attenuated Radon transform is not straightforward but has been presented by Bronnikov and Natterer [

24,

25]. This, however, is outside of the scope of this paper.

The ET imaging process is similar to any linear digital imaging system, which maps a continuous domain to a discrete domain [

26]. However, the image formation process is often simplified in a discrete-to-discrete forward model:

Here

represents our measurement,

is the system matrix describing the action of the linear imaging system, and

represents the imaged object. In practice, the system matrix

is sparse, exceptionally large, singular, ill-posed, and non-square and is therefore not usually applied as a simple matrix multiplication. It is important to mention that the system matrix

is only an estimate of the real forward operator, which is, in a real-case scenario, unknown. In reality,

is usually applied as a series of operators

, describing the relation from object space to measurement space.

The operators used in the imaging process allow for the inclusion of physical system-specific details, such as the point spread function, as well as operators to account for patient-induced attenuation and scatter. For this manuscript, we simplify the problem by disregarding the attenuation operator and use a deterministic system matrix model suitable for QA and their associated hybrid solvers. In the realistic case of an imperfect imaging system and setting, a common representation includes additive noise [

26], although the noise can also be signal-dependent on

:

3.1. Discrete Tomography

Due to the nature of adiabatic quantum computing and its combinatorial optimization through QA, the obtained reconstruction data in quantum algorithms is inherently discrete. Unlike most classical algorithms that yield continuous results for the reconstructed image, quantum computing is currently limited in achieving floating-point accuracy, especially in its early stages of development. To bridge the gap between classical and quantum approaches, we propose to embrace the discretization of the reconstruction problem. By adapting tomography to discrete tomography, we take a bold yet essential step in aligning with the combinatorial nature of QA. In fact, discrete tomography is a specialized and simplified case of tomography, where the image

consists of discrete, or in the simplest case, binary pixels

[

27] and has applications in real-world tomographic imaging. This case can apply to homogeneous scanning materials for non-destructive testing [

27] or angiography in TT. Applications of DT in ET include attenuation-map reconstruction and cardiac and phantom imaging. We refer the reader to a manuscript by Herman et al. summarizing the application of DT in the medical domain [

28]. Because the complexity of the optimization is constrained to discrete variables, the task’s difficulty is to reconstruct with as few views as possible. In our experimental setup, we focus on discrete tomography scenarios, as these problems are directly applicable to QA and associated hybrid solvers available at present.

3.2. Image Reconstruction

ET reconstruction is in the class of ill-conditioned noisy inverse problems and constitutes the problem of resolving an activity distribution that generated the measured projection data. Due to the reasons mentioned earlier, obtaining an exact inverse of

is not possible. Therefore, one conventional clinical method to reconstruct ET images is Filtered BackProjection (FBP), an analytical and linear approach [

29]. More advanced approaches to reconstructing ET images are iterative reconstruction algorithms [

30]. In contrast to FBP, these algorithms are often non-linear and seek to minimize the projection difference by repetitively applying back projections, updates, and forward projections [

31]. Three examples of iterative reconstruction techniques include Maximum Likelihood Expectation Maximization [

31,

32], Conjugate Gradient [

33], and Simultaneous Algebraic Reconstruction Technique (SART) [

34]. SART is an algebraic iterative reconstruction algorithm that performs additive and iterative updates from single projections. A popular reconstruction algorithm for discrete tomography is the Discretized Algebraic Reconstruction Technique (DART), which is an extension of the SART algorithm. Recently, deep-learning-based image reconstruction [

35] has gained popularity, and we acknowledge its success. However, the primary focus of this manuscript is not to contest deep-learning methods but rather to explore the application of image reconstruction on quantum computers. As the image size for the QA-based reconstruction is inherently limited by the size of the annealer, we also consider the Moore-Penrose general pseudoinverse (PI) as a reconstruction technique. The Moore-Penrose PI is defined by [

36]:

For this work, we have chosen to compare our method to an FBP, DART, and a Moore-Penrose-based PI [

36] image reconstruction. Due to the fact that both FBP and PI result in a continuous image, we observe a numerical error when compared to discrete reconstruction techniques, which we mitigate by introducing a subsequent discretization step. We compare the reconstructed images and their corresponding ground truth in terms of the root mean square error (RMSE), which measures the average magnitude of the differences between reconstruction and ground truth:

Here

represents the reconstructed image, whereas

represents the corresponding ground truth. Moreover, we compare the reconstructed images in terms of their Structural Similarity Index Measure (SSIM), which measures the quality of a reconstructed image in terms of their luminance, contrast, and structure as a mean over a moving window [

37]:

For the computation of the SSIM, and are the average pixel values of images and , respectively. is the covariance between images and and and are the variances of images and , respectively. and are small constants to stabilize the division. The SSIM represents a unitless index measure indicating if images are similar (SSIM of 1), dissimilar (SSIM of 0), or anti-correlated (SSIM of −1).

4. Related Work

Over recent years, QC research has accelerated rapidly as practical examples have come within reach. Specifically, image processing and machine learning on quantum computers have evolved to be an active area of research [

38,

39]. Here, relevant work of quantum algorithm development for tomographic image reconstruction should be highlighted.

The first who proposed to perform image reconstruction with QC for TT, positron ET, and magnetic resonance imaging were Kiani et al. [

40]. The paper proposed to substitute the classical Fourier transform with a quantum-based Fourier transform (QFT) for inverse integral transform-based reconstruction methods to decrease runtime [

40]. However, integral transform-based reconstruction, in contrast to analytic algorithms, fail to incorporate additional knowledge of the physical measurement process and the imaging system itself. Therefore, the incorporation of a system-specific matrix or operator is crucial to the success of an image reconstruction algorithm, which can then be posed as the solution to a linear system of equations. The initial proposal to solve linear equations on a gate-based quantum computer was made by Harrow et al. in 2009 when they introduced the Harrow-Hassidim-Lloyd (HHL) algorithm [

41]. The HHL algorithm is based on quantum phase estimation and, in theory, provides logarithmic speed-up against classical computers. Nonetheless, there are a few caveats for which situations this speed-up is lost [

42]. The first caveat is the loading of the vector

into a quantum state. This also applies to the QFT approach. The second caveat is that the matrix

needs to be sparse, and the third is that the matrix

needs to be robustly invertible, which is associated with the ratio of the largest and smallest eigenvalues and therefore limits the application to low-conditioned matrices. The last caveat is associated with the readout of the solution vector

. This would, in practice, have to be measured

n times, again ruining the proposed speed-up. Therefore, the HHL algorithm is not efficient to run on near-term quantum hardware, also due to the current qubit count and error. We, therefore, disregard this approach.

Another take to solving (combinatorial) optimization problems on near-term gate-based quantum computers is the Quantum Approximate Optimization Algorithm (QAOA) [

43], which is similar to QA-based systems in its problem formulation. The first example presented for QA was non-negative binary matrix factorization [

44]. Furthermore, Chang et al. demonstrated in 2019 that it is possible to solve polynomial equations with QA [

45]. The approach was refined to a linear system with floating-point values and floating-point division by Roger and Singleton in 2020 [

46]. Their paper utilizes the D-Wave 2000Q system to its whole extent and shows results for matrix inversions of

matrices. However, they fail for matrices with high condition numbers. The first practical application to utilize QA for linear systems was Souza et al., who presented a seismic inversion problem, which they solved in a least-square manner [

47]. Schielein et al. presented a road map towards QC-assisted TT, describing data loading, storing, image processing, and image reconstruction problems [

48]. For a long time, QA hardware needed to be more mature for realistic problems. Schielein et al. and Jun also proposed to solve tomographic reconstruction with QA or QAOA [

48,

49].

In this work, we want to present multiple achievements:

Comparison of binary and integer-based tomographic reconstruction run on actual QC hardware to classical reconstruction algorithms on small-scale images in a toy model

Analysis of capabilities and limitations of QA regarding image size, discretization, noise, underdetermination, and inverse crime of the reconstruction algorithm

A framework for the creation of tomographic toy problems to accelerate quantum image reconstruction research

5. Methods

This section will cover how we solve an inverse problem with QA. We cover the fundamentals of embedding the problem on the quantum processing unit’s (QPU) topology. We elaborate on the limitations of the current topology and how hardware must advance to run the optimization of problems at a significant scale. Furthermore, we describe how hybrid algorithms can utilize QA to its full extent and how we must embed the problem for the hybrid approach.

5.1. Problem Formulation

We recall that the reconstruction problem in tomography is an inverse problem of the form shown in Equation (

6). Because our system of equations is an ill-posed image reconstruction problem that contains noise and may not be fully determined, we approximate the solution in a least-squares manner. Therefore, we reformulate Equation (

6) as the objective of a quadratic minimization problem, with its minimum being the approximated solution of image

:

5.2. Quadratic Unconstrained Binary Optimization for Binary Tomography

In the QC fundamentals, we have discussed that the Ising model is the basic Hamiltonian for QA. In the case of binary tomography, the reconstruction problem is directly mappable to a QUBO problem. We recall that the binary tomographic model is defined like Equation (

6), where

,

and

. The linear bias values can be extracted by following Equation (

12):

Furthermore, we can extract the coupler values as:

The offset

does not change the minimization problem’s objective. The overview of the reconstruction process is as follows: We initialize the quantum annealer with the obtained linear biases and quadratic couplers, specify the number of times the system anneals, and then obtain a sample set containing the number of solutions, each associated with an energy. The solution to the minimization problem is then chosen as the one corresponding to the lowest energy. The optimization scheme is described in Algorithm 1.

| Algorithm 1: Quantum annealing tomographic reconstruction |

Data: : System matrix : Projection vector Parameter: number_reads: Number of annealing repetitions Result: : Image vector offset bqm ← CreateBQM(Linear_Coefficients, Quadratic_Coefficients, offset) // Scale chain strength of quantum annealer depending on problem chain_strength ← scaleChainStrengthToBQM(bqm) // Run BQM on quantum annealer sampleset ← SampleBQMOnQuantumAnnealer(bqm, number_reads, chain_strength) // Retrieve sample associated with the lowest energy x← sampleset[0] return x |

When directly mapping a QUBO problem to the quantum annealer, one must consider the embedding of the QPU’s topology. The qubits on a Chimaera topology, as embedded on the D-Wave 2000Q, are internally connected to four other qubits and have one or two external connections to other qubits. The newer D-Wave Advantage2 system features internal connectivity of one qubit to 12 other qubits and two to three external couplers. To map higher connectivity graphs to the QPU, one utilizes chains of physical qubits to represent one logical qubit. Fully connected graphs, like our problem, are mapped to the QPU using a clique embedding [

18]. The most extensive, mappable, fully connected graph on the QPU is a graph of 65 logical qubits on the D-Wave 2000Q and 100 logical qubits on the D-Wave Advantage2. Our binary reconstruction problem, now defined as the QUBO matrix

, is fully connected. More to the point, variables usually have quadratic interactions with all other variables. The limit in terms of reconstructed binary image size for the D-Wave Advantage2 is

. Therefore, using a QA to reconstruct a

R-bit integer image of size

without problem optimization will require

fully connected qubits. This qubit amount and connectivity will not be available soon.

Figure 2 shows examples of the graph and corresponding embedding for image sizes of

and

. In

Figure 2, the directed graph represents the structure of the QUBO problem, where one vertex is a binary variable, or logical qubit, with a corresponding linear bias. The edges represent the quadratic couplings between the variables. The physical embedding shows how the directed graph is mapped to the QPU topology using a clique embedding. Here, the marked vertices symbolize the physical qubits that are used to embed the directed graph. The edges, again, represent the quadratic couplings. For a more detailed description of how problem graphs are embedded in the corresponding hardware, we refer the reader to [

50]. To embed more significant problems on the D-Wave machine, we use hybrid algorithms, which are defined below.

5.3. Hybrid Optimization

The intermediate step to complete quantum-assisted computation is to design hybrid algorithms to enable the embedding of significant large problems on current QC hardware. On quantum annealers, one can make use of hybrid workflows. Raymond et al. [

5] introduced one type of hybrid computation. Their algorithm uses a large neighborhood local search to find subproblems in the original problem. The subproblems are then of a size that is mappable to the QPU. To enable the solution of larger quadratic problems that cannot be run efficiently on the quantum annealer, D-Wave has introduced a commercial hybrid solver available through the Leap cloud service [

21]. The D-Wave hybrid solver represents a devised solution that combines their primary quantum annealing approach with advanced classical algorithms. This hybrid solver efficiently allocates the quantum processing unit (QPU) to where it offers the most advantages in problem-solving. Moreover, one can utilize the hybrid solver to solve constrained quadratic models (CQM), which enable the use of integer values and, therefore, drastically expand solution possibilities. The new constrained quadratic model is defined as:

Here,

is the unknown integer variable (pixel value) we want to optimize for,

is the linear weight,

is the quadratic term between

and

and

c can define possible inequality and equality constraints. In principle, the workflow is defined by the classical problem formulation and a time limit

T [

51]. The time limit

T is automatically calculated depending on the problem if not specified by the user. The solvers run in parallel and utilize heuristic solvers to explore the solution space and then pass this information to a quantum module that utilizes D-Wave systems to find solutions. The QPU solutions then guide the heuristic solvers to find better quality solutions and restrict the search space. This process iteratively repeats for the specified time limit. Furthermore, one has the possibility of introducing quadratic and linear constraints and restricting the range of the integers [

51]. Similar to QA, one obtains a set of solutions, each associated with energy, where the solution associated with the lowest energy is the best-approximated solution to the minimization problem. The optimization scheme is described in Algorithm 2.

| Algorithm 2: Hybrid quantum annealing tomographic reconstruction |

Data: : System matrix : Projection vector Parameter: time_limit: Time limit for the optimization Result: : Image vector x← [] objective cqm ← CreateCQM(objective) cqm.add_constraint() // Run CQM on hybrid solver sampleset ← SampleCQMOnHybridSampler(cqm, time_limit) // Retrieve sample associated with lowest energy x← sampleset[0] return x |

6. Results

In this section, we present novel reconstruction results of our algorithm utilizing D-Wave’s quantum computers and compare them to classical methods. Due to the size restrictions on the actual quantum annealer, images are reconstructed using hybrid solvers to enable the representation of more significant problems. Every hybrid reconstruction is compared to three different classical methods: discretized FBP, DART, and discretized PI. We discretize the reconstruction result of the classical algorithms to compare them in the case of binary and discrete tomography. We refer to our hybrid reconstruction method as QA. Moreover, we compare the ground truth image (GT) to the reconstructions and visualize the corresponding sinogram (SG) to the tomographic problem. We utilize SymPy for our problem formulation and solve the reconstruction problem as a classical forward and inverse problem . Therefore, it, in principle, applies to any linear imaging or display system. The system matrix for the reconstruction problem is calculated using the Radon transform. We acknowledge that for more realistic and significant problems, the system matrix becomes infeasible to store, and our approach is not directly applicable. Nevertheless, we want to test the general performance of hybrid solvers utilizing QA on inverse problems regarding size, noise, and underdetermination of the linear equations, even at the very beginning of practical QC.

6.1. Experimental Setup

We have constructed a tomographic toy problem framework to test quantum computers’ initial stages of image reconstruction. We set up example problems for our QA-based reconstruction by generating tomographic problems in a linear system manner. We utilize scikit-image [

52] to perform Radon transforms of our GT images and create our system matrices. Here, the integration of the object rotated by an angle

defines one projection view. The number of angles is equally distributed between

and

. ET inspires our model. In contrast to TT, we do not model the attenuation of a source light ray through the object. Instead, we aim to model the emission process of photons (counts) within the object. For now, we are neglecting the attenuation of photons by the object. This aspect may be addressed in future research. The projection views at

are taken from the top of the image. Subsequently, the angles are distributed in a clockwise direction. We have utilized scikit-image’s iradon to perform FBP with a ramp filter and modified sart to a DART reconstruction algorithm. For DART, we perform two iterations of the algorithm. The PI-based reconstruction uses NumPy’s pinv function to estimate a Moore-Penrose PI. We utilize scikit-image’s structural_similarity function to quantify SSIM, using a default window size of

for comparison and adapting it to

for the smallest image size. Furthermore, we introduce an uncertainty map (UC) for our proposed reconstruction technique. The UC displays the pixel-wise variance of all returned samples by the hybrid sampler, independent of their associated energy. This UC will only be highlighted in the cases where the uncertainty is reasonably high.

Additive Noise

To test problems concerning noise in the data, we establish a simple noise model to alter the projection data. Now, we want to imitate the statistics of a low-count ET measurement. We apply the noise in an additive manner to the GT image for each projection view to create independent noise realizations:

The noise is therefore defined as follows:

With this noise model, we want to resemble the signal dependence of Poisson noise. Poisson noise is the most prominent noise factor in projection images with very low counts.

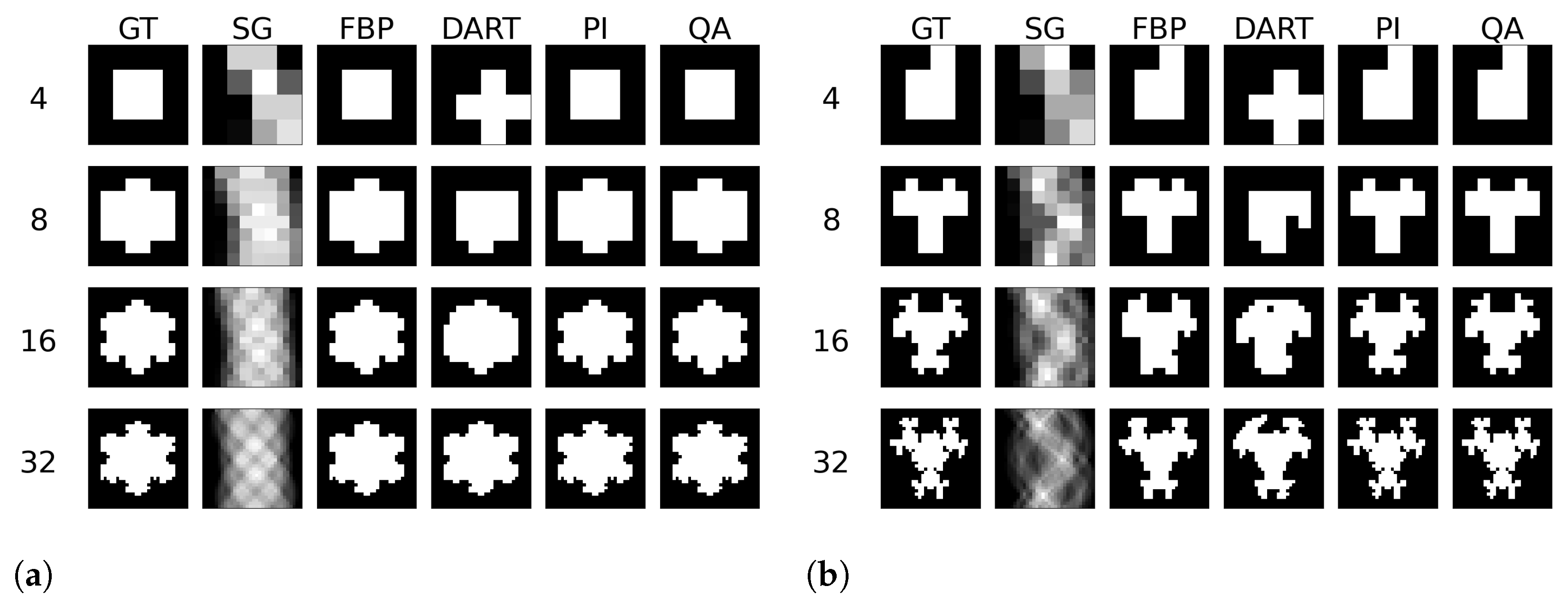

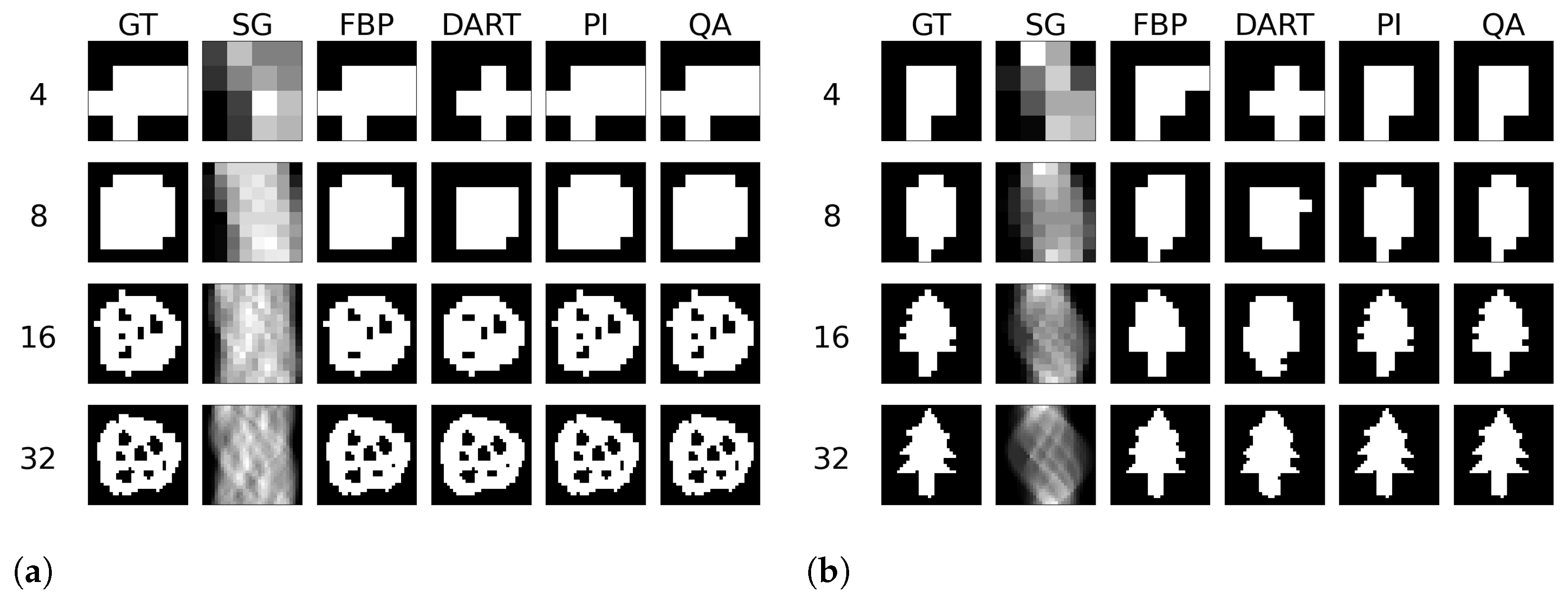

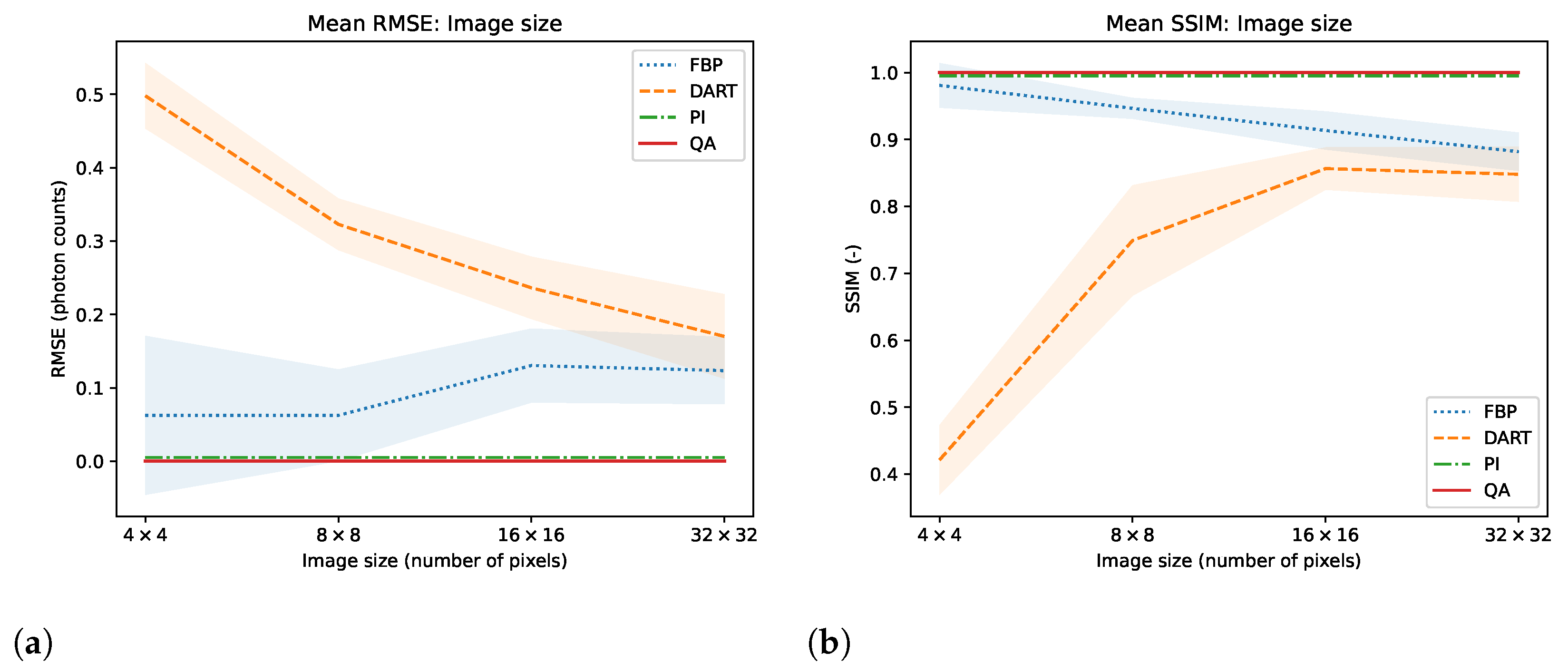

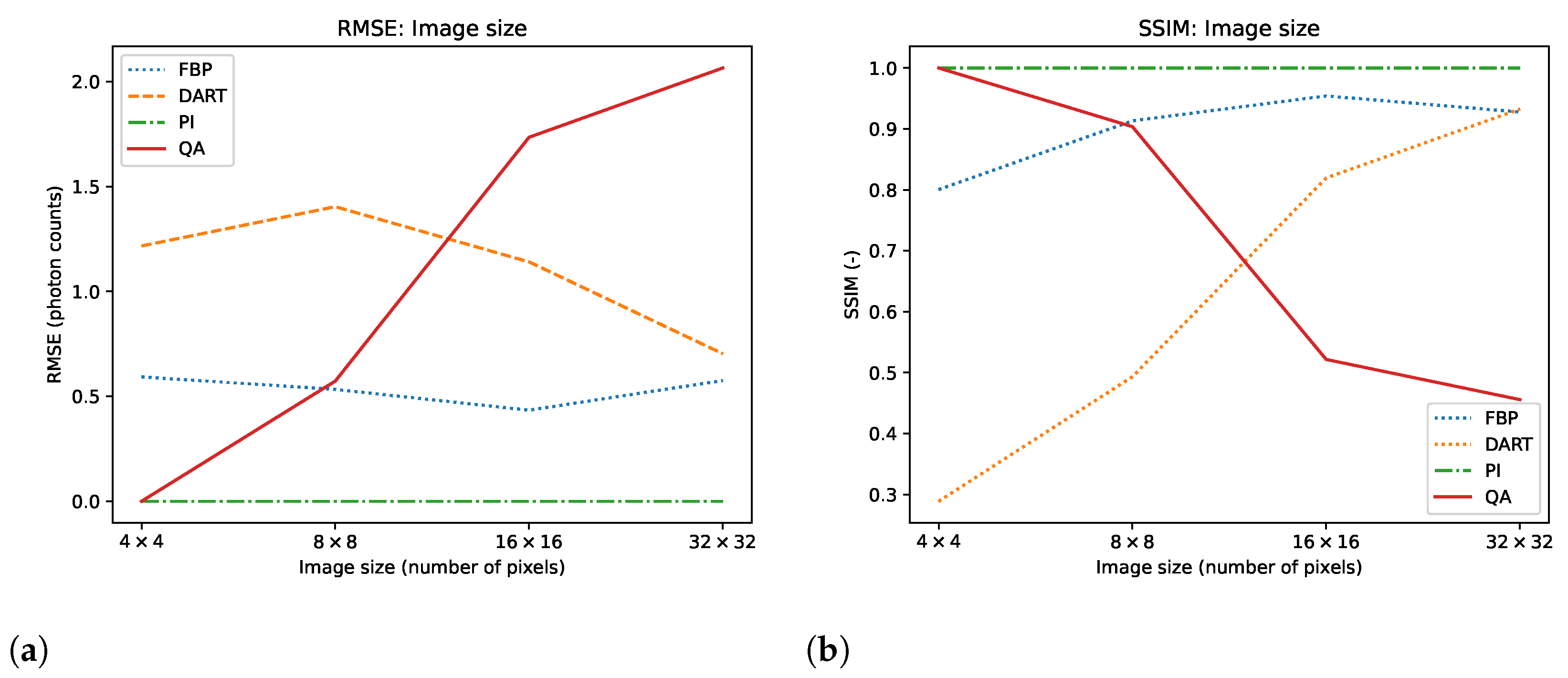

6.2. Experiment 1: Image Size Evaluation

We test the reconstruction capabilities of the hybrid solver concerning the image size

of

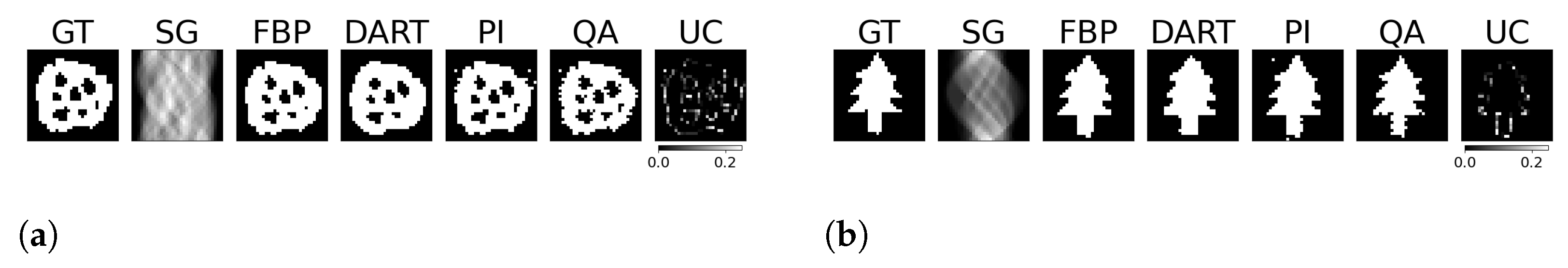

. Algorithm 2 describes the problem formulation for the hybrid solver. We apply our reconstruction technique to four different binary images ‘foam’, ‘tree’, ‘snowflake’, ‘molecule’ at four different squared image sizes 4, 8, 16, and 32. We chose the images to achieve a variance in frequency and image content. To downsample the image, we take local means of image blocks. We take

N projection view with

N measurements for each view. Thus, we have a fully determined system for image size

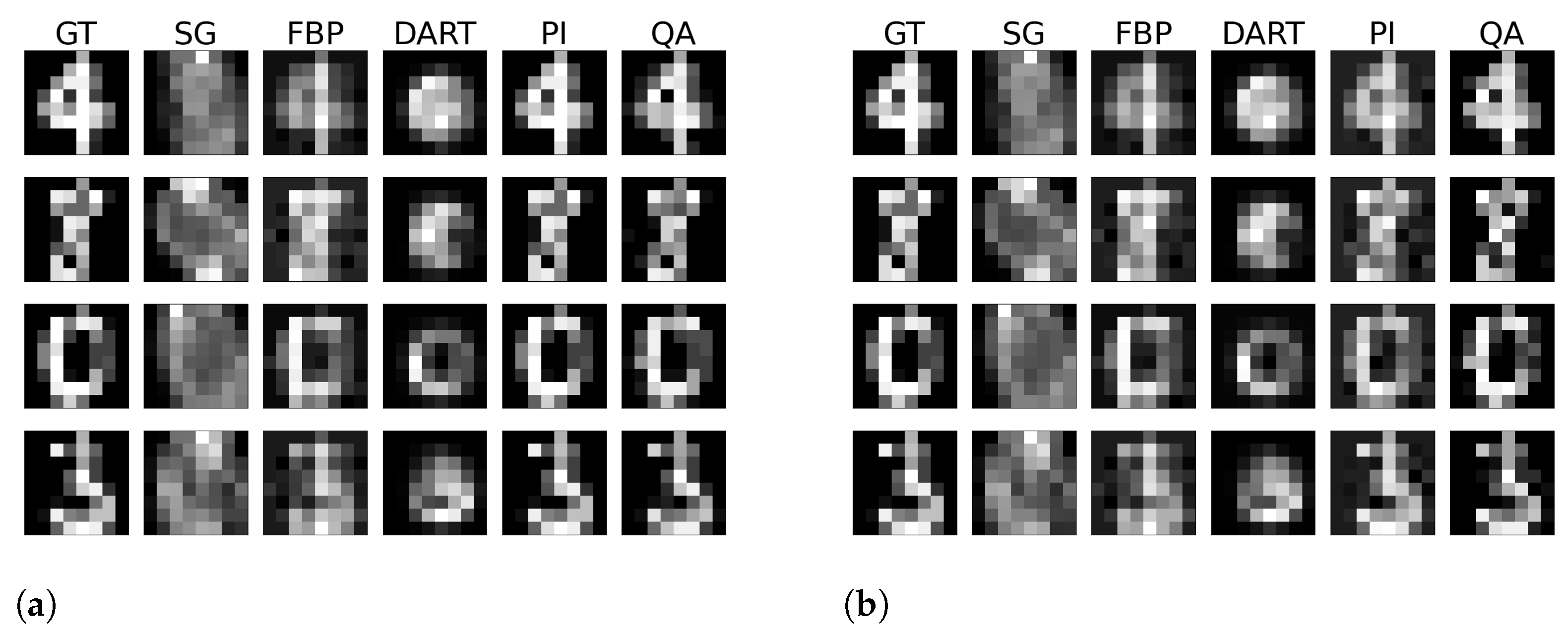

. We neglect the problem of ‘inverse crime’ for this experiment and test the algorithm in its simplest form. We show the reconstructed images and their corresponding GT, SG, and comparable classical results for the binary images ‘foam’ and ‘tree’ in

Figure 3. The examples for ‘snowflake’ and ‘molecule’ are in the

Appendix A.1. Moreover, we compare the reconstruction algorithms on the four binary images measured by root mean square error (RMSE) and structural similarity index (SSIM) in

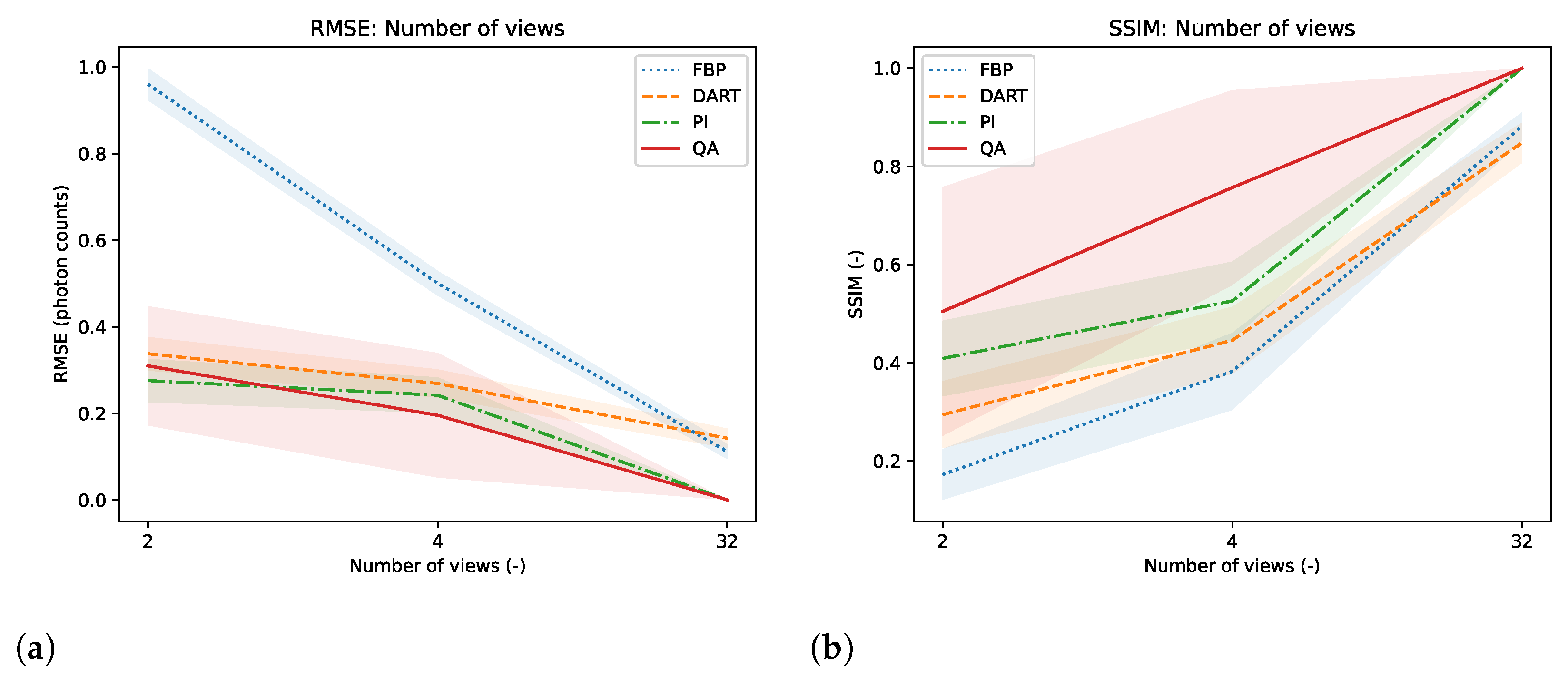

Figure 4. A comparison of the reconstruction runtimes is provided in

Table 1.

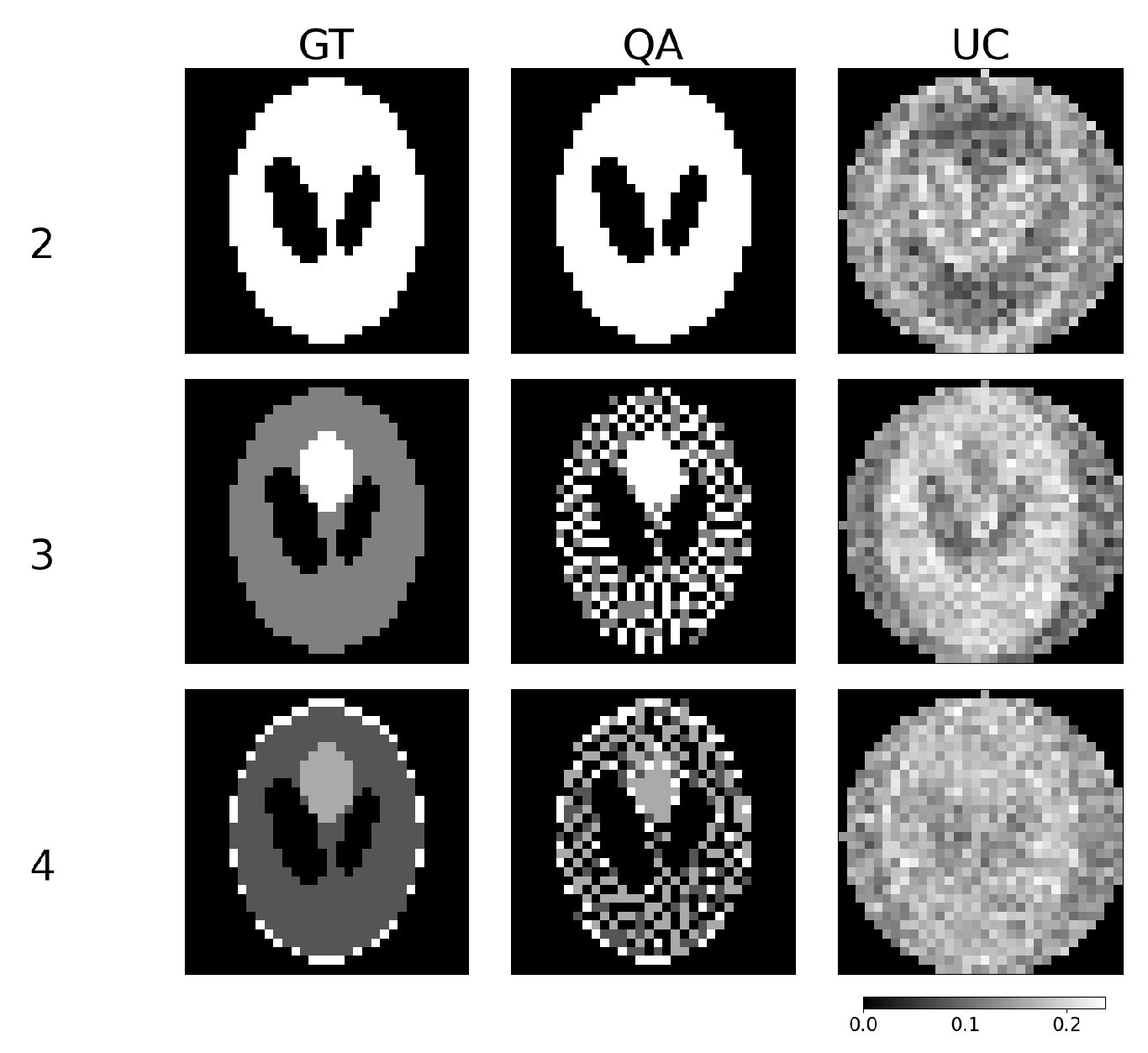

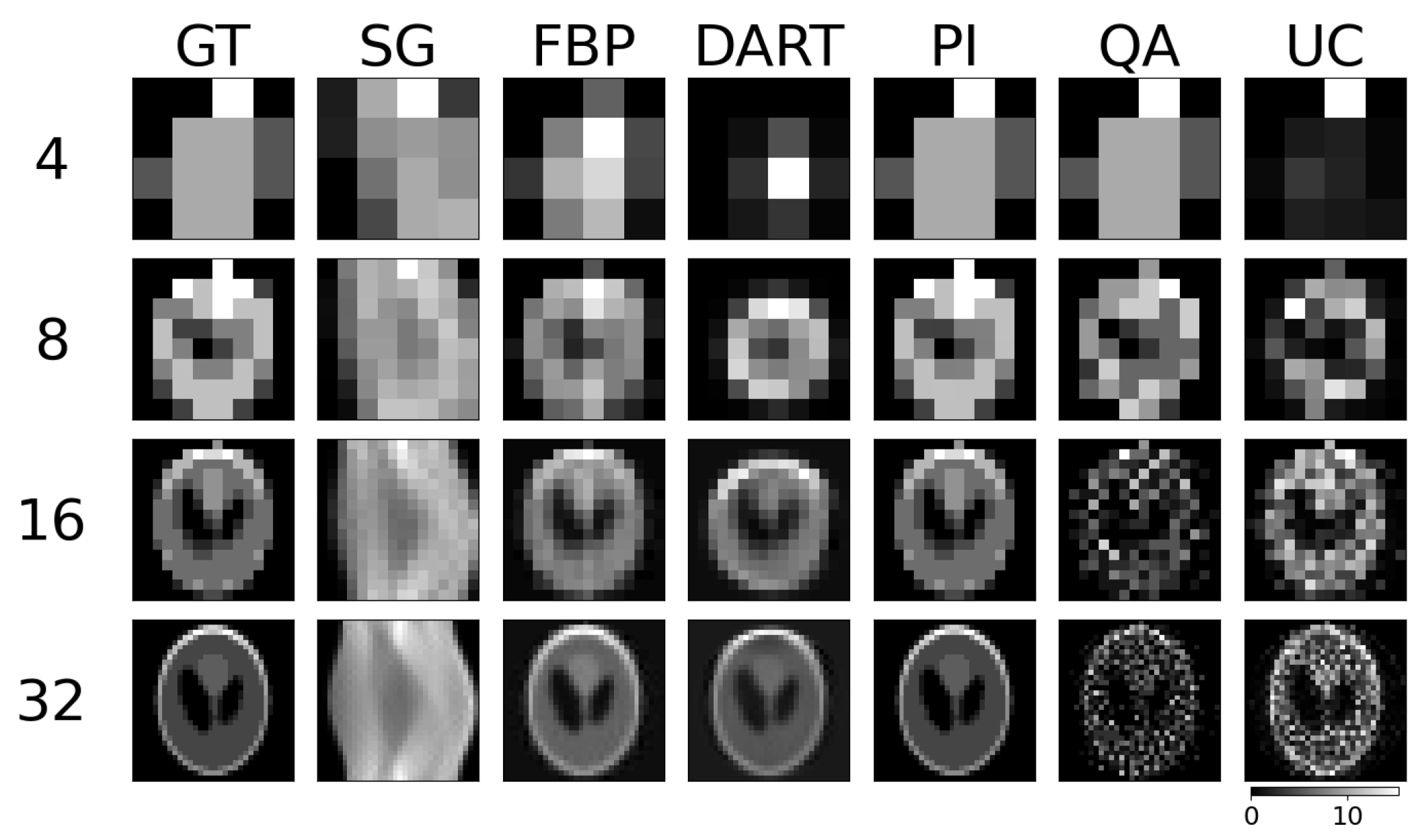

As a subsequent experiment, we employ the hybrid solver to solve for integer-valued variables in a 4-bit range representing the numbers from 0 to 16. With this, we move towards a more realistic use case. We simulate the well-known Shepp–Logan phantom at the possible 4-bit range, compare the hybrid integer reconstructions with conventional reconstruction methods, and visualize the UC in

Figure 5. Furthermore, we plot a comparison regarding RMSE and SSIM for the reconstructed image size in

Figure 6. Additionally, we provide a more thorough component-wise analysis of the Shepp–Logan phantom in the

Appendix A.2, which increases the discretization values step-by-step.

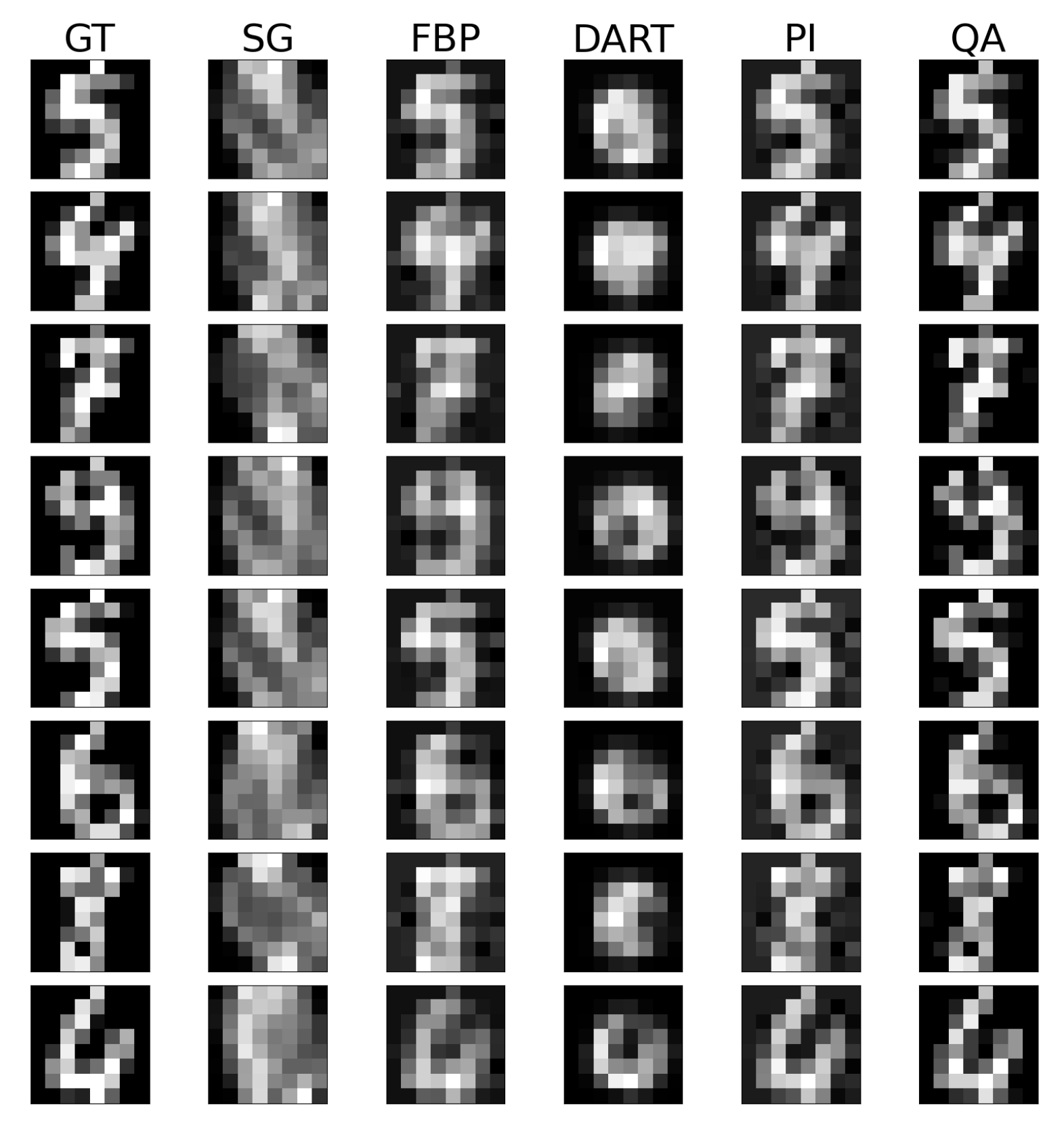

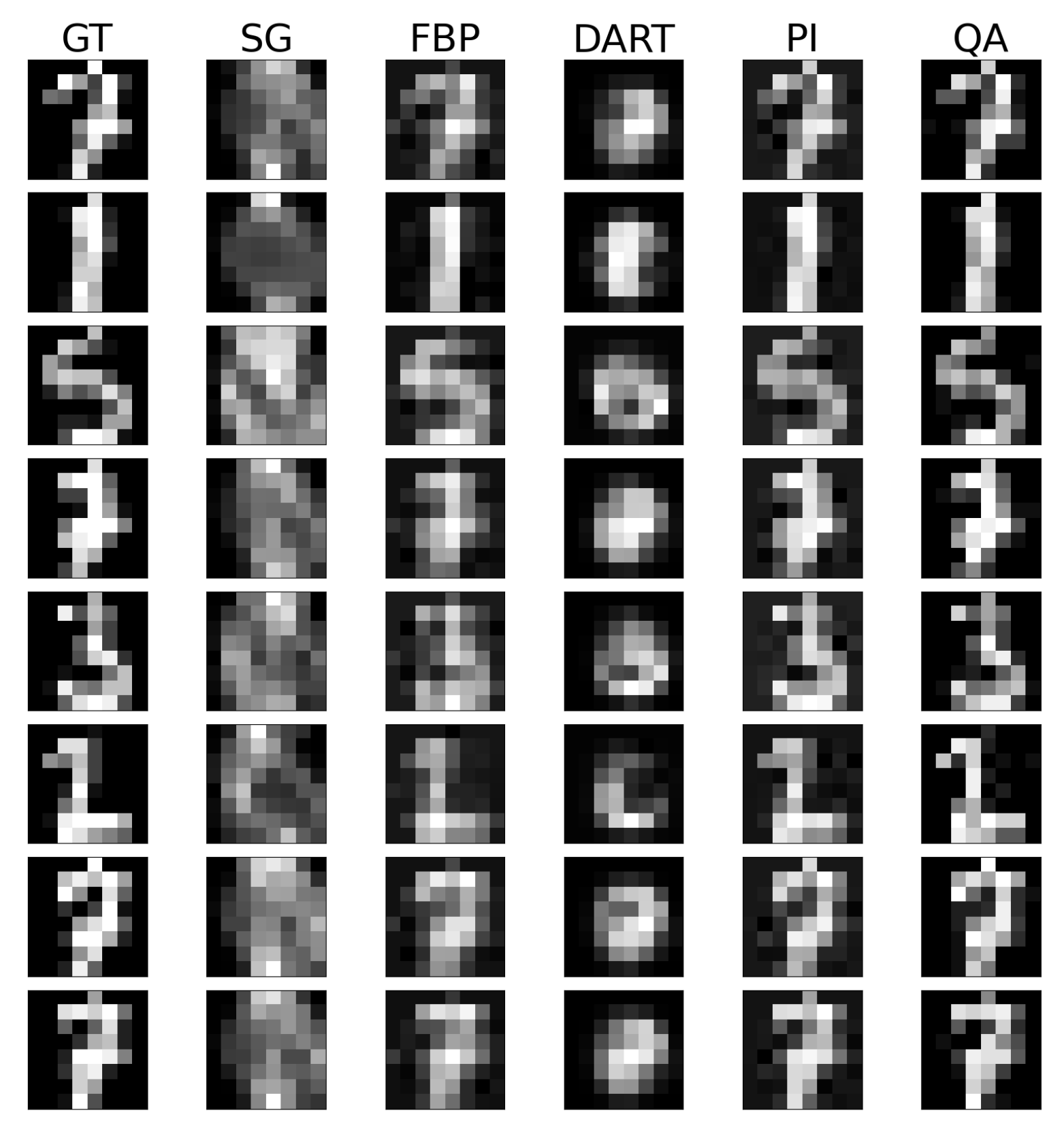

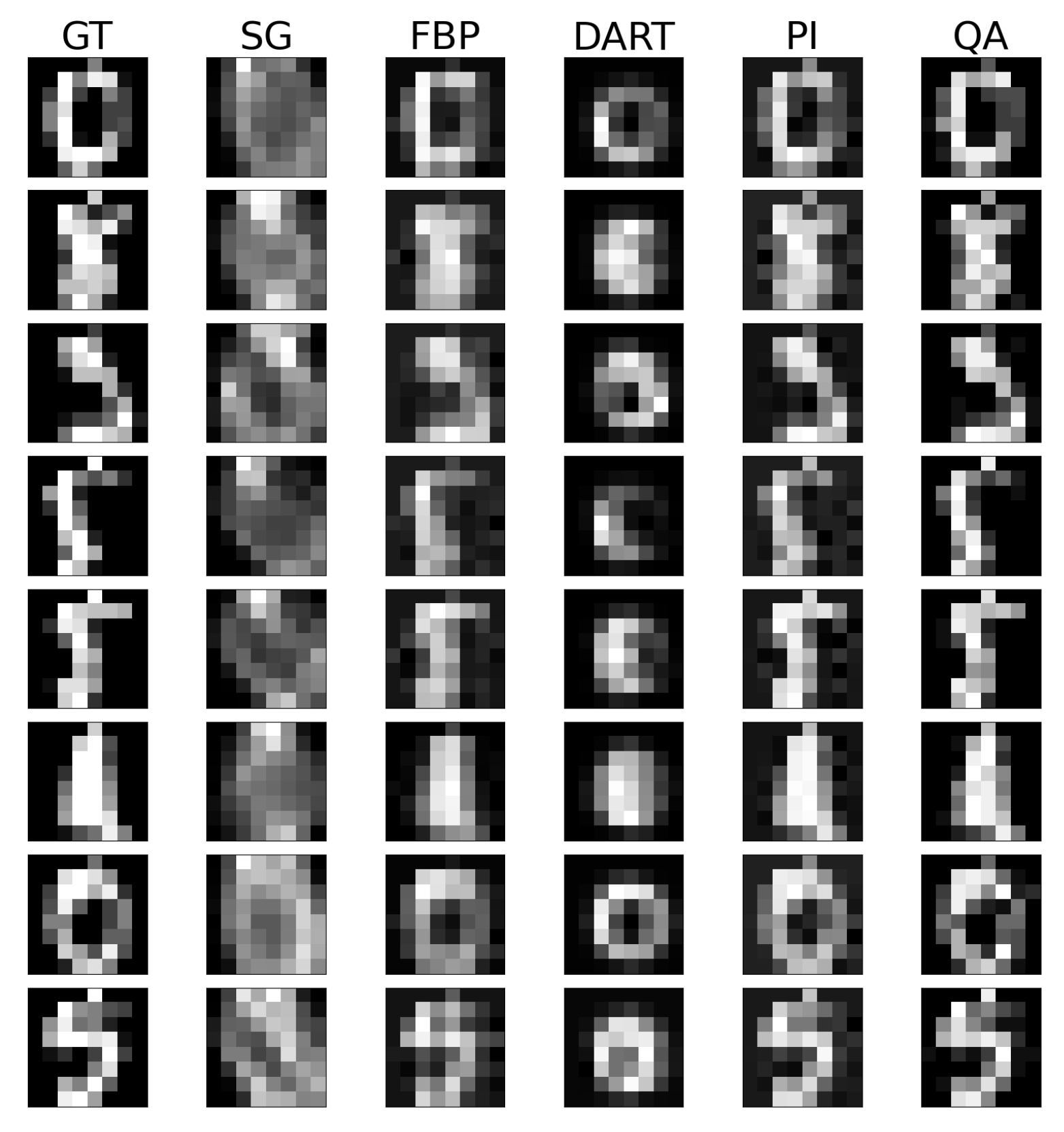

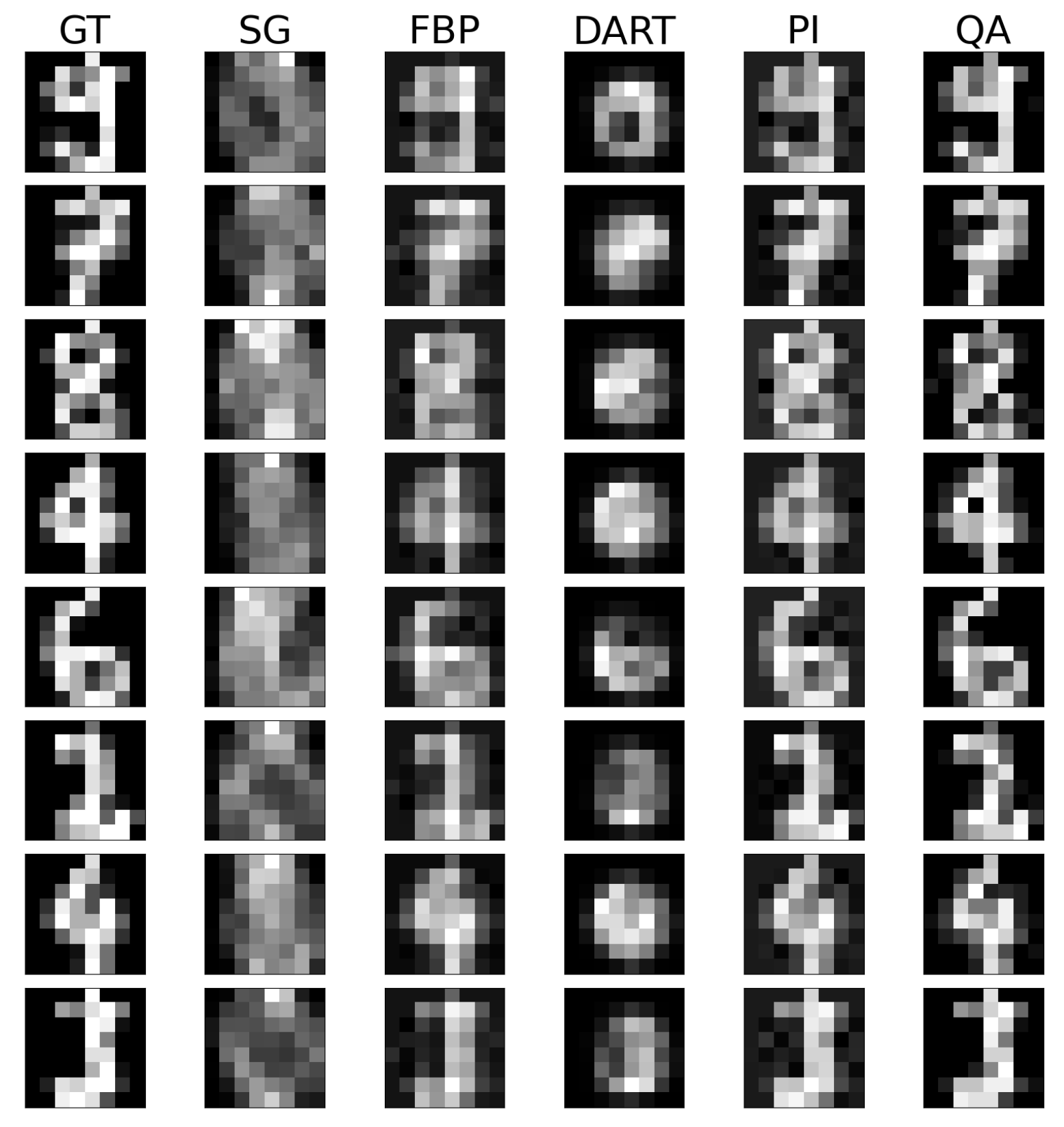

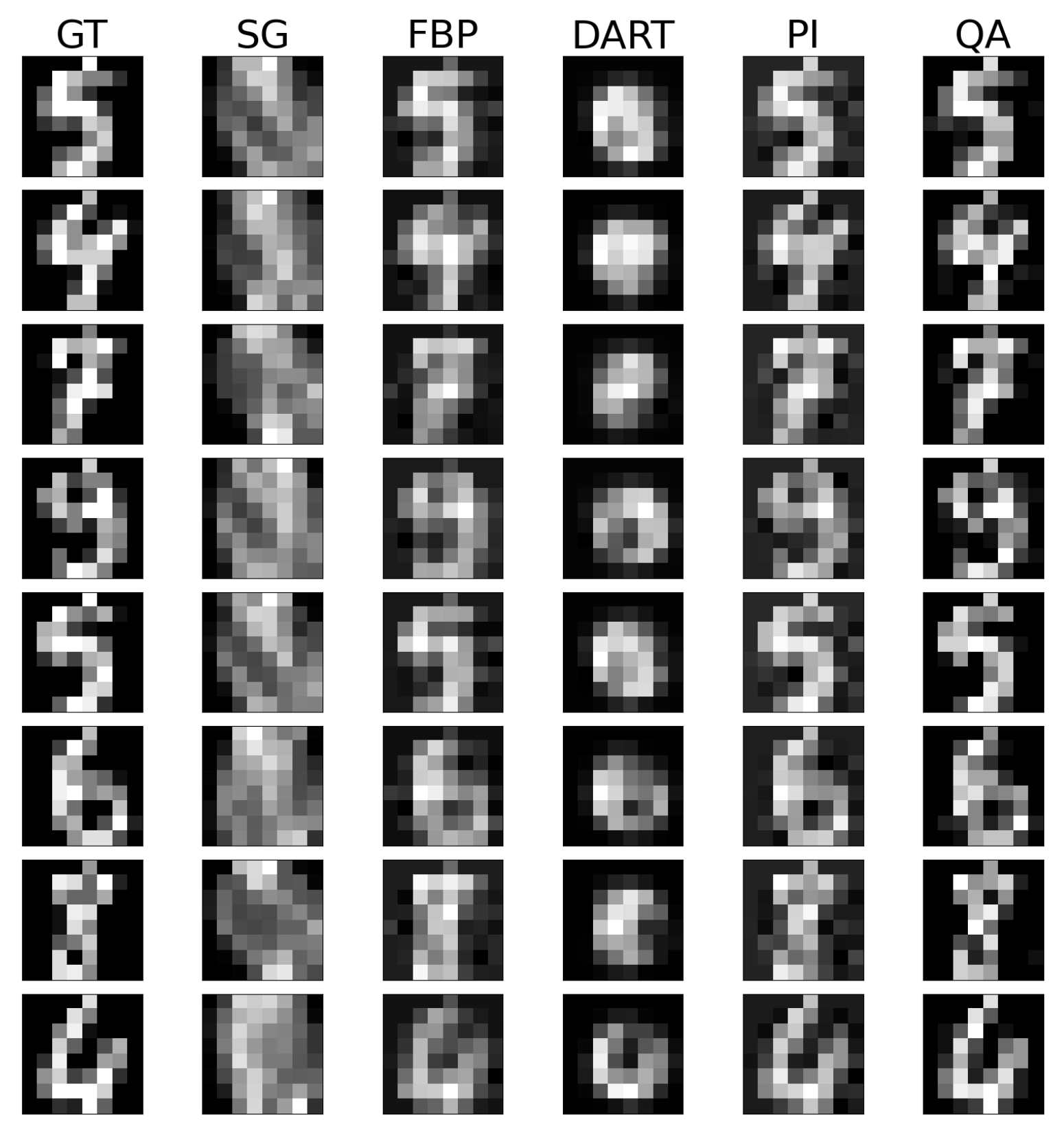

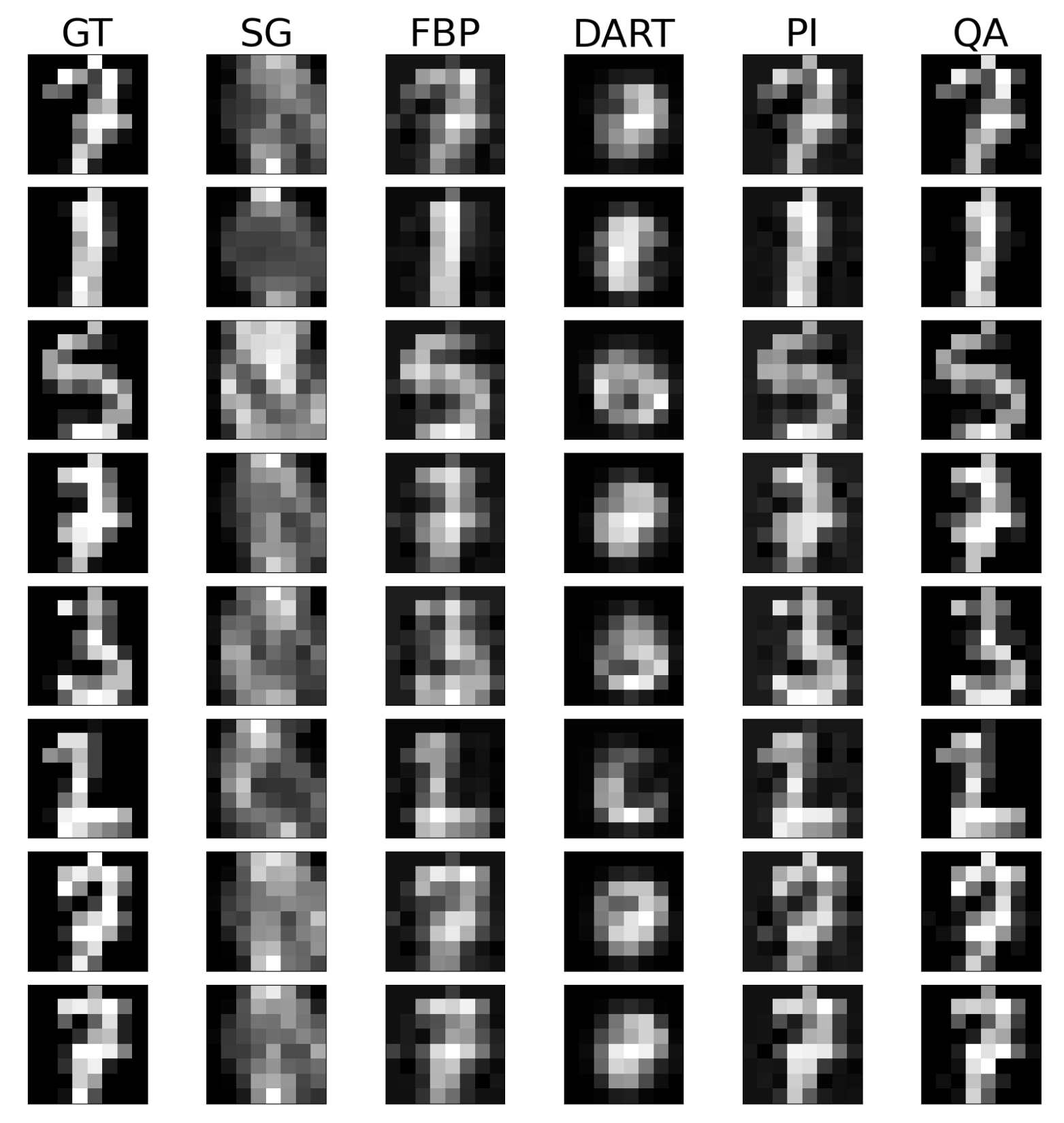

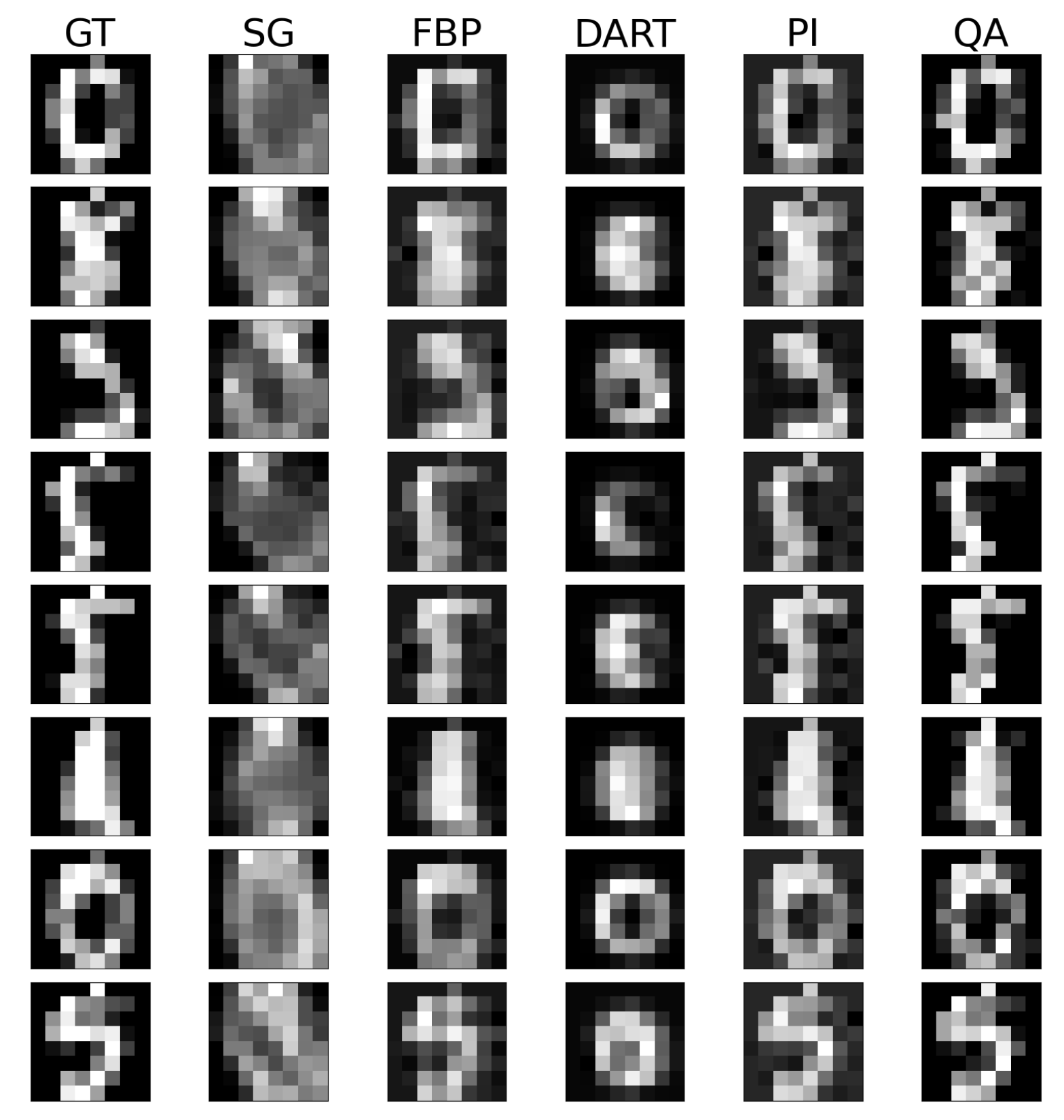

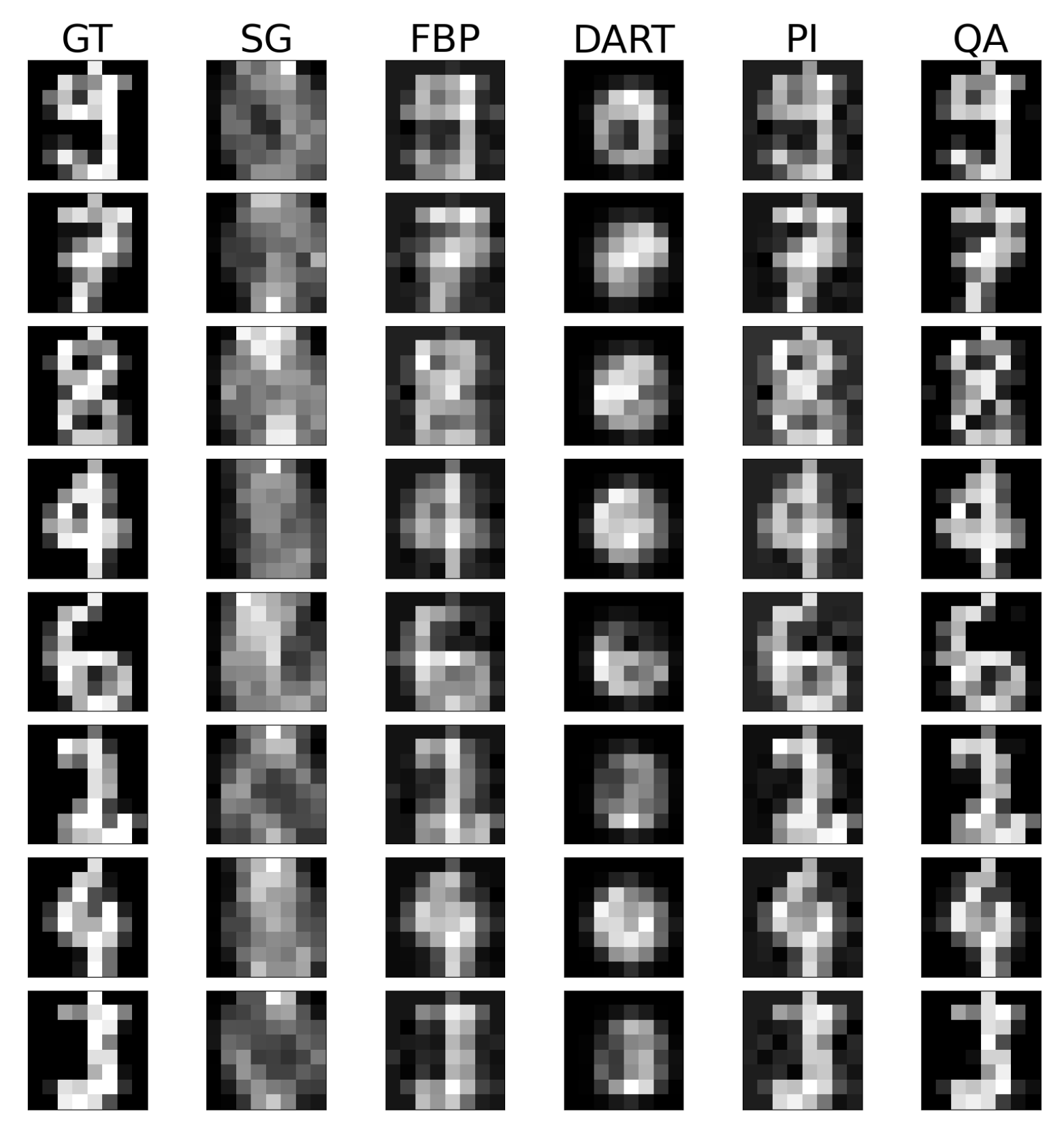

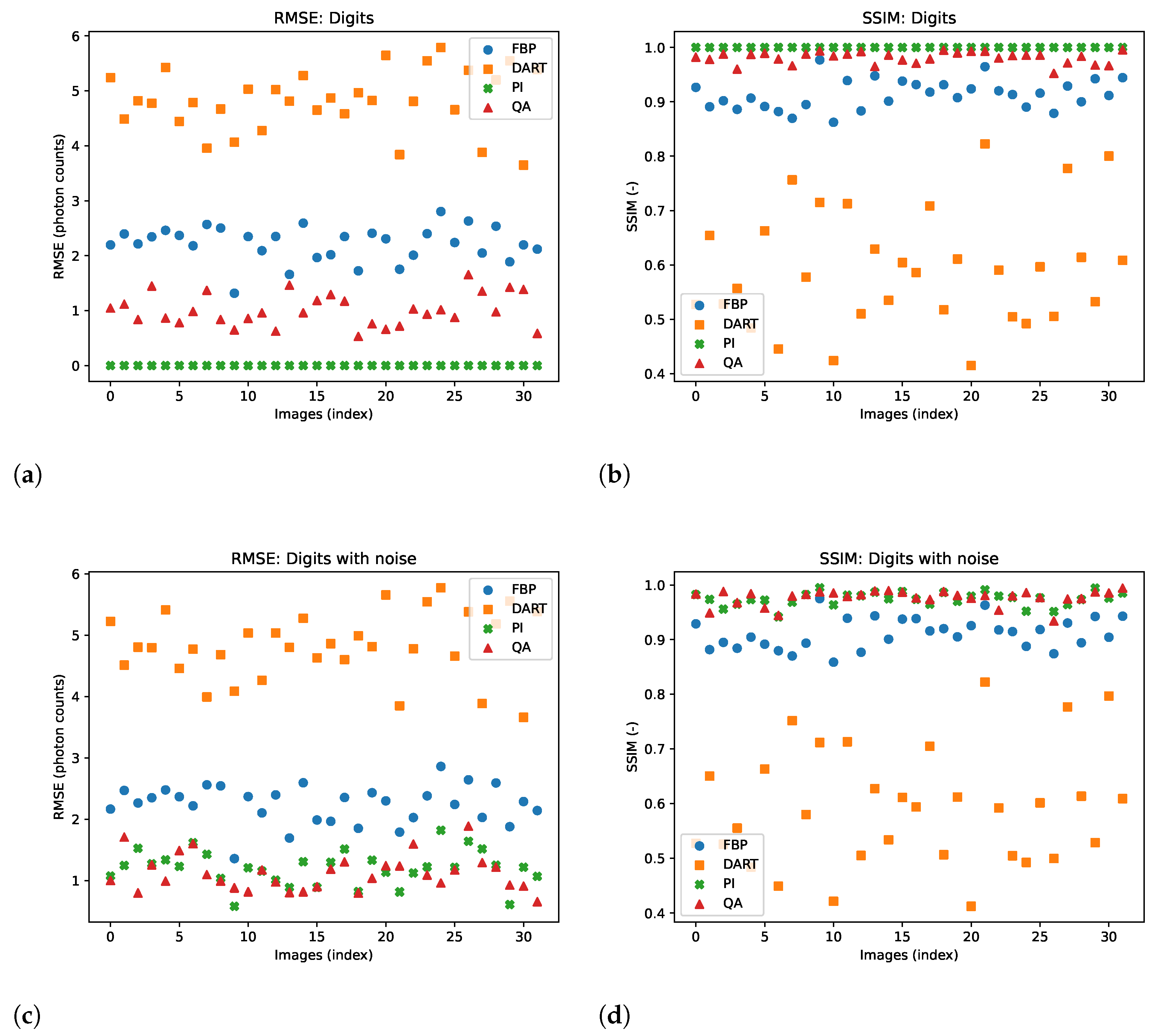

6.3. Experiment 2: Noise Evaluation

One typical problem in image reconstruction is the noise in the measured data. Especially in low-count tomography, one suffers from high photon noise. We alter the image data with our noise model to imitate the high-noise level in low-count ET, which addresses the problem of ‘inverse crime’. In comparison to the previous experiments, we use a truncated PI with a cut-off value of

to make the corresponding reconstruction robust to the noise induced. We test the hybrid-based reconstruction’s robustness with a simple noise alteration of the GT image during the acquisition. We utilize the UCI Machine Learning Repository Digits dataset [

53] for small-scale images with low bit range, as we see the limitations of the approach in the Shepp–Logan phantom. The dataset consists of 5620 digits of image size

with a bit range of

. We randomly chose 32 digits and reconstructed them with and without noise. The additive noise is described in Equation (

16). Visual results of the reconstructed images without and with noise are shown in

Figure 7. Again, we show the reconstructed images and their corresponding GT, SG, and comparable classical results. The remaining reconstructed digits can be found in the

Appendix A.3 and

Appendix A.4. Furthermore, we present a quantitative evaluation of the RMSE and SSIM for both noise-free and noisy data for each digit image in

Figure 8.

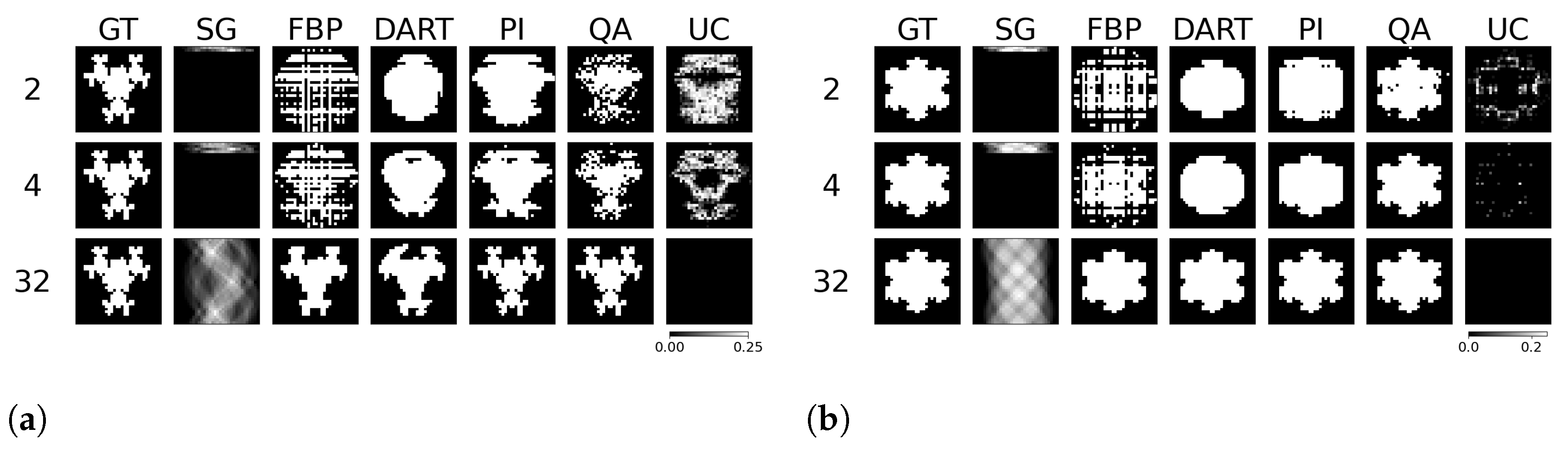

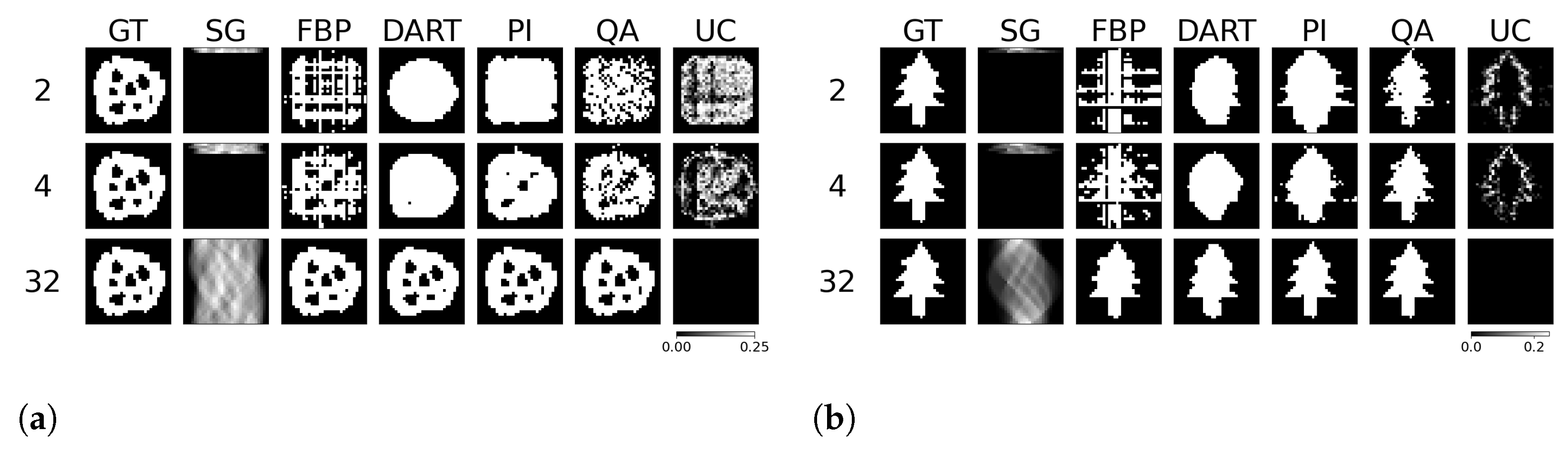

6.4. Experiment 3: Underdetermined Evaluation

The reconstruction of binary images in a fully determinant setting is easy for any reconstruction algorithm, as the number of combinatorial options is minimal compared to integer or floating-point-based reconstruction. The problem in binary tomography primarily results from reconstructing the objects with as few views as possible. In the past, methods have been presented to reconstruct an object with two views only [

27]. The methods usually enforce much regularization and prior knowledge of the object due to the associated null space of the projection operator in few-view reconstruction. Therefore, we want to present reconstruction results of binary images of size

with only 4 and 2 projection views acquired while only enforcing the prior knowledge of assuming binary pixels. The reconstructed images, their comparison algorithm results, and corresponding GT, SG, and UC are displayed for the binary image ’foam’ and ’tree’ in

Figure 9. The other examples are provided in the

Appendix A.5. Moreover, we compare the reconstruction algorithms on the four binary images measured by RMSE and SSIM in

Figure 10.

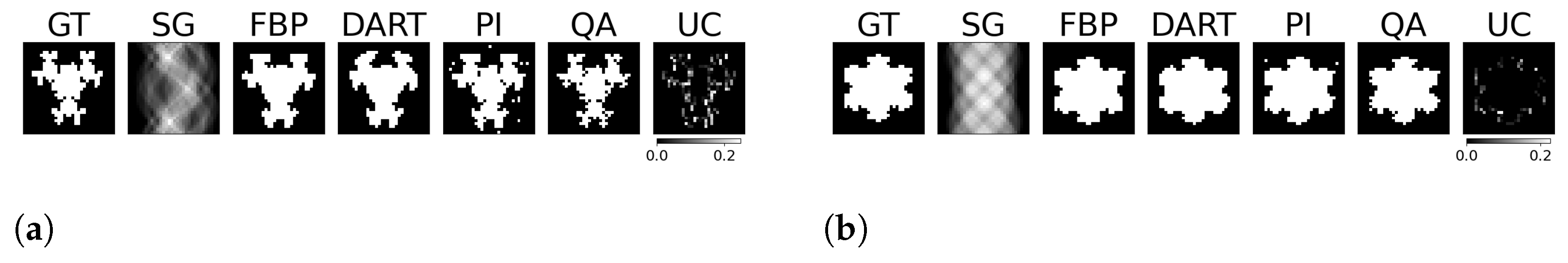

6.5. Experiment 4: Inverse Crime and Applications towards Reality

In our previous experiments, we assumed the exact system matrix for precise forward projection. This allows us to evaluate the algorithm in a controlled environment with simulated perfection. However, real-world situations rarely provide a perfect system matrix, and we often rely on estimated versions instead. To prevent biases caused by the ’inverse-crime’ scenario, we now simulate the images on a different, higher-resolution grid during simulation. To simulate these scenarios, we take the previously presented binary images and scale them up to a size of

. We then simulate the projections and perform the reconstructions using a rebinned SG. Again, we compare against a truncated PI in this case. The reconstructed images, their comparison algorithm results, and corresponding GT, SG, and UC are displayed for the binary image ’foam’ and ’tree’ in

Figure 11. The remaining examples are provided in the

Appendix A.6. A comparison of the mean RMSE and SSIM is given in

Table 2.

7. Discussion

Compared to previous approaches for matrix inversion based on QA, we observe an improvement in linear systems with high condition numbers as well as the size of the linear system itself. Rogers and Singleton’s method of solving linear systems using a quantum annealer is restricted to small systems,

, with very low condition numbers [

46]. With the use of hybrid solvers, we can overcome this issue. Our system matrices

are singular, with a condition number approaching infinity. When entirely determined, the results of small binary tomographic reconstruction contest against conventional methods. We hypothesize that the hybrid solver can handle binary image sizes of up to

without difficulty; see

Figure 3. With integer-based tomographic reconstructions, we encounter challenges with larger image sizes exceeding

, as observed from

Figure 5. For this reason, we further performed integer-valued tomography reconstruction only for the digits dataset with image size

in

Figure 7. Here, we can see that hybrid-based reconstruction can yield similar results to the conventional algorithms. An exciting finding is evident when comparing the RMSE and SSIM of noisy simulations. The hybrid-based reconstructions are robust to noise and yield similar performance to the conventional reconstruction technique for all 32 digits in this small-scale experiment, as displayed in

Figure 8. We acknowledge that the energy optimization landscape appears to be minimally affected by noise during the hybrid annealing process. However, further research is warranted to gain a deeper understanding of this phenomenon. Moreover, we see that the reconstruction from as few views as 2 or 4 projections can outperform standard reconstruction algorithms in binary-based images in this specific toy example. However, the variance of the quantitative results is relatively high, as seen in

Figure 10. The high variance can be attributed to the reconstructed images, which have higher frequency content, particularly within the imaged object. Finally, we observe comparable results to conventional algorithms in a more realistic binary reconstruction scenario, where the system matrix is not precisely known.

At this point, it is important to mention that the conventional reconstruction techniques are not designed for such small-scale images, and the comparison may not accurately reflect how the algorithm will compare on larger image sizes, especially in the case of real ET reconstruction with non-discrete value. This is particularly manifested in the observation that the error of conventional reconstruction methods minimizes when approaching larger image sizes, as seen in

Figure 4 and

Figure 6. This can be attributed to the fact that both FFT and DART utilize linear interpolation in their reconstruction process. In contrast, both QA and PI employ the direct system matrix, which mitigates these errors. However, for the current state-of-the-art QC, it is infeasible to conduct such experiments, and we still see great value in the experiments with such new computing technologies. If quantum annealing can be scaled up to reasonable image sizes and pixel value ranges in the future, it may offer advantages. These advantages include increased robustness to noise and the ability to reconstruct with fewer views. In a realistic scenario, this could translate into reduced radiation exposure for patients and shorter scanning times, but this remains an open research question for evaluation in the future. We also see potential drawbacks of our method. Most importantly, the D-Wave quantum annealer and the associated hybrid solvers are no universal quantum computers. Therefore, we can only perform the QA algorithm on the hardware. In return, this means that we cannot use the ability of quantum computers to represent extensive data with significantly fewer qubits. On the other hand, the data loading is part of the problem formulation for QA, which is a time-consuming task for gate-based QC. However, there is no guaranteed speed-up or better solutions proofed for QA [

54]. The use of hybrid solvers helps to improve the quality of solutions and problem size. However, the problems may require a long runtime, which cannot compete with purely classical methods. In the hybrid approach, a majority of the runtime is attributable to the overhead of classical computation. Another drawback is the current cost of QC, which is relatively high but is expected to decrease as it did for classical computers.

Finally, we observe that the hybrid-based integer reconstructions have problems reconstructing homogeneous regions. Adding smoothness constraints to the objective could improve reconstructed images in the future.

8. Conclusions and Outlook

We have given the reader an overview of quantum computing, especially adiabatic quantum computing. More to the point, we have explained how quantum annealing works and which problems it can solve. Subsequently, we present the inverse problem of tomographic image reconstruction and describe the use case of emission tomography and the difference to transmission tomography. We summarize previous work in the solution of linear systems and image reconstruction with quantum annealing and quantum computing and provide the fundamentals for our reconstruction method with quantum annealing and hybrid solvers. Finally, we showcase the results of binary- and integer-valued reconstruction for different matrix sizes. We also test the reconstruction concerning noise and underdetermination. We have observed that hybrid-based reconstruction shows potential benefits in noisy linear systems, especially for binary images and very small integer-valued images, where the problem’s solution space is still tractable for the hybrid solver. However, challenges arise as we increase the pixel bit range of images, and we do not observe performance comparable to that of classical techniques. Furthermore, the preparation of larger optimization problems, which is carried out on a classical computer, becomes a bottleneck in the reconstruction pipeline due to the increasing size of the system matrix used for reconstruction. Nevertheless, the stochastic nature of quantum annealers allows for the introduction of additional uncertainty measures to interpret the reconstructed images.

Author Contributions

Conceptualization, M.A.N. and A.H.V.; methodology, M.A.N.; software, M.A.N.; formal analysis, M.A.N., A.H.V., M.P.R., W.G. and A.K.M.; writing—original draft preparation, M.A.N.; writing—review and editing, M.A.N., A.H.V., M.P.R., W.G. and A.K.M.; supervision, A.H.V. and A.K.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Acknowledgments

The authors acknowledge the use of D-Wave’s quantum annealers and hybrid solvers. We acknowledge financial support by Deutsche Forschungsgemeinschaft and Friedrich-Alexander-Universität Erlangen-Nürnberg within the funding programme “Open Access Publication Funding”.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

| AQC | Adiabatic Quantum Computing |

| CQM | Constrained Quadratic Model |

| DART | Discrete Algebraic Reconstruction Technique |

| ET | Emission Tomography |

| FBP | Filtered BackProjection |

| GT | Ground Truth |

| HHL | Harrow-Hassidim-Lloyd |

| PI | Pseudo Inverse |

| QA | Quantum Annealing |

| QAOA | Quantum Approximate Optimization Algorithm |

| QC | Quantum Computing |

| QFT | Quantum Fourier Transform |

| QFT | Quantum Fourier Transform |

| QPU | Quantum Processing Unit |

| QUBO | Quadratic Unconstrained Binary Optimization |

| RMSE | Root Mean Square Error |

| SART | Simultaneous Algebraic Reconstruction Technique |

| SG | Sinogram |

| SSIM | Structural Similarity Index Measure |

| TT | Transmission Tomography |

| UC | Uncertainty Map |

Appendix A

Appendix A.1. Matrix Size Comparison

Figure A1.

Binary reconstructions of sample image ‘snowflake’ (a) and ’molecule’ (b) for image sizes , where N is 4, 8, 16, and 32.

Figure A1.

Binary reconstructions of sample image ‘snowflake’ (a) and ’molecule’ (b) for image sizes , where N is 4, 8, 16, and 32.

Appendix A.2. Shepp–Logan Components

Figure A2.

Gradual analysis of the Shepp–Logan phantom. The y-axis shows the number of components (discretization values) in the Shepp–Logan phantom.

Figure A2.

Gradual analysis of the Shepp–Logan phantom. The y-axis shows the number of components (discretization values) in the Shepp–Logan phantom.

Appendix A.3. Digits

Figure A3.

4-bit integer reconstructions of digits from the UCI digits dataset.

Figure A3.

4-bit integer reconstructions of digits from the UCI digits dataset.

Figure A4.

4-bit integer reconstructions of digits from the UCI digits dataset.

Figure A4.

4-bit integer reconstructions of digits from the UCI digits dataset.

Figure A5.

4-bit integer reconstructions of digits from the UCI digits dataset.

Figure A5.

4-bit integer reconstructions of digits from the UCI digits dataset.

Figure A6.

4-bit integer reconstructions of digits from the UCI digits dataset.

Figure A6.

4-bit integer reconstructions of digits from the UCI digits dataset.

Appendix A.4. Digits with Noise

Figure A7.

4-bit integer reconstructions of digits from the UCI digits dataset with random noise.

Figure A7.

4-bit integer reconstructions of digits from the UCI digits dataset with random noise.

Figure A8.

4-bit integer reconstructions of digits from the UCI digits dataset with random noise.

Figure A8.

4-bit integer reconstructions of digits from the UCI digits dataset with random noise.

Figure A9.

4-bit integer reconstructions of digits from the UCI digits dataset with random noise.

Figure A9.

4-bit integer reconstructions of digits from the UCI digits dataset with random noise.

Figure A10.

4-bit integer reconstructions of digits from the UCI digits dataset with random noise.

Figure A10.

4-bit integer reconstructions of digits from the UCI digits dataset with random noise.

Appendix A.5. Underdetermination

Figure A11.

Binary reconstruction of the image ‘molecule’ (a) and ‘snowflake’ (b) from 2, 4, and 32 views.

Figure A11.

Binary reconstruction of the image ‘molecule’ (a) and ‘snowflake’ (b) from 2, 4, and 32 views.

Appendix A.6. Inverse Crime

Figure A12.

Outside of the inverse-crime scenario: Binary reconstructions of sample image ‘molecule’ (a) and ‘snowflake’ (b) for image size . The projections are simulated on an upscaled higher-resolution image of the input, and the sinogram is consequently rebinned.

Figure A12.

Outside of the inverse-crime scenario: Binary reconstructions of sample image ‘molecule’ (a) and ‘snowflake’ (b) for image size . The projections are simulated on an upscaled higher-resolution image of the input, and the sinogram is consequently rebinned.

References

- Nielsen, M.A.; Chuang, I. Quantum Computation and Quantum Information; Cambridge University Press: Cambridge, UK, 2002. [Google Scholar]

- Arute, F.; Arya, K.; Babbush, R.; Bacon, D.; Bardin, J.C.; Barends, R.; Biswas, R.; Boixo, S.; Brandao, F.G.; Buell, D.A.; et al. Quantum supremacy using a programmable superconducting processor. Nature 2019, 574, 505–510. [Google Scholar] [CrossRef] [PubMed]

- Wu, Y.; Bao, W.S.; Cao, S.; Chen, F.; Chen, M.C.; Chen, X.; Chung, T.H.; Deng, H.; Du, Y.; Fan, D.; et al. Strong quantum computational advantage using a superconducting quantum processor. Phys. Rev. Lett. 2021, 127, 180501. [Google Scholar] [CrossRef]

- Aharonov, D.; Van Dam, W.; Kempe, J.; Landau, Z.; Lloyd, S.; Regev, O. Adiabatic quantum computation is equivalent to standard quantum computation. SIAM Rev. 2008, 50, 755–787. [Google Scholar] [CrossRef]

- Raymond, J.; Stevanovic, R.; Bernoudy, W.; Boothby, K.; McGeoch, C.; Berkley, A.J.; Farré, P.; King, A.D. Hybrid quantum annealing for larger-than-QPU lattice-structured problems. arXiv 2022, arXiv:2202.03044. [Google Scholar] [CrossRef]

- Wernick, M.N.; Aarsvold, J.N. Emission Tomography: The Fundamentals of PET and SPECT; Elsevier: Amsterdam, The Netherlands, 2004. [Google Scholar]

- Rieffel, E.; Polak, W. An introduction to quantum computing for non-physicists. ACM Comput. Surv. (CSUR) 2000, 32, 300–335. [Google Scholar] [CrossRef]

- Chow, J.; Dial, O.; Gambetta, J. IBM Quantum Breaks the 100-Qubit Processor Barrier. 2021. Available online: https://research.ibm.com/blog/127-qubit-quantum-processor-eagle (accessed on 7 October 2023).

- Albash, T.; Lidar, D.A. Adiabatic quantum computation. Rev. Mod. Phys. 2018, 90, 015002. [Google Scholar] [CrossRef]

- Van Dam, W.; Mosca, M.; Vazirani, U. How powerful is adiabatic quantum computation? In Proceedings of the 42nd IEEE Symposium on Foundations of Computer Science, Washington, DC, USA, 14–17 October 2001; pp. 279–287. [Google Scholar]

- Farhi, E.; Goldstone, J.; Gutmann, S.; Lapan, J.; Lundgren, A.; Preda, D. A quantum adiabatic evolution algorithm applied to random instances of an NP-complete problem. Science 2001, 292, 472–475. [Google Scholar] [CrossRef]

- Born, M.; Fock, V. Beweis des adiabatensatzes. Z. Phys. 1928, 51, 165–180. [Google Scholar] [CrossRef]

- Jansen, S.; Ruskai, M.B.; Seiler, R. Bounds for the adiabatic approximation with applications to quantum computation. J. Math. Phys. 2007, 48, 102111. [Google Scholar] [CrossRef]

- Finnila, A.B.; Gomez, M.; Sebenik, C.; Stenson, C.; Doll, J.D. Quantum annealing: A new method for minimizing multidimensional functions. Chem. Phys. Lett. 1994, 219, 343–348. [Google Scholar] [CrossRef]

- McGeoch, C.C. Adiabatic Quantum Computation and Quantum Annealing: Theory and Practice; Synthesis Lectures on Quantum Computing; Springer Nature: Berlin/Heidelberg, Germany, 2014; Volume 5, pp. 1–93. [Google Scholar]

- Koshka, Y.; Novotny, M.A. Comparison of D-wave quantum annealing and classical simulated annealing for local minima determination. IEEE J. Sel. Areas Inf. Theory 2020, 1, 515–525. [Google Scholar] [CrossRef]

- Lucas, A. Ising formulations of many NP problems. Front. Phys. 2014, 2, 5. [Google Scholar] [CrossRef]

- Boothby, T.; King, A.D.; Roy, A. Fast clique minor generation in Chimera qubit connectivity graphs. Quantum Inf. Process. 2016, 15, 495–508. [Google Scholar] [CrossRef]

- King, A.D.; Carrasquilla, J.; Raymond, J.; Ozfidan, I.; Andriyash, E.; Berkley, A.; Reis, M.; Lanting, T.; Harris, R.; Altomare, F.; et al. Observation of topological phenomena in a programmable lattice of 1800 qubits. Nature 2018, 560, 456–460. [Google Scholar] [CrossRef] [PubMed]

- D-Wave. D-Wave Ocean Software. 2022. Available online: https://docs.ocean.dwavesys.com (accessed on 28 September 2022).

- D-Wave. D-Wave Leap. 2022. Available online: https://cloud.dwavesys.com/leap/ (accessed on 28 September 2022).

- Cormack, A.M. Representation of a function by its line integrals, with some radiological applications. J. Appl. Phys. 1963, 34, 2722–2727. [Google Scholar] [CrossRef]

- Beylkin, G. Discrete radon transform. IEEE Trans. Acoust. Speech Signal Process. 1987, 35, 162–172. [Google Scholar] [CrossRef]

- Natterer, F. Inversion of the attenuated Radon transform. Inverse Probl. 2001, 17, 113. [Google Scholar] [CrossRef]

- Bronnikov, A.V. Numerical solution of the identification problem for the attenuated Radon transform. Inverse Probl. 1999, 15, 1315. [Google Scholar] [CrossRef]

- Barrett, H.H.; Myers, K.J. Foundations of Image Science; John Wiley & Sons: Hoboken, NJ, USA, 2013. [Google Scholar]

- Herman, G.T.; Kuba, A. Discrete Tomography: Foundations, Algorithms, and Applications; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2012. [Google Scholar]

- Herman, G.T.; Kuba, A. Discrete tomography in medical imaging. Proc. IEEE 2003, 91, 1612–1626. [Google Scholar] [CrossRef]

- Pan, X.; Sidky, E.Y.; Vannier, M. Why do commercial CT scanners still employ traditional, filtered back-projection for image reconstruction? Inverse Probl. 2009, 25, 123009. [Google Scholar] [CrossRef]

- Bruyant, P.P. Analytic and iterative reconstruction algorithms in SPECT. J. Nucl. Med. 2002, 43, 1343–1358. [Google Scholar] [PubMed]

- Lange, K.; Carson, R. EM reconstruction algorithms for emission and transmission tomography. J. Comput. Assist. Tomogr. 1984, 8, 306–316. [Google Scholar] [PubMed]

- Dempster, A.P.; Laird, N.M.; Rubin, D.B. Maximum likelihood from incomplete data via the EM algorithm. J. R. Stat. Soc. Ser. B (Methodol.) 1977, 39, 1–22. [Google Scholar] [CrossRef]

- Hestenes, M.R.; Stiefel, E. Methods of conjugate gradients for solving. J. Res. Natl. Bur. Stand. 1952, 49, 409. [Google Scholar] [CrossRef]

- Andersen, A.H.; Kak, A.C. Simultaneous algebraic reconstruction technique (SART): A superior implementation of the ART algorithm. Ultrason. Imaging 1984, 6, 81–94. [Google Scholar] [CrossRef] [PubMed]

- Würfl, T.; Ghesu, F.C.; Christlein, V.; Maier, A. Deep learning computed tomography. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention-MICCAI 2016: 19th International Conference, Athens, Greece, 17–21 October 2016; Proceedings, Part III 19. Springer: Berlin/Heidelberg, Germany, 2016; pp. 432–440. [Google Scholar]

- Penrose, R. A generalized inverse for matrices. In Mathematical Proceedings of the Cambridge Philosophical Society; Cambridge University Press: Cambridge, UK, 1955; pp. 406–413. [Google Scholar]

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612. [Google Scholar] [CrossRef]

- Caraiman, S.; Manta, V. Image processing using quantum computing. In Proceedings of the 2012 16th International Conference on System Theory, Control and Computing (ICSTCC), Sinaia, Romania, 12–14 October 2012; pp. 1–6. [Google Scholar]

- Biamonte, J.; Wittek, P.; Pancotti, N.; Rebentrost, P.; Wiebe, N.; Lloyd, S. Quantum machine learning. Nature 2017, 549, 195–202. [Google Scholar] [CrossRef]

- Kiani, B.T.; Villanyi, A.; Lloyd, S. Quantum medical imaging algorithms. arXiv 2020, arXiv:2004.02036. [Google Scholar]

- Harrow, A.W.; Hassidim, A.; Lloyd, S. Quantum algorithm for linear systems of equations. Phys. Rev. Lett. 2009, 103, 150502. [Google Scholar] [CrossRef]

- Aaronson, S. Read the fine print. Nat. Phys. 2015, 11, 291–293. [Google Scholar] [CrossRef]

- Farhi, E.; Goldstone, J.; Gutmann, S. A quantum approximate optimization algorithm. arXiv 2014, arXiv:1411.4028. [Google Scholar]

- O’Malley, D.; Vesselinov, V.V.; Alexandrov, B.S.; Alexandrov, L.B. Nonnegative/binary matrix factorization with a d-wave quantum annealer. PLoS ONE 2018, 13, e0206653. [Google Scholar] [CrossRef]

- Chang, C.C.; Gambhir, A.; Humble, T.S.; Sota, S. Quantum annealing for systems of polynomial equations. Sci. Rep. 2019, 9, 10258. [Google Scholar] [CrossRef] [PubMed]

- Rogers, M.L.; Singleton Jr, R.L. Floating-point calculations on a quantum annealer: Division and matrix inversion. Front. Phys. 2020, 8, 265. [Google Scholar] [CrossRef]

- Souza, A.M.; Martins, E.O.; Roditi, I.; Sá, N.; Sarthour, R.S.; Oliveira, I.S. An application of quantum annealing computing to seismic inversion. Front. Phys. 2022, 9, 748285. [Google Scholar] [CrossRef]

- Schielein, R.; Basting, M.; Dremel, K.; Firsching, M.; Fuchs, T.; Graetz, J.; Kasperl, S.; Prjamkov, D.; Semmler, S.; Suth, D.; et al. Quantum Computing and Computed Tomography: A Roadmap towards QuantumCT. In Proceedings of the 11th Conference on Industrial Computed Tomography, Wels, Austria, 8–11 February December 2022; Volume 3. [Google Scholar]

- Jun, K. Highly accurate quantum optimization algorithm for CT image reconstructions based on sinogram patterns. arXiv 2022, arXiv:2207.02448. [Google Scholar] [CrossRef] [PubMed]

- D-Wave. QPU Solvers: Minor-Embedding. 2022. Available online: https://docs.dwavesys.com/docs/latest/handbook_embedding.html (accessed on 22 September 2023).

- D-Wave. Hybrid Solvers for Quadratic Optimization. 2022. Available online: https://www.dwavesys.com/media/soxph512/hybrid-solvers-for-quadratic-optimization.pdf (accessed on 27 October 2022).

- van der Walt, S.; Schönberger, J.L.; Nunez-Iglesias, J.; Boulogne, F.; Warner, J.D.; Yager, N.; Gouillart, E.; Yu, T.; the scikit-image contributors. scikit-image: Image processing in Python. PeerJ 2014, 2, e453. [Google Scholar] [CrossRef] [PubMed]

- Alpaydin, E.; Kaynak, C. Optical Recognition of Handwritten Digits; UCI Machine Learning Repository: Irvine, CA, USA, 1998. [Google Scholar] [CrossRef]

- Yan, B.; Sinitsyn, N.A. Analytical solution for nonadiabatic quantum annealing to arbitrary Ising spin Hamiltonian. Nat. Commun. 2022, 13, 2212. [Google Scholar] [CrossRef] [PubMed]

Figure 1.

Graphical Abstract: Simulation and reconstruction of a two-view binary tomographic problem using hybrid quantum annealing.

Figure 1.

Graphical Abstract: Simulation and reconstruction of a two-view binary tomographic problem using hybrid quantum annealing.

Figure 2.

Graphs and physical embeddings on the QPU for binary reconstruction problems. (a,c) depict the directed graph for binary tomographic problems with image sizes of and , respectively. (b,d) show the corresponding embedding of the directed graph on the QPU topology for (a,c), respectively.

Figure 2.

Graphs and physical embeddings on the QPU for binary reconstruction problems. (a,c) depict the directed graph for binary tomographic problems with image sizes of and , respectively. (b,d) show the corresponding embedding of the directed graph on the QPU topology for (a,c), respectively.

Figure 3.

Binary reconstructions of sample image ‘foam’ (a) and ‘tree’ (b) for image sizes , where N is 4, 8, 16, and 32.

Figure 3.

Binary reconstructions of sample image ‘foam’ (a) and ‘tree’ (b) for image sizes , where N is 4, 8, 16, and 32.

Figure 4.

Mean and variance RMSE (a) and SSIM (b) evaluation of images ‘foam’, ‘molecule’, ‘snowflake’ and ‘tree’ for image sizes , where N is 4, 8, 16, and 32.

Figure 4.

Mean and variance RMSE (a) and SSIM (b) evaluation of images ‘foam’, ‘molecule’, ‘snowflake’ and ‘tree’ for image sizes , where N is 4, 8, 16, and 32.

Figure 5.

4-bit integer reconstructions of Shepp–Logan phantom for image sizes , where N is 4, 8, 16, and 32.

Figure 5.

4-bit integer reconstructions of Shepp–Logan phantom for image sizes , where N is 4, 8, 16, and 32.

Figure 6.

RMSE (a) and SSIM (b) of 4-bit integer reconstructions of the Shepp–Logan phantom for image sizes , where N is 4, 8, 16, and 32.

Figure 6.

RMSE (a) and SSIM (b) of 4-bit integer reconstructions of the Shepp–Logan phantom for image sizes , where N is 4, 8, 16, and 32.

Figure 7.

4-bit integer reconstructions of four digits from the UCI digits dataset without (a) and with random noise (b).

Figure 7.

4-bit integer reconstructions of four digits from the UCI digits dataset without (a) and with random noise (b).

Figure 8.

Comparison of RMSE and SSIM for 4-bit integer reconstructions of 32 images from the UCI digits dataset. (a,b) show results without noise, while (c,d) show results with noise.

Figure 8.

Comparison of RMSE and SSIM for 4-bit integer reconstructions of 32 images from the UCI digits dataset. (a,b) show results without noise, while (c,d) show results with noise.

Figure 9.

Binary reconstruction of the image ‘foam’ (a) and ‘tree’ (b) from 2, 4, and 32 views.

Figure 9.

Binary reconstruction of the image ‘foam’ (a) and ‘tree’ (b) from 2, 4, and 32 views.

Figure 10.

RMSE (a) and SSIM (b) of binary reconstructions from 2, 4, and 32 views.

Figure 10.

RMSE (a) and SSIM (b) of binary reconstructions from 2, 4, and 32 views.

Figure 11.

Outside of the inverse-crime scenario: Binary reconstructions of sample image ‘foam’ (a) and ‘tree’ (b) for image size . The projections are simulated on an upscaled higher-resolution image of the input, and the sinogram is consequently rebinned.

Figure 11.

Outside of the inverse-crime scenario: Binary reconstructions of sample image ‘foam’ (a) and ‘tree’ (b) for image size . The projections are simulated on an upscaled higher-resolution image of the input, and the sinogram is consequently rebinned.

Table 1.

Overview of the reconstruction times for the hybrid QA-based reconstruction technique compared to classical reconstruction methods FBP, DART, and PI. FBP, DART, and PI were carried out on a developer’s workstation with an Intel® Core™ i7-10850H CPU. The runtime of the hybrid QA-based reconstruction is subdivided into the creation of the binary quadratic model (BQM) on both the developer workstation and the commercial hybrid solver and then the subsequent CPU and QPU optimization carried out on the commercial hybrid solver. The time is measured as the mean of four binary reconstructed images.

Table 1.

Overview of the reconstruction times for the hybrid QA-based reconstruction technique compared to classical reconstruction methods FBP, DART, and PI. FBP, DART, and PI were carried out on a developer’s workstation with an Intel® Core™ i7-10850H CPU. The runtime of the hybrid QA-based reconstruction is subdivided into the creation of the binary quadratic model (BQM) on both the developer workstation and the commercial hybrid solver and then the subsequent CPU and QPU optimization carried out on the commercial hybrid solver. The time is measured as the mean of four binary reconstructed images.

| Reconstruction | Create BQM (s) | CPU Opt. (s) | QPU Opt. (s) | Total Runtime (s) |

|---|

| FBP | - | 0.002 | - | 0.002 |

| DART | - | 0.007 | - | 0.007 |

| PI | - | 0.641 | - | 0.641 |

| QA | 38.22 | 5.095 | 0.032 | 43.347 |

Table 2.

Overview of the RMSE and SSIM measured as the mean of the four binary reconstructed images outside the inverse-crime scenario.

Table 2.

Overview of the RMSE and SSIM measured as the mean of the four binary reconstructed images outside the inverse-crime scenario.

| | FBP | DART | PI | QA |

|---|

| RMSE (mean + std) | | | | |

| SSIM (mean + std) | | | | |

| Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).