Abstract

The goal of background reconstruction is to recover the background image of a scene from a sequence of frames showing this scene cluttered by various moving objects. This task is fundamental in image analysis, and is generally the first step before more advanced processing, but difficult because there is no formal definition of what should be considered as background or foreground and the results may be severely impacted by various challenges such as illumination changes, intermittent object motions, highly cluttered scenes, etc. We propose in this paper a new iterative algorithm for background reconstruction, where the current estimate of the background is used to guess which image pixels are background pixels and a new background estimation is performed using those pixels only. We then show that the proposed algorithm, which uses stochastic gradient descent for improved regularization, is more accurate than the state of the art on the challenging SBMnet dataset, especially for short videos with low frame rates, and is also fast, reaching an average of 52 fps on this dataset when parameterized for maximal accuracy using acceleration with a graphics processing unit (GPU) and a Python implementation.

1. Introduction

We consider in this paper the task of static background reconstruction: starting from a sequence of images of a scene showing moving objects, for example cars, bikes or pedestrians, the goal is to recover the image of the background of this scene, without any of the moving objects. This task is fundamental in image analysis: The moving objects appearing in the scene may be considered as a nuisance, and background reconstruction allows us to remove them completely and focus on the analysis of the background, for example to localize or map the scene. More frequently, for example for video surveillance or traffic monitoring, the moving objects are the main object of interest and the background itself is considered as a nuisance, so that background reconstruction is a first step which can be used to extract and analyze the moving objects of the scene. The task of background reconstruction should not be confused with the task of background modeling, which involves building a statistical model of the background images, whereas background reconstruction requires predicting a unique background image.

It is often assumed that all the images share the same background, which is then called a static background. In this case, the output of the algorithm is composed of only one background image. It is, however, also possible that the backgrounds are slightly different in each image, for example if the illumination conditions change or if the camera is moving. In this situation, we expect a background reconstruction algorithm to output a sequence of backgrounds and we say that the background reconstruction is dynamic. In this paper, we consider the problem of static background reconstruction.

This problem is difficult because there is no formal definition of what should be considered as background or foreground. Moving trees, fountains and moving shadows are examples of instances that are usually considered as belonging to the background although they show moving features. Other challenges such as illumination changes or the presence of objects staying still for a short time (a problem called intermittent motion) may severely impact the quality of a background reconstruction model.

One should distinguish between online methods, where the length of the dataset is unknown and the background reconstruction algorithm has to update the background model in real time, and batch methods, where the algorithm is provided with a fixed dataset. The method proposed in this paper is a batch method.

The main contributions of this paper are the following:

- We implement a new consistency criterion for background estimation: The background estimate produced by a background estimation method should not change if we perform the background estimation using only pixels that are considered as background pixels with respect to this background estimate.

- We then show that this consistency criterion can be described as an optimization criterion and that the associated optimization problem can be efficiently solved using stochastic gradient descent.

2. Related Work

Temporal median filtering (TMF) [1] simply computes the background color for a pixel p as the median of the colors of this pixel on all the images. Despite its simplicity, this algorithm and its variant temporal median filter with Gaussian filtering (TMFG) [2] perform very well on several scene categories.
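As an illustration of temporal median filtering, the following minimal sketch computes the pixel-wise and channel-wise median over the time axis with NumPy. The function name `temporal_median_background` and the toy data are hypothetical and are not taken from [1].

```python
import numpy as np

def temporal_median_background(frames: np.ndarray) -> np.ndarray:
    """Pixel-wise, channel-wise median over the time axis.

    frames: array of shape (N, H, W, C), i.e. N frames of an H x W image
    with C color channels.
    """
    return np.median(frames, axis=0)

# Toy sequence (hypothetical data): 5 frames of a uniform gray 2 x 2 scene.
frames = np.full((5, 2, 2, 3), 0.5)
frames[1, 0, 0] = 1.0   # a bright object covers pixel (0, 0) in frame 1
frames[3, 1, 1] = 0.0   # a dark object covers pixel (1, 1) in frame 3

background = temporal_median_background(frames)
```

Each pixel is occluded in only one frame out of five, so the median ignores the occlusions and returns the gray background.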

The current state-of-the-art models for unsupervised fixed background reconstruction are the Superpixel motion detection algorithm (SPMD) [3] and LaBGen-OF [4]. Both of these models, as well as the frame selection method and efficient background estimation procedure (FSBE) [5], implement the idea that the regions of the input frames showing foreground objects should not be considered to compute the background.

SPMD first selects the longest sequence with stable illumination, then uses superpixel segmentation [6], and removes all superpixels which contain at least one moving pixel, using a frame difference method to detect moving pixels. The various pixel values associated with one pixel position are then clustered, and the median value of the best cluster is selected to produce the background value. Removing superpixels associated with moving pixels for background initialization is also developed in [7].

LaBGen and LaBGen-P [8,9] assume that a background/foreground segmentation algorithm is available. For a given pixel or spatial patch, these models select the frames showing the lowest number of foreground pixels, and then perform a pixel-wise median filtering. LaBGen-OF is a variant which uses an optical flow algorithm [4]. LaBGen-semantic is another variant which uses a supervised semantic segmentation model [10]. This model has also been adapted in [11] to detect illumination changes and use only a subsequence with stable illumination conditions.

The FSBE algorithm (frame selection and background estimation) [5] assumes that an optical flow algorithm is available. It first selects a sequence of frames where the illumination conditions do not change too much. Using the optical flow algorithm, it classifies as background all pixels which have an optical flow magnitude below some threshold and corrects this classification if it detects high dynamic motion or foreground intermittent motion in the sequence. It then takes the pixel-wise average of the selected background pixels.

Instead of only removing pixels or patches which show moving objects before performing temporal median filtering, some models [12,13] try to select for each pixel only one patch from the various frames, which is considered to be the best candidate to represent the background, so that temporal median filtering is not needed. Photomontage [14] builds the background as a seamless montage composed of patches extracted from the images so that the likelihood of the color at each pixel is maximum with respect to the probability distribution function formed from the color histogram of all pixels in the span.

Some models try to benefit from the fact that if the content of the background is known in some part of the image, it is easier to distinguish between background and foreground objects in adjacent parts of the image, using a spatial or temporal consistency criterion. The neighborhood exploration based background initialization (NExBI) algorithm [15] divides the frame into blocks and performs a temporal clustering for each block location. A preliminary partial background model is then created for the blocks that remain stable during the whole sequence, i.e., where all the image patches associated with this block form only one cluster, and is then iteratively extended to the whole image, like a puzzle, by enforcing consistency between candidate background blocks and the partial background model. Other iterative block completion models have been proposed in [16,17,18,19,20,21].

Another approach which has been investigated for background reconstruction is to consider the sequence as a 3D tensor or a spatiotemporal matrix and to decompose it as the sum of a low-rank part, which is assumed to be representative of the background, and a sparse part, which should be representative of the foreground objects. For example, the Motion-assisted spatiotemporal clustering of low-rank algorithm (MSCL) [22], which is a dynamic background reconstruction model using robust principal component analysis (RPCA) [23], is able to obtain better results than state-of-the-art fixed background reconstruction models on the scene background modeling (SBMnet) dataset using this method, although it is not directly comparable to those models because it requires some human supervision to select the final frame from the various predicted backgrounds associated with the frames. Another linear method proposed to extract a low-rank background is to apply singular value decomposition (SVD) to spatiotemporal slices of the tensor, consider that the first principal subspace is associated with the background, and use the other components to detect foreground objects, which can then be excluded from the background computation [24,25].
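The low-rank idea can be illustrated with a deliberately simplified sketch: the frames are flattened into an N x P matrix and only the leading singular component is kept. This is not MSCL, RPCA, or the slice-based method of [24,25]. The function name `rank_one_background` and the final median over frames are assumptions made for illustration only.

```python
import numpy as np

def rank_one_background(frames: np.ndarray) -> np.ndarray:
    """Background estimate from the best rank-1 approximation of the sequence.

    frames: grayscale array of shape (N, H, W).
    Simplification (assumption): a single background image is obtained by
    taking the median over frames of the rank-1 approximation.
    """
    n_frames = frames.shape[0]
    matrix = frames.reshape(n_frames, -1)             # one row per frame
    u, s, vt = np.linalg.svd(matrix, full_matrices=False)
    low_rank = s[0] * np.outer(u[:, 0], vt[0])        # leading singular component
    return np.median(low_rank, axis=0).reshape(frames.shape[1:])

# Sanity check on a perfectly static scene (hypothetical data): the sequence
# is exactly rank 1, so the estimate coincides with the scene itself.
scene = np.arange(6, dtype=float).reshape(2, 3) / 10 + 0.1
static_frames = np.stack([scene] * 4)
estimate = rank_one_background(static_frames)
```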

The background estimation by weightless neural network (BEWIS) [26] and self-organizing background subtraction (SOBS) algorithms [27,28,29] involve weightless neural networks, which are used as containers to build a statistical model of the background.

The current top performing algorithms for background reconstruction do not use deep learning techniques, but several papers have proposed to use them for fixed background reconstruction:

Fully-concatenated Flownet (FC-Flownet) [30] is a convolutional network with an architecture similar to a U-net which is used to predict a background from a set of 20 color images in a single inference step. Due to memory restrictions, the images are cut in superposed 64 × 64 patches, and the 20 patches associated with one location are given as input to the convolutional network. The output patches are then aggregated to build the background. The network is trained end-to-end using samples and ground truths coming from 54 different sequences.

Background modeling Unet (BM-Unet) [31] is a background reconstruction model which also uses a U-net network but is trained without any supervision or ground-truth data and can perform both fixed and dynamic background reconstruction. For fixed background reconstruction, it is trained with pairs of random images sampled from one frame sequence. Using the first image, the U-net network predicts a probability distribution over the possible 256 values of each pixel of the output image, and the second image is used as a target.

Deep context prediction (DCP) [32] considers the background reconstruction problem as an inpainting problem: Using an optical flow algorithm, it first computes the motion mask associated with the current frame and removes from this frame the pixels associated with this motion mask. It then uses a multi-scale neural patch synthesis network [33] to fill the holes in the image and obtain a clean background. Other data reconstruction methods using classical matrix completion or exemplar-based approaches are also possible [34,35,36].

We refer to available surveys [37,38] for a more detailed description of related work.

3. Proposed Algorithm for Background Reconstruction

3.1. Motivation

We have noted in the review of previous work the good results of temporal median filtering, despite its simplicity, and observe that the two best unsupervised algorithms for background reconstruction, SPMD and LaBGen-OF, also use some form of temporal median filtering. One can intuitively understand that background reconstruction involves performing some form of averaging of the input frames, and that computing the median will give better results than computing the average of the frames because the median is more robust to outliers.

We note, however, that using median filtering on color images may lead to inconsistencies. Let us for example consider RGB images showing a red background with large green and blue foreground objects. Assume that in the sequence considered, each red background pixel is masked by a green object during 26% of the sequence duration and by a blue object during another 26% of the sequence duration. The red color channel of any pixel will then be equal to zero during 52% of the sequence, and the blue and green channels are also equal to zero during 74% of the sequence. Since each channel is equal to zero during more than half of the sequence, the result of median filtering on such a sequence is a uniform black image, which is clearly not satisfactory.
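The arithmetic of this example can be checked with a few lines of standard-library Python. The sketch below follows one pixel over a hypothetical sequence of 100 frames (48 red background frames, 26 green and 26 blue occlusions); the variable names are illustrative.

```python
from statistics import median

RED, GREEN, BLUE = (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)

# One pixel observed over 100 frames: visible red background in 48 frames,
# masked by a green object in 26 frames and by a blue object in 26 frames.
pixel_over_time = [RED] * 48 + [GREEN] * 26 + [BLUE] * 26

# Fraction of the sequence during which each channel is equal to zero.
zero_fraction = tuple(
    sum(color[c] == 0.0 for color in pixel_over_time) / len(pixel_over_time)
    for c in range(3)
)

# Median computed independently on each color channel.
channelwise_median = tuple(
    median(color[c] for color in pixel_over_time) for c in range(3)
)
```

Here `zero_fraction` is `(0.52, 0.74, 0.74)` and `channelwise_median` is `(0.0, 0.0, 0.0)`, i.e., a black pixel, although black never appears in the sequence.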

One can think that a better method to select the background color of an image from a frame sequence would be first to guess in each frame which pixels are background pixels and then to consider only those pixels for temporal median filtering. However, to be able to guess which pixels are background pixels, we need to have some estimate of the background. The main idea introduced in this paper is that we can successfully build an iterative optimization process for background reconstruction, using the current estimate of the background to guess which pixels are background pixels and then refining the estimate of the background by performing temporal median filtering on those pixels only.

3.2. Bootstrap Weights

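We observe that temporal median filtering can be described as a minimization problem associated with an $L_1$ error loss. More precisely, for a sequence of $N$ color images $x_1, \dots, x_N$ of size $h \times w$, noting $x_{n,c,i,j}$ the value (normalized in the range $[0,1]$) of the pixel associated with the image $x_n$ and the color channel $c$ at position $(i,j)$, with $1 \le i \le h$ and $1 \le j \le w$, the $L_1$ error loss associated with some background reconstruction $\hat{x}$ can be described as

$$L(\hat{x}) = \sum_{n,c,i,j} l_{n,c,i,j}$$

with

$$l_{n,c,i,j} = \left|\hat{x}_{c,i,j} - x_{n,c,i,j}\right|$$

and it is immediate that if we take each $\hat{x}_{c,i,j}$ to be a median of the sequence $x_{1,c,i,j}, \dots, x_{N,c,i,j}$, then we obtain a minimum of this loss function, considering that the derivative of $l_{n,c,i,j}$ with respect to $\hat{x}_{c,i,j}$ is equal to $1$ if $\hat{x}_{c,i,j} > x_{n,c,i,j}$ and $-1$ if $\hat{x}_{c,i,j} < x_{n,c,i,j}$.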
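The link between the median and the absolute error loss can be checked numerically for a single pixel and channel. The sketch below uses hypothetical sample values and compares the loss at the median, at the mean, and on a regular grid of candidate values.

```python
from statistics import median

def l1_loss(candidate, samples):
    """Sum of absolute errors between one candidate value and all samples."""
    return sum(abs(candidate - s) for s in samples)

# Values taken by one pixel/channel over time (hypothetical data):
# three background-like values and two outliers caused by a foreground object.
samples = [0.2, 0.2, 0.25, 0.9, 0.95]

med = median(samples)                  # robust to the two outliers
mean = sum(samples) / len(samples)     # pulled toward the outliers

# Brute-force search of the minimizer on a grid with step 0.01.
grid = [k / 100 for k in range(101)]
best_on_grid = min(grid, key=lambda v: l1_loss(v, samples))
```

With an odd number of samples the minimizer is unique, and the grid search lands on the median value.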

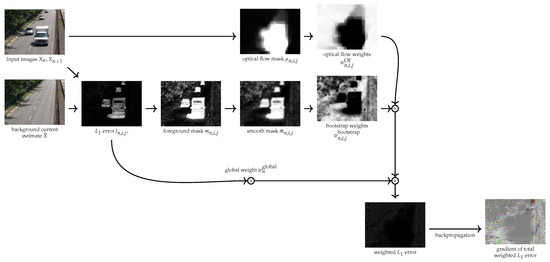

We bootstrap the current estimate of the background to smoothly restrict this loss function to background pixels only. We then give a low weight, called a bootstrap weight, to the pixel-wise error terms associated with foreground pixels in the loss function. These bootstrap weights are computed in the following way (Figure 1):

Figure 1.

Schematic of loss function and gradient computation (Images are normalized in the range [0,1]).

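Let us note $e_{n,i,j}$ the sum, over all the color channels, of the absolute errors at the pixel $(i,j)$ between the predicted image $\hat{x}$ and the input image $x_n$:

$$e_{n,i,j} = \sum_{c} \left|\hat{x}_{c,i,j} - x_{n,c,i,j}\right|$$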

If at least one of the color channels gives a high error, then this error sum is large and we will consider that the corresponding pixel of the image is a foreground pixel. We then build a soft foreground mask for the image using the formula

where the threshold appearing in this formula is some positive hyperparameter, which can be considered as a soft threshold. As a consequence, the mask is close to zero for error values close to zero (background pixels), and close to 1 for error values which are significantly larger than this threshold (foreground pixels).

This mask will, however, be noisy. We then compute a spatially smoothed version of this mask by averaging using a square kernel whose size is proportional to w/r (where w is the image width and r is some integer hyperparameter):

We then finally define the associated pixel-wise bootstrap weights as

where the coefficient appearing in this formula is some positive hyperparameter, which we call the bootstrap coefficient.

For pixels which are considered to be background pixels (smoothed mask value close to 0), this weight will be close to 1 and will not change the pixel-wise loss terms associated with these pixels. However, for pixels which are considered as foreground pixels (smoothed mask value close to 1), this weight will have a very low value, which means that the associated pixel-wise loss terms will get a very low importance in the loss function.
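The chain of operations described in this section (channel-summed error, soft mask, spatial smoothing, bootstrap weight) can be sketched with NumPy as follows. The functional forms used here are assumptions chosen only because they match the behavior described in the text: a `tanh` soft threshold for the mask, an exponential for the weight, a kernel half-size equal to `w // r`, and edge replication at the borders. The parameter names `tau`, `beta` and their default values are hypothetical.

```python
import numpy as np

def box_smooth(mask: np.ndarray, half_size: int) -> np.ndarray:
    """Average over a (2*half_size+1)^2 square window, replicating the edges."""
    padded = np.pad(mask, half_size, mode="edge")
    height, width = mask.shape
    side = 2 * half_size + 1
    out = np.zeros((height, width), dtype=float)
    for di in range(side):
        for dj in range(side):
            out += padded[di:di + height, dj:dj + width]
    return out / side**2

def bootstrap_weights(background, frame, tau=0.25, beta=6.0, r=75):
    """background, frame: (H, W, C) arrays in [0, 1]. Returns (H, W) weights."""
    error = np.abs(background - frame).sum(axis=-1)   # error summed over channels
    soft_mask = np.tanh(error / tau)                  # ASSUMED soft threshold form
    half_size = frame.shape[1] // r                   # ASSUMED kernel size rule
    smooth_mask = box_smooth(soft_mask, half_size)
    return np.exp(-beta * smooth_mask)                # ASSUMED weight form

# Hypothetical 4 x 4 example: a frame identical to the background estimate,
# and a frame that differs everywhere from it.
estimate = np.zeros((4, 4, 3))
weights_same = bootstrap_weights(estimate, estimate.copy(), r=4)
weights_diff = bootstrap_weights(estimate, np.ones((4, 4, 3)), r=4)
```

Under these assumptions, a frame that matches the estimate keeps weights equal to 1, while a frame that differs everywhere gets weights close to `exp(-beta)`.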

3.3. Optical Flow Weights

We have seen that background reconstruction algorithms could be improved by using information provided by optical flow models to remove parts of an image showing moving objects. We use the same approach to improve the loss function. We then define optical flow weights associated with each pixel, which will be close to zero if this pixel appears to be a moving pixel and has to be removed from the loss function computation. These weights are computed in the following way (see Figure 1):

We use an external algorithm (OpenCV implementation of the Dense Inverse Search algorithm [39]) to obtain an estimate of the magnitude of the optical flow associated with each pixel of an image. We chose this algorithm because it is very fast compared to other available optical flow implementations. We first normalize this magnitude with respect to the image width w and then define an optical flow mask using the formula

where the hyperparameter appearing in this formula can also be considered as a threshold. This mask is then equal to 1 for high values of the optical flow, which suggests that the associated pixels show a moving object, and it is close to zero if no motion is detected by the optical flow algorithm at the associated pixel.

The weight associated with this optical flow mask is then defined as

where the coefficient appearing in this formula is another positive hyperparameter. This weight will then be equal to 1 if no motion is detected at the associated pixel, and will take a low value if significant motion is detected, which suggests that the associated pixel is not a background pixel. The optical flow weight is, however, set to 1 for all pixels on short videos (fewer than ten images), considering that optical flows computed from sequences with very low frame rates are not reliable.
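A possible way to turn optical flow magnitudes into weights is sketched below. The OpenCV calls (`cv2.DISOpticalFlow_create` with the FAST preset, then `calc`) are the library's Dense Inverse Search interface. The `tanh` mask, the exponential weight, and the names and default values of `tau_flow` and `alpha` are assumptions made for illustration.

```python
import numpy as np

def dis_flow_magnitude(prev_gray, next_gray):
    """Per-pixel flow magnitude from OpenCV's DIS optical flow (FAST preset).

    prev_gray, next_gray: 8-bit single-channel images of the same size.
    """
    import cv2  # imported lazily so the rest of the sketch runs without OpenCV
    dis = cv2.DISOpticalFlow_create(cv2.DISOPTICAL_FLOW_PRESET_FAST)
    flow = dis.calc(prev_gray, next_gray, None)   # (H, W, 2) displacement field
    return np.linalg.norm(flow, axis=-1)

def optical_flow_weights(flow_magnitude, n_frames, tau_flow=0.01, alpha=3.0):
    """Weights close to 1 for still pixels and low for moving pixels."""
    if n_frames < 10:
        # Short videos: optical flow is considered unreliable, weights are 1.
        return np.ones(flow_magnitude.shape, dtype=float)
    width = flow_magnitude.shape[1]
    normalized = flow_magnitude / width           # normalize by the image width
    flow_mask = np.tanh(normalized / tau_flow)    # ASSUMED soft threshold form
    return np.exp(-alpha * flow_mask)             # ASSUMED weight form

# Hypothetical flow magnitudes (in pixels) for a 3 x 4 image.
still = optical_flow_weights(np.zeros((3, 4)), n_frames=20)
moving = optical_flow_weights(np.full((3, 4), 4.0), n_frames=20)
short_video = optical_flow_weights(np.full((3, 4), 4.0), n_frames=5)
```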

3.4. Abnormal Image Weights

If the number of images in the dataset is large, we can afford to give a low weight to images which appear to be abnormal, for example if the illumination conditions are different on these images compared to the predicted background, or if there are too many pixel errors on the image. We then first compute the average error of the image as

and define a global weight associated with each image as

where the coefficient appearing in this formula is another positive hyperparameter. As a consequence, this weight will be close to zero if the image is globally very different from the current estimate of the background. We use this global weight if the size of the dataset is greater than 10. It should be noted that this weight is not pixel-specific, as opposed to the bootstrap weights and optical flow weights, but is assigned to a complete frame.

3.5. Management of Intermittent Motion

Existing benchmarks for background reconstruction require that objects which remain still for a long time in the sequence be considered as foreground objects if they are moving during some part of the sequence. This challenge is very difficult and is not addressed by the previous weights. In order to handle it, we follow Javed et al. [22,40] and remove from the frame sequence all frames which do not show any motion. More precisely, we first compute the maximum of the optical flow mask values of each image, as defined in the previous section, and remove this image if this maximum is below a threshold, which is another hyperparameter. The motivation for this suppression is that images containing still foreground objects are often motionless images, so that removing them improves the robustness of the proposed model against the intermittent motion challenge. We apply this motionless frame suppression when the number of frames in the sequence is higher than 10, considering, as in the previous section, that removing frames when the number of frames is very low will negatively impact the quality of the results. We note the number of frames after motionless frame suppression.
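The motionless frame suppression can be sketched as a simple filter on the per-frame optical flow masks. The function name, the `threshold` default value and the toy masks below are hypothetical. The sketch does not handle the case where every frame is motionless.

```python
import numpy as np

def suppress_motionless_frames(frames, flow_masks, threshold=0.5, min_frames=10):
    """Keep only the frames whose optical flow mask shows motion somewhere.

    frames: sequence of frames (any objects).
    flow_masks: one 2D array per frame, with values in [0, 1].
    The suppression is applied only when there are more than min_frames frames.
    """
    if len(frames) <= min_frames:
        return list(frames)
    return [
        frame for frame, mask in zip(frames, flow_masks)
        if np.max(mask) >= threshold
    ]

# Hypothetical sequence of 12 frames (identified by their index): even frames
# are motionless, odd frames contain one moving pixel.
frame_ids = list(range(12))
masks = [
    np.zeros((2, 2)) if k % 2 == 0 else np.array([[0.0, 0.9], [0.0, 0.0]])
    for k in frame_ids
]
kept = suppress_motionless_frames(frame_ids, masks)
kept_short = suppress_motionless_frames(frame_ids[:5], masks[:5])
```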

3.6. Statement of the Optimization Problem

Finally, the loss function is adapted using these weights and becomes the following:

We are then interested in solving the following optimization problem:

Considering the dataset of input frames, find a background image such that, when the bootstrap weights and the global weights are considered as constants, the loss function is minimal with respect to this image.

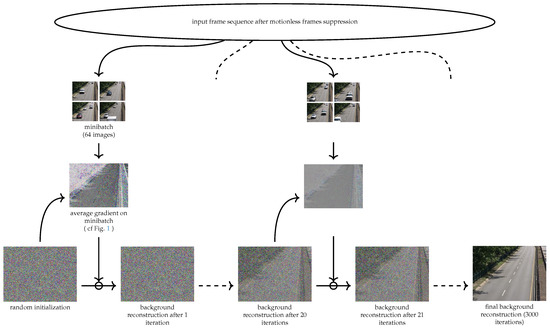

We can find a solution to this problem by performing an iterative computation of the weighted median of the images using the various weights defined in the previous paragraph followed by an update of the weights. We observe, however, that the images produced using this method are not smooth and that additional regularization is necessary. We then propose to use stochastic gradient descent on the loss function using standard deep learning tools. The pixel values are then considered as parameters and optimized using stochastic gradient descent (Figure 2).

Figure 2.

Overview of the stochastic gradient descent optimization process.

It should be noted that performing a stochastic gradient descent on this loss function is not equivalent to minimizing it: During the optimization process, the bootstrap weights and the global weights depend on the current estimation of the background and change; we then call these weights dynamic weights. At each iteration they are, however, considered as fixed, so that we do not compute and use the gradient of the loss function with respect to the value of these weights.
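The role of the frozen dynamic weights can be illustrated with a strongly simplified NumPy sketch. It is not the published implementation: it uses plain minibatch gradient descent instead of Adam, a fixed starting value of 0.5 instead of a random initialization, grayscale pixels, no spatial smoothing, and bootstrap weights only, with an assumed `exp(-beta * tanh(error / tau))` form. All names and values are hypothetical. In a deep learning framework, the same effect would be obtained by excluding the weights from automatic differentiation.

```python
import numpy as np

def frozen_weight_gradient(background, batch, tau=0.25, beta=2.0):
    """Gradient of the weighted absolute error with respect to the background.

    The weights are recomputed from the current estimate but are treated as
    constants: no derivative of the weights is taken.
    """
    diff = background[None, :] - batch                # shape (B, P)
    weights = np.exp(-beta * np.tanh(np.abs(diff) / tau))   # ASSUMED form
    return (weights * np.sign(diff)).mean(axis=0)

def reconstruct(frames, iterations=400, batch_size=16, lr=0.05, seed=0):
    rng = np.random.default_rng(seed)
    background = np.full(frames.shape[1], 0.5)        # fixed start (simplification)
    for it in range(iterations):
        # Learning rate divided by 10 for the last quarter of the iterations.
        step = lr if it < 3 * iterations // 4 else lr / 10
        batch = frames[rng.choice(len(frames), size=batch_size, replace=False)]
        background -= step * frozen_weight_gradient(background, batch)
    return background

# Hypothetical data: 3 pixels, 100 frames, each pixel occluded by a bright
# object (value 0.9) in 30% of the frames.
true_background = np.array([0.2, 0.3, 0.4])
frames = np.tile(true_background, (100, 1))
for p in range(3):
    occluded = (np.arange(100) + 3 * p) % 10 < 3
    frames[occluded, p] = 0.9

estimate = reconstruct(frames)
```

On this easy toy sequence the median would also recover the background; the sketch only shows how the weights are updated at each iteration while being excluded from the gradient.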

4. Evaluation of the Proposed Model

Two public benchmarks are available for the evaluation of fixed background reconstruction models: the SBMnet dataset [41] and the SBI dataset [42]. We first provide a quantitative evaluation of the proposed model on those two datasets. We then perform an ablation study and some computation speed measurements.

4.1. Implementation Details

A desktop computer with an Intel Core i7 7700K@4.2GHz CPU and an NVIDIA RTX 2080 Ti GPU is used for this experiment. The model is implemented in Python using the PyTorch framework and is publicly available on the GitHub platform. We use the Adam optimizer [43] with batch size 64 and an initial learning rate of 0.03, which is reduced by a factor of 10 when 3/4 of the epochs have been computed. The number of epochs depends on the size of the dataset and is adjusted so that the total number of optimization iterations is close to 3000, with a minimum of two epochs. In order to accelerate computations, each frame sequence is fully loaded in the GPU video RAM during the optimization process. A manual hyperparameter search has been performed using the video sequences of the SBI and SBMnet datasets for which a ground truth is available. The hyperparameters have then been set to the following values: . Before starting the optimization, background image pixel color values are initialized with random numbers sampled from a uniform distribution between 0 and 1. The DIS optical flow OpenCV implementation is used with the FAST preset mode. In order to obtain a low gradient when the pixel-wise absolute error is close to zero, we replace the absolute value expression with a smooth loss using a threshold equal to 3 (assuming the pixel values are scaled in the range 0–255). When the absolute error is lower than 3, we replace it with a quadratic expression; otherwise, we replace it with a linear expression.
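Two of these implementation details can be sketched in plain Python. The smooth loss below is a Huber-style expression, which is an assumption: the text only states that a quadratic expression is used below the threshold. The schedule function derives the number of epochs and the per-epoch learning rate from the rules stated above. Function names and the rounding choices are hypothetical.

```python
import math

def smooth_abs(diff, threshold=3 / 255):
    """Smooth replacement for |diff| (ASSUMED Huber-style form).

    Quadratic below the threshold, linear above, continuous at the threshold.
    Pixel values are assumed to be normalized in [0, 1], hence 3 / 255.
    """
    magnitude = abs(diff)
    if magnitude < threshold:
        return magnitude * magnitude / (2 * threshold)
    return magnitude - threshold / 2

def training_schedule(n_frames, batch_size=64, target_iterations=3000,
                      min_epochs=2, base_lr=0.03):
    """Number of epochs and per-epoch learning rates.

    The epoch count is chosen so that the total number of iterations is close
    to target_iterations, with a minimum of min_epochs. The learning rate is
    divided by 10 once 3/4 of the epochs have been computed.
    """
    iterations_per_epoch = max(1, math.ceil(n_frames / batch_size))
    epochs = max(min_epochs, round(target_iterations / iterations_per_epoch))
    decay_epoch = (3 * epochs) // 4
    rates = [base_lr if e < decay_epoch else base_lr / 10 for e in range(epochs)]
    return epochs, rates

epochs_640, rates_640 = training_schedule(640)           # 10 iterations per epoch
epochs_long, rates_long = training_schedule(1_000_000)   # very long sequence
```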

4.2. Evaluation on SBMnet dataset

The SBMnet dataset [41] (http://scenebackgroundmodeling.net/, accessed on 20 November 2021) is composed of 79 sequences, which have been selected to cover a wide range of challenges and are representative of typical indoor and outdoor visual data captured today in surveillance, smart environment, and video database scenarios. The dataset includes the following eight categories with associated challenges: basic, intermittent motion, clutter, jitter, illumination changes, background motion, very long and very short. Although this dataset is freely available on the SBMnet website, ground truth images are publicly available for only 18 frame sequences, either on the SBMnet website or on the SBI dataset website. In order to benchmark a new algorithm, one has to submit the predicted fixed background images associated with each frame sequence to the website, which performs the evaluation of the submitted results.

Six criteria are computed to evaluate the accuracy of background reconstruction:

- Average Gray-level Error (AGE);

- Percentage of Error Pixels (pEPs);

- Percentage of Clustered Error Pixels (pCEPs);

- Multi-Scale Structural Similarity Index (MS-SSIM);

- Peak-Signal-to-Noise-Ratio (PSNR);

- Color image Quality Measure (CQM).

We refer to [41] for the full definition of these criteria. A good background reconstruction should minimize the criteria AGE, pEPs and pCEPs, but maximize the criteria MS-SSIM, PSNR and CQM. We have computed the 79 background images using the proposed algorithm and uploaded the reconstructed backgrounds to the SBMnet website, which provided the evaluation results described in Table 1 and Table 2.
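For readers who want a quick local check on the sequences with available ground truth, the sketch below computes three of these criteria on gray-level images, following their usual definitions. The error threshold of 20 gray levels used for pEPs and the function names are assumptions; [41] and the official evaluation tool remain the reference.

```python
import numpy as np

def age(ground_truth, prediction):
    """Average gray-level error: mean absolute difference (0-255 scale)."""
    diff = ground_truth.astype(float) - prediction.astype(float)
    return float(np.mean(np.abs(diff)))

def peps(ground_truth, prediction, threshold=20):
    """Error pixels: fraction (in [0, 1]) of pixels whose absolute error
    exceeds the threshold (ASSUMED value)."""
    diff = ground_truth.astype(float) - prediction.astype(float)
    return float(np.mean(np.abs(diff) > threshold))

def psnr(ground_truth, prediction):
    """Peak signal-to-noise ratio in dB for 8-bit images."""
    diff = ground_truth.astype(float) - prediction.astype(float)
    mse = float(np.mean(diff ** 2))
    return float("inf") if mse == 0 else 10 * np.log10(255.0 ** 2 / mse)

# Hypothetical 2 x 2 gray-level images.
gt = np.zeros((2, 2), dtype=np.uint8)
pred = np.array([[0, 10], [30, 0]], dtype=np.uint8)

age_value = age(gt, pred)      # (10 + 30) / 4
peps_value = peps(gt, pred)    # one pixel out of four exceeds the threshold
psnr_value = psnr(gt, pred)    # mean squared error is (100 + 900) / 4
```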

Table 1.

Evaluation results per criterion on the SBMnet 2016 dataset. ↓ indicates lower score is better, ↑ indicates higher score is better. Source: SBMnet website http://pione.dinf.usherbrooke.ca/results/294/ (accessed on 20 November 2021).

Table 2.

Evaluation results for the AGE criterion per category on the SBMnet 2016 dataset. Source: SBMnet website http://pione.dinf.usherbrooke.ca/results/294/ (accessed on 20 November 2021).

We provide a comparison of the proposed model with models that are fully unsupervised, i.e., which do not use a supervised segmentation model (such as LaBGen-semantic) and do not require any human supervision. The proposed model, named BB-SGD (background bootstrapping using stochastic gradient descent), obtains a better average score than all referenced unsupervised models on all criteria, as shown in Table 1. Table 2 lists AGE results per category of the SBMnet dataset. It shows that the proposed model obtains better AGE results than all referenced unsupervised models on four categories: basic, clutter, background motion and very short video, with a 15% accuracy improvement on the very short video category compared to the best unsupervised model in this category. This illustrates the efficiency of the bootstrapping mechanism introduced in the proposed model, considering that for these sequences the optical flow weights and global weights are not used and no frame is suppressed.

4.3. Evaluation on SBI Dataset

The SBI dataset [42] (https://sbmi2015.na.icar.cnr.it/SBIdataset.html, accessed on 20 November 2021) is composed of 14 image sequences. Ground truth backgrounds are available for all sequences. We use the Matlab tool available on the SBI website for fair comparison with other models, but do not report the CQM results considering that other sequences were evaluated with a Matlab tool which included a bug for the CQM computation, as indicated on the SBI website. We run the proposed model on the SBI dataset using the same hyperparameters as those used for the SBMnet dataset. The results of this evaluation are listed in Table 3 and show that the proposed model obtains better results than all other compared unsupervised models for the evaluation criteria AGE, MS-SSIM and PSNR, and is ranked second for the criteria pEPs and pCEPs.

Table 3.

Evaluation results per criterion on the SBI dataset. ↓ indicates lower score is better, ↑ indicates higher score is better.

4.4. Ablation Study

In order to check the contribution of the various weights described in this paper, we provide results obtained using truncated versions of the proposed model while keeping the hyperparameters fixed: Version 0 does not use any weight and does not remove motionless frames, and is then equivalent to temporal median filtering. Version 1 uses only the optical flow weights and does not remove motionless frames. Version 2 uses both optical flow weights and global weights and does not remove motionless frames. Version 3 uses bootstrap weights, global weights and optical flow weights, but does not remove motionless frames. The AGE scores obtained by these truncated models on the 18 videos of the SBMnet dataset for which a ground truth is available, using the evaluation tool available on the SBMnet website, are provided in Table 4. They show that temporal median filtering (v0) gives the best results for five scenes, confirming that this is a good baseline. Introducing optical flow weights (v1) improves average AGE scores on scenes of the “clutter” category, but has no beneficial impact on other categories. Adding global weights (v2) has a positive impact on the “illumination change” category, which was expected, but also on the “clutter” category. Adding bootstrap weights (v3) has an impact on the “clutter” category, but also on the “short video” category. Finally, removing motionless frames, which leads to the full model, has a positive impact on the “intermittent motion” category, which was expected, but also on the scene “boulevardJam” of the “clutter” category, which also shows some intermittent motions.

Table 4.

AGE scores obtained using various truncated versions of the algorithm on 18 SBMnet sequences where a ground truth background is available.

4.5. Computation Time

We have performed computation time measurements and tested the impact of reducing the number of optimization iterations, while keeping all other parameters frozen, excluding the learning rate. The results of these experiments are provided in Table 5. The total computation times necessary to reconstruct the 79 backgrounds from the associated video sequences of the SBMnet dataset are estimated by performing a sequential computation for all the videos, so that the computation times indicated in this table are the sum of the computation times of each of the 79 videos. If we divide the number of frames of the full dataset (73,355) by the total computation time of the proposed model, which is 1409 s, we obtain an average of 52 frames per second (fps). Table 5 shows, however, that the number of optimization iterations can be reduced from 3000 to 250, increasing the average speed to 187 fps, without major impact on the overall accuracy of the algorithm. The computation times with such a low number of iterations are mainly associated with optical flow computations and JPEG image decoding.
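The average speed quoted above follows from a simple division, reproduced here. The total time implied by the 187 fps figure is derived from the numbers in the text and is not a measured value; variable names are illustrative.

```python
total_frames = 73_355        # number of frames of the full SBMnet dataset
total_seconds = 1409         # total computation time with 3000 iterations

average_fps = total_frames / total_seconds

# Total time implied by the reported 187 fps with 250 iterations
# (derived from the text, not a measured value).
implied_seconds_250_iterations = total_frames / 187
```

`average_fps` rounds to 52, and the implied total time at 187 fps is a little above 392 s.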

Table 5.

Impact of reducing the number of iterations on average AGE score and computation time.

Although the proposed model requires a GPU, these computation time measurements compare very favorably with the processing speeds reported by the authors of other models. The average computation speed of LaBGen-OF is estimated at 5 fps in [4]. The computation speed of SPMD is estimated in [3] at 1.6 fps for 640 × 480 images and 22.8 fps for 200 × 144 images using an Intel Core i7 2600@3.4GHz CPU.

The asymptotic time complexity of the proposed algorithm is $O(n^2)$, where $n$ is the number of pixels of an image. It does not depend on the number of frames of the input frame sequence since a maximum of 192,000 images are sampled from the input sequence (3000 minibatches of 64 images) and optical flow computations can be restricted to those images only. The quadratic expression is a consequence of Equation (5), which involves a kernel which has a size proportional to the size of the images for r fixed.
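The quadratic dependence can be made explicit with a short derivation, assuming a direct (non-separable) evaluation of the averaging kernel and a fixed image aspect ratio. The symbol $h$ for the image height is introduced here for illustration.

```latex
\begin{aligned}
\text{kernel side} &\propto \frac{w}{r},
\qquad
\text{kernel area} \propto \frac{w^2}{r^2} \propto \frac{n}{r^2}
\quad (\text{fixed aspect ratio, } n = h\,w \propto w^2),\\[4pt]
\text{cost per image} &\propto n \cdot \frac{n}{r^2} = \frac{n^2}{r^2},\\[4pt]
\text{total cost} &\le 3000 \times 64 \times O\!\left(\frac{n^2}{r^2}\right)
= 192{,}000 \times O\!\left(\frac{n^2}{r^2}\right)
= O(n^2) \quad \text{for fixed } r.
\end{aligned}
```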

4.6. Image Samples

Figure 3 and Figure 4 show, for qualitative evaluation, some examples of background reconstruction for sequences of the SBMnet dataset, with the associated ground truth when it is available and a comparison with the results obtained with LaBGen-OF and SPMD. The bottom five rows of Figure 4 show some examples of poor quality reconstructions suffering from challenging issues such as intermittent motion, headlights and moving trees.

Figure 3.

Examples of background reconstruction using the proposed model and comparison with SPMD and LaBGen-OF.

Figure 4.

Examples of background reconstruction. The bottom five rows show examples of low quality reconstructions.

4.7. Hyperparameter Tuning

The proposed model involves a significant number of hyperparameters. Although the default hyperparameters proposed in Section 4 allow us to obtain state-of-the-art performances on existing benchmarks, these hyperparameters may be fine-tuned to improve results on specific situations or use cases. We provide below some indications on the influence of the main hyperparameters:

- Soft foreground threshold: the soft threshold used for computing the soft foreground mask should be decreased for frame sequences with very low average illumination.

- Soft optical flow threshold: the soft threshold used for computing optical flow masks should be decreased for video sequences with high frame rates and increased for sequences with low frame rates, since optical flow values are lower for high frame rate sequences and higher for low frame rate sequences.

- Optical flow weight: as shown in the ablation study, the use of optical flow weights is only necessary for highly occluded scenes. More precise results may be obtained by setting this parameter to a lower value if a high level of occlusion is not expected.

- r: the value of r is associated with the expected sizes of the foreground objects. If the scenes are expected to contain only small foreground objects, this value may be increased on high-definition images for faster training.

- Bootstrap coefficient: a lower value leads to faster training, but decreases the ability to handle occlusions. A higher value may lead to slower or unstable training and artifacts in the final image.

- Global weight: increasing this value may be useful to handle low-intensity illumination changes.
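The guidance above can be summarized as a small rule table. The following is a minimal sketch, not part of the proposed implementation: the class, the field names and the parameter keys are all hypothetical, and the function only reports the direction of each adjustment, since suitable magnitudes depend on the use case.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class SequenceTraits:
    """Coarse description of an input sequence (all fields are hypothetical)."""
    very_low_illumination: bool = False
    frame_rate: str = "default"           # "low", "default" or "high"
    heavy_occlusion_expected: bool = True
    only_small_objects_hd: bool = False   # small foreground objects on HD images
    low_intensity_illumination_changes: bool = False
    prioritize_speed: bool = False


def suggest_adjustments(traits: SequenceTraits) -> Dict[str, str]:
    """Return 'decrease', 'increase' or 'keep' for each main hyperparameter."""
    advice = {name: "keep" for name in (
        "foreground_soft_threshold",
        "optical_flow_soft_threshold",
        "optical_flow_weight",
        "r",
        "bootstrap_coefficient",
        "global_weight",
    )}
    if traits.very_low_illumination:
        advice["foreground_soft_threshold"] = "decrease"
    if traits.frame_rate == "high":
        advice["optical_flow_soft_threshold"] = "decrease"
    elif traits.frame_rate == "low":
        advice["optical_flow_soft_threshold"] = "increase"
    if not traits.heavy_occlusion_expected:
        # Optical flow weights matter mostly for highly occluded scenes.
        advice["optical_flow_weight"] = "decrease"
        if traits.prioritize_speed:
            # A lower bootstrap coefficient trains faster but handles
            # occlusions less well, so only lower it when occlusion is rare.
            advice["bootstrap_coefficient"] = "decrease"
    if traits.only_small_objects_hd:
        advice["r"] = "increase"
    if traits.low_intensity_illumination_changes:
        advice["global_weight"] = "increase"
    return advice


if __name__ == "__main__":
    traits = SequenceTraits(frame_rate="low", heavy_occlusion_expected=False)
    for name, direction in suggest_adjustments(traits).items():
        print(f"{name}: {direction}")
```

Returning directions rather than numeric values keeps the sketch faithful to the qualitative indications given above, which do not prescribe specific magnitudes.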

5. Conclusions

We have presented a new algorithm for fixed background reconstruction using stochastic gradient descent which is simple, fast when run on a GPU, and more accurate than the current state of the art. This shows that, with modern hardware, stochastic gradient descent can be used efficiently for real-time applications, and that the tools and frameworks recently developed for deep learning and neural networks can also be useful for other optimization problems, provided that the loss function is properly designed. Future work includes using the same approach to handle the tasks of dynamic background reconstruction and change detection.

Author Contributions

Conceptualization, B.S.; methodology, B.S.; software, B.S.; validation, B.S.; formal analysis, B.S.; investigation; writing—original draft preparation, B.S.; writing—review and editing, A.d.L.F.; visualization, B.S.; supervision, A.d.L.F.; project administration, A.d.L.F.; resources, A.d.L.F., funding acquisition, A.d.L.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the French National Research Agency (ANR).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The SBMnet dataset is available at the following web address: http://scenebackgroundmodeling.net/ (accessed on 20 November 2021). The SBI dataset is available at the following web address: https://sbmi2015.na.icar.cnr.it/SBIdataset.html (accessed on 20 November 2021).

Conflicts of Interest

The authors declare no conflict of interest. The funder had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

References

1. Piccardi, M. Background subtraction techniques: A review. In Proceedings of the 2004 IEEE International Conference on Systems, Man and Cybernetics, The Hague, The Netherlands, 10–13 October 2004; Volume 4, pp. 3099–3104.

2. Liu, W.; Cai, Y.; Zhang, M.; Li, H.; Gu, H. Scene background estimation based on temporal median filter with Gaussian filtering. In Proceedings of the 2016 23rd International Conference on Pattern Recognition (ICPR), Cancun, Mexico, 4–8 December 2016; pp. 132–136.

3. Xu, Z.; Min, B.; Cheung, R.C. A robust background initialization algorithm with superpixel motion detection. Signal Process. Image Commun. 2019, 71, 1–12.

4. Laugraud, B.; Van Droogenbroeck, M. Is a memoryless motion detection truly relevant for background generation with labgen. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2017; pp. 443–454.

5. Djerida, A.; Zhao, Z.; Zhao, J. Robust background generation based on an effective frames selection method and an efficient background estimation procedure (FSBE). Signal Process. Image Commun. 2019, 78, 21–31.

6. Achanta, R.; Shaji, A.; Smith, K.; Lucchi, A.; Fua, P.; Süsstrunk, S. SLIC superpixels compared to state-of-the-art superpixel methods. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2274–2281.

7. Zhou, W.; Deng, Y.; Peng, B.; Liang, D.; Kaneko, S. Co-occurrence background model with superpixels for robust background initialization. arXiv 2020, arXiv:2003.12931.

8. Laugraud, B.; Piérard, S.; Van Droogenbroeck, M. LaBGen: A method based on motion detection for generating the background of a scene. Pattern Recognit. Lett. 2017, 96, 12–21.

9. Laugraud, B.; Piérard, S.; Van Droogenbroeck, M. LaBGen-P: A pixel-level stationary background generation method based on LaBGen. In Proceedings of the International Conference on Pattern Recognition, Cancun, Mexico, 4–8 December 2016; pp. 107–113.

10. Laugraud, B.; Piérard, S.; Van Droogenbroeck, M. Labgen-p-semantic: A first step for leveraging semantic segmentation in background generation. J. Imaging 2018, 4, 86.

11. Yu, L.; Guo, W. A Robust Background Initialization Method Based on Stable Image Patches. In Proceedings of the 2018 Chinese Automation Congress (CAC 2018), Xi’an, China, 30 November–2 December 2018; pp. 980–984.

12. Cohen, S. Background estimation as a labeling problem. In Proceedings of the Tenth IEEE International Conference on Computer Vision (ICCV’05), Beijing, China, 17–21 October 2005; Volume 1, pp. 1034–1041.

13. Xu, X.; Huang, T.S. A loopy belief propagation approach for robust background estimation. In Proceedings of the 26th IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Anchorage, AK, USA, 23–28 June 2008.

14. Agarwala, A.; Dontcheva, M.; Agrawala, M.; Drucker, S.; Colburn, A.; Curless, B.; Salesin, D.; Cohen, M. Interactive digital photomontage. In ACM SIGGRAPH 2004 Papers, SIGGRAPH 2004; Association of Computing Machinery: New York, NY, USA, 2004; pp. 294–302.

15. Mseddi, W.S.; Jmal, M.; Attia, R. Real-time scene background initialization based on spatio-temporal neighborhood exploration. Multimed. Tools Appl. 2019, 78, 7289–7319.

16. Baltieri, D.; Vezzani, R.; Cucchiara, R. Fast background initialization with recursive Hadamard transform. In Proceedings of the IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS 2010), Boston, MA, USA, 29 August–1 September 2010; pp. 165–171.

17. Colombari, A.; Fusiello, A. Patch-based background initialization in heavily cluttered video. IEEE Trans. Image Process. 2010, 19, 926–933.

18. Hsiao, H.H.; Leou, J.J. Background initialization and foreground segmentation for bootstrapping video sequences. EURASIP J. Image Video Process. 2013, 2013, 12.

19. Lin, H.H.; Liu, T.L.; Chuang, J.H. Learning a scene background model via classification. IEEE Trans. Signal Process. 2009, 57, 1641–1654.

20. Ortego, D.; SanMiguel, J.C.; Martínez, J.M. Rejection based multipath reconstruction for background estimation in video sequences with stationary objects. Comput. Vis. Image Underst. 2016, 147, 23–37.

21. Sanderson, C.; Reddy, V.; Lovell, B.C. A low-complexity algorithm for static background estimation from cluttered image sequences in surveillance contexts. EURASIP J. Image Video Process. 2011.

22. Javed, S.; Mahmood, A.; Bouwmans, T.; Jung, S.K. Background-Foreground Modeling Based on Spatiotemporal Sparse Subspace Clustering. IEEE Trans. Image Process. 2017, 26, 5840–5854.

23. Candès, E.J.; Li, X.; Ma, Y.; Wright, J. Robust principal component analysis? J. ACM 2011, 58, 1–37.

24. Kajo, I.; Kamel, N.; Ruichek, Y.; Malik, A.S. SVD-Based Tensor-Completion Technique for Background Initialization. IEEE Trans. Image Process. 2018, 27, 3114–3126.

25. Kajo, I.; Kamel, N.; Ruichek, Y. Self-Motion-Assisted Tensor Completion Method for Background Initialization in Complex Video Sequences. IEEE Trans. Image Process. 2020, 29, 1915–1928.

26. De Gregorio, M.; Giordano, M. Background modeling by weightless neural networks. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2015; Volume 9281, pp. 493–501.

27. Maddalena, L.; Petrosino, A. A self-organizing approach to background subtraction for visual surveillance applications. IEEE Trans. Image Process. 2008, 17, 1168–1177.

28. Maddalena, L.; Petrosino, A. Extracting a background image by a multi-modal scene background model. In Proceedings of the 2016 23rd International Conference on Pattern Recognition (ICPR), Cancun, Mexico, 4–8 December 2016; pp. 143–148.

29. Maddalena, L.; Petrosino, A. The SOBS algorithm: What are the limits? In Proceedings of the 2012 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Providence, RI, USA, 16–21 June 2012; pp. 21–26.

30. Halfaoui, I.; Bouzaraa, F.; Urfalioglu, O. CNN-based initial background estimation. In Proceedings of the 2016 23rd International Conference on Pattern Recognition (ICPR), Cancun, Mexico, 4–8 December 2016; pp. 101–106.

31. Tao, Y.; Palasek, P.; Ling, Z.; Patras, I. Background modelling based on generative unet. In Proceedings of the 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS 2017), Lecce, Italy, 29 August–1 September 2017.

32. Sultana, M.; Mahmood, A.; Javed, S.; Jung, S.K. Unsupervised deep context prediction for background estimation and foreground segmentation. Mach. Vis. Appl. 2019, 30, 375–395.

33. Yang, C.; Lu, X.; Lin, Z.; Shechtman, E.; Wang, O.; Li, H. High-resolution image inpainting using multi-scale neural patch synthesis. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2017), Honolulu, HI, USA, 21–26 July 2017; Volume 2017, pp. 4076–4084.

34. Colombari, A.; Cristani, M.; Murino, V.; Fusiello, A. Exemplar-based background model initialization. In Proceedings of the Third ACM International Workshop on Video Surveillance & Sensor Networks, Singapore, 11 November 2005.

35. Sobral, A.; Bouwmans, T.; Zahzah, E.H. Comparison of matrix completion algorithms for background initialization in videos. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2015; Volume 9281, pp. 510–518.

36. Sobral, A.; Zahzah, E.H. Matrix and tensor completion algorithms for background model initialization: A comparative evaluation. Pattern Recognit. Lett. 2017, 96, 22–33.

37. Bouwmans, T.; Maddalena, L.; Petrosino, A. Scene background initialization: A taxonomy. Pattern Recognit. Lett. 2017, 96, 3–11.

38. Bouwmans, T.; Javed, S.; Sultana, M.; Jung, S.K. Deep neural network concepts for background subtraction: A systematic review and comparative evaluation. arXiv 2019, arXiv:1811.05255.

39. Kroeger, T.; Timofte, R.; Dai, D.; Van Gool, L. Fast optical flow using dense inverse search. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2016; pp. 471–488.

40. Javed, S.; Jung, S.K.; Mahmood, A.; Bouwmans, T. Motion-Aware Graph Regularized RPCA for background modeling of complex scenes. In Proceedings of the International Conference on Pattern Recognition, Cancun, Mexico, 4–8 December 2016; pp. 120–125.

41. Jodoin, P.M.; Maddalena, L.; Petrosino, A.; Wang, Y. Extensive Benchmark and Survey of Modeling Methods for Scene Background Initialization. IEEE Trans. Image Process. 2017, 26, 5244–5256.

42. Maddalena, L.; Petrosino, A. Towards benchmarking scene background initialization. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2015; Volume 9281, pp. 469–476.

43. Kingma, D.P.; Ba, J.L. Adam: A method for stochastic optimization. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015, Conference Track Proceedings, San Diego, CA, USA, 7–9 May 2015; pp. 1–15.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).