Abstract

The increased sensitivity of modern hyperspectral line-scanning systems has led to the development of imaging systems that can acquire each line of hyperspectral pixels at very high data rates (in the 200–400 Hz range). These data acquisition rates present an opportunity to acquire full hyperspectral scenes at rapid rates, enabling the use of traditional push-broom imaging systems as low-rate video hyperspectral imaging systems. This paper provides an overview of the design of an integrated system that produces low-rate video hyperspectral image sequences by merging a hyperspectral line scanner, operating in the visible and near infra-red, with a high-speed pan-tilt system and an integrated IMU-GPS that provides system pointing. The integrated unit is operated from atop a telescopic mast, which also allows imaging of the same surface area or objects from multiple view zenith directions, useful for bi-directional reflectance data acquisition and analysis. The telescopic mast platform also enables stereo hyperspectral image acquisition and, therefore, the ability to construct a digital elevation model of the surface. Imaging near the shoreline in a coastal setting, we provide an example of a hyperspectral imagery time series acquired during a field experiment in July 2017 with our integrated system, which produced hyperspectral image sequences with 371 spectral bands, spatial dimensions of 1600 × 212, and 16 bits per pixel, every 0.67 s. A second example time series, acquired during a rooftop experiment conducted on the Rochester Institute of Technology campus in August 2017, illustrates a second application, moving vehicle imaging, with 371 spectral bands, 16-bit dynamic range, and 1600 × 300 spatial dimensions every second.

1. Introduction

Hyperspectral imaging has been a powerful tool for identifying the composition of materials in scene pixels. Over the years, a large number of applications have been considered, ranging from environmental remote sensing to identification of man-made objects [1,2,3,4,5,6,7,8,9,10]. Some applications involve dynamic scenes which naturally would be well addressed by hyperspectral imaging systems operated at very high data rates. For example, coastal regions with rapidly changing conditions due to the persistent action of tides provide an example of a dynamic landscape where both the water and the land near shore change from moment to moment. Similarly, imaging of moving vehicles provides a challenging but different set of demands which would benefit from a system which can image rapidly.

The coastal zone, in particular, offers a range of important applications where hyperspectral imaging at video rates can have an impact. A wide variety of imaging systems have been used to study near-shore dynamics [11,12,13]. Considerable effort also has been made to develop hydrodynamic models that attempt to capture the dynamics of flowing sediment in the littoral zone [14], and models of flowing sediment are critical to understanding erosion and accretion processes. At the shoreline, modeling sediment transport and in particular accurately characterizing frictional effects is challenging due to the complicated dynamics as waves break on shore and then retreat [15].

Imaging of the coastal zone has taken many forms. For example, multi-spectral and hyperspectral imaging systems have been used to characterize in-water constituents, bottom type, and bathymetry using radiative transfer models [7,16]. In addition, video imaging (monochromatic and 3-band multi-spectral) has been used to estimate flow of the water column and its constituents through particle imaging velocimetry (PIV) [17,18,19]. A limitation of these past approaches is that traditional airborne and satellite remote sensing, while providing important details about sediment concentrations near shore, has produced essentially an instantaneous look at what is in fact a dynamical system. At the same time, while video systems have been used to image the water column and model its flow, the limited number of bands has meant that little information is available from these systems regarding in-water constituents or bottom properties.

Relatively recently, the commercial marketplace has begun to deliver sensors which are advancing toward the long-term goal of high-frame-rate hyperspectral imagery. Several different approaches have been taken, which include the use of so-called “snapshot” imaging systems [20,21]. Some hyperspectral imaging systems that fall into this category use a Fabry–Perot design [22]. However, the signal-to-noise ratio (SNR) that has been achieved by existing systems or those under development [23] is typically lower than that obtained with conventional hyperspectral imaging systems. In some cases, other trade-offs must be made to obtain comparable performance, such as using fewer spectral bands or spatial pixels. Recently reported results using a snapshot imaging system based on a Fabry–Perot design indicate other issues such as mis-registration of band images during airborne data acquisition, since whole band images are acquired sequentially in time [24]; this same system delivers image cubes that have 1025 × 648 spatial pixels with only 23 spectral bands and 12 bits per pixel acquired within 0.76 s, meaning that the data volume recorded per cube is only about 9% of that achieved below by our approach in a comparable time (0.67 s). For our applications and the scientific goals described later in this Section, the 12-bit dynamic range found in the system described in [24] and in other snapshot hyperspectral imaging systems [25] is too limited, and this is one of the motivating factors for our having designed an overall system that uses a hyperspectral line scanner with 16-bit dynamic range. On the other hand, progress has been made in co-registration of the mis-aligned band images captured via snapshot hyperspectral imaging, with one recent work demonstrating mis-registration errors of ≤0.5 pixels [26]. Some designs have included much smaller conventional hyperspectral imaging arrays that are then resampled to a panchromatic image acquired simultaneously [27]. Of course, the potential limitation for these systems is that they may not produce hyperspectral image sequences that are truly representative of what would be recorded in an actual full-resolution imaging spectrometer.
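The data-volume comparison quoted above can be checked with simple arithmetic. The following is a minimal sketch that uses only the cube dimensions, bit depths, and acquisition times stated in the text; the function name `cube_bits` is introduced here purely for illustration.

```python
def cube_bits(n_across, n_along, n_bands, bits_per_sample):
    """Raw size of one hyperspectral image cube, in bits."""
    return n_across * n_along * n_bands * bits_per_sample


# Snapshot system reported in [24]: 1025 x 648 pixels, 23 bands, 12 bits, 0.76 s.
snapshot_bits = cube_bits(1025, 648, 23, 12)

# Line-scanner system described in this paper: 1600 x 212 pixels, 371 bands,
# 16 bits, 0.67 s.
scanner_bits = cube_bits(1600, 212, 371, 16)

# Ratio of raw volume per cube, and ratio of raw data rate (volume / time).
volume_ratio = snapshot_bits / scanner_bits
rate_ratio = (snapshot_bits / 0.76) / (scanner_bits / 0.67)

print(f"volume per cube: {volume_ratio:.1%} of the line-scanner cube")
print(f"data rate:       {rate_ratio:.1%} of the line-scanner rate")
```

Under these numbers the per-cube volume ratio comes out at roughly 9%, and the ratio of data rates is slightly lower because the snapshot cube also takes slightly longer to acquire.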

At the same time, among conventional imaging spectrometer designs, the commercial marketplace, driven by consumer demand for portable imaging technologies as well as unmanned aerial systems (UAS) in a number of important commercial application areas, has led to cost-effective improvements to spectrographs with progressively greater sensitivity, and this in combination with improvements to data capture capabilities have together led to hyperspectral imaging systems that can frame at high rates while maintaining high data quality (low aberration) as well as excellent spectral and spatial resolution [28,29]. The current generation of conventional imaging systems requires shorter integration times, and therefore can record a line of hyperspectral pixels at much higher rates, in the 200–400 Hz range. Most of these spectrometers are incorporated in systems that operate as line scanners. The hyperspectral line-scanner incorporated in the system described in this paper is an example of a system operating with data rates in this range [30]. Line scanners such as this have been the norm in so called “push-broom” imaging system design, used in hyperspectral imaging from space- and air-borne systems [31,32,33,34,35,36,37,38,39], where the motion of the platform produces one spatial dimension, the along-track spatial dimension, of the image data cube.

In developing a system such as the one described in this paper, we had several specific objectives. For coastal applications, our objectives included: (1) to be able to acquire short-time-interval hyperspectral imagery time series to support long-term goals of modeling both dynamics of the near-shore water column, including the mapping of in-water constituents (suspended sediments, colored dissolved organic matter (CDOM), chlorophyll, etc.), and their transport; (2) to capture near-shore land characteristics (sediments and vegetation) and in particular change in sediments on short time scales due to the influence of waves and tides; (3) more broadly, to be able to image from a variety of geometries from the same location in order to obtain multi-view imagery from samples of the bi-directional reflectance distribution function (BRDF) for use in retrieval of geophysical parameters of the surface through inversion of radiative transfer models [40] and for construction of digital surface models (DSM) to enhance derived products and contribute to validation; (4) to image at very fine-scale spatial resolutions (mm to cm in the near range) in order to derive water-column and land surface products as just described on scales where variation might occur and to consider how products derived at these resolutions then scale up to more traditional scales so often used in remote sensing from airborne and satellite platforms, where resolutions have often been measured in meters, tens of meters, or greater; and (5) to acquire imagery for all of these purposes with a hyperspectral imager with sufficient dynamic range that retrieval in both the water column and on land would be possible.

Our objectives for moving vehicle applications overlap a number of those just described for the water column, especially goals (1), (3) and (5) listed above. Our objectives here were: (a) to obtain short-time interval hyperspectral imagery, which is critical to identification and tracking of moving vehicles while minimizing distortion due to vehicle movement; (b) to be able to characterize BRDF effects for moving vehicles, and (c) to ensure that shadows due to occlusions and nearby structures in the vicinity of moving vehicles could be better characterized.

Our approach in this paper uses the very high data rates found in modern hyperspectral line scanners to achieve a low-rate hyperspectral video acquisition system. Our overall system design incorporates a modern hyperspectral imaging spectrometer integrated into a high-speed pan-tilt system with an onboard Inertial Measurement Unit Global Positioning System (IMU-GPS) for pointing and data time synchronization. In field settings, the system is deployed from a telescopic mast, meeting our objectives (3) and (4). By combining these components into one integrated system, we describe how a time sequence of hyperspectral images can be acquired at ∼1.5 Hz, thus operating as a low-rate hyperspectral video of dynamic scenes and satisfying objectives (1), (2), and (a). In order to meet objectives (5) and (c) above, the imaging system that we selected had 16-bit dynamic range. Traditional video systems have been used to examine coastal regions in the past; however, these have been primarily monochromatic [15] or multi-spectral imaging systems [17] with a very limited number of spectral bands (typically 3 bands). These systems provide a more limited understanding of the dynamics of the littoral zone and have been primarily used to estimate current flow vectors. Similarly, previous studies have recognized the potential of spectral information to improve persistent vehicle tracking, but most tracking studies have used panchromatic or RGB imaging due to the cost and availability of spectral imaging equipment [41,42,43,44,45,46,47].

2. Approach

2.1. Low-Rate Hyperspectral Video System

At the heart of our approach is a state-of-the-art Headwall micro High Efficiency (HE) Hyperspec [30]. This system is advertised to achieve “frame rates” of up to 250 Hz. Here the term “frame rate” refers to the rate at which a line of hyperspectral pixels can be acquired and stored in a data capture unit. Our Headwall micro HE Hyperspec E-Series is a hyperspectral line scanner with 1600 across-track spatial pixels and 371 spectral pixels, with 16-bit dynamic range. Headwall currently manufactures both visible and near infrared (VNIR) as well as short-wave infrared (SWIR) versions of the Hyperspec. This paper describes an overall system design in which a VNIR Hyperspec is the imaging unit of the system.

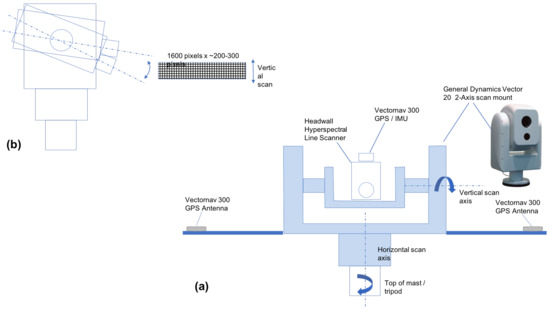

Our design integrates (Figure 1) a Headwall Hyperspec into a high-speed maritime-rated General Dynamics Vector 20 pan-tilt unit [48]. Along-track motion of the Headwall Hyperspec line-scanner is accomplished by nodding of the pan-tilt unit. A Vectornav VN-300 IMU-GPS [49] is also integrated to provide pointing information for the system as well as GPS time-stamps for acquired hyperspectral data. In field settings, we mount the integrated Headwall Hyperspec and General Dynamics pan-tilt and the Headwall compact data unit atop a BlueSky AL-3 telescopic mast [50], which can raise the system from 1.5 to 15 m above the ground. Integration of the Hyperspec, General Dynamics pan-tilt, and Vectornav VN-300 GPS-IMU was accomplished by Headwall under contract to RIT, and under the same contract, Headwall modified their Hyperspec data acquisition software to meet our RIT data acquisition specifications. The key data acquisition features allow direct user control of camera parameters such as integration time as well as rates of azimuthal slewing and nodding in the zenith direction of the pan-tilt system. Additional engineering, including development of a custom mounting plate for the General Dynamics pan-tilt containing the Headwall Hyperspec and the Vectornav GPS-IMU components, as well as development of a field-portable power supply to provide power to all components, was undertaken at RIT to further integrate the Headwall/General-Dynamics/Vectornav configuration onto the BlueSky AL-3 telescopic mast to make the final configuration field-ready.

Figure 1.

Hyperspectral video imaging concept. (a) Headwall Hyperspec HE E-Series hyperspectral line scanner and Vectornav 300 GPS/IMU integrated into the General Dynamics Vector 20 high-speed pan-tilt unit. (b) Nodding motion of the pan-tilt provides the along-track motion normally produced by movement of an aircraft when these types of imaging systems are used in an airborne platform.

The control software allows a variety of scan sequences to be implemented. This includes nodding at the same azimuthal orientation, typical for hyperspectral video modes, where bi-directional scanning is used to maximize hyperspectral data acquisition rates, as well as scanning sequences that step in azimuth between image frames, in combination with the normal zenith nodding mode used to produce the along track motion for each full hyperspectral image frame.
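Because each image frame is built line by line during a nod, the time per hyperspectral cube is set by the number of lines per frame and the line rate. The sketch below illustrates this relationship using the frame sizes and frame intervals quoted in the abstract; the function names and the 10-degree scan extent are hypothetical values chosen for illustration and are not taken from the system's control software.

```python
def cube_period_s(n_lines, line_rate_hz):
    """Time needed to sweep one full image frame of n_lines scan lines."""
    return n_lines / line_rate_hz


def implied_line_rate_hz(n_lines, period_s):
    """Line rate implied by a frame of n_lines acquired every period_s seconds."""
    return n_lines / period_s


def nod_rate_deg_per_s(scan_extent_deg, n_lines, line_rate_hz):
    """Angular nodding rate needed to cover scan_extent_deg in one frame."""
    return scan_extent_deg / cube_period_s(n_lines, line_rate_hz)


# Frame sizes and intervals quoted in the abstract.
surf_zone_rate = implied_line_rate_hz(212, 0.67)  # coastal time series
vehicle_rate = implied_line_rate_hz(300, 1.0)     # rooftop time series

# Hypothetical 10-degree nod extent (an assumption, for illustration only).
example_nod_rate = nod_rate_deg_per_s(10.0, 212, surf_zone_rate)

print(f"implied line rate, surf zone: {surf_zone_rate:.0f} Hz")
print(f"implied line rate, vehicles:  {vehicle_rate:.0f} Hz")
print(f"nod rate for a 10 deg sweep:  {example_nod_rate:.1f} deg/s")
```

With bi-directional scanning, consecutive frames are acquired on alternating nod directions, so no time is spent returning to a start position; this sketch ignores turnaround time at the ends of each nod.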

Our current instrument configuration incorporates a 12 mm lens on the Headwall Hyperspec imaging system. When operated from our telescopic mast with this lens, the Headwall system provides very fine scale hyperspectral imagery with a GSD in the millimeter to centimeter range. A table of GSD values obtainable with our system at various mast heights and distances from the mast appears in Table 1.

Table 1.

GSD (m).
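As a rough guide to how GSD varies with mast height and ground distance, the following sketch uses simple flat-ground geometry. The 12 mm focal length comes from the text; the detector pixel pitch of 7.4 µm is an assumed placeholder, since the pitch is not stated here, and the function names are introduced for illustration. The values it prints are therefore illustrative only and are not a reproduction of Table 1.

```python
import math

FOCAL_LENGTH_M = 12e-3   # 12 mm lens, as stated in the text
PIXEL_PITCH_M = 7.4e-6   # ASSUMPTION: placeholder detector pixel pitch


def slant_range_m(mast_height_m, ground_distance_m):
    """Distance from the sensor to a ground point on flat terrain."""
    return math.hypot(mast_height_m, ground_distance_m)


def gsd_across_m(mast_height_m, ground_distance_m,
                 pixel_pitch_m=PIXEL_PITCH_M, focal_length_m=FOCAL_LENGTH_M):
    """GSD perpendicular to the line of sight (small-angle approximation)."""
    ifov_rad = pixel_pitch_m / focal_length_m
    return slant_range_m(mast_height_m, ground_distance_m) * ifov_rad


def gsd_along_m(mast_height_m, ground_distance_m,
                pixel_pitch_m=PIXEL_PITCH_M, focal_length_m=FOCAL_LENGTH_M):
    """GSD in the range direction on flat ground, stretched by 1 / cos(view zenith)."""
    rng = slant_range_m(mast_height_m, ground_distance_m)
    cos_view_zenith = mast_height_m / rng
    return gsd_across_m(mast_height_m, ground_distance_m,
                        pixel_pitch_m, focal_length_m) / cos_view_zenith


for height in (1.5, 5.0, 15.0):
    for distance in (0.0, 10.0, 50.0):
        print(f"h={height:5.1f} m  d={distance:5.1f} m  "
              f"across={gsd_across_m(height, distance) * 1e3:6.2f} mm  "
              f"along={gsd_along_m(height, distance) * 1e3:7.2f} mm")
```

The along-range value grows quickly at oblique views because a fixed angular pixel is projected onto the ground at a grazing angle.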

We note that in its current configuration, when the General Dynamics pan-tilt housing is leveled, it has a maximum deflection angle above or below the horizontal of . This, however, is not a permanent limitation as the addition of a rotational stage in future planned upgrades will allow the system to reach and measure hyperspectral data from a much broader range of zenith angles.

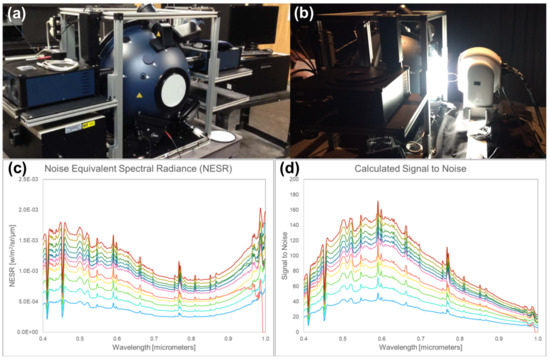

2.2. System Calibration

To characterize the system, we used our calibration facility, which includes a LabSphere Helios (Labsphere, North Sutton, NH, USA) 0.5 m diameter integrating sphere [51] paired with a calibrated spectrometer to collect radiance data in order to derive system calibration curves, signal-to-noise ratio (SNR), and noise-equivalent spectral radiance (NESR). In the examples provided here, we show results for an integration time of 2.5 ms, which is the integration time used in the surf zone hyperspectral imagery time series example provided below.

Through various system ports, our integrating sphere is configured with three different light sources (a quartz tungsten halogen (QTH) bulb and two highly stable xenon plasma arc lamps), a VNIR spectrometer (Ocean Optics, Largo, FL, USA), and two single point broad band detectors, one silicon detector (Hamamatsu Photonics, Hamamatsu City, Japan) measuring in the visible portion of the spectrum, and an Indium Gallium Arsenide (InGaAs) detector (Teledyne Judson, Montgomeryville, PA, USA) measuring the total energy in the shortwave infrared. The external illumination sources allow the instrument to be utilized as a source capable of outputting a constant illumination across the entire 0.2 m exit port, and the radiometrically calibrated detectors are capable of measuring internal illumination conditions. For calibration purposes, the sphere operates as a source, utilizing the two high intensity plasma lamps (Labsphere, North Sutton, NH, USA), which are capable of producing almost full daylight illumination conditions through the exit port.

In our calibration, we typically use 10–30 different illumination levels, and we average 255 scans at the desired integration time. The xenon plasma lamps provide a highly stable illumination source for the measurements; however, in order to minimize any residual instrument drift, we use two sets of dark current measurements, one before and one after the imaging system measurements for the various illumination levels provided by the integrating sphere. According to manufacturer specifications, each lamp maintains an approximate correlated color temperature of 5100 K ± 200 K with a rated lifetime of 30,000 h. Because the Plasma External Lamps (PEL) are microwave-induced sources, the emitter requires feedback to maintain desired light levels. The resulting fluctuation has a 0.1 Hz sawtooth-shaped waveform. In rest mode, short-term stability is ±3% from peak to peak (P-P), resulting in a 6% change in magnitude of the desired output. To further reduce error, Labsphere has implemented a Test Mode, during which the short-term drift is ±0.5% P-P (0.6% magnitude). Test Mode can only be maintained for a 30 min period, after which the system requires a minimum of 5 min before the next activation cycle. The long-term stability reported by Labsphere for every 100 h is less than 1%. The correlated color temperature (CCT) change for the same time period was reported to be <100 K. Lastly, the observed spectral stability had fluctuations <0.5 nm for every 10 h. The stability values quoted here are manufacturer specifications, indicating expected performance. To obtain the results provided below, we used the Test Mode during data collection.

To develop calibrations for each wavelength, we perform a linear regression between the NIST-traceable light levels (radiance) as recorded by the onboard spectrometer attached to our integrating sphere and the recorded radiance at each wavelength in our Headwall Hyperspec imaging system. We measure system dark current by blocking the entrance aperture with the lens cap in the dark room of the calibration facility. Figure 2 shows the noise equivalent spectral radiance (NESR) and signal-to-noise ratio (SNR) for the 2.5 ms integration time used by our Headwall system during the acquisition of the hyperspectral imagery time series of the surf zone described later in this paper. The curves correspond to different illumination levels, varying from the base noise of the system to just below the saturation limit of the detector at 30,000 electrons. The SNR curves in Figure 2 show that at near full daylight levels, the peak SNR in the visible part of the spectrum is around 150, while at 0.9 μm in the near infra-red, the SNR drops to around 40. Note that the spatial resolution of our system is usually quite high (mm to cm range, as shown in Table 1, depending on the height of the mast and proximity of the ground element to the sensor). Thus, if higher SNR is desired, spatial binning by even a modest amount can provide significant enhancements; for example, a 3 × 3 spatial window would provide an SNR of 450 at the peak in the visible and 120 at 0.9 μm.
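The calibration steps described above (averaging repeated scans, subtracting dark current measured before and after, fitting a per-wavelength linear relation, and estimating the benefit of spatial binning) can be sketched as follows. This is a minimal illustration with NumPy under stated assumptions, not the processing code used for Figure 2: the function names and array layouts are hypothetical, and the binning gain assumes uncorrelated noise between neighboring pixels.

```python
import numpy as np


def dark_corrected_mean(scans, dark_before, dark_after):
    """Average repeated scans and subtract the mean of the two dark sets.

    All inputs have shape (n_scans, n_bands); the result has shape (n_bands,).
    """
    dark = 0.5 * (dark_before.mean(axis=0) + dark_after.mean(axis=0))
    return scans.mean(axis=0) - dark


def fit_band_calibration(signal, reference_radiance):
    """Per-band least-squares fit of radiance = gain * signal + offset.

    Both inputs have shape (n_levels, n_bands): one row per illumination level.
    """
    x_mean = signal.mean(axis=0)
    y_mean = reference_radiance.mean(axis=0)
    dx = signal - x_mean
    gain = (dx * (reference_radiance - y_mean)).sum(axis=0) / (dx ** 2).sum(axis=0)
    offset = y_mean - gain * x_mean
    return gain, offset


def binned_snr(snr, window):
    """SNR after averaging a window x window block of pixels.

    ASSUMPTION: noise is uncorrelated between pixels, so SNR scales with the
    square root of the number of pixels averaged.
    """
    return snr * np.sqrt(window * window)


# The 3 x 3 example from the text: 150 -> 450 in the visible, 40 -> 120 at 0.9 um.
print(binned_snr(150.0, 3), binned_snr(40.0, 3))
```

Fitting each band independently keeps the sketch simple; a real workflow would also need to handle saturated or very low-signal levels before the regression.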

Figure 2.

(a) Labsphere Helios 0.5 m diameter integrating sphere in our Rochester Institute of Technology (RIT) calibration laboratory. Plasma lamps, attached to the sphere, are visible on the top shelves to the left and right. (b) Our Headwall imaging system in the pan-tilt unit in front of the sphere during calibration. (c) Typical NESR curves for 10 light levels up to the maximum output of the two plasma lamps, near daylight levels. (d) Typical SNR obtained over the same 30 light levels for a 2.5 × 10⁻³ s integration time. Hyperspectral video sequences shown in this paper used either a 2.5 × 10⁻³ s or 3.0 × 10⁻³ s integration time.

2.3. Imaging the Dynamics of the Surf Zone

Imaging of the coastal zone has taken many forms. For example, multi-spectral and hyperspectral imaging systems have been used to quantify concentrations of water constituents and characterize bottom type and bathymetry using radiative transfer models [7,52]. In addition, video imaging (monochromatic and 3-band multi-spectral) has been used to estimate flow of the water column and its constituents through particle imaging velocimetry (PIV) [18,19]. A limitation of these past approaches is that traditional airborne and satellite remote sensing, while providing important details about sediment concentrations near shore, has produced essentially an instantaneous look at what is in fact a dynamical system. At the same time, while video systems have been used to image the water column and model its flow, the limited number of bands has meant that little information is available from these systems regarding in-water constituents or bottom properties.

The dynamics of sediment flow in coastal settings play a significant role in the evolution of shorelines, determining processes such as erosion and accretion. As sea levels continue to rise, improved modeling of the evolution of coastal regions is a priority for environmental stewards, natural resource managers, urban planners, and decision makers. Understanding the details of this evolution is critical, and improved knowledge of the dynamics of flowing sediment near shore can contribute significantly to hydrodynamic models that ultimately predict the future of coastal regions. Imaging systems have been used to acquire snapshots of the coastal zone from airborne and satellite platforms. Multi-spectral and especially hyperspectral imaging systems can provide an instantaneous look at the distribution of in-water constituents, bottom type, and depth; however, these have not produced a continuous time series that looks at the short-time-scale dynamics of the flowing sediment. Video systems have also been used to examine coastal regions; however, these have been primarily monochromatic [15] or multi-spectral imaging systems [17] with a very limited number of spectral bands (typically 3 bands). These systems provide a more limited understanding of the dynamics of the littoral zone and have been primarily used to estimate current flow vectors without the ability to determine local particle densities. In the Results section below, we demonstrate a low-rate hyperspectral video time series. This imaging demonstration offers the advantage of bringing together the power of spectral imaging to estimate in-water constituents and bottom type along with low-rate video that offers the potential to track the movement of these in-water constituents on very short time scales.

2.4. Real-Time Vehicle Tracking Using Hyperspectral Imagery

In recent years, vehicle detection and tracking has become important in a number of applications, including analyzing traffic flow, monitoring accidents, navigation for autonomous vehicles, and surveillance [41,47,53,54,55]. Most traffic monitoring and vehicle movement applications use relatively high-resolution video and have a high number of pixels on each vehicle, which allows tracking algorithms to rely on appearance features in the spatial domain for detection and identification. Tracking from airborne imaging platforms, on the other hand, poses several unique challenges. Airborne imaging systems typically have fewer pixels representing each vehicle within the scene due to the longer viewing distance, as well as being prone to blur or smear due to the relative motion of the sensor and the object and parallax error [41,56]. Beyond imaging system limitations, vehicle tracking/detection algorithms also must be able to handle complex, cluttered scenes that include traffic congestion and occlusions from the environment [41,47]. Occlusions are more common in airborne images and are particularly challenging for persistent vehicle tracking. When a tracked vehicle is obscured by a tree or a building, it is common for it to be assigned a new label once it reemerges. This can be avoided if the vehicle can be uniquely identified, however while traditional tracking methods can rely on high-resolution spatial features, the low resolution of airborne imagery, where the object is only represented by 100–200 or fewer pixels, makes reidentification by spatial features difficult and can lead to the tracker following a different vehicle or dropping the track entirely [41,56].

Compared to panchromatic or RGB systems, hyperspectral sensors can more effectively identify different materials based on their spectral signature and can thereby provide additional spectral features that can reidentify vehicles. Vodacek et al. [47] and Uzkent et al. [56] suggested using a multi-modal sensor design consisting of a wide field of view (FOV) panchromatic system alongside a narrow FOV hyperspectral sensor for real-time vehicle tracking and developed a tracking method leveraging the spectral information. Due to the lack of hyperspectral data, the method—along with subsequent additions to the tracking system—has only been tested on synthetic hyperspectral images generated by the Digital Imaging and Remote Sensing Image Generation model [41,56]. Results using the synthetic data have demonstrated that the spectral signatures can provide the necessary information to isolate targets of interest (TOI) in occluded backgrounds. This can be especially important when tracking vehicles in highly congested traffic or in the presence of dense buildings or trees within the scene. Experiments in cluttered synthetic scenes have shown that utilizing the spectral data outperforms other algorithms for persistent airborne tracking [56]. In addition, there has been increased recent interest in using advanced computer vision and machine learning algorithms to efficiently exploit the large amount of information contained in hyperspectral video, but no data sets currently exist with which to train—much less validate—a neural network model.
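To make the idea of spectral re-identification concrete, the sketch below matches a stored vehicle spectrum against candidate spectra after an occlusion using the spectral angle, a generic similarity measure for hyperspectral pixels. This is an illustrative stand-in and not the tracking method of [47,56]; the function names and the 0.1 rad acceptance threshold are assumptions.

```python
import numpy as np


def spectral_angle(a, b):
    """Angle (radians) between two spectra; insensitive to overall brightness."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    cos = np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b))
    return float(np.arccos(np.clip(cos, -1.0, 1.0)))


def reidentify(target_spectrum, candidate_spectra, max_angle_rad=0.1):
    """Return the index of the best-matching candidate, or None if none is close.

    max_angle_rad is a hypothetical acceptance threshold for illustration.
    """
    angles = [spectral_angle(target_spectrum, c) for c in candidate_spectra]
    best = int(np.argmin(angles))
    return best if angles[best] <= max_angle_rad else None


# Toy three-band spectra: the second candidate is a brighter copy of the target.
target = [1.0, 2.0, 3.0]
candidates = [[3.0, 2.0, 1.0], [2.0, 4.0, 6.1]]
print(reidentify(target, candidates))
```

Because the spectral angle ignores a uniform scaling of the spectrum, a vehicle moving between sunlit and partially shaded regions can still match its stored signature, although spectrally varying illumination changes would require additional handling.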

3. Results

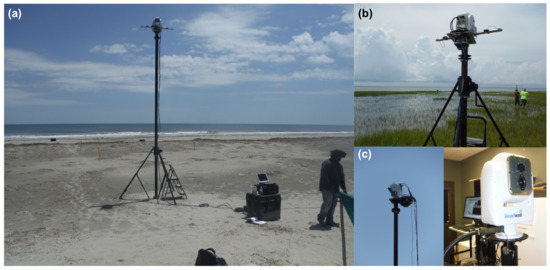

3.1. Hyperspectral Data Collection Experiment

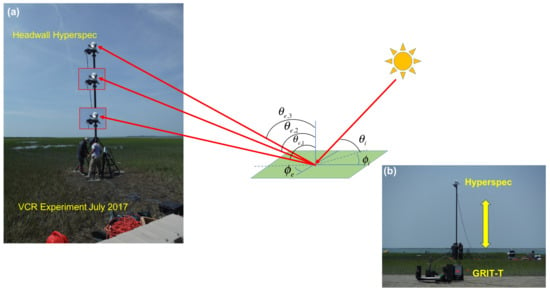

The first demonstration of the hyperspectral low-rate video imaging concept that we have described took place during an RIT experiment on Hog Island, VA, a barrier island which is part of the Virginia Coast Reserve (VCR) [57], a National Science Foundation Long-Term Ecological Research (LTER) site [58]. Over an 11-day period, the imaging system was used repeatedly from atop the BlueSky telescopic mast system (Figure 3) to acquire a wide variety of hyperspectral imagery of the island. By integrating the system onto the telescopic mast, the system is also able to acquire imagery from the same region on the ground, or of the water, from multiple viewing geometries, allowing the bi-directional reflectance distribution function (BRDF) of the surface to be sampled in collected imagery (Figure 4). For field data collections such as these, the term hemispherical conical reflectance factor (HCRF) is also sometimes used as a descriptor since: (a) the sediment radiance is compared with the radiance of a Lambertian standard reference (Spectralon panel), (b) the sensor has a finite aperture, and (c) the primary illumination source is not a single point source but contains both direct (solar) and indirect sources of illumination (skylight and adjacency effects) [59,60,61]. In our experiment, described in greater detail below, we deployed our hyperspectral field-portable goniometer system, the Goniometer of the Rochester Institute of Technology-Two (GRIT-T) [62] for direct comparison with hyperspectral imagery acquired from our Headwall integrated hyperspectral imaging system at varying heights on the telescopic mast. HCRF of the surface provides information on the geophysical state of the surface, such as the fill factor, which can be inferred by inverting radiative transfer models [40], which has been done previously using GRIT-T hyperspectral multi-angular data [63].
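Since the reflectance factor described above is formed by comparing the surface radiance with that of a Spectralon reference panel, the basic per-band computation can be sketched as a simple ratio. This is a minimal illustration; the function name is hypothetical, and the default panel reflectance of 0.99 is a placeholder assumption (in practice a per-band panel calibration would be used).

```python
import numpy as np


def reflectance_factor(target_radiance, panel_radiance, panel_reflectance=0.99):
    """Per-band reflectance factor from target and reference-panel radiance.

    target_radiance and panel_radiance are per-band spectra measured under the
    same illumination; panel_reflectance may be a scalar or a per-band array.
    The 0.99 default is an ASSUMED placeholder, not a calibrated value.
    """
    target = np.asarray(target_radiance, dtype=float)
    panel = np.asarray(panel_radiance, dtype=float)
    return target / panel * panel_reflectance


# Toy two-band example with an ideal panel (reflectance 1.0).
print(reflectance_factor([1.0, 2.0], [4.0, 4.0], 1.0))
```

The ratio is only meaningful when the panel and the target are observed under the same illumination, which is why the panel needs to appear in, or close in time to, each image frame.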

Figure 3.

Data collection with the integrated imaging system: Headwall Hyperspec imaging system, General Dynamics maritime pan-tilt, and Vectornav-300 GPS IMU atop a BlueSky AL-3 telescopic mast. (a) on the western shore of Hog Island, VA while imaging littoral zone dynamics; (b) on the eastern shore imaging coastal wetlands; (c) close-ups of the imaging system while in operation at Hog Island and in the lab during testing. Closer to the shoreline in (a), white Spectralon calibration panels are deployed; also visible are various fiducials (orange stakes) used for image registration and geo-referencing. Fiducials were surveyed with real-time kinematic GPS.

Figure 4.

(a) Hyperspectral HCRF imagery sequences from our integrated hyperspectral Hyperspec imaging system atop a telescopic mast. Mast height determines view zenith angle. (b) The Hyperspec imaging a salt panne region during the July 2017 experiment on Hog Island while the Goniometer of the Rochester Institute of Technology-Two (GRIT-T) [62] records HCRF from the surface.

3.2. Digital Elevation Model and HCRF from Hyperspectral Stereo Imagery

The hyperspectral imagery acquired from differing viewing geometries of the same surface also allows us to develop digital elevation models (DEMs) of the surface, which can be merged with the hyperspectral imagery and used in modeling and retrieval of surface properties. An example DEM derived from the multi-view hyperspectral imagery that we acquired at our study site on Hog Island in July 2017 appears in Figure 5, which shows the resulting DEM from a set of fourteen hyperspectral scenes acquired from our mast-mounted system. DEM construction used the structure from motion (SFM) software PhotoScan developed by AgiSoft LLC [64]. Similar results have been obtained from stereo views of a surface from unmanned aerial system (UAS) platforms [38] as well as from a ground-based hyperspectral imaging system [65], although the latter result was obtained from significantly longer distances, requiring the use of atmospheric correction algorithms. The example provided in Figure 5 was for stand-off distances significantly less than those for which atmospheric correction would be necessary.

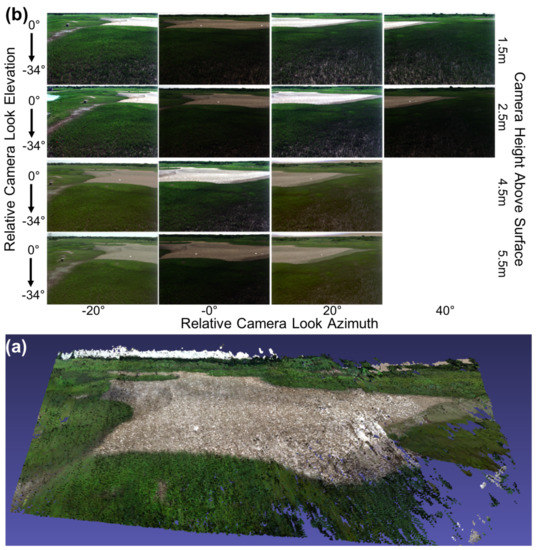

Figure 5.

(a) DEM derived from multi-view imagery from our mast-mounted hyperspectral system. (b) The fourteen hyperspectral scenes used as input to a Structure-from-Motion (SFM) algorithm. These hyperspectral scenes had spatial dimensions 1600 × 971 with 371 spectral bands.

Each image of the set of 14 used in creating the DEM is a hyperspectral scene acquired with the full 371 spectral bands from 0.4–1.0 μm, 1600 across-track spatial pixels, and 971 spatial pixels in the second spatial dimension produced by the nodding of the pan-tilt. Each row was produced from a series of scans that overlapped in azimuth and were acquired at different mast heights. In these examples, the heights of the hyperspectral imager above the surface during image acquisition were 1.5 m, 2.5 m, 4.5 m, and 5.5 m, respectively. Each scene shows a salt panne surrounded by coastal salt marsh vegetation, predominantly Spartina alterniflora.

Having the ability to produce a DEM as part of the data collection workflow has potential advantages. Lorenz et al. [65] used this information to correct for variations in illumination over rocky outcrops by determining the true angle of the sun to the surface normal derived from the DEM. For our own workflow, which is focused on problems such as inversion of radiative transfer models to retrieve geophysical properties of the surface [40,63], we require both the true viewing zenith and azimuth angles of our imaging system in the reference frame of the tilted surface normal and the incident zenith and azimuth angles of solar illumination within this tilted coordinate system. The onboard GPS-IMU of our mast-mounted imaging system provides pointing (view orientation) and timestamps, which together with the DEM allow the calculation of these angles. Fiducials placed in the scene enhance the overall accuracy of these angle calculations.
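As a minimal sketch of the angle calculation described above, the snippet below computes the zenith angle of a view or sun direction relative to a tilted surface normal, and the relative azimuth between the two directions within the tilted plane. The function names, the east-north-up vector convention, and the example numbers are assumptions chosen for illustration; this is not the processing code used in this study.

```python
import math

def unit(v):
    """Return v scaled to unit length."""
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def from_zenith_azimuth(zenith_deg, azimuth_deg):
    """Unit vector (east, north, up) pointing from the surface toward the
    sensor or sun. Azimuth is measured clockwise from north (assumed)."""
    z, a = math.radians(zenith_deg), math.radians(azimuth_deg)
    return (math.sin(z) * math.sin(a), math.sin(z) * math.cos(a), math.cos(z))

def normal_from_slopes(dz_dx, dz_dy):
    """Upward unit normal of a DEM facet z(x, y), with x east and y north."""
    return unit((-dz_dx, -dz_dy, 1.0))

def local_zenith(direction, normal):
    """Angle in degrees between a direction and the surface normal."""
    c = max(-1.0, min(1.0, dot(unit(direction), unit(normal))))
    return math.degrees(math.acos(c))

def relative_azimuth(view, sun, normal):
    """Angle in degrees (0 to 180) between the projections of the view and
    sun directions onto the tilted surface plane."""
    n = unit(normal)

    def project(v):
        v = unit(v)
        d = dot(v, n)
        return tuple(vc - d * nc for vc, nc in zip(v, n))

    pv, ps = project(view), project(sun)
    nv, ns = math.sqrt(dot(pv, pv)), math.sqrt(dot(ps, ps))
    if nv < 1e-12 or ns < 1e-12:
        return 0.0  # azimuth is undefined when a direction lies along the normal
    c = max(-1.0, min(1.0, dot(pv, ps) / (nv * ns)))
    return math.degrees(math.acos(c))

# Hypothetical facet that rises toward the east with a 10 degree slope.
normal = normal_from_slopes(math.tan(math.radians(10.0)), 0.0)
sun = from_zenith_azimuth(30.0, 270.0)   # sun in the west, 30 degrees from vertical
view = from_zenith_azimuth(40.0, 90.0)   # sensor to the east of the facet
print(local_zenith(sun, normal), local_zenith(view, normal),
      relative_azimuth(view, sun, normal))
```

In this example the facet normal leans toward the west, so the local solar zenith angle is smaller than the 30 degrees measured from the vertical, while the local view zenith angle is larger than 40 degrees.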

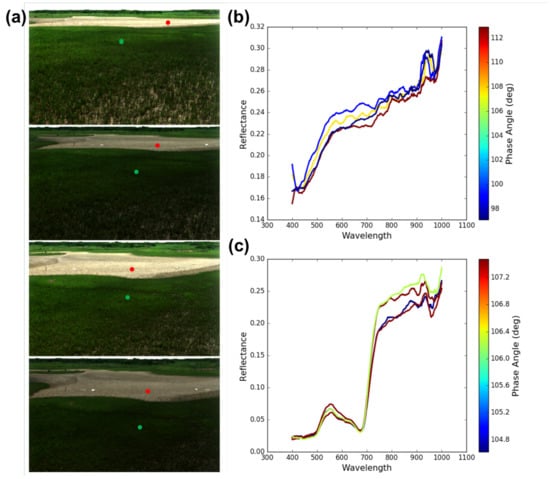

The fourteen scenes portrayed in Figure 5 also represent another important aspect of our overall approach described earlier in Section 3.1: the acquisition of multi-view imagery that sub-samples the HCRF distributions that form the core of inversion of radiative transfer models to retrieve geophysical properties of the surface [40,63] and satisfies goal (3) stated in the Introduction. Figure 6 shows examples of the spectral reflectance derived from 4 of the 14 scenes acquired from the salt panne at different mast heights, which, as Figure 4 illustrates, provide us with a sub-sample of the HCRF. We have previously demonstrated an approach to retrieving sediment fill factor from laboratory bi-conical reflectance factor (BCRF) measurements [63] and then extended this to retrieval from multi-view hyperspectral time-series imagery acquired by NASA G-LiHT and multi-spectral time-series imagery from GOES-R [40]; in each case, these retrievals represented a more restricted sub-sample of points from the HCRF distribution. Imagery from our mast-mounted system can allow us to more completely validate the inversion of this modified radiative transfer model to retrieve and map sediment fill factor from imagery and, in particular, help in assessing how many and which views of the surface are most critical for successful inversion.
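To illustrate how changing the mast height sub-samples the view zenith angle, the sketch below evaluates the view zenith angle at a fixed ground point for the four imager heights listed above. It assumes a flat, level surface and a hypothetical horizontal distance of 5 m between the mast base and the target; neither assumption is taken from the field geometry, and the function name is illustrative.

```python
import math

def view_zenith_deg(sensor_height_m, horizontal_distance_m):
    """View zenith angle (degrees from vertical) at a ground point seen from
    a sensor at the given height, assuming flat, level ground."""
    return math.degrees(math.atan2(horizontal_distance_m, sensor_height_m))

IMAGER_HEIGHTS_M = (1.5, 2.5, 4.5, 5.5)  # heights quoted in the text
ASSUMED_DISTANCE_M = 5.0                 # hypothetical target distance

for height in IMAGER_HEIGHTS_M:
    angle = view_zenith_deg(height, ASSUMED_DISTANCE_M)
    print(f"height {height:.1f} m -> view zenith {angle:.1f} deg")
```

Raising the mast reduces the view zenith angle at a given ground point, so a sequence of heights traces out a set of zenith samples at nearly constant azimuth.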

Figure 6.

(a) Enlargement of four hyperspectral scenes acquired from four mast heights in the set of fourteen shown in Figure 5. (b,c) Set of spectra from the same location in each of the four scenes for (b) a position (red dot) in the salt panne, and (c) a position (green dot) in the salt marsh vegetation.

3.3. Low-Rate Hyperspectral Video Image Sequence of the Surf Zone

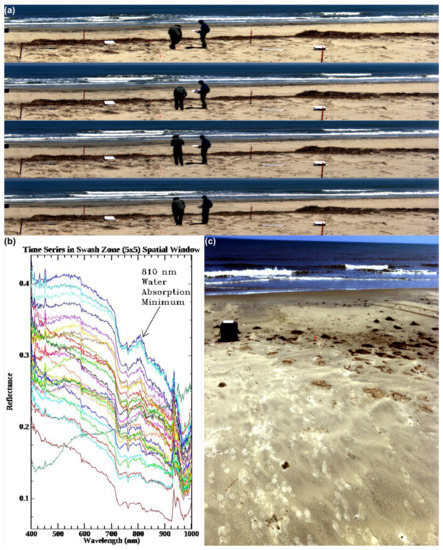

On 14 July 2017, our integrated system was used for the first time in the low-rate video mode to acquire imagery of the surf zone on the eastern shore of Hog Island, VA. One such image sequence is shown in Figure 7, which shows a subset of a longer sequence of images acquired every 0.67 s. Each image in the sequence is 1600 across-track pixels (horizontal dimension) with 371 spectral bands each by 212 along-track pixels (vertical dimension produced by the nodding motion of the General Dynamics pan-tilt unit). The integration time for each line of 1600 across-track spatial pixels with 371 spectral pixels each was approximately 2.5 ms. Once other latencies in data acquisition are accounted for and the necessary time is allowed for the pan-tilt to reverse direction, an acquisition interval of 0.67 s for the full hyperspectral scene is achieved. We emphasize that there is no specific limitation of the system that prevents longer integration times and/or slower slewing rates from being used, and other data collected during the experiment did use slower scan rates to obtain larger scenes (see, for example, the 14 hyperspectral scenes in Figure 5 and the long scan in the lower right portion of Figure 7, which shows a scene with 1600 × 2111 spatial pixels and 371 spectral bands acquired over a 12-s interval during a very slow scan with a longer integration time). The latter hyperspectral scene, in particular, represents our goal (4) stated in the Introduction of being able to produce mm- to cm-scale imagery in the near range to better characterize the land surface at scales typical of the variation found near the waterline. However, in the hyperspectral low-rate video mode, image frames of the size shown in Figure 7 are typical. The quality of the spectra obtained in the imagery is indicated by the spectral time series derived from a small 5 × 5 window near the shoreline over time.
A well-known local minimum in the liquid water absorption spectrum [66] normally appears in very shallow waters as a peak in the reflectance spectrum around 810 nm. This peak is well correlated with shallow water bathymetry typically in depths that are ≤1 m, and this feature was previously used in a shallow water bathymetry retrieval algorithm and demonstration which compared favorably with bathymetry directly measured in situ [67]. Obtaining spectral data of sufficient quality is important to the success of retrievals based on spectral features, such as the 810 nm feature just described, band combinations and regressions based on band combinations [68,69], “semi-analytical” models [70,71], or inversion of forward-modeled look-up tables generated from radiative transfer models such as Hydrolight [7], which rely on the spectral and radiometric accuracy of the hyperspectral data. These short-time-scale hyperspectral imagery sequences satisfy our stated goals (1) and (2).
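As an illustration of the kind of spectral feature extraction discussed above, the following sketch averages the spectra in a 5 × 5 spatial window and measures the height of the reflectance peak near 810 nm above a straight-line continuum drawn between two shoulder wavelengths. The shoulder wavelengths (760 nm and 860 nm), the function names, the nested-list data layout, and the synthetic spectrum are assumptions made for illustration; this is not the bathymetry retrieval algorithm of [67].

```python
def window_mean_spectrum(cube, row, col, half_width=2):
    """Mean spectrum over a (2*half_width + 1)^2 spatial window.
    cube[r][c] is a list of reflectance values, one per band."""
    if (row - half_width < 0 or col - half_width < 0
            or row + half_width >= len(cube)
            or col + half_width >= len(cube[0])):
        raise ValueError("window extends beyond the image")
    spectra = [cube[r][c]
               for r in range(row - half_width, row + half_width + 1)
               for c in range(col - half_width, col + half_width + 1)]
    n_bands = len(spectra[0])
    return [sum(s[b] for s in spectra) / len(spectra) for b in range(n_bands)]

def nearest_band(wavelengths_nm, target_nm):
    """Index of the band whose center wavelength is closest to target_nm."""
    return min(range(len(wavelengths_nm)),
               key=lambda i: abs(wavelengths_nm[i] - target_nm))

def peak_height(wavelengths_nm, spectrum,
                peak_nm=810.0, left_nm=760.0, right_nm=860.0):
    """Reflectance at the peak band minus a linear continuum interpolated
    between the two (assumed) shoulder bands."""
    i_l, i_p, i_r = (nearest_band(wavelengths_nm, w)
                     for w in (left_nm, peak_nm, right_nm))
    wl_l, wl_p, wl_r = wavelengths_nm[i_l], wavelengths_nm[i_p], wavelengths_nm[i_r]
    t = (wl_p - wl_l) / (wl_r - wl_l)
    continuum = (1.0 - t) * spectrum[i_l] + t * spectrum[i_r]
    return spectrum[i_p] - continuum

# Synthetic example: flat 5% reflectance with an 8% value at 810 nm.
wavelengths = [400.0 + 10.0 * i for i in range(61)]   # 400 to 1000 nm
spectrum = [0.05] * len(wavelengths)
spectrum[nearest_band(wavelengths, 810.0)] = 0.08
cube = [[list(spectrum) for _ in range(5)] for _ in range(5)]

mean_spec = window_mean_spectrum(cube, 2, 2)
height = peak_height(wavelengths, mean_spec)
print(f"810 nm peak height above continuum: {height:.3f}")
```

Applying the same two functions to each frame of a sequence would give a time series of peak heights for a fixed window, analogous to the spectral time series described above.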

Figure 7.

(a) Hyperspectral video image sequence using our integrated Headwall micro-HE VNIR hyperspectral imaging system on Hog Island, VA on 14 July 2017. The representative sequence subset (from a time series of 30 images) shown here contains hyperspectral image frames with spatial dimensions 1600 × 212 each with 371 spectral bands. Each hyperspectral scene was acquired approximately once every 0.67 s. Two Spectralon reference panels used in reflectance calculations and several orange fiducial stakes used in geo-referencing are also visible. (b) Spectral reflectance captured by the integrated system for a co-registered pixel in the swash zone of the hyperspectral video image sequence. The spectral reflectance, for a 5 × 5 spatial window, is shown over all 30 hyperspectral images, which were acquired once every 0.67 s. The 810 nm peak indicated corresponds to a well-known minimum in the water absorption spectrum [66], well-correlated with shallow water bathymetry [67]. (c) Slow scan/longer integration time hyperspectral scene with 1600 × 2111 spatial pixels and 371 spectral channels acquired closer to the waterline with the system deployed at 1.5 m height, showing the mm- to cm-scale resolution possible: the details of footprints can be clearly seen.

3.4. Time Series Hyperspectral Imagery of Moving Vehicles

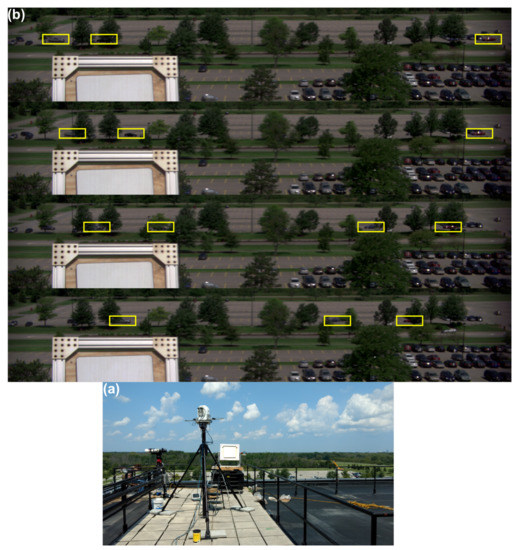

A second test of the video capabilities of our hyperspectral imaging system was performed on 9 August 2017 at RIT. For this experiment, imaging of moving vehicles was the primary focus. The same instrument configuration was used from atop the Chester F. Carlson Center for Imaging Science at RIT (Figure 8). Slewing rates of the pan-tilt as well as the integration time of the Headwall micro-HE were adjusted to achieve a larger image in the along-track dimension (zenith or nodding dimension). The integration time for this data collection was 3.0 ms, and the images in the sequence were acquired once every second. These images have 1600 across-track pixels and 299 along-track pixels with 371 spectral bands.
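The frame timing and data volume implied by the figures quoted for the two experiments can be cross-checked with simple arithmetic. The sketch below assumes that the scan time is the number of along-track lines multiplied by the per-line integration time, treats the remainder of the frame interval as overhead (acquisition latencies and pan-tilt reversal), and uses the 16 bits per sample stated in the Abstract. The function and variable names are illustrative.

```python
def frame_budget(n_lines, integration_ms, frame_interval_s,
                 n_across=1600, n_bands=371, bytes_per_sample=2):
    """Rough timing and data-volume budget for one hyperspectral frame."""
    scan_s = n_lines * integration_ms / 1000.0           # time spent integrating
    overhead_s = frame_interval_s - scan_s               # latencies + reversal (assumed)
    cube_bytes = n_across * n_lines * n_bands * bytes_per_sample
    return {
        "scan_s": scan_s,
        "overhead_s": overhead_s,
        "line_rate_hz": 1000.0 / integration_ms,
        "cube_bytes": cube_bytes,
        "mean_rate_mb_per_s": cube_bytes / 1e6 / frame_interval_s,
    }

hog_island = frame_budget(n_lines=212, integration_ms=2.5, frame_interval_s=0.67)
rit = frame_budget(n_lines=299, integration_ms=3.0, frame_interval_s=1.0)

for name, budget in (("Hog Island", hog_island), ("RIT", rit)):
    print(f"{name}: scan {budget['scan_s']:.3f} s, "
          f"overhead {budget['overhead_s']:.3f} s, "
          f"cube {budget['cube_bytes'] / 1e6:.1f} MB, "
          f"mean rate {budget['mean_rate_mb_per_s']:.0f} MB/s")
```

Under these assumptions the integration time accounts for about 0.53 s of the 0.67 s interval at Hog Island and about 0.90 s of the 1 s interval at RIT, and each frame occupies roughly 252 MB and 355 MB, respectively.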

Figure 8.

(a) Our integrated system deployed on the roof of the Chester F. Carlson Center for Imaging Science on the RIT campus on 9 August 2017 during a second test focused on imaging of moving vehicles. Shown also is a Spectralon panel deployed on the roof and elevated to be within the field of view of the imaging system. (b) Hyperspectral image time series (top to bottom) of the RIT parking lot showing five moving vehicles in a cluttered environment. The yellow boxes outline the positions of the test vehicles over time, but a box is drawn only when a test vehicle is clearly visible.

The objective of the data collection was to obtain hyperspectral image sequences that would be useful for studies of the detection and tracking of vehicles driving through the parking lot at ∼2.75 m/s and passing behind various occlusions within the scene, such as the trees in the background and parked cars. An example sequence of hyperspectral image frames from the experiment appears in Figure 8. Each image contains the calibration panel. Note the partly cloudy conditions, which can lead to rapid changes in the illumination state. Yellow boxes are drawn around the test vehicles controlled for the experiment. Note that vehicles are occluded at times, so the number of boxes drawn can change from image to image. The figure illustrates the complexity of vehicle tracking when a large number of occlusions are present. Video sequences such as this will be produced in future experiments but with a longer duration and with coincident intensive reference data collection to serve as community resources. Such sequences of short-time-interval data of moving vehicles are especially useful for modeling purposes to address the challenge of occlusions (our objective (c) stated in the Introduction) and mixtures that appear in the spectral imagery. Similarly, as vehicles move through the scene, the imaging geometry changes significantly, leading to BRDF effects that must be properly modeled for successful extraction and tracking of moving vehicles. The imagery shown, therefore, is important for meeting objectives (a) and (b) described in the Introduction.

4. Conclusions

We have described an approach to acquiring full hyperspectral data cubes at low video rates. Our approach integrated a state-of-the-art hyperspectral line scanner capable of high data acquisition rates into a high-speed maritime pan-tilt unit. The system also included an integrated GPS/IMU to provide position and pointing information. The entire system is integrated onto a telescopic mast system that allows us to acquire hyperspectral time series imagery from multiple vantage points. This feature also makes possible the creation of a DEM from the resulting stereo hyperspectral views, an approach which was illustrated in this study. Similarly, the multi-view capability also allows the system to sample the bi-directional reflectance distribution function. We provided two examples of the low-rate hyperspectral video approach, showing hyperspectral imagery time series acquired in two different settings for very different applications: imaging of the dynamics of the surf zone in a coastal setting and moving vehicle imaging in the presence of many occlusions. We evaluated SNR and NESR and found values within acceptable limits for the data rates and integration times used in the examples. We noted that SNR could be further improved by spatial binning, an acceptable trade-off in some applications given the very high spatial resolution that we obtain with this system. Within the present system architecture, we noted that further improvements in hyperspectral image acquisition rates could be achieved by reducing the size of the across-track spatial dimension. Our particular system does not allow spectral binning on-chip; however, such capabilities do exist in commercially available systems and could be used to further accelerate image acquisition rates.
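The SNR benefit of spatial binning mentioned above can be illustrated with a small sketch. It averages non-overlapping blocks of spatial pixels in a single band and relies on the standard result that averaging N samples with independent, zero-mean noise of equal variance reduces the noise standard deviation by a factor of √N. Real sensor noise may be partly correlated, so the gain shown should be read as an upper bound under that assumption. The function names and the synthetic data are hypothetical.

```python
import random
import statistics

def bin_spatial(band, factor):
    """Average non-overlapping factor x factor blocks of a 2-D list.
    Trailing rows or columns that do not fill a block are dropped."""
    rows = len(band) // factor
    cols = len(band[0]) // factor
    return [[sum(band[r * factor + i][c * factor + j]
                 for i in range(factor) for j in range(factor)) / factor ** 2
             for c in range(cols)]
            for r in range(rows)]

def snr(image):
    """Mean over standard deviation for a uniform scene (noise only varies)."""
    values = [v for row in image for v in row]
    return statistics.fmean(values) / statistics.pstdev(values)

# Synthetic uniform scene: constant signal plus independent Gaussian noise.
rng = random.Random(0)
band = [[rng.gauss(100.0, 5.0) for _ in range(200)] for _ in range(200)]
binned = bin_spatial(band, 2)

gain = snr(binned) / snr(band)
print(f"2 x 2 binning SNR gain: {gain:.2f} (ideal value is 2 for independent noise)")
```

The cost of this gain is a proportional loss of spatial resolution, here a factor of two in each spatial dimension.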

Author Contributions

C.M.B. developed the hyperspectral video imaging concept and approach, and C.M.B., D.M., M.R. and T.B. designed the overall hardware implementation, which Headwall Photonics integrated under contract to RIT (Rochester Institute of Technology). R.L.K. designed the system power supply and cable system. T.B. and M.F. performed additional engineering for platform integration. C.M.B., R.S.E., C.S.L., and G.P.B. conceived the VCR LTER experiment, and C.M.B., R.S.E., C.S.L., G.P.B., and M.F. carried it out and processed the data. C.M.B., R.S.E., C.S.L., and G.P.B. also conducted the measurements for the RIT moving vehicle experiment and processed the data. A.V., M.J.H., M.R., and D.M. conceived the RIT moving vehicle experiment and participated in the execution of the experiment. C.M.B., R.S.E., C.S.L., A.V., and M.J.H. wrote the article.

Funding

This research is funded by an academic grant from the National Geospatial-Intelligence Agency (Award No. #HM0476-17-1-2001, Project Title: Hyperspectral Video Imaging and Mapping of Littoral Conditions). Approved for public release, 19-083. The development of this instrument was made possible by a grant from the Air Force Office of Scientific Research (AFOSR) Defense University Research Instrumentation Program (DURIP). System integration was performed by Headwall Photonics under contract to RIT, as well as by co-authors identified under Author Contributions.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Dutta, D.; Goodwell, A.E.; Kumar, P.; Garvey, J.E.; Darmody, R.G.; Berretta, D.P.; Greenberg, J.A. On the feasibility of characterizing soil properties from aviris data. IEEE Trans. Geosci. Remote Sens. 2015, 53, 5133–5147.

- Huesca, M.; García, M.; Roth, K.L.; Casas, A.; Ustin, S.L. Canopy structural attributes derived from AVIRIS imaging spectroscopy data in a mixed broadleaf/conifer forest. Remote Sens. Environ. 2016, 182, 208–226.

- Schlerf, M.; Atzberger, C.; Hill, J. Remote sensing of forest biophysical variables using HyMap imaging spectrometer data. Remote Sens. Environ. 2005, 95, 177–194.

- Giardino, C.; Brando, V.E.; Dekker, A.G.; Strömbeck, N.; Candiani, G. Assessment of water quality in Lake Garda (Italy) using Hyperion. Remote Sens. Environ. 2007, 109, 183–195.

- Haboudane, D.; Miller, J.R.; Pattey, E.; Zarco-Tejada, P.J.; Strachan, I.B. Hyperspectral vegetation indices and novel algorithms for predicting green LAI of crop canopies: Modeling and validation in the context of precision agriculture. Remote Sens. Environ. 2004, 90, 337–352.

- Garcia, R.A.; Lee, Z.; Hochberg, E.J. Hyperspectral Shallow-Water Remote Sensing with an Enhanced Benthic Classifier. Remote Sens. 2018, 10, 147.

- Mobley, C.D.; Sundman, L.K.; Davis, C.O.; Bowles, J.H.; Downes, T.V.; Leathers, R.A.; Montes, M.J.; Bissett, W.P.; Kohler, D.D.; Reid, R.P.; et al. Interpretation of hyperspectral remote-sensing imagery by spectrum matching and look-up tables. Appl. Opt. 2005, 44, 3576–3592.

- Liu, Y.; Gao, G.; Gu, Y. Tensor matched subspace detector for hyperspectral target detection. IEEE Trans. Geosci. Remote Sens. 2017, 55, 1967–1974.

- Prasad, S.; Bruce, L.M. Decision fusion with confidence-based weight assignment for hyperspectral target recognition. IEEE Trans. Geosci. Remote Sens. 2008, 46, 1448–1456.

- Chang, C.I.; Jiao, X.; Wu, C.C.; Du, Y.; Chang, M.L. A review of unsupervised spectral target analysis for hyperspectral imagery. EURASIP J. Adv. Signal Process. 2010, 2010, 503752.

- Power, H.; Holman, R.; Baldock, T. Swash zone boundary conditions derived from optical remote sensing of swash zone flow patterns. J. Geophys. Res. Oceans 2011.

- Lu, J.; Chen, X.; Zhang, P.; Huang, J. Evaluation of spatiotemporal differences in suspended sediment concentration derived from remote sensing and numerical simulation for coastal waters. J. Coast. Conserv. 2017, 21, 197–207.

- Dorji, P.; Fearns, P. Impact of the spatial resolution of satellite remote sensing sensors in the quantification of total suspended sediment concentration: A case study in turbid waters of Northern Western Australia. PLoS ONE 2017, 12, e0175042.

- Kamel, A.; El Serafy, G.; Bhattacharya, B.; Van Kessel, T.; Solomatine, D. Using remote sensing to enhance modelling of fine sediment dynamics in the Dutch coastal zone. J. Hydroinform. 2014, 16, 458–476.

- Puleo, J.A.; Holland, K.T. Estimating swash zone friction coefficients on a sandy beach. Coast. Eng. 2001, 43, 25–40.

- Concha, J.A.; Schott, J.R. Retrieval of color producing agents in Case 2 waters using Landsat 8. Remote Sens. Environ. 2016, 185, 95–107.

- Puleo, J.A.; Farquharson, G.; Frasier, S.J.; Holland, K.T. Comparison of optical and radar measurements of surf and swash zone velocity fields. J. Geophys. Res. Oceans 2003.

- Muste, M.; Fujita, I.; Hauet, A. Large-scale particle image velocimetry for measurements in riverine environments. Water Resour. Res. 2008.

- Puleo, J.A.; McKenna, T.E.; Holland, K.T.; Calantoni, J. Quantifying riverine surface currents from time sequences of thermal infrared imagery. Water Resour. Res. 2012.

- Gao, L.; Wang, L.V. A review of snapshot multidimensional optical imaging: Measuring photon tags in parallel. Phys. Rep. 2016, 616, 1–37.

- Hagen, N.A.; Gao, L.S.; Tkaczyk, T.S.; Kester, R.T. Snapshot advantage: A review of the light collection improvement for parallel high-dimensional measurement systems. Opt. Eng. 2012, 51, 111702.

- Saari, H.; Aallos, V.V.; Akujärvi, A.; Antila, T.; Holmlund, C.; Kantojärvi, U.; Mäkynen, J.; Ollila, J. Novel miniaturized hyperspectral sensor for UAV and space applications. In Proceedings of the International Society for Optics and Photonics, Sensors, Systems, and Next-Generation Satellites XIII, Berlin, Germany, 31 August–3 September 2009; Volume 7474, p. 74741M.

- Pichette, J.; Charle, W.; Lambrechts, A. Fast and compact internal scanning CMOS-based hyperspectral camera: The Snapscan. In Proceedings of the International Society for Optics and Photonics, Instrumentation Engineering IV, San Francisco, CA, USA, 28 January–2 February 2017; Volume 10110, p. 1011014.

- De Oliveira, R.A.; Tommaselli, A.M.; Honkavaara, E. Geometric calibration of a hyperspectral frame camera. Photogramm. Rec. 2016, 31, 325–347.

- Aasen, H.; Burkart, A.; Bolten, A.; Bareth, G. Generating 3D hyperspectral information with lightweight UAV snapshot cameras for vegetation monitoring: From camera calibration to quality assurance. ISPRS J. Photogramm. Remote Sens. 2015, 108, 245–259.

- Honkavaara, E.; Rosnell, T.; Oliveira, R.; Tommaselli, A. Band registration of tuneable frame format hyperspectral UAV imagers in complex scenes. ISPRS J. Photogramm. Remote Sens. 2017, 134, 96–109.

- Aasen, H.; Bendig, J.; Bolten, A.; Bennertz, S.; Willkomm, M.; Bareth, G. Introduction and Preliminary Results of a Calibration for Full-Frame Hyperspectral Cameras to Monitor Agricultural Crops with UAVs; ISPRS Technical Commission VII Symposium; Copernicus GmbH: Istanbul, Turkey, 29 September–2 October 2014; Volume 40, pp. 1–8.

- Hill, S.L.; Clemens, P. Miniaturization of high spectral spatial resolution hyperspectral imagers on unmanned aerial systems. In Proceedings of the International Society for Optics and Photonics, Next-Generation Spectroscopic Technologies VIII, Baltimore, MD, USA, 3 June 2015; Volume 9482, p. 94821E.

- Warren, C.P.; Even, D.M.; Pfister, W.R.; Nakanishi, K.; Velasco, A.; Breitwieser, D.S.; Yee, S.M.; Naungayan, J. Miniaturized visible near-infrared hyperspectral imager for remote-sensing applications. Opt. Eng. 2012, 51, 111720.

- Headwall E-Series Specifications. Available online: https://cdn2.hubspot.net/hubfs/145999/June%202018%20Collateral/MicroHyperspec0418.pdf (accessed on 28 December 2018).

- Green, R.O.; Eastwood, M.L.; Sarture, C.M.; Chrien, T.G.; Aronsson, M.; Chippendale, B.J.; Faust, J.A.; Pavri, B.E.; Chovit, C.J.; Solis, M.; et al. Imaging Spectroscopy and the Airborne Visible/Infrared Imaging Spectrometer (AVIRIS). Remote Sens. Environ. 1998, 65, 227–248.

- Pearlman, J.S.; Barry, P.S.; Segal, C.C.; Shepanski, J.; Beiso, D.; Carman, S.L. Hyperion, a space-based imaging spectrometer. IEEE Trans. Geosci. Remote Sens. 2003, 41, 1160–1173.

- Diner, D.J.; Beckert, J.C.; Reilly, T.H.; Bruegge, C.J.; Conel, J.E.; Kahn, R.A.; Martonchik, J.V.; Ackerman, T.P.; Davies, R.; Gerstl, S.A.; et al. Multi-angle Imaging SpectroRadiometer (MISR) instrument description and experiment overview. IEEE Trans. Geosci. Remote Sens. 1998, 36, 1072–1087.

- Guanter, L.; Kaufmann, H.; Segl, K.; Foerster, S.; Rogass, C.; Chabrillat, S.; Kuester, T.; Hollstein, A.; Rossner, G.; Chlebek, C.; et al. The EnMAP spaceborne imaging spectroscopy mission for earth observation. Remote Sens. 2015, 7, 8830–8857.

- Babey, S.; Anger, C. A compact airborne spectrographic imager (CASI). In Proceedings of the IGARSS ’89 and Canadian Symposium on Remote Sensing: Quantitative Remote Sensing: An Economic Tool for the Nineties, Vancouver, BC, Canada, 10–14 July 1989; Volume 1, pp. 1028–1031.

- Johnson, W.R.; Hook, S.J.; Mouroulis, P.; Wilson, D.W.; Gunapala, S.D.; Realmuto, V.; Lamborn, A.; Paine, C.; Mumolo, J.M.; Eng, B.T. HyTES: Thermal imaging spectrometer development. In Proceedings of the 2011 IEEE Aerospace Conference, Big Sky, MT, USA, 5–12 March 2011; pp. 1–8.

- Rickard, L.J.; Basedow, R.W.; Zalewski, E.F.; Silverglate, P.R.; Landers, M. HYDICE: An airborne system for hyperspectral imaging. In Proceedings of the International Society for Optics and Photonics, Imaging Spectrometry of the Terrestrial Environment, Orlando, FL, USA, 11–16 April 1993; Volume 1937, pp. 173–180.

- Lucieer, A.; Malenovskỳ, Z.; Veness, T.; Wallace, L. HyperUAS—Imaging spectroscopy from a multirotor unmanned aircraft system. J. Field Robot. 2014, 31, 571–590.

- Cocks, T.; Jenssen, R.; Stewart, A.; Wilson, I.; Shields, T. The HyMapTM airborne hyperspectral sensor: The system, calibration and performance. In Proceedings of the 1st EARSeL Workshop on Imaging Spectroscopy, EARSeL, Zurich, Switzerland, 6–8 October 1998; pp. 37–42.

- Eon, R.; Bachmann, C.; Gerace, A. Retrieval of Sediment Fill Factor by Inversion of a Modified Hapke Model Applied to Sampled HCRF from Airborne and Satellite Imagery. Remote Sens. 2018, 10, 1758.

- Uzkent, B.; Rangnekar, A.; Hoffman, M.J. Aerial vehicle tracking by adaptive fusion of hyperspectral likelihood maps. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Honolulu, HI, USA, 21–26 July 2017; pp. 233–242.

- Bhattacharya, S.; Idrees, H.; Saleemi, I.; Ali, S.; Shah, M. Moving object detection and tracking in forward looking infra-red aerial imagery. In Machine Vision beyond Visible Spectrum; Springer: New York, NY, USA, 2011; pp. 221–252.

- Cao, Y.; Wang, G.; Yan, D.; Zhao, Z. Two algorithms for the detection and tracking of moving vehicle targets in aerial infrared image sequences. Remote Sens. 2015, 8, 28.

- Teutsch, M.; Grinberg, M. Robust detection of moving vehicles in wide area motion imagery. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 27–35.

- Cormier, M.; Sommer, L.W.; Teutsch, M. Low resolution vehicle re-identification based on appearance features for wide area motion imagery. In Proceedings of the 2016 IEEE Applications of Computer Vision Workshops (WACVW), Lake Placid, NY, USA, 10 March 2016; pp. 1–7.

- Tuermer, S.; Kurz, F.; Reinartz, P.; Stilla, U. Airborne vehicle detection in dense urban areas using HoG features and disparity maps. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 6, 2327–2337.

- Vodacek, A.; Kerekes, J.P.; Hoffman, M.J. Adaptive Optical Sensing in an Object Tracking DDDAS. Procedia Comput. Sci. 2012, 9, 1159–1166.

- General Dynamics Vector 20 Maritime Pan Tilt. Available online: https://www.gd-ots.com/wp-content/uploads/2017/11/Vector-20-Stabilized-Maritime-Pan-Tilt-System-1.pdf (accessed on 8 October 2018).

- Vectornav VN-300 GPS IMU. Available online: https://www.vectornav.com/products/vn-300 (accessed on 8 October 2018).

- BlueSky AL-3 (15m) Telescopic Mast. Available online: http://blueskymast.com/product/bsm3-w-l315-al3-000/ (accessed on 9 October 2018).

- Labsphere Helios 0.5 m Diameter Integrating Sphere. Available online: https://www.labsphere.com/labsphere-products-solutions/remote-sensing/helios/ (accessed on 9 October 2018).

- Mobley, C.D. Light and Water: Radiative Transfer in Natural Waters; Academic Press: Cambridge, MA, USA, 1994.

- Coifman, B.; Beymer, D.; McLauchlan, P.; Malik, J. A real-time computer vision system for vehicle tracking and traffic surveillance. Transp. Res. Part C Emerg. Technol. 1998, 6, 271–288.

- Hsieh, J.W.; Yu, S.H.; Chen, Y.S.; Hu, W.F. Automatic traffic surveillance system for vehicle tracking and classification. IEEE Trans. Intell. Transp. Syst. 2006, 7, 175–187.

- Kim, Z.; Malik, J. Fast vehicle detection with probabilistic feature grouping and its application to vehicle tracking. In Proceedings of the Ninth IEEE International Conference on Computer Vision, Nice, France, 13–16 October 2003; p. 524.

- Uzkent, B.; Hoffman, M.J.; Vodacek, A. Real-time vehicle tracking in aerial video using hyperspectral features. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 36–44.

- Virginia Coast Reserve Long Term Ecological Research. Available online: https://www.vcrlter.virginia.edu/home2/ (accessed on 10 November 2018).

- National Science Foundation Long Term Ecological Research Network. Available online: https://lternet.edu/ (accessed on 10 November 2018).

- Nicodemus, F.E.; Richmond, J.; Hsia, J.J. Geometrical Considerations and Nomenclature for Reflectance; US Department of Commerce, National Bureau of Standards: Washington, DC, USA, 1977; Volume 160.

- Schaepman-Strub, G.; Schaepman, M.; Painter, T.H.; Dangel, S.; Martonchik, J.V. Reflectance quantities in optical remote sensing—Definitions and case studies. Remote Sens. Environ. 2006, 103, 27–42.

- Bachmann, C.M.; Abelev, A.; Montes, M.J.; Philpot, W.; Gray, D.; Doctor, K.Z.; Fusina, R.A.; Mattis, G.; Chen, W.; Noble, S.D.; et al. Flexible field goniometer system: The goniometer for outdoor portable hyperspectral earth reflectance. J. Appl. Remote Sens. 2016, 10, 036012.

- Harms, J.D.; Bachmann, C.M.; Ambeau, B.L.; Faulring, J.W.; Torres, A.J.R.; Badura, G.; Myers, E. Fully automated laboratory and field-portable goniometer used for performing accurate and precise multiangular reflectance measurements. J. Appl. Remote Sens. 2017, 11, 046014.

- Bachmann, C.M.; Eon, R.S.; Ambeau, B.; Harms, J.; Badura, G.; Griffo, C. Modeling and intercomparison of field and laboratory hyperspectral goniometer measurements with G-LiHT imagery of the Algodones Dunes. J. Appl. Remote Sens. 2017, 12, 012005.

- AgiSoft PhotoScan Professional (Version 1.2.6) (Software). 2016. Available online: http://www.agisoft.com/downloads/installer/ (accessed on 10 November 2018).

- Lorenz, S.; Salehi, S.; Kirsch, M.; Zimmermann, R.; Unger, G.; Vest Sørensen, E.; Gloaguen, R. Radiometric correction and 3D integration of long-range ground-based hyperspectral imagery for mineral exploration of vertical outcrops. Remote Sens. 2018, 10, 176.

- Curcio, J.A.; Petty, C.C. The near infrared absorption spectrum of liquid water. JOSA 1951, 41, 302–304.

- Bachmann, C.M.; Montes, M.J.; Fusina, R.A.; Parrish, C.; Sellars, J.; Weidemann, A.; Goode, W.; Nichols, C.R.; Woodward, P.; McIlhany, K.; Hill, V.; Zimmerman, R.; Korwan, D.; Truitt, B.; Schwarzschild, A. Bathymetry retrieval from hyperspectral imagery in the very shallow water limit: A case study from the 2007 virginia coast reserve (VCR’07) multi-sensor campaign. Marine Geodesy 2010, 33, 53–75.

- Pacheco, A.; Horta, J.; Loureiro, C.; Ferreira, Ó. Retrieval of nearshore bathymetry from Landsat 8 images: A tool for coastal monitoring in shallow waters. Remote Sens. Environ. 2015, 159, 102–116.

- Lyzenga, D.R.; Malinas, N.P.; Tanis, F.J. Multispectral bathymetry using a simple physically based algorithm. IEEE Trans. Geosci. Remote Sens. 2006, 44, 2251–2259.

- Brando, V.E.; Anstee, J.M.; Wettle, M.; Dekker, A.G.; Phinn, S.R.; Roelfsema, C. A physics based retrieval and quality assessment of bathymetry from suboptimal hyperspectral data. Remote Sens. Environ. 2009, 113, 755–770.

- Lee, Z.; Weidemann, A.; Arnone, R. Combined Effect of reduced band number and increased bandwidth on shallow water remote sensing: The case of worldview 2. IEEE Trans. Geosci. Remote Sens. 2013, 51, 2577–2586.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).