Abstract

Hyperspectral (HS) imaging involves the sensing of a scene’s spectral properties, which are often redundant in nature. The redundancy of the information motivates our quest to implement Compressive Sensing (CS) theory for HS imaging. This article provides a review of the Compressive Sensing Miniature Ultra-Spectral Imaging (CS-MUSI) camera, its evolution, and its different applications. The CS-MUSI camera was designed within the CS framework and uses a liquid crystal (LC) phase retarder in order to modulate the spectral domain. The outstanding advantage of the CS-MUSI camera is that the entire HS image is captured from an order of magnitude fewer measurements of the sensor array, compared to conventional HS imaging methods.

1. Introduction

Hyperspectral (HS) imaging has gained increasing interest in many fields and applications. These techniques can be found in airborne and remote sensing applications [1,2,3,4], biomedical and medical studies [5,6,7], food and agricultural monitoring [8,9,10], forensic applications [11,12], and many more. The HS images captured for these applications are usually arranged in three-dimensional (3D) datacubes, which include two dimensions (2D) for the spatial information and one additional dimension (1D) for the spectral information. With a 2D spatial domain of megapixel size and with the third (spectral) dimension typically containing hundreds of spectral bands, the HS data is usually huge. Consequently, its scanning, storage and digital processing are challenging.

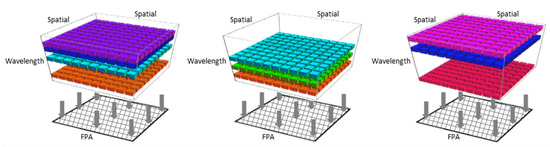

Studies have shown that the huge HS datacubes are often highly redundant [13,14,15,16,17] and, therefore, very compressible or sparse. This gives the incentive to implement Compressive Sensing (CS) theory in HS systems. CS is a sampling framework that facilitates efficient acquisition of sparse signals. Numerous techniques that employ the CS framework for spectral imaging [18,19,20,21,22,23,24,25,26] have been proposed in order to reduce the scanning effort. Most of these techniques involve spatial–spectral multiplexing, which is suitable for CS; however, this multiplexing inevitably impairs both the spatial and spectral domains. In References [27,28], we introduced a novel CS HS camera dubbed the Compressive Sensing Miniature Ultra-Spectral Imaging (CS-MUSI) camera. The CS-MUSI camera overcomes the impairment in the spatial domain by performing only spectral multiplexing without any spatial multiplexing. Figure 1 provides a schematic description of HS datacubes that undergo only spectral multiplexing. The figure presents three examples of a HS datacube modulated at three different exposures. Different spectral multiplexing is obtained by applying different conditions to the modulator. The spectrally multiplexed data is ultimately integrated by a focal plane array (FPA).

Figure 1.

Spectral multiplexing. The figure represents three different examples of spectral multiplexing. Each sub-figure illustrates multiplexing of a few spectral bands onto a FPA.

Within the CS framework, the CS-MUSI camera can reconstruct HS images with hundreds of spectral bands from a number of spectrally multiplexed shots that is an order of magnitude smaller than conventional systems require. Furthermore, the CS-MUSI camera benefits from high optical throughput, small volume, light weight, and reduced acquisition time.

In this article, we review the evolution of the CS-MUSI camera and its different applications. We first describe the concept behind the camera: spectral multiplexing within the CS framework using a liquid crystal (LC) phase retarder, together with the sensing and reconstruction processes. Next, we outline the optical setup of the CS-MUSI camera and its realization. Lastly, we describe different applications of the CS-MUSI camera, including HS staring imaging, HS scanning imaging, 4D imaging, and target detection.

2. Spectral Modulation for CS with LC

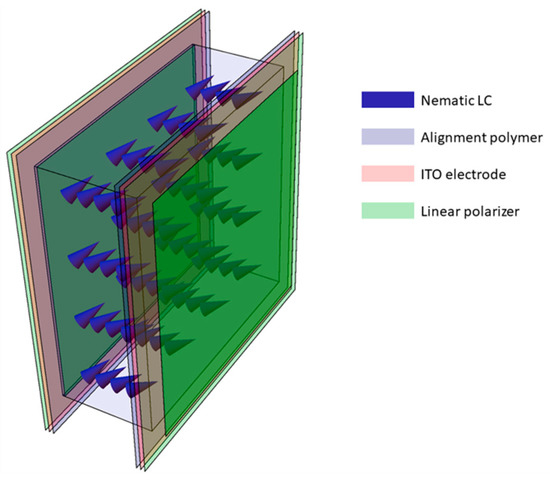

The core of the CS-MUSI camera is a single LC phase retarder (Figure 2), which we designed to work as a spectral modulator that is compliant with the CS framework [27]. By using the LC phase retarder, the signal multiplexing is accomplished entirely in the spectral domain, and no spectral-to-spatial transformations are required. The LC phase retarder is built by placing a LC cell between two polarizers. The spectral transmission is controlled by the voltage applied on the LC cell: the voltage reorients the LC molecules, which changes the effective refractive index seen by the extraordinary wave and hence the cell's birefringence. For the case where the optical axis of the LC cell is at 45° to two perpendicular polarizers, the spectral transmission response of the LC phase retarder can be described by [29]:

$$T_V(\lambda) = \frac{1}{2}\left[1 - \cos\left(\frac{2\pi\,\Delta n(V)\,d}{\lambda}\right)\right] \tag{1}$$

where $\Delta n(V)$ is the birefringence produced by voltage $V$, $d$ is the cell thickness and $\lambda$ is the wavelength. The voltage applied to the LC cell is an AC voltage, usually a sine or square wave with a typical frequency on the order of kHz.

Figure 2.

LC cell phase retarder. The LC phase retarder is made of a nematic LC layer (blue arrow) sandwiched between two glass plates and two linear polarizers (green layers). The glass plates are coated with Indium Tin Oxide (ITO, pink layers) and a polymer alignment layer (purple layers).

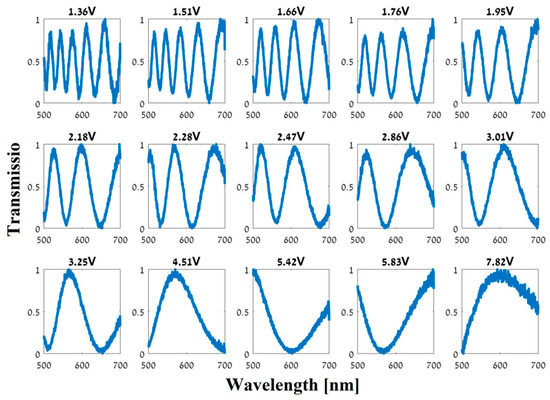

We designed the LC cell to have a relatively thick cavity (tens of microns) to facilitate modulation over a broad spectrum with oscillatory behavior, as can be seen in the different spectral responses presented in Figure 3. The figure presents 15 measured spectral responses for different AC voltages (2 kHz square wave) applied on the LC cell. As the voltage decreases (higher birefringence, $\Delta n(V)$), the number of peaks in the spectral transmission graphs rises. Theoretically, these spectral responses should follow Equation (1), spanning the entire range from 0 to 1 over the whole wavelength range. In practice, however, the modulation depths in Figure 3 are not equal for all the peaks. This is due to the limited quality of the polarizers.
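To make the trend in Figure 3 concrete, the following Python sketch evaluates the ideal transmission of Equation (1) for two birefringence values and counts the transmission peaks between 400 nm and 800 nm. It assumes a 50 μm cell gap (Section 4) and wavelength-independent birefringence values of 0.05 and 0.2, which are illustrative numbers rather than measured properties of E44; the function names are hypothetical.

```python
import numpy as np

def lc_transmission(wavelength_nm, delta_n, thickness_nm):
    """Ideal LC retarder transmission between crossed polarizers (Equation (1)).

    delta_n is treated as wavelength-independent, which ignores the real
    material dispersion (an assumption made only for this illustration).
    """
    phase = 2.0 * np.pi * delta_n * thickness_nm / wavelength_nm
    return 0.5 * (1.0 - np.cos(phase))  # identical to sin^2(phase / 2)

def count_interior_peaks(y):
    """Count local maxima of a sampled curve, ignoring the two end samples."""
    higher_than_left = y[1:-1] > y[:-2]
    not_lower_than_right = y[1:-1] >= y[2:]
    return int(np.count_nonzero(higher_than_left & not_lower_than_right))

wavelengths = np.linspace(400.0, 800.0, 4001)  # 0.1 nm sampling grid
d = 50_000.0                                   # ~50 um cell gap (Section 4), in nm

for dn in (0.05, 0.2):                         # hypothetical birefringence values
    T = lc_transmission(wavelengths, dn, d)
    print(f"delta_n = {dn}: {count_interior_peaks(T)} peaks, "
          f"T within [{T.min():.2f}, {T.max():.2f}]")
```

On this grid the larger birefringence gives 12 interior peaks versus 3 for the smaller one, the same qualitative behavior as the lower-voltage curves in Figure 3. The measured curves also show unequal modulation depths, which this ideal model does not capture.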

Figure 3.

Measured spectral responses (intensity transmission vs. wavelength in nm) of the fabricated LC phase retarder. Each graph represents the spectral modulation with a different voltage applied on the LC cell (15 different voltages).

The acquisition process and the optical scheme of the CS-MUSI camera are shown in Figure 4. The spatial–spectral power distribution of the HS object, $f(x,y,\lambda)$, is modulated by the LC phase retarder transmittance function, $T_{V_i}(\lambda)$ (one of the graphs from Figure 3). The $i$'th modulated spectral signal is spectrally multiplexed and integrated at each pixel of the 2D sensor array, which gives the encoded measurements:

$$g_i(x,y) = \int f(x,y,\lambda)\, T_{V_i}(\lambda)\, d\lambda \tag{2}$$

Figure 4.

(a) CS-MUSI acquisition process. (b) CS-MUSI optical scheme diagram. The HS object is modulated according to $T_{V_i}(\lambda)$, yielding the multiplexed measurement $g_i(x,y)$.

Because the sensor samples discrete values, and for CS analysis and reconstruction purposes, it is more convenient to present the sensing process of Equation (2) in a matrix-vector format. Let us denote the spectral signal with N spectral bands by $\mathbf{f} \in \mathbb{R}^N$ and the multiplexed measured spectral signal with M entries by $\mathbf{g} \in \mathbb{R}^M$. Using these vectors, the measurement process can be described by:

$$\mathbf{g} = \boldsymbol{\Phi}\mathbf{f} \tag{3}$$

where $\boldsymbol{\Phi} \in \mathbb{R}^{M \times N}$ represents the CS sensing matrix; its $i$'th row samples $T_{V_i}(\lambda)$ at the N band centers. Compressive sensing [22,30,31,32] provides a framework to capture and recover signals from fewer measurements than required by the Shannon-Nyquist sampling theorem (i.e., M < N). The CS framework relies on three main ingredients. First, the sparsity of the signal: the spectral signal with N spectral bands can be expressed as $\mathbf{f} = \boldsymbol{\Psi}\boldsymbol{\alpha}$, where $\boldsymbol{\alpha}$ is a K-sparse vector (containing K << N non-zero elements) and $\boldsymbol{\Psi}$ is the sparsifying operator. In accordance with the CS framework, Equation (3) can be written as:

$$\mathbf{g} = \boldsymbol{\Phi}\boldsymbol{\Psi}\boldsymbol{\alpha} = \mathbf{A}\boldsymbol{\alpha} \tag{4}$$

where $\mathbf{A} = \boldsymbol{\Phi}\boldsymbol{\Psi}$. Second, the CS system needs an appropriate sensing design, which is represented by the system sensing matrix, $\boldsymbol{\Phi}$. Third, the CS framework relies on the existence of an appropriate reconstruction algorithm, which we expand upon in the next section.
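As a sketch of how Equation (2) discretizes into Equation (3), the Python code below builds a small sensing matrix whose rows sample the ideal Equation (1) response and applies it to every pixel of a toy datacube. The birefringence values, sizes and random datacube are placeholders (the real sensing matrix comes from the calibration in Section 4.1); the point is that the operation mixes wavelengths within a pixel but never mixes neighboring pixels.

```python
import numpy as np

def lc_transmission(wavelength_nm, delta_n, thickness_nm):
    # Ideal Equation (1) model; the real camera uses a calibrated response map (Section 4.1).
    return 0.5 * (1.0 - np.cos(2.0 * np.pi * delta_n * thickness_nm / wavelength_nm))

rng = np.random.default_rng(0)
N, M = 64, 8                              # spectral bands and LC states (hypothetical)
H, W = 4, 5                               # tiny spatial grid for the demo
wavelengths = np.linspace(410.0, 800.0, N)
delta_ns = np.linspace(0.02, 0.25, M)     # hypothetical birefringence for each voltage V_i

# Row i of Phi samples T_{V_i}(lambda) at the N band centres (d-lambda absorbed into Phi).
Phi = np.stack([lc_transmission(wavelengths, dn, 50_000.0) for dn in delta_ns])

cube = rng.random((H, W, N))              # f(x, y, lambda) as an H x W x N datacube
G = cube @ Phi.T                          # Equation (3) applied to every pixel: (H, W, M)

# The same numbers as the explicit band sum of Equation (2) for one pixel.
g_pixel = np.array([np.sum(cube[1, 2] * Phi[i]) for i in range(M)])

# Perturbing one spatial pixel changes only that pixel's M measurements,
# because the modulation mixes wavelengths but never neighbouring pixels.
cube_changed = cube.copy()
cube_changed[0, 0] += 1.0
changed = np.any(~np.isclose(cube_changed @ Phi.T, G), axis=-1)
print(int(changed.sum()))                 # -> 1
```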

3. Reconstruction Process

The CS-MUSI camera was designed in accordance with the CS framework. Consequently, the captured data is a compressed version of the scene's HS datacube, and a reconstruction process that solves Equation (4) must be performed. Over the years, several CS algorithms have been developed [32,33,34,35,36,37] in order to recover the original signal. A common class of these reconstruction algorithms solves the minimization problem:

$$\hat{\boldsymbol{\alpha}} = \arg\min_{\boldsymbol{\alpha}} \frac{1}{2}\left\|\mathbf{g} - \boldsymbol{\Phi}\boldsymbol{\Psi}\boldsymbol{\alpha}\right\|_2^2 + \tau\left\|\boldsymbol{\alpha}\right\|_1 \tag{5}$$

where $\hat{\boldsymbol{\alpha}}$ is the estimated K-sparse signal, τ is a regularization parameter and $\|\cdot\|_1$ is the $\ell_1$ norm. The algorithms recover the original signal by using the known system sensing matrix, $\boldsymbol{\Phi}$, and the signal sparsifying operator, $\boldsymbol{\Psi}$ [22,37,38,39]. The sparsifying operator can be a mathematical transform (e.g., DCT, wavelets or curvelets) or a learned dictionary; the latter has shown promising results [40] and is described in the next subsection. Common algorithms for solving Equation (5), or closely related regularized problems, include TwIST [33], GPSR [34], SpaRSA [35] and TVAL3 [36].
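The following Python sketch solves Equation (5) with plain iterative soft thresholding (ISTA), a simpler relative of the TwIST and SpaRSA solvers cited above rather than any of them. It assumes an orthonormal DCT as the sparsifying operator and a random Gaussian matrix as a stand-in for the sensing matrix; the sizes, the regularization value τ = 0.01 and the helper names are illustrative choices.

```python
import numpy as np

def dct_basis(n):
    """Orthonormal DCT-II synthesis matrix Psi (columns are atoms, f = Psi @ alpha)."""
    k = np.arange(n)[:, None]                  # frequency index
    i = np.arange(n)[None, :]                  # sample index
    C = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    C[0, :] /= np.sqrt(2.0)                    # DC row scaling makes C orthonormal
    return C.T

def ista(A, g, tau, n_iter=5000):
    """Minimize 0.5 * ||g - A a||_2^2 + tau * ||a||_1 by iterative soft thresholding."""
    step = 1.0 / np.linalg.norm(A, 2) ** 2     # 1 / Lipschitz constant of the gradient
    a = np.zeros(A.shape[1])
    for _ in range(n_iter):
        z = a - step * (A.T @ (A @ a - g))     # gradient step on the data-fidelity term
        a = np.sign(z) * np.maximum(np.abs(z) - step * tau, 0.0)  # soft threshold
    return a

rng = np.random.default_rng(1)
N, M, K = 128, 48, 5                           # bands, shots, sparsity (hypothetical)
Psi = dct_basis(N)
Phi = rng.normal(size=(M, N)) / np.sqrt(M)     # random stand-in for the calibrated Phi
alpha = np.zeros(N)
alpha[rng.choice(N, K, replace=False)] = rng.choice([-1.0, 1.0], K) * (1.0 + rng.random(K))

f = Psi @ alpha                                # K-sparse spectrum in the DCT domain
g = Phi @ f                                    # noiseless measurements, Equation (3)
alpha_hat = ista(Phi @ Psi, g, tau=0.01)       # Equation (5) with A = Phi Psi
f_hat = Psi @ alpha_hat
rel_err = np.linalg.norm(f_hat - f) / np.linalg.norm(f)
print(f"relative error: {rel_err:.3f}")
```

Swapping the DCT for a wavelet transform or a learned dictionary only changes `Psi`; the solver itself sees only the product A = ΦΨ.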

3.1. Dictionary for Sparse Representation

The first ingredient that the CS framework relies on is the sparsity of the signal. The sparser the representation of the signal, the better the CS reconstruction algorithms perform. We found [40] that using a learned dictionary [38] as the sparsifying operator can significantly improve the reconstruction accuracy in comparison to using a mathematical basis. In addition, using a dictionary can reduce both the reconstruction time and the number of measurements required to reconstruct the original signal. A dictionary is a sparsifying operator learned from exemplars. In order to use a dictionary in CS algorithms, a preprocessing stage has to be performed; this is done only once.

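First, a large database of spectral signals, $\mathbf{S}$, is collected. This database contains $Q$ spectra with N spectral bands:

$$\mathbf{S} = \left[\mathbf{s}_1, \mathbf{s}_2, \ldots, \mathbf{s}_Q\right] \in \mathbb{R}^{N \times Q} \tag{6}$$

where $\mathbf{s}_i$ is the i'th spectrum in the database. Then, an over-complete spectral dictionary $\boldsymbol{\Psi}_D \in \mathbb{R}^{N \times Z}$ with $Z > N$ atoms is trained by applying a dictionary-learning algorithm, such as the K-SVD algorithm [38], to the spectral database $\mathbf{S}$:

$$\boldsymbol{\Psi}_D = \mathrm{KSVD}(\mathbf{S}) \tag{7}$$

$\boldsymbol{\Psi}_D$ is a dictionary that relates the spectral data $\mathbf{f}$ to its sparse representation, $\boldsymbol{\alpha}$, through $\mathbf{f} = \boldsymbol{\Psi}_D\boldsymbol{\alpha}$. Each column of $\boldsymbol{\Psi}_D$ is referred to as an atom of the dictionary. Therefore, the spectrum $\mathbf{f}$ can be viewed as a linear combination of atoms in $\boldsymbol{\Psi}_D$ according to the weights in $\boldsymbol{\alpha}$. Based on Equation (7), a corresponding system dictionary $\mathbf{A}_D$ is created by multiplying the CS-MUSI sensing matrix, $\boldsymbol{\Phi}$, with the spectral dictionary (each entry is the inner product of a row of $\boldsymbol{\Phi}$ with an atom):

$$\mathbf{A}_D = \boldsymbol{\Phi}\boldsymbol{\Psi}_D \tag{8}$$

After the dictionary has been prepared, it can be used as the sparsifying operator to reconstruct the original spectral signal by finding the estimated atom weights vector, $\hat{\boldsymbol{\alpha}}$. These weights can be found, for example, by the minimization problem of Equation (5), which becomes:

$$\hat{\boldsymbol{\alpha}} = \arg\min_{\boldsymbol{\alpha}} \frac{1}{2}\left\|\mathbf{g} - \mathbf{A}_D\boldsymbol{\alpha}\right\|_2^2 + \tau\left\|\boldsymbol{\alpha}\right\|_1 \tag{9}$$

Once the atom weights are found, the original spectral signal is estimated by applying them to the spectral dictionary $\boldsymbol{\Psi}_D$ (Equation (7)):

$$\hat{\mathbf{f}} = \boldsymbol{\Psi}_D\hat{\boldsymbol{\alpha}} \tag{10}$$
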
For more details on the utilization of dictionaries for CS-MUSI data reconstruction and its advantages over other sparsifiers, the reader is referred to [40].
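The dictionary-based pipeline of Equations (8)–(10) can be sketched in Python as follows. Two substitutions are assumptions of this sketch: the dictionary is a random unit-norm over-complete matrix standing in for a K-SVD result, and the ℓ1 problem of Equation (9) is replaced by orthogonal matching pursuit (OMP), a greedy sparse coder that is also the sparse-coding step commonly used inside K-SVD. All sizes and names are hypothetical.

```python
import numpy as np

def omp(A, g, k):
    """Orthogonal matching pursuit: greedily pick k columns of A to explain g."""
    norms = np.linalg.norm(A, axis=0)
    residual = g.copy()
    support = []
    coef = np.zeros(0)
    for _ in range(k):
        scores = np.abs(A.T @ residual) / norms    # correlation with each normalized column
        if support:
            scores[support] = -np.inf               # never reselect a chosen atom
        support.append(int(np.argmax(scores)))
        coef, *_ = np.linalg.lstsq(A[:, support], g, rcond=None)
        residual = g - A[:, support] @ coef         # residual orthogonal to chosen atoms
    a = np.zeros(A.shape[1])
    a[support] = coef
    return a

rng = np.random.default_rng(2)
N, M, Z, K = 128, 80, 256, 3                  # bands, shots, atoms, sparsity (hypothetical)
Psi_D = rng.normal(size=(N, Z))
Psi_D /= np.linalg.norm(Psi_D, axis=0)        # unit-norm atoms: stand-in for a K-SVD dictionary
Phi = rng.normal(size=(M, N)) / np.sqrt(M)    # stand-in for the calibrated sensing matrix
A_D = Phi @ Psi_D                             # Equation (8): system dictionary

alpha = np.zeros(Z)
alpha[rng.choice(Z, K, replace=False)] = 1.0 + rng.random(K)
g = A_D @ alpha                               # noiseless compressed spectrum

alpha_hat = omp(A_D, g, K)                    # greedy stand-in for the l1 problem of Equation (9)
f_hat = Psi_D @ alpha_hat                     # Equation (10)
```

Note that the solver only ever touches the M × Z system dictionary; the N-band spectrum is formed at the very end by Equation (10).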

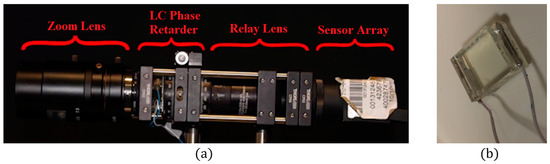

4. Compressive Hyperspectral and Ultra-Spectral Imaging

The CS-MUSI camera we built is shown in Figure 5a. It differs slightly from the optical setup designed and presented in Figure 4, but is optically equivalent. A LC cell is placed in the image plane of a zoom lens, and a 1:1 relay lens conjugates the LC plane onto the 2D sensor array, which avoids the need to attach the LC cell to the sensor array. The optical sensor of the camera is a uEye CMOS UI-3240CP-C-HQ with 1280 × 1024 pixels, a pixel size of 5.3 μm × 5.3 μm and 8-bit grayscale radiometric resolution. The LC cell of the camera (Figure 5b) was manufactured in-house and has a cell gap of approximately 50 μm and a clear aperture of about 8 mm × 8 mm. The LC cell was fabricated from two flat glass plates coated with Indium Tin Oxide (ITO) and a polymer alignment layer, and the cavity is filled with the LC material E44 (Merck, Darmstadt, Germany). Placing the LC cell between two linear polarizers forms the LC phase retarder (Figure 2).

Figure 5.

(a) Realization of the CS-MUSI camera. (b) In-house manufactured LC cell.

4.1. Camera Calibration

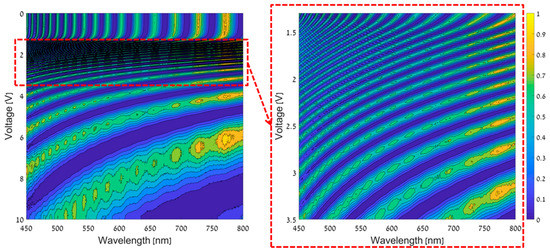

The theoretical expression in Equation (1) cannot be used directly to obtain the system sensing matrix, because the dependence of the birefringence on the voltage and the material dispersion are unknown. Consequently, a one-time calibration process was performed in which the spectral responses of the camera were precisely measured. Using a point light source as the object and replacing the sensor of the camera with a commercial high-precision grating spectrometer, the spectral responses of the camera were measured. The calibration was performed using a halogen light source with a pre-measured spectrum and by applying voltages from 0 V to 10 V on the LC cell in steps of 2 mV. Figure 6 presents the CS-MUSI system's spectral response map that was measured in the calibration process.
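The calibration numbers can be checked with a short Python sketch: a 0–10 V sweep in 2 mV steps yields 5001 voltages, i.e. 5001 candidate rows for the response map. The normalization step, dividing each spectrometer reading by the pre-measured halogen spectrum after an optional dark-frame subtraction, is our assumption of how such a map could be formed; the text does not spell it out, and the spectra below are synthetic.

```python
import numpy as np

# Calibration sweep from the text: 0 V to 10 V in 2 mV steps (integer mV avoids float drift).
voltages_mv = np.arange(0, 10_001, 2)
print(len(voltages_mv))                       # -> 5001 candidate rows in the response map

def response_map(measured, source_spectrum, dark=None):
    """Normalize spectrometer readings into transmission responses.

    measured: (V, N) spectra recorded for each voltage; source_spectrum: (N,)
    pre-measured halogen spectrum; dark: optional (N,) dark reading. Dark
    subtraction and plain division are assumptions of this sketch.
    """
    measured = np.asarray(measured, dtype=float)
    if dark is not None:
        measured = measured - dark
    return measured / source_spectrum[None, :]

# Synthetic check with a made-up source spectrum and made-up responses.
rng = np.random.default_rng(4)
N = 50
source = 1.0 + rng.random(N)                  # hypothetical, strictly positive
true_T = rng.random((6, N))                   # hypothetical responses for 6 voltages
dark = 0.01 * np.ones(N)
readings = true_T * source + dark
T_est = response_map(readings, source, dark)
```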

Figure 6.

CS-MUSI spectral response map for voltages from 0 V to 10 V (left map) and a zoom in on the area where the voltages are from 1.3 V to 3.5 V (right map).

The system sensing matrix, $\boldsymbol{\Phi}$, is obtained by selecting M rows from the CS-MUSI system's spectral response map (Figure 6, left). This is done by using a sequential forward floating selection method [28,41] that aims to achieve a highly incoherent set of measurements.
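The row-selection idea can be illustrated with a simplified Python sketch. It is plain sequential forward selection, without the backward ("floating") steps of SFFS [28,41], and the criterion of picking the row least correlated with the rows already chosen is our assumption of what "incoherent" means here; `forward_select_rows` is a hypothetical helper.

```python
import numpy as np

def forward_select_rows(R, m):
    """Greedily choose m mutually dissimilar rows from a response map R (V x N).

    Assumed criterion: start from the strongest row, then repeatedly add the
    row whose largest |cosine similarity| to the already chosen rows is
    smallest. No backward ('floating') steps are performed.
    """
    U = R / np.linalg.norm(R, axis=1, keepdims=True)        # unit-norm rows
    chosen = [int(np.argmax(np.linalg.norm(R, axis=1)))]
    while len(chosen) < m:
        worst = np.max(np.abs(U @ U[chosen].T), axis=1)     # coherence with chosen set
        worst[chosen] = np.inf                               # never pick a row twice
        chosen.append(int(np.argmin(worst)))
    return sorted(chosen)

# Toy response map: six ideal Equation (1) curves, each stored twice.
wavelengths = np.linspace(410.0, 800.0, 100)
base = np.stack([0.5 * (1.0 - np.cos(2.0 * np.pi * dn * 50_000.0 / wavelengths))
                 for dn in np.linspace(0.03, 0.25, 6)])
R = np.repeat(base, 2, axis=0)       # rows 2k and 2k + 1 are identical
rows = forward_select_rows(R, 6)
print(rows)
```

Because every response in the toy map appears twice, the greedy rule never picks both copies: a duplicate has cosine similarity 1 with its twin, the worst possible score.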

4.2. Staring Mode

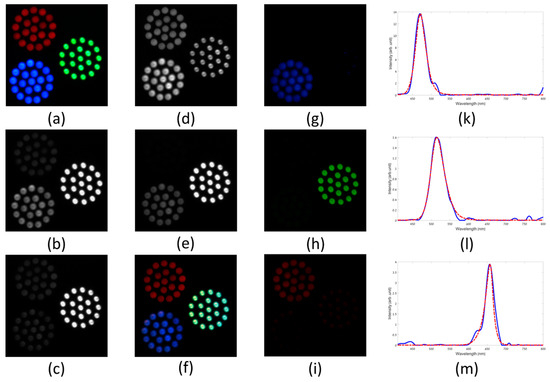

The basic imaging mode of the CS-MUSI camera is the staring mode, in which the camera and scene do not move; scanning-mode acquisition is described in the next subsection. In staring mode, each spectrally multiplexed shot covers the same field-of-view (FOV), and by taking M shots, a compressed HS datacube is captured. Figure 7, Figure 8 and Figure 9 demonstrate the reconstruction of spectral images (HS and ultra-spectral images) attained by the CS-MUSI camera in staring mode. Figure 7 presents the results of an experiment where the emission spectra of three arrays of red, green and blue light sources (Thorlabs LIU001, LIU002 and LIU003 LED arrays) were imaged using the CS-MUSI camera. Figure 7a shows the image of the light sources captured by a standard RGB color camera. The imaging experiment was performed by capturing 32 spectrally multiplexed images of 1024 × 1280 pixels. Figure 7b–e shows four captured images that represent four single grayscale frames from the spectrally compressed measurements. The images show the total optical intensity that passed through the LC phase retarder and was collected by the sensor array at a given shot with a given LC voltage. From the captured data, a window of 700 × 700 pixels was used in the reconstruction process. Using the TwIST solver [33] and an orthogonal Daubechies-5 wavelet as the sparsifying operator, a HS datacube with 391 spectral bands (410–800 nm) was reconstructed, yielding a compression ratio of about 12:1. Figure 7f presents a pseudo-color image obtained by projecting the reconstructed HS datacube onto the RGB space. Figure 7g–i displays three images from the reconstructed datacube at different wavelengths (460 nm, 520 nm and 650 nm). Figure 7k–m demonstrates spectrum reconstruction for three points in the HS datacube and a comparison with the spectra of the three respective LEDs measured with a commercial grating-based spectrometer.
The reconstruction PSNR is 32.4 dB, 34.8 dB and 27.9 dB for the blue, green and red LED points, respectively.

Figure 7.

Staring mode reconstruction result of three LED arrays. (a) RGB color image of three LED arrays that were used as objects to be imaged with CS-MUSI. (b–e) Representative single exposure images for LC cell voltage of 0 V, 5.8373 V, 7.6301 V and 8.6552 V, respectively. (f) RGB representation of the reconstructed HS image (700 × 700 pixels × 391 bands). (g–i) Reconstructed images at 460 nm, 520 nm and 650 nm, respectively. (k–m) Spectrum reconstruction for three points in the HS datacube and comparison to the measured spectra of the three respective LEDs with a commercial grating-based spectrometer.

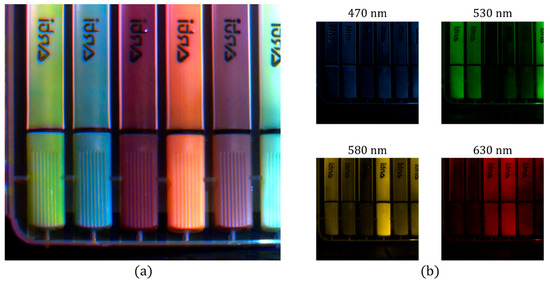

Figure 8.

Staring mode reconstruction result of six different markers. (a) RGB representation of the reconstructed HS image (800 × 900 pixels × 1171 bands). (b) Four reconstructed images at four different wavelengths (470 nm, 530 nm, 580 nm, and 630 nm).

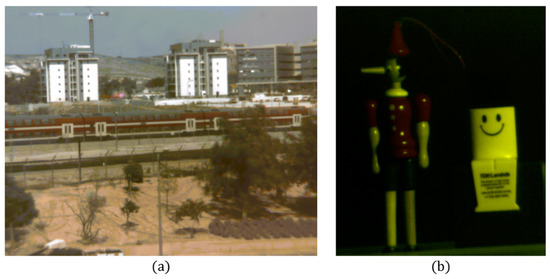

Figure 9.

Staring mode reconstruction results, obtained with the learned dictionary, of (a) outdoor and (b) indoor HS images taken with the CS-MUSI camera. The figures show RGB representations of the reconstructed HS datacubes.

Figure 8 presents an indoor scene where six different markers are imaged using the CS-MUSI camera. The imaging experiment was performed by capturing 100 spectrally multiplexed images containing 1024 × 1280 pixels. From the captured data, a window of 800 × 900 pixels was used in the reconstruction process. Using the TwIST solver [33] and orthogonal Daubechies-5 wavelet as the sparsifying operator, a HS datacube with 1171 spectral bands (410–800 nm) was restored, yielding a compression ratio of almost 12:1. Figure 8a presents a pseudo-color image obtained by projecting the reconstructed HS datacube onto the RGB space. Figure 8b displays four images from the reconstructed datacube at different wavelengths (470 nm, 530 nm, 580 nm and 630 nm).

The quality of the reconstructed HS images and the reconstruction time depend significantly on the sparsifying operator. We found that using a learned dictionary as the sparsifying operator [40] improves the quality and shortens the reconstruction time. Figure 9 illustrates the reconstruction of HS images attained by the CS-MUSI camera using a spectral dictionary as the sparsifying operator (Section 3.1). The size of this dictionary was atoms and was computed by using 100,000 spectrum exemplars taken from a large database of HS images [42] and from a library of different spectra [40,43]. The HS images reconstructed from the CS-MUSI camera span 500 nm to 700 nm with 579 spectral bands, and were reconstructed from only 32 measurements, yielding a compression ratio of approximately 18:1. Figure 9a,b shows RGB representations of the reconstructed HS images of outdoor and indoor scenes, respectively.
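The compression ratios quoted in this section follow directly from dividing the number of reconstructed spectral bands by the number of spectrally multiplexed shots, as this short Python check shows (the dictionary keys are only labels).

```python
# Compression ratio = reconstructed spectral bands / spectrally multiplexed shots.
experiments = {
    "LED arrays (Figure 7)": (391, 32),
    "markers (Figure 8)": (1171, 100),
    "dictionary-based (Figure 9)": (579, 32),
}
ratios = {name: bands / shots for name, (bands, shots) in experiments.items()}
for name, ratio in ratios.items():
    print(f"{name}: {ratio:.1f}:1")
```

Because the compression is purely spectral, the same ratio applies to every spatial pixel independently of the spatial window size.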

4.3. Scanning Mode

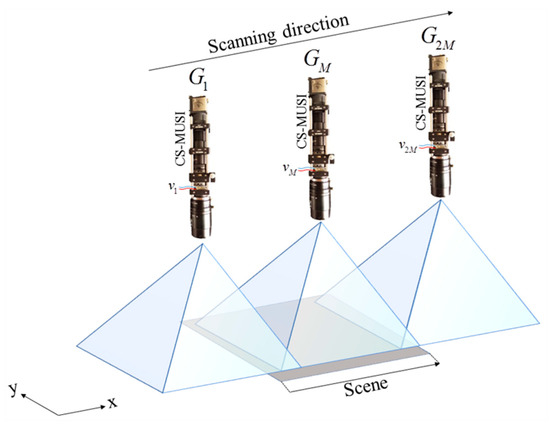

The CS-MUSI camera can also be applied in a mode where the camera, the scene, or objects in the scene are not stationary [44]. Such scenarios include microscope applications with moving cells or scanning platforms, and airborne and remote sensing systems. By capturing a sequence of spectrally multiplexed shots and tracking the object, it is possible to reconstruct HS data after an appropriate registration. The first requirement is that each object point appears in M shots. For example, in the case of along-track scanning [44] (Figure 10), the CS-MUSI camera needs 2M measurements in order to capture a scene the size of the camera FOV. A second requirement is image registration, since the FOV of each shot is slightly different. As a result, before solving Equation (4), all the measured images must be registered to a common spatial grid. This can be done with one of the many available algorithms [45,46,47].
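The 2M requirement can be reproduced with simple bookkeeping. The Python sketch below assumes an idealized along-track geometry (the camera advances a fixed number of columns per shot and the FOV is exactly M shifts wide) and cycles through M voltage indices; all names and sizes are hypothetical.

```python
def views_of_column(c, fov_width, shift, n_shots):
    """Shot indices in which scene column c lies inside the field of view.

    Hypothetical along-track geometry: at shot i the FOV covers scene columns
    [i*shift - fov_width + 1, i*shift], so the scene enters from one edge and
    the camera advances `shift` columns per shot.
    """
    return [i for i in range(n_shots) if i * shift - fov_width + 1 <= c <= i * shift]

def ready_shot(c, fov_width, shift, M, n_shots):
    """Index of the shot at which column c has been seen M times (None if never)."""
    views = views_of_column(c, fov_width, shift, n_shots)
    return views[M - 1] if len(views) >= M else None

M = 4                                   # voltages per cycle (100 in the experiment)
shift = 1                               # columns advanced per shot (hypothetical)
fov = M * shift                         # FOV width giving each column exactly M views
n_shots = 3 * M
voltage_index = [i % M for i in range(n_shots)]   # voltages applied cyclically

# A scene as wide as the FOV: its last column is complete only after 2M - 1 shots.
shots_needed = ready_shot(fov - 1, fov, shift, M, n_shots) + 1
print(shots_needed)                     # -> 7
```

With this geometry, any M consecutive shots contain every voltage exactly once, so cycling the voltage set gives each scene column a complete measurement set. The last column of a FOV-wide scene is complete after 2M − 1 shots, i.e. about 2M, and no column is complete before the M-th shot.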

Figure 10.

CS-MUSI camera along-track scanning. Each shot of the CS-MUSI camera, $g_i$, captures a shifted scene with a different LC spectral transmission (which depends on the voltage $V_i$).

Figure 11 shows experimental results that demonstrate the ability to reconstruct HS images in scanning mode. In this experiment, the scanning is along-track [44], which is similar to the push broom scanning technique [3]. The spectrally multiplexed acquisition was conducted while the CS-MUSI camera was moving in front of three arrays of LED light sources (Thorlabs LIU001, LIU002, and LIU003) (Figure 11a). While the CS-MUSI camera was moving, a set of 100 voltages between 0 V and 10 V was applied cyclically. Figure 11b–e presents four representative spectrally multiplexed intensity measurements (shot #30, #100, #150 and #300, respectively) from a total of 300 measurements (Supplementary Materials). These images illustrate the along-track scanning of the camera from right to left: in the first of these shots, only part of the blue and red LEDs appears in the spectrally multiplexed image, and in the last only the green LED appears.

Figure 11.

Scanning mode (Figure 10) reconstruction result. (a) RGB color image of three LED arrays. (b–e) representative single exposure images (frame #30, #90, #150 and #300, respectively) and (f–i) the RGB representation of the reconstructed HS image up to the appropriate column.

Since the reconstruction was performed column by column [48], it can start before the scanning process is completed: once a scene column has been measured in M = 100 shots, it can be reconstructed. Figure 11f–i shows an RGB representation of the reconstructed HS images up to different shot numbers. At 30 shots (Figure 11f), no image column can be reconstructed, because the total number of shots is smaller than M, so no object column has been measured enough times. After M = 100 shots, the first columns have been measured M times and can be reconstructed. In this example, the HS image was reconstructed using the SpaRSA solver [35] and an orthogonal Daubechies-4 wavelet as the sparsifying operator. From the 100 shots of each column, a HS image with 1171 spectral bands (410–800 nm) was restored, yielding a compression ratio of almost 12:1.

5. 4D Imaging

Integrating the CS-MUSI camera with an appropriate 3D imaging technique yields a four-dimensional (4D) camera that can efficiently capture 3D spatial images together with their spectral information [49,50,51,52]. Joint spectral and volumetric data can be very useful for object shape detection [51,52] and material classification in various engineering and medical applications. The CS approach reduces the acquisition effort associated with the huge dimensionality of the 4D spectral-volumetric data.

For 3D imaging we used Integral Imaging (InIm) [53,54,55,56], since its implementation is relatively simple. The first step of InIm is the acquisition of an actual 3D scene. In this step, multiple 2D images from slightly different perspectives are captured. Each of these images is called an elemental image (EI). Generally, the acquisition process can be implemented by a lenslet array (or pinhole array) or by synthetic aperture InIm. Synthetic aperture InIm (Figure 12) can be realized by an array of cameras distributed on the image plane, or by a single camera that moves perpendicularly to the system's optical axis. Replacing the moving camera with our CS-MUSI camera enables capturing 3D spatial images together with their spectral information. Using the captured InIm data, it is possible to synthesize depth maps, virtual perspectives and refocused images.

Figure 12.

Compressive HS synthetic aperture InIm acquisition setup.

After the data are acquired with the moving CS-MUSI camera and the spectral information is reconstructed from its compressed version, the 3D image for each spectral channel (in terms of a focal stack) can be reconstructed numerically in different ways [49,56,57,58]. One of the most popular methods is based on back-projection, also known as shift-and-add. In the case of synthetic aperture InIm, the refocusing process can be performed as follows [49]:

$$R(x,y,z,\lambda) = \frac{1}{O(x,y)}\sum_{j=1}^{P} E_j\!\left(x - \frac{S_{x,j}}{z},\; y - \frac{S_{y,j}}{z},\; \lambda\right) \tag{11}$$

where $R(x,y,z,\lambda)$ is the reconstructed data tesseract at depth $z$, and $E_j(x,y,\lambda)$ is the EI at wavelength λ showing the perspective from camera $j$. $O(x,y)$ is a normalizing matrix that normalizes each pixel in the image according to the number of EIs that the pixel appears in, and P is the overall number of EIs. $S_{x,j}$ and $S_{y,j}$ are the scaled sizes of the shifts of the CS-MUSI camera at position $j$ in the horizontal and vertical directions [49].
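A minimal Python sketch of Equation (11) is given below for horizontal camera motion only, with shifts rounded to whole pixels and applied to all spectral bands at once. The scaled shifts, image sizes and the helper name `shift_and_add` are illustrative assumptions, not the implementation of [49].

```python
import numpy as np

def shift_and_add(eis, scaled_shifts, z):
    """Refocus synthetic-aperture elemental images at depth z (sketch of Equation (11)).

    eis: array (P, H, W, B) of reconstructed EIs with B spectral bands each.
    scaled_shifts: length-P horizontal shifts S_x,j in hypothetical units chosen
    so that S_x,j / z is a shift in pixels. Vertical shifts are taken as zero
    and shifts are rounded to whole pixels to keep the sketch short.
    """
    P, H, W, B = eis.shape
    shifts = np.rint(np.asarray(scaled_shifts, dtype=float) / z).astype(int)
    shifts -= shifts.min()                       # non-negative offsets into the canvas
    width = W + shifts.max()
    total = np.zeros((H, width, B))
    overlap = np.zeros((H, width, 1))            # O(x, y): number of EIs covering each pixel
    for ei, dx in zip(eis, shifts):
        total[:, dx:dx + W] += ei                # accumulate E_j(x - dx)
        overlap[:, dx:dx + W] += 1.0
    return total / np.maximum(overlap, 1.0)

# Synthetic check: an object plane at z = 30 seen by P = 4 cameras.
rng = np.random.default_rng(5)
P, H, W, B = 4, 3, 10, 2
S = 60.0 * np.arange(P)                          # hypothetical scaled shifts S_x,j
scene = rng.random((H, W + 6, B))
eis = np.stack([scene[:, 2 * j:2 * j + W] for j in range(P)])  # camera j sees a 2j-pixel offset
in_focus = shift_and_add(eis, S, z=30.0)         # shifts 0, 2, 4, 6 pixels
out_of_focus = shift_and_add(eis, S, z=15.0)     # shifts 0, 4, 8, 12 pixels
```

Refocusing at the depth that matches the simulated camera offsets recovers the scene exactly, while a wrong depth averages misaligned copies; this defocus blur is what separates objects at different depths in a focal stack.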

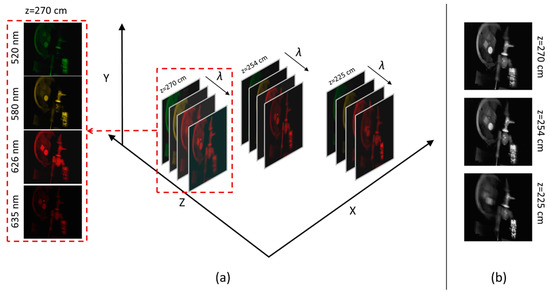

In the 4D imaging application, we acquired spectrally compressed images with our CS-MUSI camera from six perspectives, capturing 29 compressed images at each perspective. Then, using the TwIST solver [27], a HS datacube with 261 spectral bands (430–690 nm) was reconstructed. Next, we generated refocused images at different depths using a shift-and-add algorithm [49]. The data can be ordered as a tesseract, as shown in Figure 13. Figure 13a demonstrates the reconstruction results at three selected depths for four selected wavelengths. The zoom on the HS datacube at the depth of 270 cm illustrates the spectral reconstruction quality: the laser beam appears clearly only at 635 nm, whereas it is completely filtered out in the other spectral bands (520 nm, 580 nm and 626 nm). The grayscale images in Figure 13b show that the closest object was a green alien toy, whose best focus is at 225 cm; the Pinocchio toy's best focus is at 254 cm, and the best focus of the different colored shape objects and the red laser is at 270 cm.

Figure 13.

(a) 4D Spectro-Volumetric imaging. (b) Grayscale representation of HS images at three different depths (225 cm, 254 cm and 270 cm).

6. Target Detection

One key use of spectral imagery is subpixel target detection, in which an a priori known spectral signature is sought in each pixel of the spectral datacube. Previous research addressed target detection in the reconstructed domain [59], but in the case of the CS-MUSI camera, target detection can be applied directly in the compressed domain [60,61], since the CS-MUSI camera performs compression only in the spectral domain, without any spatial multiplexing. Compared with non-compressive systems, this reduces processing time and memory storage by around an order of magnitude.

In order to test the subpixel target detection performance, we used the matched filter (MF) algorithm [62], which can be derived by maximizing the SNR, or simply by considering two hypotheses, as shown in Equation (12):

$$H_0: \mathbf{x} = \mathbf{b}, \qquad H_1: \mathbf{x} = \mathbf{b} + \mathbf{t} \tag{12}$$

where $H_0$ assumes that no target, $\mathbf{t}$, is present in the pixel and the pixel contains only background, $\mathbf{b}$. $H_1$ assumes that both background and target are present in the pixel. For simplicity, both hypotheses are modeled as multidimensional Gaussian distributions.

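By applying the log-likelihood ratio test for $H_0$ and $H_1$, we may derive the MF, which is equal to:

$$\mathrm{MF}(\mathbf{x}) = (\mathbf{t} - \mathbf{m})^T \boldsymbol{\Sigma}^{-1} (\mathbf{x} - \mathbf{m}) \tag{13}$$

where $\mathbf{x}$ is the pixel signature, $\mathbf{t}$ is the target spectral signature and $\mathbf{m}$ is the estimated background mean. $\boldsymbol{\Sigma}$ is the covariance matrix, which holds the statistics of the background and can be approximated using:

$$\boldsymbol{\Sigma} = \frac{1}{L}\sum_{i=1}^{L} (\mathbf{x}_i - \mathbf{m})(\mathbf{x}_i - \mathbf{m})^T \tag{14}$$

where L is the number of pixels in the datacube. For a pixel that does not include the target, the MF takes the form of Equation (13). However, when the target is present, we use an additive model, as shown in Equation (15), and the MF takes the form of Equation (16):

$$\mathbf{x}' = \mathbf{x} + p\,\mathbf{t} \tag{15}$$

$$\mathrm{MF}(\mathbf{x}') = (\mathbf{t} - \mathbf{m})^T \boldsymbol{\Sigma}^{-1} (\mathbf{x} - \mathbf{m}) + p\,(\mathbf{t} - \mathbf{m})^T \boldsymbol{\Sigma}^{-1} \mathbf{t} \tag{16}$$

where $\mathbf{x}'$ is a pixel that contains the target and p is the ratio of the target present in the pixel.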
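The following Python sketch evaluates Equations (13)–(16) on a synthetic background and then repeats the calculation after compressing every pixel spectrum with a stand-in sensing matrix, which is what detection in the compressed domain amounts to. The data, target signature, target fraction p = 0.1 and matrix sizes are made up for illustration.

```python
import numpy as np

def matched_filter(X, t):
    """Matched-filter scores, Equation (13), for every pixel spectrum (row) of X.

    The background mean m and covariance Sigma (Equation (14)) are estimated
    from all L rows of X, as described in the text.
    """
    m = X.mean(axis=0)
    D = X - m
    Sigma = D.T @ D / X.shape[0]                # Equation (14) with 1/L normalization
    w = np.linalg.solve(Sigma, t - m)           # Sigma^{-1} (t - m); Sigma is symmetric
    return D @ w, m, Sigma, w                   # (x - m)^T Sigma^{-1} (t - m) per pixel

rng = np.random.default_rng(3)
L, N, M = 2000, 60, 15                          # pixels, bands, LC shots (hypothetical)
mix = rng.normal(size=(N, N)) / np.sqrt(N)
X = rng.normal(size=(L, N)) @ mix.T + 0.1 * rng.normal(size=(L, N)) + 5.0  # synthetic background
t = 5.0 + rng.normal(size=N)                    # hypothetical target signature
p = 0.1                                         # fraction of the pixel occupied by the target

scores, m, Sigma, w = matched_filter(X, t)
x_implanted = X[0] + p * t                      # Equation (15)
mf_implanted = (x_implanted - m) @ w
gain = p * (t @ w)                              # extra term p (t - m)^T Sigma^{-1} t of Equation (16)

# Compressed domain: every pixel spectrum is replaced by its M CS-MUSI-like measurements.
Phi = rng.random((M, N))                        # stand-in non-negative sensing matrix
scores_c, m_c, Sigma_c, w_c = matched_filter(X @ Phi.T, Phi @ t)
```

In the compressed domain the covariance is M × M instead of N × N, so solving with it is correspondingly cheaper; this is where the speed-up of compressed-domain detection comes from, although the exact gain depends on the implementation.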

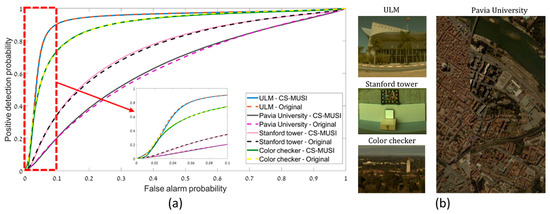

In order to assess the algorithm's performance, we adopt the performance metric described in References [60,63]. We then compare the Receiver Operating Characteristics (ROC) curve of the algorithm applied to an original HS datacube with the ROC curve of the same algorithm applied to a simulated compressed CS-MUSI datacube. The ROC curve presents the probability of detection as a function of the false alarm probability, obtained by sweeping a threshold over the calculated MF values. The simulation is performed by applying a measured CS-MUSI sensing matrix, $\boldsymbol{\Phi}$, to the spectrum of each pixel of the HS datacube.
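A generic way to obtain ROC points from detector scores is sketched below in Python: sweep a threshold over all observed scores and record the fraction of target and background pixels above it. This is not the specific performance metric of References [60,63], and the function names are hypothetical.

```python
import numpy as np

def roc_curve(scores_background, scores_target):
    """ROC points (Pfa, Pd) obtained by sweeping a threshold over detector scores."""
    thresholds = np.unique(np.concatenate([scores_background, scores_target]))[::-1]
    thresholds = np.concatenate([[np.inf], thresholds])   # start at (0, 0)
    pfa = np.array([np.mean(scores_background >= th) for th in thresholds])
    pd = np.array([np.mean(scores_target >= th) for th in thresholds])
    return pfa, pd

def area_under_curve(pfa, pd):
    """Trapezoidal area under the ROC curve (pfa is non-decreasing here)."""
    return float(np.sum(np.diff(pfa) * (pd[1:] + pd[:-1]) / 2.0))

pfa, pd = roc_curve(np.array([0.0, 1.0, 2.0, 3.0]), np.array([4.0, 5.0, 6.0, 7.0]))
print(area_under_curve(pfa, pd))   # -> 1.0 (perfect separation)
```

Feeding it the MF scores of background pixels and of pixels with an implanted target, once for the original datacube and once for the compressed one, gives the kind of ROC pair compared in Figure 14.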

Figure 14 presents results of CS-MUSI camera target detection performance. Figure 14a compares ROC curves obtained from conventional HS datacubes (solid lines) with those obtained with the CS-MUSI camera (dotted lines). Four pairs of ROC curves are presented for the four images shown in Figure 14b (from [42,64,65]). The compression ratios of the compressed datacubes ranged from 3.5:1 (lowest) to 25:1 (highest). The ROC curves show that the detection performance is not degraded by the compression. Moreover, detection in the compressed HS datacubes is faster due to the lower computational complexity.

Figure 14.

(a) Comparison of ROC curves for target detection in conventional (dotted lines) and CS-MUSI (solid lines) HS datacubes. (b) RGB representation of the four HS datacubes in the comparison [42,64,65].

7. Discussion

We have reviewed the CS-MUSI camera [28], its evolution, and its different applications. We demonstrated reconstruction of HS images both when the camera and scene are stationary and when the camera moves in the along-track direction. Furthermore, we demonstrated the ability to use the CS-MUSI camera for 4D spectral-volumetric imaging. Experiments in these scenarios and applications have demonstrated compressibility of at least an order of magnitude. Moreover, the results provide a spectral uncertainty of less than one nanometer; e.g., in Reference [28] we demonstrated an example with a spectral localization accuracy of 0.44 ± 0.04 nm.

Additionally, we showed that the target detection algorithm performs similarly with the CS-MUSI camera as with traditional HS systems, even though the CS-MUSI data is up to an order of magnitude smaller than conventional HS datacubes. Another important advantage of the CS-MUSI camera is its high optical throughput, due to Fellgett's multiplex advantage [66]. Furthermore, the CS-MUSI camera (Figure 5a) can be made small and lightweight by fabricating the LC cell so that it is attached directly to the sensor array.

It should be mentioned that the reviewed CS-MUSI camera has some limitations. The response time of a thick LC cell is relatively slow (on the order of a few seconds), which limits the acquisition frame rate. This limitation can be reduced by operating the cell in its transition state or with specially designed electronic driving functions, or by using faster LC structures such as ferroelectric LCs. Another limitation of the camera is the large computational resources required for processing the data. This limitation can be mitigated by parallel processing on GPU or multi-core CPU systems. Additionally, as with any HS processing algorithm, our reconstruction algorithm demands high memory capacity, since the storage of HS images can require gigabit-sized memory. The additional memory requirements associated with the CS implementation are negligible compared to those of the HS data storage. From a theoretical CS point of view, the absence of encoding in the spatial domain can be viewed as a limitation, since the compression obtained with spectral encoding alone is lower than could theoretically be obtained with encoding in all three spatial–spectral dimensions [18]. On the other hand, the lack of spatial encoding makes it possible to maintain the full spatial resolution, allows parallel processing and facilitates the spectral imaging of moving objects.

The method of spectral multiplexing used in the CS-MUSI camera was carried out with a LC phase retarder as the spectral modulator. This method can also be realized with other spectral modulators [67]. In References [68,69], we used a modified Fabry-Perot resonator (mFPR), which has a much faster response time than the LC cell, for spectrometry [68] and for imaging [69]. Spectral multiplexing can also be performed in parallel to achieve a snapshot HS camera: in Reference [70], we presented a snapshot compressive HS camera that uses an array of mFPRs together with a lens array to acquire an array of spectrally multiplexed sub-images.

8. Patents

A patent related to the work presented here has been granted (patent number US10036667).

Supplementary Materials

The following are available online at http://www.mdpi.com/2313-433X/5/1/3/s1, Video S1: Along-track scanning measurement. Each frame represents a multiplexed intensity measurement (total of 300 shots).

Author Contributions

Conceptualization, I.A. and A.S.; methodology, I.A. and Y.O.; software, I.A., Y.O., V.F. (Section 6) and D.G. (Section 7); formal analysis, I.A. and Y.O.; investigation, I.A., Y.O., V.F. (Section 6) and D.G. (Section 7); data curation, I.A. and Y.O.; writing—original draft preparation, Y.O. and A.S.; writing—review and editing, I.A., V.F. and D.G.; supervision, A.S.; project administration, A.S.; funding acquisition, A.S.

Funding

This research was funded by the Ministry of Science, Technology and Space, Israel, grant numbers 3-18410 and 3-13351.

Acknowledgments

We wish to thank Ibrahim Abdulhalim’s research group (Department of Electro-Optical Engineering and The Ilse Katz Institute for Nanoscale Science and Technology, Ben-Gurion University) for providing the liquid crystal cell.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Schott, J.R. Remote Sensing: The Image Chain Approach; Oxford University Press: Oxford, UK, 2007.

2. Borengasser, M.; Hungate, W.S.; Watkins, R. Hyperspectral Remote Sensing: Principles and Applications; CRC Press: Boca Raton, FL, USA, 2007.

3. Eismann, M.T. Hyperspectral Remote Sensing; SPIE Press: Bellingham, WA, USA, 2012.

4. Bioucas-Dias, J.M.; Plaza, A.; Camps-Valls, G.; Scheunders, P.; Nasrabadi, N.; Chanussot, J. Hyperspectral remote sensing data analysis and future challenges. IEEE Geosci. Remote Sens. Mag. 2013, 1, 6–36.

5. Akbari, H.; Halig, L.; Schuster, D.M.; Fei, B.; Osunkoya, A.; Master, V.; Nieh, P.; Chen, G. Hyperspectral imaging and quantitative analysis for prostate cancer detection. J. Biomed. Opt. 2012, 17, 076005.

6. Lu, G.; Fei, B. Medical hyperspectral imaging: A review. J. Biomed. Opt. 2014, 19, 010901.

7. Calin, M.A.; Parasca, S.V.; Savastru, D.; Manea, D. Hyperspectral imaging in the medical field: Present and future. Appl. Spectrosc. Rev. 2014, 49, 435–447.

8. Sun, D.W. Hyperspectral Imaging for Food Quality Analysis and Control; Academic Press/Elsevier: San Diego, CA, USA, 2010.

9. Kamruzzaman, M.; ElMasry, G.; Sun, D.; Allen, P. Non-destructive prediction and visualization of chemical composition in lamb meat using NIR hyperspectral imaging and multivariate regression. Innov. Food Sci. Emerg. Technol. 2012, 16, 218–226.

10. ElMasry, G.; Kamruzzaman, M.; Sun, D.; Allen, P. Principles and applications of hyperspectral imaging in quality evaluation of agro-food products: A review. Crit. Rev. Food Sci. Nutr. 2012, 52, 999–1023.

11. Li, B.; Beveridge, P.; O’Hare, W.T.; Islam, M. The application of visible wavelength reflectance hyperspectral imaging for the detection and identification of blood stains. Sci. Justice 2014, 54, 432–438.

12. Yang, J.; Messinger, D.W.; Dube, R.R. Bloodstain detection and discrimination impacted by spectral shift when using an interference filter-based visible and near-infrared multispectral crime scene imaging system. Opt. Eng. 2018, 57, 033101.

13. Brook, A.; Ben-Dor, E. A spatial/spectral protocol for quality assurance of decompressed hyperspectral data for practical applications. In Proceedings of the 2010 2nd Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing (WHISPERS), Reykjavik, Iceland, 14–16 June 2010; pp. 1–4.

14. Li, C.; Sun, T.; Kelly, K.F.; Zhang, Y. A compressive sensing and unmixing scheme for hyperspectral data processing. IEEE Trans. Image Process. 2012, 21, 1200–1210.

15. August, Y.; Vachman, C.; Stern, A. Spatial versus spectral compression ratio in compressive sensing of hyperspectral imaging. In Compressive Sensing II, Proceedings of the SPIE Defense, Security, and Sensing 2013, Baltimore, MD, USA, 29 April–3 May 2013; SPIE: Bellingham, WA, USA, 2013; Volume 8717.

16. Willett, R.M.; Duarte, M.F.; Davenport, M.; Baraniuk, R.G. Sparsity and structure in hyperspectral imaging: Sensing, reconstruction, and target detection. IEEE Signal Process. Mag. 2014, 31, 116–126.

17. Parkkinen, J.; Hallikainen, J.; Jaaskelainen, T. Characteristic spectra of surface Munsell colors. J. Opt. Soc. Am. A 1989, 6, 318–322.

18. August, Y.; Vachman, C.; Rivenson, Y.; Stern, A. Compressive hyperspectral imaging by random separable projections in both the spatial and the spectral domains. Appl. Opt. 2013, 52, D46–D54.

19. Stern, A.; Yitzhak, A.; Farber, V.; Oiknine, Y.; Rivenson, Y. Hyperspectral Compressive Imaging. In Proceedings of the 2013 12th Workshop on Information Optics (WIO), Puerto de la Cruz, Spain, 15–19 July 2013; pp. 1–3.

20. Lin, X.; Wetzstein, G.; Liu, Y.; Dai, Q. Dual-coded compressive hyperspectral imaging. Opt. Lett. 2014, 39, 2044–2047.

21. Arce, G.R.; Brady, D.J.; Carin, L.; Arguello, H.; Kittle, D.S. Compressive coded aperture spectral imaging: An introduction. IEEE Signal Process. Mag. 2014, 31, 105–115.

22. Stern, A. Optical Compressive Imaging; CRC Press: Boca Raton, FL, USA, 2016.

23. Golub, M.A.; Averbuch, A.; Nathan, M.; Zheludev, V.A.; Hauser, J.; Gurevitch, S.; Malinsky, R.; Kagan, A. Compressed sensing snapshot spectral imaging by a regular digital camera with an added optical diffuser. Appl. Opt. 2016, 55, 432–443.

24. Arce, G.R.; Rueda, H.; Correa, C.V.; Ramirez, A.; Arguello, H. Snapshot compressive multispectral cameras. In Wiley Encyclopedia of Electrical and Electronics Engineering; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2017; pp. 1–22.

25. Saragadam, V.; Wang, J.; Li, X.; Sankaranarayanan, A.C. Compressive spectral anomaly detection. In Proceedings of the 2017 IEEE International Conference on Computational Photography (ICCP), Stanford, CA, USA, 12–14 May 2017; pp. 1–9.

26. Wang, X.; Zhang, Y.; Ma, X.; Xu, T.; Arce, G.R. Compressive spectral imaging system based on liquid crystal tunable filter. Opt. Express 2018, 26, 25226–25243.

27. August, Y.; Stern, A. Compressive sensing spectrometry based on liquid crystal devices. Opt. Lett. 2013, 38, 4996–4999.

28. August, I.; Oiknine, Y.; AbuLeil, M.; Abdulhalim, I.; Stern, A. Miniature Compressive Ultra-spectral Imaging System Utilizing a Single Liquid Crystal Phase Retarder. Sci. Rep. 2016, 6, 23524.

29. Yariv, A.; Yeh, P. Optical Waves in Crystals; Wiley: New York, NY, USA, 1984.

30. Candès, E.J.; Romberg, J.; Tao, T. Robust uncertainty principles: Exact signal reconstruction from highly incomplete frequency information. IEEE Trans. Inf. Theory 2006, 52, 489–509.

31. Donoho, D.L. Compressed sensing. IEEE Trans. Inf. Theory 2006, 52, 1289–1306.

32. Eldar, Y.C.; Kutyniok, G. Compressed Sensing: Theory and Applications; Cambridge University Press: Cambridge, UK, 2012; ISBN 9781107005587.

33. Bioucas-Dias, J.M.; Figueiredo, M.A. A new TwIST: Two-step iterative shrinkage/thresholding algorithms for image restoration. IEEE Trans. Image Process. 2007, 16, 2992–3004.

34. Figueiredo, M.A.; Nowak, R.D.; Wright, S.J. Gradient projection for sparse reconstruction: Application to compressed sensing and other inverse problems. IEEE J. Sel. Top. Signal Process. 2007, 1, 586–597.

35. Wright, S.J.; Nowak, R.D.; Figueiredo, M.A. Sparse reconstruction by separable approximation. IEEE Trans. Signal Process. 2009, 57, 2479–2493.

36. Li, C.; Yin, W.; Zhang, Y. User’s guide for TVAL3: TV minimization by augmented lagrangian and alternating direction algorithms. CAAM Rep. 2009, 20, 46–47.

37. Elad, M. Sparse and Redundant Representations: From Theory to Applications in Signal and Image Processing; Springer Science & Business Media: New York, NY, USA, 2010.

38. Aharon, M.; Elad, M.; Bruckstein, A. K-SVD: An algorithm for designing overcomplete dictionaries for sparse representation. IEEE Trans. Signal Process. 2006, 54, 4311–4322.

39. Chakrabarti, A.; Zickler, T. Statistics of real-world hyperspectral images. In Proceedings of the 2011 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Colorado Springs, CO, USA, 20–25 June 2011; pp. 193–200.

40. Oiknine, Y.; Arad, B.; August, I.; Ben-Shahar, O.; Stern, A. Dictionary based hyperspectral image reconstruction captured with CS-MUSI. In Proceedings of the 2018 9th Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing (WHISPERS), Amsterdam, The Netherlands, 23–26 September 2018.

41. Pudil, P.; Novovičová, J.; Kittler, J. Floating search methods in feature selection. Pattern Recognit. Lett. 1994, 15, 1119–1125.

42. Arad, B.; Ben-Shahar, O. Sparse Recovery of Hyperspectral Signal from Natural RGB Images. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 19–34.

43. Kokaly, R.F.; Clark, R.N.; Swayze, G.A.; Livo, K.E.; Hoefen, T.M.; Pearson, N.C.; Wise, R.A.; Benzel, W.M.; Lowers, H.A.; Driscoll, R.L. USGS Spectral Library Version 7. USGS 2017, 1035, 61.

44. Oiknine, Y.; August, I.; Stern, A. Along-track scanning using a liquid crystal compressive hyperspectral imager. Opt. Express 2016, 24, 8446–8457.

45. Reddy, B.S.; Chatterji, B.N. An FFT-based technique for translation, rotation, and scale-invariant image registration. IEEE Trans. Image Process. 1996, 5, 1266–1271.

46. Stern, A.; Kopeika, N.S. Motion-distorted composite-frame restoration. Appl. Opt. 1999, 38, 757–765.

47. Usama, S.; Montaser, M.; Ahmed, O. A complexity and quality evaluation of block based motion estimation algorithms. Acta Polytech. 2005, 45, 29–41.

48. Oiknine, Y.; August, Y.I.; Revah, L.; Stern, A. Comparison between various patch wise strategies for reconstruction of ultra-spectral cubes captured with a compressive sensing system. In Compressive Sensing V: From Diverse Modalities to Big Data Analytics, Proceedings of the SPIE Commercial + Scientific Sensing and Imaging 2016, Baltimore, MD, USA, 17–21 April 2016; SPIE: Bellingham, WA, USA, 2016; Volume 985705.

49. Farber, V.; Oiknine, Y.; August, I.; Stern, A. Compressive 4D spectro-volumetric imaging. Opt. Lett. 2016, 41, 5174–5177.

50. Stern, A.; Farber, V.; Oiknine, Y.; August, I. Compressive hyperspectral synthetic aperture integral imaging. In 3D Image Acquisition and Display: Technology, Perception and Applications; Paper DW1F.1; Optical Society of America (OSA): Washington, DC, USA, 2017.

51. Farber, V.; Oiknine, Y.; August, I.; Stern, A. 3D reconstructions from spectral light fields. In Three-Dimensional Imaging, Visualization, and Display 2018, Proceedings of the SPIE Commercial + Scientific Sensing and Imaging 2018, Orlando, FL, USA, 15–19 April 2018; SPIE: Bellingham, WA, USA, 2018; Volume 10666.

52. Farber, V.; Oiknine, Y.; August, I.; Stern, A. Spectral light fields for improved three-dimensional profilometry. Opt. Eng. 2018, 57, 061609.

53. Lippmann, G. Épreuves réversibles. Photographies intégrales. C. R. Acad. Sci. 1908, 146, 446–451.

54. Arimoto, H.; Javidi, B. Integral three-dimensional imaging with digital reconstruction. Opt. Lett. 2001, 26, 157–159.

55. Stern, A.; Javidi, B. Three-dimensional image sensing, visualization, and processing using integral imaging. Proc. IEEE 2006, 94, 591–607.

56. Hong, S.; Jang, J.; Javidi, B. Three-dimensional volumetric object reconstruction using computational integral imaging. Opt. Express 2004, 12, 483–491.

57. Aloni, D.; Stern, A.; Javidi, B. Three-dimensional photon counting integral imaging reconstruction using penalized maximum likelihood expectation maximization. Opt. Express 2011, 19, 19681–19687.

58. Llavador, A.; Sánchez-Ortiga, E.; Saavedra, G.; Javidi, B.; Martínez-Corral, M. Free-depths reconstruction with synthetic impulse response in integral imaging. Opt. Express 2015, 23, 30127–30135.

59. Busuioceanu, M.; Messinger, D.W.; Greer, J.B.; Flake, J.C. Evaluation of the CASSI-DD hyperspectral compressive sensing imaging system. In Algorithms and Technologies for Multispectral, Hyperspectral, and Ultraspectral Imagery XIX, Proceedings of the SPIE Defense, Security, and Sensing 2013, Baltimore, MD, USA, 29 April–3 May 2013; SPIE: Bellingham, WA, USA, 2013; Volume 8743.

60. Gedalin, D.; Oiknine, Y.; August, I.; Blumberg, D.G.; Rotman, S.R.; Stern, A. Performance of target detection algorithm in compressive sensing miniature ultraspectral imaging compressed sensing system. Opt. Eng. 2017, 56, 041312.

61. Oiknine, Y.; Gedalin, D.; August, I.; Blumberg, D.G.; Rotman, S.R.; Stern, A. Target detection with compressive sensing hyperspectral images. In Image and Signal Processing for Remote Sensing XXIII, Proceedings of the SPIE Remote Sensing 2017, Warsaw, Poland, 11–14 September 2017; SPIE: Bellingham, WA, USA, 2017; Volume 10427.

62. Caefer, C.E.; Stefanou, M.S.; Nielsen, E.D.; Rizzuto, A.P.; Raviv, O.; Rotman, S.R. Analysis of false alarm distributions in the development and evaluation of hyperspectral point target detection algorithms. Opt. Eng. 2007, 46, 076402.

63. Bar-Tal, M.; Rotman, S.R. Performance measurement in point source target detection. In Proceedings of the Eighteenth Convention of Electrical and Electronics Engineers in Israel, Tel Aviv, Israel, 7–8 March 1995; pp. 3.4.6/1–3.4.6/5.

64. Skauli, T.; Farrell, J. A collection of hyperspectral images for imaging systems research. In Digital Photography IX, Proceedings of the IS&T/SPIE Electronic Imaging, Burlingame, CA, USA, 3–7 February 2013; SPIE: Bellingham, WA, USA, 2013; Volume 8660.

65. Hyperspectral Remote Sensing Scenes. Available online: http://www.ehu.eus/ccwintco/index.php?title=Hyperspectral_Remote_Sensing_Scenes (accessed on 26 October 2018).

66. Fellgett, P. The Multiplex Advantage. Ph.D. Thesis, University of Cambridge, Cambridge, UK, 1951.

67. Oiknine, Y.; August, I.; Stern, A. Compressive spectroscopy by spectral modulation. In Optical Sensors 2017, Proceedings of the SPIE Optics + Optoelectronics 2017, Prague, Czech Republic, 24–27 April 2017; SPIE: Bellingham, WA, USA, 2017; Volume 10231.

68. Oiknine, Y.; August, I.; Blumberg, D.G.; Stern, A. Compressive sensing resonator spectroscopy. Opt. Lett. 2017, 42, 25–28.

69. Oiknine, Y.; August, I.; Blumberg, D.G.; Stern, A. NIR hyperspectral compressive imager based on a modified Fabry–Perot resonator. J. Opt. 2018, 20, 044011.

70. Oiknine, Y.; August, I.; Stern, A. Multi-aperture snapshot compressive hyperspectral camera. Opt. Lett. 2018, 43, 5042–5045.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).