1. Introduction

Fundus autofluorescence (FAF) imaging [1,2,3] is a fast, non-invasive imaging technique that captures the natural fluorescence emitted by intrinsic fluorophores in the eye’s fundus, particularly lipofuscin in the retinal pigment epithelium. When excited by blue light, lipofuscin releases green–yellow fluorescence, allowing for the visualization of the metabolic activity and health of the retinal cells. Areas with abnormal accumulation or loss of lipofuscin can indicate retinal pathology, making FAF a valuable tool for assessing various eye conditions. In clinical practice, FAF serves as a crucial diagnostic and monitoring tool for ophthalmologists. It provides insights into retinal health by highlighting changes in lipofuscin distribution, aiding in the identification and tracking of retinal diseases, such as age-related macular degeneration (AMD) and central serous chorioretinopathy (CSCR). In combination with other imaging modalities, FAF helps clinicians to establish the initial diagnosis, to assess disease progression, to plan interventions, and to evaluate treatment efficacy.

However, while the complete loss of signal in the case of cell death leads to clearly demarcated areas of no signal, distinguishing relative changes from the normal background proves challenging. While strategies like quantitative autofluorescence (qAF) [4] were developed to assess these changes more objectively, reliably annotating exact areas is difficult, with borders being particularly open to interpretation. Consequently, significant variations emerge among different graders, and even within the same grader during repeated annotations.

Especially in CSCR, areas of increased hyperfluorescence (HF) and reduced autofluorescence (RA, also hypofluorescence) are pathognomonic and can be used as a valuable diagnostic and monitoring tool. A means to reliably quantify these changes would allow for the fast and objective assessment of disease progression. Also, when combined with other imaging modalities, such as color fundus photography or optical coherence tomography, an even better understanding of a disease’s course and potentially new biomarkers seem feasible.

Artificial intelligence (AI) and convolutional neural networks (CNNs), as established in retinal image analysis [5], seem to be an obvious choice to automatically perform the segmentation of FAF images. However, they often need training data with a clearly defined ground truth provided by human annotators. If this ground truth is subject to the above-mentioned large inter- and intragrader variability, as is often the case in medical image segmentation [6] and as was very recently shown on FAFs with inherited retinal diseases [7], this leads to the dilemma that the CNN has to deal with these ambiguities.

In this work, we break out of this dilemma through the utilization of segmentation ensembles and bagging [

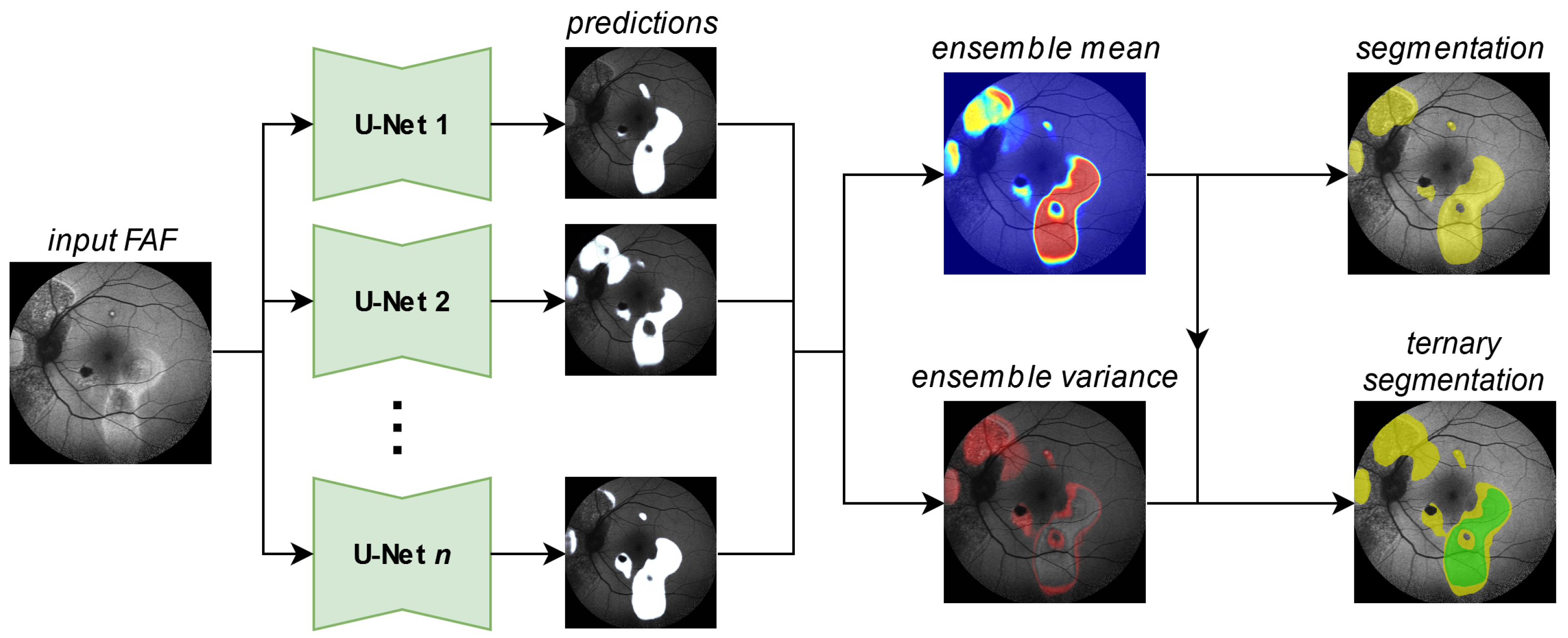

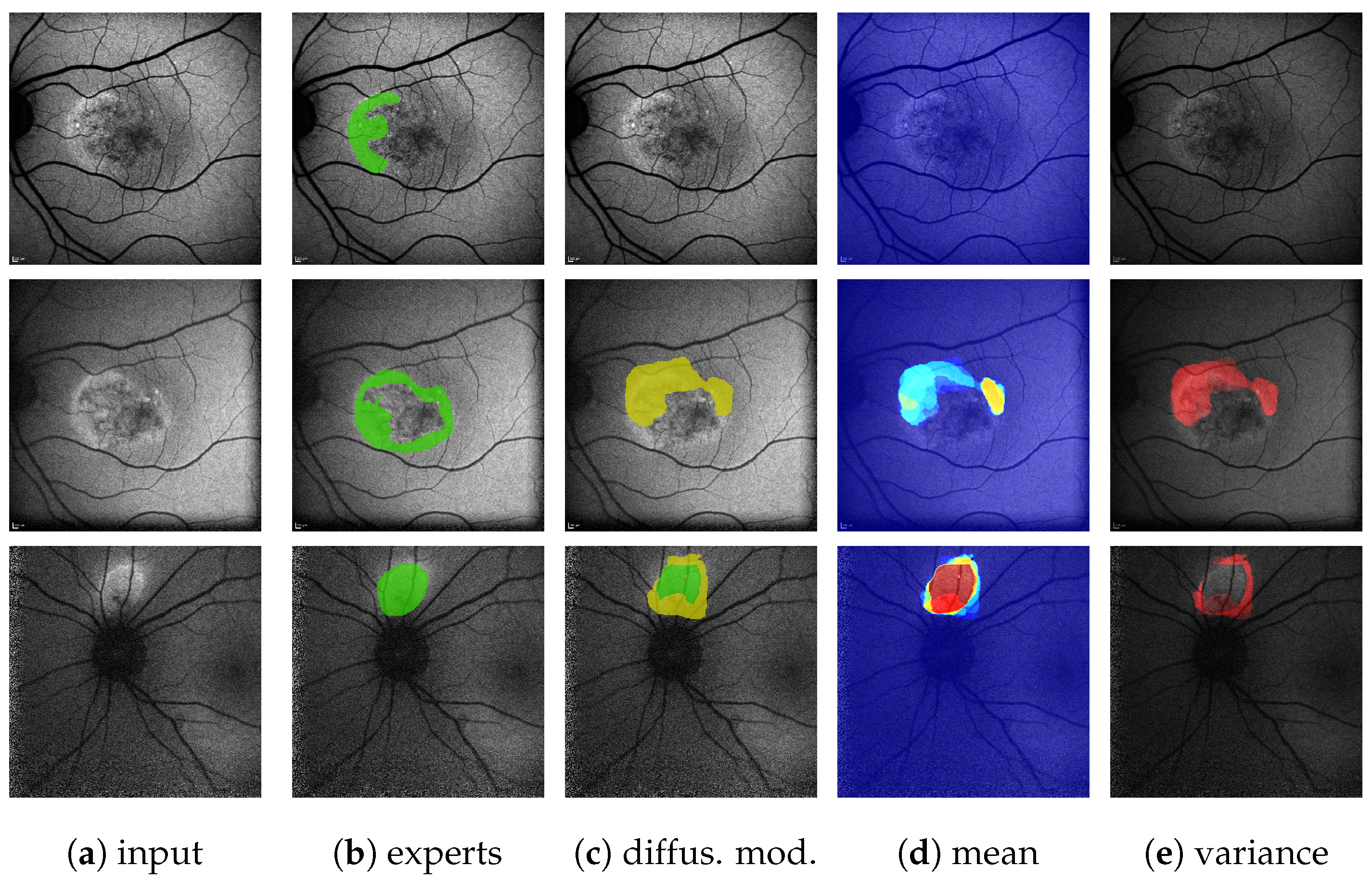

8], i.e., training identical segmentation network architectures on different subsets of our training data. With this setup, even though each image in our training data features only one—potentially biased—annotation, the ensemble as a whole can accurately represent the combined and possibly diverse annotations of multiple experts. Furthermore, by leveraging the mean and variance of our ensemble prediction, we can robustly differentiate segmentations into areas of potential HF and confident HF, where a single segmentation network suffers from overconfident predictions, as shown in

Figure 1.
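The bagging setup and the per-pixel ensemble statistics described above can be illustrated with a minimal sketch. It assumes random subsets drawn without replacement and flat lists of per-pixel probabilities; the function names, the subset size of 120, and the toy predictions are hypothetical and not taken from our implementation.

```python
import random
from statistics import mean, pvariance

def bagging_subsets(n_samples, n_models, subset_size, seed=0):
    """Draw one random training subset (sorted sample indices) per ensemble member."""
    rng = random.Random(seed)
    return [sorted(rng.sample(range(n_samples), subset_size)) for _ in range(n_models)]

def ensemble_statistics(predictions):
    """Per-pixel mean and variance over the members' probability maps.

    predictions: one flat list of per-pixel probabilities per network.
    """
    per_pixel = list(zip(*predictions))
    means = [mean(values) for values in per_pixel]
    variances = [pvariance(values) for values in per_pixel]
    return means, variances

# Hypothetical numbers: 180 training images, 5 members, 120 images per member.
subsets = bagging_subsets(n_samples=180, n_models=5, subset_size=120)

# Three toy "networks" predicting four pixels each.
preds = [[0.9, 0.1, 0.8, 0.0],
         [1.0, 0.0, 0.2, 0.0],
         [0.8, 0.2, 0.5, 0.0]]
means, variances = ensemble_statistics(preds)
```

In this toy example, the third pixel has a mean of 0.5 but a high variance, which is the kind of pixel one would expect to end up as potential rather than confident HF.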

Leakage segmentation in retinal images is an ongoing task. Some previous works have attempted automated segmentation of retinal vascular leakage in wide-field fluorescein angiography images [

9,

10,

11]. Li et al. [

12] and Dhirachaikulpanich et al. [

13] use deep learning approaches to segment retinal leakages in fluorescein angiography images. However, only a few works so far have focused on the specific task of HF segmentations: Zhou et al. [

14] combine diagnostic descriptions and extracted visual features through Mutual-aware Feature Fusion to improve HF segmentations in gray fundus images. Arslan et al. [

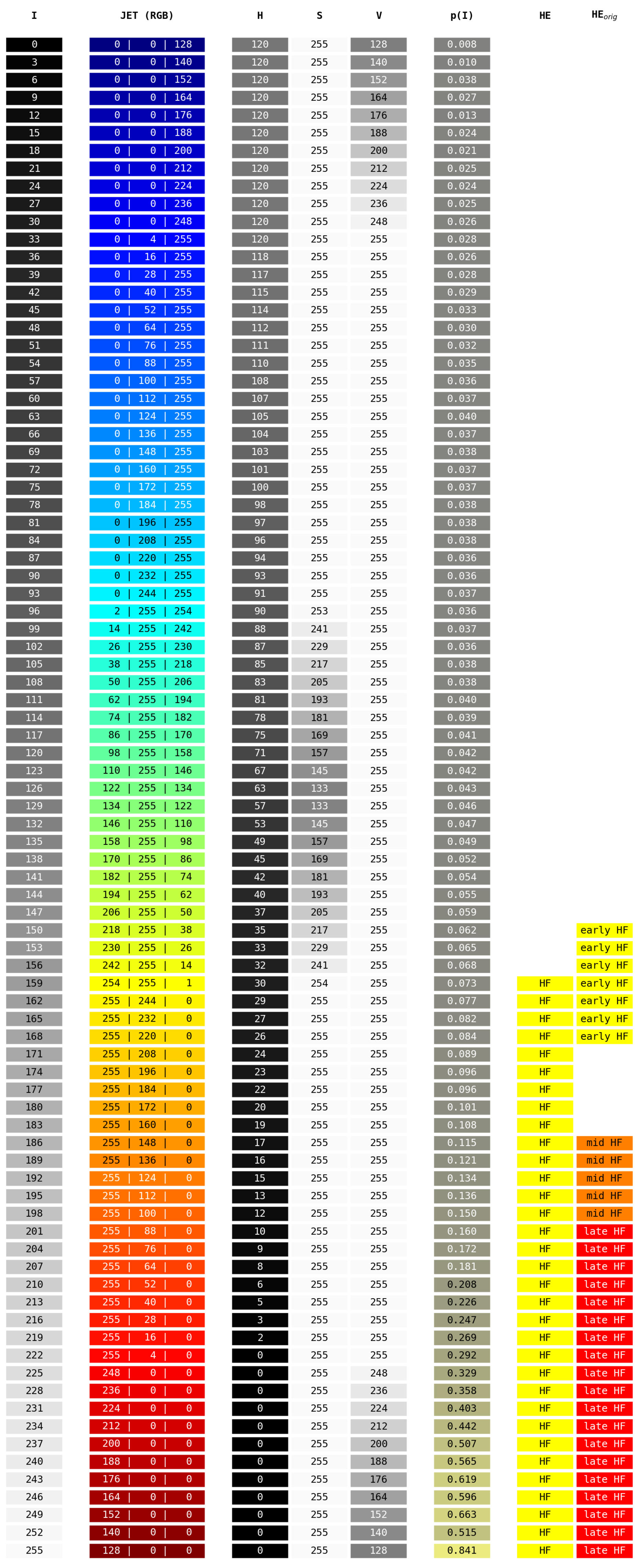

15] use image preprocessing and color-maps in the HSV [

16] color domain to extract HF in FAF images of patient with geographic atrophy. Their approach constitutes one of our baselines. Woof et al. [

7] very recently used a single nn-U-Net [

17] ensemble consisting of five U-Nets to segment, inter alia, HF, and RA in FAFs with inherited retinal diseases.

Ensemble learning has been applied to retinal image segmentation in several publications. Multiple works [18,19,20,21,22,23] have analyzed blood vessel segmentation in fundus images. Other ensemble tasks include segmentation of exudates [24,25], retinal fluids [26,27], and retinal lesions [28] in different retinal image modalities. From the above we differentiate the following two categories of ensemble learning approaches: (1) Those where identical architectures are generated differently and a final result is obtained by voting [18,21,22,24,26]; (2) Those where the elements of the ensemble fulfill separate tasks before fusing their results [22,25,27,28]. Combinations of both do, of course, exist [19,20]. Our approach falls into the first category and, as such, mimics the intuitive approach of asking for and weighing the opinions of multiple experts, reducing the likelihood of bad predictions due to an outlier prediction.

Learning segmentations from potentially ambiguous medical annotations is a known challenge. Schmarje et al. [29] use rough segmentations to identify collagen fiber orientations in SHG microscopy images by local region classification. Baumgartner et al. [30] use a hierarchical probabilistic model to derive, inter alia, lesion segmentations in thoracic CT images at different levels of resolution. Their method can be trained on single and multiple annotations. Wolleb et al. [31] utilize the stochastic sampling process of recent diffusion models to implicitly model segmentation predictions. They learn from single annotations. Rahma et al. [32] similarly use a diffusion model approach for, inter alia, lesion segmentation, but improve the segmentation diversity and accuracy by specifically learning from multiple annotations. In contrast, we use an explicit segmentation ensemble trained on single annotations. While we indeed take measures to align very fine annotation structures for segmentation consistency (see Section 2), we do not use rough labels. Available sample sizes (fewer than 200 training images), due to an absence of stacked scans, are also lower compared to [30,31,32], whose training set sizes, e.g., for lesions, range from 1k to 16k images. To the best of our knowledge, and compared to methods like [7], we are the first to explicitly approach the problem of HF and RA segmentation for ambiguous FAF annotations.

For our work, as in [33,34], we sample from the database of the University Eye Clinic of Kiel, Germany, a tertiary care center that specializes in CSCR patients. This unique large database consists of over 300 long-term CSCR disease courses with a median follow-up of 2.5 years. Given this database and the aforementioned challenges, our contributions are as follows:

A segmentation ensemble capable of predicting hyperfluorescence and reduced autofluorescence segmentation in FAF images on the same level as human experts. As the ensemble consists of standard U-Nets, no special architecture or training is required;

Leveraging the inheritable variance of our proposed ensemble to divide segmentations into three classes for a ternary segmentation task: areas that are (1) confident background, (2) confident HF, or (3) potential HF, accurately reflecting the combined annotations of multiple experts despite being trained on single annotations;

An algorithm to sample from the main ensemble a sub-ensemble of as few as five or three networks. Keeping a very similar segmentation performance to the whole ensemble, the sub-ensemble significantly reduces computational cost and time during inference;

An additional segmentation metric, based on the established Dice score [35], that is better suited to reflect the clinical reality of HF and RA segmentation, where the exact shape of a prediction is less important than whether HF and RA have been detected in the same area by multiple graders or not;

Supplementary analysis of the ensemble training, from which we derive insights into optimizing the annotation approach in order to reduce costs for future endeavors in a domain, where acquiring additional training data is very costly.

2. Materials

To the best of our knowledge, which is supported by recent surveys [36,37,38] and research [39], there currently exists no public FAF dataset with annotated HF. Hence, like others [7,15], we can show results solely on in-house data, all of which were acquired with institutional review board approval from the University Eye Clinic of Kiel, Germany.

As in our previous works [33,34], the base of our data stems from 326 patients with CSCR, collected from 2003 to 2020. From this, we sampled patients for our retrospective study. The selected patients required a reliable diagnosis of chronically recurrent CSCR, at least three visits, and a long-term course of the disease of at least 2 years. Patients with an uncertain diagnosis of CSCR or an acute form of CSCR were excluded.

For this work specifically, we collected patients with FAF images. FAF images are

pixels and were taken with a field of view of 30° or 55°, resulting in an average resolution of 11.3 µm/pixel or 22 µm/pixel. FAF images were acquired with the Heidelberg Retina Angiograph II (HRAII) [

40]. A blue solid-state laser (wavelength 488 nm) was used to excite the fluorescein with a barrier filter at 500 nm. Image acquisition times range from 48 ms to 192 ms, the maximum Z-scan depth is 8 mm. Please note that all FAF images shown in this manuscript except for

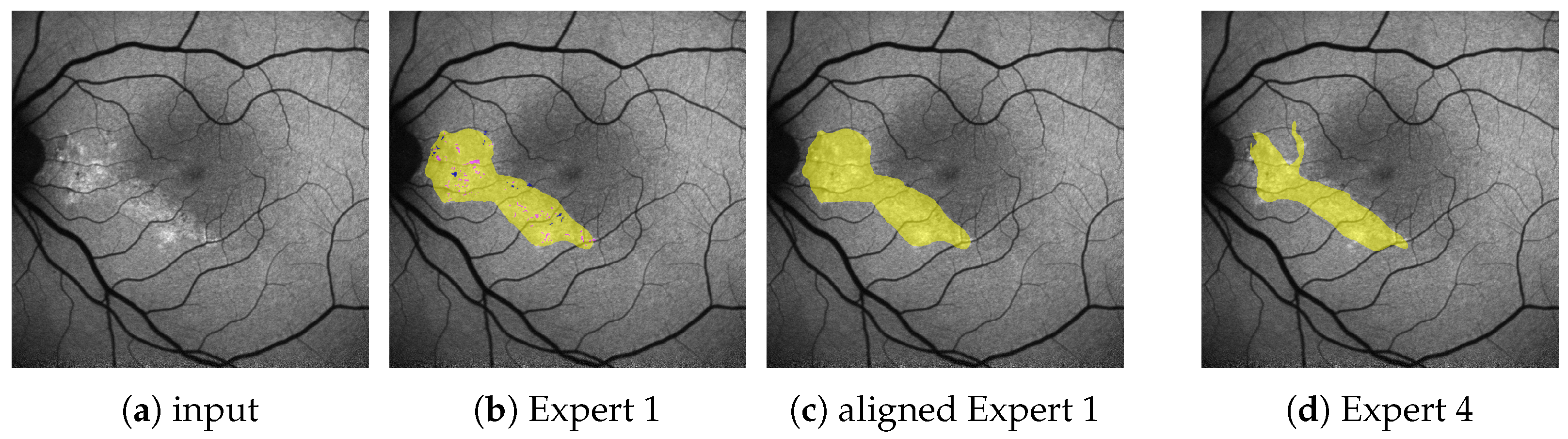

Figure 3 have a field of view of 30°.

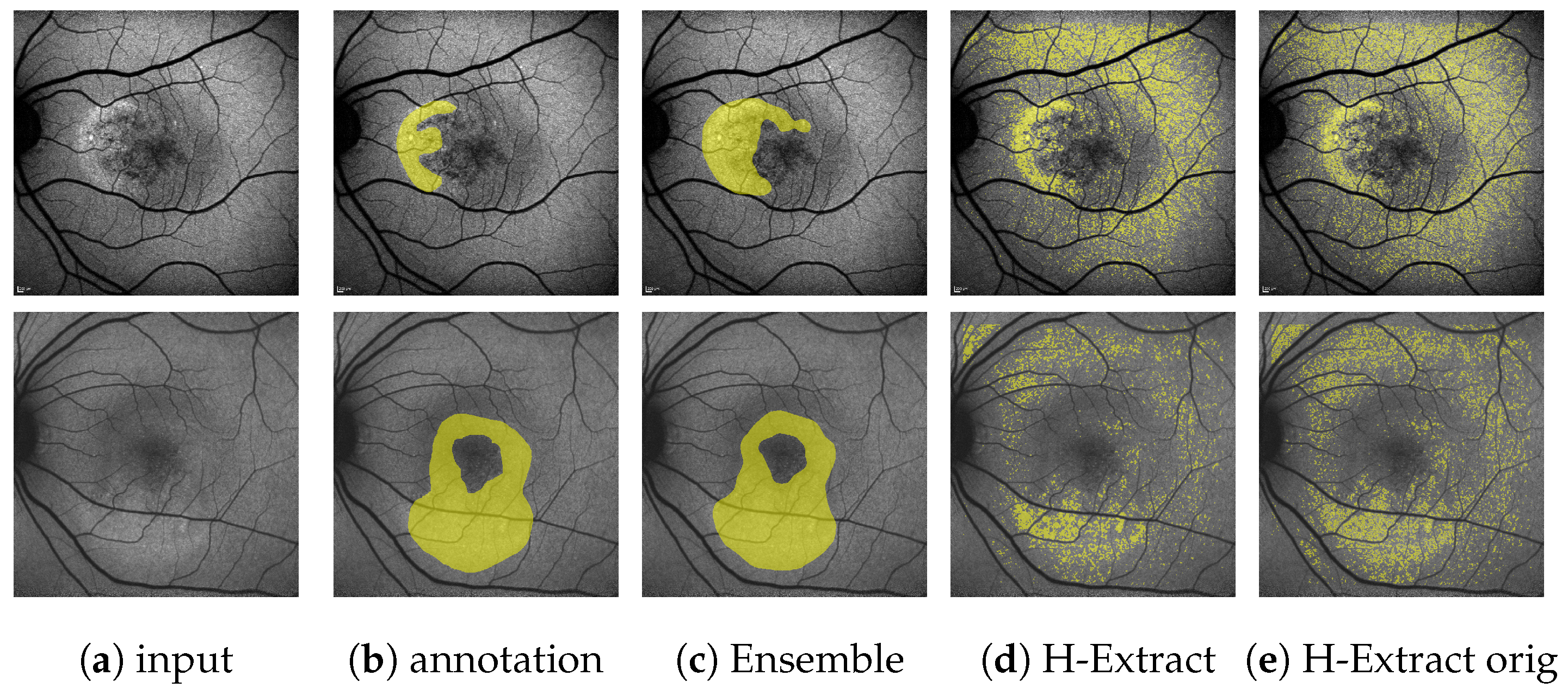

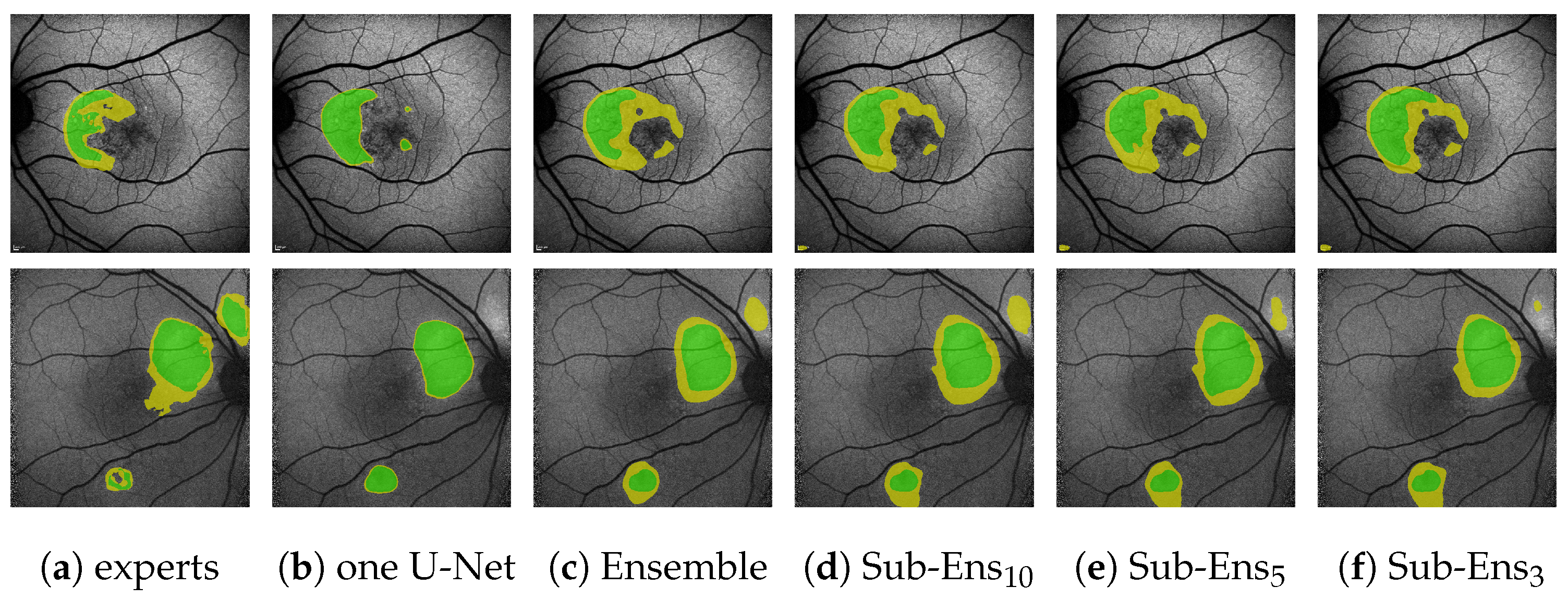

Annotations for HF and RA are based on clinical experience and were accordingly provided by two ophthalmological experts. In order to streamline the annotation process and since initial analysis had shown considerable inter-observer variability, HF and RA were annotated coarsely, as shown in Figure 2d. In total, our train split contains 180 FAF images from 100 patients. Our validation split contains 65 images of 20 patients (44 with HF, 30 with RA annotations). Our test split contains 72 images of 36 patients (60 with HF, 55 with RA annotations). Training, validation, and test data do not share any patients.
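The constraint that no patient appears in more than one split can be met by assigning whole patients rather than single images. The following is a minimal sketch under that assumption; the function name, the split fractions, and the toy image identifiers are hypothetical and do not reproduce how our splits were actually drawn.

```python
import random
from collections import defaultdict

def split_by_patient(image_to_patient, fractions=(0.6, 0.2, 0.2), seed=0):
    """Assign whole patients to train/val/test so that no patient spans two splits."""
    by_patient = defaultdict(list)
    for image, patient in image_to_patient.items():
        by_patient[patient].append(image)
    patients = sorted(by_patient)
    random.Random(seed).shuffle(patients)
    n_train = round(fractions[0] * len(patients))
    n_val = round(fractions[1] * len(patients))
    groups = (patients[:n_train],
              patients[n_train:n_train + n_val],
              patients[n_train + n_val:])
    # Expand each patient group back into its images.
    return [[img for p in group for img in by_patient[p]] for group in groups]

# Hypothetical toy data: 10 patients with 2 images each.
toy = {f"img_{p}_{k}": f"patient_{p}" for p in range(10) for k in range(2)}
train, val, test = split_by_patient(toy)
```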

Furthermore, to analyze inter- and intra-observer variability, we utilize 9 images from 9 different patients from the validation set originally annotated by Expert 1. Additional HF and RA annotations were provided by Expert 2 and two other clinical experts, all from the same clinic, as well as Expert 1 again several months after the original annotations.

A subset of annotations by Expert 1 does not follow the aforementioned guidelines, but is significantly finer and contains the additional labels granular hyper autofluorescence and granular hypo autofluorescence. As these labels are of subordinate importance for this work, we omit them and align the finer annotations with the others by the use of morphological operations, as explained in Figure 2b,c. All results presented in this work are performed on these aligned annotations.
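As an illustration of how morphological operations can coarsen a fine annotation, the sketch below applies a binary closing (dilation followed by erosion) to a small mask. This is an assumption made for illustration only: the exact operations and structuring elements we used are those given in Figure 2b,c, and the helper names and the radius are hypothetical.

```python
def dilate(mask, radius=1):
    """Binary dilation with a square (2 * radius + 1) structuring element."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if mask[y][x]:
                for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                    for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                        out[yy][xx] = 1
    return out

def erode(mask, radius=1):
    """Binary erosion, expressed as the dilation of the complement."""
    inverted = [[1 - v for v in row] for row in mask]
    return [[1 - v for v in row] for row in dilate(inverted, radius)]

def close(mask, radius=1):
    """Closing merges nearby fine structures into one coarser region."""
    return erode(dilate(mask, radius), radius)

# Two annotated pixels separated by a one-pixel gap.
fine = [[0] * 7 for _ in range(5)]
fine[2][2] = fine[2][4] = 1
closed = close(fine)
```

After closing, the gap between the two annotated pixels is filled, while the annotation does not grow beyond its original extent.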

Figure 3.

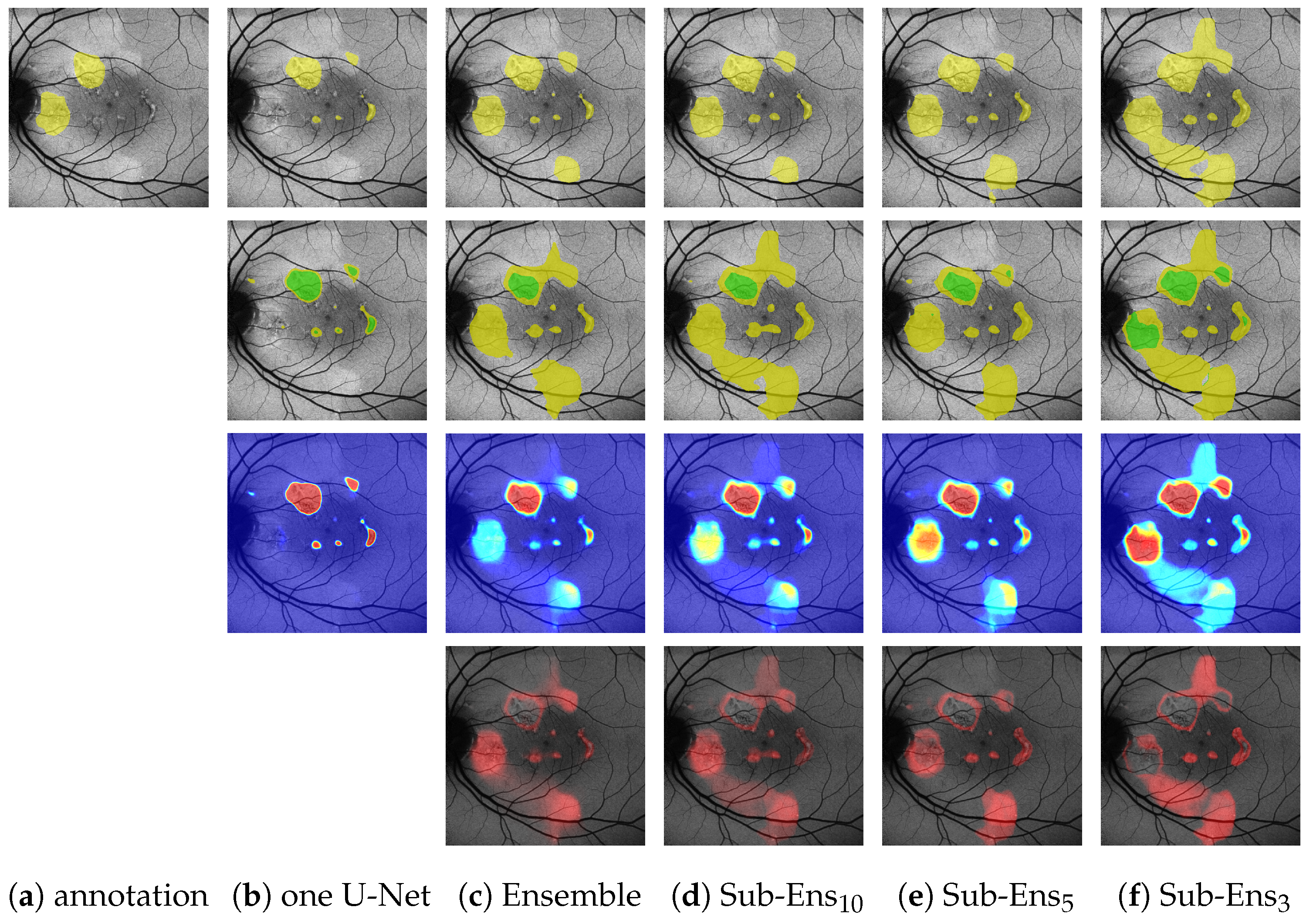

Generating a segmentation prediction (yellow HF, rest no HF) and a ternary prediction (green confident HF, yellow potential HF, rest no HF) from the proposed segmentation ensemble. Details for the generation of the ternary segmentation output are given in Figure 5.


4. Results

We present results regarding four different aspects of our work: (1) For the performance regarding datasets with only one available annotation, we present the segmentation and ternary scores on our validation and test data in Section 4.3 and Section 4.4, respectively. We further show pair-wise agreement among single experts and our proposed methods in Section 4.5. (2) Regarding the performance of our ternary segmentation compared to multiple accumulated expert annotations, we present results in Section 4.6. (3) For our sampling choice regarding networks for our proposed sub-ensemble, we depict the results in Section 4.7. (4) For analyzing the ensemble and network training, we show results in Sections S1 and S2 of the Supplementary.

4.1. Metrics

Table 2 shows the metrics used for the different tasks throughout this work, all of which will be explained in the following.

Regarding the segmentation task, an established [60] metric is the Dice score (also Sørensen–Dice coefficient, F1 score) [35,59],

$$\mathrm{DcS} = \frac{2\,|TP|}{2\,|TP| + |FP| + |FN|},$$

where $TP$, $FP$, and $FN$ denote the sets of true positive, false positive, and false negative segmented pixels, respectively. For ease of understanding, we keep the notation of $TP$, $FP$, and $FN$ (i.e., by regarding the first segmentation as the prediction and the second segmentation as the ground truth).
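For reference, the Dice score between two binary masks can be computed as in the following minimal sketch. Masks are represented as sets of (row, column) pixels; this representation, the function name, and the convention for two empty masks are assumptions made for illustration.

```python
def dice_score(prediction, ground_truth):
    """Dice score between two binary masks given as sets of (row, col) pixels."""
    tp = len(prediction & ground_truth)   # pixels segmented in both masks
    fp = len(prediction - ground_truth)   # pixels only in the prediction
    fn = len(ground_truth - prediction)   # pixels only in the ground truth
    if tp + fp + fn == 0:
        return 1.0  # assumption: two empty masks count as perfect agreement
    return 2 * tp / (2 * tp + fp + fn)

first = {(0, 0), (0, 1), (1, 0)}
second = {(0, 1), (1, 0), (1, 1)}
score = dice_score(first, second)  # 2 TP, 1 FP, 1 FN
```

Since FP and FN enter the denominator symmetrically, swapping the two masks does not change the score.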

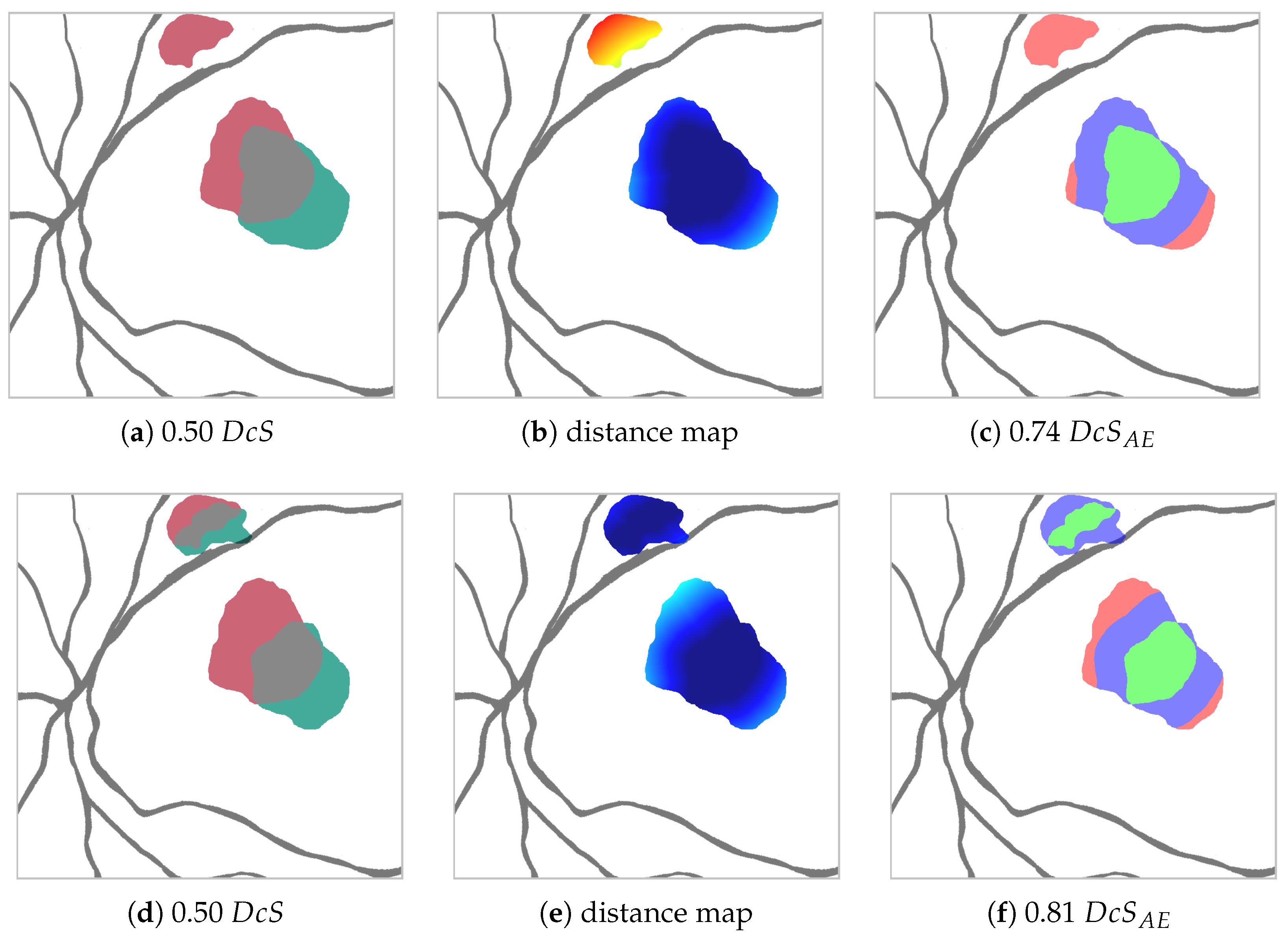

While the Dice score is able to measure overall performance, it cannot necessarily depict how or why any two segmentations differ. Regarding HF and RA, we identify the following two general reasons for low segmentation scores: (1) Cases in which two segmentations agree that a biomarker is present in a certain area, but disagree over the exact outline of this area due to the absence of sharp edges; (2) Cases in which two segmentations disagree over a biomarker being present in a certain area (compare Figure 4a top and bottom).

Even though the distinction between these cases can be blurry, it is an important distinction to make. For example, the consciously perceived visual impression is generated disproportionately in the area of the fovea. Hence, retinal changes in this area are much more significant than in more peripheral locations of the retina.

This is why, given two segmentations, it is our goal to partition the set of differently labeled pixels $FP \cup FN$ into two sets $EE$ and $AE$, where $EE$ (edge errors) is the set of pixels labeled differently due to the aforementioned case (1), and $AE$ (area errors) is the set of pixels labeled differently due to case (2).

In order to achieve this partition, we calculate, for each pixel in $FP \cup FN$, its Euclidean distance to the closest pixel in $TP$ (see Figure 4b). Should $TP$ be empty, the distance is set to the length of the image diagonal. Pixels whose distance is smaller than or equal to a given threshold are assigned to $EE$, the rest to $AE$, as shown in Figure 4c. With this, we are able to define, for a given threshold, an adapted Dice score that counts only the area errors as errors,

$$\frac{2\,|TP|}{2\,|TP| + |AE|},$$

where, due to the aforementioned relationship $|EE| + |AE| = |FP| + |FN|$, it follows that the adapted score is never lower than the Dice score.

Since the threshold is a sensitive parameter, we try to avoid any bias introduced by choosing a specific value by instead calculating the mean of the adapted Dice score over an equally spaced distribution of thresholds up to a maximum threshold.
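The partition into edge and area errors and the resulting adapted Dice score can be sketched as follows. This is a brute-force illustration on sets of (row, column) pixels and not our implementation: the function names, the integer threshold spacing, and the handling of cases without any overlap are assumptions.

```python
from math import hypot

def split_errors(prediction, ground_truth, threshold, image_shape):
    """Partition differently labeled pixels into edge errors and area errors."""
    tp = prediction & ground_truth
    errors = prediction ^ ground_truth        # FP and FN pixels together
    diagonal = hypot(*image_shape)            # fallback distance if TP is empty
    edge, area = set(), set()
    for y, x in errors:
        distance = min((hypot(y - ty, x - tx) for ty, tx in tp), default=diagonal)
        (edge if distance <= threshold else area).add((y, x))
    return tp, edge, area

def area_error_dice(prediction, ground_truth, threshold, image_shape):
    """Dice score in which only area errors are counted as errors."""
    tp, _, area = split_errors(prediction, ground_truth, threshold, image_shape)
    if not tp:
        # Assumption: two empty masks score 1, any other case without overlap scores 0.
        return 1.0 if prediction == ground_truth else 0.0
    return 2 * len(tp) / (2 * len(tp) + len(area))

def mean_area_error_dice(prediction, ground_truth, max_threshold, image_shape):
    """Mean over equally spaced thresholds 0, 1, ..., max_threshold (in pixels)."""
    thresholds = range(max_threshold + 1)
    scores = [area_error_dice(prediction, ground_truth, t, image_shape) for t in thresholds]
    return sum(scores) / len(scores)

truth = {(2, 2), (2, 3), (3, 2), (3, 3)}
pred = {(2, 3), (3, 3), (2, 4), (3, 4), (8, 8)}   # shifted outline plus one distant pixel
score_at_1 = area_error_dice(pred, truth, threshold=1, image_shape=(10, 10))
mean_score = mean_area_error_dice(pred, truth, max_threshold=2, image_shape=(10, 10))
```

In this toy example, the four pixels along the shifted outline become edge errors at a threshold of 1 px, whereas the distant pixel remains an area error and is the only one still penalized. On full-size images, a distance transform would replace the brute-force nearest-pixel search.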

For the ternary task, we no longer differentiate only between HF and background pixels, but between the three categories C (confident HF), P (potential HF), and B (background) according to the diagram in Figure 5. Hence, when comparing two segmentations, we now have, for each pixel, nine possible prediction–ground truth combinations instead of the previous four (TP, FP, FN, and TN, with TN being true negative). We therefore decided to focus on the following metrics based on precision (also positive predictive value) and recall (also sensitivity) for evaluation:

where a high value for (1) ensures that confident predictions are definitely correct, and a high value for (2) ensures that we do not miss any HF, whether confident or potential. In addition, we utilize two more lenient but nonetheless important metrics:

where a high value for (3) ensures that confident predictions are not definitely wrong, and a high value for (4) ensures that we do not miss a confident annotation.

Though it does not happen in our case, it must be noted that the metrics presented above can be bypassed by predicting every pixel as P. The result would be undefined for (1) and (3), and a perfect score of one for (2) and (4). We hence also observe

from which, together with Equation (2), we calculate a Dice score as a sanity check:

In the case where we apply the ternary task to images with just one expert annotation, we note that (1) = (3) and (2) = (4) since

according to

Figure 5.

Please also note that for the ternary task, we do not calculate EEs or AEs. The reason for this is the fact that we did not find it to provide much further insight compared to already given for the segmentation task, which we report on each dataset.
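To make the verbal descriptions of metrics (1) to (4) and the sanity-check Dice score concrete, the sketch below computes them from per-pixel labels "C", "P", and "B". The set definitions are our reading of those descriptions rather than a transcription of the equations, and all key names are hypothetical.

```python
def ratio(numerator, denominator):
    """Return numerator / denominator, or None where the score is undefined."""
    return numerator / denominator if denominator else None

def ternary_scores(prediction, ground_truth):
    """Ternary precision/recall scores from flat lists of 'C', 'P', 'B' labels."""
    pairs = list(zip(prediction, ground_truth))

    def count(pred_labels, gt_labels):
        return sum(p in pred_labels and g in gt_labels for p, g in pairs)

    every = "CPB"
    scores = {
        # (1) confident predictions that are confident in the ground truth
        "precision_strict": ratio(count("C", "C"), count("C", every)),
        # (2) annotated HF (confident or potential) that is predicted at all
        "recall_strict": ratio(count("CP", "CP"), count(every, "CP")),
        # (3) confident predictions that are at least potential in the ground truth
        "precision_lenient": ratio(count("C", "CP"), count("C", every)),
        # (4) confident annotations that are predicted at all
        "recall_lenient": ratio(count("CP", "C"), count(every, "C")),
        # precision over all predicted HF, used for the sanity-check Dice score
        "precision_hf": ratio(count("CP", "CP"), count("CP", every)),
    }
    p, r = scores["precision_hf"], scores["recall_strict"]
    scores["dice"] = ratio(2 * p * r, p + r) if p is not None and r is not None else None
    return scores

# Degenerate prediction that labels every pixel as potential HF.
all_potential = ternary_scores(["P"] * 4, ["C", "P", "B", "B"])
```

For the all-P prediction, (1) and (3) are undefined and (2) and (4) equal one, whereas the sanity-check Dice score drops to 2/3 and thus exposes the overprediction.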

4.2. Parameter Selection

For evaluation, we chose the following values for our parameters. For the

metric,

px was set, since a value this high ensures that all instances are covered where differences in predictions could reasonably be interpreted as an EE. Measurements of EEs among experts, as later depicted in Figure 8 in

Section 4.5, support these observations.

Regarding the ternary task, for annotations we set

in order to classify an area as

C only if all five experts segmented it.

was set, as review with the graders revealed that occasionally single graders mislabeled areas, e.g., of background fluorescence as HF. Hence, an area is classified as

P if not all, but at least two experts segmented it. For our ensemble’s predictions, we set

following the logic that, similar to the experts, more than 1/5th of our ensemble needed to confidently detect it.

was set empirically to

. For single predictions or annotations, we set

and

. From confidence maps as, e.g., depicted in the second-lowest row in

Figure 6b, we inferred that these parameters are not very sensitive, as predictions of a single network tend to be very close to either 0 or 1 with small edges between these two extremes. For HyperExtract, we chose

as this is the optimal value for the validation set (see

Figure A6).
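The rule for accumulated expert annotations stated above (C only if all five experts segmented a pixel, P if not all but at least two did) can be written as a small sketch. The function and parameter names are hypothetical; the thresholds for ensemble predictions are not reproduced here.

```python
def ternary_from_counts(counts, confident_min, potential_min):
    """Map per-pixel annotation counts to 'C', 'P', or 'B' labels."""
    return ["C" if c >= confident_min
            else "P" if c >= potential_min
            else "B"
            for c in counts]

# Number of experts (out of five) who segmented each of five example pixels.
expert_votes = [5, 4, 2, 1, 0]
labels = ternary_from_counts(expert_votes, confident_min=5, potential_min=2)
```

A pixel marked by only a single expert therefore falls back to background, reflecting the observation that single graders occasionally mislabeled areas.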

4.3. Segmentation and Ternary Performance—Validation Set

Table 3 and

Table 4 depict segmentation and ternary scores for HF and RA on the validation dataset, respectively.

Comparing a single U-Net to the proposed ensemble, we see that for the classical segmentation task we marginally lose performance (from 0.668 to 0.677

on HF|from 0.497 to 0.501 on RA). For the

score, where the edge error is heavily suppressed, we see a very comparable margin for HF (from 0.825 to 0.814) and even a significant improvement for RA (from 0.677 to 0.622). We can hence infer that this difference in performance is not mainly caused by EEs (i.e., unsharp edges), but indeed due to new areas being segmented.

Figure 6b,c (top row) shows an example of this.

However, when looking at the ternary task performance, we see that, in regard to

and

, our proposed ensemble drastically outperforms the single U-Net. Especially the improvement for

(from 0.872 to 0.804 on HF|from 0.754 to 0.512 on RA) has to be noted, indicating that the ensemble is detecting far more of the annotated HF (at the cost of some potential overpredictions, as depicted by 0.035 lower

). This again is shown by

Figure 6b,c (second row), where the ensemble, in contrast to the single U-Net, is able to detect the leftmost HF area to the immediate right of the optic disc. Furthermore, the depicted images also reflect the differences in

: The ensemble is only confident in one part of the two segments annotated by the expert, whereas the single U-Net is also highly confident in image areas that have not been annotated by the expert.

Looking at the sub-ensembles, we note that for the segmentation task and they perform slightly worse than the full ensemble, scores getting closer the more networks we include. However, regarding , the sub-ensembles especially for RA perform even better than the whole ensemble. We also want to highlight the fact that even the minimal sub-ensemble with three networks significantly outperforms the single U-Net for (from 0.868 to 0.804 on HF|from 0.662 to 0.512 RA).

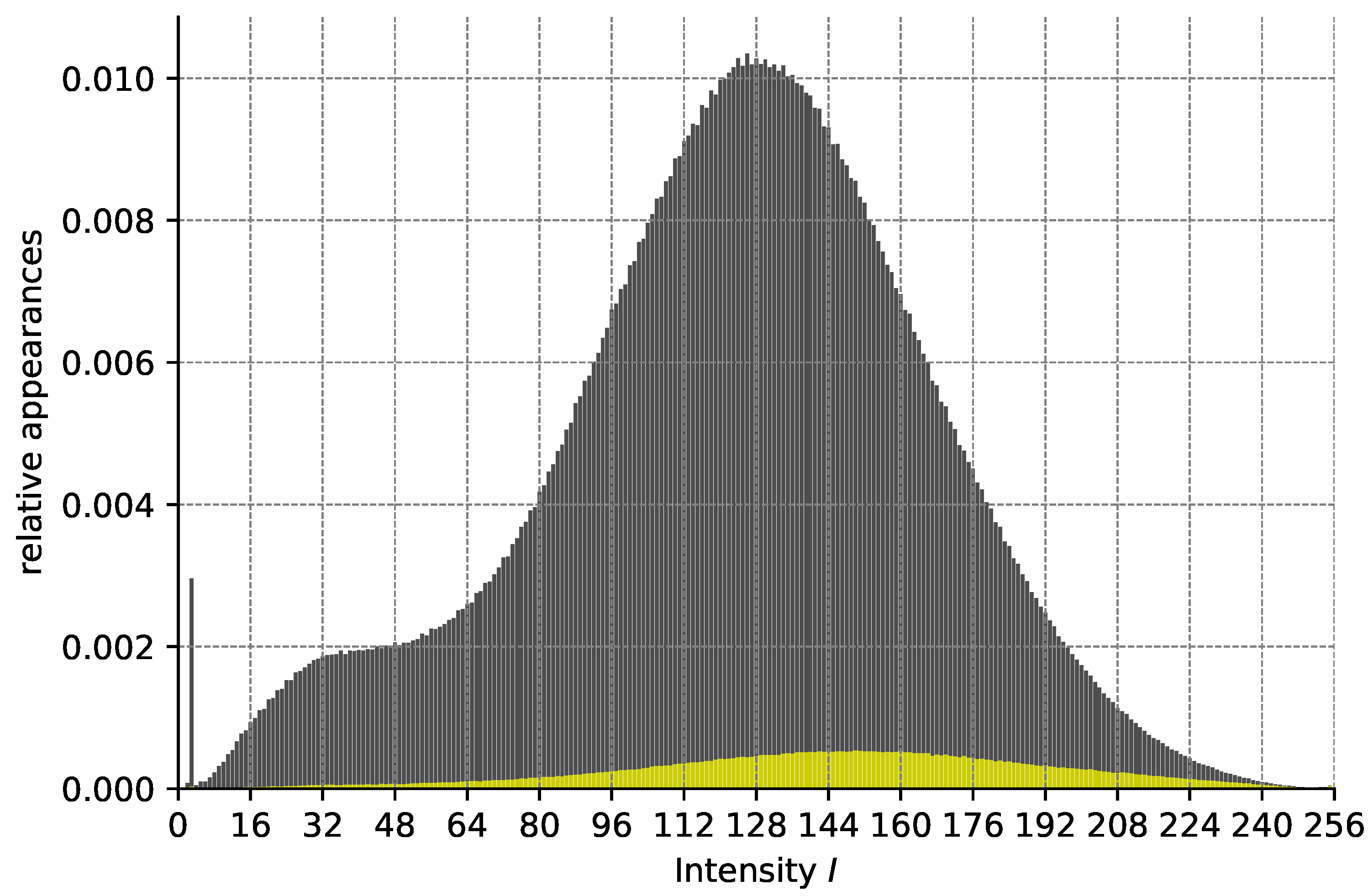

Regarding the mean U-Net, we see competitive results on HF, but not on RA. The combination of a mean and a variance network drastically decreases performance on both HF and RA. H-Extract is not competitive and tends to generate scattershot segmentations despite optimized extraction values, as visible in

Figure A7. Analyzing the relationship between HF and pixel intensities, as performed in

Figure A5, reveals that on our FAF data, pixel intensity is not a good indicator for HF except for very high intensities, which, however, only appear very rarely. The diffusion model, despite some good segmentations as seen in the bottom row of

Figure A8 does, in other cases, not predict anything (top row of the same figure). It is also susceptible to small changes, as seemingly similar images from the same eye lead to very different predictions, as shown in the middle row of

Figure A8.

4.4. Segmentation and Ternary Performance—Test Set

Table 5 and

Table 6 depict the segmentation and ternary scores on the test set for HF and RA, respectively. Notably, the results for all methods are lower than on the validation set. This is true even for the diffusion model, which was not optimized for the validation set, indicating that the test set is more dissimilar to the training set than the validation set. Since the possible optimal score for HyperExtract, as shown in

Figure A6, is also significantly lower on the test set than on the validation set, this might indicate that the test set is overall more diverse and hence more challenging to segment.

Still, the general observations from the validation dataset hold true. We want to point out the fact that the ensemble significantly outperforms the single U-Net on all metrics (except on RA, where it is 0.002 worse).

Regarding the sub-ensembles, we observe Sub-Ens

10 to perform even better than the ensemble on

(from 0.631 to 0.606 on HF|from 0.403 to 0.631). The mean U-Net, similar to the results on the validation dataset, performs comparatively well on HF, but not on RA data. The combination mean + variance U-Net again fails to improve the results on both pathologies. The H-Extract results are not competitive, despite the chosen

being close to the optimum for the test dataset (see

Figure A6). Neither is the diffusion model, the results being lowest among all methods.

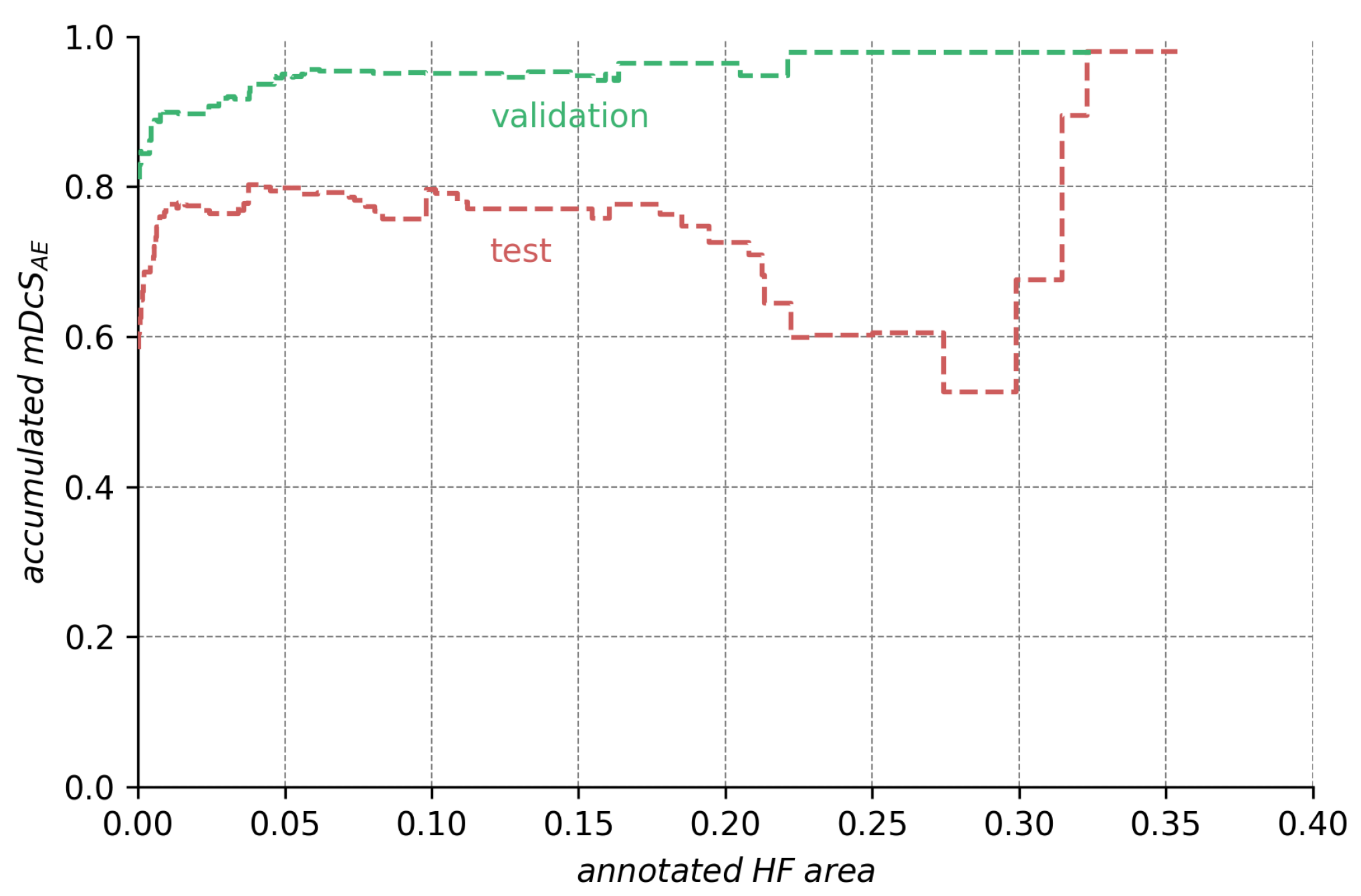

Analyzing the ensemble’s expected performance on the test dataset, we see from

Figure 7 that the average results for the ensembles are still acceptable. To investigate the factors contributing to the disparity between the test and validation performance, we analyzed those differences in regard to HF annotation size in Figures A1–A3 in Appendix A. From the data depicted, we see that the decreased performance stems (1) from the test set’s higher ratio of samples with very small HF annotations (<5% image size), but also (2) from poor performance on a few images with large annotations (>20% image size).

4.5. Segmentation Performance—Agreement among Experts and Ensembles

Table 7 and

Table 8 show the agreement among all five available expert annotations for nine FAF images as segmentation scores. Looking at

alone, we see that the experts only have an agreement of from 0.63 to 0.80 (mean 0.69) for HF and from 0.14 to 0.52 (mean 0.36) for RA.

However, significantly higher scores (mean 0.82 for HF|0.48 for RA) indicate that the comparatively low scores are, in many cases, caused by edge errors and not—which would be clinically relevant—by area errors, i.e., differently seen locations of HF and RA.

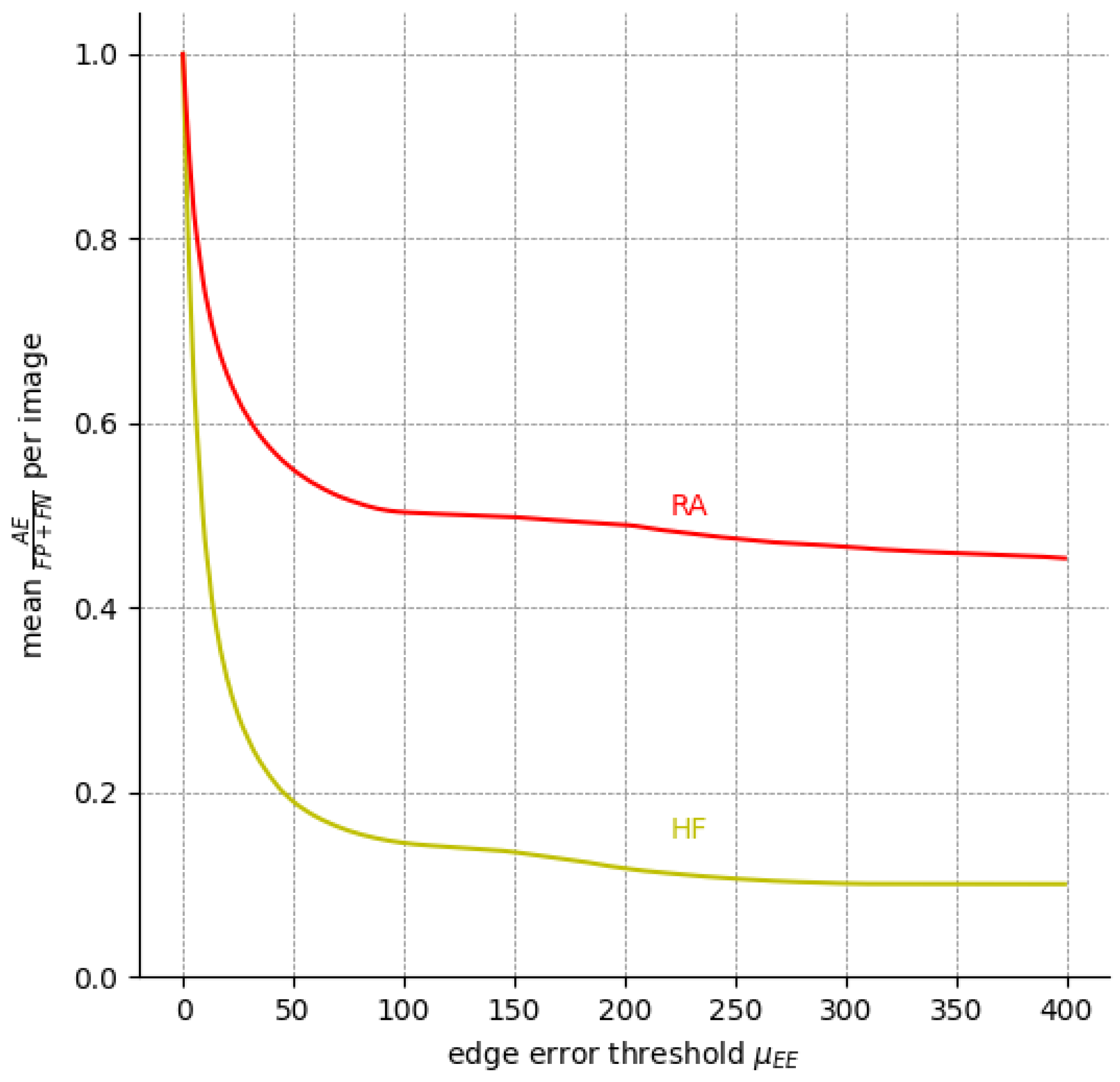

We can infer the same from

Figure 8, which depicts the percentage of EEs and AEs among all expert annotation pairs for possible values of the edge error thresholds

. Evidently, EEs outweigh AEs for a threshold as low as

px for HF. For

px, AEs already make up less than 20% of the HF errors, whereas RA is a more difficult label.

Still, at least 8% of HF errors are due to cases where no pair-wise overlap between the expert annotations exists, and are hence an AE by default.

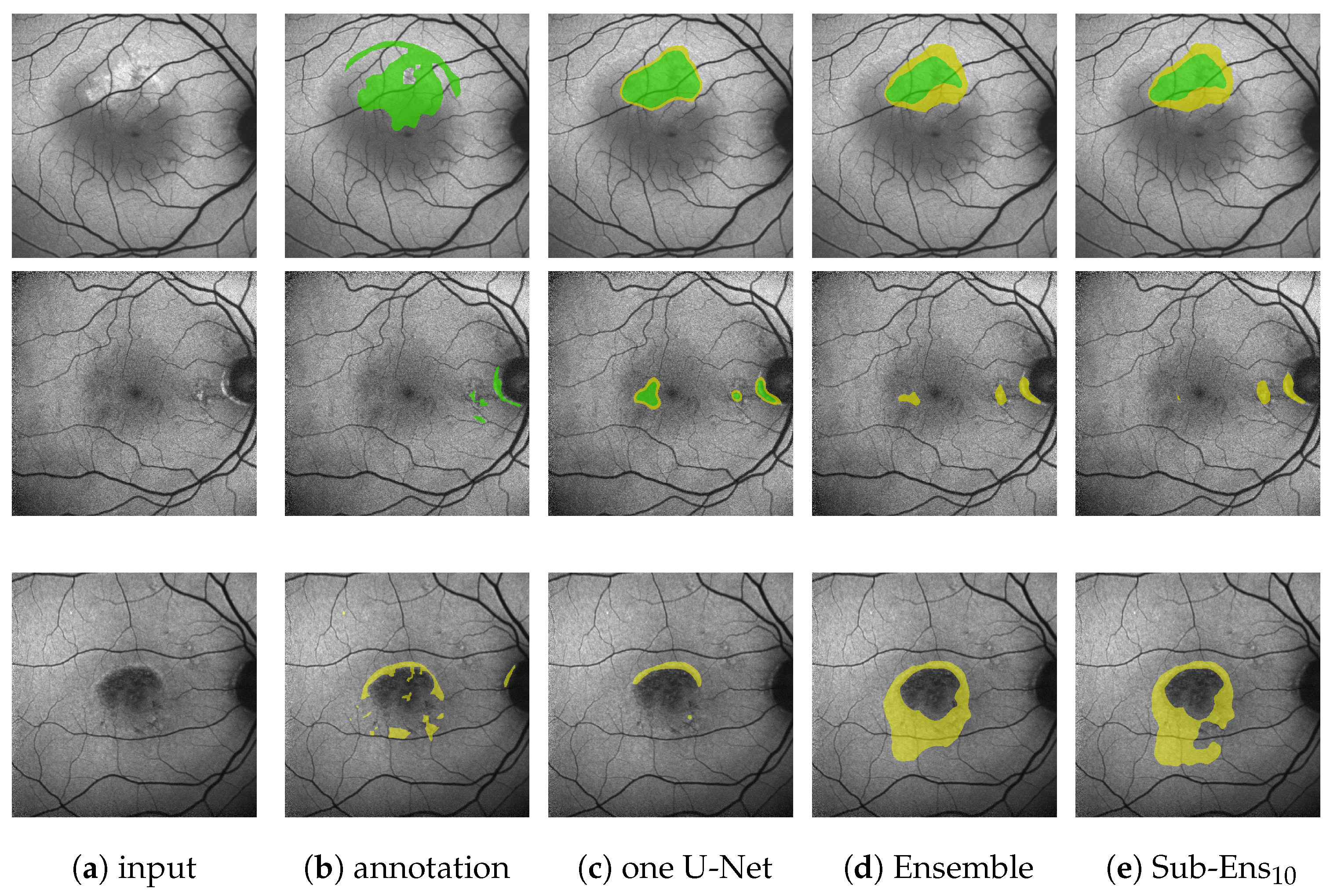

Figure 9 shows one such case where significant AEs are present, even among expert annotations.

Regarding the performance of the AI-driven methods, we note that for HF, all of them reach scores comparable to the experts ( 0.87 for ensemble|0.86 for Sub-Ens10|0.86 for mean U-Net|0.84 for single U-Net). However, on RA, the ensembles perform noticeably better than the single U-Net ( 0.47 for ensemble|0.47 for Sub-Ens10|0.45 for mean U-Net|0.38 for single U-Net), reflecting the previous results on the validation and test data. The mean U-Net, on the other hand, performs surprisingly well on RA, given the previous results.

4.6. Ternary Performance—Comparison against Accumulated Expert Annotations

Table 9 and

Table 10 illustrate the ternary performance on the ground truth from multiple experts for HF and RA, respectively. We see that the ensemble and sub-ensembles perform better than a single U-Net on all of the four main metrics.

Of notable significance are the ensemble’s improvements over a single U-Net for

(0.88 compared to 0.79) and

(0.99 compared to 0.96). Especially the latter score is important, as it shows that on the given data we detect

every instance of HF annotated by all experts, whereas a single U-Net does not. An example of this can be seen in the bottom row of

Figure 10, where the single U-Net, despite a very confident prediction, misses a significant HF area above the optic disc, which was indeed annotated by all experts. The ensemble, while not confident, does detect that very same area.

Though we are careful not to draw too decisive conclusions from only nine images, we do point attention to the fact that the ensemble’s

score of 0.88 is very comparable to aforementioned results on the validation dataset (0.87 as reported in

Table 3; note that on the validation set

=

).

Regarding the sub-ensembles, we infer from

Table 9 that especially the sub-ensembles with 10 and 5 networks are very close in performance to the full ensemble. This is supported by the visual examples in

Figure 10. Here, e.g., in the top row, the ensemble and all sub-ensembles robustly, and similarly to some experts, segment HF above and below the fovea (as well as almost encircling it to the right, contrary to the expert annotations), while the single U-Net, albeit confident, only predicts a large area of HF to the left of the fovea.

From this example, as well as from the

scores in

Table 9, we see that the improved ternary scores for the sub-ensembles again come at the cost of slight overpredictions.

Regarding the RA predictions given in

Table 10, we note that the precision scores have to be interpreted very carefully due to little to no area being actually predicted as

C. This explains missing and seemingly perfect precision scores. The

scores, however, do show that the proposed ensemble and sub-ensembles detect potential RA with significantly more reliability than the single U-Net approaches (from 0.57 to 0.68 for ensembles vs. a maximum of 0.31 for the single U-Nets). Notably, Sub-Ens10 again detects considerably more RA (0.68 to 0.59

) at the cost of some overpredictions (0.45

vs 0.55

).

4.7. Sub-Ensemble Comparison

Figure 11 compares the test segmentation and ternary scores for the different sub-ensemble sampling approaches both on HF and RA depending on the number of models included in the sub-ensemble.

We see that our proposed method performs very comparably for and on both HF and RA, despite our sampling not being optimized for segmentation scores. Regarding , our proposed sub-ensemble performs significantly better on both modalities for all numbers of models except for four models on RA, where it is second best.

A direct comparison for is difficult if the area of C is not taken into account. This is especially notable for results on RA, where for seven or more models all sub-ensembles see very drastic changes, both positive and negative. For HF, sampling the best n models for the sub-ensemble performs consistently best.
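To illustrate what sampling a sub-ensemble for diversity can look like, the sketch below greedily picks networks whose binary predictions disagree most with those already chosen. This greedy farthest-point heuristic is a stand-in chosen for illustration only and is not the sampling algorithm proposed in this work; the function names and the disagreement measure are hypothetical.

```python
def disagreement(a, b):
    """Fraction of pixels on which two binary predictions differ."""
    return sum(x != y for x, y in zip(a, b)) / len(a)

def greedy_diverse_subset(predictions, k):
    """Greedy farthest-point selection of k model indices.

    Starts with the model that disagrees most with all others, then repeatedly
    adds the model whose smallest disagreement with the chosen ones is largest.
    """
    n = len(predictions)
    first = max(range(n), key=lambda i: sum(
        disagreement(predictions[i], predictions[j]) for j in range(n)))
    chosen = [first]
    while len(chosen) < k:
        remaining = [i for i in range(n) if i not in chosen]
        best = max(remaining, key=lambda i: min(
            disagreement(predictions[i], predictions[j]) for j in chosen))
        chosen.append(best)
    return chosen

# Four toy networks predicting four pixels each on a validation image.
toy_predictions = [[0, 0, 0, 0],
                   [0, 0, 0, 1],
                   [1, 1, 1, 1],
                   [0, 0, 1, 1]]
selected = greedy_diverse_subset(toy_predictions, k=3)
```

In the toy example, the network that is nearly identical to an already selected one (index 1) is left out, which mirrors the intuition that similar networks add little to a small sub-ensemble.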

Table 11 compares the inference times for our proposed ensemble and sub-ensembles. An initial cost in time is required to load the models and to predict the first image. Afterwards, due to caching and optimizations by TensorFlow, the inference times are significantly reduced. Notably, sequential inference over the whole ensemble takes less than 4 s per image. Not explicitly included are the times it takes to load a single image, push it to the GPU, and receive the ensemble’s prediction, since all these operations are parallelized during batch prediction. Hence, the inference time for predicting a single image, as it might occur in clinical practice, could be slightly higher.

Also given are the inference times for a single forward pass of the diffusion model (i.e., the time needed to generate for one FAF image a number of segmentations equal to the batch size). We report no separate time for the first image, since the diffusion model itself is applied times per single HF prediction due to the iterative denoising approach. We note that our full ensemble is faster by a factor of over 16 compared to the diffusion model. Should GPU limitations only allow for a batch size smaller than the desired number of segmentations per FAF image, this factor multiplies by .

Comparing memory requirements the same way as runtimes is difficult due to dynamic memory allocation and serialization, but we note that while both the NVIDIA Titan Xp GPU and the NVIDIA GTX 1070 GPU could run all sub-ensembles proposed in this work, only the Titan Xp GPU could run the whole ensemble in parallel.

5. Discussion

5.1. Inter-Grader Agreement

From our analysis, we see that HF and particularly RA are difficult labels to segment precisely. Our mean results of 0.69 DcS agreement on HF and 0.36 DcS on RA are partly in accordance with the numbers recently reported by [7], who on FAFs with inherited retinal diseases had an inter-grader agreement of 0.72 DcS for HF and 0.75 DcS for RA.

Their higher RA agreement can be explained by their definition of RA having to be at least 90–100% as dark as the optic disc [

7]. If we compare this to our nine images with multiple annotations, we see that if we calculate the relative darkness as

our experts’ annotated RA is only 71–85% as dark as the optic disc.

5.2. Area Error Dice Score

The introduction of

was very helpful in distinguishing disagreements over the exact shape of HF from the much more important case where two graders disagree over the presence of HF in certain image areas. While for the most part in our evaluation, due to similar architectures close

values do indicate close

values, there are cases where this is not the case, e.g., Sub-Ens

3 vs. Sub-Ens

5 in

Table 5 or, most notably, in

Figure S2 in the Supplementary for

max sampling.

We also think that presenting

over

for some specific threshold avoids a selection bias. This comes at the cost of making the metric less intuitive to visualize, i.e., it is not as clear as with

or intersection over union (also Jaccard index) [

61] what a specific value of

looks like.

It should also be noted that, in its current state, the metric could be expressed as linearly weighting each FP and FN pixel based on its distance to the nearest TP pixel (or, more precisely, the minimum of its distance and the threshold). In the future, other weighting functions might prove even more useful.

5.3. Ternary Task

Our approach of utilizing a ternary segmentation system in contrast to classical binary segmentation has proven useful on the available data. Providing an additional label of “potential segmentation” allows us to handle the difficult task of HF and RA segmentation by keeping a high precision for confident predictions (more than 97% of confident predictions seen by at least two experts) and a very high recall (99% of HF annotated by all experts found).
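To make the idea of a ternary output concrete, the following sketch derives the three labels from the fraction of ensemble members that vote for a pixel. The two cut-offs (`potential_min`, `confident_min`), their default values, and the function names are hypothetical and chosen only for illustration; they should not be read as the thresholds used in our experiments.

```python
BACKGROUND, POTENTIAL, CONFIDENT = 0, 1, 2


def ternary_label(votes, n_models, potential_min=0.1, confident_min=0.5):
    """Map the number of positive votes for one pixel to a ternary label.
    Both cut-offs are hypothetical values for this sketch."""
    fraction = votes / n_models
    if fraction >= confident_min:
        return CONFIDENT
    if fraction >= potential_min:
        return POTENTIAL
    return BACKGROUND


def ternary_map(binary_predictions, potential_min=0.1, confident_min=0.5):
    """Combine binary masks (nested lists of 0/1) from several networks."""
    n_models = len(binary_predictions)
    rows, cols = len(binary_predictions[0]), len(binary_predictions[0][0])
    return [
        [
            ternary_label(
                sum(mask[r][c] for mask in binary_predictions),
                n_models, potential_min, confident_min,
            )
            for c in range(cols)
        ]
        for r in range(rows)
    ]


# Four toy networks predicting a 1x3 image.
ensemble_outputs = [
    [[1, 1, 0]],
    [[1, 0, 0]],
    [[1, 0, 0]],
    [[1, 0, 0]],
]
print(ternary_map(ensemble_outputs))
```

In this toy case, the first pixel is predicted by all networks and becomes a confident prediction, the second is predicted by only one network and becomes a potential segmentation, and the third remains background.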

A current limitation of our analysis is that we possess only very few images with multiple annotations, which somewhat limits our evaluation of the benefits of the ternary system. Future work on larger FAF datasets with multiple annotations is desirable.

5.4. Ensemble Segmentation

We were able to show the benefit of using an ensemble over a single network repeatedly on all datasets, especially with regard to the ternary task on annotations from multiple experts. This is all the more notable as our training data stems from the annotations of just one expert. Future work might analyze how the comparatively low agreement of the expert with themselves (0.85 , mean 0.82 for all experts among each other) might have helped in avoiding overfitting and creating diverse ensemble networks.

Our sampling approach for the sub-ensembles, which aims at maximum diversity, is well suited, as all sub-ensembles perform consistently well on all datasets. With as few as five networks (1/20th), we are within 1% of the full ensemble’s performance on three of the four main ternary metrics (and within 3% for

). For 10 networks, we achieve equal or even higher recall values than the full ensemble on all datasets, indicating that this particular sub-ensemble contains a good set of varied models, which, in the full ensemble, are partly suppressed by the majority of more similar networks. Considering these achievements, we consider the occasional and slight overprediction (0.05 and 0.06 lower

for HF) to be very acceptable. Additional evaluation, detailed in Section S3 of the Supplementary, shows that our segmentation U-Nets are generally robust against image noise.

We see a limitation in the fact that we currently cannot systematically set up diverse networks before or during training (compare Section S1 in the Supplementary). While bootstrapping is evidently capable of creating sufficiently diverse networks from annotations of just one expert to avoid overfitting and overconfident predictions, we currently have little control over how this diversity is generated. The similarity or dissimilarity of the selected training data or patients between networks gives little indication of the similarity of the output predictions. This necessitates spending time and hardware resources on training many networks in the hope that we can select a suitable subset afterward. Future work hence needs to look at the influence of the other random parameters (random initialization, augmentation, data shuffling, GPU parallelization) in order to allow the targeted training of diverse networks.

Future work will also analyze how well our ensembles generalize to new data, e.g., data from other centers or from other FAF imaging devices. Since we do not apply any data-specific preprocessing and use strong augmentations during training, we would expect some robustness to new data; however, further evaluation is required.

In this regard, we would also like to point out that our proposed diversity-based sub-ensemble sampling approach does not require ground truth. This opens up the possibility of taking a set of models trained on one dataset and selecting a subset with maximum diversity on another dataset without the need for further annotation.
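The fact that diversity can be measured on predictions alone can be illustrated with a simple greedy selection. Note that this is not the sampling procedure used in this work but a generic farthest-point heuristic, shown only to make the absence of ground truth explicit; the disagreement measure (one minus the Dice score between two networks' predictions), the function names, and the toy predictions are assumptions of this sketch.

```python
from itertools import combinations


def disagreement(pred_a, pred_b):
    """1 - Dice score between two flattened binary predictions."""
    intersection = sum(1 for a, b in zip(pred_a, pred_b) if a and b)
    total = sum(pred_a) + sum(pred_b)
    return 0.0 if total == 0 else 1.0 - 2.0 * intersection / total


def select_diverse(predictions, k):
    """Greedily pick k model names with large mutual disagreement.
    `predictions` maps a model name to its flattened binary prediction
    on unlabeled images; no ground truth is involved."""
    names = sorted(predictions)
    if not 2 <= k <= len(names):
        raise ValueError("k must be between 2 and the number of models")
    # Start with the most dissimilar pair of models.
    selected = list(max(
        combinations(names, 2),
        key=lambda pair: disagreement(predictions[pair[0]], predictions[pair[1]]),
    ))
    # Repeatedly add the model that is farthest from its closest selected model.
    while len(selected) < k:
        remaining = [n for n in names if n not in selected]
        best = max(remaining, key=lambda n: min(
            disagreement(predictions[n], predictions[s]) for s in selected))
        selected.append(best)
    return selected


# Toy predictions of four hypothetical networks on four unlabeled pixels.
toy_predictions = {
    "A": [1, 1, 0, 0],
    "B": [1, 1, 0, 0],  # identical to A, adds no diversity
    "C": [0, 0, 1, 1],
    "D": [1, 0, 1, 0],
}
print(select_diverse(toy_predictions, k=3))
```

In the toy example, the redundant network B is skipped in favor of D, since B's prediction is identical to that of the already selected network A.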

5.5. Baselines

Regarding HyperExtract [15], we showed that the color conversion proposed in the original paper brings no benefit over working directly on the grayscale values (Figure A4). Still, even with our optimization approach with respect to the probability of a pixel intensity indicating HF, the prediction performance is not competitive. We hence conclude that on our data, HF segmentation based solely on pixel intensity does not yield sufficient results.

Regarding the diffusion model by [31], we note that even with our very limited training data compared to the original work, we occasionally generate very good results, indicating that with sufficient amounts of data, these more expressive models might be able to generate good HF segmentations. Still, while having to train only a single network for multiple predictions is beneficial, we notice two drawbacks: we have little to no control over the diversity of the output, since it results from feeding random noise into the algorithm, and the denoising process takes considerably longer than inference with our proposed segmentation ensembles.

5.6. Runtimes

The runtimes of our proposed models (less than of a second for all sub-ensembles) are very suitable for a clinical setting. It is notable that even the whole ensemble with 100 models is able to predict an image in roughly 3 s. We believe this to be acceptable for clinical application, where a typical use case would entail loading the model once and then providing predictions as a microservice, rendering the drawback of a long initial loading time of up to 6 min almost irrelevant. Optimizations and parallelization could further reduce this latency, though we consider these out of scope for this work.

We also note that we are significantly faster than the diffusion model baseline by a factor of at least 16 for the full ensemble and by a factor of at least 181 for the sub-ensemble with 10 models.

5.7. Annotation Selection

While the results of our current analysis (shown in Section S2 of the Supplementary) are interesting in the sense that a number of average-sized annotations seem to yield better results than an equal number of mixed or large annotations, we do note that further evaluation is necessary to derive general annotation guidelines from this. One aspect that should be analyzed in future work is the properties of the validation and test sets, though we see, e.g., from Figure A2, that our current validation set does contain a mix of small, medium, and large samples.

Other factors besides annotated area were considered as well (e.g., number of individual patients vs. data from multiple appointments of the same patient), but due to limitations in our current set of annotated data, they could not be sufficiently analyzed.

Now that we possess an initial dataset capable of training a segmentation ensemble, the integration of active learning aspects as in [62] to specifically target annotation ambiguity could prove to be useful.