UAV Photogrammetry-Based Apple Orchard Blossom Density Estimation and Mapping

Abstract

1. Introduction

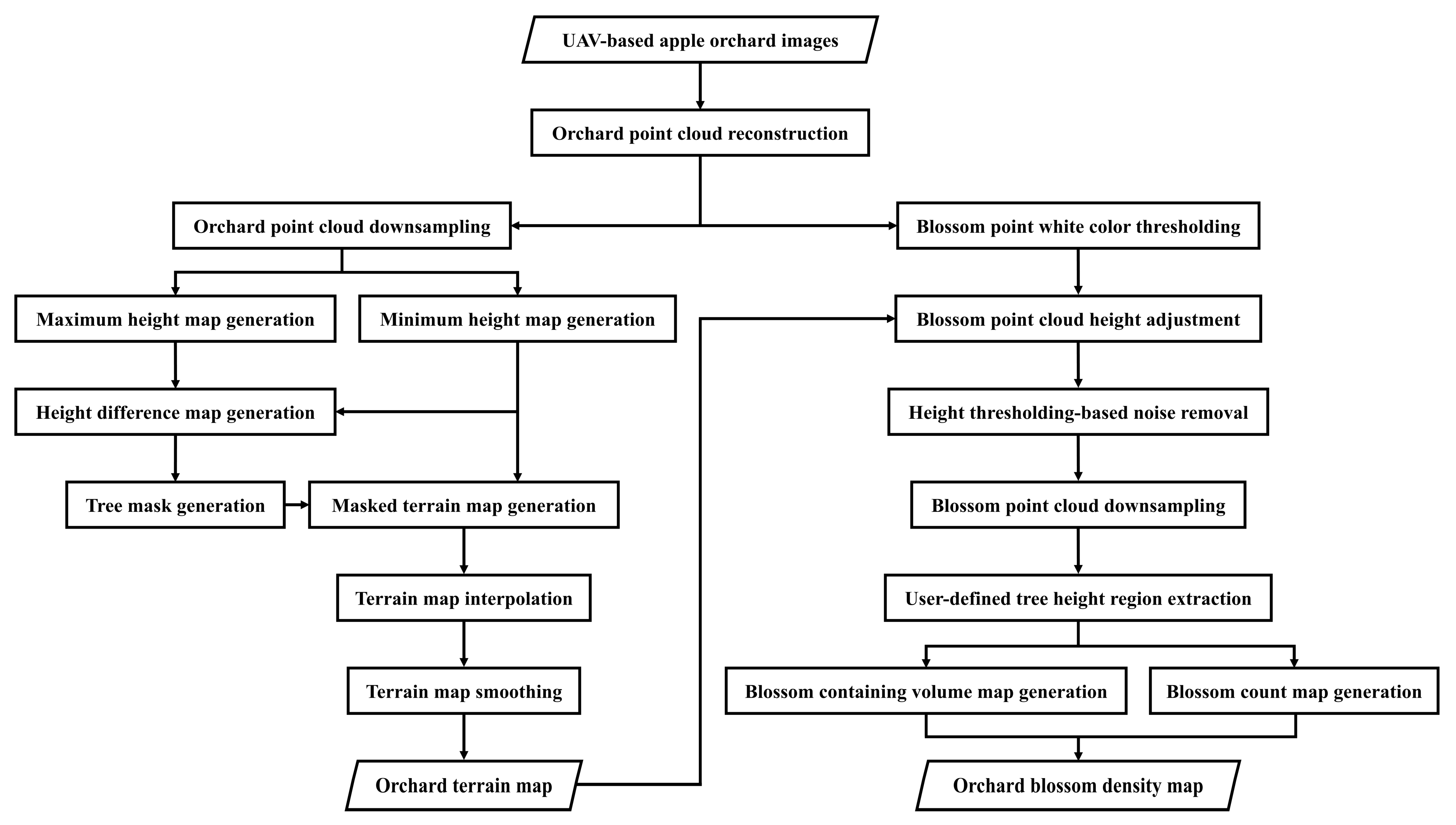

2. The Proposed Mapping Algorithm

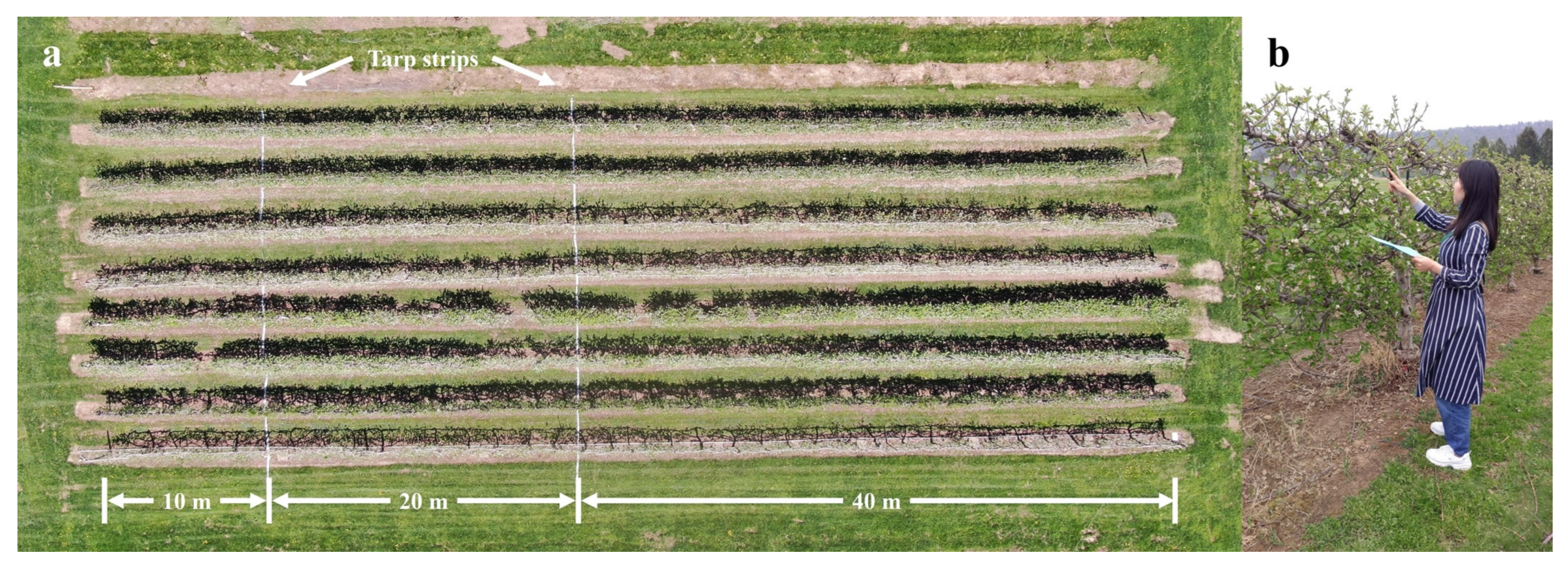

3. Preliminary Field Experiment

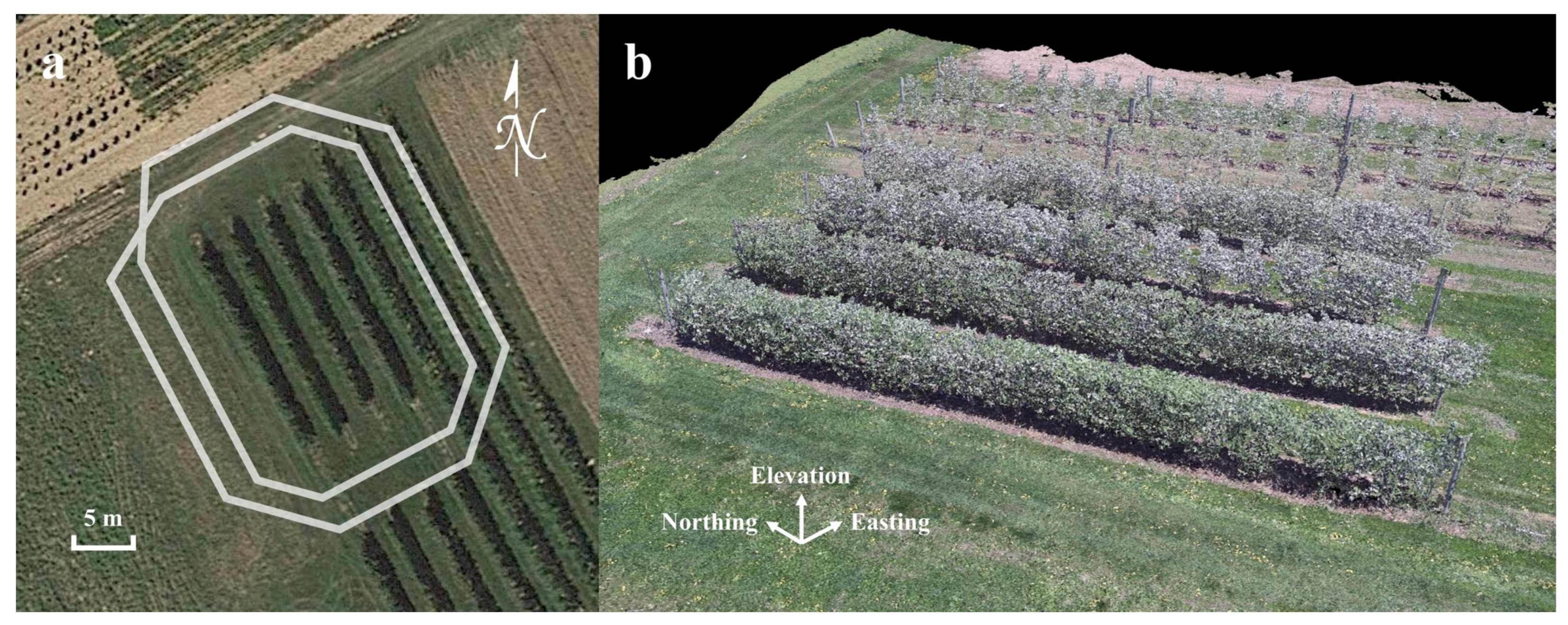

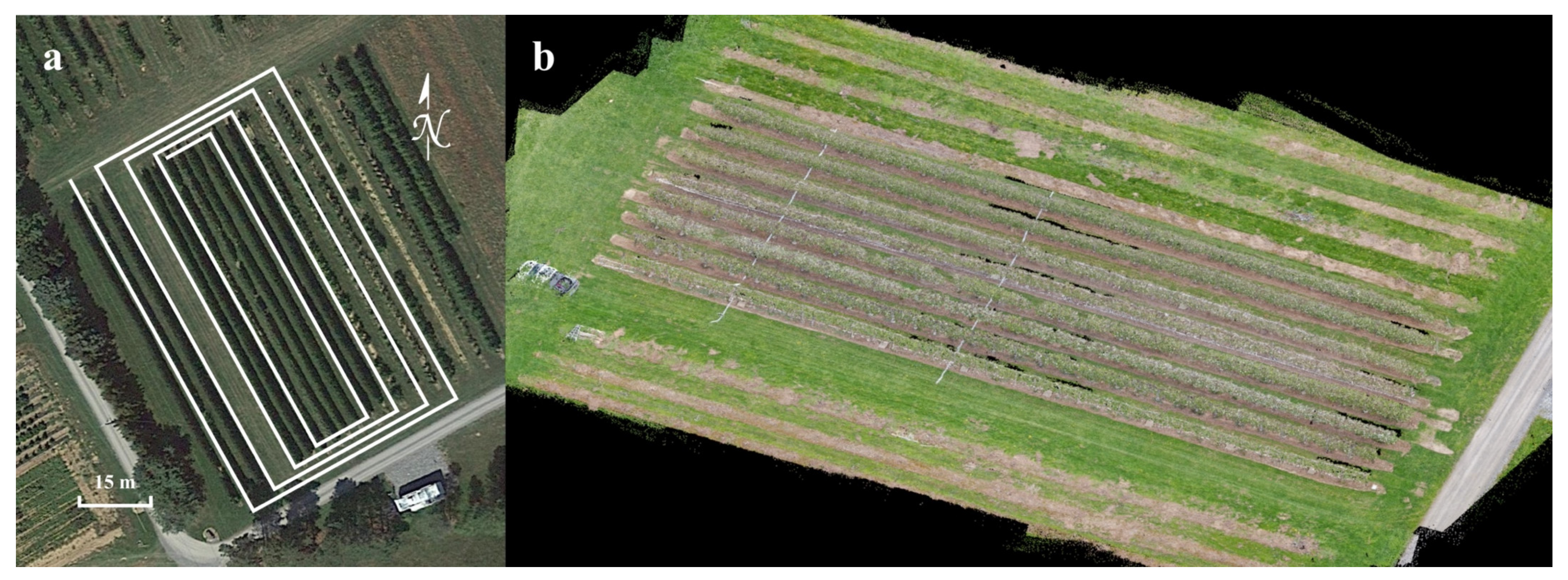

3.1. Data Collection

3.2. Data Processing and Analysis

4. Results and Discussion

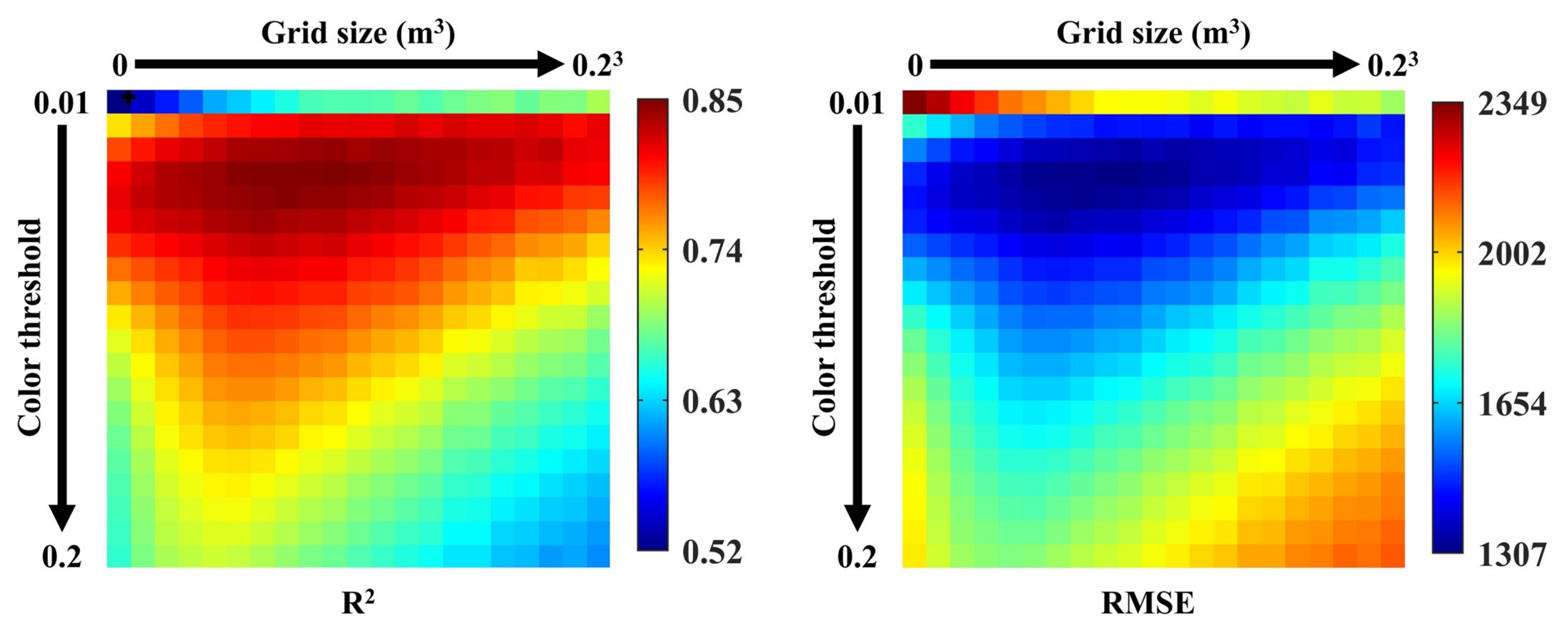

4.1. Optimal White Blossom Color Threshold and Blossom Grid Filter Size

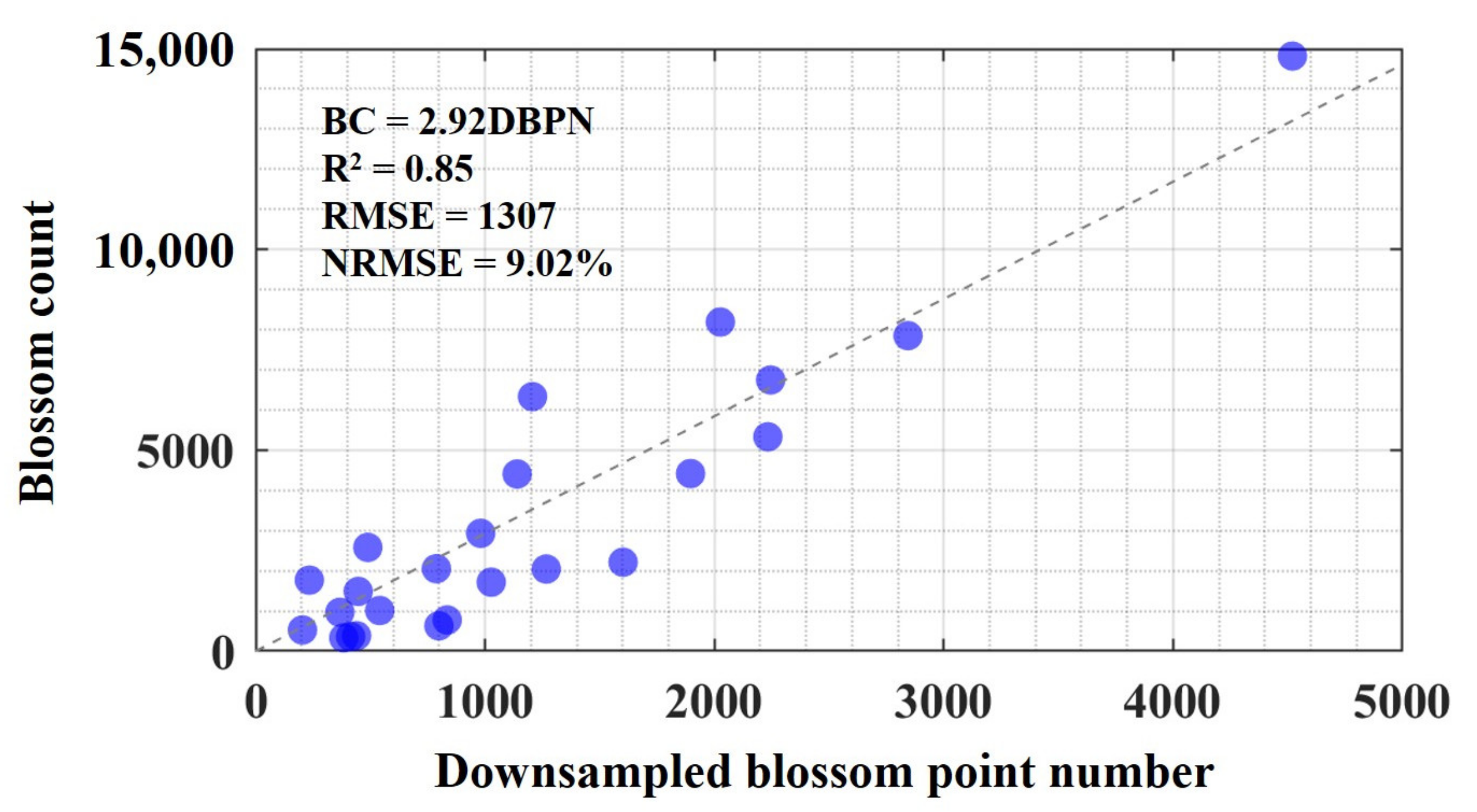

4.2. Blossom Count Estimation Accuracy

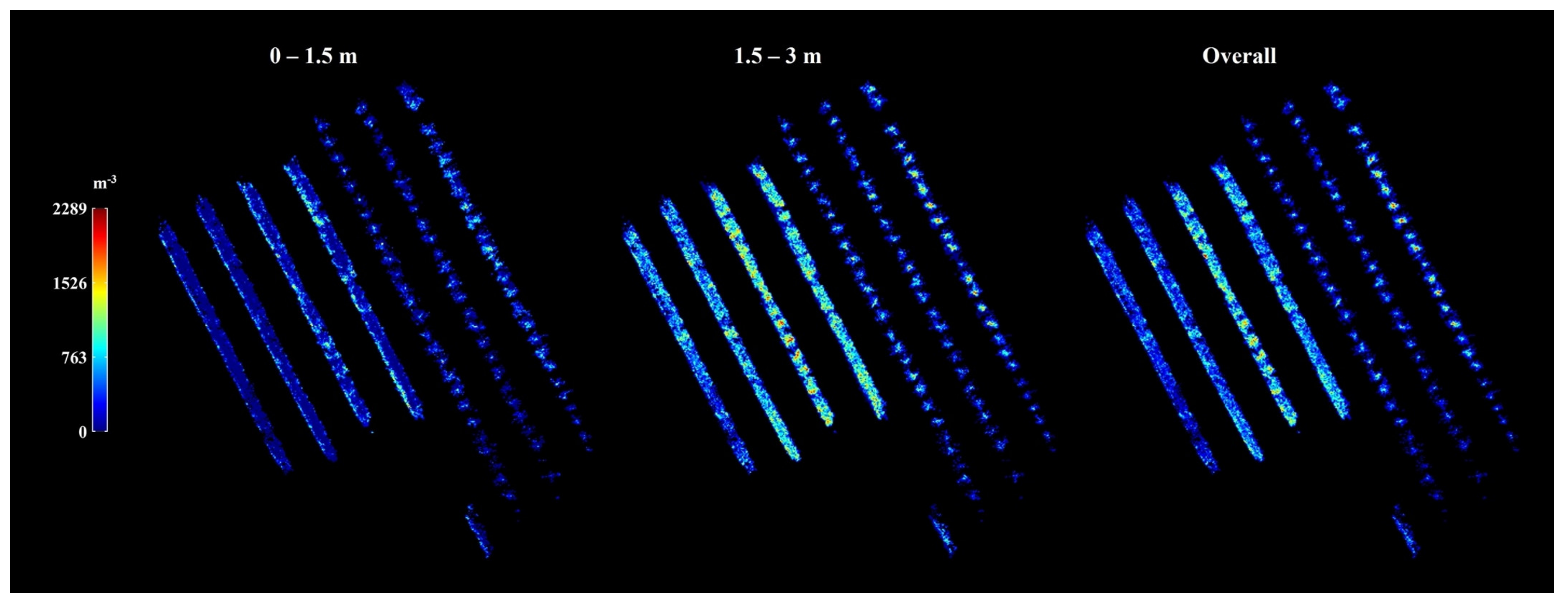

4.3. Blossom Density Monitoring Application

4.4. Implications and Future Work

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. Detailed Algorithm Breakdown

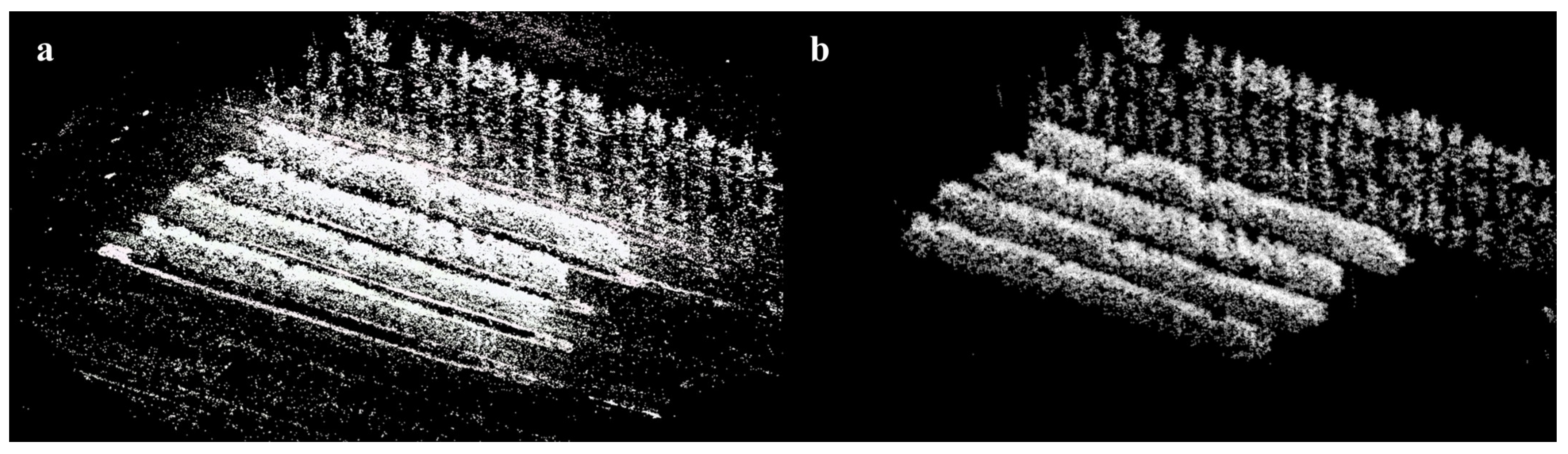

Appendix A.1. Sample Data Collection and Point Cloud Generation

Appendix A.2. Terrain Map Generation

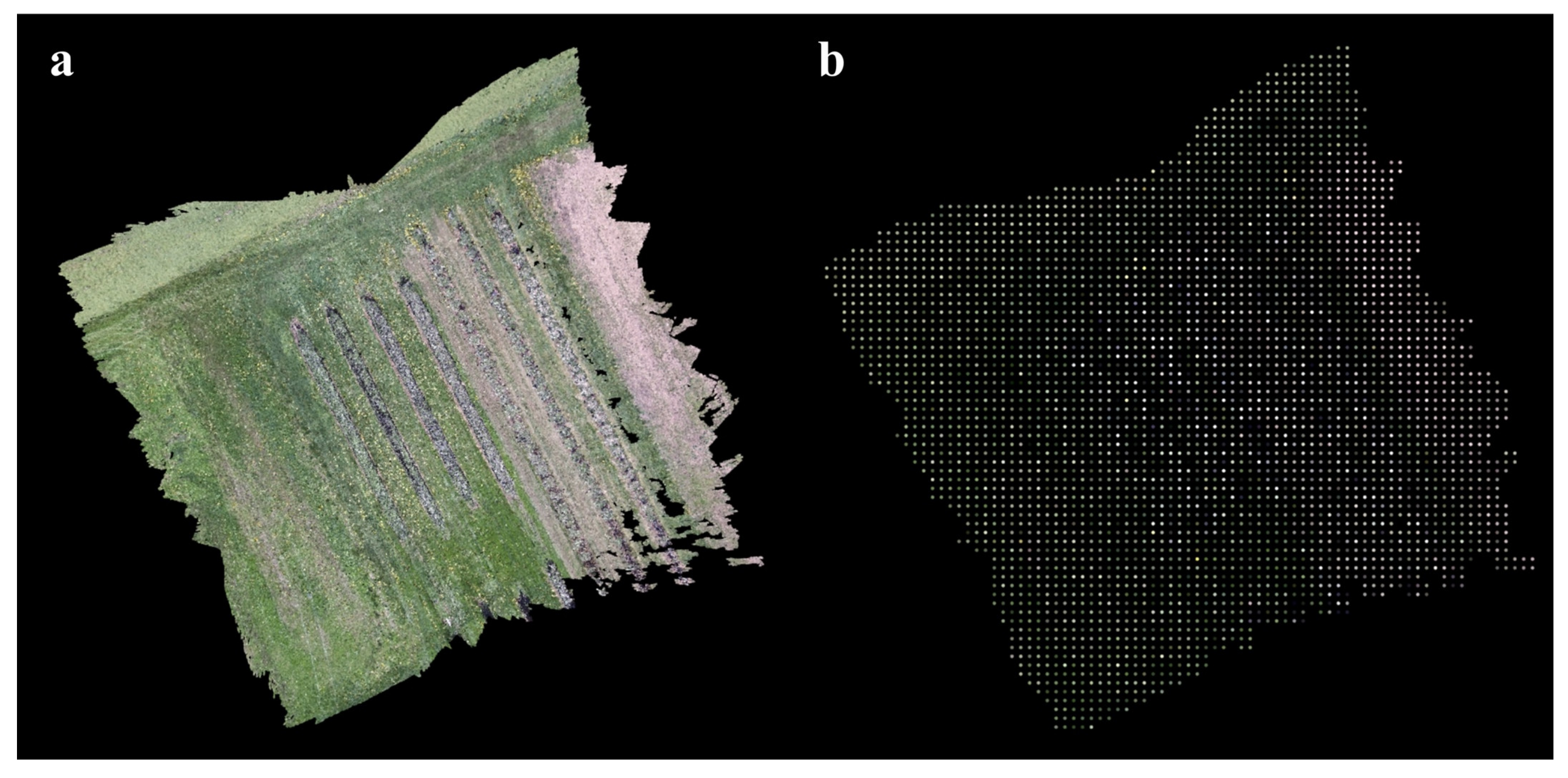

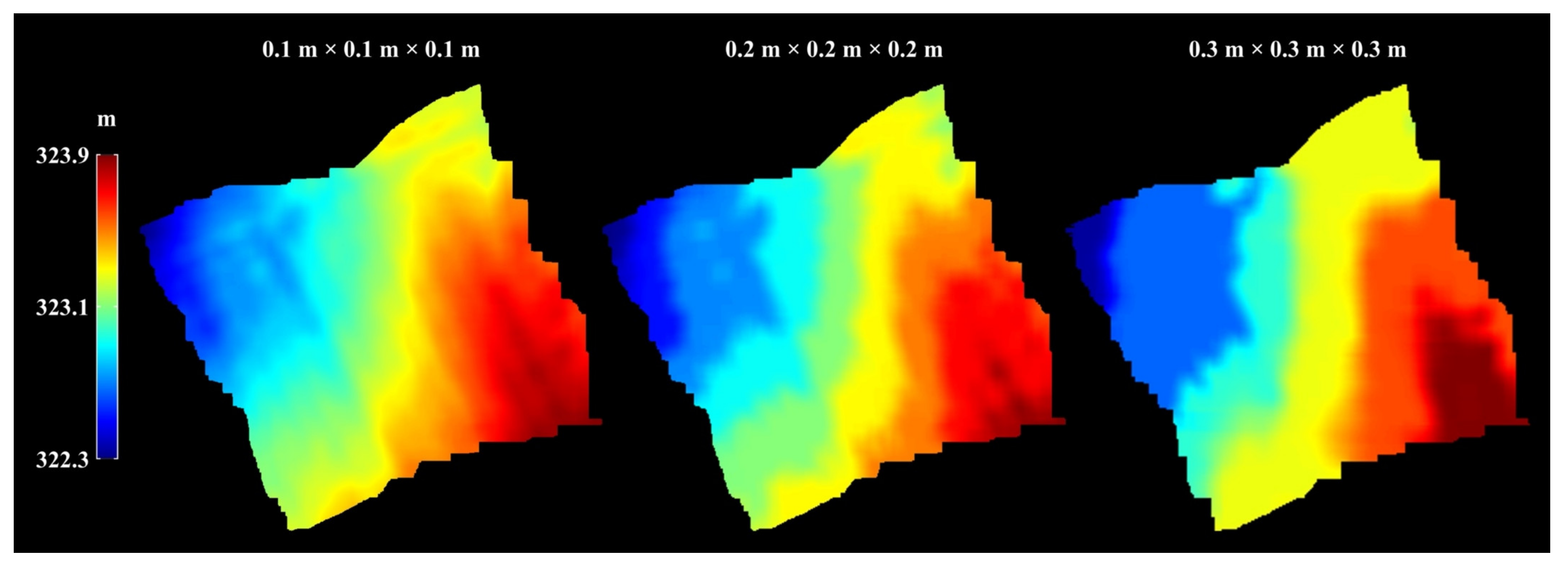

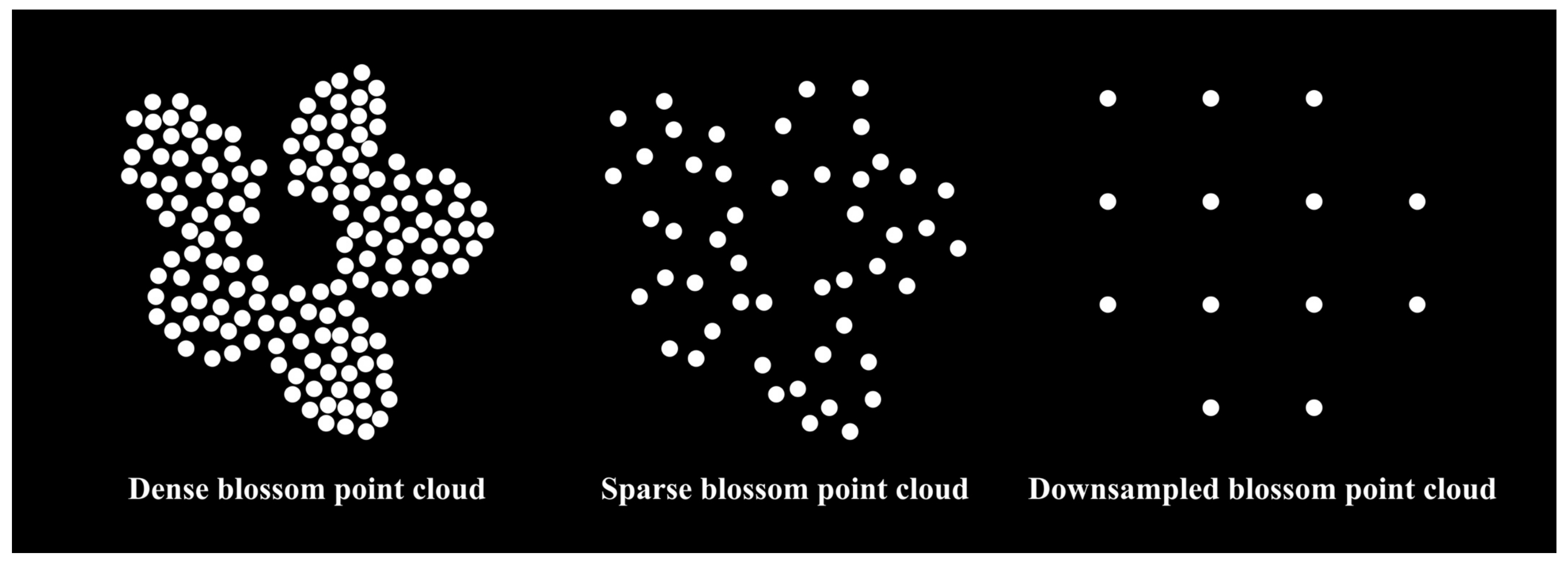

Appendix A.2.1. Point Cloud Downsampling

Appendix A.2.2. Height Map Generation

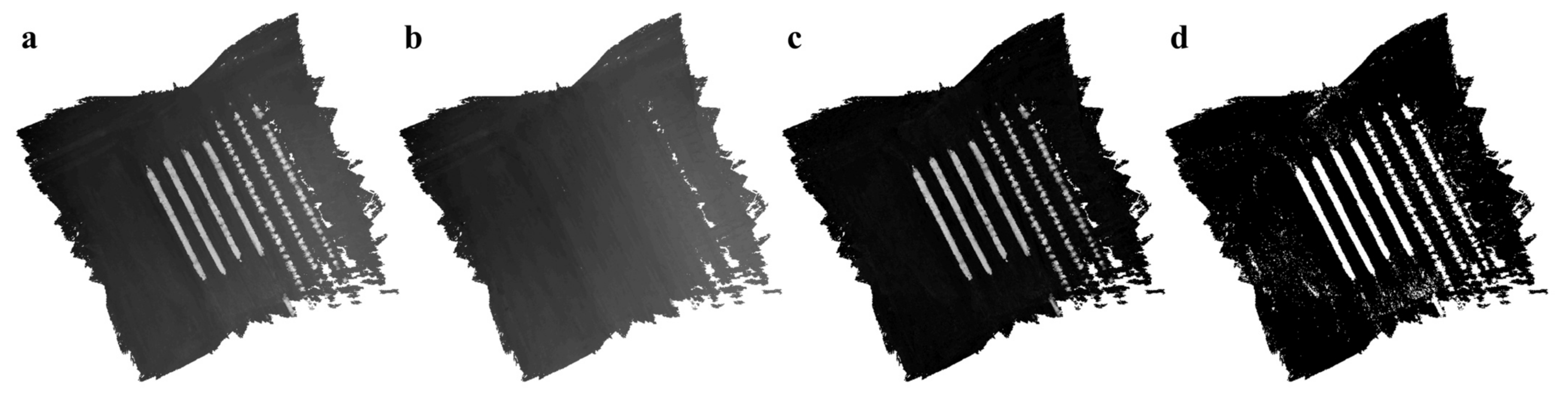

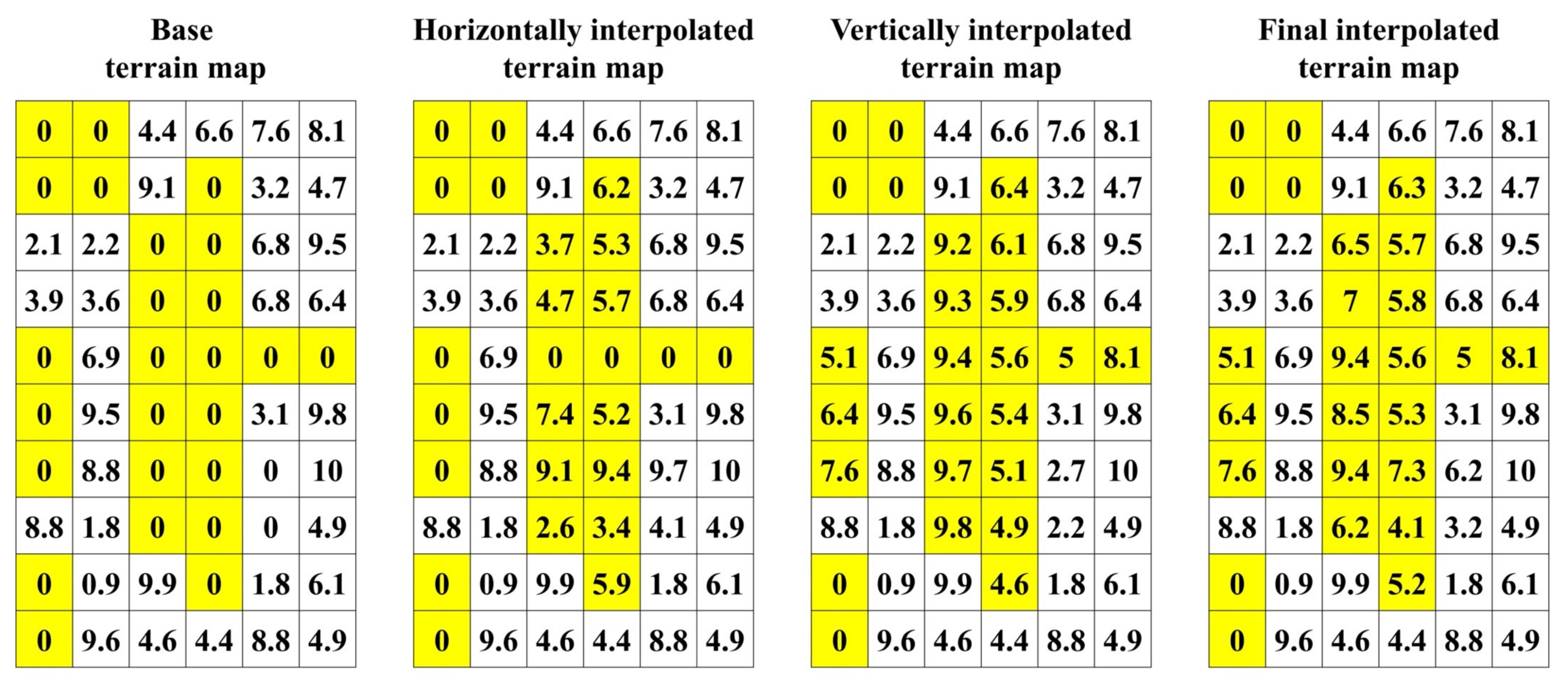

Appendix A.2.3. Terrain Map Interpolation and Smoothing

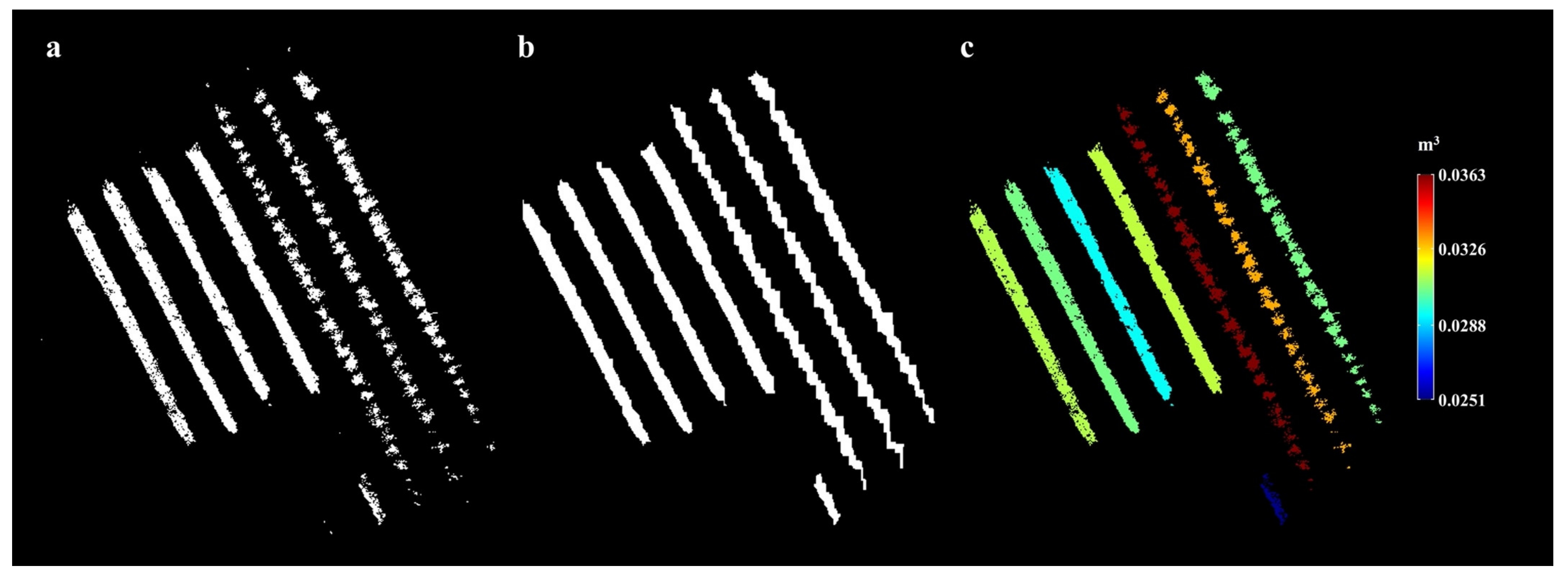

Appendix A.3. Blossom Point Cloud Extraction and Downsampling

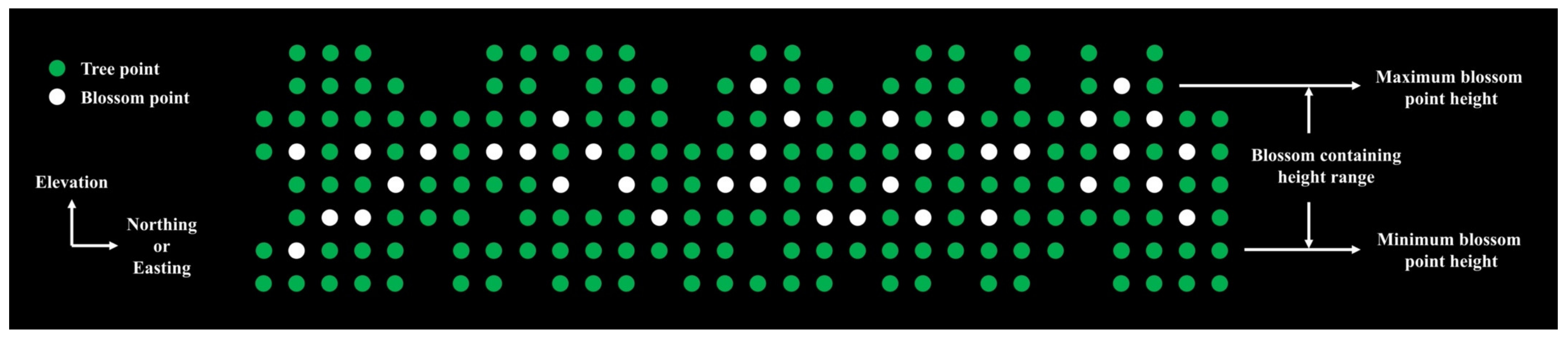

Appendix A.4. User-Defined Tree Height Region

Appendix A.5. Blossom Density Map Generation

Appendix A.5.1. Blossom-Containing Volume Map Generation

Appendix A.5.2. Blossom Count and Density Calculation


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yuan, W.; Hua, W.; Heinemann, P.H.; He, L. UAV Photogrammetry-Based Apple Orchard Blossom Density Estimation and Mapping. Horticulturae 2023, 9, 266. https://doi.org/10.3390/horticulturae9020266
