Machine Vision for Ripeness Estimation in Viticulture Automation

Abstract

1. Introduction

2. Grape Ripeness Peculiarities

3. Grape Ripeness Estimation Indices

4. Machine Vision Methods for Grape Ripeness Estimation

4.1. Color Imaging

4.2. Hyperspectral Imaging

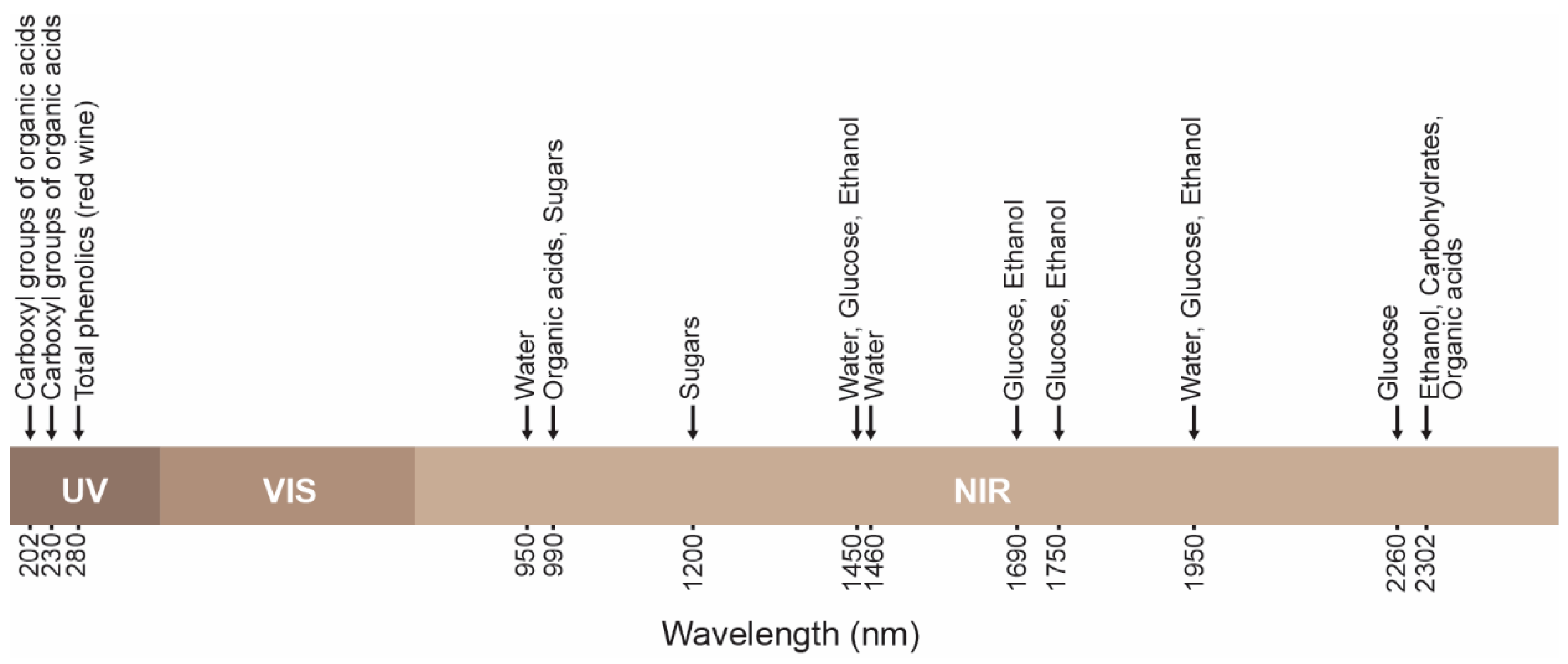

4.3. NIR Spectroscopy

5. Limitations and Perspectives

Crop Growth Models

6. Integration to Grape Harvesting Agrobots

| Ref. | Integrated to Agrobots/Harvest Actions | Advantages | Limitations/Review | Equipment | Time | Performance |
|---|---|---|---|---|---|---|
| [22] | No/No | Real-time ripeness estimation and construction of georegistered spatial maps during the growing season. | The segmentation algorithm fails due to the range of the circle radius and the weak gradient across grape boundaries. A monitoring agrobot. | Not defined | Not defined | Up to 96.88% classification rate |
| [24] | Yes/No | Real-time ripeness estimation; generates spatial maps showing the distribution of color development; can enable selective harvesting; better imaging results compared to human measurements. | Uses a flash illumination system; large variations in color development across vineyards because measurements are not dense. A monitoring robot. | RGB Point Grey Grasshopper cameras (8.8 mm lens, 90 mm baseline) and a pair of xenon flashlamps (5–10 J) | 0.2 s | R² = 0.56 |
| [28] | No/No | Robust method that uses color images; able to detect complex and highly non-linear relationships. | A large image dataset is required. It has the potential to be integrated into a harvesting agrobot. | OnePlus 3T smartphone | Not defined | Up to 79% classification rate |
| [29] | Yes/Yes | Real-time ripeness estimation and decision making on harvesting the detected grapes according to the estimated maturity degree. The method takes into account all order statistics extracted from image histograms. | Only the green channel of the RGB color space is investigated; small image dataset acquired from video frames. Suitable for monitoring and harvesting; already integrated into an agrobot [60]. | Sensor for the simulation not defined (based on video frames from a public dataset); ZED Mini 3D IMU camera (on-site) | 0.125 s | 5.36% average error |
| [34] | No/No | Can rapidly provide spatial information on crop status at farm scale and determine maturity zones. | Does not perform in real-time. Acquired images first need to be processed to derive vineyard maps. Depends on UAV images and therefore cannot be integrated. | Multispectral camera Multispec 4C, Airinov, France (12 cm pixel size on the ground, 13 mm lens-to-focus distance) | Not defined | Up to 83.33% classification rate |
| [37] | Yes/No | Ripeness estimation is performed in real-time while the agrobot is moving. | Ripeness is estimated for a block of five trees, not for each cluster in the image. It is a monitoring agrobot and takes no further action, i.e., harvest. | Push-broom Resonon Pika L VNIR hyperspectral imaging camera, Resonon, Bozeman, MT, USA (8 mm focal length) | 0.84 s | Up to 83.30% classification rate |

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

- Sohaib Ali Shah, S.; Zeb, A.; Qureshi, W.S.; Arslan, M.; Ullah Malik, A.; Alasmary, W.; Alanazi, E. Towards fruit maturity estimation using NIR spectroscopy. Infrared Phys. Technol. 2020, 111, 103479. [Google Scholar] [CrossRef]

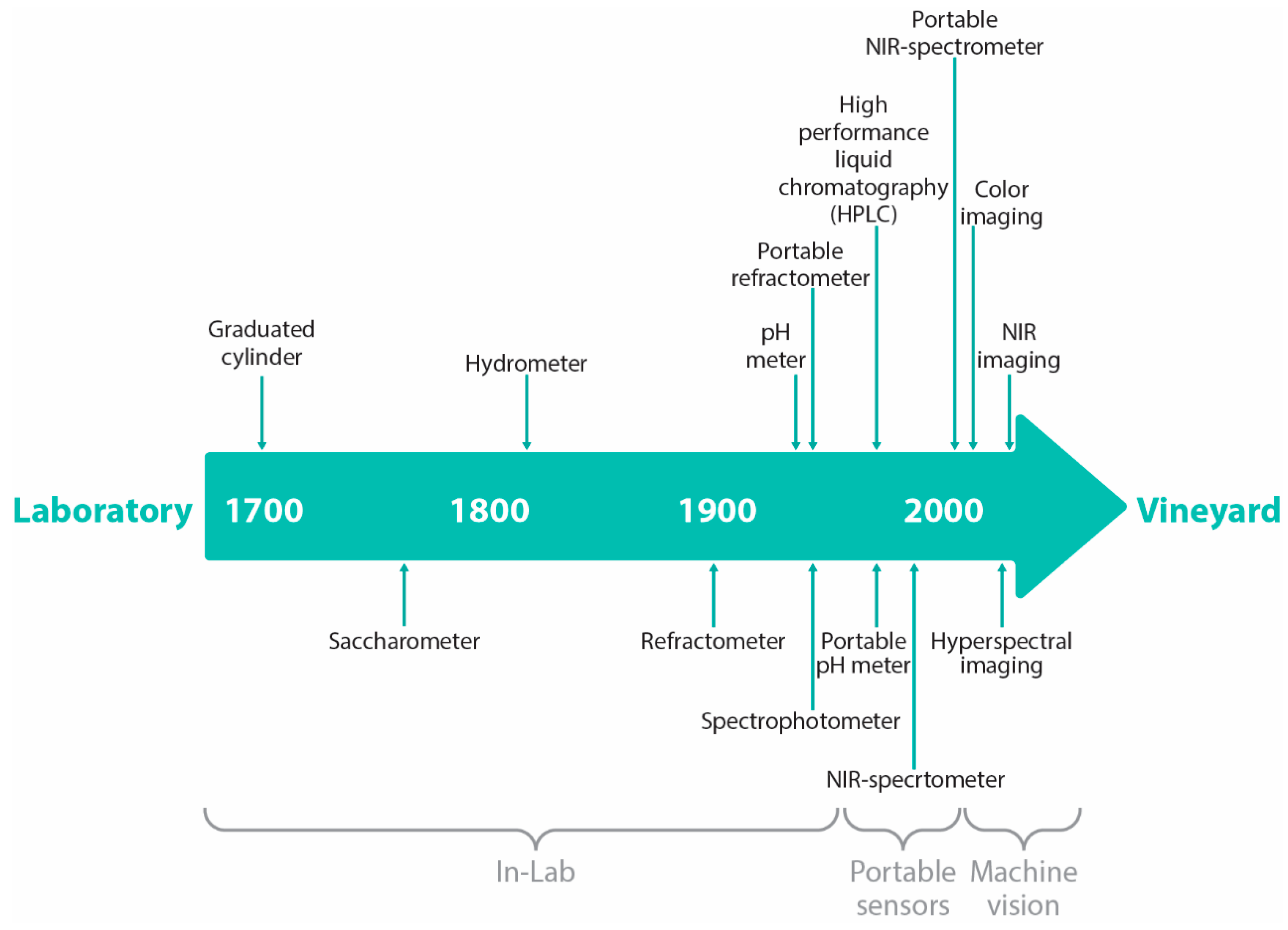

- Power, A.; Truong, V.K.; Chapman, J.; Cozzolino, D. From the Laboratory to The Vineyard—Evolution of The Measurement of Grape Composition using NIR Spectroscopy towards High-Throughput Analysis. High-Throughput 2019, 8, 21. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Drappier, J.; Thibon, C.; Rabot, A.; Geny-Denis, L. Relationship between wine composition and temperature: Impact on Bordeaux wine typicity in the context of global warming—Review. Crit. Rev. Food Sci. Nutr. 2019, 59, 14–30. [Google Scholar] [CrossRef] [PubMed]

- Cramer, G.R.; Cochetel, N.; Ghan, R.; Destrac-Irvine, A.; Delrot, S. A sense of place: Transcriptomics identifies environmental signatures in Cabernet Sauvignon berry skins in the late stages of ripening. BMC Plant Biol. 2020, 20, 41. [Google Scholar] [CrossRef] [Green Version]

- González-Lázaro, M.; Martínez-Lapuente, L.; Guadalupe, Z.; Ayestaran, B.; Bueno-Herrera, M.; López de la Cuesta, P.; Pérez-Magariño, S. Evaluation of grape ripeness, carbonic maceration and pectolytic enzymes to improve the chemical and sensory quality of red sparkling wines. J. Sci. Food Agric. 2020, 100, 2618–2629. [Google Scholar] [CrossRef]

- Jackson, R.S. Innovations in Winemaking. In Science and Technology of Fruit Wine Production; Elsevier: Amsterdam, The Netherlands, 2017; pp. 617–662. ISBN 9780128010341. [Google Scholar]

- Mavridou, E.; Vrochidou, E.; Papakostas, G.A.; Pachidis, T.; Kaburlasos, V.G. Machine Vision Systems in Precision Agriculture for Crop Farming. J. Imaging 2019, 5, 89. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Tian, H.; Wang, T.; Liu, Y.; Qiao, X.; Li, Y. Computer vision technology in agricultural automation—A review. Inf. Process. Agric. 2020, 7, 1–19. [Google Scholar] [CrossRef]

- Rothen, F. International Organisation of Vine and Wine (OIV). In International Code of Oenological Practices; International Organisation of Vine and Wine (OIV): Paris, France, 2021; ISBN 978-2-85038-030-3. [Google Scholar]

- Wolf, T. Wine Production Guide for Eastern North America (NRAES 145); Natural Resource, Agriculture, and Engineering Service (NRAES): Ithaca, NY, USA, 2008. [Google Scholar]

- Bisson, L. In search of optimal grape maturity. Pract. Winer. Vineyard J. 2001, 23, 32–34. [Google Scholar]

- Rabot, A. Using of a combined approach by biochemical and image analysis to develop a new method to estimate seed maturity stage for Bordeaux area grapevine. OENO One 2017, 51, 29–35. [Google Scholar] [CrossRef] [Green Version]

- Hernández-Hierro, J.M.; Nogales-Bueno, J.; Rodríguez-Pulido, F.J.; Heredia, F.J. Feasibility Study on the Use of Near-Infrared Hyperspectral Imaging for the Screening of Anthocyanins in Intact Grapes during Ripening. J. Agric. Food Chem. 2013, 61, 9804–9809. [Google Scholar] [CrossRef]

- Rodríguez-Pulido, F.J.; Gómez-Robledo, L.; Melgosa, M.; Gordillo, B.; González-Miret, M.L.; Heredia, F.J. Ripeness estimation of grape berries and seeds by image analysis. Comput. Electron. Agric. 2012, 82, 128–133. [Google Scholar] [CrossRef]

- Rodríguez-Pulido, F.J.; Ferrer-Gallego, R.; Lourdes González-Miret, M.; Rivas-Gonzalo, J.C.; Escribano-Bailón, M.T.; Heredia, F.J. Preliminary study to determine the phenolic maturity stage of grape seeds by computer vision. Anal. Chim. Acta 2012, 732, 78–82. [Google Scholar] [CrossRef] [PubMed]

- Gowen, A.; Odonell, C.; Cullen, P.; DowneyY, G.; Frias, J. Hyperspectral imaging—An emerging process analytical tool for food quality and safety control. Trends Food Sci. Technol. 2007, 18, 590–598. [Google Scholar] [CrossRef]

- ElMasry, G.; Wang, N.; ElSayed, A.; Ngadi, M. Hyperspectral imaging for nondestructive determination of some quality attributes for strawberry. J. Food Eng. 2007, 81, 98–107. [Google Scholar] [CrossRef]

- Taghizadeh, M.; Gowen, A.A.; O’Donnell, C.P. Comparison of hyperspectral imaging with conventional RGB imaging for quality evaluation of Agaricus bisporus mushrooms. Biosyst. Eng. 2011, 108, 191–194. [Google Scholar] [CrossRef]

- Versari, A.; Parpinello, G.P.; Mattioli, A.U.; Galassi, S. Determination of grape quality at harvest using Fourier-transform mid-infrared spectroscopy and multivariate analysis. Am. J. Enol. Vitic. 2008, 59, 317–322. [Google Scholar]

- Martelo-Vidal, M.J.; Vázquez, M. Evaluation of ultraviolet, visible, and near infrared spectroscopy for the analysis of wine compounds. Czech J. Food Sci. 2014, 32, 37–47. [Google Scholar] [CrossRef] [Green Version]

- Cozzolino, D.; Dambergs, R.G.; Janik, L.; Cynkar, W.U.; Gishen, M. Analysis of Grapes and Wine by near Infrared Spectroscopy. J. Near Infrared Spectrosc. 2006, 14, 279–289. [Google Scholar] [CrossRef]

- Rahman, A.; Hellicar, A. Identification of mature grape bunches using image processing and computational intelligence methods. In Proceedings of the 2014 IEEE Symposium on Computational Intelligence for Multimedia, Signal and Vision Processing (CIMSIVP), Orlando, FL, USA, 9–12 December 2014; pp. 1–6. [Google Scholar]

- Avila, F.; Mora, M.; Oyarce, M.; Zuñiga, A.; Fredes, C. A method to construct fruit maturity color scales based on support machines for regression: Application to olives and grape seeds. J. Food Eng. 2015, 162, 9–17. [Google Scholar] [CrossRef]

- Pothen, Z.; Nuske, S. Automated Assessment and Mapping of Grape Quality through Image-based Color Analysis. IFAC-PapersOnLine 2016, 49, 72–78. [Google Scholar] [CrossRef]

- Xia, Z.; Wu, D.; Nie, P.; He, Y. Non-invasive measurement of soluble solid content and pH in Kyoho grapes using a computer vision technique. Anal. Methods 2016, 8, 3242–3248. [Google Scholar] [CrossRef]

- Hernández, S.; Morales, L.; Urrutia, A. Unsupervised learning for ripeness estimation from grape seeds images. Int. J. Smart Sens. Intell. Syst. 2017, 10, 594–612. [Google Scholar] [CrossRef] [Green Version]

- Cavallo, D.P.; Cefola, M.; Pace, B.; Logrieco, A.F.; Attolico, G. Non-destructive and contactless quality evaluation of table grapes by a computer vision system. Comput. Electron. Agric. 2019, 156, 558–564. [Google Scholar] [CrossRef]

- Kangune, K.; Kulkarni, V.; Kosamkar, P. Grapes Ripeness Estimation using Convolutional Neural network and Support Vector Machine. In Proceedings of the 2019 Global Conference for Advancement in Technology (GCAT 2019), Bangalore, India, 18–20 October 2019. [Google Scholar]

- Kaburlasos, V.G.; Vrochidou, E.; Lytridis, C.; Papakostas, G.A.; Pachidis, T.; Manios, M.; Mamalis, S.; Merou, T.; Koundouras, S.; Theocharis, S.; et al. Toward Big Data Manipulation for Grape Harvest Time Prediction by Intervals’ Numbers Techniques. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 28 September 2020; pp. 1–6. [Google Scholar]

- Ramos, R.P.; Gomes, J.S.; Prates, R.M.; Simas Filho, E.F.; Teruel, B.J.; Dos Santos Costa, D. Non-invasive setup for grape maturation classification using deep learning. J. Sci. Food Agric. 2021, 101, 2042–2051. [Google Scholar] [CrossRef]

- Baiano, A.; Terracone, C.; Peri, G.; Romaniello, R. Application of hyperspectral imaging for prediction of physico-chemical and sensory characteristics of table grapes. Comput. Electron. Agric. 2012, 87, 142–151. [Google Scholar] [CrossRef]

- Fernandes, A.M.; Franco, C.; Mendes-Ferreira, A.; Mendes-Faia, A.; da Costa, P.L.; Melo-Pinto, P. Brix, pH and anthocyanin content determination in whole Port wine grape berries by hyperspectral imaging and neural networks. Comput. Electron. Agric. 2015, 115, 88–96. [Google Scholar] [CrossRef]

- Gomes, V.; Fernandes, A.; Martins-Lopes, P.; Pereira, L.; Mendes Faia, A.; Melo-Pinto, P. Characterization of neural network generalization in the determination of pH and anthocyanin content of wine grape in new vintages and varieties. Food Chem. 2017, 218, 40–46. [Google Scholar] [CrossRef] [PubMed]

- Iatrou, G.; Mourelatos, S.; Gewehr, S.; Kalaitzopoulou, S.; Iatrou, M.; Zartaloudis, Z. Using multispectral imaging to improve berry harvest for wine making grapes. Ciênc. e Téc. Vitiviníc. 2017, 32, 33–41. [Google Scholar] [CrossRef] [Green Version]

- Zhang, N.; Liu, X.; Jin, X.; Li, C.; Wu, X.; Yang, S.; Ning, J.; Yanne, P. Determination of total iron-reactive phenolics, anthocyanins and tannins in wine grapes of skins and seeds based on near-infrared hyperspectral imaging. Food Chem. 2017, 237, 811–817. [Google Scholar] [CrossRef] [PubMed]

- Baca-Bocanegra, B.; Nogales-Bueno, J.; Heredia, F.; Hernández-Hierro, J. Estimation of Total Phenols, Flavanols and Extractability of Phenolic Compounds in Grape Seeds Using Vibrational Spectroscopy and Chemometric Tools. Sensors 2018, 18, 2426. [Google Scholar] [CrossRef] [Green Version]

- Gutiérrez, S.; Tardaguila, J.; Fernández-Novales, J.; Diago, M.P. On-the-go hyperspectral imaging for the in-field estimation of grape berry soluble solids and anthocyanin concentration. Aust. J. Grape Wine Res. 2019, 25, 127–133. [Google Scholar] [CrossRef] [Green Version]

- Gabrielli, M.; Lançon-Verdier, V.; Picouet, P.; Maury, C. Hyperspectral Imaging to Characterize Table Grapes. Chemosensors 2021, 9, 71. [Google Scholar] [CrossRef]

- Das, A.J.; Wahi, A.; Kothari, I.; Raskar, R. Ultra-portable, wireless smartphone spectrometer for rapid, non-destructive testing of fruit ripeness. Sci. Rep. 2016, 6, 32504. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Daniels, A.J.; Poblete-Echeverría, C.; Opara, U.L.; Nieuwoudt, H.H. Measuring Internal Maturity Parameters Contactless on Intact Table Grape Bunches Using NIR Spectroscopy. Front. Plant Sci. 2019, 10, 1517. [Google Scholar] [CrossRef] [Green Version]

- Zoecklein, B.W. Grape Maturity, Section 4; Virginia Polytechnic Institute and State University: Blacksburg, VA, USA, 2017; pp. 1–18. [Google Scholar]

- HuMaIN-Lab Ripeness Estimation Video Frames Dataset. Available online: https://github.com/humain-lab/ripeness-estimation-videoframes-dataset (accessed on 28 July 2021).

- Seng, J.; Ang, K.; Schmidtke, L.; Rogiers, S. Grape Image Database. Available online: https://researchoutput.csu.edu.au/en/datasets/grape-image-database (accessed on 28 July 2021).

- Davies, E.R. Machine Vision; Elsevier: Amsterdam, The Netherlands, 2005; ISBN 9780122060939. [Google Scholar]

- Andreoli, V.; Cassardo, C.; Iacona, T.; Spanna, F. Description and Preliminary Simulations with the Italian Vineyard Integrated Numerical Model for Estimating Physiological Values (IVINE). Agronomy 2019, 9, 94. [Google Scholar] [CrossRef] [Green Version]

- Cassardo, C.; Andreoli, V.; Spanna, F. Validation of IVINE crop growth model using MACSUR2 project measurements in a few European vineyards. In Proceedings of the EGU General Assembly Conference Abstracts, Online, 4–8 May 2020; p. 18259. [Google Scholar]

- Mania, E.; Andreoli, V.; Cavalletto, S.; Cassardo, C.; Guidoni, S. VICMOTO: Physical modeling and numerical simulation applied to vineyard. In Proceedings of the BIO Web of Conferences, Piacenza, Italy, 9–11 July 2019; Volume 13, p. 02006. [Google Scholar]

- Andreoli, V.; Cassardo, C.; Cavalletto, S.; Ferrarese, S.; Guidoni, S.; Mania, E. Measurements and simulations with the crop growth model VICMOTO in Nebbiolo vineyards. In Proceedings of the EGU General Assembly Conference Abstracts, Vienna, Austria, 7–12 April 2019; Volume 21. [Google Scholar]

- Brisson, N.; Gary, C.; Justes, E.; Roche, R.; Mary, B.; Ripoche, D.; Zimmer, D.; Sierra, J.; Bertuzzi, P.; Burger, P.; et al. An overview of the crop model stics. Eur. J. Agron. 2003, 18, 309–332. [Google Scholar] [CrossRef]

- Carteni, F.; Rossi, C.; Marcos, R.; Porras, I.; Basile, B.; Scognamiglio, P.; Teobaldelli, M.; Mataffo, A.; Mazzoleni, S.; Garcia-Tejera, O.; et al. LEAF: A process-based model of berry ripening in vineyards. In Proceedings of the 2019 IEEE International Workshop on Metrology for Agriculture and Forestry (MetroAgriFor), Portici, Italy, 24–26 October 2019; pp. 152–157. [Google Scholar]

- Kaburlasos, V.; Spais, V.; Petridis, V.; Petrou, L.; Kazarlis, S.; Maslaris, N.; Kallinakis, A. Intelligent clustering techniques for prediction of sugar production. Math. Comput. Simul. 2002, 60, 159–168. [Google Scholar] [CrossRef]

- Matese, A.; Di Gennaro, S.F. Technology in precision viticulture: A state of the art review. Int. J. Wine Res. 2015, 7, 69–81. [Google Scholar] [CrossRef] [Green Version]

- Arad, B.; Balendonck, J.; Barth, R.; Ben-Shahar, O.; Edan, Y.; Hellström, T.; Hemming, J.; Kurtser, P.; Ringdahl, O.; Tielen, T.; et al. Development of a sweet pepper harvesting robot. J. Field Robot. 2020, 37, 1027–1039. [Google Scholar] [CrossRef]

- Li, B.; Ling, Y.; Zhang, H.; Zheng, S. The Design and Realization of Cherry Tomato Harvesting Robot Based on IOT. Int. J. Online Eng. 2016, 12, 23–26. [Google Scholar] [CrossRef]

- Xiong, Y.; Ge, Y.; Grimstad, L.; From, P.J. An autonomous strawberry-harvesting robot: Design, development, integration, and field evaluation. J. Field Robot. 2020, 37, 202–224. [Google Scholar] [CrossRef] [Green Version]

- Tardaguila, J.; Rovira-Más, F.; Blasco, J.; Saiz-Rubio, V.; Faenzi, E.; Évain, S.; Labails, S.; Stoll, M.; Scheidweiler, M.; Millot, C.; et al. VineRobot: A new robot for vineyard monitoring using non-invasive sensing technologies. In Proceedings of the International Cool Climate Wine Symposium, Brighton, UK, 26–28 May 2016. [Google Scholar]

- VINBOT. Available online: http://vinbot.eu/ (accessed on 15 July 2021).

- Roure, F.; Moreno, G.; Soler, M.; Faconti, D.; Serrano, D.; Astolfi, P.; Bardaro, G.; Gabrielli, A.; Bascetta, L.; Matteucci, M. GRAPE: Ground Robot for vineyArd Monitoring and ProtEction. In Advances in Intelligent Systems and Computing; Springer: Cham, Switzerland, 2018; pp. 249–260. [Google Scholar]

- Sivilotti, P.; Falchi, R.; Herrera, J.C.; Škvarč, B.; Butinar, L.; Sternad Lemut, M.; Bubola, M.; Sabbatini, P.; Lisjak, K.; Vanzo, A. Combined Effects of Early Season Leaf Removal and Climatic Conditions on Aroma Precursors in Sauvignon Blanc Grapes. J. Agric. Food Chem. 2017, 65, 8426–8434. [Google Scholar] [CrossRef] [PubMed]

- Vrochidou, E.; Tziridis, K.; Nikolaou, A.; Kalampokas, T.; Papakostas, G.A.; Pachidis, T.P.; Mamalis, S.; Koundouras, S.; Kaburlasos, V.G. An Autonomous Grape-Harvester Robot: Integrated System Architecture. Electronics 2021, 10, 1056. [Google Scholar] [CrossRef]

| Chemical Attributes | Unit |
|---|---|
| Soluble solid content (SSC) or total soluble solids (TSS) | °Brix |
| Titratable acidity (TA) | g L−1 |
| SSC/TA | °Brix/g L−1 |
| pH | Logarithmic scale |
| Volatile compounds | μg L−1 |
| Phenolic compounds (Polyphenols) | mg g−1 |
| Anthocyanins, tannins, terpenes | mg g−1 |
| Chlorophyll | μg L−1 |
| Antioxidants | mmol g−1 |
| Flavanols/Total Flavonoid Content (TF) | mg g−1 |

| Basic Chemical Attributes’ Limits in Ripened Wine Grapes |
|---|
| 3.2 < pH < 3.5 |
| 20 °Brix < SSC < 23 °Brix |
| 4 g L−1 < TA < 7 g L−1 |
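
The limits in the table above can be read as a simple range check. The following is a minimal sketch, not a method from the reviewed works: the function names and the `LIMITS` dictionary are hypothetical, and it assumes SSC is expressed in °Brix and TA in g L−1, following the units listed for the chemical attributes.

```python
# Ranges copied from the limits table; the units (SSC in °Brix, TA in g/L)
# are an assumption taken from the chemical attributes table.
LIMITS = {
    "pH": (3.2, 3.5),
    "SSC": (20.0, 23.0),
    "TA": (4.0, 7.0),
}


def within_limits(sample):
    """Return, per attribute, whether the value lies strictly inside its range."""
    return {
        name: low < sample[name] < high
        for name, (low, high) in LIMITS.items()
    }


def is_within_all_limits(sample):
    """True only if pH, SSC and TA are all inside the tabulated ranges."""
    return all(within_limits(sample).values())


def maturity_ratio(ssc, ta):
    """SSC/TA ratio (°Brix per g/L), one of the indices listed above."""
    if ta <= 0:
        raise ValueError("TA must be positive")
    return ssc / ta


sample = {"pH": 3.3, "SSC": 21.5, "TA": 5.5}
print(within_limits(sample), maturity_ratio(sample["SSC"], sample["TA"]))
```

A per-attribute result is returned rather than a single flag so that a sample failing only one criterion can be identified; how the three criteria should be combined in practice is cultivar- and style-dependent and is not specified here.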

| Sensory Attributes | |
|---|---|
| Visual attributes | Browning of stalks and pedicels |
| | Turgidity of stalks and pedicels |
| | Berry color uniformity |
| | Presence of spots and rots on berries |
| | Grape seed morphological parameters (roundness, length, width, area, aspect, heterogeneity, perimeter, aspect ratio) |
| | Color scale |
| | Grape seed browning index |
| Olfactory attributes | Grape fruity flavor |
| | Fruity flavor different from grape |
| | Fermented flavor |
| | Recognizable varietal aroma |
| Taste/tactile attributes | Hardness, crispness, juiciness, sweetness |
| | Acidity |
| | Astringency |
| | Grape fruity taste intensity |
| | Intensity of fruity taste different from grape |
| | Intensity of fermented taste |
| | Abscission of berries |
| | Overall Liking Score (OLS) |

| Cultivar | Maturity Index | Color Space | Features | Number of Images | Pre-Processing | Prediction Model | Evaluation (R² unless otherwise stated) | Year | Ref. |
|---|---|---|---|---|---|---|---|---|---|
| Red grapes: Vitis vinifera L. cv. Graciano | 21 phenolic compounds | CIELAB | Colorimetric, chromatic heterogeneity, morphological | 15,000 | Thresholding, CIELAB color channel value and morphological variables extraction | Forward stepwise multiple regressor | Up to 0.97 | 2012 | [15] |
| Red grapes: Syrah, Tempranillo; White grapes: Zalema cultivated in sand and clay soil | Visual assessment | CIELAB, HSI | Colorimetric, morphological | 100 | Histogram thresholding segmentation, morphological restrictions, CIELAB and HSI color channel values, browning index, color ellipses | Discriminant analysis | Classification rates: 100% (Red-Syrah), 87.50% (Red-Tempranillo), 71.43% (White-Zalema-Sand), 57.14% (White-Zalema-Clay) | 2012 | [14] |
| White grapes in Cambridge, Tasmania | Visual assessment | RGB, HSV | Colorimetric, texture | 31 | Circle detection and classification by k-means, color and texture feature extraction, filtering | Support Vector Machine (SVM) | Classification rates of up to 96.88% | 2014 | [22] |
| Not defined | Visual assessment (color scale) | CIELAB, illumination-invariant color model c1c2c3 | Colorimetric | 450 | Segmentation, representative color estimation | Support Vector Regressor (SVR) | Mean squared error: 22.64 | 2015 | [23] |
| Red grapes: Flame Seedless | Visual assessment (color scale) | HSV | Colorimetric | Not defined | Berry detection algorithm, berry counting, color measurement extraction | Grading scheme for categorizing clusters based on color development | 0.56 | 2016 | [24] |
| Red grapes: Kyoho grapes | SSC, pH | RGB, HSI, NTSC, YCbCr, HSV, CMY | Colorimetric | 180 | Color feature extraction in different color spaces from arithmetically calculated images, calibration algorithm | Multiple Linear Regressor (MLR), Partial Least-Squares Regressor (PLSR) | Mean squared error: pH: 0.0987 (MLR), 0.1257 (PLSR); SSC: 0.7982 (MLR), 0.9252 (PLSR) | 2016 | [25] |
| Not defined | Visual assessment | RGB, HSV | Colorimetric | 289 | Image color distribution, segmentation algorithm, color discretization | Dirichlet Mixture Model (DMM) | Perplexity: 125.04; average perplexity per color: 0.29 | 2017 | [26] |
| White grapes: Italia, Victoria | Visual assessment | HSV, CIELAB | Colorimetric | 800 | Estimation of color variations, white-balance, denoising, segmentation, thresholding | Random Forest (RF) | Cross-validation classification accuracy: up to 100% (Italia), up to 0.92 (Victoria) | 2019 | [27] |
| White grapes: Sonaka | Visual assessment | RGB, HSV | Colorimetric, morphological | 4000 | Denoising, color feature extraction | Convolutional Neural Network (CNN), Support Vector Machine (SVM) | Classification rates: 79% (CNN), 69% (SVM) | 2019 | [28] |
| Red grapes: Cabernet Sauvignon | Visual assessment | RGB | Colorimetric | 13 | Segmentation algorithm, color histogram extraction | Neural Network (NN) | Average error: 5.36% | 2020 | [29] |
| Red grapes: Syrah, Cabernet Sauvignon | TSS, anthocyanins, flavonoids | RGB | Texture | 2880 | Image adjustment, pixel normalization | Convolutional Neural Networks (CNN) | Classification rates: 93.41% (Syrah), 72.66% (Cabernet) | 2021 | [30] |
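
Several rows above rely on colorimetric features such as color histograms and HSV channel values. As a rough illustration only, and not the pipeline of any cited work, the sketch below computes a normalized green-channel histogram and converts one pixel to HSV with the standard-library `colorsys` module. The function names, the 8-bit pixel format, and the bin count are assumptions made for this example.

```python
import colorsys


def green_histogram(pixels, bins=8):
    """Normalized histogram of the green channel.

    `pixels` is an iterable of (r, g, b) tuples with 8-bit values (0-255);
    this input format is an assumption of the sketch.
    """
    pixels = list(pixels)
    if not pixels:
        raise ValueError("at least one pixel is required")
    counts = [0] * bins
    for _, g, _ in pixels:
        counts[g * bins // 256] += 1  # integer bin index in [0, bins - 1]
    return [c / len(pixels) for c in counts]


def to_hsv(pixel):
    """Convert one 8-bit (r, g, b) pixel to (h, s, v), each in [0, 1]."""
    r, g, b = (channel / 255 for channel in pixel)
    return colorsys.rgb_to_hsv(r, g, b)


segment = [(10, 0, 0), (0, 31, 0), (0, 32, 0), (0, 255, 0)]
print(green_histogram(segment))
print(to_hsv((255, 0, 0)))
```

In a real system the pixel list would come from a segmented berry or bunch region; segmentation itself is outside the scope of this sketch.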

| Cultivar | Maturity Index | Spectral Range (nm) | Number of Images | Pre-Processing | Prediction Model | Evaluation (R² unless otherwise stated) | Year | Ref. |
|---|---|---|---|---|---|---|---|---|
| Red grapes: Michele Palieri, Red Globe, Crimson Seedless; White grapes: Pizzutello, Thompson, Italia, Baresana Seedless | Sensory evaluation, SSC, TA, pH | 400–1000 | 140 | Binary segmentation mask, morphological structured element erosion, mean-centering correction, Predicted REsidual Sums of Squares (P.RE.S.S.) statistic | Partial Least Square Regressor (PLSR) | TA: 0.95 (white), 0.82 (red); SSC: 0.94 (white), 0.93 (red); pH: 0.80 (white), 0.90 (red) | 2012 | [31] |
| Red grapes: Touriga Franca (TF) | pH, SSC, anthocyanin | 380–1028 | 240 | Reflectance determination, Principal Component Analysis (PCA) to reduce input data dimensionality | Neural Network (NN) | pH: 0.73; SSC: 0.92; anthocyanin: 0.95 | 2015 | [32] |
| Red grapes: Touriga Franca (TF), Touriga Nacional (TN), Tinta Barroca (TB) | pH, anthocyanin | 380–1028 | 225 | Reflectance determination, spectrum normalization | Neural Network (NN) | pH: 0.723 (TF), 0.661 (TN), 0.710 (TB); anthocyanin: 0.906 (TF), 0.751 (TN), 0.697 (TB) | 2017 | [33] |
| White grapes: cv. Malagousia | TSS, pH | 510–790 | 12 | Calculation of Carotenoid Reflectance Index 1 and 2, Structure Insensitive Pigment Index, Pigment Specific Simple Ratio Carotenoids, Normalized Difference Vegetation Index | Multiple Linear Regressor (MLR), Support Vector Machine (SVM) | Classification rates: TSS: 83.33% (MLR), 83.33% (SVM); pH: 75% (MLR), 75% (SVM) | 2017 | [34] |
| Red grapes: Cabernet Sauvignon, Shiraz, Pinot Noir, Marselan, Meili | Phenolic contents | 865–1711 | 120 | Outlier detection, image correction, pretreatment methods for spectral data, threshold segmentation method | Principal Component Regression (PCR), PLSR and Support Vector Regression (SVR) | Between 0.8789 and 0.9243 | 2017 | [35] |
| Red grapes: Syrah, Tempranillo | Flavanols, total phenols | 900–1700 | 200 | Correction, segmentation, average reflectance spectra and relative absorbances calculation | Principal Component Analysis (PCA), Modified Partial Least Squares (MPLS), K-means cluster analysis, Linear Discriminant Analysis (LDA) | Classification rates: up to 83.30% (leave-one-out cross-validation), up to 76.90% (external validation) | 2018 | [36] |
| Red grapes: Vitis vinifera L. cv. Tempranillo | TSS, anthocyanins | 400–1000 | 144 | Standard normal variate and Savitzky–Golay filter | Epsilon-Support Vector Machines (ε-SVMs) | TSS: 0.92; anthocyanins: 0.83 | 2018 | [37] |
| White grapes: Sugarone Superior Seedless, Thompson Seedless, Victoria; Red grapes: Sable Seedless, Alphonse Lavallée, Lival, Black Magic | TSS, TA, TF | 411–1000 | 150 | Image correction with dark reference, spectral response extraction, selection of effective wavelengths and physicochemical parameters prediction | Multiple Linear Regression (MLR) models, PLS regression model | TF: 0.93 (MLR), 0.95 (PLS); TA: 0.98 (MLR), 0.99 (PLS); TSS: 0.86 (MLR), 0.94 (PLS) | 2021 | [38] |
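
One of the pre-processing steps listed above, the standard normal variate (SNV), has a compact definition: each spectrum is centered on its own mean and scaled by its own standard deviation. The sketch below is a generic illustration under stated assumptions; the function name is hypothetical, and the use of the sample standard deviation (n − 1 denominator) is a choice made here, since implementations differ on this point.

```python
from statistics import mean, stdev


def standard_normal_variate(spectrum):
    """Apply SNV to a single spectrum (a sequence of reflectance values).

    Each value is centered on the spectrum's mean and divided by the
    spectrum's sample standard deviation (n - 1 denominator, an assumption).
    """
    if len(spectrum) < 2:
        raise ValueError("a spectrum needs at least two bands")
    m = mean(spectrum)
    s = stdev(spectrum)
    if s == 0:
        raise ValueError("a constant spectrum cannot be scaled")
    return [(value - m) / s for value in spectrum]


print(standard_normal_variate([1.0, 2.0, 3.0]))
```

Because the correction is applied per spectrum, it needs no reference set, which is one reason it is commonly used to reduce scatter effects before regression.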

| Cultivar | Maturity Index | Spectrometer | Spectral Range (nm) | Mode | Number of Images | Pre-Processing | Prediction Model | Evaluation R-Squared (R²) | Year | Ref. |
|---|---|---|---|---|---|---|---|---|---|---|
| Red grapes: Vitis vinifera L. cv. Tempranillo, Syrah | Anthocyanins | NIR | 900–1700 | Reflectance | 99 | Calibration, discriminant method, outlier detection | Principal Component Analysis | 0.86 | 2013 | [13] |
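
The evaluation columns above report R-squared. For reference, a minimal sketch of the coefficient of determination, R² = 1 − SS_res/SS_tot, follows. The function name is hypothetical, and individual studies may instead report the squared correlation coefficient, which coincides with this definition only in special cases.

```python
def r_squared(observed, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(observed) != len(predicted) or not observed:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    if ss_tot == 0:
        raise ValueError("observed values must not all be equal")
    return 1 - ss_res / ss_tot


# A perfect prediction gives 1; always predicting the mean gives 0.
print(r_squared([1.0, 2.0, 3.0], [1.0, 2.0, 3.0]))
print(r_squared([1.0, 2.0, 3.0], [2.0, 2.0, 2.0]))
```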

| Ref. | Intact/On-Site Estimation | Limitations/Review |
|---|---|---|
| [15] | No/No | Applied to grape seeds in an in-lab closed illumination box with a digital camera, illumination-dependent |
| [14] | No/No | Applied to grape seeds and grape berries in an in-lab illumination box with a digital camera, illumination-dependent |
| [22] | Yes/Yes | Applied to grape bunches on-site, fails occasionally due to the berry circle radius setup of the segmentation algorithm and the circle detection algorithm |
| [23] | No/No | Applied to grape seeds and berries in an in-lab setup |
| [24] | Yes/Yes | Applied to grape bunches, camera system mounted on a vehicle |
| [25] | No/No | Applied to grape berries, cost-effective in-lab setup |
| [26] | No/No | Applied to grape seeds, in-lab, depends only on color histograms |
| [27] | Yes/No | Applied to grape bunches, in-lab setup, on a black background, under eight halogen lamps |
| [28] | Yes/Yes | Applied to grape bunches on-site by using a smartphone camera |
| [29] | Yes/Yes | Applied to grape bunches on-site, pilot study where only the green color channel histograms were selected and post-processed |
| [30] | No/No | Applied to grape berries, in-lab inside a dark chamber, with 15 3 W LED illuminants (red, green, blue, warm white, and cool white) |
| [31] | No/No | Applied to removed grape berries in an in-lab dark room, use of a costly hyperspectral imaging system |
| [32] | Yes/No | Applied to grape bunches in-lab inside a dark room under blue reflector lamps, only six berries as samples from each bunch |
| [33] | Yes/No | Applied to grape bunches in-lab inside a dark room under blue reflector lamps, only six berries as samples from each bunch, low generalization ability |
| [34] | Yes/Yes | Farm scale, based on a hypothesis on carotenoid content |
| [35] | No/No | Applied to grape skins and seeds, under an illumination unit of four tungsten halogen lamps |
| [36] | No/No | Applied to grape seeds, in-lab under iodine halogen lamps |
| [37] | Yes/Yes | Applied to grape bunches on-site, using images acquired by a motorized platform |
| [38] | No/No | Applied to grape berries in a box under a quartz tungsten halogen lighting unit |
| [13] | No/No | Applied to grape berries in-lab under an illumination source |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Vrochidou, E.; Bazinas, C.; Manios, M.; Papakostas, G.A.; Pachidis, T.P.; Kaburlasos, V.G. Machine Vision for Ripeness Estimation in Viticulture Automation. Horticulturae 2021, 7, 282. https://doi.org/10.3390/horticulturae7090282
