Abstract

The accurate and efficient identification of pear varieties is essential to the intelligent development of the pear industry. This study introduces a novel approach to classifying pear varieties by recognizing their leaves. We collected leaf images of 33 pear varieties against natural backgrounds, covering the 5 main cultivated species and varieties selected from interspecific hybrids. Images were collected at different times of the day to capture variation in natural lighting and improve model robustness. From these, we built a representative dataset of 17,656 pear leaf images. YOLOv10, implemented in the PyTorch framework, was trained on this dataset to construct a pear leaf recognition and classification model. The efficacy of the YOLOv10 model was validated using precision, recall, F1-score, and mAP, which reached 99.6%, 99.4%, 0.99, and 99.5%, respectively, and the precision for nine varieties reached 100%. Compared with existing recognition networks and object detection algorithms, namely YOLOv7, ResNet50, VGG16, and Swin Transformer, YOLOv10 performed best in pear leaf recognition in natural scenes. To address the relatively low recognition precision for Yuluxiang, the Spatial and Channel reconstruction Convolution (SCConv) module was introduced into YOLOv10 to improve the model. With this modification, the model precision reached 99.71%, and the recognition precision for Yuluxiang increased from 96.4% to 98.3%. Consequently, the model established in this study can automatically recognize and detect pear varieties, although there is still room for improvement. It provides a reference for the conservation, utilization, and classification of pear resources, as well as for variety identification in other crops.

1. Introduction

Pear (Pyrus) is one of the world’s major fruit tree species. China is the world’s largest pear producer, accounting for about 70% of global pear production and cultivation area [1]. China is also one of the three major centers of pear diversity in the world, so its varietal resources are extremely rich, with more than 3000 germplasm accessions preserved. The varieties in China cover 5 major species, namely P. bretschneideri, P. pyrifolia, P. ussuriensis, P. sinkiangensis, and P. communis, and more than 130 varieties are widely used in production. Because pear is a cash crop of significant economic value, research institutions across China have carried out extensive selection and breeding programs. Over 180 representative, high-quality pear varieties have been developed with genetic improvement goals such as fruit quality, disease resistance, stress resistance, and different ripening periods, further enriching the diversity of pear varieties [2]. As the exchange of pear germplasm resources increases, the diversity of pear cultivars continues to grow. However, this has created challenges in standardized variety identification, classification, and naming, which now constrain the development of the industry and must also be resolved for the collection, preservation, and innovative utilization of pear germplasm resources. At present, variety identification in production still relies mainly on the analysis of phenotypic characteristics, but phenotypes are susceptible to environmental factors. For example, the fruits of Akiziki and Aikansui are both flattened-round with brown skin and cannot be reliably distinguished by the naked eye. In addition, both have elliptical leaves with sharply serrated margins. Even experienced professionals cannot guarantee the correct classification of pear varieties by observing their leaves. 
As a result, the identification error rate remains high. With the rapid development of high-throughput sequencing and molecular biology techniques, pear varieties can be accurately identified using molecular markers [3]. However, molecular marker technology is time-consuming and costly, which has greatly restricted its application. In recent years, machine vision and artificial intelligence have developed rapidly. Combining modern information technology with agriculture can progressively make the planting, production, and development of agricultural products more intelligent [4]. Machine vision offers high efficiency, high precision, and low cost in recognizing and classifying agricultural products, and it has therefore become a popular research direction in agricultural recognition and classification [5].

In plant variety identification studies, leaves are easier to collect than other organs. The leaves of each plant have distinctive characteristics, such as shape, size, tip, veins, and margins. For a given plant, however, the morphological characteristics of the leaves are relatively stable, so they can serve as a basis for plant identification and classification [6]. Classifying plant varieties by recognizing leaf images is therefore feasible. In traditional agricultural practice, pear leaves are classified based on empirical knowledge and subjective evaluation, but small differences in pear leaf characteristics are difficult to recognize accurately with the naked eye. Manual recognition based on leaf shape, size, and color is not only labor-intensive but also inefficient and error-prone, and its results are strongly affected by human factors [7]. For example, the leaves of P. communis are ovate, suborbicular, or elliptic, with margins that are mostly crenate or obtusely serrate, whereas the leaves of P. ussuriensis are nearly ovate, with margins that are mostly sharply serrate. Experienced observers may be able to roughly distinguish the two on careful inspection. However, Huagai and Nanguoli, both P. ussuriensis varieties, have highly similar leaves that are almost impossible to tell apart by the naked eye. Therefore, efficiently and accurately recognizing and classifying pear varieties by their leaves is a difficult task, but one of great significance for the development of the pear industry and the protection of pear resources.

With the rise of computer vision and the maturation of deep learning, Convolutional Neural Networks (CNNs) have made significant progress in image recognition, object detection, image generation, and other fields [8], becoming one of the most important network types in deep learning [9]. CNN models automatically extract leaf features from multi-species image data for variety classification, overcoming the limitations of manual methods [10]. Some progress has been made in recent years in plant leaf recognition and classification in complex natural scenes. Liu et al. [11] used a Deep Convolutional Neural Network (DCNN) with leaf images as input to classify 14 apple cultivars. Siravenha et al. [12] proposed a method to classify plants by analyzing leaf textures. Grinblat et al. [13] focused on leaf vein patterns and used a DCNN to classify three different legume species. Zhang et al. [14] constructed a seven-layer CNN to automatically classify 32 plant species from leaf images. Although deep learning has achieved high accuracy in leaf classification, challenges remain. Inference speed is acceptable for individual images, but the complex architectures of standard CNNs (such as ResNet, which emphasize network depth) demand extensive computational resources for training. Although laboratory-grade GPUs handle inference well, agricultural edge devices such as orchard robots often require lighter models. In addition, model compression techniques such as quantization often degrade accuracy. These factors necessitate trade-offs between performance and deployability on agricultural edge devices.

The “You Only Look Once” (YOLO) object detection algorithm was first proposed by Redmon et al. [15] in 2016. The YOLO series is the leading family of single-stage algorithms among deep learning object detection methods. Its basic principle is to divide the input image into a grid of cells and to predict, for each cell, the category and location of the objects it contains. YOLO-series algorithms are fast and well suited to real-time use, and they have made significant progress in object recognition, showing good performance and application prospects for rapid plant leaf recognition. From YOLOv1 to YOLOv10, performance has improved continuously, progressively achieving a balance between recognition speed and accuracy [16,17,18,19,20,21]. Many recent studies have demonstrated that YOLO-family detectors can effectively identify and classify leaf species. Yang et al. [22] used the YOLOX algorithm with a self-built tea bud dataset to establish a tea bud classification model that recognized and classified four types of tea buds, with a recognition accuracy of up to 90.54% for the Yellow Mountain variety. Susa et al. [23] used the YOLOv3 algorithm to build a cotton plant classification system that detected cotton leaves and classified them as damaged or healthy. Soeb et al. [24] trained a YOLOv7 model on datasets of diseased tea leaves from four well-known tea gardens in Bangladesh to detect tea leaf diseases. This enables rapid identification and classification of tea leaf diseases, minimizing economic losses.
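To make the grid idea concrete, the following minimal Python sketch shows how a YOLOv1-style detector decides which grid cell is responsible for an object: the cell that contains the object's normalized center. The function name `responsible_cell` and the grid sizes are illustrative assumptions; later YOLO versions, including YOLOv10, predict on several feature maps with different label assignment rules, so this is not their exact procedure.

```python
def responsible_cell(x_center, y_center, grid_size):
    """Return (row, col) of the grid cell containing a normalized box center.

    x_center and y_center are in [0, 1] relative to the image width and height,
    the same normalized convention used by YOLO label files.
    """
    if not (0.0 <= x_center <= 1.0 and 0.0 <= y_center <= 1.0):
        raise ValueError("center coordinates must be normalized to [0, 1]")
    # Clamp so a center lying exactly on the right/bottom edge maps to the last cell.
    col = min(int(x_center * grid_size), grid_size - 1)
    row = min(int(y_center * grid_size), grid_size - 1)
    return row, col


if __name__ == "__main__":
    # YOLOv1 used a 7 x 7 grid; a leaf centered in the image falls in cell (3, 3).
    print(responsible_cell(0.5, 0.5, 7))
```

For example, with a 640 × 640 input and a stride-32 feature map the grid is 20 × 20, and a leaf centered at (0.5, 0.5) falls in cell (10, 10).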

YOLOv10 represents a major advance in real-time object detection. It takes YOLOv8 as the baseline model and proposes a new model design based on it [25]. YOLOv10 uses a dual label assignment strategy to improve accuracy with little impact on detection speed. By eliminating the non-maximum suppression (NMS) post-processing required by earlier models, it reduces inference latency. YOLOv10 integrates two core modules into its design: the compact inverted block (CIB) and the partial self-attention (PSA) module. This design reduces computational complexity and improves the model’s ability to extract sample features. Benchmark tests show that YOLOv10 outperforms previous state-of-the-art models across various model scales. Compared with the baseline YOLOv8-N, YOLOv10-N has 28% fewer parameters and 23% fewer FLOPs, reducing model complexity, and its latency is 70% lower. Considering both detection accuracy and computational efficiency, YOLOv10-N achieves good results in sample detection.
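For context, the sketch below shows classic greedy NMS, the post-processing step that earlier YOLO versions apply to remove duplicate boxes and that YOLOv10's one-to-one head is designed to make unnecessary at inference. It is a generic, single-class illustration in plain Python, with boxes as `(x1, y1, x2, y2)` and a 0.5 IoU threshold chosen for illustration; it is not code from the YOLOv10 repository.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes, scores, iou_threshold=0.5):
    """Return indices of the boxes kept by greedy NMS (single class)."""
    # Visit boxes from highest to lowest confidence.
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Discard remaining boxes that overlap the kept box too much.
        order = [i for i in order if iou(boxes[best], boxes[i]) < iou_threshold]
    return keep


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
    print(greedy_nms(boxes, [0.9, 0.8, 0.7]))
```

The second box overlaps the first with an IoU of about 0.68, so it is suppressed and only boxes 0 and 2 survive. This sequential, data-dependent loop is the latency cost that an NMS-free detector avoids.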

Plant recognition and classification using neural networks and leaf image inputs has become a focus of research in precision agriculture and has attracted extensive attention from many scholars. However, the application of YOLO-series algorithms to recognizing and classifying pear leaf varieties, a challenging class of leaf image recognition problems, has not yet been reported.

Against this background, we combine the YOLOv10 algorithm with pear leaf images to construct a pear leaf recognition and classification model for classifying pear varieties. The proposed method strikes an appropriate balance between accuracy and efficiency and can quickly and accurately recognize pear leaves and classify their variety. A pear leaf image dataset of 33 varieties, with 500 to 600 sample images per category, was created to meet multi-class classification requirements. YOLOv10 was trained on this dataset to construct a recognition and classification model that classifies pear varieties from the features of their leaves. The trained model was evaluated on the self-made dataset with satisfactory results. To verify the effectiveness of the method, we trained YOLOv7, ResNet50, VGG16, and Swin Transformer on the same dataset in the same experimental environment. Comparing the evaluation results of the five models, we found that YOLOv10 outperformed the other four models on all metrics. Moreover, it recognized faster while maintaining high accuracy, and it performed well in both inter-species and intra-species recognition of pears. This shows that the model is suitable for the pear leaf recognition and classification task. Building on YOLOv10, we introduced Spatial and Channel reconstruction Convolution (SCConv) to optimize the model. The results indicate that the optimized model improved recognition and classification performance while reducing model complexity. This pear leaf classification model improves pear leaf identification capability, promotes scientific research and innovation, and supports the intelligent development of the pear industry. 
It also provides a scientific reference for research on the classification, protection, and production of pear varieties and for variety identification in other crops.

2. Materials and Methods

2.1. Image Acquisition and Dataset Building

Training a pear leaf recognition and classification model requires feeding a large number of pear leaf images into the network as samples. The quality of the dataset directly affects the accuracy and efficiency of the model. High-quality image data should be not only visually clear and accurate, but also representative, diverse, and balanced in content. Because the varieties in China cover 5 main cultivated species, we selected 6 representative varieties each from P. bretschneideri, P. pyrifolia, P. ussuriensis, and P. communis, and 3 varieties from P. sinkiangensis. To enrich the dataset further, we also selected 6 interspecific hybrid (P. hybrid) varieties, giving 33 varieties in total. Leaf photos were taken at the “National Pear and Apple Germplasm Resource Repository (Xingcheng)”. Images of pear leaves were captured under natural conditions with the 12 MP rear camera of an iPhone 13 mobile phone. All camera optimizations, such as HDR, were disabled to avoid affecting model generalization. The phone was held 30–40 cm from the leaves, and images were collected at different times of the day (8:00–18:00) to capture variation in natural lighting and improve model robustness. Shooting took place on both cloudy and sunny days. The selected leaves were mature and fully expanded, with no obvious signs of nutrient deficiency, pathogen infection, or insect damage. Images of dense foliage contained leaf shading and overlap, and under strong light some leaves were partly shaded by others. Frontal images of different leaves of the 33 pear varieties were taken, with 500 to 600 images collected per variety. Images were saved in JPG format at 3024 × 4032 pixels. 
A total of 18,316 pear leaf images were captured. Because image capture was carried out outdoors, many natural environmental factors were unavoidable. For example, strong wind during shooting caused leaves to move, blurring leaf features such as shape and margin in the captured images. In addition, because the pear trees are cultivated outdoors, some leaves were severely damaged by pests and diseases. To ensure the quality of the dataset, we therefore filtered the images manually, and a total of 17,656 pear leaf images were retained to compose the pear leaf dataset. Some representative samples of the dataset are shown in Figure 1, and Table 1 lists information on the 33 pear leaf samples.

Figure 1.

Some representative samples of the leaf dataset: (1) Anli, (2) Bartlett, (3) Cangxi Xueli, (4) Chili, (5) Cuiguan, (6) Dangshan Suli, (7) Dayexue, (8) Fojianxi, (9) Beurré Hardy, (10) Harrow Sweet, (11) Hongshaobang, (12) Hongxiangsu, (13) Huachangba, (14) Huagai, (15) Huangguan, (16) Whangkeumbae, (17) Jianbali, (18) Jinfeng, (19) Jingbaili, (20) Conference, (21) Korla Pear, (22) Mandingxue, (23) Nanguoli, (24) Akiziki, (25) Dr. Jules Guyot, (26) Seerkefu, (27) Shuihongxiao, (28) Xiaoxiangshui, (29) Xinli 7, (30) Xuehuali, (31) Yali, (32) Yuluxiang, (33) Early Red Comice.

Table 1.

Leaf sample information for the 33 pear varieties.

The pear leaf dataset was randomly divided into training, validation, and test sets in a 6:3:1 ratio for training, validation, and prediction, respectively. The images were manually annotated using the LabelImg tool (available at https://github.com/HumanSignal/labelImg, accessed on 19 February 2025). Target leaves in each image were marked with rectangular bounding boxes and assigned category labels, and an annotation file in .txt format was then generated containing the category of each leaf, the x and y coordinates of the rectangle's center, and its width w and height h, all relative to the image size.
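The split and the annotation format described above can be sketched as follows. This is a minimal illustration, assuming the 6:3:1 split is done by shuffling image file names with a fixed seed, that boxes are available in pixel coordinates, and that a 3024 × 4032 image is 3024 pixels wide and 4032 pixels high. `split_dataset` and `to_yolo_line` are hypothetical helpers, not LabelImg functions (LabelImg writes the YOLO files itself).

```python
import random


def split_dataset(items, ratios=(6, 3, 1), seed=0):
    """Randomly split items into train/val/test lists in the given integer ratio."""
    items = list(items)
    random.Random(seed).shuffle(items)  # reproducible shuffle
    total = sum(ratios)
    n_train = len(items) * ratios[0] // total
    n_val = len(items) * ratios[1] // total
    # Whatever remains after integer rounding goes to the test set.
    return items[:n_train], items[n_train:n_train + n_val], items[n_train + n_val:]


def to_yolo_line(class_id, x_min, y_min, x_max, y_max, img_w, img_h):
    """Convert a pixel-coordinate box into one YOLO .txt annotation line:
    class, x-center, y-center, width, height, all normalized to [0, 1]."""
    xc = (x_min + x_max) / 2 / img_w
    yc = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{class_id} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"


if __name__ == "__main__":
    train, val, test = split_dataset(range(17656))
    print(len(train), len(val), len(test))
    print(to_yolo_line(31, 1000, 1500, 2024, 2532, 3024, 4032))
```

Applied to the 17,656 images, integer rounding gives 10,593 training, 5,296 validation, and 1,767 test images, and a box spanning pixels (1000, 1500) to (2024, 2532) becomes `31 0.500000 0.500000 0.338624 0.255952` for class 31 (Yuluxiang in the Figure 3 numbering).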

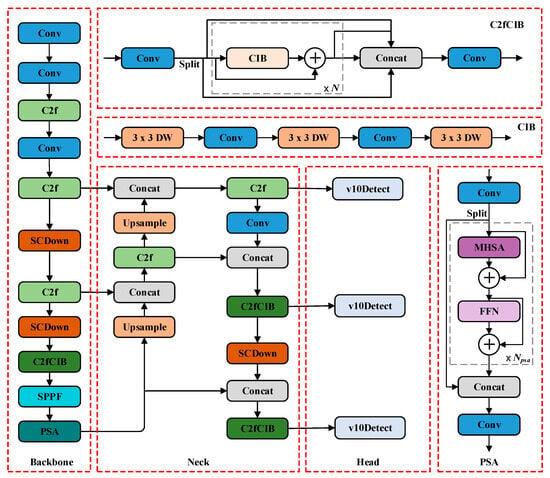

2.2. YOLOv10

YOLOv10 is a real-time object detection method proposed by Wang et al. in 2024. YOLOv10 (https://github.com/THU-MIG/yolov10, accessed on 30 April 2025) improves detection accuracy and speed over previous versions. Its dual label assignment strategy eliminates the need for NMS while maintaining detection performance. To achieve high performance and efficiency, YOLOv10 proposes a CIB structure. Replacing some basic blocks of the model with CIBs reduces redundancy and improves model efficiency [26]. The structure of the CIB is shown in (1), where DW (Depthwise Convolution) denotes a depthwise convolution layer. In addition, YOLOv10 proposes a PSA module design, in which the channel features are divided evenly into two parts; one part is processed by a multi-head self-attention module (MHSA) and a feed-forward network (FFN) and then fused with the other part [27]. This design enhances the model’s ability to capture diverse contextual information and boosts detection accuracy while keeping computational overhead low. The structure of the PSA is shown in (2).
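The efficiency argument behind the CIB rests largely on depthwise convolutions. The arithmetic below is a generic sketch, not taken from the YOLOv10 code: it ignores bias terms and compares the weight count of a standard 3 × 3 convolution with that of a depthwise 3 × 3 convolution followed by a 1 × 1 pointwise convolution, for an assumed 128-channel layer.

```python
def standard_conv_weights(c_in, c_out, k):
    """Weights of a standard k x k convolution (bias ignored)."""
    return c_in * c_out * k * k


def depthwise_conv_weights(channels, k):
    """Weights of a k x k depthwise convolution: one k x k filter per channel."""
    return channels * k * k


def pointwise_conv_weights(c_in, c_out):
    """Weights of a 1 x 1 (pointwise) convolution that mixes channels."""
    return c_in * c_out


if __name__ == "__main__":
    c, k = 128, 3  # assumed channel count and kernel size for illustration
    standard = standard_conv_weights(c, c, k)
    separable = depthwise_conv_weights(c, k) + pointwise_conv_weights(c, c)
    print(standard, separable, round(standard / separable, 1))
```

For 128 channels the standard layer needs 147,456 weights versus 17,536 for the depthwise-plus-pointwise pair, roughly 8.4 times fewer, because the depthwise part handles spatial mixing per channel and only the cheap 1 × 1 part mixes channels.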

A series of real-time end-to-end detectors, YOLOv10-N/S/M/B/L/X, was implemented at different model scales. In the original YOLOv10 work, these models were trained from scratch on COCO [28] using the SGD optimizer (momentum of 0.937) for 500 epochs with an initial learning rate of 0.01. The experimental results demonstrated better performance and efficiency than previous state-of-the-art models. Among them, YOLOv10-N balances accuracy and efficiency on edge devices, making it suitable for plant phenotyping applications. We therefore chose YOLOv10-N for our experiments. The network architecture of YOLOv10 is shown in Figure 2.

Figure 2.

Network architecture diagram of YOLOv10.

2.3. Selection of Other Network Models

YOLOv7 is a real-time object detector proposed by Wang et al. in early 2023. It uses a trainable bag-of-freebies that significantly improves object detection accuracy without increasing inference cost. YOLOv7 focuses on optimizing the training process, including optimization modules that improve object detection accuracy. In addition, YOLOv7 proposes “extend” and “compound scaling” methods, which effectively reduce the number of parameters and computation and improve the detection rate. The model was trained from scratch on the MS COCO dataset without any other datasets or pre-trained weights. The results show that YOLOv7 outperforms other object detectors such as YOLOR, YOLOX, YOLOv5, and DETR in both accuracy and speed.

ResNet (Residual Neural Network) is a neural network proposed by He et al. [29]. It won first place in the ImageNet detection, ImageNet localization, COCO detection, and COCO segmentation tasks of the ILSVRC & COCO 2015 competitions and profoundly influenced the design of subsequent deep neural networks. ResNet uses batch normalization (BN) layers, together with normalized initialization and intermediate normalization, to accelerate training and mitigate vanishing or exploding gradients. In addition, it introduces residual structures to address the degradation problem, enabling ultra-deep network architectures. ResNet50 is a 50-layer CNN from this family. In this work, we initialized ResNet50 with weights pre-trained on ImageNet and then trained it on our dataset. Input images were uniformly scaled to 224 × 224 pixels. The model was optimized with SGD (initial learning rate of 0.001, momentum of 0.9), with a batch size of 16, for 100 iterations.

VGG is a deeper convolutional network proposed by the Visual Geometry Group [30] at the University of Oxford. It builds on AlexNet [31] to further explore the effect of depth on network performance. In the 2014 ImageNet Challenge, trained from scratch on ImageNet, VGG19 achieved first place in the localization task and VGG16 second place in classification. VGG16 has 16 weight layers and uses several consecutive 3 × 3 convolutional kernels instead of the larger kernels (11 × 11, 7 × 7, 5 × 5) used in AlexNet. For the same receptive field, this increases network depth and thus improves the performance of the network model. In our experiment, we trained VGG16 on our dataset starting from weights pre-trained on ImageNet. The model used SGD as the optimizer (initial learning rate of 0.001, momentum of 0.9) for 100 iterations with a batch size of 16, and the input size was 224 × 224.
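The VGG design choice can be checked with a few lines of arithmetic. The sketch below uses illustrative helper names, ignores bias terms, and assumes C input and output channels per layer; it shows that three stacked 3 × 3 convolutions with stride 1 cover the same 7 × 7 receptive field as a single 7 × 7 kernel while using 27C² instead of 49C² weights.

```python
def stacked_receptive_field(num_layers, k=3):
    """Receptive field of num_layers stacked k x k convs with stride 1 and no pooling.

    Each extra layer widens the field by (k - 1) pixels.
    """
    return 1 + num_layers * (k - 1)


def conv_weights(k, channels):
    """Weights of one k x k conv with `channels` input and output channels (no bias)."""
    return k * k * channels * channels


if __name__ == "__main__":
    c = 256  # assumed channel count
    print(stacked_receptive_field(2), stacked_receptive_field(3))  # 5x5 and 7x7 fields
    print(3 * conv_weights(3, c), conv_weights(7, c))  # 27C^2 vs 49C^2
```

Besides saving parameters, the stacked version inserts two extra nonlinearities between the three layers, which is the second benefit the VGG authors cite for preferring small kernels.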

Swin Transformer is a hierarchical vision transformer proposed by Liu et al. [32] in 2021. It builds on the ViT model [33] but processes images with a CNN-like hierarchical structure, allowing the model to flexibly handle images at different scales while reducing computational complexity. The model introduces a shifted window mechanism that enables information exchange across windows and enhances the capture of local context. This approach improves the model’s expressive power and global perception while effectively lowering computational complexity, achieving a good balance between performance and efficiency. Swin Transformer demonstrated state-of-the-art performance in COCO object detection, outperforming the previous leading methods, and it is widely applied in visual tasks including image classification, object detection, and segmentation.
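The complexity reduction from windowed attention can be quantified with the cost expressions given in the Swin Transformer paper: global multi-head self-attention on an h × w feature map with C channels costs about 4hwC² + 2(hw)²C, while attention restricted to M × M windows costs about 4hwC² + 2M²hwC. The sketch evaluates both for a first-stage feature map of a 224 × 224 input (h = w = 56, C = 96, M = 7), values we assume for illustration. Shifting the windows changes which tokens share a window but not this cost.

```python
def msa_cost(h, w, c):
    """Approximate cost of global multi-head self-attention on an h x w x c map.

    The second term grows quadratically with the number of tokens h * w.
    """
    return 4 * h * w * c ** 2 + 2 * (h * w) ** 2 * c


def window_msa_cost(h, w, c, m):
    """Approximate cost of self-attention computed inside m x m windows.

    The second term is linear in h * w for a fixed window size m.
    """
    return 4 * h * w * c ** 2 + 2 * m ** 2 * h * w * c


if __name__ == "__main__":
    h = w = 56
    c, m = 96, 7
    global_cost = msa_cost(h, w, c)
    window_cost = window_msa_cost(h, w, c, m)
    print(global_cost, window_cost, round(global_cost / window_cost, 1))
```

For these values global attention needs about 2.0 × 10⁹ operations against about 1.45 × 10⁸ for window attention, roughly 13.8 times fewer, and the gap widens as the input resolution grows.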

2.4. SCConv Module

SCConv is an efficient convolution module proposed by Li et al. [34] in 2023. It reduces spatial and channel redundancy in convolutional neural networks through a Spatial Reconstruction Unit (SRU) and a Channel Reconstruction Unit (CRU). The two units are applied sequentially: the SRU first reduces redundancy in the spatial dimension, and the CRU then reduces redundancy in the channel dimension. Specifically, the SRU separates the feature maps into informative features rich in spatial information and redundant features carrying little information, and then recombines them to obtain spatially refined features. The CRU splits the channels of the spatially refined features into two parts and extracts representative high-level features and supplementary shallow features from them separately. Finally, global average pooling and an attention mechanism fuse the high-level and shallow features to obtain the final channel-refined features. The SCConv module enhances the model’s feature extraction capability and significantly reduces parameters and computational cost while maintaining accuracy.
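The SRU's separate-and-reconstruct idea can be sketched in plain Python on a tiny feature map. This is a simplified illustration, not the reference SCConv implementation: we assume the input is already group-normalized, weight each channel by its normalized GroupNorm scale factor, pass the result through a sigmoid, and use a hard 0.5 gate to route each value to an informative or a redundant branch. The cross-reconstruction step then adds the first informative half of the channels to the second redundant half and vice versa. The function name `sru_sketch` is hypothetical.

```python
import math


def sru_sketch(x, gamma, threshold=0.5):
    """Simplified Spatial Reconstruction Unit on a tiny feature map.

    x     : list of C channels, each a flat list of spatial values; assumed to be
            already group-normalized (the GroupNorm step itself is omitted).
    gamma : per-channel GroupNorm scale factors (assumed positive).
    Returns a cross-reconstructed feature map with the same shape as x.
    """
    c = len(x)
    if c % 2 or len(gamma) != c:
        raise ValueError("need an even number of channels and one gamma per channel")
    total = sum(gamma)
    w_gamma = [g / total for g in gamma]  # normalized channel importance

    informative, redundant = [], []
    for ch, values in enumerate(x):
        info_ch, red_ch = [], []
        for v in values:
            weight = 1.0 / (1.0 + math.exp(-v * w_gamma[ch]))  # sigmoid re-weighting
            if weight > threshold:  # hard gate (a simplification)
                info_ch.append(v)
                red_ch.append(0.0)
            else:
                info_ch.append(0.0)
                red_ch.append(v)
        informative.append(info_ch)
        redundant.append(red_ch)

    half = c // 2
    x11, x12 = informative[:half], informative[half:]
    x21, x22 = redundant[:half], redundant[half:]
    # Cross-reconstruction: combine each informative half with the opposite redundant half.
    top = [[a + b for a, b in zip(p, q)] for p, q in zip(x11, x22)]
    bottom = [[a + b for a, b in zip(p, q)] for p, q in zip(x12, x21)]
    return top + bottom


if __name__ == "__main__":
    fmap = [[1.0, -1.0], [2.0, -2.0], [0.5, -0.5], [3.0, -3.0]]
    print(sru_sketch(fmap, [1.0, 1.0, 1.0, 1.0]))
```

Because the gate only routes values between the two branches, the reconstructed map keeps the input's shape and total sum; with positive scale factors, the hard 0.5 gate simply marks positive normalized activations as informative.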

2.5. Evaluation Metrics

Commonly used evaluation metrics include precision (P), recall (R), average precision (AP), and mean average precision (mAP), which are defined [35] as shown below.

Precision is the proportion of samples predicted as positive that are actually positive. It is calculated as shown in Equation (3), where true positive (TP) is the number of samples correctly predicted as positive and false positive (FP) is the number of samples predicted as positive that are actually negative.

Recall is the proportion of actually positive samples that are predicted as positive; it measures the model's ability to find all positive samples. Recall is calculated as shown in Equation (4), where TP is defined as above and false negative (FN) is the number of positive samples incorrectly predicted as negative.
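In this notation, Equations (3) and (4) take their standard form. The F1-score reported alongside them is the harmonic mean of precision and recall; we give its usual definition here since the text does not state it explicitly.

```latex
\begin{align}
P  &= \frac{TP}{TP + FP} \tag{3}\\
R  &= \frac{TP}{TP + FN} \tag{4}\\
F1 &= \frac{2 \times P \times R}{P + R} \nonumber
\end{align}
```

With P = 0.996 and R = 0.994, this gives F1 ≈ 0.995, consistent with the reported value of 0.99.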

Average precision is the area under the precision–recall curve obtained at different detection thresholds, plotted with precision on the y-axis and recall on the x-axis. A robust model maintains high precision as recall increases. AP thus indicates the detection accuracy of the model for each category: the higher the AP value, the better the detection performance. mAP is the mean of the per-category AP values; it measures detection performance across all categories and serves as the main metric of overall model performance. YOLO prioritizes both precision and speed, and mAP reflects detection quality by considering both precision and recall [36]. mAP50 is the mAP at an intersection over union (IoU) threshold of 0.5, and mAP50-95 is the mAP averaged over IoU thresholds from 0.5 to 0.95 in steps of 0.05, with larger values indicating more accurate predicted boxes. The formulas for AP and mAP are shown in (5) and (6), where C denotes the total number of categories.
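A minimal sketch of how these metrics can be computed, assuming Equation (5) is the area under the precision–recall curve (the integral of P over R from 0 to 1) and Equation (6) is its mean over the C categories. The all-point interpolation used here is one common convention (COCO-style evaluators sample 101 recall points instead), so values can differ slightly from a given toolkit. All helper names are illustrative.

```python
def precision(tp, fp):
    return tp / (tp + fp)


def recall(tp, fn):
    return tp / (tp + fn)


def f1_score(p, r):
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r)


def average_precision(recalls, precisions):
    """Area under a precision-recall curve with all-point interpolation.

    recalls must be sorted in ascending order; precisions align with recalls.
    """
    # Sentinel points at recall 0 and recall 1.
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Precision envelope: make precision non-increasing from right to left.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum rectangle areas wherever recall increases.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_average_precision(ap_per_class):
    """Mean of the per-class AP values over all C classes."""
    return sum(ap_per_class) / len(ap_per_class)


def coco_iou_thresholds():
    """IoU thresholds 0.50, 0.55, ..., 0.95 averaged in mAP50-95."""
    return [round(0.5 + 0.05 * i, 2) for i in range(10)]


if __name__ == "__main__":
    ap_a = average_precision([0.5, 1.0], [1.0, 0.5])
    ap_b = average_precision([1.0], [1.0])
    print(ap_a, ap_b, mean_average_precision([ap_a, ap_b]))
    print(round(f1_score(0.996, 0.994), 3))
```

For mAP50, a prediction counts as a true positive only if its IoU with a ground-truth box of the same class is at least 0.5; mAP50-95 repeats the AP computation at each threshold returned by `coco_iou_thresholds` and averages the results.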

2.6. Experimental Environment

This experiment uses the Python programming language on the Windows 11 operating system with an Intel(R) Xeon(R) Gold 6330 CPU and 80 GB of RAM. GPU training and inference were performed on the AutoDL experiment platform. Details of the experimental environment are given in Table 2.

Table 2.

The experimental conditions.

2.7. Statistical Analysis

Data analysis was conducted using IBM SPSS 20, with p < 0.05 indicating statistical significance in one-way ANOVA followed by Duncan’s multiple range test. The error value (%) indicates the magnitude of the error relative to the actual value and was calculated in Microsoft Excel 2007.
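For readers reproducing the analysis without SPSS, the one-way ANOVA F statistic can be computed as below. The p-value then follows from the F distribution with (k − 1, N − k) degrees of freedom, for example via `scipy.stats.f_oneway`, which returns both the statistic and the p-value. Duncan's multiple range test is not reproduced here. The relative-error formula, |measured − actual| / |actual| × 100, is our assumption about how the error value (%) was defined.

```python
def one_way_anova_f(groups):
    """F statistic for a one-way ANOVA over a list of sample groups."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n
    means = [sum(g) / len(g) for g in groups]
    # Between-group and within-group sums of squares.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum(sum((v - m) ** 2 for v in g) for g, m in zip(groups, means))
    df_between, df_within = k - 1, n - k
    return (ss_between / df_between) / (ss_within / df_within)


def relative_error_pct(measured, actual):
    """Relative error in percent (assumed definition of the 'error value')."""
    if actual == 0:
        raise ValueError("actual value must be non-zero")
    return abs(measured - actual) / abs(actual) * 100


if __name__ == "__main__":
    print(one_way_anova_f([[1, 2, 3], [4, 5, 6], [7, 8, 9]]))
    print(relative_error_pct(98.3, 100.0))
```

For the three toy groups the between-group mean square is 27 and the within-group mean square is 1, so F = 27.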

3. Results and Discussion

3.1. Image and Label Datasets

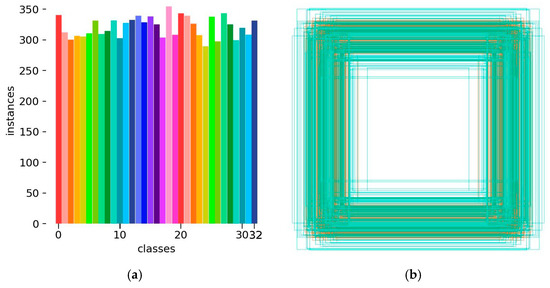

After the leaf samples in the images were annotated with the labeling tool, the labeled dataset was generated. The number and distribution of labels were counted, and the results are shown in Figure 3. In Figure 3a, the horizontal axis represents the label category in the training set and the vertical axis the number of labels. Figure 3b shows the boxes drawn around each leaf instance in the pear leaf dataset, summarizing the sizes and number of all boxes. The YOLO algorithm uses these bounding boxes to learn to detect objects of the same categories in new images and to localize the recognized objects. Figure 3c shows the label distribution, from which one can roughly see the position of each leaf's center relative to the whole image and the width and height of the leaf samples relative to the whole image.
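The per-class counts in Figure 3a can be reproduced from the YOLO-format annotation files. The sketch below assumes one .txt file per image in a single directory, with the class id as the first field of each line; the function names and directory layout are hypothetical.

```python
from collections import Counter
from pathlib import Path


def count_labels_in_texts(texts):
    """Count boxes per class id in YOLO-format annotation texts (one box per line)."""
    counts = Counter()
    for text in texts:
        for line in text.splitlines():
            parts = line.split()
            if parts:  # ignore blank lines
                counts[int(parts[0])] += 1
    return counts


def count_labels(label_dir):
    """Apply count_labels_in_texts to every .txt file in a label directory."""
    return count_labels_in_texts(p.read_text() for p in Path(label_dir).glob("*.txt"))


if __name__ == "__main__":
    demo = ["31 0.5 0.5 0.3 0.2\n31 0.4 0.4 0.1 0.1\n", "0 0.5 0.5 0.2 0.2\n\n"]
    print(count_labels_in_texts(demo))
```

Splitting the counting logic from file access keeps it easy to check; pointing `count_labels` at the training label folder would give the bar heights plotted in Figure 3a.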

Figure 3.

Labels and label distribution: (a) Class and number of training set; the horizontal axis 0–32 represents pear variety: (0) Anli, (1) Bartlett, (2) Cangxi Xueli, (3) Chili, (4) Cuiguan, (5) Dangshan Suli, (6) Dayexue, (7) Fojianxi, (8) Beurré Hardy, (9) Harrow Sweet, (10) Hongshaobang, (11) Hongxiangsu, (12) Huachangba, (13) Huagai, (14) Huangguan, (15) Whangkeumbae, (16) Jianbali, (17) Jinfeng, (18) Jingbaili, (19) Conference, (20) Korla Pear, (21) Mandingxue, (22) Nanguoli, (23) Akiziki, (24) Dr. Jules Guyot, (25) Seerkefu, (26) Shuihongxiao, (27) Xiaoxiangshui, (28) Xinli 7, (29) Xuehuali, (30) Yali, (31) Yuluxiang, (32) Early Red Comice. (b) Size and quantity of bounding boxes, (c) Location and size of the labels in the images.

3.2. Model Training

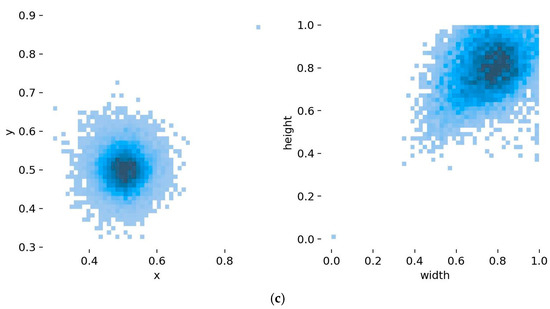

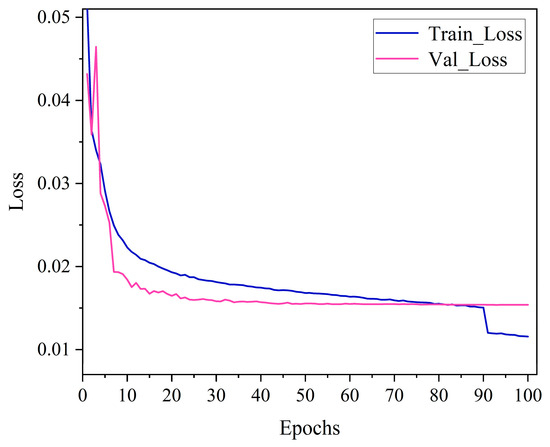

The YOLOv10 source code was obtained from GitHub, and the officially provided pre-trained weights were used to enhance the generalization ability of the trained model. The model was trained for 100 epochs with SGD as the optimizer and a batch size of 16. During training, input images were uniformly scaled to 640 × 640 pixels, and 8 threads were used for processing. Figure 4 shows the YOLOv10 training results, and Figure 5 shows the loss curves generated by YOLOv10 during training and validation. Both figures show that the loss decreases over training, indicating that the SGD optimizer continuously optimizes the model by updating parameters such as the network weights. YOLOv10 converges in both phases: the loss drops rapidly over the first 10 epochs, accompanied by a rapid increase in recall, mAP50, mAP50-95, and precision. After epoch 10, the loss decreases slowly until it almost plateaus, and recall, mAP50, mAP50-95, and precision level off, indicating that the model has essentially converged. In addition, YOLOv7, ResNet50, VGG16, and Swin Transformer were trained on the same pear leaf dataset, with the same training environment and training parameter settings as YOLOv10.
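The training settings reported above can be collected into a small configuration. The sketch assumes an Ultralytics-style dataset YAML (the THU-MIG YOLOv10 repository is built on the Ultralytics codebase) with a hypothetical `images/train`, `images/val`, `images/test` layout, and lists the 33 classes in the Figure 3 index order. The argument names in `TRAIN_ARGS` follow Ultralytics training options but should be checked against the repository version used.

```python
# 33 pear varieties in the Figure 3 class-index order (0-32).
CLASS_NAMES = [
    "Anli", "Bartlett", "Cangxi Xueli", "Chili", "Cuiguan", "Dangshan Suli",
    "Dayexue", "Fojianxi", "Beurré Hardy", "Harrow Sweet", "Hongshaobang",
    "Hongxiangsu", "Huachangba", "Huagai", "Huangguan", "Whangkeumbae",
    "Jianbali", "Jinfeng", "Jingbaili", "Conference", "Korla Pear",
    "Mandingxue", "Nanguoli", "Akiziki", "Dr. Jules Guyot", "Seerkefu",
    "Shuihongxiao", "Xiaoxiangshui", "Xinli 7", "Xuehuali", "Yali",
    "Yuluxiang", "Early Red Comice",
]

# Hyperparameters reported in the text (100 epochs, SGD, batch 16, 640 px, 8 threads).
TRAIN_ARGS = {"epochs": 100, "batch": 16, "imgsz": 640, "optimizer": "SGD", "workers": 8}


def data_yaml(root):
    """Build the text of a dataset YAML file; the folder layout is hypothetical."""
    lines = [
        f"path: {root}",
        "train: images/train",
        "val: images/val",
        "test: images/test",
        "names:",
    ]
    # Quote names so entries such as "Dr. Jules Guyot" stay plain strings.
    lines += [f'  {i}: "{name}"' for i, name in enumerate(CLASS_NAMES)]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(data_yaml("datasets/pear_leaves"))
```

With the repository installed, a run along the lines of `yolo detect train data=pear.yaml model=yolov10n.pt epochs=100 batch=16 imgsz=640 optimizer=SGD workers=8` would correspond to these settings; the file name `pear.yaml` is hypothetical.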

Figure 4.

YOLOv10 training results. Loss function indicators during the training phase: train/box_om, bounding box regression loss (one-to-many head); train/box_oo, bounding box regression loss (one-to-one head); train/cls_om, classification loss (one-to-many head); train/cls_oo, classification loss (one-to-one head); train/dfl_om, distribution focal loss for box localization (one-to-many head); train/dfl_oo, distribution focal loss for box localization (one-to-one head).

Figure 5.

Changes in loss values of YOLOv10 recognition and classification model.

3.3. Comparative Analysis of Different Models on the Self-Made Dataset

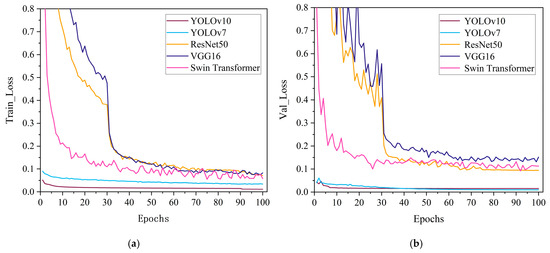

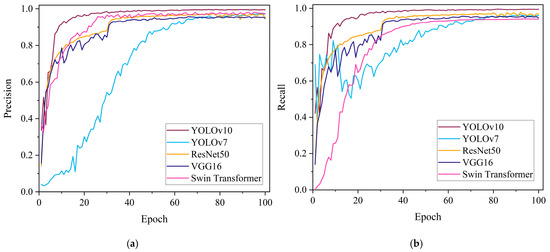

To evaluate whether YOLOv10 is better suited to the pear leaf recognition and classification task than other algorithms, we compared five models: YOLOv10, YOLOv7, ResNet50, VGG16, and Swin Transformer. Under the settings described above, each model was trained on the pear leaf dataset for 100 iterations and then evaluated on the validation set to analyze its recognition and classification ability. The precision, recall, F1-score, and mAP of the five models are shown in Table 3. As Table 3 shows, YOLOv10 achieves superior detection performance: its precision (99.6%), recall (99.4%), and mAP50 (99.5%) are significantly higher than those of the other four models. The loss function quantifies the gap between predicted and true results and is a basic criterion for evaluating model quality: the smaller the loss, the closer the model's predictions are to the true results. Figure 6 shows the loss values of the five models for pear leaf recognition and classification. All five models converge, and YOLOv10 and YOLOv7 converge faster than ResNet50, VGG16, and Swin Transformer. The loss of YOLOv10 remains consistently low, indicating that its predictions of pear leaf type are closer to the actual results. Figure 7 shows the precision and recall of the five classification models; the precision and recall of YOLOv10 stabilize faster and reach higher values than those of the other models.

Table 3.

Precision, recall, F1-scores, and mAP for recognition and classification results of different models.

Figure 6.

Comparison of loss function curves: (a) Loss function curve during the training phase, (b) Loss function curve during the validation phase.

Figure 7.

Result curves of five models: (a) Comparison of precision, (b) Comparison of recall.

In addition, we calculated the number of parameters, floating-point operations (FLOPs), and training time of the five models, as shown in Table 4. VGG16 has the most parameters, and YOLOv10 has the fewest. Compared with YOLOv7 from the same series, YOLOv10 has 33.05 M fewer parameters and 97.2 G fewer FLOPs, which shortens its training time. ResNet50 has the fewest FLOPs of the five models, but its recognition precision is far lower than that of YOLOv10. These results suggest that the optimized network structure and training strategy of YOLOv10 improve object detection precision and feature extraction while reducing model complexity. YOLOv10 maintains high performance with fewer parameters and FLOPs and lower resource consumption, which makes it more suitable for deployment on edge devices. Therefore, considering both recognition accuracy and efficiency, YOLOv10 is the most suitable of the five models for pear leaf recognition and classification, and it was used to construct the pear leaf recognition and classification model in the rest of this study.
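Parameter and FLOP counts such as those in Table 4 follow from layer shapes. The minimal Python sketch below, assuming plain 2-D convolutions with square kernels and no bias (a BatchNorm layer typically follows each convolution in YOLO-style blocks), counts parameters and multiply-accumulate operations (MACs) for a hypothetical list of layers. `ConvSpec`, `conv2d_cost`, and `network_cost` are illustrative names, and the layer sizes are invented rather than taken from any model in Table 4. Profiling tools differ in whether they report MACs or FLOPs (commonly taken as 2 × MACs), and this section does not state which convention Table 4 uses.

```python
from dataclasses import dataclass


@dataclass
class ConvSpec:
    """One 2-D convolution layer (hypothetical description, not a real model)."""
    c_in: int
    c_out: int
    k: int              # square kernel size
    h_out: int          # output feature-map height
    w_out: int          # output feature-map width
    groups: int = 1
    bias: bool = False  # assumed False: BatchNorm usually follows the conv


def conv2d_cost(spec: ConvSpec) -> tuple[int, int]:
    """Return (parameters, MACs) for one convolution layer.

    Every output position of every output channel needs
    (c_in / groups) * k * k multiply-accumulate operations.
    """
    weights = (spec.c_in // spec.groups) * spec.c_out * spec.k * spec.k
    params = weights + (spec.c_out if spec.bias else 0)
    macs = weights * spec.h_out * spec.w_out
    return params, macs


def network_cost(layers: list[ConvSpec]) -> tuple[int, int]:
    """Sum parameters and MACs over a list of convolution layers."""
    costs = [conv2d_cost(layer) for layer in layers]
    return sum(p for p, _ in costs), sum(m for _, m in costs)


# Hypothetical two-layer stem on a 640 x 640 input (not from Table 4).
stem = [
    ConvSpec(c_in=3, c_out=16, k=3, h_out=320, w_out=320),
    ConvSpec(c_in=16, c_out=32, k=3, h_out=160, w_out=160),
]
params, macs = network_cost(stem)
print(params, macs)  # 5040 162201600
print(f"{2 * macs / 1e9:.3f} GFLOPs if multiply and add are counted separately")
```

The second layer has roughly ten times the parameters of the first yet operates on a feature map a quarter of the size, which is why parameter count and FLOPs can rank models differently, as with ResNet50 in Table 4.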

Table 4.

Comparison of the training parameters, floating point operations, and training time of each network.

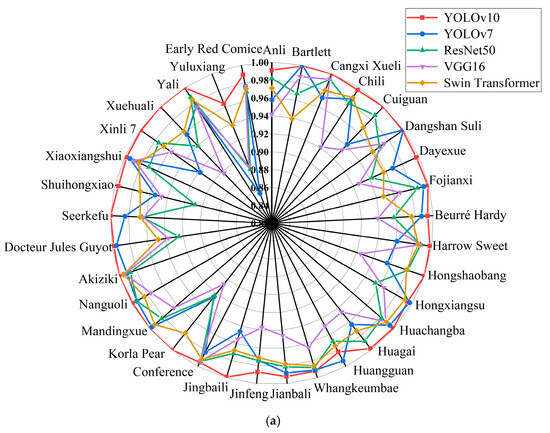

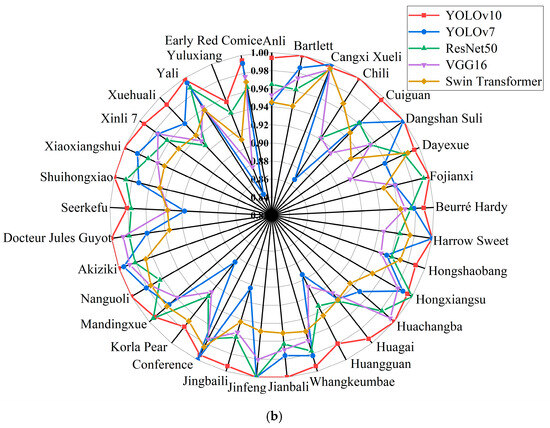

3.4. Comparative Experiment on Leaf Recognition and Classification Performance of Different Pear Varieties

The pear leaf recognition and classification model constructed with YOLOv10 in this article can accurately identify the leaves of different pear varieties. To verify the reliability of this result and to evaluate how well each model recognizes and distinguishes the leaf features of different varieties, we compared the per-variety results of YOLOv10 with those of the other four models using the same training platform configuration, as shown in Figure 8. Figure 8a shows that YOLOv7 has the highest precision for Bartlett, Dangshan Suli, Hongxiangsu, and Huangguan, whereas YOLOv10 has the highest precision for the remaining 29 varieties. Overall, the YOLOv10 model achieves higher detection accuracy on the 33 pear varieties, with an average accuracy of 99.5%. Recall reflects missed detections: the higher the recall, the lower the probability that a leaf is missed. Figure 8b shows that YOLOv7 has the highest recall for Cangxi Xueli and Conference, and ResNet50 has the highest recall for Hongxiangsu and Mandingxue. For Jinfeng, YOLOv10, YOLOv7, and ResNet50 all reach 100% recall. For the remaining 28 varieties, YOLOv10 has the highest recall. Compared with the other four models, YOLOv10 also shows more consistent recall across varieties.
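Per-variety precision and recall, and the count of varieties on which each model scores best, can be derived from a confusion matrix. The sketch below assumes the convention used for Figure 10 (rows are true labels, columns are predicted labels) and ignores the extra background row and column that detection frameworks often add for missed and spurious detections. The function names and the 3-class matrix are hypothetical and for illustration only.

```python
def per_class_precision_recall(cm: list[list[int]]) -> tuple[list[float], list[float]]:
    """cm[i][j] = number of instances of true class i predicted as class j."""
    n = len(cm)
    precision, recall = [], []
    for c in range(n):
        tp = cm[c][c]                                     # diagonal: correct
        predicted_as_c = sum(cm[r][c] for r in range(n))  # column sum
        truly_c = sum(cm[c])                              # row sum
        precision.append(tp / predicted_as_c if predicted_as_c else 0.0)
        recall.append(tp / truly_c if truly_c else 0.0)
    return precision, recall


def best_models(scores: dict[str, list[float]]) -> list[list[str]]:
    """For each class, list every model that attains the top score (ties kept)."""
    n_classes = len(next(iter(scores.values())))
    winners = []
    for c in range(n_classes):
        top = max(s[c] for s in scores.values())
        winners.append([m for m, s in scores.items() if s[c] == top])
    return winners


# Hypothetical 3-class confusion matrix (not data from this study).
cm = [[48, 2, 0],
      [1, 49, 0],
      [0, 0, 50]]
p, r = per_class_precision_recall(cm)
print([round(x, 3) for x in p])  # [0.98, 0.961, 1.0]
print(r)                         # [0.96, 0.98, 1.0]
```

Keeping ties in `best_models` mirrors the Jinfeng case above, where three models share the top recall.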

Figure 8.

Precision and recall of the YOLOv10, YOLOv7, ResNet50, VGG16, and Swin Transformer models: (a) Precision of 33 varieties in different models, (b) Recall rates of 33 varieties in different models.

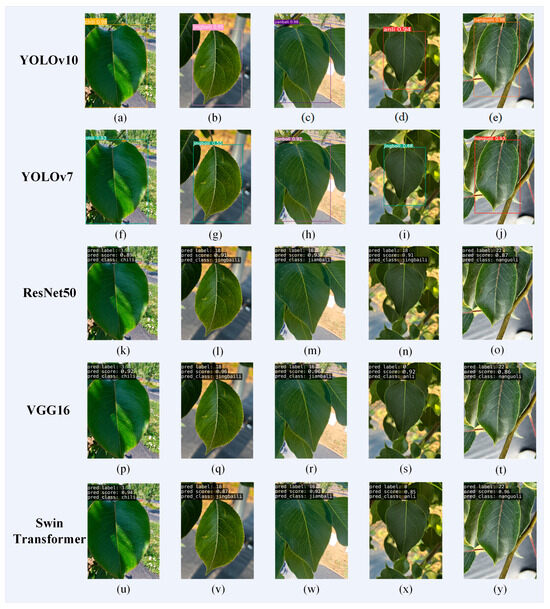

We used the same test set to run pear leaf recognition, classification, and prediction with all five models, randomly selecting 70 pear leaf images from the test set for prediction. Figure 9 shows the predictions for several images taken in a natural environment. The five models produce different predictions for the same image, and YOLOv10 identifies and classifies the leaves most accurately. As Figure 9i,n shows, YOLOv7 and ResNet50 misidentified Anli as Jingbaili. This is likely because Anli and Jingbaili are both varieties of P. ussuriensis with extremely similar leaf characteristics, which makes them difficult to distinguish. Compared with the other models, YOLOv10 therefore produces fewer misclassifications between varieties with highly similar leaf surface features.

Figure 9.

Pear leaf recognition and classification results. Among them, (a–e) are the recognition results of YOLOv10; (f–j) are the recognition results of YOLOv7; (k–o) are the recognition results of ResNet50; (p–t) are the recognition results of VGG16; (u–y) are the recognition results of Swin Transformer.

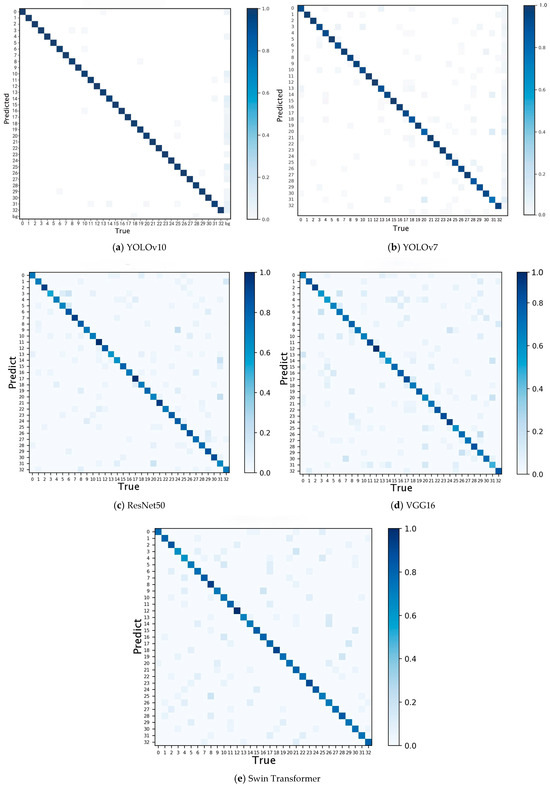

The confusion matrices of the five recognition and classification models are shown in Figure 10. They describe how accurately each model identifies and classifies the 33 pear leaf varieties in the dataset. In each confusion matrix, columns represent the labels predicted by the model, rows represent the true labels, and the values on the diagonal represent the correct detection rate. Dark colors indicate high rates, and light colors indicate low rates [37]. This visualization shows how instances of different categories are confused with one another. The diagonals of all five confusion matrices are dark, indicating that all five models classify pear leaves with relatively high accuracy. However, as Figure 8 shows, all five models perform relatively poorly on Yuluxiang. Figure 10 shows that YOLOv10 misidentified some Yuluxiang samples as Hongxiangsu and Huangguan; YOLOv7 misidentified them as Cuiguan, Huangguan, and Korla Pear; ResNet50 as Cuiguan, Huangguan, Korla Pear, and Whangkeumbae; VGG16 as Huangguan, Jingbaili, and Korla Pear; and Swin Transformer as Hongshaobang, Seerkefu, and Xiaoxiangshui. Cuiguan, Hongxiangsu, Huangguan, and Yuluxiang are all P. hybrid varieties. Yuluxiang's parents are Korla Pear and Xuehuali; Huangguan's parents include Xuehuali, and Hongxiangsu's parents include Korla Pear. Because these three varieties are genetically related, they can interfere with one another during recognition. In contrast, Cuiguan's parents are Kosui and Hangqing × Shinseiki, so it is not closely related to Yuluxiang. YOLOv10 confused Yuluxiang only with varieties that share a parent with it, whereas the other four models also confused it with less closely related varieties.
These results indicate that YOLOv10 is better able to extract leaf feature information for recognition and classification and performs better on varieties whose leaf features are extremely similar. The pear leaf recognition and classification model constructed with YOLOv10 can accurately identify and classify the leaves of a wide range of pear varieties.
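The misclassification analysis above reads one row of each confusion matrix and lists the off-diagonal classes that received samples. A minimal Python sketch of that step is shown below, assuming rows are true labels and columns are predicted labels as in Figure 10. The function names are hypothetical, and the 3-class counts are invented for illustration; they are not the study's data.

```python
def row_normalize(cm: list[list[int]]) -> list[list[float]]:
    """Convert counts to per-true-class rates (each non-empty row sums to 1)."""
    out = []
    for row in cm:
        total = sum(row)
        out.append([v / total if total else 0.0 for v in row])
    return out


def top_confusions(cm: list[list[int]], labels: list[str],
                   true_label: str, k: int = 3) -> list[tuple[str, float]]:
    """Return up to k (predicted label, rate) pairs for the most frequent
    misclassifications of `true_label`, highest rate first."""
    i = labels.index(true_label)
    row = cm[i]
    total = sum(row)
    if total == 0:
        return []
    off_diagonal = [(labels[j], row[j] / total)
                    for j in range(len(row)) if j != i and row[j] > 0]
    off_diagonal.sort(key=lambda pair: pair[1], reverse=True)
    return off_diagonal[:k]


# Hypothetical counts for three classes (not the study's data).
labels = ["Hongxiangsu", "Huangguan", "Yuluxiang"]
cm = [[50, 0, 0],
      [0, 50, 0],
      [1, 2, 47]]
print(top_confusions(cm, labels, "Yuluxiang"))
# [('Huangguan', 0.04), ('Hongxiangsu', 0.02)]
```

Row normalization is what makes the diagonal of a confusion-matrix heat map read as a per-class correct detection rate, so classes with different sample counts can be compared on one color scale.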

Figure 10.

Confusion matrix diagram for all five models; (0) Anli, (1) Bartlett, (2) Cangxi Xueli, (3) Chili, (4) Cuiguan, (5) Dangshan Suli, (6) Dayexue, (7) Fojianxi, (8) Beurré Hardy, (9) Harrow Sweet, (10) Hongshaobang, (11) Hongxiangsu, (12) Huachangba, (13) Huagai, (14) Huangguan, (15) Whangkeumbae, (16) Jianbali, (17) Jinfeng, (18) Jingbaili, (19) Conference, (20) Korla Pear, (21) Mandingxue, (22) Nanguoli, (23) Akiziki, (24) Dr. Jules Guyot, (25) Seerkefu, (26) Shuihongxiao, (27) Xiaoxiangshui, (28) Xinli 7, (29) Xuehuali, (30) Yali, (31) Yuluxiang, (32) Early Red Comice.

Table 5 shows the results of identifying and classifying the 33 pear varieties with the YOLOv10 recognition and classification model. The model reaches its highest precision, 100% (Error value = 0.44), for nine varieties: Cangxi Xueli, Cuiguan, Hongshaobang, Korla Pear, Mandingxue, Akiziki, Seerkefu, Xuehuali, and Yali.
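This section does not define "Error value". One reading that is consistent with both reported pairs (100% with 0.44, and 96.4% for Yuluxiang with −3.18) is the deviation of a variety's precision from the mean precision over all 33 varieties, which would put that mean at roughly 99.6%. The sketch below computes that quantity under this assumption only; `deviation_from_mean` is a hypothetical name, and the three-variety input is invented.

```python
def deviation_from_mean(precisions: dict[str, float]) -> dict[str, float]:
    """ASSUMPTION: 'Error value' = a variety's precision (%) minus the
    mean precision (%) over all varieties. Not confirmed by the text."""
    mean = sum(precisions.values()) / len(precisions)
    return {name: p - mean for name, p in precisions.items()}


# Hypothetical per-variety precisions in percent (not from Table 5).
example = {"A": 100.0, "B": 99.0, "C": 98.0}
print(deviation_from_mean(example))  # {'A': 1.0, 'B': 0.0, 'C': -1.0}
```

Under this reading, a positive value marks a variety recognized more precisely than average and a negative value one recognized less precisely, which matches how the −3.18 for Yuluxiang is used to flag it for improvement in the next section.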

Table 5.

Test results of the YOLOv10n model on 33 pear varieties.

3.5. Improved YOLOv10 Model

The YOLOv10-based pear leaf classification model performs well in pear leaf recognition and classification, with better overall indicators than the other models, and shows strong potential for accurately identifying pear leaf types. However, analysis of the recognition and classification results for the 33 pear varieties shows that the model's precision for Yuluxiang is only 96.4% (Error value = −3.18), lower than for the other varieties. We therefore used YOLOv10 as the baseline model and improved it. SCConv is an efficient convolution module that compresses CNN models and improves their performance by reducing the spatial and channel redundancy among features in convolutional layers. We introduced this module into the Backbone of YOLOv10, replacing the Bottleneck in C2f with SCConv. When the improved model was used to identify and classify pear leaves, its precision, recall, and mAP were 99.71%, 99.46%, and 99.6%, respectively, increases of 0.11, 0.06, and 0.1 percentage points over YOLOv10. The improved model has 2.52 M parameters and 7.9 G FLOPs, which are 0.07 M and 0.6 G fewer than YOLOv10, respectively. The precision for Yuluxiang reached 98.3%. Introducing the SCConv module reduced redundant features as well as the parameters and computational cost, lowering model complexity while improving detection performance.
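The improvements reported above are absolute differences between percentages, that is, percentage points. The short Python sketch below reproduces them from the reported values and also derives the baseline YOLOv10 size implied by the stated reductions (2.52 M + 0.07 M = 2.59 M parameters; 7.9 G + 0.6 G = 8.5 G FLOPs). All inputs come from the text; the variable names are illustrative.

```python
# Reported metrics (%) for the baseline and the SCConv-improved model.
baseline = {"precision": 99.6, "recall": 99.4, "mAP50": 99.5}
improved = {"precision": 99.71, "recall": 99.46, "mAP50": 99.6}

# Absolute differences in percentage points, rounded to hide float noise.
deltas = {k: round(improved[k] - baseline[k], 2) for k in baseline}
print(deltas)  # {'precision': 0.11, 'recall': 0.06, 'mAP50': 0.1}

# Baseline size implied by the stated reductions.
params_improved, flops_improved = 2.52, 7.9      # M parameters, G FLOPs
params_baseline = round(params_improved + 0.07, 2)
flops_baseline = round(flops_improved + 0.6, 1)
print(params_baseline, flops_baseline)           # 2.59 8.5

# Relative reductions in percent.
print(round(100 * 0.07 / params_baseline, 1))    # 2.7
print(round(100 * 0.6 / flops_baseline, 1))      # 7.1
```

Expressed relative to the baseline, the SCConv change trims about 2.7% of the parameters and about 7.1% of the FLOPs.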

4. Discussion

Deep learning-based object detection is a practical approach to variety classification in agricultural production and research, and it has become a mainstream method in intelligent agriculture. Intelligent recognition and classification of plant varieties not only reduces labor and time costs but also improves recognition and classification accuracy. In plant variety recognition, leaves have the advantages of being easy to collect and having stable features. We therefore used pear leaves as experimental samples to classify pear varieties and, considering both recognition accuracy and efficiency, used YOLOv10 based on the PyTorch framework to perform the variety recognition and classification task. The resulting model can recognize 33 pear varieties: 27 representative varieties of the five main cultivated species and 6 P. hybrid varieties. The pear leaf images were captured with a mobile phone, which made it easy to photograph leaves from many different angles. Photographing leaves in natural environments introduces occlusion, cluttered backgrounds, and uneven lighting into the images; this not only enriched the pear leaf dataset but also improved the practicality of the model. YOLOv10, one of the most recent releases in the YOLO series at the time of this study, improves both efficiency and accuracy. It adopts a holistic efficiency- and accuracy-driven model design that addresses computational redundancy and performance limitations. In YOLO models, the classification head generally has more FLOPs and parameters than the regression head, yet the regression head has a greater impact on detection performance. Simplifying the classification head therefore reduces computational overhead with limited impact on performance.
In addition, YOLOv10 uses pointwise convolution to adjust the channel dimension and depthwise convolution to perform spatial downsampling, which reduces computational cost and improves efficiency.
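To illustrate why decoupling channel adjustment from spatial downsampling saves computation, the sketch below compares a standard 3 × 3 stride-2 convolution with a pointwise-then-depthwise sequence of the kind described above. It assumes that the pointwise convolution runs at stride 1 on the full-resolution input and that the depthwise convolution performs the stride-2 downsampling; it ignores bias and normalization parameters, and the channel and feature-map sizes and function names are hypothetical.

```python
def standard_downsample_cost(c_in: int, c_out: int, h: int, w: int,
                             k: int = 3) -> tuple[int, int]:
    """One k x k convolution with stride 2 (c_in -> c_out).

    Returns (parameters, MACs); the output map is (h // 2) x (w // 2).
    """
    params = k * k * c_in * c_out
    macs = params * (h // 2) * (w // 2)
    return params, macs


def decoupled_downsample_cost(c_in: int, c_out: int, h: int, w: int,
                              k: int = 3) -> tuple[int, int]:
    """Pointwise 1 x 1 conv (stride 1, c_in -> c_out) followed by a
    k x k depthwise conv with stride 2 (one filter per channel)."""
    pw_params = c_in * c_out   # channel mixing only
    dw_params = k * k * c_out  # spatial filtering only
    macs = pw_params * h * w + dw_params * (h // 2) * (w // 2)
    return pw_params + dw_params, macs


# Hypothetical layer: 128 -> 256 channels on an 80 x 80 input.
std = standard_downsample_cost(128, 256, 80, 80)
dec = decoupled_downsample_cost(128, 256, 80, 80)
print(std)  # (294912, 471859200)
print(dec)  # (35072, 213401600)
```

For these sizes the decoupled version needs about 8.4 times fewer parameters and about 2.2 times fewer MACs. Running the pointwise convolution after the depthwise one would save even more MACs, but the ordering assumed here follows the description in the text.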

Overall, YOLOv10 performed well in the pear leaf recognition and classification task. However, analysis of the recognition results for individual varieties showed that the model was less precise in identifying Yuluxiang. We therefore introduced the SCConv module into YOLOv10 to optimize the model. The precision, recall, and mAP of the optimized model all improved, and Yuluxiang was identified more accurately, while the parameters, FLOPs, and model complexity were reduced. These gains indicate that the model still has room for improvement, so further study, testing, and performance optimization are needed.

In future work, we will collect leaf images of more pear varieties to enrich the dataset, train better models with improved generalization and efficiency across more varieties, and deploy the model on mobile devices to support practical pear variety classification and the intelligent pear industry. The method could also be applied to the recognition, classification, and detection of other fruit tree varieties and other plants. However, our model has so far been tested only on the pear leaf image dataset, and its performance in other domains has not yet been evaluated. We plan to apply the model in real-world research and production to assess its practicality. Although YOLOv10 performs well in real-time detection and offers strong accuracy at low latency, some issues remain; for example, balancing model size against accuracy for a specific problem still requires appropriate design [38]. Future work could therefore develop modular model designs tailored to different application scenarios.

5. Conclusions

The recognition and classification of pear varieties play a crucial role in improving the efficiency and professionalism of pear production. In this study, we proposed an efficient and lightweight YOLOv10-based model designed specifically for recognizing and classifying pear varieties from their leaves. To provide enough leaf images for the model to generalize well, we created a pear leaf dataset of 17,656 images covering 33 pear varieties. To make the dataset broad and representative, we selected 6 varieties each from P. bretschneideri, P. pyrifolia, P. ussuriensis, P. communis, and P. hybrid, and 3 varieties from P. sinkiangensis. We annotated the pear leaf images with the LabelImg tool, trained YOLOv10 on the training set, and validated the weights of the trained model on the validation set. To investigate whether YOLOv10 is the most suitable model for pear leaf recognition and classification, we trained YOLOv7, ResNet50, VGG16, and Swin Transformer on the same dataset in the same experimental environment. Starting from pre-trained models accelerates training and convergence, improves network performance, and enhances the model's generalization ability. The results indicate that the YOLOv10 model achieves high precision, recall, and F1-score in pear leaf recognition and classification and, considering both recognition precision and speed, outperforms the other four models. Compared with the other models, YOLOv10 identifies varieties of the five cultivated species and P. hybrid more accurately, and it retains an advantage when varieties are closely related genetically. Overall, the classification model constructed with YOLOv10 performs better at identifying pear varieties.

Because the model performed relatively poorly on Yuluxiang, we attempted to improve YOLOv10 by introducing the SCConv module and testing the model on pear leaves again. The improved model achieved better detection performance with lower complexity, and Yuluxiang's precision increased from 96.4% to 98.3%. This indicates that the model still has room for improvement, which can be pursued in future work. The model established in this study can recognize and classify pear varieties. In pear germplasm resource banks, it can help quickly identify and record the morphological characteristics of different varieties and help researchers manage pear germplasm resources efficiently. In addition, the successful application of YOLOv10 to pear variety recognition can provide a technical reference for the classification and detection of other plants.

Author Contributions

Conceptualization, X.J. and H.H.; methodology, Z.J. and Y.M.; writing—original draft preparation, N.L.; writing—review and editing, X.D. and Y.W. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by the Science and Technology Innovation Project of the Chinese Academy of Agricultural Sciences (CAAS-ASTIP-RIP), the Earmarked Fund for the China Agriculture Research System (CARS-28-01), and the National Natural Science Foundation of China (32272676).

Data Availability Statement

The original data presented in the study are openly available in GitHub at https://github.com/gss-pear/leaf-dataset (accessed on 27 April 2025).

Acknowledgments

The authors would like to acknowledge the contributions of the participants in this study and the support provided by the Earmarked Fund for the China Agriculture Research System.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| CIB | Compact Inverted Block |
| Conv | Convolutional Layer |
| C2f | Cross Stage Partial Fusion with 2 convolutions |
| DW | Depthwise Convolution |
| FFN | Feed-Forward Network |
| PSA | Partial Self-Attention |
| SPPF | Spatial Pyramid Pooling Fast |
| SCDown | Spatial Channel Downsampling |

References

1. Hussain, S.Z.; Naseer, B.; Qadri, T.; Fatima, T.; Bhat, T.A. Pear (Pyrus Communis)—Morphology, Taxonomy, Composition and Health Benefits. In Fruits Grow in Highland Regions of the Himalayas: Nutritional and Health Benefits; Springer International Publishing: Cham, Switzerland, 2021; pp. 35–48.

2. Fruits and Nuts; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2007.

3. Liu, J.; Wang, H.; Fan, X.; Zhang, Y.; Sun, L.; Liu, C.; Fang, Z.; Zhou, J.; Peng, H.; Jiang, J. Establishment and application of a multiple nucleotide polymorphism molecular identification system for grape cultivars. Sci. Hortic. 2024, 325, 112642.

4. Sun, H.; Zhang, S.; Ren, R.; Su, L. Surface defect detection of “Yuluxiang” pear using convolutional neural network with class-balance loss. Agronomy 2022, 12, 2076.

5. Zhang, L.; Yang, Q.; Sun, Q.; Feng, D.; Zhao, Y. Research on the size of mechanical parts based on image recognition. J. Vis. Commun. Image Represent. 2019, 59, 425–432.

6. Wang, Z.; Cui, J.; Zhu, Y. Review of plant leaf recognition. Artif. Intell. Rev. 2023, 56, 4217–4253.

7. Aslam, T.; Qadri, S.; Qadri, S.F.; Nawaz, S.A.; Razzaq, A.; Zarren, S.S.; Ahmad, M.; Rehman, M.U.; Hussain, A.; Hussain, I.; et al. Machine learning approach for classification of Mangifera indica leaves using digital image analysis. Int. J. Food Prop. 2022, 25, 1987–1999.

8. Gu, J.; Wang, Z.; Kuen, J.; Ma, L.; Shahroudy, A.; Shuai, B.; Liu, T.; Wang, X.; Wang, G.; Cai, J.; et al. Recent advances in convolutional neural networks. Pattern Recognit. 2018, 77, 354–377.

9. Li, Z.; Liu, F.; Yang, W.; Peng, S.; Zhou, J. A survey of convolutional neural networks: Analysis, applications, and prospects. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 6999–7019.

10. Zhang, Y.; Peng, J.; Yuan, X.; Zhang, L.; Zhu, D.; Hong, P.; Wang, J.; Liu, Q.; Liu, W. MFCIS: An automatic leaf-based identification pipeline for plant cultivars using deep learning and persistent homology. Hortic. Res. 2021, 8, 172.

11. Liu, C.; Han, J.; Chen, B.; Mao, J.; Xue, Z.; Li, S. A novel identification method for apple (Malus domestica Borkh.) cultivars based on a deep convolutional neural network with leaf image input. Symmetry 2020, 12, 217.

12. Siravenha, A.C.; Carvalho, S.R. Plant classification from leaf textures. In Proceedings of the 2016 International Conference on Digital Image Computing: Techniques and Applications (DICTA), Gold Coast, QLD, Australia, 30 November–2 December 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1–8.

13. Grinblat, G.L.; Uzal, L.C.; Larese, M.G.; Granitto, P.M. Deep learning for plant identification using vein morphological patterns. Comput. Electron. Agric. 2016, 127, 418–424.

14. Zhang, C.; Zhou, P.; Li, C.; Liu, L. A convolutional neural network for leaves recognition using data augmentation. In Proceedings of the 2015 IEEE International Conference on Computer and Information Technology; Ubiquitous Computing and Communications; Dependable, Autonomic and Secure Computing; Pervasive Intelligence and Computing, Liverpool, UK, 26–28 October 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 2143–2150.

15. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

16. Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

17. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

18. Ultralytics YOLOv5. Available online: https://github.com/ultralytics/yolov5 (accessed on 5 March 2025).

19. Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A single-stage object detection framework for industrial applications. arXiv 2022, arXiv:2209.02976.

20. Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

21. Lu, J.; Yu, M.; Liu, J. Lightweight strip steel defect detection algorithm based on improved YOLOv7. Sci. Rep. 2024, 14, 13267.

22. Yang, M.; Yuan, W.; Xu, G. YOLOX target detection model can identify and classify several types of tea buds with similar characteristics. Sci. Rep. 2024, 14, 2855.

23. Susa, J.A.B.; Nombrefia, W.C.; Abustan, A.S.; Macalisang, J.; Maaliw, R.R. Deep learning technique detection for cotton and leaf classification using the YOLO algorithm. In Proceedings of the 2022 International Conference on Smart Information Systems and Technologies (SIST), Nur-Sultan, Kazakhstan, 28–30 April 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–6.

24. Alam Soeb, J.; Jubayer, F.; Tarin, T.A.; Al Mamun, M.R.; Ruhad, F.M.; Parven, A.; Mubarak, N.M.; Karri, S.L.; Meftaul, I.M. Tea leaf disease detection and identification based on YOLOv7 (YOLO-T). Sci. Rep. 2023, 13, 6078.

25. Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J. YOLOv10: Real-time end-to-end object detection. Adv. Neural Inf. Process. Syst. 2024, 37, 107984–108011.

26. Qiu, X.; Chen, Y.; Cai, W.; Niu, M.; Li, J. LD-YOLOv10: A lightweight target detection algorithm for drone scenarios based on YOLOv10. Electronics 2024, 13, 3269.

27. Aktouf, L.; Shivanna, Y.; Dhimish, M. High-Precision Defect Detection in Solar Cells Using YOLOv10 Deep Learning Model. Solar 2024, 4, 639–659.

28. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part V; Springer International Publishing: Berlin/Heidelberg, Germany, 2014; pp. 740–755.

29. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

30. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

31. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

32. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 10012–10022.

33. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

34. Li, J.; Wen, Y.; He, L. SCConv: Spatial and channel reconstruction convolution for feature redundancy. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 6153–6162.

35. Wang, Y.; Yang, C.; Yang, Q.; Zhong, R.; Wang, K.; Shen, H. Diagnosis of cervical lymphoma using a YOLO-v7-based model with transfer learning. Sci. Rep. 2024, 14, 11073.

36. Vijayakumar, A.; Vairavasundaram, S. YOLO-based object detection models: A review and its applications. Multimed. Tools Appl. 2024, 83, 83535–83574.

37. Chen, G.; Hou, Y.; Cui, T.; Li, H.; Shangguan, F.; Cao, L. YOLOv8-CML: A lightweight target detection method for Color-changing melon ripening in intelligent agriculture. Sci. Rep. 2024, 14, 14400.

38. Nguyen, P.T.; Nguyen, G.L.; Bui, D.D. LW-UAV–YOLOv10: A lightweight model for small UAV detection on infrared data based on YOLOv10. Geomatica 2025, 77, 100049.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).