Abstract

Reconstruction of fine-scale information from sparse data is often needed in practical fluid dynamics, where sensors are typically sparse and yet one may need to learn the underlying flow structures or inform predictions through assimilation into data-driven models. Given that sparse reconstruction is inherently an ill-posed problem, the most successful approaches encode the physics into an underlying sparse basis space that spans the manifold, thereby generating well-posedness. To achieve this, one commonly uses a generic orthogonal Fourier basis or a data-specific proper orthogonal decomposition (POD) basis to reconstruct from sparse sensor information at chosen locations. Such a reconstruction problem is well-posed as long as the sensor locations are incoherent and can sample the key physical mechanisms. The resulting inverse problem is easily solved using $\ell_2$ minimization or, if necessary, sparsity-promoting $\ell_1$ minimization. Given the proliferation of machine learning and the need for robust reconstruction frameworks in the face of dynamically evolving flows, we explore in this study the suitability of non-orthogonal bases obtained from extreme learning machine (ELM) autoencoders for sparse reconstruction. In particular, we assess the interplay between sensor quantity and sensor placement for a given system dimension in the accurate reconstruction of canonical fluid flows, in comparison to POD-based reconstruction.

1. Introduction

Multiscale fluid flow phenomena are ubiquitous in engineering and geophysical settings. Depending on the situation, one encounters either a data-sparse or a data-rich problem. In the data-sparse case, the goal is to recover more information about the dynamical system, while in the data-rich case, the goal is to reduce the information into a simpler form for analysis, or to build evolutionary models for prediction and then recover the full state. Thus, both situations require reconstruction of the full state. To expand on this view, for many practical fluid flow applications, accurate simulations may not be feasible for a multitude of reasons, including lack of accurate models, unknown governing equations, and extremely complex boundary conditions. In such situations, measurement data represent the absolute truth and are often acquired from very few probes, which limits the potential for in-depth analysis. A common recourse is to combine such sparse measurements with underlying knowledge of the flow system, either in the form of idealized simulations or a sparse basis from phenomenology or previous knowledge, to recover detailed information. The former approach is termed data assimilation, while we refer to the latter as sparse reconstruction (SR). In the absence of such a mechanism, the only method to identify structural information of the flow is to use phenomenology such as Taylor’s frozen eddy hypothesis. On the other hand, simulations typically represent data-surplus settings that offer the best avenue for analysis of realistic flows: owing to the high density of data probes in the form of computational grid points, one can identify and visualize coherent structures and perform well-converged statistical analysis, including quantification of spatio–temporal coherence and scale content.
With growth in computing power, such simulations often generate big data, contributing to an ever-growing demand for quick analytics and machine learning tools [1] both to sparsify the data, i.e., dimensionality reduction [2,3,4,5], and to reconstruct it without loss of information. Thus, tools for encoding information into a low-dimensional feature space (convolution) complement sparse reconstruction tools that help decode compressed information (deconvolution). This, in essence, is a key aspect of leveraging machine learning for fluid flow analysis [6,7]. Other aspects of machine learning-driven studies of fluid flows include building data-driven predictive models [5,8,9], pattern detection, and classification. This work broadly contributes to the decoding problem of reconstructing high-resolution field data in both data-sparse and data-rich environments.

A primary target of this work is to address the practical problems of flow sensing and control in the field or in a laboratory, where a few affordable probes are expected to sense effectively. Advances in compressive sensing (CS) [10,11,12,13] have opened the possibility of direct compressive sampling [6] of data in real time, without having to collect high-resolution information and then downsample as necessary. Thus, sparse data-driven decoding and reconstruction ideas have been gaining popularity in various manifestations such as gappy proper orthogonal decomposition (GPOD) [14,15], Fourier-based compressive sensing [10,11,12,13], and Gaussian kernel-based Kriging [16,17,18]. The bulk of the literature on this topic focuses on theoretical expositions of the framework and demonstrations of performance. The novelty of this work is two-fold. First, we combine sparse reconstruction principles with machine learning ideas for learning data-driven encoders/decoders using extreme learning machines (ELMs) [19,20,21,22,23], a close cousin of the single-hidden-layer artificial neural network architecture. Second, we explore the performance characteristics of such methods for nonlinear fluid flows within the parametric space of system dimensionality, sensor quantity, and, to a limited extent, sensor placement.

Sparse reconstruction is an inherently ill-posed and under-determined inverse problem in which the number of constraints (i.e., the sensor quantity) is much smaller than the number of unknowns (i.e., the high-resolution field). However, if the underlying system is sparse in a feature space, then the probability of recovering a unique solution increases by solving the reconstruction problem in a lower-dimensional space. The core theoretical developments of such ideas and their first practical applications came in the realm of image compression and restoration [12,24]. Data reconstruction techniques based on the Karhunen–Loève (K–L) procedure with least-squares error minimization ($\ell_2$ minimization), known as gappy proper orthogonal decomposition (POD) or GPOD [14,15,25], were originally developed in the nineties to recover marred faces [25] in images. The fundamental idea is to utilize the POD basis computed offline from the data ensemble to encode the reconstruction problem into a low-dimensional feature space. This way, the sparse data can be used to recover the sparse unknowns in the feature space (i.e., the sparse POD coefficients) by minimizing the $\ell_2$ errors. If the POD bases are not known a priori, an iterative formulation [14,25] to successively approximate the POD basis and the coefficients was proposed. While this approach has been shown to work in principle [14,16,26], it is prone to numerical instabilities and inefficiency. Advancements in the form of a progressive iterative reconstruction framework [16] are effective, but impractical for real-time application. A major issue with POD-basis techniques is that, being data-driven, they are optimally sparse for the given data but cannot be generalized. This requires that they be generated offline and then used for efficient online sparse reconstruction from little sensor data.
However, if training data are unavailable or if the prediction regime is not spanned by the precomputed basis, then the reconstruction becomes untenable.

A way to overcome the above limitations is to use generic bases such as wavelets [27] or Fourier-based kernels. Such choices rest on the assumption that most systems are sparse in the feature space. This is particularly true for image processing applications, but may not be optimal for fluid flows, whose dynamics obey partial differential equations (PDEs). While avoiding the cost of computing the basis offline, such approaches run into sparsity issues because the bases do not optimally encode the underlying dynamical system. In other words, the larger the basis, the more sensors are needed for complete and accurate reconstruction. Thus, once again, the reconstruction problem is ill-posed even when solved in the feature space, because the number of sensors could be smaller than the system dimensionality in the basis space. $\ell_2$ error minimization produces a solution with sparsity matching the dimensionality of the feature space (without being aware of the system rank), thus requiring a sensor quantity exceeding the underlying system dimension. The magic of compressive sensing (CS) [10,11,12,13] lies in its ability to overcome this constraint by seeking a solution whose number of non-zero coefficients can be smaller than the dimensionality of the chosen feature space, using $\ell_1$-norm regularized least-squares reconstruction. Such methods have been successfully applied in image processing using a Fourier or wavelet basis and also to fundamental fluid flows [6,7,9,28,29,30]. Compressive sensing essentially looks for a regularized sparse solution using $\ell_1$-norm minimization of the sparse coefficients by solving a convex optimization problem that is computationally tractable and thereby avoids the tendency of unregularized or $\ell_2$-regularized methods to overfit the data. In recent years, compressive sensing-type $\ell_1$-regularized reconstruction using a POD basis has been successfully employed for reconstruction of sparse particle image velocimetry (PIV) data [6] and pressure measurements around a cylindrical surface [7].
Since the POD bases are data-driven and designed to capture the maximum variance, they represent an optimal basis for reconstruction and require the fewest sensor measurements for a given reconstruction quality. However, the downside is the requirement of highly sampled training data as a one-time cost to build a library of POD bases. Such a framework has been attempted in Reference [7], where POD modes from simulations over a range of Reynolds (Re) numbers in a cylinder wake flow were used to populate a library of bases, which was then used to classify the flow regime based on sparse measurements. To reduce this cost, one could also downsample the measurement data and compute the POD or discrete cosine transform (DCT) bases, as done in Reference [6]. Recent efforts also combine CS with data-driven predictive machine learning tools such as dynamic mode decomposition (DMD) [31,32] to identify flow characteristics and classify flow into different stability regimes [30]. In the above, SR is embedded into the analysis framework for extracting relevant dynamical information.

Both SR and CS can be viewed as generalizations of sparse regression in a higher-dimensional basis space. In this way, one can relate SR to other statistical estimation methods such as Kriging. Here, the data are represented as a realization of a random process that is stationary in the first and second moments. This allows one to interpolate information from known to unknown data locations by employing a kernel (commonly Gaussian) in the form of a variogram model, with the weights learned under conditions of zero bias and minimal variance. The use of Kriging to recover flow-field information from sparse PIV data has been reported [16,17,18] with encouraging results.

The underlying concept in all the techniques described above is that they solve the reconstruction inverse problem in a feature or basis space where the number of unknowns is comparable to the number of constraints (sparse sensors). This mapping is done through a convolution or projection operator that can be constructed from data or kernel functions. Hence, we refer to this class of methods as sparse basis reconstruction (SR), in the same vein as sparse convolution-based Markov models [5,9,33,34]. This requires the existence of an optimal sparse basis space in which the physics can be represented. For many common applications in image and signal processing, this optimal set exists in the form of wavelets and Fourier functions, but these may not be optimally sparse for fluid flow solutions to PDEs. This is why data-driven bases such as POD/principal component analysis (PCA) [3,4] are popular. Further, since they are optimally sparse, such methods can reconstruct from very little data compared to, say, Kriging, which employs generic Gaussian kernels. In this article, we introduce for the first time the use of ELM-based encoders as a basis for sparse reconstruction. As part of an earlier effort [35], we explored how sensor quantity and placement, and the system dimensionality, impact the accuracy of the POD-based sparse reconstructed field. In this effort, we extend this analysis to two different choices of data-driven sparse basis, namely POD and ELM. In particular, we aim to (i) explore whether the relationship between system sparsity and the sensor quantity required for accurate reconstruction of the fluid flow is independent of the basis employed and (ii) understand the relative influence of sensor placement on the different choices of SR basis.

The rest of the manuscript is organized as follows. In Section 2, we review the basics of sparse reconstruction theory, the different choices of data-driven bases including POD and ELM, and the role of the measurement locations, and we summarize the algorithm employed for SR. In Section 3, we discuss how the training data are generated. In Section 4, we discuss the results of our analysis of SR of a cylinder wake flow using both POD and ELM bases. This is followed by a summary of the major conclusions of this study in Section 5.

2. The Sparse Reconstruction Problem

Let the high-resolution data representing the state of the flow system at any given instant be denoted by $x \in \mathbb{R}^N$, and its corresponding sparse representation be given by $\tilde{y} \in \mathbb{R}^P$ with $P \ll N$. Then, the sparse reconstruction problem is to recover $x$ when given $\tilde{y}$, along with information on the sensor locations in the form of the measurement matrix $C \in \mathbb{R}^{P \times N}$, as shown in Equation (1): $\tilde{y} = Cx$. The measurement matrix C determines how the sparse data are collected from $x$. The variables P and N are the number of sparse measurements and the dimension of the high-resolution field, respectively.

In this article, we focus on vectors $x$ that have a sparse representation in a basis space $\Phi \in \mathbb{R}^{N \times K}$ such that $K \ll N$ and $x = \Phi a$, yielding $\tilde{y} = C\Phi a$. Here, $a \in \mathbb{R}^K$ represents the appropriate basis coefficient vector. Naturally, when one loses information about the system, the recovery of said information is not absolute, as the reconstruction problem is ill-posed, i.e., there are more unknowns than equations in Equation (1). Even if one were to estimate $x$ by computing the Moore–Penrose pseudo-inverse of C as $\hat{x} = C^{+}\tilde{y}$ using a least-squares error minimization procedure (Equation (2)), it does not lead to stable solutions, as the system is ill-posed (under-determined).
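The failure of the pseudo-inverse route in Equations (1) and (2) can be seen in a minimal NumPy sketch. It assumes a random test vector and a random single-pixel measurement matrix; all sizes and variable names are illustrative rather than taken from this study. The estimate reproduces the P measurements exactly but is zero at every unmeasured point, and adding any null-space component of C yields another field that fits the data equally well.

```python
import numpy as np

rng = np.random.default_rng(0)
N, P = 50, 10                         # illustrative full-state and sensor counts
x_true = rng.standard_normal(N)       # hypothetical high-resolution state x

# Single-pixel measurement matrix C (P x N): each row picks one grid point.
sensor_idx = rng.choice(N, size=P, replace=False)
C = np.zeros((P, N))
C[np.arange(P), sensor_idx] = 1.0
y = C @ x_true                        # sparse measurements, Equation (1)

# Minimum-norm least-squares estimate via the Moore-Penrose pseudo-inverse, Equation (2).
C_pinv = np.linalg.pinv(C)
x_hat = C_pinv @ y

# Adding any component from the null space of C leaves the measurements unchanged.
z = rng.standard_normal(N)
x_alt = x_hat + (np.eye(N) - C_pinv @ C) @ z

unmeasured = np.setdiff1d(np.arange(N), sensor_idx)
print(np.allclose(C @ x_hat, y), np.allclose(C @ x_alt, y))   # True True
print(np.allclose(x_hat[unmeasured], 0.0))                     # True: nothing learned off-sensor
print(np.linalg.norm(x_hat - x_true) > 0.1)                    # True: the field is not recovered
```

Both `x_hat` and `x_alt` are exact fits, which is the non-uniqueness that motivates moving the problem into a sparse basis.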

2.1. Sparse Reconstruction Theory

The theory of sparse reconstruction has strong foundations in the field of inverse problems [36], with applications in diverse fields of study such as geophysics [37,38] and image processing [39,40]. In this section, we formulate the reconstruction problem as it has been presented in the CS literature [10,12,41,42,43]. Many signals tend to be “compressible”, i.e., they are sparse in some K-sparse basis Φ as shown below:

where $\Phi \in \mathbb{R}^{N \times N_b}$ and $a \in \mathbb{R}^{N_b}$ with K non-zero elements. In the sparse reconstruction formulation above, $\Phi \in \mathbb{R}^{N \times N_b}$ is used instead of $\Phi \in \mathbb{R}^{N \times K}$ because the sparsity K of the system is not known a priori. Consequently, a more exhaustive basis set of dimension $N_b > K$ is typically employed. To represent N-dimensional data, one can at most use N basis vectors, i.e., $N_b \le N$. In practice, the number of basis vectors need not be N and can be represented by $N_b < N$, as only K of them are needed to represent the acquired signal up to a desired quality. This is typically the case when Φ is composed of optimal data-driven basis vectors such as POD modes. The reconstruction problem is then recast as the identification of these K coefficients. In many practical situations, $N_b$ and K are not known a priori and are typically user inputs. Standard transform coding [27] practice in image compression involves collecting a high-resolution sample, transforming it to a Fourier or wavelet basis space where the data are sparse, and retaining the K-sparse structure while discarding the rest of the information. This is the basis of the JPEG and JPEG-2000 compression standards [27], where the acronym JPEG stands for Joint Photographic Experts Group. This sample-then-compress mechanism still requires acquisition of high-resolution samples and processing them before reducing the dimensionality. This is highly challenging, as handling large amounts of data is difficult in practice due to demands on processing power, storage, and time.
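The sample-then-compress idea of transform coding can be illustrated with a short sketch that keeps only the K largest-magnitude coefficients of a one-dimensional signal in a Fourier basis, using `numpy.fft.rfft` and `numpy.fft.irfft`. The two-tone test signal and the function name `k_sparse_compress` are hypothetical choices for illustration. Note that the full signal must be sampled before it can be compressed, which is exactly the cost that compressive sensing aims to avoid.

```python
import numpy as np

def k_sparse_compress(signal, K):
    """Keep only the K largest-magnitude Fourier coefficients (sample, then compress)."""
    coeffs = np.fft.rfft(signal)                   # transform the full sample to a Fourier basis
    keep = np.argsort(np.abs(coeffs))[-K:]         # indices of the K largest coefficients
    sparse = np.zeros_like(coeffs)
    sparse[keep] = coeffs[keep]                    # discard all other coefficients
    return np.fft.irfft(sparse, n=signal.size), sparse

# Illustrative signal that is exactly 2-sparse in the Fourier basis.
t = np.linspace(0.0, 1.0, 256, endpoint=False)
signal = np.sin(2 * np.pi * 5 * t) + 0.5 * np.sin(2 * np.pi * 12 * t)
approx, sparse = k_sparse_compress(signal, K=2)
print(np.count_nonzero(sparse))                    # 2
print(np.allclose(approx, signal, atol=1e-10))     # True
```

For a signal that is only approximately sparse, the same code gives a lossy approximation whose error shrinks as K grows.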

Compressive sensing [10,12,41,42,43] focuses on direct sparse sensing based inference of the K-sparse coefficients by essentially combining the steps in Equations (1) and (3) as below:

where $\Theta = C\Phi \in \mathbb{R}^{P \times N_b}$ is the map between the basis coefficients a that represent the data in a feature space and the sparse measurements $\tilde{y}$ in the physical space. The challenge in solving for $x$ using the under-determined Equation (1) is that C is ill-conditioned and $x$ in itself is not sparse. However, when $x$ is sparse in Φ, the reconstruction using Θ in Equation (4) becomes practically feasible by solving for an a that is K-sparse. Thus, one effectively solves for K unknowns (in a) using P constraints (given by $\tilde{y}$) by computing a sparse solution for a as per Equation (7). This is achieved by minimizing the corresponding $\ell_s$-norm regularized least-squares error with s chosen appropriately, after which $x$ is recovered from Equation (3). The choice $s = 2$ yields the $\ell_2$-norm regularized reconstruction of $x$ by penalizing the larger elements of a. The $\ell_2$-regularized method finds the a that minimizes the expression shown in Equation (5):

Using the regularized left pseudo-inverse of Θ, Equation (4) becomes:

where a can be approximated as the solution to the normal equation $(\Theta^{T}\Theta + \alpha I)\,a = \Theta^{T}\tilde{y}$. Here, α is the regularization parameter and I is the identity matrix of appropriate dimensions. This regularized least-squares solution procedure is nearly identical to the original GPOD algorithm developed by Everson and Sirovich [25] when Φ is chosen as the POD basis. However, in GPOD the measurement vector retains the full length N, with zeros as placeholders for all the missing elements, whereas the above formulation retains only the measured data points. The GPOD formulation summarized in Section 2.5 is plagued by issues that are beyond the scope of this article. While this approach provides numerical stability and improved predictions, it rarely, if ever, finds the K-sparse solution. A natural way to enhance the sparsity of a is to minimize $\|a\|_0$, i.e., minimize the number of non-zero elements such that $\Theta a = \tilde{y}$ is satisfied. It has been shown [44] that with $P = K + 1$ ($P > K$ in general) independent measurements, one can recover the sparse coefficients with high probability using $\ell_0$ reconstruction. This condition can be heuristically interpreted as each measurement needing to excite a different basis vector so that its coefficient can be uniquely identified. If two or more measurements excite the same basis vector, then additional measurements may be needed to produce an acceptable reconstruction. On the other hand, for $P \le K$ independent measurements, the probability of recovering the sparse solution is highly diminished. Nevertheless, $\ell_0$ reconstruction is a computationally complex, NP-hard, and poorly conditioned problem with no stability guarantees.
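The $\ell_2$-regularized estimate can be sketched in a few lines of NumPy by solving the normal equation $(\Theta^{T}\Theta + \alpha I)\,a = \Theta^{T}\tilde{y}$ directly, with $\Theta = C\Phi$. This is an illustration under stated assumptions rather than the implementation used in this study: the orthonormal basis `Phi` is a random stand-in for a POD basis, the sensors are random single-pixel locations, and the value of `alpha` is arbitrary.

```python
import numpy as np

def l2_reconstruct(y, C, Phi, alpha=1e-8):
    """Ridge (l2-regularized) least-squares estimate of the basis coefficients."""
    Theta = C @ Phi                                    # P x Nb map, Equation (4)
    lhs = Theta.T @ Theta + alpha * np.eye(Phi.shape[1])
    a = np.linalg.solve(lhs, Theta.T @ y)              # regularized normal equation
    return Phi @ a, a                                  # full field via Equation (3)

rng = np.random.default_rng(1)
N, Nb, P = 200, 10, 30                                 # illustrative sizes with P >= Nb
Phi, _ = np.linalg.qr(rng.standard_normal((N, Nb)))   # assumed stand-in orthonormal basis
a_true = rng.standard_normal(Nb)
x_true = Phi @ a_true

idx = rng.choice(N, size=P, replace=False)            # random single-pixel sensors
C = np.eye(N)[idx]                                     # selected rows of the identity
x_hat, a_hat = l2_reconstruct(C @ x_true, C, Phi)
print(np.max(np.abs(x_hat - x_true)))                  # small when Theta is well conditioned
```

With $P \ge N_b$ and a well-conditioned Θ, the coefficients are recovered up to the small bias introduced by α. If P is reduced below $N_b$, the same code still returns an answer, but it is in general no longer the true coefficient vector.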

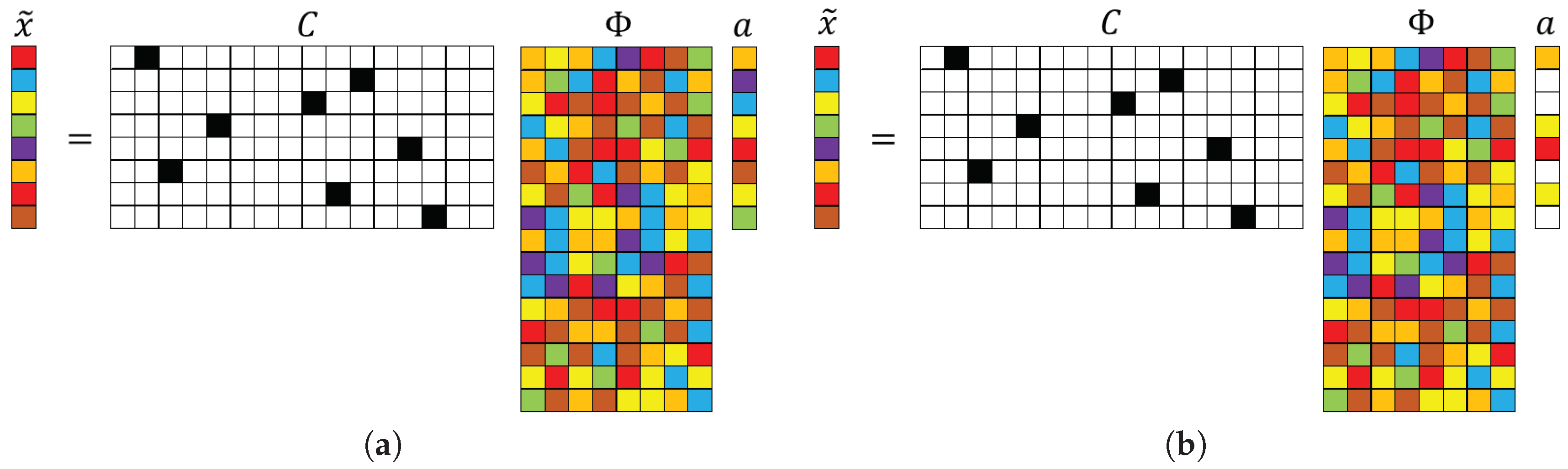

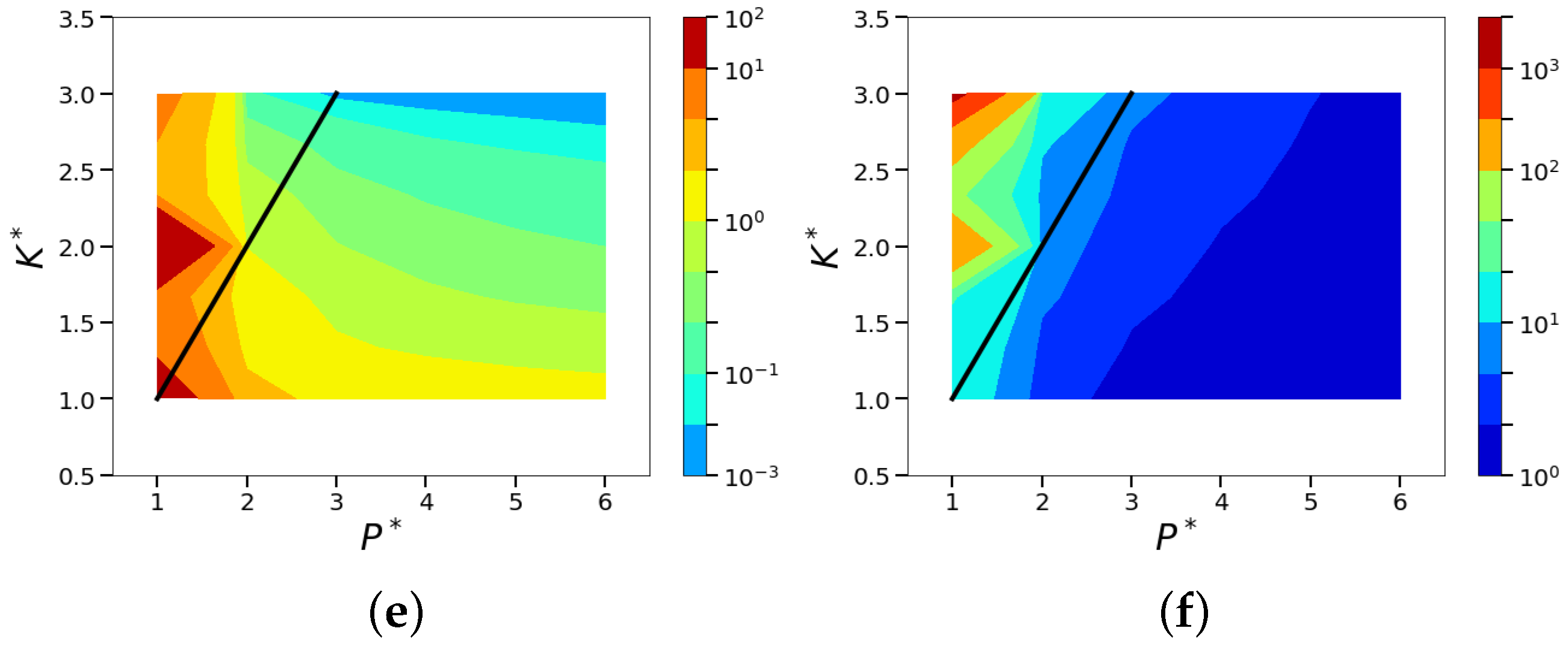

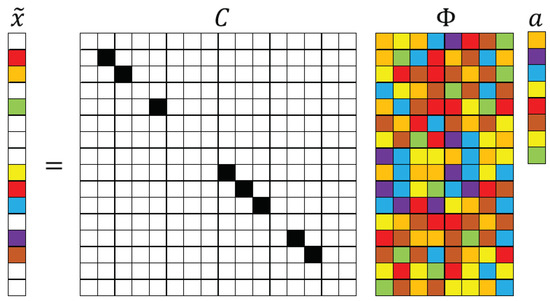

The popularity of compressed sensing arises from theoretical advances [45,46,47,48] guaranteeing near-exact reconstruction of the uncompressed information by solving for the K sparsest coefficients using $\ell_1$ methods. $\ell_1$ reconstruction is a relatively simpler convex optimization problem (compared to $\ell_0$) and is solvable using linear programming techniques such as basis pursuit [10,41,49] and shrinkage methods [50]. Theoretically, one can perform a brute-force search to locate the largest K coefficients of a, but the computational effort increases exponentially with the dimension. To overcome this burden, a host of greedy algorithms [11,13,51] have been developed to solve the $\ell_1$ reconstruction problem in Equation (7) with substantially reduced complexity. However, the price one pays here is that $P \ge \mathcal{O}(K \log(N_b/K))$ measurements are needed [10,41,45] to exactly reconstruct the K-sparse vectors using this approach. The schematics of both the $\ell_2$- and $\ell_1$-based formulations are illustrated in Figure 1.
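Greedy methods avoid the combinatorial search by adding one basis vector at a time. A minimal sketch of orthogonal matching pursuit (OMP), one such greedy method, is given below. It assumes a random Gaussian matrix as a stand-in for $\Theta = C\Phi$ and a synthetic K-sparse coefficient vector; the names `omp` and `tol` are illustrative. With these sizes ($P = 80$, $N_b = 100$, $K = 3$), support recovery is expected with high probability, but it is not guaranteed for every random draw.

```python
import numpy as np

def omp(Theta, y, K, tol=1e-10):
    """Orthogonal matching pursuit: greedily select up to K columns of Theta to explain y."""
    norms = np.linalg.norm(Theta, axis=0)          # normalize correlations per column
    residual = np.array(y, dtype=float)
    support = []
    coef = np.zeros(0)
    for _ in range(K):
        if np.linalg.norm(residual) < tol:
            break                                  # measurements already explained
        j = int(np.argmax(np.abs(Theta.T @ residual) / norms))
        if j in support:
            break                                  # no new column improves the fit
        support.append(j)
        # Least-squares refit on all selected columns, then update the residual.
        coef = np.linalg.lstsq(Theta[:, support], y, rcond=None)[0]
        residual = y - Theta[:, support] @ coef
    a = np.zeros(Theta.shape[1])
    a[support] = coef
    return a

rng = np.random.default_rng(2)
P, Nb, K = 80, 100, 3
Theta = rng.standard_normal((P, Nb))               # assumed random stand-in for C @ Phi
a_true = np.zeros(Nb)
support_true = rng.choice(Nb, size=K, replace=False)
a_true[support_true] = rng.uniform(1.0, 2.0, K) * rng.choice([-1.0, 1.0], K)
a_hat = omp(Theta, Theta @ a_true, K)
print(sorted(np.flatnonzero(a_hat).tolist()), sorted(support_true.tolist()))
```

Each iteration costs one correlation with all columns plus a small least-squares solve, which is far cheaper than testing every K-subset of the $N_b$ columns.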

Figure 1.

Schematic illustration of (a) $\ell_2$ and (b) $\ell_1$ minimization reconstruction for sparse recovery using a single-pixel measurement matrix. The numerical values in C are represented by colors: black (1), white (0). The other colors represent numbers that are neither 0 nor 1. In the above schematics , , and , where . The number of colored cells in a represents the system sparsity K. for and for .

Solving the $\ell_1$-regularized norm minimization problem shown in Equation (7) is more complicated than the $\ell_2$ minimization described in Equation (5). This is because, unlike the cost function minimized in Equation (5), the cost function in Equation (7) is non-differentiable wherever any $a_i = 0$, which necessitates an iterative solution instead of a closed-form solution. Further, the minimization of the cost function is an unconstrained optimization problem that is commonly converted into a constrained optimization, as shown in Equation (8). Here, t is a user-defined sparsity knob related to α. The goal of the regularization parameter α in Equations (5) and (7) is to ‘shrink’ the coefficients. However, in the $\ell_2$ case, larger values of α tend to make the different coefficients equal, as opposed to the $\ell_1$-regularized case, where larger values of α drive many coefficients exactly to zero.

This constrained optimization in Equation (8) is quadratic in a and therefore yields a quadratic programming problem with the feasible region bounded by a polyhedron (in the space of a). Solution methodologies for such $\ell_1$-regularized least-squares regression fall into two classes: (i) the least absolute shrinkage and selection operator, or LASSO [50], and (ii) basis pursuit denoising [49]. LASSO and its variants essentially convert the constrained formulation into a set of linear constraints. Recently popular approaches include greedy methods such as orthogonal matching pursuit (OMP) [13,28] and interior point methods [52]. A simpler iterative sequentially thresholded least-squares framework is suggested by Brunton et al. [53] as a robust method. The idea here is to achieve ‘shrinkage’ by repeatedly zeroing out the coefficients smaller than a chosen threshold hyperparameter and refitting the remaining ones.
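The sequentially thresholded least-squares idea attributed above to Brunton et al. [53] can be sketched as follows. This is an illustrative version, not their code: the threshold, iteration count, and test problem (an over-determined random Θ with three non-zero coefficients and small measurement noise) are assumptions. Hard thresholding is a heuristic surrogate for the $\ell_1$ penalty rather than an exact solver of Equation (7).

```python
import numpy as np

def stls(Theta, y, threshold, n_iter=10):
    """Sequentially thresholded least squares: prune small coefficients, refit the rest."""
    a = np.linalg.lstsq(Theta, y, rcond=None)[0]      # initial least-squares estimate
    for _ in range(n_iter):
        small = np.abs(a) < threshold                 # coefficients to zero out
        a[small] = 0.0
        big = ~small
        if not big.any():
            break                                     # everything was pruned
        a[big] = np.linalg.lstsq(Theta[:, big], y, rcond=None)[0]   # refit the survivors
    return a

rng = np.random.default_rng(3)
P, Nb = 100, 20
Theta = rng.standard_normal((P, Nb))                  # assumed over-determined stand-in for C @ Phi
a_true = np.zeros(Nb)
a_true[[2, 7, 15]] = [1.5, -2.0, 0.8]
y = Theta @ a_true + 0.01 * rng.standard_normal(P)    # noisy measurements
a_hat = stls(Theta, y, threshold=0.1)
print(np.flatnonzero(a_hat).tolist())                 # [2, 7, 15]
```

The plain least-squares fit spreads small spurious values across all 20 coefficients because of the noise; thresholding removes them while the refit keeps the surviving coefficients unbiased.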

In summary, there are three parameters ($N_b$, K, and P) that impact the reconstruction framework. $N_b$ represents the candidate basis space dimension employed for this reconstruction and can at most equal N, i.e., $N_b \le N$. K represents the desired system sparsity and is tied to the desired quality of reconstruction. That is, K is chosen such that if these features are predicted accurately, then the achieved reconstruction is satisfactory. The sparser a system, the smaller K is for a desired reconstruction quality. P represents the available quantity of sensors provided as input to the problem. The interplay of $N_b$, K, and P determines the choice of the algorithm employed, i.e., whether the reconstruction is based on $\ell_2$ or $\ell_1$ minimization, and the reconstruction quality, as summarized in Table 1. In general, K is not known a priori and is tied to the desired quality of reconstruction. N and $N_b$ are chosen by the practitioner and depend on the feature space in which the reconstruction will happen. N is the preferred dimension of the reconstructed state, and $N_b$ is the dimension of the candidate basis space in which the reconstruction problem is formulated. As shown in Table 1, for the case with , the best reconstruction will predict the K weights correctly (using $\ell_2$ for the over-determined problem) and can be as bad as P when (using minimization for the under-determined problem). In all the cases explored in this discussion, the underlying assumption of $K < N_b$ is used. When and , the worst-case prediction will be K weights (for a desired sparsity K) as compared to P weights for the best case (maximum possible sparsity) using minimization. With and , the best-case reconstruction will be P weights using . For , the best reconstruction will predict weights, as compared to K for the worst case. Thus, in cases the desired reconstruction sparsity is always realized, whereas in cases 2 and 3, the sensor quantity determines the outcome.

Table 1.

The choice of sparse reconstruction algorithm based on problem design using parameters P (sensor sparsity), K (targeted system sparsity), and $N_b$ (candidate basis dimension).

All of the above sparse recovery estimates are conditional upon the measurement basis (rows of C) being incoherent with respect to the sparse basis Φ. In other words, the measurement basis cannot sparsely represent the elements of the “data basis”. This is usually accomplished by using random sampling for sensor placement, especially when Φ is made up of Fourier functions or wavelets. If the basis functions are orthonormal, such as wavelet and POD bases, one can discard the majority of the small coefficients in a (setting them to zero) and still retain a reasonably accurate reconstruction. The mathematical explanation of this conclusion has been previously shown in Reference [12]. However, it should be noted that incoherency is a necessary, but not sufficient, condition for exact reconstruction. Exact reconstruction requires optimal sensor placement to capture the most information for a given flow field. In other words, incoherency alone does not guarantee optimal reconstruction, which also depends on sensor placement and quantity.
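A common way to quantify incoherence in the CS literature is the mutual coherence $\mu(C, \Phi) = \sqrt{N}\,\max_{i,j} |\langle c_i, \phi_j \rangle|$ between the unit-norm rows $c_i$ of C and the unit-norm columns $\phi_j$ of Φ, which ranges from 1 (maximally incoherent) to $\sqrt{N}$ (maximally coherent). The sketch below evaluates it for single-pixel sensors against an orthonormal cosine (DCT-II) basis and against the identity basis. The helper names and sensor indices are illustrative assumptions.

```python
import numpy as np

def mutual_coherence(C, Phi):
    """mu = sqrt(N) * max |<c_i, phi_j>| over unit-norm rows of C and columns of Phi."""
    Cn = C / np.linalg.norm(C, axis=1, keepdims=True)
    Pn = Phi / np.linalg.norm(Phi, axis=0, keepdims=True)
    return np.sqrt(C.shape[1]) * np.max(np.abs(Cn @ Pn))

def dct_basis(N):
    """Orthonormal DCT-II basis; column k is the k-th cosine mode."""
    n = np.arange(N)[:, None]
    k = np.arange(N)[None, :]
    Phi = np.sqrt(2.0 / N) * np.cos(np.pi * (n + 0.5) * k / N)
    Phi[:, 0] = np.sqrt(1.0 / N)
    return Phi

N = 64
C = np.eye(N)[[3, 17, 40]]                     # three illustrative single-pixel sensors
print(mutual_coherence(C, dct_basis(N)))       # at most sqrt(2): nearly incoherent
print(mutual_coherence(C, np.eye(N)))          # 8.0 = sqrt(64): maximally coherent
```

Point sensors are nearly incoherent with global cosine modes because every mode is spread over the whole domain, whereas they are maximally coherent with a basis of localized spikes.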

2.2. Data-Driven Sparse Basis Computation Using POD

In the SR framework, bases such as POD modes, Fourier functions, and wavelets [10,12] can be used to generate low-dimensional representations for both $\ell_2$- and $\ell_1$-based methods. While an exhaustive study on the effect of different basis choices on reconstruction performance is potentially useful, in this study we compare SR using POD and ELM bases. A similar effort using $\ell_1$ minimization has been reported in Reference [6], where a comparison between the discrete cosine transform and POD bases was performed.

Proper orthogonal decomposition (POD), also known as principal component analysis (PCA) or singular value decomposition (SVD), is a dimensionality reduction technique that computes a linear combination of low-dimensional basis functions (POD modes) and weights (POD coefficients) from snapshots of experimental or numerical data [2,4] through eigen-decomposition of the spatial correlation tensor of the data. It was introduced in the turbulence community by Lumley [54] to extract coherent structures in turbulent flows. The resulting singular vectors or POD modes represent an orthogonal basis that maximizes the energy captured from the flow field. For this reason, such eigenfunctions are considered optimal in terms of energy capture, although other optimality constraints are theoretically possible. Taking advantage of the orthogonality, one can project each snapshot of data onto the POD basis in a Galerkin sense to deduce coefficients that represent the evolution over time in the POD feature space. The optimality of the POD basis also allows one to effectively reconstruct the full-field information with knowledge of very few coefficients, a feature that is attractive for solving sparse reconstruction problems such as in Equation (4). However, this is contingent on the spectrum of the spatial correlation tensor of the data having a sufficiently rapid decay of the eigenvalues, i.e., supporting a low-dimensional representation. This is typically not true in the case of turbulent flows, which show a very gradual decay of energy across the singular values. Further, in such dynamical systems, the small scales with low energy can still be dynamically important and will need to be reconstructed, thus requiring a significant number of sensors.

Since the eigen-decomposition of the spatial correlation tensor of the flow field requires handling a system of dimension N, it incurs a significant computational expense. An alternative is to compute the POD modes using the method of snapshots [55], where the eigen-decomposition problem is reformulated in a reduced-dimension framework (assuming the number of snapshots in time is smaller than the spatial dimension), as summarized below. Consider that $X \in \mathbb{R}^{N \times M}$ is the full-field representation with only the fluctuating part, i.e., the temporal mean is taken out of the data. N is the dimension of the full-field representation and M is the number of snapshots. The procedure involves computation of the $M \times M$ temporal correlation matrix as:

The resulting correlation matrix is symmetric and an eigendecomposition problem can be formulated as:

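where the eigenvectors are given by the columns of $V = [v_1, v_2, \ldots, v_M]$ and the diagonal elements of Λ are the eigenvalues $\lambda_1, \lambda_2, \ldots, \lambda_M$. Typically, both the eigenvalues and the corresponding eigenvectors are sorted in descending order such that $\lambda_1 \ge \lambda_2 \ge \cdots \ge \lambda_M$. The POD modes Φ and coefficients a can then be computed as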

One can represent the field X as a linear combination of the POD modes as shown in Equation (3) and leverage orthogonality to compute the Moore–Penrose pseudo-inverse, i.e., $\Phi^{+} = \Phi^{T}$. Thus, the POD coefficients are computed as shown in Equation (12):
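The method of snapshots can be sketched in NumPy as follows, assuming the standard relations $\Phi = X V \Lambda^{-1/2}$ for the modes and $a = \Phi^{T} X$ for the coefficients. The synthetic rank-5 data, the tolerance `rel_tol`, and the function name are illustrative assumptions; an SVD of X (`np.linalg.svd`) gives the same modes up to sign.

```python
import numpy as np

def pod_snapshots(X, rel_tol=1e-12):
    """POD via the method of snapshots; X is N x M with mean-subtracted snapshots as columns."""
    R = X.T @ X                                  # M x M temporal correlation matrix
    lam, V = np.linalg.eigh(R)                   # symmetric: real eigenpairs, ascending order
    order = np.argsort(lam)[::-1]                # re-sort in descending order
    lam, V = lam[order], V[:, order]
    keep = lam > rel_tol * lam[0]                # drop numerically zero eigenvalues
    lam, V = lam[keep], V[:, keep]
    Phi = (X @ V) / np.sqrt(lam)                 # POD modes with orthonormal columns
    A = Phi.T @ X                                # POD coefficients, since Phi^+ = Phi^T
    return Phi, A, lam

rng = np.random.default_rng(4)
N, M, r = 500, 40, 5
X = rng.standard_normal((N, r)) @ rng.standard_normal((r, M))   # synthetic rank-5 data
X -= X.mean(axis=1, keepdims=True)                               # remove the temporal mean
Phi, A, lam = pod_snapshots(X)
print(Phi.shape, A.shape)                                        # (500, 5) (5, 40)
```

Only an $M \times M$ eigenproblem is solved, which is the saving that makes the snapshot approach attractive when $M \ll N$.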

It is worth mentioning that subtracting the temporal mean from the input data is not critical to the success of this procedure. As shown in Appendix B, retaining the mean of the data during the SVD computation generates an extra mean mode which modifies the energy spectrum. To illustrate, for the low-dimensional cylinder wake used in this study, retaining the dominant mode when performing SVD with the mean captures energy, whereas for the SVD without the mean, the dominant mode captures energy. However, as results in Appendix B show, it does not impact the reconstruction performance. In fact, during practical application, one does not remove the mean because it is not known a priori.

Using the snapshot procedure for the POD/SVD computation fixes the maximum number of POD basis vectors to M, which is typically much smaller than the dimension of the full state vector, N. If one wants to reduce the dimension further, a criterion based on energy capture is devised so that the modes carrying the least amount of energy are truncated, leaving a basis of smaller dimension. For many common fluid flows, the first few POD modes and coefficients are sufficient to capture almost all the relevant dynamics. However, for turbulent flows with a large separation of scales, a significant number of POD modes will need to be retained.
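An energy-capture criterion can be as simple as choosing the smallest number of leading modes whose eigenvalues sum to a chosen fraction of the total. The sketch below assumes, as is standard for POD, that each eigenvalue is proportional to the energy of its mode; the 90% target, the spectrum, and the function name are illustrative.

```python
import numpy as np

def modes_for_energy(eigenvalues, fraction=0.99):
    """Smallest number of leading modes whose eigenvalues capture `fraction` of the total energy."""
    lam = np.sort(np.asarray(eigenvalues, dtype=float))[::-1]   # descending order
    cumulative = np.cumsum(lam) / lam.sum()                     # cumulative energy fraction
    k = int(np.searchsorted(cumulative, fraction)) + 1          # first index reaching the target
    return min(k, lam.size)                                     # guard against round-off at 1.0

# Hypothetical spectrum: cumulative energy is 50%, 80%, 95%, 99%, 100%.
print(modes_for_energy([50.0, 30.0, 15.0, 4.0, 1.0], fraction=0.9))   # 3
```

A flat spectrum, as in turbulent flows, drives the returned count toward M, which is the situation the paragraph above warns about.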

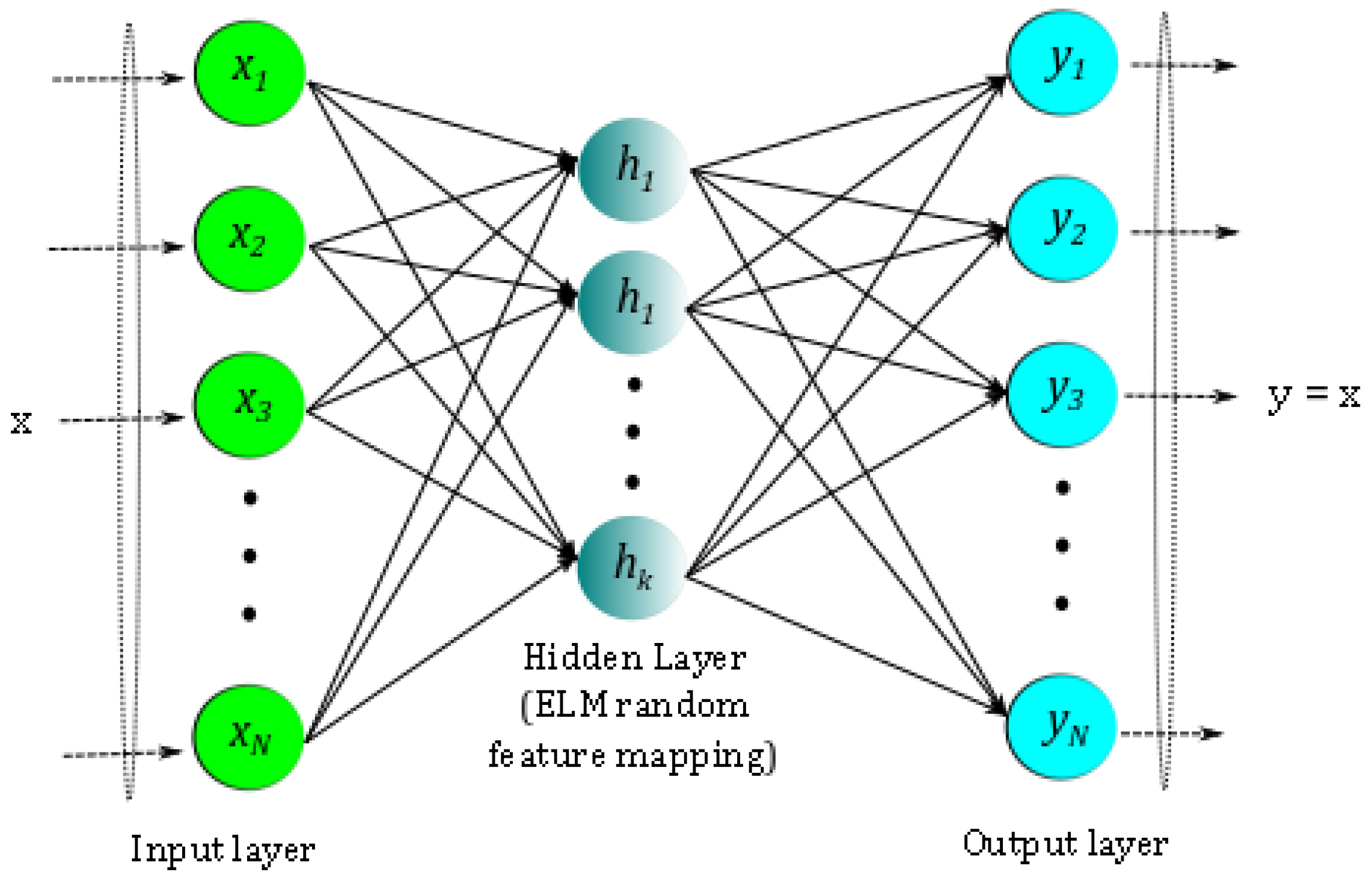

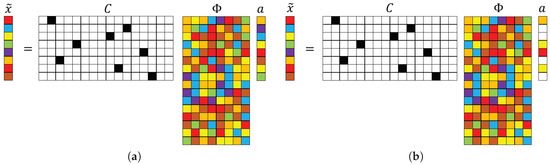

2.3. Data-Driven Sparse Basis Computation Using an ELM Autoencoder

While POD represents the energy-optimal basis for a given dataset [56,57], it tends to be highly data-specific and does not span highly evolving flows [5]. As an alternative, one typically reconstructs data from systems with no prior knowledge using a generic basis such as Fourier functions [10,12]. Another alternative is to use radial basis functions (RBFs) or Gaussian function regression to represent the data, which are known to be more robust representations of dynamically evolving flow conditions [5]. In this work, we adopt this flavor by leveraging extreme learning machines (ELMs) [19,20], which are regressors employing a Gaussian prior. An extension of this framework to learn encoding–decoding maps of given data using an autoencoder formulation was proposed by Zhou et al. [21,22,23]. An ELM is a single-hidden-layer feedforward neural network (SLFN) with randomly generated weights for the hidden nodes and bias terms, followed by the application of an activation function. Finally, the output weights are computed by fitting to the output data. If we set the number of hidden nodes smaller than the dimensionality of the input data, then we obtain compressed feature representations of the original data as the output weights of the ELM autoencoder, as shown in Figure 2. Given data $X \in \mathbb{R}^{N \times M}$ with N as the full state dimension and M as the number of snapshots (or simply $x_j \in \mathbb{R}^N$ for $j = 1, \ldots, M$), we map the full state to a K-dimensional hidden-layer feature space using the ELM autoencoder, as shown below in Figure 2 and Equation (13).

Figure 2.

Schematic of the extreme learning machine (ELM) autoencoder network. In this architecture, the output features are the same as input features.

In the above equation, $x_j \in \mathbb{R}^N$ is the input data, j is the snapshot index, N is the dimension of the input flow state, $w_i$ are the random input weights that map input features to the hidden layer, $b_i$ is the random bias, $f$ is the activation function operating on the linearly transformed input state, and $\Phi_i$ are the weights that map hidden layer features to the output features. Additionally, $h_i(x_j)$ represents the map from the input state to the ith hidden layer feature. In this autoencoder architecture, the output and input features are identical. An example of the activation function is the radial basis function, as shown in Equation (14):

In matrix form, Equation (13), which is linear in the output weights, can be written as in Equation (15), where $H$ is the matrix of outputs (with elements $h_i(x_j)$) from the hidden layer (Equation (16)) and $h(x_j)$ represents the output from the K hidden nodes as a row vector for the jth input snapshot $x_j$. $h(x)$ is also called the feature transformation that maps the data $x$ from the N-dimensional input space to the K-dimensional hidden layer feature space.
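To make the training procedure of Equations (13)–(16) concrete, here is a minimal, self-contained sketch in plain Python. It is not the authors' implementation. Assumptions: snapshots are stored as rows (the transpose of X); input weights and biases are drawn uniformly from [-1, 1]; the activation is taken to be the Gaussian f(z) = exp(-z²) applied to the linear transform, as one RBF-like choice; and the output weights are obtained by ridge-regularized least squares, since the exact form of Equation (19), including any regularization, is not reproduced here. All function names are hypothetical.

```python
import math
import random


def matmul(A, B):
    """Product of two row-major matrices stored as lists of lists."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A):
    """Transpose of a row-major matrix."""
    return [list(col) for col in zip(*A)]


def solve(A, B):
    """Solve A Y = B for a square, nonsingular A (Gauss-Jordan, partial pivoting)."""
    n = len(A)
    aug = [list(ra) + list(rb) for ra, rb in zip(A, B)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(aug[r][c]))
        aug[c], aug[p] = aug[p], aug[c]
        pivot = aug[c][c]
        aug[c] = [v / pivot for v in aug[c]]
        for r in range(n):
            if r != c:
                factor = aug[r][c]
                aug[r] = [vr - factor * vc for vr, vc in zip(aug[r], aug[c])]
    return [row[n:] for row in aug]


def train_elm_autoencoder(snapshots, K, ridge=1e-3, seed=0):
    """Hypothetical ELM autoencoder trainer.

    snapshots: M lists of length N, i.e. the snapshots x_j stored as rows (X transposed).
    Returns (Phi, H): Phi is N x K (its columns are the learned basis vectors, i.e.
    the output weights); H is M x K (row j holds the hidden-layer features h(x_j)).
    """
    rng = random.Random(seed)
    N = len(snapshots[0])
    # Input weights w_i and biases b_i are random and are never trained.
    W = [[rng.uniform(-1.0, 1.0) for _ in range(N)] for _ in range(K)]
    b = [rng.uniform(-1.0, 1.0) for _ in range(K)]
    # Hidden-layer features h_i(x_j) = f(w_i . x_j + b_i), with f(z) = exp(-z**2) (assumed).
    H = [[math.exp(-(sum(w * x for w, x in zip(W[i], xj)) + b[i]) ** 2) for i in range(K)]
         for xj in snapshots]
    # Output weights from ridge-regularized least squares: (H^T H + ridge*I) beta = H^T X^T.
    Ht = transpose(H)
    A = matmul(Ht, H)
    for i in range(K):
        A[i][i] += ridge
    beta = solve(A, matmul(Ht, snapshots))  # K x N
    return transpose(beta), H
```

Each column of `Phi` is one ELM basis vector, and snapshot j is approximated by `Phi` applied to the feature row `H[j]`. The features are computed first and the basis second, which is the ordering contrasted with POD in the text.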

The primary difference between POD and the ELM autoencoder is that in the former, the basis is learned first (and the coefficients follow by projection), whereas in ELM, the features are derived first, followed by the basis. The other major difference is that the POD basis vectors represent coherent structures contained in the data snapshots, whereas the interpretation of the ELM basis is not clear.

2.4. Measurement Locations, Data Basis, and Incoherence

Recall from Section 2.1 that the reconstruction performance is strongly tied to the measurement matrix C being incoherent with respect to the low-dimensional basis [12], which is usually accomplished by employing random sampling for the sensor placement. In practice, one can adopt two types of random sampling of the data, namely, single-pixel measurements [7,35,58] or random projections [10,12,42]. Typically, single-pixel measurements refer to measuring information at particular spatial locations, such as measurements by unmanned aerial systems (UAS) in atmospheric fields. Another popular choice of sensing method in the compressive sensing and image processing communities is random projections, where the measurement matrix is populated using normally distributed random numbers onto which the full state data is projected. In theory, such a random matrix is highly likely to be incoherent with any fixed basis [12], and hence efficient for sparse recovery purposes. However, for most fluid flow applications, the sparse data is usually sourced from point measurements and hence, the single-pixel approach is the practically relevant one. Irrespective of the approach adopted, the measurement matrix C (Equations (1) and (2)) and the basis should be incoherent to ensure optimal sparse reconstruction. This essentially implies that one should have sufficient measurements distributed in space to excite the different modes relevant to the data being reconstructed. Mathematically, this implies that the sensed basis $C\Phi$ has full column rank, so that the corresponding least-squares system is invertible.

There exist metrics to estimate the extent of coherency between C and the basis in the form of a coherency number, μ, as shown in Equation (20) [59]:

where $c_k$ is a row vector in C and $\phi_j$ is a column vector of the basis. μ typically ranges from 1 (incoherent) to $\sqrt{N}$ (coherent). The smaller the μ, the fewer measurements one needs to reconstruct the data in an $\ell_1$ sense. This is because the coherency parameter enters as the prefactor in the lower bound for the sensor quantity in $\ell_1$-based CS for accurate recovery. There exist optimal sensor placement algorithms, such as K-means clustering, data-driven online sparse Gaussian processes [60], physics-based approaches [61], and mathematical approaches [15], that minimize the condition number of the sensed basis and maximize the determinant of the Fisher information matrix [62]. A thorough study of the role of sensor placement in reconstruction quality is much needed and is an active topic of research, but it is beyond the scope of this work. For this analysis, which focuses on the role of basis choice in sparse reconstruction, we simplify the sensor placement strategy by using the Matlab function randperm(N) to generate a random permutation of the integers 1 to N, and the first P values are chosen as the sampling locations in the data. However, to minimize the impact of sensor placement on the conclusions of this study, we perform an ensemble of numerical experiments with different random sensor placements and examine averaged error metrics to make our interpretations robust. Further, in most practical flow measurements, sensor placement is random or based on knowledge of the flow physics; the current approach is consistent with that strategy.
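The coherency number can be computed directly for point sensors. This sketch assumes Equation (20) takes the standard compressive-sensing form of mutual coherence, μ = √N · max |⟨c_k, φ_j⟩| over unit-norm rows of C and columns of the basis, which is consistent with the stated range of 1 to √N; the helper names are hypothetical.

```python
import math


def unit(v):
    """Return v scaled to unit Euclidean norm."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def coherence(C_rows, Phi_cols):
    """Mutual coherence mu = sqrt(N) * max_{k,j} |<c_k, phi_j>| for unit-norm vectors.

    C_rows:   measurement vectors c_k (rows of C), each of length N.
    Phi_cols: basis vectors phi_j (columns of the basis), each of length N.
    """
    N = len(C_rows[0])
    cols = [unit(phi) for phi in Phi_cols]
    largest = 0.0
    for c in (unit(row) for row in C_rows):
        for phi in cols:
            largest = max(largest, abs(sum(a * b for a, b in zip(c, phi))))
    return math.sqrt(N) * largest


def point_sensors(locations, N):
    """Single-pixel measurement rows: a unit vector at each sensed grid point."""
    return [[1.0 if i == loc else 0.0 for i in range(N)] for loc in locations]
```

For N = 4, point sensors paired with the identity (pixel) basis give μ = 2 = √N (maximally coherent), while the same sensors paired with a Hadamard basis give μ = 1 (maximally incoherent). Data-driven bases such as POD or ELM typically fall between these extremes, depending on how spatially localized their structures are.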

2.5. Sparse Recovery Framework

The algorithm used in this work is inspired by the gappy POD (GPOD) framework [25] and can be viewed as an $\ell_2$ minimization reconstruction of the sparse recovery problem, summarized by Equations (4)–(6), with the basis composed of K basis vectors, i.e., the dimension of a is K. At this point, we remind the reader of the naming conventions adopted in this paper: the instantaneous jth full flow state is denoted by $x_j$, whereas the entire set consisting of M snapshots is assembled into a matrix denoted by X. In the following, we focus on single-snapshot reconstruction; the extension to multiple snapshots is trivial, i.e., each snapshot can be reconstructed sequentially or, in some cases, grouped together as a batch reconstruction. This allows such algorithms to be easily parallelized.

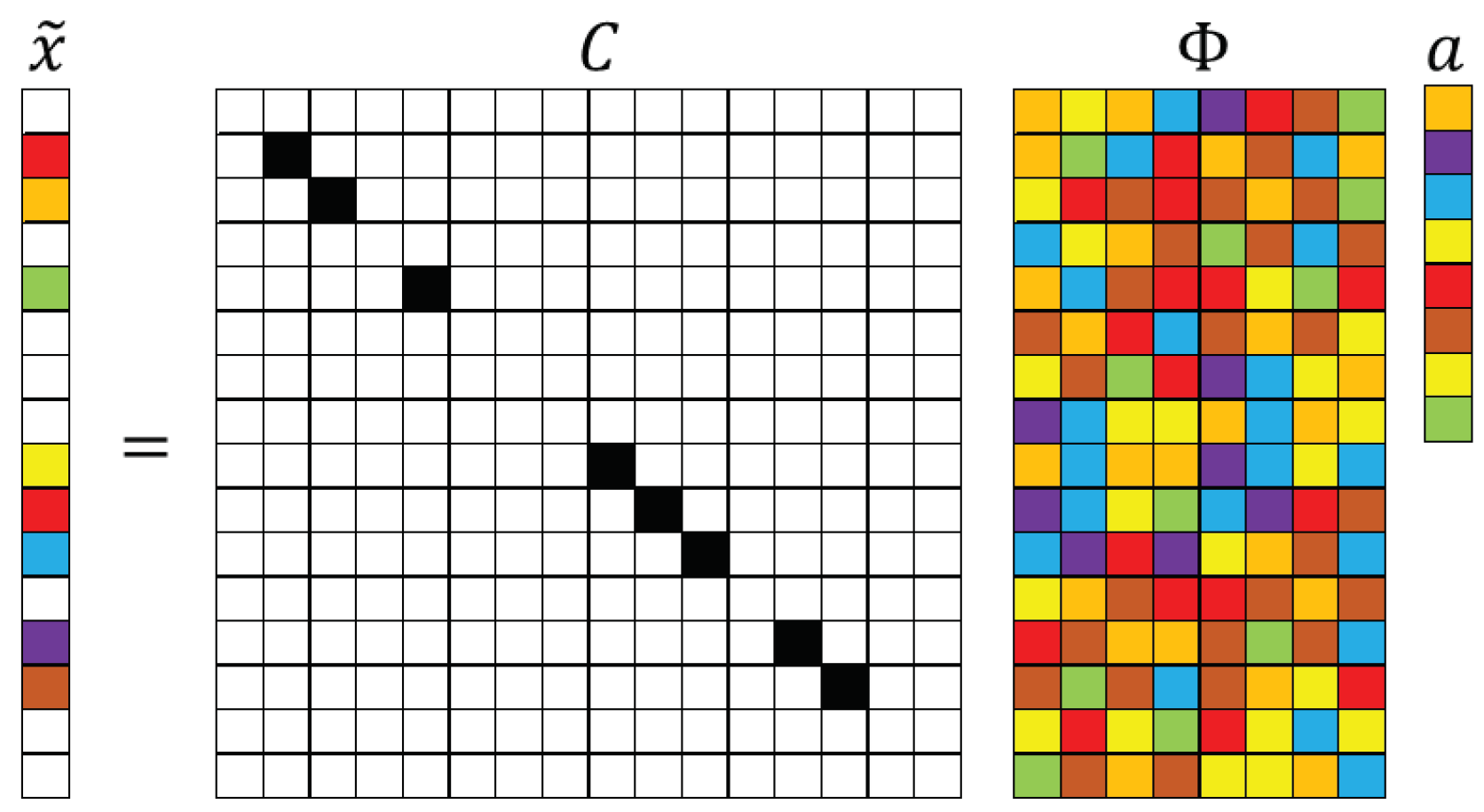

The primary difference between the SR framework in Equation (4), as used by the image processing community, and GPOD [14,15,25,57], as shown in Equation (22), is the construction of the measurement matrix C and the sparse measurement vector $\tilde{x}$. For the purposes of this discussion, we will consider reconstruction of a single snapshot. In SR (Equation (4)), $\tilde{x}$ is a compressed version containing only the measured data, whereas in the GPOD framework, $\tilde{x}$ is a masked version of the full state vector, i.e., values outside of the P measured locations are zeroed out to generate a filtered version of x. For high-resolution data x with the chosen basis, the low-dimensional features a are obtained from the relationship shown below:

The masked (incomplete) data $\tilde{x}$, the corresponding measurement matrix C, and the mask vector m are related by:

Therefore, the GPOD algorithm results in a larger measurement matrix with numerous rows of zeros, as shown in Figure 3 (compare with Figure 1). To bypass the complexity of handling this matrix, a mask vector m of 1s and 0s operates on x through a point-wise multiplication operator. As an illustration, the point-wise multiplication is represented as $\tilde{x}_j = m_j \circ x_j$ for each snapshot j, where each element of $m_j$ multiplies the corresponding element of $x_j$. This is applicable when each data snapshot has its own measurement mask $m_j$, which is a useful way to represent the evolution of sparse sensor locations over time. The SR formulation in Equation (4) can also support time-varying sensor placement, but would require a compression matrix that changes with each snapshot. That approach is by design much more computationally and storage intensive, but can handle situations that do not involve point-sensor compression.
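The equivalence between the compressed SR measurement, the GPOD masked vector, and the larger N × N GPOD measurement matrix can be checked on a toy vector. This sketch assumes point (single-pixel) sensors; the helper names and the toy values are hypothetical. It shows that lifting the P compressed values back with the transpose of C reproduces the point-wise product m ∘ x, and that Cᵀ C is simply diag(m), which is why storing m suffices.

```python
def measurement_matrix(locations, N):
    """Compressed SR measurement matrix C (P x N): row k selects grid point locations[k]."""
    return [[1.0 if i == loc else 0.0 for i in range(N)] for loc in locations]


def mask_vector(locations, N):
    """GPOD mask m (length N): 1 at sensed points, 0 elsewhere."""
    sensed = set(locations)
    return [1.0 if i in sensed else 0.0 for i in range(N)]


def matvec(A, x):
    """Matrix-vector product for a row-major list-of-lists matrix."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]


x = [3.0, -1.0, 4.0, 1.5, -5.0, 9.0]    # toy full state, N = 6
locs = [4, 1, 5]                         # P = 3 point sensors
C = measurement_matrix(locs, len(x))
m = mask_vector(locs, len(x))

x_compressed = matvec(C, x)                     # SR: only the P measured values
x_masked = [mi * xi for mi, xi in zip(m, x)]    # GPOD: point-wise product m o x
Ct = [list(col) for col in zip(*C)]
x_lifted = matvec(Ct, x_compressed)             # C^T (C x) zero-pads back to length N
CtC = [[sum(a * b for a, b in zip(r, c)) for c in zip(*C)] for r in Ct]  # N x N, equals diag(m)
```

Here `x_compressed` is `[-5.0, -1.0, 9.0]`, while `x_masked` and `x_lifted` are both `[0.0, -1.0, 0.0, 0.0, -5.0, 9.0]`.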

Figure 3.

Schematic of a gappy proper orthogonal decomposition (GPOD)-like sparse reconstruction (SR) formulation. The numerical values represented by the colored blocks are: black (1), white (0), color (other numbers).

The goal of the SR procedure is to recover the full data from the masked data given in Equation (23) by approximating the coefficients a (in the $\ell_2$ sense) with a basis learned offline using training data (snapshots of the full field data).

The coefficient vector a cannot be computed by direct projection of $\tilde{x}$ onto the basis, as the basis vectors are not designed to optimally represent the sparse data (for POD, for example, the masked basis vectors are no longer orthonormal). Instead, one needs to obtain the “best” approximation of the coefficients a by minimizing the error in the $\ell_2$ sense, as shown in Equation (24).

In Equation (24), we see that m acts on each column of the basis through a point-wise multiplication operation, which is equivalent to masking each basis vector. Unless the sensor placement is constant in time, we remind the reader that the above formulation is valid for a single-snapshot reconstruction, where the mask vector $m_j$ changes with every snapshot $j = 1, \dots, M$. Thus, the error represents the single-snapshot reconstruction error that will be minimized to compute the approximate features $a_j$. It follows that one will have to minimize the individual snapshot errors sequentially to learn the entire coefficient matrix for all M snapshots. Denoting the masked basis functions as $\tilde{\Phi}$, Equation (24) is rewritten as in Equation (25).

In the above formulation, the masked basis is analogous to the sensed basis in Equation (4). To minimize the error, one computes its derivative with respect to a and sets it to zero, as below:

The result is the linear normal equation given by Equation (27):

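where the left-hand-side matrix is the Gram matrix of the masked basis and the right-hand side is the projection of the masked data onto the masked basis. The reconstructed solution is given by Equation (28) below: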

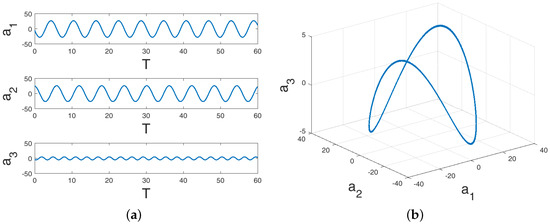
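For reference, the least-squares steps in Equations (24)–(28) can be written out compactly. The symbols are chosen to match the prose and are an assumption ($\tilde{x}$ for the masked data, $\tilde{\Phi}$ for the masked basis, $\circ$ for the point-wise product), so the typography of the original equations may differ.

```latex
\begin{aligned}
\tilde{x} &= m \circ x, \qquad \tilde{\Phi} = m \circ \Phi \quad (\text{mask applied to every column}),\\
E(a) &= \lVert \tilde{x} - m \circ (\Phi a) \rVert_2^2 = \lVert \tilde{x} - \tilde{\Phi} a \rVert_2^2,\\
\frac{\partial E}{\partial a} &= -2\,\tilde{\Phi}^{T}\left(\tilde{x} - \tilde{\Phi} a\right) = 0
\;\;\Longrightarrow\;\; \tilde{\Phi}^{T}\tilde{\Phi}\, a = \tilde{\Phi}^{T}\tilde{x},\\
\hat{x} &= \Phi a .
\end{aligned}
```

Because $\tilde{\Phi}$ has at most P nonzero rows, the K × K matrix $\tilde{\Phi}^{T}\tilde{\Phi}$ has rank at most P, so without regularization a unique $\ell_2$ solution requires P ≥ K sensors whose rows of $\Phi$ are linearly independent.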

Algorithm 1 summarizes the above SR framework, assuming the basis functions are known. The above solution procedure for sparse recovery is the same as that described in Section 2.1, except for the dimensions of the sparse measurement vector and C.

Algorithm 1: $\ell_2$-based algorithm: sparse reconstruction with known basis.
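Algorithm 1 can be sketched in plain Python for a single snapshot with fixed point sensors. This is a minimal illustration, not the authors' code: the function names are hypothetical, and the optional Tikhonov parameter `reg` is an assumption that mirrors the regularization mentioned in Section 4 (with `reg=0` it reduces to the normal equation above).

```python
def solve_vector(A, f):
    """Solve the square system A a = f by Gaussian elimination with partial pivoting."""
    n = len(A)
    aug = [list(row) + [fi] for row, fi in zip(A, f)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(aug[r][c]))
        if abs(aug[p][c]) < 1e-14:
            raise ValueError("singular normal matrix: add sensors or use reg > 0")
        aug[c], aug[p] = aug[p], aug[c]
        for r in range(c + 1, n):
            factor = aug[r][c] / aug[c][c]
            aug[r] = [vr - factor * vc for vr, vc in zip(aug[r], aug[c])]
    a = [0.0] * n
    for r in range(n - 1, -1, -1):
        a[r] = (aug[r][n] - sum(aug[r][k] * a[k] for k in range(r + 1, n))) / aug[r][r]
    return a


def gappy_reconstruct(x_masked, mask, Phi, reg=0.0):
    """l2 sparse reconstruction of one snapshot with a known basis (gappy-POD style).

    x_masked: length-N data, zero at unsensed points (m o x).
    mask:     length-N list of 1s (sensed) and 0s (unsensed).
    Phi:      basis stored as N rows of K entries (columns are basis vectors).
    reg:      optional Tikhonov parameter; 0 gives plain least squares.
    Returns (a, x_hat): the K coefficients and the full reconstruction Phi a.
    """
    N, K = len(Phi), len(Phi[0])
    # Masked basis: the mask multiplies every column of Phi point-wise.
    Phi_m = [[m_i * p for p in row] for m_i, row in zip(mask, Phi)]
    # Normal equations: (Phi_m^T Phi_m + reg*I) a = Phi_m^T x_masked.
    A = [[sum(Phi_m[n][r] * Phi_m[n][c] for n in range(N)) for c in range(K)] for r in range(K)]
    for i in range(K):
        A[i][i] += reg
    f = [sum(Phi_m[n][r] * x_masked[n] for n in range(N)) for r in range(K)]
    a = solve_vector(A, f)
    # The full-state estimate uses the unmasked basis.
    x_hat = [sum(p * ak for p, ak in zip(row, a)) for row in Phi]
    return a, x_hat
```

When the snapshot lies exactly in the span of the basis and P ≥ K well-placed sensors are available, the coefficients are recovered exactly; with P < K, a positive `reg` keeps the system solvable at the cost of biasing the coefficients toward zero.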

2.6. Algorithmic Complexity

The cost of computing both the ELM and POD basis is where N is the full reconstructed flow state dimension and M is the number of snapshots. The cost of sparse reconstruction turns out to be for both methods, where K is the system dimension chosen for reconstruction. Naturally, for many practical problems with an underlying low-dimensional structure, POD is expected to result in a smaller K than ELM. This will reduce the sensor quantity requirement and the reconstruction cost to a certain extent. Further, since the number of snapshots (M) is also tied to the desired basis dimension (K), a larger K requires a larger M and, in turn, a higher cost to generate the basis.

3. Data Generation for Canonical Cylinder Wake

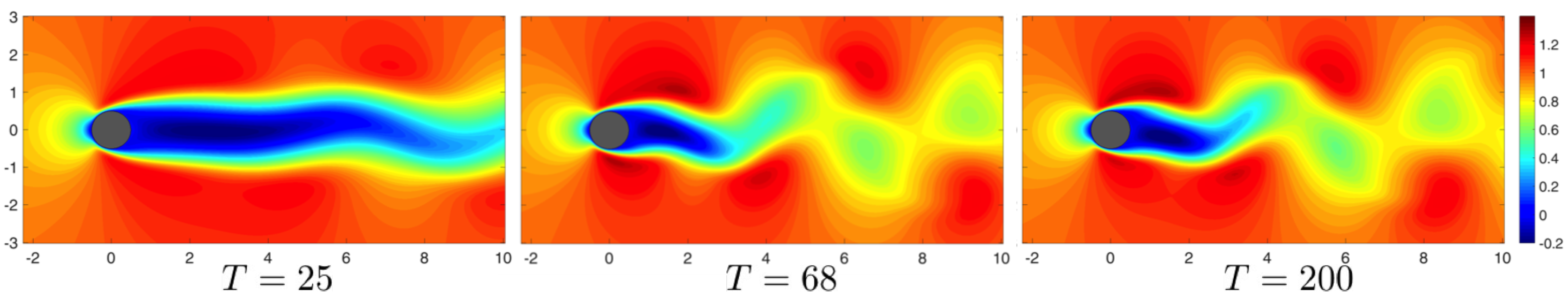

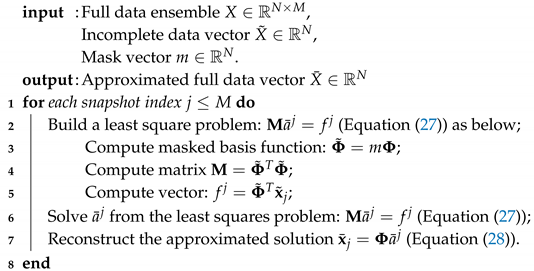

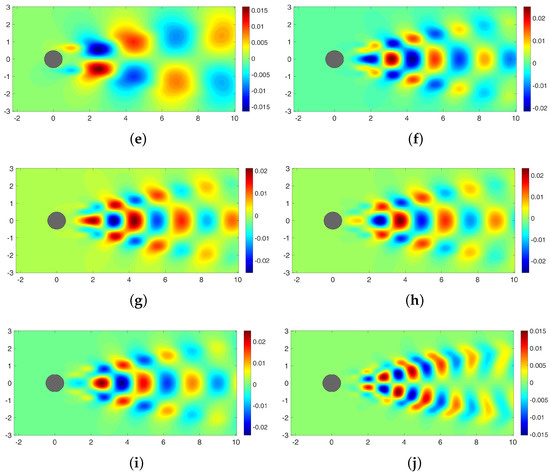

Studies of cylinder wakes [33,63,64,65] have attracted considerable interest from the data-driven modeling community for their rich flow physics, encompassing many complexities of nonlinear fluid flow dynamical systems, and for their ease of simulation using established computational fluid dynamics (CFD) tools. In this study, we explore data-driven sparse reconstruction for the cylinder wake flow at . It is well known that the cylinder wake is initially stable and then becomes unstable before settling into a limit-cycle attractor. To illustrate these dynamics, snapshots showing the evolution of the stream-wise velocity component (u) are shown in Figure 4, for .

Figure 4.

Isocontour plots of the stream-wise velocity component for cylinder flow at and and 200, showing the evolution of the flow field. Here, T represents the time non-dimensionalized by the advection time-scale.

For this study, we focus on reconstruction in the temporal regime with periodic limit-cycle dynamics, in contrast to our earlier work [35], where we focused on reconstruction in both the limit-cycle and transient regimes. The two-dimensional cylinder flow data is generated using a spectral Galerkin method [66] to solve the incompressible Navier–Stokes equations, as shown in Equations (29)–(31) below:

where u and v are the horizontal and vertical velocity components, P is the pressure field, and $\nu$ is the fluid viscosity. The rectangular domain used for this flow simulation is and , where D is the diameter of the cylinder. For the purposes of this study, data from a reduced domain, i.e., and , is used. The mesh was designed to sufficiently resolve the thin shear layers near the surface of the cylinder and the transient wake physics downstream. For the case with , the grid includes 24,000 points. The computational method employed is a fourth-order spectral expansion within each element in each direction. The sampling rate for each snapshot output is chosen as s.

While the use of the 2D incompressible Navier–Stokes equations to compute the full cylinder data is justified for laminar flows, for turbulent flows the full flow state will be a high-resolution 3D dataset with a large state dimension that can escalate the computational complexity. The increased state dimension can severely impact the cost of POD-Galerkin based reduced order models (ROMs) through the nonlinear term, whose computation scales with the large full state dimension. This, in turn, can negate the advantages of such ROMs for large-scale systems. To accelerate such models, methods such as gappy POD [14,15,25], the discrete empirical interpolation method (DEIM) [67], and missing point estimation (MPE) [68] estimate a low-rank mask matrix to approximate the full state nonlinear term using data at sparsely sampled locations. This allows the expensive inner products of high-dimensional vectors to be replaced with low-dimensional ones. Given the strong connections between compressive sensing, sparse reconstruction, and gappy POD, it is not far-fetched to adopt the algorithms presented in this work for complexity reduction of POD-Galerkin models. Dimitriu et al. [69] compare the performance of these hyper-reduction techniques for use in online model computations and observe that DEIM and GPOD generate similar sensor placement, online computational cost, and accuracy. On the other hand, MPE had a lower offline computational cost but was prone to inaccuracies.

4. Sparse Reconstruction of Cylinder Wake Limit-Cycle Dynamics

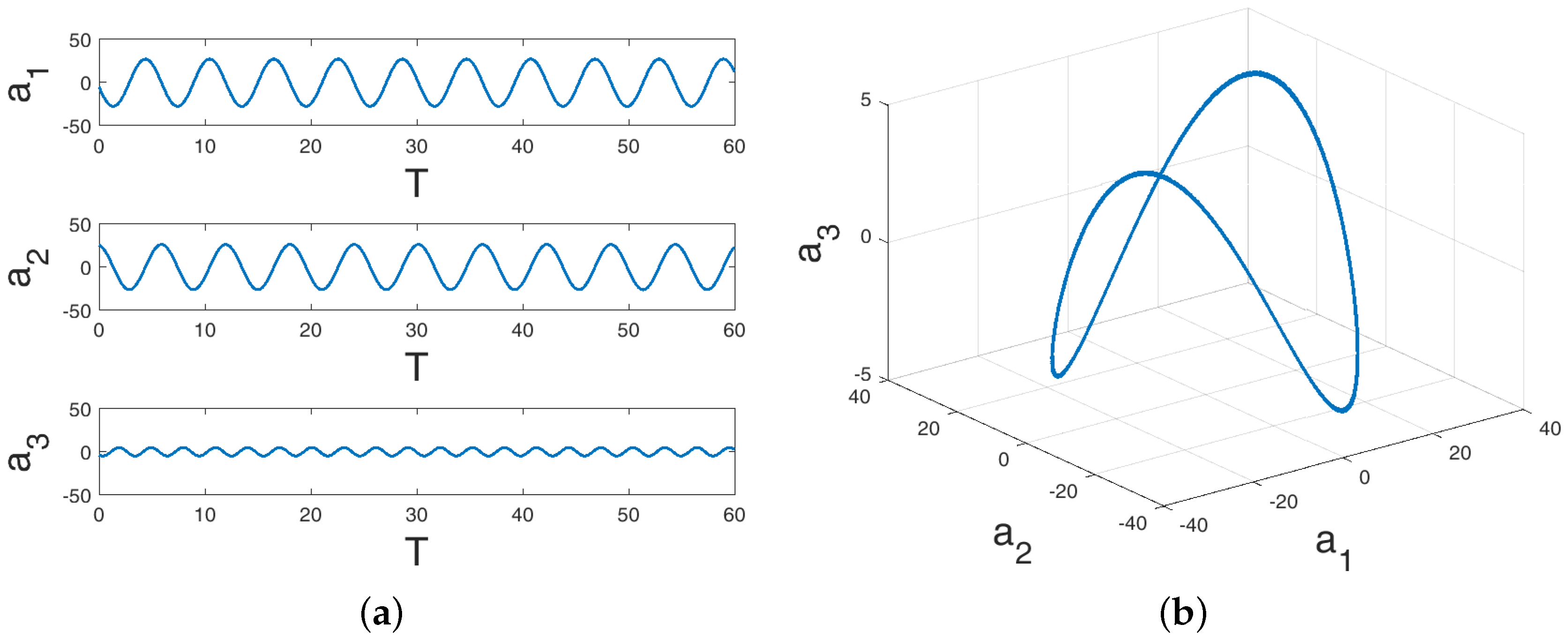

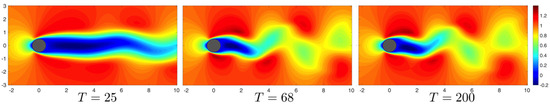

In this section, we explore sparse reconstruction of fluid flows at using the above SR infrastructure for the cylinder flow with well-developed periodic vortex shedding behavior. The GPOD formulation is chosen over the traditional SR formulation to bypass the need for maintaining a separate measurement matrix, since we focus only on point sensors in this discussion. Maintaining a separate measurement matrix involves storing many zero elements that do not contribute to the matrix multiplications in either version of the SR algorithm. In most cases reported here, Tikhonov regularization is employed to reduce overfitting and provide uniqueness to the solution. In this study, we choose 300 snapshots of data corresponding to a non-dimensional time () of with a uniform temporal spacing of s. corresponds to multiple (≈10) cycles of periodic vortex shedding behavior for flow with , as seen from the temporal evolution of the POD coefficients shown in Figure 5 below.

Figure 5.

The temporal evolution of the first three normalized proper orthogonal decomposition (POD) coefficients for limit cycle cylinder flow at . (a) 2D view; (b) 3D view.

4.1. Sparse Reconstruction Experiments and Analysis

For this a priori assessment of SR performance, we reconstruct sparse data from simulations where the full field representation is available. The sparse sensor locations are chosen as single-point measurements using a random sampling of the full field data, and these locations are fixed for the ensemble of snapshots used for the reconstruction. Specifically, we employ the Matlab function randperm(N) to generate a random permutation of the integers 1 to N, with the first P values chosen as the sensor locations. Reconstruction performance is evaluated by comparing the original simulation-predicted field with those from SR using both the POD and ELM basis, across the entire ensemble of numerical experiments. We undertake this approach in order to assess the relative roles of system sparsity (K), sensor sparsity (P), and sensor placement (C) for both POD- and ELM-based SR. In particular, we aim to accomplish the following through this study: (i) check if is a necessary condition for accurate reconstruction of the fluid flow irrespective of the basis employed; (ii) determine the dependence of the estimated sparsity metric K for the desired reconstruction quality on the choice of basis; and (iii) understand how sensor placement impacts reconstruction quality for different choices of basis.
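The randperm-based placement and the seeded ensemble can be mirrored in Python as follows. This is an analogue, not a port: indices are 0-based, the function names are hypothetical, and Python's random number generator will not reproduce the Matlab placements for the same seed values; only the procedure (a seeded permutation, keep the first P entries, hold them fixed across snapshots) is the same.

```python
import random


def random_sensor_locations(N, P, seed):
    """Analogue of Matlab's p = randperm(N); p(1:P), using 0-based indices.

    A seeded generator keeps the placement fixed for every snapshot in an experiment.
    """
    rng = random.Random(seed)
    perm = list(range(N))
    rng.shuffle(perm)   # random permutation of 0..N-1
    return perm[:P]     # first P entries are the sensor locations


def sensor_ensemble(N, P, seeds=range(101, 111)):
    """One placement per seed, e.g. an ensemble over seeds 101-110 for error averaging."""
    return {s: random_sensor_locations(N, P, s) for s in seeds}
```

Each placement can then be turned into a GPOD mask of 1s at the returned indices and 0s elsewhere.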

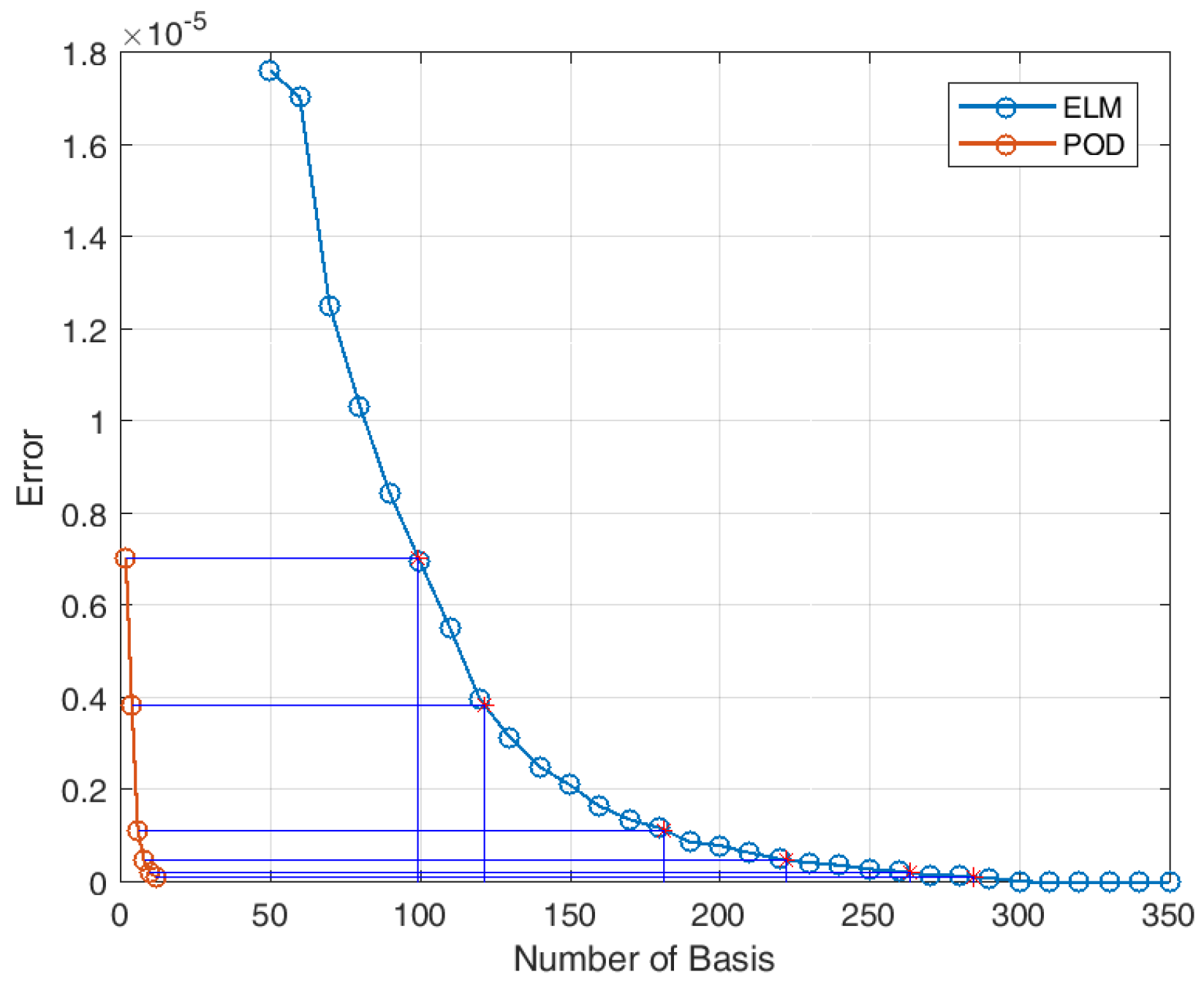

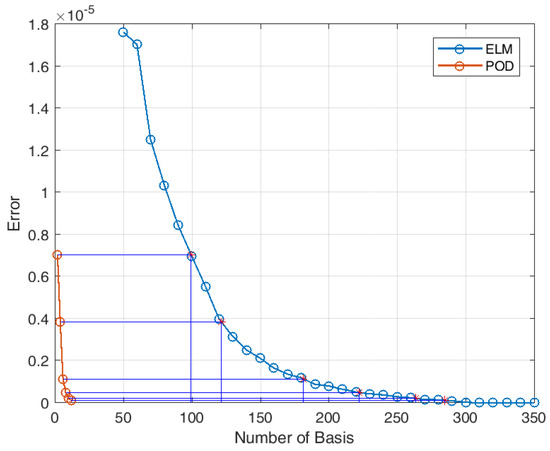

To learn the data-driven basis, we employ the method of snapshots [55], as shown in Equations (9)–(12), for POD and train an autoencoder, as shown in Equations (13)–(19), for the ELM basis. For the numerical experiments described here, the data-driven basis and coefficients are obtained from the full data ensemble, i.e., snapshots corresponding to non-dimensional times. This gives rise to at most M basis vectors for use in the reconstruction process in Equation (3), i.e., a candidate basis dimension of . While this is obvious for the POD case, we observe that for ELM does not improve the representational accuracy (see Figure 6). As shown in Table 1, the choice of algorithm depends on the choice of system sparsity (K), data sparsity (P), and the dimension of the candidate basis space, . Recalling from the earlier discussion in Section 2, we see that would require an $\ell_1$ method for a desired reconstruction sparsity K as long as . In the case of POD, the basis vectors are energy-optimal for the training data and hence contain built-in sparsity. That is, as long as the basis is relevant for the flow to be reconstructed, retaining only the most energetic modes should generate the best possible reconstruction for the given sensor locations. Therefore, the POD basis needs to be generated only once, and the sparsity level of the representation is determined simply by retaining the first few modes in the sequence. On the other hand, the ELM basis does not have a built-in mechanism for order reduction. The underlying basis hierarchy for the given sparse data is not known a priori, and therefore one must search for the K most significant basis vectors among the maximum possible dimension of , using sparsity-promoting methods. However, for this work we bypass the need for the $\ell_1$ algorithm in favor of the less expensive $\ell_2$ method by learning a new set of basis vectors for each choice of .
The increased cost of $\ell_1$ methods, such as iterative thresholding algorithms [28,70], arises because they require multiple solutions of the least-squares problem before a sparse solution is realized.

Figure 6.

Error as a function of the number of basis vectors (K) for both POD reconstruction and ELM prediction.

4.2. Sparsity and Energy Metrics

For this SR study, we explore the conditions for accurate recovery of information in terms of data sparsity (P) and system sparsity (K), which also represents the dimensionality of the system in a given basis space. In other words, sparsity represents the size of a given basis space needed to capture a desired amount of energy. As long as the measurements are incoherent with respect to the basis and the system is overdetermined, i.e., , one should be able to invert to recover the higher dimensional state, X. From earlier discussions in Section 2, we know is a sufficient condition for accurate reconstruction using minimization. Thus, both interpretations require a minimum quantity of sensor data for accurate reconstruction, which is verified through numerical experiments in Section 4.3.

In this section, we describe how the different system sparsity metrics, , are chosen for the numerical experiments with both POD and ELM basis. Since the basis spaces are different, a good way to compare the system dimension is through the energy captured by the respective basis representations. For POD one easily defines a cumulative energy fraction captured by the K most energetic modes, , using the corresponding singular values of the data as shown in Equation (32).

In the above, the singular values are computed from Equation (10), and M is the total number of possible eigenvalues for M snapshots.

For the cylinder flow case with , one requires two and five POD modes to capture and of the energy content, respectively, indicative of the sparsity of the dynamics in this basis space. In this case, we compute , where represent the matrices comprising the K POD basis vectors and the corresponding coefficients for the different snapshots, respectively. Tying the system sparsity to the energy content, or alternatively to the error of the full-data reconstruction in that basis, allows one to compare different choices of basis space. Since there is no natural hierarchy for the ELM basis, we characterize the system sparsity K through the reconstruction error (with respect to the true data) obtained during the training of the ELM network, as in Equation (19). This is computed as the 2-norm of the difference between the ELM-trained flow field, i.e., the ELM output layer, and the exact flow field, i.e., the ELM input layer, . Mathematically, we compute . To relate the system dimensionality in the ELM and POD spaces and assess their relative sparsity, we compare the energy captured for different values of K in terms of their respective reconstruction errors, as shown in Figure 6. We note that the ELM training in Equation (13) employs random weights, which produces variability in the error metric, . To minimize this variability, we perform twenty independent trainings for each value of K and compute the average error, as plotted in Figure 6.
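The comparison procedure described above (average the ELM training error over repeated random-weight trainings for each K, then find the smallest K that matches a given POD error) can be sketched as follows. The dictionary layout and function names are hypothetical; in practice the error lists would come from repeatedly trained ELM networks, such as twenty runs per K.

```python
def average_training_error(errors_by_run):
    """Mean of the ELM training errors over independent random-weight trainings."""
    return sum(errors_by_run) / len(errors_by_run)


def matching_elm_dimension(elm_errors, pod_error):
    """Smallest ELM dimension K whose averaged training error is no larger than pod_error.

    elm_errors: dict mapping K -> list of training errors (one per random training run).
    Returns None if no tested K reaches the POD error.
    """
    for K in sorted(elm_errors):
        if average_training_error(elm_errors[K]) <= pod_error:
            return K
    return None
```

Averaging before comparing keeps a single lucky (or unlucky) set of random input weights from determining the matched dimension.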

From this, we observe that for ELM, produces the same representation error as produced by using just for the POD basis. It turns out that for POD captures of the energy as per Equation (32), i.e., and . The decay of with K is nearly exponential, but slower than that observed for . Further, for , the ELM training overfits the data, which drives the reconstruction error to near zero. To assess SR performance across different flow regimes (that have different ) with different values of K, we define a normalized system sparsity metric, and a normalized sensor sparsity metric, . This allows us to design an ensemble of numerical experiments in the discretized space whose outcomes can be generalized. In this study, the design space is populated over the range and for POD SR, and for ELM SR the range is and , since K is bounded by the total number of snapshots, . The lower bound of one is chosen such that the minimally accurate reconstruction captures of the energy. If one desires a different reconstruction norm, then can be changed to without loss of generality and the corresponding K-space modified accordingly. Alternatively, one can choose , the normalized energy fraction metric, to represent the desired energy capture as a fraction of , but this is not used in this study.

To quantify the reconstruction performance, we define the mean squared reconstruction error as shown in Equation (33) below:

where X is the true data, and is the reconstructed field using sparse measurements as per Algorithm 1 and either POD or ELM as the choice of basis. N and M represent the state and snapshot dimensions affiliated with indices i and j, respectively. Similarly, the mean squared error and for the full reconstruction from both POD and ELM based SR are computed as:

where is the full field reconstruction using exactly computed coefficients for both POD and ELM cases. is the normalized system sparsity metric (i.e., number of basis normalized by ) corresponding to energy capture and represents the desired system sparsity. The superscript corresponds to the full reconstruction using exactly computed coefficients a. For POD, this is simply as per Equation (12). However for ELM, where and is obtained as per Equation (19). However, this computed a is not the same as used in the ELM training step (Equation (19)) as is not exactly an identity matrix. Therefore, the error in the pseudo-inverse computation of produces two sets of a, one from direct estimation using the ELM basis () and the other from ELM training step () through a direct estimation. This results in two types of error estimations with being used to estimate and a being used to compute in Equation (34) and in Equation (35).

Using the above definitions, we can now generate normalized versions of the absolute () and relative () errors as shown in Equation (36). represents the SR error normalized by the corresponding full reconstruction error for energy capture. represents the normalized error relative to the desired reconstruction accuracy for the chosen system sparsity, K. These two error metrics are chosen so as to achieve the twin goals of assessing the overall absolute quality of the SR in a normalized sense () and the best possible reconstruction accuracy for the chosen problem set-up (i.e., ). Thus, if the best possible reconstruction for a given K is realized then will take the same value across different . This error metric is used to assess relative dependence of on for the chosen flow field. On the other hand, provides an absolute estimate of the reconstruction accuracy so that minimal values of needed to achieve a desired accuracy can be identified.
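The error bookkeeping can be sketched as below, assuming Equation (33) is the plain mean of squared differences over all N × M entries and that both normalized metrics in Equation (36) are simple ratios: the absolute metric divides the SR error by the full-reconstruction error at the reference energy-capture dimension, and the relative metric divides it by the full-reconstruction error at the chosen K. Function and argument names are hypothetical.

```python
def mean_squared_error(X_true, X_hat):
    """Mean of squared differences over all N x M entries (rows = states, cols = snapshots)."""
    N, M = len(X_true), len(X_true[0])
    return sum((t - h) ** 2 for rt, rh in zip(X_true, X_hat) for t, h in zip(rt, rh)) / (N * M)


def normalized_errors(eps_sr, eps_fr_k, eps_fr_ref):
    """Return (absolute, relative) normalized SR errors.

    absolute: SR error divided by the full-reconstruction error at the reference
              energy-capture dimension.
    relative: SR error divided by the full-reconstruction error at the chosen K.
    """
    return eps_sr / eps_fr_ref, eps_sr / eps_fr_k
```

Under these assumptions, a relative error close to 1 means the sparse sensors recover essentially the best K-term reconstruction, while the absolute error tracks overall accuracy against a fixed reference.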

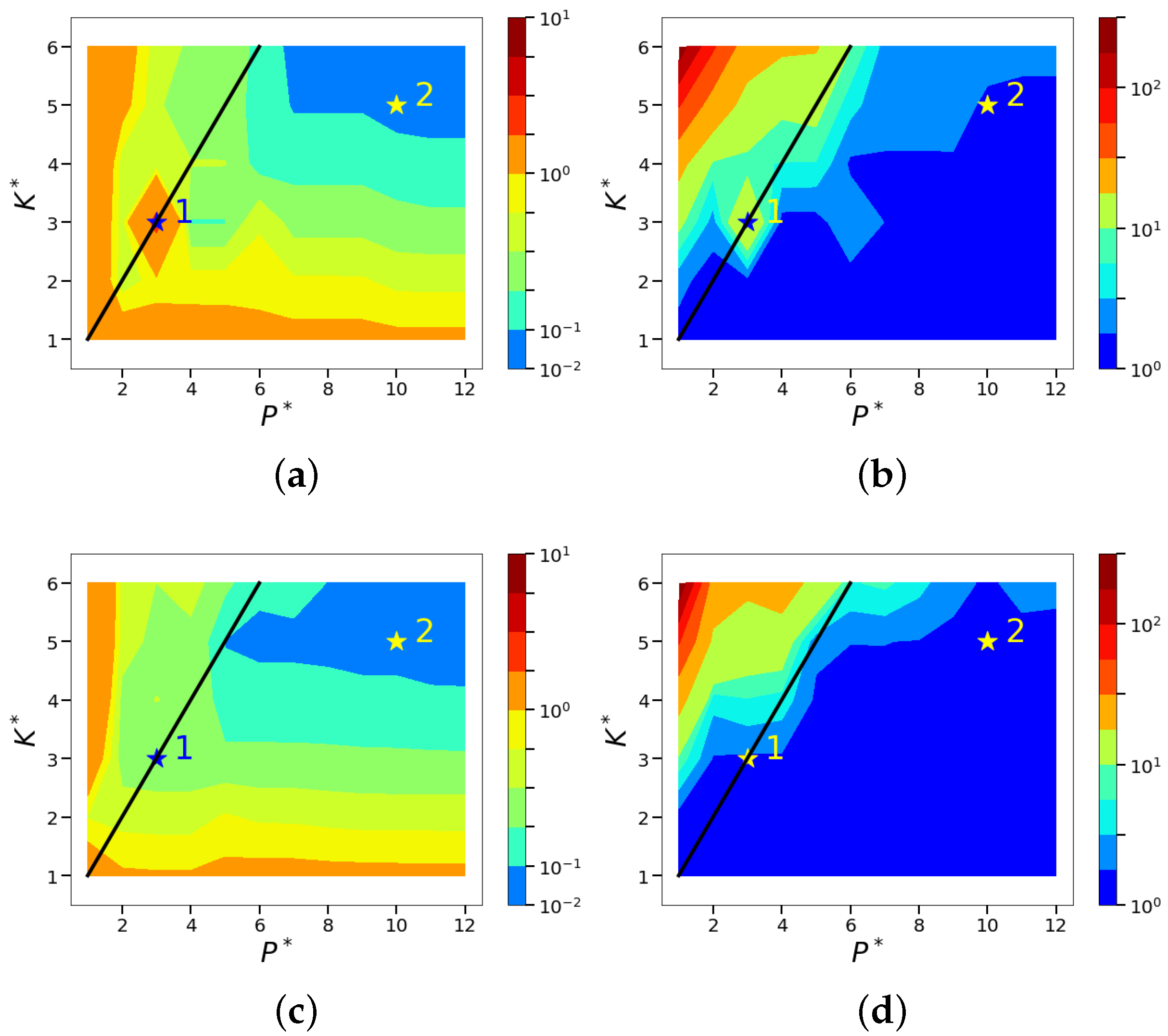

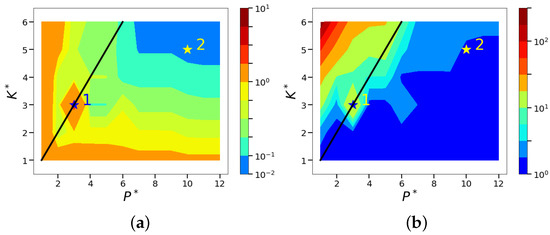

4.3. Sparse Reconstruction of Limit-Cycle Dynamics in Cylinder Wakes Using the POD Basis

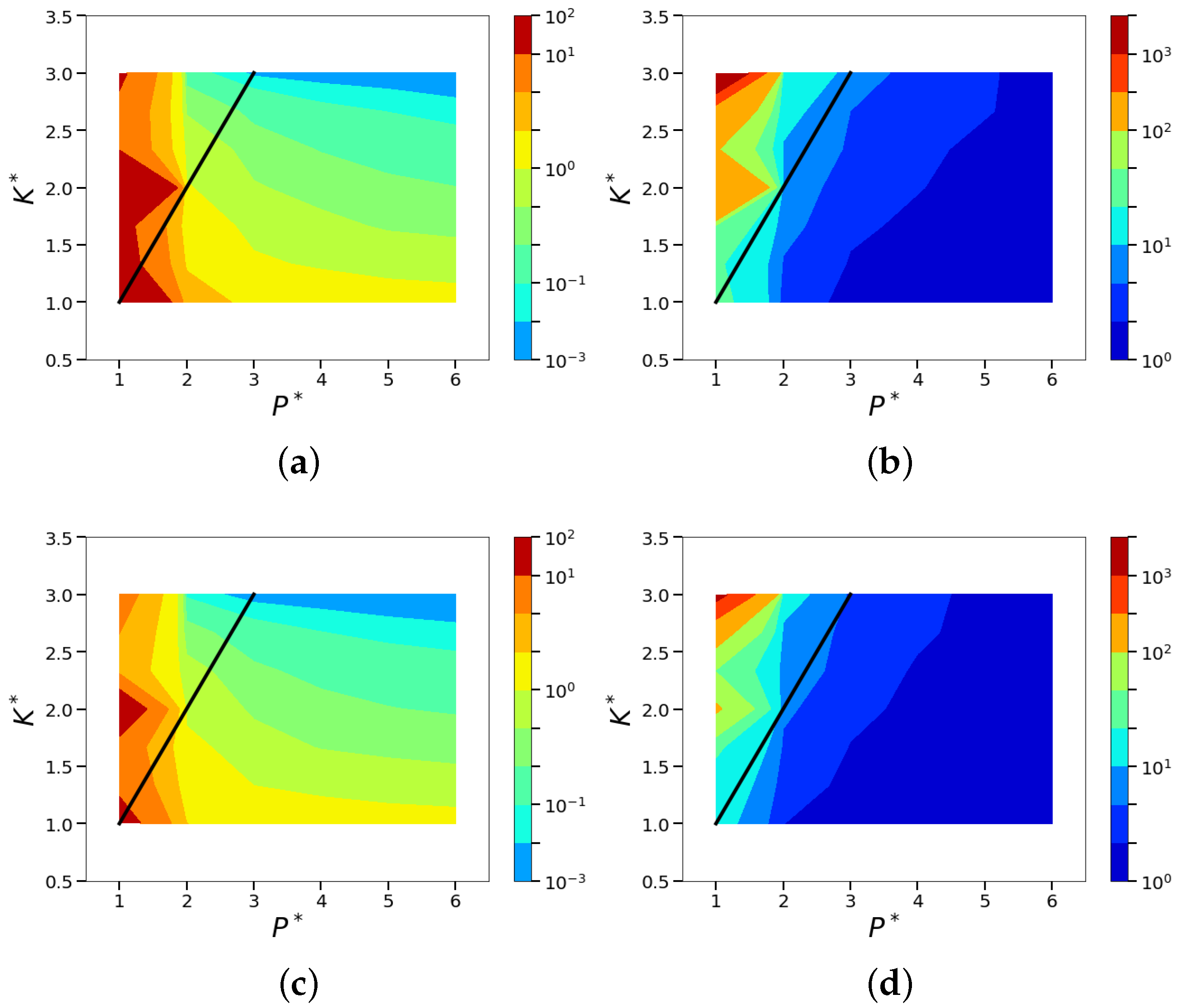

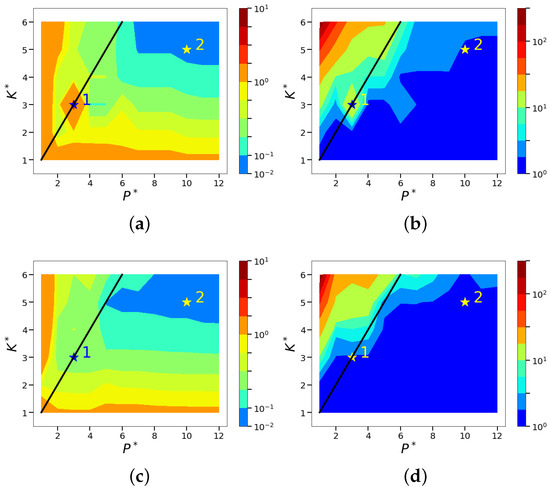

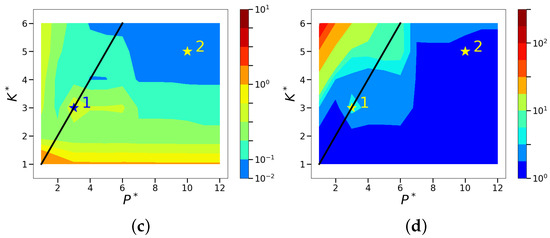

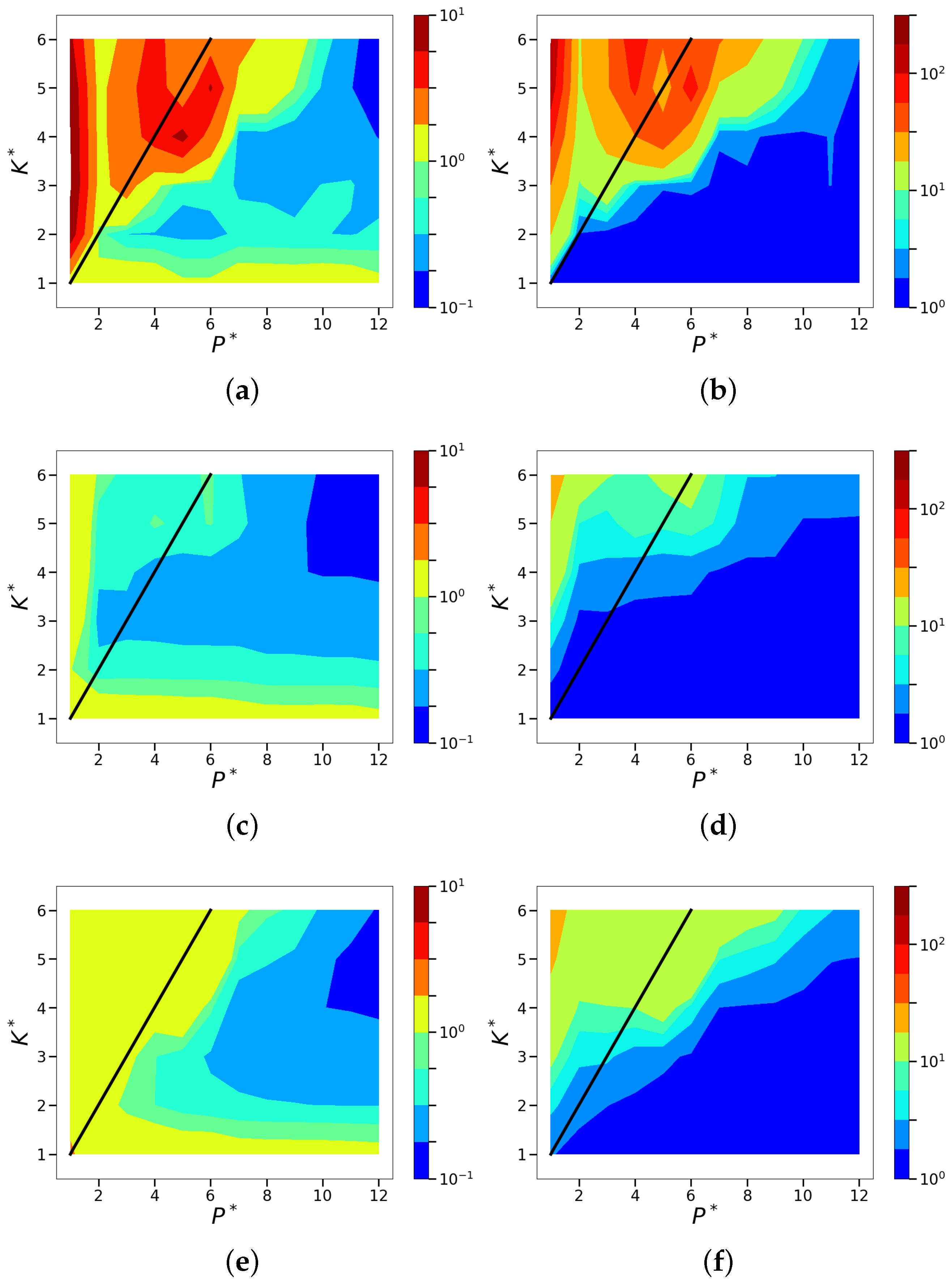

As the first part of this study is designed to establish a baseline SR performance using the POD basis, similar to that in Reference [35], we carried out a series of POD-based sparse reconstruction (SR) experiments corresponding to different points in the design space and spread over different sensor placements. In these experiments, the sparse data is obtained from a priori high-resolution flow field data with randomly placed sparse sensors that do not change with each snapshot. The randomized sensor placement in this case is controlled using a seed value in Matlab, which is fixed for all the experiments within a given design space. Computing the errors as described in Section 4.2 across the space, we show the contours of and at for a few different random sensor placements in Figure 7 and Figure 8.

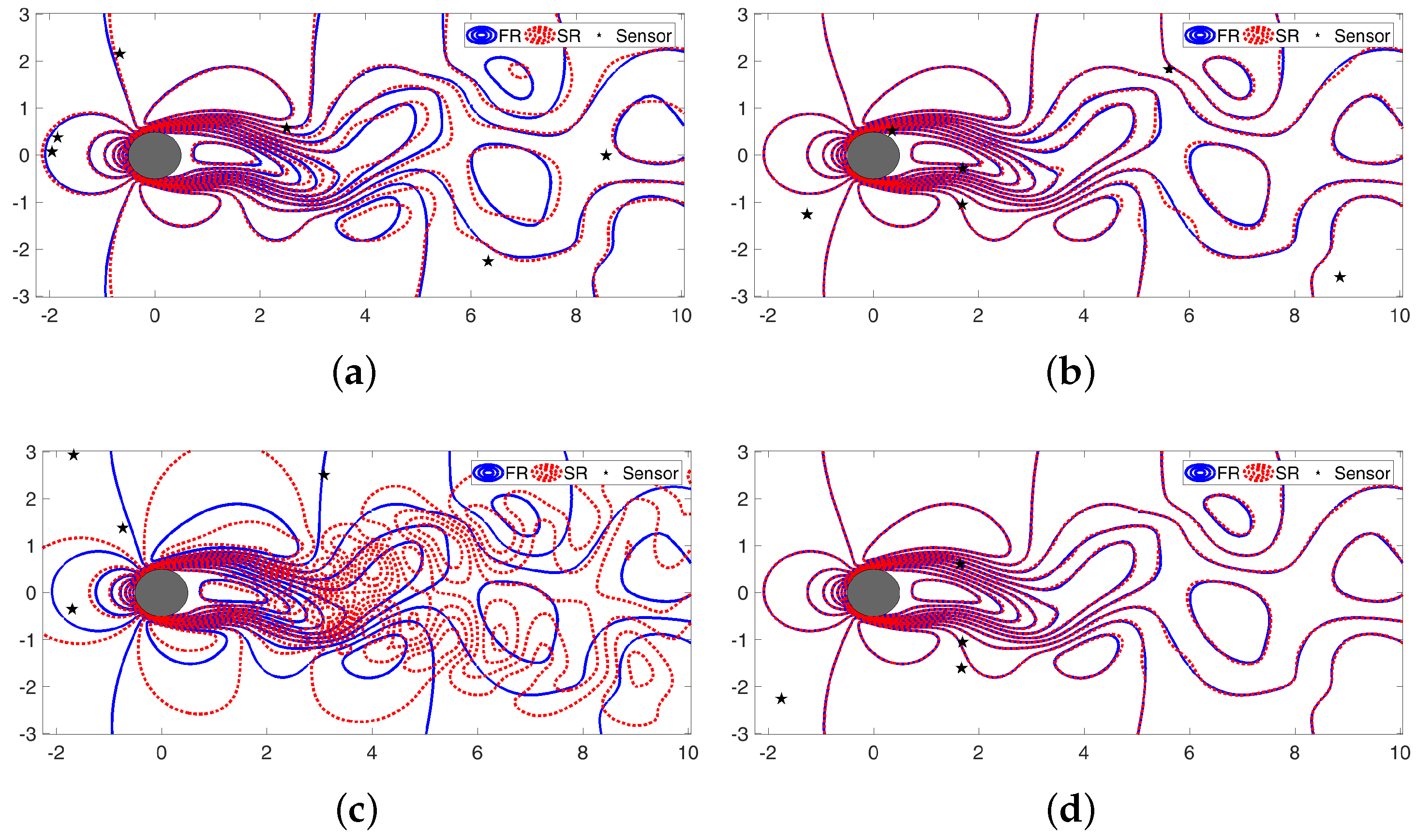

Figure 7.

Isocontours of the normalized mean squared POD-based sparse reconstruction errors ( norms) using different random seeds (101 and 102) for sensor placements. Left: normalized absolute error metric, . Right: normalized relative error metric, . (a) (seed = 101); (b) (seed = 101); (c) (seed = 102); and (d) (seed = 102).

Figure 8.

Isocontours of the normalized mean squared POD-based sparse reconstruction errors ( norm) using different random seeds (108 and 109) for sensor placements. Left: normalized absolute error metric, . Right: normalized relative error metric, . (a) (seed = 108); (b) (seed = 108); (c) (seed = 109); and (d) (seed = 109).

The relative error metric (the right column in Figure 7 and Figure 8) shows that the smaller errors (both light and dark blue regions) are predominantly located over the region where , separated from the other region by a diagonal line corresponding to . This indicates that the over-specified SR problem with , i.e., having more sensors than the dimensionality chosen to represent the system, yields good results in terms of . For small , the normalized relative error can reach as high as –. Since is normalized by the error contained in the exact K-sparse POD reconstruction, this metric represents how effectively the sparse sensor data can approximate the K-sparse solution using minimization. In principle, the exact K-sparse POD reconstruction is the best possible outcome to expect irrespective of how much sensor data is available, as long as . We note that by constraining the SR problem through the choice of the desired energy sparsity K, the reconstruction is reconciled with the minimization solution [35]. Consistent with the observations in Reference [35], we observe that the contours follow an L-shaped structure, indicating that the absolute normalized error decreases as K is increased to capture more energy contained in the full field data.

While this ‘best’ reconstruction is almost always observed for the higher values of and for the different sensor placements, there appear to be some exceptions. Notably, for a few sensor placement choices (seed 101 in Figure 7 and 108 in Figure 8), a small portion of in the region abutting the line shows nearly an order of magnitude higher error, (colored as red in Figure 7 and Figure 8) as compared to the expected values of observed for sensor placement using seeds 102 and 109 in Figure 7 and Figure 8, respectively.

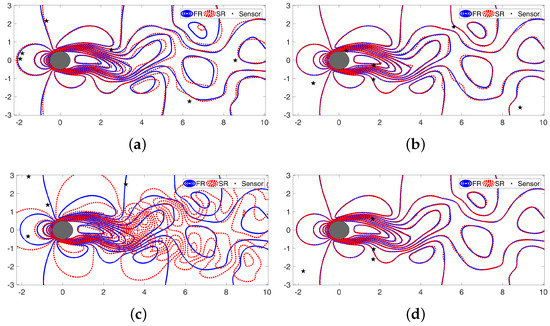

We probe these ‘anomalous’ points by visualizing the SR of a random snapshot along with the corresponding sensor placements to gain insight into how sensor placement causes the high errors. In Reference [35], it was shown that although the coherency number was small for a given sensor placement choice, the sparse data points should still span the physics to be captured. Inspired by this, we examine the impact of sensor placement for two regions corresponding to and .

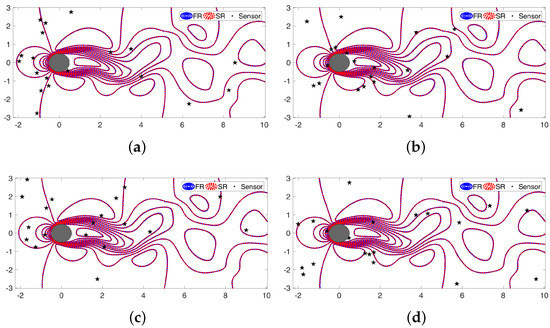

At point 1 (where ) for sensor placement with seed 101 (Figure 7), both and indicate an order of magnitude higher error relative to its neighborhood. But for the same point with modified sensor placement using seed 102, we observe nearly an order of magnitude lower error. Comparing the SR flow fields for both sensor placements, as shown in Figure 9, we see that seed 102 places more data points in the wake of the cylinder than seed 101, hence the better reconstruction. A follow-up study is needed to explore data-driven methods for optimal sensor placement and their influence on SR error. Mathematically, data points in the cylinder wake region excite the most energetic POD modes, as compared to sensors placed elsewhere. This is clearly shown in Reference [35], where the coefficients or features corresponding to the most energetic POD modes are erroneous when recovered using inadequate sensor placement. This trend is observed even for the under-specified (ill-posed) case with , i.e., point 3 in Figure 8, for cases with different sensor placements (seeds 108 and 109), as shown in Figure 9. In the Appendix, we further illustrate the mechanism by which sensor placement impacts the predictions in Figure A1, where we compute the reconstructed POD features corresponding to and for the four different sensor placement cases (seeds 101–102 and 108–109) discussed here. This plot clearly shows that for the cases with inadequate sensor placement (seeds 101 and 108), the predicted POD features deviate strongly from those corresponding to the full data reconstruction. Not surprisingly, the SR cases with adequate sensor placement show closer agreement with the exact POD features obtained by projecting the full data onto the POD basis space.

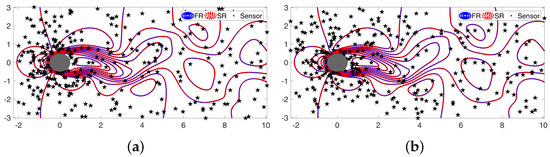

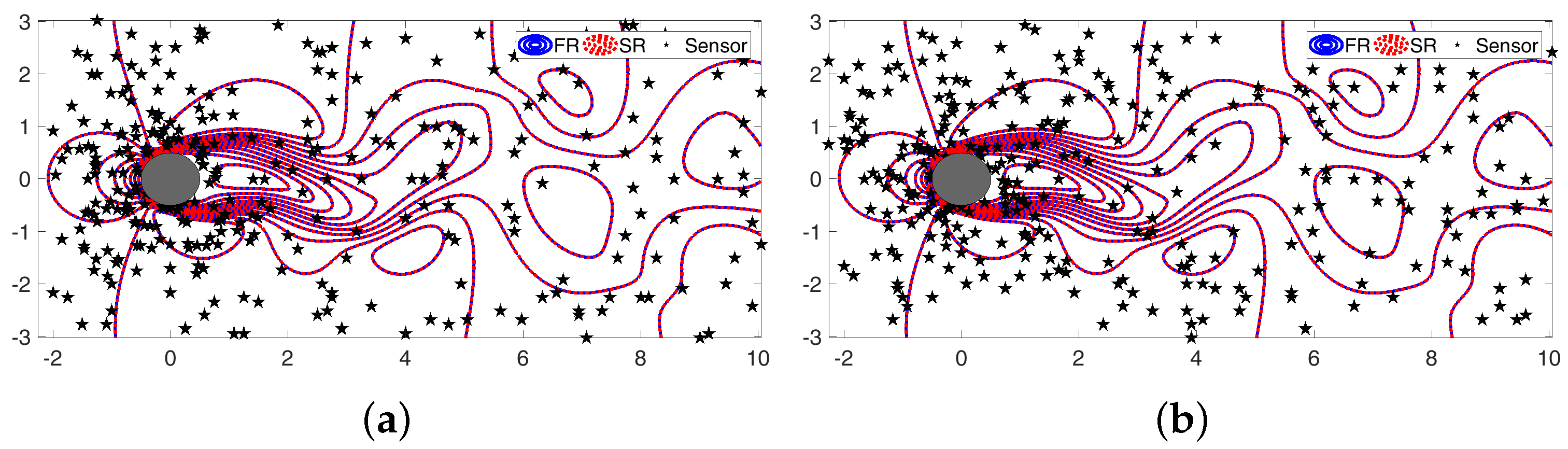

Figure 9.

Comparison of POD-based SR and full reconstruction (FR) for different random sensor placement and . Blue solid line: FR. Red dashed line: SR. Black stars: sensor locations. (a) Seed = 101 (at and ); (b) Seed = 102 (at and ); (c) Seed = 108 (at and ); and (d) Seed = 109 (at and ).

In conclusion, we observe that although the POD-based SR is very efficient in terms of sensor quantity requirement, the reconstruction errors are sensitive to sensor location even when . For cases with (points 2 and 4 in Figure 7 and Figure 8), we observe that this sensitivity to sensor placement is greatly reduced, although small differences exist, as shown in Figure 10. This is because an increase in the number of measurement points enhances the probability of locating points within the cylinder wake.

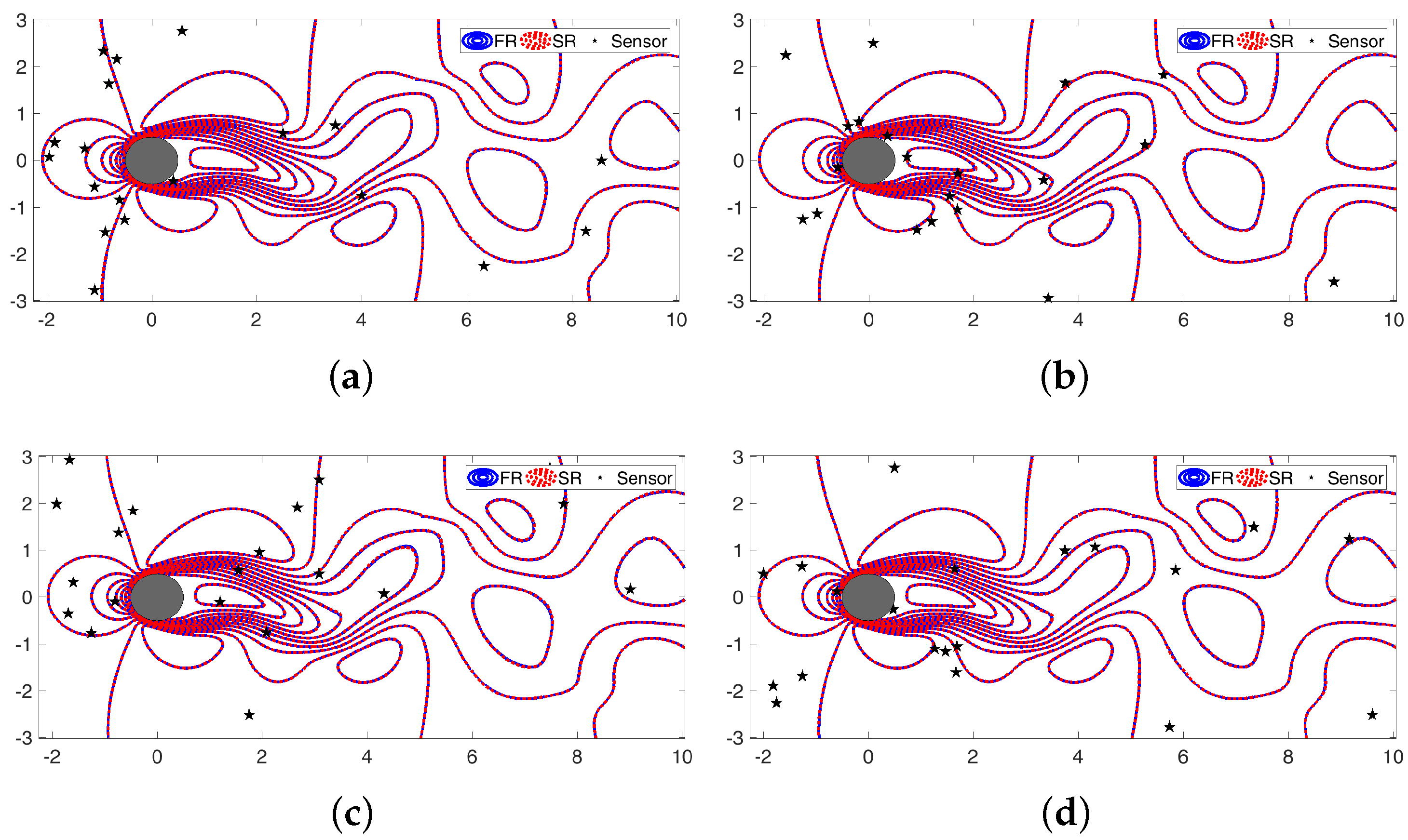

Figure 10.

Comparison of POD-based SR and FR for different random sensor placement and . Blue solid line: FR. Red dashed line: SR. Black stars: sensor locations. (a) Seed = 101 (at and ); (b) Seed = 102 (at and ); (c) Seed = 108 (at and ); and (d) Seed = 109 (at and ).

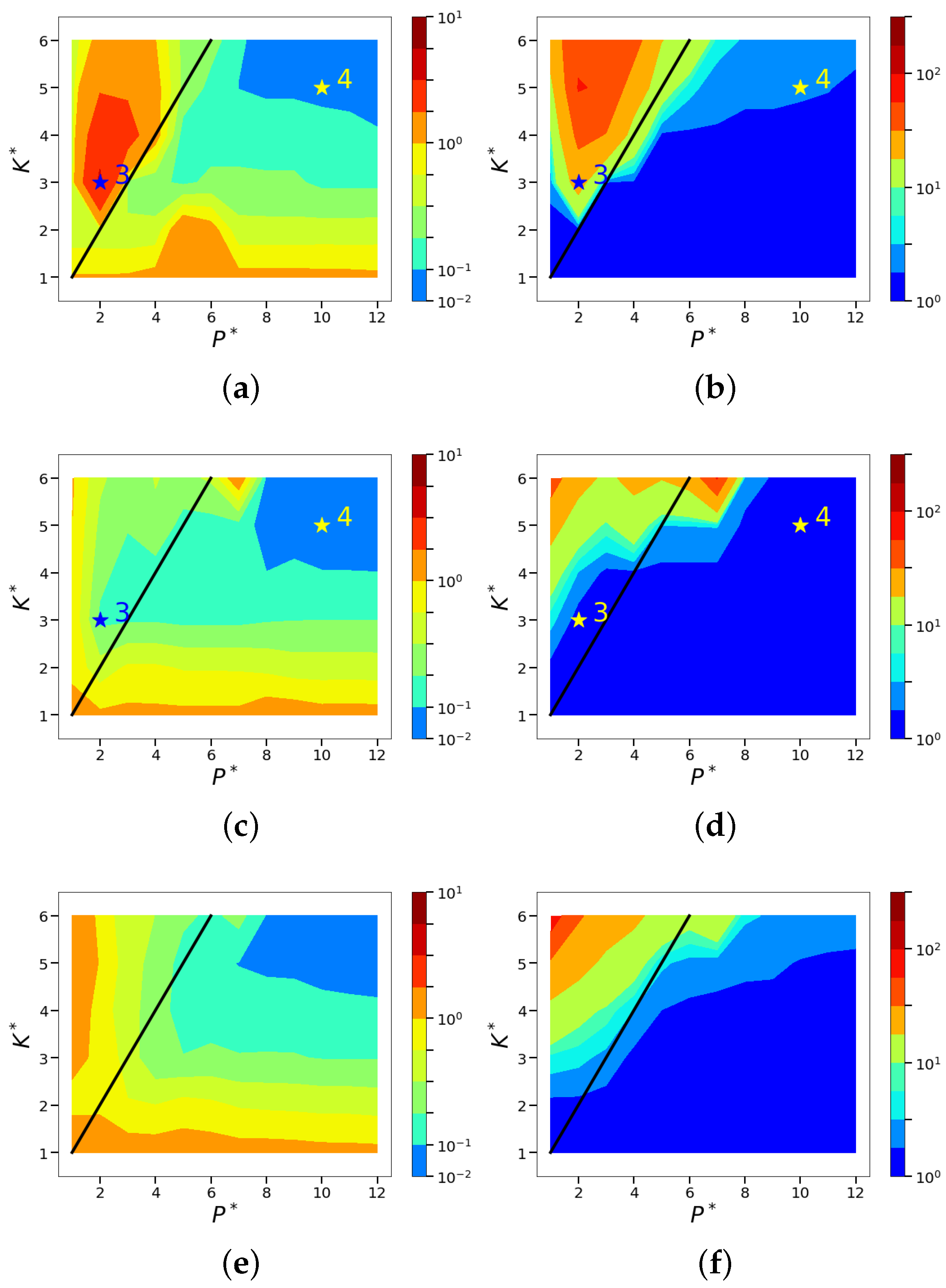

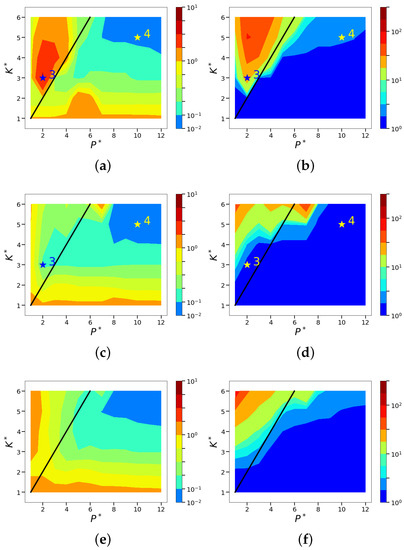

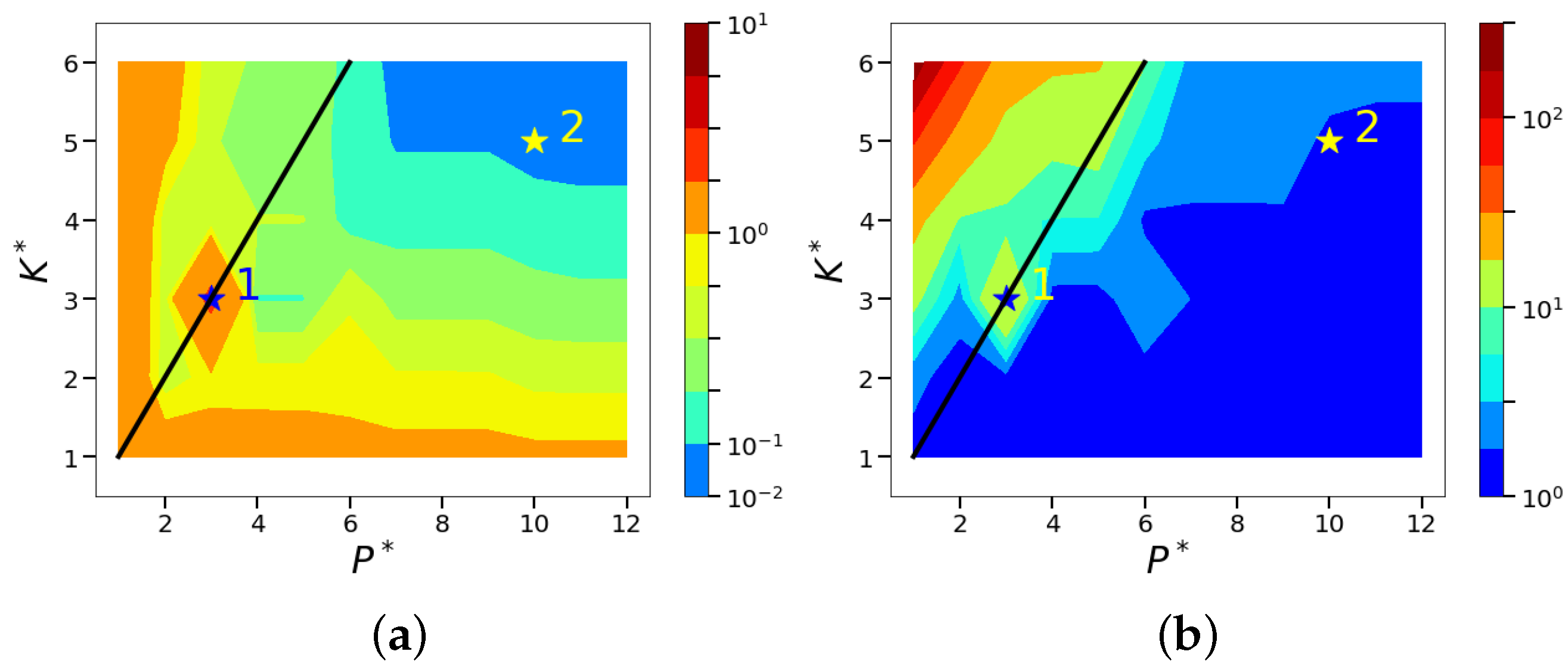

To generalize the error metrics computed in Figures 7 and 8, we consider an ensemble of ten different sensor placements generated with seeds 101 to 110. Figure 11 shows the error contours for the placements yielding the maximum and minimum errors across the entire design space, along with the average error over the ensemble. The maximum error is found for seed 108 and the minimum error for seed 109. The average error contours represent the typical SR outcome, with the anomalies of individual sensor placements averaged out.
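
The ensemble post-processing can be sketched as follows. We assume each seed yields an error field over the discrete design space (a 2-D array indexed by the sampled sensor and basis counts) and that seeds are ranked by their error averaged over that space; the ranking criterion, the function name `summarize_ensemble`, and the random placeholder fields are assumptions for illustration only.

```python
import numpy as np

def summarize_ensemble(error_fields):
    """error_fields: dict mapping seed -> 2-D array of SR error over the
    design grid. Returns (worst seed, best seed, pointwise mean field)."""
    seeds = list(error_fields)
    stack = np.stack([error_fields[s] for s in seeds])   # (n_seeds, n_i, n_j)
    score = stack.mean(axis=(1, 2))                       # assumed ranking criterion
    worst = seeds[int(np.argmax(score))]
    best = seeds[int(np.argmin(score))]
    return worst, best, stack.mean(axis=0)

# Placeholder error fields for seeds 101-110 (random numbers, not results)
rng = np.random.default_rng(0)
fields = {seed: rng.random((6, 8)) for seed in range(101, 111)}
worst, best, mean_field = summarize_ensemble(fields)
print(worst, best, mean_field.shape)
```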

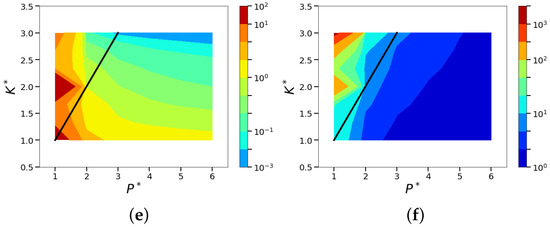

Figure 11.

Isocontours of the normalized mean squared POD-based sparse reconstruction errors (L2 norm) for the sensor placements with the maximum and minimum errors from the chosen ensemble of random sensor arrangements, together with the average error across the entire ensemble of ten random sensor placements. Left: normalized absolute error metric. Right: normalized relative error metric. (a) Absolute error (maximum); (b) relative error (maximum); (c) absolute error (minimum); (d) relative error (minimum); (e) absolute error (average); and (f) relative error (average).

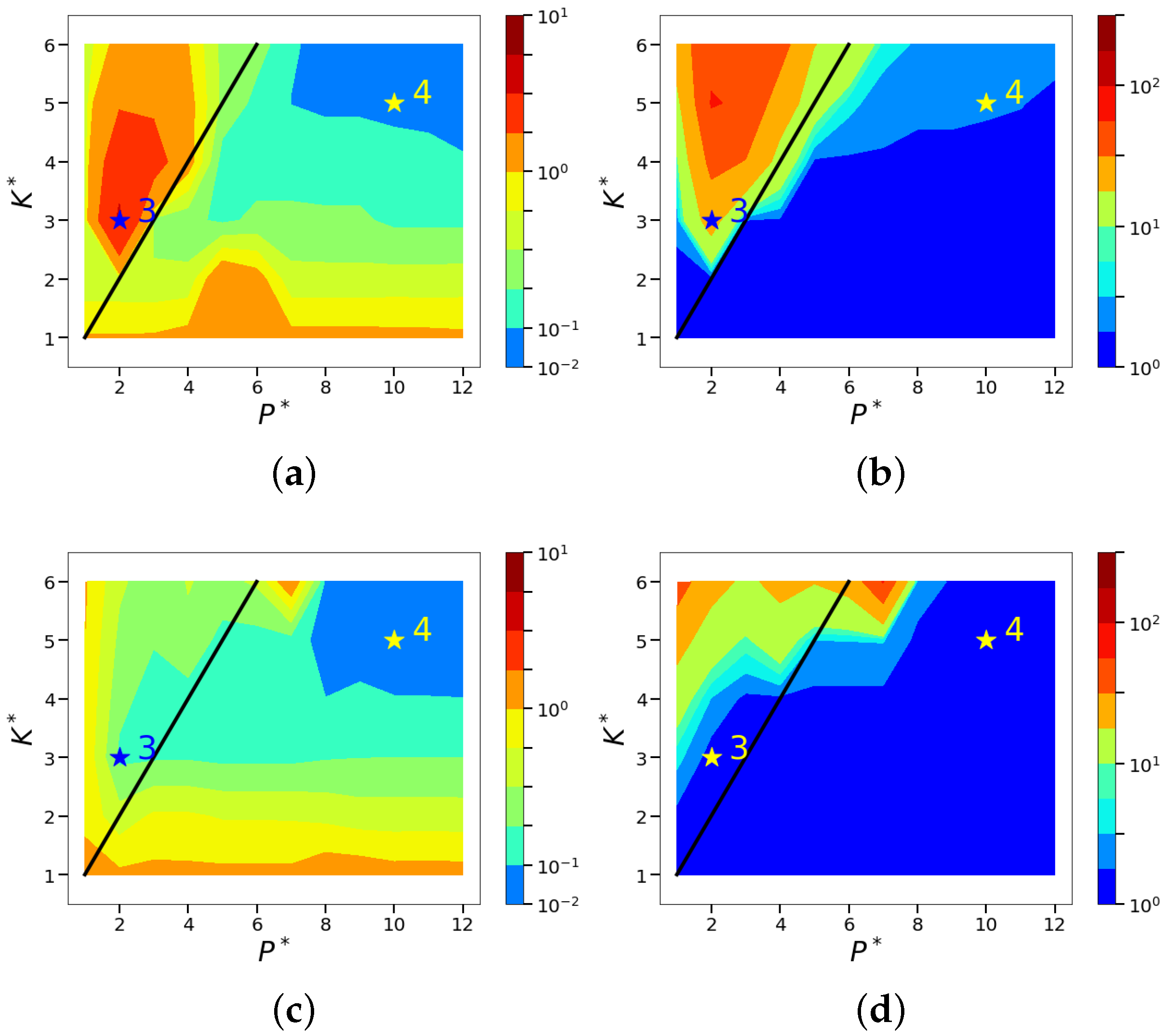

4.4. Sparse Reconstruction Using the ELM Basis

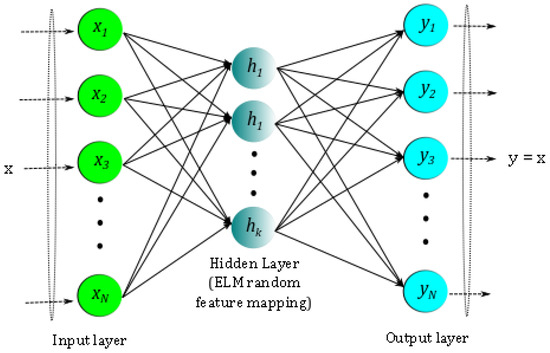

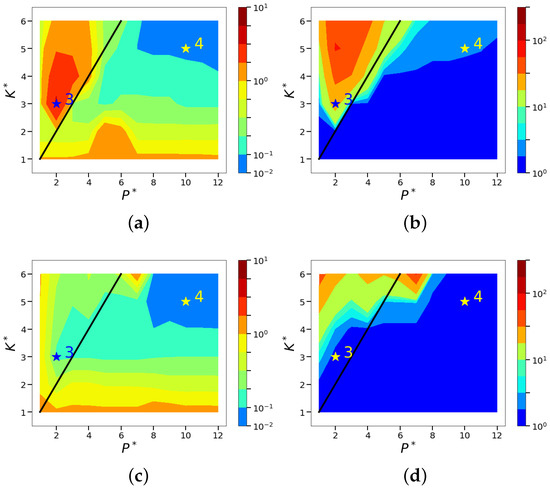

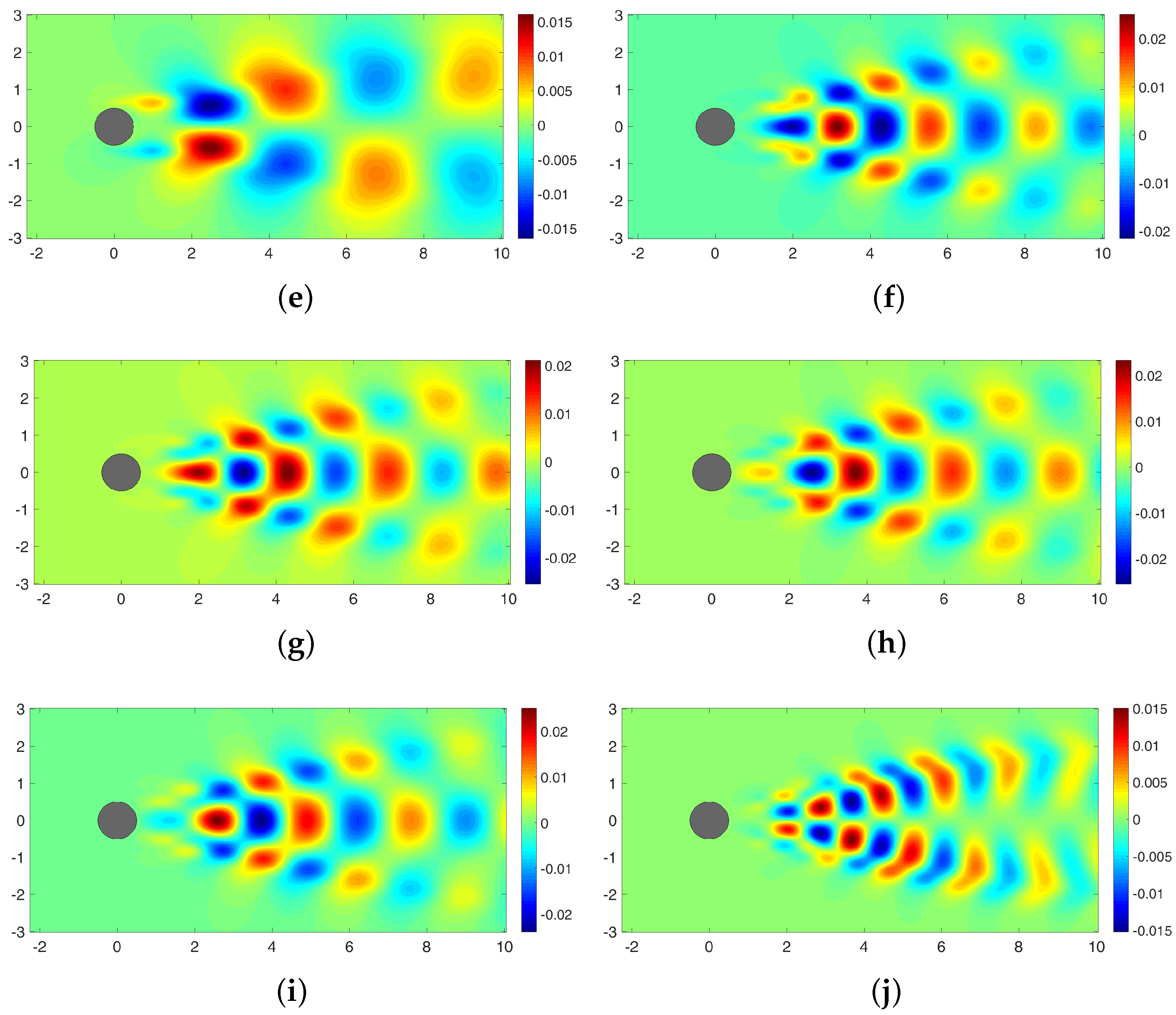

The previous section focused on the SR performance for the limit-cycle cylinder wake dynamics using the POD basis, which was shown to perform well if certain conditions are met, namely, a sufficient number of sensors and reasonable sensor placement. A key issue identified with POD-based SR for low-dimensional systems such as the cylinder wake is that, although it requires only a small number of sensors, it is sensitive to their placement. As observed in Section 4.2, ELM requires a greater number of basis vectors than POD for the same energy capture. While SR using the ELM basis is similar in concept, the non-orthogonality of the ELM basis and its relative lack of sparsity bring about certain differences. First, it was shown in Section 4.2 that there exist two different kinds of errors: a training error and a reconstruction error. If the ELM basis were orthogonal like the POD basis, these two errors (and the corresponding ELM features) would be equivalent. Second, the algorithm in Section 2.3 for computing the basis with an ELM autoencoder uses randomly chosen input weights to generate the K-dimensional hidden-layer features, which form the low-dimensional representation. Consequently, the realized ELM basis is not unique, unlike the POD basis for a given training data set. Our numerical experiments showed that the sensitivity of the reconstruction errors to this randomness in the ELM basis, while not severe, is perceptible. To analyze the performance of ELM-based SR, we carry out two types of analysis. The first explores the effect of randomness in the weights used in ELM training, while fixing the sensor placement. The second explores the effect of randomness in the sensor placement for a given ELM basis.
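
The non-uniqueness and non-orthogonality of the ELM basis can be illustrated with a small NumPy sketch. It assumes a single-hidden-layer ELM autoencoder with random Gaussian input weights and biases, a `tanh` activation, and output weights obtained by least squares; these choices, the helper `elm_basis`, and the synthetic data are hypothetical and need not match the algorithm of Section 2.3. We also assume that the training error uses the hidden-layer features `H`, while the reconstruction error uses features `A` obtained by least-squares projection onto the basis.

```python
import numpy as np

def elm_basis(X, K, seed):
    """Hypothetical ELM-autoencoder sketch. X: (N, M) snapshots.
    Random input weights map each snapshot to K hidden features
    H = tanh(W X + b); the output weights B solve X ~= B H in the
    least-squares sense and serve as a (non-orthogonal) basis."""
    rng = np.random.default_rng(seed)
    N = X.shape[0]
    W = rng.standard_normal((K, N)) / np.sqrt(N)   # random input weights
    b = rng.standard_normal((K, 1))                # random biases
    H = np.tanh(W @ X + b)                         # training features, (K, M)
    B = X @ np.linalg.pinv(H)                      # basis vectors as columns, (N, K)
    return B, H

# Synthetic stand-in data (rank 16), not the cylinder-wake snapshots
x = np.linspace(0.0, 2.0 * np.pi, 200, endpoint=False)
t = np.linspace(0.0, 10.0, 50)
X = sum(np.sin(k * x[:, None] - k * t + k) / k for k in range(1, 9))

B, H = elm_basis(X, K=10, seed=1)
A = np.linalg.pinv(B) @ X                          # least-squares (reconstruction) features
train_err = np.linalg.norm(X - B @ H) / np.linalg.norm(X)
recon_err = np.linalg.norm(X - B @ A) / np.linalg.norm(X)
G = B.T @ B                                        # Gram matrix: diagonal only if orthogonal
off_diag = np.linalg.norm(G - np.diag(np.diag(G))) / np.linalg.norm(G)
B_other, _ = elm_basis(X, K=10, seed=2)            # different weights -> different basis
print(f"train {train_err:.2e}  recon {recon_err:.2e}  off-diagonal Gram {off_diag:.2f}")
```

Because `A` is the least-squares optimum for the given basis, the reconstruction error can never exceed the training error; the two coincide only if the hidden-layer features already equal that projection. A nonzero off-diagonal Gram term confirms that the realized basis is not orthogonal, and a different weight seed yields a different basis.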

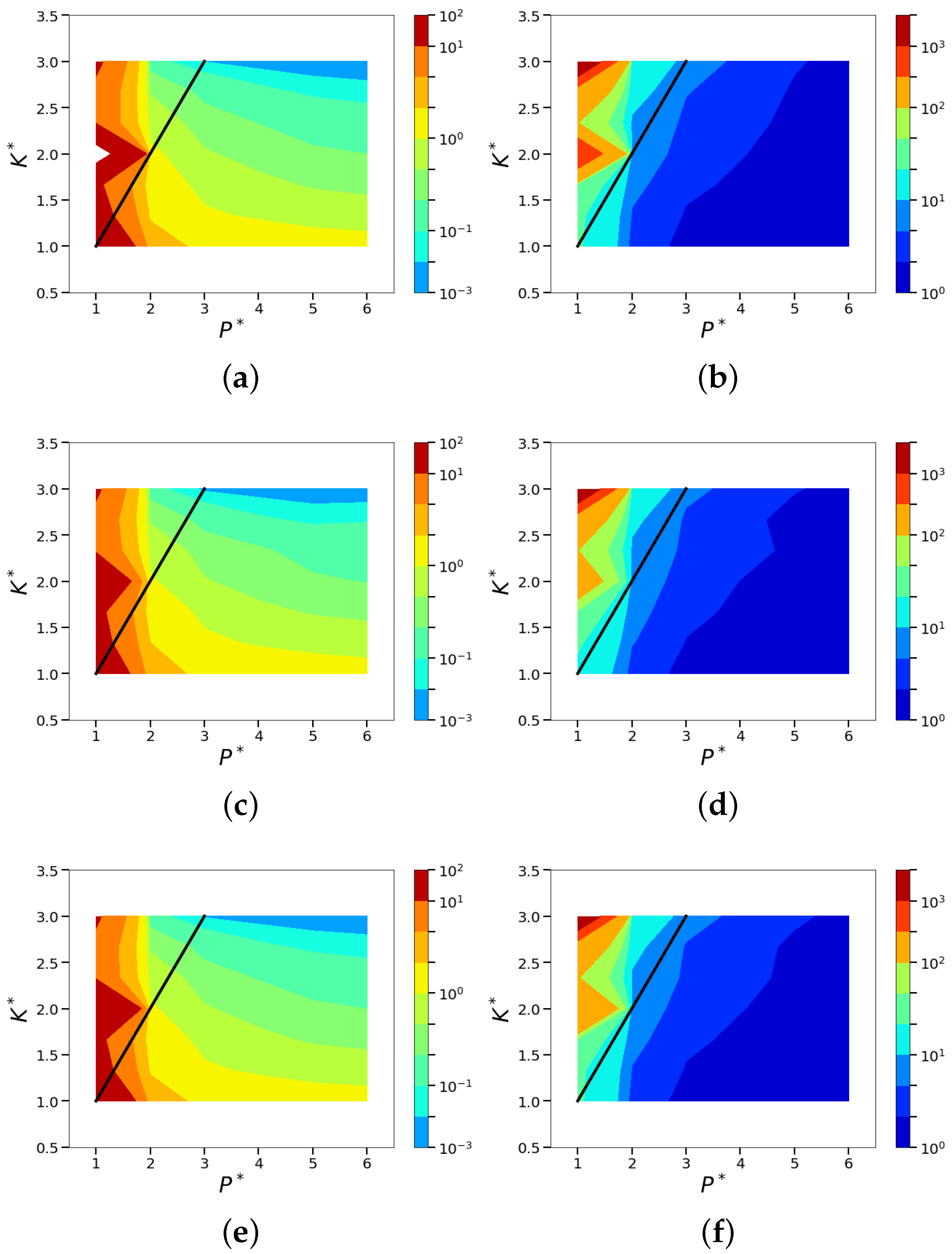

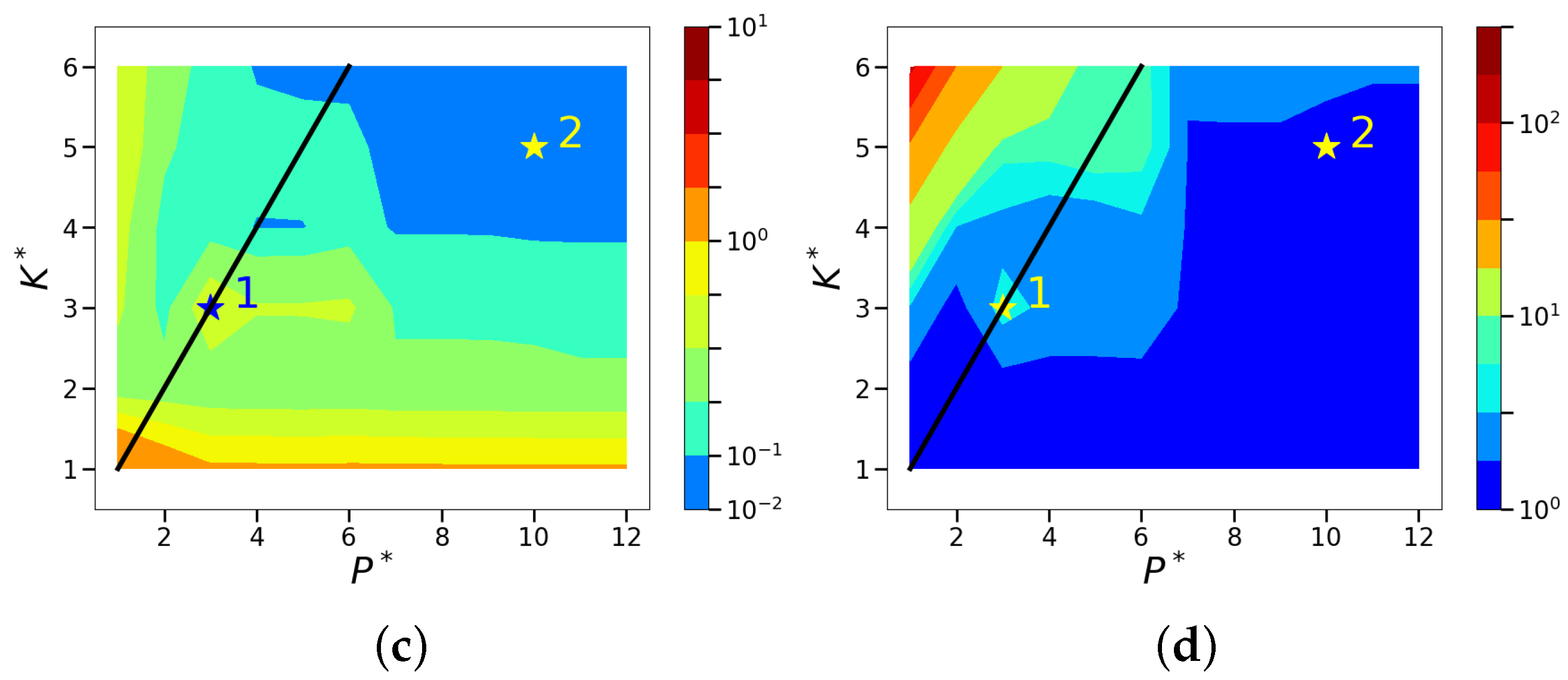

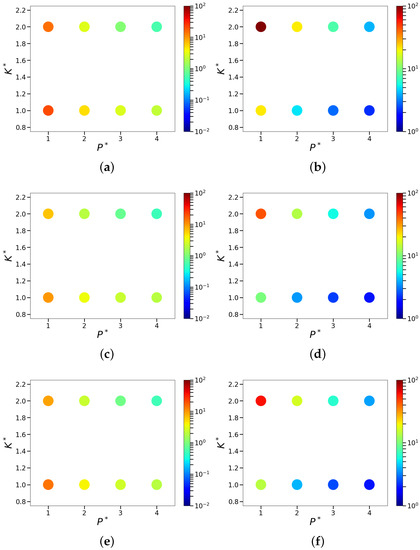

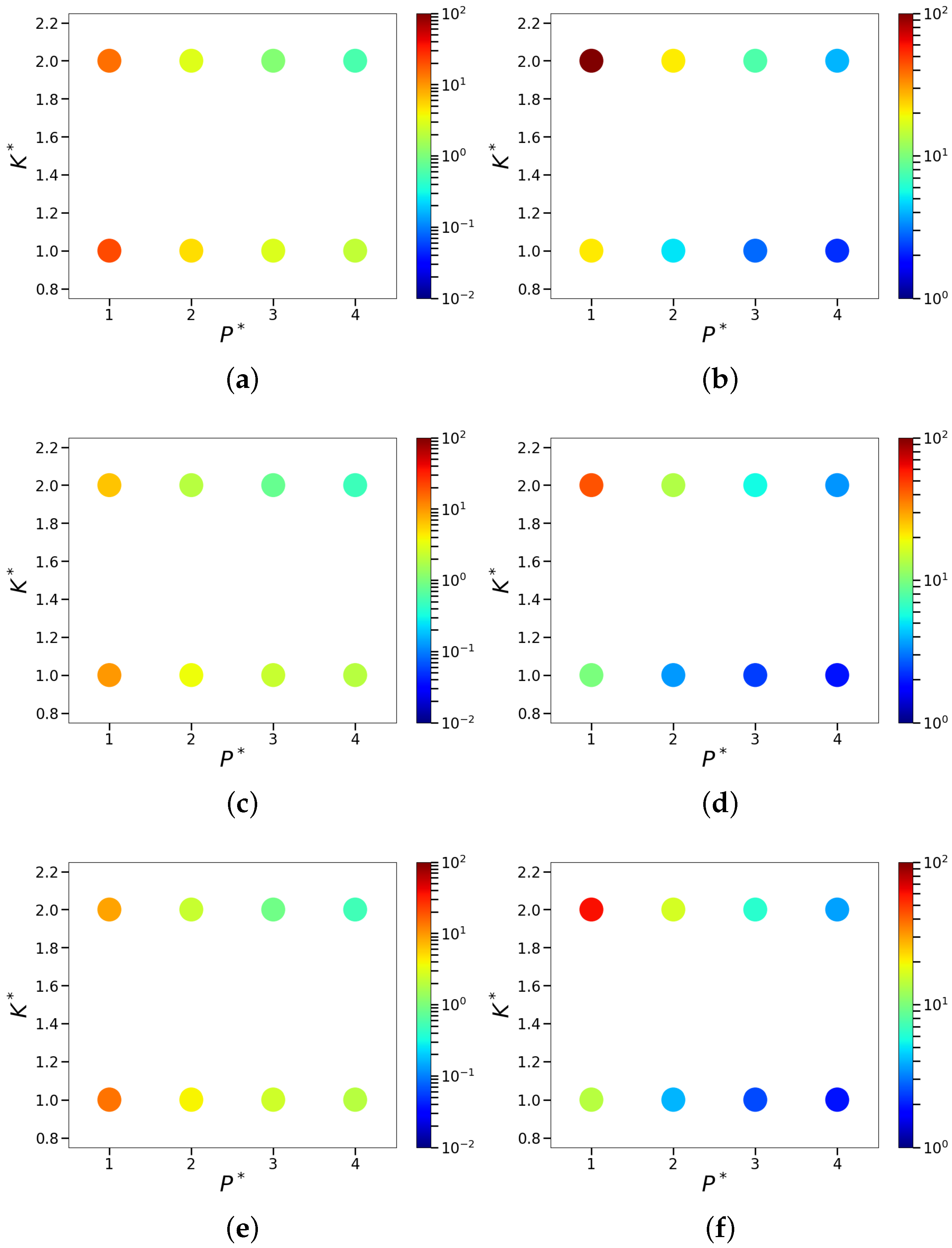

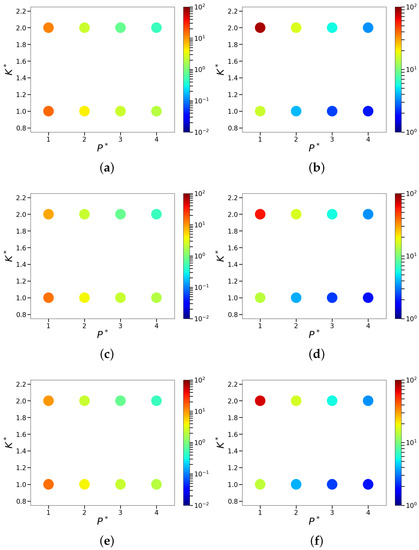

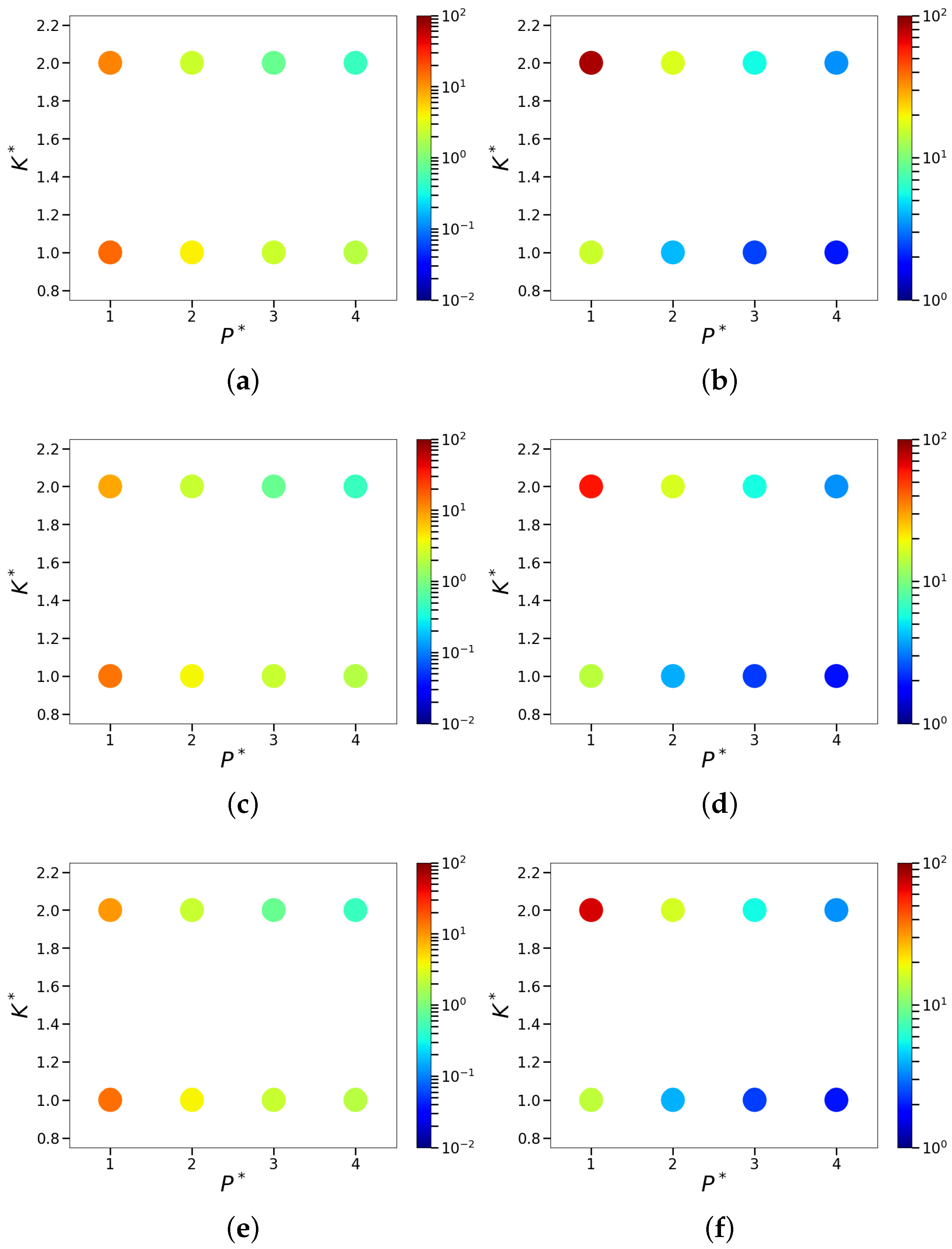

To accomplish the first, we compute the normalized absolute and relative ELM-based SR error metrics using ten different choices of the random input weights. The contours for the weight choices giving the maximum and minimum errors, together with the average error, are shown in Figure 12. These plots show very little sensitivity to the choice of random weights in the ELM training. Further, as for the POD-based SR, the region of good reconstruction is separated from the high-error region by the straight line along which the number of sensors equals the system dimension (P = K), indicating that similar normalized performance bounds exist for both POD (orthogonal basis) and ELM (non-orthogonal basis).
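
The boundary between the good and poor reconstruction regions reflects a rank condition that holds for any basis, orthogonal or not: the sampled basis matrix (the basis rows at the P sensor locations) must have full column rank K for the least-squares features to be unique, which is impossible when P < K. A minimal check is sketched below with a random orthonormal stand-in basis; the helper name `is_well_posed` is hypothetical. Note that P ≥ K is necessary but not sufficient, since poorly placed sensors can still yield a rank-deficient or ill-conditioned matrix.

```python
import numpy as np

def is_well_posed(Phi, sensors):
    """True if Theta = Phi[sensors] has full column rank K, so the
    least-squares feature estimate is unique."""
    Theta = Phi[np.asarray(sensors), :]
    return np.linalg.matrix_rank(Theta) == Phi.shape[1]

rng = np.random.default_rng(0)
N, K = 100, 10
Phi, _ = np.linalg.qr(rng.standard_normal((N, K)))   # stand-in orthonormal basis
for P in (5, 10, 20):
    sensors = rng.choice(N, size=P, replace=False)
    print(P, is_well_posed(Phi, sensors))
```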

Figure 12.

Isocontours of the normalized mean squared (L2) ELM-based sparse reconstruction errors for the choices of random input weights giving the maximum and minimum errors, together with the average over all choices. Left: normalized absolute error metric. Right: normalized relative error metric. (a) Absolute error (maximum); (b) relative error (maximum); (c) absolute error (minimum); (d) relative error (minimum); (e) absolute error (average); and (f) relative error (average).

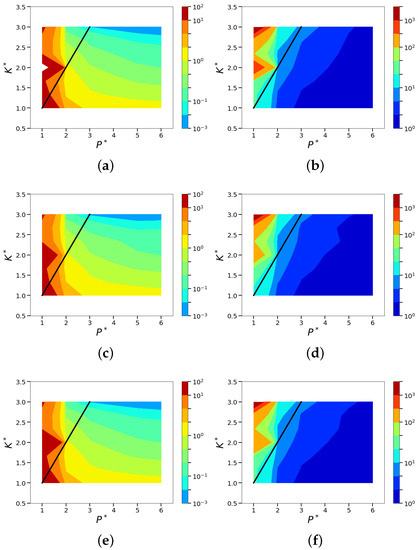

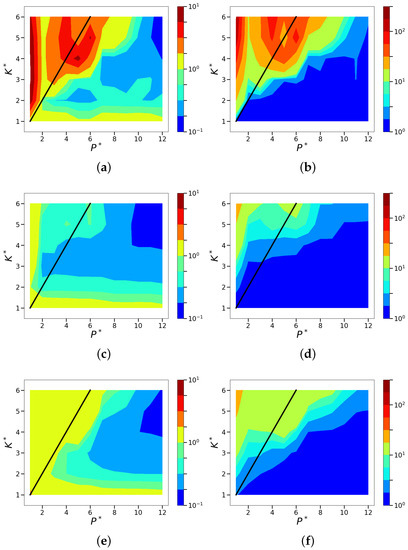

The second part of the ELM-based SR analysis assesses the effect of sensor placement on reconstruction performance for a fixed choice of random weights in the ELM training. To this end, we consider an ensemble of numerical simulations with ten different choices of sensor placement across the entire design space. Figure 13 shows the normalized error contours for the sensor placements yielding the maximum and minimum errors, together with the average error field over the entire ensemble. Once again, we observe accurate SR performance when there are at least as many sensors as basis vectors, which results in a well-posed reconstruction problem. Further, the choice of sensor placement has minimal impact on the overall SR error metrics. This is not surprising given that ELM requires more sensors than POD to achieve the same level of reconstruction accuracy. As verification, Figures A2 and A3 in Appendix A compare, respectively, the reconstruction of a single snapshot flow field and the corresponding ELM features for two different sensor placements. These two placements are the ones with the maximum and minimum errors in Figure 13 at the same design point. Visual inspection of the snapshots reconstructed from sparse and full data in Figure A2 reveals no significant errors relative to the full-data representation. However, the SR-predicted ELM features display strong sensitivity to sensor placement when compared with those from FR. In particular, for the sensor placement with seed 101 the ELM features are grossly incorrect, unlike those for seed 106. That the reconstructed field for seed 101 is nonetheless visually accurate is surprising, and we offer two possible explanations.
First, in ELM-based SR with random sensor placement, a significant number of the sensors land in the most dynamically relevant regions of the flow, so even if a few sensors are misplaced, their contribution to the overall error metric is much smaller than in POD-based SR. Second, the higher-dimensional ELM basis may contain redundant structures that make it relatively insensitive to non-optimal sensor placement. This suggests that the ELM basis space could be represented more compactly, possibly through orthogonalization, for improved performance, which will be explored in future work.
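
One possible route to the more compact representation mentioned above, offered here only as an assumption rather than as the authors' planned method, is to orthogonalize the ELM basis with an SVD and keep the fewest left singular vectors that retain a chosen fraction of its squared singular values. The sketch uses a deliberately redundant stand-in basis whose 12 columns span only 3 independent directions; the helper name `compact_basis` and the energy threshold are hypothetical.

```python
import numpy as np

def compact_basis(B, energy=1.0 - 1e-10):
    """Orthonormal basis for the dominant column space of a (possibly
    redundant, non-orthogonal) basis matrix B (N, K). Keeps the fewest left
    singular vectors whose squared singular values reach `energy`."""
    U, s, _ = np.linalg.svd(B, full_matrices=False)
    frac = np.cumsum(s**2) / np.sum(s**2)           # cumulative energy fraction
    r = min(int(np.searchsorted(frac, energy)) + 1, len(s))
    return U[:, :r]

rng = np.random.default_rng(0)
directions = rng.standard_normal((200, 3))
B = directions @ rng.standard_normal((3, 12))       # 12 redundant columns, rank 3
Q = compact_basis(B)
print(B.shape, "->", Q.shape)
```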

Figure 13.

Isocontours of the normalized mean squared (L2) ELM-based sparse reconstruction errors for the sensor placements giving the maximum and minimum errors, together with the average over all random sensor placements. Left: normalized absolute error metric. Right: normalized relative error metric. (a) Absolute error (maximum); (b) relative error (maximum); (c) absolute error (minimum); (d) relative error (minimum); (e) absolute error (average); and (f) relative error (average).

5. Conclusions

In this article, we explored the interplay of sensor quantity (P), sensor placement, and system dimensionality or sparsity (K) using data-driven POD and ELM bases with ℓ2-based sparse reconstruction (SR) of a cylinder wake flow. In particular, we sought to (i) determine whether the relationship between system dimensionality and sensor quantity required for accurate reconstruction of the flow is independent of the basis employed, and (ii) understand the relative influence of sensor placement for different choices of SR basis.

Regarding the first goal of this study, we observed that the choice of sparse basis plays a crucial role in SR performance, as it determines the system dimensionality or sparsity (K) and, in turn, the sensor quantity (P) needed for the problem to be well posed. Specifically, in terms of non-dimensional variables normalized by the characteristic system dimension of a given basis, the only requirement for reasonably accurate reconstruction was that the system be well posed, i.e., that there be at least as many sensors as basis vectors. This requirement turned out to be independent of the chosen basis. However, the POD/SVD basis provides optimal energy capture for a given training data set and allows for efficient energy-based SR. Further, unlike generic basis spaces such as Fourier or radial basis functions, the data-driven POD basis needs to be highly relevant to the flow, as it retains the K most energetic modes for reconstruction and implicitly generates the K-sparse solution for the given sensor locations. For the more generic ELM basis, relevance to the reconstructed flow is less important, but there is no inherent hierarchy among the basis vectors. Thus, when using a smaller number of sensors, one may need to employ regularized least-squares algorithms to obtain meaningful results. In addition, training the ELM network with partially random weights generates a new K-dimensional basis space every time. Because ELM bases are not designed to optimally capture the variance of the data, they are less parsimonious than the orthogonal POD basis and are non-orthogonal. For the data sets considered in this study, an order of magnitude more ELM basis vectors and sensors were needed than POD modes to achieve the same reconstruction accuracy.
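
As an example of a regularized least-squares solver for the under-sampled case, the sketch below uses Tikhonov (ridge) regularization, which yields a unique feature vector even when there are fewer sensors than basis vectors. This specific regularizer, the helper name `ridge_features`, and the value of `lam` are assumptions; other choices, such as sparsity-promoting ℓ1 minimization, are also possible.

```python
import numpy as np

def ridge_features(Theta, y, lam):
    """Tikhonov-regularized least squares:
    a = argmin ||Theta a - y||^2 + lam * ||a||^2
      = (Theta^T Theta + lam I)^{-1} Theta^T y, unique for any lam > 0."""
    K = Theta.shape[1]
    return np.linalg.solve(Theta.T @ Theta + lam * np.eye(K), Theta.T @ y)

rng = np.random.default_rng(0)
Theta = rng.standard_normal((5, 10))    # P = 5 sensors, K = 10 basis vectors (ill-posed)
y = rng.standard_normal(5)
a = ridge_features(Theta, y, lam=1e-3)
print(a.shape)
```

As `lam` tends to zero with a full-column-rank matrix, the estimate approaches the ordinary least-squares solution; larger values trade fidelity to the sensor data for a smaller feature norm.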

Regarding the second goal of this study, we observed that ELM-based SR was less sensitive to the choice of random sensor placement than POD-based SR. Because the POD basis is low-dimensional, the SR problem required very few sensors for accurate reconstruction of the cylinder wake dynamics, but displayed sensitivity to the choice of random sensor placement. To account for this sensitivity, all the error metrics reported in this article were ensemble averaged over multiple choices of sensor placement. The ELM basis, on the other hand, being relatively high dimensional, required substantially more sensors for accurate reconstruction and turned out to be less sensitive to the choice of random sensor placement. To illustrate this, we showed that the variability between the worst and best reconstructions across the different choices of sensor locations is insignificant and not discernible by eye. Therefore, we expect optimal sensor placement algorithms to offer more value in POD-based SR than in ELM-based SR. On a related note, Section 2.4 gives a brief overview of different classes of methods for estimating optimal sensor locations, which operate by modifying the structure of the matrix that maps the basis coefficients to the sensor measurements. Because they act on that matrix regardless of how the basis was obtained, these algorithms should be equally applicable to both POD- and ELM-based frameworks.
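
As one illustration of methods that act on the sampled basis matrix, the sketch below implements greedy QR column pivoting (applied to the transpose of the basis) to pick K sensor rows from an N × K basis; nothing in it depends on whether the basis is orthogonal. The helper name `qr_pivot_sensors` is hypothetical, and we do not claim this is one of the specific methods reviewed in Section 2.4. SciPy's `scipy.linalg.qr` with `pivoting=True` offers a library implementation of the same pivoting idea.

```python
import numpy as np

def qr_pivot_sensors(Phi):
    """Greedy QR column pivoting on Phi.T: returns K row indices of the
    (N, K) basis Phi, i.e., K candidate sensor locations.
    Assumes Phi has full column rank."""
    R = np.array(Phi, dtype=float)              # copy; row i = candidate sensor i
    chosen = []
    for _ in range(Phi.shape[1]):
        norms = np.linalg.norm(R, axis=1)
        norms[chosen] = -1.0                    # never pick the same row twice
        i = int(np.argmax(norms))
        chosen.append(i)
        q = R[i] / np.linalg.norm(R[i])         # unit direction of the pivot row
        R -= np.outer(R @ q, q)                 # remove that direction from all rows
    return chosen

rng = np.random.default_rng(0)
Phi, _ = np.linalg.qr(rng.standard_normal((100, 6)))   # stand-in basis
qr_sensors = qr_pivot_sensors(Phi)
rand_sensors = rng.choice(100, size=6, replace=False)
print("cond, QR-pivoted:", np.linalg.cond(Phi[qr_sensors]),
      "random:", np.linalg.cond(Phi[rand_sensors]))
```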

Author Contributions

B.J. conceptualized the research with input from A.A.-M. and C.L. A.A.-M. and C.L. developed the sparse reconstruction codes with input from B.J. B.J. and A.A.-M. analyzed the data. A.A.-M. and C.L. developed the first draft of the manuscript and B.J. edited the final written manuscript.

Funding

This research was supported by an Oklahoma State University start-up grant and through a Cooperative Research Agreement (CRA) with Baker Hughes General Electric, Oklahoma City, OK.

Acknowledgments

We acknowledge computational resources from Oklahoma State University High Performance Computing Center (HPCC). We also thank the anonymous reviewers for their detailed and thought-provoking comments that helped us improve the manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Comparison of Predicted ELM Features and Snapshot Reconstruction

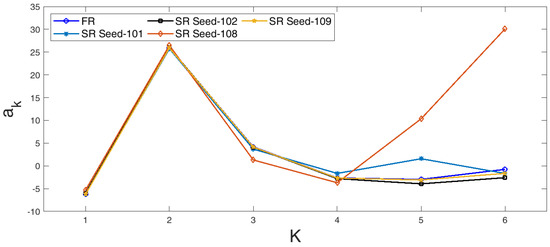

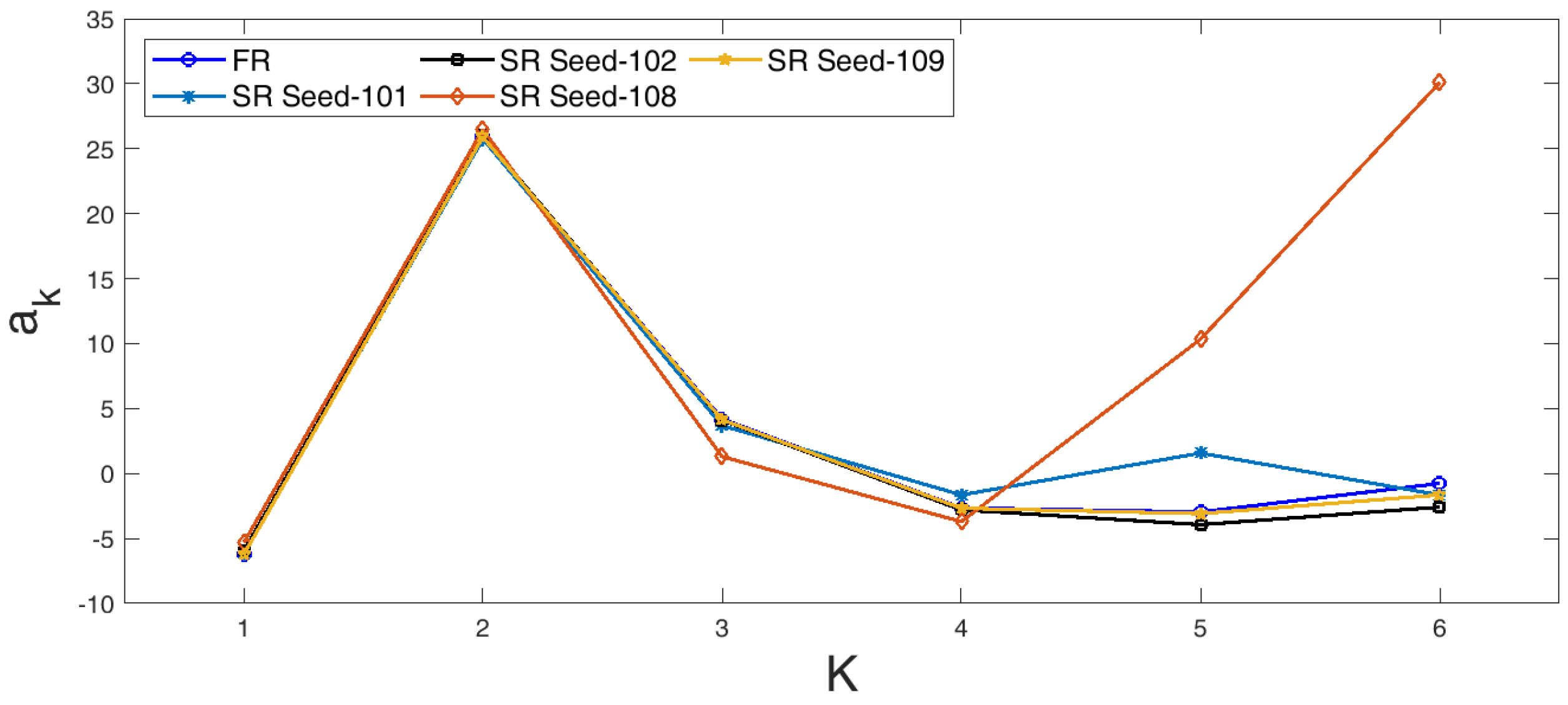

Figure A1.

Sparse reconstructed (SR) POD coefficients for different sensor placements compared with full reconstruction (FR) at a fixed design point.

Figure A2.

Comparison of ELM-based sparse reconstruction with full reconstruction flow fields for a single snapshot at T = 48.8, using random sensor placement with seed = 101 (maximum reconstruction error) and seed = 106 (minimum reconstruction error) out of ten different experiments. Blue solid line: FR. Red dashed line: SR. Black stars: sensor locations. (a) Seed = 101 (max error); and (b) Seed = 106 (min error).

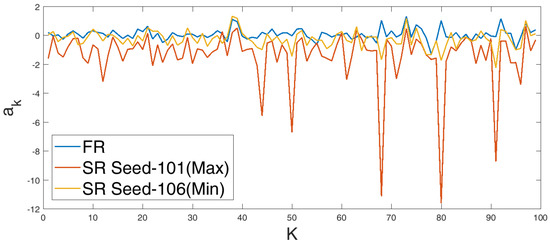

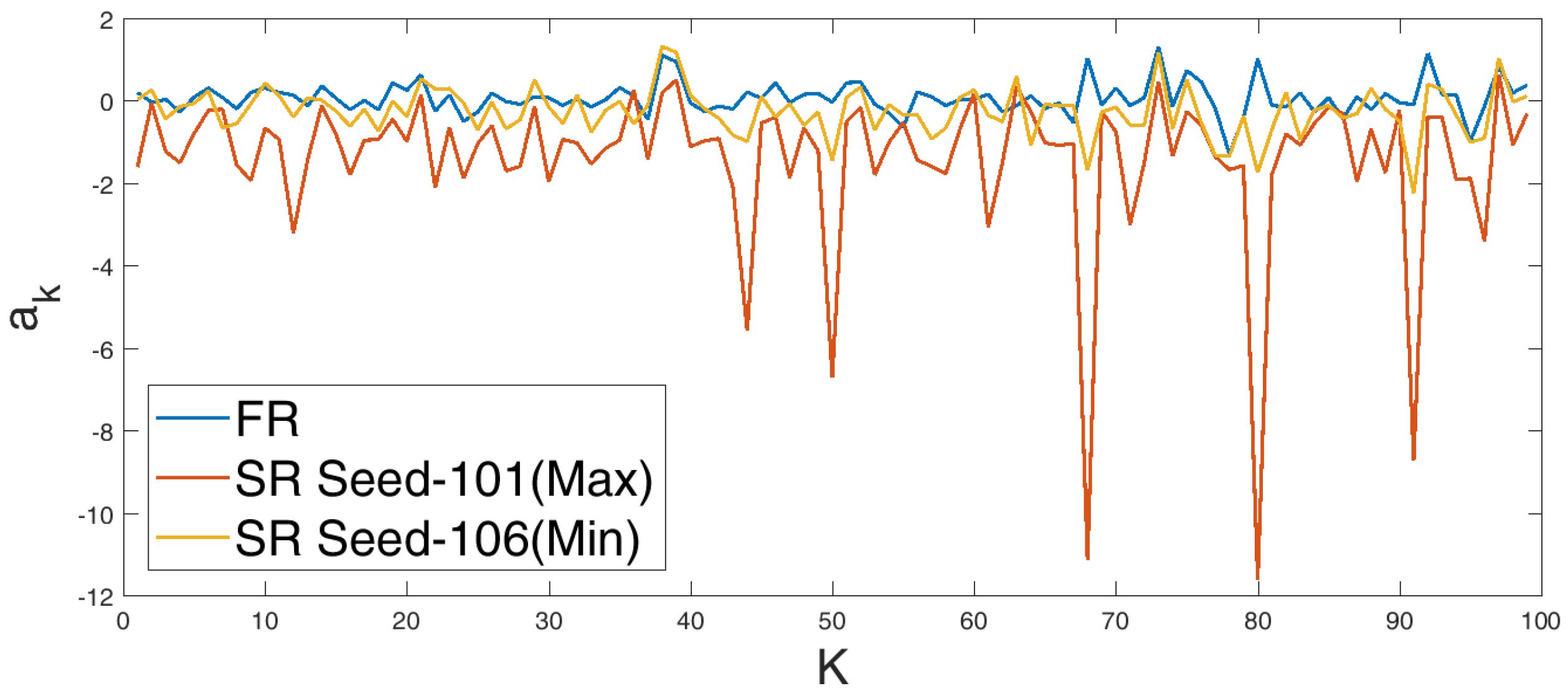

Figure A3.

Comparison of the sparse reconstructed (SR) ELM features with the corresponding full data reconstruction (FR) predicted features. The two SR cases correspond to sensor placement with maximum error (seed = 101) and minimum error (seed = 106).

Appendix B. Impact of Retaining the Data Mean for Sparse Reconstruction

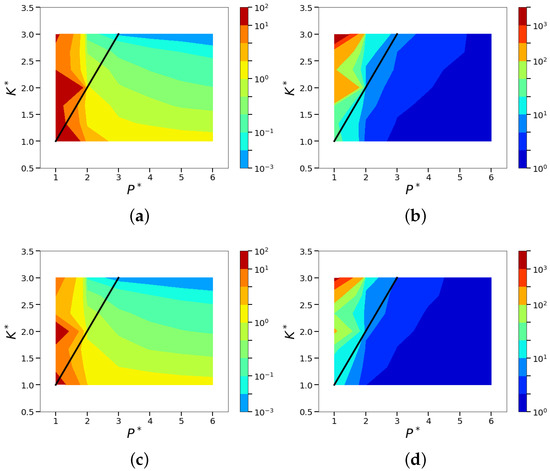

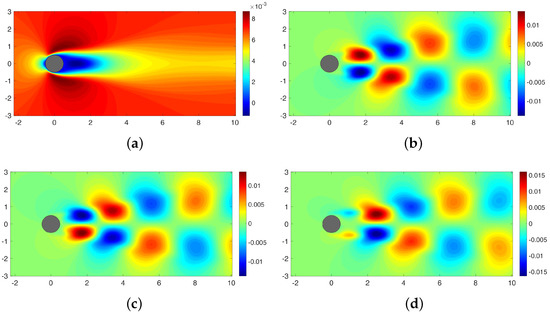

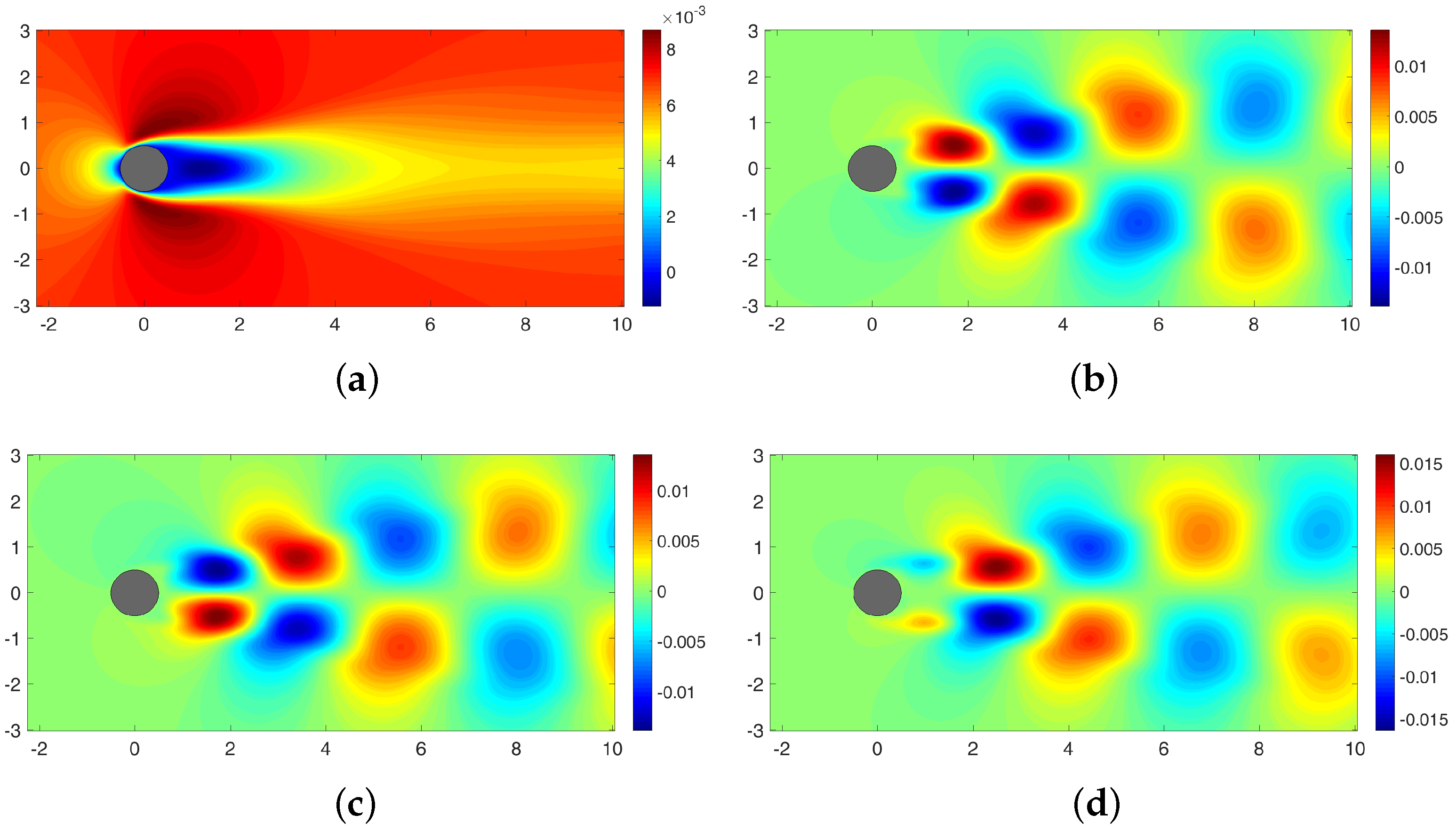

The SR framework described in the earlier sections of this article removed the mean before computing the data-driven basis, as a matter of choice. As mentioned in Section 2.2, this step is not critical to the success of the method. In this appendix, we compare the effect of retaining the mean, versus removing it, on the computed basis and the reconstruction performance. Figure A4 compares the first five POD modes computed from data with and without the mean. Retaining the mean (i.e., performing just the SVD step) generates an extra ‘mean’ POD mode that carries significant energy, while the rest of the basis is similar to that computed after removing the mean. In essence, this choice shifts the resulting energy spectrum. Figure A5 compares the normalized mean squared POD-based SR errors. Both variants of the SR framework yield similar error distributions over most of the design space. However, the SR with the mean retained shows slightly larger errors in one region of the design space.
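
The effect of retaining the mean can be reproduced on a toy problem where the analysis is exact. In the hypothetical data below, a steady field is superposed on a travelling wave sampled over whole periods, so the mean and the fluctuations are orthogonal; the SVD of the raw data then returns the normalized mean as its leading mode, followed by the same fluctuation subspace that the mean-removed SVD produces. The field shapes and the helper `leading_modes` are illustrative assumptions.

```python
import numpy as np

def leading_modes(X, K, remove_mean):
    """First K POD modes of X (N, M) and their energy fractions, with or
    without subtracting the temporal mean first."""
    if remove_mean:
        X = X - X.mean(axis=1, keepdims=True)
    U, s, _ = np.linalg.svd(X, full_matrices=False)
    energy = s**2 / np.sum(s**2)
    return U[:, :K], energy[:K]

# Hypothetical data: steady field 2 + cos(x) plus a wave sin(3x - t),
# sampled over whole spatial and temporal periods
x = np.linspace(0.0, 2.0 * np.pi, 128, endpoint=False)
t = np.linspace(0.0, 2.0 * np.pi, 64, endpoint=False)
mean_field = 2.0 + np.cos(x)
X = mean_field[:, None] + np.sin(3 * x[:, None] - t)

U_with, e_with = leading_modes(X, 3, remove_mean=False)
U_without, e_without = leading_modes(X, 2, remove_mean=True)

# Alignment of the first "with mean" mode with the normalized mean field
align = abs(U_with[:, 0] @ mean_field) / np.linalg.norm(mean_field)
# Do modes 2-3 (with mean) span the same space as modes 1-2 (without mean)?
resid = U_with[:, 1:3] - U_without @ (U_without.T @ U_with[:, 1:3])
print(f"mean-mode energy {e_with[0]:.3f}, alignment {align:.6f}, "
      f"subspace residual {np.linalg.norm(resid):.1e}")
```

In this toy case the mean mode takes 90% of the energy of the raw data, so every remaining energy fraction is scaled down accordingly, which is the spectrum shift described above.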

Figure A4.

Isocontours of the first five POD modes computed with and without removing the mean from the snapshot data for the cylinder wake flow at Re = 100. (a) 1st POD mode (with mean); (b) 1st POD mode (without mean); (c) 2nd POD mode (with mean); (d) 2nd POD mode (without mean); (e) 3rd POD mode (with mean); (f) 3rd POD mode (without mean); (g) 4th POD mode (with mean); (h) 4th POD mode (without mean); (i) 5th POD mode (with mean); and (j) 5th POD mode (without mean).

Figure A5.

Isocontours of the normalized mean squared POD-based sparse reconstruction errors (L2 norm). The top panels correspond to the case with the mean removed before learning the basis; the bottom panels correspond to the case with the mean retained. Left: normalized absolute error metric. Right: normalized relative error metric. (a) Absolute error (without mean); (b) relative error (without mean); (c) absolute error (with mean); and (d) relative error (with mean).

Figure A5.