Abstract

As digitalization is gradually transforming reality into Big Data, Web search engines and recommender systems are fundamental user experience interfaces to make the generated Big Data within the Web as visible or invisible information to Web users. In addition to the challenge of crawling and indexing information within the enormous size and scale of the Internet, e-commerce customers and general Web users should not stay confident that the products suggested or results displayed are either complete or relevant to their search aspirations due to the commercial background of the search service. The economic priority of Web-related businesses requires a higher rank on Web snippets or product suggestions in order to receive additional customers. On the other hand, web search engine and recommender system revenue is obtained from advertisements and pay-per-click. The essential user experience is the self-assurance that the results provided are relevant and exhaustive. This survey paper presents a review of neural networks in Big Data and web search that covers web search engines, ranking algorithms, citation analysis and recommender systems. The use of artificial intelligence (AI) based on neural networks and deep learning in learning relevance and ranking is also analyzed, including its utilization in Big Data analysis and semantic applications. Finally, the random neural network is presented with its practical applications to reasoning approaches for knowledge extraction.

1. Introduction

The Internet has enabled the direct connection between users and information. This has fundamentally changed how businesses operate, including the travel industry and e-commerce. The Internet provides real-time information and the direct purchase of services and products. Web users can directly buy flight tickets, hotel rooms, and holiday packs. Travel industry supply charges have been eliminated or decreased because the Internet has provided a shorter value chain; however, services or products not displayed within the higher order of web search engines or recommender systems lose tentative customers. A similar scenario also applies in academic and publication searches where the Internet has permitted the open publication and accessibility of academic research. Authors are able to avoid the conventional method of human evaluation of the journal and upload their work onto their personal websites. With the intention to expand the research contribution to a wider number of readers and be cited more, authors have the personal interest to show publications in high academic search rank orders.

Web search engines and recommender systems were developed as interfaces between users and the Internet to address the need for searching precise data and items. Although they provide a straight link between web users with the pursued products or wanted information, any web search result list or suggestion will be biased due profitable economic or personal interests along with by the users’ own inaccuracy when typing their queries or requests. Sponsored search enables the economic revenue that is needed by web search engines [1]; it is also vital for the survival of numerous web businesses and the main source of income for free to use online services. Multiple payment options adapt to different advertiser targets while allowing a balanced risk share among the advertiser and the web search engine for which the pay-per-click method is the widest used model.

Ranking algorithms are critical in the presented examples as they decide on the result relevance and order, therefore, marking data as transparent or nontransparent to e-commerce customers and general web users. Considering the web search commercial model, businesses or authors are interested in distorting ranking algorithms by falsely enhancing the appearance of their publications or items, whereas web search engines or recommender systems are biased to pretend relevance exists with respect to the rank in which they order results from explicit businesses or web sites in exchange for a commission or payment. The main consequence for a web user is that relevant products or results may be “hidden” or displayed at the very low order of the search list and unrelated products or results on a higher order.

Searching for information or meaning needs three elements: a universe formed of entities or ideas to be searched, a high-level query that specifies the properties or concepts requested by a user, and a method that searches and selects entities from the universe according to an algorithm or rule. The examples used by animals when they search in a large unknown space were investigated and the role of teamwork was discussed [2]; these ideas were then applied to the search for information when N concurrent “search agents” with similar characteristics are being used [3] establishing that the total average search time can be reduced by aborting and re-starting a search process after a pre-determined time-out if it has not been successful. The use of more concurrent agents can actually reduce the total energy costs, despite the increase in the number of agents, as well as the search times [4]. These results were confirmed in terms of large datasets distributed over large networks [5].

Artificial neural networks are models based on the brain within the central nervous system; they are usually presented as artificial nodes or “neurons” in different layers connected together via synapses. The learning properties of artificial neural networks have been applied to resolve extensive and diverse tasks that would have been difficult to solve by ordinary rules-based programming. Neural networks and artificial intelligence have also been applied to web searching in result ranking and relevance as a method to learn and adapt to variable user interests.

This paper presents a survey of web searches in Section 2, including internet assistants, web search engines, meta-search engines, web result clustering, travel services, and citation analysis. Ranking is described in Section 3; with ranking algorithms, relevance metrics and learning to rank. We define recommender systems in Section 4 and neural networks in web searches, learning to rank, recommender systems, and deep learning are analyzed in Section 5, including the random neural network and its application to web searches and ranking algorithms. Finally, conclusions are explained on Section 6, followed by the survey bibliography.

2. Web Search

With the development of the Internet, several user semantic interfaces, applications, and services have been proposed or developed to manage the increasingly greater volume of information and data accessible in the World Wide Web.

2.1. Internet Assistants

Internet assistants learn and adapt to variable user’s interests in order to filter and recommend information. These agents normally define a user as a set of weighted terms which are either explicitly introduced or implicitly extracted from the web browsing behavior. Relevance algorithms are determined by a vector space model that models both query and answer as an ordered vector of weighed terms. Web results are the parsed fragments obtained from either Web pages, documents or web results retrieved from different sources. The user provides explicit or implicit feedback on the results considered relevant or interesting. This is then used to adapt the weights of the term set profile.

Intelligent agents [6] are defined as a self-contained independent software module or computer program that performs simple and structurally repetitive automated actions or tasks in the representation of web users while cooperating with other intelligent agents or humans. Their attributes are autonomy, cooperation with other agents, and learning from interaction with the environment and the interface with users’ preferences and behavior. Intelligent agents [7] behave in a manner analogous to a human agent with autonomy, adaptability and mobility as desirable qualities. They have two ways to make the web invisible to the user: by abstraction where the used technology and the resources accessed by the agent are user-transparent, and by distraction, where the agent runs in parallel to the web user and performs tedious and complex tasks faster than would be possible for a human alone.

Spider Agent [8] is a metagenetic assistant to whom the user provides a set of relevant documents where the N highest frequency keywords form a dictionary which is represented as a N × 3 matrix. The first column of the dictionary contains the keywords, whereas the second column measures the total amount of documents that contain the keywords. Finally, the third column contains the sum frequency of the specific word over the overall documents. The metagenetic algorithm first creates a population of keyword sets from the dictionary based on three genetic operators: crossover, mutation, and inversion. Then it creates a population of logic operators sets (AND, OR, NOT) for each of the first populations. Spider Agent forms different queries by the combination of both populations and searches for relevant documents for each combination. The main concept is that different combinations of words in different queries may result in search engines providing additional different relevant results.

Syskill and Webert [9] helps users to select relevant web pages on specific topics where each user has a set of profiles, one for each topic, and web pages are rated as relevant or irrelevant. Syskill and Webert transforms the source code of the web page, based on the Hyper Text Markup Language (HTML), into a binary feature vector which designates the presence of words using a learning algorithm based on a naive Bayesian classifier. Letizia [10] is a web user interface agent that helps web browsing. Letizia learns user behavior and provides additional interesting web pages by exploring the current web page links where the user interest assigned to a web document is calculated as the reading time, the addition to favorites, or the click of a shown link.

WebWatcher [11] is a web tour guide agent that provides relevant web links to the user while browsing the web; it acts as a learning apprentice observing and learning interest from its user actions when selecting relevant links. WebWatcher uses reinforcement learning where the reward is the frequency of each the searched terms within the web page recommending web pages that maximize the reward path to users with similar queries.

Lifestyle Finder [12] is an agent that generates user profiles with a large-scale database of demographic data. Users and their interests are grouped by their input data according to their demographic information. Lifestyle Finder generalizes user profiles along with common patterns within the population; if the user data corresponds to more than one cluster, the demographic variables whose estimates are close with the entire corresponding groups generate a limited user profile. The demographic feature that best distinguishes the corresponding groups is utilized to ask the Web user for additional details where the final group of corresponding clusters is obtained after several user iterations.

2.2. Web Search Engines

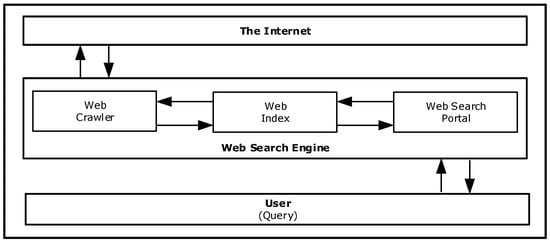

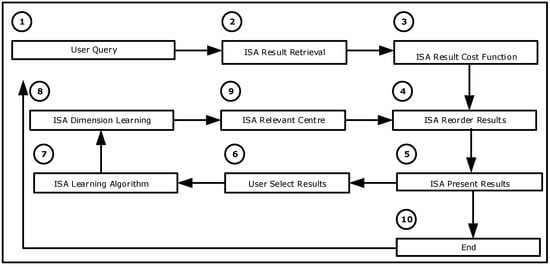

Internet assistants have not been practically adopted by Internet users as an interface to reach relevant information. Instead, web search engines are the preferred option as the portal between users and the Internet. Web search engines are software applications that search for information in the World Wide Web while retrieving data from web sites and online databases or web directories. Web search engines have already crawled the web, fetched its information, and indexed it into databases so when the user types a query, relevant results are retrieved and presented promptly (Figure 1). The main issues of web search engines are result overlap, rank relevance, and adequate coverage [13] for both sponsored and non-sponsored results.

Figure 1.

Web search engine architecture.

Web personalization builds the user’s interest profile by using their browsing behavior and the content of the visited web pages to increase result extraction and rank efficiency in web searches. A model [14] that represents a user’s needs and its search context is based on content and collaborative personalization, implicit and explicit feedback, and contextual search. A user is modeled by a set of terms and weights [15] related to a register of clicked URLs with the amount of visits to each, and a set of previous searches and web results visited; this model is applied to re-order the web search results provided by a non-personalized web search engine. Web queries are associated to one or more related web page features [16] and a group of documents is associated with each feature; a document is both associated with the feature and relevant to the query. These double profiles are merged to attribute a web query with a group of features that define the user’s search relevance; the algorithm then expands the query during the web search by using the group of categories. There are different methods to improve the personalized web search based on the increment of the diversity of the top results [17] where personalization comprises the re-ranking of the first N search results to the probable order desired by the user and query reformulations are implemented to include variety; the three diversity methods proposed are: the most frequent selects the queries that most frequently precede the user query; the maximum result variety choses queries that have been frequently reformulated but distinct from the already chosen queries; and finally, the most satisfied selects queries that usually are not additionally reformulated, but they have a minimum frequency.

2.2.1. Spatial Search Variation

Spatial variation based on information from web search engine query records and geolocation methods can be included in web search queries [18] to provide results focused on marketing and advertising, geographic information, or local news; accurate locations are assigned to the IP addresses that issue the queries. The center of a topic is calculated by the physical areas of the users searching for it where a probabilistic model calculates the greatest probability figure for a geographic center and the geographical dispersion of the query importance. Aspects for a web query are calculated [19] as an effective tool to explore a general topic in the web; each aspect is considered as a set of search terms which symbolizes different information requests relevant to the original search query. Aspects are independent from each other while having a high combined coverage, two sources of information are combined to expand the user search terms: query logs and mass collaboration knowledge databases, such as Wikipedia. Spatial search variation can also be combined with time search variation [20] where a user can specify both the temporal and spatial attributes in addition to spatial attributes of the geographic entities in a time-specific regional web search system

2.2.2. Time Search Variation

Time is a key consideration role in Web search because web queries may be time-related: user relevance varies with time and most web pages contain temporal information. The web is not a static environment, its information changes constantly; relevant pages in the past may not be relevant pages at the present or in the future; showing new and relevant results to users is key as users want the latest information. The inclusion of time in web searches presents three issues [21]: the extraction of implicit temporal expressions from web pages, the determination of the precise and explicit time from the extracted temporal information, and the integration of the precise time into a search engine. Current web ranking techniques favor older pages because these pages have many in-links accumulated over time whereas new pages, which may be highly relevant, have few or no in-links, therefore, showing a lower rank [22]. In order to address this issue a timed PageRank is proposed with an aging factor weight that penalizes older pages. The best results of temporally-dependent searches change over time, therefore, these results should be fresh and most current [23], and a web search engine must be able to detect that certain queries have an implicit temporal intent and use this information to improve the search quality. Factual information associated with some targeted entity, such as time [24], can be represented as snippets and considered as a document sentence fragment that encodes the full length of the web page. Offering the user a comprehensive temporal perspective of a topic by clustering relevant temporal web search results is intuitively more informative than retrieving a result that only contains topical information [25]. A concept can be annotated with temporal, precise, and structured information that reflects its explicit and complex meanings to be used in trend analysis, aspect exploration and query suggestion [26]; the temporal semantic context can help users to learn, understand, and search unfamiliar or newly emerged concepts on the web, such as LinkedIn or Wikipedia, without any need for any prior knowledge, such as ontology or a hierarchical knowledge base.

2.3. Metasearch Engines

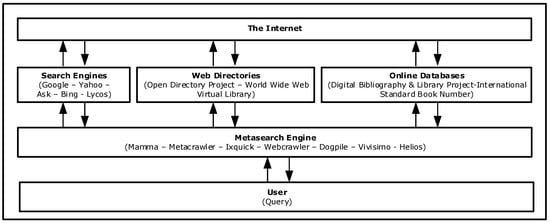

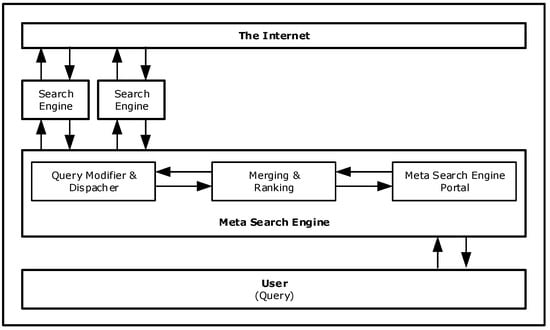

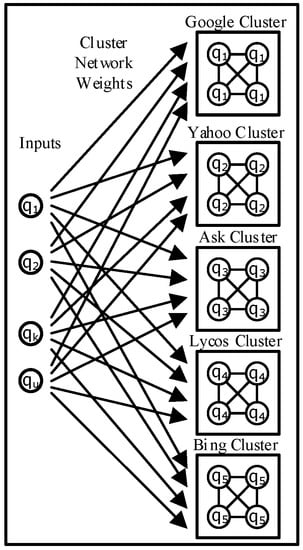

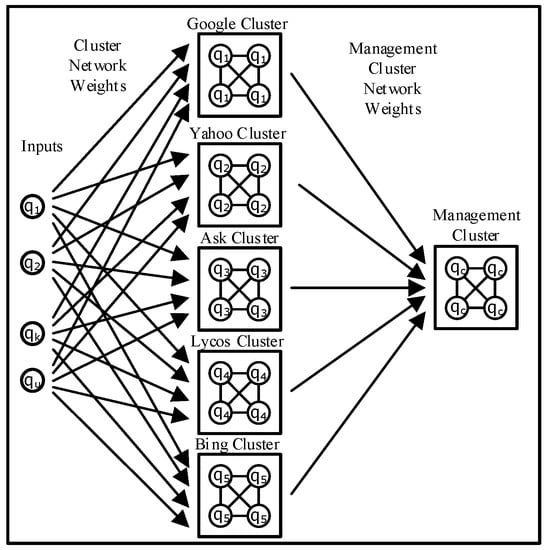

Metasearch engines were developed based on the concept that single web search engines were not able to crawl and index the entire web. While web search engines are useful for finding specific information, like home pages, they may be less effective with a comprehensive search or wide queries due to their result overlap and limited coverage area. Metasearch engines try to compensate their disadvantages by sending user queries to different web search engines simultaneously, databases, web directories, and digital libraries and combining their results into a single ranked list (Figure 2 and Figure 3). The main operational difference with web search engines is that metasearch engines do not index the web.

Figure 2.

Metasearch service model.

Figure 3.

Metasearch engine architecture.

There are challenges for developing metasearch engines [27]: different web search engines are expected to provide relevant results which must be selected and combined. Different parameters shall be considered when developing a metasearch engine [28]: functionality, working principles—including querying, collection, and fusion of results—architecture, and underlying technology, growth, evolution, and popularity. Numerous metasearch architectures can be found [29] such as Helios, Tadpole, and Treemap, with different query dispatcher, result merger, and ranking configurations. Helios [30] is an open-source metasearch engine that runs above different web search engines where additional ones can be flexibly plugged into the architecture. Helios retrieves, parses, merges, and reorders results given by the independent web search engines.

An extensive modular metasearch engine with automatic search engine discovery [31] that incorporates several autonomous search engines is based on three components: automatic web search engine recognition, automatic web search engine interconnection, and automatic web search result retrieval. The solution crawls and fetches web pages choosing the web search engine ones, which, once discovered, are connected by parsing the HTML source code, extracting the form parameters and attributes, and sending the query to be searched. Finally, URLs or snippets provided by the different web search engines are extracted and displayed to the web user.

There are several rank aggregation metasearch models to combine results in a way that optimizes their relevance position based on the local result ranks, titles, snippets, and entire Web pages. The main aggregation models are defined as [32]: Borda-fuse is founded on the Borda Count, a voting mechanism on which each voter orders a set of web results; the web result with the most points wins the election. The Bayes-fuse is built on a Bayesian model of the probability of web result relevance. The difference between the probability of relevance and irrelevance, respectively, determines relevance of a web Page based on the Bayes optimal decision rule. Rank aggregation [33] can be designed against spam, search engine commercial interest, and coverage.

The use of the titles and associated snippets [34] can produce a higher success than parsing the entire web pages where the effective algorithm of a metasearch engine for web result merging outperforms the best individual web search engine. A formal approach to normalize scores for metasearch by balancing the distributions of scores of the first irrelevant documents [35] is achieved by using two different methods: the distribution of scores of all documents and the combination of an exponential and a Gaussian distribution to the ranks of the documents where the developed exponential distribution is used as an approximation of the irrelevant distribution.

Metasearch engines provide personalized results using different approaches [36]. The first method provides distinct results for users in separate geographical places where the area information is acquired for the user and web page server. The second method has a ranking method where the user can establish the relevance of every web search engine and adjusts its weight in the result ranking in order to obtain tailored information. The third methods analyses web domain structures of web pages to enable the user the possibility to limit the amount of web pages with close subdomains.

Results from different databases present in the web can also be merged and ranked [37] with a representative database that is highly scalable to a large number of databases and representative for all local databases. The method assigns only a reduced but relevant number of databases for each query term where single-term queries are assigned the right databases and multi-term queries are examined to extract dependencies between them in order to generate phrases with adjacent terms. Metasearch performance against web search has been widely studied [38] where the search capabilities of two metasearch engines, Metacrawler and Dogpile, and two web search engines, Yahoo and Google, are compared.

2.4. Web Result Clustering

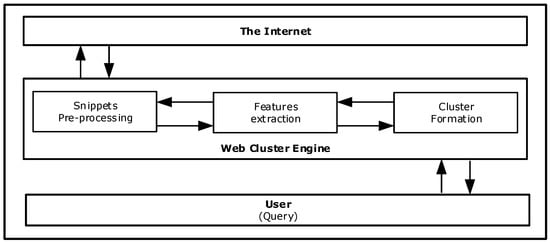

Web result clustering groups results into different topics or categories in addition to web search engines that present a plain list of result to the user where similar topic results are scattered. This feature is valuable in general topic queries because the user gets the theme bigger picture of the created result clusters (Figure 4). There are two different clustering methods; pre-clustering calculates first the proximity between web pages and then it assigns the labels to the defined groups [39] whereas post-clustering discovers first the cluster labels and then it assigns web pages to them [40]. Web clustering engines shall provide specific additional features [41] in order to be successful: fast subtopic retrieval, topic exploration and reduction of browsing for information. The main challenges of web clustering are overlapping clusters [42], precise labels [43], undefined cluster number [44], and computational efficiency.

Figure 4.

Web cluster engine architecture.

Suffix tree clustering (STC) [39] is an incremental time method which generates clusters using shared phrases between documents. STC considers a web page as an ordered sequence of words and uses the proximity between them to identify sets of documents with common phrases between them in order to generate clusters. STC has three stages: document formatting, base clusters identification, and its final combination into clusters.

The Lingo algorithm [40] finds first clusters utilizing the vector space model to create a “T×D term document matrix where T is the number of unique terms and D is the number of documents”. A singular value decomposition method is used to discover the relevant matrix orthogonal basis where orthogonal vectors correspond to the cluster labels. Once clusters are defined documents are assigned to them.

A cluster scoring function and selection algorithm [42] overcomes the overlapping cluster issue; both methods are merged with suffix tree clustering to create a new clustering algorithm called extended suffix tree clustering (ESTC) which decreases the amount of the clusters and defines the most useful clusters. This cluster scoring function relies on the quantity of different documents in the cluster where the selection algorithm is based on the most effective collection of clusters that have marginal overlay and maximum coverage; this makes the greatest amount of different clusters where most of the documents are covered in them.

A cluster labelling strategy [43] combines intra-cluster and inter-cluster term extraction where snippets are clustered by mapping them into a vector space based on the metric k-center assigned with a distance function metric. To achieve an optimum compromise between defining and distinctive labels, prospect terms are selected for each cluster applying an improved model of the information gain measurement. Cluster labels are defined as a supervised salient phrase ranking method [44] based on five properties: “sentence frequency/inverted document frequency, sentence length, intra-cluster document similarity, cluster overlap entropy, and sentence independency” where a supervised regression model is used to extract possible cluster labels and human validators are asked to rank them.

Cluster diversification is a strategy used in ambiguous or broad topic queries that takes into consideration its contained terms and other associated words with comparable significance. A method clusters the top ranked web pages [45] and those clusters are ordered following to their importance to the original query however diversification is limited to documents that are assigned to top ranked clusters because potentially they contain a greater number of relevant documents. Tolerance classes approximate concepts in web pages to enhance the snippets concept vector [46] where a set of web pages with similar enhanced terms are clustered together. A technique that learns relevant concepts of similar topics from previous search logs and generates cluster labels [47] clusters web search results based on these as the labels may be better than those calculated from the current terms of the search result.

Cluster hierarchy groups data over a variety of scales by creating a cluster tree. A hierarchy of snippets [48] is based on phrases where the snippets are assigned to them; the method extracts all salient phrases to build a document index assigning a cluster per salient phrase; then it merges similar clusters by selecting the phrase with highest number of indexing documents as the new cluster label. Finally, it assigns the snippets whose indexing phrases belong to the same cluster where the remaining snippets are assigned to neighbors based on their k-nearest distance.

Web search results are automatically grouped through semantic, hierarchical, online clustering (SHOC) [49]. A web page is considered as a string of characters where a cluster label is defined as a meaningful substring which is both specific and significant. The latent semantic of documents is calculated through the analysis of the associations between terms and web pages. Terms assigned with the same web page should be close in semantic space, the same as the web pages assigned with the same terms. Densely-allocated terms or web pages are close to each other in semantic space, therefore, they should be assigned to the same cluster. Snake [50] is a clustering algorithm based on two information databases. The anchor text and link database assigns each web document to the terms contained in the web document itself and the words included in the anchor text related to every link in the document, the semantic database ranks a group of prospect phrases and choses the most significant ones as labels. Finally, a hierarchical organizer combines numerous base clusters into a few super-clusters. A query clustering approach [51] calculates the relevance of web pages using previous choices from earlier users, the method applies a clustering process where collections of semantically-similar queries are identified and the similarity between a couple of queries is provided by the percentage of shared words in the clicked URL within the web results.

2.5. Web Search Business Case 1: Travel Services

Originally, travel service providers, such as airlines or hotels, used global distribution systems to combine their offered services to travel agencies. Global distribution systems required high investments due to their technical complexity and physical dimensions as they were mainframe-based infrastructure; this generated the monopoly of a few companies that charged a high rate to the travel services providers in order to offer their services to the travel agencies [52]. With this traditional model, customers could purchase travel provider services directly at their offices or through a travel agent. This scenario has now been changed by two factors: the Internet has enabled e-commerce—the direct accessibility of customers to travel services providers’ information in real-time with the availability of online purchase [53]—and higher computational servers and software applications can implement the same services as the global distribution systems did, at a lower cost.

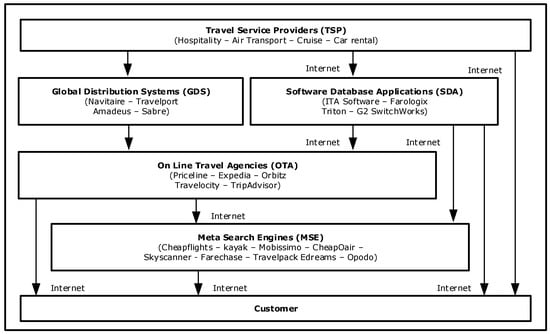

As a consequence, new players have entered to this scenario [54]; software database applications, such as ITA Software or G2 SwitchWorks, use high computational server applications and search algorithms to process the data provided from travel services providers; they are the replacement of the global distribution systems. Online travel agents, such as Expedia or TripAdvisor, are traditional travel agents that have automatized the global distribution systems interface: customers can interact with them directly through the Internet and buy the final chosen product. Metasearch engines like Cheapflights or Skyscanner, among many others, use the Internet to search customers’ travel preferences within travel services providers and online travel agents however customers cannot purchase products directly; they are mainly referred to the supplier web site (Figure 5).

Figure 5.

Metasearch engine architecture.

Other players that have an active part in this sector are Google and other web search engines [55]; they provide search facilities that connect directly travel service providers with consumers bypassing global distribution systems, software distribution systems, Metasearch engines and online travel agents. This allows the direct product purchase that can reduce distribution costs due to a shorter value chain. Travel ranking systems [56] also recommend products and provide the greatest value for the consumer’s budget with a crucial concept where products that offer a higher benefit are ranked in higher positions.

With the integration of the customer and the travel service provider through the Internet, different software applications have been developed to provide extra information or to guide through the purchase process [57] based on user interactions. Travel related web interfaces [58] help users to make the process of planning their trip more entertaining and engaging while influencing users’ perceptions and decisions. There are different information search patterns and strategies [59] within specific tourism domain web search queries based on search time, IP address, language, city, and key terms; the study shows that clicking and re-clicking on the search engines homepage is the most repetitive and iterative task of web users, the city is most likely to be included as a key term in the search queries, followed by the hotel where, finally, web users normally select the results that show the city home web page.

2.6. Web Search Business Case 2: Citation Analisys

Citation analysis consists on the access and referral of research information including articles, papers and publications. Its importance is based on its measurement of the value or relevance of personal academic work; it is becoming more respected since its evaluation impacts on other decisions, such as promotion, scholarships, and research funds. The journal impact factor [60] is calculated as “the number of citations received in the current year to articles published in the two preceding years divided by the total number of articles published in the same two years”. It is based on the concept that high-impact journals will only try to publish very relevant work, therefore, they will be used for outstanding academics. These traditional measurements are peer review based on human judgment, however, it is time consuming and assessors may be biased by personal judgments, such as the quality of journal or the status of the university, instead of competent and impartial reviews.

The web has provided the possibility of other alternative citation measures making the citation monopoly to change because of the accessibly it provides. New online platforms [61] such as Scopus, Web of Science, Google Scholar, Microsoft Academic, and Semantic Scholar provide other measures that may represent more accurately the relevance of an academic contribution. Bibliometric indicators [62] evaluate the quality of the publication based on empirical values; mostly number of citations or number of downloads. They are not only quicker to obtain but they also provide a wider coverage because they also retrieve results from relevant academic works published on personal or academic web pages and open journals based on conference papers, book chapters, or theses. In addition, academics are personally interested to artificially influence ranking methods by “optimizing” the appearance of their research publications to show them in higher ranks with the purpose to extend their reader audience [63] in order to get cited more.

The h-index [64] is applied to qualify the contribution of individual scientific research work; “An academic has an h-index of h if the x publications have individually received at least h citations and the other (x − h) publications have up to h citations”. The h-index has become popular among researchers because it solves the disadvantages of the journal impact factors and it has also a good correlation with the citation analysis database. It is simple and fast to calculate utilizing databases like Google Scholar, Microsoft Academic, Semantic Scholar, Scopus, or Web of Science, however, it suffers some disadvantages [60]: it does not differentiate negative citations from positive ones, so both are equally taken into account; it does not consider the entire number of citations an author has collected; and it disadvantages a reduced but highly-cited paper set very strongly.

Google Scholar, Semantic Scholar, Microsoft Academic, DBLP Computer Science Bibliography, CiteSeerX, and IEEE Xplore are free web academic search engines that register the metadata or the complete text of academic articles from different databases and journals. Google Scholar result relevance is calculated by a ranking algorithm that combines the term occurrence, author, publications, and citation weights [65]. Google Scholar has received both good and bad critics since it started in 2004 [66]: the lack of transparency in the relevance assessment of the information due to its automatic process of retrieving, indexing, and storing information has been criticized; however, the more transparent its evaluation algorithms, the easier it becomes to influence its metrics. Google Scholar has been widely analyzed and studied by the research community against other online databases [67,68].

A hybrid recommender system based on content-based and collaborative-based relevance [69] expands the method of the traditional keyword or key term search by adding citation examination, author examination, source examination, implicit ratings and explicit marks. Two ranking methods are applied: “in-text citation frequency examination” evaluates the occurrence which a publication is “cited within the citing document” and “in-text citation distance examination” determines the similarity between references within a publication by evaluating the word separation.

3. Ranking

Ranking is the core process on many information retrieval applications and Internet user semantic interfaces, including web search engines and recommender systems, for two main reasons: the user evaluation of quality or performance from a customer point of view and sponsored adverts based on ranking from a commercial perspective. There are several methods and techniques for ranking web pages and products that exploit different algorithms built on the analysis of the web page content, the anchor text of the links between pages or the network structure of the World Wide Web hyperlinked environment.

3.1. Ranking Algorithms

Web search engine evaluation comprises the measurement of the quality and services they provider to users; it is also used to compare their different search capabilities. Automatic evaluation analyses the different presented URLs whereas human evaluation is based on the measurement of result relevance. While the latest one is more precise, it takes more effort, and therefore is more expensive, as it is normally done by surveys. Other methods that combine both automatic and user evaluation are applications that monitor user activities such as click-troughs or time spent of each web site. “Dynamic ranking or query-dependent ranking” improves the order of the results returned to the user based on the query, while “static ranking or query-independent” calculates the relevance of the web pages to different topics.

Web spamming refers to any deliberate human action with the purpose of generating an unjustifiably favorable relevance or rank for some web pages, against the page’s true value and purpose [70]. There are two main categories of web spamming: boosting techniques influence the ranking algorithms used by search engines based on term and link spamming that optimizes the keywords of the web Pages or the links pointing between them, respectively, and hiding techniques conceal the applied boosting methods from regular web users that visit spam pages. Hiding techniques are based on web Page content hiding, cloaking from web crawlers, and URL redirection.

There are different methods to assess web pages relevance.

3.1.1. HTML Properties

The first method focuses on the HyperText Markup Language (HTML) properties of the web pages. The internal web page structure consists of specified text segments defined by semantic tags or mark up. HTML defines a set of roles assigned to the text in a web document related to formatting (bold or italics) or richer semantic values such as headlines or hyperlinks to other web pages. Web spammers mostly base their strategy in the design of the HTML properties of the web page to get an artificial better rank from web search engines.

HTML ranking algorithms make use of the structure HTML imposes on web documents and the relative order between terms and semantic tags [71]; the parameters for weighting words in the HTML fields are based on type of text, section, size, position, anchor text and URL. The structure of a HTML page can be represented as a tag tree by a Document Object Model (DOM). Although DOM, in general, provides a useful structure for a web page, its defined tags are used not only for content organization, but also for layout presentation. DOM analysis tends to disclose the presentation structure rather than the content structure [72]; it is often not accurate enough to discriminate different semantic blocks in a web page, therefore, ranking algorithms based only on HTML properties will not accurately measure the meaning or relevance of a web page. In order to address the DOM structure issues, a vision-based page segmentation algorithm divides a web page into semantically-related units [73]. The vision segmentation algorithm is based on two key concepts: the visual presentation of the page that groups together semantically related content and the division of the web page into regions for different contents using explicit or implicit visual separators [74].

3.1.2. TF-IDF

The second method is founded on the inspection of the web page text itself and how the words in the query relate within it. TF-IDF or “Term frequency and inverse document frequency” applies the vector space model with a scoring function where the importance of a term increases if it is repeated often in a document, however, its weight decreases if the same term appears multiple times on numerous documents as it is considered less descriptive:

where t is the keyword, d is the web page, ft,d the amount of occurrences that keyword t is contained within the web page d, N is the entire amount of web pages, and dt is the quantity of web pages that include the keyword t.

A probabilistic TF-IDF retrieval model [75] emulates the decision making of a human brain for two categories of relevance: “local relevance” is first applied to a particular web page location or section, whereas the “wide relevance” spans to cover the whole web page; the method then merges the “local relevance” decisions for each position as the web page “wide relevance” decision. Document transformation adapts the web page vector close to the query vector by adding or removing terms. An example of long-term incremental learning [76] is based on a search log that stores information about click-throughs web users have browsed among the web result pages from a web search engine and web pages selected by the user. Content-time-based ranking is a web search time focus ranking algorithm [77] that includes both “text relevance” and “time relevance” of web pages. “Time relevance” is the interval computed between the query and the content or update time where every term in a web page is assigned to an explicit content time interval and, finally, the time focus TF-IDF value of each keyword is calculated to obtain the overall rank.

3.1.3. Link Analysis.

The third method is the link analysis which it is founded on the relation between web pages and their web graph.

In-Degree [78] was the first link analysis ranking algorithm; it ranks pages according to their popularity which it is calculated by the sum of links that point to the page:

where p1, p2, … pN correspond to the web pages to be ranked and M(pi) defines the collection of web documents pointing to pi.

Page Rank [79] is founded on the concept that a web document has a top “page rank” if there are many web pages with reduced “page rank” or a few web pages with elevated “page rank” that link to it. The Page Rank algorithm provides a likelihood distribution that represents the probability that a web user arbitrarily following the links will reach to the specific web page:

where p1, p2, … pN correspond to the web pages to be ranked, d the dumping factor, M(pi) defines the collection of web documents pointing to pi, L(pj) is the amount of external connections from page pj, and N is the entire quantity of web pages.

The HITS algorithm [80], or Hyperlink Induced Topic Search algorithm, assigns relevance founded on the authority concept and the relation among a group of relevant “authoritative web pages for a topic” and the group of “hub web pages that link to many related authorities” in the web link arrangement. The hyperlink structure among web pages is analyzed to formulate the notion of authority; the developer of web document p when inserts a hyperlink to web document q, provides some relevance or authority on q. Hubs and authorities have a reciprocally strengthening association: “a relevant hub is a web page that links to many relevant authorities; a relevant authority is a web page which is linked by numerous relevant hubs”.

The authority value of a web document is the addition of every hub value of web documents linking to it:

where p the relevant authority web document, i is a web document linked to p and n is the entire quantity of web documents linked to p.

The hub value of a web document is the addition of total number of authorities’ values of web documents linking to it:

where p is the relevant authority web document, i is a web document that p links to and n is the entire quantity of web documents p connects to.

The SALSA algorithm [81], or Stochastic Approach for Link-Structure Analysis, combines Page Rank and HITS by taking a random walk alternating between hubs and authorities. Two random walks are assigned two scores per web page; it is based on the in link and out link that represent the proportion of authority and hub respectively.

The hub array is expressed in the below sum:

The authority array is expressed in the below sum:

where web document h links to mutual documents i and j and deg is the amount of hyperlinks connecting to a page.

The QS page-rank is a query-sensitive algorithm [82] that combines both global scope and local relevance. Global web page importance is calculated by using general algorithms, such as Page Rank. Local query sensitive relevance is calculated by a voting system that measures the relation between the top web pages retrieved.

The priority of a query sensitiveness rank (QS) is defined where document 1 is ordered in front of document 2:

(QS1 > QS2) || (QS1 == QS2 && PR1 > PR2)

The priority of a global importance rank (PR) is defined where document 1 is ordered in front of document 2:

where QS1 and QS2 are the query sensitive values and PR1 and PR2 are the global importance values for document 1 and document 2, respectively.

(PR1 > PR2) || (PR1 == PR2 && QS1 > QS2)

DistanceRank [83] is an iterative algorithm built on reinforcement learning; the distance between pages is counted as punishment where distance is established as the number of links among two web pages. The objective of the method is the reduction of the punishment or distance therefore a web document with reduced “distance” has a greater rank. The main concept is that as correlated web pages are connected between them; the distanced built method finds relevant web pages quicker:

where i is the collection of web documents linking to j and O(i) is the amount of outbound hyperlinks in web document i and α is the learning rate of the user.

distancen[j] = (1 − α) × distancen−1[j] + α × mini(log(O[i]) + distancen−1[i])

DING is a dataset ranking [84] algorithm that calculates dataset ranks; it uses the connections among them to combine the resulting scores with internal semantic based ranking strategies. DING ranks in three stages: first, a dataset rank is calculated by an external dataset hyperlink measurement on the higher layer, then for each dataset, local ranks are calculated with hyperlink measurements between the inner entities and, finally, the relevance of each dataset entity is the combination of both external and internal ranks.

The internal rank score of entity e is calculated as:

where Lσ,i,j is a linkset (σ is term, i is the source and j is the target), N represents the amount of datasets and freq(σ) defines the rate of appearance of the term σ in the group of datasets.

The dataset rank score is calculated as:

where D is a dataset and EDj is the set of nodes within Dj.

The final global score of entity e for dataset D is:

where r(e) and r(D) are the internal rank entities and dataset rank score, respectively

rg(e) = r(e) × r(D)

ExpertRank [85] is an online community and discussion group expert ranking algorithm that integrates a vector space method to calculate the expert subject importance and a method similar to Page Rank algorithm to evaluate topic authority. The expert subject relevance score is calculated as a likeness of the candidate description and the provided request where the candidate description is generated by combining all the comments in the topic conversation group and the authority rating is computed according to the user interaction graph:

where c is the candidate, q is the query, i is the integration method, RE(c,q) represents the relevance rating among the query q and candidate c knowledge, and AU(c) represents the authority rating.

3.2. Relevance Metrics

Performance can be described as the display of relevant, authoritative, updated results on first positions. Retrieval performance is mostly evaluated on two parameters: precision is the percentage of applicable results within the provided web document list and recall is the percentage of the entire applicable results covered within the provided web document list. Due to the intrinsic extent of the web, where it is difficult to measure the quantity of all web pages and the fact users only browse within the first 20 results; precision is widely used as a performance measurement. In addition, another factor to consider is that web search engines are different and, therefore, they perform differently [86]; some may perform better than others for different queries and metrics.

There are different parameters to assess the performance of recommender systems:

- Precision evaluates the applicability of the first N results of the rating result set in relation to a query:

- Recall represents the applicability of the first N results of the rating result set in relation to the complete result set:where S is the result set.

- The mean absolute error (MAE) evaluates the error between user predictions and user ratings:

- The root squared mean error (RSME) measures the differences between predictions and user ratings:where Pui is the forecasted ranking for u on product i, Rui is the current rank for u on product I, and N is the entire amount of products ranked by the user u.

- F-score combines Precision and Recall in an evenly weighted metric:

- The average precision calculates the mean figures of P@n over the number n of retrieved web pages:where N is the total amount of retrieved web pages in the result list, and rel(n) represents that the n-th web page is either applicable or not to the query with values 1 or 0.

- The mean average precision measures the balance of average precision within a collection of Q web searches:

- The normalized discounted cumulative gain uses a rated relevance metric to measure the relevance of a result that uses its rank within the web page set:where R(j) is the rated applicability of the result at position j within the web page set, ZN is the standardization parameter that assures the optimum NDDC@N matches to 1. For binary ranking R(j) is fixed to 1 when the result at position j is relevant to the web search, and is fixed to 0 when the web page is irrelevant.

- TREC average precision includes rank position on the performance evaluation; it is defined at a cutoff N:

- The reciprocal rank of a web search is defined as the multiplicative inverse applied to the rank of the first relevant result:where ranki is the order of the first relevant result.

- The mean reciprocal rank corresponds to averaged value of the RR (reciprocal rank) over a collection of Q searches:where RR(q) is the reciprocal rank associated to the search q and Q is the total collection of searches.

- The expected reciprocal rank is based on a cascaded model which penalizes documents which are shown below very relevant documents:where N is the amount of web pages in the ranking, r is the position at which the web user stops its search and R measures the probability of a click which can be interpreted as the relevance of the snippet.

Different research analyses have studied the efficiency of web search engines [87] and the level of overlap among the web pages presented by the search engines. Two new measurements to assess web search engine performance are proposed [88]: the relevance of result rating by a web search engine is assessed by the association among web and human rating and the capability to retrieve applicable web pages as the percentage of first ranked within the retrieved web pages. Four relevance metrics are applied to calculate the quality of web search engines [89]; three of them are adapted from the information retrieval: vector space method, Okapi likeness value, and coverage concentration rating and a new method which is developed from the human interaction to take human expectations into account based on a raw score and a similarity score.

The Text REtrieval Conference (TREC) [78] is supported by the National Institute of Standards and Technology (NIST) and the U.S. Department of Defense to sponsor studies in information and data retrieval research. TREC provides the framework for extensive evaluation applied to different text retrieval methods. Effectiveness of TREC algorithms against web search engines has been compared [90] where tested algorithms were developed with Okapi or Smart based on the term weighting methods (TF-IDF). Web search engines are evaluated against a series of relevance metrics [91,92]: precision, TREC-based mean reciprocal rank (MRR), and the TREC style average precision (TSAP) obtained from double relevance human evaluations for the top 20 web results displayed.

Web search evaluation methods can be assigned into eight different categories [93]: assessment founded on relevance, assessment founded on ranking, assessment founded on user satisfaction, assessment founded on size and coverage of the web, assessment founded on dynamics of search results, assessment founded on few relevant and known items, assessment based on specific topic, or domain and automatic assessment.

Different automatic web search engine evaluation methods have been proposed [94,95] to reduce human intervention. The algorithm first submits a query to the different web search engines where the top 200 web pages of every web search engine are retrieved; then it ranks the pages in relation to their likeness to the web user information requirements; the model is built on the vector space method for query-document matching and ranking using TF-IDF. After ranking; the method lists as relevant the web pages that are in the first 20 documents retrieved by each web search engine with the top 50 ranked results. An automatic C++ application [96] compares and analyzes web result sets of several web search engines; the research analyses the URL coverage and the URL rank where recall and accuracy are used as relevance metrics however there is not description regarding the method that decides URL relevance. A technique for evaluating web search engines robotically [97] is founded in the way they order already-rated web search results where a search query is transmitted to different web search engines and the rank of retrieved web results is measured against the document that has already been paired with that query.

Web search engine evaluation has been examined from a webometric perspective [98] using estimates of success values, amount of provided URL, amount of domains retrieved, and the number of sites returned where evaluations are made to assess the retrieval of the most precise and comprehensive web results from every single web engine.

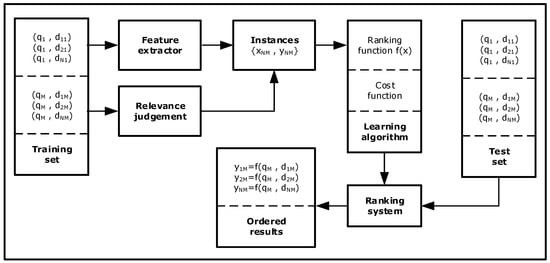

3.3. Learning to Rank

Learning to rank is defined as the implementation of semi-supervised or supervised computer learning techniques to generate a ranking model by combining different documents’ features obtained from training data in an information retrieval system. The training data contains sets of elements with a specified position represented by a numerical score, order, or a binary judgment for each element. The purpose of a ranking method is to order new result lists in order to produce rankings similar to the order in the training data. Query document sets are normally defined by quantitative vectors which components are denoted features, factors or ranking signals. The main disadvantages of the learning to rank approach are that the optimization of relevance is based on evaluation measurements without addressing the decision of which measures to use; in addition, learning to rank does not take into consideration that user relevance judgment changes over time.

The model of learning to rank consists of:

- a set Q of M queries, Q = {q1, q2, …, qM}

- a set D of N web pages per query qM, D = { d11, d12, …, dNM}

- a set X of M feature vectors per each query qM and web page dN pairs, X = {x11, x12, …, xNM}

- a set Y of M relevance decisions per query qM and web page dN pairs, Y = {y11, y12, …, yNM}

The set of query-web page pairs {qM, dNM} has associated a set of feature vectors {xNM} that represents specific ranking parameters describing the match between them. The set of relevance judgments {yNM} can be a binary decision, order, or score. Each feature xNM and the corresponding score yNM form an instance. The input to the learning algorithm is the set feature vectors {xNM} that represents the pair {qM, dNM} and the desired output corresponds to set of relevance judgments {yNM}. The ranking function f(x) is acquired by the learning method during the training process on which relevance assessments are optimized with the performance measurement or cost function (Figure 6).

Figure 6.

Learning to rank model.

The RankSVM learning to rank algorithm learns retrieval functions using clickthrough data for training based on a support vector machine (SVM) approach [99]. It uses a mapping function to correlate a search query with the features of each of the possible results where each data pair is projected into a feature space combined with the corresponding click-through data that measures relevance. Distinct ranking methods are developed for several classes of contexts [100] based on RankSVM that integrates the ranking principles into a new ranking model that encodes the context details as features. It learns a support vector machine model for a binary categorization on the selection between a couple of web pages where the evaluation is based on human conclusions and indirect user clicks.

A machine learned ranking model [101] routinely perceives and reacts to recent related queries where recent ranking takes into account freshness and relevance; the system is divided into two approaches: a high-accuracy recent related query detector and a specific recent related sorter trained to represent the characteristics and data distribution applicable for recent ordering.

Random forests is a low computational cost alternative to point-wise ranking approach algorithms [102] founded on the machine learning method “gradient boosted regression trees”. The learning algorithm of random forests is applied multiple times to different subsets and the results are averaged whereas gradient boosted regression trees sequentially adds small trees at each iteration instead of training many full trees. The combination of both two algorithms first learns a ranking function with random forests and later uses it as initialization for Gradient boosted regression trees.

3.4. User Experience Feedback

User feedback can be explicit by directly asking the user to evaluate the results or implicit by analyzing the user behavior. Relevance feedback is normally applied for query expansion during the short term modelling of a user’s instantaneous information need and for user profiling during the long term modelling of a user’s persistent interests and preferences [103]. Web search user experience can be increased by the collection of implicit user behavior measures as signs of user interest and satisfaction rather than forcing the user to submit explicit feedback, which can be costly in time and resources while altering the pattern and web search user experience [104]. Explicit and implicit feedback present diverse properties of users’ preferences with advantages and disadvantages; their combination in a user preference model provides a number of challenges however this can also overcome their associated issues by complementing each other, with similar performances despite their different characteristics. Implicit feedback can act as a substitute for explicit feedback as interchangeable sources of relevance information [105] when compared in terms of user perception and search effectiveness in some defined simulations rather than general web search scenarios. Three types of implicit feedback with two strategies for using implicit feedback to make recommendations based on rating estimation and predicted observations are proposed [106]: examination captures temporary interactions that begin and end during a single session, retention category groups user behaviors that suggest an intention for future use of the material, and reference includes user behaviors that create explicit or explicit links between information objects.

There is a connection between implicit measures of user activity and user’s explicit satisfaction ratings [107] where the best model is the combination of clickthrough, time spent on the search result page, and how a user exited a result or ended a search session. In addition, behavioral patterns can also be used to predict user satisfaction for search sessions by analyzing genes. Clicks on web results shall not be taken as absolute implicit feedback rather than informative but biased as users’ clicking decisions are not only influenced by the relevance of the results, but also by the trust they have in the retrieval function, and by the overall quality of the result set [108]. Web search users frequently make a sequence of queries with a similar information need where the use of query chains obtained from clickthrough data enables preference judgments from search engine logs [109].

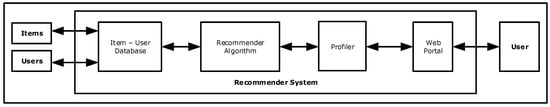

4. Recommender Systems

Recommender systems as Internet user semantic interfaces predict users’ interest of different items or products providing a set of suggested items applying tailored relevance algorithms. Recommender systems consist on a database where user ratings and item description are stored and updated iteratively. A user interface interacts with the user where a profiler extracts user properties with explicit and implicit feedback; different suggestions and their order are computed by the recommender ranking algorithm (Figure 7). Due to their filtering properties they are widely used within e-commerce [110] as they also support e-commerce customers to find rare products they might not have discovered by themselves.

Figure 7.

Recommender system architecture.

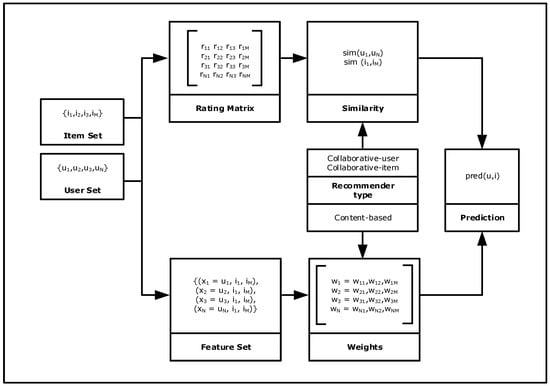

The model of a Recommender system (Figure 8) consists of:

Figure 8.

Recommender system model.

- a set Q of N users, Q = {u1, u2, …, uN}

- a set I of M items, I = {i1, i2, …, iM}

- a rating matrix R, R = [rij] where i ∈ Q and j ∈ I

- a set X of N feature vectors, X = {x1, x2, …, xN}

- a weight matrix W, W = [wij] where i ∈ N and j ∈ N + M

The set of user-items {uN, iM} has an associated set of feature vector {xN} that represents users and the different items assigned to them in the content model. The relevance judgment pred(u,i) can be a binary decision, order, or score based on the similarity between users or items in the collaborative model and weights in the content model.

4.1. Recommender System Classification

There are two main different categories of recommender systems [111]. Content-based recommender systems are built on a description of the product and a profile of the customer’s wishes where different properties of the items and users are used to identify products with similar features without including other user’s ratings. They suffer from some disadvantages [112], such as its inability to recommend completely different items that the user may also consider relevant and a customer needs to mark several products before getting relevant recommendations. Collaborative recommender systems are founded on the customer’s previous marks to other products and the consideration of other decisions made by similar users; they made suggestions based on a high correlation between users or items. Although collaborative recommendation reduces the issues from the content-based solution; it has other drawbacks such as it needs a large number of rating data to calculate accurate correlations and predictions; it also ignores on its calculations new added users or items. Hybrid recommender systems take a combination of both approaches.

The user based recommender generally uses the Pearson’s relationship similarity:

which the prediction on the user-based is defined below:

where N(u) defines a set of neighbors of u, similarity(u,v) represents the correlation between user u and v, ru,i is the mark of customer u to product i, is the average rating of customer u and M is the total quantity of items.

The item based recommender systems generally use the cosine similarity:

although the Euclidean distance similarity can also be used:

and the Manhattan distance similarity:

where the item-based prediction is defined below:

where N(i) is a collection of neighbors of i and similarity(i,p) is the correlation between item i and p is the relevance of item i to user d, and N is the overall quantity of users.

The linear prediction on the content-based is defined below:

where x are the instance vectors and w the matrix weights.

In multi-criteria ranking recommenders, users give ratings on several characteristics of an item as a vector of ratings [113] whereas cross domain recommender systems suggest items from multiple sources with item or user-based formulas founded on locality collaborative filtering [114]; they operate by first modelling the traditional likeness association as a directly connected graph and then exploring the entire potential paths that links users or items to discover new cross-domain associations.

There are several relevance metrics for assessment of recommender systems in various e-commerce business frameworks [115]. Accuracy evaluation metrics are allocated into three major categories: predictive, based on the error between estimated and true user ratings; classification, based on the successful decision making; and rank, based on the correct order.

Social network information is inserted to recommender systems [116] as an additional input to improve accuracy; collaborative filtering-based social recommender systems are classified into two categories: the matrix factorization approach, where user-to-user social information is integrated with user item feedback history, and neighborhood-based social approaches based on social network graphs.

4.2. Personalized Information and Recommender Systems

Personal recommender systems are one of the most efficient tools to solve the information overload problem, such as shopping in the Internet. The common problem faced by the different recommender systems is that the user and product information are dynamically changing [117]; in order to overcome this issue, an improved dynamic algorithm based on local information updating is proposed to avoid the computational expensive process to update static information when one new piece of information is produced by the user or products. A personalized recommendation methodology to obtain further effectiveness and quality when applied to e-commerce is based on a variety of data mining techniques, such as web usage mining learned from a clickstream, a decision tree induction that minimizes recommendation errors by making recommendation only for customers who are likely to buy recommended products, product taxonomy and, finally, association rule mining that chooses highly business-efficient products among the candidate recommendable products [118].

Theories related to the use of personalized content services and their effect on user satisfaction can be identified as [119]: information overload theory, which implies that user satisfaction increases when the recommended content fits user interests, uses and gratifications theory focuses on motivations for information access, and user involvement theory covers the user’s preferred content recommended by a process in which the user has explicit involvement. The Internet of Things (IoT) Big Data sharing enable personal recommender systems to model their user relevance from external sources [120]; data from a variety of social web services, such as Facebook and LinkedIn, can improve personal recommender system quality as it contains large amounts of personal information about users.

Personal recommender systems can also be applied in traditional transport information systems [121]. In the current applications, users must explicitly provide information related to both their profiles and travels in order to receive a personalized response at additional extra effort from the user in terms of search time. The proposed model identifies implicit users’ information and predicts their needs even if some data is missing by the use of an ontology that more accurately models semantic data. The contradiction between the expansion of tourism information and the difficulty of tourists obtaining tourism information enables the tourism information recommender system to have a business model [122]; the framework of a recommender system that combines key algorithms and existing tourism information recommendation websites allows customers to broaden their experience of the tourism information service and make tourism decisions more accurately and promptly.

A user model web recommender system for recommending, predicting and personalizing music playlists is based on a hybrid similarity matching method that combines collaborative filtering with ontology-based semantic distance measurements [123]; a personalized music playlist, from a selection of recommended playlists that comprises the most relevant tracks to the user is dynamically generated. A hybrid travel recommender system makes use of the individual demographic information to suggest what suits the user thereby making it more intelligent and user acceptable [124]. The model combines the features of content-based travel with collaborative and demographic filtering techniques.

5. Neural Networks

Artificial neural networks are representations of the brain, the principal component of the central nervous system. They are usually presented as artificial nodes or “neurons” in different layers connected together via synapses to create a network that emulates a biological neural network. The synapses have values called weights which are updated during network calculations.

Neurons are defined by:

- the activation, aj(t), that represents the neuron j state;

- the activation threshold Фj;

- the activation function fa that computes the new activation; and

- the output function fout that calculates the output from the activation.

The neuron model is represented mathematically as:

where pj(t) represents the combined input to neuron j:

where oj(t) is the new output from the activation aj(t).

Artificial neural networks are normally described by three variables:

- the links among independent layers of neurons, w;

- the learning method for adapting the weights of the connections; and

- the propagation function f(t) that calculates the input ij(t) to the neuron j from the outputs oj(t) of the predecessor neurons:where wij is the network weight matrix.

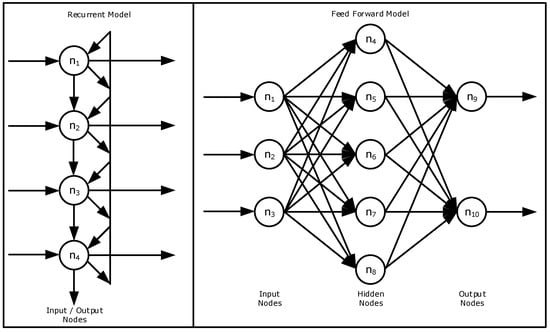

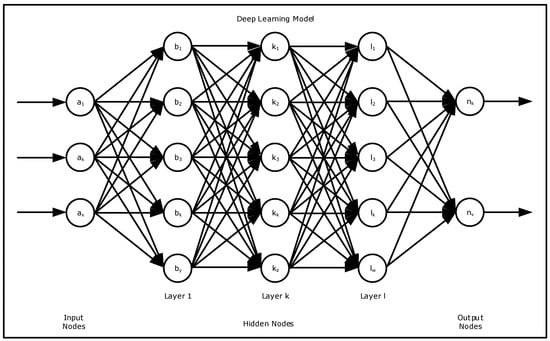

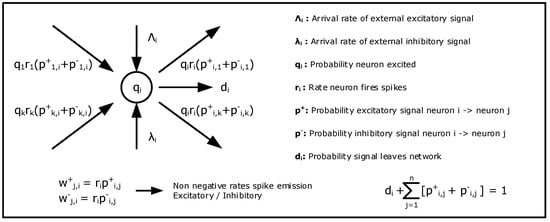

There are two main models to represent a neural network: the feed-forward model, where connections between neurons follow only the forward direction, and the recurrent model, where connections between neurons form a direct cycle (Figure 9).

Figure 9.

Neural networks.

Artificial intelligence is also applied to web search and recommender systems to increase search user experience and provide relevant results. AI is used for quality control to separate relevant results from spam and ranking algorithms in learning to rank. natural language processing (NLP), and image analysis are developed to understand user relevance and search queries. Future applications of AI in e-commerce include the understanding of human intent rather than keywords only, predicting the user’s next search or need, understanding the buyer cycle, target and personalize customers or users with products or information, respectively, and, finally, real-time customer analytics of the increasing Big Data.

RankBrain is an artificial intelligence algorithm learning method used by Google that focuses on what the user means, rather than specific keywords or topics. RankBrain divides search queries into word vectors or distributed representations with linguistic or semantic similarity; it predicts words or phrases that may have a similar meaning and filter the results accordingly [125].

5.1. Neural Networks in Web Search

The capability of a neural network to sense and learn recursively from several input figures to obtain the preferred output values has also been applied in the World Wide Web as a user interest adjustment method to provide relevant answers. Neural networks have modelled web graphs to compute page ranking; a graph neural network [126] consists on nodes with labels to include features or properties of the web page and edges to represent their relationships; a vector called state is associated to each node, and is modelled using a feed-forward neural network that represents the reliance of a node with its neighborhood. Another graph model [127] assigns every node in the neural network to a web page where the synapses that connect neurons denotes the links that connect web pages. The web page material rank approximation uses the web page heading, text material, and hyperlinks. The link score approximation applies the similarity between the quantity of phrases in the anchor text that are relevant pages. Both web page material rank approximation and web link rank approximation are included within the connection weights between the neurons.

An unsupervised neural network is used in a web search engine [128] where the k-means method is applied to cluster n web results retrieved from one or more web search engines into k groups where k is automatically estimated. Once the results have been retrieved and feature vectors are extracted, the k means grouping algorithm calculates the clusters by training the unsupervised neural network. In addition, a neural network method [129] classifies the relevance and reorders the web search results provided by a metasearch engine. The neural network is a three layer feed-forward model where the input vector represents a keyword table created by extracting the title and snippets of words from all the results retrieved and the output layer consists of a unique node with a value of 1 if the web page is relevant and 0 if irrelevant.

A neural network ranks pages using the HTML properties of the web documents [71] where words in the title have a stronger weight than in the body specific. Then, it propagates the reward back through the hypertext graph reducing it at each step. A back-propagation neural network [130] is applied to web search engine optimization and personalization where its input nodes are assigned to an explicit measured web user profile and a single output node represents the likelihood the web user may regard web page as relevant.

An agent learning method is applied to web information retrieval [131] where every agent uses different web search engines and learns their suitability based on user’s relevance response; a back-propagation neural network is applied where the input and the output neurons are configured to characterize any training term vector set and relevance feedback for a given query.

5.2. Neural Networks in Learning to Rank Algorithms

Although there are numerous learning to rank methods we only analyze the ones based on neural networks. Learning to rank algorithms are categorized within three different methods according to their input and output representations:

The pointwise method considers every query web page couple within a training set is assigned to a quantitative or ordinal value. It assumes an individual document as its only learning input. Pointwise is represented as a regression model where, provided a unique query document couple, it predicts its rating.

The pairwise method evaluates only the relative order between a pair of web pages; it collects documents pairs from the training data on which a label that represents the respective order of the two documents is assigned to each document pair. Pairwise is approximated by a classification problem. Algorithms take document pairs as instances against a query where the optimization target is the identification of the best document pair preferences. RankNet [132] is a pairwise model based on a neural network structure and gradient descent as the method to optimize the probabilistic ranking cost function; a set of sample pairs together with target likelihoods that one result is to be ranked higher than the other is given to the learning algorithm. RankNet is used to combine different static web page attributes, such as web page content, domain or outlinks, anchor text or inlinks, and popularity [133]; it outperforms PageRank by selecting attributes that are detached from the link fabric of the web where accuracy can be increased by using the regularity with which web pages are visited. RankNet adjusts the attribute weights to best meet pairwise user choices [134] where the implicit feedback such as clickthrough and other user interactions is treated as vector of features which is later integrated directly into the ranking algorithm. SortNet is a pairwise learning method [135] with its associated priority function provided by a multi-layered neural network with a feed-forward configuration and is is trained in the learning phase with a dataset formed of pairs of documents where the associated score of the preference function is provided. SortNet is based on the minimization of the square error function between the network outputs and preferred targets on every unique couple of documents.