Identification of Calculous Pyonephrosis by CT-Based Radiomics and Deep Learning

Abstract

1. Introduction

2. Materials and Methods

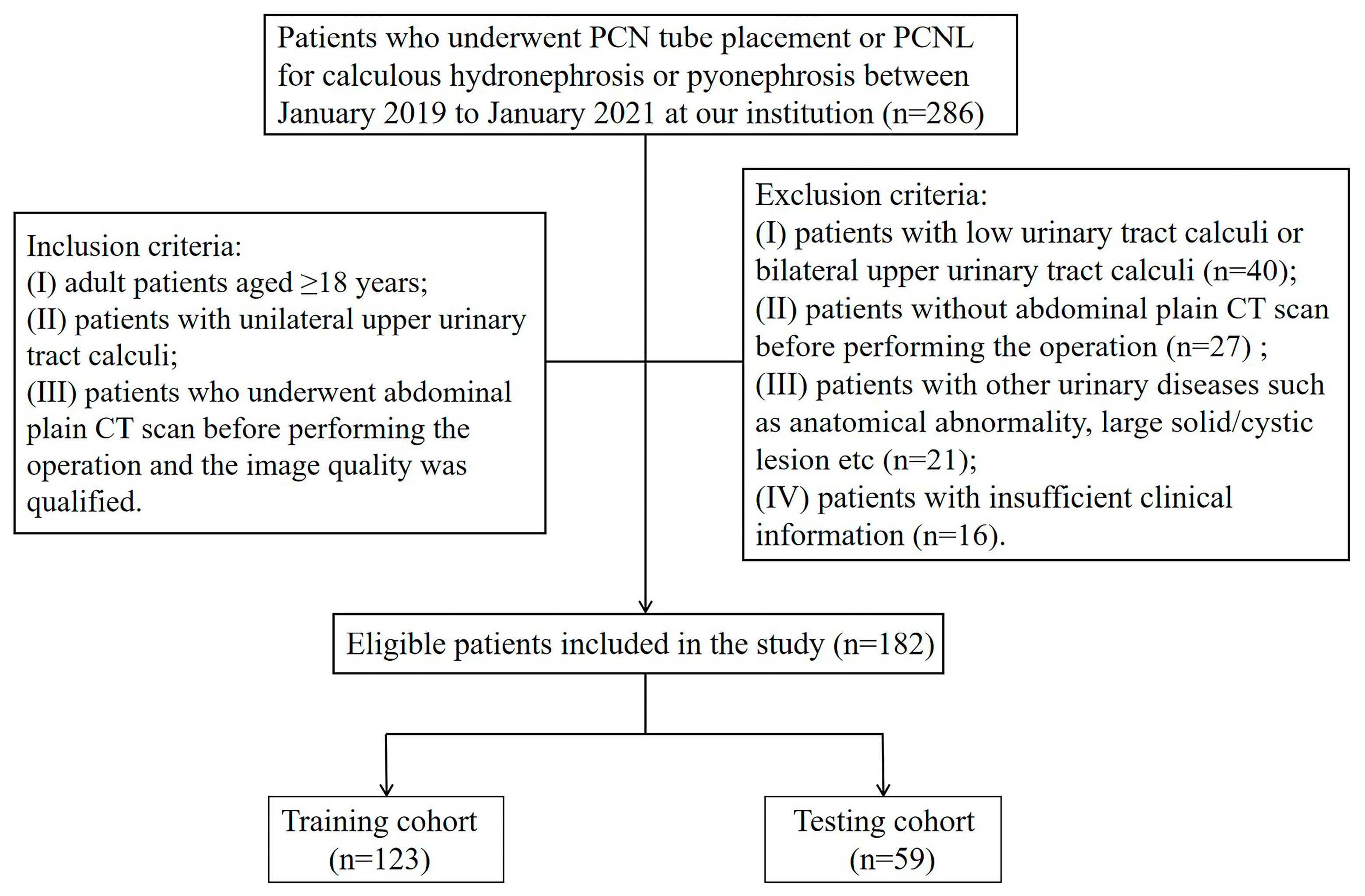

2.1. Patient Selection

2.2. Confirmation of Pyonephrosis, Clinical Data Collection, and Clinical Model Building

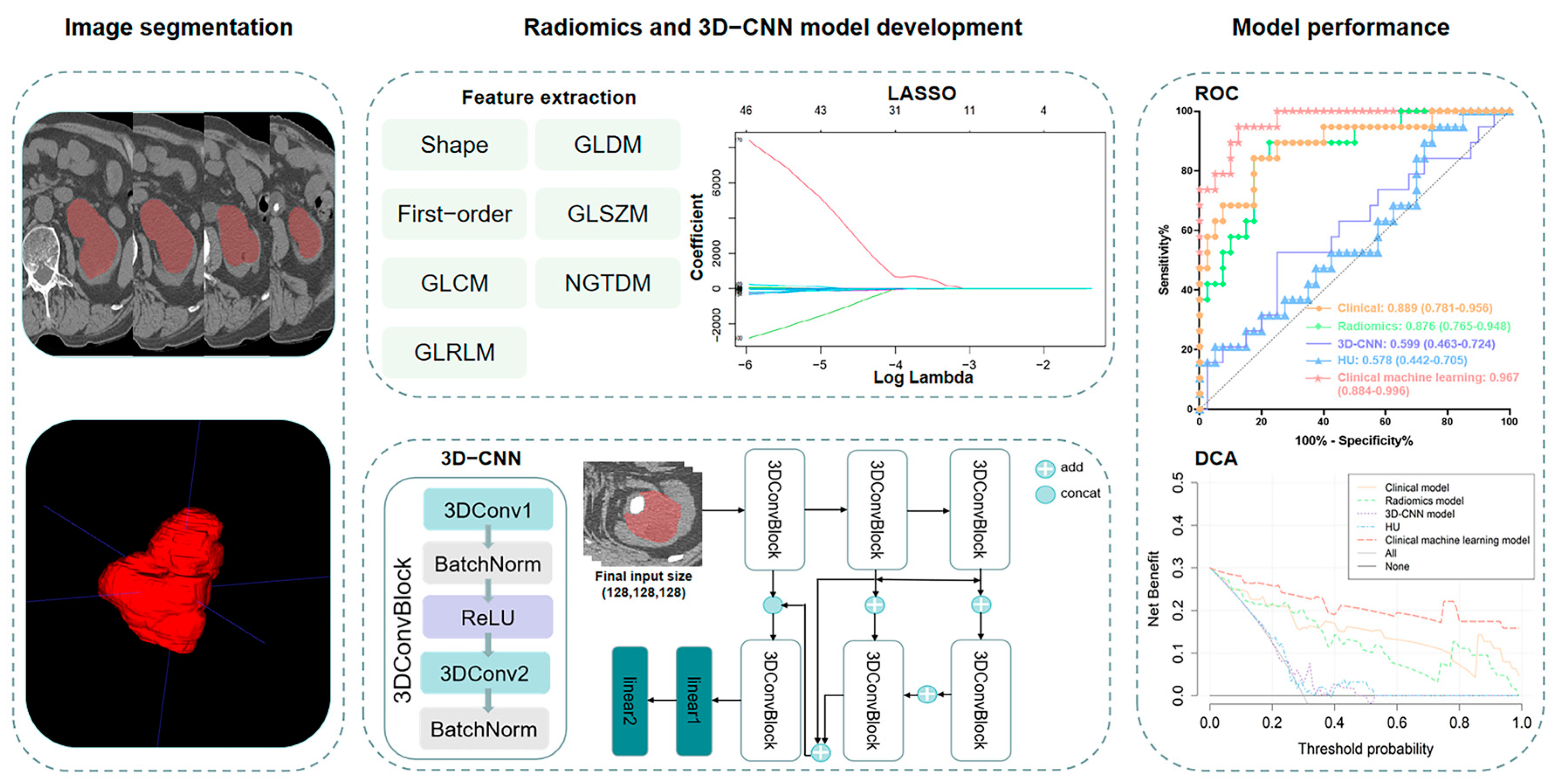

2.3. Image Acquisition and Segmentation

2.4. Feature Extraction, Selection, and Radiomics Model Building

2.5. 3D-CNN Model Development

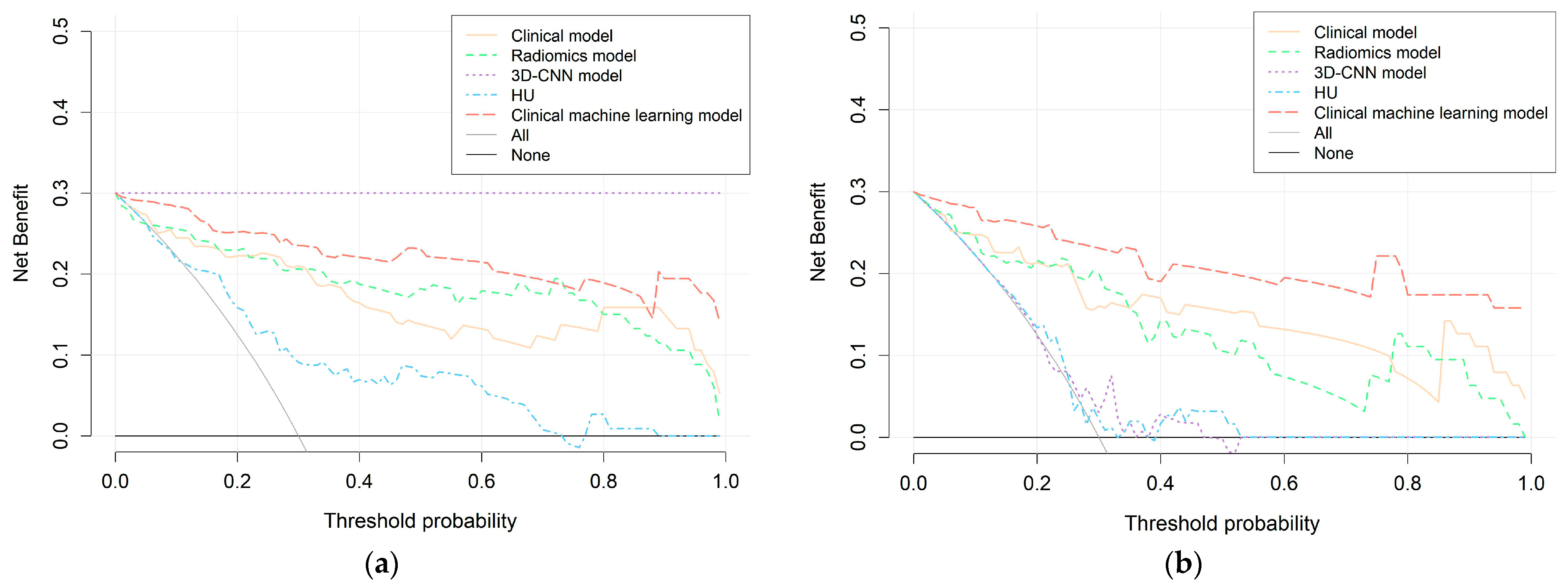

2.6. Performance and Clinical Utility Assessment of Models

2.7. Statistical Analysis

3. Results

3.1. Patient Characteristics and Clinical Model Building

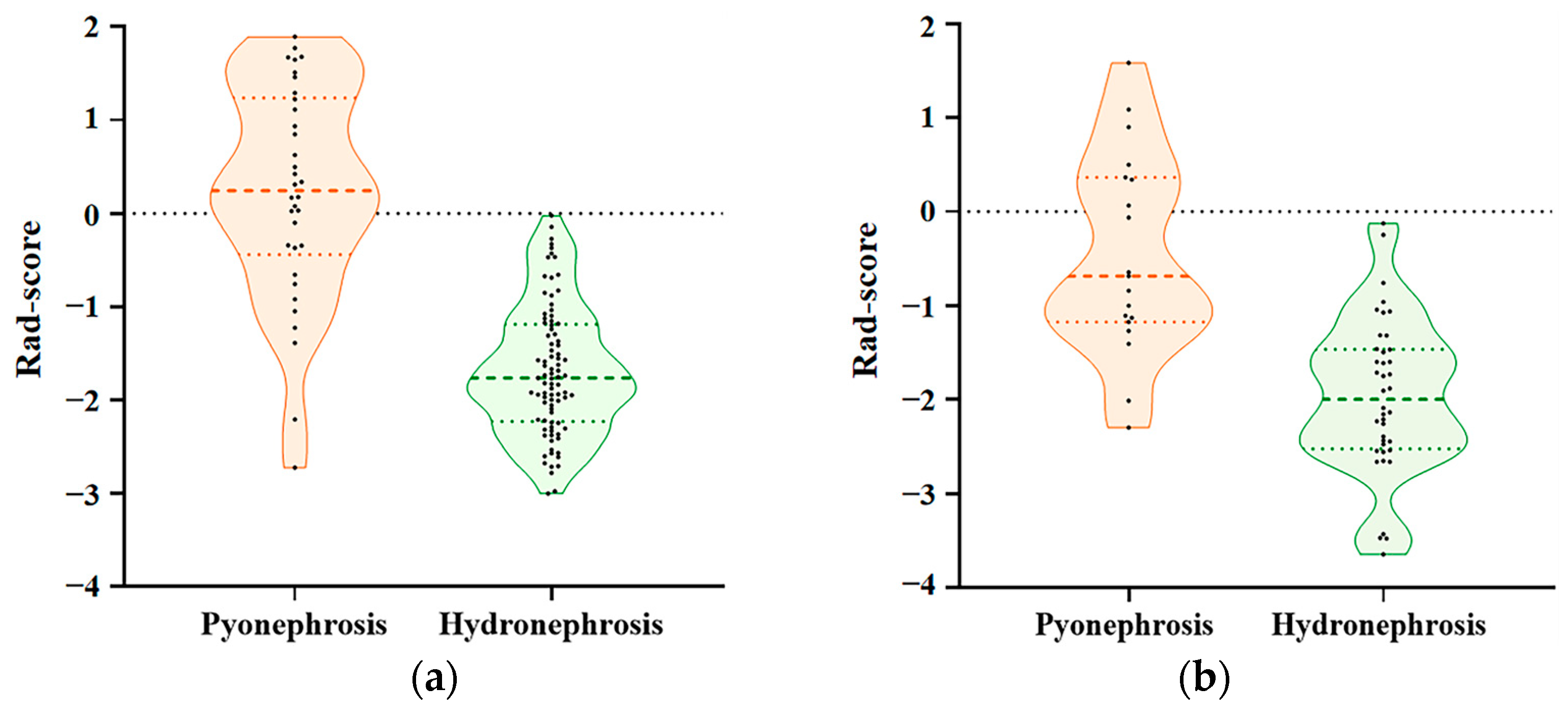

3.2. Construction of the Radiomics Model

3.3. Development of the 3D-CNN Model

3.4. Comparison of Radiomics Model, 3D-CNN Model, and HU Performance

3.5. Establishment of the Clinical Machine-Learning Model

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Characteristics | Training Cohort (n = 123) | Testing Cohort (n = 59) | p Value |
|---|---|---|---|
| Age (years), median (IQR) | 54 (47–59) | 50 (42–60) | 0.138 |
| Gender, n (%) | | | 0.479 |
| Male | 64 (52.0%) | 34 (57.6%) | |
| Female | 59 (48.0%) | 25 (42.4%) | |
| BMI (kg/m²) | 23.5 (21.5–25.5) | 23.1 (21.6–26.0) | 0.673 |
| Fever | | | 0.689 |
| Yes | 15 (12.2%) | 6 (10.2%) | |
| No | 108 (87.8%) | 53 (89.8%) | |
| Renal colic | | | 0.243 |
| Yes | 68 (55.3%) | 38 (64.4%) | |
| No | 55 (44.7%) | 21 (35.6%) | |
| Hypertension | | | 0.110 |
| Yes | 39 (31.7%) | 12 (20.3%) | |
| No | 84 (68.3%) | 47 (79.7%) | |
| Diabetes | | | 0.435 |
| Yes | 20 (16.3%) | 7 (11.9%) | |
| No | 103 (83.7%) | 52 (88.1%) | |
| History of stone surgery | | | 0.888 |
| Yes | 43 (35.0%) | 20 (33.9%) | |
| No | 80 (65.0%) | 39 (66.1%) | |
| Stone laterality | | | 0.710 |
| Left | 62 (50.4%) | 28 (47.5%) | |
| Right | 61 (49.6%) | 31 (52.5%) | |
| Stone location | | | 0.423 |
| Renal calculus | 30 (24.4%) | 19 (32.2%) | |
| Ureteral calculus | 31 (25.2%) | 14 (23.7%) | |
| Renal and ureteral calculus | 62 (50.4%) | 26 (44.1%) | |
| Stone size (mm) | 15.2 (11.1–21.5) | 16.0 (11.0–19.0) | 0.807 |
| Hydronephrosis level | | | 0.486 |
| Mild/Moderate | 90 (73.2%) | 46 (78.0%) | |
| Severe | 33 (26.8%) | 13 (22.0%) | |
| WBC (×10⁹/L) | 6.06 (5.05–8.09) | 6.42 (5.24–8.11) | 0.544 |
| Neutrophils (%) | 58.9 (53.5–68.2) | 60.1 (53.6–68.4) | 0.709 |
| Platelet (×10⁹/L) | 226 (182–276) | 232 (171–279) | 0.569 |
| Urine leukocyte (/µL) | 88.6 (37.8–321.2) | 150.4 (41.1–1111.9) | 0.129 |
| Urinary nitrite | | | 0.507 |
| Positive | 12 (9.8%) | 4 (6.8%) | |
| Negative | 111 (90.2%) | 55 (93.2%) | |
| Urine culture | | | 0.693 |
| Positive | 26 (21.1%) | 14 (23.7%) | |
| Negative | 97 (78.9%) | 45 (76.3%) | |
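The categorical p values in this table can be checked from the counts alone. The section does not name the test, so the sketch below assumes Pearson's chi-square test without continuity correction on each 2×2 training-versus-testing table. Under that assumption it reproduces the reported p values for gender (0.479, using 34 male and 25 female testing patients, as implied by n = 59), fever (0.689), and urinary nitrite (0.507). It uses only the Python standard library, relying on the identity that the chi-square tail probability with 1 degree of freedom equals erfc(√(χ²/2)); the function name `pearson_chi2_2x2` is illustrative.

```python
import math


def pearson_chi2_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Pearson chi-square test (no Yates continuity correction) for the 2x2 table
        [[a, b],
         [c, d]]
    where rows are cohorts (training, testing) and columns are categories.
    Returns (chi-square statistic, p value) with 1 degree of freedom.
    """
    n = a + b + c + d
    # Closed form for a 2x2 table: N * (ad - bc)^2 / (product of the four margins)
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # For 1 df, P(X >= chi2) = erfc(sqrt(chi2 / 2))
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


# Rows: training (n = 123), testing (n = 59); columns: the two categories in the table
gender_chi2, gender_p = pearson_chi2_2x2(64, 59, 34, 25)    # male, female
fever_chi2, fever_p = pearson_chi2_2x2(15, 108, 6, 53)      # yes, no
nitrite_chi2, nitrite_p = pearson_chi2_2x2(12, 111, 4, 55)  # positive, negative

print(f"Gender:  chi2 = {gender_chi2:.3f}, p = {gender_p:.3f}")
print(f"Fever:   chi2 = {fever_chi2:.3f}, p = {fever_p:.3f}")
print(f"Nitrite: chi2 = {nitrite_chi2:.3f}, p = {nitrite_p:.3f}")
```

The continuous rows appear to be summarized as medians (IQR), so a rank-based test such as the Mann–Whitney U test would be the natural counterpart there. Which tests the authors actually used is not stated in this section.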

| Characteristics | Univariate OR (95% CI) | Univariate p Value | Multivariate OR (95% CI) | Multivariate p Value |
|---|---|---|---|---|
| Age (years) | 1.011 (0.978–1.044) | 0.524 | | |
| Gender | 2.168 (0.965–4.870) | 0.061 | | |
| BMI (kg/m²) | 0.924 (0.822–1.039) | 0.188 | | |
| Fever | 15.636 (4.058–60.258) | <0.001 * | 24.436 (4.405–135.547) | <0.001 * |
| Renal colic | 1.444 (0.644–3.236) | 0.373 | | |
| Hypertension | 1.500 (0.655–3.436) | 0.338 | | |
| Diabetes | 1.974 (0.727–5.361) | 0.182 | | |
| History of stone surgery | 1.720 (0.764–3.872) | 0.190 | | |
| Stone laterality | 1.203 (0.545–2.656) | 0.646 | | |
| Stone location | 0.705 (0.319–1.562) | 0.390 | | |
| Stone size (mm) | 1.038 (0.988–1.092) | 0.140 | | |
| Hydronephrosis | 3.114 (1.328–7.300) | 0.009 * | | |
| WBC (×10⁹/L) | 1.247 (1.092–1.425) | 0.001 * | | |
| Neutrophils (%) | 1.107 (1.061–1.155) | <0.001 * | 1.107 (1.047–1.171) | <0.001 * |
| Platelet (×10⁹/L) | 1.005 (1.001–1.009) | 0.033 * | | |
| Urine leukocyte (/µL) | 1.001 (1.000–1.002) | 0.002 * | 1.001 (1.000–1.002) | 0.003 * |
| Urinary nitrite | 2.020 (0.594–6.864) | 0.260 | | |
| Urine culture | 2.922 (1.181–7.228) | 0.020 * | | |

OR, odds ratio; CI, confidence interval; * p < 0.05.
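Assuming the odds ratios come from logistic regression with Wald 95% confidence intervals (OR = e^β and CI = e^(β ± 1.96·SE)), each row's interval is enough to recover the coefficient β, its standard error, and an approximate p value, which gives a quick consistency check on the table. The sketch below does this with the standard library; `wald_from_or_ci` is a hypothetical helper, and because the published bounds are rounded to three decimals the recovered p values are approximate. For the univariate hydronephrosis and urine-culture rows it gives p ≈ 0.009 and p ≈ 0.020, in line with the reported values.

```python
import math
from statistics import NormalDist

# Two-sided 95% critical value of the standard normal (about 1.96)
Z_975 = NormalDist().inv_cdf(0.975)


def wald_from_or_ci(odds_ratio: float, lower: float, upper: float) -> dict[str, float]:
    """Back-calculate the log-odds coefficient, its SE, the Wald z, and a two-sided p
    value from an odds ratio and its 95% CI.

    Assumes a Wald interval, i.e. symmetric on the log scale:
    ln(upper) - ln(lower) = 2 * 1.96 * SE.
    """
    beta = math.log(odds_ratio)
    se = (math.log(upper) - math.log(lower)) / (2 * Z_975)
    z = beta / se
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return {"beta": beta, "se": se, "z": z, "p": p}


# Rows taken from the table: (OR, lower bound, upper bound, reported p)
rows = {
    "Hydronephrosis (univariate)": (3.114, 1.328, 7.300, "0.009"),
    "Urine culture (univariate)": (2.922, 1.181, 7.228, "0.020"),
    "Fever (multivariate)": (24.436, 4.405, 135.547, "<0.001"),
}

for name, (or_, lo, hi, reported) in rows.items():
    r = wald_from_or_ci(or_, lo, hi)
    print(f"{name}: beta = {r['beta']:.3f}, SE = {r['se']:.3f}, "
          f"p = {r['p']:.3f} (reported {reported})")
```

The same relationship runs in reverse: exponentiating β ± 1.96·SE returns the published bounds to within rounding.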

| Model (training cohort, n = 123) | AUC (95% CI) | pα Value | Sensitivity | pβ Value | Specificity | pγ Value |
|---|---|---|---|---|---|---|
| Clinical model | 0.904 (0.837–0.950) | 0.866ᵃ | 0.853 | 0.549ᵃ | 0.865 | 0.210ᵃ |
| Radiomics model | 0.912 (0.848–0.956) | 0.065ᵇ | 0.765 | 0.063ᵇ | 0.944 | 0.375ᵇ |
| 3D-CNN model | 1.000 (0.970–1.000) | 0.018ᶜ * | 1.000 | / | 1.000 | / |
| HU | 0.747 (0.660–0.821) | 0.007ᵈ * | 0.765 | >0.999ᵈ | 0.607 | <0.001ᵈ * |
| Clinical machine-learning model | 0.975 (0.929–0.994) | 0.019ᵉ * | 0.912 | 0.687ᵉ | 0.900 | 0.607ᵉ |
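Each AUC in this table can be read as the probability that a randomly chosen pyonephrosis patient receives a higher model score than a randomly chosen non-pyonephrosis patient, with ties counting one half (the Mann–Whitney formulation). A training AUC of 1.000, as reported for the 3D-CNN model, therefore means every positive case outscored every negative case. The sketch below computes AUC this way; the scores, labels, and the function name `auc_mann_whitney` are made up for illustration and are not the study's data. The confidence intervals and between-model pα comparisons would need an additional method (for example DeLong's test), which this section does not specify.

```python
from itertools import product


def auc_mann_whitney(scores: list[float], labels: list[int]) -> float:
    """AUC as P(score_pos > score_neg) + 0.5 * P(tie), over all positive-negative pairs."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p, n in product(pos, neg):
        if p > n:
            wins += 1.0  # positive ranked above negative
        elif p == n:
            wins += 0.5  # tie counts as half a win
    return wins / (len(pos) * len(neg))


# Hypothetical predicted probabilities (1 = pyonephrosis, 0 = non-pyonephrosis)
scores = [0.91, 0.85, 0.40, 0.77, 0.30, 0.12, 0.40, 0.05]
labels = [1, 1, 1, 0, 0, 0, 0, 0]
print(f"AUC = {auc_mann_whitney(scores, labels):.3f}")
```

In this toy example the positive case scored 0.40 loses to one negative and ties another, giving 13.5 favorable pairs out of 15, so the AUC is 0.900.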

| Model (testing cohort, n = 59) | AUC (95% CI) | pα Value | Sensitivity | pβ Value | Specificity | pγ Value |
|---|---|---|---|---|---|---|
| Clinical model | 0.889 (0.781–0.956) | 0.852ᵃ | 0.842 | >0.999ᵃ | 0.825 | 0.774ᵃ |
| Radiomics model | 0.876 (0.765–0.948) | 0.061ᵇ | 0.895 | >0.999ᵇ | 0.775 | 0.219ᵇ |
| 3D-CNN model | 0.599 (0.463–0.724) | 0.003ᶜ * | 0.526 | 0.039ᶜ * | 0.750 | >0.999ᶜ |
| HU | 0.578 (0.442–0.705) | 0.002ᵈ * | 0.947 | >0.999ᵈ | 0.250 | <0.001ᵈ * |
| Clinical machine-learning model | 0.967 (0.884–0.996) | 0.045ᵉ * | 0.947 | 0.500ᵉ | 0.875 | 0.687ᵉ |

AUC, area under the receiver operating characteristic curve; CI, confidence interval; HU, Hounsfield unit; 3D-CNN, three-dimensional convolutional neural network; * p < 0.05.
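Sensitivity is TP/(TP + FN) and specificity is TN/(TN + FP). Every sensitivity and specificity in the testing table is consistent with 19 pyonephrosis and 40 non-pyonephrosis patients (for example, 0.947 = 18/19 and 0.875 = 35/40 for the clinical machine-learning model). These counts are back-calculated here and are not stated in this section, so treat them as an assumption. The sketch rebuilds each model's testing-cohort sensitivity and specificity from those inferred confusion-matrix counts; `ConfusionCounts` is a hypothetical helper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Confusion-matrix counts for a binary pyonephrosis classifier (hypothetical helper)."""
    tp: int  # pyonephrosis correctly flagged
    fn: int  # pyonephrosis missed
    tn: int  # non-pyonephrosis correctly cleared
    fp: int  # non-pyonephrosis wrongly flagged

    @property
    def sensitivity(self) -> float:
        return self.tp / (self.tp + self.fn)

    @property
    def specificity(self) -> float:
        return self.tn / (self.tn + self.fp)


# Testing cohort, back-calculated assuming 19 positives and 40 negatives (assumption)
testing = {
    "Clinical model": ConfusionCounts(tp=16, fn=3, tn=33, fp=7),
    "Radiomics model": ConfusionCounts(tp=17, fn=2, tn=31, fp=9),
    "3D-CNN model": ConfusionCounts(tp=10, fn=9, tn=30, fp=10),
    "HU": ConfusionCounts(tp=18, fn=1, tn=10, fp=30),
    "Clinical machine-learning model": ConfusionCounts(tp=18, fn=1, tn=35, fp=5),
}

for name, c in testing.items():
    print(f"{name}: sensitivity = {c.sensitivity:.3f}, specificity = {c.specificity:.3f}")
```

The HU row shows why the two metrics are reported together: under these counts it flags 48 of 59 patients, catching 18 of 19 pyonephrosis cases but correctly clearing only 10 of 40 non-pyonephrosis cases.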

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yuan, G.; Cai, L.; Qu, W.; Zhou, Z.; Liang, P.; Chen, J.; Xu, C.; Zhang, J.; Wang, S.; Chu, Q.; et al. Identification of Calculous Pyonephrosis by CT-Based Radiomics and Deep Learning. Bioengineering 2024, 11, 662. https://doi.org/10.3390/bioengineering11070662

Yuan G, Cai L, Qu W, Zhou Z, Liang P, Chen J, Xu C, Zhang J, Wang S, Chu Q, et al. Identification of Calculous Pyonephrosis by CT-Based Radiomics and Deep Learning. Bioengineering. 2024; 11(7):662. https://doi.org/10.3390/bioengineering11070662

Chicago/Turabian Style: Yuan, Guanjie, Lingli Cai, Weinuo Qu, Ziling Zhou, Ping Liang, Jun Chen, Chuou Xu, Jiaqiao Zhang, Shaogang Wang, Qian Chu, et al. 2024. "Identification of Calculous Pyonephrosis by CT-Based Radiomics and Deep Learning." Bioengineering 11, no. 7: 662. https://doi.org/10.3390/bioengineering11070662

APA Style: Yuan, G., Cai, L., Qu, W., Zhou, Z., Liang, P., Chen, J., Xu, C., Zhang, J., Wang, S., Chu, Q., & Li, Z. (2024). Identification of Calculous Pyonephrosis by CT-Based Radiomics and Deep Learning. Bioengineering, 11(7), 662. https://doi.org/10.3390/bioengineering11070662