Abstract

The rapid development of computers and robots has seen robotic minimally invasive surgery (RMIS) gradually enter the public’s vision. RMIS can effectively eliminate the hand vibrations of surgeons and further reduce wounds and bleeding. However, suitable RMIS and virtual reality-based digital-twin surgery trainers are still in the early stages of development. Extensive training is required for surgeons to adapt to different operating modes compared to traditional MIS. A virtual-reality-based digital-twin robotic minimally invasive surgery (VRDT-RMIS) simulator was developed in this study, and its effectiveness was introduced. Twenty-five volunteers were divided into two groups for the experiment, the Expert Group and the Novice Group. The use of the VRDT-RMIS simulator for face, content, and structural validation training, including the peg transfer module and the soft tissue cutting module, was evaluated. Through subjective and objective evaluations, the potential roles of vision and haptics in robot surgery training were explored. The simulator can effectively distinguish surgical skill proficiency between experts and novices.

1. Introduction

Over the past 30 years, minimally invasive surgery (MIS) has significantly impacted surgical techniques across various specialties and has nearly supplanted traditional open surgery. Nonetheless, MIS presents two primary drawbacks: an extended learning curve for minimally invasive procedures and the challenge of addressing the surgeon’s hand tremors, which can significantly impact intraoperative bleeding and postoperative recovery [1,2]. Since the birth of the Automated Endoscopic System for Optimal Positioning (AESOP) as the first FDA-certified auxiliary surgical robot in 1994, various types of surgical robot systems have sprung up like mushrooms. The most renowned and successful platform is the da Vinci Surgical Robot developed by Intuitive Surgical® Inc (Sunnyvale, CA, USA). The system was initially used for remote military minimally invasive surgery and was successfully commercialized to surgical hospitals. The main innovation of the da Vinci system lies in the integrated multi-degree-of-freedom surgical robotic arm, which has 3D vision and a 5 mm scope endoscope at the same time [3]. However, most RMISs currently lack tactile feedback, which impairs surgeons’ ability to perceive tissue texture, tension, and 3D depth [4]. Consequently, most of the current research hotspots on surgical robots tend to enable them to have the ability to provide tactile feedback [5] and shorten the learning curve for junior surgeons [6].

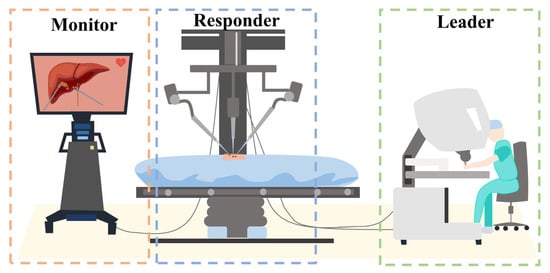

The control method for RMIS differs from traditional surgical instruments. A distinct approach is employed where the leader and responder components are separated [7,8]. In other words, the secondary robot is not directly controlled by the surgeon’s hand but is manipulated by the surgeon through motion capture using the leader console. The framework is depicted in Figure 1.

Figure 1.

Leader–responder framework: the secondary robot is not directly controlled by the surgeon’s hand but is manipulated by the surgeon through motion capture using the leader console.

Given that most surgeons are accustomed to traditional minimally invasive surgery (MIS) methods, there is a significant adjustment period required for them to transition from conventional techniques to the current methods employed in surgical robot operations. Therefore, the learning curve for robotic minimally invasive surgery (RMIS) becomes notably steep. To alleviate the learning curve of controlling RMIS, researchers have developed incorporating advanced technologies such as three-dimensional displays, haptic feedback, and virtual reality/augmented reality (VR/AR) [9,10,11,12,13,14]. Currently, there is a substantial shortage of cadaveric resources in medical schools, which presents a major hindrance for surgeons seeking to undergo RMIS training. Therefore, VR/AR technology offers a promising solution to help surgeons enhance their RMIS skills without the need for cadavers [15,16].

This paper proposes a robotic minimally invasive surgical simulator based on VR digital twins (VRDT-RMIS) and verifies the surgical skill training effect of the simulator through two module experiments.

Our contributions:

- A set of laparoscopic surgical robot virtual reality simulators, encompassing both software and hardware design, has been devised and validated;

- The simulator has been evaluated using two modules: peg transfer and soft tissue cutting;

- The effectiveness of simulator training has been quantitatively assessed through the utilization of the entropy method.

The article is organized as follows: Section 2 presents the design of the robot, including the remote center of motion (RCM) algorithm for robot control and the hardware–software framework. Section 3 primarily focuses on introducing the experimental design, including participants, experimental tasks, data collection, and data analysis methods. Section 4 integrates the aforementioned evaluation methods to assess face and content validation and construct validation. It also provides a detailed description of calculating the weight of different metrics in surgical skills using the entropy method based on expert surgical proficiency. This serves as a reference for evaluating the training outcomes of novice doctors on the surgical robot simulator in the future. The results of data analysis are presented in Section 5, where the significant differences in performance between experts and novices in different tasks are discussed. This affirms the positive significance of this simulator in training doctors to operate robotic minimally invasive surgery robots.

2. Design of the VRDT-RMIS

2.1. Remote Center of Motion Algorithm

In traditional MIS, surgeons receive thorough training in endoscopic procedures to ensure that their surgical instruments operate within the confines of a trocar. This practice significantly minimizes the risk of unintentional injuries occurring outside the intended target area. However, due to the dissimilarity between robotic inverse kinematic (IK) motion and the natural hand motions of surgeons, it is imperative to incorporate a remote center of motion (RCM) algorithm into the robot control system. This addition enables robotic MIS to replicate the surgeon’s actions effectively, thereby reducing patient wounds and minimizing intraoperative bleeding.

In recent years, as surgical robot technology has advanced, the RCM algorithm has undergone significant refinement. In 2013, Nastaran and colleagues [17] addressed a visual task involving a manipulator with six degrees of freedom, specifically one holding an endoscopic camera. An inverse kinematic control law was successfully derived, allowing the manipulator to perform a visual task while adhering to the RCM constraint. However, it should be noted that their approach only guaranteed local convergence, and external factors such as the patient’s involuntary movements, for example, heartbeats and breathing, were not considered. In 2019, a novel design based on artificial neural network architecture was introduced by Ahmed and colleagues [18]. Their work demonstrated the feasibility and effectiveness of utilizing neural networks in the context of RMIS. In 2020, an approach combining model-based and model-free methods for calibrating and controlling robots in terms of their forward kinematics and inverse kinematics was proposed by Alireza and colleagues [19]. The aforementioned approaches are unable to provide real-time control of the robot with haptic feedback, potentially leading to suboptimal surgical outcomes. In 2021, a platform was designed by Oliva Wilz et al. [20] that permits a 3-DOF haptic device to control a 6-DOF manipulator. A novel design of concurrent inverse kinematics solvers that combines classical Jacobian inverse kinematics and optimization-based methods was presented by Jacinto and colleagues [21] in 2023.

- Remote Center of Motion Constraint Task

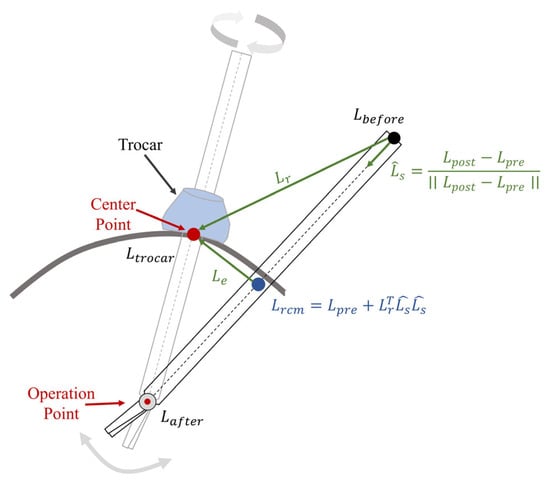

The remote center of motion (RCM) constraint is defined based on the kinematic distance between the trocar point and the tool axis [22]. The locations of the joints before and after the RCM point are defined as and . The point near the trocar point is defined as , allowing us to obtain (1).

where means the direction of the surgical instrument axis, and represents the disparity in position between the trocar point and its location before the RCM point. In addition, the vector to the trocar point, from its nearest point on the instrument axis, is denoted as . The above definition is shown in Figure 2.

Figure 2.

In robotic minimally invasive surgery (RMIS), the remote center of motion (RCM) process restricts the movement of surgical instruments through a trocar point.

As differentiating with respect to the manipulator joints, , the RCM constraint task in the Jacobian matrix, could be calculated as

with

where and are the configuration-dependent analytical Jacobian matrices of the immediate joints before and after the RCM.

The residual of the RCM constraint task, denoted as is defined as the minimum distance to the trocar point, and can be calculated as follows:

The residual of the RCM task, and the Jacobian representation of the RCM constraint, , will be used to calculate the hierarchical constraint inverse kinematic formulation.

- Inverse Jacobian Inverse Kinematic Solver

The classical Jacobian method is a widely used approach for solving inverse kinematic problems, valued for its simplicity and low computation cost. This method is rooted in the concept of differential kinematics, expressed as where represents the task space vector, is the joint vector of the manipulator, and is the configuration-dependent Jacobian matrix task, connecting joint velocities with task space velocities. When given a desired task space velocity, , the inverse kinematic problem will transform into the task of finding a solution of , as depicted in Equation (5).

A least squares solution can be obtained by utilizing the Moore–Penrose pseudoinverse of , as illustrated in Equation (6).

where and presents the orthogonal projection operator in the null space of , while is an arbitrary task velocity vector which cannot disturb task .

Following this, if tasks with different priorities are assigned, the inverse kinematic problem can be solved for each task in the null space by the previous one, thus creating a hierarchy between tasks. The recursive formula is defined in (9) [23].

where and denotes the projector in the null space of the augmented Jacobian matrix .

Considering an RCM constraint task, the desired joint velocities will be given as

2.2. Hardware

2.2.1. Leader Part

The digital-twin robots are primarily controlled using 3DSystem Touch devices (3D Systems®, Rock Hill, SC, USA). These devices are capable of refreshing at 1000 Hz, which is on par with the tactile sensation of human hands. The data processing part is a computing station which is equipped with an Ubuntu 20.04 LTS system and an Nvidia GTX3060 graphics card (Nvidia Santa® Clara, CA, USA). There are virtual reality (VR) glasses, named Pimax 8K X (Pimax®, Shanghai, China), which provide a 200° field of view (FOV).

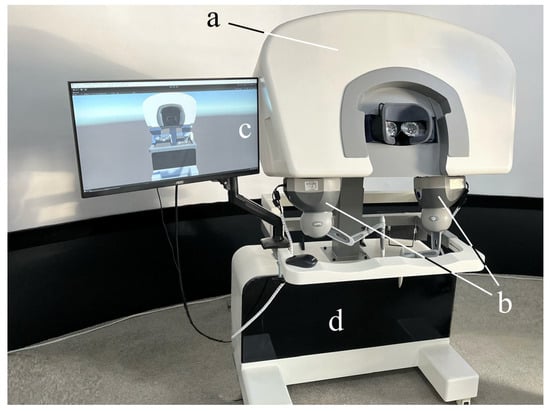

The hardware components of the leader part of the system are depicted in Figure 3 and their details are listed in Table 1.

Figure 3.

The hardware equipment of the leader part: (a) 200°FOV VR glasses (Pimax®, Shanghai, China). (b) Two 3DSystems Touch devices (3D Systems®, Rock Hill, SA, USA). (c) Monitor for exhibiting the digital-twin robot (AOC, Fuzhou, Fujian, China). (d) Computing station with Windows 10 (Intel(R) Core(TM) i7-6700K CPU @ 4.00 GHz, Santa Clara, CA, USA) system and an Nvidia GTX3060 graphics card (Nvidia®, Santa Clara, CA, USA).

Table 1.

Hardware components of the leader.

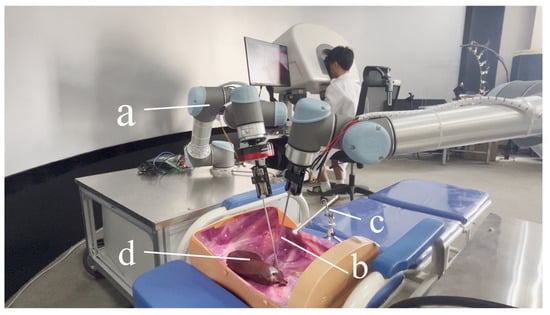

2.2.2. Responder Part

To facilitate the manipulation of target objects at a distance, two responder robots equipped with end-effectors are utilized as remote extensions of the surgeon’s hands. A 1920 × 1080 endoscope serves as the video input device, delivering a high-quality view for the operators. The components of the responder part are illustrated in Figure 4 and are detailed in Table 2.

Figure 4.

Equipment of the responder part. (a) Two UR5 robots. (b) End effectors to operate target objects. (c) Endoscope with 1920 × 1080 resolution. (d) Target object.

Table 2.

Hardware components of the responder.

2.3. Software

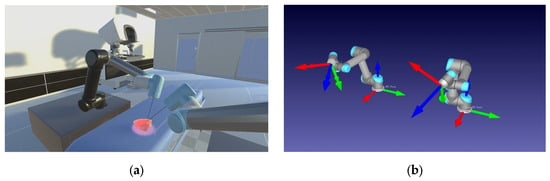

A system was designed to enable the real-time control of the digital-twin robots, as illustrated in Figure 5. This system is built upon platforms known as Unity3D [24] (2021.3.30f1 LTS) and RoboDK v5.6.3. [25,26].

Figure 5.

Environment for digital-twin robot control in real time. (a) Unity3D vision rendering environment. (b) RoboDK digital-twin robots (The arrows represent the Transform Frame (TF).).

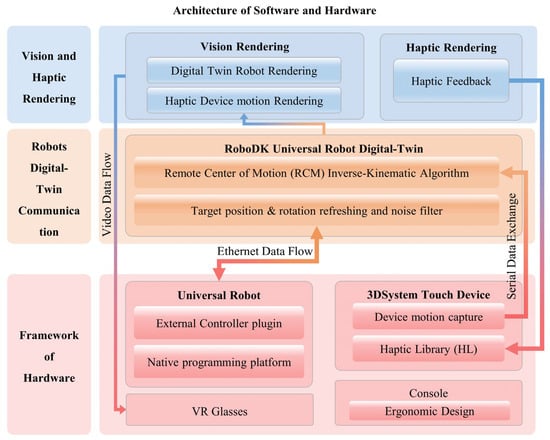

To ensure an effective integration of hardware and software, a comprehensive framework has been meticulously designed, as depicted in Figure 6. It combines bottom-up mechanisms through the hardware layer, the robots’ digital-twin communication layer, and the visual and haptic rendering layer. The Universal Robots and 3DSystem Touch devices are seamlessly integrated into the hardware layer, where they gather data from the operator’s movements. Once the motion data are collected within a 2 ms interval, it is packaged and transmitted to the digital-twin communication layer for noise filtering and processing through the remote center of motion (RCM) inverse kinematic algorithm. Subsequent to the data processing phase, Unity3D extracts digital-twin (DT) data and translates them into visual and haptic feedback data for the leader part. This comprehensive framework ensures smooth interaction and communication between the hardware and software components.

Figure 6.

The system’s architecture encompasses both software and hardware components and can be primarily divided into three main sections. Hardware layer: This layer includes a Universal Robot, a 3Dsystem Touch device, and VR glasses. The Touch device’s motion capture plugin transmits data via a serial connection to the RCM IK algorithm, while the UR exchanges data through an ethernet connection with the digital-twin communication layer in RoboDK. Digital-Twin Communication Layer: within this layer, data exchanged by the Universal Robot are processed and managed. Vision and Haptic Rendering Layer: This layer is responsible for rendering video data and haptic feedback, which are subsequently relayed to the VR glasses and Touch device. The entire architecture ensures seamless interaction and communication between the software and hardware components, facilitating the control and feedback loop for the digital-twin robots.

3. Experimental Design

3.1. Participants

A total of 25 doctors, comprising 10 experts and 15 novices, were invited to participate in this experiment. The selection criteria for participants were based on their robotic minimally invasive surgery experience, with novices having no more than 3 years of experience, while experts had a minimum of 6 years of experience. Detailed participant information is provided in Table 3.

Table 3.

Participants in two groups.

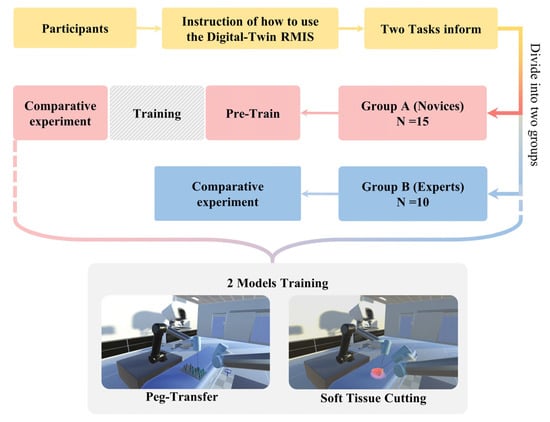

Due to a low proficiency in RMIS operation, it takes novices more than 2 weeks to practice before their actual experiments. All of the participants should be familiar with the operating rules and training tasks before conducting experiments to ensure the validity of the data. The period of the whole experiment is exhibited in Figure 7.

Figure 7.

Period of the whole experiment. Participants are divided into two groups since they are instructed about how to use the digital-twin RMIS platform and the tasks of the experiment.

3.2. Tasks

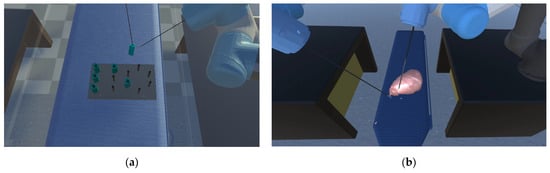

At the outset of the experiment, a designated experimental recorder is tasked with recording the hand, head, and instrument movements of all participants during the experiment. These recordings will serve as the raw data for the subsequent assessment of their surgical skills. The experiment primarily consists of two key tasks [27,28]: peg transfer and soft tissue cutting. Following the completion of one training mode, participants are provided with a 30 min break before the next training mode to ensure that their experimental data are not influenced by subjective physical fatigue.

To enhance the accuracy of evaluating the effectiveness of the various training modes in the experiment, all operators will receive detailed information regarding the scoring criteria for the operations and the specific operational requirements for each training module before the experiment commences. In the peg transfer module, the operators should employ the left surgical instrument to elevate the small pegs positioned on the left side and subsequently use the right surgical clamp to grasp and transfer the pegs to the columns on the right. If a small object falls during the operation, it will be counted as the number of drops, but the operator can use the instruments to pick up the object and continue with the previous operation. In the soft tissue cutting mode, the operators must utilize the left surgical clamp to grasp and gently tug on the soft tissue, followed by using the right surgical knife to precisely remove the specified soft tissue entirely. The duration, operation path, and cutting frequency used to complete the entire step will be recorded.

These two training modules are shown in Figure 8. Through the above two modules, the surgical skills of the experimental participants can be recorded, and their surgical skills will be quantitatively reflected.

Figure 8.

(a) Peg transfer module: the operators should employ the left surgical instrument to elevate the small pegs positioned on the left side, and subsequently use the right surgical clamp to grasp and transfer the pegs to the columns on the right. (b) Soft tissue cutting module: the operators must utilize the left surgical clamp to grasp and gently tug on the soft tissue, followed by using the right surgical knife to precisely remove the specified soft tissue entirely.

3.3. Data Collection

Because the simulator cannot automatically record all the data required for our experiment, the recorder will manually document relevant information during the operation. This includes the number of objects dropped in the peg transfer module and the cutting frequency in the soft tissue cutting module. A comprehensive surgical skill assessment will be conducted after merging the experimental data. A comparison will be made between the Novice Group and the Expert Group. Common evaluation criteria for both modules include the total experiment time (T) and the length of movement of surgical instruments (ML) for both the left and right instruments. Additionally, specific tasks have unique parameters to consider. In the peg transfer Module, the evaluation will consider the number of peg drops (ND), whereas for the soft tissue cutting task, performance will be assessed using the cutting frequency (FC).

This paper assesses the pros and cons of different modules by employing face and content validation through the use of questionnaires. The questionnaire employs a 5-point Likert scale to assess the visual and tactile experiences of the two training methods, with ratings ranging from 1 (Poor) to 5 (Excellent) [29]. The questionnaire, presented in Table 4, was completed by an expert team to provide a more precise evaluation of the simulator.

Table 4.

Questionnaire of the face and content validity.

3.4. Data Analysis

For face and content validation, the average values of subjective questions were calculated using descriptive statistical methods. Prior to statistical analysis, a reliability analysis was performed on the questionnaires, with a Cronbach’s alpha coefficient exceeding 0.7 considered as a credible measure.

In terms of construct validation, we compared the experimental data from the Novice Group and the Expert Group using an independent sample t-test. The simulator’s impact is considered significant when the p-value is less than 0.05.

We utilize the entropy method to determine the weighting for each evaluation item within the modules. To mitigate the impact of physical quantities, we employ a range-based method, followed by the calculation of the coefficient of variance for each evaluation item, resulting in their respective index weights. This paper applies the entropy method to establish the weight of each evaluation item within various modules. Initially, the scope method is employed to standardize each evaluation item by removing dimensionality, thereby eliminating the influence of physical quantities on the data. Subsequently, the coefficient of variance for each evaluation item is calculated to determine its index weight. The score function for each training module is then used to compute the experimental scores for both the Novice Group and the Expert Group. Finally, a block diagram is employed to compare the specific performance of these two groups. The motion trajectory diagram of the surgical instruments will be used to reveal the proficiency of the two groups. A statistical analysis software (SciPy 1.8.1 Python 3.9.5, based on miniconda) was used for the whole analysis process.

4. Evaluation

4.1. Face and Content Validation

To assess the visual and haptic perception of various modules, we designed a subjective questionnaire using a 5-point Likert scale, consisting of five questions. The questionnaire data from Expert Group doctors after the experiment are presented in Table 5.

Table 5.

Subjective questionnaire results of face and content assessment.

The reliability analysis of the statistical questionnaire yielded a total Cronbach’s alpha coefficient exceeding 0.7. Additionally, the data from each group exhibited a roughly normal distribution, as confirmed by the Shapiro–Wilk test.

4.2. Construct Validation

When the operator is training, the virtual operation simulator will automatically record the movement of the instrument and the completion time of the experiment.

Detailed data are provided in Table 6, illustrating that the Expert Group exhibits significantly higher proficiency compared to the Novice Group, as observed in both the figure and the table. The p-value results, displayed in Table 7, indicate the statistical significance of the differences between the Novice Group and the Expert Group.

Table 6.

Construct validation of face and content assessment.

Table 7.

The comparison of objective parameters for construct validity.

4.3. The Entropy Method

4.3.1. The Steps of the Entropy Method Steps

When selecting experts and evaluation items (), it is necessary to standardize the measurements of various indicators before calculating comprehensive scores. This standardization process involves converting absolute indicator values into relative values, addressing the differences in measurement scales. Furthermore, it is important to note that the interpretation of positive and negative indicators differs—higher values are better for positive indicators, while lower values are preferable for negative ones. Therefore, we employ distinct standardization algorithms for high and low indicators to account for these differences. The standardization process follows these specific methods:

Positive index:

Negative index:

Calculate the contribution or characteristic ratio of the expert for index using the following formula.

For entropy calculation, calculate the entropy of the th index.

Calculate the weight of the evaluation index, , and finally, normalize the index weight.

4.3.2. Data Processing

We established that the sore function and the index weight of each evaluation item in the modules is determined by the entropy method. The index weight for the Expert Group was determined through experimental data, as presented in Table 8.

Table 8.

Data for each training module experiment (experts).

The index weight and score function model for each module is presented in Table 9.

Table 9.

Weight and score function model of metrics in each training module.

5. Result and Discussion

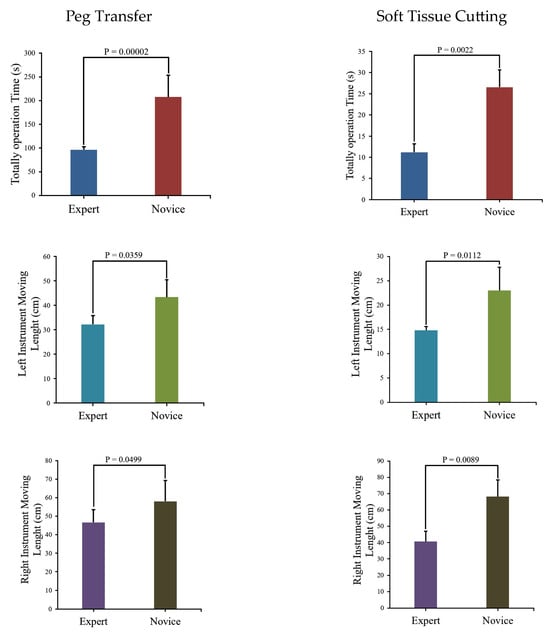

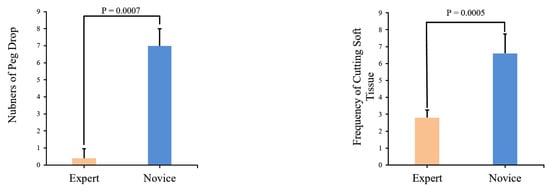

We use Matplotlib (Python 3.9.3) to create block diagrams that display p-values for comparing the same parameters in different training modules between the Novice Group and Expert Group, as depicted in Figure 9.

Figure 9.

Comparison of module parameters and associated p-values between the Novice Group and Expert Group.

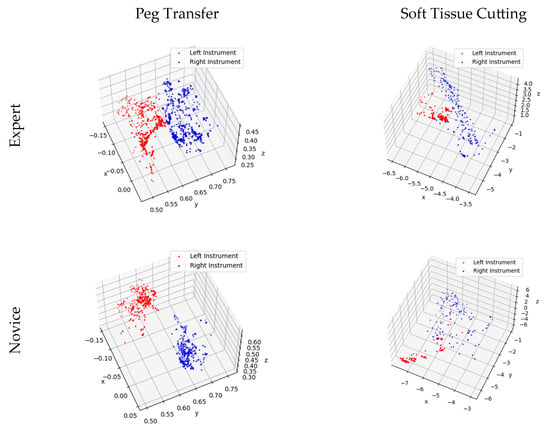

The figure indicates that the Novice Group requires significantly more time to complete two modules and their surgical instrument moving lengths are notably longer than those of the Expert Group. Instrument trajectories, illustrating the disparities between the Novice Group and Expert Group, were generated using Matplotlib [30] and are presented in Figure 10.

Figure 10.

Trajectory of surgical instrument movement in the training modules for the Novice and Expert Groups.

Upon the analysis of Table 7 and Figure 9, it becomes evident that a substantial disparity exists between expert and novice medical practitioners. The Expert Group demonstrate significantly shorter task completion times, reduced distances traveled during both left- and right-hand operations, and notably fewer instances of task failure when compared to their novice counterparts. Furthermore, the statistical significance tests conducted on the data yield p-values consistently below 0.05, underscoring the robustness and reliability of the data.

Figure 10 provides further insight into the matter, revealing that expert doctors exhibit methodical and predictable motion trajectories while manipulating virtual surgical instruments. These trajectories closely resemble the movements required for peg transfer and soft tissue cutting tasks under real-world surgical conditions. In contrast, novice doctors’ motion trajectories appear less structured, displaying irregular and less predictable patterns.

5.1. Peg Transfer

In Figure 9, a comparison of experimental data between the Novice Group and the Expert Group has been conducted for the peg transfer module. The key distinguishing factor between experts and novices in terms of surgical skill is the number of peg drops. This implies that more experienced operators tend to drop fewer pegs. Additionally, experts complete the training module in considerably less time and with shorter instrument movement lengths. Furthermore, in Figure 10, it is apparent that experts’ instrument trajectories are more uniformly distributed. The right trajectory closely resembles the arrangement of columns in Figure 8a. In contrast, novices take more time to complete the training task, and their instrument trajectories lack discernible patterns.

5.2. Soft Tissue Cutting

It is evident from Figure 9 that the Novice Group require significantly more time, longer instrument movement lengths, and a higher cutting frequency to complete the soft tissue cutting task compared to the Expert Group. Furthermore, as illustrated in Figure 10, the experts exhibit a higher level of precision in their soft tissue cutting skills, resulting in instrument trajectories that are more concentrated on a fixed path. In contrast, novices tend to exhibit more dispersed instrument trajectories when compared to experts.

5.3. Limitation of the VRDT-RMIS

Limited Assessment Metrics: The relatively limited set of assessment metrics used to evaluate the performance of participants is considered one of the limitations. While specific parameters were the focus of this study, future research could benefit from a more comprehensive range of metrics to provide a more nuanced evaluation of surgical skills.

Lack of Post-Training Assessment: A post-training assessment to measure the extent of improvement in the surgical abilities of novice participants after training was not conducted. Such assessments should be considered in future research to gain a comprehensive understanding of the simulator’s training effectiveness.

Small Sample Size: A sample size of 25 participants was involved in our study, which, while sufficient for the initial evaluation, may benefit from larger sample sizes in future studies to enhance the generalizability of the results.

Realism of the Virtual Environment: While aiming to replicate real surgical scenarios, the simulator’s realism can be further improved in certain aspects to better mimic real-world conditions.

In future research, we intend to address these limitations by expanding our assessment metrics, conducting post-training assessments, increasing sample sizes, and refining the realism of the virtual environment. These enhancements will contribute to a more comprehensive and accurate evaluation of the simulator’s effectiveness in surgical skill training.

5.4. Comparison with Existing RMIS Simulators

To better demonstrate the improved performance of the VRDT-RMIS simulator, a comparison was conducted with some of the existing surgical robot simulators [31]. It was found that our simulator not only closely approximates the dV-Trainer® (MultiCare Health Systems, Tacoma, WA, USA) in terms of robotic simulation, 3D visualization, and 3D mode, but also includes a high-refresh-rate haptic feedback that is lacking in most simulators. The information mentioned above is presented in Table 10.

Table 10.

Comparison with the existing RMIS simulators.

6. Conclusions

In this paper, we used the RCM task to constrain the motion of the robot digital twin in a Unity3D virtual environment and control it in real time through haptic devices. Then, we set up two surgical skill training modules within the virtual environment and invited 25 volunteers to verify the effectiveness of the simulator. An entropy method was employed to assign weights to each parameter during the experiment for the purpose of comparing the performance of the Novice Group and the Expert Group. Last but not least, we discussed the recorded data and found that the simulator can extremely distinguish novices and experts. However, due to a lack of time, we did not compare the improvements in surgical abilities and the extent of improvement for novices after training. This is something that we will further strengthen in our future work.

Integrating VRDT-RMIS into routine surgical training in modern surgical departments is our long-term vision. To achieve this goal, we will focus on the following improvements:

- Enhancing the coherence between real robot control and the virtual robot environment in the simulator remains a priority. We aim to seamlessly integrate these two components into a responsive system.

- Given the significant differences in communication speeds today, remote surgery is becoming a reality. We are committed to advancing the connectivity of the VRDT-RMIS system, allowing surgeons to access it from different locations, facilitating guidance and training from experts worldwide.

- The simulator’s data collection capabilities are invaluable for objective skill assessment and surgical competency certification. Surgeons can use the system to track their progress, identify areas for improvement, and attain certification based on their proficiency levels.

Furthermore, our objective is to conduct broader clinical validation studies to demonstrate the simulator’s effectiveness in improving real-world surgical outcomes.

Author Contributions

Conceptualization, X.C. and Y.T.; methodology, X.C., Z.W. and Y.T.; software, X.C. and S.L.; validation, X.C., S.L. and Z.W.; formal analysis, X.C.; investigation, S.L.; resources, J.P. and Y.T.; data curation, S.L.; writing—original draft preparation, X.C.; writing—review and editing, Y.T.; visualization, C.L.; supervision, Y.T.; project administration, Y.T.; funding acquisition, Y.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by funding from the National Natural Science Foundation of China (62062070, and 62005235).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the author Xiaoyu Cai.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Fuchs, K.H. Minimally invasive surgery. Endoscopy 2002, 34, 154–159. [Google Scholar] [CrossRef] [PubMed]

- Sang, H.; Yang, C.; Liu, F.; Yun, J.; Jin, G.; Chen, F. A zero phase adaptive fuzzy Kalman filter for physiological tremor suppression in robotically assisted minimally invasive surgery. Int. J. Med. Robot. Comput. Assist. Surg. 2016, 12, 658–669. [Google Scholar] [CrossRef] [PubMed]

- Kalan, S.; Chauhan, S.; Coelho, R.F.; Orvieto, M.A.; Camacho, I.R.; Palmer, K.J.; Patel, V.R. History of robotic surgery. J. Robot. Surg. 2010, 4, 141–147. [Google Scholar] [CrossRef] [PubMed]

- Alkhatib, O.; Snodin, C.; Dunne, D. Robotic cholecystectomy advantages and disadvantages, a literature review. Surgery 2023. preprint. [Google Scholar]

- Dupont, P.E.; Nelson, B.J.; Goldfarb, M.; Hannaford, B.; Menciassi, A.; O’malley, M.K.; Simaan, N.; Valdastri, P.; Yang, G.-Z. A decade retrospective of medical robotics research from 2010 to 2020. Sci. Robot. 2021, 6, eabi8017. [Google Scholar] [CrossRef] [PubMed]

- Zhang, N.; Yan, P.; Feng, L.; Chu, X.; Li, J.; Li, J.; Guo, K.; Guo, T.; Liu, X.; Yang, K. Top 100 most-cited original articles, systematic reviews/meta-analyses in robotic surgery: A scientometric study. Asian J. Surg. 2022, 45, 8–14. [Google Scholar] [CrossRef] [PubMed]

- Bramhe, S.; Pathak, S.S. Robotic surgery: A narrative review. Cureus 2022, 14, e29179. [Google Scholar] [CrossRef] [PubMed]

- Lane, T. A short history of robotic surgery. Ann. R. Coll. Surg. Engl. 2018, 100 (Suppl. 6), 5–7. [Google Scholar] [CrossRef]

- Valls-Esteve, A.; Adell-Gómez, N.; Pasten, A.; Barber, I.; Munuera, J.; Krauel, L. Exploring the Potential of Three-Dimensional Imaging, Printing, and Modeling in Pediatric Surgical Oncology: A New Era of Precision Surgery. Children 2023, 10, 832. [Google Scholar] [CrossRef] [PubMed]

- Valls-Esteve, A.; Tejo-Otero, A.; Lustig-Gainza, P.; Buj-Corral, I.; Fenollosa-Artes, F.; Ruio-Palau, J.; de la Torre, I.B.-M.; Munuera, J.; Fondevila, C.; Krauel, L. Patient-Specific 3D Printed Soft Models for Liver Surgical Planning and Hands-On Training. Gels 2023, 9, 339. [Google Scholar] [CrossRef]

- Alsofy, S.Z.; Sakellaropoulou, I.; Nakamura, M.; Ewelt, C.; Salma, A.; Lewitz, M.; Saravia, H.W.; Sarkis, H.M.; Fortmann, T.; Stroop, R. Impact of virtual reality in arterial anatomy detection and surgical planning in patients with unruptured anterior communicating artery aneurysms. Brain Sci. 2020, 10, 963. [Google Scholar] [CrossRef] [PubMed]

- Jo, Y.-J.; Choi, J.-S.; Kim, J.; Kim, H.-J.; Moon, S.-Y. Virtual reality (VR) simulation and augmented reality (AR) navigation in orthognathic surgery: A case report. Appl. Sci. 2021, 11, 5673. [Google Scholar] [CrossRef]

- Sørensen, S.M.D.; Savran, M.M.; Konge, L.; Bjerrum, F. Three-dimensional versus two-dimensional vision in laparoscopy: A systematic review. Surg. Endosc. 2016, 30, 11–23. [Google Scholar] [CrossRef] [PubMed]

- Konstantinova, J.; Jiang, A.; Althoefer, K.; Dasgupta, P.; Nanayakkara, T. Implementation of tactile sensing for palpation in robot-assisted minimally invasive surgery: A review. IEEE Sens. J. 2014, 14, 2490–2501. [Google Scholar] [CrossRef]

- Zia, A.; Essa, I. Automated surgical skill assessment in RMIS training. Int. J. Comput. Assist. Radiol. Surg. 2018, 13, 731–739. [Google Scholar] [CrossRef] [PubMed]

- Peng, W.; Xing, Y.; Liu, R.; Li, J.; Zhang, Z. An automatic skill evaluation framework for robotic surgery training. Int. J. Med. Robot. Comput. Assist. Surg. 2019, 15, e1964. [Google Scholar] [CrossRef] [PubMed]

- Aghakhani, N.; Geravand, M.; Shahriari, N.; Vendittelli, M.; Oriolo, G. Task control with remote center of motion constraint for minimally invasive robotic surgery. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013; IEEE: Piscataway, NJ, USA; pp. 5807–5812. [Google Scholar]

- Almusawi, A.R.J.; Dülger, L.C.; Kapucu, S. Artificial neural network based kinematics: Case study on robotic surgery. In Advances in Mechanism and Machine Science: Proceedings of the 15th IFToMM World Congress on Mechanism and Machine Science, Krakow, Poland, 15–18 July 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 1839–1848. [Google Scholar]

- Alamdar, A.; Samandi, P.; Hanifeh, S.; Kheradmand, P.; Mirabagheri, A.; Farahmand, F.; Sarkar, S. Investigation of a Hybrid Kinematic Calibration Method for the “Sina” Surgical Robot. IEEE Robot. Autom. Lett. 2020, 5, 5276–5282. [Google Scholar] [CrossRef]

- Wilz, O.; Sainsbury, B.; Rossa, C. Constrained haptic-guided shared control for collaborative human–robot percutaneous nephrolithotomy training. Mechatronics 2021, 75, 102528. [Google Scholar] [CrossRef]

- Colan, J.; Davila, A.; Fozilov, K.; Hasegawa, Y. A concurrent framework for constrained inverse kinematics of minimally invasive surgical robots. Sensors 2023, 23, 3328. [Google Scholar] [CrossRef]

- Colan, J.; Nakanishi, J.; Aoyama, T.; Hasegawa, Y. Optimization-Based Constrained Trajectory Generation for Robot-Assisted Stitching in Endonasal Surgery. Robotics 2021, 10, 27. [Google Scholar] [CrossRef]

- Mansard, N.; Chaumette, F. Task sequencing for high-level sensor-based control. IEEE Trans. Robot. 2007, 23, 60–72. [Google Scholar] [CrossRef]

- Kibsgaard, M.; Thomsen, K.K.; Kraus, M. Simulation of surgical cutting in deformable bodies using a game engine. In Proceedings of the 2014 International Conference on Computer Graphics Theory and Applications (GRAPP), Lisbon, Portugal, 5–8 January 2014; IEEE: Piscataway, NJ, USA; pp. 1–6. [Google Scholar]

- Sivasankaran, P.; Karthikeyan, R. Simulation of Robot Kinematic Motions using Collision Mapping Planner using RoboDK Solver. Blue Eyes Intell. Eng. Sci. Publ. 2020, 9, 21–27. [Google Scholar]

- Braumann, J.; Singline, K. Towards Real-Time Interaction with Industrial Robots in the Creative Industries. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; IEEE: Piscataway, NJ, USA; pp. 9453–9459. [Google Scholar]

- Ritter, E.M.; Kindelan, T.W.; Michael, C.; Pimentel, E.A.; Bowyer, M.W. Concurrent validity of augmented reality metrics applied to the fundamentals of laparoscopic surgery (FLS). Surg. Endosc. 2007, 21, 1441–1445. [Google Scholar] [CrossRef]

- Nagyné Elek, R.; Haidegger, T. Next in Surgical Data Science: Autonomous Non-Technical Skill Assessment in Minimally Invasive Surgery Training. J. Clin. Med. 2022, 11, 7533. [Google Scholar] [CrossRef] [PubMed]

- Korzeniowski, P.; Brown, D.C.; Sodergren, M.H.; Barrow, A.; Bello, F. Validation of NOVISE: A novel natural orifice virtual surgery simulator. Surg. Innov. 2017, 24, 55–65. [Google Scholar] [CrossRef] [PubMed]

- Zachár, G. Visualization of large-scale trajectory datasets. In Proceedings of the CPS-IoT Week ‘23: Cyber-Physical Systems and Internet of Things Week, San Antonio, TX, USA, 9–12 May 2023; Volume 2023, pp. 152–157. [Google Scholar]

- Larkins, K.M.; Mohan, H.M.; Gray, M.; Costello, D.M.; Costello, A.J.; Heriot, A.G.; Warrier, S.K. Transferability of robotic console skills by early robotic surgeons: A multi-platform crossover trial of simulation training. J. Robot. Surg. 2023, 17, 859–867. [Google Scholar] [CrossRef] [PubMed]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).