OpenForecast v2: Development and Benchmarking of the First National-Scale Operational Runoff Forecasting System in Russia

Abstract

1. Introduction

2. Data

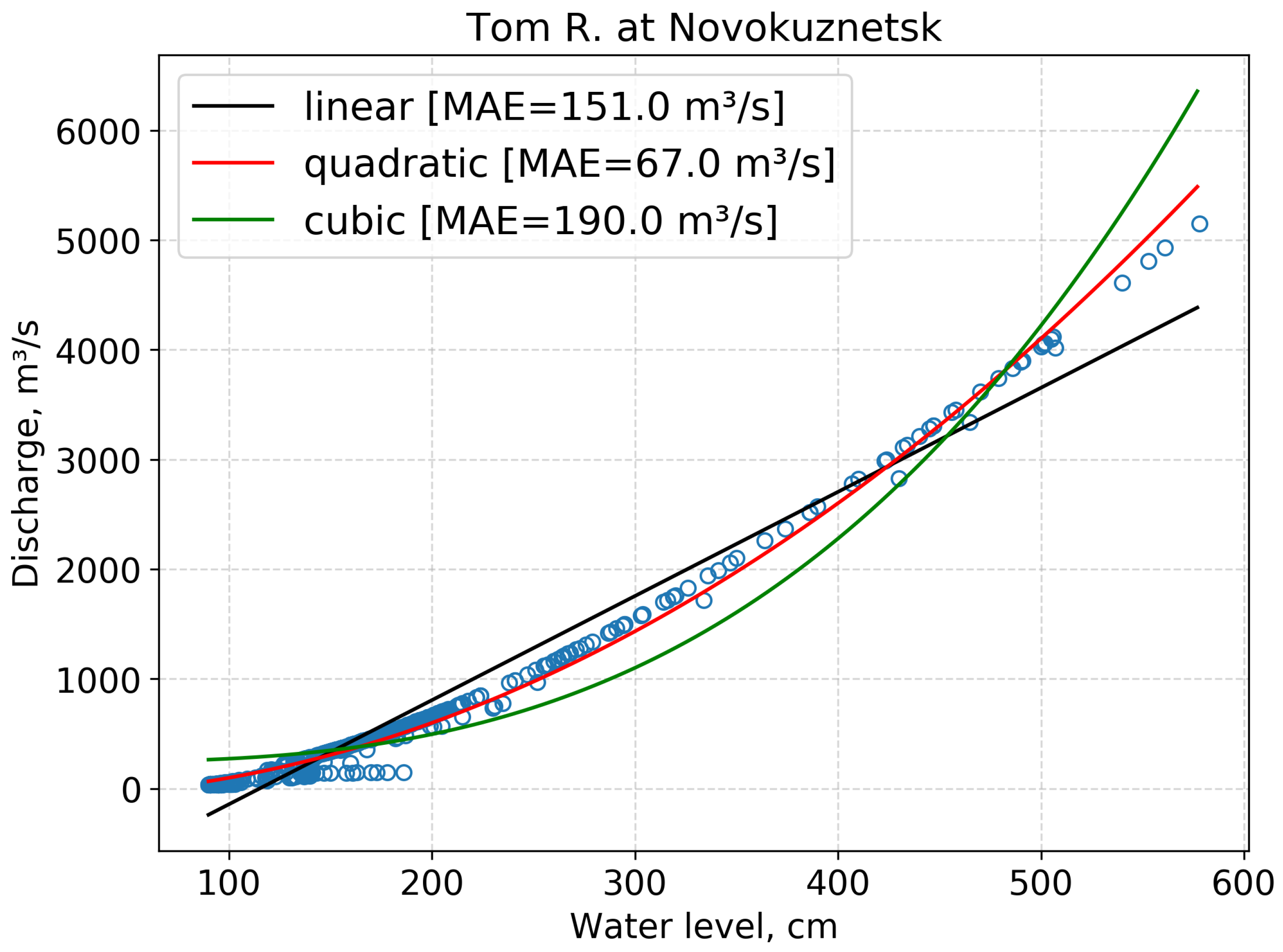

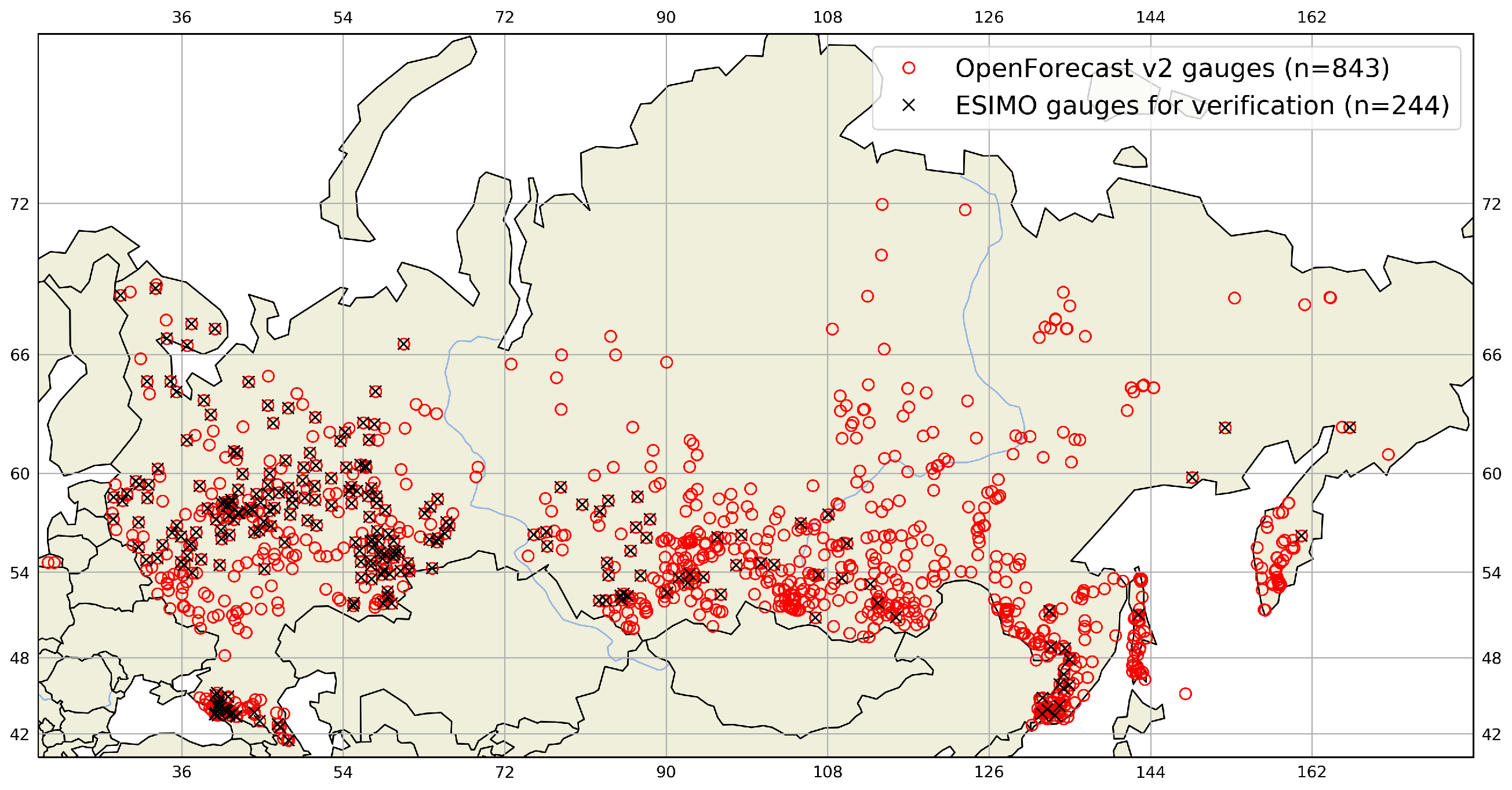

2.1. Streamflow and Water Level Observations

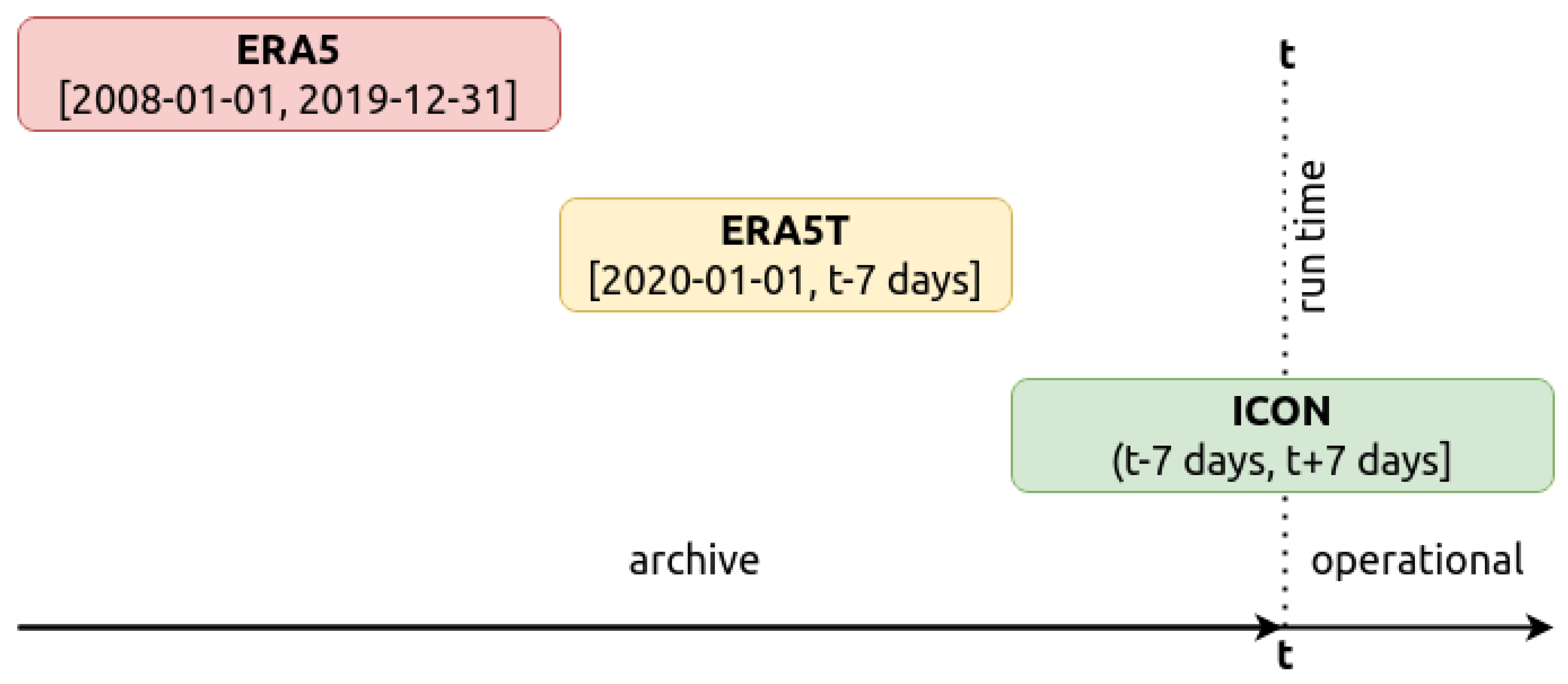

2.2. Meteorological Data

2.3. Gauge Attributes and Basin Boundaries

3. Methods

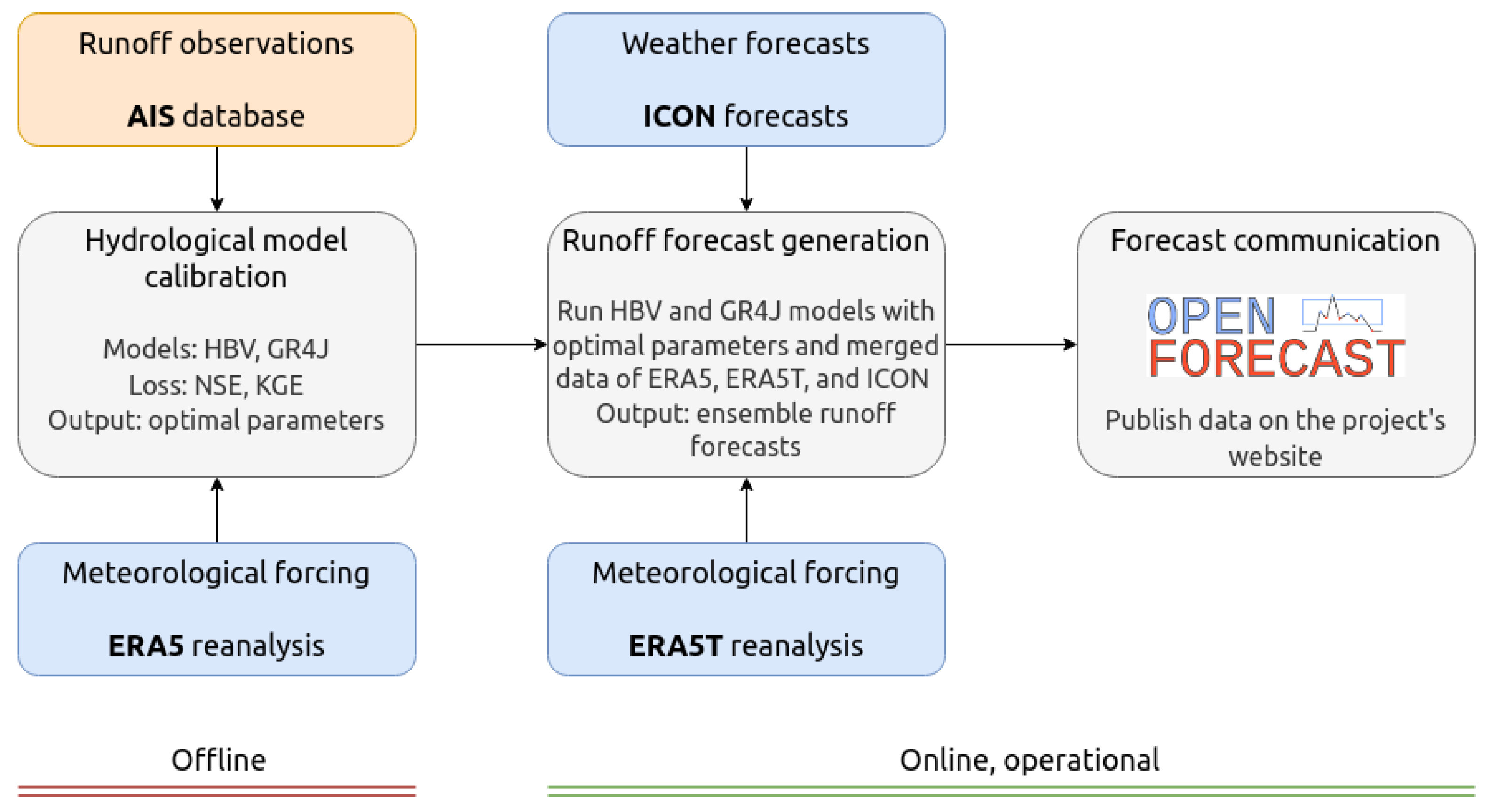

3.1. OpenForecast Computational Workflow

- While the first version uses the ERA-Interim reanalysis, the second uses ERA5, the successor to ERA-Interim.

- While the first version of the system derives a forecast for three days ahead, the second version extends this to seven days.

- The number of gauges increased from two in the first version to 843 in the second version.

3.1.1. Model Calibration

| Parameter | Description | Calibration Range |
|---|---|---|
| TT | Threshold temperature when precipitation is simulated as snowfall (°C) | –2.5 |
| SFCF | Snowfall gauge undercatch correction factor | 1–1.5 |
| CWH | Water holding capacity of snow | 0–0.2 |
| CFMAX | Melt rate of the snowpack (mm/(day·°C)) | 0.5–5 |
| CFR | Refreezing coefficient | 0–0.1 |
| FC | Maximum water storage in the unsaturated-zone store (mm) | 50–700 |
| LP | Soil moisture value above which actual evaporation reaches potential evaporation | 0.3–1 |
| BETA | Shape coefficient of recharge function | 1–6 |
| UZL | Threshold parameter for extra outflow from upper zone (mm) | 0–100 |
| PERC | Maximum percolation to lower zone (mm/day) | 0–6 |
| K0 | Additional recession coefficient of upper groundwater store (1/day) | 0.05–0.99 |
| K1 | Recession coefficient of upper groundwater store (1/day) | 0.01–0.8 |
| K2 | Recession coefficient of lower groundwater store (1/day) | 0.001–0.15 |
| MAXBAS | Length of equilateral triangular weighting function (day) | 1–3 |

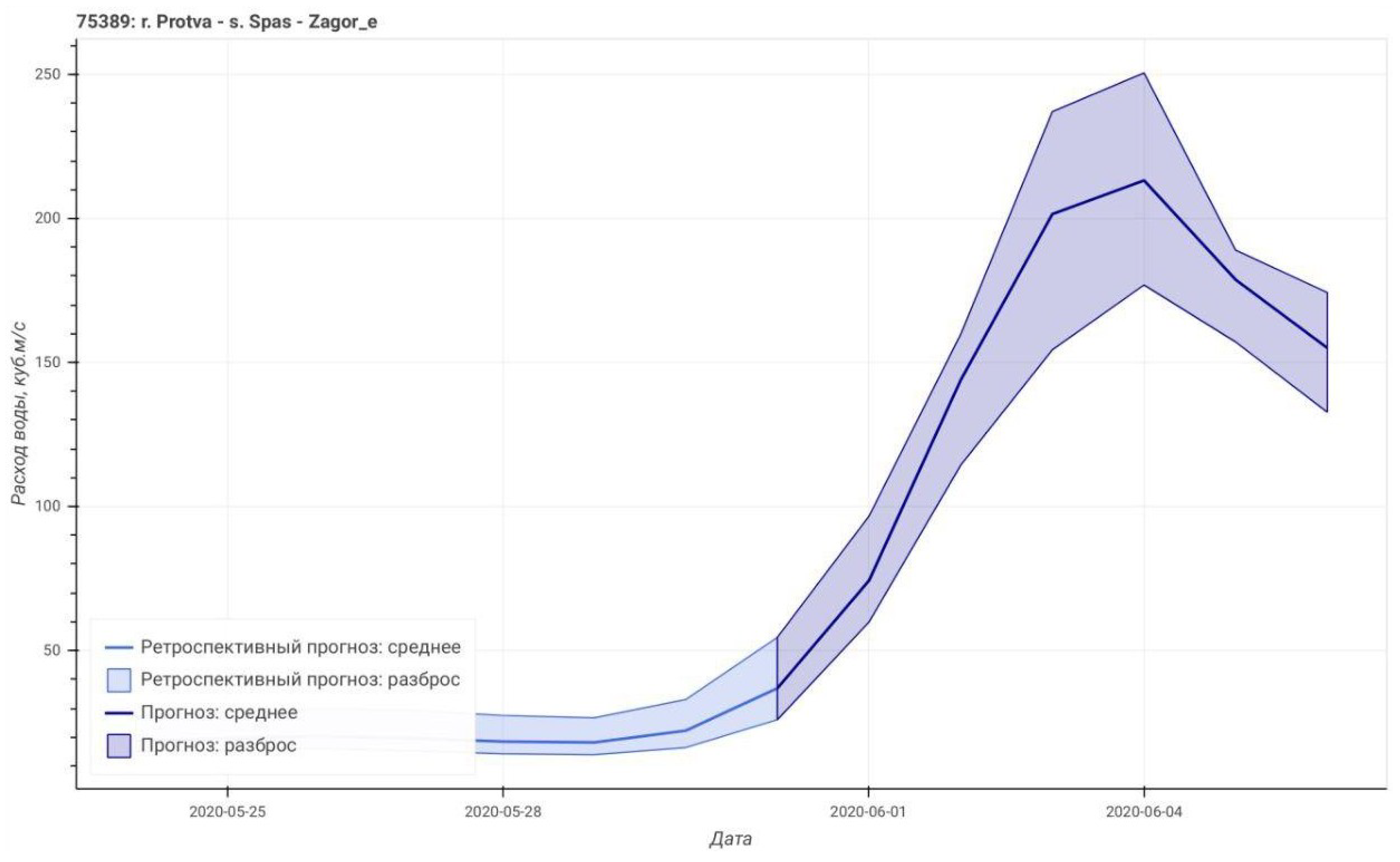

3.1.2. Generation of Runoff Forecast

3.1.3. Forecast Communication

3.1.4. Computational Details

3.2. Benchmarks and Verification Setup

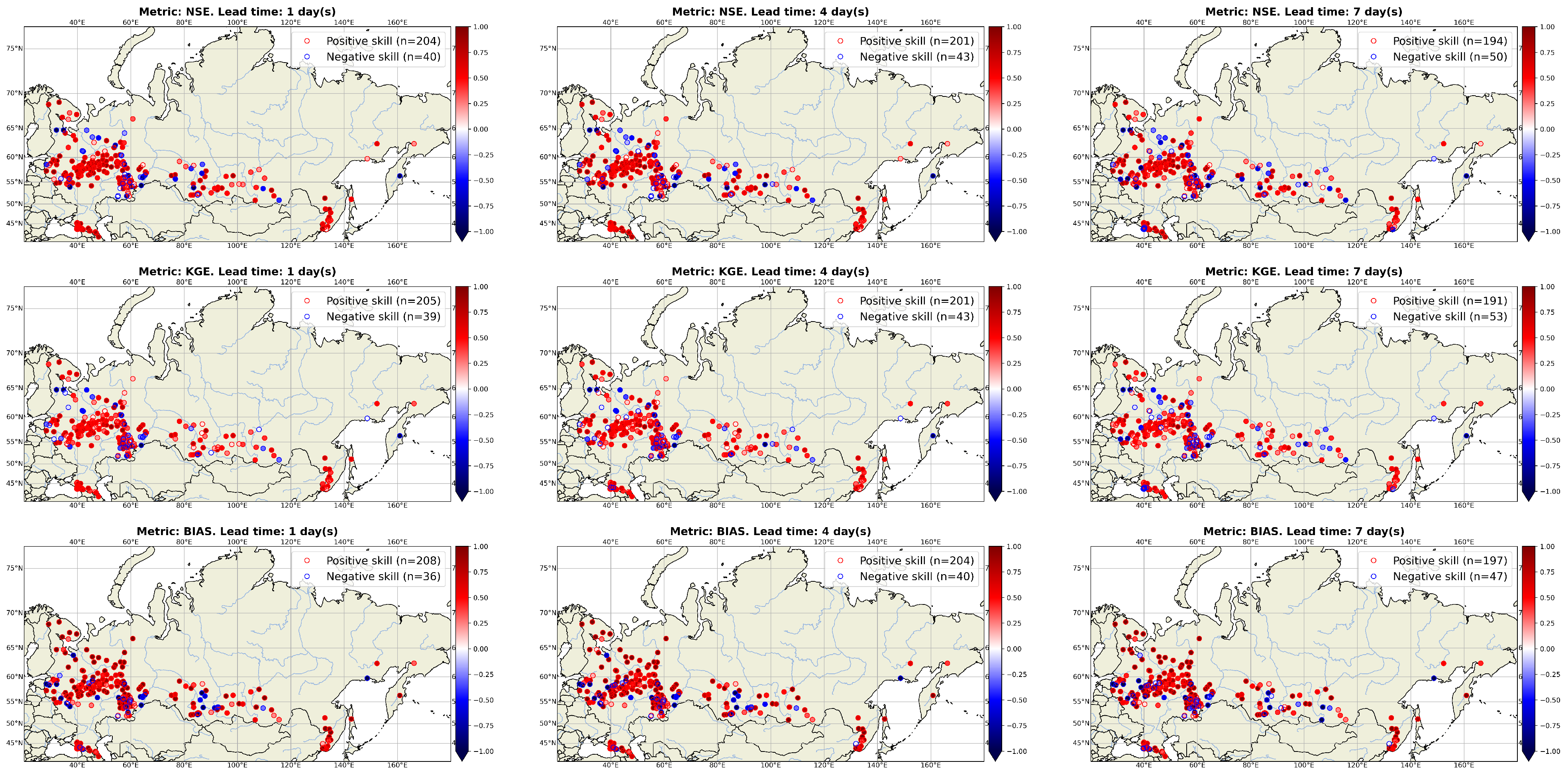

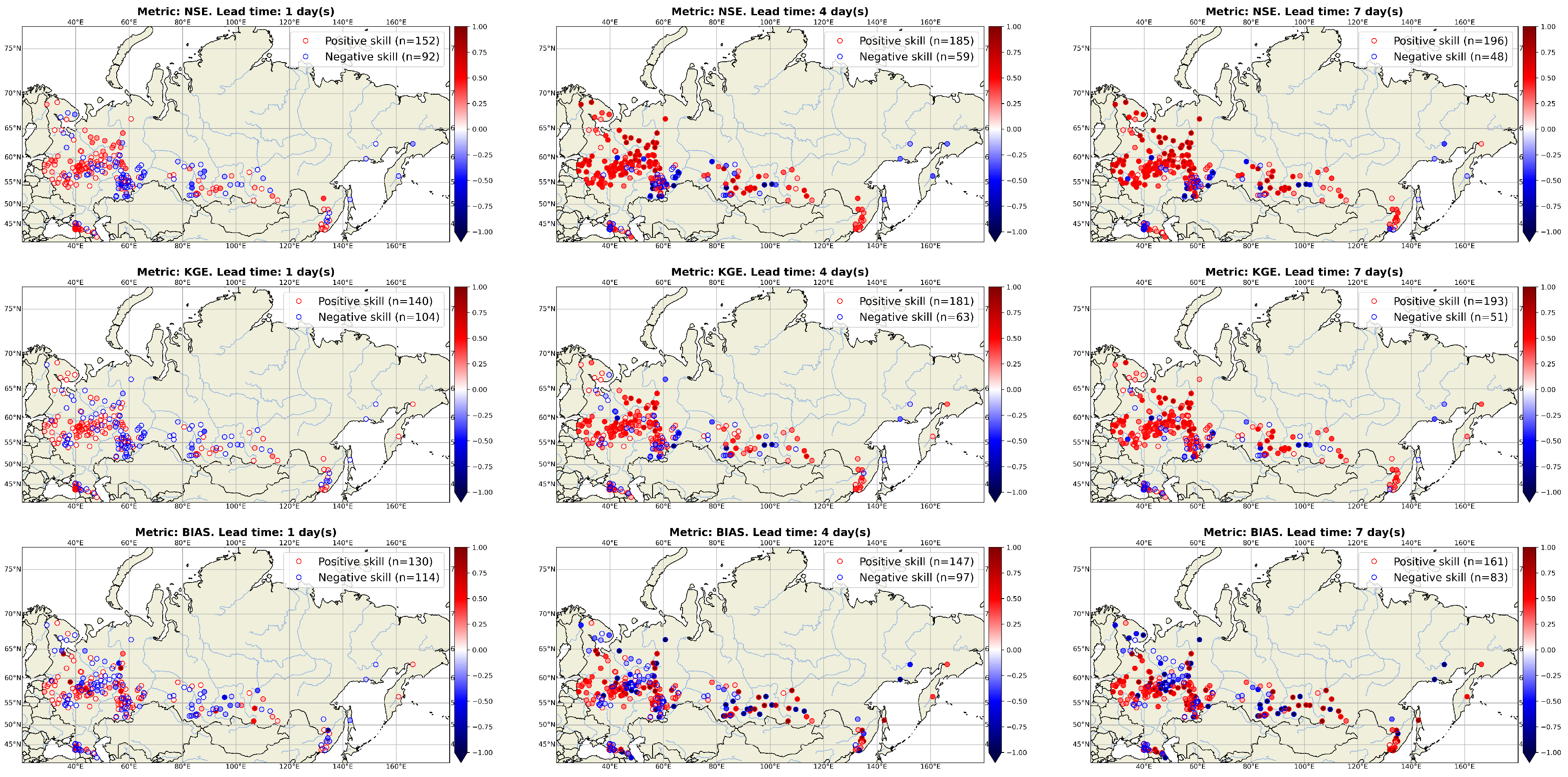

- Runoff climatology (hereafter climatology) is a naive benchmark that requires only historical runoff observations. From the general public’s perspective, this benchmark can be formulated as “The situation will be the same as in the year YYYY”. Although the climatology benchmark can be calculated dynamically for each forecast date, an a posteriori estimate is used here: the single-year realization from the available 10-year climatological sample (2008–2017) that has the highest correlation coefficient with observations from the verification period. In this way, the climatology benchmark is “the best guess” one can make from the available climatological sample alone, i.e., without any forecasting system at all.

- The runoff persistence (hereafter persistence) benchmark belongs to the change-signal category of benchmarks. It assumes that for any lead time, the runoff will be the same as the last observation (at forecast time). Despite its simplicity, persistence may be useful for short-range forecasting, where the forecast signal is dominated by the auto-regression of flow [29]. Following Pappenberger et al. [29], “the last observation” is taken here not as a measured discharge but as the last runoff value simulated by the hydrological model. That choice ensures consistency and offers a homogeneous verification data set that is usually not readily available for operational observations. Thus, comparison against persistence shows the gain provided by the use of a deterministic meteorological forecast.
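The two benchmarks described above can be sketched in a few lines of Python. This is a minimal illustration, not the system's actual code: the function names, the dictionary layout of the climatological sample, and the default seven-day horizon (taken from the lead time mentioned in Section 3.1) are assumptions made for this example.

```python
import numpy as np


def climatology_benchmark(yearly_runoff, observed):
    """Return the year (and its correlation) that best matches the observations.

    yearly_runoff: dict mapping year -> 1-D array of daily runoff, already
                   aligned day by day with `observed` (hypothetical layout).
    observed:      1-D array of runoff over the verification period.
    """
    best_year, best_r = None, -np.inf
    for year, series in yearly_runoff.items():
        # Pearson correlation between one historical year and the observations
        r = np.corrcoef(series, observed)[0, 1]
        if r > best_r:
            best_year, best_r = year, r
    return best_year, best_r


def persistence_benchmark(last_simulated, n_lead_days=7):
    """Hold the last simulated runoff value constant for every lead time."""
    return np.full(n_lead_days, float(last_simulated))
```

For a verification series and a 10-year sample, `climatology_benchmark` returns the single year used as "the best guess", while `persistence_benchmark(q_sim_last)` yields a flat forecast against which the gain from the meteorological forecast can be measured.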

4. Results and Discussion

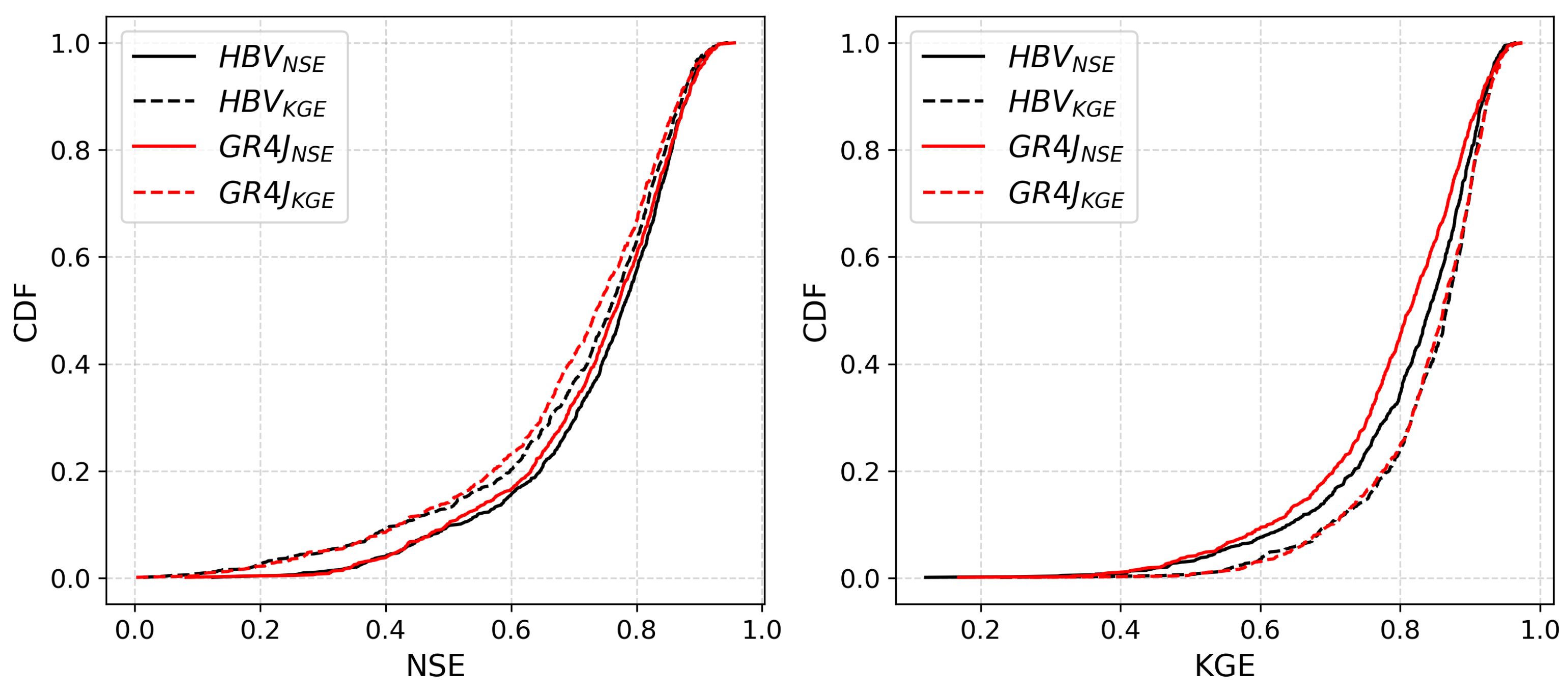

4.1. Hydrological Model Calibration

4.2. Selection of Reference Gauges

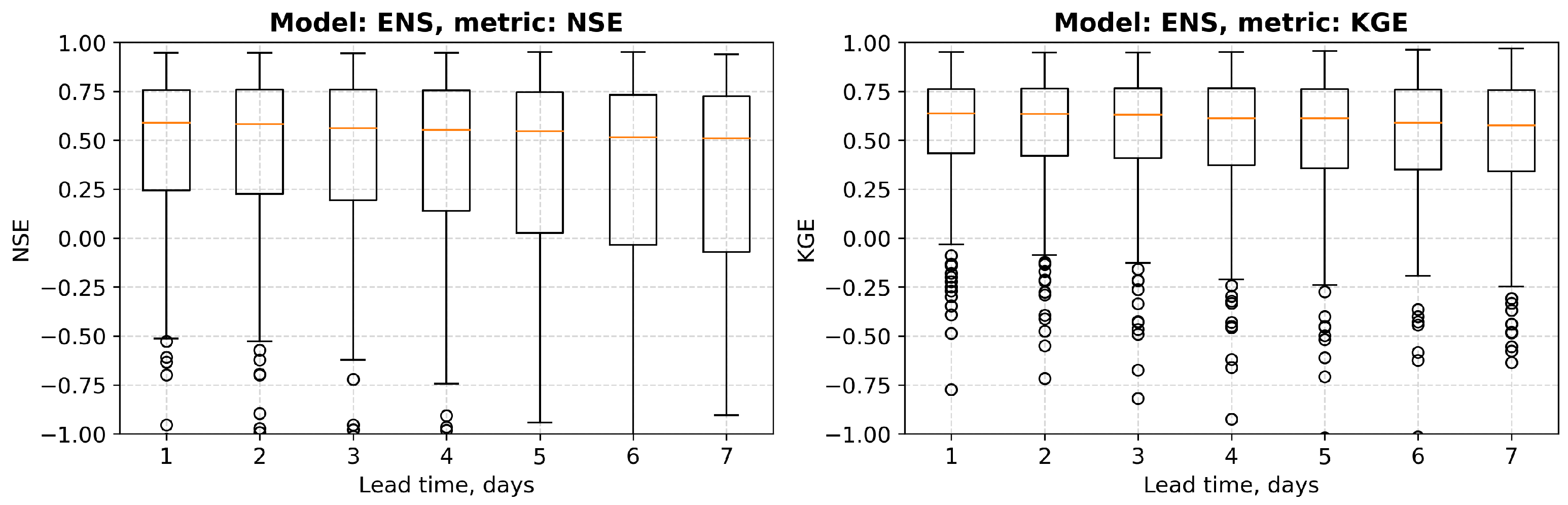

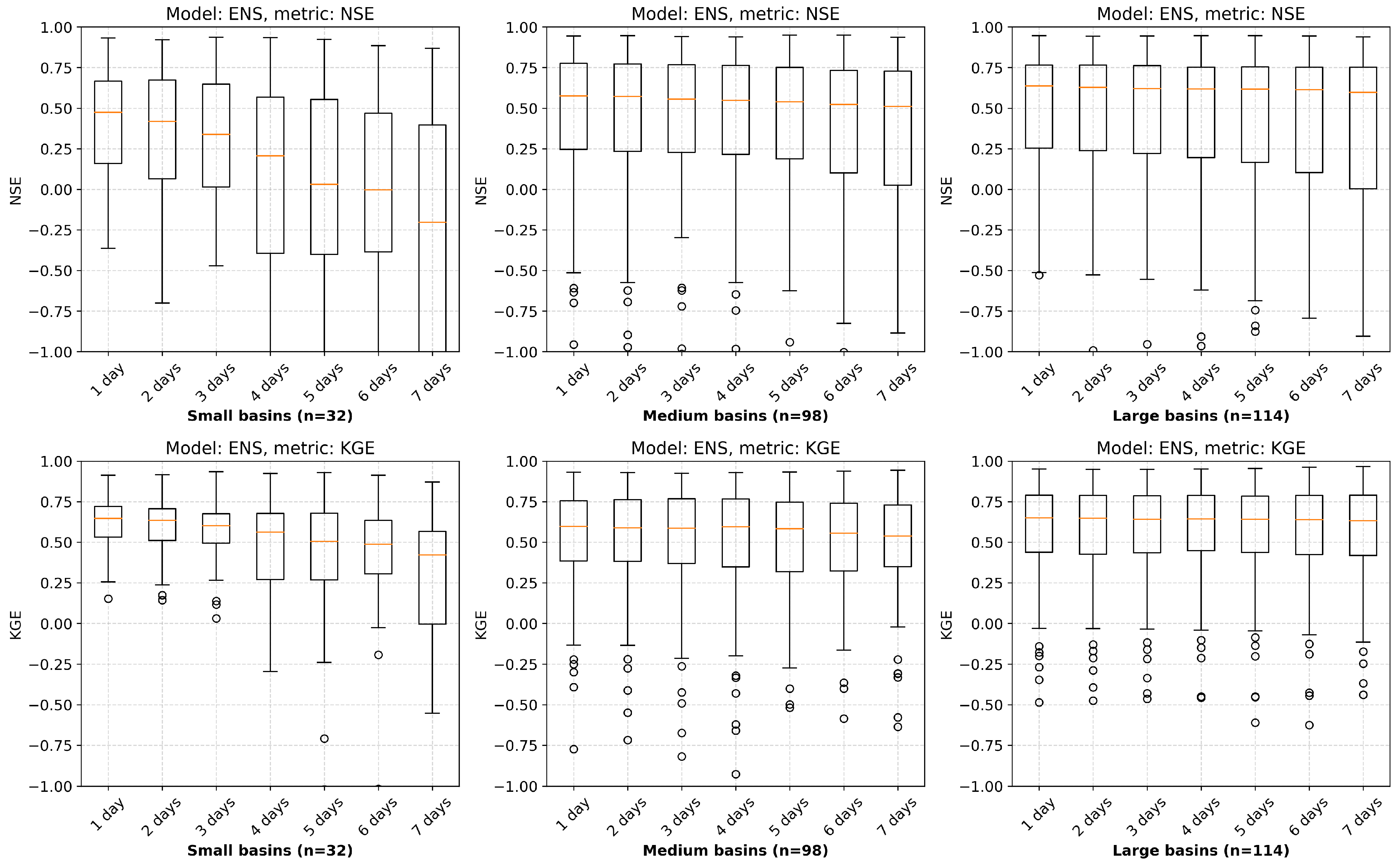

4.3. Benchmark and Verification Results

- The transition from ERA-Interim to ERA5 meteorological reanalysis (including the use of ERA5T product; Section 2.2).

- The transition from deterministic to ensemble runoff forecast, which is produced by different hydrological model configurations (Section 3.1).

- The use of meteorological reanalysis data instead of observation-based products.

- The use of non-homogeneous meteorological data, i.e., ERA5 reanalysis combined with the ICON NWP forecast.

- The lack of observational streamflow data assimilation.

- The lack of an error correction routine.

- The use of lumped conceptual hydrological models, while far more advanced models exist.

4.4. Website Traffic and Demand for Forecasts

5. Conclusions and Outlook

Funding

Acknowledgments

Conflicts of Interest

References

1. CRED. Natural Disasters. 2019. Available online: https://emdat.be/sites/default/files/adsr_2019.pdf (accessed on 19 November 2020).

2. Pappenberger, F.; Cloke, H.L.; Parker, D.J.; Wetterhall, F.; Richardson, D.S.; Thielen, J. The monetary benefit of early flood warnings in Europe. Environ. Sci. Policy 2015, 51, 278–291.

3. Ward, P.J.; Blauhut, V.; Bloemendaal, N.; Daniell, J.E.; de Ruiter, M.C.; Duncan, M.J.; Emberson, R.; Jenkins, S.F.; Kirschbaum, D.; Kunz, M.; et al. Review article: Natural hazard risk assessments at the global scale. Nat. Hazards Earth Syst. Sci. 2020, 20, 1069–1096.

4. Blöschl, G.; Hall, J.; Viglione, A.; Perdigão, R.A.; Parajka, J.; Merz, B.; Lun, D.; Arheimer, B.; Aronica, G.T.; Bilibashi, A.; et al. Changing climate both increases and decreases European river floods. Nature 2019, 573, 108–111.

5. Blöschl, G.; Kiss, A.; Viglione, A.; Barriendos, M.; Böhm, O.; Brázdil, R.; Coeur, D.; Demarée, G.; Llasat, M.C.; Macdonald, N.; et al. Current European flood-rich period exceptional compared with past 500 years. Nature 2020, 583, 560–566.

6. Emerton, R.; Zsoter, E.; Arnal, L.; Cloke, H.L.; Muraro, D.; Prudhomme, C.; Stephens, E.M.; Salamon, P.; Pappenberger, F. Developing a global operational seasonal hydro-meteorological forecasting system: GloFAS-Seasonal v1.0. Geosci. Model Dev. 2018, 11, 3327–3346.

7. Harrigan, S.; Zsoter, E.; Cloke, H.; Salamon, P.; Prudhomme, C. Daily ensemble river discharge reforecasts and real-time forecasts from the operational Global Flood Awareness System. Hydrol. Earth Syst. Sci. Discuss. 2020, 2020, 1–22.

8. Cloke, H.; Pappenberger, F. Ensemble flood forecasting: A review. J. Hydrol. 2009, 375, 613–626.

9. Emerton, R.E.; Stephens, E.M.; Pappenberger, F.; Pagano, T.C.; Weerts, A.H.; Wood, A.W.; Salamon, P.; Brown, J.D.; Hjerdt, N.; Donnelly, C.; et al. Continental and global scale flood forecasting systems. Wiley Interdiscip. Rev. Water 2016, 3, 391–418.

10. Wu, W.; Emerton, R.; Duan, Q.; Wood, A.W.; Wetterhall, F.; Robertson, D.E. Ensemble flood forecasting: Current status and future opportunities. Wiley Interdiscip. Rev. Water 2020, 7, e1432.

11. Bartholmes, J.C.; Thielen, J.; Ramos, M.H.; Gentilini, S. The European Flood Alert System EFAS—Part 2: Statistical skill assessment of probabilistic and deterministic operational forecasts. Hydrol. Earth Syst. Sci. 2009, 13, 141–153.

12. Alfieri, L.; Burek, P.; Dutra, E.; Krzeminski, B.; Muraro, D.; Thielen, J.; Pappenberger, F. GloFAS: Global ensemble streamflow forecasting and flood early warning. Hydrol. Earth Syst. Sci. 2013, 17, 1161–1175.

13. Robertson, D.E.; Shrestha, D.L.; Wang, Q.J. Post-processing rainfall forecasts from numerical weather prediction models for short-term streamflow forecasting. Hydrol. Earth Syst. Sci. 2013, 17, 3587–3603.

14. Bennett, J.C.; Wang, Q.J.; Robertson, D.E.; Schepen, A.; Li, M.; Michael, K. Assessment of an ensemble seasonal streamflow forecasting system for Australia. Hydrol. Earth Syst. Sci. 2017, 21, 6007–6030.

15. Kollet, S.; Gasper, F.; Brdar, S.; Goergen, K.; Hendricks-Franssen, H.J.; Keune, J.; Kurtz, W.; Küll, V.; Pappenberger, F.; Poll, S.; et al. Introduction of an experimental terrestrial forecasting/monitoring system at regional to continental scales based on the terrestrial systems modeling platform (v1.1.0). Water 2018, 10, 1697.

16. Emerton, R.; Cloke, H.; Ficchi, A.; Hawker, L.; de Wit, S.; Speight, L.; Prudhomme, C.; Rundell, P.; West, R.; Neal, J.; et al. Emergency flood bulletins for Cyclones Idai and Kenneth: A critical evaluation of the use of global flood forecasts for international humanitarian preparedness and response. Int. J. Disaster Risk Reduct. 2020, 50, 101811.

17. Hirpa, F.A.; Salamon, P.; Beck, H.E.; Lorini, V.; Alfieri, L.; Zsoter, E.; Dadson, S.J. Calibration of the Global Flood Awareness System (GloFAS) using daily streamflow data. J. Hydrol. 2018, 566, 595–606.

18. Ayzel, G.; Kurochkina, L.; Zhuravlev, S. The influence of regional hydrometric data incorporation on the accuracy of gridded reconstruction of monthly runoff. Hydrol. Sci. J. 2020, 1–12.

19. Antonetti, M.; Zappa, M. How can expert knowledge increase the realism of conceptual hydrological models? A case study based on the concept of dominant runoff process in the Swiss Pre-Alps. Hydrol. Earth Syst. Sci. 2018, 22, 4425–4447.

20. Antonetti, M.; Horat, C.; Sideris, I.V.; Zappa, M. Ensemble flood forecasting considering dominant runoff processes – Part 1: Set-up and application to nested basins (Emme, Switzerland). Nat. Hazards Earth Syst. Sci. 2019, 19, 19–40.

21. Ayzel, G.; Varentsova, N.; Erina, O.; Sokolov, D.; Kurochkina, L.; Moreydo, V. OpenForecast: The First Open-Source Operational Runoff Forecasting System in Russia. Water 2019, 11, 1546.

22. Robson, A.; Moore, R.; Wells, S.; Rudd, A.; Cole, S.; Mattingley, P. Understanding the Performance of Flood Forecasting Models; Technical Report SC130006; Centre for Ecology and Hydrology, Environment Agency: Wallingford, UK, 2017.

23. Cohen, S.; Praskievicz, S.; Maidment, D.R. Featured Collection Introduction: National Water Model. JAWRA J. Am. Water Resour. Assoc. 2018, 54, 767–769.

24. Prudhomme, C.; Hannaford, J.; Harrigan, S.; Boorman, D.; Knight, J.; Bell, V.; Jackson, C.; Svensson, C.; Parry, S.; Bachiller-Jareno, N.; et al. Hydrological Outlook UK: An operational streamflow and groundwater level forecasting system at monthly to seasonal time scales. Hydrol. Sci. J. 2017, 62, 2753–2768.

25. McMillan, H.K.; Booker, D.J.; Cattoën, C. Validation of a national hydrological model. J. Hydrol. 2016, 541.

26. Viglione, A.; Borga, M.; Balabanis, P.; Blöschl, G. Barriers to the exchange of hydrometeorological data in Europe: Results from a survey and implications for data policy. J. Hydrol. 2010, 394, 63–77.

27. Frolova, N.; Kireeva, M.; Magrickiy, D.; Bologov, M.; Kopylov, V.; Hall, J.; Semenov, V.; Kosolapov, A.; Dorozhkin, E.; Korobkina, E.; et al. Hydrological hazards in Russia: Origin, classification, changes and risk assessment. Nat. Hazards 2017, 88, 103–131.

28. Meredith, E.P.; Semenov, V.A.; Maraun, D.; Park, W.; Chernokulsky, A.V. Crucial role of Black Sea warming in amplifying the 2012 Krymsk precipitation extreme. Nat. Geosci. 2015, 8, 615–619.

29. Pappenberger, F.; Ramos, M.H.; Cloke, H.L.; Wetterhall, F.; Alfieri, L.; Bogner, K.; Mueller, A.; Salamon, P. How do I know if my forecasts are better? Using benchmarks in hydrological ensemble prediction. J. Hydrol. 2015, 522, 697–713.

30. Hersbach, H.; Bell, B.; Berrisford, P.; Hirahara, S.; Horányi, A.; Muñoz-Sabater, J.; Nicolas, J.; Peubey, C.; Radu, R.; Schepers, D.; et al. The ERA5 global reanalysis. Q. J. R. Meteorol. Soc. 2020, 146, 1999–2049.

31. Tarek, M.; Brissette, F.P.; Arsenault, R. Evaluation of the ERA5 reanalysis as a potential reference dataset for hydrological modelling over North America. Hydrol. Earth Syst. Sci. 2020, 24, 2527–2544.

32. Reinert, D.; Prill, F.; Frank, H.; Denhard, M.; Baldauf, M.; Schraff, C.; Gebhardt, C.; Marsigli, C.; Zängl, G. DWD Database Reference for the Global and Regional ICON and ICON-EPS Forecasting System; Technical Report Version 2.1.1; Deutscher Wetterdienst (DWD): Offenbach, Germany, 2020.

33. Oudin, L.; Hervieu, F.; Michel, C.; Perrin, C.; Andréassian, V.; Anctil, F.; Loumagne, C. Which potential evapotranspiration input for a lumped rainfall–runoff model? Part 2—Towards a simple and efficient potential evapotranspiration model for rainfall–runoff modelling. J. Hydrol. 2005, 303, 290–306.

34. Lehner, B. Derivation of Watershed Boundaries for GRDC Gauging Stations Based on the HydroSHEDS Drainage Network; Technical Report 41; Global Runoff Data Centre (GRDC): Koblenz, Germany, 2012; Available online: https://www.bafg.de/GRDC/EN/02_srvcs/24_rprtsrs/report_41.html (accessed on 20 November 2020).

35. Yamazaki, D.; Ikeshima, D.; Sosa, J.; Bates, P.D.; Allen, G.H.; Pavelsky, T.M. MERIT Hydro: A High-Resolution Global Hydrography Map Based on Latest Topography Dataset. Water Resour. Res. 2019, 55, 5053–5073.

36. Perrin, C.; Michel, C.; Andréassian, V. Improvement of a parsimonious model for streamflow simulation. J. Hydrol. 2003, 279, 275–289.

37. Bergström, S.; Forsman, A. Development of a conceptual deterministic rainfall-runoff model. Hydrol. Res. 1973, 4, 147–170.

38. Lindström, G. A simple automatic calibration routine for the HBV model. Hydrol. Res. 1997, 28, 153–168.

39. Nash, J.E.; Sutcliffe, J.V. River flow forecasting through conceptual models part I—A discussion of principles. J. Hydrol. 1970, 10, 282–290.

40. Gupta, H.V.; Kling, H.; Yilmaz, K.K.; Martinez, G.F. Decomposition of the mean squared error and NSE performance criteria: Implications for improving hydrological modelling. J. Hydrol. 2009, 377, 80–91.

41. Valéry, A.; Andréassian, V.; Perrin, C. ‘As simple as possible but not simpler’: What is useful in a temperature-based snow-accounting routine? Part 1—Comparison of six snow accounting routines on 380 catchments. J. Hydrol. 2014, 517, 1166–1175.

42. Valéry, A.; Andréassian, V.; Perrin, C. ‘As simple as possible but not simpler’: What is useful in a temperature-based snow-accounting routine? Part 2—Sensitivity analysis of the Cemaneige snow accounting routine on 380 catchments. J. Hydrol. 2014, 517, 1176–1187.

43. Arsenault, R.; Brissette, F.; Martel, J.L. The hazards of split-sample validation in hydrological model calibration. J. Hydrol. 2018, 566, 346–362.

44. Storn, R.; Price, K. Differential evolution–a simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 1997, 11, 341–359.

45. Beck, H.E.; van Dijk, A.I.J.M.; de Roo, A.; Miralles, D.G.; McVicar, T.R.; Schellekens, J.; Bruijnzeel, L.A. Global-scale regionalization of hydrologic model parameters. Water Resour. Res. 2016.

46. Ayzel, G. Runoff predictions in ungauged Arctic basins using conceptual models forced by reanalysis data. Water Resour. 2018, 45, 1–7.

47. Ayzel, G.; Izhitskiy, A. Coupling physically based and data-driven models for assessing freshwater inflow into the Small Aral Sea. Proc. Int. Assoc. Hydrol. Sci. 2018, 379, 151–158.

48. Ayzel, G.; Izhitskiy, A. Climate change impact assessment on freshwater inflow into the Small Aral Sea. Water 2019, 11, 2377.

49. Pushpalatha, R.; Perrin, C.; Le Moine, N.; Mathevet, T.; Andréassian, V. A downward structural sensitivity analysis of hydrological models to improve low-flow simulation. J. Hydrol. 2011, 411, 66–76.

50. Pappenberger, F.; Stephens, E.; Thielen, J.; Salamon, P.; Demeritt, D.; van Andel, S.J.; Wetterhall, F.; Alfieri, L. Visualizing probabilistic flood forecast information: Expert preferences and perceptions of best practice in uncertainty communication. Hydrol. Process. 2013, 27, 132–146.

51. Demargne, J.; Mullusky, M.; Werner, K.; Adams, T.; Lindsey, S.; Schwein, N.; Marosi, W.; Welles, E. Application of forecast verification science to operational river forecasting in the US National Weather Service. Bull. Am. Meteorol. Soc. 2009, 90, 779–784.

52. Knoben, W.J.M.; Freer, J.E.; Woods, R.A. Technical note: Inherent benchmark or not? Comparing Nash–Sutcliffe and Kling–Gupta efficiency scores. Hydrol. Earth Syst. Sci. 2019, 23, 4323–4331.

53. Luo, Y.Q.; Randerson, J.T.; Abramowitz, G.; Bacour, C.; Blyth, E.; Carvalhais, N.; Ciais, P.; Dalmonech, D.; Fisher, J.B.; Fisher, R.; et al. A framework for benchmarking land models. Biogeosciences 2012, 9, 3857–3874.

54. Harrigan, S.; Prudhomme, C.; Parry, S.; Smith, K.; Tanguy, M. Benchmarking ensemble streamflow prediction skill in the UK. Hydrol. Earth Syst. Sci. 2018, 22, 2023–2039.

55. Mansanarez, V.; Renard, B.; Le Coz, J.; Lang, M.; Darienzo, M. Shift happens! Adjusting stage-discharge rating curves to morphological changes at known times. Water Resour. Res. 2019.

56. Moriasi, D.; Arnold, J.; Van Liew, M.; Bingner, R.; Harmel, R.; Veith, T. Model evaluation guidelines for systematic quantification of accuracy in watershed simulations. Trans. ASABE 2007, 50, 885–900.

57. Essou, G.R.C.; Sabarly, F.; Lucas-Picher, P.; Brissette, F.; Poulin, A. Can Precipitation and Temperature from Meteorological Reanalyses Be Used for Hydrological Modeling? J. Hydrometeorol. 2016, 17, 1929–1950.

58. Raimonet, M.; Oudin, L.; Thieu, V.; Silvestre, M.; Vautard, R.; Rabouille, C.; Le Moigne, P. Evaluation of Gridded Meteorological Datasets for Hydrological Modeling. J. Hydrometeorol. 2017, 18, 3027–3041.

59. Kuncheva, L.I.; Whitaker, C.J. Measures of diversity in classifier ensembles and their relationship with the ensemble accuracy. Mach. Learn. 2003, 51, 181–207.

60. Pinnington, E.; Quaife, T.; Black, E. Impact of remotely sensed soil moisture and precipitation on soil moisture prediction in a data assimilation system with the JULES land surface model. Hydrol. Earth Syst. Sci. 2018, 22, 2575–2588.

61. Poncelet, C.; Merz, R.; Merz, B.; Parajka, J.; Oudin, L.; Andréassian, V.; Perrin, C. Process-based interpretation of conceptual hydrological model performance using a multinational catchment set. Water Resour. Res. 2017, 53, 7247–7268.

62. Blöschl, G.; Bierkens, M.F.; Chambel, A.; Cudennec, C.; Destouni, G.; Fiori, A.; Kirchner, J.W.; McDonnell, J.J.; Savenije, H.H.; Sivapalan, M.; et al. Twenty-three Unsolved Problems in Hydrology (UPH)—A community perspective. Hydrol. Sci. J. 2019.

63. Schaefli, B.; Gupta, H.V. Do Nash values have value? Hydrol. Process. 2007, 21, 2075–2080.

64. Thober, S.; Kumar, R.; Wanders, N.; Marx, A.; Pan, M.; Rakovec, O.; Samaniego, L.; Sheffield, J.; Wood, E.F.; Zink, M. Multi-model ensemble projections of European river floods and high flows at 1.5, 2, and 3 degrees global warming. Environ. Res. Lett. 2018, 13, 014003.

65. Bertola, M.; Viglione, A.; Lun, D.; Hall, J.; Blöschl, G. Flood trends in Europe: Are changes in small and big floods different? Hydrol. Earth Syst. Sci. 2020, 24, 1805–1822.

66. Arnell, N.W.; Gosling, S.N. The impacts of climate change on river flood risk at the global scale. Clim. Chang. 2016, 134, 387–401.

67. Addor, N.; Do, H.X.; Alvarez-Garreton, C.; Coxon, G.; Fowler, K.; Mendoza, P.A. Large-sample hydrology: Recent progress, guidelines for new datasets and grand challenges. Hydrol. Sci. J. 2019.

68. Beck, H.E.; Vergopolan, N.; Pan, M.; Levizzani, V.; van Dijk, A.I.J.M.; Weedon, G.P.; Brocca, L.; Pappenberger, F.; Huffman, G.J.; Wood, E.F. Global-scale evaluation of 22 precipitation datasets using gauge observations and hydrological modeling. Hydrol. Earth Syst. Sci. 2017, 21, 6201–6217.

69. Beck, H.E.; van Dijk, A.I.J.M.; de Roo, A.; Dutra, E.; Fink, G.; Orth, R.; Schellekens, J. Global evaluation of runoff from ten state-of-the-art hydrological models. Hydrol. Earth Syst. Sci. Discuss. 2016, 1–33.

70. Kneis, D.; Abon, C.; Bronstert, A.; Heistermann, M. Verification of short-term runoff forecasts for a small Philippine basin (Marikina). Hydrol. Sci. J. 2017, 62, 205–216.

71. Kauffeldt, A.; Wetterhall, F.; Pappenberger, F.; Salamon, P.; Thielen, J. Technical review of large-scale hydrological models for implementation in operational flood forecasting schemes on continental level. Environ. Model. Softw. 2016, 75, 68–76.

72. Hrachowitz, M.; Clark, M.P. HESS Opinions: The complementary merits of competing modelling philosophies in hydrology. Hydrol. Earth Syst. Sci. 2017, 21, 3953–3973.

73. Li, M.; Wang, Q.J.; Robertson, D.E.; Bennett, J.C. Improved error modelling for streamflow forecasting at hourly time steps by splitting hydrographs into rising and falling limbs. J. Hydrol. 2017, 555, 586–599.

74. Li, M.; Robertson, D.E.; Wang, Q.J.; Bennett, J.C.; Perraud, J.M. Reliable hourly streamflow forecasting with emphasis on ephemeral rivers. J. Hydrol. 2020, 125739.

75. Arnal, L.; Anspoks, L.; Manson, S.; Neumann, J.; Norton, T.; Stephens, E.; Wolfenden, L.; Cloke, H.L. “Are we talking just a bit of water out of bank? Or is it Armageddon?” Front line perspectives on transitioning to probabilistic fluvial flood forecasts in England. Geosci. Commun. 2020, 3, 203–232.

| Parameter | Description | Calibration Range |
|---|---|---|
| X1 | Production store capacity (mm) | 0–3000 |
| X2 | Intercatchment exchange coefficient (mm/day) | −10–10 |
| X3 | Routing store capacity (mm) | 0–1000 |
| X4 | Time constant of unit hydrograph (day) | 0–20 |
| X5 | Dimensionless weighting coefficient of the snowpack thermal state | 0–1 |
| X6 | Day-degree rate of melting (mm/(day·°C)) | 0–10 |
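As an illustration of how such calibration ranges might be used in practice, the sketch below encodes the X1–X6 ranges from the table as bounds, draws a random candidate parameter set, and checks that a set stays inside the ranges. The names `PARAM_BOUNDS`, `sample_parameters`, and `within_bounds` are hypothetical, and uniform sampling is an assumption made for this example rather than the calibration procedure of the system.

```python
import numpy as np

# Calibration ranges for X1-X6, copied from the table above: (lower, upper)
PARAM_BOUNDS = {
    "X1": (0.0, 3000.0),   # production store capacity (mm)
    "X2": (-10.0, 10.0),   # intercatchment exchange coefficient (mm/day)
    "X3": (0.0, 1000.0),   # routing store capacity (mm)
    "X4": (0.0, 20.0),     # time constant of unit hydrograph (day)
    "X5": (0.0, 1.0),      # snowpack thermal state weighting coefficient
    "X6": (0.0, 10.0),     # day-degree rate of melting (mm/(day*C))
}


def sample_parameters(bounds, rng):
    """Draw one candidate parameter set uniformly within the given bounds."""
    return {name: rng.uniform(lo, hi) for name, (lo, hi) in bounds.items()}


def within_bounds(params, bounds):
    """Check that every supplied parameter lies inside its calibration range."""
    return all(bounds[name][0] <= value <= bounds[name][1]
               for name, value in params.items())
```

A global optimizer would typically receive the same bounds as a list of `(lower, upper)` pairs, e.g. `list(PARAM_BOUNDS.values())`.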


© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ayzel, G. OpenForecast v2: Development and Benchmarking of the First National-Scale Operational Runoff Forecasting System in Russia. Hydrology 2021, 8, 3. https://doi.org/10.3390/hydrology8010003
