Abstract

Background: Supply chain performance measurement is an integral part of supply chain management today. It makes several critical contributions: it helps companies and supply chains identify potential problems and areas for improvement, evaluate the efficiency of processes, and enhance the health and success of their supply chains. The purpose of this study is to contribute to future research and practical applications by presenting a more standardized, comprehensive, and up-to-date measurement scale, developed from the performance measures of SCOR model version 13.0, for the era of disruptive technologies. Methods: The study was performed in seven stages, with samples of 227 companies for the pilot data and 452 companies for the main data. The stages comprise item generation and purification; exploratory factor analysis for the pilot study and the main study; confirmatory factor analysis for the main study; appraisal of convergent, discriminant, and nomological validity; and investigation of bias effects. Results: The scale was developed and validated as a five-factor, thirty-one-item structure. Conclusions: Certain key trends and indicators must be followed today to understand the landscape of future supply chains, and this measurement scale is closely aligned with them. Additionally, the findings confirm the contributions of disruptive technologies and the conceptual structure of supply chain management.

1. Introduction

The concept of the supply chain (SC) arose from the recognition that companies’ success depends on the interplay among raw materials, orders, information flow, workforce, money, and existing equipment and machinery [1], and from the shift from individual, organization-based management to an integrated management approach [2]. In today’s world, competition takes place between SCs [3,4]. Effective supply chain management (SCM), which comprises all activities related to the flow and transformation of goods from the raw material stage to the end user [5], makes many notable contributions to SCs, in particular enabling sustainable competitive advantage [6] and creating value for SC stakeholders [7], and it is critically important in the intensely competitive global business environment. Effective SCM delivers strategic and operational benefits to countries, regions, and certainly companies [8,9]. In light of these developments, analyzing and improving SCs has become vitally important and unavoidable [10,11].

The analysis and improvement of the SC are founded on performance measurement [12]. Performance measurement is described as “the process of quantifying effectiveness and efficiency of actions” [13]. Supply chain performance measurement (SCPM) is a set of measures used to assess the efficiency and effectiveness of the relationships and processes of an SC, spanning multiple organizational functions and companies, and it supports the regulation of the SC. It measures the efficiency of SC processes, provides information about the relationships in the SC, contributes to the effective use of resources [14,15], informs about the success and health of the SC by identifying potential problems and areas for improvement [16], and facilitates decision-making by supplying information to senior management and operations managers. It also enables effective planning and control in the SC [17] and contributes to operational excellence [18]. Moreover, it shows whether customer needs are met and helps identify waste and bottlenecks. It fosters more transparent cooperation and communication and provides a better understanding of processes in the SC [19]. Owing to these contributions, it is an integral part of SCM [20].

The main aim of SCPM is to understand how the SC system works and then to identify opportunities for supply chain performance (SCP) improvement; it is the foundation for improving SCP [21,22]. Although SCPM is critical for improving SCP and enhancing productivity and operational efficiency for SC stakeholders [23], extant studies have highlighted that many companies fail to tap the potential of their SCs, that is, to maximize SCP [24,25]. Two main aspects must be considered in SCPM: the determination of appropriate SCP measures and of an appropriate SCP measurement system [26,27]. SCM is an integrative philosophy that manages the entire flow in the SC [28]. SCP measures should therefore be comprehensive, measurable, grounded in the real world, and universal, and they should consider the SC as a whole [29,30]. Moreover, they should be consistent with organizational goals and allow comparison under different conditions [31]. The growing complexity and scale of SCs often complicate coordination and undermine performance. For this reason, it is vital to use a systematic approach to analyzing performance [32] and to determine performance measures accurately [10].

The Supply Chain Operations Reference (SCOR) model is the best-known systematic and balanced performance measurement system among the prevailing SCPM systems. It was introduced by the Supply Chain Council (SCC) in 1996 and is defined as a “systematic approach for identifying, evaluating and monitoring supply chain performance” [33]. It is accepted as a basis for performance measurement [34,35] and has been adopted by many companies since its launch. The model is also critical because it provides universally accepted, standard performance metrics: unlike SC strategies, SCP metrics are difficult for senior management to agree on [36]. Consequently, the model offers a common language for implementing, organizing, and deciding on SC procedures [37,38]. Its implementation contributes to faster assessment and improvement of SC performance, clear identification of performance gaps, analysis of the competitive basis, implementation of processes and systems, and structuring of the SC [39,40]. It is also critically important because it assists managers in making strategic decisions [41].

Despite these contributions, the SCOR model presents some difficulties in practice [42]. At the company level, one challenge is the need for automated data collection within the company; moreover, other companies in the industry must also have automated data collection for performance to be compared [43]. Researchers, for their part, cannot readily investigate companies’ performance or make inter-sectoral comparisons using this model’s measures. When investigating SCM operations and their interrelationships, they face difficulties such as time constraints, limited access to administrators, and data privacy. This situation limits future research in this field [44,45]. Measurement scales have therefore been used in the literature as data collection tools for measuring SCP, owing to time constraints, easier access to data, and faster measurement and comparison, and the resulting data are analyzed statistically [46].

Today, SCs seek to overcome seemingly insurmountable difficulties and realize their full potential by implementing innovative notions, policies, and strategies [47,48]. The theoretical aim of this study is to develop and validate an SCP scale that is based on the standardized SC performance structure and measures of the SCOR model and that addresses SCP more comprehensively than the extant scales in the literature. It also aims to adapt the SCP structure to the requirements of the era by measuring performance in terms of the usage levels of disruptive technologies (DTs), and to determine the contributions of these technologies. The practical aim of this study is to remove the need to calculate the SCOR model’s performance measures separately, enabling faster and more practical performance measurement. In this scale, the performance attributes of SCOR model version 13.0 (v13.0) [49,50] were defined as factor structures (sub-dimensions), and the SCOR v13.0 performance measures were expanded through an exhaustive literature review, expert group interviews, and the effects of DTs within the scope of the study. The effect of DTs was thus considered both when generating the item pool and when taking measurements.

The remainder of this paper is organized as follows: Section 2 presents the theoretical background of this study, covering widely used performance measurement systems and the scales developed in the SCP field. Section 3 describes the research methodology and the quantitative findings on which the study is based; the scale developed and validated there is named the “Supply Chain Performance Scale as SCOR-Based (SCPSS)”. Section 4 discusses the findings and presents the theoretical contributions, managerial implications, limitations of the study, and suggestions for further research. Section 5 outlines the most significant insights of this study.

2. Theoretical Background

Nowadays, there are various SCPM systems and measurement scales developed by academicians and practitioners [51]. In this part of the study, the prevailing systems and measurement scales for SCP in the extant literature have been evaluated.

2.1. Prevailing SCPM Systems

The scope of performance management has expanded from a single company to measuring the performance of the whole SC, owing to the growing significance of SCM [52]. SCP can be measured in various ways [53]. Today, the most widespread models in SCPM are the balanced scorecard (BSC), the supply chain operations reference (SCOR) model, the benchmarking method, and key performance indicators (KPIs) [54]. The roots of performance improvement can be traced to total quality management, just-in-time systems, and Kaizen [55,56,57,58]. Later, the sales, financial, time, and flexibility dimensions of performance were also taken into consideration, and different frameworks and performance measurement models have been introduced over time [59].

The Balanced Scorecard (BSC) is a model developed to balance performance measures. It achieves this balance by avoiding measures that capture one dimension well while neglecting another, and by minimizing negative competition between individuals and functions. It helps identify performance factors, define an action plan, implement strategies effectively, and learn from the cyclical process [60]. The BSC translates a company’s goals into a critical set of performance measures organized around key dimensions, or perspectives, and enables rapid and comprehensive performance assessment [61]. Additionally, this system reduces confusion in management and enhances clarity [62]. Although it was initially developed to measure the business performance of a single company, its scope has since expanded to measuring SCP. It is not only a performance measurement system but also a strategic approach that turns vision and strategies into action [63]. It consists of four dimensions (or perspectives), namely learning and growth, internal operations, customer, and financial, with cause-and-effect relationships between measures and dimensions; for this reason, they are evaluated together [64]. By including both financial and non-financial measures, the BSC incorporates the human dimension into performance measurement [65,66].

The SCOR model is the most widely used SCPM system and is intended to be the industry standard. It is an instrument for mapping, benchmarking, and improving SC operations. Its role and significance are further enhanced because it offers a practical approach to determining process decisions and performance measures in the SC [67], as well as an effective means for the analysis, design, and implementation of the SC [68,69,70]. It covers all customer interactions, physical material processes, and market interactions in SCs. SCP is analyzed through performance attributes, which are measured by metrics [71]. The model includes hundreds of SCP measures (or metrics) based on its key processes [72]. Performance measures in the SCOR model are organized into three basic levels [41,73]. Level-1 measures provide information about the general health of the SC; they are also called strategic measures or key performance indicators (KPIs) and support strategic goals. Level-2 measures are used to predict or diagnose performance in Level-1 measures [74]: if measuring Level-1 measures reveals a performance gap, they help identify its root causes. Level-3 measures, in turn, are diagnostic for Level-2 measures [75]. Performance measurement systems must remain in tune with a dynamic environment and changing strategies [76,77]. Accordingly, SCOR performance measures are regularly updated in light of needs and developments in SCM [78].
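The diagnostic relationship between levels described above can be sketched as a small tree walk: start at a Level-1 measure and descend only into lower-level measures that also miss their targets. This is a minimal illustration under stated assumptions, not SCOR tooling; the `Metric` class and `diagnose` function are hypothetical, the metric names are loosely modeled on SCOR reliability metrics, and every value and target is invented for the example.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Metric:
    """One node in a hypothetical Level-1 / Level-2 / Level-3 metric hierarchy."""
    name: str
    value: float
    target: float
    higher_is_better: bool = True
    children: list[Metric] = field(default_factory=list)

    def has_gap(self) -> bool:
        # A performance gap exists when the observed value misses its target.
        if self.higher_is_better:
            return self.value < self.target
        return self.value > self.target


def diagnose(metric: Metric) -> list[str]:
    """Drill down from a metric and return the lowest-level metrics that show a gap."""
    if not metric.has_gap():
        return []
    causes = [name for child in metric.children for name in diagnose(child)]
    # If no lower-level metric explains the gap, report this metric itself.
    return causes or [metric.name]


# Hypothetical reliability example: all values and targets are illustrative only.
perfect_order = Metric("Perfect order fulfillment", 0.88, 0.95, children=[
    Metric("Orders delivered in full", 0.97, 0.95),
    Metric("Delivery performance to customer commit date", 0.90, 0.96),
    Metric("Documentation accuracy", 0.99, 0.98),
    Metric("Perfect condition", 0.99, 0.98),
])

print(diagnose(perfect_order))  # ['Delivery performance to customer commit date']
```

Only branches that themselves show a gap are explored, which mirrors the idea that lower-level measures are consulted to explain a gap found at the level above.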

The benchmarking method is a performance measurement and comparison process consisting of practices and measures that allow a company to compare its performance with that of others anywhere in the world and that support actions to improve it [79]. This system allows problems and gaps in performance to be identified and improvements to be realized [80,81]. It has seven types: general, internal, strategic, process, performance, competitive, and functional benchmarking [82]. Using this system in SCM allows progress towards maturity to be measured and best practices for the business to be identified. Furthermore, it contributes to the implementation of a maturity model and standards through comparison with the best companies outside the industry. Consequently, it promotes best practices and improves processes [83]. In this system, the benchmarking process consists of five steps: determining the process to be benchmarked, identifying who performs it best, observing and analyzing that process, analyzing performance gaps, and making improvements based on these gaps [84].

The key performance indicator (KPI) approach is a quantitative system in which performance measures are defined and SCP is then measured against them [85]. Each measure has two aspects: a magnitude and a unit of measure [86]. If performance measurement is industrially focused, time, budget, error rate, efficiency, first-time accuracy, and safety are significant considerations. Measures in SCM focus on customer service, flexibility, quality, cost, time, innovation, supplier delivery performance, inventory, and logistics costs [87]. KPIs reveal the gap between planning and execution and make it possible to identify potential problems accurately [14].

2.2. Extant SCP Scales

Measurement scales that enable SCP measures to be used in empirical research are rare [88], although many studies in the academic literature identify such measures [2,19,89,90]. Among the scales developed in this field, Lai et al. (2002) [91] carried out a scale development study, named “Supply Chain Performance Scale”, for the transportation logistics industry. In this scale, the SCP structure is defined as three-dimensional, and each dimension consists of two sub-dimensions (sub-factors or sub-scales). The conceptual background of the study is based on the SCOR model and various established performance measures. It is a comprehensive twenty-six-item scale, but its applicability across all industries should be investigated thoroughly.

Green et al. (2008) [92] developed a scale named “Supply Chain Performance Scale” and defined the SCP structure as one-dimensional. The scale consists of eleven items and has construct, convergent, discriminant, and predictive validity. However, in terms of content, its items focus heavily on delivery performance, and it does not consider SCP in terms of all SC processes and performance attributes. Sindhuja (2014) [93] developed a “Supply Chain Performance Scale” based on the framework of the SCOR model version of that time and used this scale in the same study. Its items were expanded by drawing on both the academic literature and extant scale studies. The scale comprises five sub-dimensions and twenty items, with supplier performance treated as a separate sub-dimension. Convergent and discriminant validity were not tested statistically for this scale.

Gawankar et al. (2016) [94] argued that the retail industry in India has metrics that should be considered alongside traditional performance metrics, and accordingly developed a scale called “Supply Chain Performance Metrics (SCPMS)”. According to this study, the traditional measures are efficiency, quality, product innovation, and market performance. This scale incorporates current SC concepts (e.g., flexibility and integration) into the SCP structure, making it more appropriate for modern SCs. Moreover, the scale is comprehensive, consisting of eight sub-dimensions and forty-three items, and it has content and construct validity.

Rana and Sharma (2019) [95], noting the lack of consensus on SCP measures, performed an implementation in the Indian pharmaceutical industry and developed the “Supply Chain Performance Scale”. In terms of the number and content of its items, this scale is more comprehensive than the scales developed before it, and it has convergent and discriminant validity. However, although it is intended as a general SCP scale, its items are designed for the pharmaceutical industry; the relevance and usability of the items in other industries should therefore be investigated thoroughly. As these studies show, the long-standing interest in SCP continues to fuel further research, and the SCP structure has been handled in various ways in line with the dynamic nature of SCs and the perspectives of the researchers. A summary of the scale development studies carried out in this field is given in Table 1:

Table 1.

The summary of extant SCP scales.

3. Materials and Methods

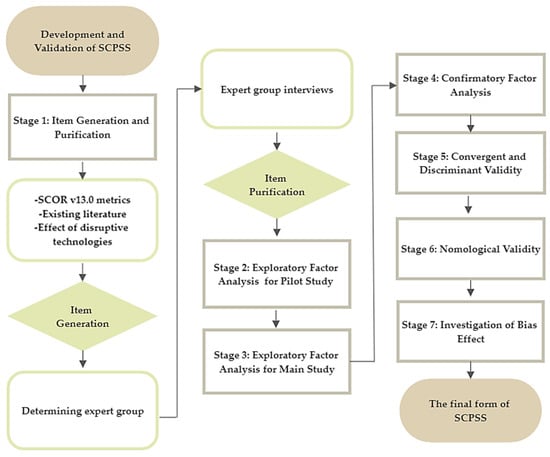

This study designed a seven-stage model to measure, validate, and establish the structure and predictability of SCP. Stage 1 focused on item generation and purification. In Stage 2, a draft questionnaire was prepared, the pilot study was performed, and the item pool was finalized for the main study. In Stage 3, exploratory factor analysis (EFA) was executed with the solution constrained to five factors, in line with the SCOR v13.0 dimensions, to make an initial determination of dimensionality. Confirmatory factor analysis (CFA) was carried out in Stage 4 to confirm this dimensionality. Convergent and discriminant validity were appraised in Stage 5, and nomological validity was evaluated in Stage 6. Bias effects were investigated in Stage 7. The macro view of the research process is illustrated in Figure 1 below:

Figure 1.

The macro view of research process.

In this study, data were collected face-to-face and by e-mail after the necessary ethics committee approvals were obtained. The samples for the pilot study and the main study comprise 227 and 452 companies, respectively. The industrial sectors fall into six groups: retail and FMCG; transportation, distribution, and warehousing; e-trade; service; manufacturing; and import-export. The study therefore targeted a large population, and the main study sample exceeds the sample size of 384 recommended by Sekaran and Bougie (2016) [96] for a population of 1,000,000. Measurement was based on the change brought about by the use of DTs over a one-year period. SPSS v21 was used for EFA; LISREL 8.51 for CFA, nomological validity, and the investigation of bias effects; and Office 365 Excel for convergent and discriminant validity. The questionnaire used a five-point Likert scale ranging from 1 (I totally disagree) to 5 (I totally agree).
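The 384 figure can be reproduced with a short calculation, assuming that the recommendation in Sekaran and Bougie follows the Krejcie and Morgan (1970) formula with 95% confidence (a chi-square of 3.841 at 1 df), a 5% margin of error, and an assumed proportion of 0.5. The function name below is hypothetical.

```python
def required_sample_size(population: int, chi2_1df: float = 3.841,
                         p: float = 0.5, d: float = 0.05) -> int:
    """Krejcie and Morgan (1970) sample size for a finite population.

    chi2_1df: chi-square value for 1 degree of freedom at 95% confidence.
    p: assumed population proportion (0.5 gives the most conservative size).
    d: margin of error.
    """
    numerator = chi2_1df * population * p * (1 - p)
    denominator = d ** 2 * (population - 1) + chi2_1df * p * (1 - p)
    return round(numerator / denominator)


n_required = required_sample_size(1_000_000)
print(n_required)          # 384
print(452 >= n_required)   # True: the main study sample exceeds it
```

For very large populations the result levels off near 384, which is why the same figure is usually quoted for any population of roughly a million or more.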

3.1. Stage 1: Item Generation and Purification

Item generation was performed by considering the Level-1 and Level-2 performance metrics of SCOR model v13.0, the current academic literature, and the effects of DTs on SCP. Version 13.0 was the most current version of the SCOR model at the time of this study and was therefore adopted. A list of experts to be interviewed was drawn up to discuss the clarity of the items and whether any SCP measures were missing or should be added. In total, nine experts were identified: five industry professionals working in this field and four academics from Turkey who conduct research in this field. The industry professionals were selected from companies’ SCM and digital transformation departments. The academics, who are experts in SCM and management information systems, work as faculty members in these fields. The list of interviewed experts is included in Appendix A of this study. The interviews were conducted face-to-face or online through the Zoom platform, and content validity was established through these expert interviews.

The SCOR model provides standard and up-to-date performance metrics and defines the SC structure from all aspects. As stated previously, the sub-dimensions of the scale are based on SCOR v13.0 performance attributes, and the scale items are based on SCOR v13.0 performance metrics, the extant literature, and the effects of DTs on SCP. This version includes five performance attributes: reliability, responsiveness, agility, cost, and asset management [47,48]. Performance metrics were first generated and then grouped according to these attributes to constitute the item pool for the pilot study. The Level-1 and Level-2 measures of SCOR model v13.0 are given in Appendix A of this study, and the generated items are given in Appendix B. The next subsection presents the findings of the pilot study, which preceded the main study.

3.2. Stage 2: Exploratory Factor Analysis for Pilot Study

Pilot studies allow researchers to explore the research subject, obtain information about the feasibility of the study, and determine a roadmap before launching the main study [97]. The literature gives different values for the sample size required for a pilot study. Treece and Treece (1982) emphasized that a sample corresponding to 10% of the sample required for the main study is adequate [98]. In this study, a pilot study was conducted with data from 227 different companies, well above 10% of the targeted main sample. The results of the reliability analysis of the alternative models for the pilot study, and of the sub-scales and factor analysis obtained through EFA, are presented in Table 2 and Table 3, respectively:

Table 2.

The results of reliability analyses for the Pilot Study.

Table 3.

The results of sub-scales and factor analyses for the Pilot Study.

Cronbach’s alpha tests the internal consistency of a scale and its sub-scales. Its value should be at least 0.70 for existing scales and at least 0.60 for newly developed scales [99,100]. As seen in Table 2, these values are high for the pilot study. Before the EFA findings for the pilot study were evaluated, the KMO measure and Bartlett’s Test of Sphericity were examined to determine whether the data are suitable for factor analysis. The KMO value is expected to be above 0.60, and Bartlett’s Test is expected to be statistically significant (p < 0.05) [101,102]. The pilot data meet these thresholds and are therefore appropriate for factor analysis.
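For readers who want to check the reliability criterion outside SPSS, the standard formula is alpha = k / (k - 1) * (1 - sum of item variances / variance of the summed score), where k is the number of items. The sketch below is a minimal illustration under that textbook definition; the function name and the tiny response matrix are hypothetical and are not the study's data.

```python
import numpy as np


def cronbach_alpha(scores: np.ndarray) -> float:
    """Cronbach's alpha for a respondents x items matrix of Likert scores."""
    scores = np.asarray(scores, dtype=float)
    k = scores.shape[1]                             # number of items
    item_vars = scores.var(axis=0, ddof=1)          # variance of each item
    total_var = scores.sum(axis=1).var(ddof=1)      # variance of the summed score
    return k / (k - 1) * (1 - item_vars.sum() / total_var)


# Hypothetical responses of four respondents to a two-item sub-scale.
responses = np.array([[1, 2], [2, 1], [3, 4], [4, 3]])
alpha = cronbach_alpha(responses)
print(round(alpha, 2))   # 0.75
print(alpha >= 0.60)     # True: meets the threshold for a newly developed scale
```

Applied per sub-scale, the same function gives the sub-scale alphas; applied to all items at once, it gives the alpha of the whole scale.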

EFA is a type of factor analysis that explains the correlations between observed variables by means of fewer latent variables, or factors [103]. It provides an initial definition of the sub-dimensions of the structure, in other words, the factors [104,105]. The researcher determines the rotation and extraction methods when performing the analysis. Oblique rotation is used if the sub-dimensions are highly correlated with each other [106,107]; because constructs in the social sciences are usually correlated, this rotation is commonly used [108]. In this study, the correlations between the items were examined to understand the relationships between the constructs, and since most of the correlations were above 0.30, direct oblimin, a type of oblique rotation, was used. Principal axis factoring, an effective method for revealing weak factors, was used as the extraction method [109].
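The two checks described above, the share of inter-item correlations above 0.30 and principal axis factoring (PAF), can be sketched in NumPy. This is a minimal sketch, not SPSS's implementation: the helper names are hypothetical, the PAF loop uses the common textbook scheme (squared multiple correlations as starting communalities, then iterating on the reduced correlation matrix), and the direct oblimin rotation used in the study is omitted, so the loadings returned are unrotated.

```python
import numpy as np


def share_above(r: np.ndarray, cutoff: float = 0.30) -> float:
    """Share of distinct item pairs whose absolute correlation exceeds the cutoff."""
    upper = np.abs(r[np.triu_indices_from(r, k=1)])
    return float((upper > cutoff).mean())


def principal_axis_factoring(r: np.ndarray, n_factors: int,
                             max_iter: int = 500, tol: float = 1e-8):
    """Unrotated principal axis factoring of a correlation matrix."""
    r = np.asarray(r, dtype=float)
    # Start from squared multiple correlations as communality estimates.
    h2 = 1 - 1 / np.diag(np.linalg.inv(r))
    for _ in range(max_iter):
        reduced = r.copy()
        np.fill_diagonal(reduced, h2)               # communalities on the diagonal
        eigvals, eigvecs = np.linalg.eigh(reduced)
        top = np.argsort(eigvals)[::-1][:n_factors]
        loadings = eigvecs[:, top] * np.sqrt(np.clip(eigvals[top], 0, None))
        new_h2 = (loadings ** 2).sum(axis=1)        # updated communalities
        converged = np.max(np.abs(new_h2 - h2)) < tol
        h2 = new_h2
        if converged:
            break
    return loadings, h2


# Hypothetical one-factor correlation matrix built from known loadings.
lam = np.array([0.8, 0.7, 0.6, 0.5])
r = np.outer(lam, lam)
np.fill_diagonal(r, 1.0)
print(share_above(r))
loadings, communalities = principal_axis_factoring(r, n_factors=1)
print(np.round(np.abs(loadings[:, 0]), 3))   # recovers [0.8 0.7 0.6 0.5]
```

Because PAF places communalities rather than ones on the diagonal, it models only shared variance, which is what makes it suitable for detecting weaker common factors.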

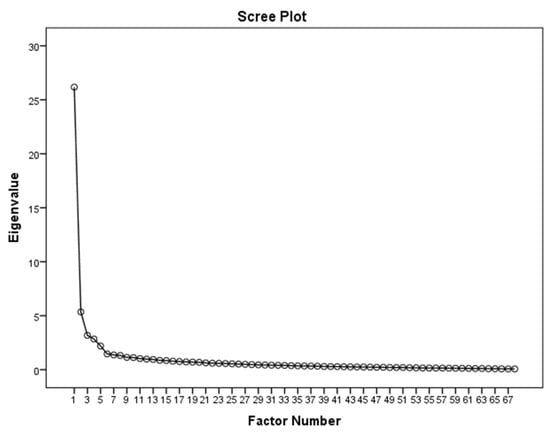

The eigenvalue indicates the amount of variance explained by a factor. According to the eigenvalue rule, factors with an eigenvalue lower than 1.0 should not be retained [110]. The scree plot, which is based on eigenvalues, helps determine the correct number of factors by showing how the eigenvalue changes across successive factors [111]. Figure 2 presents the eigenvalues of the factors. The eigenvalue is approximately 27.00 for the first factor and approximately 3.00 for the third. Because the study is based on SCOR v13.0, the solution was constrained to five factors on theoretical grounds; the eigenvalue of the fifth factor is approximately 2.50. The three five-factor structures given in Table 2 explain 58.436%, 60.732%, and 61.137% of the total variance, respectively. Since the scree plot flattens after the fifth factor, the data can be said to point to a five-factor structure.
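The eigenvalue rule and the percentage of variance explained can be illustrated with a short NumPy sketch. It assumes the eigenvalues come from the full item correlation matrix, as in a typical scree plot; percentages reported after principal axis extraction are based on the reduced matrix and will generally be lower. The helper name and the four-item matrix are hypothetical.

```python
import numpy as np


def eigen_summary(r: np.ndarray):
    """Eigenvalues of a correlation matrix, % variance, cumulative %, Kaiser count."""
    eigvals = np.sort(np.linalg.eigvalsh(np.asarray(r, dtype=float)))[::-1]
    pct = 100 * eigvals / eigvals.sum()     # the sum equals the number of items
    cumulative = np.cumsum(pct)
    kaiser = int((eigvals > 1.0).sum())     # factors retained by the eigenvalue rule
    return eigvals, pct, cumulative, kaiser


# Hypothetical four-item matrix with all inter-item correlations equal to 0.5.
r = np.full((4, 4), 0.5)
np.fill_diagonal(r, 1.0)
eigvals, pct, cumulative, kaiser = eigen_summary(r)
print(np.round(eigvals, 3))   # [2.5 0.5 0.5 0.5]
print(kaiser)                 # 1
```

Plotting `eigvals` against factor number gives the scree plot; the "elbow" where the curve flattens is the visual counterpart of the numeric rule.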

Figure 2.

Scree plot for pilot study.

Factor loadings of 0.50 or higher are considered practically significant [112], and standardized loadings should have a lower limit of 0.50 or 0.60 for newly developed scales [113]. Based on the EFA, six items with cross-loading problems were excluded from the item pool, yielding the structure in Table 4. The loadings for this five-factor model of sixty-eight items ranged from 0.324 to 0.843. The items with loadings below 0.50 were then removed from the item pool, yielding the structure in Table 5; the loadings for this five-factor model of fifty-seven items ranged from 0.468 to 0.843. The same process was applied to this structure, and removing the items with loadings below the threshold yielded the structure in Table 6, whose loadings for the five-factor model of fifty-five items ranged from 0.505 to 0.841.
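One pass of the purification rule above can be sketched as follows. The 0.50 primary-loading threshold comes from the text; the cross-loading criterion (an item loading at or above 0.32 on two or more factors) is an assumption chosen for illustration, since the study does not state its exact rule. Item names and loadings are hypothetical. In the study the EFA was re-run after each removal, because loadings change once items are dropped, so this function represents a single iteration only.

```python
import numpy as np


def flag_items(loadings, items, min_loading=0.50, cross_cutoff=0.32):
    """Split items into kept, cross-loading, and low-loading groups.

    cross_cutoff is an assumed rule: an item loading at or above it on two or
    more factors is treated as cross-loading.
    """
    abs_load = np.abs(np.asarray(loadings, dtype=float))
    kept, cross, low = [], [], []
    for item, row in zip(items, abs_load):
        if (row >= cross_cutoff).sum() >= 2:
            cross.append(item)          # loads substantially on several factors
        elif row.max() < min_loading:
            low.append(item)            # primary loading below the threshold
        else:
            kept.append(item)
    return kept, cross, low


# Hypothetical two-factor pattern matrix for four items.
names = ["A1", "A2", "B1", "B2"]
pattern = [[0.72, 0.10], [0.45, 0.05], [0.40, 0.38], [0.08, 0.81]]
print(flag_items(pattern, names))   # (['A1', 'B2'], ['B1'], ['A2'])
```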

Table 4.

The factor loadings of Model 1 for the Pilot Study.

Table 5.

The factor loadings of Model 2 for the Pilot Study.

Table 6.

The factor loadings of Model 3 for the Pilot Study.

3.3. Stage 3: Exploratory Factor Analysis for Main Study

Before the EFA for the main study was performed, the demographic characteristics of the 452 respondents were examined. Because of participants’ data privacy concerns, these questions could be left blank. The demographic characteristics of the participants are presented in Table 7, where unanswered questions are indicated as “no response”.

Table 7.

Demographic Characteristics of the Participants.

A preliminary version of the scale was developed, and alternative factor structures were examined in the pilot study [114]. Items with cross-loading problems or factor loadings below 0.50 [113] were removed, the item pool was updated, and the remaining items were renumbered. The item pool for the main study is presented in Table 8 below:

Table 8.

The main study item pool.

On the theoretical grounds that the scale is based on SCOR v13.0, all alternative models for the main study were obtained by constraining the structure to five factors. The conceptual structures that make up the sub-dimensions (factors) of the scale are as follows. Reliability (RL) is the ability to perform tasks as expected and focuses on the predictability of the outcome of a process. Responsiveness (RV) is the speed at which tasks are performed and the speed at which an SC delivers products to customers. Agility (AG) is the ability to respond to external influences and market changes. Cost (C) is the performance dimension covering the costs associated with operating SC processes. Asset management (ASM) refers to the ability to use assets efficiently [49].

When the EFA was performed on the item pool presented in Table 6, items RV12, RV13, C1, and C2 were removed due to cross-loading problems, yielding Model 1. Next, six items with factor loadings below 0.50 (RL1, RL12, AS3, AS1, RV1, and RV11) were excluded from this structure, yielding Model 2. One item in Model 2 (RV2) had a loading below 0.50; the analysis was repeated without it, yielding Model 3. The results of the reliability analysis of the structures and of the sub-scales and factor analysis are given in Table 9 and Table 10, and their factor loadings are presented in Table 11, Table 12, and Table 13:

Table 9.

The results of reliability analyses.

Table 10.

The results of sub-scales and factor analyses.

Table 11.

The factor loadings of Model 1.

Table 12.

The factor loadings of Model 2.

Table 13.

The factor loadings of Model 3.

Cronbach’s alpha values are above the threshold (α > 0.60), indicating high internal consistency for the sub-scales (Table 9). Before the EFA findings for the main study were evaluated, the KMO measure and Bartlett’s Test of Sphericity were examined to determine whether the data are suitable for factor analysis. The KMO value is expected to be above 0.60, and Bartlett’s Test is expected to be statistically significant (p < 0.05) [101,102]. The main study data meet these thresholds and are therefore appropriate for factor analysis.
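Both suitability checks can be computed from a respondents by items matrix, which may help readers reproduce them outside SPSS. The sketch uses the standard formulas: Bartlett's statistic is -[(n - 1) - (2p + 5) / 6] * ln|R| with p(p - 1) / 2 degrees of freedom, and the overall KMO compares squared correlations with squared partial correlations derived from the inverse correlation matrix. It assumes NumPy and SciPy are available; the function names and the simulated one-factor data are hypothetical.

```python
import numpy as np
from scipy.stats import chi2


def bartlett_sphericity(data: np.ndarray):
    """Bartlett's test that the item correlation matrix is an identity matrix."""
    n, p = data.shape
    r = np.corrcoef(data, rowvar=False)
    _, logdet = np.linalg.slogdet(r)                 # ln|R|, numerically stable
    statistic = -(n - 1 - (2 * p + 5) / 6) * logdet
    df = p * (p - 1) / 2
    return statistic, df, chi2.sf(statistic, df)


def kmo(data: np.ndarray) -> float:
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy."""
    r = np.corrcoef(data, rowvar=False)
    inv = np.linalg.inv(r)
    # Partial correlations obtained from the inverse correlation matrix.
    partial = -inv / np.sqrt(np.outer(np.diag(inv), np.diag(inv)))
    off_diag = ~np.eye(r.shape[0], dtype=bool)
    r2 = (r[off_diag] ** 2).sum()
    partial2 = (partial[off_diag] ** 2).sum()
    return r2 / (r2 + partial2)


# Hypothetical data: 500 respondents, 6 items driven by one common factor.
rng = np.random.default_rng(0)
factor = rng.normal(size=(500, 1))
data = 0.8 * factor + 0.6 * rng.normal(size=(500, 6))

stat, df, p_value = bartlett_sphericity(data)
print(f"KMO = {kmo(data):.2f}, Bartlett chi2 = {stat:.1f}, df = {df:.0f}, p = {p_value:.3g}")
```

A high KMO indicates that correlations are largely explained by common factors rather than pairwise relationships, while a significant Bartlett test only rules out that the items are uncorrelated.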

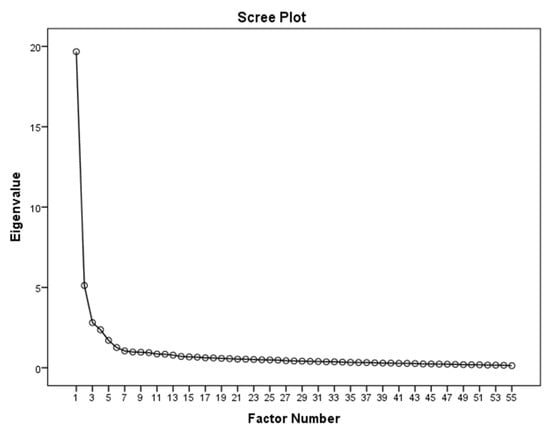

The three structures, constrained to five factors, explain 58.538%, 61.084%, and 61.487% of the total variance, respectively (Table 10). According to the scree plot for the main study in Figure 3 below, the eigenvalue is approximately 19.00 for the first factor, approximately 3.00 for the third, and approximately 2.00 for the fifth. Since the scree plot flattens after the fifth factor, the data can be said to point to a five-factor structure, supporting the theoretical background of the study.

Figure 3.

The scree plot for main study.

3.4. Stage 4: Confirmatory Factor Analysis

CFA confirms the construct revealed by EFA or confirms structures previously specified on theoretical grounds [155,156]; it provides statistical confirmation [157]. Since this scale is based on the SCOR v13.0 model, its sub-dimensions and the items belonging to each sub-dimension are specified in advance. Nevertheless, EFA was carried out first, and the resulting structure was then confirmed, to make the study more rigorous and to investigate the compatibility of the data with the theoretical structure.

After the alternative structures obtained in the EFA were verified, the model obtained by removing items with factor loadings below 0.70 was also confirmed, to avoid problems with convergent validity, which is evaluated in the next stage. As with the other models, this structure was constrained to five factors and named Alternative Model 4. CFA output offers several suggestions (modification indices) for improving a model’s fit index values. One of them is to correlate the error terms of items that belong to the same factor and are highly correlated with each other. Such a modification should be made only when it is theoretically justified. Accordingly, the following items were correlated in the alternative models. The related items and the chi-square reductions these correlations yield are given in Table 14 below:
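The 0.70 cut-off can be motivated with the commonly cited convergent-validity criteria, average variance extracted (AVE) of at least 0.50 and composite reliability (CR) of at least 0.70: a standardized loading of 0.70 corresponds to about 49% of an item's variance explained by its factor. The sketch below uses the usual formulas on standardized loadings, with 1 - loading squared as each item's error variance; the function names and loadings are hypothetical, and the study performed these Stage 5 calculations in Excel.

```python
def ave(loadings):
    """Average variance extracted from standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """Composite reliability, using 1 - loading^2 as each item's error variance."""
    total = sum(loadings)
    error = sum(1 - l ** 2 for l in loadings)
    return total ** 2 / (total ** 2 + error)


# Hypothetical factor whose four items all load exactly at 0.70.
print(round(ave([0.70] * 4), 2))                     # 0.49
print(round(composite_reliability([0.70] * 4), 3))   # 0.794
```

A factor whose items all sit exactly at 0.70 lands just under the AVE criterion, so keeping items at or somewhat above 0.70 makes an AVE of 0.50 attainable while CR stays comfortably above 0.70.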

Table 14.

Correlated items and chi-square decreases.

Cost of goods sold (COGS) is the total cost of purchasing raw materials and producing goods, and includes both direct costs (e.g., materials, labor) and indirect costs [158,159]. The distribution of goods or services among company activities is significant [160]. Distribution costs include the costs related to receiving, storing, and transporting goods [161]. C7 and C8 were correlated in the indicated models, since the distribution of raw materials and other materials to warehouses or factories for production directly affects COGS. Negotiating with suppliers is part of the purchasing function [162], so the cycle time for selecting and negotiating with suppliers affects the purchasing cycle time. RV1 and RV2 were correlated for this theoretical reason. Since the cost of purchased materials affects the unit production cost, C3 and C5 were correlated in the relevant models [163].

As delivery accuracy increases, delivery performance improves [164]; therefore, RL8 and RL9 were correlated. Working capital can be defined as the ability to pay debts as they fall due, and cash and working capital are used synonymously in some sources [165]. The cash conversion cycle (CCC) is a measure of working capital and shows how well it is managed [166]. Accordingly, AS8 and AS9, which were expected to be highly correlated, were correlated in Model 4. Storage cost also affects the unit production cost [167]; hence, C4 and C5 were correlated in the related model.
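For reference, the cash conversion cycle can be computed from the three Level-2 components that Table A2 lists under Cash-to-Cash Cycle Time. The minimal Python sketch below assumes the common definition CCC = days sales outstanding + inventory days of supply - days payable outstanding; the function name and all input figures are hypothetical and purely illustrative.

```python
def cash_conversion_cycle(dso: float, inventory_days: float, dpo: float) -> float:
    """Cash-to-cash cycle time in days (assumed definition: DSO + inventory days - DPO)."""
    return dso + inventory_days - dpo


# Hypothetical company figures, in days (illustrative only, not study data).
days_sales_outstanding = 45.0
inventory_days_of_supply = 60.0
days_payable_outstanding = 50.0

ccc = cash_conversion_cycle(
    days_sales_outstanding, inventory_days_of_supply, days_payable_outstanding
)
print(f"Cash conversion cycle: {ccc:.0f} days")  # 55 days
```

A shorter cycle means that cash is tied up in working capital for fewer days, which is why the CCC and return on working capital items were expected to move together.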

Model fit indices for these four structures are given in Table 15 below. The χ2/df, RMSEA, and SRMR indices are at acceptable levels for all models. Alternative Models 2, 3, and 4 also have an acceptable CFI, and Alternative Model 4 is additionally acceptable in terms of the NFI and AGFI indices. Because the four alternative models contain different sets of items, they are not nested, so the chi-square difference test was not carried out. Instead, the models were compared on their fit indices, and Alternative Model 4, which has better fit index values than the other alternatives, was selected as the optimal model.
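Several of the indices compared in Table 15 are simple functions of the model chi-square, the baseline (independence) model chi-square, their degrees of freedom, and the sample size. The sketch below uses the standard textbook formulas; it assumes the (N - 1) convention for RMSEA (some programs use N), and all chi-square inputs are hypothetical. SRMR and AGFI need the residual and implied covariance matrices, so they are not computed here.

```python
import math


def chi2_df_ratio(chi2: float, df: int) -> float:
    """Normed chi-square: model chi-square divided by its degrees of freedom."""
    return chi2 / df


def rmsea(chi2: float, df: int, n: int) -> float:
    """Point estimate of RMSEA, assuming the (N - 1) convention."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def cfi(chi2_m: float, df_m: int, chi2_b: float, df_b: int) -> float:
    """Comparative fit index of model (m) relative to the baseline model (b)."""
    d_model = max(chi2_m - df_m, 0.0)
    d_base = max(chi2_b - df_b, d_model)
    return 1.0 if d_base == 0 else 1.0 - d_model / d_base


def nfi(chi2_m: float, chi2_b: float) -> float:
    """Normed fit index: proportional chi-square reduction relative to the baseline."""
    return (chi2_b - chi2_m) / chi2_b


# Hypothetical chi-square values; n = 452 matches the main-study sample size.
chi2_m, df_m, chi2_b, df_b, n = 200.0, 100, 2100.0, 120, 452
print(f"chi2/df = {chi2_df_ratio(chi2_m, df_m):.2f}")
print(f"RMSEA   = {rmsea(chi2_m, df_m, n):.3f}")
print(f"CFI     = {cfi(chi2_m, df_m, chi2_b, df_b):.3f}")
print(f"NFI     = {nfi(chi2_m, chi2_b):.3f}")
```

Cut-off values for these indices vary across sources, so the thresholds applied in Table 15 should be taken from the references cited there rather than from this sketch.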

Table 15.

The comparison of alternative models.

The t-values of the loadings are examined to check whether the factor loadings are statistically significant. The threshold for t-values is 1.96 at the 0.05 significance level [169,170]. The factor loadings, t-values, and R2 values of the items are given in Table 16 below. As the table shows, all t-values of the loadings are statistically significant.
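The quantities in Table 16 follow from two simple relations: a loading's t-value (critical ratio) is its unstandardized estimate divided by its standard error, and, for an item loading on a single factor, R2 equals the squared standardized loading. The sketch below assumes these standard relations; the function names and item values are hypothetical.

```python
def critical_ratio(estimate: float, std_error: float) -> float:
    """t-value (critical ratio): unstandardized loading divided by its standard error."""
    return estimate / std_error


def indicator_r_squared(std_loading: float) -> float:
    """Item variance explained by its factor, assuming the item loads on one factor only."""
    return std_loading ** 2


T_CRITICAL = 1.96  # two-tailed threshold at the 0.05 significance level

# Hypothetical values for a single item (not taken from Table 16).
t = critical_ratio(estimate=0.90, std_error=0.06)
r2 = indicator_r_squared(std_loading=0.75)
print(f"t = {t:.2f}, significant: {abs(t) > T_CRITICAL}, R2 = {r2:.4f}")
```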

Table 16.

Factor loading, t-values, and R2 for items.

3.5. Stage 5: Convergent and Discriminant Validity

Convergent validity shows that the observed variables represent the same structure, measure a single conceptual structure, and are related to each other [171]. Average variance extracted (AVE), composite reliability (CR), and factor loadings are the criteria considered for this validity [172]. The AVE value must be higher than or equal to 0.50 [173]. The threshold value for CR is 0.60 [174], and the closer it is to 1.00, the better [100]. Factor loadings indicate how strongly the items are related to the factor, which is called the latent structure [105,170]. Loadings are expected to be higher than or equal to 0.708 to ensure convergent validity, which also establishes indicator reliability [172]. Lastly, the Cronbach's Alpha values of the factors are examined; if they are higher than the threshold value of 0.70, internal consistency reliability is ensured [172,175,176]. The scale has convergent validity because it fulfills all of these criteria. The factor loading values are given in Table 16 above, and the other values are given in Table 17 below:
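The three statistics in Table 17 can be computed as follows. This is a minimal sketch under common assumptions: AVE is the mean squared standardized loading, CR is (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances) with each error variance taken as 1 - loading^2, and Cronbach's alpha uses sample variances of the item scores. The loadings and Likert responses below are hypothetical.

```python
from statistics import variance


def average_variance_extracted(loadings):
    """Mean of the squared standardized loadings of one factor."""
    return sum(lam ** 2 for lam in loadings) / len(loadings)


def composite_reliability(loadings):
    """(sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    squared_sum = sum(loadings) ** 2
    error_variance = sum(1 - lam ** 2 for lam in loadings)
    return squared_sum / (squared_sum + error_variance)


def cronbach_alpha(scores):
    """scores: one row per respondent, one column per item of the same factor."""
    k = len(scores[0])
    item_variances = [variance([row[i] for row in scores]) for i in range(k)]
    total_variance = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical standardized loadings for one factor.
loadings = [0.78, 0.81, 0.74, 0.85]
ave = average_variance_extracted(loadings)
cr = composite_reliability(loadings)
print(f"AVE = {ave:.3f} (>= 0.50: {ave >= 0.50})")
print(f"CR  = {cr:.3f} (>= 0.60: {cr >= 0.60})")
print(f"All loadings >= 0.708: {all(lam >= 0.708 for lam in loadings)}")

# Hypothetical 1-5 Likert responses: rows are respondents, columns are items.
responses = [[4, 5, 4], [3, 3, 4], [5, 5, 5], [2, 3, 2], [4, 4, 5]]
alpha = cronbach_alpha(responses)
print(f"Cronbach's alpha = {alpha:.3f} (> 0.70: {alpha > 0.70})")
```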

Table 17.

Values of convergent validity.

Discriminant validity is regarded as a prerequisite for analyzing the relationships between latent variables and is assessed separately for each latent variable, or factor [112,177]. The factors are expected to be related to each other, but the relationship should be weak enough for them to be defined as independent structures [178]. Although structures or concepts are often interrelated, they need to be statistically distinguishable, and discriminant validity shows that they are separate structures [179]. The Fornell-Larcker criterion is frequently used in the literature to assess it [172,174]. Under this method, the square root of each factor's AVE is compared with that factor's correlations with the other factors. Discriminant validity holds when the square root of a factor's AVE is greater than its correlations with all other latent variables [172]. The values of the Fornell-Larcker criterion are given in Table 18 below.
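The Fornell-Larcker comparison can be expressed as a short check: for each factor, the square root of its AVE must exceed the absolute value of every correlation that factor has with another factor. The sketch below is illustrative only; the function name, the dictionary layout, and all AVE and correlation values are hypothetical.

```python
import math


def fornell_larcker(ave, correlations):
    """Return {factor: passes}, where passes means sqrt(AVE) > |r| with every other factor.

    ave: {factor_name: AVE}
    correlations: {(factor_a, factor_b): r}, each pair listed once.
    """
    results = {}
    for factor, ave_value in ave.items():
        root_ave = math.sqrt(ave_value)
        related = [abs(r) for pair, r in correlations.items() if factor in pair]
        results[factor] = all(root_ave > r for r in related)
    return results


# Hypothetical AVEs and inter-factor correlations for three of the five factors.
ave = {"Reliability": 0.60, "Responsiveness": 0.58, "Cost": 0.62}
correlations = {
    ("Reliability", "Responsiveness"): 0.55,
    ("Reliability", "Cost"): 0.48,
    ("Responsiveness", "Cost"): 0.52,
}
print(fornell_larcker(ave, correlations))
```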

Table 18.

The values of Fornell-Larcker criterion.

In the table above, the values in parentheses are the square roots of the AVE values of the factors, and the remaining values are the correlations between the latent variables. The square root of each factor's AVE is higher than its correlations with the other latent variables, so all factors have discriminant validity.

3.6. Stage 6: Nomological Validity

Nomological validity shows whether the developed scale reflects the relationships expected on the basis of theory or previous research. In scale development studies, the scale must have nomological validity, as well as convergent and discriminant validity, to ensure construct validity. To test it, relationships supported by extant research or by theoretically accepted principles are identified, and it is then examined whether the developed scale is consistent with them [112].

The measurement scale most theoretically similar to the SCPSS in the extant literature is the SCP scale developed by Sindhuja (2014) [93]. However, it could not be compared with the SCPSS because its inter-factor correlations were not reported. For this reason, nomological validity was tested by comparing the correlation coefficients (r) between the sub-dimensions of the SCPSS with those reported in the existing literature. The r levels were interpreted following Taylor (1990) [180]: r less than or equal to 0.35 indicates a weak or low correlation, 0.36 to 0.67 a moderate or modest correlation, and 0.68 to 0.90 a strong correlation, while r equal to or above 0.90 indicates a high correlation. On this basis, all relations between the latent variables are modest and statistically significant (Table 18, p < 0.05). All correlations are positive because the items were worded to point in the same direction; for instance, both the responsiveness items and the cost items are worded in the direction of decrease. This finding is in line with the existing literature, since costs decrease as cycle times decrease in the SC [181]. Cirtita and Glaser-Segura (2012) [182] conducted a study on SCP measurement using the SCOR model of that period. Their latent variables are the same as those of this study except for agility, as they addressed flexibility performance rather than agility. When the findings of the two studies are compared, all correlation levels are moderate except for the correlation between cost and asset management: they found a strong correlation between these two factors, whereas the SCPSS shows a modest one. Overall, the findings of the two studies are largely coherent, and the conceptual structure of the study is confirmed by the current literature.
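The interpretation bands attributed to Taylor (1990) above can be applied mechanically to a correlation matrix. The sketch below assumes that values falling between the two-decimal band limits (for example 0.355) belong to the upper band, and that r = 0.90, which the text places at the boundary of two bands, is labeled "high"; the correlations in the usage lines are hypothetical.

```python
def taylor_strength(r: float) -> str:
    """Label a correlation coefficient using the bands attributed to Taylor (1990) in the text."""
    magnitude = abs(r)
    if magnitude <= 0.35:
        return "weak"
    if magnitude <= 0.67:
        return "moderate"
    if magnitude < 0.90:
        return "strong"
    return "high"


# Hypothetical inter-factor correlations (not taken from Table 18).
example_correlations = {
    ("Cost", "Asset management"): 0.52,
    ("Reliability", "Agility"): 0.41,
}
for pair, r in example_correlations.items():
    print(pair, r, taylor_strength(r))
```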

3.7. Stage 7: Investigation of Bias Effect

Data obtained through a questionnaire may be biased by the perceptions of the participants. This is called the "bias effect" or "common method bias" [183]; it causes measurement error and distorts the empirical values of the relationships among structures [184]. It is therefore necessary to check whether the data contain a bias effect and, if so, to eliminate it [185]. Bias is tested by adding to the final model an artificial latent variable labeled CMV (common method variance), which is defined in theory but not measured directly. This variable affects all observed variables in the model equally [186].

The CMV variable was added to Alternative Model 4, the final model obtained in Stage 4, and the resulting model was tested. The new model has 451 degrees of freedom and a chi-square value of 4355.31 (p < 0.05). The difference in degrees of freedom between the new model and the final model is 30, and the two models are statistically different, since the difference in their chi-square values exceeds 43.773, the critical chi-square value for 30 degrees of freedom at the 0.05 level. The final model is preferred because it has the lower chi-square value (Δχ2 = 3393.54, Δdf = 30, p < 0.05). Therefore, the scale does not show a bias effect. In addition, including CMV in the model worsens the model fit indices (RMSEA = 0.19; SRMR = 0.43; CFI = 0.58; NFI = 0.55; AGFI = 0.39). The final form of the scale is presented in Appendix B.
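The chi-square difference test above compares Δχ2 with the upper-tail critical value of a chi-square distribution with Δdf degrees of freedom. To stay within the standard library, the sketch below uses the closed-form survival function that exists only for an even number of degrees of freedom (Δdf = 30 qualifies) and finds the critical value by bisection; these helper names are hypothetical, and for odd df a general implementation such as `scipy.stats.chi2.sf` would be needed instead.

```python
import math


def chi2_sf_even_df(x: float, df: int) -> float:
    """Upper-tail probability P(X > x) for a chi-square variable with even df.

    Uses the identity sf(x) = exp(-x/2) * sum_{i=0}^{df/2 - 1} (x/2)^i / i!.
    """
    if df <= 0 or df % 2:
        raise ValueError("this closed form requires a positive even df")
    half_x = x / 2.0
    term = 1.0
    total = 1.0
    for i in range(1, df // 2):
        term *= half_x / i
        total += term
    return math.exp(-half_x) * total


def chi2_critical_even_df(alpha: float, df: int, upper: float = 1000.0) -> float:
    """Critical value c with P(X > c) = alpha, found by bisection (sf is decreasing)."""
    lower = 0.0
    for _ in range(100):
        mid = (lower + upper) / 2.0
        if chi2_sf_even_df(mid, df) > alpha:
            lower = mid
        else:
            upper = mid
    return (lower + upper) / 2.0


delta_chi2 = 3393.54  # chi-square difference reported in the text
delta_df = 30
critical = chi2_critical_even_df(0.05, delta_df)
p_value = chi2_sf_even_df(delta_chi2, delta_df)
print(f"critical value = {critical:.3f}")  # 43.773
print(f"p = {p_value:.3g}, significant: {delta_chi2 > critical}")
```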

4. Discussion

SCM is the systematic and strategic coordination of SC activities among SC members with the aim of enhancing the long-term performance of both individual members and the entire SC [187]. Effective SCM therefore rests on measuring SCP. The greatest difficulty in measuring SCP is selecting the most accurate and appropriate measures and measurement systems [10]. The SCOR model regularly updates its set of performance measures in line with SC requirements, provides a universally accepted cross-industry standard [188,189], and is currently the most widely used instrument for measuring SCP [190]. However, this standard is rarely reflected in the measurement scales developed in this field.

There are various scale development studies for measuring SCP in the literature. Each existing scale reflects the SC structure of its era and the perspective of its researchers, and several have limitations in scope or validity. For instance, the SCP scale developed by Lai et al. (2002) [91] is service industry-oriented, since it was developed based on transportation logistics, so the extent to which its items apply to the manufacturing industry should be investigated carefully. The items of the scale developed by Green et al. (2008) [92] focus heavily on delivery performance, whereas SCP needs to be addressed across all SC processes and performance attributes. The items of the SCP scale developed by Rana and Sharma (2019) [95] were designed for the pharmaceutical industry, so whether some of them can be used in other industries should be examined; the items of the drug-related costs factor are an example. Undoubtedly, SC dynamics differ from industry to industry, but a scale that covers all industries will guide future scale adaptation studies. The scale developed by Gawankar et al. (2016) [94] is a comprehensive scale designed for the retail industry in India. Although it is comprehensive in scope, the convergent and discriminant validity of its eight sub-dimensions have not been statistically tested.

There are two scale development studies based on the SCOR model in the extant literature, developed by Lai et al. (2002) [91] and Sindhuja (2014) [93] according to this model's performance measures and the existing literature; both therefore share the perspective of this study. However, the SC structure has changed considerably over the past decade, and the SCOR model has been substantially updated accordingly. For this reason, the scale developed by Lai et al. (2002) [91] no longer reflects today's SC structure. The scale developed by Sindhuja (2014) [93] is the most similar to the SCPSS in terms of its measures and sub-scales, since, like this study, it is based on the SCOR model version of its era. However, unlike the SCPSS, it defines a structure called supplier performance. SCOR v13.0 does not include this structure as a separate performance attribute, and the data collected for the development of the SCPSS did not indicate such a structure. Instead, SCOR v13.0 has a distinct performance attribute named asset management (asset management efficiency). Furthermore, the four common latent variables (reliability, responsiveness, agility, and cost) are defined differently in terms of content, and the SCPSS describes them in much more detail. For instance, Sindhuja's reliability dimension is less comprehensive than that of the SCPSS: it consists only of order fulfillment rate, inventory turns, safety stocks, and inventory obsolescence. Its responsiveness dimension deals with cycle-time metrics in a general sense rather than with specific SC activities such as transportation, storage, and manufacturing, which makes it difficult to locate the source of a problem when SC problems are identified through performance measurement. Its agility dimension focuses mainly on market, demand, and delivery, whereas the SCPSS also considers transportation and information system flexibility in agility performance. Its cost dimension takes a general perspective and consists of inbound and outbound costs, warehousing, inventory holding, and reducing product warranty claims, whereas the SCPSS offers a wide range of cost performance metrics. The SCPSS is therefore more up to date, owing to the SCOR model version it is based on, and more comprehensive in terms of its measures.

The disruptive technologies (DTs) offered by the Fourth Industrial Revolution make significant contributions to SCM [191]. This study also reveals which performance measures are most affected by DTs within the pilot study item pool, which was determined from SCOR v13.0, the extant literature, the effect of DTs, and expert group interviews. The final items of the scale are thus the performance measures for which DTs enable the greatest improvement. The DTs considered in this study are cyber-physical systems, the internet of things (IoT), artificial intelligence, autonomous robots, big data analytics, blockchain, cloud computing, 3D printers, augmented reality, autonomous (driverless) vehicles, digital twins, horizontal and vertical software integration, simulation, cyber security, and 5G, because they have been widely used in the supply chain context [168]. According to the findings of this study, in terms of reliability performance these technologies contribute most to warehouse efficiency and the perfect order fulfillment rate. In terms of responsiveness performance, they contribute most to production cycle time and order fulfillment cycle time. In terms of agility performance, the item they contribute to most is information system flexibility. They contribute most to transportation costs in terms of cost performance, and to return on working capital and the CCC in terms of asset management.

Thanks to the pilot study item pool it generated, this study is the most comprehensive study in the extant literature addressing all aspects of SCP. It has revealed the key metrics in SCPM and the performance measures to which DTs contributed the most among the seventy-four meticulously generated items. Regarding the understanding of SCP, cost performance holds a significant place, since cost-related metrics make up the largest share of all measures; in other words, the cost latent variable is explained by more observed variables than any other, followed by the reliability latent variable. Reliability performance is based on reducing errors in all SC processes, forecasting accurately, and delivering on time, in the right quantity, and to the right place; it is also a customer-oriented performance dimension [192]. "SCM is the management of upstream and downstream relationships with suppliers and customers to deliver superior customer value at lower cost to the SC as a whole" [95]. In other words, the main purpose of SCM is to deliver superior customer value at the lowest SC cost. Accordingly, the findings of the study are validated by the conceptual structure of SCM.

In this study, the performance metrics measuring SC flexibility and SC agility were considered together when the items for agility performance were addressed. The literature distinguishes the two concepts: agility is defined as "the ability to react to external influences" [49], while flexibility is defined as "the ability to respond to change with the least loss of time and money" [193]. However, some SCOR-based research on SCP has treated SC agility performance as flexibility performance [194], because flexibility combined with a skilled, knowledgeable, motivated, and empowered workforce produces agility [195]; that is, flexibility gives rise to agility. The findings of this study revealed that, among the performance metrics related to both concepts, DTs contribute only to those related to flexibility: order flexibility, transportation flexibility, information system flexibility, and market flexibility. The intertwined nature of the two concepts and their interchangeable use in the current literature explain this finding.

The current literature supports the contribution of DTs to SCP, as do the findings of this study. IoT has contributed to efficient stocktaking and to reducing accident risks in warehouses [196]. Autonomous robots increase efficiency and safety in warehouses and have reduced human-caused operational errors [197]. Augmented reality is used in warehouses to enhance warehouse efficiency and lower error rates [198]. The use of 5G technology in warehouse operations has enhanced warehouse efficiency by reducing transportation times, human errors, and accidents [199]. Artificial intelligence, autonomous robots, and 5G are important technologies that boost the perfect order fulfillment rate and shorten the order fulfillment cycle time [200]. Using robots has shortened production cycle times [201]. Three-dimensional printers have allowed for lower transportation costs in manufacturing processes [202,203]. IoT and big data analytics are among the technologies that contribute to information system flexibility [204,205]. Almost all DTs make a significant contribution to the return on working capital and the CCC [206]. Consequently, this study's findings are in line with the extant literature.

4.1. Theoretical Contributions

This study makes three main theoretical contributions. First, the most fundamental and profound contribution to a field can be made through measurement [207]. Although interest in measuring and improving SCP has increased considerably over the past decade [208,209] and its critical role in SCM has been highlighted, scale development studies in this field are rare [88]. A review of the existing scales shows a clear need for an SCP scale built on comprehensive, standardized, and universally accepted performance measures. This study fills this gap, which is its most significant theoretical contribution to the SC literature, and it also adapts the scale to digital SCs, the SCs of today. Second, this study gathers the SCP measures in the literature under a single roof in the pilot study item pool and standardizes them by grounding them in SCOR v13.0. Third, it addresses SCP measures from a wide perspective in the pilot study item pool and contributes to the literature by identifying the performance measures to which DTs contribute the most.

4.2. Managerial Implications

This study also makes several practical contributions. SC managers have significant responsibilities for improving SCP [210], and the SCPSS helps them meet these responsibilities. Because the SCOR model is difficult to apply in practice, this study presents a measurement scale based on its measures. Information is important for SC managers' decisions [211], and the information provided by this scale facilitates their decision-making. Measuring performance with a scale is more practical and quicker because it removes the need to calculate each performance measure separately, which in turn speeds up decision-making; this is especially valuable in times of crisis. Using the SCPSS, SC managers can compare the present and past performance of their company. They can also compare their SCP with that of their industry and conduct improvement studies where they fall behind. They may also investigate how SCP relates to specific operations.

4.3. Limitations and Future Research

The main limitation of this study is that it does not consider sustainability performance in the SC, which is now key for SCM [212,213]. Sustainability performance measures were not included because the current SCOR model at the time of the study was version 13.0, which did not include sustainability performance attributes. The current version of the SCOR model is now version 14.0 (v14.0), and one of its major changes is the addition of sustainability to the performance attributes [188]. Sustainability performance in SCM is crucial not only for the survival of companies but also for their long-term success and development [214]. When making decisions, SC managers must take a holistic approach and consider simultaneously how those decisions affect people, the planet, and the company [215].

SCOR v14.0 consists of resilience, economic, and sustainability attributes and their related sub-attributes: reliability, responsiveness, agility, cost, profit, assets, environmental, and social. The sustainability attribute consists of the environmental and social sub-attributes [188]. Further studies could develop or adapt scales based on these attributes, sub-attributes, and their measures. One issue should be considered, however. The academic literature discusses sustainability in three dimensions (economic, environmental, and social) [216] and emphasizes that these dimensions should be handled in a balanced way and with a holistic approach [217]. If future studies model the SCP structure with the three sub-dimensions of resilience, economic, and sustainability, the measures are therefore likely to be distributed differently from the SCOR model because of the conceptual structure of sustainability. Testing an eight-sub-dimension structure based on the eight sub-attributes would instead allow the performance measures to be differentiated more clearly, both statistically and conceptually.

SCP needs can be evaluated from different perspectives depending on companies, processes, employees, technologies, and strategies [218], and improvement efforts can target different performance measures [219]. As an alternative, future researchers can conduct a scale adaptation study by selecting, from the pilot study item pool, the measures that fit a company's needs and goals. Moreover, SCM is a dynamic process [220], and performance measurement systems need to be compatible with dynamic environments and processes [76,77]. In recent decades, SCs have evolved in light of developments such as sustainability, the use of information systems, and DTs [221,222,223,224]. The issues addressed in the SCs of tomorrow will undoubtedly differ from those of today. In line with current developments, research agendas, and new concepts introduced to the literature, researchers can define the SCP structure in various ways and carry out new scale development studies in the future.

5. Conclusions

Although it is not known for certain what will change in future SCs, some key indications are already visible today, and some key trends and indicators must be followed to perceive the landscape of future SCs [225]. This study offers a standard, up-to-date, and comprehensive measurement scale by grounding SCP measures in SCOR v13.0 and extending them with measures from the academic literature. Because the measurement was based on changes resulting from the use of DTs, it also identifies the contributions of DTs and the performance metrics that should be considered in digital SCs. Furthermore, by enabling SCPM to be performed with a measurement scale, this study provides a measurement system that is quicker and easier to use in practice. Consequently, it will make a crucial contribution to the development of SCM, to future research and the SC literature, and to the SCs of the future.

The scale was developed with thirty-one items and five factors. As explained above, the scale has acceptable values for several model fit indices (χ2/df, RMSEA, SRMR, CFI, NFI, AGFI); it has convergent, discriminant, and nomological validity; and it does not show a bias effect. These findings show that the scale can be used confidently by researchers and practitioners in their fields. The scale was named the "Supply Chain Performance Scale as SCOR-Based (SCPSS)", but it can also be used to measure digital supply chain performance, since the measurement is based on the use of DTs. In its current form, it can be used with confidence in studies where SCP or its sub-scales are variables.

Author Contributions

Author Contributions: Conceptualization, Ö.Ö.; methodology, Ö.Ö. and F.B.; software, Ö.Ö.; validation, Ö.Ö. and F.B.; formal analysis, Ö.Ö. and F.B.; investigation, Ö.Ö.; resources, Ö.Ö.; data curation, Ö.Ö. and F.B.; writing—original draft preparation, Ö.Ö. and F.B., writing—review and editing methodology, F.B.; visualization, Ö.Ö.; supervision, F.B.; project administration, F.B.; funding acquisition, Ö.Ö. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

This study was carried out with the approval of the ethics committee received from Yeditepe University Social and Human Sciences Ethics Committee (Ethics Committee Decision No. 28/2022, Date of Approval 25 March 2022).

Informed Consent Statement

Informed consent was obtained from all subjects involved in this study.

Data Availability Statement

Data can be requested from the corresponding author when necessary.

Acknowledgments

We are grateful to the expert group that generated the item pool and ensured content validity, and to all participating companies that constituted the sample of our study.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

| Abbreviation | Definition |
|---|---|
| SC | Supply Chain |
| SCM | Supply Chain Management |
| SCPM | Supply Chain Performance Measurement |
| SCP | Supply Chain Performance |
| SCOR | Supply Chain Operations Reference |
| SCC | Supply Chain Council |
| v13.0 | version 13.0 |
| SCPSS | Supply Chain Performance Scale as SCOR-Based |
| BSC | Balanced Scorecard |
| KPI | Key Performance Indicator |
| EFA | Exploratory Factor Analysis |
| OTIF | On time in full |
| COGS | Cost of Goods Sold |
| ROIC | Return on Fixed Assets |
| ROA | Return on Assets |
| ROE | Return on Equity |
| CCC | Cash Conversion Cycle |
| CFA | Confirmatory Factor Analysis |
| AVE | Average Variance Extracted |
| CR | Composite Reliability |
| v14.0 | version 14.0 |
| DTs | Disruptive Technologies |

Appendix A

Table A1.

List of expert groups.

| Number | Name Surname | Company, University, Position * |
|---|---|---|
| Experts from Professional Life | | |
| 1 | B. Mahir Yamakoğlu | Doğuş Çay, Supply Chain Manager |
| 2 | Utku Genç | Migros, Supply Chain Manager |
| 3 | Bora Tanyel | Yıldız Holding, Supply Chain Director |
| 4 | Erman Keskin | Colgate-Palmolive, Supply Chain Leader (Africa Eurasia Region) |
| 5 | Evren Ersoy | Siemens, Digital Transformation Specialist and Business University of Costa Rica, PhD Candidate |
| Experts from Academy | | |
| 6 | Prof. Dr. Mehmet Tanyaş | Maltepe University, Head of Logistics Management |
| 7 | Prof. Dr. Batuhan Kocaoğlu | Piri Reis University, Head of Management Information Systems |
| 8 | Asst. Prof. Mehmet Sıtkı Saygılı | Bahçeşehir University, Faculty Member of Logistics Management |
| 9 | Asst. Prof. Özlem Sanrı | Yeditepe University, Faculty Member of Logistics Management |

* It consists of the knowledge of the expert group at the time of the interviews.

Table A2.

SCOR Model v13.0 performance metrics at Level-1 and Level-2 *.

| Performance Attribute | Level-1 Performance Measures | Level-2 Performance Measures |
|---|---|---|
| Reliability | Perfect Order Fulfillment | Percentage of Orders Delivered in Full |
| | | Delivery Performance to Customer Commit Date |
| | | Documentation Accuracy |
| | | Perfect Condition |
| Responsiveness | Order Fulfillment Cycle Time | Source Cycle Time |
| | | Make Cycle Time |
| | | Deliver Cycle Time |
| | | Delivery Retail Cycle Time |
| | | Return Cycle Time |
| Agility | Upside Supply Chain Adaptability | Upside Adaptability (Source) |
| | | Upside Adaptability (Make) |
| | | Upside Adaptability (Deliver) |
| | | Upside Return Adaptability (Source) |
| | | Upside Return Adaptability (Deliver) |
| | Downside Supply Chain Adaptability | Downside Adaptability (Source) |
| | | Downside Adaptability (Make) |
| | | Downside Adaptability (Deliver) |
| | Overall Value at Risk | Supplier's, Customer's, or Product's Risk Rating |
| | | Value at Risk (Plan) |
| | | Value at Risk (Source) |
| | | Value at Risk (Make) |
| | | Value at Risk (Deliver) |
| | | Value at Risk (Return) |
| | | Time to Recovery (TTR) |
| Cost | Total Supply Chain Management Costs | Cost to Plan |
| | | Cost to Source |
| | | Cost to Make |
| | | Cost to Deliver |
| | | Cost to Return |
| | | Mitigation Costs |
| | Cost of Goods Sold | Direct Material Cost |
| | | Direct Labor Cost |
| | | Indirect Cost Related to Production |
| Asset Management | Cash-to-Cash Cycle Time | Days Sales Outstanding |
| | | Inventory Days of Supply |
| | | Days Payable Outstanding |
| | Return on Supply Chain Fixed Assets | Supply Chain Revenue |
| | | Supply Chain Fixed Assets |
| | Return on Working Capital | Accounts Payable |
| | | Accounts Receivable |
| | | Inventory |

* Reproduced from source: [47,48].

Appendix B

Table A3.

Item pool for pilot study.

| Item Number | Item | References |
|---|---|---|
| Reliability | | |
| Q1 | The percentage of suppliers meeting environmental standards has increased. | [49,50] |
| Q2 | Order entry accuracy has increased. | [115] |
| Q3 | Forecast accuracy (demand, order, sales) has increased. | [25,116,117] |
| Q4 | Stock accuracy has increased. | [118] |
| Q5 | The probability of being out of stock has decreased. | [90] |
| Q6 | Stock loss rate has decreased. | [119] |
| Q7 | Warehouse efficiency has increased. | [120,121] |
| Q8 | Delivery accuracy (location and quantity) has increased. | [128] |
| Q9 | Delivery performance has improved. | [2] |
| Q10 | On time in full (OTIF) rate has increased (percentage of orders delivered exactly and on the promised date to the customer). | [122] |
| Q11 | The perfect order fulfillment rate has increased. | [123] |
| Q12 | Transport errors have decreased. | [10] |
| Q13 | Product delivery and reliability are enabled by anticipating potential delays and disruptions. | [124] |
| Q14 | Product availability has increased. | [226] |
| Q15 | Faulty product rates have decreased. | [115] |
| Q16 | The number of expired products has decreased. | [132] |
| Q17 | Product malfunctions have decreased. | [123] |
| Q18 | Documentation and billing accuracy has improved. | [115] |
| Q19 | The entire supply chain network has been monitored. | [227] |
| Q20 | Errors occurring in all processes (errors in packaging, transportation, in-vehicle placement, etc.) have decreased. | [16] |
| Responsiveness | | |
| Q21 | The cycle time for selecting and negotiating with suppliers has decreased. | [49,50] |
| Q22 | Supply cycle time has decreased. | [228] |
| Q23 | Purchase cycle time has decreased. | [2,227] |
| Q24 | Inventory cycle time has decreased (inventory turnover has increased). | [125] |
| Q25 | Warehouse cycle times (average receiving, placing, picking, preparing, and delivering) have decreased. | [126] |
| Q26 | Transportation cycle time has decreased. | [127] |
| Q27 | Production cycle time has decreased. | [128] |
| Q28 | Order fulfillment cycle time has decreased. | [129] |
| Q29 | Returned product cycle time has decreased. | [49,50] |
| Q30 | Reverse logistics cycle time has decreased. | [130,131] |
| Q31 | Product development cycle time has decreased. | [25] |
| Q32 | The overall supply chain response time has decreased. | [30] |
| Agility | | |
| Q33 | Customer problems have been resolved faster. | [132] |
| Q34 | The firm has the ability to deliver products to customers on time and respond quickly to changes in delivery requirements. | [2,10,133] |
| Q35 | Overall value at risk has decreased. | [49,50] |
| Q36 | The upstream adaptability of the supply chain (increase % in quantity delivered in 30 days) has improved. | [49,50] |
| Q37 | The downstream adaptability of the supply chain (reduction % of orders ordered in 30 days) has improved. | [49,50] |
| Q38 | The ability to process difficult, non-standard orders to meet special customer product orders (product flexibility) has increased. | [137] |
| Q39 | The firm’s ability to react immediately to changes in production processes (manufacturing flexibility) has increased. | [229] |
| Q40 | The ability to adapt capacity to changing demand and rescheduling (capacity flexibility) has increased. | [30,230] |
| Q41 | The ability to adapt to the new order in case the order deviates from the forecast in parallel with the changing demand (order flexibility) has increased. | [133,134] |
| Q42 | The ability to diversify transport modes, form alliances between multiple transport carriers, and operate multiple transport routes (transport flexibility) has increased. | [135] |
| Q43 | The ability to align information systems architectures and systems with the organization’s changing information needs while responding to changing customer demand (information system flexibility) has increased. | [136] |
| Q44 | The ability to detect and predict market changes (market flexibility) has increased. | [137] |
| Cost | | |
| Q45 | Transaction costs have decreased. | [231,232] |
| Q46 | Marginal costs have decreased. | [233,234] |
| Q47 | Order management costs (the sum of the costs associated with managing an order) have decreased. | [129] |
| Q48 | Out-of-stock costs have decreased. | [89] |
| Q49 | The cost of purchasing materials has decreased. | [138] |
| Q50 | Storage cost has been reduced. | [139] |
| Q51 | Unit production costs have decreased. | [140] |
| Q52 | Transport costs have decreased. | [141] |
| Q53 | Distribution costs have decreased. | [10] |
| Q54 | Cost of goods sold (COGS) has decreased. | [49,50] |
| Q55 | Reverse logistics costs have decreased. | [143,143] |
| Q56 | Intangible costs (quality costs, product adaptation or performance costs, and coordination costs, etc.) have decreased. | [90,144] |
| Q57 | Direct labor costs have decreased. | [49,50] |
| Q58 | Resource usage costs (labor, machinery, capacity, 3PL logistics agreements, etc.) have decreased. | [90] |
| Q59 | Risk reduction costs have decreased. | [49,50] |
| Q60 | Idle cost has decreased. | [235] |
| Q61 | Overhead cost has decreased. | [139,145] |
| Q62 | Supply chain total cost has decreased. | [146] |
| Asset Management | | |
| Q63 | Idle capacity has decreased. | [236] |
| Q64 | The return rate has decreased. | [147] |
| Q65 | The rate of recycling or reuse of materials has increased. | [148] |
| Q66 | Waste has decreased in the supply chain network. | [237] |
| Q67 | The percentage of hazardous materials used in the production process has decreased. | [149] |
| Q68 | Return on fixed assets (ROIC) has increased. | [150] |
| Q69 | Return on supply chain fixed assets has increased. | [151] |
| Q70 | Return on assets (ROA) has increased. | [152,153] |
| Q71 | Return on equity (ROE) has increased. | [154] |
| Q72 | Return on working capital has increased. | [151] |
| Q73 | The cash conversion cycle (CCC) has decreased. | [49,50,153] |
| Q74 | Supply chain revenue has increased. | [49,50] |
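
Item Q10 defines the OTIF rate as the percentage of orders delivered in the exact quantity and on the date promised to the customer. The Python sketch below computes that rate from hypothetical delivery records. It assumes that an order counts as "in full" only when the delivered quantity equals the ordered quantity, and as "on time" when it arrives no later than the promised date; firms often apply their own tolerance windows, so both rules are illustrative assumptions rather than part of the item pool. The `Delivery` record and `otif_rate` function are hypothetical names.

```python
from datetime import date
from typing import NamedTuple


class Delivery(NamedTuple):
    """Hypothetical delivery record; field names are illustrative."""
    ordered_qty: int
    delivered_qty: int
    promised_date: date
    delivered_date: date


def otif_rate(deliveries: list[Delivery]) -> float:
    """Percentage of orders delivered in full and no later than the promised date.

    Assumption: "in full" means the exact ordered quantity, and early
    deliveries count as on time.
    """
    if not deliveries:
        raise ValueError("at least one delivery is required")
    hits = sum(
        d.delivered_qty == d.ordered_qty and d.delivered_date <= d.promised_date
        for d in deliveries
    )
    return 100.0 * hits / len(deliveries)


if __name__ == "__main__":
    sample = [
        Delivery(10, 10, date(2024, 3, 1), date(2024, 3, 1)),  # on time, in full
        Delivery(10, 8, date(2024, 3, 1), date(2024, 3, 1)),   # short-shipped
        Delivery(5, 5, date(2024, 3, 5), date(2024, 3, 4)),    # early, in full
        Delivery(7, 7, date(2024, 3, 5), date(2024, 3, 5)),    # on time, in full
    ]
    print(otif_rate(sample))
```

Three of the four sample orders pass both checks, so the rate is 75%. OTIF covers only two of the four conditions that SCOR's Perfect Order Fulfillment combines (it ignores documentation accuracy and product condition), so the Q10 and Q11 rates can diverge for the same set of orders.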

Table A4.

The final form of the scale. The following questions are intended to measure the performance of the supply chain of which your company is a member in the disruptive technology era. Please answer the following questions considering the change in supply chain performance during your company’s use of disruptive technologies (cyber-physical systems, Internet of Things (IoT), artificial intelligence, autonomous robots, big data analytics, blockchain, cloud computing, 3D printers, augmented reality, autonomous (driverless) vehicles, digital twins, horizontal and vertical software integration, simulation, cybersecurity, and 5G).

Supply Chain Performance Scale as SCOR-Based (SCPSS)

| Item Number | Item | 1: I Totally Disagree | 2: I Disagree | 3: I Have No Idea | 4: I Agree | 5: I Totally Agree |
|---|---|---|---|---|---|---|
| Reliability | | | | | | |
| 1 | Order entry accuracy has increased. | | | | | |
| 2 | Forecast accuracy (demand, order, sales) has increased. | | | | | |
| 3 | Stock accuracy has increased. | | | | | |
| 4 | Warehouse efficiency has increased. | | | | | |
| 5 | Delivery accuracy (location and quantity) has increased. | | | | | |
| 6 | Delivery performance has improved. | | | | | |
| 7 | On time in full (OTIF) rate has increased (percentage of orders delivered exactly and on the promised date to the customer). | | | | | |
| 8 | The perfect order fulfillment rate has increased. | | | | | |
| Responsiveness | | | | | | |
| 9 | Warehouse cycle times (average receiving, placing, picking, preparing, and delivering) have decreased. | | | | | |
| 10 | Transportation cycle time has decreased. | | | | | |
| 11 | Production cycle time has decreased. | | | | | |
| 12 | Order fulfillment cycle time has decreased. | | | | | |
| 13 | Returned product cycle time has decreased. | | | | | |
| Agility | | | | | | |
| 14 | The ability to adapt to the new order in case the order deviates from the forecast in parallel with the changing demand (order flexibility) has increased. | | | | | |
| 15 | The ability to diversify transport modes, form alliances between multiple transport carriers, and operate multiple transport routes (transport flexibility) has increased. | | | | | |
| 16 | The ability to align information systems architectures and systems with the organization’s changing information needs while responding to changing customer demand (information system flexibility) has increased. | | | | | |
| 17 | The ability to detect and predict market changes (market flexibility) has increased. | | | | | |
| Cost | | | | | | |
| 18 | Storage costs have decreased. | | | | | |
| 19 | Unit production costs have decreased. | | | | | |
| 20 | Transport costs have decreased. | | | | | |
| 21 | Distribution costs have decreased. | | | | | |
| 22 | Cost of goods sold (COGS) has decreased. | | | | | |
| 23 | Reverse logistics costs have decreased. | | | | | |
| 24 | Intangible costs (quality costs, product adaptation or performance costs, and coordination costs, etc.) have decreased. | | | | | |
| 25 | Direct labor costs have decreased. | | | | | |
| 26 | Resource usage costs (labor, machinery, capacity, 3PL logistics agreements, etc.) have decreased. | | | | | |
| 27 | Supply chain total costs have decreased. | | | | | |
| Asset Management | | | | | | |
| 28 | Return on equity (ROE) has increased. | | | | | |
| 29 | Return on working capital has increased. | | | | | |
| 30 | The cash conversion cycle (CCC) has decreased. | | | | | |
| 31 | Supply chain revenue has increased. | | | | | |
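
Table A4 assigns items 1–8 to Reliability, 9–13 to Responsiveness, 14–17 to Agility, 18–27 to Cost, and 28–31 to Asset Management. One simple way to summarize a single respondent's answers is to average the five-point ratings within each factor, as the Python sketch below does. Mean-score aggregation is an assumption made for illustration: the scale was validated through exploratory and confirmatory factor analysis, and nothing here implies that averaging is the scoring procedure the authors used. The names `FACTORS` and `factor_scores` are hypothetical.

```python
from statistics import mean

# Item-to-factor mapping taken from Table A4 (items numbered 1-31).
FACTORS: dict[str, range] = {
    "Reliability": range(1, 9),
    "Responsiveness": range(9, 14),
    "Agility": range(14, 18),
    "Cost": range(18, 28),
    "Asset Management": range(28, 32),
}


def factor_scores(responses: dict[int, int]) -> dict[str, float]:
    """Return one respondent's mean 1-5 rating for each SCPSS factor.

    Averaging is an illustrative assumption, not the authors' stated scoring rule.
    """
    if set(responses) != set(range(1, 32)):
        raise ValueError("responses must cover items 1-31 exactly")
    if any(not 1 <= r <= 5 for r in responses.values()):
        raise ValueError("ratings must lie on the 1-5 scale")
    return {
        name: float(mean(responses[i] for i in items))
        for name, items in FACTORS.items()
    }


if __name__ == "__main__":
    # Hypothetical respondent: 5 (I Totally Agree) on Reliability items, 4 (I Agree) elsewhere.
    answers = {i: 4 for i in range(1, 32)}
    answers.update({i: 5 for i in FACTORS["Reliability"]})
    print(factor_scores(answers))
```

For this hypothetical respondent the Reliability score is 5.0 and every other factor scores 4.0. The validation checks reject incomplete questionnaires and out-of-range ratings rather than silently averaging over missing items.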

References

- Avelar-Sosa, L.; García-Alcaraz, J.L.; Maldonado-Macías, A.A. Evaluation of Supply Chain Performance: A Manufacturing Industry Approach; Springer: Cham, Switzerland, 2019; p. 11.

- Gunasekaran, A.; Patel, C.; Tirtiroglu, E. Performance measures and metrics in a supply chain environment. Int. J. Oper. Prod. Manag. 2001, 21, 71–87.

- Christopher, M. The agile supply chain: Competing in volatile markets. Ind. Mark. Manag. 2000, 29, 37–44.

- Lambert, D.; Cooper, M. Issues in supply chain management. Ind. Mark. Manag. 2000, 29, 65–83.

- Handfield, R.B.; Nichols, E.L. Introduction to Supply Chain Management; Prentice-Hall: Englewood Cliffs, NJ, USA, 1999.

- Ellinger, A.E. Improving marketing/logistics cross functional collaboration in the supply chain. Ind. Mark. Manag. 2000, 29, 85–96.

- Gashti, S.G.; Seyedhosseini, S.M.; Noorossana, R. Developing a framework for supply chain value measurement based on value index system: Real case study of manufacturing company. Afr. J. Bus. Manag. 2012, 6, 11023–11034.

- Silvestre, B.S. Sustainable supply chain management in emerging economies: Environmental turbulence, institutional voids and sustainability trajectories. Int. J. Prod. Econ. 2015, 167, 156–169.

- Cahyono, Y.; Purwoko, D.; Koho, I.R.; Setiani, A.; Supendi, S.; Setyoko, P.I.; Sosiady, M.; Wijoyo, H. The role of supply chain management practices on competitive advantage and performance of halal agroindustry SMEs. Uncertain Supply Chain Manag. 2023, 11, 153–160.

- Beamon, B.M. Measuring supply chain performance. Int. J. Oper. Prod. Manag. 1999, 19, 275–292.

- Saleheen, F.; Habib, M.M. Embedding attributes towards the supply chain performance measurement. Clean. Logist. Supply Chain 2023, 6, 100090.

- Charan, P.; Shankar, R.; Baisya, R.K. Analysis of interactions among the variables of supply chain performance measurement system implementation. Bus. Process Manag. J. 2008, 14, 512–529.

- Neely, A. The performance measurement revolution: Why now and what next? Int. J. Oper. Prod. Manag. 1999, 19, 205–228.

- Chae, B.K. Developing key performance indicators for supply chain: An industry perspective. Supply Chain Manag. Int. J. 2009, 14, 422–428.

- Maestrini, V.; Luzzini, D.; Maccarrone, P.; Caniato, F. Supply chain performance measurement systems: A systematic review and research agenda. Int. J. Prod. Econ. 2017, 183, 299–315.

- Aramyan, L.H.; Oude Lansink, A.G.; Van Der Vorst, J.G.; Van Kooten, O. Performance measurement in agri-food supply chains: A case study. Supply Chain Manag. Int. J. 2007, 12, 304–315.

- Bhagwat, R.; Sharma, M.K. Performance measurement of supply chain management: A balanced scorecard approach. Comput. Ind. Eng. 2007, 53, 43–62.