Spatial Frequency Domain Imaging System Calibration, Correction and Application for Pear Surface Damage Detection

Abstract

1. Introduction

2. Materials and Methods

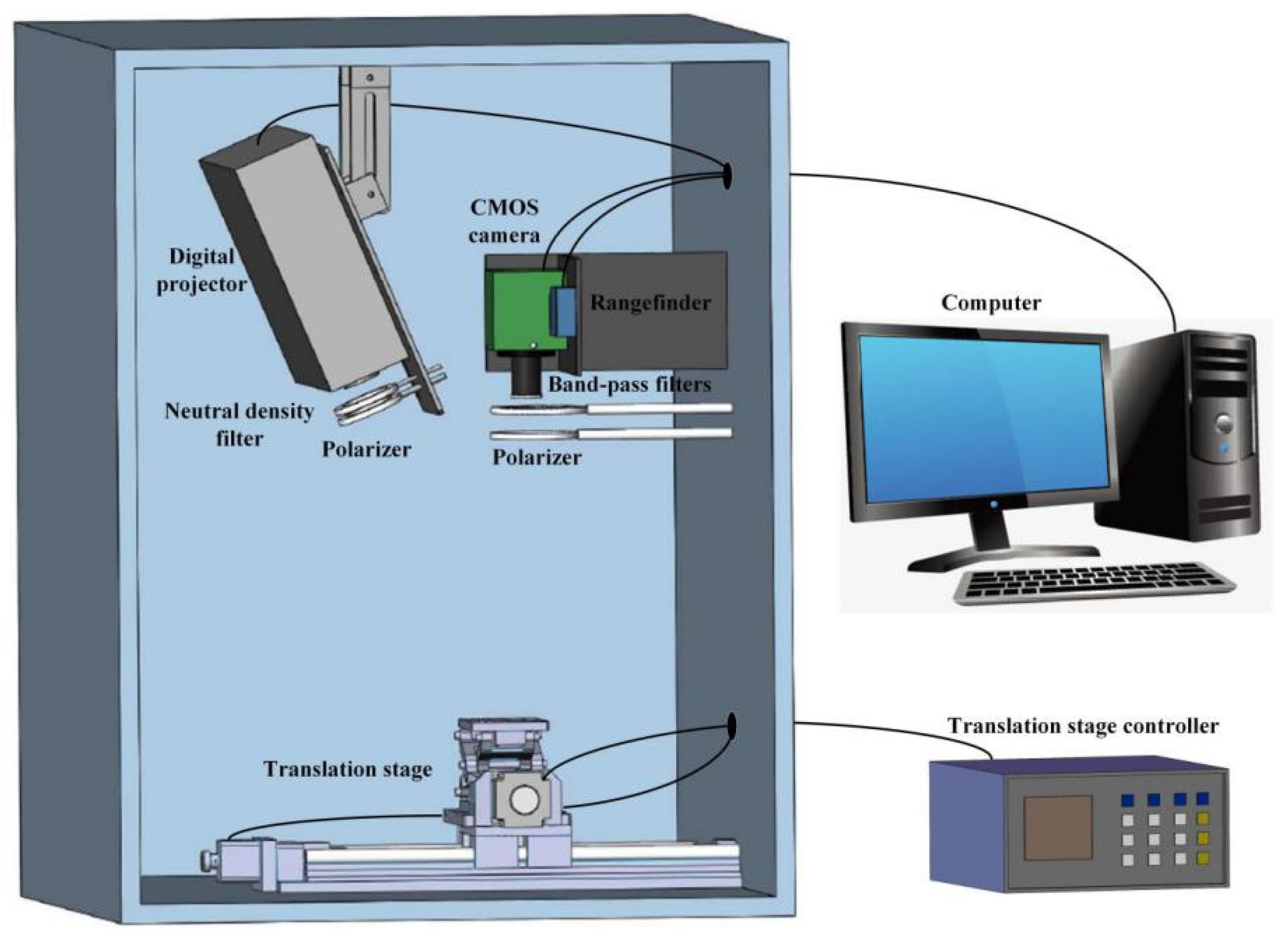

2.1. SFDI System Construction

2.1.1. Hardware

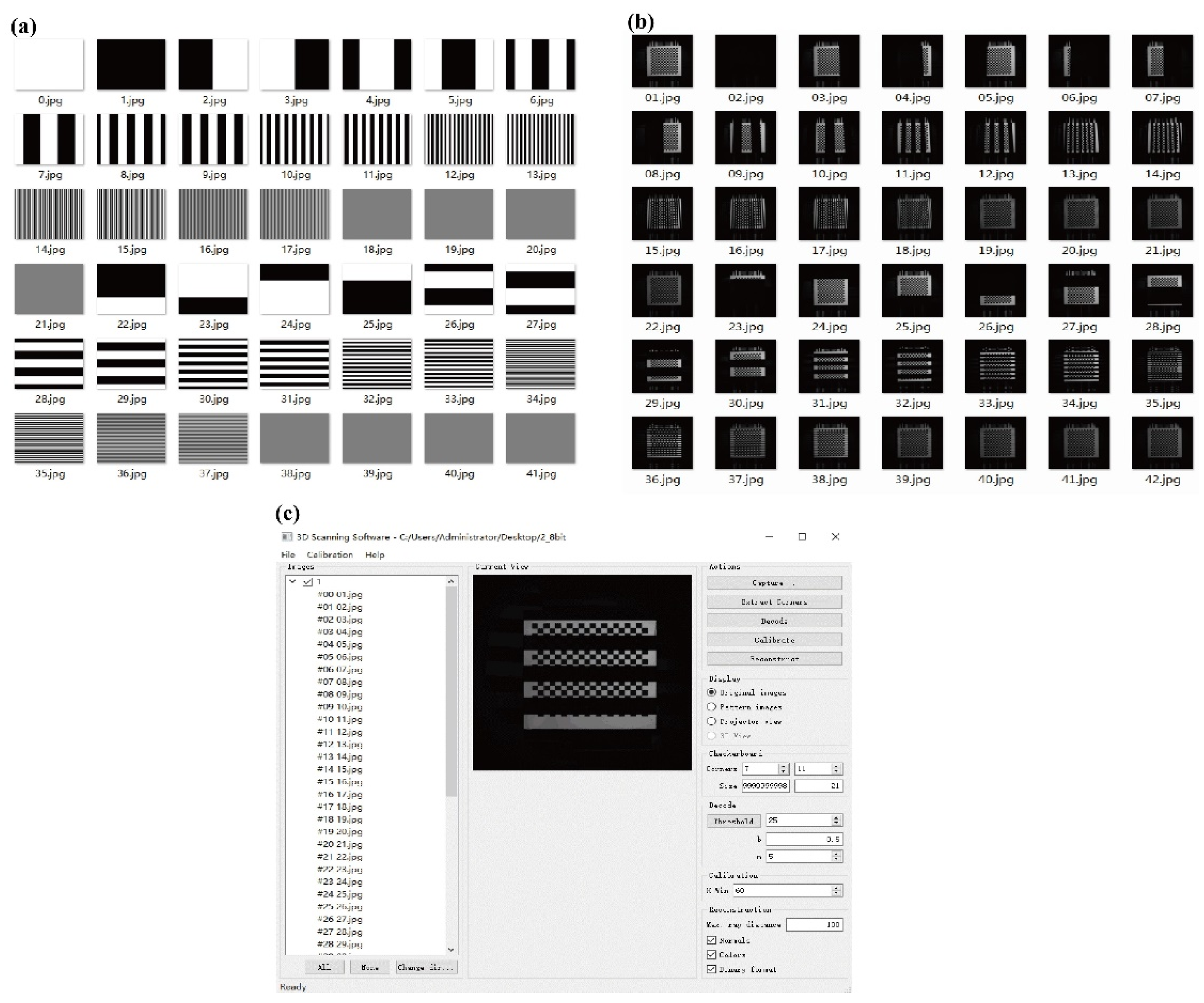

2.1.2. Software

2.1.3. System Operation

2.2. System Calibrations

2.2.1. Projector–Camera Calibration

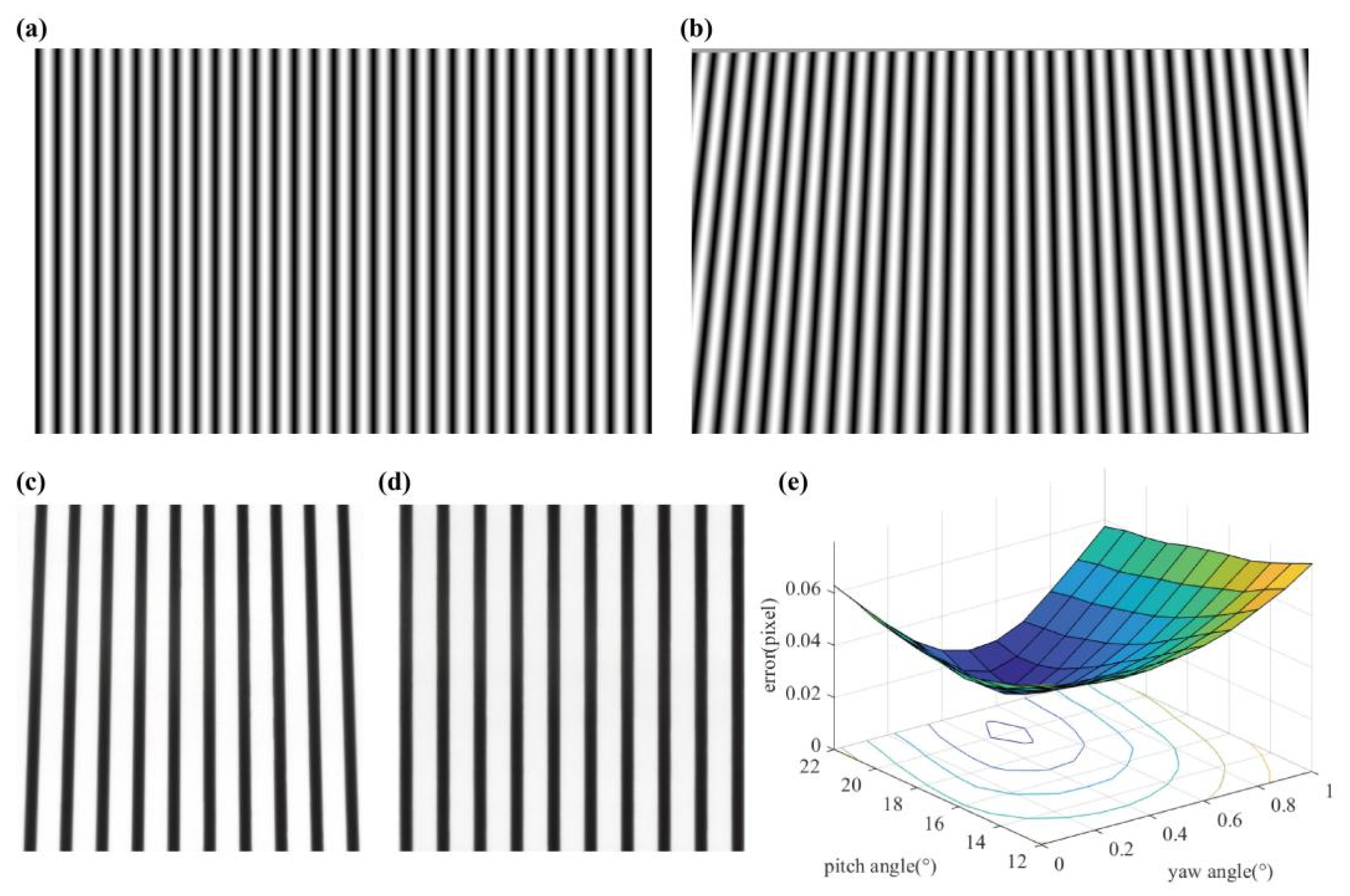

2.2.2. Keystone Correction

- ① Use the projector’s calibration parameters and the original image to generate point coordinates on the world coordinate plane, with the set angle opposite to the projector’s actual angle.

- ② Convert these scattered point coordinates into pixel coordinates to obtain a corrected projection image.

- ③ Calculate the error, then repeat with the projection image set at different angles until the error is minimized.
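The three steps above can be illustrated with a minimal simulation. This is a sketch under stated assumptions, not the authors' implementation. It makes the following assumptions, none of which come from the source:

- The projector is a pinhole device with a hypothetical intrinsic matrix `K`.
- The keystone comes only from a tilt about the projector's horizontal axis.
- Steps ① and ② are combined into a single rotation homography K·R(−θ)·K⁻¹.
- The error metric is hypothetical: the relative mismatch between the top and bottom edge widths of the projected frame.

The "actual" tilt is simulated here. In a real system the error would come from the camera image.

```python
import numpy as np


def rot_x(theta):
    """Rotation matrix about the projector's horizontal (x) axis, theta in radians."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])


def homography(K, theta):
    """Homography K R_x(theta) K^-1 induced by a pure rotation of a pinhole device."""
    return K @ rot_x(theta) @ np.linalg.inv(K)


def apply_h(H, pts):
    """Map an N x 2 array of pixel coordinates through the homography H."""
    ph = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return ph[:, :2] / ph[:, 2:3]


def keystone_error(quad):
    """Hypothetical metric: relative top/bottom width mismatch (0 for a rectangle).
    Corners are ordered top-left, top-right, bottom-right, bottom-left."""
    top = np.linalg.norm(quad[1] - quad[0])
    bottom = np.linalg.norm(quad[2] - quad[3])
    return abs(top - bottom) / max(top, bottom)


def find_set_angle(K, corners, theta_actual, candidates):
    """Step 3: try candidate set angles and keep the one with the smallest error."""
    best_angle, best_err = None, np.inf
    for theta_set in candidates:
        # Steps 1-2: pre-warp the image using the angle opposite to the set angle
        prewarped = apply_h(homography(K, -theta_set), corners)
        # Simulated projection onto the tilted plane (measured by the camera in practice)
        observed = apply_h(homography(K, theta_actual), prewarped)
        err = keystone_error(observed)
        if err < best_err:
            best_angle, best_err = theta_set, err
    return best_angle, best_err


# Hypothetical projector: 1280 x 800 pixels, actually tilted by 15 degrees
K = np.array([[1400.0, 0.0, 640.0], [0.0, 1400.0, 400.0], [0.0, 0.0, 1.0]])
corners = np.array([[0, 0], [1279, 0], [1279, 799], [0, 799]], dtype=float)
theta_true = np.deg2rad(15.0)
candidates = np.deg2rad(np.arange(0.0, 30.5, 0.5))

uncorrected = keystone_error(apply_h(homography(K, theta_true), corners))
angle, err = find_set_angle(K, corners, theta_true, candidates)
print(f"uncorrected error: {uncorrected:.3f}")
print(f"best set angle: {np.rad2deg(angle):.1f} deg, residual error: {err:.2e}")
```

In this model the pre-warp and the physical tilt compose to K·R(θ_actual − θ_set)·K⁻¹. The residual therefore vanishes when the set angle matches the actual angle, which is why step ① uses the opposite angle. A practical implementation would also rescale the pre-warped frame so that it stays inside the projector's pixel grid.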

2.2.3. Frequency Calibration

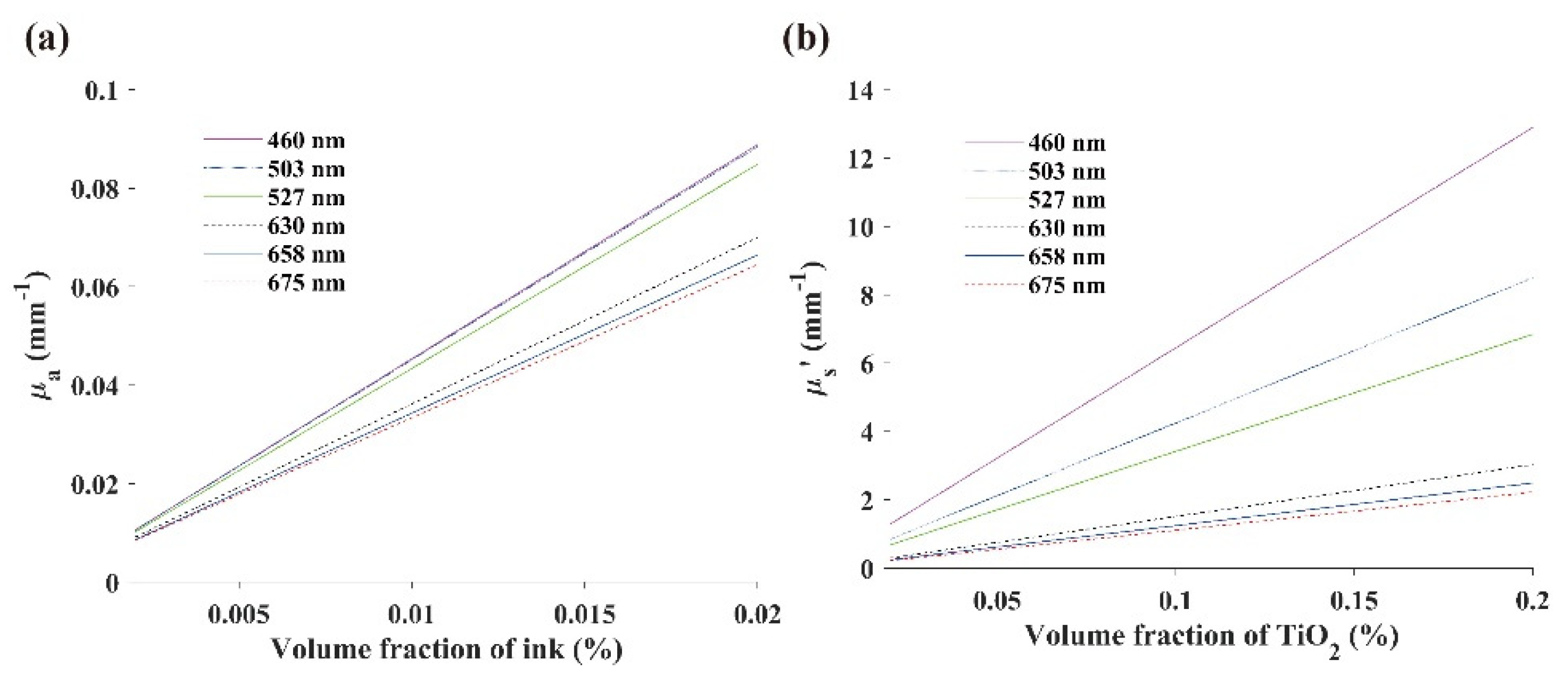

2.2.4. Calibration of the Optical Properties

2.3. Sample Preparation

2.4. Discriminant Model Analysis

3. Results and Discussion

3.1. Projector–Camera Calibration Results

3.2. Keystone Correction Results

3.3. Frequency Calibration Results

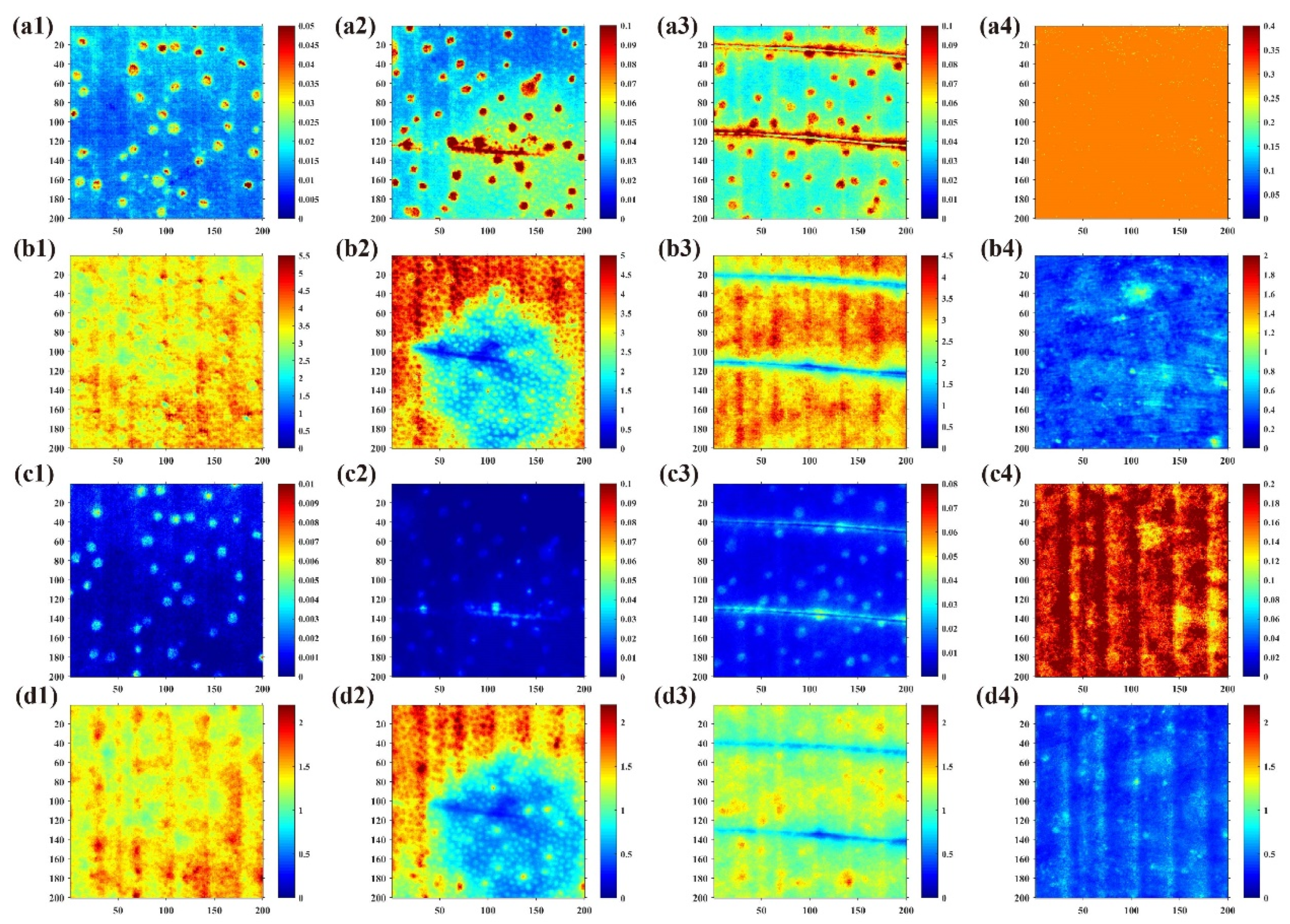

3.4. Optical Property Calibration Results

3.5. Damage Discrimination Results

4. Conclusions

Author Contributions

Funding

Acknowledgments

Data Availability Statement

Conflicts of Interest


| Wavelength (nm) | 460 | 503 | 527 | 630 | 658 | 675 |
|---|---|---|---|---|---|---|
| R² | 0.9955 | 0.9964 | 0.9961 | 0.9965 | 0.9965 | 0.9963 |

| Wavelength (nm) | 460 | 503 | 527 | 630 | 658 | 675 | Average |
|---|---|---|---|---|---|---|---|
| Relative error of μa (%) | 6.92 | 8.47 | 8.54 | 11.03 | 10.12 | 8.23 | 8.88 |
| Relative error of μ′s (%) | 4.02 | 5.08 | 4.55 | 6.21 | 4.76 | 2.6 | 4.54 |
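The Average column of the relative-error table can be reproduced as the arithmetic mean of the six per-wavelength values. This is an assumption, but it agrees with the tabulated 8.88 % and 4.54 % to within rounding.

The sketch below also includes a `relative_error` helper using the usual definition, |estimated − reference| / reference × 100. The helper name is illustrative, and the per-sample reference optical properties behind the table are not given here.

```python
from statistics import mean

# Per-wavelength relative errors (%), ordered 460, 503, 527, 630, 658, 675 nm
rel_err_mua = [6.92, 8.47, 8.54, 11.03, 10.12, 8.23]   # absorption coefficient μa
rel_err_musp = [4.02, 5.08, 4.55, 6.21, 4.76, 2.6]     # reduced scattering coefficient μ′s


def relative_error(estimated, reference):
    """Relative error in percent (assumed standard definition)."""
    return abs(estimated - reference) / reference * 100.0


avg_mua = mean(rel_err_mua)
avg_musp = mean(rel_err_musp)
print(f"average μa error: {avg_mua:.3f} %")
print(f"average μ′s error: {avg_musp:.3f} %")
```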

| Categories | Spatial frequency | Training-set cross-validation accuracy at 527 nm (%) | Training-set cross-validation accuracy at 675 nm (%) |
|---|---|---|---|
| Four categories (normal, bruised, scratched and abraded) | 0 mm⁻¹ (planar) | 82.5 | 77.5 |
| | All spatial frequencies (SFDI) | 92.5 | 83.8 |
| Three categories (normal, minor damage and serious damage) | 0 mm⁻¹ (planar) | 93.8 | 93.8 |
| | All spatial frequencies (SFDI) | 100 | 98.8 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Luo, Y.; Jiang, X.; Fu, X. Spatial Frequency Domain Imaging System Calibration, Correction and Application for Pear Surface Damage Detection. Foods 2021, 10, 2151. https://doi.org/10.3390/foods10092151
