DynaFlowNet: Flow Matching-Enabled Real-Time Imaging Through Dynamic Scattering Media

Abstract

1. Introduction

2. Methods

2.1. Principle and Methodology

1. Temporal variation in operator owing to media inhomogeneity;
2. Solution space ambiguity arising from inherent ill-posedness;
3. High computational complexity associated with high-dimensional reconstruction.

2.2. Dual-Conditioned Continuous Flow Transport for Dynamic Scattering Imaging

2.2.1. Forward Path Construction

2.2.2. Backward Generation Process

2.2.3. Vector Field Learning Mechanism

2.3. DynaFlowNet: Spectro-Temporal Flow Matching for Real-Time Dynamic Scattering Imaging

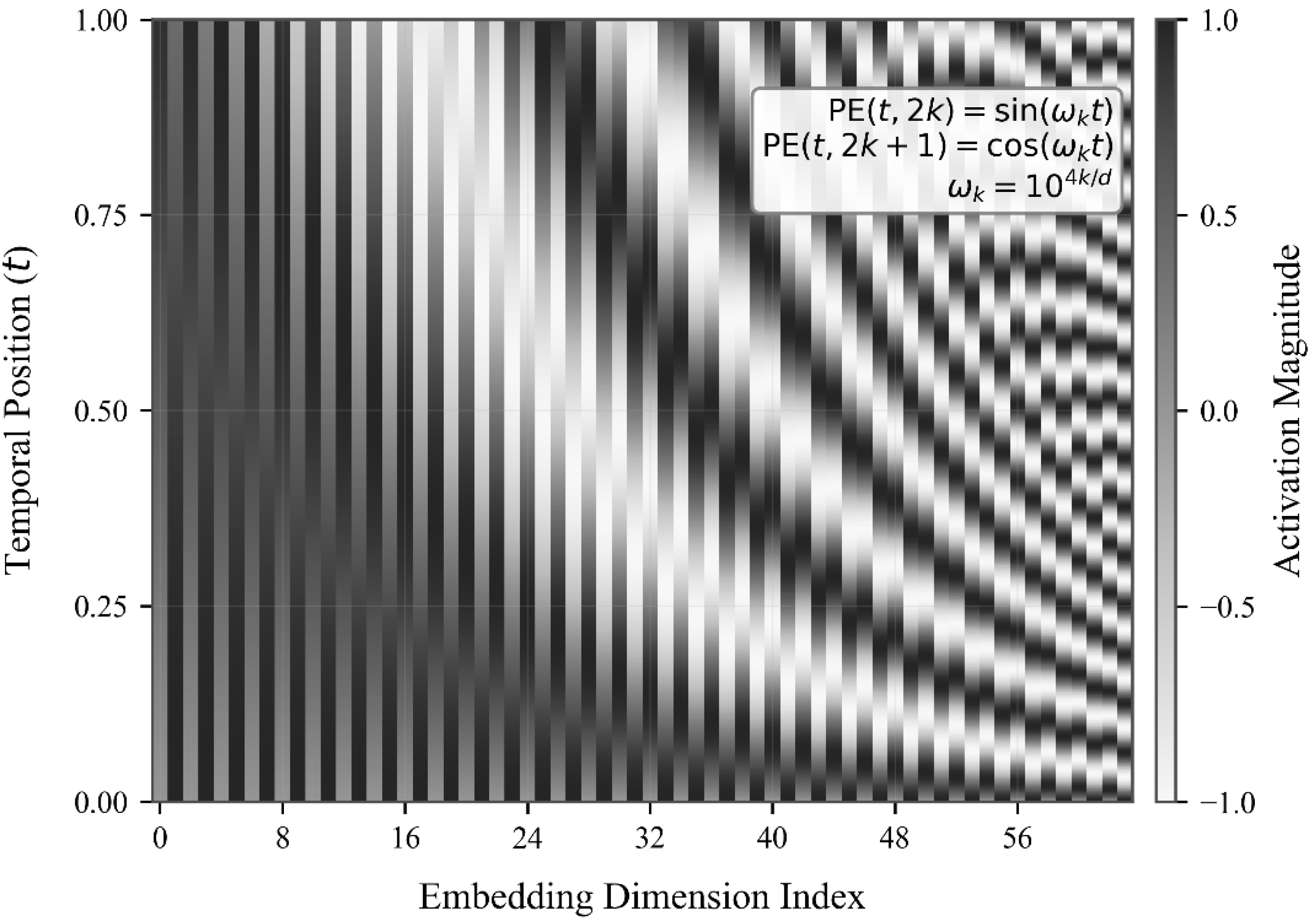

2.3.1. Spectro-Dynamic Time Embedding (SD-Embedding)

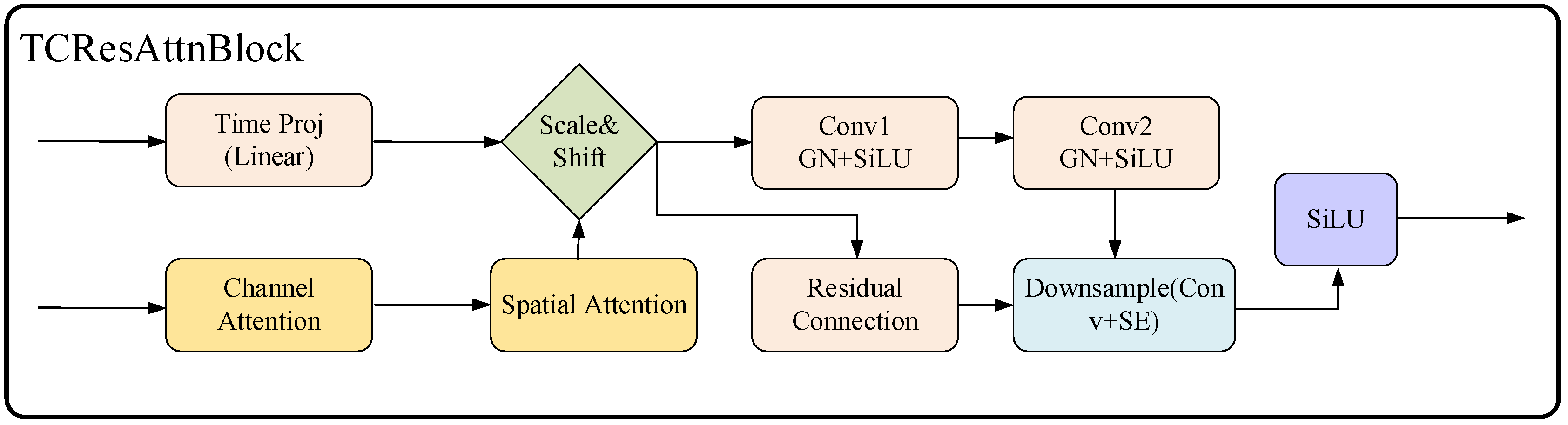

2.3.2. Temporal-Conditional Residual Attention Blocks (TCResAttnBlocks)

2.3.3. Multiscale Encoder–Decoder Architecture

2.4. Experimental Setup

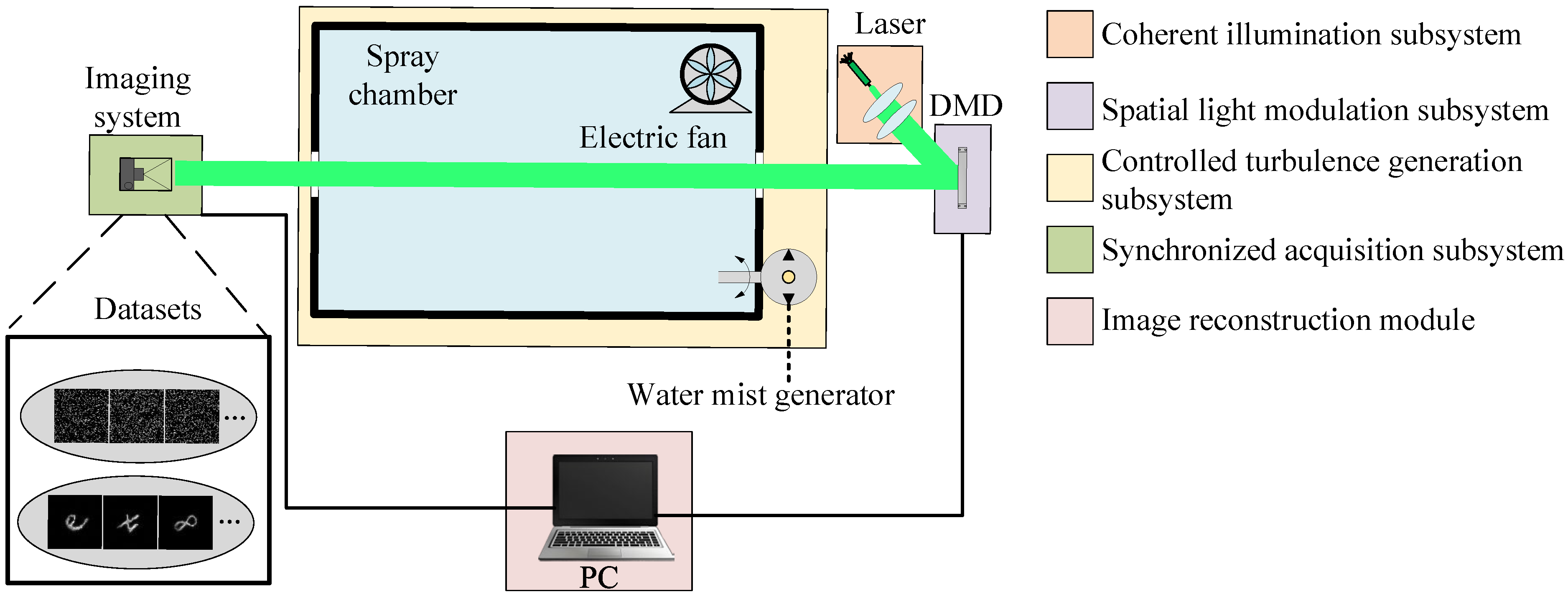

2.4.1. Optical Imaging System Configuration

- Coherent illumination subsystem: The radiation beam from a 532 nm semiconductor laser (ADR-1805, Changchun New Industries Optoelectronics, Changchun, China) was collimated and expanded through a 10× beam expander (GCO-25, Daheng Optics, Beijing, China) to generate a uniform spatial intensity profile (beam divergence < 0.1 mrad).

- Spatial light modulation subsystem: A DLP6500 digital micromirror device (DMD, Jin Hua Feiguang Electronic, Jin Hua, China) with 1920 × 1080 micromirrors (7.56 µm pixel pitch) enabled programmable wavefront encoding at λ = 532 nm, supporting 8-bit grayscale modulation.

- Controlled turbulence generation subsystem: A Y09-010 deionized-water aerosol generator produced polydisperse fog particles (mean diameter = 2.5 ± 0.3 µm) at a volumetric flow of 0.95 m³·min⁻¹. The turbulent scattering medium (turbulence intensity ε = 0.35) was confined within a 100 cm × 80 cm × 60 cm environmental chamber, with spatiotemporal dynamics induced by a programmable 360° multiblade rotary assembly (stepper motor control, 0–300 rpm).

- Synchronized acquisition subsystem: A MER2-160-227U3M CMOS camera (Sony IMX273 global shutter sensor, 1440 × 1080 resolution, Beijing, China) captured the images. An FPGA (field-programmable gate array)-based triggering mechanism achieved ±0.5 ms temporal synchronization between DMD pattern updates (at a 20 kHz refresh rate), turbulence modulation, and camera exposure.

- Scattering domain adaptability dataset:

2.4.2. Training DynaFlowNet via Flow Matching with Time-Conditioned Vector Fields

Algorithm 1: DynaFlowNet Training

1. repeat
2. Sample data pair
3. Sample time
4. Compute latent state
5. Generate time embedding
6. Predict vector field
7. Compute target flow
8. Calculate loss
9. Compute gradient
10. Update parameters
11. until convergence
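The training loop in Algorithm 1 can be illustrated with a minimal, standard-library-only sketch. The expressions for each step are not recoverable from the listing, so everything concrete here is an assumption: the straight-line path `x_t = (1 - t) * x0 + t * x1`, the constant target flow `x1 - x0` (a rectified-flow-style choice), the use of the raw time `t` in place of the SD-Embedding, and a hypothetical four-weight linear model standing in for the network. The toy data are scalars, not images.

```python
import random
from typing import List, Tuple


def features(x_t: float, t: float, cond: float) -> List[float]:
    # Hypothetical stand-in for the network input: bias, state, time, speckle condition.
    return [1.0, x_t, t, cond]


def predict(w: List[float], x_t: float, t: float, cond: float) -> float:
    # Linear "vector field" v_theta(x_t, t, cond); the real model is a deep network.
    return sum(wi * fi for wi, fi in zip(w, features(x_t, t, cond)))


def train(pairs: List[Tuple[float, float]], steps: int = 5000,
          lr: float = 0.02, seed: int = 0) -> Tuple[List[float], List[float]]:
    rng = random.Random(seed)
    w = [0.0, 0.0, 0.0, 0.0]
    losses = []
    for _ in range(steps):                       # step 1: repeat
        x0, x1 = rng.choice(pairs)               # step 2: sample (speckle, target) pair
        t = rng.random()                         # step 3: sample time in [0, 1)
        x_t = (1.0 - t) * x0 + t * x1            # step 4: latent state (assumed linear path)
        v_pred = predict(w, x_t, t, x0)          # steps 5-6: raw t used as the "embedding"
        v_target = x1 - x0                       # step 7: target flow (assumed)
        err = v_pred - v_target
        losses.append(err * err)                 # step 8: squared-error loss
        grad = [2.0 * err * f for f in features(x_t, t, x0)]   # step 9: gradient
        w = [wi - lr * gi for wi, gi in zip(w, grad)]          # step 10: SGD update
    return w, losses                             # step 11: fixed step budget, not a convergence test


# Toy data (hypothetical): the "clean" value is an affine function of the "speckle" value.
toy_pairs = [(x / 10, 2 * x / 10 + 1) for x in range(-10, 11)]
weights, losses = train(toy_pairs)
print(sum(losses[:200]) / 200, sum(losses[-200:]) / 200)
```

The fixed step count replaces the "until convergence" criterion purely to keep the sketch deterministic and short.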

Algorithm 2: DynaFlowNet Sampling

1. Initialize state (speckle input)
2. Set step size and total steps
3. For each step do
4.
5. Generate embedding
6. Predict flow field
7. Update state
8. end for
9. Output reconstruction
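The sampling procedure in Algorithm 2 reads as a fixed-step integration of the learned flow, starting from the speckle input. The sketch below assumes a forward Euler update with uniform step size `1 / num_steps` and assumes that the step whose content is missing from the listing computes the current time; neither detail is confirmed by the listing. `toy_field` is a hypothetical closed-form stand-in for the trained network.

```python
from typing import Callable


def euler_sample(x0: float,
                 field: Callable[[float, float, float], float],
                 num_steps: int) -> float:
    """Integrate dx/dt = field(x, t, cond) from t = 0 to t = 1 with forward Euler."""
    dt = 1.0 / num_steps              # step 2: step size from the total number of steps
    x = x0                            # step 1: initial state is the speckle input
    for k in range(num_steps):        # step 3: loop over integration steps
        t = k * dt                    # step 4 (assumed): current time
        v = field(x, t, x0)           # steps 5-6: embed time and predict the flow
        x = x + dt * v                # step 7: Euler update of the state
    return x                          # step 9: reconstruction


def toy_field(x: float, t: float, cond: float) -> float:
    # Hypothetical constant-velocity field that carries cond to 2 * cond + 1.
    return cond + 1.0


reconstruction = euler_sample(0.5, toy_field, num_steps=10)
print(reconstruction)
```

For a field that is constant along each trajectory, as in this toy case, Euler integration is exact for any number of steps, which is one reason straight transport paths are attractive for low-latency inference.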

2.4.3. Ablation Study Design

2.4.4. Evaluating Generalization to Unseen Binary Geometries

3. Results and Analysis

3.1. Comparative Performance Analysis of DynaFlowNet

3.1.1. Quantitative Imaging Performance Metrics

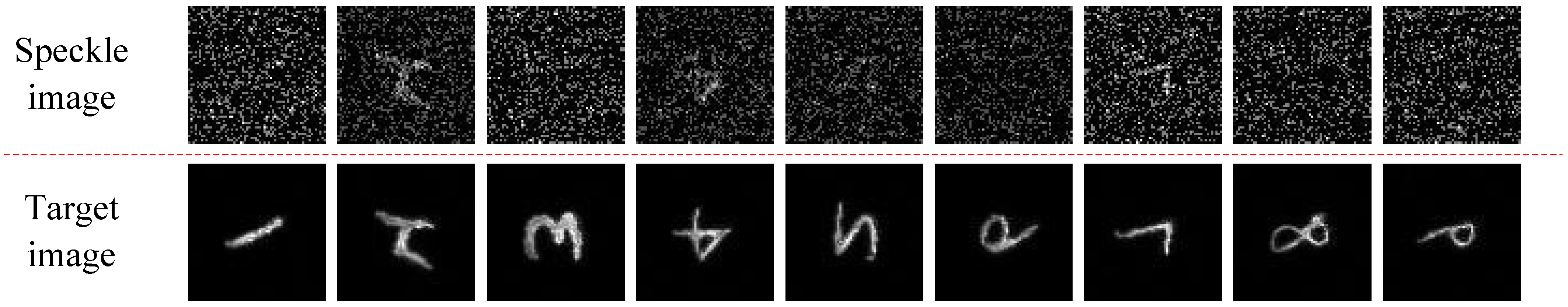

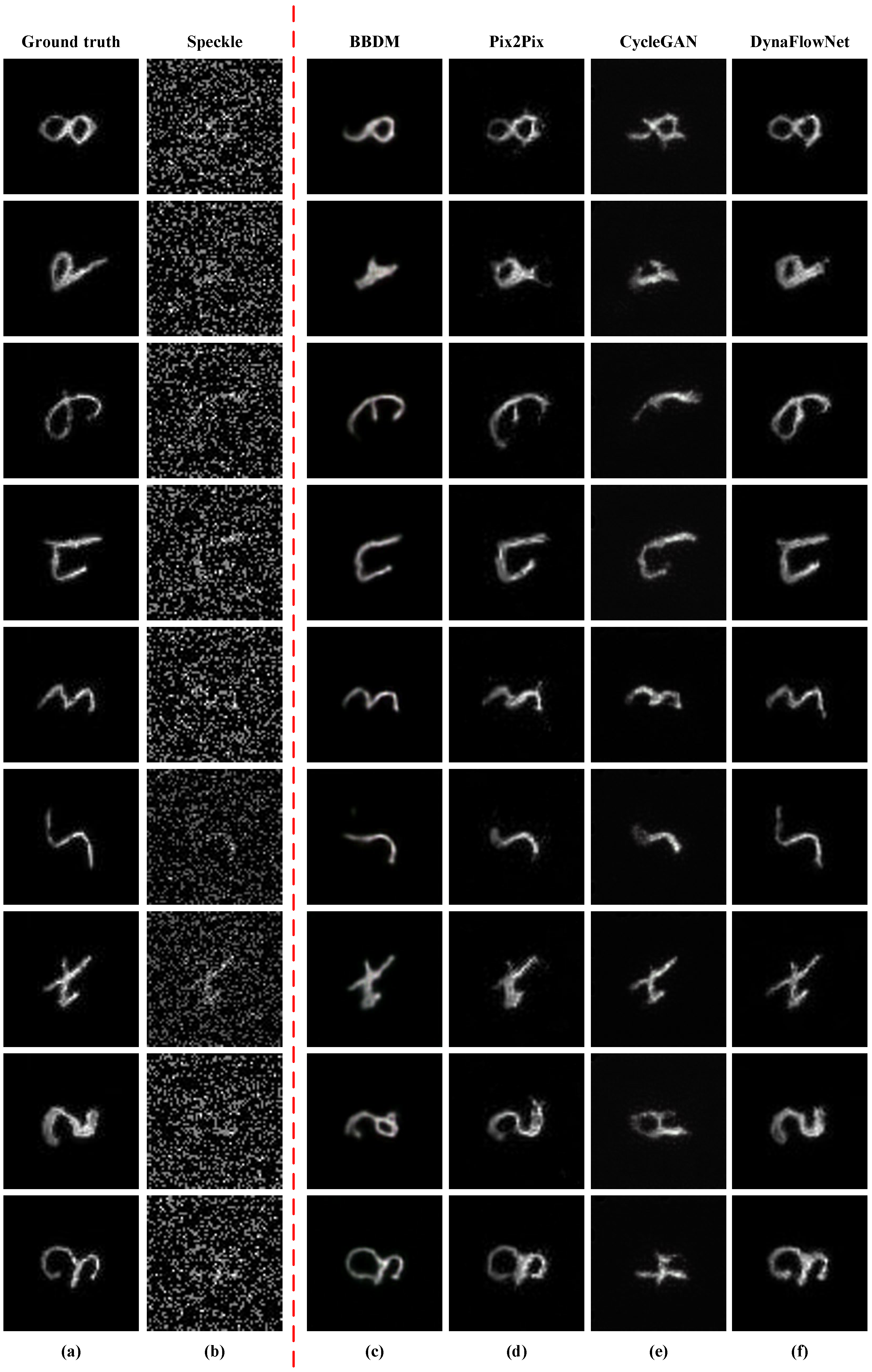

3.1.2. Qualitative Reconstruction Quality Assessment

3.2. Ablation Study on Core Components

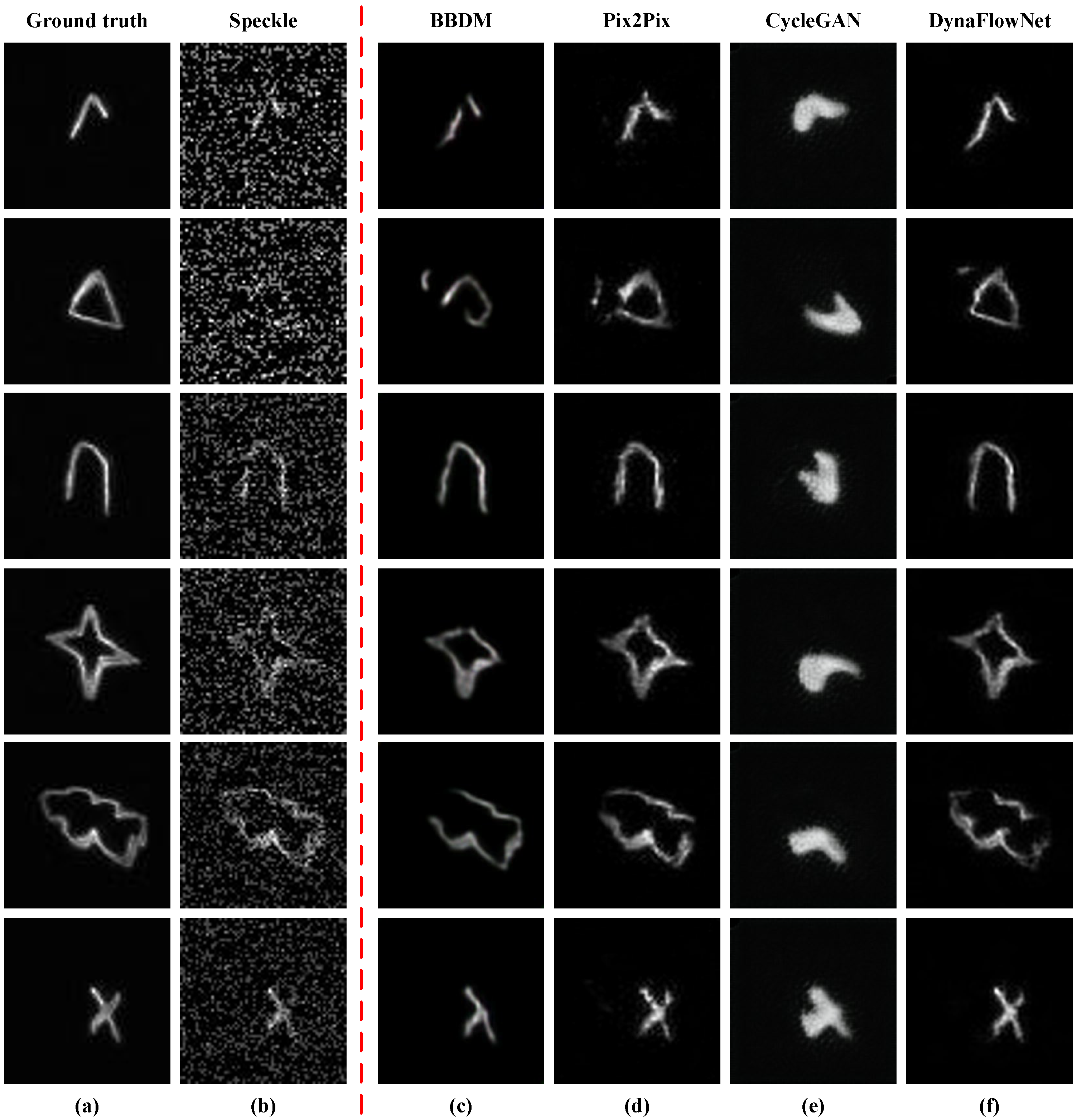

3.3. Generalization to Unseen Binary Geometries

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Model Name | Core Structure | Time Embedding Mechanism | Attention Mechanism | Condition Modulation Mechanism |
|---|---|---|---|---|
| Ablation-A (Baseline) | Residual Convolution with Convolution (Conv) + Group Normalization (GN) + Sigmoid Linear Unit (SiLU) | Basic (Linear Projection) | × | None |
| Ablation-B | A + CBAM Attention Module | Basic (Linear Projection) | ✓ | None |
| Ablation-C | B + Enhanced Time Modulation | Spectro-DynaTime Embedding | ✓ | None |
| Ablation-D | C + Deep Condition Modulation | Spectro-DynaTime Embedding | ✓ | Deep Feature Fusion |
| DynaFlowNet (Full) | Full TCResAttnBlock | Spectro-DynaTime Embedding | ✓ | Deep Feature Fusion |

| Model | Training Time, 50 Epochs (h) | GPU Memory (MB) | Latency per Frame (ms) | FPS | Parameters (Million) |
|---|---|---|---|---|---|
| CycleGAN | 3.97 | 19590 | 53.9 | 18.55 | 28.3 |
| Pix2Pix | 0.18 | 4944 | 17.8 | 56.18 | 57.2 |
| BBDM | 0.90 | 17978 | 867 | 1.15 | 237.1 |
| DynaFlowNet | 0.15 | 3974 | 7.4 | 134.77 | 19.4 |
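The FPS column in the efficiency table appears to be the reciprocal of the per-frame latency. A short check under that assumption, using only the latencies reported in the table:

```python
def fps_from_latency_ms(latency_ms: float) -> float:
    """Frames per second implied by a per-frame latency given in milliseconds."""
    return 1000.0 / latency_ms


# Latencies copied from the efficiency table (ms per frame).
latency_ms = {"CycleGAN": 53.9, "Pix2Pix": 17.8, "BBDM": 867.0, "DynaFlowNet": 7.4}
fps = {name: round(fps_from_latency_ms(ms), 2) for name, ms in latency_ms.items()}
print(fps)
```

CycleGAN, Pix2Pix, and BBDM reproduce the tabulated FPS to two decimals. For DynaFlowNet the rounded latency of 7.4 ms gives about 135.1 FPS, whereas the tabulated 134.77 FPS corresponds to roughly 7.42 ms, so the small gap is consistent with the latency having been rounded to one decimal place.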

| Metric | Model | Mean | Std | Median | Min | Max | 95% CI | CV (%) |
|---|---|---|---|---|---|---|---|---|
| PSNR (dB) | Speckle | 15.53 | 3.79 | 15.09 | 11.16 | 31.58 | ±0.20 | 24.4 |
| | Pix2Pix† | 27.01 | 3.05 | 27.56 | 17.93 | 34.52 | ±0.16 | 11.3 |
| | BBDM† | 28.07 | 3.75 | 28.71 | 17.35 | 36.20 | ±0.20 | 13.4 |
| | CycleGAN† | 24.34 | 3.24 | 24.62 | 16.40 | 33.31 | ±0.17 | 13.3 |
| | DynaFlowNet† | 28.46 | 3.95 | 28.88 | 18.72 | 38.04 | ±0.21 | 13.9 |
| SSIM | Speckle | 0.0725 | 0.0604 | 0.0560 | 0.0048 | 0.6430 | ±0.0032 | 83.3 |
| | Pix2Pix† | 0.8987 | 0.0232 | 0.9059 | 0.7946 | 0.9350 | ±0.0012 | 2.6 |
| | BBDM† | 0.8964 | 0.0551 | 0.9088 | 0.6435 | 0.9811 | ±0.0029 | 6.1 |
| | CycleGAN† | 0.3510 | 0.1976 | 0.2708 | 0.1155 | 0.8934 | ±0.0103 | 56.3 |
| | DynaFlowNet† | 0.9112 | 0.0540 | 0.9263 | 0.6622 | 0.9843 | ±0.0028 | 5.9 |
| PCC | Speckle | 0.3337 | 0.2507 | 0.2581 | −0.0386 | 0.9762 | ±0.0131 | 75.1 |
| | Pix2Pix† | 0.8578 | 0.1359 | 0.9099 | 0.0997 | 0.9782 | ±0.0071 | 15.8 |
| | BBDM† | 0.8651 | 0.1620 | 0.9333 | 0.0119 | 0.9882 | ±0.0085 | 18.7 |
| | CycleGAN† | 0.7257 | 0.2580 | 0.8294 | −0.0625 | 0.9830 | ±0.0135 | 35.6 |
| | DynaFlowNet† | 0.8832 | 0.1316 | 0.9361 | 0.1932 | 0.9907 | ±0.0069 | 14.9 |
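The CV (%) column in the statistics table matches the usual definition of the coefficient of variation, the standard deviation divided by the mean and expressed as a percentage. The following check assumes that definition and uses a few (mean, std) pairs copied from the table:

```python
def cv_percent(mean: float, std: float) -> float:
    """Coefficient of variation in percent: 100 * std / |mean|."""
    return 100.0 * std / abs(mean)


# (mean, std) pairs copied from the statistics table.
rows = {
    "PSNR / Speckle": (15.53, 3.79),
    "PSNR / DynaFlowNet": (28.46, 3.95),
    "SSIM / CycleGAN": (0.3510, 0.1976),
    "SSIM / DynaFlowNet": (0.9112, 0.0540),
}
cv = {name: round(cv_percent(m, s), 1) for name, (m, s) in rows.items()}
print(cv)
```

A low CV, as for the SSIM of DynaFlowNet, indicates that the score varies little across test samples relative to its mean, whereas the high CV of the CycleGAN SSIM reflects unstable structural fidelity.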

| Model | PSNR (dB) | ΔPSNR | SSIM | PCC |
|---|---|---|---|---|
| Ablation-A (Baseline) | 25.84 | - | 0.5970 | 0.8046 |
| Ablation-B (w/Attention) | 26.29 | +0.45 dB | 0.7855 | 0.8127 |
| Ablation-C (w/Attention + Time) | 27.86 | +2.02 dB | 0.9078 | 0.8785 |
| Ablation-D (w/Attention + Time + Condition) | 28.16 | +2.32 dB | 0.9076 | 0.8677 |
| DynaFlowNet (Full) | 28.46 | +2.62 dB | 0.9112 | 0.8832 |

| Model | PSNR (dB) | Domain Shift ΔPSNR | SSIM | PCC |
|---|---|---|---|---|
| Pix2Pix | 24.87 | −2.14 dB | 0.4174 | 0.8036 |
| BBDM | 24.37 | −3.70 dB | 0.2873 | 0.7755 |
| CycleGAN | 18.98 | −5.36 dB | 0.7755 | 0.5828 |
| DynaFlowNet (Full) | 27.41 | −1.05 dB | 0.7351 | 0.8607 |
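The Domain Shift ΔPSNR column is consistent with the mean PSNR on the unseen binary geometries minus the mean in-domain PSNR reported in the statistics table. A short check under that reading, with all values copied from the two tables:

```python
# Mean PSNR (dB) on the in-domain test set, from the statistics table.
in_domain_psnr = {"Pix2Pix": 27.01, "BBDM": 28.07, "CycleGAN": 24.34, "DynaFlowNet": 28.46}
# Mean PSNR (dB) on unseen binary geometries, from the generalization table.
unseen_psnr = {"Pix2Pix": 24.87, "BBDM": 24.37, "CycleGAN": 18.98, "DynaFlowNet": 27.41}

# Domain-shift penalty: negative values mean quality drops on unseen geometries.
domain_shift = {
    model: round(unseen_psnr[model] - in_domain_psnr[model], 2)
    for model in in_domain_psnr
}
smallest_drop = max(domain_shift, key=domain_shift.get)
print(domain_shift, smallest_drop)
```

Under this definition DynaFlowNet shows the smallest PSNR penalty of the four models when moving to unseen geometries.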


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lei, X.; Wang, J.; Wang, M.; Zhu, J. DynaFlowNet: Flow Matching-Enabled Real-Time Imaging Through Dynamic Scattering Media. Photonics 2025, 12, 923. https://doi.org/10.3390/photonics12090923
