1. Introduction

Recent work by the present Author has achieved a vast reduction in the integration times that have plagued the Hanbury Brown–Twiss effect for so long. These results are shown in References [1,2,3], culminating (in Reference [3]) with a sequence of asymptotic theorems required for the stochastic search algorithm. The way is now open to reap the advantages of simple, inexpensive flux-collecting hardware, immunity to seeing conditions, and unlimited baselines and image resolution. Furthermore, since the Brown–Twiss effect has been extended to two-dimensional imaging, it is appropriate that we term the algorithm the Intensity Correlation Imaging (ICI) algorithm.

Within “The Rise of the Brown-Twiss Effect” surveyed in this Special Issue, the reduction in integration times has been accomplished by means of the Noise-Reducing Phase Retrieval (NRPR) algorithm, which is embedded within a stochastic search algorithm. In the thorough analysis of [3], the algorithm performed very well despite its complexities, such as initial conditions consisting of small random perturbations in the pixel intensities. However, in this paper, we update and simplify the algorithm by constructing a discrete-time dynamic system. Not only does the new algorithm perform as well as the original, but it also brings the benefits of a nonnegative dynamic system.

We pause to note the simplicity of the ICI hardware and measurements. The imaging process begins with the calculation of the reduced integration time,

, relative to the Brown–Twiss calculation,

:

where

may include redundant baselines and multispectral components as treated in “The Rise of the Brown-Twiss Effect” and in Reference [3]. The quantity

is the partial coherence effect, which concerns the size of the aperture area,

A; this is relative to the area that is small enough that

. Furthermore, we emphasize that

, the number of constrained black pixels in our observatory grid of apertures, should be approximately the same number of apertures as in the

grid, minus the number of pixels,

, such that

is roughly the size of the illuminated object. Thus, we set

. This selection is in line with the best box size strategy that starts with the smallest box sizes and increases with size until the zero-noise image is determined.

Next, once the reduced integration time has been computed, the observatory apertures are exercised. Each aperture is a mere flux collector with an opening that directs the light of the celestial object onto a photodetector coupled with a high-pass filter. The output of each aperture is a time series of intensity fluctuations that is transmitted to a computational facility. Then, all pairs of the intensity fluctuations (including redundant baselines and multispectral components) are cross-correlated over the averaging time.
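The pairwise cross-correlation step described above can be sketched as follows. This is a minimal illustration rather than the observatory software: the function name `pairwise_cross_correlations`, the array layout, and the synthetic Gaussian test signals are all assumptions introduced here, and the mean subtraction is only a crude stand-in for the high-pass filter.

```python
import numpy as np

def pairwise_cross_correlations(fluctuations):
    """Time-averaged cross-correlation for every pair of apertures.

    fluctuations: array of shape (n_apertures, n_samples); each row is the
    intensity fluctuation time series from one flux collector.
    Returns a symmetric (n_apertures, n_apertures) matrix whose (i, j) entry
    is the sample average of row_i * row_j over the averaging time.
    """
    x = np.asarray(fluctuations, dtype=float)
    # Remove any residual mean (a crude stand-in for the high-pass filter).
    x = x - x.mean(axis=1, keepdims=True)
    n_samples = x.shape[1]
    return (x @ x.T) / n_samples

# Synthetic example (hypothetical data): apertures 0 and 1 share a common
# fluctuation component, aperture 2 is statistically independent of both.
rng = np.random.default_rng(0)
common = rng.standard_normal(20000)
signals = np.stack([
    common + rng.standard_normal(20000),   # aperture 0
    common + rng.standard_normal(20000),   # aperture 1
    rng.standard_normal(20000),            # aperture 2
])
corr = pairwise_cross_correlations(signals)
```

In this toy case, `corr[0, 1]` is close to the variance of the shared component, while `corr[0, 2]` is close to zero. Every pair is computed in a single matrix product, which is one reason the step parallelizes easily.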

Having secured the batch of cross-correlation data for a particular image, the Stellar Intensity Interferometry (SII) community can operate our stochastic search algorithm to determine the zero-noise image. Please note that the stochastic search uses only one data batch, so the integration time is not increased. The computation time of the stochastic search for a megapixel image typically ranges from seconds to a few minutes.

Fundamentally, ICI mathematics is an algorithm that, when combined with the proper inexpensive hardware (by all means including that of the SII community), enormously reduces the integration times and opens the way to the long-standing benefits of ICI for astronomy. I would encourage my readers to become familiar with both the earlier and the newer algorithm. I have found that the best way to validate an algorithm is to try it. My manuscripts establish an algorithm that produces a noise-free image. Readers are welcome to peruse the prior algorithm listed in the next Section (a MATLAB version is also given in “The Rise of the Brown-Twiss Effect”), as well as the new algorithm presented here. The goal of the present article is to streamline the algorithm in the form of a discrete-time dynamic system, reduce the size of the state space, and render the algorithm suitable for a compartmental nonnegative dynamic system that can be joined with a spiking neural network Artificial Intelligence.

We progress as follows.

Section 2 begins with the NRPR algorithm, which contains the correct integration times and sets up the foreground/background dichotomy.

Section 3 transforms the NRPR steps into a discrete-time dynamic system where the variables consist of the coherence magnitude and the phase.

Section 4 reveals that the dynamic system has two separate phases: (1) the demarcation of the postulated “box” such that the exterior of the box fades away, and (2) the final convergence of the nonnegative image intensity within the box. The second convergence constitutes the nonnegative dynamic system. Thus, the six steps of the NRPR algorithm are reduced to two steps, and the two variables are strictly nonnegative.

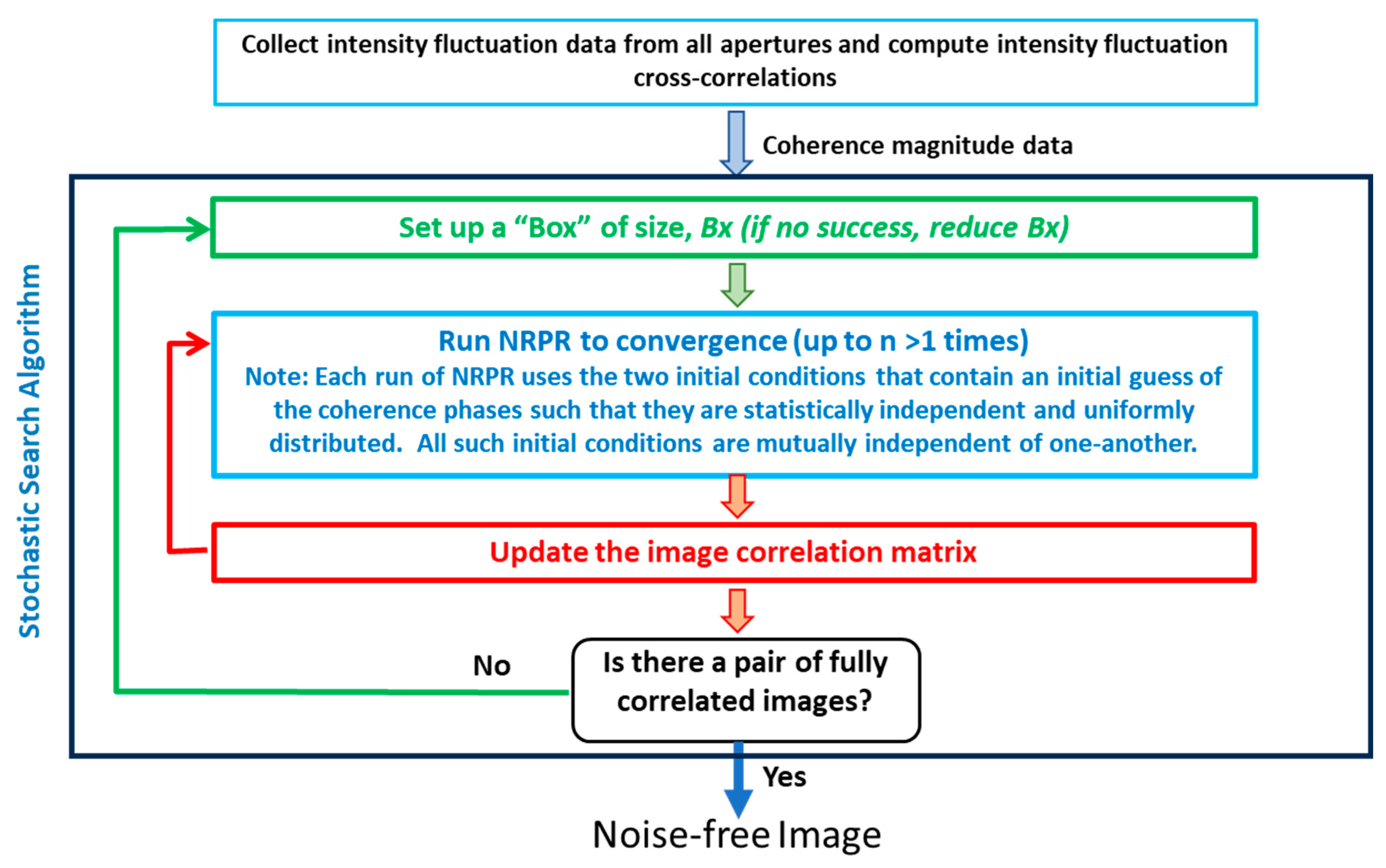

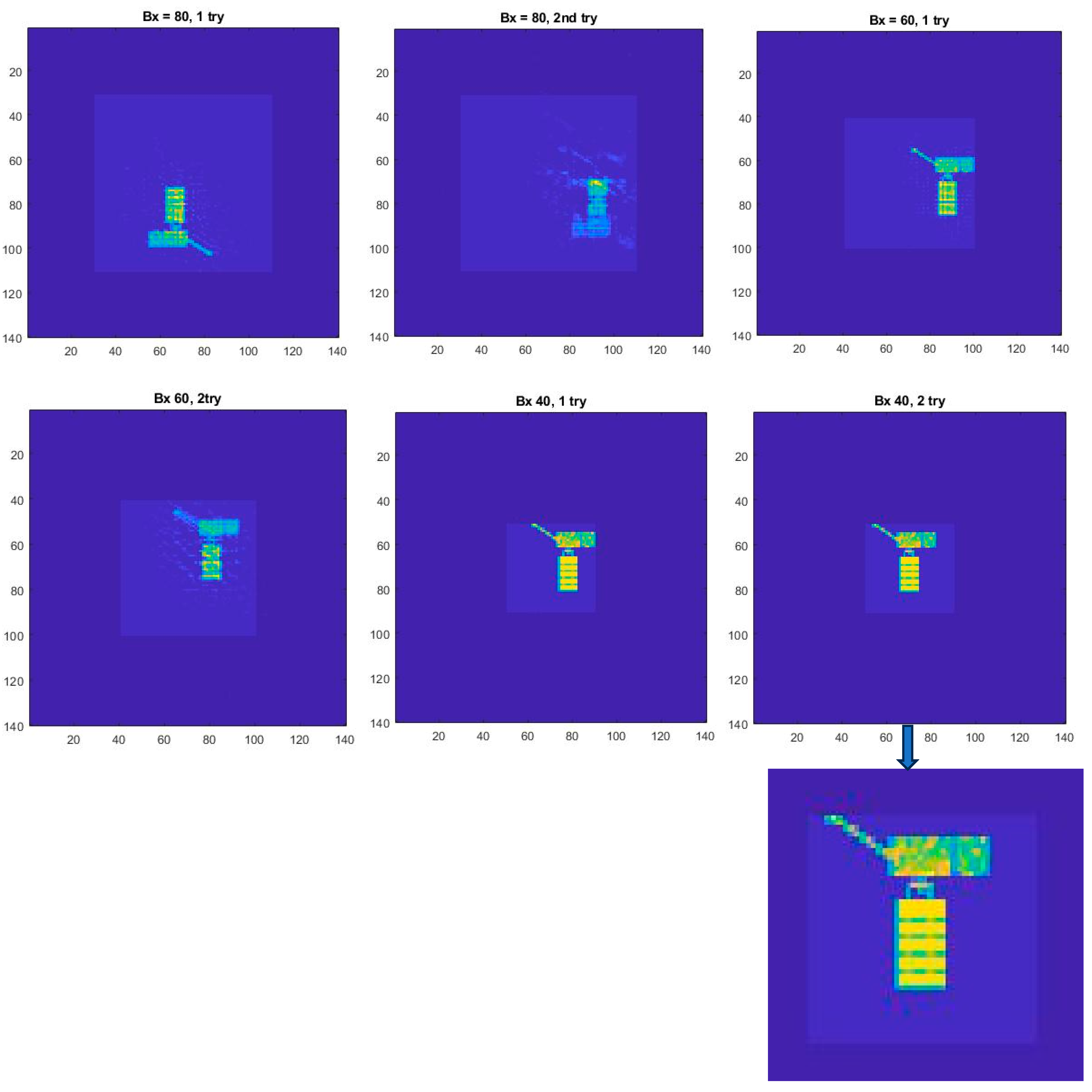

Given the nonnegative dynamic system, Section 5 merges the dynamic system within the stochastic search algorithm to gradually reduce the “box” sizes. In this formulation, we consider the stochastic search algorithm employing random coherence phases within the two initial conditions rather than random pixel intensities. The embedded NRPR runs use statistically independent and uniformly distributed coherence phases spanning

. The search sets up a square “Bx” and runs NRPR

n times until two images are found that are fully correlated. In this case, the two outcomes are the noise-free image (barring 180-degree rotations or translations). If there are no such correlations within n computations, then the algorithm reduces the box size and tries again. Alternatively, a quicker route to convergence is to start with a box size that is smaller than the illuminated object and then increase the box size until the noise-free image is found.
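The search loop outlined above can be sketched as follows, using the variant that starts with small boxes and increases their size. This is a structural sketch under stated assumptions, not the Author's implementation: `run_nrpr` is a hypothetical placeholder for one NRPR run, the phase range [-π, π) is assumed because the range is not shown in the text above, and the 0.99 correlation threshold and the toy stand-in `toy_nrpr` are illustrative only.

```python
import numpy as np

def max_circular_correlation(a, b):
    """Peak normalized circular cross-correlation (1.0 = equal up to a shift)."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    if denom == 0.0:
        return 0.0
    xcorr = np.fft.ifft2(np.fft.fft2(a) * np.conj(np.fft.fft2(b))).real
    return float(xcorr.max() / denom)

def images_match(a, b, threshold=0.99):
    """True if b equals a up to translation and/or 180-degree rotation."""
    rotated = b[::-1, ::-1]
    best = max(max_circular_correlation(a, b),
               max_circular_correlation(a, rotated))
    return best >= threshold

def stochastic_search(run_nrpr, n_pixels, box_sizes, n_runs, rng):
    """Try each box size; stop when two runs give fully correlated images."""
    for box_size in box_sizes:
        images = []
        for _ in range(n_runs):
            # Assumed phase range; the source does not show the interval.
            phases = rng.uniform(-np.pi, np.pi, size=(n_pixels, n_pixels))
            candidate = run_nrpr(box_size, phases)
            if any(images_match(prev, candidate) for prev in images):
                return box_size, candidate
            images.append(candidate)
    return None

# Toy stand-in for NRPR (hypothetical): it "converges" only when the box is
# large enough, and otherwise returns an unrelated random image.
truth = np.zeros((8, 8))
truth[2:5, 3:6] = [[1, 2, 0], [0, 3, 1], [4, 0, 0]]

def toy_nrpr(box_size, phases):
    if box_size < 4:
        return np.abs(np.cos(phases))
    return truth if phases[0, 0] > 0 else truth[::-1, ::-1]

found = stochastic_search(toy_nrpr, 8, box_sizes=[2, 3, 4, 5], n_runs=4,
                          rng=np.random.default_rng(0))
```

With the toy stand-in, the search rejects box sizes 2 and 3 and stops at box size 4 as soon as two runs agree. Comparing against the 180-degree rotated image as well as all circular shifts reflects the ambiguities noted in the text.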

Having explained how integration times are enormously reduced via a nonnegative dynamic system, Section 6 discusses the advantages of the ICI algorithm over other imaging technologies. We start with large-amplitude interferometry systems. These systems are still useful but are extremely complex in comparison with ICI. New, large facilities face an enormous cost barrier in comparison with ICI observatories, which are composed of inexpensive flux collectors and have rapid integration times. Next, we consider adaptive optics. Adaptive optics removes the wavefront distortions introduced by the Earth’s atmosphere by means of an optical component that is introduced in the light beam and that can introduce a controllable counter wavefront distortion that follows that of the atmosphere both spatially and temporally. We cite the following quotation:

“The application of adaptive optics to astronomical telescopes therefore requires the development of expensive, complex opto-mechanical devices and their control systems.”

Why is adaptive optics nearly extinct? ICI flux collectors are insensitive to atmospheric turbulence. Moreover, the flux collectors are exceedingly inexpensive.

Finally, a review of the Stellar Intensity Interferometry (termed SII) community is more promising. The apertures transmit intensity fluctuations to a computational facility where each pair of signals is cross-correlated in accordance with the Brown–Twiss formulation. In any case, SII is a steppingstone toward the incorporation of the ICI–stochastic search algorithm. In particular, basic SII can reduce integration times by means of redundant baseline measurements and multispectral channels. Such is also the case for ICI with the stochastic search algorithm. Furthermore, baseline redundancy and multispectral channels are entirely consistent with nonnegative dynamic systems.

In Section 7, we address a practical application, namely a ground-based imaging observatory design for commercial geostationary satellite inspection, focusing on apparent magnitudes of 10 to 14. In the process, we will compare the performance of both SII and ICI, in which the integration times of SII are

-fold larger than the ICI integration times, which range from 6 s to a few hours. The ICI satellite observatory is also capable of high-resolution imaging of celestial objects. For example, there are numerous galaxies brighter than apparent magnitude 14. Compared with the James Webb Space Telescope (JWST), the ICI satellite observatory has significantly finer angular resolution, and the JWST cost is enormously greater. This confirms the validity of the low-cost, streamlined, nonnegative ICI dynamic system.

2. Description of the NRPR Algorithm

It is supposed that there is an array of flux-collecting apertures that are arranged so as to form a square, evenly spaced grid on the “u-v plane” (which, in interferometry, denotes the Fourier domain projected on the plane perpendicular to the target line of sight). The grid has a one-to-one correspondence to a matrix of

pixels forming the construction of an image of a luminous object amidst a black sky. The defining characteristics of the NRPR algorithm are as follows:

The matrix

defines the set of constraints.

declares the pixels

to be constrained to be zero, whereas

if

is unconstrained. The matrix

is the opposite, with unity for unconstrained pixels and zero for constrained pixels. The region wherein

we have termed “the background”, and the remaining region “the foreground”. In this scenario, the initial inputs and the subsequent algorithm are defined as in

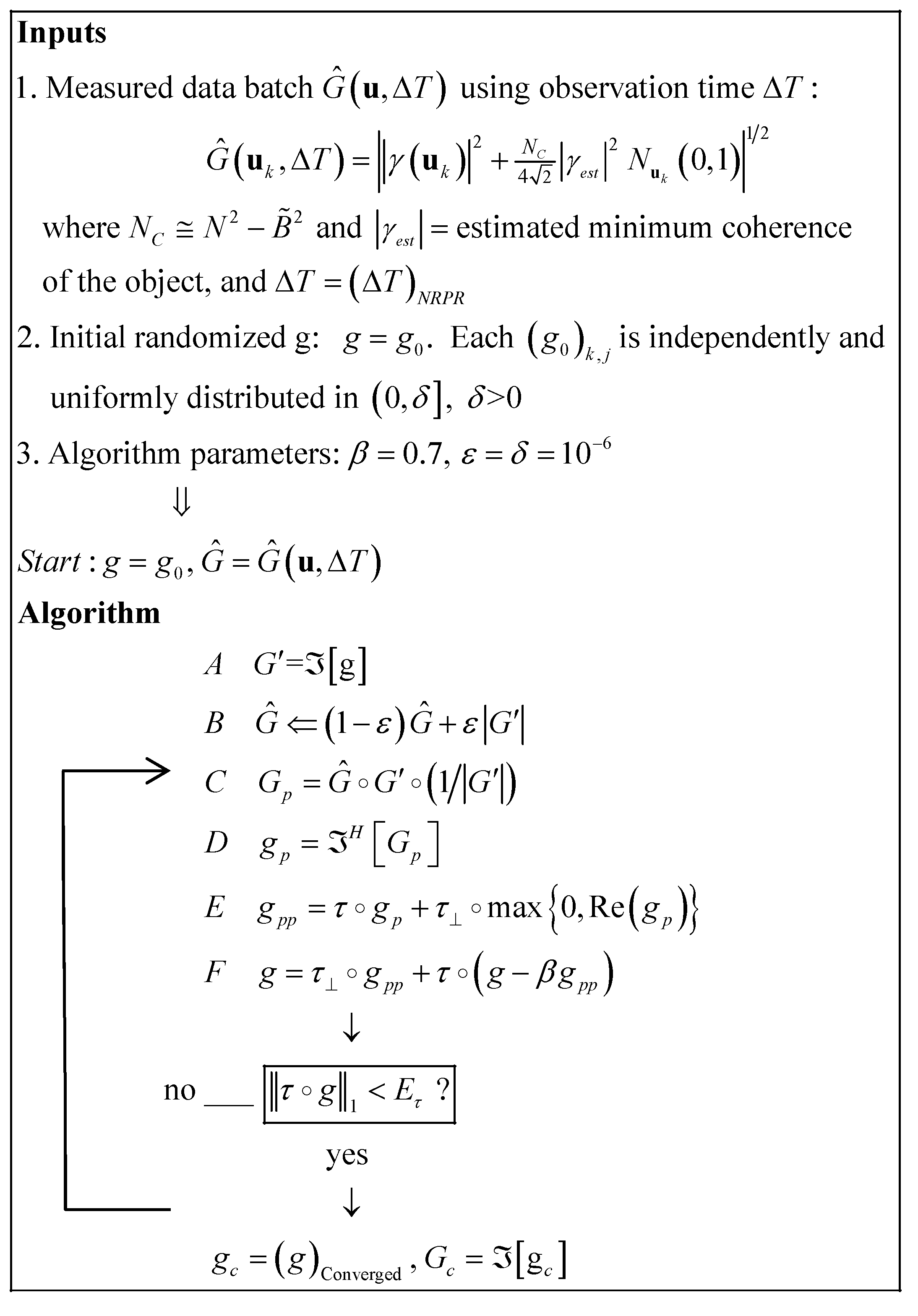

Figure 1.
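The two complementary constraint matrices described above can be illustrated with a short sketch. The names `box_masks`, `background`, and `foreground`, as well as the 9-by-9 grid and the box of side 5, are assumptions chosen for illustration; the centered square box follows the hypothesis used later in the text.

```python
import numpy as np

def box_masks(n_pixels, box_size):
    """Indicator matrices for a square box centered in an n_pixels grid.

    background[i, j] = 1 where the pixel is constrained to be zero,
    foreground[i, j] = 1 where the pixel is unconstrained.
    """
    if not 0 < box_size <= n_pixels:
        raise ValueError("box_size must lie in 1..n_pixels")
    start = (n_pixels - box_size) // 2
    foreground = np.zeros((n_pixels, n_pixels), dtype=int)
    foreground[start:start + box_size, start:start + box_size] = 1
    background = 1 - foreground   # the "opposite" matrix
    return background, foreground

background, foreground = box_masks(n_pixels=9, box_size=5)
n_constrained = int(background.sum())   # 81 grid pixels - 25 box pixels = 56
```

The count of constrained pixels equals the number of grid pixels minus the number of pixels inside the box, in line with the prescription given in the Introduction.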

Figure 1 shows the various steps of the NRPR algorithm. There are several initial specifications. The first is the measured coherence magnitude,

, which obeys a positivity constraint. This is composed of the noise-free normalized coherence magnitude,

, where

is the relative position vector of a pair of the flux-collecting apertures,

is a complex-valued Gaussian noise of zero mean and unit variance, and

is the number of constrained pixels, i.e.,

, as prescribed in the Introduction. In addition, the integration time,

, used for the NRPR integration time, is also related to Equation (1) and the appearance of the partial coherence effect. This will be considered in due course in a later Section. The formulation of this noise model was accomplished in [1], resulting in an asymptotic expression for the precision estimate of the necessary integration time. The third initial specification is

, where

is independently and uniformly distributed in

, and

is real and positive. It is these random specifications of the initial values of the pixel intensities that we wish to supplant with a dynamic system having additional simplifications. In passing, the closing of the image computation is measured in one norm of the magnitude of the pixel intensity. The image precision is measured by

.

It must be emphasized that only a single data batch of measured coherence magnitude measurements will be used in the process by which the zero-noise image is determined. Thus, the adaptive algorithms described here do not increase the necessary integration time. However, the adaptive algorithms require repeated NRPR computations, and each such repetition uses a new seed for the randomized initial guess for the image.

The various steps A to F of the algorithm are discussed in References [1,2,3]. We must note, however, that the parameter

must equal

in order for step B to converge. Secondly, the parameter

must also equal

in order to make step D converge. Now, there are two pathways to convergence. There is the path depicted in Figure 1, in which

is independently and uniformly distributed in

. But there is a second path where the argument of the Fourier transform in Step A is independently and uniformly distributed. These two paths can be denoted as follows:

where

is a set of independent and uniformly random variables in

. In order to create a nonnegative dynamic system,

must equal

; both must be equal to

. In the next Section, we must transform

into the

path. Clearly, the phase factor,

, in

is a statistical ensemble that encompasses all possible coherence phases. Thus, there is a very significant non-zero probability that in any one trial, NRPR converges to the correct coherence phase and magnitude, and therefore to the noise-free image.

Having obtained this transformation, the basic computations of NRPR are directed to the hypothesis that a square “box” of size , and positioned in the center of the field of view, contains the noise-free image. Then, NRPR is embedded within the stochastic search wherein the hypothesis is tested, and the noise-free image is discovered.

3. The NRPR Algorithm as a Discrete-Time Dynamic System

As the next step in our analysis, we recast the equations of Figure 1 into a discrete-time dynamic system so that all the principal quantities are indexed by the sequence of integers

. We start with the initial conditions and the first iteration, and recite the sequence of further iterations, keeping the algorithm in its proper order, as follows:

Now consider the equations pertaining to

and

and we note that the following equations are distinct from (4):

Notice that in (5), the quantities are actually the factor in . We can replace with . Next, at the outset of the small integers, the second term in dominates the expression with a huge contribution from , regardless of the factor . Within the Fourier transform in (5), the term is of the order of at least hundreds, while the factor is of the order of .

Next, in the asymptotic analysis of Reference [3], the magnitude of

drops precipitously by an order of magnitude within

. Pursuing the analysis in Reference [3] further, we focus on the average magnitude of the image intensity of the background, namely

. The Kronecker algebra in Reference [3] results in the following outcomes: (1) at

, the magnitude of the average of

is zero; (2) for

the magnitude of the average of

is completely controlled by

. The combination of (1) and (2) implies that (2) cannot contain

, meaning that there are no high-order terms in

. However, the average of

from

must contain

, since the average of

varies smoothly from its initial condition to zero (see Figure 2 and Figure 3). Therefore, it follows that the only increment to the magnitudes of the terms

must approximately accumulate to

. As the image precision is

, it is thus clear that this accumulation is negligible and that all the terms

can be discarded at the outset to produce Equation (6); moreover, the deletion of

is well-founded. Again, Equations (6) are transformations of (5).

We can see that there is a bifurcation into two dynamic systems: one involving

, and the rest consisting of

. Now, let us examine k = 1 formulated as the initial conditions. First, we may eliminate

in

, because

is a very small shift and

can be easily adjusted by a slight change in

. Thus, we can formulate the initial conditions for k = 2, as follows:

However, turning back to (4), we can complete the entire sequence starting from k = 1:

These equations are transformations of (4)–(7). This dynamic system replaces six steps per iteration with three steps. Note that the quantity

is the phase factor of the Fourier transform of the random initial pixel intensities. The new initial conditions in Equation (8) are correct within

(

). In particular, one must note that (8) also leads to the convergence of

to zero, as demonstrated in Reference [3]. Therefore, in the limit,

is the ultimate nonnegative dynamic system, which is discussed in the next section.

4. Two Dynamics: The Reduction of and the Affirmation of

The first step in setting up the stochastic search algorithm is to hypothesize a square perimeter with size . The exterior of the box is where we find the constrained pixels, called the “background”, whereas the interior of the box is the “foreground”, wherein all pixels are determined to have . Starting from the initial conditions in (8), the dynamic system must be able to converge to zero background pixels. Once that is completed, the dynamic system can converge to strict nonnegative-intensity pixels. Each run of NRPR can produce similar results using the same initial conditions, until a pair of images are found that are completely correlated, indicating the true, noise-free image.

To determine the convergence of the background pixels, we use the Kronecker vector notation on (8).

is represented by the following column vector:

In other words,

is a vector composed of the matrix elements of the background pixels, and

is the vector composed of the matrix elements of the foreground pixels. Corresponding to this, we have the following:

is the unitary matrix corresponding to the Fourier transform operator in the Kronecker notation. Finally,

is represented by the following:

In Reference [3], an asymptotic approximation of the background was computed, introducing the averages of

as defined below:

For the first order, the maximum estimate for the limit on

can be found as follows:

The resulting asymptotic approximation is as follows:

The computations for the first 40 iterations are shown in Figure 2, and Figure 3 gives the complete 7000 iterations.

A comparison of the predicted and computed results in Figure 2 and Figure 3 establishes that the averaged background intensity is reduced by one order of magnitude at the outset, and then levels off beyond iterate

to form a floor that does not vary appreciably beyond

. However, Figure 3 shows that after

, the reduction in

is completely driven by

. These features match the computed results rather well, especially for the immediate drop-off for

, where it is established that

is very weakly dependent on

.

Figure 3 shows the complete averaged background from k = 1 to k = 7000.

The conclusion of this derivation is that after

,

is so suppressed relative to

that its influence on the foreground may be neglected. Thus, we set

, so that in Kronecker notation, (8) becomes the following:

Thus, as , and both and constitute a nonnegative dynamic system.
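For readers unfamiliar with the term, the defining property of a nonnegative (compartmental) discrete-time system can be illustrated with a generic linear example. This is not the dynamic system of Equation (8); the matrix `A` and the initial state are hypothetical values chosen only to show that an entrywise nonnegative update keeps a nonnegative state nonnegative, and that column sums of at most one make the total non-increasing.

```python
import numpy as np

# Hypothetical 3-compartment update matrix: all entries are nonnegative and
# every column sums to at most 1 (the compartmental condition).
A = np.array([[0.90, 0.05, 0.00],
              [0.10, 0.80, 0.10],
              [0.00, 0.10, 0.85]])

x = np.array([1.0, 0.0, 2.0])      # nonnegative initial state
trajectory = [x]
for _ in range(50):
    x = A @ x                      # x_{k+1} = A x_k
    trajectory.append(x)
trajectory = np.array(trajectory)

stays_nonnegative = bool((trajectory >= 0).all())
totals = trajectory.sum(axis=1)    # total "mass" at each iterate
```

In this generic example, every iterate remains in the nonnegative orthant and the total never increases, which are the two features usually meant by a compartmental nonnegative system.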

6. Advantages over Other Two-Dimensional Imaging Methods

Now that integration times are enormously reduced, we can appreciate the benefits of ICI over previous imaging technologies. We may start with optical interferometer telescopes and refer the reader to the technical background in Reference [4]. ICI does not require the propagation of collected light beams to combiner units as in existing amplitude interferometers. The ICI hardware consists of simple, self-contained flux collectors that are immune to seeing conditions and need not collect phase information. Thus, routing optics and beam combiners are eliminated, thereby saving a great deal of expense. ICI requires neither nanometer-level control nor nanometer-level knowledge of the relative positions of the optical components. While margins for optical components in amplitude interferometry are a small fraction of a wavelength, ICI margins are in the centimeter range. Finally, the signal-to-noise ratio (SNR) does not suffer from the beam splitting and throughput losses that plague amplitude interferometry. These remarks indicate substantial performance advantages with a corresponding reduction in expense.

Next, we consider adaptive optics. Reference [5] provides an excellent overview. Adaptive optics removes the wavefront distortions introduced by the Earth’s atmosphere by means of an optical component that is introduced in the light beam and that can introduce a controllable counter wavefront distortion that follows that of the atmosphere both spatially and temporally. This optical component is generally a mirror whose surface can be distorted. To control the mirror, the wavefront distortions have to be known. These are measured by means of a wavefront sensor using either the object under study or a laser-generated object. When the wavefront is measured with the required accuracy and spatial and temporal resolution, and the adaptive mirror control is perfect, the atmospheric effects are removed, and the telescope gives a diffraction-limited image. Reference [5] states that “The application of adaptive optics to astronomical telescopes therefore requires the development of expensive, complex opto-mechanical devices and their control systems.” Why is adaptive optics nearly extinct? We answer that ICI flux collectors are insensitive to atmospheric turbulence. Moreover, the flux collectors are exceedingly inexpensive.

Finally, a review of Stellar Intensity Interferometry (termed SII) [6] extols the virtues of the basic Brown–Twiss technology. The apertures transmit intensity fluctuations to a computational facility where each pair of signals is cross-correlated in accordance with the Brown–Twiss formulation. It is acknowledged that the weak signals must be boosted via an array of large apertures (i.e., the VERITAS array), but it is not certain that the partial coherence factor has been included. In any case, SII is a steppingstone toward the incorporation of the ICI–stochastic search algorithm. The SII community has made significant progress over the last few decades. In particular, basic SII can reduce integration times by means of redundant baseline measurements [7,8]. These measurements are composed of radial baselines appropriate to stellar diameter computations, but it is easy to adopt the two-dimensional baseline redundancy featured in Section 7. Multiple spectral channels are also introduced in Reference [9]. Such is also the case for ICI with the stochastic search algorithm. Progress has also been made in the area of photonic integrated circuits that have been adapted to visible light [10,11,12] and fitted with avalanche photodiodes [13] to produce enormous bandwidths [14]. In view of this progress, it makes good sense to couple the SII community with the ICI–stochastic search algorithm. Furthermore, baseline redundancy and multispectral channels are entirely consistent with nonnegative dynamic systems. In the following Section, we address a practical application, namely an imaging observatory design for commercial geostationary satellite inspection. In the process, we will compare the performance of SII, ICI, and their combination.

7. Observatory Design for Commercial Geostationary Satellite Inspection

This section addresses the characteristics of a ground-based observatory suited to the high-resolution imaging of commercial geostationary satellites, as treated in [10]. The first consideration is the range of apparent magnitudes. Many of the smaller-scale satellites range in magnitude from 10 to 14. These are also typical of GOES 16-sized bodies. Therefore, we select a satellite that is 6 by 6 m, exhibiting the apparent magnitudes,

, in the range of 10 to 14. We wish to achieve a fairly challenging degree of image resolution. Accordingly, we choose a resolution,

, of 10 cm in size. At geostationary range, this corresponds to an angular resolution of approximately 2.8 nrad.
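The angular resolution quoted above follows from the small-angle relation between feature size and range. The short check below assumes a zenith-pointing geometry with the nominal geostationary altitude of 35,786 km as the range; both the constant name and that simplification are assumptions made here for illustration.

```python
# Assumed range: nominal geostationary altitude above the equator, in meters.
GEO_ALTITUDE_M = 35_786e3

resolution_m = 0.10                 # 10 cm resolution element
angular_resolution_rad = resolution_m / GEO_ALTITUDE_M   # small-angle approx.

# Number of resolution elements across the 6 m satellite (integer centimeters
# are used to avoid floating-point rounding in the division).
satellite_cm, resolution_cm = 600, 10
elements_across = satellite_cm // resolution_cm

print(f"{angular_resolution_rad * 1e9:.2f} nrad, {elements_across} elements")
```

The result is about 2.8 nrad, with 60 resolution elements across the 6 m body. An observer away from the sub-satellite point sees a somewhat longer slant range, so the required angular resolution is slightly finer there.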

For simplicity, it is assumed that the tilt of the u-v plane from zenith is modest, so that the positioning of the observatory apertures is approximately on the horizontal ground plane. The geometry of the observatory is defined by the location vectors, termed here as the baseline vectors, linking all the pairs of aperture locations in the horizontal plane. Each baseline vector is associated with the time averaged, intensity fluctuation cross-correlation (i.e., the coherence magnitude data) collected by the aperture pairs.

In the SII community, the integration time reduction due to baseline redundancy has been well treated within a one-dimensional setting [7,8]. In imaging, it is our task to extend the analysis to the much more complex u-v plane. The reward, however, is that the integration times are enormously reduced in the two-dimensional setting, in both the SII and the ICI settings.

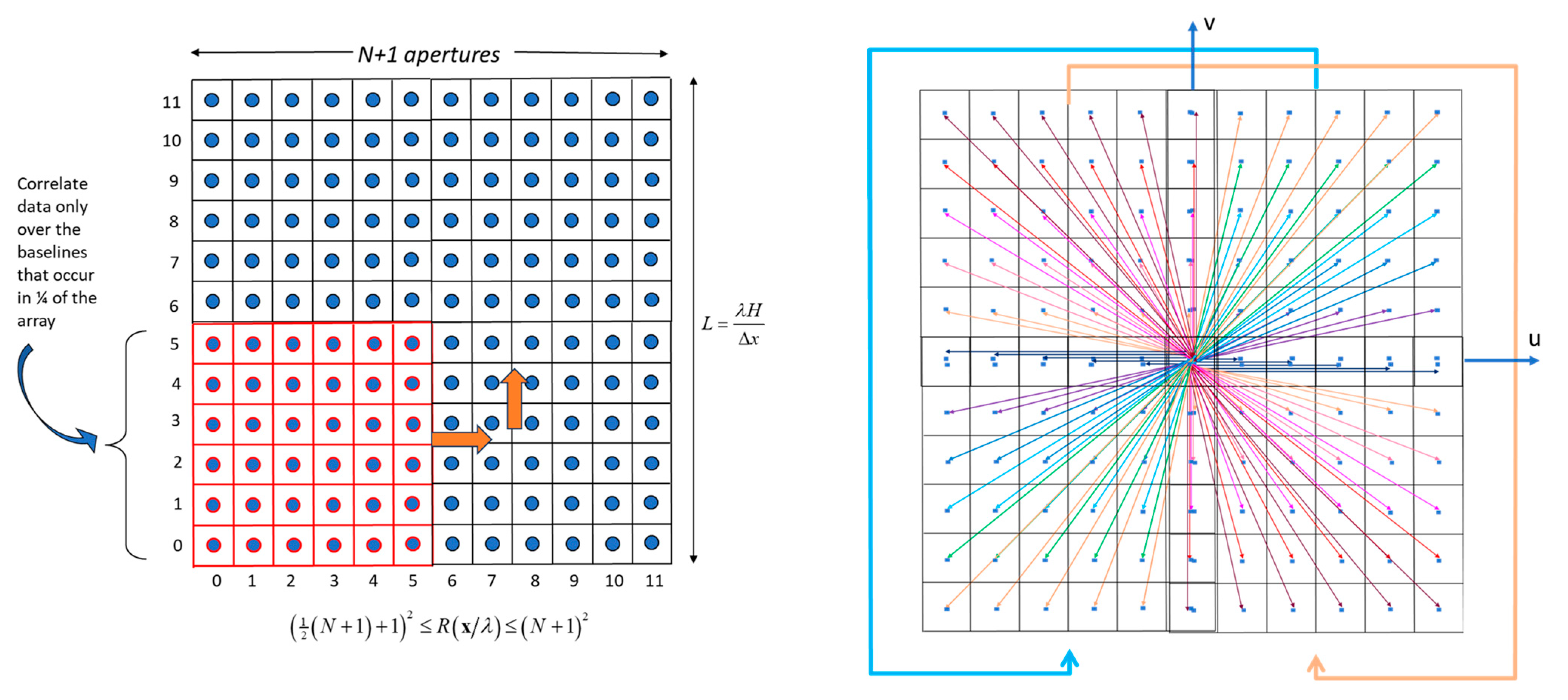

To estimate the further reduction in integration time, it is assumed that the aperture positions occupy a square grid. If there were no baseline redundancies, the image plane and the u-v plane would be one-fourth the size of the aperture grid. However, we can make the aperture grid four times larger than the u-v plane. This implies a square grid for both the aperture grid and the u-v plane, as well as the desired image field of view. In the latter case, the size of the image is denoted by pixels on the side, where is an odd integer. The following discussion demonstrates how a square grid of aperture positions that are on one side can produce a significant reduction in integration time by means of redundant baseline vectors.

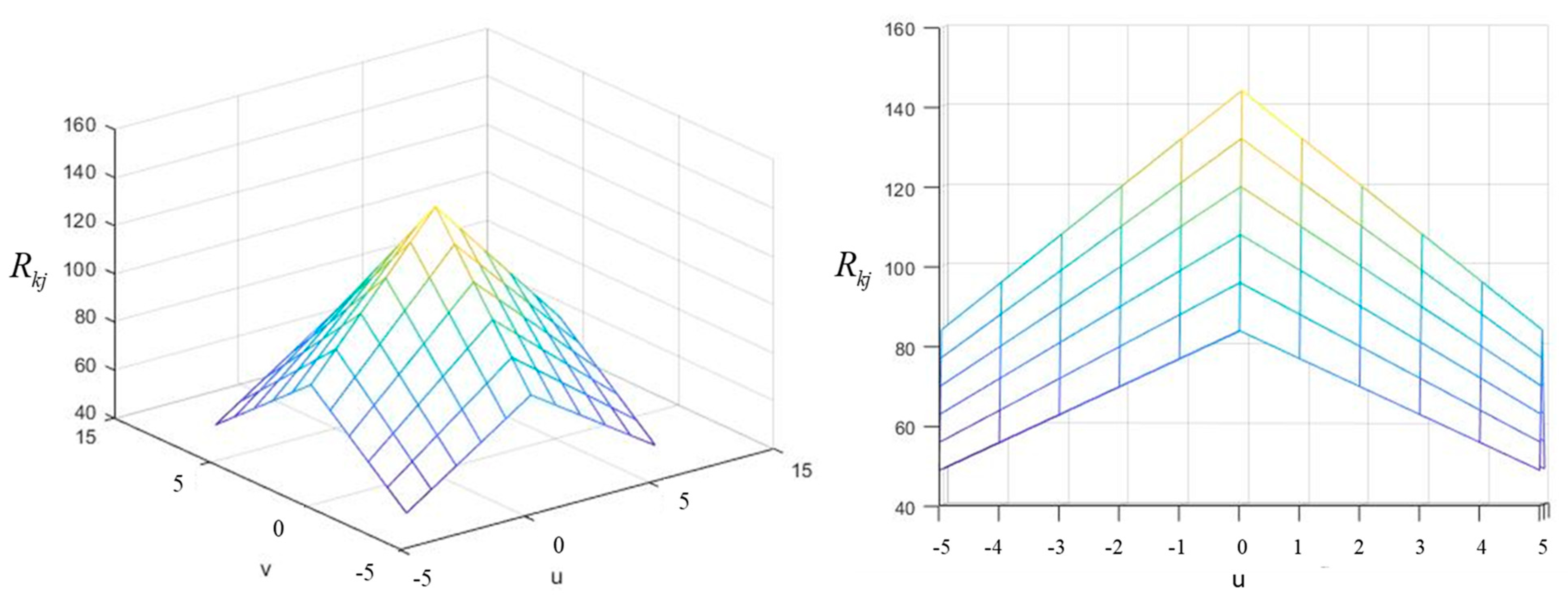

To begin, we use Figure 7 to explain the process, where

. The figure shows

apertures in a square array. We take one-fourth of this array, shown by the red outlines, and compute the intensity fluctuation cross-correlations for all the baseline vectors in the

region. Then, we reposition this array within the

array without repeating any position and without repeating a cross-correlation for a 180-degree rotation of a baseline vector. As is described in Appendix A of [10], which explains the computational aspects of the design, all of these calculations are readily parallelizable, and therefore can be produced within the same integration time. Given these cross-correlation data, we can construct an

array in which each fundamental baseline is assigned the sample average of its redundant coherence magnitude measurements.
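To give a feel for how much redundancy a square grid offers, the sketch below counts, by brute force, how many ordered aperture pairs share each baseline vector on a full m-by-m grid. This is a generic illustration and an assumption of this note: it is neither the quarter-array repositioning scheme described above nor the redundancy formula of the paper, and the function name `baseline_redundancy` is hypothetical.

```python
from collections import Counter

def baseline_redundancy(m):
    """Count ordered aperture pairs per baseline vector on an m x m grid."""
    positions = [(i, j) for i in range(m) for j in range(m)]
    counts = Counter()
    for (i1, j1) in positions:
        for (i2, j2) in positions:
            counts[(i2 - i1, j2 - j1)] += 1
    return counts

counts = baseline_redundancy(4)
shortest = counts[(0, 1)]    # one grid step apart: 4 * 3 = 12 pairs
longest = counts[(3, 3)]     # corner to corner: a single pair
```

For a full grid, the count for baseline (du, dv) equals (m - |du|)(m - |dv|), so short baselines are measured many times and the longest only once. Averaging R statistically independent measurements of the same baseline reduces the variance of the estimate by a factor of R.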

The resulting redundancy matrix for our

example is shown in Figure 8. In general, for each u-v vector,

, the redundancy, denoted by

, is determined as follows:

According to [10], the integration time is as follows:

where

An additional method that results in decreased integration time is to equip each photodetector with multiple non-overlapping frequency channels (so that all channels are statistically independent), denoted here by

. One then averages the detector output channels. Workers in SII [9] have studied multispectral theory with good, albeit modest, results. For two-dimensional imaging with ICI, Reference [10] introduces a multispectral approach pertaining to geostationary satellite inspection. This adopts a more recent approach that exploits rapid advances in photonic integrated circuits (PICs). Programmable PICs have been adapted to visible light [11,12] and combined with avalanche photodiode (APD) electronics [13] to produce photodetectors with enormous bandwidths [14] (published June 2023). In consequence, for each photodetector, we adopt an APD with a PIC that separates broadband light into numerous (~1000) non-overlapping, equal-bandwidth spectral channels. Since the Fock states of each channel commute, the channels are mutually statistically independent. The correlation data are then computed for each pair of apertures and for each corresponding spectral channel, and the results are averaged.
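The benefit of averaging statistically independent channels can be checked numerically. The sketch below is a generic Monte Carlo illustration with assumed values (unit-variance Gaussian noise, a hypothetical true correlation of 0.2, and 100 channels); it is not a model of the APD and PIC hardware.

```python
import numpy as np

rng = np.random.default_rng(1)
true_value = 0.2        # hypothetical correlation being estimated
n_trials = 4000         # independent repetitions used to estimate variance

def estimator_variance(n_channels):
    """Variance of the channel-averaged estimate over many trials."""
    samples = true_value + rng.standard_normal((n_trials, n_channels))
    return samples.mean(axis=1).var()

var_single = estimator_variance(1)
var_averaged = estimator_variance(100)
ratio = var_single / var_averaged   # close to the number of channels
```

The variance ratio comes out close to the number of channels. Since the variance of a time average also falls in proportion to the averaging time, averaging K independent channels allows roughly a K-fold shorter integration at the same precision under these assumptions.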

Reference [10] discusses the state of the art in multispectral detectors that admit reasonably priced and modest numbers of frequency channels. Within the present state of the art, if

, one can sustain 600 channels. With future advances in PICs, we estimate that many more channels can be supplied, but this requires further development and price reduction. Therefore, we concentrate on the 600-channel devices.

Thus, Reference [10] gives us the following:

Now we take account of the parameters needed for the integration time computations. First, Reference [10] stipulates that

. This leads to the following:

The reason why the equation

was placed near all the

expressions is that the partial coherence effect,

, is precisely satisfied by

, thanks to the Brown–Twiss integration [15]. The flux collectors are the size of flashlights. Next, the additional parameters are as follows:

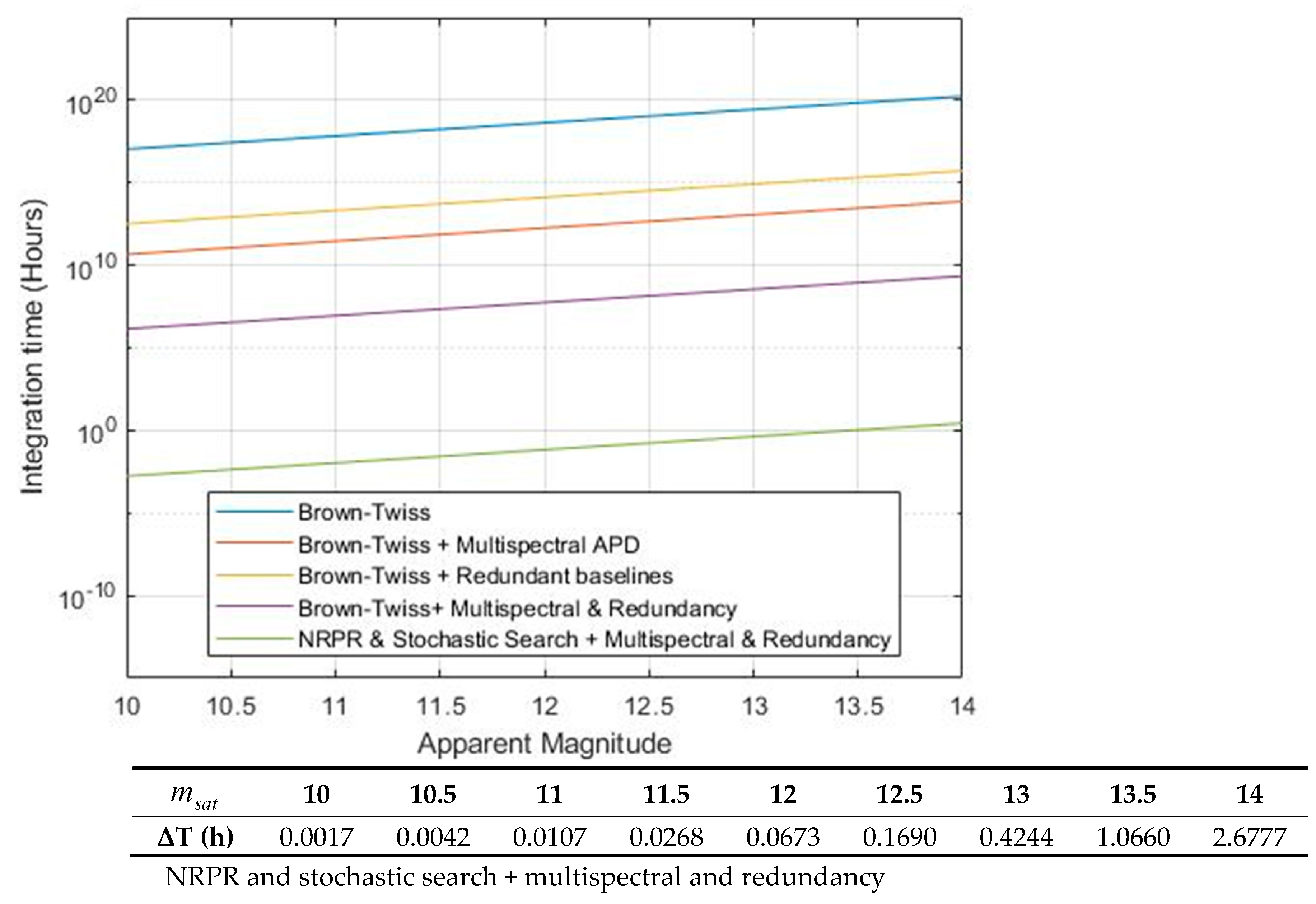

With these parameters, we can plot the integration times versus the apparent magnitudes for various combinations of Brown–Twiss integration times with redundant baselines, multispectral channels, and a combination of NRPR and stochastic search with multispectral and baseline redundancy.
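As a rough guide to how steeply integration time grows with apparent magnitude, the following worked calculation uses two textbook relations: the Pogson flux ratio, and the conventional Brown–Twiss result that the signal-to-noise ratio scales with photon flux times the square root of integration time. Applying the latter here is an assumption of this note; it is not the integration time equation of Reference [10], and the function names are hypothetical.

```python
def flux_ratio(m_bright, m_faint):
    """Flux of the brighter object divided by that of the fainter one."""
    return 10.0 ** (0.4 * (m_faint - m_bright))

def brown_twiss_time_ratio(m_bright, m_faint):
    """Integration-time penalty for the fainter object at fixed SNR,
    assuming integration time scales as flux**-2."""
    return flux_ratio(m_bright, m_faint) ** 2

flux = flux_ratio(10.0, 14.0)                 # about 40
time_ratio = brown_twiss_time_ratio(10.0, 14.0)   # about 1600
print(f"flux ratio: {flux:.1f}, time ratio: {time_ratio:.0f}")
```

Under this assumption, the four magnitudes between 10 and 14 cost a factor of about 40 in flux and roughly 1600 in integration time, which is why the plotted curves span several orders of magnitude.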

The blue line in Figure 9 shows the conventional Brown–Twiss integration times. The red and yellow lines are the conventional Brown–Twiss times with multispectral APDs and redundant baselines applied separately. These are more or less comparable. The violet line features conventional Brown–Twiss with both multispectral channels and redundant baselines simultaneously. This represents the full-force SII integration times. Note that this line still does not, by any means, bring the integration times down to the level of an hourly integration time limit. On the contrary, the SII integration times are roughly

times larger than those of the green line, which represents the NRPR and stochastic search combined with the multispectral and redundant baselines. Thus, to achieve low integration times, the NRPR and stochastic search algorithm is absolutely necessary.

Furthermore, the ICI satellite observatory can also outperform the James Webb Space Telescope (JWST) in imaging galaxies that are brighter than apparent magnitude 14, as shown in Table 1. The observatory is enormously larger than JWST, and due to its size and its wavelengths in the visible spectrum, its image resolution is 0.025 that of the space telescope. Then, there is the relative cost: USD 10 billion for JWST versus USD 16 million for the ICI observatory. JWST is extremely complex, while the ICI ground-based observatory has the same immunity to atmospheric seeing conditions and is composed of an array of self-contained flux collectors (each the size of a small flashlight), each of which is equipped with inexpensive multispectral channels. The flux collectors transmit intensity fluctuations to an onsite, highly parallelized computational facility where all the pairs of the intensity fluctuation signals are cross-correlated to produce all the coherence magnitude measurements.

8. Conclusions

References [1,2,3] created an algorithm that emphatically reduced the integration times of the Brown–Twiss effect as applied to two-dimensional imaging (termed ICI). However, there were a number of complexities in the algorithm that merited simplification. This paper has succeeded in streamlining the ICI algorithm by transforming the six steps of the original algorithm into a discrete-time, nonnegative dynamic system having a two-dimensional state space. It is demonstrated that this dynamic system fully replicates the original to within

. Furthermore, the simplified product, being a nonnegative system [16], is well suited to partner with Artificial Intelligence automations such as nonnegative spiking neural networks. Such automation can be expected in the near future.

Further, having reduced the ICI nonnegative dynamic system to very small integration times, we compared ICI performance to other existing imaging technologies, including large-amplitude interferometry systems, adaptive optics, and Stellar Intensity Interferometry (termed SII). The latter was the most promising because the SII community, making use of the conventional Brown–Twiss estimates of integration time, has reduced integration times by computing redundant baselines and multispectral channels. This is also the case for ICI using the stochastic search algorithm. Furthermore, baseline redundancy and multispectral channels are entirely consistent with nonnegative dynamic systems.

To compare the results of SII, we chose a practical example: a ground-based, geostationary commercial satellite inspection observatory with 10 cm resolution and a range of apparent magnitudes from 10 to 14. It turns out that while the satellite inspection observatory had integration times from 6 s to a few hours for the ICI–stochastic search algorithm using redundant baselines and multispectral channels, the SII integration times were ~ larger than those of ICI. Thus, to achieve low integration times, the NRPR and stochastic search algorithm is absolutely necessary.

Furthermore, the ICI satellite observatory can also outperform the James Webb Space Telescope in imaging galaxies that are brighter than apparent magnitude 14, as shown in Table 1. The observatory is enormously larger than JWST, and due to its size and its wavelengths in the visible spectrum, its image resolution is finer. Then, there is the relative cost: USD 10 billion for JWST versus USD 16 million for the ICI observatory. In later publications, there will be a need for a space-based ICI celestial observatory.

This work is an algorithm that greatly reduces the integration times so that the remaining excellent properties of Intensity Correlation Imaging can be attained. This means that the SII community need only learn to run the algorithm to achieve enormous advantages beyond conventional technologies. SII has developed many inexpensive flux-collecting aperture designs and has understood redundant baselines, multispectral channels, and computational facilities. A consortium including the SII community and the continued development of ICI algorithms will surely expand to enhance the practical impact of this work. I must emphasize the importance of the SII capabilities in this article and the enormous time and financial savings that shall accrue via the amalgamation of SII with ICI. This consolidation provides a quick roadmap for future validation.