1. Introduction

Wireless power transmission is expected to cover power levels ranging from milliwatts to several megawatts for stationary and moving targets, over transmission distances from a few meters to kilometers in future society [1]. Optical wireless power transmission (OWPT) is considered a promising candidate for such multi-scale systems, due to the narrow beam divergence of light [2,3,4,5]. To increase the power generation efficiency of photovoltaic (PV) targets, accurate beam alignment and shaping according to the target’s position and attitude are necessary [6]. Thus, real-time detection of the position and attitude of PV is crucial for OWPT systems. Although obtaining position information using satellites or indoor navigation systems is possible [7,8], the availability of such infrastructure for OWPT operations is not always guaranteed. Moreover, it is uncertain whether such systems meet the requirements of a specific OWPT system, and the estimation of the target’s attitude using such systems is limited. Therefore, research on position and attitude estimation specific to OWPT is necessary.

Previous research on the position and attitude estimation of targets in OWPT has been limited [9,10], and the authors faced challenges such as changes in background light due to weather conditions and diurnal variations, as well as misrecognition of surrounding objects. In OWPT, target detection should be minimally dependent on the background environment. One such method for detecting the target was proposed by the authors, and involved the use of differential absorption images of the target [11]. This method captures images of the target at an absorption wavelength and at a non-absorption wavelength of the PV. If the two wavelengths are close enough to each other, taking the difference between the two images cancels out the background and extracts the PV image. In a former study, the non-diffuse angle characteristics of the rear surface of the PV were investigated [12]. Then, position and attitude determination of stationary targets, using a combination of the differential absorption and stereo imagery, was reported [13]. It was found that a consistency condition, referred to as the ‘integrity measure’, holds for the center coordinates of the target estimated from the left and the right images. It was also discovered that there exists a minimum exposure time for the integrity measure to hold, and that this depends on the attitude angle of the target. A physical model was constructed to determine this minimum exposure time under general conditions. The attitude angle estimation based on this model was reported in a previous paper [14], demonstrating that the method shows no degradation in accuracy near the normal, which is suitable for OWPT operations.

This paper discusses real-time position and attitude estimation for moving targets. For targets with non-diffuse reflection characteristics, the position estimation error increases when the target attitude deviates from normal to the light source. This can lead to outliers and data loss, which is particularly problematic in real-time consecutive position estimation. Thus, every PV tracking algorithm should include smoothing processes that appropriately handle outliers, interpolation processes that complement missing data, and position estimation and correction (if necessary) processes for the target. This paper compares the following three methods for the tracking algorithm and evaluates their performance.

A method utilizing an autoregressive (AR) model to correct the target position estimation when changes exceed the predetermined thresholds.

A uniform motion model estimated by a Kalman filter.

The AR model estimated by a Kalman filter.

An accuracy improvement is proposed for the estimation using the fact that the direction (azimuth angle) can be estimated with precision, as has been reported in a former paper [13]. It was found that some challenges must be addressed for the camera that will be used in real-time attitude estimation. These requirements must be considered when selecting or developing the appropriate hardware for the operational system. The authors conducted an analysis of the high-level requirements for OWPT beam alignment and shaping in a former study [6]. It is confirmed that this research and the former study are consistent.

Unlike traditional solar power generation, OWPT requires the detection of the PV from the transmitter, tracking it if necessary, and then irradiating the power beam. The authors’ series of studies validated a concept that is essential in OWPT and established a definite step towards the practical implementation of OWPT systems.

This paper is structured as follows: Section 2 provides a review of previous research papers focusing on positioning and attitude determination utilizing a combination of differential absorption and stereo imagery. In Section 3, the tracking of moving targets is discussed. This includes the optimization of the camera parameters used in experiments for successive position estimation, as well as presenting initial experimental results for position and attitude estimation. Section 4 explores three algorithms for tracking moving targets, accompanied by discussions on their evaluation metric. Section 5 discusses algorithmic improvements and introduces the error model of the metric. Section 6 concludes the paper with a comparative analysis of a system-level misalignment requirement proposed in a former study. In Appendix A, data are added to support the discussion in Section 5.

2. Review of Positioning and Attitude Determination of PV by Differential Absorption Imaging

The section below discusses the application of the principle of position determination using differential absorption and stereo imaging, along with the introduction of an integrity measure as a consistency condition in position determination. The information presented here is based on former research papers [12,13,14].

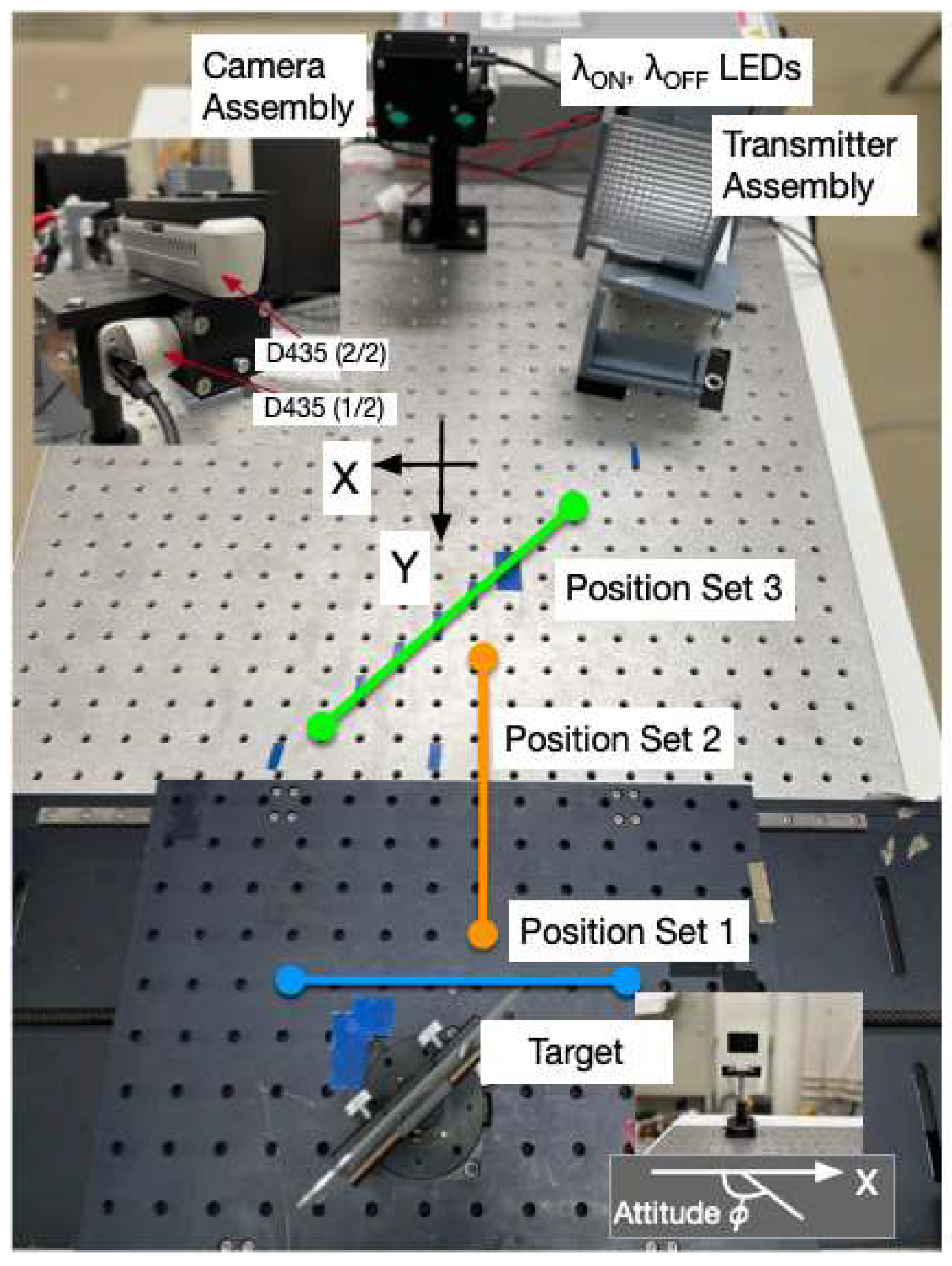

In this method, a total of four infrared image sensors are required. These sensors capture the left and the right images at the absorption wavelength and at the non-absorption wavelength, respectively. The left and the right differential images are generated and binarized. The center coordinates of the target are estimated from these images, and the position of the target is then determined from their parallax. To evaluate the accuracy of the target position determination, experiments were conducted by varying the position and attitude of the target. The layout of the experimental setup is shown in Figure 1. Two Intel D435™ depth cameras were used in the experiments. These have two image sensors per unit [15] and were assembled as per the camera assembly shown in the figure. Although these cameras can generate depth information, this feature was not utilized in the experiments in this paper or in the former papers. Instead, the output from the camera assembly was processed by independent software to generate the differential absorption images and the target’s position. The software for camera control, real-time position determination, and data output was developed in Python™ [16]. The position data processing to estimate the target’s tracked position was then implemented in Mathematica™ [17]. The Python program ran on a Dell OptiPlex 7040 PC running Windows 10, with an Intel(R) Core™ i7-6700 CPU at 3.41 GHz and 16 GB of RAM.
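The image-processing step described above (difference image, binarization, center estimation) can be illustrated with a minimal sketch. It is not the authors' software: the function name `differential_centroid`, the nested-list image representation, the sign convention (the PV is assumed to appear darker at the absorption wavelength), and the threshold value are all assumptions made for illustration.

```python
def differential_centroid(img_abs, img_non, threshold):
    """Return the (column, row) centroid of the binarized difference image.

    img_abs, img_non: images at the absorption / non-absorption wavelength,
    given as equally sized lists of rows of pixel intensities.
    Returns None when no pixel exceeds the threshold (target not detected).
    """
    sum_col = sum_row = count = 0
    for row, (line_abs, line_non) in enumerate(zip(img_abs, img_non)):
        for col, (a, n) in enumerate(zip(line_abs, line_non)):
            # Assumption: the PV absorbs at the absorption wavelength, so the
            # difference (non-absorption minus absorption) is large on the PV
            # and close to zero on the background.
            if n - a > threshold:
                sum_col += col
                sum_row += row
                count += 1
    if count == 0:
        return None
    return (sum_col / count, sum_row / count)


# Synthetic 4 x 5 example: uniform background, PV occupying a 2 x 2 block.
image_non = [[100] * 5 for _ in range(4)]
image_abs = [[100] * 5 for _ in range(4)]
for r in (1, 2):
    for c in (2, 3):
        image_non[r][c] = 90
        image_abs[r][c] = 20

center = differential_centroid(image_abs, image_non, threshold=30)
```

In this sketch the background cancels because it has the same intensity in both images, which mirrors the requirement that the two wavelengths be close to each other.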

The optical bench used in the experiment has 23 (horizontal) × 31 (vertical) threaded holes arranged with a pitch of 25.4 mm. They are considered as lattice points in the 2D coordinate system

,

. In this paper, X and Y coordinates are represented using these coordinates. In the experiment, the target was placed at positions selected from

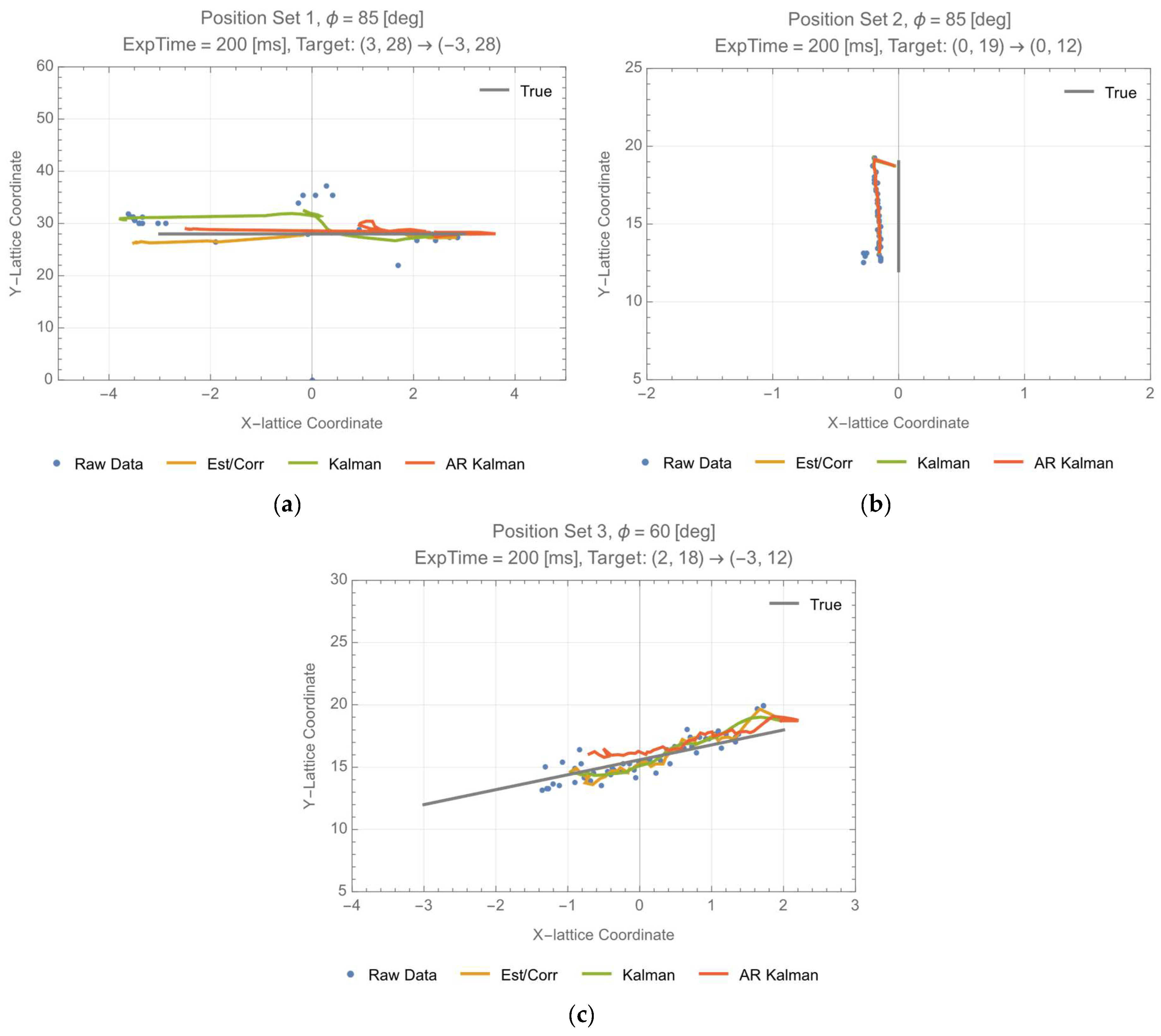

Figure 1 and position sets 1, 2, and 3.

It should be noted that the target in the experiment was not necessarily moving exactly along these position sets. The true track is displayed in each plot figure.

Table 1 summarizes the experiment parameters, and specific details are outlined in a former paper [13].

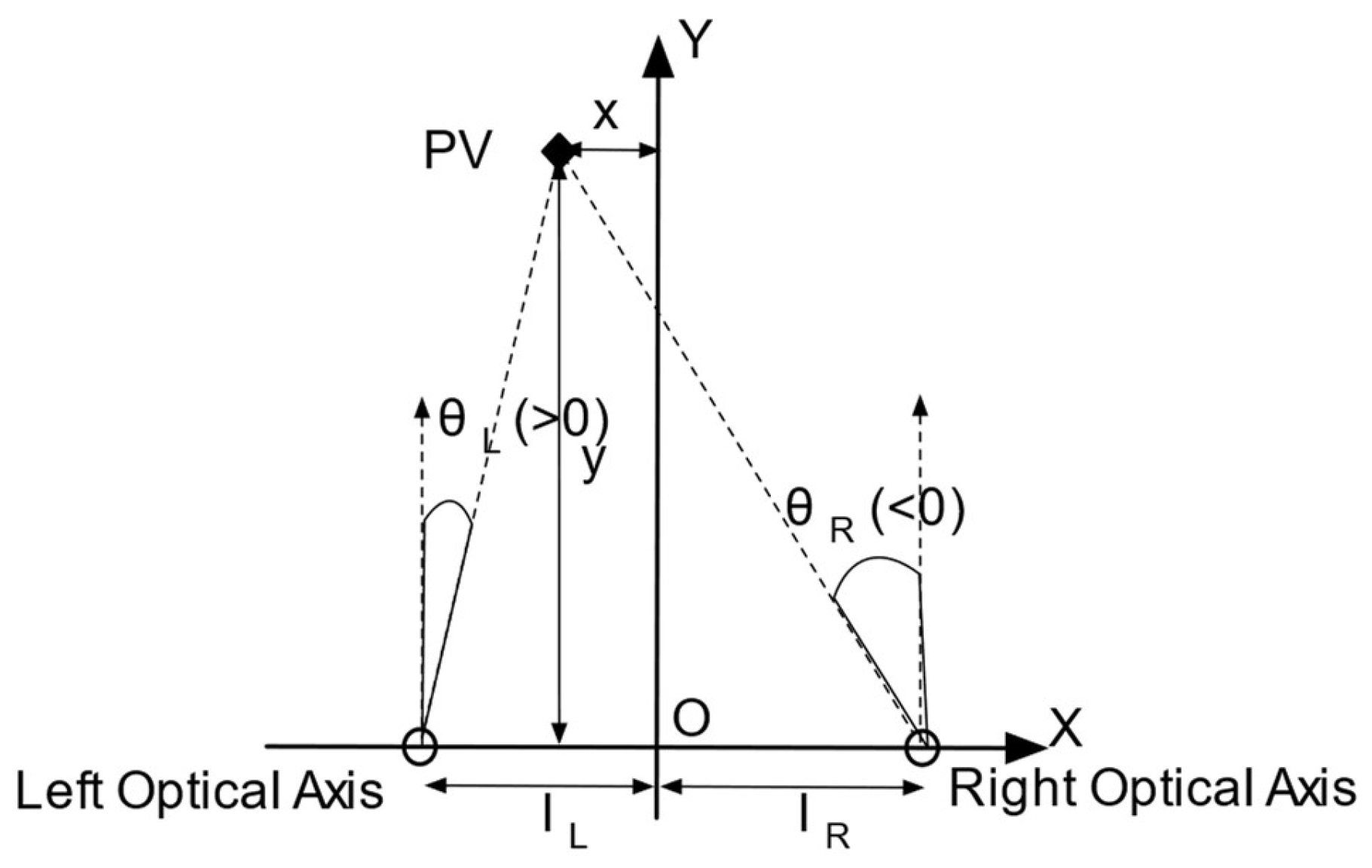

The camera assembly was positioned at the coordinate origin. The optical axes of the left and right image sensors are shown in Figure 2 (left and right optical axes). These define the XY plane and are aligned parallel to the Y-axis.

The output image size of the sensors was set to 640 × 480 pixels. The origin of the pixel coordinate system of each image sensor is defined at the lower-left corner, and separate pixel coordinates are defined for the left and the right image sensors. Let the left and the right pixel coordinates of the target be (ξL, ηL) and (ξR, ηR), respectively. The target position in the 2D plane can then be determined from the geometry in Figure 2. The calculation uses two known dimensions, with values of 17.5 mm and 32.5 mm, respectively, according to the D435 datasheet, and a constant that depends on the experiment system, which has been described in a former paper [13].
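As an illustration of position determination from parallax, the following sketch triangulates a target from the horizontal pixel coordinates of two sensors with parallel optical axes. It is a generic pinhole-stereo calculation, not the paper's own equations. Placing the left sensor at X = −17.5 mm and the right sensor at X = +32.5 mm is an assumed interpretation of the two datasheet dimensions, and `focal_px` and `center_px` are hypothetical stand-ins for the system-dependent constant.

```python
def project(x, y, sensor_x, focal_px=600.0, center_px=320.0):
    """Horizontal pixel coordinate of a point (x, y) seen by a sensor located
    at (sensor_x, 0) whose optical axis is parallel to the Y-axis."""
    return center_px + focal_px * (x - sensor_x) / y


def triangulate(xi_left, xi_right, x_left=-17.5, x_right=32.5,
                focal_px=600.0, center_px=320.0):
    """Return the target position (X, Y) in mm from the horizontal pixel
    coordinates in the left and right images."""
    disparity = xi_left - xi_right
    if disparity <= 0:
        raise ValueError("non-positive disparity: target cannot be located")
    baseline = x_right - x_left                 # distance between the sensors
    y = focal_px * baseline / disparity         # depth along the optical axis
    x = x_left + (xi_left - center_px) * y / focal_px
    return x, y


# Round trip: project a known point into both sensors, then recover it.
xi_l = project(100.0, 500.0, sensor_x=-17.5)
xi_r = project(100.0, 500.0, sensor_x=32.5)
x_est, y_est = triangulate(xi_l, xi_r)
```

Because the depth is inversely proportional to the disparity, a fixed pixel error translates into a larger position error at longer distances, whereas the direction to the target depends only weakly on it.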

The target’s center coordinates, (ξL, ηL) and (ξR, ηR), should correspond to the same point. Therefore, the consistency conditions (integrity measure) given by Equations (4) and (5) hold for the obtained pixel coordinates of the target.

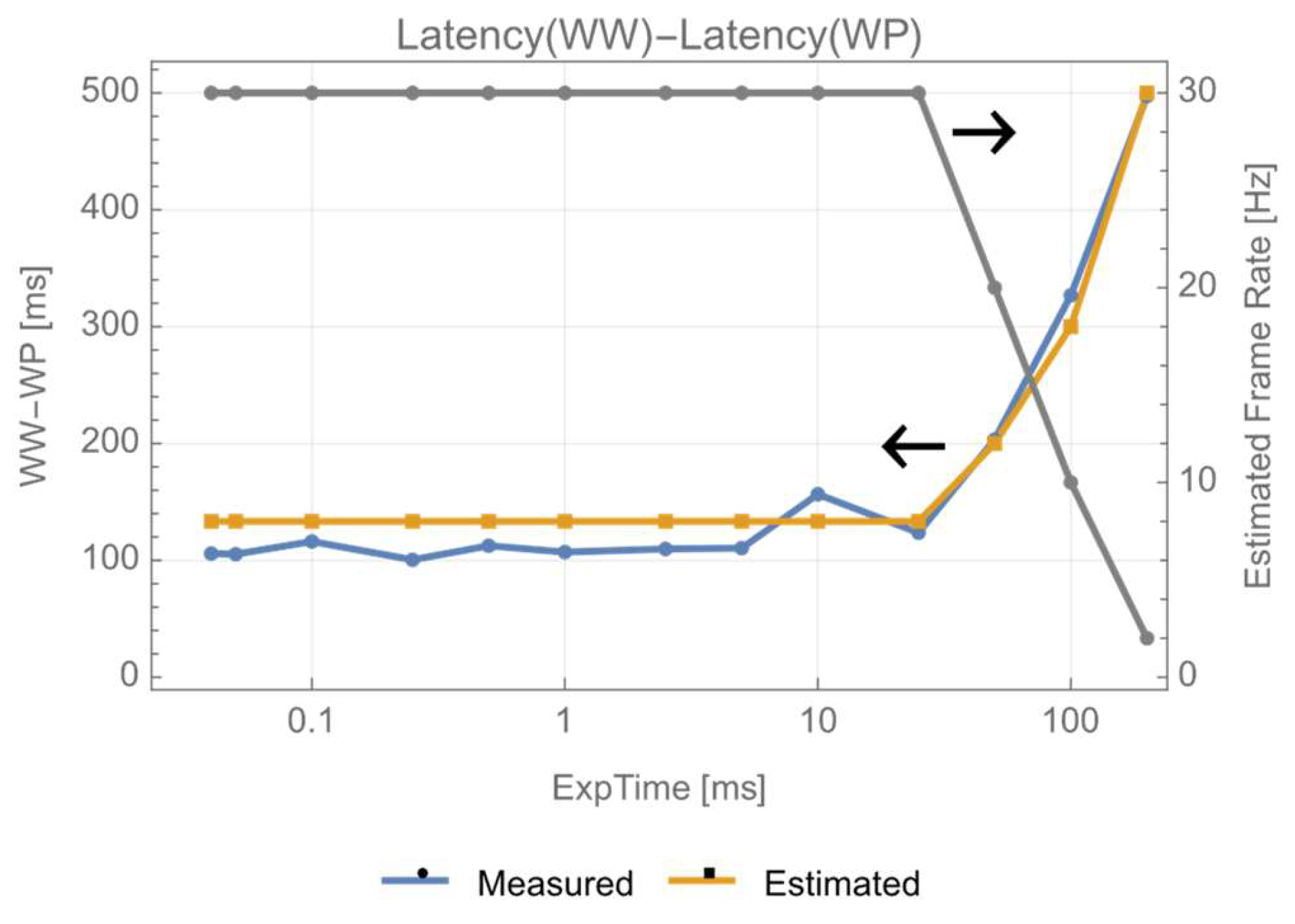

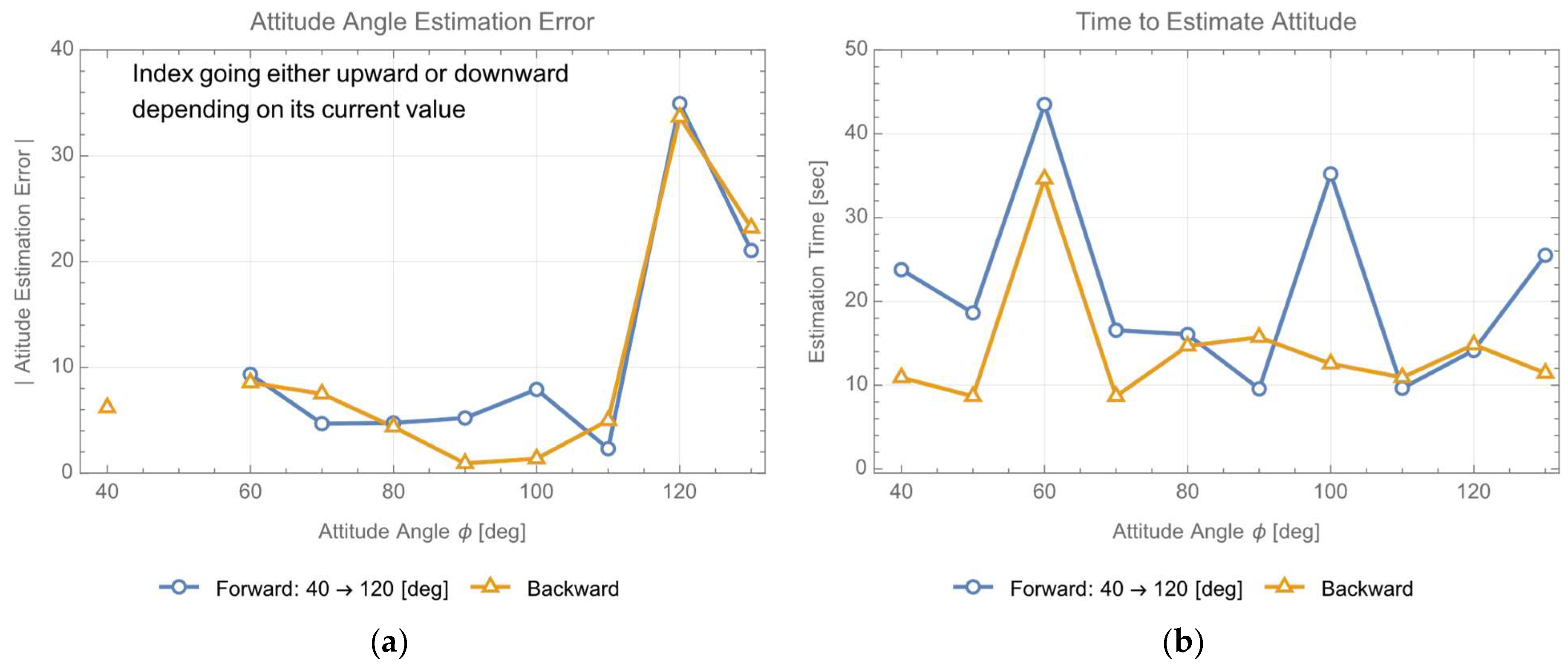

It is important to note that the integrity measure behaves randomly when the exposure time of the image sensor is short. However, it rapidly converges for values above a certain threshold. This minimum exposure time can be used to estimate the attitude angle of the target. A physical model was established in order to determine the minimum exposure time required for any given target position [14]. Leveraging this physical model and the measurement of the minimum exposure time allows the attitude angles of the target to be estimated.

4. Tracking of the PV

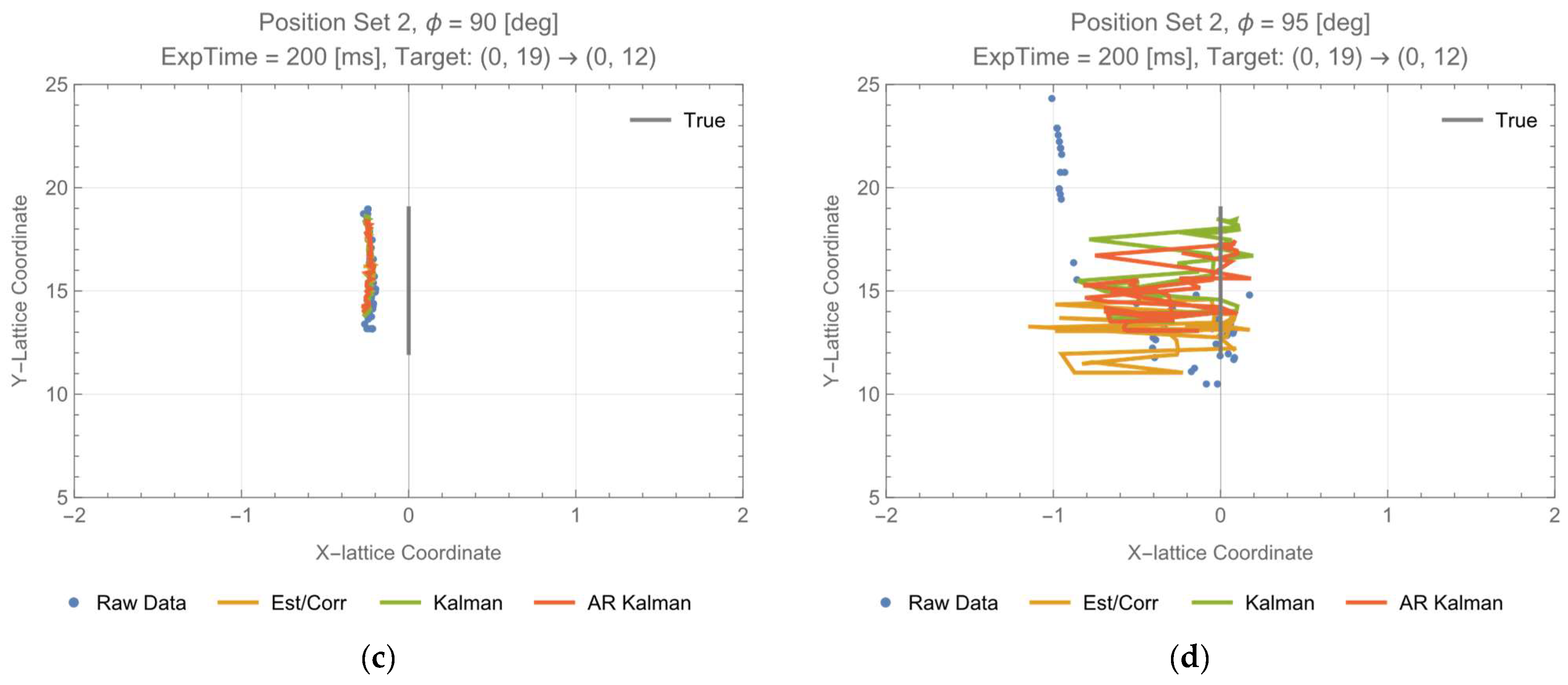

In Figure 7b, there are outliers in the position estimation, and there is data loss in part of the track. Data loss can occur when the attitude angle ϕ deviates from the normal to the transmitter, and also when the received light is excessively intense near the normal direction. Since Figure 7b is for data with angles close to the normal direction (ϕ = 85 deg), the outliers and data loss were likely caused by excessively intense light.

To address the issue of outliers and missing data, it is necessary to appropriately interpolate the raw data, smooth it, and correct outliers. In this section, three algorithms are discussed for this purpose. To assess the algorithms, a score function is defined based on the true values of the target positions.

4.1. Tracking Algorithms

Three tracking algorithms are presented. The first employs a combination of thresholds and an autoregressive (AR) model. It is a supervised anomaly rejection algorithm and requires training before operation. On the other hand, the other two algorithms use Kalman filters for estimation and do not require any training.

4.1.1. AR Model with Threshold (Estimator/Corrector)

The raw data from successive position estimation can be interpreted as time-series data. Analyzing such data using an autoregressive model, including outlier handling, is widely used in various fields [21,22].

1. Primary smoothing and interpolation of missing data

Consider two successive raw data (measurements), the n-th and the (n + 1)-th. If the change from the n-th to the (n + 1)-th measurement does not exceed a predefined threshold, neither smoothing nor interpolation is performed. If the change exceeds the predefined threshold, or the (n + 1)-th measurement is missing, primary smoothing and interpolation of missing data for the (n + 1)-th position data are executed as per Equation (7).

2. Estimator (the secondary smoothing and the primary estimate)

After the primary smoothing, the secondary smoothing is carried out using a Butterworth low-pass filter [23] of order N = 8 with a fixed cutoff frequency. The outcome of this process is referred to as the primary estimate.

3. Corrector (the secondary estimate)

The same procedure as in Equation (7) is applied to the primary estimate, which yields the secondary estimate (the tracker output). A separate threshold is used in this step.

The above three processes are applied to both the X-coordinate and the Y-coordinate estimations, with thresholds specified for each coordinate. Before tracking, initial data for the AR model and the low-pass filter are needed. The median of 50 consecutive position determinations for the stationary target was used for this purpose, and it was duplicated as necessary (four times for the AR model and eight times for the low-pass filter). This algorithm requires data to be acquired before operation so that both thresholds can be optimized beforehand.
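The threshold-plus-AR step can be sketched as follows for one coordinate. This is a minimal illustration under stated assumptions, not the form of Equation (7) itself: the four equal AR coefficients are hypothetical placeholders (four lags are chosen only because the initial value is duplicated four times for the AR model), the threshold value is arbitrary, and the change is measured against the previously accepted value.

```python
def ar_predict(history, coeffs):
    """One-step AR prediction: coeffs[k] weights the value k + 1 steps back."""
    return sum(c * x for c, x in zip(coeffs, reversed(history)))


def primary_smoothing(raw, coeffs, threshold, initial_value):
    """Replace missing values (None) and jumps larger than `threshold`
    with the AR prediction; pass all other measurements through unchanged."""
    order = len(coeffs)
    history = [initial_value] * order      # initial data duplicated `order` times
    output = []
    for z in raw:
        previous = history[-1]
        if z is None or abs(z - previous) > threshold:
            z = ar_predict(history[-order:], coeffs)
        history.append(z)
        output.append(z)
    return output


# Example: one missing sample and one outlier in an otherwise smooth series.
raw_x = [10.0, 10.5, None, 50.0, 11.0]
smoothed_x = primary_smoothing(raw_x, coeffs=[0.25] * 4, threshold=5.0,
                               initial_value=10.0)
```

In the estimator/corrector described above, the same thresholded replacement would be applied a second time to the low-pass-filtered series, with its own threshold.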

4.1.2. Uniform Motion Model Estimated by Kalman Filter (Kalman)

The algorithm in Section 4.1.1, the estimator/corrector, is a supervised model that requires threshold optimization through training. On the other hand, in Section 4.1.2 and Section 4.1.3, models that do not require any training are explored.

Assuming no external force on the target, the steady-state Kalman filter [24] is used to estimate the position and the velocity of the n-th data point. The measurement and the raw data for the n-th data point are denoted by separate symbols. The prediction for the n-th data point is obtained using the data up to the (n − 1)-th estimation, and the estimation for the n-th data point is obtained using the n-th measurement and the n-th prediction. The corresponding state equations are given by Equations (8a)–(8c), and the observation equations by Equations (9a) and (9b).

In this experiment, the data rate is 1 Hz, so the time step between successive data points is 1 s. The system noise and the measurement noise are each assumed to follow a Gaussian distribution. For the measurement noise, the standard deviation is the one obtained during initial value determination. The estimation is obtained from the prediction, the measurement, and the Kalman gain, as given by Equation (10).

1. Interpolation of missing data

In case of missing raw data, the missing values are interpolated using Equation (11).

2. Smoothing

The interpolated raw data are input to the same Butterworth low-pass filter as in Section 4.1.1, which yields the smoothed values.

3. Position estimation (tracker output)

The n-th prediction is obtained from Equation (8a) using the (n − 1)-th estimation. Together with the smoothed value from the previous step, the n-th estimation is calculated using Equation (10), and this estimation is the final tracker output. This process is applied to both the X-coordinate and the Y-coordinate estimations. The initial value generation process is the same as in Section 4.1.1.
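A Kalman filter for a uniform motion model can be sketched for one coordinate as below. This is an illustrative textbook filter rather than the exact formulation of Equations (8)–(11): the system noise is assumed to act on the velocity only, the gain is recomputed at every step (it converges towards the steady-state gain), a missing measurement is handled by keeping the prediction instead of the paper's interpolation, and the function name and default noise levels are hypothetical.

```python
def kalman_uniform_motion(measurements, x0, dt=1.0, sigma_w=1.0, sigma_v=1.0):
    """Track one coordinate with state (position, velocity).

    measurements: list of floats, with None for missing data.
    sigma_w: standard deviation of the system noise (on velocity, assumed).
    sigma_v: standard deviation of the measurement noise.
    Returns the list of position estimates.
    """
    x, v = x0, 0.0
    p11, p12, p22 = sigma_v ** 2, 0.0, sigma_v ** 2   # initial covariance
    q, r = sigma_w ** 2, sigma_v ** 2
    estimates = []
    for z in measurements:
        # Prediction with the uniform motion model x <- x + v * dt.
        x_pred, v_pred = x + dt * v, v
        p11_pred = p11 + 2.0 * dt * p12 + dt * dt * p22
        p12_pred = p12 + dt * p22
        p22_pred = p22 + q
        if z is None:
            # No measurement: keep the prediction (assumption of this sketch).
            x, v = x_pred, v_pred
            p11, p12, p22 = p11_pred, p12_pred, p22_pred
        else:
            # Update: estimation = prediction + gain * (measurement - prediction).
            s = p11_pred + r
            k1, k2 = p11_pred / s, p12_pred / s
            innovation = z - x_pred
            x = x_pred + k1 * innovation
            v = v_pred + k2 * innovation
            p11 = (1.0 - k1) * p11_pred
            p12 = (1.0 - k1) * p12_pred
            p22 = p22_pred - k2 * p12_pred
        estimates.append(x)
    return estimates


# Target moving at 1 unit per step, with one missing measurement.
data = [float(i) for i in range(20)]
data[10] = None
track = kalman_uniform_motion(data, x0=0.0)
```

With a 1 Hz data rate, `dt=1.0` matches the time step of the experiment; the noise levels would in practice be taken from the initial value determination.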

4.1.3. AR Model Estimated by Kalman Filter (AR Kalman)

Additionally, estimation using a Kalman filter for an AR model is widely employed in the analysis of time-series data [25,26,27]. In this algorithm, the AR model in Equation (7) is estimated using a Kalman filter. Equations (8b), (8c), and (9b) are replaced by Equations (12c), (12d), and (12e).

Equation (11) is replaced by an equation similar to Equation (12b). The estimation procedure used to obtain the tracker output is otherwise unchanged, and the initialization method is the same as in Section 4.1.1.

4.2. Assessment of the Three Algorithms

4.2.1. Score Function and Estimation of True Position

The Python program outputs position data in real time and each measurement (position determination) is successively stored in a list (list A).

The Mathematica program takes measurement data from list A and estimates its tracked position one by one using the algorithms. Then, each estimation is successively stored in a list (list B).

The tracking plots are generated from list B.

It should be noted that, except for the plot generation process using Mathematica, the rest is conducted on an essentially real-time basis. Because the amount of computation differs little among the three algorithms, when they are compared under the same conditions (target distance, etc.), the difference between them appears in the tracking errors.

To assess the performance of the algorithms, a score function is defined to quantify the tracked results. It is based on the position error associated with each tracked target datum, averaged over the N (= 50) data of the tracking process. Each position error is defined from the i-th estimates obtained by the algorithms and the corresponding true values of the target position. The true values are generally determined by measuring the target position using a device independent of the experimental setup in Figure 1. However, this paper proposes a simpler method to estimate them.

The score utilizing the position error defined by Equation (14) is referred to as the true position (TP)-based score in this paper. A former study has shown that the estimation of the direction angle of the target is more accurate than the position estimation [13]. The direction error due to the position estimation errors is obtained by Equation (15), which is expressed in terms of the horizontal coordinates of the target in the left and right image sensors and their errors.

Assuming

and

are quantities of the same order, and assuming the same for

and

, the evaluation of

is approximated as

. By re-evaluating the accuracy of the direction angle estimation from the data included in a former paper [

13],

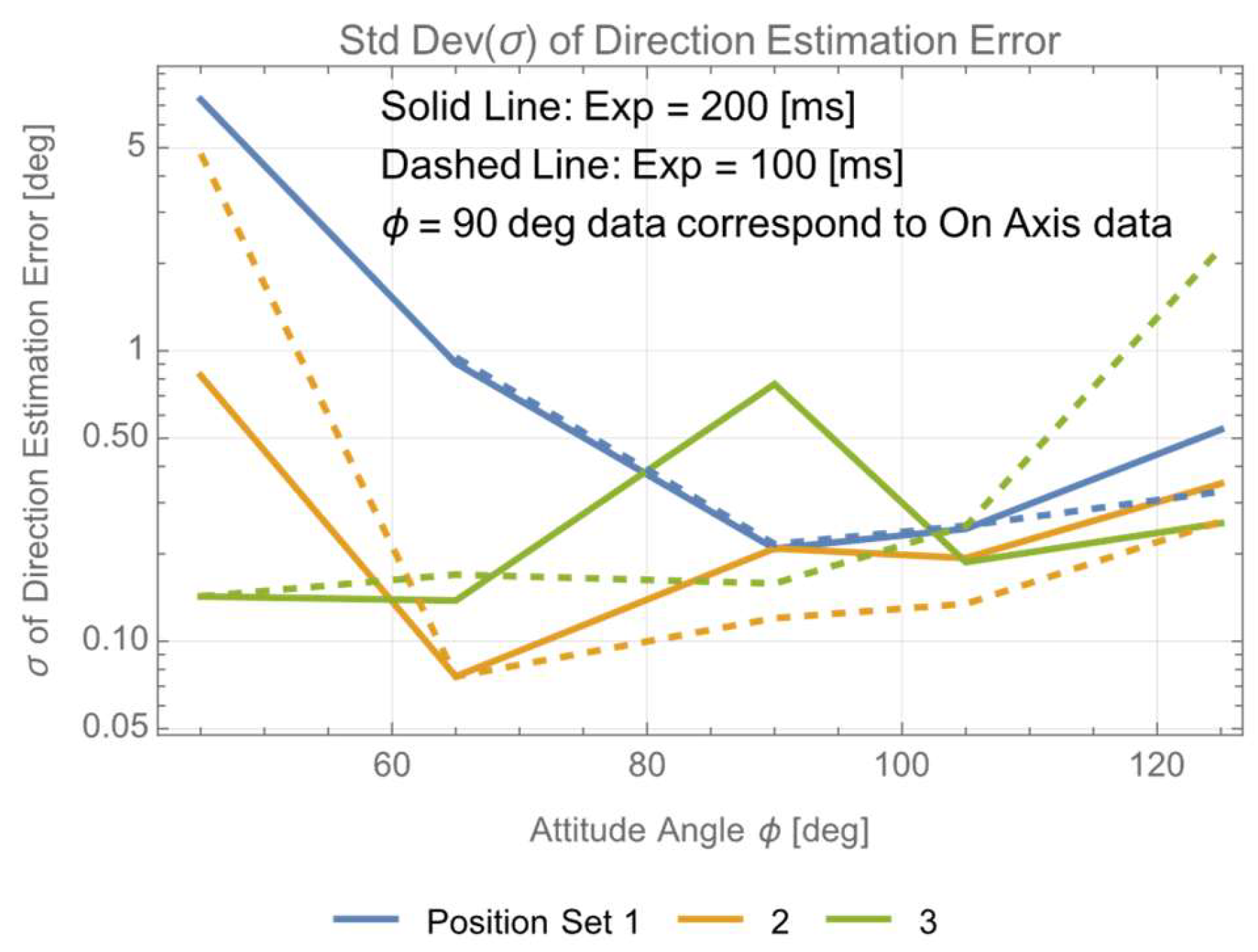

Figure 10 is obtained. The actual experimental data included a constant offset that varied from day to day, and this constant offset is due to errors in the daily setup of the experimental apparatus. In

Figure 10, the accuracy of the direction angle estimation is evaluated by the standard deviation across each position set, excluding this offset.

From

Figure 10, the accuracy of the direction angle

estimation varies with the target’s attitude angle

ϕ, and

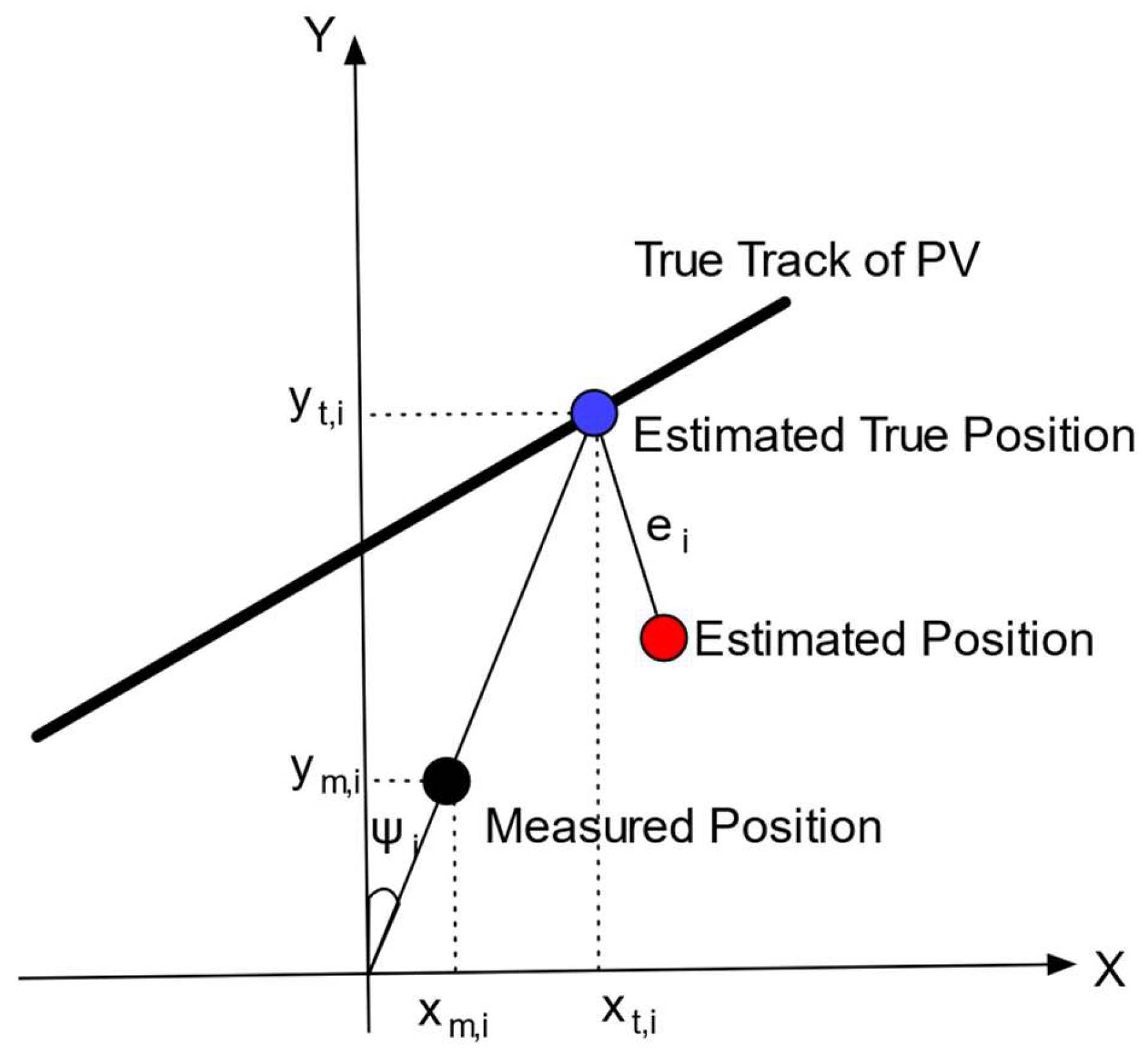

can be estimated with an accuracy of approximately 0.15 degrees when the target is close to the normal direction. From Equation (15), this corresponds to about 1 pixel error in the pixel coordinate system, which can be regarded as the measurement limit. Then, the true position can be estimated by the intersection of the true track of the target, which is known in the experiments and the line

as depicted in

Figure 11.

can be calculated from Equation (14) as the distance between the estimated position by the algorithm and the estimated true position.

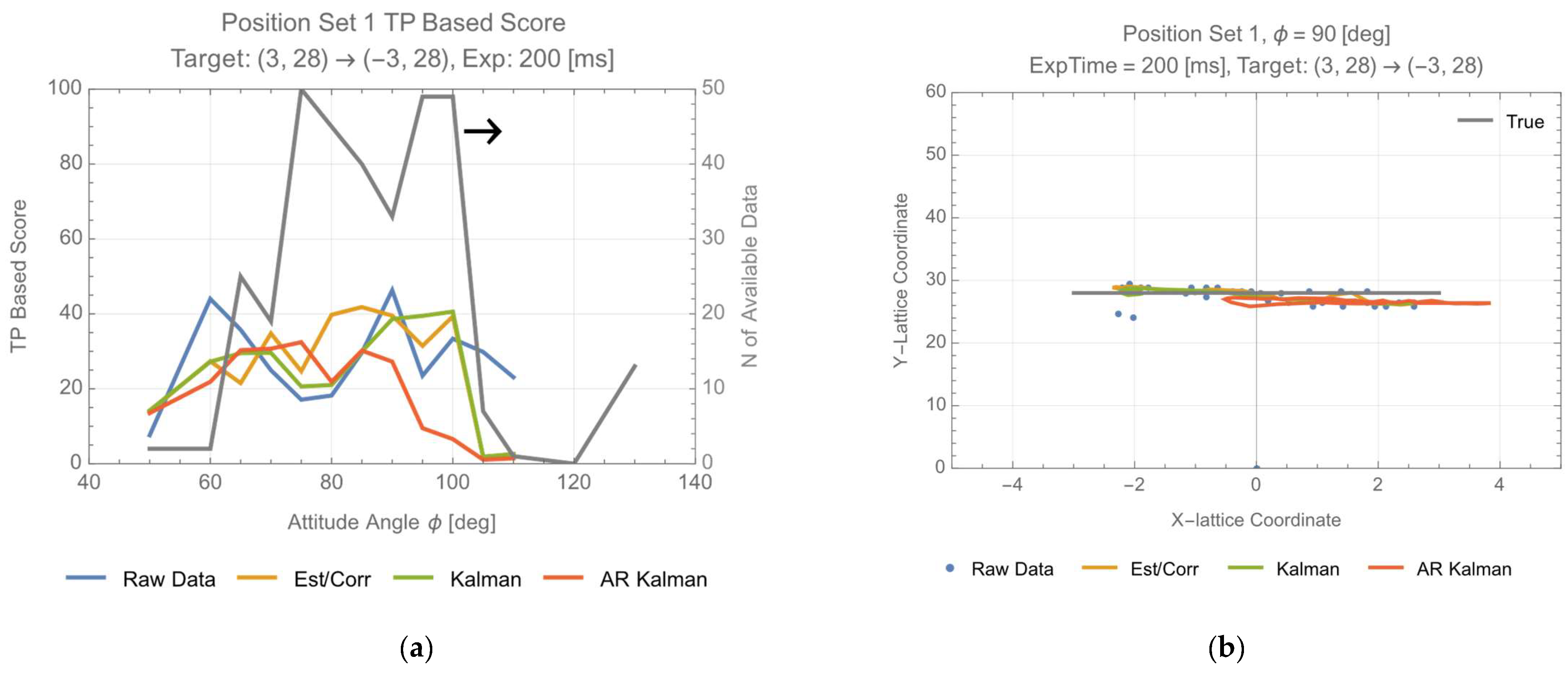
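The true-position estimate described above can be sketched as a line intersection. This is a minimal illustration under assumptions: the camera is at the origin, the direction angle is taken as measured from the Y-axis (so that tan θ = X/Y), and the true track is represented by two points on a straight line. The function names are hypothetical.

```python
import math


def true_position(theta_deg, track_start, track_end):
    """Intersect the direction line through the origin with the true track.

    theta_deg: estimated direction angle in degrees, measured from the Y-axis
               (an assumed convention).
    track_start, track_end: two (X, Y) points defining the true track line.
    """
    theta = math.radians(theta_deg)
    dx, dy = math.sin(theta), math.cos(theta)        # direction of the line
    (x1, y1), (x2, y2) = track_start, track_end
    tx, ty = x2 - x1, y2 - y1                        # direction of the track
    denom = dx * ty - dy * tx
    if abs(denom) < 1e-12:
        raise ValueError("direction line is parallel to the track")
    # Solve s * (dx, dy) = (x1, y1) + u * (tx, ty) for s.
    s = (x1 * ty - y1 * tx) / denom
    return s * dx, s * dy


def position_error(estimate, truth):
    """Distance between an estimated position and the estimated true position."""
    return math.hypot(estimate[0] - truth[0], estimate[1] - truth[1])


# Track along the line Y = 500 mm, target seen at 45 deg from the Y-axis.
x_true, y_true = true_position(45.0, (-100.0, 500.0), (600.0, 500.0))
err = position_error((53.0, 504.0), (50.0, 500.0))
```

Averaging such errors over the tracked data gives the quantity on which the TP-based score is built; the exact form of the score function is not reproduced here.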

4.2.2. Assessment of the Three Algorithms

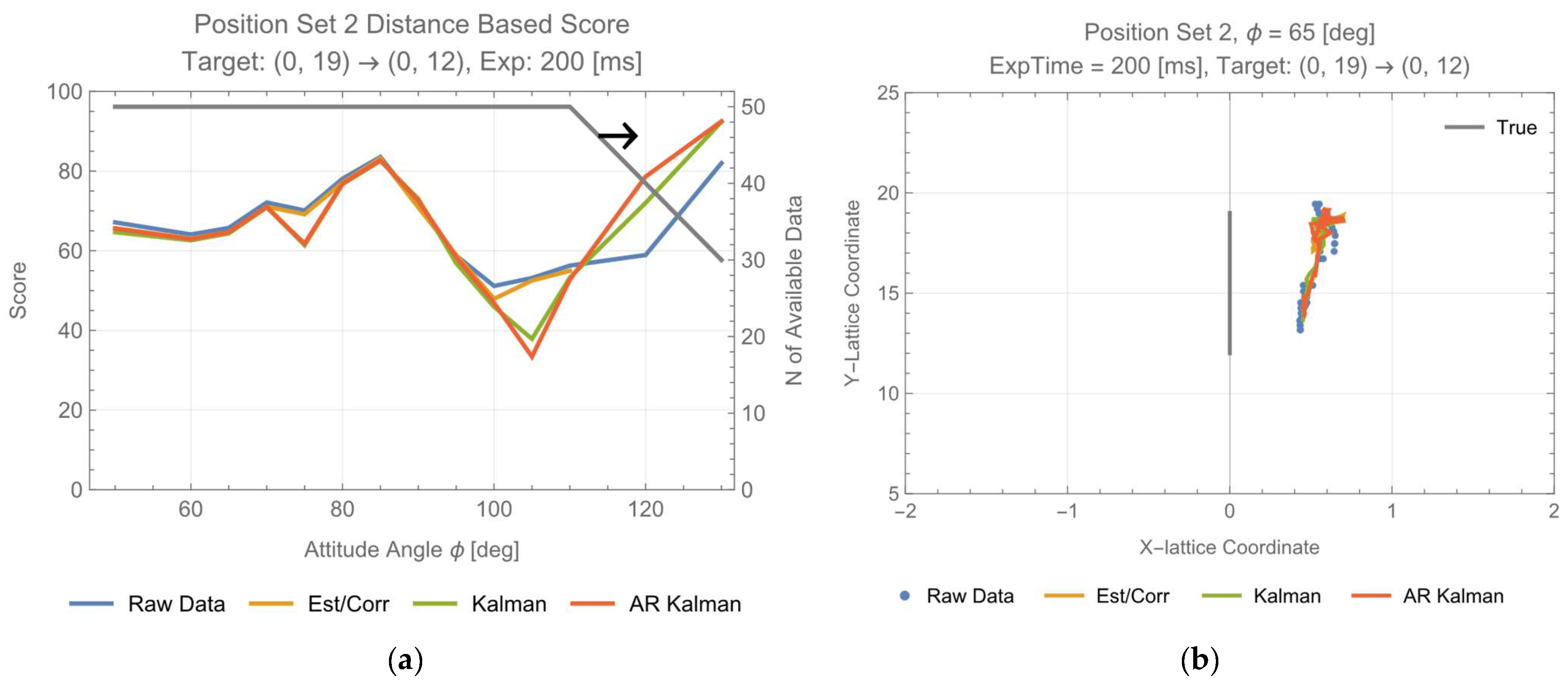

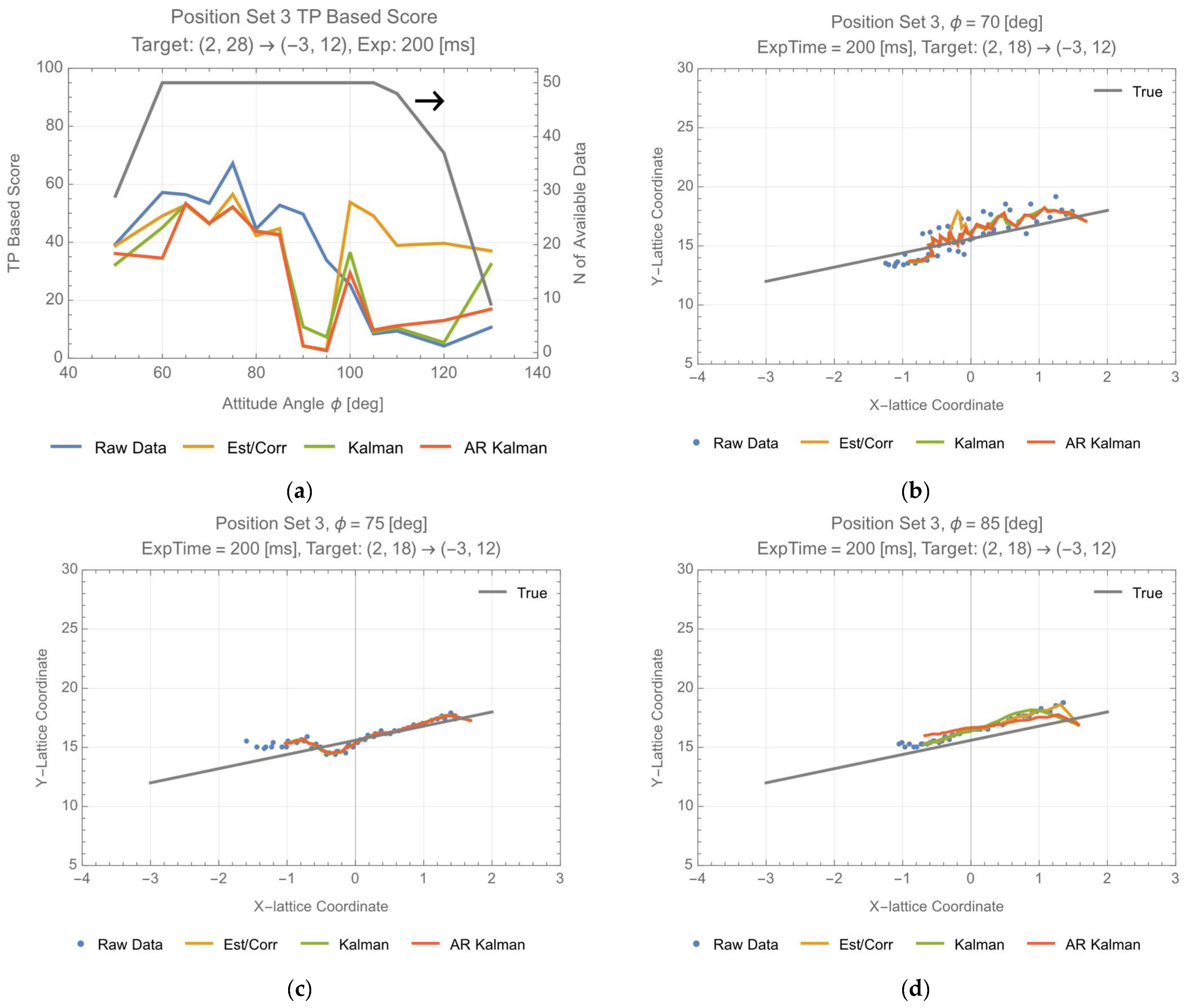

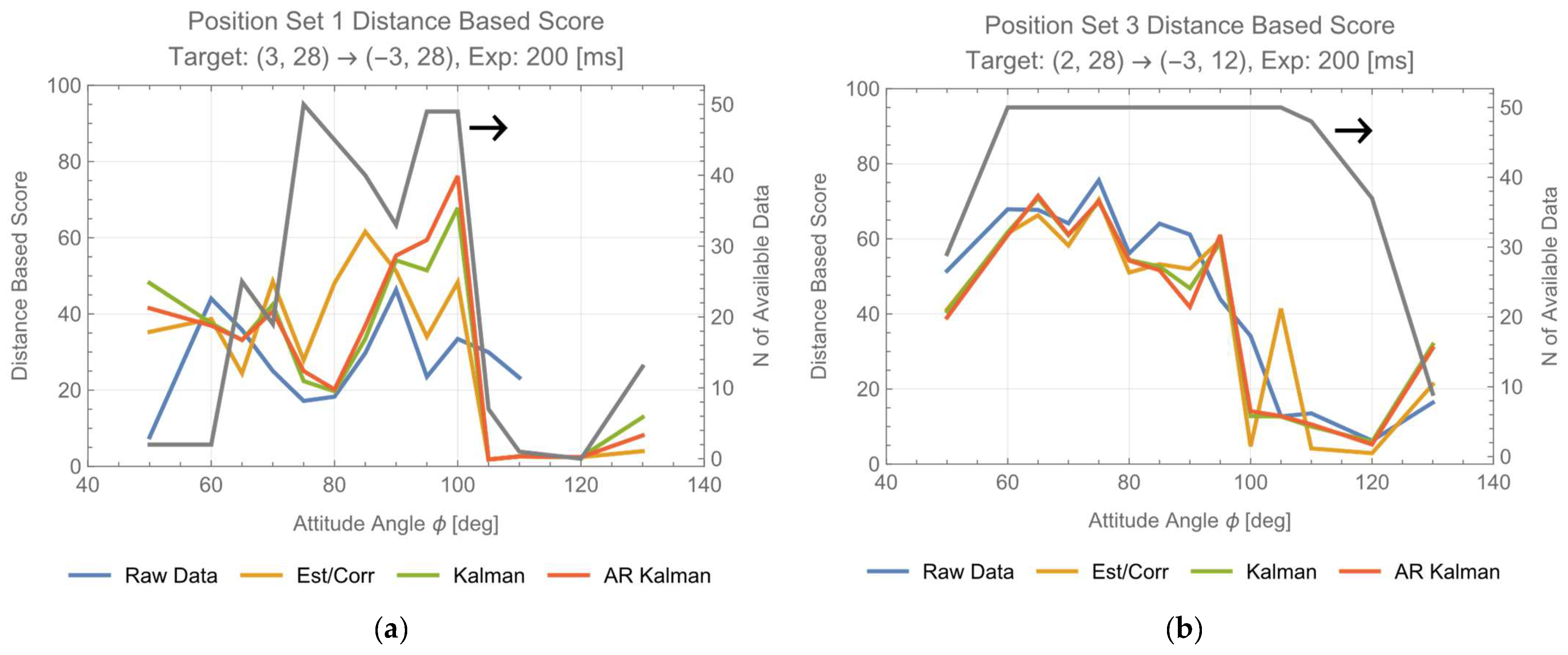

The results of the TP-based score are displayed in Figure 12 (position set 1) and Figure 13 (position set 3). Along with the score evaluation results, the right vertical axis shows the number N of available data that meet the integrity measure. Based on the plots, the values on the horizontal axis at which N reaches its maximum (= 50) are near the normal direction, and the scores seem to increase there. In Figure 12, position set 1 shows many outliers and missing data, suggesting that the correction by the algorithm is effective. However, since the position set 3 data contain fewer outliers and missing data, the improvement from the algorithm correction over the raw data is limited. Although the evaluation results of the three algorithms do not differ significantly, the estimator/corrector, which incurs the additional cost of training, appears to perform slightly better than the uniform motion Kalman filter and the AR Kalman filter.

6. Conclusions

This paper discussed real-time position and attitude estimation for moving targets.

The target’s direction can be accurately estimated with about ±0.15 deg (~±1 px) precision when it is near the normal. This feature can be used to improve the accuracy of position estimation by estimating the X coordinate from the Y coordinate estimation and the direction angle. As a result, within the experimental area of 660 × 200 mm, real-time tracking of the PV confirmed that the target positions were estimated within 17 mm error, and that its attitude was estimated within 10 deg error around the normal. It is worth noting that this accuracy can be improved by using a higher-resolution camera.

A score function based on the position estimation error can be used to compare the three tracking algorithms. Position sets 1 and 3 allow for the estimation of true values using the azimuth angle and the true track, enabling evaluation with the true position (TP)-based score. For the evaluation of position set 2 data, a distance-based score, based on the distance from the true track, can be defined and rationally used. Based on the evaluation results of the score functions for the three algorithms, the estimator/corrector, a supervised model using AR models and thresholds, is generally superior, while there is little difference between the method of using the Kalman filter to estimate the position with a uniform motion model and the method of estimating the AR model with the Kalman filter. The choice of tracking algorithm depends on the system design. If a system allows for the training of a supervised algorithm, then choosing the estimator/corrector would yield generally superior results, particularly near the normal position. However, if training is not an option, then choosing one of the algorithms using the Kalman filter should suffice.

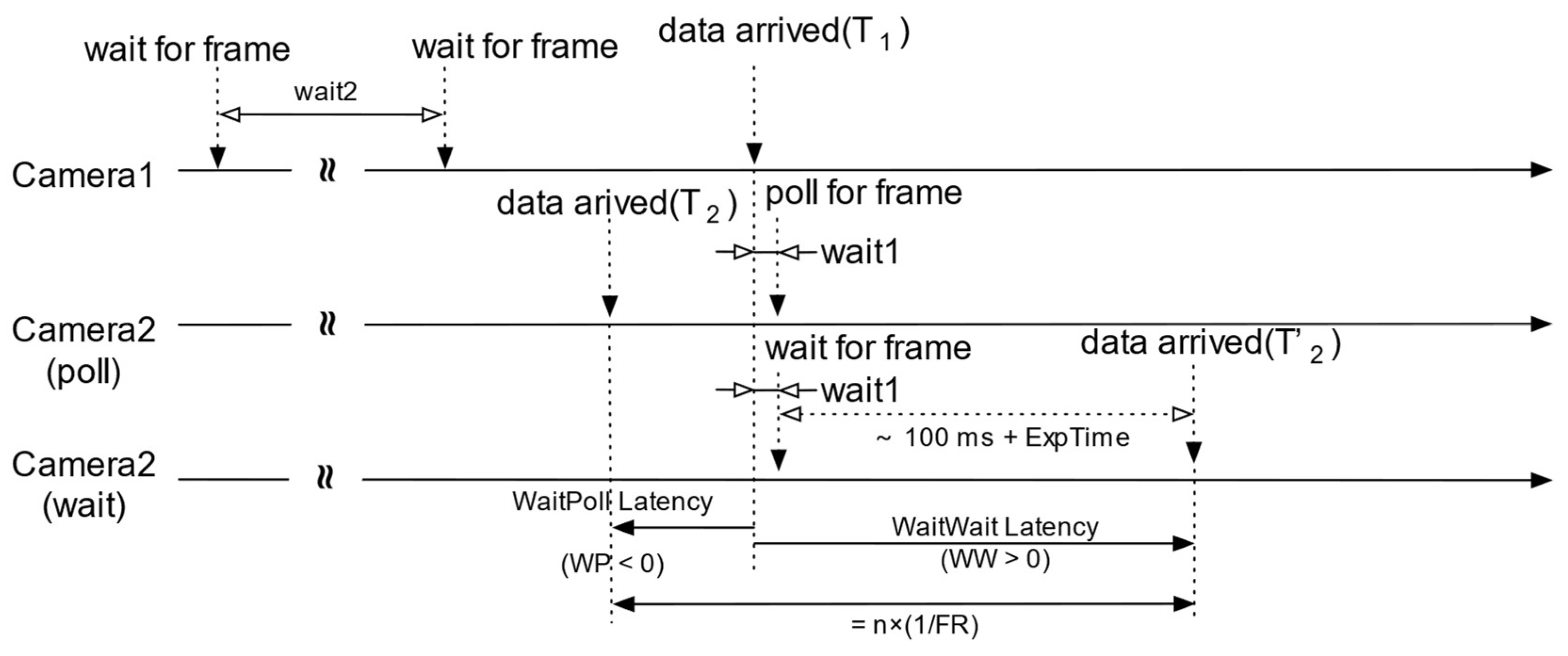

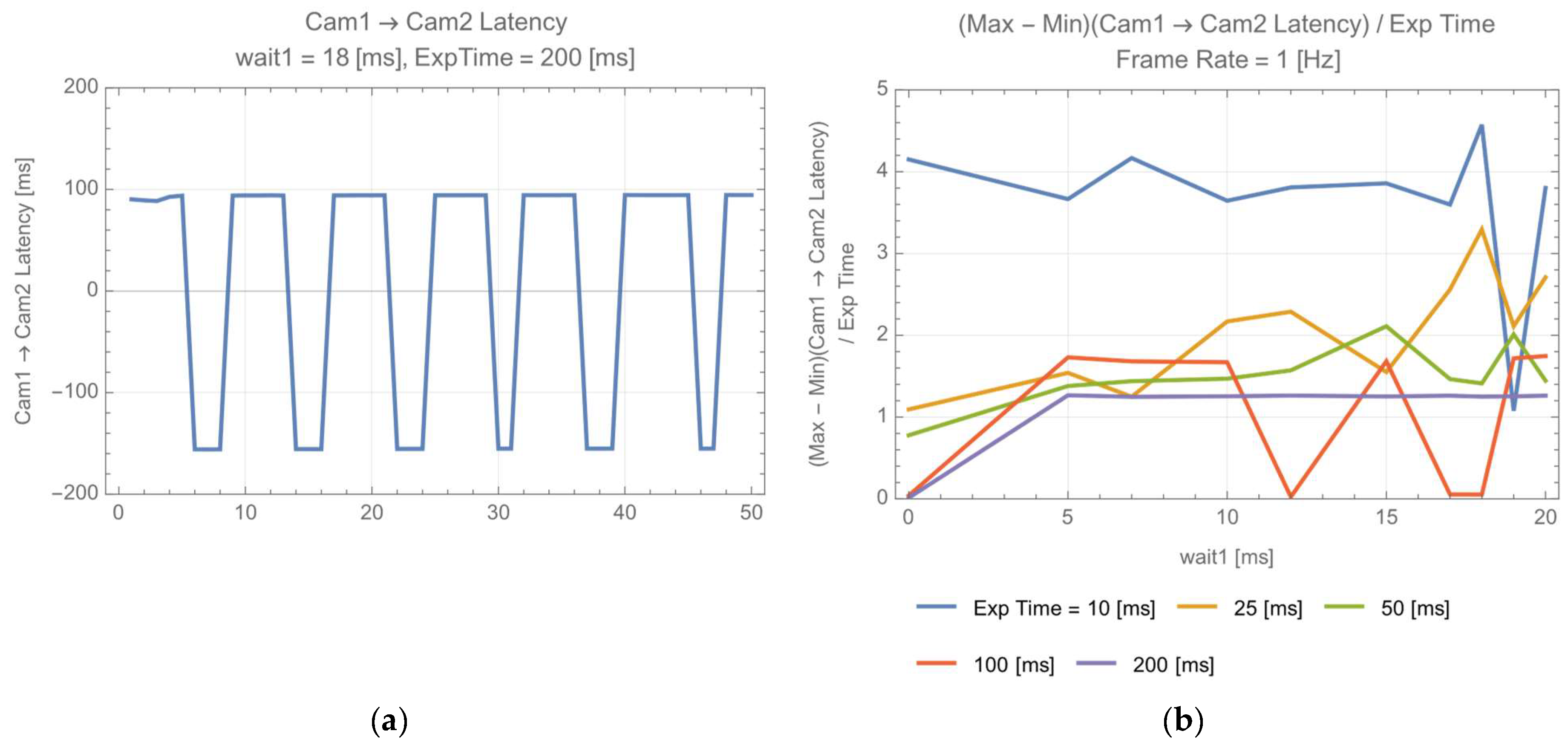

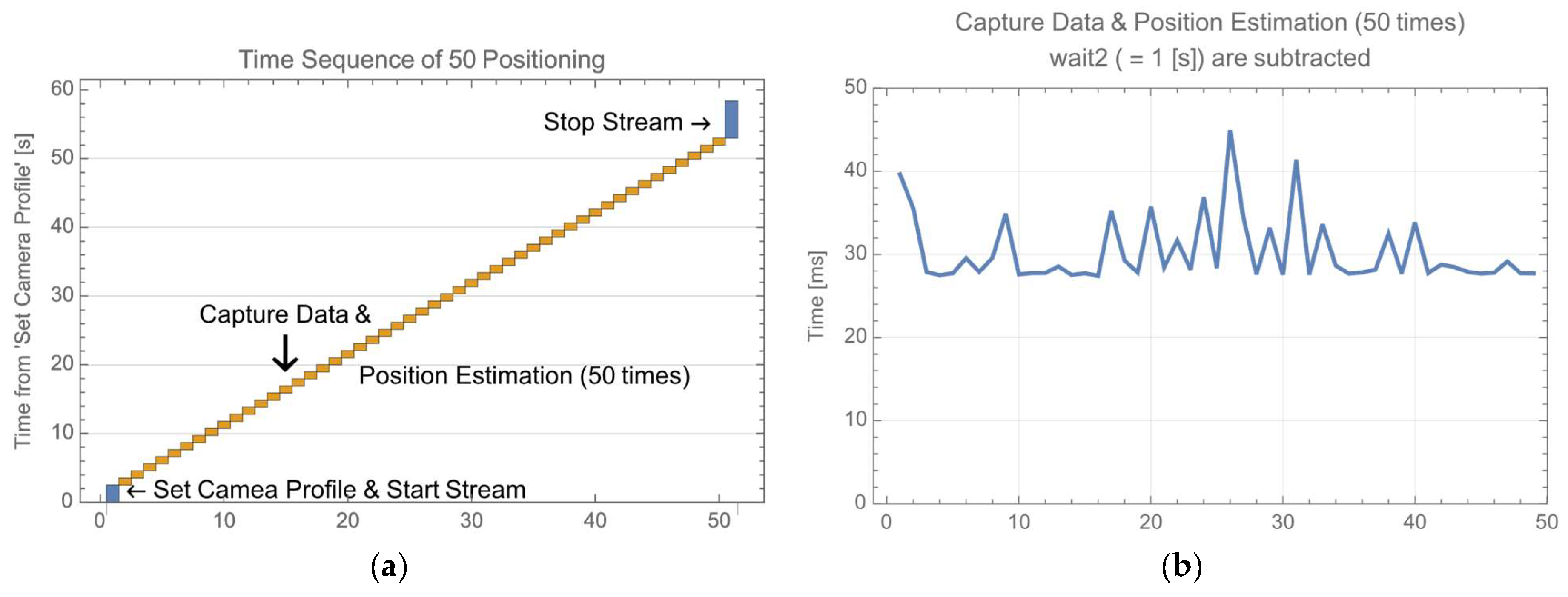

This paper investigated the necessary parameter settings for real-time position and attitude estimation based on an experimental setup that utilizes the Intel D435. During the experiments, certain challenges related to the cameras were identified. To track the target and perform real-time position and attitude estimation, the initial configuration and the start/stop procedures for the camera must be executed within a short enough time relative to the camera’s data rate. Additionally, to minimize the latency between the two cameras corresponding to the absorption and the non-absorption wavelengths, hardware-based synchronization between the cameras is desirable. If the time for starting and stopping the stream can be reduced from 8 s to 100 ms, the total time, including positioning and stream start/stop, would be less than 125 ms. Such a reduction, together with the use of a high-speed PC, would allow attitude estimation data to be generated at up to 4 Hz.

The detection and estimation of PV targets’ position and attitude angles are crucial for the implementation of OWPT systems. A series of studies by the authors have proposed a method addressing a unique and indispensable process for OWPT. From high-level requirements analysis in former research to target tracking in this paper, the conceptual verification of the method has been conducted. These works exhibit an advancement towards the practical implementation of OWPT systems.