Extreme Learning Machine Based on Firefly Adaptive Flower Pollination Algorithm Optimization

Abstract

1. Introduction

- We show how FA-FPA can be used to train an ELM. The method optimizes the input weights and thresholds of the ELM in order to find the best parameter combination.

- Searching for the ELM parameters with FA-FPA helps avoid local minima and overfitting, and improves the generalization capability of the network.

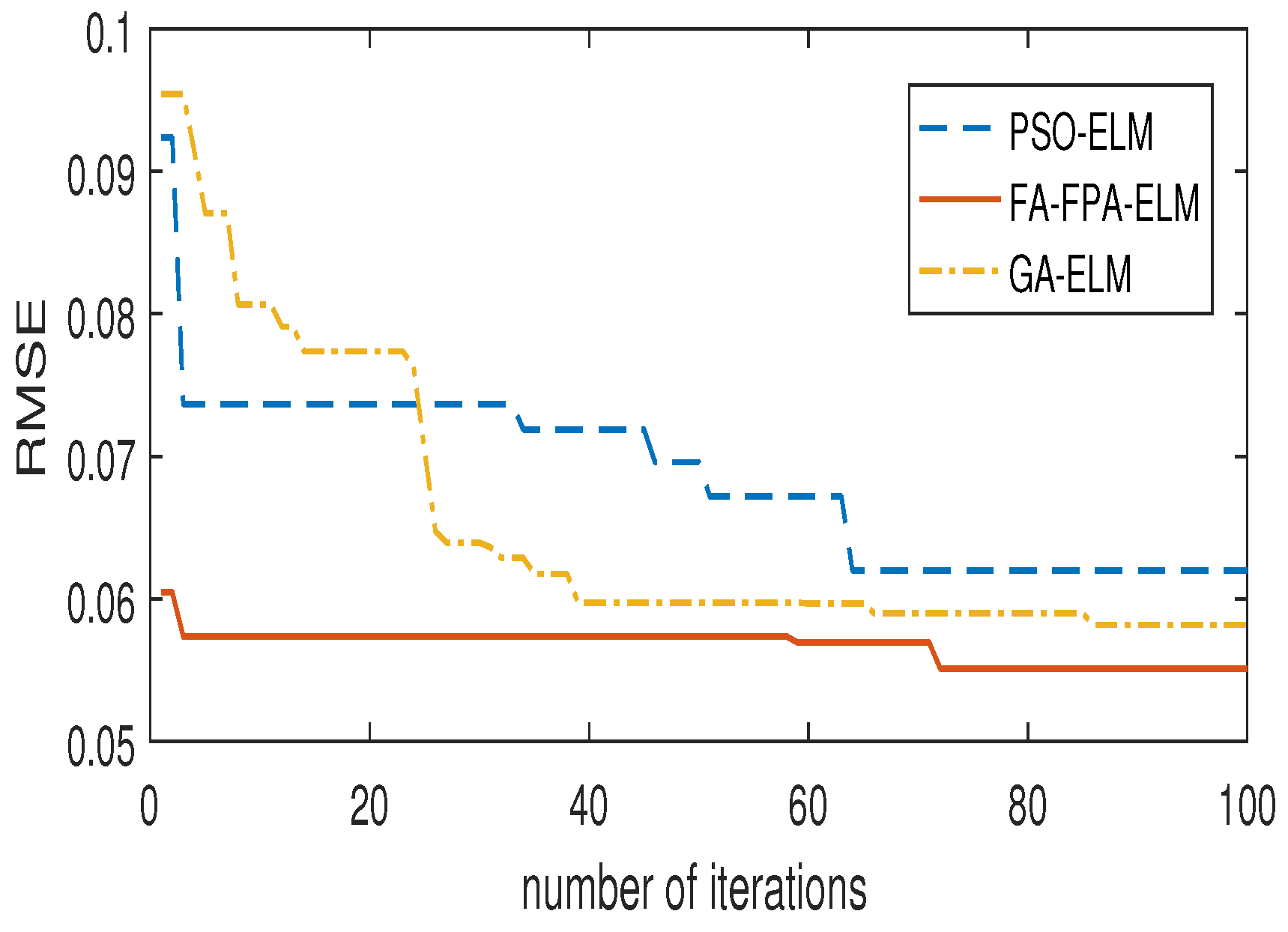

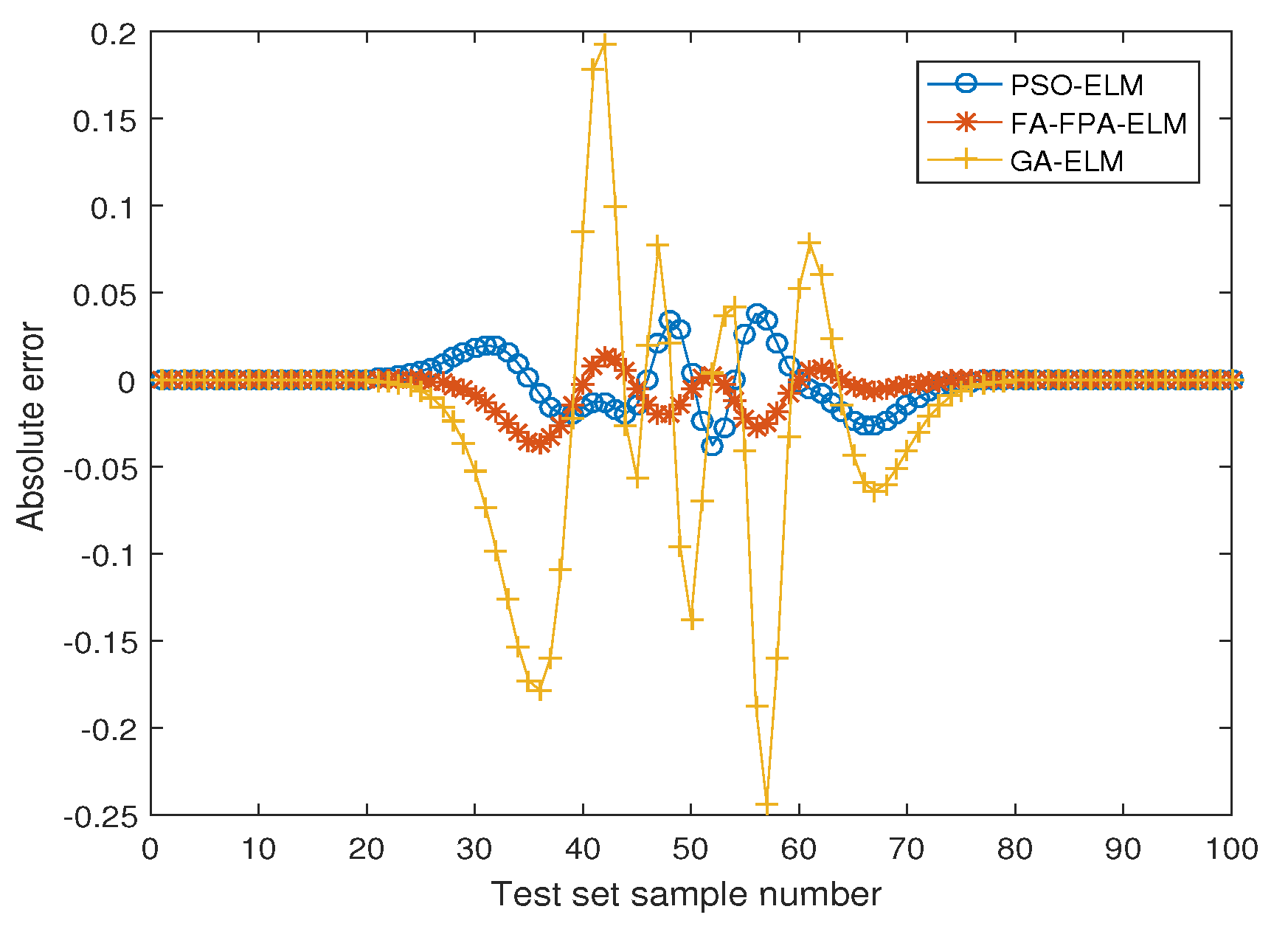

- Experiments on nonlinear function fitting, Iris classification, and personal credit rating show that FA-FPA-ELM achieves better generalization performance than the traditional ELM, FA-ELM, FPA-ELM, GA-ELM, and PSO-ELM algorithms.
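For orientation, the following is a minimal sketch of a plain ELM, the baseline that the contributions above set out to improve. It assumes the standard formulation (random input weights and thresholds, sigmoid hidden layer, output weights from the Moore-Penrose pseudoinverse). The function names, the toy target `sin(pi * x)`, and the sampling range `[-1, 1]` are illustrative assumptions, not taken from the paper.

```python
import numpy as np

def elm_hidden(X, W, b):
    """Hidden-layer output matrix H for inputs X (n_samples x n_features)."""
    return 1.0 / (1.0 + np.exp(-(X @ W + b)))  # sigmoid activation (assumed)

def elm_train(X, T, n_hidden, rng):
    """Plain ELM: random input weights/thresholds, least-squares output weights."""
    W = rng.uniform(-1.0, 1.0, size=(X.shape[1], n_hidden))  # input weights
    b = rng.uniform(-1.0, 1.0, size=n_hidden)                # hidden thresholds
    H = elm_hidden(X, W, b)
    beta = np.linalg.pinv(H) @ T                             # output weights
    return W, b, beta

def elm_predict(X, W, b, beta):
    return elm_hidden(X, W, b) @ beta

# Toy regression problem (illustrative only).
rng = np.random.default_rng(0)
X = np.linspace(-1.0, 1.0, 200).reshape(-1, 1)
T = np.sin(np.pi * X)
W, b, beta = elm_train(X, T, n_hidden=20, rng=rng)
rmse = float(np.sqrt(np.mean((elm_predict(X, W, b, beta) - T) ** 2)))
print(f"training RMSE: {rmse:.4f}")
```

Because `W` and `b` are drawn once and never tuned, the quality of the fit depends on the random draw; these are the parameters that a metaheuristic such as FA-FPA would search over instead.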

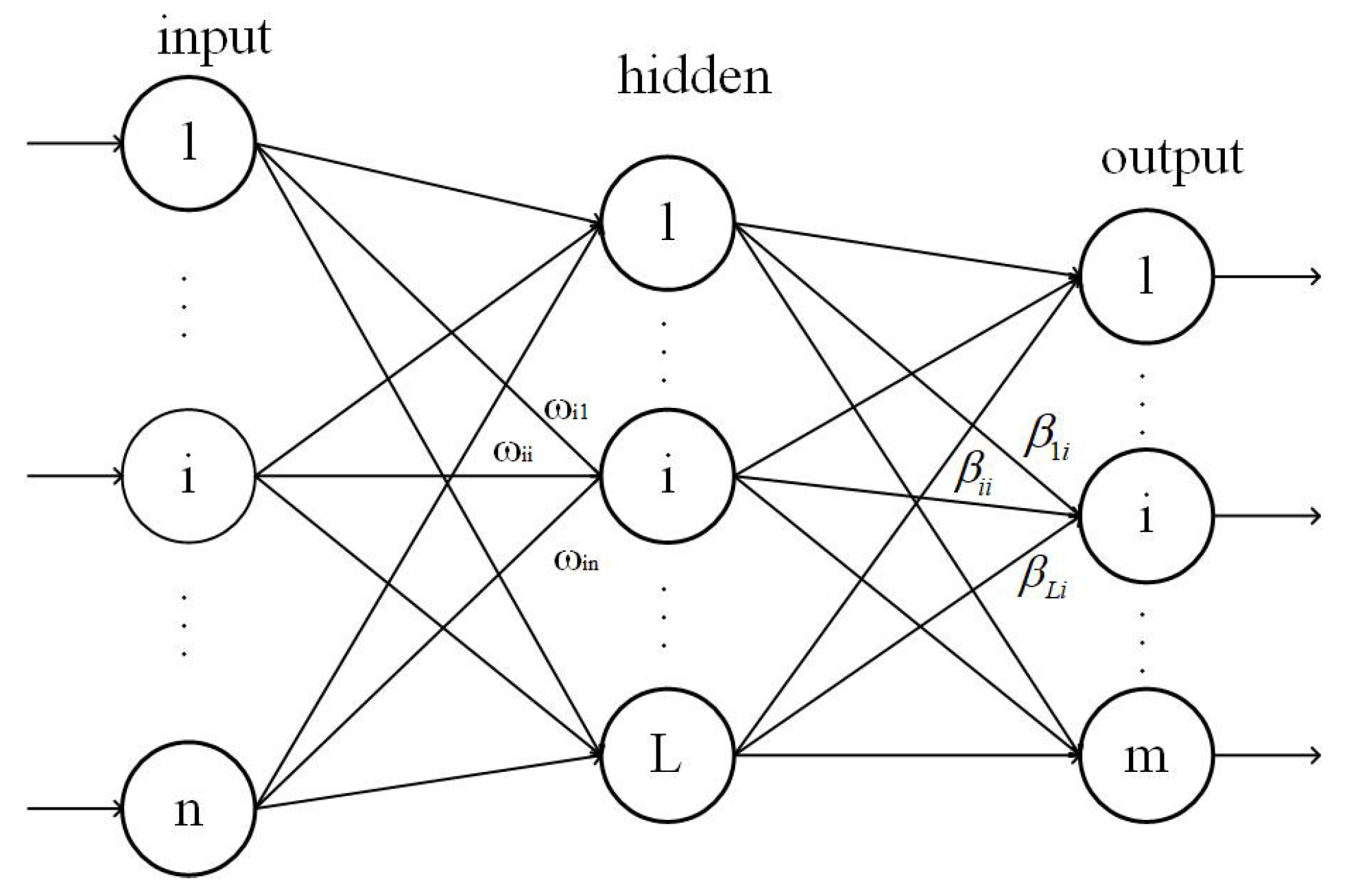

2. Extreme Learning Machine (ELM)

3. Firefly-Based Adaptive Flower Pollination Algorithm (FA-FPA)

3.1. Adaptive Flower Pollination Algorithm (FPA)

- Cross-pollination can be regarded as a global pollination process, in which pollen-carrying pollinators move in a way that follows Lévy flights.

- Self-pollination can be seen as a process of local pollination.

- Flower constancy can be regarded as the reproduction probability, which is proportional to the similarity of the two flowers involved.

- The switch probability controls the balance between local and global pollination. Owing to physical proximity and other factors, the overall pollination process is biased toward local pollination.
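The four rules above can be sketched as one FPA generation. This is a sketch of the standard flower pollination algorithm under stated assumptions, not the paper's exact formulas: the Lévy step is drawn with Mantegna's algorithm, `p_global` is treated as the probability of a global move, and `step_scale`, `lam`, and the sphere test function are hypothetical choices.

```python
import math
import numpy as np

def levy_step(dim, rng, lam=1.5):
    """Draw a heavy-tailed Levy step with Mantegna's algorithm (exponent lam)."""
    sigma = (math.gamma(1 + lam) * math.sin(math.pi * lam / 2)
             / (math.gamma((1 + lam) / 2) * lam * 2 ** ((lam - 1) / 2))) ** (1 / lam)
    u = rng.normal(0.0, sigma, size=dim)
    v = rng.normal(0.0, 1.0, size=dim)
    return u / np.abs(v) ** (1 / lam)

def fpa_step(pop, fitness, best, p_global, rng, step_scale=0.1):
    """One FPA generation for minimization, with greedy replacement."""
    n, dim = pop.shape
    new_pop = pop.copy()
    for i in range(n):
        if rng.random() < p_global:
            # Global (cross-) pollination: Levy flight toward the best flower.
            cand = pop[i] + step_scale * levy_step(dim, rng) * (best - pop[i])
        else:
            # Local (self-) pollination: mix two randomly chosen flowers.
            j, k = rng.choice(n, size=2, replace=False)
            cand = pop[i] + rng.random() * (pop[j] - pop[k])
        if fitness(cand) < fitness(pop[i]):  # keep the candidate only if it is better
            new_pop[i] = cand
    return new_pop

# Usage on a toy sphere function (illustrative only).
sphere = lambda x: float(np.sum(x ** 2))
rng = np.random.default_rng(1)
pop = rng.uniform(-5.0, 5.0, size=(15, 3))
start = min(sphere(x) for x in pop)
for _ in range(100):
    best = min(pop, key=sphere).copy()
    pop = fpa_step(pop, sphere, best, p_global=0.2, rng=rng)
end = min(sphere(x) for x in pop)
print(start, end)
```

A `p_global` below 0.5 makes local moves more frequent, which matches the bias toward local pollination described in the last rule.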

3.2. Firefly Algorithm (FA)

- All fireflies are unisex, so any firefly can be attracted to any other regardless of sex.

- The attraction between fireflies depends only on their brightness and the distance between them.

- The brightness of fireflies is determined by the fitness function.
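A minimal sketch of how these three rules translate into a position update, assuming the standard firefly algorithm: attractiveness decays as `beta0 * exp(-gamma * r^2)` with distance `r`, and a small random perturbation scaled by `alpha` is added to each move. The parameter values and the sphere test function are illustrative assumptions, and the costs are evaluated once per generation as a simplification.

```python
import numpy as np

def firefly_step(pop, fitness, rng, beta0=1.0, gamma=1.0, alpha=0.2):
    """One FA generation for minimization: dimmer fireflies move toward brighter ones."""
    n, dim = pop.shape
    cost = np.array([fitness(x) for x in pop])  # lower cost means brighter
    new_pop = pop.copy()
    for i in range(n):
        for j in range(n):
            if cost[j] < cost[i]:  # firefly j is brighter than firefly i
                r2 = np.sum((new_pop[i] - pop[j]) ** 2)  # squared distance
                beta = beta0 * np.exp(-gamma * r2)       # attractiveness
                new_pop[i] = (new_pop[i]
                              + beta * (pop[j] - new_pop[i])
                              + alpha * (rng.random(dim) - 0.5))
    return new_pop

# Usage on a toy sphere function (illustrative only).
sphere = lambda x: float(np.sum(x ** 2))
rng = np.random.default_rng(2)
pop = rng.uniform(-2.0, 2.0, size=(10, 2))
moved = firefly_step(pop, sphere, rng)
brightest = int(np.argmin([sphere(x) for x in pop]))
print(np.array_equal(moved[brightest], pop[brightest]))
```

In this sketch the brightest firefly has nothing to be attracted to, so it stays where it is for that generation.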

3.3. Firefly-Based Adaptive Flower Pollination Algorithm (FA-FPA)

4. Firefly Adaptive Flower Pollination Extreme Learning Machine Algorithm (FA-FPA-ELM)

5. Numerical Experiments and Comparison

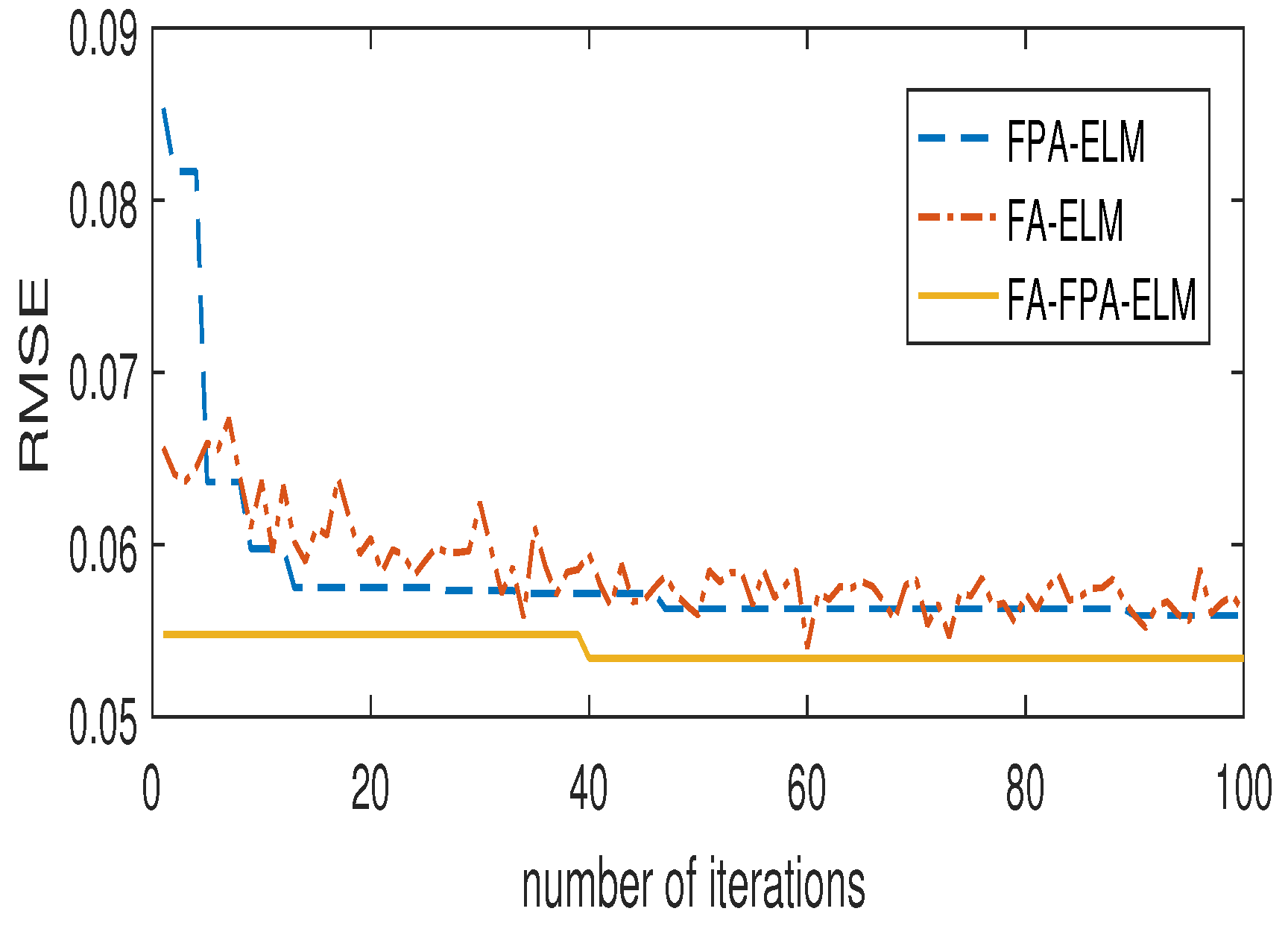

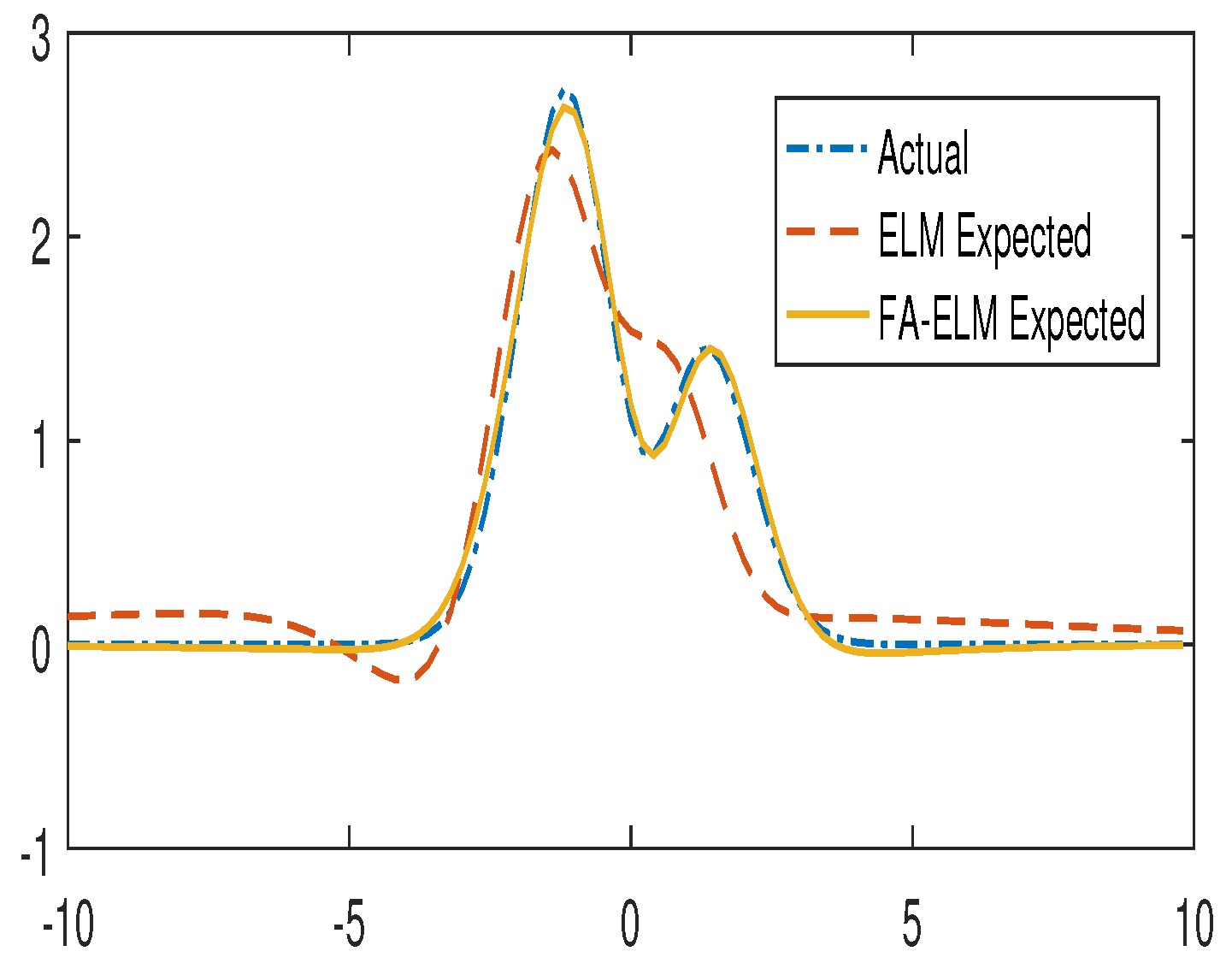

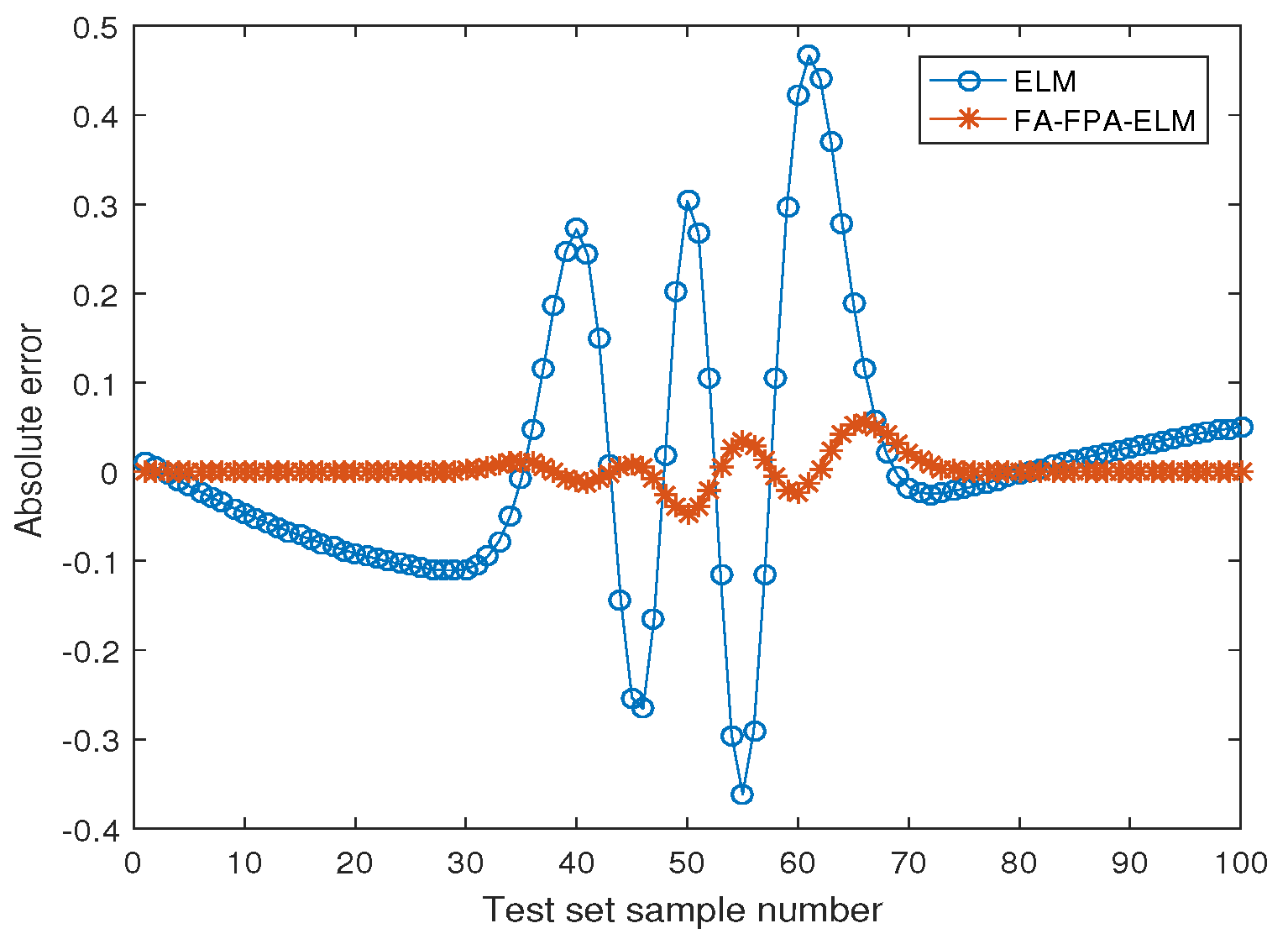

5.1. Nonlinear Function Fitting Problem

5.2. Iris Classification Problem

5.3. Personal Credit Rating

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


FA-FPA algorithm steps:

- Step 1: Initialize the algorithm parameters and set the loop termination condition.

- Step 2: Randomly initialize the positions of the fireflies and calculate the objective function value of each individual.

- Step 3: Use formulas (11) and (13) to determine the direction of movement of each individual.

- Step 4: Update each individual's spatial position according to formula (14).

- Step 5: Calculate the fitness value of each individual based on its updated position.

- Step 6: Generate a random number and calculate the switch probability p according to formula (10).

- Step 7: Generate a random number; if the global-search condition on p holds, conduct a global search according to formula (9).

- Step 8: If the local-search condition holds instead, conduct a local search according to formula (8).

- Step 9: Calculate the fitness value of each pollen to find the current optimal solution.

- Step 10: Check whether the loop termination condition is met. If not, go to Step 3; if it is, go to Step 6.

- Step 11: Output the result; the algorithm ends.
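One possible reading of the steps above is a two-phase search: a firefly phase that produces the starting population, followed by a flower pollination phase with an iteration-dependent switch probability. The sketch below follows that reading under loud assumptions: the update rules are the standard FA and FPA ones, the linear schedule for `p` is a hypothetical stand-in for formula (10), `p` is treated as the probability of a global move, and all parameter values are illustrative.

```python
import math
import numpy as np

def levy(dim, rng, lam=1.5):
    """Levy step via Mantegna's algorithm."""
    sigma = (math.gamma(1 + lam) * math.sin(math.pi * lam / 2)
             / (math.gamma((1 + lam) / 2) * lam * 2 ** ((lam - 1) / 2))) ** (1 / lam)
    return rng.normal(0, sigma, dim) / np.abs(rng.normal(0, 1, dim)) ** (1 / lam)

def fa_fpa(fitness, dim, rng, n=20, fa_iters=10, fpa_iters=50,
           lo=-5.0, hi=5.0, p_max=0.8, p_min=0.2):
    pop = rng.uniform(lo, hi, size=(n, dim))
    # Firefly phase: dimmer individuals move toward brighter ones.
    for _ in range(fa_iters):
        cost = np.array([fitness(x) for x in pop])
        nxt = pop.copy()
        for i in range(n):
            for j in range(n):
                if cost[j] < cost[i]:
                    beta = np.exp(-np.sum((nxt[i] - pop[j]) ** 2))
                    nxt[i] += beta * (pop[j] - nxt[i]) + 0.2 * (rng.random(dim) - 0.5)
        pop = np.clip(nxt, lo, hi)
    # Flower pollination phase, started from the FA population.
    cost = np.array([fitness(x) for x in pop])
    history = [float(cost.min())]
    for t in range(fpa_iters):
        p = p_max - (p_max - p_min) * t / fpa_iters  # hypothetical adaptive schedule
        best = pop[np.argmin(cost)].copy()
        for i in range(n):
            if rng.random() < p:   # global search (Levy flight toward the best)
                cand = pop[i] + 0.1 * levy(dim, rng) * (best - pop[i])
            else:                  # local search (mix two random individuals)
                j, k = rng.choice(n, size=2, replace=False)
                cand = pop[i] + rng.random() * (pop[j] - pop[k])
            cand = np.clip(cand, lo, hi)
            c = fitness(cand)
            if c < cost[i]:        # greedy replacement
                pop[i], cost[i] = cand, c
        history.append(float(cost.min()))
    i_best = int(np.argmin(cost))
    return pop[i_best], float(cost[i_best]), history

sphere = lambda x: float(np.sum(x ** 2))
x_best, f_best, history = fa_fpa(sphere, dim=3, rng=np.random.default_rng(4))
print(f_best)
```

The greedy replacement guarantees that the best cost recorded in `history` never increases during the pollination phase.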

FA-FPA-ELM algorithm steps:

- Step 1: Initialize the algorithm parameters and set the loop termination condition.

- Step 2: Encode the ELM input parameters into individual pollen according to formula (16).

- Step 3: Normalize the data according to formula (15) and randomly initialize the individual positions.

- Step 4: Use formula (19) to calculate the fitness value of each individual.

- Step 5: Use FA to obtain the initial population, generate a random number, and calculate the switch probability p.

- Step 6: Generate a random number; if the global-search condition on p holds, conduct a global search.

- Step 7: If the local-search condition holds instead, conduct a local search according to formula (8).

- Step 8: Calculate the fitness value of each pollen according to formula (19) and find the current optimal solution.

- Step 9: Check whether the loop termination condition is met. If not, go to Step 5; if it is, go to Step 10.

- Step 10: Decode the individual pollen into ELM input parameters for network training.

- Step 11: Output the result; the algorithm ends.
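The encode, normalize, evaluate, and decode steps above can be sketched as follows. The concrete choices are assumptions, since formulas (15), (16), and (19) are not reproduced here: the pollen vector is taken to be the flattened input weights followed by the thresholds, normalization is taken to be min-max scaling, and the fitness is taken to be the training RMSE of the resulting ELM. All function names are hypothetical.

```python
import numpy as np

def encode(W, b):
    """Flatten input weights W and thresholds b into one candidate vector."""
    return np.concatenate([W.ravel(), b])

def decode(vec, n_in, n_hidden):
    """Split a candidate vector back into W (n_in x n_hidden) and b (n_hidden)."""
    W = vec[: n_in * n_hidden].reshape(n_in, n_hidden)
    b = vec[n_in * n_hidden:]
    return W, b

def minmax(X):
    """Column-wise min-max scaling to [0, 1] (assumed normalization)."""
    lo, hi = X.min(axis=0), X.max(axis=0)
    return (X - lo) / np.where(hi > lo, hi - lo, 1.0)

def elm_fitness(vec, X, T, n_hidden):
    """Training RMSE of the ELM whose hidden layer is described by vec."""
    W, b = decode(vec, X.shape[1], n_hidden)
    H = 1.0 / (1.0 + np.exp(-(X @ W + b)))  # sigmoid hidden layer
    beta = np.linalg.pinv(H) @ T            # output weights by pseudoinverse
    return float(np.sqrt(np.mean((H @ beta - T) ** 2)))

# Usage with synthetic data (illustrative only).
rng = np.random.default_rng(3)
X = minmax(rng.uniform(-3.0, 3.0, size=(100, 2)))
T = np.sin(3.0 * X[:, :1]) + X[:, 1:] ** 2
n_hidden = 5
vec = rng.uniform(-1.0, 1.0, size=X.shape[1] * n_hidden + n_hidden)
W, b = decode(vec, X.shape[1], n_hidden)
score = elm_fitness(vec, X, T, n_hidden)
print(score)
```

With this interface, any population-based optimizer only needs to minimize `elm_fitness` over vectors of length `n_in * n_hidden + n_hidden`; the best vector is then decoded once to obtain the final network.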

| Maximum Iteration Number | Training Time (s) | Testing Time (s) | Training RMSE | Training MAE | Testing RMSE | Testing MAE |
|---|---|---|---|---|---|---|
| 5 | 0.6177 | 0.0006 | 0.0648 | 0.0546 | 0.0287 | 0.0175 |
| 10 | 0.9632 | 0.0006 | 0.0638 | 0.0534 | 0.0262 | 0.0127 |
| 20 | 1.6182 | 0.0006 | 0.0607 | 0.0508 | 0.0206 | 0.0097 |
| 50 | 3.6309 | 0.0006 | 0.0589 | 0.0497 | 0.0141 | 0.0078 |
| 100 | 7.0524 | 0.0007 | 0.0583 | 0.0503 | 0.0122 | 0.0063 |
| 500 | 34.0895 | 0.0008 | 0.0579 | 0.0510 | 0.0102 | 0.0052 |
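The RMSE and MAE columns use the usual definitions of root-mean-square error and mean absolute error. The snippet below is a small sketch of those definitions on made-up numbers; the sample values are illustrative and unrelated to the experiments.

```python
import numpy as np

def rmse(y_true, y_pred):
    """Root-mean-square error."""
    diff = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean(diff ** 2)))

def mae(y_true, y_pred):
    """Mean absolute error."""
    diff = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return float(np.mean(np.abs(diff)))

y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.0, 2.0, 3.0, 2.0]
print(rmse(y_true, y_pred))  # 1.0
print(mae(y_true, y_pred))   # 0.5
```

MAE never exceeds RMSE for the same predictions, which is consistent with every row of the table.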

| Hidden Nodes | Training Time (s), ELM | Training Time (s), FA-FPA-ELM | Training RMSE, ELM | Training RMSE, FA-FPA-ELM | Testing RMSE, ELM | Testing RMSE, FA-FPA-ELM |
|---|---|---|---|---|---|---|
| 2 | 0.0076 | 7.8363 | 0.5104 | 0.1041 | 0.5259 | 0.0871 |
| 5 | 0.0090 | 7.6754 | 0.2892 | 0.0582 | 0.2807 | 0.0108 |
| 10 | 0.0086 | 8.2132 | 0.0711 | 0.0576 | 0.0393 | 0.0104 |
| 15 | 0.0094 | 8.9231 | 0.0566 | 0.0565 | 0.0120 | 0.0113 |
| 20 | 0.0115 | 9.5575 | 0.0561 | 0.0560 | 0.0125 | 0.0128 |

| Learning Algorithms | Training Time (s) | Testing Time (s) | Training (RMSE) | Testing (RMSE) |
|---|---|---|---|---|
| ELM | 0.0119 | 0.0017 | 0.2315 | 0.2274 |
| FA-ELM | 3.2371 | 0.0008 | 0.0605 | 0.0194 |
| FPA-ELM | 3.3384 | 0.0011 | 0.0562 | 0.0102 |
| GA-ELM | 5.3392 | 0.0014 | 0.0737 | 0.0459 |
| PSO-ELM | 4.8809 | 0.0032 | 0.0621 | 0.0221 |
| FA-FPA-ELM | 9.5787 | 0.0045 | 0.0580 | 0.0099 |

| Learning Algorithms | Training Time (s) | Testing Time (s) | Training Accuracy | Testing Accuracy |
|---|---|---|---|---|
| ELM | 0.0010 | 0.0051 | 84.60% | 85.80% |
| FA-ELM | 4.5769 | 0.0003 | 85.20% | 84.60% |
| FPA-ELM | 4.7329 | 0.0003 | 97.60% | 95.60% |
| GA-ELM | 4.9440 | 0.0042 | 96.30% | 93.60% |
| PSO-ELM | 4.8876 | 0.0034 | 96.40% | 94.80% |
| FA-FPA-ELM | 9.6465 | 0.0112 | 98.20% | 97.40% |

| Learning Algorithms | Training Time (s) | Testing Time (s) | Training Accuracy | Testing Accuracy |
|---|---|---|---|---|
| ELM | 0.0010 | 0.0053 | 70.37% | 70.07% |
| FA-ELM | 52.0175 | 0.0032 | 71.85% | 70.20% |
| FPA-ELM | 52.7629 | 0.0141 | 73.71% | 73.50% |
| GA-ELM | 70.7881 | 0.0101 | 72.86% | 72.13% |
| PSO-ELM | 64.8698 | 0.0065 | 74.20% | 73.73% |
| FA-FPA-ELM | 123.3332 | 0.0055 | 75.09% | 76.80% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Liu, T.; Fan, Q.; Kang, Q.; Niu, L. Extreme Learning Machine Based on Firefly Adaptive Flower Pollination Algorithm Optimization. Processes 2020, 8, 1583. https://doi.org/10.3390/pr8121583