1. Introduction

China’s medium-depth and shallow coalbed methane has been commercially exploited, and two major coalbed methane industry bases have been built in the Qinshui Basin and on the eastern margin of the Ordos Basin [1]. Deep coalbed methane (deep CBM) is rich in resources and is an important replacement resource for tight gas in China. Since 2019, PetroChina has achieved a breakthrough in deep CBM in the Daning–Jixian block, submitting 76.2 billion cubic metres of proven geological reserves, and daily deep CBM production had reached 4 million cubic metres as of 21 September 2023. In 2022, PetroChina carried out deep CBM appraisal in the Da Niu Di gas field, where the horizontal well ‘Yang Coal 1HF’ achieved a major breakthrough with a daily production of 104,000 m³ and sustained stable production testing. Also in 2022, ‘Deep Coal 1’, the first deep CBM horizontal well in the Linxing block, obtained a high-yield industrial gas flow of 60,000 m³ per day, formally marking the start of CNOOC’s deep CBM exploration and development [2].

Methods for predicting the post-fracturing production of deep coalbed methane wells mainly include analytical methods, reservoir numerical simulation, production decline curve analysis, and traditional statistical time-series prediction methods. Analytical methods rely on many simplifying assumptions to idealise complex conditions, so their prediction accuracy is difficult to guarantee; reservoir numerical simulation depends heavily on accurate geological models and input parameters, and history matching is time-consuming and labour-intensive; decline curve analysis is not applicable to fractured wells with short production histories and frequently changing production regimes; and traditional time-series prediction methods are based on linear statistical models, which cannot accurately capture the nonlinear and non-stationary behaviour of production time series.

Time-series prediction methods can be divided into traditional statistical methods and deep learning methods based on neural networks. Traditional time-series prediction methods based on statistics and stochastic process theory have been successfully applied to dynamic production forecasting for oil and gas wells [3,4,5,6]. These methods are theoretically mature and simple to use; they predict the future production trend by mining the relationships between historical and current production data. The autoregressive (AR), moving average (MA), autoregressive moving average (ARMA), and autoregressive integrated moving average (ARIMA) models are mature time-series forecasting methods. In 2010, Chen et al. [7] established a single-well monthly production forecasting model based on ARIMA, confirming that ARIMA can be used for time-series production forecasting and is highly accurate in short-term forecasting. The following year, Wang et al. [8] combined ARIMA with fractal theory to establish a monthly production prediction model for coalbed methane wells. In 2014, Gupta et al. [9] used ARIMA to build a single-well monthly production prediction model for shale gas wells and compared it with the Duong method; the results showed that ARIMA achieved accuracy comparable to that of the Duong method. In the same year, Olominu et al. [10] established an ARIMA-based single-well cumulative production prediction model and concluded that ARIMA was more accurate than Arps decline analysis in the medium and long term.
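As an illustration of the statistical family described above, the following sketch fits a pure AR(p) model by ordinary least squares and forecasts recursively, feeding each prediction back in as a lag. It is a minimal example under stated assumptions (an evenly sampled series, no differencing and no MA term), so it covers only the AR component of ARIMA; the function names `fit_ar` and `forecast_ar` are hypothetical and are not taken from the cited studies.

```python
import numpy as np

def fit_ar(series, p):
    """Fit y_t = c + a_1*y_{t-1} + ... + a_p*y_{t-p} by ordinary least squares."""
    y = np.asarray(series, dtype=float)
    # Row for target y_t holds [1, y_{t-1}, ..., y_{t-p}].
    X = np.column_stack([np.ones(len(y) - p)] +
                        [y[p - k:len(y) - k] for k in range(1, p + 1)])
    coef, *_ = np.linalg.lstsq(X, y[p:], rcond=None)
    return coef  # [c, a_1, ..., a_p]

def forecast_ar(series, coef, steps):
    """Recursive forecast: each prediction is appended and reused as a lag."""
    history = list(np.asarray(series, dtype=float))
    p = len(coef) - 1
    preds = []
    for _ in range(steps):
        lags = history[-1:-p - 1:-1]  # y_{t-1}, ..., y_{t-p}
        y_next = coef[0] + float(np.dot(coef[1:], lags))
        preds.append(y_next)
        history.append(y_next)
    return preds
```

For example, a series generated by y_t = 10 + 0.8 y_{t−1} is recovered by `fit_ar(y, 1)`. A full ARIMA fit would additionally difference the series and estimate MA terms, typically by maximum likelihood rather than least squares.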

With the development of artificial intelligence, research on machine-learning-assisted time-series production forecasting has advanced steadily. Because of their ‘memory’ and flexible structure, recurrent neural networks (RNNs) and their variants, such as long short-term memory (LSTM) and gated recurrent unit (GRU) networks [11], are often used for multivariate time-series prediction. Deng [12] established an LSTM model of single-well cumulative gas production in a gas field using only the production series as input and found that it predicted far better than ARIMA. Sun et al. [13] compared an LSTM model using tubing pressure as an exogenous variable with Arps decline analysis and found that the LSTM model had a much smaller error. Lee et al. [14] built an LSTM model of monthly shale gas production that accounts for well shut-in time and showed that this multivariate model was more accurate than a univariate model based on production alone. Li (2023) [15] proposed a dynamic production prediction method based on a bidirectional gated recurrent unit (BiGRU) network to address the inability of traditional methods to account for field operations; it outperformed decline curve analysis, traditional time-series prediction, the recurrent neural network, and the unidirectional GRU. Zhu (2022) [16] compared four coalbed methane production prediction models and found that a hybrid deep neural network combining convolutional layers and gated recurrent units performed best; this model was also used to optimise the drainage and production regime. Gao (2022) [17] constructed a GRU-TGCN model to mine implicit time-series information, built the adjacency matrix and feature matrix from the inter-well relationship graph and well gas production data, and tuned the network parameters to forecast production. Liu (2022) [18] used a GRU-MLP network to build a physics-constrained, data-driven dynamic prediction model, optimised its hyperparameters with a genetic algorithm, and applied it to multi-stage fractured horizontal wells in two coalbed methane fields, the Linfen block of the Ordos Basin and the Daning field of the Qinshui Basin, achieving a prediction accuracy above 90%.

Multi-step time-series prediction has long been both a research focus and a challenge in time-series forecasting. Although it is still in its infancy in the petroleum industry, it has attracted growing attention. Lee et al. [14] used an iterative strategy to predict the monthly gas production of shale gas wells from 1 to 20 months ahead. Mohd Razak et al. [19] combined transfer learning and LSTM with an iterative strategy to predict monthly oil, gas, and water production for the next 6 months. However, that study treated the three-phase production as a multivariate output of a single LSTM model, ignoring the fact that different production streams may require different network structures. Chaikine and Gates [20] used a hybrid convolutional and recurrent neural network with a direct strategy to predict annual gas production and water cut for the next 5 years; again, the two outputs came from the same network, ignoring the different structural demands of different tasks. Werneck et al. [21] used a multiple-input–multiple-output (MIMO) strategy to predict daily oil production and bottomhole flowing pressure 30 days ahead, again without considering the coupling between the oil, gas, and water phases. Most of these multi-step models address a single phase and ignore the interaction between oil, gas, and water during multiphase flow, while the few multi-output models do not consider that different production sequences may require different network structures. In summary, post-fracturing dynamic production prediction is a complex problem involving multivariate, multi-step, and multiphase time-series prediction, and suitable model structures and prediction strategies vary greatly across application scenarios.
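The three multi-step strategies named above (iterative, direct, and MIMO) can be contrasted using a deliberately simple linear model in place of a neural network. This is an illustrative sketch, not the method of any cited study; the window length `w`, horizon `H`, and all function names are hypothetical.

```python
import numpy as np

def make_windows(y, w, H):
    """Pair every length-w input window with the H values that follow it (stride 1)."""
    n = len(y) - w - H + 1
    X = np.array([y[s:s + w] for s in range(n)])
    Y = np.array([y[s + w:s + w + H] for s in range(n)])
    return X, Y

def linear_fit(X, Y):
    """Least-squares fit of Y ~ c + X @ a; Y may have one column or H columns."""
    A = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(A, Y, rcond=None)
    return coef

def linear_predict(coef, x):
    return coef[0] + x @ coef[1:]

def iterative_forecast(y, w, H):
    """One one-step model applied H times, feeding each prediction back in."""
    y = np.asarray(y, dtype=float)
    X, Y = make_windows(y, w, 1)
    coef = linear_fit(X, Y[:, 0])
    window = list(y[-w:])
    preds = []
    for _ in range(H):
        nxt = float(linear_predict(coef, np.array(window)))
        preds.append(nxt)
        window = window[1:] + [nxt]
    return preds

def direct_forecast(y, w, H):
    """H separate models: model h maps the last w values straight to step h + 1."""
    y = np.asarray(y, dtype=float)
    X, Y = make_windows(y, w, H)
    x_last = y[-w:]
    return [float(linear_predict(linear_fit(X, Y[:, h]), x_last)) for h in range(H)]

def mimo_forecast(y, w, H):
    """One model with an H-dimensional output, fitted jointly on all steps."""
    y = np.asarray(y, dtype=float)
    X, Y = make_windows(y, w, H)
    return [float(v) for v in linear_predict(linear_fit(X, Y), y[-w:])]
```

With a linear model the direct and MIMO forecasts coincide, because least squares fits each output column independently; the strategies diverge once the H outputs share hidden layers, as in a neural network, which is the situation the multi-output studies above face.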

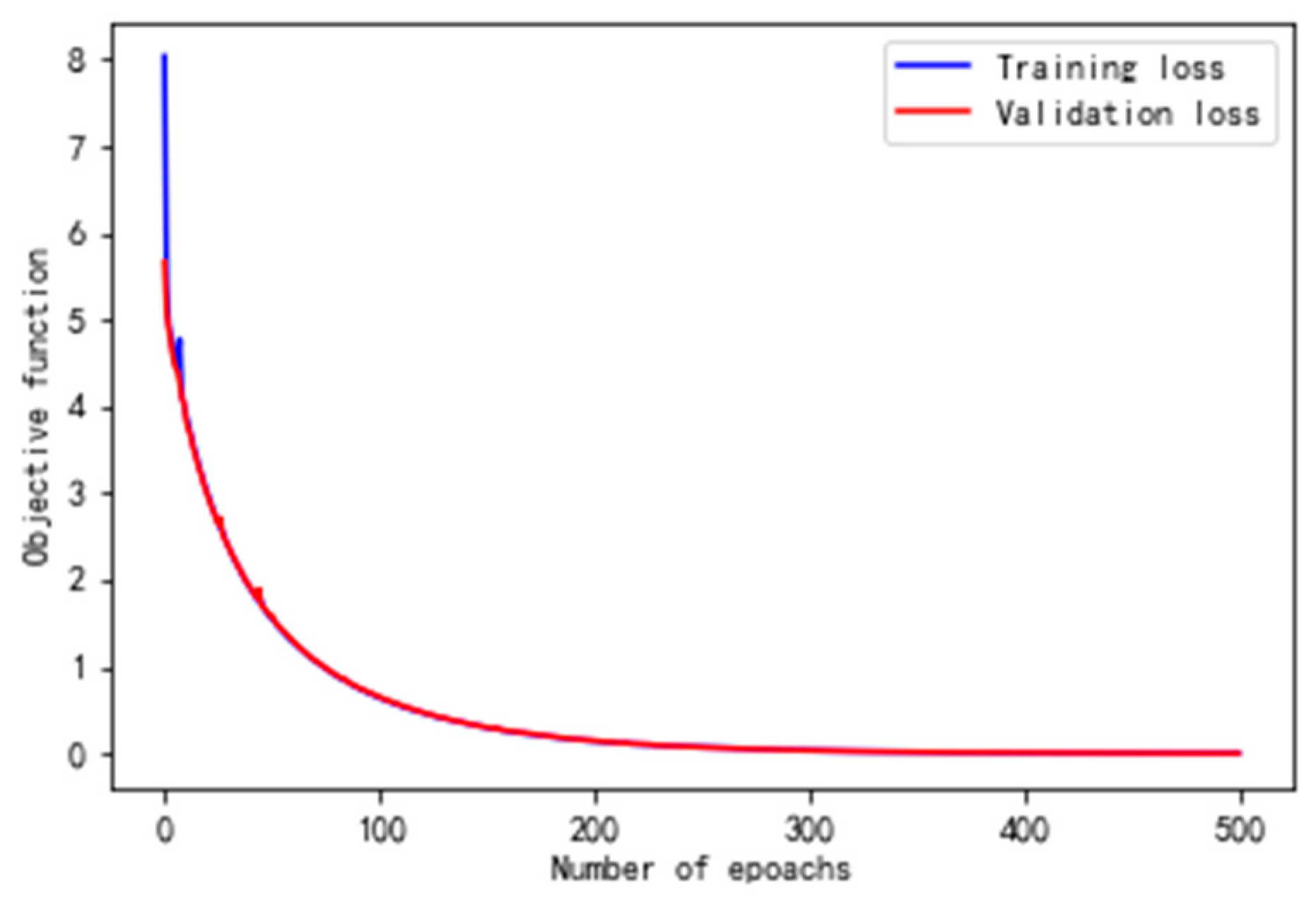

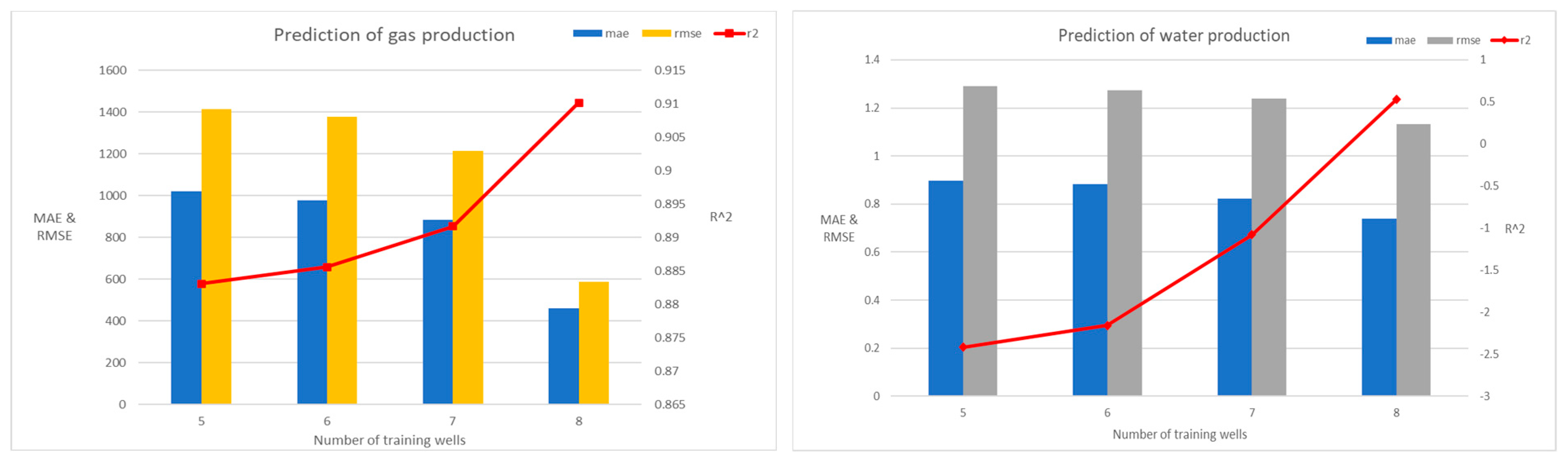

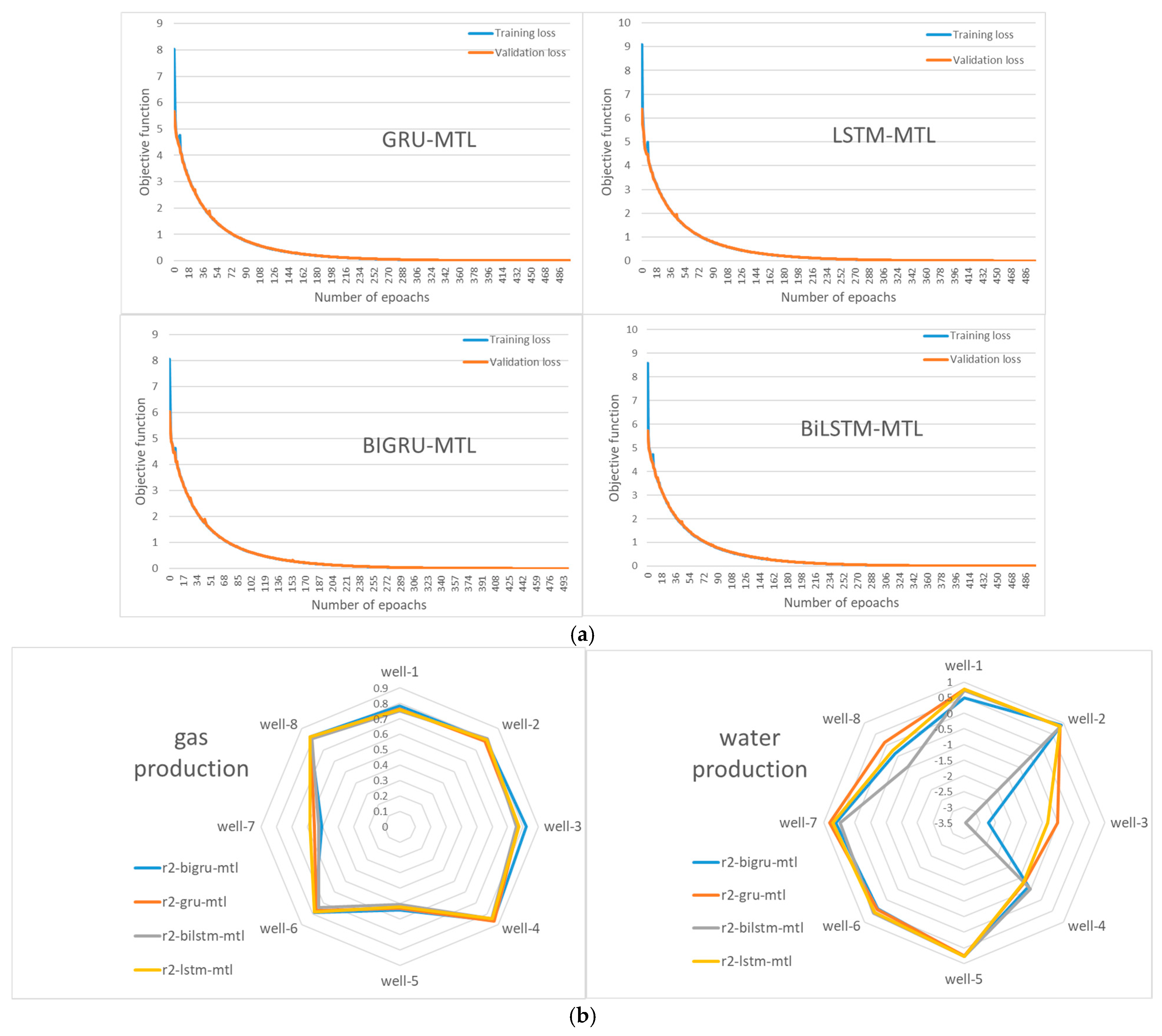

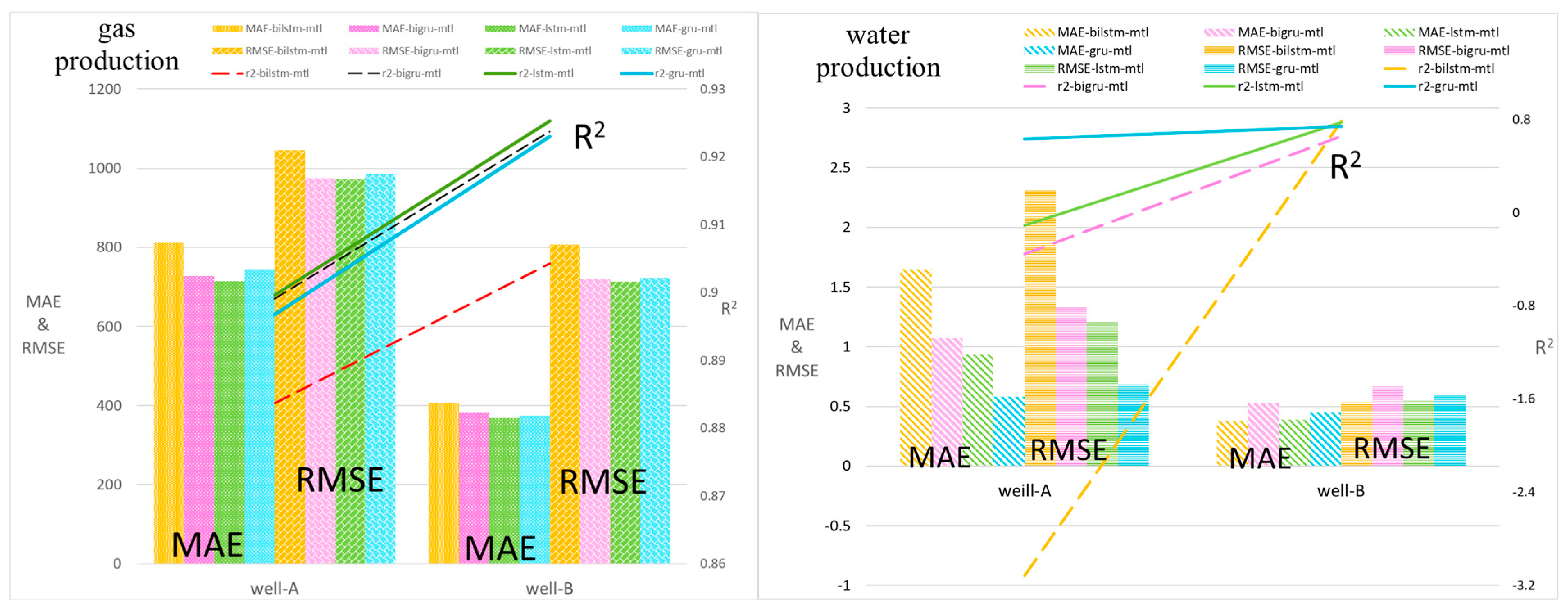

The remainder of this paper is organised as follows. The first section introduces an attention mechanism and a sharing module on top of the GRU model to handle attention allocation and feature sharing across multiple tasks; the second section presents the model framework and the data pre-processing; and the third section configures the computing environment, normalises the data, and constructs time-series samples with a sliding window. MAE, RMSE, and R² are used as the evaluation metrics, and after hyperparameter optimisation the multi-task models are compared. Finally, the preferred model is subjected to an adaptability analysis.

2. Production Prediction Method Based on Multi-Task Machine Learning

2.1. Gated Recurrent Unit (GRU)

GRU is a variant of the RNN that uses a ‘gated structure’ to address the difficulty of retaining information over long sequences. Its structure is simpler than that of LSTM, yet it can often achieve comparable prediction performance. The structure of the GRU unit is shown in Figure 1; it consists of two gates, the update gate and the reset gate.

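The update gate ($z_T$) determines how much of the previous hidden state is retained and how much is replaced by new information:

$$z_T = \sigma\left(W_z \cdot [h_{T-1}, x_T] + b_z\right) \tag{1}$$

where $W_z$ and $b_z$ are the weight matrix and bias vector of the update gate, respectively; $\sigma$ is the sigmoid function; $h_{T-1}$ is the hidden state at the previous time step; and $x_T$ is the input at time step $T$.

The reset gate ($r_T$) determines the degree of forgetting. Its value ranges from 0 to 1, and the closer it is to 0, the more of the previous hidden state is forgotten:

$$r_T = \sigma\left(W_r \cdot [h_{T-1}, x_T] + b_r\right) \tag{2}$$

where $W_r$ and $b_r$ are the weight matrix and bias vector of the reset gate, respectively.

After the input passes through the update gate and reset gate, the candidate hidden state $\tilde{h}_T$ at time step $T$ is computed as

$$\tilde{h}_T = \tanh\left(W_h \cdot [r_T \odot h_{T-1}, x_T] + b_h\right) \tag{3}$$

where $W_h$ and $b_h$ are the weight matrix and bias vector of the candidate hidden state, respectively, and $\odot$ is the Hadamard product.

Finally, the output $h_T$ at time step $T$ is obtained as

$$h_T = (1 - z_T) \odot h_{T-1} + z_T \odot \tilde{h}_T \tag{4}$$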
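The gate computations can be traced step by step in a minimal NumPy sketch of a single GRU cell. It assumes the standard concatenated-input formulation with reset gate $r_T$ and update gate $z_T$, and hypothetical parameter names `W_r`, `W_z`, `W_h`, `b_r`, `b_z`, `b_h`; deep learning frameworks implement the same recurrence with their own parameter layouts, and some swap the roles of $z_T$ and $1 - z_T$.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def gru_step(x_t, h_prev, params):
    """One GRU time step.

    params: W_r, W_z, W_h of shape (hidden, hidden + input);
            b_r, b_z, b_h of shape (hidden,).
    """
    hx = np.concatenate([h_prev, x_t])                       # [h_{T-1}, x_T]
    z = sigmoid(params["W_z"] @ hx + params["b_z"])           # update gate
    r = sigmoid(params["W_r"] @ hx + params["b_r"])           # reset gate
    rhx = np.concatenate([r * h_prev, x_t])                   # [r_T * h_{T-1}, x_T]
    h_cand = np.tanh(params["W_h"] @ rhx + params["b_h"])     # candidate state
    return (1.0 - z) * h_prev + z * h_cand                    # blend old and new

def gru_forward(xs, hidden, params):
    """Run the cell over a sequence xs of shape (T, input), starting from zeros."""
    h = np.zeros(hidden)
    for x_t in xs:
        h = gru_step(x_t, h, params)
    return h
```

Because $h_T$ is a convex combination of $h_{T-1}$ and a tanh output, the hidden state stays within [−1, 1] when it starts at zero; driving $z_T$ towards 0 makes the cell copy its previous state unchanged.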

2.2. Multi-Task Learning

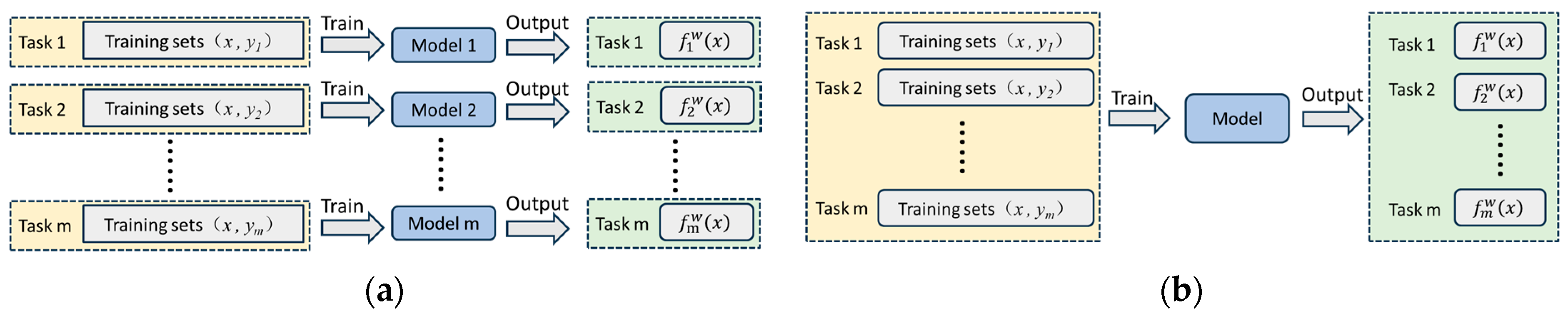

Most current production prediction models are single-task models, i.e., one model predicts only one target variable. If multiple target variables need to be predicted, a separate single-task model must be built for each. This repeated training and optimisation is time-consuming and laborious, and each model can use only its own limited task data. With limited samples, a single-task model struggles to capture the true data distribution and the implicit knowledge behind the production prediction problem, which can easily lead to overfitting and weak generalisation. Multi-task learning can mitigate these problems and improve both prediction performance and computational efficiency.

Figure 2 compares single-task learning and multi-task learning. Single-task learning trains each model independently and ignores the correlations between tasks, whereas multi-task learning trains multiple tasks simultaneously and improves model performance by exploiting representations shared across the training signals of related tasks. In this paper, multi-task learning is defined as follows: given $m$ learning tasks $T_i$ ($i \in [1, m]$), all or some of which are related but not identical, multi-task learning uses the information contained in all $m$ tasks to improve the learning of each task $T_i$.
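To make the contrast concrete, the sketch below shows the simplest multi-task arrangement, hard parameter sharing: one shared hidden layer feeds $m$ task-specific output heads, and the tasks are trained on the sum of their losses. It is a generic illustration assuming NumPy, a tanh hidden layer, and mean-squared-error losses; it is not the cross-stitch architecture adopted in this paper, and all names are hypothetical.

```python
import numpy as np

def init_params(n_in, n_shared, n_tasks, seed=0):
    rng = np.random.default_rng(seed)
    return {
        # One weight matrix used by every task: the shared representation.
        "W_shared": rng.normal(scale=0.1, size=(n_in, n_shared)),
        # One small output head per task.
        "heads": [rng.normal(scale=0.1, size=(n_shared, 1)) for _ in range(n_tasks)],
    }

def forward(params, X):
    """Return one prediction array of shape (n_samples, 1) per task."""
    shared = np.tanh(X @ params["W_shared"])
    return [shared @ W_head for W_head in params["heads"]]

def joint_loss(preds, targets):
    """Sum of per-task mean squared errors; every task's error reaches W_shared."""
    return sum(float(np.mean((p - t) ** 2)) for p, t in zip(preds, targets))
```

Because `W_shared` appears in every task's prediction, the gradient of `joint_loss` with respect to it accumulates contributions from all tasks, which is how related tasks inform one another; a set of single-task models would instead keep a separate shared layer per task.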

The definition makes clear that task relevance is a key element of multi-task learning. The selected tasks should be interrelated so that each can use the useful information contained in the others to improve performance, whereas unrelated or weakly related tasks can obscure valuable knowledge and degrade prediction performance. In production prediction for fractured wells, task relevance must be judged on the basis of domain expertise and an understanding of the production prediction task.

The mechanisms by which multi-task learning improves generalisation with limited samples can be summarised as follows [22]:

(1) From the perspective of data volume, multi-task learning pools samples from multiple tasks, increasing the amount of data available for model training and alleviating the limited sample problem to some extent;

(2) For finite samples with high-dimensional inputs, it is difficult for machine learning models to distinguish valuable features from noise, resulting in poor generalisation. In multi-task learning, other tasks can provide additional information to help the main task determine which features are useful, thus allowing the model to focus more on the truly critical features;

(3) Due to complex data structures and domain-specific non-linearities, it is difficult for some tasks to extract features efficiently, while for other tasks, features may be easily extracted. Multi-task learning can help one task learn features by ‘eavesdropping’ on another task. In other words, one task can learn the required features directly from another task, solving the problem of difficult feature extraction;

(4) By biasing the model towards features that are recognised by the majority of tasks, multi-task learning acts as a regulariser, thus reducing the risk of overfitting and improving resistance to noise.

Based on the above analysis, embedding multi-task learning into a production prediction model trained on limited samples can alleviate the problems of limited data and poor generalisation.

2.3. Shared Module Design

For a multi-task model, determining the partition structure and the degree of sharing between tasks is both crucial and challenging, and which layers should be shared and which should be task-specific depends on the data and tasks. This paper adopts the cross-stitch network [23] to build the sharing module. The cross-stitch network connects the task-specific networks through learned linear combinations of their representations and determines the partition structure and degree of sharing automatically through end-to-end learning, which avoids having to design the model structure manually. The core of the cross-stitch network is the cross-stitch unit, whose principle is shown in Figure 3: each task has its own independent network, and information is shared by inserting cross-stitch units between the layers of the different task networks.

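Taking two-task learning as an example, the cross-stitch unit can be described as

$$\begin{bmatrix} \tilde{x}_1^{ij} \\ \tilde{x}_2^{ij} \end{bmatrix} = \begin{bmatrix} \alpha_{11} & \alpha_{12} \\ \alpha_{21} & \alpha_{22} \end{bmatrix} \begin{bmatrix} x_1^{ij} \\ x_2^{ij} \end{bmatrix} \tag{5}$$

where $x_1^{ij}$ and $x_2^{ij}$ are the feature maps of task 1 and task 2 at $(i, j)$, respectively; $\alpha$ is the cross-stitch unit, whose off-diagonal elements $\alpha_{12}$ and $\alpha_{21}$ control the degree of sharing: the smaller their values, the lower the degree of sharing between tasks, and when $\alpha_{12} = \alpha_{21} = 0$ the layer at $(i, j)$ is task-specific, so the learned values determine the partition structure of the network and the degree of sharing; and $\tilde{x}_1^{ij}$ and $\tilde{x}_2^{ij}$ are the feature maps of task 1 and task 2 after passing through the cross-stitch unit, respectively.

End-to-end learning is achieved by computing the partial derivatives of the loss function $L$ with respect to the inputs of task 1 and task 2 and to the cross-stitch parameters, as in Equations (6)–(8):

$$\frac{\partial L}{\partial x_1^{ij}} = \alpha_{11} \frac{\partial L}{\partial \tilde{x}_1^{ij}} + \alpha_{21} \frac{\partial L}{\partial \tilde{x}_2^{ij}} \tag{6}$$

$$\frac{\partial L}{\partial x_2^{ij}} = \alpha_{12} \frac{\partial L}{\partial \tilde{x}_1^{ij}} + \alpha_{22} \frac{\partial L}{\partial \tilde{x}_2^{ij}} \tag{7}$$

$$\frac{\partial L}{\partial \alpha_{kl}} = \frac{\partial L}{\partial \tilde{x}_k^{ij}}\, x_l^{ij}, \quad k, l \in \{1, 2\} \tag{8}$$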
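The forward mixing of the cross-stitch unit and the gradients it passes back can be written as a short NumPy sketch. It assumes a single 2 × 2 matrix `alpha` shared by all positions (i, j) of a layer, so the gradient with respect to each α entry sums the per-position terms; the function names are hypothetical, and in a real model these gradients would come from a framework's automatic differentiation.

```python
import numpy as np

def cross_stitch_forward(x1, x2, alpha):
    """Mix the two tasks' feature maps: [x1~, x2~] = alpha @ [x1, x2] at every position."""
    x1_new = alpha[0, 0] * x1 + alpha[0, 1] * x2
    x2_new = alpha[1, 0] * x1 + alpha[1, 1] * x2
    return x1_new, x2_new

def cross_stitch_backward(x1, x2, alpha, g1, g2):
    """Given g1 = dL/dx1~ and g2 = dL/dx2~, return dL/dx1, dL/dx2 and dL/dalpha."""
    dx1 = alpha[0, 0] * g1 + alpha[1, 0] * g2   # gradient reaching task 1's layer
    dx2 = alpha[0, 1] * g1 + alpha[1, 1] * g2   # gradient reaching task 2's layer
    # alpha is shared by all positions, so its gradient sums over them.
    dalpha = np.array([
        [np.sum(g1 * x1), np.sum(g1 * x2)],
        [np.sum(g2 * x1), np.sum(g2 * x2)],
    ])
    return dx1, dx2, dalpha
```

One reasonable choice, assumed here rather than taken from the paper, is to initialise `alpha` near the identity (for example, 0.9 on the diagonal and 0.1 off it), so that training starts from mostly task-specific layers and the learned off-diagonal values then show how much sharing each layer needs.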

2.4. Design of the Loss Function

Multi-task learning makes accurate and efficient predictions by optimising the losses of multiple tasks simultaneously, and the performance of the whole model depends strongly on the relative weights of the individual losses. The traditional approach uses the weighted linear sum of all task losses as the total loss, as shown in Equation (9), with the weights typically set to $1/m$ or tuned by hand; it is difficult to find the optimal relative weights manually. In addition, loss magnitudes differ between tasks, so a task with a large loss may dominate the minimisation while the other tasks barely participate in learning, leaving them insufficiently trained.

$$L_{\text{total}} = \sum_{i=1}^{m} \lambda_i L_i \tag{9}$$

where $L_{\text{total}}$ is the total loss function of the multi-task model, $L_i$ and $\lambda_i$ are the loss function and weight of the $i$-th task, respectively, and $m$ is the number of tasks.

Because the optimal weight of a task depends on the magnitude of its noise, this study introduces homoskedastic uncertainty [24] to weight the tasks, which addresses the difficulty of determining the weights of the multi-task loss. In Bayesian modelling, uncertainty can be divided into epistemic uncertainty and aleatoric uncertainty. Epistemic uncertainty describes uncertainty in the model parameters: the model has low confidence on ‘unseen’ data because of insufficient training data, and this uncertainty can be reduced by adding training data. Aleatoric uncertainty refers to the noise inherent in the observations, which cannot be eliminated by adding training data because the noise is random. Aleatoric uncertainty can be further divided into heteroskedastic uncertainty, which depends on the input data, and homoskedastic uncertainty, which depends on the task rather than the input. Homoskedastic uncertainty is therefore also known as task uncertainty: it is constant for all input data but varies between tasks, and this study uses it to weight the tasks when maximising the Gaussian likelihood.

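For a regression problem, a probabilistic model can be defined as

$$p\left(y \mid f^{W}(x)\right) = \mathcal{N}\left(f^{W}(x), \sigma^{2}\right) \tag{10}$$

where $f^{W}(x)$ is the output of the neural network with weights $W$ and input $x$, $y$ is the observation, and $\mathcal{N}$ is the normal distribution with mean $f^{W}(x)$ and scale parameter (observation noise) $\sigma$.

Its logarithm can be written as

$$\log p\left(y \mid f^{W}(x)\right) \propto -\frac{1}{2\sigma^{2}} \left\| y - f^{W}(x) \right\|^{2} - \log \sigma \tag{11}$$

where $\left\| y - f^{W}(x) \right\|^{2}$ represents the loss.

The multi-task Gaussian likelihood for $m$ tasks is

$$p\left(y_1, \ldots, y_m \mid f^{W}(x)\right) = \prod_{i=1}^{m} \mathcal{N}\left(y_i;\, f_i^{W}(x), \sigma_i^{2}\right) \tag{12}$$

where $y_1, \ldots, y_m$ are the observations of task 1, …, task $m$, respectively, and $f_i^{W}(x)$ is the network output for task $i$.

The total regression multi-task loss is then derived as

$$L\left(W, \sigma_1, \ldots, \sigma_m\right) = -\log p\left(y_1, \ldots, y_m \mid f^{W}(x)\right) \propto \sum_{i=1}^{m} \left( \frac{1}{2\sigma_i^{2}} L_i(W) + \log \sigma_i \right) \tag{13}$$

where $L_i(W) = \left\| y_i - f_i^{W}(x) \right\|^{2}$ is the loss of task $i$.

In applications, $s_i = \log \sigma_i^{2}$ is often learned instead of $\sigma_i^{2}$ because it is numerically more stable and avoids division by zero, so the total loss function can be rewritten as

$$L\left(W, s_1, \ldots, s_m\right) = \sum_{i=1}^{m} \left( \frac{1}{2} \exp(-s_i)\, L_i(W) + \frac{1}{2} s_i \right) \tag{14}$$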
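The uncertainty-weighted total loss, in which each task contributes $\tfrac{1}{2}\exp(-s_i) L_i + \tfrac{1}{2} s_i$ with log-variance $s_i = \log \sigma_i^2$, takes only a few lines to compute. In this minimal sketch the per-task losses $L_i$ are plain numbers and the gradient with respect to each $s_i$ is written out by hand; in training, the $s_i$ would be trainable parameters optimised jointly with the network weights. The function names are hypothetical.

```python
import math

def uncertainty_weighted_loss(task_losses, log_vars):
    """Sum over tasks of 0.5 * exp(-s_i) * L_i + 0.5 * s_i, with s_i = log(sigma_i^2)."""
    return sum(0.5 * math.exp(-s) * L + 0.5 * s for L, s in zip(task_losses, log_vars))

def grad_log_vars(task_losses, log_vars):
    """Partial derivative of the total loss with respect to each s_i."""
    return [-0.5 * math.exp(-s) * L + 0.5 for L, s in zip(task_losses, log_vars)]
```

Setting the gradient to zero gives $s_i = \log L_i$, that is, $\sigma_i^2 = L_i$: a task with a larger (noisier) loss is automatically given a smaller effective weight $\tfrac{1}{2}\exp(-s_i)$, while the $\tfrac{1}{2}s_i$ term prevents the weights from collapsing to zero.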

2.5. Attention Mechanism (AT)

In neural network technology, the use of attention mechanisms has similarities with the brain’s information-processing model. The brain sifts and refines information to extract the valuable parts. For example, when people view a painting, they tend to focus their attention on the more prominent elements of the picture, such as sharp angles or vivid colours. The principle of the attention mechanism is similar in that it identifies data with high relevance to the target when the model is running and selectively focuses attention so that the model can concentrate on the most critical parts of the input data. The concept originated in the field of neuroscience and has since been introduced into machine learning models, particularly in the fields of natural language processing (NLP) and computer vision.

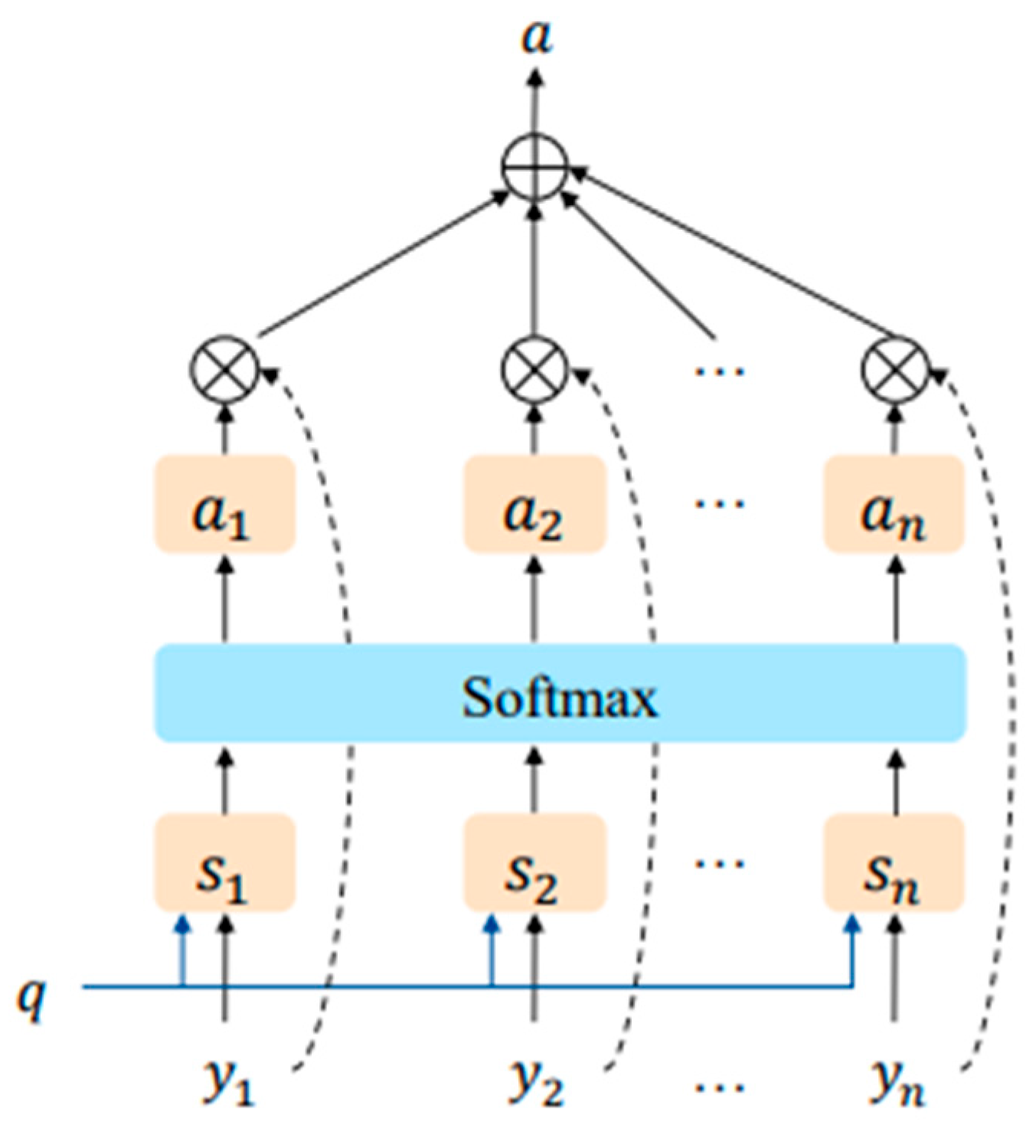

In deep learning models, the attention mechanism enables the model to dynamically assign different weights to different parts of the input sequence, as shown in Figure 4. The model can thus take information from other elements of the sequence into account while processing one element, and can focus on different information depending on the task at hand. Attention models are widely used in fields such as natural language processing, image recognition, and speech recognition.

The core idea of the attention mechanism is to represent the extent of the model’s attention to different parts of the input data by means of weights (or scores). These weights are usually computed in a learnable way to reflect the importance of different parts of the input for the task at hand. A mathematical model of an attention mechanism usually consists of the following steps:

(1) Score computation: for a given query and set of keys, compute a similarity or correlation score between the query and each key.

(2) Normalisation: normalise the scores with the softmax function to obtain the weight corresponding to each key.

(3) Weighted summation: weight the values corresponding to the keys by these weights and sum them to obtain the final attention output.
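The three steps can be written as a short NumPy sketch. The paper does not state which scoring function it uses, so scaled dot-product similarity is assumed here; in a GRU-attention model the keys and values would commonly be the GRU hidden states $h_1, \ldots, h_T$, though that pairing is also an assumption. The function names are hypothetical.

```python
import numpy as np

def softmax(scores):
    shifted = scores - scores.max(axis=-1, keepdims=True)  # subtract max for numerical stability
    e = np.exp(shifted)
    return e / e.sum(axis=-1, keepdims=True)

def attention(query, keys, values):
    """Scaled dot-product attention for one query.

    query: (d,), keys: (T, d), values: (T, d_v). Returns output (d_v,) and weights (T,).
    """
    scores = keys @ query / np.sqrt(query.shape[-1])  # step 1: similarity scores
    weights = softmax(scores)                         # step 2: normalise to weights
    return weights @ values, weights                  # step 3: weighted sum of values
```

When one key closely matches the query, almost all of the weight goes to that position and the output approaches its value vector; with similar scores, the output is an average over the sequence.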

2.6. Data Pre-Processing

2.6.1. Data Segmentation

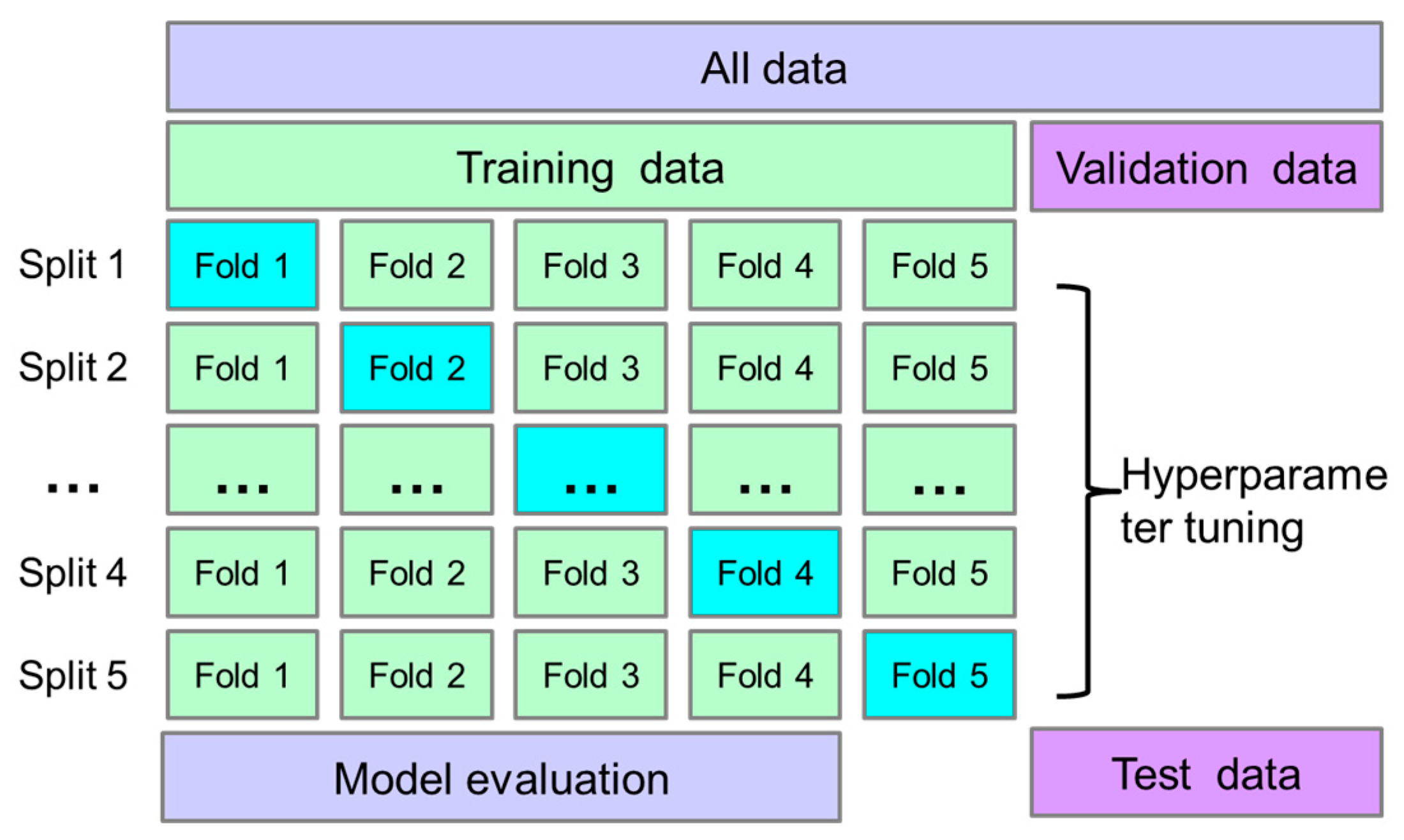

A common way to divide a dataset is to split it into training, validation, and test data in a fixed ratio. The training data are used to train the machine learning model; the validation data are used during training to monitor how well the model is trained, avoid overfitting and underfitting, and select the best-performing model; and the test data are used to evaluate the final performance of the model. Note that the test data never take part in training or optimisation and are used only for testing, so that they measure the model's generalisation and performance on ‘unfamiliar’ data. For large datasets, a proportionally drawn test set is representative enough of the whole dataset to evaluate model performance fairly, but for small and medium-sized datasets the model may perform well on one randomly drawn test set and poorly on another, so the results are unstable and a fair evaluation is difficult.

k-fold cross-validation is a more elaborate partitioning method that solves this problem to some extent; Figure 5 shows 5-fold cross-validation. The dataset is divided into k subsets; in each round, a different subset is selected as the test data and the remaining k − 1 subsets are used as the training data; training is repeated k times; and the mean prediction error over the k test subsets is taken as the final model error. Because every sample is used for testing exactly once and for training k − 1 times, this method gives a fairer evaluation of the robustness and generalisation ability of the model.
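The fold bookkeeping of k-fold cross-validation can be sketched in plain Python. This sketch assumes contiguous, unshuffled folds whose sizes differ by at most one (shuffling before splitting is an equally common choice), and `fit_and_score` is a hypothetical callback that trains on the training indices and returns the error on the test indices.

```python
def k_fold_indices(n_samples, k):
    """Yield (train, test) index lists for k contiguous folds.

    Fold sizes differ by at most one; every index lands in exactly one test fold.
    """
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, test
        start += size

def cross_validate(n_samples, k, fit_and_score):
    """Average the error returned by fit_and_score(train, test) over the k folds."""
    scores = [fit_and_score(train, test) for train, test in k_fold_indices(n_samples, k)]
    return sum(scores) / len(scores)
```

For 12 samples and k = 5, the test folds have sizes 3, 3, 2, 2, and 2, and together they cover every sample once.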

2.6.2. Feature Scaling

Features in a dataset differ greatly in magnitude, unit, and range. Many machine learning algorithms compute Euclidean distances between samples, and gradient-based models such as neural networks are likewise sensitive to feature scale; if magnitudes are not accounted for, features with large scales dominate those with small scales. Feature scaling is therefore required before modelling to remove the effect of differing magnitudes so that all features share the same scale. Commonly used methods are 0–1 (min–max) normalisation and z-score normalisation, calculated as shown in Equations (18) and (19), respectively:

$$x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}} \tag{18}$$

$$x' = \frac{x - \mu}{\sigma} \tag{19}$$

where $x$ is a feature; $x_{\min}$, $x_{\max}$, $\mu$, and $\sigma$ are the minimum, maximum, mean, and standard deviation of the feature in the training set, respectively; and $x'$ is the scaled feature.
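A minimal NumPy sketch of both scalings follows. Consistent with the definitions above, the statistics are computed on the training set only and then reused for validation and test data; the inverse min–max transform (for converting scaled predictions back to production units) and the guard against constant features are additions for illustration. The function names are hypothetical.

```python
import numpy as np

def fit_minmax(train):
    """Per-feature minimum and range, computed on the training set only."""
    x_min, x_max = train.min(axis=0), train.max(axis=0)
    span = np.where(x_max > x_min, x_max - x_min, 1.0)  # guard constant features
    return x_min, span

def apply_minmax(x, stats):
    x_min, span = stats
    return (x - x_min) / span

def invert_minmax(x_scaled, stats):
    """Map scaled values (e.g. model predictions) back to original units."""
    x_min, span = stats
    return x_scaled * span + x_min

def fit_zscore(train):
    """Per-feature mean and (population) standard deviation from the training set."""
    mu, sigma = train.mean(axis=0), train.std(axis=0)
    return mu, np.where(sigma > 0, sigma, 1.0)

def apply_zscore(x, stats):
    mu, sigma = stats
    return (x - mu) / sigma
```

Test values may fall outside [0, 1] after min–max scaling when they exceed the training range, which is expected when the statistics come from the training set alone.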

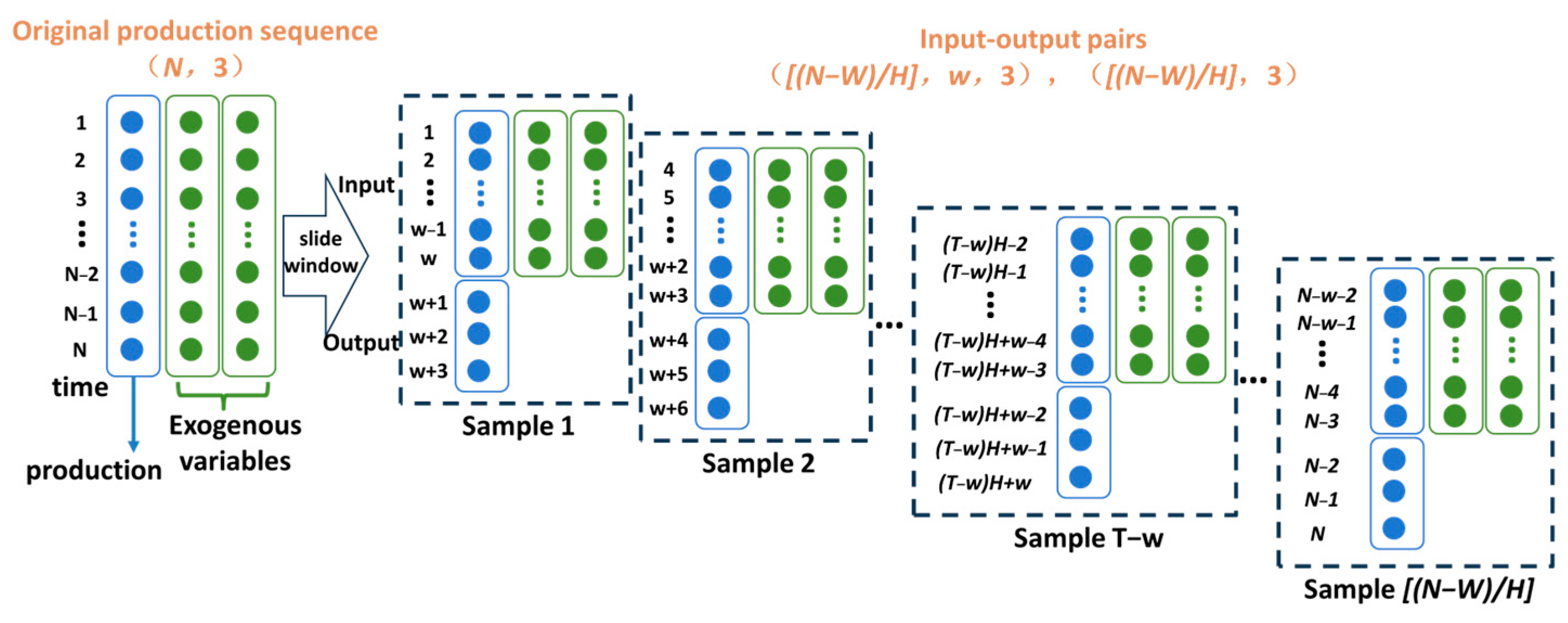

2.6.3. Sliding Window Construction of Time-Series Training Samples

To meet the input requirements of the recurrent neural network, the time-series production data collected in the field must be converted into input–output pairs with a sliding window before they can be used to train the model. Taking multi-step time-series prediction as an example, assume that the original production data include three sequences of length $N$, one of which is the production sequence and the other two are exogenous variable sequences, with a window size of $w$ and a prediction horizon of $H$; that is, the production for the next $H$ days is predicted from the production and exogenous variable data of the past $w$ days. The multi-step sampling approach constructs samples by sliding the window forward $H$ days at a time, as shown in Figure 6 for $H = 3$. The first input–output pair takes the data from day 1 to day $w$ as input and the production from day $w + 1$ to day $w + 3$ as output. The window then slides forward by $H = 3$ days: the data from day 4 to day $w + 3$ form the input of the second sample, and the production from day $w + 4$ to day $w + 6$ forms its output. The window slides forward by $H$ days again, and the process is repeated until the remaining data cannot form another sample. This yields multi-step input–output pairs with input size $(n, w, 3)$ and output size $(n, H) = (n, 3)$, where the total number of samples is $n = \left\lceil \frac{N - w - H + 1}{H} \right\rceil$ and $\lceil \cdot \rceil$ denotes rounding up.
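The sampling procedure can be reproduced with a short NumPy sketch. It assumes the data are stored as an array with one row per day (day 1 at row index 0) and one column per sequence; `target_col` is a hypothetical parameter selecting the production column. The window starts at rows 0, H, 2H, … as long as a full H-day output still fits, so the number of samples equals ⌈(N − w − H + 1)/H⌉.

```python
import numpy as np

def sliding_window(data, target_col, w, H):
    """Build multi-step samples with a window that advances H rows at a time.

    data: array of shape (N, n_features), one row per day (day 1 is row 0).
    Returns X with shape (n, w, n_features) and Y with shape (n, H).
    """
    data = np.asarray(data, dtype=float)
    N = data.shape[0]
    # Start rows 0, H, 2H, ...; stop when a full H-day output no longer fits.
    starts = list(range(0, N - w - H + 1, H))
    if not starts:
        raise ValueError("series too short for the chosen w and H")
    X = np.stack([data[s:s + w] for s in starts])
    Y = np.stack([data[s + w:s + w + H, target_col] for s in starts])
    return X, Y
```

With N = 20, w = 5, and H = 3, the sketch yields 5 samples, an input array of shape (5, 5, 3), and an output array of shape (5, 3).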