PreSubLncR: Predicting Subcellular Localization of Long Non-Coding RNA Based on Multi-Scale Attention Convolutional Network and Bidirectional Long Short-Term Memory Network

Abstract

1. Introduction

2. Materials and Methods

2.1. Datasets

2.2. Network Framework of PreSubLncR

2.3. Feature Encoding

2.4. Multi-Scale One-Dimensional Convolutional Neural Network with Attention

2.5. Bidirectional Long Short-Term Memory

2.6. Performance Evaluation Metrics

2.7. Hyperparameter Optimization

3. Results and Discussion

3.1. Comparison of Different k-mer Features

3.2. Ablation Experiment

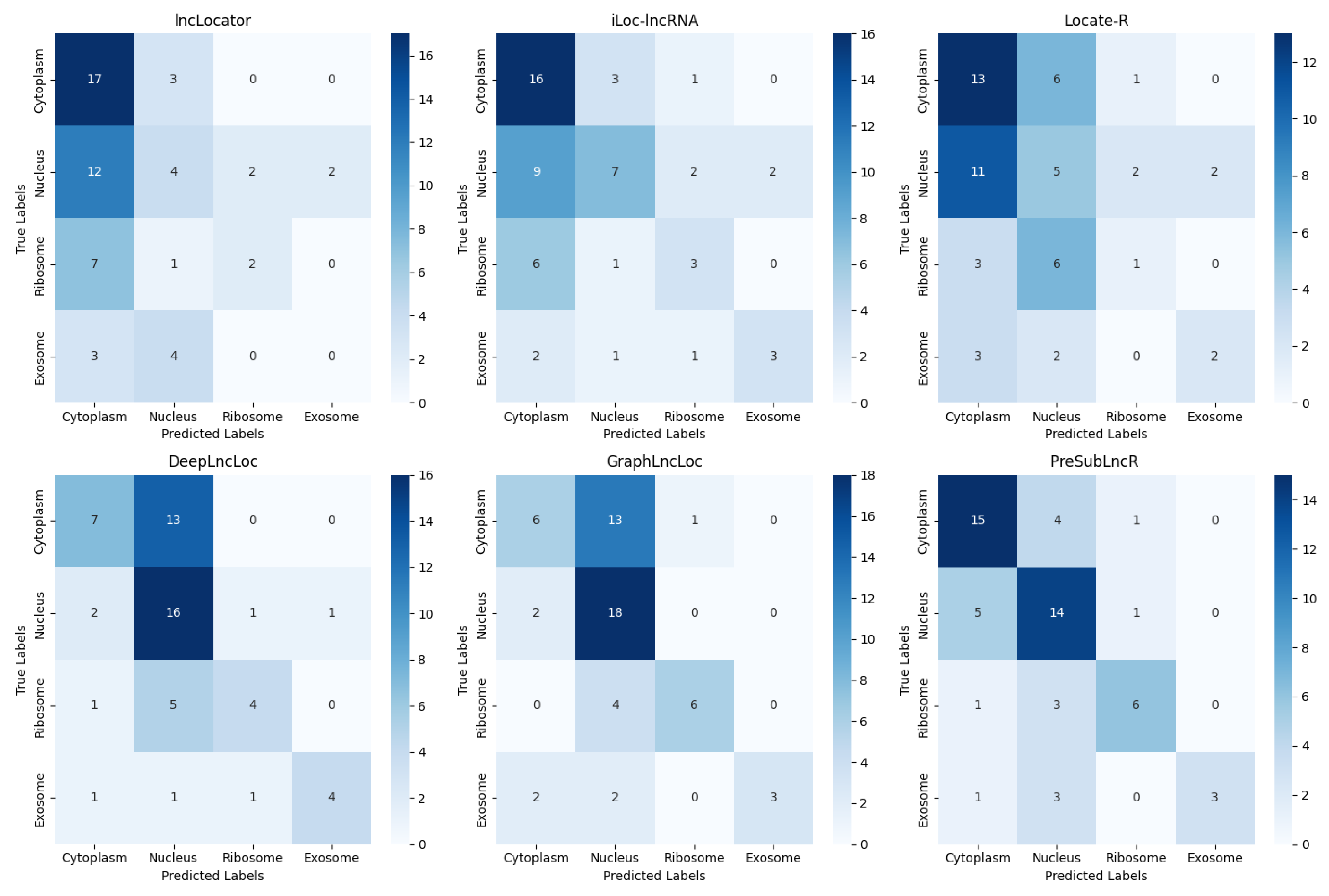

3.3. Comparison with Other Predictors

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Subcellular Localization | Number of Samples |
|---|---|
| Cytoplasm | 328 |
| Nucleus | 325 |
| Ribosome | 88 |
| Exosome | 28 |
| Total | 769 |
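The class counts in the table above are uneven, which matters when reading the accuracy figures reported later. As a minimal sketch (the names `counts` and `class_proportions` are illustrative and not taken from the paper), the share of each class can be computed directly from the table:

```python
# Class counts copied from the dataset table above.
counts = {"Cytoplasm": 328, "Nucleus": 325, "Ribosome": 88, "Exosome": 28}


def class_proportions(counts):
    """Return each class's share of the total sample count."""
    total = sum(counts.values())
    return {name: n / total for name, n in counts.items()}


proportions = class_proportions(counts)
for name, share in proportions.items():
    # Print each class as a percentage of all samples.
    print(f"{name}: {share:.1%}")
```

Under these counts the exosome class accounts for fewer than 4% of the samples, so per-class precision and recall are more informative than overall accuracy for that class.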

| Model | Accuracy | Precision | Recall | F1-score |
|---|---|---|---|---|
| CNN | 0.579 | 0.627 | 0.532 | 0.557 |
| CNN + attention | 0.614 | 0.736 | 0.557 | 0.589 |
| CNN + attention + BiLSTM | 0.667 | 0.754 | 0.620 | 0.654 |

| Predictor | Accuracy | Precision | Recall | F1-score |
|---|---|---|---|---|
| lncLocator | 0.421 | 0.374 | 0.325 | 0.289 |
| iLoc-lncRNA | 0.509 | 0.524 | 0.470 | 0.474 |
| Locate-R | 0.368 | 0.362 | 0.321 | 0.321 |
| GraphLncLoc | 0.579 | 0.736 | 0.557 | 0.584 |
| PreSubLncR | 0.667 | 0.754 | 0.620 | 0.654 |

| Subcellular Localization | iLoc-lncRNA Precision | iLoc-lncRNA Recall | iLoc-lncRNA F1-score | PreSubLncR Precision | PreSubLncR Recall | PreSubLncR F1-score |
|---|---|---|---|---|---|---|
| Cytoplasm | 0.553 | 0.700 | 0.618 | 0.680 | 0.750 | 0.710 |
| Nucleus | 0.467 | 0.350 | 0.400 | 0.580 | 0.700 | 0.640 |
| Ribosome | 0.333 | 0.300 | 0.316 | 0.750 | 0.600 | 0.670 |
| Exosome | 0.600 | 0.429 | 0.500 | 1.000 | 0.430 | 0.600 |
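The per-class F1-scores in the table above can be cross-checked against the standard definition of F1 as the harmonic mean of precision and recall. The sketch below does this for the iLoc-lncRNA columns; the function name `f1_score` and the dictionary `iloc_rows` are illustrative and not taken from the paper.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 if both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) for iLoc-lncRNA, copied from the table above.
iloc_rows = {
    "Cytoplasm": (0.553, 0.700, 0.618),
    "Nucleus": (0.467, 0.350, 0.400),
    "Ribosome": (0.333, 0.300, 0.316),
    "Exosome": (0.600, 0.429, 0.500),
}

for name, (p, r, reported) in iloc_rows.items():
    # Compare the recomputed value with the one reported in the table.
    print(f"{name}: computed {f1_score(p, r):.3f}, reported {reported:.3f}")
```

The same check can be applied to the PreSubLncR columns, with the caveat that those precision and recall values appear to be rounded to two decimals, so the recomputed F1 may differ from the reported one in the last digit.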

| Subcellular Localization | DeepLncLoc Precision | DeepLncLoc Recall | DeepLncLoc F1-score | PreSubLncR Precision | PreSubLncR Recall | PreSubLncR F1-score |
|---|---|---|---|---|---|---|
| Cytoplasm | 0.800 | 0.400 | 0.533 | 0.680 | 0.750 | 0.710 |
| Nucleus | 0.400 | 0.800 | 0.533 | 0.580 | 0.700 | 0.640 |
| Ribosome | 0.500 | 0.400 | 0.444 | 0.750 | 0.600 | 0.670 |
| Exosome | 1.000 | 0.571 | 0.727 | 1.000 | 0.430 | 0.600 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, X.; Wang, S.; Wang, R.; Gao, X. PreSubLncR: Predicting Subcellular Localization of Long Non-Coding RNA Based on Multi-Scale Attention Convolutional Network and Bidirectional Long Short-Term Memory Network. Processes 2024, 12, 666. https://doi.org/10.3390/pr12040666
