Deep Learning Model for Industrial Leakage Detection Using Acoustic Emission Signal

Abstract

1. Introduction

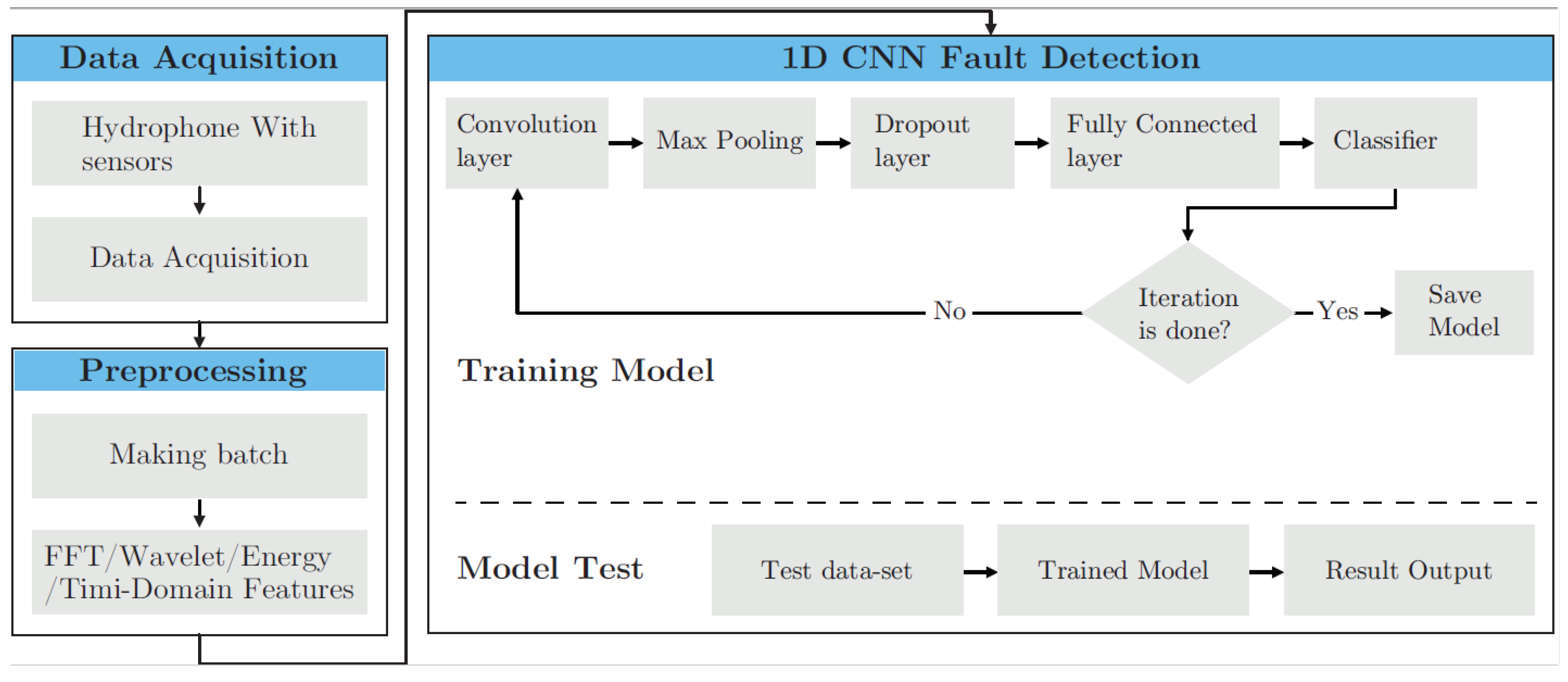

2. Methodology

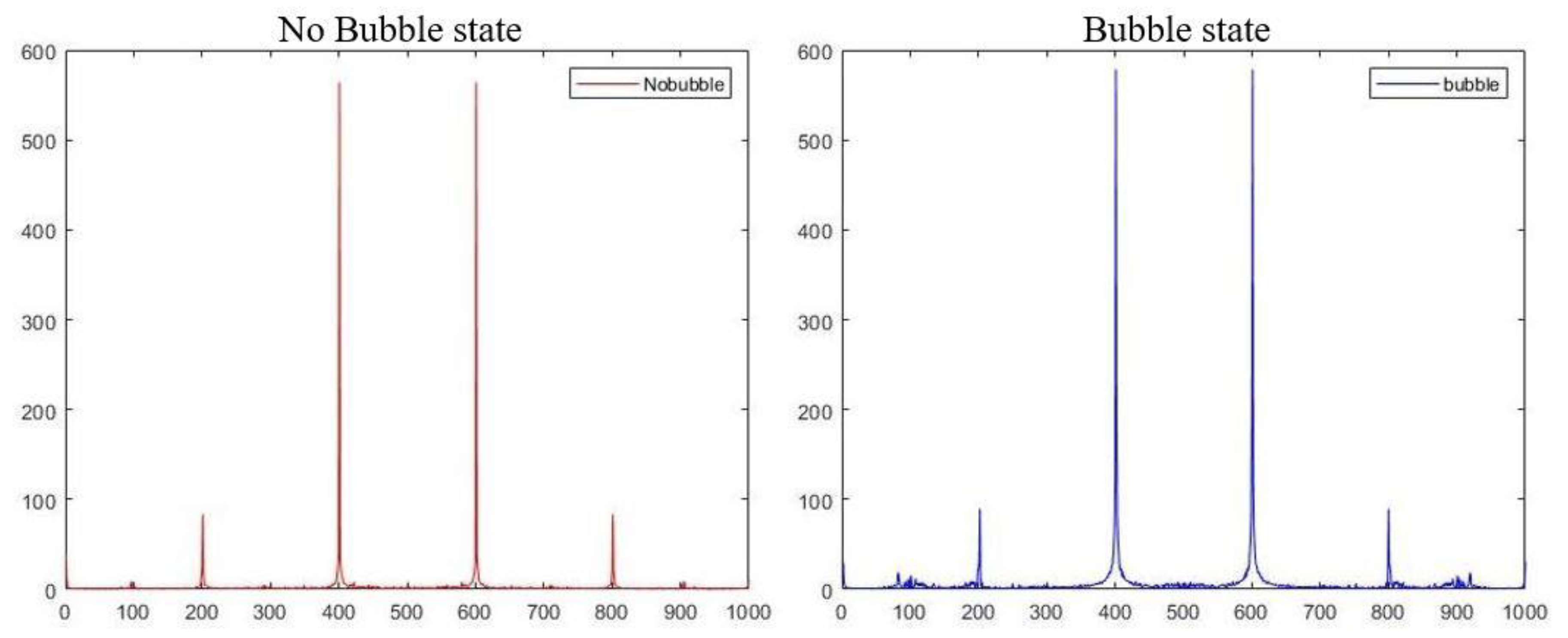

2.1. Fast Fourier Transform

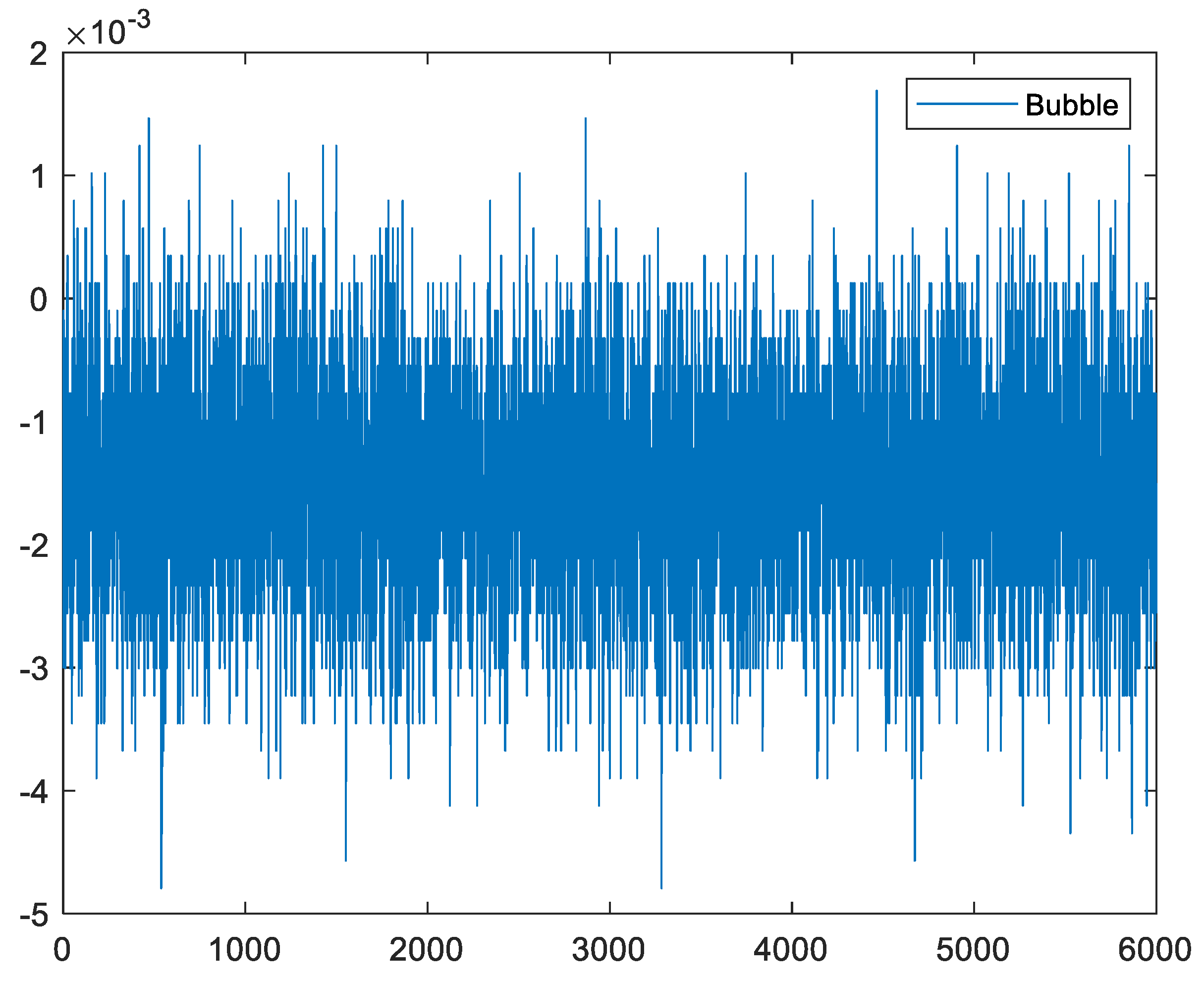

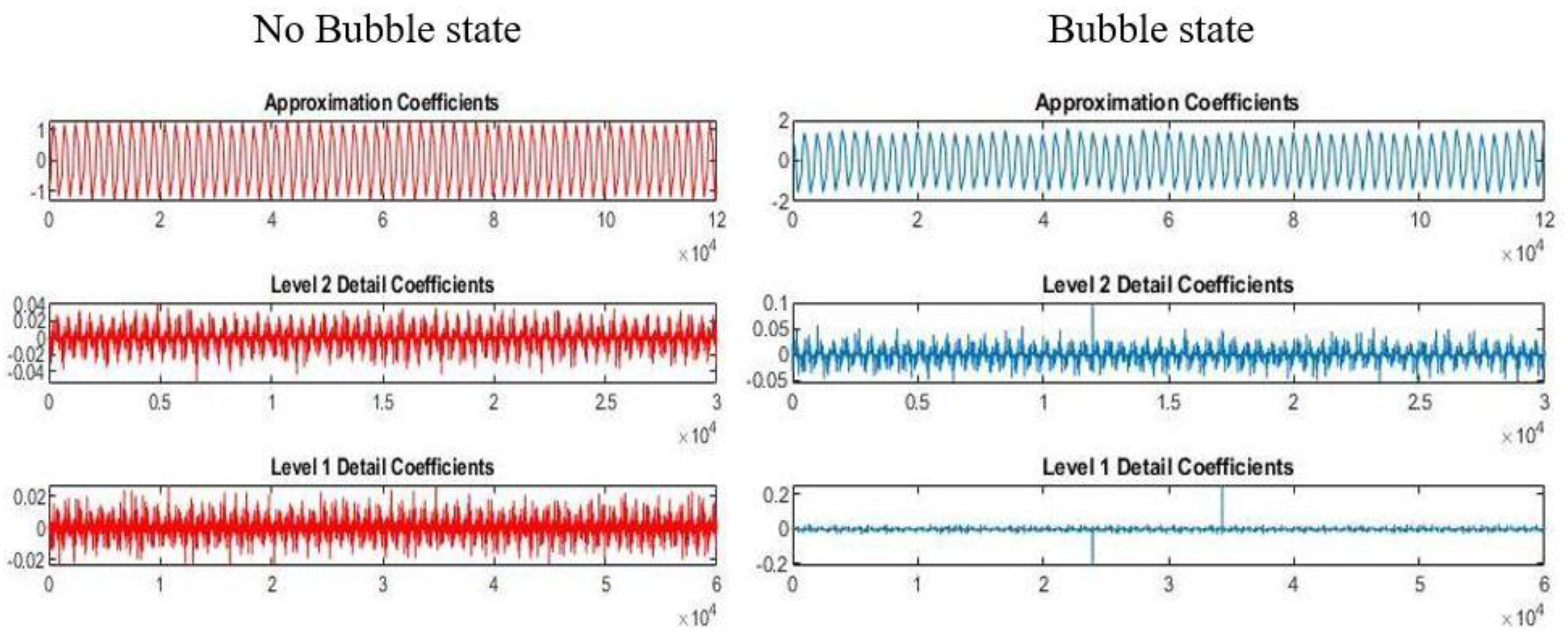

2.2. Wavelet Transform

2.3. Statistical Features

2.4. Convolutional Neural Network

3. Experimental Procedure

3.1. Experimental Set-Up

3.2. Dataset and Algorithm

Algorithm 1. Pseudo-code of the FFT_1D CNN algorithm

Input: Raw healthy and faulty signals

Output: Binary value

- Step 1: Load the raw input samples (127 samples) one by one. Each sample is a vector of size 1 × 12,000,000 over ℝ.

- Step 2: Extract the name of each sample.

- Step 3: Assign a label of 0 or 1 to each sample. If the filename starts with "bubble", then label = 1; else label = 0.

- Step 4: Split each sample into 120 equal-size buckets. Each bucket is a vector of size 1 × 100,000 over ℝ. Bucket i covers the sample indices ((i − 1) × Δ + 1) → (i × Δ), where Δ = 100,000 is the number of samples per bucket (sampling rate of 100 kHz over T = 1 s) and i = 1, …, 120 indexes the buckets.

- Step 5: Calculate the FFT of each bucket. The FFT values of all buckets (127 × 120) can be arranged in a matrix of size 15,240 × 1000 over ℝ.

- Step 6: Equalize the number of healthy and faulty samples, so that the FFT matrix size becomes 4840 × 1000.

- Step 7: Specify the training, validation, and test datasets. The training dataset has size 2172 × 1000, the validation dataset 1070 × 1000, and the test dataset 1598 × 1000.

- Step 8: Expand the shape of the training, validation, and test datasets to 2172 × 1000 × 1, 1070 × 1000 × 1, and 1598 × 1000 × 1, respectively.

- Step 9: Create a sequential model.

- Step 10: Define the proper layers.

- Step 11: Specify binary cross-entropy as the loss function and Adam as the optimizer, then call the compile() function on the model.

- Step 12: Fit the model. The model is trained on a sample of the data by calling the fit() function on the model.

- Step 13: Make a prediction. Generate predictions on new data by calling the evaluate() function. The output of this step is two values, indexed 0 and 1, with their accuracy: if index = 1, the related value shows the accuracy of fault detection; otherwise it shows the accuracy for the healthy state.

End
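
Steps 3 to 5 of Algorithm 1 can be sketched in NumPy as follows. This is a minimal illustration on a toy signal, not the authors' implementation: the function names, the example filename, and the toy sizes are hypothetical, and keeping the first `n_bins` magnitude bins is only an assumed way of reaching a fixed number of FFT values per bucket (the algorithm does not state how its 1000 values are selected).

```python
import numpy as np

def label_from_filename(filename: str) -> int:
    """Step 3: label 1 if the filename starts with 'bubble', else 0."""
    return 1 if filename.startswith("bubble") else 0

def split_into_buckets(signal: np.ndarray, n_buckets: int) -> np.ndarray:
    """Step 4: reshape a 1-D signal into n_buckets equal-size rows."""
    bucket_len = signal.size // n_buckets
    return signal[: n_buckets * bucket_len].reshape(n_buckets, bucket_len)

def fft_features(buckets: np.ndarray, n_bins: int) -> np.ndarray:
    """Step 5: magnitude spectrum of each bucket, truncated to n_bins.

    Assumption: truncation to the first n_bins bins is illustrative only.
    """
    spectrum = np.abs(np.fft.rfft(buckets, axis=1))
    return spectrum[:, :n_bins]

# Toy sizes for a quick run (the paper uses 100 kHz and 120 buckets).
fs = 1_000          # samples per second, so each bucket lasts 1 s
n_buckets = 4
t = np.arange(fs * n_buckets) / fs
signal = np.sin(2 * np.pi * 50 * t)            # 50 Hz test tone

buckets = split_into_buckets(signal, n_buckets)
features = fft_features(buckets, n_bins=100)
label = label_from_filename("bubble_01.csv")   # hypothetical filename
```

With one-second buckets the frequency resolution is 1 Hz, so the 50 Hz tone appears as a peak in bin 50 of every row of `features`.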

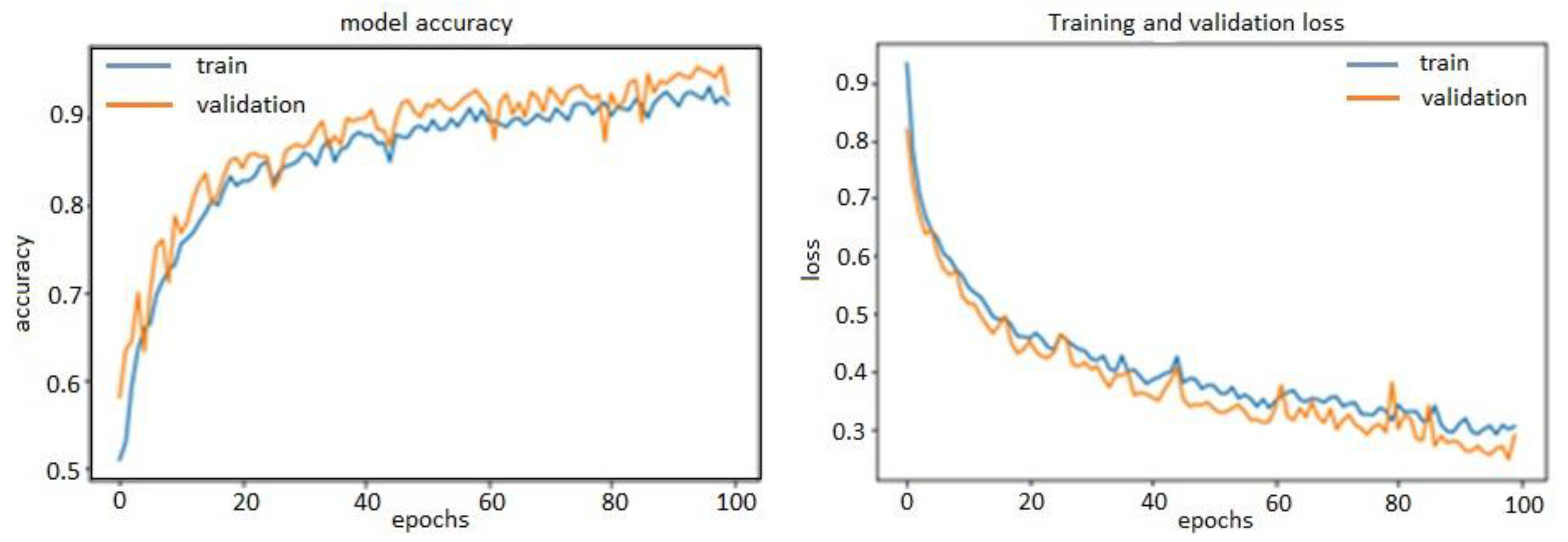

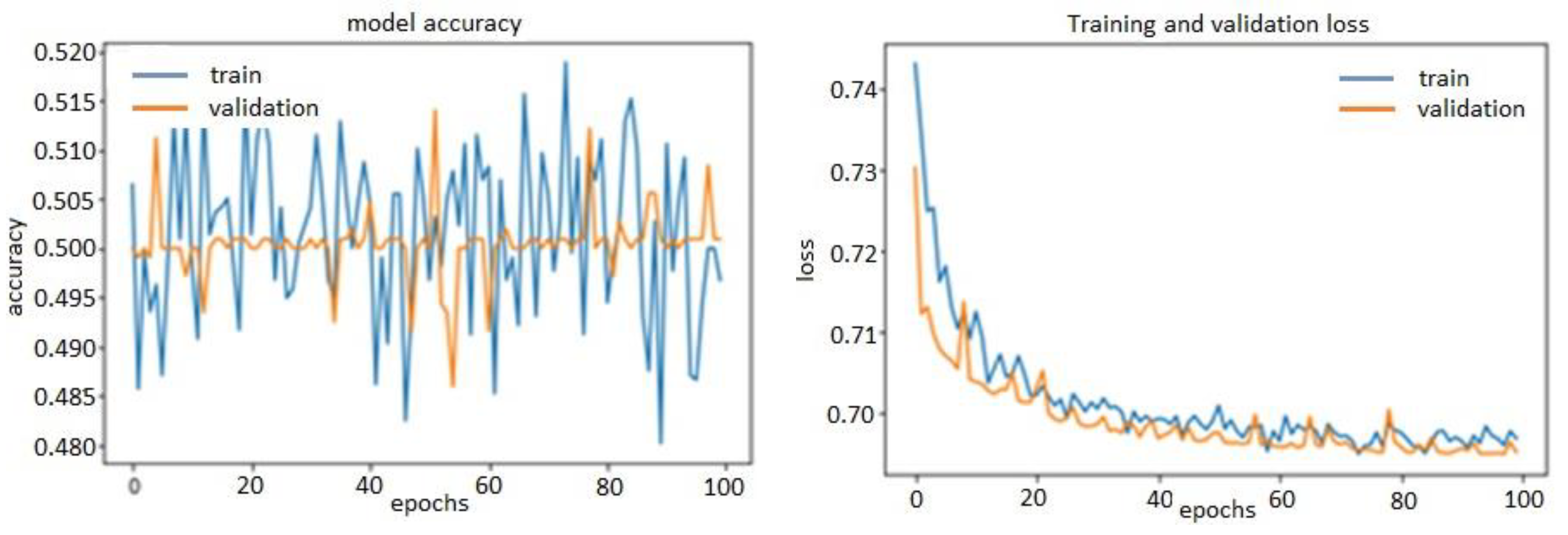

4. Experimental Results and Analysis

- Adding dropout layers;

- Trying different architectures, e.g., adding or removing layers;

- Adding L1 and/or L2 regularization;

- Trying different hyper-parameters (such as the number of units per layer or the optimizer’s learning rate) to find the optimal configuration;

- Optionally, iterating on feature engineering, e.g., adding new features or removing features that were not informative.
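
Two of the options listed above, dropout and L1/L2 regularization, can be illustrated with a few lines of NumPy. This is a generic sketch of the two techniques under assumed rates and coefficients; the function names and values are hypothetical and are not taken from the experiments.

```python
import numpy as np

def inverted_dropout(activations, rate, rng):
    """Zero a fraction `rate` of units and rescale the survivors.

    Used at training time only; at inference the layer is an identity.
    """
    keep_prob = 1.0 - rate
    mask = rng.random(activations.shape) < keep_prob
    return activations * mask / keep_prob

def regularization_penalty(weights, l1=0.0, l2=0.0):
    """Penalty added to the loss: l1 * sum|w| + l2 * sum(w^2)."""
    return l1 * np.sum(np.abs(weights)) + l2 * np.sum(weights ** 2)

rng = np.random.default_rng(0)
hidden = np.ones((4, 64))                       # toy hidden activations
dropped = inverted_dropout(hidden, rate=0.5, rng=rng)

w = np.array([[1.0, -2.0], [0.5, 0.0]])         # toy weight matrix
penalty = regularization_penalty(w, l1=0.01, l2=0.001)
```

Both mechanisms discourage the network from relying on a few large weights or individual units, which is why they are common first steps when the validation accuracy lags behind the training accuracy.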

5. Conclusions

- Three feature extraction methods, i.e., FFT, wavelet transform, and time-domain statistical features of the signal, were implemented to differentiate healthy and faulty states.

- A 1D-CNN-based architecture was used to detect leakage in combination with each of the three feature extraction methods.

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Giantomassi, A.; Ferracuti, F.; Iarlori, S.; Ippoliti, G.; Longhi, S. Electric motor fault detection and diagnosis by kernel density estimation and Kullback–Leibler divergence based on stator current measurements. IEEE Trans. Ind. Electron. 2014, 62, 1770–1780.

- Gao, Z.; Cecati, C.; Ding, S.X. A survey of fault diagnosis and fault-tolerant techniques—Part I: Fault diagnosis with model-based and signal-based approaches. IEEE Trans. Ind. Electron. 2015, 62, 3757–3767.

- Dai, X.; Gao, Z. From model, signal to knowledge: A data-driven perspective of fault detection and diagnosis. IEEE Trans. Ind. Inform. 2013, 9, 2226–2238.

- Zhou, W.; Habetler, T.G.; Harley, R.G. Bearing fault detection via stator current noise cancellation and statistical control. IEEE Trans. Ind. Electron. 2008, 55, 4260–4269.

- Schoen, R.; Habetler, T.; Kamran, F.; Bartfield, R. Motor bearing damage detection using stator current monitoring. IEEE Trans. Ind. Appl. 1995, 31, 1274–1279.

- Kliman, G.B.; Premerlani, W.J.; Yazici, B.; Koegl, R.A.; Mazereeuw, J. Sensor-less, online motor diagnostics. IEEE Comput. Appl. Power 1997, 10, 39–43.

- Pons-Llinares, J.; Antonino-Daviu, J.A.; Riera-Guasp, M.; Bin Lee, S.; Kang, T.-J.; Yang, C. Advanced induction motor rotor fault diagnosis via continuous and discrete time–frequency tools. IEEE Trans. Ind. Electron. 2014, 62, 1791–1802.

- Arthur, N.; Penman, J. Induction machine condition monitoring with higher order spectra. IEEE Trans. Ind. Electron. 2000, 47, 1031–1041.

- Benbouzid, M.; Vieira, M.; Theys, C. Induction motors’ faults detection and localization using stator current advanced signal processing techniques. IEEE Trans. Power Electron. 1999, 14, 14–22.

- Li, D.Z.; Wang, W.; Ismail, F. An enhanced bi-spectrum technique with auxiliary frequency injection for induction motor health condition monitoring. IEEE Trans. Instrum. Meas. 2015, 64, 2679–2687.

- Eren, L.; Devaney, M.J. Bearing damage detection via wavelet packet decomposition of the stator current. IEEE Trans. Instrum. Meas. 2004, 53, 431–436.

- Ye, Z.; Wu, B.; Sadeghian, A. Current signature analysis of induction motor mechanical faults by wavelet packet decomposition. IEEE Trans. Ind. Electron. 2003, 50, 1217–1228.

- Kiranyaz, S.; Ince, T.; Gabbouj, M. Real-Time patient-specific ECG classification by 1-D convolutional neural networks. IEEE Trans. Biomed. Eng. 2015, 63, 664–675.

- Ince, T.; Kiranyaz, S.; Eren, L.; Askar, M.; Gabbouj, M. Real-Time motor fault detection by 1-D convolutional neural networks. IEEE Trans. Ind. Electron. 2016, 63, 7067–7075.

- Cataldo, A.; Cannazza, G.; De Benedetto, E.; Giaquinto, N. A new method for detecting leaks in underground water pipelines. IEEE Sens. J. 2011, 12, 1660–1667.

- Giaquinto, N.; D’Aucelli, G.M.; De Benedetto, E.; Cannazza, G.; Cataldo, A.; Piuzzi, E.; Masciullo, A. Criteria for automated estimation of time of flight in TDR analysis. IEEE Trans. Instrum. Meas. 2016, 65, 1215–1224.

- Knapp, C.; Carter, G. The generalized correlation method for estimation of time delay. IEEE Trans. Acoust. Speech Signal Process. 1976, 24, 320–327.

- Hero, A.; Schwartz, S. A new generalized cross correlator. IEEE Trans. Acoust. Speech Signal Process. 1985, 33, 38–45.

- Li, B.; Chow, M.-Y.; Tipsuwan, Y.; Hung, J. Neural-network-based motor rolling bearing fault diagnosis. IEEE Trans. Ind. Electron. 2000, 47, 1060–1069.

- Pandiyan, V.; Tjahjowidodo, T. In-process endpoint detection of weld seam removal in robotic abrasive belt grinding process. Int. J. Adv. Manuf. Technol. 2017, 93, 1699–1714.

- Kwak, J.-S.; Ha, M.-K. Neural network approach for diagnosis of grinding operation by acoustic emission and power signals. J. Mater. Process. Technol. 2004, 147, 65–71.

- Al-Habaibeh, A.; Gindy, N. A new approach for systematic design of condition monitoring systems for milling processes. J. Mater. Process. Technol. 2000, 107, 243–251.

- Niu, Y.M.; Wong, Y.S.; Hong, G.S. An intelligent sensor system approach for reliable tool flank wear recognition. Int. J. Adv. Manuf. Technol. 1998, 14, 77–84.

- Betta, G.; Liguori, C.; Paolillo, A.; Pietrosanto, A. A DSP-based FFT-analyzer for the fault diagnosis of rotating machine based on vibration analysis. IEEE Trans. Instrum. Meas. 2002, 51, 1316–1322.

- Rai, V.; Mohanty, A. Bearing fault diagnosis using FFT of intrinsic mode functions in Hilbert–Huang transform. Mech. Syst. Signal Process. 2007, 21, 2607–2615.

- Pandiyan, V.; Caesarendra, W.; Tjahjowidodo, T.; Tan, H.H. In-process tool condition monitoring in compliant abrasive belt grinding process using support vector machine and genetic algorithm. J. Manuf. Process. 2018, 31, 199–213.

- Sohn, H.; Park, G.; Wait, J.R.; Limback, N.P.; Farrar, C.R. Wavelet-based active sensing for delamination detection in composite structures. Smart Mater. Struct. 2003, 13, 153–160.

- Hou, Z.; Noori, M.; Amand, R.S. Wavelet-Based approach for structural damage detection. J. Eng. Mech. 2000, 126, 677–683.

- Yang, H.-T.; Liao, C.-C. A de-noising scheme for enhancing wavelet-based power quality monitoring system. IEEE Trans. Power Deliv. 2001, 16, 353–360.

- LeCun, Y. Deep learning and convolutional networks. In Hot Chips 27 Symposium (HCS); IEEE: New York, NJ, USA, 2015; pp. 1–95.

- Sohaib, M.; Islam, M.; Kim, J.; Jeon, D. Leakage Detection of a Spherical Water Storage Tank in a Chemical Industry Using Acoustic Emissions. Appl. Sci. 2019, 9, 196.

| Signal Analysis | Feature | Sensor Used | Process |
|---|---|---|---|
| Time-domain | Root mean square (RMS) | Acoustic emission (AE) and power | Monitoring grinding operation [21] |
| Time-domain | Skewness | Vibration | Condition monitoring for milling [22] |
| Time-domain | Kurtosis | AE | Tool flank wear recognition [23] |
| Frequency-domain | FFT | Vibration | Fault diagnosis of the rotating machine [24] |
| Frequency-domain | FFT | Vibration | Bearing fault diagnosis [25] |
| Frequency-domain | FFT | Vibration, AE, force | Tool wear monitoring [26] |
| Wavelet | Morlet wavelet | Piezoelectric sensor | Delamination detection [27] |
| Wavelet | Daubechies-4 | Vibration | Structural damage detection [28] |
| Wavelet | Daubechies-4 | Power | Power quality monitoring [29] |

| Features | Expression |
|---|---|
| Mean value | |
| Standard deviation | |
| Kurtosis | K = |
| Skewness | S = |
| Root mean square | RMS = |
| Crest factor | C = |
| Peak-to-peak value (PPV) | PPV = max value − min value |
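
The seven features listed above can be computed with a short NumPy helper. This is a sketch under stated assumptions, since the expressions themselves are not reproduced in the table: it uses the population standard deviation, excess kurtosis (raw kurtosis minus 3, which matches the values near −1.5 reported for the extracted features), and a crest factor defined as the peak absolute value over the RMS. The function name is hypothetical.

```python
import numpy as np

def time_domain_features(x):
    """Return the seven statistical features of a 1-D signal as a dict."""
    x = np.asarray(x, dtype=float)
    mean = x.mean()
    std = x.std()                       # population std (ddof=0), assumed
    centered = x - mean
    rms = np.sqrt(np.mean(x ** 2))
    return {
        "mean": mean,
        "std": std,
        "skewness": np.mean(centered ** 3) / std ** 3,
        "kurtosis": np.mean(centered ** 4) / std ** 4 - 3.0,  # excess kurtosis
        "rms": rms,
        "peak_to_peak": x.max() - x.min(),
        "crest_factor": np.max(np.abs(x)) / rms,
    }

# Example: a pure sine wave with an integer number of cycles.
t = np.arange(1000) / 1000
feats = time_domain_features(np.sin(2 * np.pi * 5 * t))
```

For a pure sine wave the excess kurtosis is −1.5 and the crest factor is √2, which gives a convenient sanity check for the conventions chosen here.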

| Label | Mean | STD | Skewness | Kurtosis | RMS | Peak-to-Peak | Crest-Factor |
|---|---|---|---|---|---|---|---|
| 1 | −0.00303 | 0.932716 | 0.001772 | −1.55262 | 0.932721 | 3.166601 | 1.694493 |
| 1 | 0.001391 | 0.934791 | 0.002414 | −1.55813 | 0.934792 | 3.135015 | 1.649781 |
| 1 | 0.004578 | 0.942283 | −0.00174 | −1.50837 | 0.942295 | 3.411634 | 1.81576 |
| 1 | −0.00252 | 0.936973 | 0.005309 | −1.52431 | 0.936976 | 3.431415 | 1.81483 |
| 0 | 5.40 × 10⁻⁵ | 0.813413 | −0.00043 | −1.56246 | 0.813413 | 2.636973 | 1.594723 |
| 0 | 0.001712 | 0.813213 | 0.002127 | −1.57254 | 0.813215 | 2.619106 | 1.583342 |
| 0 | 0.001307 | 0.815626 | 0.001999 | −1.57703 | 0.815627 | 2.609215 | 1.582571 |
| 0 | 0.000724 | 0.812949 | 0.002658 | −1.57934 | 0.812949 | 2.607301 | 1.604661 |
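
As a quick consistency check on the first row of these values, the mean, standard deviation, and RMS of a signal are linked by a simple identity. The check below assumes population definitions (division by N) for both the standard deviation and the RMS, which is an assumption rather than something stated with the table.

```latex
\mathrm{RMS}^2 = \bar{x}^2 + \sigma^2
\quad\Longrightarrow\quad
\sqrt{(-0.00303)^2 + (0.932716)^2} \approx 0.932721
```

The result agrees with the tabulated RMS of 0.932721. Because the mean is close to zero in every row, the RMS and the standard deviation are nearly identical throughout.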

| Variables | Quantity |
|---|---|
| Data | 127 samples |
| Number of buckets | 120 |
| Each bucket | (100,000, 1) |
| Full data array | (15,240, 100,000) |
| Full label array | (15,240, 1) |
| Train data | (2172, 1000) |
| Validation data | (1070, 1000) |
| Test data | (1598, 1000) |
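
The reported dataset sizes can be reproduced with a class-balancing step followed by two successive hold-out splits. The sketch below is illustrative only: random undersampling, the 0.33 hold-out fraction, the class counts, and the function names are assumptions chosen because they yield the sizes in the table (4840 balanced rows split into 2172, 1070, and 1598), and a toy feature width of 10 stands in for the 1000 FFT values.

```python
import math
import numpy as np

def undersample_balance(X, y, rng):
    """Randomly drop rows of the larger class until both classes match."""
    idx0 = np.flatnonzero(y == 0)
    idx1 = np.flatnonzero(y == 1)
    n = min(idx0.size, idx1.size)
    keep = np.concatenate([rng.choice(idx0, n, replace=False),
                           rng.choice(idx1, n, replace=False)])
    rng.shuffle(keep)
    return X[keep], y[keep]

def three_way_split(n_rows, holdout_frac, rng):
    """Hold out a test set, then a validation set from the remainder."""
    order = rng.permutation(n_rows)
    n_test = math.ceil(holdout_frac * n_rows)
    n_val = math.ceil(holdout_frac * (n_rows - n_test))
    return order[n_test + n_val:], order[n_test:n_test + n_val], order[:n_test]

rng = np.random.default_rng(0)
X = np.zeros((15_240, 10), dtype=np.float32)   # toy width instead of 1000
y = np.zeros(15_240, dtype=int)
y[:2_420] = 1                                  # hypothetical class counts

Xb, yb = undersample_balance(X, y, rng)
train, val, test = three_way_split(len(yb), holdout_frac=0.33, rng=rng)

# Add the trailing channel axis expected by a 1D convolution layer.
X_train = np.expand_dims(Xb[train], axis=-1)
```

Here `len(train)`, `len(val)`, and `len(test)` come out as 2172, 1070, and 1598, and `X_train` gains the extra channel dimension described in Step 8 of Algorithm 1.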

| Methods / Parameters | Activation Function (Tanh) | Dropout (0.5) | Having 2 Dense Layers | Having 2 Convolution Layers | Optimizer Stochastic Gradient Descent (SGD) |
|---|---|---|---|---|---|
| FFT_1D-CNN | 74.53 | 81.66 | 83.85 | 83.55 | 56.45 |
| Wavelet_1D-CNN | 47.68 | 47.68 | 47.68 | 47.68 | 47.68 |
| Time-domain features_1D-CNN | 52.88 | 53.69 | 53.94 | 56.45 | 48.19 |

| Layer | Name | Specification |
|---|---|---|
| 1 | Convolution | 2×2×1 |
| 2 | ReLU | N/A |
| 3 | Max pooling | 2×2 |
| 4 | Flatten | 998 |
| 5 | Dense | 128 |
| 6 | Sigmoid | N/A |
| 7 | Dense | 64 |
| 8 | Sigmoid | N/A |
| 9 | Dropout | 20% |
| 10 | Fully Connected | 1 |
| 11 | Sigmoid | N/A |
| 12 | Classification | Binary cross-entropy |
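
The layer stack in this table can be traced with a plain NumPy forward pass. This is a sketch with random, untrained weights, meant only to show how the tensor shapes evolve. It assumes a kernel size of 2, two filters, one input channel, no padding, and a pooling size of 2, which is one reading of the "2×2×1" and "2×2" specifications that is consistent with the flattened size of 998; the helper names are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def conv1d_valid(x, kernels, bias):
    """x: (length, in_ch); kernels: (k, in_ch, filters); stride 1, no padding."""
    k = kernels.shape[0]
    out_len = x.shape[0] - k + 1
    out = np.zeros((out_len, kernels.shape[2]))
    for j in range(k):
        out += x[j:j + out_len] @ kernels[j]
    return out + bias

def max_pool1d(x, size):
    n = x.shape[0] // size              # a trailing remainder is dropped
    return x[:n * size].reshape(n, size, x.shape[1]).max(axis=1)

def forward(x, p):
    shapes = {}
    h = np.maximum(conv1d_valid(x, p["conv_w"], p["conv_b"]), 0.0)  # conv + ReLU
    shapes["conv"] = h.shape
    h = max_pool1d(h, 2)
    shapes["pool"] = h.shape
    h = h.reshape(-1)
    shapes["flatten"] = h.shape
    h = sigmoid(h @ p["w1"] + p["b1"])  # dense 128 + sigmoid
    h = sigmoid(h @ p["w2"] + p["b2"])  # dense 64 + sigmoid
    # Dropout (20%) acts only during training, so it is skipped at inference.
    out = sigmoid(h @ p["w3"] + p["b3"])
    return float(out[0]), shapes

rng = np.random.default_rng(0)
params = {
    "conv_w": rng.normal(scale=0.1, size=(2, 1, 2)), "conv_b": np.zeros(2),
    "w1": rng.normal(scale=0.1, size=(998, 128)), "b1": np.zeros(128),
    "w2": rng.normal(scale=0.1, size=(128, 64)), "b2": np.zeros(64),
    "w3": rng.normal(scale=0.1, size=(64, 1)), "b3": np.zeros(1),
}
prob, shapes = forward(rng.normal(size=(1000, 1)), params)
```

The final sigmoid returns a single probability, which pairs naturally with the binary cross-entropy loss in the last row of the table.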

| Layers | FFT Shape | FFT Param | Wavelet Shape | Wavelet Param | Time Domain Shape | Time Domain Param |
|---|---|---|---|---|---|---|
| Conv 1D | (None, 999, 2) | 6 | (None, 6, 2) | 6 | (None, 999, 2) | 6 |
| MaxPooling1D | (None, 499, 2) | 0 | (None, 3, 2) | 0 | (None, 499, 2) | 0 |
| Flatten | (None, 998) | 0 | (None, 6) | 0 | (None, 998) | 0 |
| Dense | (None, 128) | 127,872 | (None, 128) | 896 | (None, 128) | 127,872 |
| Dense | (None, 64) | 8256 | (None, 64) | 8256 | (None, 64) | 8256 |
| Dropout | (None, 64) | 0 | (None, 64) | 0 | (None, 64) | 0 |
| Output (Dense) | (None, 1) | 65 | (None, 1) | 65 | (None, 1) | 65 |
| Total Params | | 136,199 | | 9223 | | 136,199 |
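
The parameter counts in this table follow from the usual formulas for convolution and dense layers, and can be checked with a few lines of Python. The kernel size of 2, the two filters, the single input channel, and the pooling size of 2 are assumptions inferred from the reported shapes; the function names are hypothetical.

```python
def conv1d_params(kernel_size, in_channels, filters):
    """Weights plus one bias per filter."""
    return kernel_size * in_channels * filters + filters

def dense_params(n_in, n_out):
    """Weights plus one bias per output unit."""
    return n_in * n_out + n_out

def total_params(input_len, kernel_size=2, filters=2, pool=2):
    conv_len = input_len - kernel_size + 1      # 'valid' convolution
    flat = (conv_len // pool) * filters         # after pooling and flatten
    return (conv1d_params(kernel_size, 1, filters)
            + dense_params(flat, 128)
            + dense_params(128, 64)
            + dense_params(64, 1))

fft_total = total_params(1000)     # input of 1000 FFT values
wavelet_total = total_params(7)    # input length implied by the (None, 6, 2) shape
```

Under these assumptions `fft_total` is 136,199 and `wavelet_total` is 9223, matching the totals in the table; almost all parameters sit in the first dense layer, whose size is set by the flattened feature length.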

| No. | Methods | Accuracy (%), Epoch = 50 | Accuracy (%), Epoch = 100 |
|---|---|---|---|
| 1 | FFT_1D-CNN | 84.23 | 86.42 |
| 2 | Wavelet_1D-CNN | 52.88 | 54.57 |
| 3 | Time-domain Features_1D-CNN | 54.32 | 53.94 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Rahimi, M.; Alghassi, A.; Ahsan, M.; Haider, J. Deep Learning Model for Industrial Leakage Detection Using Acoustic Emission Signal. Informatics 2020, 7, 49. https://doi.org/10.3390/informatics7040049
